Abstract

In general, each country continuously focuses on improving the performance of the education system. Our study provides suggestions regarding the improvement by taking the data in the Indian context using data envelopment analysis (DEA). To the best of our knowledge, our paper is the first study which is using the National Institutional Ranking Framework (NIRF) data. In this study, we consider 61 educational institutions of India to rank them according to their performances. The ranking is done on the basis of the efficiency scores of the institutions. To rank the efficient institutions super-efficiency DEA model is applied. Finally, some suggestions and concluding remarks are given for inefficient institutions to make them efficient.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Education and research play a significant role in the progress of any Nation. After The United States of America and China, India has the third-largest higher education system in the world. Currently, In India there are more than 700-degree granting institutions and 35,500 affiliated colleges with 20 million students. Among these institutions, which one is the best? This can be the question of intense debate. Data envelopment analysis (DEA) can answer this question in an imposing manner. DEA is a data-driven technique that is used to measure the performance of decision making units (DMUs). DMUs are those entities which utilize multiple inputs to produce multiple outputs. Examples include the performance assessment problems of educational institutions, hospitals, libraries, banks, transport sector, airlines, telecom companies etc. (Cooper et al. (2000)), which have earlier been reported by Bhattacharyya et al. (1997), Arya and Yadav (2018), Puri and Yadav (2013) and Nigam et al. (2012).

To measure the performances of DMUs, there are several parametric approaches like stochastic frontier and non-parametric approaches like Free Disposal Hull (FDH) and DEA are available in literature. DEA is a methodology that is most suitable for assessing the achievements of non-profitable organizations because for them, performance signals such as profitability and income do not work convincingly. Numerous approaches for evaluating different efficiency measures such as technical efficiency measure, pure technical efficiency measure, scale efficiency measure, cost efficiency measure, revenue efficiency measure, profit efficiency measure, mix efficiency measure, etc. have been developed in DEA.

There are many studies done on academic institutions. Johnes (2006) studied the odds of measuring efficiency of higher educational institutions. In this study, he applied DEA to measure the performance of over 100 higher educational institutions in England by using the data for the year 2000–01. Sinuany-Stern et al. (1994) examined the relative performance of 21 departments of Ben-Gurion University, Israel. Arcelus and Coleman (1997) considered the academic units of the University of New Brunswick, Canada. Bessent et al. (1982) analyzed the performance of community college in the USA. Tomkins and Green (1988) examined the complete performance of accounting departments of the U.K. University with input as staff number and output as student numbers. Kantabutra and Tang (2010) evaluated the performance of Thai Universities using non-parametric approach DEA. In this study, two efficiency models, the research efficiency model and the teaching efficiency model, are considered. Eckles (2010) applied the DEA model developed for National Universities to 93 National liberal arts colleges. Special attention is paid in this study, to the construction of cost per undergraduate as an input variable. It is found that 18 institutes are efficient, and 75 are inefficient. In their study, Chen and Chen (2011) mentioned the quality of Taiwanese Universities is decreasing continuously in recent years. In this study they tried to improve the performance of universities by reducing the majority of cost expenditure. Türkan and Özel (2017) analyzed the productivity of the State Universities in Turkey with the help of DEA, and the universities are ranked according to their efficiencies using a super-efficiency model. Yang et al. (2018) evaluated the performance and evolution of 64 Chinese research universities for 2010–2013 through a two-stage DEA model. They proposed several policies and suggestions to improve the performance of these universities. In studies, it is found that the efficiency scores are sensitive to the choice of the approach. In the present study, we aim to evaluate the performance of top Indian educational institutions through DEA and to analyze the results.

In recent years, to examine the performance of educational institutions many studies have been done (Bowrey and Clements 2020; Bornmann et al. 2020; Singh and Pant 2017; Visbal-Cadavid et al. 2017). In 2015, The Ministry of Human Resource Development, launched and approved The National Institutional Ranking Framework (NIRF) to outline an approach to rank educational institutions in India. The MHRD setup a Core Committee to analyze the general parameters which can be essential in ranking of educational institutions. The committee recommended some important parameters as, “Learning, Teaching, and Resources,” “Graduation Outcomes,” “Inclusivity and Outreach ,” “Research and Professional Practices,” and “Perception.” On the basis of overall recommendations of the committee a method is proposed to rank the educational institutions. In this study, we applied DEA to the data provided by NIRF and analyzed the results.

The rest of the paper is organized as follows: DEA methodology and some DEA models are described in Sect. 2. In Sect. 3, data variables and input and output variables with their relevance to the study is discussed, respectively. The Isotonicity test is conducted in Sect. 4. Section 5 is devoted to the performance assessment of educational institutions. Performance improvement technique and input-output targets are discussed in Sect. 6. Finally, some concluding remarks are given in Sect. 7.

2 Methodology

We apply two basic DEA models in this study first one is the CCR model, and the second one is the BCC model.

2.1 The CCR model

In 1978, Charnes, Cooper, and Rhodes introduced first DEA model and it is often called the CCR DEA model, which is a generalization of Farrell’s (1957) technical efficiency measure.

Let us assume that the performance of a set of n DMUs (\({\textit{DMU}}_{j}; j=1,2,3,\ldots ,n\)) is to be evaluated such that each DMU uses m inputs (\(i=1,2,3,\ldots ,m\)) and produces s outputs (\(r=1,2,3,\ldots ,s\)). Then the efficiency score of the kth DMU can be defined with the help of the following CCR DEA model:

Model 1

subject to,

where \(y_{rj}\) is the amount of the rth output produced by the jth DMU; \(x_{ij}\) is the amount of the ith input used by the jth DMU; \(u_{ik}\) and \(v_{rk}\) are the weights corresponding to the ith input and rth output respectively. \({\textit{DMU}}_k\) is said to be CCR efficient if \(E_{k}=1\), otherwise CCR in-efficient. CCR model can be found in two froms: Input-Oriented and Output-Oriented CCR Model. These two forms of CCR Model are shown as follows (Table 1):

2.2 The BCC model

Banker et al. (1984) extended the CCR model known as the BCC (Banker, Charnes, and Cooper) model, by adding the convexity constraint, which represents returns to scale (RTS). The BCC model calculates the PTE of each DMU. The BCC model is given as follows:

For \(k=1,2,3,\ldots ,n\) we have

subject to,

The CCR and the BCC models work under constant returns to scale (CRS) and variable returns to scale (VRS), respectively.

CRS indicates that when we increase all the inputs by a factor of g, it leads to a g-fold increment in all the outputs as well.

VRS indicates that when we increase all the inputs by a factor of g, it leads to an h-fold (\(g \ne h\)) increment in all the outputs.

If \(h \le g\), then it is called decreasing returns to scale (DRS)

If \(h \ge g\), then it is called increasing returns of scale (IRS)

The efficiency score under CRS is known as overall technical efficiency (OTE) or simply technical efficiency (TE) score. A DMU is said to be CCR-efficient if \(\eta _k^*=1\) and corresponding slacks are zero in Model 5.

Efficiency score under VRS is known as pure technical efficiency (PTE) score. The PTE score reflects the managerial performance to organize the inputs in the production process. A DMU is said to be BCC efficient if \(\theta _k^*=1\) and corresponding slacks are zero in the BCC model.

The appropriate size of a DMU reflects the scale efficiency (SE). It is defined as follows:

The peer count for an efficient \({\textit{DMU}}_k\) is the number of inefficient DMUs that became efficient with the help of \({\textit{DMU}}_k\). The peer count of an inefficient DMU is 0.

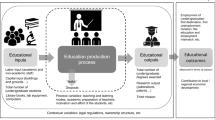

3 Data and variables

In the present work, we have considered 61 top educational institutions (established before the year 1980) of India as DMUs. The data has been collected from the website of NIRF (2018). Since the data has been collected from the Indian Govt. website , there is no necessity to cross-check the data. We have two types of variables in our study,

-

(a)

Output variables,

-

(b)

Input variables.

The detailed explanation of the variables is given in the follwing sub-section.

3.1 Selection of input and output variables

The selection of input and output variables is totally depends on the decision-maker. In the education sector, there is a great fluctuation in the opinions of decision-makers. Some decision-makers may consider A as input while some other may consider A as output. In DEA, an input variables set used for evaluating the performance of DMUs in the field of education is always picked apart due to its conductive nature. In our present study, we evaluate the performance of different institutions. We define input and output variables for each department as follows:

-

Input variables (NIRF 2018): We have considered two input variables for our study. Each input and the reason why it is a correct choice for input is explained below:

-

(i)

Student-Faculty ratio: For any institute, it is necessary that the student-faculty ratio should not be very high. According to the World Report and the U.S. News, it is beneficial to attend the smaller schools for better participation in classes. If you are in a large class where there are hundreds of students then it will be difficult for the professor to notice every student. So the choice of student-faculty ratio as an input in our study is quite relevant to our study. Breu and Raab (1994), Colbert et al. (2000) and Ray and Jeon 2008 also used faculty to student ratio as input variable in their study.

-

(ii)

Financial resources: Funds are needed for any institution to run its activities smoothly. The choice of financial resources is quite relevant to our study because every institute needs some money to perform activities like salary to the staff, to pay the electricity bill, water supply bill and many other activities. Allocation of financial support depends upon the number of research papers published in journals and conference proceedings, the number of students enrolled in each program. Arcelus and Coleman (1997), Abbott and Doucouliagos (2009), and Tyagi et al. (2009) also treated financial resources as input variable in their study.

-

(i)

-

Output variables (NIRF 2018): For our study, we have considered four output variables as Number of students who got the placement or went for higher studies, Number of publications, Patent details, and Perception. The choice of output variable totally depends on decision-maker. But the choices of output variables must be appropriate. The following shows the relevance of the output variables taken.

-

(i)

Growth Index: According to Blessinger (2017) one aim of education (at any level) is to teach students models, theories, and principles that have been developed over time through research and scholarships, in order for the students to develop higher levels of critical and creative thinking. In each institute, there are two types of students one who prefers placements after completion of courses of study and others who want to go for higher studies. By considering these two choices of students, we have constructed an index, called growth index. In this index, we have considered both kinds of students and we have weighted them equally because this is an individuals’ choice either he wants placement or want to go for higher study. Tyagi et al. (2009) also used this type of index in their research. The growth index is defined as,

Growth Index (G.I.) = The number of placed students + The number of students went for higher studies.

-

(ii)

Publicatons: The research is an important work of any institute. The choice of publications as an output variable is quite relevant to our study because higher the publications of any institution will mimic the involvement of that institution in research. Thursby (2000) and Kao and Hung (2008) also used publications as an output variable in their studies.

-

(iii)

Patent details: An intellectual property that entitles its holder to a limited time in making, selling, and using inventions for a limited period is called a patent. This average period is usually 20 years. Copyrights are honored by the exchange of public disclosure of the composer. At each institute, students work in their own labs and do specific activities for social welfare. It can easily be assumed that if any institution has a high number of patents then that institution is doing well in the field of most important inventions. Therefore the selection of copyright data as output variants is appropriate in our study.

-

(iv)

Perception: This is a very interesting output variable which we are going to consider in this study. NIRF has given some marks to each institute according to the following factors:

-

1.

Peer Perception: Employers and Research Investors (PREMP): PREMP is done through an online survey. In a time-bound fashion, the survey is conducted over a large category of Officials of Funding agencies in Govt., Professionals from Reputed Organizations, Employers and Research Investors, NGOs, private sectors, etc.

-

2.

Peer Perception: Academics (PRACD): PRACD is done with the help of a survey conducted over a large category of academics to confirm their choice for graduates of various educational institutions.

-

3.

Public Perception (PRPUB): In response to advertisements, online data is collected from the general public. Based on collected data, PRPUB is done. PRPUB confirms the choice of the general public for choosing institutions for their friends and wards.

-

4.

Competitiveness (PRCMP): By counting the number of PG and Ph.D. students admitted to top institutions in the previous year, PRCMP is calculated.

When an institution performs well, public opinion for that institute will be positive and the opinion creates a perception for that institution. So the choice of perception as an output variable is quite relevant to our study because the perception for any institution depends on its performance.

-

1.

-

(i)

4 The isotonicity test

Isotonicity test is based on the fact that with every increase in input, the output should not decrease i.e., the correlation coefficient between each input and output should be positive (Golany and Roll 1989). The correlation coefficients of all inputs-outputs for the years 2016–17 and 2017–18 are given in Table 2. Since all correlation coefficients are positive, the isotonicity test is passed.

5 Performance analysis for input and output based assessment

The TE, PTE, and SE scores are calculated for 61 Indian educational institutions for the years 2016–17 and 2017–18. The results are shown in Tables 3 and 4. The output-oriented DEA model is applied to evaluate the performances of educational institutions. There is not found any general significant pattern of distribution of efficiency scores for all the institutions.

5.1 TE estimates

The TE or OTE score is evaluated under CRS. With the help of the TE score, we can know the reason of the inefficiencies of the institutions. The score is affected by two factors.

-

(i)

Configuration of input and output,

-

(ii)

Size of operations.

The TE score tells us that which institute is on the efficiency frontier and which is not. If TE score for any institution is 1, then it is called relatively efficient with respect to other institutions and lies on the efficiency frontier. If the TE score is less than 1 for any institution, then it is called relatively inefficient and lies below the efficiency frontier. From Table 3 , it is clear that DMUs \(D_{3}\), \(D_{4}\), \(D_{5}\), \(D_{13}\), \(D_{21}\),\(D_{43}\), \(D_{55}\), \(D_{57}\), \(D_{58}\) and \(D_{61}\) are technically efficient and form the CRS efficiency frontier for the year 2016–17 as they have TE score 1. Table 4 says that DMUs \(D_{1}\), \(D_{3}\), \(D_{10}\), \(D_{19}\), \(D_{23}\),\(D_{25}\), \(D_{42}\) and \(D_{55}\) are efficient in the year 2017–18. DMUs \(D_{3}\) and \(D_{55}\) are the DMUs which are efficient for both the years. These institutions are the best examples for all the remaining inefficient institutions. We can call them as the ‘global leaders’ for inefficient institutions. The DMUs \(D_{13}\) and \(D_{19}\) have the highest peer count in the years 2016–17 and 2017–18 respectively. The higher peer count represents the robustness of the DMU with respect to other DMUs. So, the institutions with highest peer counts are role models for all other institutions. The institutions \(D_{1}\), \(D_{2}\), \(D_{3}\), \(D_{4}\), \(D_{5}\), \(D_{7}\), \(D_{8}\), \(D_{9}\), \(D_{10}\), \(D_{13}\), \(D_{19}\), \(D_{21}\), \(D_{24}\), \(D_{42}\), \(D_{55}\), \(D_{56}\), \(D_{58}\), \(D_{60}\) and \(D_{61}\) have TE scores above average for both the years. So, their performances can be considered relatively satisfactory from the other institutions which score below average. The institutions \(D_{12}\), \(D_{14}\), \(D_{15}\), \(D_{20}\), \(D_{27}\), \(D_{28}\), \(D_{30}\), \(D_{31}\), \(D_{33}\), \(D_{34}\), \(D_{37}\), \(D_{38}\), \(D_{39}\), \(D_{40}\), \(D_{41}\), \(D_{44}\), \(D_{45}\), \(D_{46}\), \(D_{47}\), \(D_{48}\), \(D_{52}\), \(D_{53}\) and \(D_{54}\) have TE score below average for both the years. So, their performance can be considered very poor in this period.

5.2 PTE estimates

The PTE score is evaluated under VRS. The BCC model is used to determine PTE score. The reason for using BCC model is that by this we can understand whether inefficiency in any institution is due to unfavorable size or inefficient use of resources. The PTE is a measure which tells us that how efficiently the DMU converts its multiple inputs into outputs. The results of PTE are shown in Tables 3 and 4. It is easy to see that many institutions which have low CRS scores have better PTE scores. In comparison with CCR model more number of institutions have PTE scores 1. Out of 61 institutions, 9 institutions \(D_{1}\), \(D_{2}\), \(D_{3}\), \(D_{10}\), \(D_{21}\), \(D_{46}\), \(D_{49}\), \(D_{55}\) and \(D_{58}\) are pure technically efficient and form VRS frontier for both years. The institutions \(D_{1}\), \(D_{2}\), \(D_{3}\), \(D_{4}\), \(D_{5}\), \(D_{7}\), \(D_{8}\), \(D_{9}\), \(D_{10}\), \(D_{11}\), \(D_{13}\), \(D_{17}\), \(D_{19}\), \(D_{21}\), \(D_{23}\), \(D_{42}\), \(D_{46}\), \(D_{55}\), \(D_{58}\), \(D_{60}\) and \(D_{61}\) have PTE score above average for both the years means they are utilizing their resources effectively for both the years. The institutions \(D_{12}\), \(D_{15}\), \(D_{20}\), \(D_{27}\), \(D_{28}\), \(D_{30}\), \(D_{32}\), \(D_{33}\), \(D_{34}\), \(D_{36}\), \(D_{37}\), \(D_{39}\), \(D_{41}\), \(D_{44}\), \(D_{47}\), \(D_{48}\), \(D_{51}\), \(D_{53}\), \(D_{54}\) and \(D_{59}\) have PTE scores below average for both the years which means they are not using their resources effectively. These institutions must utilize their resources wisely so that they can achieve higher efficiency scores.

5.3 SE estimates

TE can be decomposed into two components;

-

(i)

PTE

-

(ii)

SE

The first component of PTE is obtained by estimating the frontier under VRS. It is called pure technical efficiency because it is an evaluation of technical efficiency without scale efficiency. Thus, we can understand that PTE is used to evaluate the managerial performance of any DMU. The ratio of TE to PTE is called the scale efficiency (SE). Thus, SE represents the expertise of the management that how effectively it uses its resources. For our study, we can say that SE provides us the idea to decide the size of the institution. If the SE score is 1 for any department, then we can say that the institute is operating at optimal scale size and there is no antagonistic impact of scale size on the institution’s efficiency. If the SE score is below 1, then the institute size is not perfect. It is either too big or too small. Tables 3 and 4 shows that the institutions \(D_{3}\) and \(D_{55}\) have SE scores 1 for both years. Hence these institutes are operating at optimal scale size for both the years. All other institutes are not able to maintain good scores consistently.

5.4 Returns to scale (RTS)

The RTS depict whether the size of a DMU is too large or too small. Tables 3 and 4 show the institutions operating under CRS, IRS and DRS. If any institute is operating at IRS or DRS, then the efficiency of these institutes can be increased by adjusting their sizes. If any institute is operating at CRS, its efficiency cannot be improved because it is utilizing its resources efficiently. Tables 3 and 4 show that in the year 2016–17, the institutions \(D_{2}\), \(D_{6}\), \(D_{7}\), \(D_{8}\), \(D_{11}\), \(D_{12}\), \(D_{17}\), \(D_{18}\), \(D_{22}\), \(D_{24}\), \(D_{25}\), \(D_{28}\)

5.5 Ranking

In DEA, the DMU with efficiency score 1 is called the efficient DMU. To rank all those DMUs which have efficiency 1, we can apply any super-efficiency technique available in literature. Andersen and Petersen (1993), Du et al. (2010), and Noura et al. (2011) proposed super-efficiency models in their studies. In this study, we apply the super-efficiency model proposed by Andersen and Petersen (1993). We have shown rankings of DMUs in Tables 3 and 4. The ranking is done according to the efficiency scores of DMUs.

5.6 Descriptive statistics of efficiency scores

The descriptive statistics of TE, PTE, and SE scores are shown in Table 5. From this table, we find that out of 61 institutions 51 and 53 are technical inefficient in the year 2016–17 and 2017–18 respectively. In the year 2016–17, the average TE (ATE) score of all institutions is 0.6296, i.e., 62.96%. The TE score has a range between 0.141 and 1. The average technical inefficiency (ATI) is 37.04%. While in the year 2017–18, the ATE score of all institutions is 0.5201, i.e., 52.01%. The TE score has a range between 0.055 and 1. ATI is 47.99%.

Table 5 indicates that the average PTE (APTE) scores for all institutions are 0.6925 and 0.6039 for the years 2016–17 and 2017–18 respectively. In the year 2016–17, the PTE score varies between 0.146 and 1. The average pure technical inefficiency (APTI) is 30.75%. It means that 30.75% of APTI out of 37.04% of ATI is due to inappropriate management of institutes in utilizing resources. The remaining part of ATI is due to the fact that the institutions are not operating at their optimal size. While in the year 2017–18, the PTE score has the range between 0.07 and 1. APTI is 39.61%. It means that 39.61% of APTI out of 47.99% of ATI is due to inappropriate management of institutes in utilizing resources. The remaining part of ATI is due to the fact that the institutions are not operating at their optimal size.

The average scale efficiency (ASE) scores are 0.9106 and 0.8661 for all institutions in the years 2016–17 and 2017–18 respectively. In the year 2016–17, the SE score ranges between 0.398 and 1. The average scale inefficiency (ASI) score is 8.94%. While in the year 2017–18, the SE score of all institutions ranges between 0.24 and 1. The ASI score is 13.39%.

6 Efficiency improvements for inefficient DMUs

In DEA, there are mainly two ways to make inefficient \({\textit{DMU}}_{k}\) as efficient DMU.

-

(i)

Input reduction: Reduce inputs of \({\textit{DMU}}_{k}\) while maintaining the levels of outputs of \({\textit{DMU}}_{k}\).

-

(ii)

Output augmention: Increase outputs of \({\textit{DMU}}_{k}\) while maintaining the levels of inputs of DMUk.

Percentage change in outputs and inputs can be calculated as follows:

where change in the mth input of \({\textit{DMU}}_{k}\) = Actual mth input of \({\textit{DMU}}_{k}\) –Target of the mth input of \({\textit{DMU}}_{k}\); change in the sth output of \({\textit{DMU}}_{k}\) = Target of the sth output of \({\textit{DMU}}_{k}\) –Actual sth output of \({\textit{DMU}}_{k}\).

In the present study, output-oriented DEA model is applied. The average increase in outputs which is needed for inefficient DMUs to become efficient is given in Table 6 for the years 2016–17 and 2017–18 as follows:

7 Conclusions

This paper evaluates the performance efficiencies of educational institutions in India with the help of DEA. The main objective of our study is to analyze the year-wise performances of Indian educational institutions. In this study, we have considered 61 institutions and calculated TE, PTE, and SE scores for these institutions for the years 2016–17 and 2017–18. We have used 2 inputs (Student-faculty ratio, financial resources,) and 4 outputs (Growth index, Publications, Patent details, Perception) for our study.

The DEA results indicate that only 10 institutions in the year 2016–17 and 8 in the year 2017–18 are found to be technically efficient. To rank the technically efficient institutions, we applied the super-efficiency technique. Consequently, the institutions are ranked (see Tables 4 and 5). Finally, our study gives the following concluding remarks and suggestions which policymakers can use to improve the performance efficiencies of the institutions.

-

(i)

In the year 2016–17, all 61 institutions on average are 30.75% managerial inefficient and 8.94% scale inefficient. While in the year 2017–18, all 61 institutions are 39.61% managerial inefficient and 13.39% scale inefficient. Thus, more efforts should be made to improve managerial efficiency of all institutions as compared to scale efficiency in both the years.

-

(ii)

Output target summary is shown in Table 6. In the year 2016–17, the outputs 1, 2, 3 and 4 of inefficient institutions should be increased by the amount 14.308%, 16.059%, 73.764% and 55.832% respectively to make them efficient. While in the year 2017–18, the outputs \(y_{1}\), \(y_{2}\), \(y_{3}\) and \(y_{4}\) of inefficient institutions should be increased by the amount 0%, 5.373%, 157.855% and 77.310% respectively to make them efficient.

-

(iii)

The efficient institutions form efficiency frontier and known as best performing institutions. In the year 2016–17; \(D_{3}\), \(D_{4}\), \(D_{5}\), \(D_{13}\), \(D_{21}\), \(D_{43}\), \(D_{55}\), \(D_{57}\), \(D_{58}\) and \(D_{61}\) while in the year 2017–18; \(D_{1}\), \(D_{3}\), \(D_{10}\), \(D_{19}\), \(D_{23}\), \(D_{25}\), \(D_{42}\) and \(D_{55}\) are efficient in the year 2017-18. DMUs \(D_{3}\) and \(D_{55}\) are the efficient institutions.

-

(iv)

On the basis of TE score, it can be seen that the DMU \(D_{3}\) and \(D_{1}\) are the best performing institutions for the years 2016–17 and 2017–18 respectively while \(D_{20}\) is the worst performing institution for both the years.

-

(v)

On the basis of peer count \(D_{13}\) in 2016–17 and \(D_{19}\) in 2017–18 are highly robust institutions.

We can develop fuzzy DEA models to deal with the uncertainty present in data in real-life situations in future work.

References

Abbott M, Doucouliagos C (2009) Competition and efficiency: overseas students and technical efficiency in Australian and New Zealand universities. Educ Econ 17(1):31–57

Andersen P, Petersen NC (1993) A procedure for ranking efficient units in data envelopment analysis. Manag Sci 39(10):1261–1264

Arcelus F, Coleman D (1997) An efficiency review of university departments. Int J Syst Sci 28(7):721–729

Arya A, Yadav SP (2018) Development of FDEA models to measure the performance efficiencies of DMUs. Int J Fuzzy Syst 20(1):163–173

Banker RD, Charnes A, Cooper WW (1984) Some models for estimating technical and scale inefficiencies in data envelopment analysis. Manag Sci 30(9):1078–1092

Bessent A, Bessent W, Kennington J, Reagan B (1982) An application of mathematical programming to assess productivity in the Houston independent school district. Manag Sci 28(12):1355–1367

Bhattacharyya A, Lovell CK, Sahay P (1997) The impact of liberalization on the productive efficiency of Indian commercial banks. Eur J Oper Res 98(2):332–345

Blessinger P (2017) Reaching hard to reach students through student learning communities. J Educ Innov Partnersh Chang 3(1):259–261

Bornmann L, Gralka S, de Moya Anegón F, Wohlrabe K (2020) Efficiency of universities and research-focused institutions worldwide: an empirical DEA investigation based on institutional publication numbers and estimated academic staff numbers

Bowrey G, Clements M (2020) DEA analysis of performance efficiency in Australian Universities using student success rates against attrition, retention and student to staff ratios. J New Bus Ideas Trends 18(1):20–28

Breu TM, Raab RL (1994) Efficiency and perceived quality of the nation’s “top 25” National Universities and National Liberal Arts Colleges: an application of data envelopment analysis to higher education. Socio-Economic Plan Sci 28(1):33–45

Chen J-K, Chen I-S (2011) Inno-Qual efficiency of higher education: empirical testing using data envelopment analysis. Expert Syst Appl 38(3):1823–1834

Colbert A, Levary RR, Shaner MC (2000) Determining the relative efficiency of MBA programs using DEA. Eur J Oper Res 125(3):656–669

Cooper WW, Seiford L, Tone K (2000) Data envelopment analysis: theory, methodology, and applications, references and DEA-solver software. Kluwer Academic Publishers, Boston

Du J, Liang L, Zhu J (2010) A slacks-based measure of super-efficiency in data envelopment analysis: a comment. Eur J Oper Res 204(3):694–697

Eckles JE (2010) Evaluating the efficiency of top liberal arts colleges. Res High Educ 51(3):266–293

Farrell MJ (1957) The measurement of productive efficiency. J Roy Stat Soc Ser A (General) 120(3):253–281

Golany B, Roll Y (1989) An application procedure for DEA. Omega 17(3):237–250

Johnes J (2006) Data envelopment analysis and its application to the measurement of efficiency in higher education. Econ Educ Rev 25(3):273–288

Kantabutra S, Tang JC (2010) Efficiency analysis of public universities in Thailand. Tert Educ Manag 16(1):15–33

Kao C, Hung H-T (2008) Efficiency analysis of university departments: an empirical study. Omega 36(4):653–664

Nigam VS, Thakur T, Sethi V, Singh R (2012) Performance evaluation of Indian mobile telecom operators based on data envelopment analysis. J Inst Eng (India) Ser B 93(2):111–117

NIRF (2018) NIRF report 2017-18. Retrieved from https://www.nirfindia.org/Docs/RankingMethodology. Accessed July 15 2019

Noura A, Lotfi FH, Jahanshahloo GR, Rashidi SF (2011) Super-efficiency in DEA by effectiveness of each unit in society. Appl Math Lett 24(5):623–626

Puri J, Yadav SP (2013) Performance evaluation of public and private sector banks in India using DEA approach. Int J Oper Res 18(1):91–121

Ray SC, Jeon Y (2008) Reputation and efficiency: a non-parametric assessment of America’s top-rated MBA programs. Eur J Oper Res 189(1):245–268

Singh N, Pant M (2017) Evaluating the efficiency of higher secondary education state boards in India: a DEA-ANN approach. In: International conference on intelligent systems design and applications, pp 942–951

Sinuany-Stern Z, Mehrez A, Barboy A (1994) Academic departments efficiency via DEA. Comput Oper Res 21(5):543–556

Thursby JG (2000) What do we say about ourselves and what does it mean? Yet another look at economics department research. J Econ Lit 38(2):383–404

Tomkins C, Green R (1988) An experiment in the use of data envelopment analysis for evaluating the efficiency of UK university departments of accounting. Financial Account Manag 4(2):147–164

Türkan S, Özel G (2017) Efficiency of State Universities in Turkey during the 2014–2015 academic year and determination of factors affecting efficiency. Egitim ve Bilim 42(191)

Tyagi P, Yadav SP, Singh S (2009) Relative performance of academic departments using DEA with sensitivity analysis. Eval Program Plan 32(2):168–177

Visbal-Cadavid D, Martínez-Gómez M, Guijarro F (2017) Assessing the efficiency of public universities through DEA. A case study. Sustainability 9(8):1416

Yang G-L, Fukuyama H, Song Y-Y (2018) Measuring the inefficiency of Chinese research universities based on a two-stage network DEA model. J Informet 12(1):10–30

Acknowledgements

The authors would like to thank the reviewers for their valuable suggestions. The first author gratefully acknowledges financial support provided by The Ministry of Education (MOE), the Govt. of India, through grant no. MHR-01-23-200-428.

Funding

This study was funded by The Ministry of Education, India (MHR01-23-200-428).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Singh, A.P., Yadav, S.P. & Tyagi, P. Performance assessment of higher educational institutions in India using data envelopment analysis and re-evaluation of NIRF Rankings. Int J Syst Assur Eng Manag 13, 1024–1035 (2022). https://doi.org/10.1007/s13198-021-01380-9

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13198-021-01380-9