Abstract

Objectives

Many cognitive changes occur in later life, including declines in attentional control and executive functioning. These cognitive domains appear to be enhanced with mindfulness training, particularly focused attention mindfulness (FAM) meditation, suggesting this practice might slow age-related change. We hypothesized that FAM training would increase self-reported and behaviorally measured mindfulness; improve executive functioning, attentional control, and emotion regulation; and reduce self-reported daily cognitive errors in older adults. To address the call for higher methodological rigor in the field, we used an RCT design with an active control group, measured credibility and expectancy, used objective measures, and attempted to isolate mechanisms of action of mindfulness.

Methods

Fifty older adults aged 65 to 90 (M = 75.7, SD = 5.7) completed pre-training testing followed by 6 weeks of online daily FAM or mind-wandering (control) training and then returned for post-training testing.

Results

Conditions were comparable with respect to credibility and expectancy. However, most hypotheses were not supported. Although aspects of mindfulness, Fs(1,45) ≥ 7.42, ps ≤ .009, ηp²s ≥ 0.13, CIs90% = [0.02, 0.37], and inhibitory control, F(1,43) = 4.95, p = .031, ηp² = 0.10, CI90% = [0.01, 0.25], increased, these increases were not specific to mindfulness training. There was modest evidence of an improvement in attentional control specific to the mindfulness group, F(1,43) = 4.59, p = .038, ηp² = 0.10, CI90% = [0.01, 0.24], but this was not consistent across our two measures.

Conclusions

Results are encouraging for the continued study of mindfulness for improving aspects of attentional control in older adults. However, the present research requires replication in a larger, more diverse sample. Implications for future mindfulness studies with older adults are discussed.

It is well established that cognition changes in later life. Cognitive domains such as executive functioning (EF) and attentional control reliably decline (Glisky 2007; Park and Reuter-Lorenz 2009), whereas emotional processing and regulation improve (Kaszniak and Menchola 2012). These seemingly contradictory findings arise from older adults’ reallocation of limited EF and attentional control resources toward emotionally relevant goals, which leads to increased positive emotionality and better regulation (Mather and Carstensen 2005). These cognitive changes have consequences for quality of life. Declines in EF and attentional control can reduce independence by limiting the ability to successfully manage responsibilities and engage in purposeful and meaningful activities (e.g., managing finances, planning social activities; Royall et al. 2004). In contrast, better emotion regulation can result in greater reported well-being (Mather and Carstensen 2005). Finding ways to enhance EF and attentional control and to capitalize on increased emotional processing in later life, even among otherwise healthy older adults, could help maintain independence and quality of life for longer. One potential means of doing so is mindfulness practice, specifically focused attention mindfulness (FAM). Many of the cognitive domains targeted by mindfulness practices overlap with domains of age-related change, including attentional control, EF, and emotion regulation (Prakash et al. 2014). In fact, self-reported mindfulness tendencies appear to mediate the negative correlation between age and negative affect (Raes et al. 2015), suggesting that practicing mindfulness may enhance or capitalize on this naturally occurring bias. Thus, mindfulness practice may be an avenue through which cognitive and emotional functioning can be enhanced in later life.

Research on the potential utility of mindfulness practice for older adults is mixed. Correlational studies of long-term mindfulness meditators demonstrate more consistent cognitive and emotional benefits (Nielsen and Kaszniak 2006; Prakash et al. 2012; van Leeuwen et al. 2009). However, these individuals self-select into long-term meditation and are likely not representative of the general population, which limits generalizability. Further, correlational studies cannot establish that mindfulness practice causes these effects. Studies of brief mindfulness-based interventions for previously non-meditating older adults (adults 55+ in both clinical and healthy samples) have produced mixed results, with some evidence of cognitive and emotional benefits (Wahbeh et al. 2016; Zellner Keller et al. 2014) and some null findings (Mallya and Fiocco 2016; Smart et al. 2016). Reviews of the potential cognitive and emotional implications of mindfulness for older adults suggest that, while the literature is promising overall, several key issues may be contributing to heterogeneity in findings and need to be addressed to move the field forward (Gard et al. 2014a; Geiger et al. 2016).

Although mindfulness training appears acceptable to and feasible for older adults, methodological issues with many current studies limit interpretability (Geiger et al. 2016). These issues include non-randomization, lack of appropriate active control groups (or no control group at all), small sample sizes compounded by attrition, and reliance on self-report measures alone (Gard et al. 2014a; Geiger et al. 2016). The use of no-intervention control groups is particularly concerning. Mindfulness interventions carry a substantial expectancy of improvement (Prätzlich et al. 2016), and expectancy may bias post-intervention performance, particularly on self-report measures. To address many of these issues, some have argued that future studies should conduct randomized controlled trials (RCTs) with appropriate controls (Gard et al. 2014a).

The call for higher methodological rigor in mindfulness studies with older adults is consistent with several recent “state of science” publications in mindfulness research more broadly (Davidson and Kaszniak 2015; Van Dam et al. 2018). Davidson and Kaszniak argued that advancing the field requires RCTs with proper active control groups, particularly control groups that participants perceive to be as credible and as likely to lead to improvement as the experimental (i.e., mindfulness) condition. To this end, it is important to measure participants’ perceptions of credibility and expectancy to verify that groups are comparable. Complementing traditionally used self-report measures with objective measures of mindfulness may address concerns about relying solely on self-report. These concerns include susceptibility to expectancy and social desirability and issues with the construct validity of some mindfulness self-report measures (e.g., FFMQ; Van Dam et al. 2018). Finally, isolating the unique components of mindfulness (e.g., attentional control, non-judgment) from common factors (e.g., social interaction, relaxation) improves understanding of mechanism(s) of action and of the construct of mindfulness (although there is debate about whether mindfulness can be understood by decontextualizing its components; Grossman and Van Dam 2011).

In the present study, we used a methodologically rigorous design to assess whether brief, online FAM training improves cognitive, emotional, and daily functioning in healthy, previously non-meditating older adults. We chose an online administration because this method is effective for inducing changes in well-being (Spijkerman et al. 2016), has been successfully used with older adults (Wahbeh et al. 2016), is easily accessible for many participants, and minimizes some common factors that may contribute to benefits but are not mindfulness-specific (e.g., social engagement). We hypothesized that compared to an active control group, only FAM would show (1a) an increase in self-reported mindfulness, (1b) improved performance on a behavioral measure of mindfulness, (2a and b) improved attentional control and executive function but not crystallized intelligence (a measure of discriminant validity), (3) improved emotion regulation, and (4) decreased self-reported cognitive errors in daily life.

Method

Participants

Participants were recruited via two methods: (1) older adults who had previously participated in research at the University of Arizona were invited to participate in the present study, and (2) older adults in the community were recruited via community presentations and flyers. Exclusion criteria were (a) age < 65 years, (b) non-native English speaker, (c) current meditation practice, (d) neurological diagnosis (e.g., dementia, head injury, stroke), (e) history of severe psychiatric illness (e.g., schizophrenia, bipolar disorder), (f) current psychiatric illness (e.g., depression, substance abuse), and (g) Mini-Mental State Examination (MMSE) score < 26. During screening, participants were asked about their familiarity with meditation practices, including yoga, tai chi, formal sitting practice, and religious prayer. Participants with some prior exposure to meditation practices were included if that exposure occurred at least 5 years earlier and was not a regular (e.g., weekly) practice.

An a priori power analysis indicated that a sample of 52 would provide adequate power ([1-β] = 0.80; ⍺ = 0.05, two-tailed) to detect an effect size of f = 0.27 for the interaction term of a 2 (group) by 2 (time) mixed repeated-measures ANOVA (Faul et al. 2009). This effect size was based on meta-analytic data for our variables of interest (i.e., cognition, attention, and self-reported mindfulness; Eberth and Sedlmeier 2012). As in the present study, the meta-analyzed studies used repeated-measures designs, assessed participants outside of a meditative state, and drew samples from healthy individuals. However, the present study diverged from the meta-analyzed studies in that their participants were not specifically older adults (although older adults were included) and they used inactive control groups.

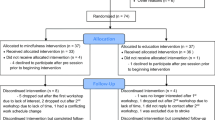

Fifty-seven older adults who met inclusion criteria were assigned by simple randomization, using a random number generator, to either FAM (even numbers; n = 29) or mind-wandering training (odd numbers; n = 28). Seven participants (FAM, n = 2; mind wandering, n = 5) did not complete training and/or post-training testing (health-related, n = 3; travel, n = 1; did not enjoy the training, n = 3). There were no significant between-group differences in attrition rate, χ²(1, N = 57) = .21, p = .25. The remaining 50 participants (88% of those randomized) were included in the analyses: 27 in the FAM condition and 23 in the mind-wandering condition (see Table 1 for demographics and Fig. 1 for the CONSORT diagram). The two groups did not differ on age, education, or MMSE score, ts(49) < 1.26, ps > .213, or on sex, recruitment method, or familiarity with meditation, χ²s(1, N = 49) < 2.24, ps > .33.
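The parity rule described above (even draw to FAM, odd draw to mind wandering) can be sketched as follows. This is a minimal illustration only: the draw range, the seed, and the function name `assign_condition` are hypothetical, and the study does not report which random number generator was used.

```python
import random


def assign_condition(rng: random.Random) -> str:
    """Simple randomization by parity: even draw -> FAM, odd draw -> mind wandering."""
    # Hypothetical draw range; only the parity of the draw matters.
    draw = rng.randint(1, 1000)
    return "FAM" if draw % 2 == 0 else "mind-wandering"


rng = random.Random(2024)  # hypothetical seed, used only to make the sketch reproducible
assignments = [assign_condition(rng) for _ in range(57)]
print(assignments.count("FAM"), assignments.count("mind-wandering"))
```

Because each participant is assigned independently, simple randomization does not force equal group sizes, which is consistent with the unequal split reported above.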

Procedure

Participants spent their initial 2-h visit in the lab completing a breath-counting task (behavioral measure of mindfulness) and several cognitive and emotional tasks. They completed questionnaires at home and returned to the lab within 7 days for randomization to a training condition. They then received 20–30 min (depending on participant need) of instruction and explanation of the goals of training, followed by instructions for logging onto Qualtrics to access the training website and listen to the recording (via Soundcloud.com). Qualtrics was intended to provide an objective measure of training compliance, but because participants frequently forgot to click “submit” at the end of their training session, it was not a reliable measure. Participants completed training once per day in a quiet room in their home. After the first training session, participants completed the Credibility and Expectancy Questionnaire (CEQ; Devilly and Borkovec 2000) on Qualtrics; 40 participants completed it after the first session, and the remaining 10 completed it 2 to 4 days later. Following 6 weeks of training, participants returned to the lab and completed the same pre-training battery, a modified CEQ (reflecting on their training experiences), and a post-training evaluation survey (see APPENDIX A in OSF). All participants attended this post-training session within a week of their final training day.

Training

Training conditions were referred to as “well-being training” rather than “meditation” or “mindfulness” to reduce expectancy bias. The FAM condition was called “breath-focused awareness training” and the mind-wandering condition “stream of consciousness visualization.” These labels also kept participants blind to which condition was experimental and which was the control.

Consistent with Davidson and Kaszniak’s (2015) recommendations, we aimed to design a credible control condition that generated an expectancy of improvement in well-being. Both groups were repeatedly exposed to the idea that their training was designed to increase well-being through mechanisms consistent with their respective conditions: for the FAM condition, through enhanced attentional control and non-judgment, and for the mind-wandering condition, through creativity and relaxation. Additionally, many nonspecific components were controlled in an attempt to isolate the proposed active ingredients of mindfulness, namely attention and non-judgment. Both training conditions were matched for (a) training length (22 min, daily for 6 weeks), (b) internet-based delivery of an audio-recorded script, (c) instructor, who recorded both trainings and was certified in cognitively based compassion training (Pace et al. 2009), (d) calming voice, and (e) identical beginning and ending instructions. To our knowledge, the training conditions differed in only two ways: (a) the instructions to attend to the breath without judgment (FAM condition) versus to let the mind wander (mind-wandering condition) and (b) the amount of guidance received (i.e., the amount of instruction provided during the recording, which was greater in the FAM condition than in the mind-wandering condition). The instructor who recorded the trainings did not facilitate any in-person training. Participants were encouraged to contact the study administrator with questions or comments. Participants could also speak to the instructor if desired, but no participants pursued this option.

FAM training (see APPENDIX B in OSF) was based on a modified script from Jon Kabat-Zinn’s (1990) guided practice for mindfulness-based stress reduction. The practice cultivates focused attention through awareness of the breath. In our audio-recorded training, participants were encouraged to notice when distracting thoughts arose (i.e., mind wandering) and, without judgment, return their attention back to the breath. Thus, the training encouraged practice in the core aspects of mindfulness: focused attention, awareness of mind wandering, and non-judgment.

In the mind-wandering training (see APPENDIX C in OSF), participants listened to an audio recording designed to encourage mind wandering, fantasizing, and daydreaming. The training provided minimal guidance, during which participants let their attention and minds wander, trying not to control any thoughts or feelings that arose. In this way, participants received no training in focused attention, awareness of lapses of attention, or non-judgment.

Measures

Mindfulness

The Five Facet Mindfulness Questionnaire (FFMQ; Baer et al. 2006) is a 39-item self-report questionnaire with a 5-point response scale (1 = never or very rarely true, 5 = very often or always true) measuring five latent factors comprising the higher-order construct of mindfulness. The total and five subscale scores were calculated with higher scores reflecting greater mindfulness. Of note, the Observe subscale does not reliably correlate with other subscales of the FFMQ or load onto the latent mindfulness variable in non-meditating samples (Baer et al. 2008). This suggests the Observe subscale may not reflect mindfulness in meditation-naïve individuals (unlike in meditators). Adequate to excellent internal consistency (0.72–0.92) was demonstrated on total and subscale scores in a variety of samples (age range = 18–93; Baer et al. 2008).

The Breath Counting task (Levinson et al. 2014; see APPENDIX D in OSF) is a behavioral measure of mindfulness. Participants counted their breaths from one to nine for 15 min. For breaths one through eight, they pressed a button in their right hand; on the ninth breath, they pressed a button in their left hand. If they lost track, they pressed both buttons simultaneously and started counting again at “1.” Accuracy was calculated with Levinson et al.’s (2014) formula: accuracy = 100% × [1 − (no. of incorrect ongoing 9-counts + no. of self-caught miscounts)/(no. of ongoing 9-counts + no. of self-caught miscounts)]. Higher accuracy reflected greater mindfulness. Test-retest reliability over 1 week was 0.60 in a normative sample (age range = 18–26).
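The accuracy formula above can be expressed as a short function. This sketch assumes that "100% −" means 100% multiplied by one minus the error proportion; the function and argument names are hypothetical, and the session counts in the example are invented for illustration.

```python
def breath_counting_accuracy(n_ongoing, n_incorrect, n_self_caught):
    """Accuracy (%) = 100 * [1 - (incorrect + self-caught) / (ongoing + self-caught)]."""
    denominator = n_ongoing + n_self_caught
    if denominator == 0:
        raise ValueError("no nine-counts or self-caught miscounts recorded")
    return 100.0 * (1.0 - (n_incorrect + n_self_caught) / denominator)


# Hypothetical session: 21 ongoing nine-counts (1 incorrect) and 4 self-caught miscounts.
print(round(breath_counting_accuracy(21, 1, 4), 1))  # 80.0
```

Note that self-caught miscounts enter both the numerator and the denominator, so resetting the count lowers accuracy, but less steeply than an uncaught miscount does.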

Cognition

The Keep Track task (Miyake et al. 2000) is a measure of updating and monitoring. The task was administered on a computer with E-Prime. Participants viewed a serially presented list of words (e.g., banana, sister, golf) belonging to predetermined categories (e.g., fruits, relatives, sports) and remembered the last word presented from each target category. The number of target categories increased from one to four, with three trials at each level, and the target categories were always displayed at the bottom of the screen. The total number of correctly recalled words (max = 30) was calculated, with higher totals reflecting better updating and monitoring. Split-half reliability in a normative older adult sample was 0.90 (Hull et al. 2008).
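The maximum of 30 follows from three trials at each load of one to four target categories: 3 × (1 + 2 + 3 + 4) = 30. Below is a minimal scoring sketch. The function name, the data layout, and the case-insensitive, order-free matching rule are assumptions, not the task's documented scoring code.

```python
def keep_track_score(trials):
    """Total correctly recalled target words across trials.

    Each trial is a (targets, recalled) pair: `targets` holds the last word shown
    for each target category, and `recalled` holds the participant's responses.
    """
    total = 0
    for targets, recalled in trials:
        # Assumed scoring rule: case-insensitive, order-free matching.
        recalled_set = {word.strip().lower() for word in recalled}
        total += sum(1 for word in targets if word.lower() in recalled_set)
    return total


# Maximum score: three trials at each load of 1, 2, 3, and 4 target categories.
max_score = sum(3 * n_categories for n_categories in range(1, 5))
print(max_score)  # 30

example_trials = [
    (["banana"], ["Banana"]),
    (["sister", "golf"], ["golf", "uncle"]),
]
print(keep_track_score(example_trials))  # 2
```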

The Simon task (Miyake et al. 2000) is a measure of inhibitory control. The task was administered on a computer with E-Prime. Participants responded to the direction of an arrow presented on the left side, right side, or middle of a black screen. Trials were congruent (e.g., left-facing arrow on left side), incongruent (e.g., left-facing arrow on right side), or neutral (i.e., arrow in the middle). The measure of interest was the proportion score, (average incongruent RT − average congruent RT) / average congruent RT, computed after removing RT outliers (i.e., > 2.5 SD); smaller values indicate better inhibitory control. Split-half reliability in a normative older adult sample was 0.77 (Hull et al. 2008).
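A minimal sketch of the proportion score follows. It assumes that outliers are trimmed in a single pass, separately within each condition, using the sample SD. The text does not specify these details, and the function names and RTs are hypothetical.

```python
from statistics import mean, stdev


def trim_outliers(rts, criterion=2.5):
    """Drop RTs more than `criterion` sample SDs from the mean (single pass)."""
    if len(rts) < 3:
        return list(rts)
    m, sd = mean(rts), stdev(rts)
    return [rt for rt in rts if abs(rt - m) <= criterion * sd]


def simon_proportion_score(congruent_rts, incongruent_rts):
    """(mean incongruent RT - mean congruent RT) / mean congruent RT, after trimming."""
    congruent = mean(trim_outliers(congruent_rts))
    incongruent = mean(trim_outliers(incongruent_rts))
    return (incongruent - congruent) / congruent


# Hypothetical RTs in ms; the 2000 ms congruent trial exceeds 2.5 SD and is dropped.
congruent = [500] * 9 + [2000]
incongruent = [550] * 10
print(simon_proportion_score(congruent, incongruent))  # 0.1
```

Dividing by the congruent mean expresses interference relative to each participant's baseline speed, which matters in older samples where overall RTs vary widely.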

The Attentional Blink paradigm has previously been used to study attention in meditators (van Leeuwen et al. 2009). The task was administered on a computer with DMDX software (Forster and Forster 2003). In our adapted version of the task, participants viewed strings of 13–17 letters presented rapidly one at a time; embedded within each string were two digits (“T1” and “T2”). T2 was presented two (202 ms; T2₂₀₂), four (370 ms; T2₃₇₀), or eight (706 ms; T2₇₀₆) items after T1. Although T1 is usually identified correctly, T2 is identified less often when it appears between 200 and 500 ms after T1 (i.e., T2₂₀₂ and T2₃₇₀). This is the “attentional blink”: the refractory period during which attentional resources are minimally available to process T2.

We calculated a proportional accuracy score for T2₂₀₂, T2₃₇₀, and T2₇₀₆ based on van Vugt and Slagter (2014): average T2 accuracy/average T1 accuracy. An attentional blink is demonstrated when detection at T2₂₀₂ and T2₃₇₀ is lower than at T2₇₀₆ and T1. Higher values at T2₂₀₂ and T2₃₇₀ suggested a reduced attentional blink and better attentional control. Test-retest reliability over a 7–10-day period was 0.59, and split-half reliability was adequate to good at both testing times (0.69–0.89) in a normative university sample (Dale and Arnell 2013).
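The proportional score above can be sketched per lag as follows. This sketch assumes that T1 and T2 accuracy are averaged over all trials within each lag, rather than conditioning T2 on a correct T1 report, which some attentional blink studies do. The trial format and function name are hypothetical.

```python
from statistics import mean


def blink_scores(trials):
    """Proportional T2 accuracy per lag: mean T2 accuracy / mean T1 accuracy.

    `trials` is a list of (lag, t1_correct, t2_correct) tuples.
    """
    by_lag = {}
    for lag, t1_correct, t2_correct in trials:
        by_lag.setdefault(lag, []).append((t1_correct, t2_correct))
    scores = {}
    for lag, rows in sorted(by_lag.items()):
        t1_accuracy = mean(1.0 if t1 else 0.0 for t1, _ in rows)
        t2_accuracy = mean(1.0 if t2 else 0.0 for _, t2 in rows)
        scores[lag] = t2_accuracy / t1_accuracy if t1_accuracy > 0 else float("nan")
    return scores


# Hypothetical data: lag 2 (202 ms) shows a blink; lag 8 (706 ms) largely does not.
trials = [(2, True, i < 5) for i in range(10)] + [(8, True, i < 9) for i in range(10)]
print(blink_scores(trials))  # {2: 0.5, 8: 0.9}
```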

The Wechsler Adult Intelligence Scale-IV (WAIS-IV; Wechsler et al. 2008) Similarities subtest is a measure of verbal (crystallized) intelligence. Unlike fluid intelligence, which appears sensitive to mindfulness intervention in older adults (Gard et al. 2014b), crystallized intelligence is relatively stable in later life and typically unchanged by intervention (Nisbett et al. 2012). Standard WAIS-IV administration and scoring procedures were used, with higher scores reflecting better verbal intelligence. Good to excellent internal consistency (0.88–0.91) was found in normative older adult samples.

Emotion Regulation

The Emotional Interference Task (EIT) was previously used to study emotion regulation and reactivity in meditators (Ortner et al. 2007). The task was administered on a computer with DMDX software. In our adapted task, participants viewed 20 neutral, 12 pleasant, and 12 unpleasant pictures, from the International Affective Picture System (IAPS; Lang et al. 2008), one at a time in random order for 6000 ms (ISI 1000 ms). At either 1000 ms or 4000 ms after picture onset (i.e., 1 s and 4 s stimulus onset asynchrony [SOA]), a high (2000 Hz)- or low (400 Hz)-pitch tone was presented. Participants pressed a button as quickly as possible to indicate whether the tone was high or low. In non-meditators, unpleasant pictures produced emotional interference at 1 and 4 s SOAs, demonstrated by a reaction time (RT) increase with unpleasant compared to neutral pictures. However, meditators showed interference only at 1 s SOA (Ortner et al. 2007), suggesting mindfulness attenuated prolonged processing of unpleasant stimuli. Ortner et al. also found positive arousing pictures produced interference at the 1 s SOA but not the 4 s SOA.

Two sets of pictures (see APPENDIX E and F in OSF), based on Ortner et al.’s (2007) selections where possible, were used to counterbalance across participants and time points (i.e., pre- and post-training). Neutral, pleasant, and unpleasant pictures in both sets were balanced for the number of pictures depicting people versus “other” content (e.g., landscapes, objects, animals). Pleasant and unpleasant pictures in both sets were balanced for arousal.

RTs to tones at the 1 s and 4 s SOAs were averaged separately for unpleasant, neutral, and pleasant pictures. Item-level RT outliers (i.e., > 2.5 SD) in each category were excluded. Emotional interference was computed by subtracting the average RT for neutral pictures from the average RT for (a) unpleasant and (b) pleasant pictures, separately within the 1 s and 4 s SOA conditions. Smaller values indicated better emotion regulation.
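The difference scores above can be sketched for one participant at one session as follows. This sketch assumes that outlier trimming is a single pass within each valence × SOA cell, using the sample SD. The dictionary layout, function names, and RTs are hypothetical.

```python
from statistics import mean, stdev


def trimmed_mean(rts, criterion=2.5):
    """Mean RT after dropping item-level RTs more than `criterion` SDs from the cell mean."""
    if len(rts) >= 3:
        m, sd = mean(rts), stdev(rts)
        rts = [rt for rt in rts if abs(rt - m) <= criterion * sd]
    return mean(rts)


def interference_scores(rts):
    """Map (valence, soa) -> trimmed mean RT minus trimmed neutral mean RT at the same SOA."""
    scores = {}
    for soa in (1, 4):
        neutral = trimmed_mean(rts[("neutral", soa)])
        for valence in ("unpleasant", "pleasant"):
            scores[(valence, soa)] = trimmed_mean(rts[(valence, soa)]) - neutral
    return scores


# Hypothetical tone RTs (ms) for one participant at one session.
rts = {
    ("neutral", 1): [600, 620, 580],
    ("unpleasant", 1): [700, 680, 720],
    ("pleasant", 1): [610, 630, 590],
    ("neutral", 4): [500, 500, 500],
    ("unpleasant", 4): [520, 530, 510],
    ("pleasant", 4): [500, 490, 510],
}
print(interference_scores(rts))
```

In this example, slowing relative to neutral pictures is larger at the 1 s SOA than at the 4 s SOA. That is the pattern Ortner et al. (2007) associated with attenuated prolonged processing of unpleasant stimuli.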

Daily Cognitive Errors

The Multifactorial Memory Questionnaire (MMQ; Troyer and Rich 2002) measures perceived everyday memory functioning on dimensions of Contentment, Ability, and Strategy Use/Knowledge. Only the Contentment and Ability subscales were administered, because the Strategy Use/Knowledge subscale captures compensatory behaviors rather than intrinsic memory functioning. The Contentment subscale items were rated on a 5-point scale from 0-Strongly Disagree to 4-Strongly Agree (score range: 0–72) and the Ability subscale items were rated on a 5-point scale from 0-All the time to 4-Never (score range: 0–76). Higher total scores on the Contentment subscale indicated greater contentment with memory ability, while higher total scores on the Ability subscale indicated greater memory errors (i.e., forgetfulness) in daily life. Test-retest reliability over a 4-week period in a normative sample of older adults ranged from 0.88 to 0.93, and internal consistency ranged from 0.83 to 0.95.

The Cognitive Failures Questionnaire (CFQ; Broadbent et al. 1982) measures general functional errors in the domains of perception, memory, and motor functioning. Respondents indicated the frequency with which they experience these errors using a 5-point scale from 0-Never to 4-Very Often (score range: 0–100). Higher total scores indicated greater cognitive errors. Good internal consistency (0.89) has been demonstrated in a normative sample of 20–40-year-olds (Broadbent et al. 1982).

The Attention-Related Cognitive Errors Scale (ARCES; Cheyne et al. 2006) measures everyday mistakes resulting from inattention. Respondents indicated the frequency with which they experienced each type of mistake using a 5-point scale from 1-Never to 5-Very Often (score range: 12–60). Higher average scores indicated more attention lapses/errors. Good internal consistency (0.89) was demonstrated in a normative university sample.

We calculated a composite score for “daily cognitive errors” composed of the CFQ, the MMQ Contentment and Ability subscales, and the ARCES. MMQ Contentment was multiplied by −1 so that its scores ran in the same direction as the other measures. The measures were significantly intercorrelated (Pearson’s r = .56 to .88, ps < .001). Exploratory factor analysis using maximum likelihood estimation suggested a single factor (loadings: MMQ Contentment, 0.83; MMQ Ability, 0.95; CFQ, 0.94; ARCES, 0.71). Raw scores were converted to z-scores and averaged to create a daily cognitive errors composite. Larger negative values reflected greater errors.
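The reverse-then-standardize-then-average steps above can be sketched as follows. Several details are assumptions. The measure names and scores are hypothetical, and only two measures are shown for brevity. The z-scores use the sample SD of whatever scores are passed in, and the text does not say which reference distribution was used. For example, standardizing each time point separately would remove pre/post mean differences, so pooling or using pre-training norms may be preferable.

```python
from statistics import mean, stdev


def zscores(values):
    """Standardize using the sample mean and sample SD of `values`."""
    m, sd = mean(values), stdev(values)
    return [(v - m) / sd for v in values]


def composite(measures, reverse=()):
    """Average per-participant z-scores across measures.

    `measures` maps a measure name to one score per participant (same order);
    measures named in `reverse` are multiplied by -1 before standardizing.
    """
    standardized = []
    for name, values in measures.items():
        signed = [-v for v in values] if name in reverse else list(values)
        standardized.append(zscores(signed))
    return [mean(person) for person in zip(*standardized)]


# Hypothetical scores for four participants.
scores = {
    "MMQ_Contentment": [60, 50, 40, 30],
    "CFQ": [20, 30, 40, 50],
}
print(composite(scores, reverse={"MMQ_Contentment"}))
```

After Contentment is reversed, the two hypothetical measures point the same way, so the composite equals either measure's z-scores.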

Credibility and Expectancy

The Credibility and Expectancy Questionnaire is a six-item measure of believability of an intervention and amount of benefit participants think and feel they will obtain from engaging in the intervention. Four questions used a 9-point scale (1 = not at all, 9 = very) and the other two asked for a percentage response ranging from 0 to 100%.

Data Analyses

All means and SDs for the outcome measures are presented in Table 2(a and b). For each measure of interest, we ran a separate 2 (group: FAM, mind wandering) × 2 (time: pre-training, post-training) mixed repeated-measures ANOVA controlling for the main effects of age, sex, and education. Unless otherwise stated, analyses were underpowered ([1-β] < .80, ⍺ = .05), as calculated by post hoc power analyses for F test main effects and interaction terms. In small samples with underpowered analyses, non-significant results may not indicate the absence of a true effect (Lakens 2017). To aid interpretation of null results, we report effect sizes (ηp²) and 90% confidence intervals (CI90%; equivalent to CI95% for d) around those effect sizes (Dziak et al. 2018).
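The point estimates of ηp² reported in the Results can be cross-checked against each F statistic using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). An error df of 45 with N = 50 appears consistent with two groups and three covariates (50 − 2 − 3 = 45). This sketch does not compute the 90% CIs, which require the noncentral F distribution.

```python
def partial_eta_squared(f_value: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# F values reported in the Results.
print(round(partial_eta_squared(12.06, 1, 45), 2))  # 0.21 (FFMQ Describe, time)
print(round(partial_eta_squared(6.22, 1, 38), 2))   # 0.14 (EIT 4 s unpleasant, group)
```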

Outliers

Prior to analyses, univariate outliers were defined as values with z-scores exceeding ± 2.5 (Daszykowski et al. 2007). Rather than deleting these extreme values, we replaced each with the next most extreme non-outlying value (i.e., Winsorization) to reduce skew while largely preserving overall variation in the data (Field 2009). Outliers were Winsorized for the pre-training Emotional Interference Task (n = 3), Simon task (n = 1), and Attentional Blink task (n = 1), and for the post-training Attentional Blink task (n = 3) and Emotional Interference Task (n = 1).
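The Winsorization step above can be sketched as follows. This sketch assumes that z-scores are computed once, with the sample SD of the full distribution including the outliers, and that each outlier is replaced by the most extreme non-outlying value on the same side. The function name and data are hypothetical.

```python
from statistics import mean, stdev


def winsorize_by_z(values, criterion=2.5):
    """Replace values with |z| > criterion by the most extreme non-outlying value on the same side."""
    m, sd = mean(values), stdev(values)
    if sd == 0:
        return list(values)
    inliers = [v for v in values if abs(v - m) / sd <= criterion]
    low, high = min(inliers), max(inliers)
    # Any value above `high` (or below `low`) is, by construction, an outlier.
    return [min(max(v, low), high) for v in values]


data = [10, 11, 12, 10, 11, 12, 10, 11, 12, 100]
print(winsorize_by_z(data))  # [10, 11, 12, 10, 11, 12, 10, 11, 12, 12]
```

Unlike deletion, this keeps every participant in the analysis, which matters in a sample of 50.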

Missing Data

We had missing data at pre-training for the Attentional Blink task (n = 1; program error) and EIT (n = 2; program error), and at post-training for the Cognitive Errors Composite (n = 3; participants were unable to complete questionnaires within their lab session), Attentional Blink task (n = 1; participant error), Simon task (n = 2; program error), and EIT (n = 2, program error; n = 4, participant error). We did not impute data because Little’s MCAR test was nonsignificant, χ²(244, N = 50) = 249.93, p = .38, consistent with data being missing completely at random, and < 5% of the data were missing in total. As a result, analyses had the following sample sizes: n = 50 for FFMQ, Breath Counting, Keep Track, and Similarities; n = 48 for Attentional Blink and Simon task; n = 47 for the daily cognitive errors composite; and n = 43 for EIT.

Results

Compliance and Post-Training Questionnaire

There were no group differences in self-reported missed training days, t(45) = 0.09, p = .92, d = 0.03 (FAM, M = 5.42, SD = 7.35; mind wandering, M = 5.62, SD = 6.76), although three of 50 participants did not respond to this item. Forty-two of these 47 respondents completed at least 31 days (i.e., 75%) of the training (M = 36, SD = 7, range = 17–42). On the post-training questionnaire, the FAM group (M = 4.33, SD = 2.77) enjoyed the training less than the mind-wandering group (M = 6.00, SD = 2.82; 0 = not enjoyable at all to 10 = very enjoyable), t(48) = 2.10, p = .041, d = 0.60. Both groups found the training similarly “somewhat intrusive” (0 = not intrusive at all to 10 = very intrusive; M = 4.75, SD = 2.70), t(48) = 0.54, p = .595, d = 0.15.
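The enjoyment effect size above can be reproduced from the reported means and SDs with a pooled-SD Cohen's d. This sketch assumes group sizes of 23 (mind wandering) and 27 (FAM), as given in the Participants section, and the standard pooled-SD formula. The text does not state which variant of d was used.

```python
from math import sqrt


def cohens_d(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Standardized mean difference using the pooled SD of two independent groups."""
    pooled_sd = sqrt(((n_1 - 1) * sd_1 ** 2 + (n_2 - 1) * sd_2 ** 2) / (n_1 + n_2 - 2))
    return (mean_1 - mean_2) / pooled_sd


# Enjoyment ratings: mind wandering (n = 23) vs. FAM (n = 27).
print(round(cohens_d(6.00, 2.82, 23, 4.33, 2.77, 27), 2))  # 0.6
```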

Credibility and Expectancy

We compared items on the CEQ at the beginning and end of training (see Table 2(a) for Ms and SDs). Groups did not differ on any items at the beginning, suggesting that the conditions were initially equally credible, with similar expectancy for improvement (ts[48] ≤ 0.63, ps ≥ .53, d ≤ 0.18). Although perceived logic (i.e., credibility) of the training was maintained over time in both groups, F(1,48) < 1, ηp² < 0.01, CI90% = [0.00, 0.01], both groups declined over time in how much they thought and felt the training had (a) promoted well-being and (b) actually led to an improvement in their well-being, Fs(1,48) ≥ 6.67, ps < .05, ηp²s ≥ 0.12, CIs90% = [0.00, 0.32]. We also found a significant group by time interaction for the item “Would you recommend this training to a friend?,” F(1,48) = 4.11, p = .048, ηp² = 0.08, CI90% = [0.00, 0.21]. The mind-wandering group was marginally more likely to recommend the training after completing it (M = 5.96, SD = 2.51) than at the beginning of training (M = 4.87, SD = 2.44), t(22) = 1.81, p = .083, d = 0.38, whereas the FAM group showed no pre- (M = 5.04, SD = 2.41) to post-training (M = 4.67, SD = 2.76) difference, t(26) = 0.87, p = .39, d = 0.17.

Additionally, we explored whether there were potential differences on the CEQ between the two recruitment method groups. The community sample self-selected into the study after learning it was a well-being training while the research sample was invited to participate (i.e., did not self-identify as being interested in well-being training). At pre-training, the community sample was marginally more likely than the research sample to think (Mcommunity = 6.42, SDcommunity = 1.64, Mresearch = 5.26, SDresearch = 2.21), t(46) = 1.98, p = .053, d = 0.58, and significantly more likely to feel (Mcommunity = 5.95, SDcommunity = 2.04, Mresearch = 4.58, SDresearch = 2.38), t(46) = 2.08, p = .043, d = 0.61, that the training would be beneficial. Following training, the community sample (M = 5.21, SD = 2.53) reported greater improvement in well-being compared to the research sample (M = 3.48, SD = 2.47), t(46) = 2.37, p = .022, d = 0.69.

Only Two Aspects of Self-Reported Mindfulness Increased with Both Trainings

Cronbach’s alphas for the FFMQ scales were as follows: observe = 0.77, describe = 0.90, acting with awareness = 0.91, non-judgment = 0.91, and non-reactivity = 0.78. There was a main effect of time on FFMQ Observe, F(1,45) = 7.43, p = .009, ηp² = 0.14, CI90% = [0.02, 0.29], with scores increasing from pre- to post-training. There was no significant main effect of group, F(1,45) = 1.78, p = .189, ηp² = 0.04, CI90% = [0.00, 0.16], or group by time interaction, F(1,45) = 1.10, p = .300, ηp² = 0.02, CI90% = [0.00, 0.13]. There was a significant main effect of time on FFMQ Describe, F(1,45) = 12.06, p = .001, ηp² = 0.21, CI90% = [0.06, 0.37], power = 0.92, with scores increasing from pre- to post-training. There was no significant main effect of group, F(1,45) = 1.19, p = .282, ηp² = 0.03, CI90% = [0.00, 0.14], and no significant group by time interaction, F(1,45) = .18, p = .672, ηp² < 0.01, CI90% = [0.00, 0.08]. Neither the other FFMQ scales (i.e., Act with Awareness, Nonreactivity, Nonjudgment) nor the breath counting task revealed any significant main effects of time, Fs(1,45) < 1, ps ≥ .552, ηp²s < 0.01, CIs90% = [0.00, 0.09], main effects of group, Fs(1,46) ≤ 3.93, ps ≥ .056, ηp²s ≤ 0.06, CIs90% = [0.00, 0.22], or group by time interactions, Fs(1,46) < 1, ps ≥ .360, ηp²s ≤ 0.02, CIs90% = [0.00, 0.12].

One Measure of Attentional Control Improved with Mindfulness Training and Inhibitory Control Improved with Both Trainings

For T2 at 202 ms on the Attentional Blink task, there was a significant main effect of time, F(1,43) = 8.51, p = .006, ηp² = 0.16, 90% CI [0.03, 0.31], power = 0.81, indicating that accuracy improved from pre- to post-training. There was no significant main effect of group, F(1,43) < 0.01, p = .976, ηp² < 0.01, 90% CI [0.00, 0.01], and no significant group by time interaction, F(1,43) = 2.50, p = .121, ηp² = 0.05, 90% CI [0.00, 0.19]. For T2 at 370 ms, there was no main effect of group, F(1,43) < 0.01, p = .923, ηp² < 0.01, 90% CI [0.00, 0.01]. There was a significant main effect of time, F(1,43) = 10.51, p = .002, ηp² = 0.20, 90% CI [0.04, 0.34], power = 0.89, indicating that accuracy improved from pre- to post-training. This main effect was qualified by a group by time interaction, F(1,43) = 4.59, p = .038, ηp² = 0.10, 90% CI [0.01, 0.24]. The FAM group improved significantly following training, t(25) = 3.69, p = .001, d = 0.70, whereas the mind-wandering group did not, t(25) = 1.62, p = .12, d = 0.19.
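In a 2 (group) × 2 (time) mixed design, the group by time interaction is equivalent to comparing the two groups’ change scores: the interaction F equals the square of a pooled-variance independent-samples t on (post − pre), with df = n₁ + n₂ − 2. The Python sketch below makes that equivalence concrete. It is a general illustration, not the authors’ analysis code, and the scores in the usage lines are made up.

```python
import math
from statistics import mean, variance


def interaction_from_change_scores(pre_a, post_a, pre_b, post_b):
    """Group x time interaction in a 2 (group) x 2 (time) mixed design.

    The interaction F equals t**2 from a pooled-variance independent-samples
    t-test on change scores (post - pre), with df = n_a + n_b - 2.
    Returns (t, df, F, partial eta squared).
    """
    change_a = [post - pre for pre, post in zip(pre_a, post_a)]
    change_b = [post - pre for pre, post in zip(pre_b, post_b)]
    n_a, n_b = len(change_a), len(change_b)
    df = n_a + n_b - 2
    # Pooled variance of the change scores across both groups
    pooled_var = ((n_a - 1) * variance(change_a) + (n_b - 1) * variance(change_b)) / df
    se = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    t = (mean(change_a) - mean(change_b)) / se
    f = t ** 2
    return t, df, f, f / (f + df)


# Made-up scores, not study data: group A gains more than group B
t, df, f, eta = interaction_from_change_scores(
    pre_a=[10, 12, 14], post_a=[12, 16, 20],
    pre_b=[10, 12, 14], post_b=[10, 13, 16],
)
print(f"t({df}) = {t:.2f}, F(1,{df}) = {f:.2f}, partial eta^2 = {eta:.2f}")
```

The follow-up within-group t-tests reported above then ask whether each group’s change score differs from zero on its own.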

On the Simon task, there was a significant main effect of time, F(1,43) = 4.95, p = .031, ηp² = 0.10, 90% CI [0.01, 0.25], indicating reduced interference from pre- to post-training. There was no main effect of group, F(1,43) = 0.10, p = .758, ηp² < 0.01, 90% CI [0.00, 0.07], or group by time interaction, F(1,43) < 0.01, p = .978, ηp² < 0.01, 90% CI [0.00, 0.01]. For the Keep Track task and WAIS-IV Similarities, there were no significant main effects of time, Fs(1,45) ≤ 1.53, ps ≥ .222, ηp²s ≤ 0.03, 90% CIs [0.00, 0.15], main effects of group, Fs(1,45) ≤ 0.02, ps ≥ .888, ηp²s < 0.01, 90% CIs [0.00, 0.02], or group by time interactions, Fs(1,43) ≤ 0.53, ps ≥ .470, ηp²s ≤ 0.01, 90% CIs [0.00, 0.11].
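Simon interference is conventionally scored as the reaction-time cost of spatially incongruent trials: mean correct-trial RT on incongruent trials minus mean correct-trial RT on congruent trials, so a smaller score after training means reduced interference. The Python sketch below assumes that conventional scoring; this section does not state the exact scoring rule (e.g., any RT trimming), so the function and its tuple format are hypothetical.

```python
from statistics import mean


def simon_interference(trials):
    """Simon interference: mean correct-trial RT, incongruent minus congruent (ms).

    `trials` is an iterable of (condition, rt_ms, correct) tuples, where
    condition is "congruent" or "incongruent". Error trials are dropped.
    """
    rts = {"congruent": [], "incongruent": []}
    for condition, rt_ms, correct in trials:
        if correct:
            rts[condition].append(rt_ms)
    return mean(rts["incongruent"]) - mean(rts["congruent"])


# Hypothetical trials for one participant at one session
session = [
    ("congruent", 500, True), ("congruent", 520, True),
    ("incongruent", 560, True), ("incongruent", 580, True),
    ("incongruent", 900, False),  # error trial, excluded from the mean
]
print(simon_interference(session))
```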

Emotion Regulation Did Not Improve with Training

For unpleasant stimuli at the 4-s SOA, there was a significant main effect of group, F(1,38) = 6.22, p = .017, ηp² = 0.14, 90% CI [0.01, 0.30], such that the FAM group showed less interference (M = −0.04, SD = 0.20) than the mind-wandering group (M = −0.16, SD = 0.23). There was no significant main effect of time, F(1,38) = 0.01, p = .915, ηp² < 0.01, 90% CI [0.00, 0.01], or group by time interaction, F(1,38) = 0.91, p = .347, ηp² = 0.02, 90% CI [0.00, 0.14]; because the group difference did not change with training, it cannot be attributed to the intervention. For unpleasant stimuli at the 1-s SOA and pleasant stimuli at both SOAs, there were no significant main effects of time, Fs(1,38) < 1, ps ≥ .413, ηp²s ≤ 0.02, 90% CIs [0.00, 0.13], main effects of group, Fs(1,38) ≤ 1.37, ps ≥ .249, ηp²s ≤ 0.03, 90% CIs [0.00, 0.17], or group by time interactions, Fs(1,38) ≤ 2.75, ps ≥ .105, ηp²s ≤ 0.07, 90% CIs [0.00, 0.22].

Self-Reported Cognitive Errors in Everyday Life Were Not Reduced by Training

Cronbach’s alphas for the individual scales were as follows: MMQ Ability = 0.90, MMQ Contentment = 0.96, ARCES = 0.83, and CFQ = 0.91. There were no significant main effects of group or time and no group by time interactions for self-reported daily cognitive errors, Fs(1,42) ≤ 2.04, ps ≥ .161, ηp²s ≤ 0.05, 90% CIs [0.00, 0.18].
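The internal-consistency values reported for each questionnaire are Cronbach’s alpha, α = k/(k − 1) × (1 − Σ item variances / variance of total scores), where k is the number of items. A minimal Python sketch of that formula follows; the item-by-participant layout and the example scores are assumptions for illustration only.

```python
from statistics import variance


def cronbach_alpha(items):
    """Cronbach's alpha from item scores.

    `items` is a list of k lists; items[j][i] is participant i's score on item j.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # one total per participant
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical 3-item scale answered by 4 participants
print(round(cronbach_alpha([[1, 2, 3, 4], [2, 2, 3, 5], [1, 3, 3, 4]]), 2))
```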

Discussion

This study examined the effects of a brief online FAM training on mindfulness, cognitive and emotional functioning, and everyday cognitive errors in healthy, non-meditating older adults. A secondary aim was to address recent calls for greater methodological rigor in mindfulness research by using an RCT design with an active control group, measuring credibility and expectancy, using objective measures, and isolating mechanisms of action of mindfulness. We found partial support for improved attentional control following mindfulness training, but overall, our hypotheses were not supported. Specifically, we did not find that training affected breath counting accuracy (a behavioral measure of mindfulness), improved updating/working memory or emotion regulation, or reduced daily cognitive errors. Additionally, aspects of self-reported mindfulness and inhibitory control increased with both trainings rather than only with mindfulness training. However, the absence of statistically significant group differences is not affirmative evidence that the interventions had equivalent effects on these outcomes. The study likely lacked the power to detect small differences between the groups, and in many cases the confidence intervals for the estimated effects included effect sizes large enough to be meaningful.
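The distinction between “no significant difference” and “equivalent” can be made concrete with the two one-sided tests (TOST) procedure described by Lakens (2017): equivalence is claimed only if the difference is shown to be both above a lower bound and below an upper bound set in advance. The Python sketch below uses a normal approximation for brevity; the function name, the bound, and the numbers in the usage lines are hypothetical and are not taken from this study.

```python
from statistics import NormalDist


def tost_equivalence(diff: float, se: float, bound: float, alpha: float = 0.05):
    """Two one-sided tests (TOST) for equivalence, using a normal approximation.

    Tests H0: diff <= -bound and H0: diff >= +bound. Equivalence is claimed only
    if both one-sided tests reject, which is the same as the (1 - 2*alpha)
    confidence interval for diff lying entirely inside (-bound, +bound).
    Returns (p_tost, equivalent).
    """
    z = NormalDist()
    p_lower = 1 - z.cdf((diff + bound) / se)  # test against the lower bound
    p_upper = z.cdf((diff - bound) / se)      # test against the upper bound
    p_tost = max(p_lower, p_upper)
    return p_tost, p_tost < alpha


# Hypothetical group difference of 0.1 (SE 0.3) with an equivalence bound of 0.5
p, equivalent = tost_equivalence(diff=0.1, se=0.3, bound=0.5)
print(f"TOST p = {p:.3f}, equivalent = {equivalent}")
```

In this example an ordinary two-sided z test of the difference gives p ≈ 0.74, far from significant, yet the TOST p is about 0.09, so equivalence is not established either. With samples as small as this one, a t-based TOST is preferable to the normal approximation.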

Attentional blink accuracy improved from pre- to post-training in the FAM group on one of two measures, suggesting that mindfulness training had a modest effect on attentional control. This finding is consistent with Malinowski’s (2013) proposal that the development of attentional control lies at the core of mindfulness practice. However, on the other attentional blink measure, both groups improved and there was no significant interaction, which tempers confidence in this finding. These data require replication in appropriately powered studies to determine the true effects.

Both trainings may have cultivated some aspects of mindfulness, specifically awareness of internal and external experiences (Observe) and labeling of observed experiences with words (Describe). Baer et al. (2006, 2008) showed that Observe appears to be the facet most sensitive to experience with mindfulness practice, but also that it did not correlate with the other subscales or load onto the latent mindfulness variable in non-meditators. This makes change in Observe over time difficult to interpret, as mindfulness practice may itself change how the questions are interpreted (Grossman and Van Dam 2011). Inhibitory control also improved in both groups, although we cannot rule out practice effects.

It is possible that the choice of control condition contributed to the absence of group differences on some of our measures. We asked participants to engage deliberately (i.e., in a controlled, focused way) in “mind wandering,” rather than to mind-wander spontaneously, which is often automatic, task-unrelated thinking that sometimes occurs without full awareness. These two types of mind wandering are distinguishable at the neurobiological level: they are associated with different brain structures and patterns of connectivity (Golchert et al. 2017). Asking participants to mind-wander deliberately may have inadvertently encouraged another type of meditation practice, open monitoring. In open monitoring practices, individuals become aware of thoughts, feelings, and sensations as they arise without trying to control them (Lutz et al. 2008). In some ways, this is what participants in the mind-wandering condition were asked to do, which could partly explain the lack of group differences.

Beyond the absence of group differences on the few measures that improved from pre- to post-training, most measures did not change over time at all. Several factors may have contributed to these null findings. The first concerns our sample characteristics. Brief mindfulness training may be particularly beneficial for individuals low in baseline cognition and dispositional mindfulness (Mallya and Fiocco 2016; Watier and Dubois 2016). Participants in our sample were high in both, suggesting they may have had less to gain from FAM training. Although normative data were unavailable for most tasks because of their experimental nature, mean performance on WAIS-IV Similarities was in the High Average/Superior range. Similarly, baseline self-reported mindfulness scores were numerically similar to those of individuals with prior meditation experience (e.g., Baer et al. 2008) and of individuals who had completed mindfulness training (e.g., Wahbeh et al. 2016). This may have left little room for improvement over a relatively short period of time.

A second consideration in interpreting these mostly null findings is motivation. Compared with younger adults, older adults are more selective about engaging in cognitively demanding tasks because of the psychological costs of effortful thinking. If a task is effortful and its perceived value or importance is low, older adults are more likely to disengage (Ennis et al. 2013). In the present study, the task (mindfulness practice) was highly effortful and potentially low in perceived value or importance (e.g., participants did not set an intention or personally relevant reason for practice), and CEQ results suggest that the training did not meet expectations. We discuss these issues below.

First, mindfulness is a difficult skill for older adults to learn and practice (Lomas et al. 2016). The mindfulness group enjoyed the training less than the control group, which we theorize could be related to the difficulty and effort of FAM, especially compared with the ease and familiarity of mind wandering. Second, participants did not set an intention for practice (i.e., a personally relevant reason for practice), which Shapiro et al. (2006) argued is one of the central tenets of mindfulness practice. In a finding that may reflect the importance of intention (and of establishing the value of the practice), the community sample (self-selected to participate) was more likely than the research sample (invited to participate) to feel the training would be beneficial and reported greater perceived improvement. Third, participants in both conditions became less satisfied with the training. On average, both groups rated the training as somewhat intrusive, and comments suggested that it was difficult to incorporate into daily life, mainly because of session length (i.e., 22 min). Comments also included concerns that 6 weeks of guided practice was too repetitive and distracting, and not relaxing or calming enough, to allow optimal engagement with the practice. These concerns may have contributed to lower expectancy ratings after the 6 weeks of training. High effort, a lack of formal intentions, and decreasing satisfaction with the training may have lowered motivation to engage fully with the practice, limiting the potential for gains.

Despite the largely null findings, the present study had several notable methodological strengths. We used an RCT design with an active control condition and measured and/or controlled for many of the extraneous factors that could confound outcomes and complicate interpretation of results. According to Van Dam et al. (2018), studies using RCT designs with active, credible control groups are “absolutely crucial” to moving mindfulness research forward. Our two training conditions were matched for length, duration, delivery method, and instructor (certified in Cognitively-Based Compassion Training), so the groups did not differ in the amount of training or the quality of instruction in the audio recordings. We reduced expectancy bias by blinding participants to the experimental condition and describing both conditions as well-being training rather than mindfulness or meditation training (Prätzlich et al. 2016). Additionally, we used the CEQ to measure the credibility of both training conditions and participants’ expectancy of improvement before and after training. These data indicate that the two training conditions were comparably credible and led to similar expectancy for improvement in well-being.

Limitations and Future Research Directions

Our sample was relatively small, and our analyses were consequently underpowered. Replication with a larger sample and adequate power for interaction terms will be important for detecting the small to medium effects suggested by this and previous mindfulness studies (Eberth and Sedlmeier 2012; Gallant 2016; Gard et al. 2014a). Our sample of older adults was also not representative, which limits the generalizability of the results. Participants did not use our training-recording program accurately, so we were unable to confirm training compliance with objective data. This also raises the possibility that some participants found the training delivery mode challenging (e.g., technologically complex). Time-of-day effects should also be considered: Yoon et al. (1999) found that morning was the optimal time of day for older adults’ performance on cognitive tasks. Although participants chose their own time of day for testing (and pre- and post-training testing occurred at similar times), testing at non-optimal times of day may have obscured effects. In addition, although all of our measures had previously been used with older adults, not all had been psychometrically validated in older adult samples. Finally, our study was intentionally designed to minimize potentially confounding “common factors” (e.g., social engagement) and to isolate unique components of mindfulness. In doing so, however, we may have decontextualized those components (e.g., Grossman and Van Dam 2011), potentially diluting the training and giving participants an incomplete practice. For example, the full benefits of mindfulness practice may require face-to-face interaction with an instructor, particularly for supporting practitioners’ inquiry during their practice (Spijkerman et al. 2016).
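To give a rough sense of the sample needed for an interaction term, the 2 × 2 group by time interaction can be treated as a two-group comparison of change scores, converting ηp² to Cohen’s d via d = 2√(ηp² / (1 − ηp²)) and then applying the usual normal-approximation formula n per group = 2((z₁₋α/₂ + z_power) / d)². The Python sketch below is an assumption-laden illustration, not a power analysis from this study; exact noncentral-t or noncentral-F calculations (e.g., in G*Power; Faul et al. 2009) give slightly larger values, and effect sizes observed in small samples tend to be inflated, so the result is likely optimistic.

```python
import math
from statistics import NormalDist


def n_per_group_for_interaction(eta_p2: float, power: float = 0.80,
                                alpha: float = 0.05) -> int:
    """Approximate participants per group to detect a 2 x 2 mixed interaction.

    Treats the interaction as a two-group comparison of change scores:
    d = 2 * sqrt(eta_p2 / (1 - eta_p2)), then
    n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2, rounded up.
    Normal approximation; exact t/F-based calculations give slightly larger n.
    """
    d = 2 * math.sqrt(eta_p2 / (1 - eta_p2))
    z = NormalDist()
    n = 2 * ((z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)) / d) ** 2
    return math.ceil(n)


# Interaction size observed for attentional blink accuracy at 370 ms (partial eta^2 = 0.10)
print(n_per_group_for_interaction(0.10))
```

Under these assumptions, an interaction of ηp² = 0.10 would need about 36 participants per group for 80% power at α = .05, and smaller interactions would need considerably more.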

We believe that the present study highlights some important considerations for future mindfulness studies, particularly with older adults. Future studies may wish to consider the feedback we received about repetition, wordiness/distractibility, appropriate guidance, and sufficient reasons for training; this feedback was consistent with some of Lomas et al.’s (2016) published recommendations. Additionally, helping participants identify a specific intention for their practice may help sustain motivation over an extended period of such effortful practice.

References

Baer, R. A., Smith, G. T., Hopkins, J., Krietemeyer, J., & Toney, L. (2006). Using self-report assessment methods to explore facets of mindfulness. Assessment, 13(1), 27–45.

Baer, R. A., Smith, G. T., Lykins, E., Button, D., Krietemeyer, J., Sauer, S., et al. (2008). Construct validity of the five facet mindfulness questionnaire in meditating and nonmeditating samples. Assessment, 15(3), 329–342.

Broadbent, D. E., Cooper, P. F., FitzGerald, P., & Parkes, K. R. (1982). The cognitive failures questionnaire (CFQ) and its correlates. British Journal of Clinical Psychology, 21(1), 1–16.

Cheyne, J. A., Carriere, J., & Smilek, D. (2006). Absentmindedness: lapses of conscious awareness and everyday cognitive failures. Consciousness and Cognition, 15(3), 578–592.

Dale, G., & Arnell, K. M. (2013). How reliable is the attentional blink? Examining the relationships within and between attentional blink tasks over time. Psychological Research, 77(2), 99–105.

Daszykowski, M., Kaczmarek, K., Vander Heyden, Y., & Walczak, B. (2007). Robust statistics in data analysis—a review: Basic concepts. Chemometrics and Intelligent Laboratory Systems, 85(2), 203–219.

Davidson, R. J., & Kaszniak, A. W. (2015). Conceptual and methodological issues in research on mindfulness and meditation. American Psychologist, 70(7), 581–592.

Devilly, G. J., & Borkovec, T. D. (2000). Psychometric properties of the credibility/expectancy questionnaire. Journal of Behavior Therapy and Experimental Psychiatry, 31(2), 73–86.

Dziak, J. J., Dierker, L. C., & Abar, B. (2018). The interpretation of statistical power after the data have been gathered. Current Psychology. Advance online publication. https://doi.org/10.1007/s12144-018-0018-1.

Eberth, J., & Sedlmeier, P. (2012). The effects of mindfulness meditation: a meta-analysis. Mindfulness, 3(3), 174–189.

Ennis, G. E., Hess, T. M., & Smith, B. T. (2013). The impact of age and motivation on cognitive effort: implications for cognitive engagement in older adulthood. Psychology and Aging, 28(2), 495–504.

Faul, F., Erdfelder, E., Buchner, A., & Lang, A. (2009). Statistical power analyses using G*Power 3.1: tests for correlation and regression analyses. Behavior Research Methods, 41(4), 1149–1160.

Field, A. (2009). Discovering statistics using SPSS: and sex and drugs and rock ‘n’ roll (3rd ed.). Washington, DC: Sage Publications.

Forster, K. I., & Forster, J. C. (2003). DMDX: a windows display program with millisecond accuracy. Behavior Research Methods, Instruments, & Computers, 35(1), 116–124.

Gallant, S. N. (2016). Mindfulness meditation practice and executive functioning: Breaking down the benefit. Consciousness and Cognition, 40, 116–130.

Gard, T., Hölzel, B. K., & Lazar, S. W. (2014a). The potential effects of meditation on age-related cognitive decline: a systematic review. Annals of the New York Academy of Sciences, 1307(1), 89–103.

Gard, T., Taquet, M., Dixit, R., Hölzel, B. K., de Montjoye, Y. A., Brach, N., et al. (2014b). Fluid intelligence and brain functional organization in aging yoga and meditation practitioners. Frontiers in Aging Neuroscience, 6. https://doi.org/10.3389/fnagi.2014.00076.

Geiger, P. J., Boggero, I. A., Brake, C. A., Caldera, C. A., Combs, H. L., Peters, J. R., & Baer, R. A. (2016). Mindfulness-based interventions for older adults: a review of the effects on physical and emotional well-being. Mindfulness, 7(2), 296–307.

Glisky, E. L. (2007). Changes in cognitive function in human aging. In D. R. Riddle (Ed.), Brain aging: models, methods, and mechanisms (pp. 3–20). Boca Raton: CRC Press/Taylor & Francis.

Golchert, J., Smallwood, J., Jefferies, E., Seli, P., Huntenburg, J. M., Liem, F., et al. (2017). Individual variation in intentionality in the mind-wandering state is reflected in the integration of the default-mode, fronto-parietal, and limbic networks. Neuroimage, 146, 226–235.

Grossman, P., & Van Dam, N. T. (2011). Mindfulness, by any other name… trials and tribulations of sati in western psychology and science. Contemporary Buddhism: An Interdisciplinary Journal, 12(1), 219–239.

Hull, R., Martin, R. C., Beier, M. E., Lane, D., & Hamilton, A. C. (2008). Executive function in older adults: a structural equation modeling approach. Neuropsychology, 22(4), 508–522.

Kabat-Zinn, J. (1990). Full catastrophe living: using the wisdom of your body and mind to face stress, pain, and illness. New York: Delacorte.

Kaszniak, A. W., & Menchola, M. (2012). Behavioral neuroscience of emotion in aging. Current Topics in Behavioral Neurosciences, 10, 51–66.

Lakens, D. (2017). Equivalence tests: a practical primer for t tests, correlations, and meta-analyses. Social Psychological and Personality Science, 8(4), 355–362.

Lang, P. J., Bradley, M. M., & Cuthbert, B. N. (2008). International affective picture system (IAPS): Affective ratings of pictures and instruction manual. Gainesville: University of Florida.

Levinson, D. B., Stoll, E. L., Kindy, S. D., Merry, H. L., & Davidson, R. J. (2014). A mind you can count on: Validating breath counting as a behavioral measure of mindfulness. Frontiers in Psychology, 5. https://doi.org/10.3389/fpsyg.2014.01202.

Lomas, T., Ivtzan, I., & Yong, C. Y. (2016). Mindful living in older age: a pilot study of a brief, community-based, positive aging intervention. Mindfulness, 7(3), 630–641.

Lutz, A., Slagter, H. A., Dunne, J. D., & Davidson, R. J. (2008). Attention regulation and monitoring in meditation. Trends in Cognitive Sciences, 12(4), 163–169.

Malinowski, P. (2013). Neural mechanisms of attentional control in mindfulness meditation. Frontiers in Neuroscience, 7. https://doi.org/10.3389/fnins.2013.00008.

Mallya, S., & Fiocco, A. J. (2016). Effects of mindfulness training on cognition and well-being in healthy older adults. Mindfulness, 7(2), 453–465.

Mather, M., & Carstensen, L. L. (2005). Aging and motivated cognition: the positivity effect in attention and memory. Trends in Cognitive Sciences, 9(10), 496–502.

Miyake, A., Friedman, N. P., Emerson, M. J., Witzki, A. H., & Howerter, A. (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: a latent variable analysis. Cognitive Psychology, 41(1), 49–100.

Nielsen, L., & Kaszniak, A. W. (2006). Awareness of subtle emotional feelings: a comparison of long-term meditators and nonmeditators. Emotion, 6(3), 392–405.

Nisbett, R. E., Aronson, J., Blair, C., Dickens, W., Flynn, J., Halpern, D. F., & Turkheimer, E. (2012). Intelligence: new findings and theoretical developments. American Psychologist, 67(2), 130–159.

Ortner, C. N. M., Kilner, S. J., & Zelazo, P. D. (2007). Mindfulness meditation and reduced emotional interference on a cognitive task. Motivation and Emotion, 31(4), 271–283.

Pace, T. W. W., Negi, L. T., Adame, D. D., Cole, S. P., Sivilli, T. I., Brown, T. D., et al. (2009). Effect of compassion meditation on neuroendocrine, innate immune and behavioral responses to psychosocial stress. Psychoneuroendocrinology, 34(1), 87–98.

Park, D. C., & Reuter-Lorenz, P. (2009). The adaptive brain: aging and neurocognitive scaffolding. Annual Review of Psychology, 60, 173–196.

Prakash, R., Rastogi, P., Dubey, I., Abhishek, P., Chaudhury, S., & Small, B. J. (2012). Long-term concentrative meditation and cognitive performance among older adults. Aging, Neuropsychology, and Cognition, 19(4), 479–494.

Prakash, R. S., De Leon, A. A., Patterson, B., Schirda, B. L., & Janssen, A. L. (2014). Mindfulness and the aging brain: a proposed paradigm shift. Frontiers in Aging Neuroscience, 6. https://doi.org/10.3389/fnagi.2014.00120.

Prätzlich, M., Kossowsky, J., Gaab, J., & Krummenacher, P. (2016). Impact of short-term meditation and expectation on executive brain functions. Behavioural Brain Research, 297, 268–276.

Raes, A. K., Bruyneel, L., Loeys, T., Moerkerke, B., & De Raedt, R. (2015). Mindful attention and awareness mediate the association between age and negative affect. Journals of Gerontology Series B: Psychological Sciences and Social Sciences, 70(2), 179–188.

Royall, D. R., Palmer, R., Chiodo, L. K., & Polk, M. J. (2004). Declining executive control in normal aging predicts change in functional status: the freedom house study. Journal of the American Geriatrics Society, 52(3), 346–352.

Shapiro, S. L., Carlson, L. E., Astin, J. A., & Freedman, B. (2006). Mechanisms of mindfulness. Journal of Clinical Psychology, 62(3), 373–386.

Smart, C. M., Segalowitz, S. J., Mulligan, B. P., Koudys, J., & Gawryluk, J. R. (2016). Mindfulness training for older adults with subjective cognitive decline: results from a pilot randomized controlled trial. Journal of Alzheimer’s Disease, 52(2), 757–774.

Spijkerman, M. P. J., Pots, W. T. M., & Bohlmeijer, E. T. (2016). Effectiveness of online mindfulness-based interventions in improving mental health: a review and meta-analysis of randomized controlled trials. Clinical Psychology Review, 45, 102–114.

Troyer, A. K., & Rich, J. B. (2002). Psychometric properties of a new metamemory questionnaire for older adults. The Journals of Gerontology, Series B: Psychological Sciences and Social Sciences, 57B(1), P19–P27.

Van Dam, N. T., van Vugt, M. K., Vago, D. R., Schmalzl, L., Saron, C. D., Olendzki, A., et al. (2018). Mind the hype: a critical evaluation and prescriptive agenda for research on mindfulness and meditation. Perspectives on Psychological Science, 13(1), 36–61.

van Leeuwen, S., Müller, N. G., & Melloni, L. (2009). Age effects on attentional blink performance in meditation. Consciousness and Cognition, 18(3), 593–599.

van Vugt, M. K., & Slagter, H. A. (2014). Control over experience? Magnitude of the attentional blink depends on meditative state. Consciousness and Cognition, 23, 32–39.

Wahbeh, H., Goodrich, E., & Oken, B. S. (2016). Internet mindfulness meditation for cognition and mood in older adults: a pilot study. Alternative Therapies in Health and Medicine, 22(2), 44–53.

Watier, N., & Dubois, M. (2016). The effects of a brief mindfulness exercise on executive attention and recognition memory. Mindfulness, 7(3), 745–753.

Wechsler, D. (2008). WAIS-IV: Wechsler Adult Intelligence Scale. San Antonio: Psychological Corporation.

Yoon, C., May, C. P., & Hasher, L. (1999). Aging, circadian arousal, and cognition. In N. Schwarz, D. Park, B. Knäuper, & S. Sudman (Eds.), Aging, cognition and self reports (pp. 117–143). Washington, DC: Psychology Press.

Zellner Keller, B., Singh, N. N., & Winton, A. S. W. (2014). Mindfulness-based cognitive approach for seniors (MBCAS): program development and implementation. Mindfulness, 5(4), 453–459.

Acknowledgments

We are grateful to our excellent team of research assistants, including Dimitri Macris, Hannah Ritchie, Sara Feld, Katelyn McVeigh, Christina Boudreau, Jacqueline Marquez, Stephen Barney, Katie Huynh, Saphire Miramontes, Natasha Moushegian, Miyla McIntosh, and Xunchang Fang. Thank you to Lindsey Knowles and Deanna Kaplan for invaluable feedback and suggestions and to the participants who generously contributed their time and efforts to this study.

Funding

This study was funded by Mind and Life Institute (grant number: 2015–1440-Polsinelli).

Author information

Contributions

AJP: designed and executed the study, analyzed the data, and wrote the manuscript. AWK: collaborated with the design of the study and final editing of the manuscript. ELG: collaborated with the design of the study and final editing of the manuscript. DA: collaborated with the design of the study and final editing of the manuscript.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflicts of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. The study was approved by the Human Research Ethics Committee of the University of Arizona (no. 1300000709).

Informed Consent

All participants provided written, informed consent.

Additional information

OSF Link

OSF link for supplementary materials (i.e., appendices) and data: https://osf.io/kw6yp/?view_only=e900dbd0acb945d5b645833a3c4a715a

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Polsinelli, A.J., Kaszniak, A.W., Glisky, E.L. et al. Effects of a Brief, Online, Focused Attention Mindfulness Training on Cognition in Older Adults: a Randomized Controlled Trial. Mindfulness 11, 1182–1193 (2020). https://doi.org/10.1007/s12671-020-01329-2

DOI: https://doi.org/10.1007/s12671-020-01329-2