Abstract

Students’ goal-setting skills are closely related to their academic performance and motivation. A review of the literature revealed limited research both on goal-setting strategies applicable in higher education and on the use of technology to support goal-setting processes. Addressing these two gaps, this study explored the use of digital badges as an innovative approach to facilitating student goal-setting. The digital badge is a digital technology that serves as both a micro-credential and a micro-learning platform. A digital badge is a clickable image that represents an accomplished skill or knowledge and carries a variety of metadata, such as learning requirements, instructional materials, endorsement information, issue date, and issuing institution, which allows badges to be created, earned, and shared online. In higher education, digital badges have the potential to assist students by promoting strategic management of the learning process, encouraging persistence and dedication to learning tasks, and improving learning performance. A qualitative multiple case study design (n = 4) was used to answer the research question: how did the undergraduate student participants in this study use digital badges to facilitate their goal-setting process throughout a 16-week hybrid course? The results contribute to understanding how digital badges can be integrated effectively to meaningfully improve self-regulated learning in higher education.

Introduction

A lack of goal-setting strategies can result in lower learning performance (Locke and Latham 1990). For example, students who set higher goals achieve better learning outcomes than those who simply try to do their best, and students who set specific goals outperform those who set general goals (Hollenbeck and Klein 1987; Locke and Latham 1990, 2002). Although scholars in psychology and education have extensively discussed the effects of goal-setting on learning performance, they have offered few practical solutions and strategies to help college students set goals (Hakulinen and Auvinen 2014; Locke and Latham 1990, 2002). Recently, researchers have proposed that digital badges (DBs), an innovative credentialing and pedagogical technology, may be an effective tool for facilitating the goal-setting process (Cheng et al. 2018; Chou and He 2017; Frederiksen 2013; Gamrat et al. 2014; McDaniel and Fanfarelli 2016; Randall et al. 2013). Despite the promise of DBs, little empirical evidence supports their integration into higher education courses. The purpose of this study was therefore to address this gap by using a qualitative multiple case study to investigate how college students used instructional DBs to facilitate their goal-setting process in pursuit of higher learning performance.

Theoretical framework

This study was built upon and guided by goal-setting theory (Locke and Latham 1990). According to this theory, a goal is an integration of objectives and evaluation standards (Locke 1991), whereas goal-setting is the process of establishing standards for performance (Locke and Latham 2002). Goal-setting is an essential part of the motivational and self-regulated learning process (Schunk 1990; Zimmerman 1989), because learners need both the capabilities and the beliefs to self-observe, self-judge, and self-react in order to achieve specific goals (Bandura 1990; Zimmerman 2000; Zimmerman et al. 1992). The relationship between goal-setting and performance lies at the core of goal-setting theory (Locke and Latham 2002; Schunk et al. 2014; Zimmerman et al. 1992). Scholars have identified three motivational mechanisms through which goal-setting benefits performance: effort, persistence, and concentration. Goal-setting encourages people to devote more effort and time to tasks with fewer distractions (Locke and Latham 1990), which contributes to better performance. However, when a goal related to a complex task is not achieved, the resulting dissatisfaction may hurt subsequent performance (Cervone et al. 1991; Strecher et al. 1995).

Goal-setting theorists have identified feedback, goal commitment, and task complexity as the key moderators of goal-setting effects on performance (Latham and Locke 1991; Locke and Latham 1990, 2006). Digital badges could be a useful tool for facilitating the feedback process and thereby moderating goal-setting effects. Succeeding or failing to earn a badge constitutes feedback to a learner at specific points in their learning progress. In addition, some digital badge systems include a mastery-based learning mechanism, which allows learners to receive multiple rounds of feedback on their progress toward their goals (Besser 2016). With this support for feedback, learners who use digital badges could increase their self-efficacy, keep track of their learning progress, reflect on their goals, and adjust accordingly. Previous research found that intrinsic motivation is positively correlated with students’ interaction with gamified learning interventions (Buckley and Doyle 2016). With their gamified rewards and display of accomplishments, digital badges could potentially enhance learners’ self-efficacy for achieving their goals. Each badge also includes instructional materials and interactive learning activities that scaffold the learning process. These characteristics suggest that digital badges could also help scaffold complex tasks and thereby amplify goal-setting effects.

In this study, we examined learners’ experiences using digital badges to facilitate their goal-setting process in order to achieve better learning outcomes. Goal-setting theory informed our study by providing one explanation of the relationship between goals and performance and, more importantly, by offering a framework for identifying which components of the goal-setting process technology could act on to improve learning performance. We therefore drew on goal-setting theory when discussing the students’ perspectives on using digital badges as a tool for facilitating goal-setting.

Literature review

As higher education becomes more open and digital, institutions of higher education are searching for new ways to verify learning accomplishments and make learning more accessible to all learners (Matkin 2012). The application of digital badges is one current effort toward this goal. Human beings have a long history of using badges (Ahn et al. 2014), from shield emblems in Roman imperial armies (Kwon et al. 2015) to many aspects of modern life, such as merit badges in scouting (Abramovich et al. 2013) and digital games (Kriplean et al. 2008). Digital badges have gained increasing recognition as micro-credentials and pedagogical tools over the last decade (Gibson et al. 2015; Grant 2016; Halavais 2012). As the name implies, digital badges are digital symbols that represent differentiated levels of knowledge or experience and are used to encourage, recognize, and communicate achievements (Halavais et al. 2014). What makes this technology unique and valuable in education is that a digital badge includes important credentialing and learning metadata, such as the badge issue date and issuing authority, criteria for demonstrating the accomplishment of a skill or the acquisition of knowledge, supporting instructional materials and activities, the duration of the credential’s validity, and endorsement information.

Types and roles of digital badges in education

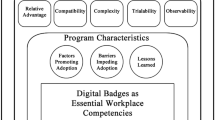

Different types of digital badges carry different metadata. Four types of DBs are used in education: candy badges for positive reinforcement, recognition badges for recognizing accomplishments, credential badges for certifying qualifications, and instructional badges that serve as a micro-learning platform and content management system (Cheng et al. 2018). These four types serve three major roles in education: as motivators of behavior, as pedagogical tools, and as verification of credentials (Ahn et al. 2014). Prior studies have found positive perceptions of using DBs as credentials to recognize and document achievements (Dyjur and Lindstrom 2017). Learner engagement and participation increased when DBs were used as recognition and rewards for attaining specific levels of performance (Denny 2013; Hamari 2017). However, researchers have found mixed results on the use of badges as extrinsic rewards (Abramovich et al. 2013; Hakulinen et al. 2013).

The digital badges used in this study were instructional badges that encapsulated metadata about a subject skill or knowledge area, the instructional materials and activities required to earn the badge, and links to evidence showing how the badge was earned (Cheng et al. 2018; Grant 2016; Newby et al. 2016). They were used to deliver various forms of learning modules, validate prior learning experiences, trace learning progress, reflect on prior achievement, and motivate learners to pursue higher goals (Ahn et al. 2014; Cucchiara et al. 2014). Detailed information about the instructional badges used in this study is provided in the methods section.

Goal effects on learning performance

Goal effects on learning performance have been found in previous research in both laboratory and field settings (Locke and Latham 1990, 2006). There are three main moderators of this goal-performance relationship: goal commitment, feedback, and task complexity. When people are more committed to specific goals, they devote greater effort, persistence, and focus to accomplishing them. One way to enhance goal commitment is publicness: when goals are made public rather than kept private, learners show greater commitment to them, especially to difficult ones (Salancik 1977). Feedback enhances goal effects because learners need to know how they are performing, whether they are on target, and how to adjust their performance strategies to match the goal. Task complexity also influences goal effects, often negatively, because individuals whose higher-level skills are not yet developed cannot easily find appropriate strategies for complex tasks beyond their capabilities (Locke and Latham 2002).

Integrating the use of digital badges with the goal-setting process

The relationship between digital badges and goal-setting in education has been discussed in depth (Antin and Churchill 2011; Chou and He 2017; Gamrat et al. 2014; Randall et al. 2013). For example, some researchers have proposed that DBs usually serve as external goals related to the pursuit of external rewards, such as wealth and fame (Deci and Ryan 1982; Vansteenkiste et al. 2004), which may inhibit students’ intrinsic motivation. In contrast, others have argued that DBs can be used as hooks or reasons to engage in a learning activity (Rughiniș 2013). Scholars have also proposed that DBs may support goal-setting by offering alternative ways to set personalized learning paths and by providing feedback (Antin and Churchill 2011; Chou and He 2017; McDaniel and Fanfarelli 2016; Randall et al. 2013). Meanwhile, a number of organizations have begun using DBs as sub-goals or stepping stones in the learning and goal-setting process to help students anticipate and reflect on their learning. For example, the Computer Science Social Network (CS2N) uses badges as curriculum maps and learning pathways that let students visualize and track their learning progress (Higashi et al. 2012). Similarly, Khan Academy encourages learners to earn different skill badges and publish them on Facebook, so that each badge becomes a public statement of one’s learning goals (Khan Academy 2012). Keller (2009) notes that goal orientation is important for motivating individuals to engage in a task. Based on the ARCS model of motivation, DBs could potentially be structured to help individuals recognize the relevance of a learning task by highlighting the goal and orienting the learner toward it.

Although researchers and practitioners foresee great potential for DBs to facilitate goal-setting, we found little empirical evidence showing how and why DBs help learners guide and facilitate their goal-setting process. This study addresses this gap by exploring undergraduate students’ experiences of using DBs as part of their goal-setting process in a college-level technology integration course. Drawing on the literature on DBs and on goal-setting theory (Locke and Latham 2002), this multiple case study focused on answering the following research question:

How did the undergraduate student participants in this study use digital badges to facilitate their goal-setting process throughout a 16-week hybrid course?

Methods

Study design

Informed by goal-setting theory, which considers goal-setting an important part of the motivational and self-regulated learning process (Schunk 1990; Zimmerman 1989), this study adopted a multiple-case (n = 4) research design (Yin 2018) to compare how two undergraduate students with high self-efficacy for self-regulated learning (SESR) and two undergraduate students with low SESR used digital badges to facilitate their goal-setting process. Self-efficacy for self-regulated learning refers to one’s perceptions of and beliefs about one’s capability to use specific strategies to self-observe, self-judge, and self-react; these beliefs are highly related to how well one can reach one’s goals, that is, the expected level of performance (Fontana et al. 2015). According to goal-setting theory, goal-setting and perceived self-efficacy are two related self-regulated learning processes (Schunk 1990).

Participants (cases) and context

Two groups of students, one with high and one with low SESR, were selected as cases in this study. Theoretical replication logic was used to select cases across groups, anticipating contrasting results for reasons predictable from theory and the literature, and literal replication logic was used to select two cases within each group that were expected to yield similar results (Yin 2018). In this study, Kevin and Anna (pseudonyms) had high SESR scores, while Kate and Lisa (pseudonyms) had low SESR scores.

Given the lack of a comprehensive measure of goal-setting, we used the Self-Efficacy for Self-Regulated Learning subscale of the Multidimensional Scales of Perceived Self-Efficacy (SESR-MSPSE) (Bandura 1990) as the case selection criterion in this study. This scale was selected for two primary reasons. First, students’ perceived self-regulatory efficacy, goal-setting, and academic achievement influence one another (Zimmerman et al. 1992), so the scale provides an approximation of students’ goal-setting characteristics. Second, self-efficacy for self-regulated learning is a key factor that facilitates goal commitment (Locke and Latham 2002) and a strong predictor of academic persistence and performance (Zimmerman 2000). The validity and reliability of the MSPSE have been established in previous research (Williams and Coombs 1996, p. 9), and the subscale was found to be reliable (11 items; α = .86) (Choi et al. 2001). The items of the self-regulated learning subscale are listed in “Appendix 1”. For each item, students rated their perceived self-efficacy on a 7-point scale (Bandura 1990). Although this scale is typically applied in studies of childhood depression and of middle school students’ academic achievement, research shows that it is also appropriate for use with college students (Choi et al. 2001).

Context

This study was conducted in a 16-week hybrid instructional technology course at a large, land-grant, Midwestern university. The course was mandatory for pre-service teachers in their first or second year. Its goal was to help students plan, implement, and evaluate technology for teaching and learning. Students were expected to evaluate various instructional technologies and determine how, when, and why such technologies could or should be used in their teaching and learning. A total of 150 pre-service teachers were enrolled in the course, and four of them were selected as cases in this study. The course consisted of three components: case discussions, lab practice, and online content. It used a flipped instructional model in which students engaged with course content both in the learning management system (Blackboard Learn) and on the digital badge platform (Passport) before and after weekly face-to-face lectures where they discussed that content. Students also spent time in weekly lab sessions working individually on their DBs. A teaching assistant (TA) attended the weekly lab sessions to answer student questions, grade badges, and give feedback.

Passport badging system and instructional digital badges

The Passport digital badge system was where the digital badges were created, stored, displayed, and delivered. After starting a badge, the student was presented with introductory materials, prerequisites, instructions, guidelines, and specific criteria for completing each challenge. Instructional materials were presented as text, hyperlinks, images, or multimedia. In each badge, learning tasks were presented as challenges, and the number of challenges varied with the complexity of the knowledge or skill. Students could finish most badges within a week, while some more complex badges typically took 2 or 3 weeks. Once a student submitted a challenge, the TA received an email notification and was required to provide feedback within 24 h. A student could submit multiple times before a given deadline, and each submission received some form of feedback. The badge was awarded once all of its challenges had been accepted. Each challenge was worth a specific number of points, and a student’s grade was based on overall performance across the challenges.
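The submit, review, and resubmit cycle described above behaves like a small state machine. The Python sketch below models it under our own assumptions and is not Passport’s actual implementation: the names `Status`, `Challenge`, `Badge`, `submit`, `review`, and `awarded` are hypothetical, deadlines are reduced to a boolean flag, and email notifications and point-based grading are left out.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import List


class Status(Enum):
    NOT_SUBMITTED = "not submitted"
    PENDING = "pending review"  # TA notified by email and expected to respond within 24 h
    DENIED = "denied"           # returned with feedback; the student may resubmit
    ACCEPTED = "accepted"


@dataclass
class Challenge:
    name: str
    status: Status = Status.NOT_SUBMITTED
    feedback: List[str] = field(default_factory=list)
    submissions: int = 0

    def submit(self, before_deadline: bool) -> None:
        # Multiple submissions are allowed, but only before the deadline.
        if not before_deadline:
            raise ValueError("submissions are only accepted before the deadline")
        # Assumption: an already accepted challenge cannot be reopened.
        if self.status is Status.ACCEPTED:
            raise ValueError("challenge already accepted")
        self.submissions += 1
        self.status = Status.PENDING

    def review(self, acceptable: bool, comment: str) -> None:
        # Every submission receives some form of feedback from the TA.
        if self.status is not Status.PENDING:
            raise ValueError("no submission awaiting review")
        self.feedback.append(comment)
        self.status = Status.ACCEPTED if acceptable else Status.DENIED


@dataclass
class Badge:
    title: str
    challenges: List[Challenge]

    def awarded(self) -> bool:
        # The badge is awarded only once every challenge has been accepted.
        return all(c.status is Status.ACCEPTED for c in self.challenges)


# Hypothetical usage: one challenge is denied, revised, and accepted.
screencast = Badge("Screencast", [Challenge("Plan the recording"), Challenge("Record and share")])
first = screencast.challenges[0]
first.submit(before_deadline=True)
first.review(acceptable=False, comment="Add a short script outline.")
first.submit(before_deadline=True)  # resubmission after feedback
first.review(acceptable=True, comment="Clear plan.")
# screencast.awarded() is still False: the second challenge has not been accepted.
```

Keeping the status on each challenge rather than on the badge mirrors the description above, where feedback and acceptance happen per challenge and the badge is only a roll-up of those outcomes.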

Students were required to complete eight badges in total as the main bulk of assignments in this course, in addition to four case studies, two online discussions, three quizzes, and a final exam. Students completed four required and four elective badges over the semester. Each week a badge was assigned, and students were asked to complete it within a specific period, which varied with the complexity of the badge. The four required badges were a digital literacy badge, an information literacy badge, a writing learning objectives badge, and an interactive e-learning module badge. These required badges asked students first to understand specific content topics by reading texts or watching videos, then to apply that knowledge through activities such as finding specific journal articles on the university library website or creating an e-learning module that integrated different types of technologies, and finally to internalize the knowledge or skill by writing reflections connecting it to their own experiences. In addition to the four required badges, students selected four additional badges from a total of 24 optional tool badges in categories such as research tools, brainstorming tools, video production tools, presentation tools, and audio editing tools. In each tool badge, students explored one instructional technology, practiced using it by creating a project, and then wrote a reflective paragraph on the value of using this tool in teaching and learning.

Procedure

Case selection

The SESR-MSPSE was included in a questionnaire completed by all students (N = 150) at the beginning of the semester (M = 2.4877, SD = .52187, Min = 1, Max = 4). Students were also asked to provide demographic information. An email invitation to be interviewed, together with a consent form, was sent to the student with the highest score on the SESR-MSPSE subscale. If the student agreed to participate, he or she was selected as a case in the high SESR group; if not, we invited the student with the second-highest score. This process continued until two cases had been found. The same selection logic was applied to the low SESR group, starting from the lowest scores. Each participant was assigned a pseudonym to provide anonymity and protect confidentiality. Participants’ basic information and their SESR-MSPSE scores are presented in Table 1.
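The sequential invitation procedure above amounts to walking down a ranked list until enough students consent. The Python sketch below is illustrative only: the function `select_cases`, the student identifiers, the scores, and the `consents` callback are all hypothetical, and ties in score are simply kept in list order because `sorted` is stable.

```python
from typing import Callable, List, Tuple


def select_cases(
    scores: List[Tuple[str, float]],
    agrees: Callable[[str], bool],
    n_cases: int = 2,
    highest_first: bool = True,
) -> List[str]:
    """Invite students in rank order until n_cases have consented (hypothetical helper)."""
    # Rank by subscale score: descending for the high group, ascending for the low group.
    ranked = sorted(scores, key=lambda pair: pair[1], reverse=highest_first)
    selected: List[str] = []
    for student_id, _score in ranked:
        if len(selected) == n_cases:
            break  # enough consenting cases found; stop inviting
        # An invitation and consent form go out; only consenting students become cases.
        if agrees(student_id):
            selected.append(student_id)
    return selected


# Hypothetical data: (student id, subscale score) pairs and the students who decline.
scores = [("s1", 3.9), ("s2", 4.0), ("s3", 1.1), ("s4", 3.7), ("s5", 1.0), ("s6", 1.4)]
declined = {"s2", "s5"}


def consents(student_id: str) -> bool:
    return student_id not in declined


high_group = select_cases(scores, consents, highest_first=True)
low_group = select_cases(scores, consents, highest_first=False)
```

With this made-up data, `high_group` is `["s1", "s4"]` because the top scorer `s2` declines, and `low_group` is `["s3", "s6"]` because `s5` declines.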

Data collection

The primary data source in this study was semi-structured interviews. The interview protocol was designed to elicit the learning experiences of the four students (see “Appendix 2” for interview items). To increase face validity, two students enrolled in the same course reviewed the protocol to judge whether the questions made sense to them. To increase content validity, a professor of instructional technology evaluated the extent to which the interview questions reflected students’ application of digital badges in their goal-setting processes (Polit and Beck 2006). One of the researchers conducted one interview with each of the four participants 2 weeks after the course ended. Each interview lasted approximately 45 min and was recorded and transcribed verbatim. One researcher transcribed the interviews, and another checked the transcripts for accuracy. Each transcript was 8 to 12 pages long.

Data analysis

One researcher served as the coder. Each case was coded first as a whole and then question by question. Pseudonyms were assigned before coding began. The coder knew that two participants had high SESR and the other two had low SESR but did not know which pseudonyms corresponded to which group.

Coding was conducted in two major stages: first-cycle and second-cycle coding (Miles et al. 2014). In the first cycle, four predetermined code categories were derived from goal-setting theory: goal difficulty, goal commitment, task complexity, and feedback. The coder used holistic coding to grasp the overall meaning of the responses to each question in a transcript before applying more detailed coding at the sentence level.

Descriptive coding was also used to assign labels to sentences and group them into main categories and sub-categories. This produced six first-level categories, 13 second-level categories, and four third-level categories. The first-level categories were personal and professional goals, persistence and commitment, task complexity, badge design, course structure, and TA feedback.

In the second cycle of coding, inferential codes (or pattern codes) were created to regroup the main categories and sub-categories and to identify emergent themes. Four themes were identified: goal identification, digital badges and goal commitment, digital badges and task complexity, and digital badges and feedback. Pattern matching was then used to compare the identified themes with the propositions of goal-setting theory (Miles et al. 2014). Finally, we compared patterns of similarities and differences across the four cases.

Results

After two cycles of coding, we identified four major themes related to our research question and theoretical propositions: goal identification, DBs and goal commitment, DBs and task complexity, and DBs and feedback. Three of the major themes had two sub-themes.

Theme 1: goal identification

Personal goals

Each of the four students came to the course with a general professional goal in mind, and in the context of university life their personal goals largely coincided with these professional goals. Kevin wants to teach at the primary level, specifically as a physical education teacher. Anna wants to teach third or fourth grade in public schools because she attended private school for most of her life and wants to make a difference in students’ lives. Lisa is in an exploratory program and chose the Hospitality and Tourism Management program this semester because she loves to travel and thought it might be a good fit for her. Kate wants to be a preschool or special education teacher because she loves children and enjoys teaching.

Course-related goals

The students indicated that they did not formulate course-related goals at the beginning of the semester but instead formulated them gradually as the course progressed. Although all four students identified passing or getting a high grade as their primary goal in this course, students with different levels of SESR varied in their ability to connect their course-related goals to other personal goals. The two students with high SESR (Kevin and Anna) made more explicit connections among intrinsic values, past experiences, and course-related goals than the two with low SESR (Kate and Lisa). For example, Kevin connected his goal of passing the course to his professional goal of becoming a teacher. He said, “[I wanted] to pass the class because it is a required class, so I have to take it … and because it is technology-based maybe [it will be] useful in my teaching.”

Similarly, Anna connected her goal of getting a high grade in this course to her prior experiences and her passion for learning more about technology. She said, “So in the past, I have taken many technology courses, but with this course, it was like some applications that I have never used before. So it was a great way to learn more about that.” In comparison, the two students with low SESR had more difficulty formulating course-related goals on their own and relied on other people to help them identify what they wanted to accomplish in the course. For example, Kate found the question “What did you want to get out of the course?” hard to answer, saying “I do not know” twice during the interview, with intermittent silence. The students with low SESR seldom connected their course-related goals to other personal goals or broader interests. Lisa, for example, did not know which courses to take at the beginning of the semester, so she followed her peers’ suggestion to take this course, which was required for all undergraduate students in the college. She claimed, “My goal for all classes is to get an A.”

Theme 2: DBs and goal commitment

DBs as external rewards

Students’ commitment to their course-related goals was affected by being awarded badges or having challenges accepted, which acted as external incentives. Although all four students considered DBs to be assignments to complete for credit, the impact of DBs as an external reward was weaker for the two students with high SESR than for the two with low SESR. The two students with high SESR perceived being awarded a badge as “Checking one assignment off the to-do list,” while the two students with low SESR perceived the award as a signal of accomplishment. For example, Lisa, a student with low SESR, felt a sense of accomplishment when a challenge had been accepted. She said, “I just feel [something has been] accomplished because then I can put the badge on a little accepted page.” Similarly, Kate described “feeling really good” when an email showed that a challenge had been accepted.

In comparison, Kevin liked being awarded a badge because it signaled completing a mandatory task rather than accomplishing something exciting. He said, “It [being awarded a badge] was like completing anything, like a to-do list. You know you’re just, like, I can move on and do more exciting stuff. Passport [DBs] isn’t like the most fun thing to do.” Similarly, Anna considered being awarded a badge more like a work evaluation than an accomplishment. She said, “It’s nice knowing that you did everything correctly.”

DBs as internal rewards

All four students found the interactive, hands-on activities included in the badges motivating. For example, Lisa “thought this [badge] was more motivating than, like, writing a paper (…) because it is more interactive and creative.” Kevin said, “I love the visual, hands-on stuff.” Similarly, Anna said, “I never made a screen cast… that [DB] was kind of cool for me to play with. That one was pretty helpful to me.” Likewise, Kate loved DBs that allowed her to create something, as she described in the interview: “I like the Powtoon [an animated online presentation tool] badge or some other badges where you can create something, and it gives you a result.”

Moreover, for the two students with high SESR, DBs made learning more flexible and gave them more autonomy. DBs allowed them to plan their learning ahead of time and finish work in advance, following self-driven learning paths. For instance, Anna said, “… I am somebody who likes to get stuff done right away. So, I would submit those [DBs] in advance. It was nice that you had the opportunity to do badges before or, at any point.” Kevin liked working with DBs because he had more choice in what to learn. In one example, he observed, “So for the website badge, we could do, like…three or four websites…I tried Weebly [one website building tool] first…but I never really liked the layout of Weebly… but I believe it will be easy but then I was, like, wait a second, let me look at the other ones…and then I finally go with Wix [another website building tool].” The structure of the DB encouraged him to explore multiple learning paths, which he might not otherwise have done. In comparison, the two students with low SESR became more persistent in pursuing learning goals because DBs gave them specific deadlines for each granular learning task. For example, Kate said, “[DBs] were helpful…they clarified what should you do at certain weeks.” And Lisa said, “I waited until [the badge for that week] was given to us. So I didn’t start and get them done in advance like some other people in my class. It just worked for me.”

Theme 3: DBs and task complexity

Students needed to complete two types of learning tasks in this course: basic applications of instructional technologies and the creation of an online learning module. Both were delivered via badges. The application tasks required students to learn the basic functions of software that could be used in teaching and learning, such as website builders and video production tools. Creating an online learning module was more complex than these basic applications because it required integrating different skills and knowledge, such as the analysis, design, development, and implementation of an interactive module (an online lesson).

Instructions and activities in each badge were organized and “simplified” to provide the necessary scaffolding for the learning process. The specific layout of instructions in each badge encouraged students to use cognitive strategies to accomplish the learning task step by step. For example, Kate said, “[DBs] showed both what to do and how to do [instructional technology tools].” Likewise, Kevin said:

[DBs] simplified [the learning task] because [each badge] gets all the details on everything you need to do. So you can review if you don’t know [during the learning process] and then it gives you, like, examples and instructions. For example, the screen cast badge I was, like, “I have no idea how to do this!” And then [the instructions in each challenge] says look at all this and that. [DBs] just go step-by-step instructions and that’s what I just kept referring back to.

DBs not only provided effective scaffolding through the instructions within each badge for a complex task like designing an interactive module (an online lesson), but also acted as stepping stones that let learners plan ahead in order to accomplish a large project. This helped all four students, with both high and low SESR, to better plan, monitor, and complete the more complex learning tasks. As an example, Lisa said:

After I was told that we could use badges that we have done before to develop the interactive module, I was, like, oh perfect! I already did this and that and I could use them to build up my interactive module (…) that makes developing the interactive module much easier.

DBs also helped students connect simple learning tasks with complex ones in a course designed to encourage students to make connections among different course components. For example, when Anna was working on the complex task of designing an interactive module (an online lesson), she revisited one of the simple learning tasks (application of video production tools) and improved it based on what she had learned while working on the complex task. She said:

The video production badge corresponded to my interactive module. I feel like starting the interactive module helped me make that video. So after [doing a couple of challenges in the interactive module badge] I knew exactly what I wanted to talk about in the video; [doing the interactive module badge] was very helpful for me [to complete the video production badge].

Theme 4: DBs and feedback

The digital badge system used in this course had a circulatory feedback function, which gave students multiple chances to receive feedback on their work and to resubmit it before the deadline. When a student submitted a challenge, their TA received an email notification and could evaluate the work in the system. If the work was acceptable, the TA accepted it, and the student was notified by email that the challenge had been accepted. If the work needed improvement, the TA could deny the submission and return it to the student with feedback for correction and resubmission. The two students with high SESR in this study viewed the feedback their TAs gave through the badge system differently from the two students with low SESR.
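The accept-or-deny cycle described above behaves like a small state machine. The Python sketch below is a hypothetical illustration of that cycle, not the badge platform used in the course: the class `ChallengeSubmission`, the `Status` values, the single-deadline rule, and the use of a message list in place of email notifications are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from datetime import datetime
from enum import Enum


class Status(Enum):
    # Hypothetical states for one badge challenge (not the real platform's names).
    DRAFT = "draft"
    SUBMITTED = "submitted"  # awaiting TA review
    DENIED = "denied"        # returned with feedback; may be resubmitted
    ACCEPTED = "accepted"    # challenge completed


@dataclass
class ChallengeSubmission:
    """Assumed model of one challenge moving through the TA review cycle."""
    student: str
    deadline: datetime
    status: Status = Status.DRAFT
    attempts: int = 0
    feedback: list[str] = field(default_factory=list)
    notifications: list[str] = field(default_factory=list)  # stands in for emails

    def submit(self, now: datetime) -> None:
        # A student may submit from DRAFT, or resubmit after a denial,
        # but only while the deadline has not yet passed.
        if self.status not in (Status.DRAFT, Status.DENIED):
            raise ValueError(f"cannot submit from state {self.status.value}")
        if now > self.deadline:
            raise ValueError("deadline has passed")
        self.status = Status.SUBMITTED
        self.attempts += 1
        self.notifications.append("TA: new submission to review")

    def review(self, accept: bool, comment: str = "") -> None:
        # The TA either accepts the work or denies it with feedback for improvement.
        if self.status is not Status.SUBMITTED:
            raise ValueError("nothing to review")
        if accept:
            self.status = Status.ACCEPTED
            self.notifications.append(f"{self.student}: challenge accepted")
        else:
            if not comment:
                raise ValueError("a denial must include feedback")
            self.status = Status.DENIED
            self.feedback.append(comment)
            self.notifications.append(f"{self.student}: revise and resubmit ({comment})")


if __name__ == "__main__":
    # Hypothetical example: one denial with feedback, then acceptance on resubmission.
    s = ChallengeSubmission("student_a", datetime(2019, 10, 1, 23, 59))
    s.submit(datetime(2019, 9, 28))
    s.review(accept=False, comment="add citations")
    s.submit(datetime(2019, 9, 30))
    s.review(accept=True)
    print(s.status.value, s.attempts)  # accepted 2
```

Treating a denial as a state that permits resubmission, rather than as a terminal failure, is what allows the loop to repeat until the work is accepted or the deadline passes.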

Feedback for checking basic requirements

The two students with low SESR perceived the feedback from their TAs mainly as check-off reminders. They valued having their TAs check whether their work met the basic requirements, and having the opportunity to resubmit multiple times until the work was accepted. For example, Kate said:

I know most of the badges were developed in a way that you can always go back and resubmit. [My TA] always gave you feedback, especially when you first submit it, and then you can do what you have to fix. Like the first part of the interactive module [designing an interactive module], [My TA] said things like “you need to have citations!” And he would explain the requirements again just like refreshing something.

Similarly, Lisa said:

Whenever [my TA provided feedback to me], it would be like clarifying the deliverables that I don’t do them exactly right. Or like I don’t understand the deliverables and she repeated them again. (…) and if I don’t do it right again she’ll correct it again.

Feedback for improving understanding

The two students with high SESR also liked having their TAs check their work; however, they wanted more detailed feedback that clarified points of confusion and helped them understand why things should be done in certain ways. For example, Anna said:

Yeah, that’s really helpful because some badges I was really confused on. And [my TA] gave me, like, a long paragraph on what I could fix and then if I had any questions on that I could always ask her and she would really help me with that.

Kevin was aware that he sometimes completed assignments without understanding exactly what he was doing, and he wanted more feedback from his TA to confirm how he was doing on the learning task. For example, he said:

It’s just, like, you do the homework and then the professor doesn’t really make sure you understand it. [For] a lot of the badges I’ve done before, (…) I don’t really know what I’m doing for them. I wanted just, like, the TA to come around [and explain] why you completed this badge or why you didn’t get accepted for this badge.

Discussion and implications

The purpose of this multiple case study was to explore students’ experiences using digital badges to facilitate their goal-setting process throughout an undergraduate technology integration course. The results aligned with the three functions of DBs proposed by Cucchiara et al. (2014): capturing (validating and tracing learning progress), signaling (reviewing and reflecting on the learning process), and motivating (awarding achievements). We also found that DBs were especially effective in facilitating students’ goal-setting processes. These findings have implications for educators and practitioners who are interested in applying this innovative technology in their educational practices.

Capturing and signaling learning

Consistent with previous studies on using DBs to validate and recognize learning (Bowen and Thomas 2014; Devedžić and Jovanović 2015), we found that DBs could make learning visible to students who were looking for gaps in their learning or for extended content areas to explore. Goal-setting research has shown that making a public statement of commitment can increase goal commitment and thus effort and persistence (Salancik 1977), which suggests that publicly displaying earned badges might have a similar effect. Some commercial digital badge platforms also encourage students to publish their badges on social websites such as Facebook (Morrison and DiSalvo 2014). However, the students in this study did not want to publish their DBs on social websites because they thought the work they had done to earn the badges was not of high enough quality to show the public, especially potential employers. In other words, from the students’ perspective, the completed badges and their metadata did not add value to the presentation of their perceived level of competency.

Demonstrating accomplished skills has been considered an important benefit of using digital badges in education, because the process of learning granular skills or knowledge can be recorded and presented to potential employers (e.g. Cheng et al. 2018; Jovanovic and Devedzic 2014; Ostashewski and Reid 2015). However, educators and practitioners should note that students have concerns about letting employers see their whole learning process. One design option is to distinguish two categories of digital badges, one for learning and one for presenting competency, so that learners can choose what to show to different audiences.

Motivating learning

Prior research has found mixed or even conflicting results on the motivational effects of DBs (Denny 2013; Kwon et al. 2015; Stetson-Tiligadas 2016). For example, researchers who investigated digital badges implemented in a computer science course found that DBs overall had no significant effect on course results or student behavior, mainly because students were satisfied once they had achieved their desired grade (Haaranen et al. 2014). In contrast, another group of researchers found that the number of badges a student earned was positively correlated with performance-avoidance motivation (Abramovich et al. 2013). In our study, we found that DBs had different motivational effects on students with different levels of self-efficacy for self-regulated learning (SESR). As external rewards, DBs had a weaker motivational effect on students with higher SESR than on students with lower SESR. In other words, students with low SESR felt more motivated to learn after being awarded a badge than those with high SESR did.

For educators and practitioners interested in applying DBs in their educational practices, it is important to design and use DBs in ways that fit the needs of students with different levels of self-efficacy for self-regulated learning. They should also be aware that instructional DBs embedded with interactive learning activities may have a greater impact on student motivation than DBs embedded only with basic competency metadata. More research is needed on the relationship between self-efficacy for self-regulated learning and the use of digital badges.

Facilitating the goal-setting process

In addition to these three basic functions of DBs in learning, we found four specific functions of DBs in facilitating the goal-setting process: connecting multiple goals, affecting goal commitment, scaffolding complex tasks, and providing personalized feedback.

Connecting multiple goals

DBs could help students combine performance goals and learning goals in a learning environment where both self-set and assigned goals were present. Each badge not only provided students with a specific performance outcome to achieve but also assigned a learning goal that each student could pursue at his/her own pace. Course-related goals were a mix of assigned goals and personal goals. On the one hand, the course was required; on the other hand, the formation of course-related goals was also influenced by factors such as satisfying degree requirements, building confidence, and pursuing personal interests or long-term professional goals. In this course, the instructor and the TA in each lab were the authority figures who provided the assigned goals. All four participants mentioned the help of their TAs, who provided feedback at different stages of the course and helped them become more committed to the course objectives.

According to Keller (2009), learners are more motivated when they perceive the learning goals as relevant to their present or future personal goals. In this study, we found that students with high SESR made better connections to their long-term personal goals than those with low SESR. Comparing the course-related goals of the two pairs of students, we also found that students with high SESR tended to formulate learning goals, which focus on developing one’s ability or mastering new skills, whereas students with low SESR tended to formulate performance goals, which focus on seeking positive evaluations of one’s ability and avoiding negative ones (Elliott and Dweck 1988). Research has found that learning goals have a positive impact on learning in terms of strategy formulation, mastery-oriented responses to obstacles, and sustained performance (Bryan and Locke 1967; Reader and Dollinger 1982).

Affecting goal commitment

Using DBs by itself did not increase goal commitment. Rather, learners felt more committed to completing the learning tasks because they recognized DBs as assignments closely tied to their grades. Prior research reached similar conclusions (Haaranen et al. 2014; Reid et al. 2015). We also found that DBs with interactive activities could trigger intrinsic motivation to devote more time and effort to learning tasks, whereas DBs used purely as external rewards were more motivating to students with lower SESR than to those with higher SESR. Future research needs to measure students’ goal commitment under the influence of DBs more accurately and to investigate the reasons behind the differences identified here.

Scaffolding complex tasks

Each DB could serve as a sub-goal or stepping stone in the learning process (Cheng et al. 2018). This function was especially salient when a package of DBs consisted of a number of related simple and complex learning tasks. With such a package, students could design their own learning paths, choose alternative approaches to completing the tasks, and follow personalized learning trajectories. Specifically, they could use the simple tasks as scaffolds to build skills and knowledge until they accomplished the complex task, or they could deepen their understanding of the simple tasks by connecting them to the advanced skills or knowledge acquired while completing the complex task. Either way, DBs helped students make better connections among different learning components.

Providing personalized feedback

Prior research found that DBs could provide both summative and formative feedback (Besser 2016; Fanfarelli and McDaniel 2017). For example, Besser (2016) found that digital badge systems could provide students with prompt, personalized feedback. Similarly, in this study, we found that the circulatory feedback system of DBs used for pedagogical purposes could deliver more personalized and prompt feedback, tailored to students with different levels of SESR. This is particularly important because students with low and high SESR had different feedback needs. Those with low SESR wanted more outcome-based feedback, that is, feedback that helped them satisfy the task requirements. In contrast, those with high SESR wanted more comprehension-based feedback, that is, feedback that explained why things should be done in certain ways. Future research may explore how to personalize feedback in DBs to meet the needs of different groups of students.

For educators and practitioners who are interested in applying DBs to facilitate student goal-setting and to help students develop self-regulated learning skills, we offer the following recommendations based on this study:

1. Design pedagogical or system strategies that help learners, especially those with low SESR, connect their learning objectives to multiple goals in life, in order to optimize the effects of DBs on learner motivation.
2. Incorporate interactive activities into the instructions within each badge to trigger learners’ intrinsic motivation to devote more time and effort to learning tasks.
3. Encourage learners to review all available DBs at the beginning of the course and to personalize their learning paths according to their individual needs.
4. Customize feedback for students with different levels of SESR.

Conclusion

Digital badges have been used for a variety of purposes, such as representing accomplishments (Bowen and Thomas 2014; Erickson 2015), motivating learner participation and interaction (Chou and He 2017; Stetson-Tiligadas 2016), professional development (Diamond and Gonzalez 2014; Wallis and Martinez 2013), gamification (Haaranen et al. 2014; Hakulinen and Auvinen 2014), and teaching (Fanfarelli and McDaniel 2017; Newby et al. 2016). This study focused on exploring the use of DBs as goal-setting facilitators. Findings from this study support the predictions of previous research that DBs could be a useful tool for facilitating goal-setting processes (Cheng et al. 2018; Gamrat et al. 2014; McDaniel and Fanfarelli 2016; Randall et al. 2013). This study contributes further by examining how students used this technology to facilitate different parts of their goal-setting processes, including identifying goals, improving goal commitment, controlling task complexity, and receiving feedback. In addition, the study found that students with different levels of self-efficacy for self-regulated learning used this tool differently in their goal-setting processes.

This study has limitations. Although we included multiple cases and different perspectives, semi-structured interviews were the only source of data, which might decrease the credibility of the study. Also, the participants represent a small sample of learners in one tertiary-level context, so the findings cannot readily be generalized to a larger population. However, this exploratory case study provides insights for future research to investigate (1) the relationship between the use of DBs and self-regulated learning, including possible mediators such as, but not limited to, gender, motivation level, and learning styles; and (2) the relationship between the use of DBs and actual learning performance. This study also offers practical implications for educators who are interested in applying digital badges in higher education and for practitioners who want to implement this technology in similar learning environments. Future research is needed to explore the relationship between digital badges and goal-setting from different methodological perspectives and with varied data sources to provide a holistic picture. Future research could also explore how to build goal-setting strategies into the design of digital badge-supported learning environments. Finally, it is also worthwhile to explore technologies other than digital badges that could support learners’ goal-setting.

References

Abramovich, S., Schunn, C., & Higashi, R. M. (2013). Are badges useful in education? It depends upon the type of badge and expertise of learner. Educational Technology Research and Development,61(2), 217–232. https://doi.org/10.1007/s11423-013-9289-2.

Ahn, J., Pellicone, A., & Butler, B. S. (2014). Open badges for education: What are the implications at the intersection of open systems and badging? Research in Learning Technology,63(1), 87–110. https://doi.org/10.1111/edth.12011.

Antin, J., & Churchill, E. F. (2011). Badges in social media: A social psychological perspective. In Proceedings of CHI 2011 gamification workshop (pp. 1–4). Canada.

Bandura, A. (1990). Perceived self-efficacy in the exercise of personal agency. Journal of Applied Sport Psychology,2(2), 128–163. https://doi.org/10.1080/10413209008406426.

Besser, E. (2016). Exploring the role of feedback and impact with a digital badge system from multiple perspectives: A case study of preservice teachers (Doctoral dissertation). Retrieved from ProQuest Dissertations and Theses (ProQuest No. 10151547).

Bowen, K., & Thomas, A. (2014). Badges: A common currency for learning. Change: The Magazine of Higher Learning,46(1), 21–25. https://doi.org/10.1080/00091383.2014.867206.

Bryan, J. F., & Locke, E. A. (1967). Goal setting as a means of increasing motivation. Journal of Applied Psychology,51(3), 274–277. https://doi.org/10.1037/h0024566.

Buckley, P., & Doyle, E. (2016). Gamification and student motivation. Interactive Learning Environments,24(6), 1162–1175. https://doi.org/10.1080/10494820.2014.964263.

Cervone, D., Jiwani, N., & Wood, R. (1991). Goal setting and the differential influence of self-regulatory processes on complex decision-making performance. Journal of Personality and Social Psychology,61(2), 257–266. https://doi.org/10.1037/0022-3514.61.2.257.

Cheng, Z., Watson, S. L., & Newby, T. J. (2018). Goal setting and open digital badges in higher education. TechTrends,62(2), 190–196. https://doi.org/10.1007/s11528-018-0249-x.

Choi, N., Fuqua, D. R., & Griffin, B. W. (2001). Exploratory analysis of the structure of scores from the multidimensional scales of perceived self-efficacy. Educational and Psychological Measurement,61(3), 475–489. https://doi.org/10.1177/00131640121971338.

Chou, C. C., & He, S.-J. (2017). The effectiveness of digital badges on student online contributions. Journal of Educational Computing Research,54(8), 1092–1116. https://doi.org/10.1177/0735633116649374.

Cucchiara, S., Giglio, A., Persico, D., & Raffaghelli, J. E. (2014). Supporting self-regulated learning through digital badges: A case study. New Horizons in Web Based Learning,8699, 133–142. https://doi.org/10.1007/978-3-319-13296-9_15.

Deci, E. L., & Ryan, R. M. (1982). Intrinsic motivation to teach: Possibilities and obstacles in our colleges and universities. In J. Bess (Ed.), New directions for teaching and learning (pp. 27–35). Jossey-Bass.

Denny, P. (2013). The effect of virtual achievements on student engagement. In Proceedings of the SIGCHI conference on human factors in computing systems—CHI’13 (p. 763). https://doi.org/10.1145/2470654.2470763.

Devedžić, V., & Jovanović, J. (2015). Developing open badges: A comprehensive approach. Educational Technology Research and Development,63(4), 603–620. https://doi.org/10.1007/s11423-015-9388-3.

Diamond, J., & Gonzalez, P. C. (2014). Digital badges for teacher mastery: An exploratory study of a competency-based professional development badge system. Retrieved from https://files.eric.ed.gov/fulltext/ED561894.pdf.

Dyjur, P., & Lindstrom, G. (2017). Perceptions and uses of digital badges for professional learning development in higher education. TechTrends,61(4), 386–392. https://doi.org/10.1007/s11528-017-0168-2.

Elliott, E. S., & Dweck, C. S. (1988). Goals: An approach to motivation and achievement. Journal of Personality and Social Psychology,54(1), 5–12. https://doi.org/10.1037/0022-3514.54.1.5.

Erickson, C. C. (2015). Digital credentialing: A qualitative exploratory investigation of hiring directors’ perceptions. https://doi.org/10.1017/CBO9781107415324.004.

Fanfarelli, J. R., & McDaniel, R. (2017). Exploring digital badges in university courses: Relationships between quantity, engagement, and performance. Online Learning,21(2), n2. https://doi.org/10.24059/olj.v21i2.1007.

Fontana, R. P., Milligan, C., Littlejohn, A., & Margaryan, A. (2015). Measuring self-regulated learning in the workplace. International Journal of Training and Development,19(1), 32–52. https://doi.org/10.1111/ijtd.12046.

Frederiksen, L. (2013). Digital badges. Public Services Quarterly,9(4), 321–325. https://doi.org/10.1080/15228959.2013.842414.

Gamrat, C., Zimmerman, H. T., Dudek, J., & Peck, K. (2014). Personalized workplace learning: An exploratory study on digital badging within a teacher professional development program. British Journal of Educational Technology,45(6), 1136–1149. https://doi.org/10.1111/bjet.12200.

Gibson, D., Ostashewski, N., Flintoff, K., Grant, S., & Knight, E. (2015). Digital badges in education. Education and Information Technologies,20(2), 403–410. https://doi.org/10.1007/s10639-013-9291-7.

Grant, S. (2016). Foundation of Digital Badges and Micro-Credentials. In Foundation of digital badges and micro-credentials (pp. 97–114). https://doi.org/10.1007/978-3-319-15425-1.

Haaranen, L., Ihantola, P., Hakulinen, L., & Korhonen, A. (2014). How (not) to introduce badges to online exercises. In Proceedings of the 45th ACM technical symposium on computer science education—SIGCSE’14 (pp. 33–38). https://doi.org/10.1145/2538862.2538921.

Hakulinen, L., & Auvinen, T. (2014). The effect of gamification on students with different achievement goal orientations. In Proceedings—2014 International conference on teaching and learning in computing and engineering, LATICE 2014 (pp. 9–16). https://doi.org/10.1109/LaTiCE.2014.10.

Hakulinen, L., Auvinen, T., & Korhonen, A. (2013). Empirical study on the effect of achievement badges in TRAKLA2 online learning environment. In Proceedings—2013 learning and teaching in computing and engineering, LaTiCE 2013 (pp. 47–54). https://doi.org/10.1109/LaTiCE.2013.34.

Halavais, A. M. C. (2012). A genealogy of badges: Inherited meaning and monstrous moral hybrids. Information Communication and Society,15(3), 354–373. https://doi.org/10.1080/1369118X.2011.641992.

Halavais, A., Kwon, K. H., Havener, S., & Striker, J. (2014, January). Badges of friendship: Social influence and badge acquisition on Stack Overflow. In 2014 47th Hawaii international conference on system sciences (pp. 1607–1615). Waikoloa, HI: IEEE. Retrieved from https://ieeexplore.ieee.org/stamp/stamp.jsp?arnumber=6758803.

Hamari, J. (2017). Do badges increase user activity? A field experiment on the effects of gamification. Computers in Human Behavior,71, 469–478. https://doi.org/10.1016/j.chb.2015.03.036.

Higashi, R., Abramovich, S., Shoop, R., & Schunn, C. (2012). The roles of badges in the computer science student network. Proceedings GLS,8, 423–430.

Hollenbeck, J. R., & Klein, H. J. (1987). Goal commitment and the goal-setting process: Problems, prospects, and proposals for future research. Journal of Applied Psychology,72(2), 212–220. https://doi.org/10.1037/0021-9010.72.2.212.

Jovanovic, J., & Devedzic, V. (2014). Open badges: Challenges and opportunities. In Lecture notes in computer science (including subseries lecture notes in artificial intelligence and lecture notes in bioinformatics), 8613 LNCS (pp. 56–65). https://doi.org/10.1007/978-3-319-09635-3_6.

Keller, J. M. (2009). Motivational design for learning and performance: The ARCS model approach. Springer Science & Business Media.

Khan Academy. (2012). Share your Khan Academy badges on Facebook in style. Retrieved February 14, 2018, from https://www.khanacademy.org/about/blog/post/23071271279/facebook-opengraph.

Kriplean, T., Beschastnikh, I., & McDonald, D. W. (2008). Articulations of wikiwork: Uncovering valued work in Wikipedia through barnstars. In CSCW (pp. 47–56). https://doi.org/10.1145/1460563.1460573.

Kwon, K. H., Halavais, A., & Havener, S. (2015). Tweeting badges: User motivations for displaying achievement in publicly networked environments. Cyberpsychology, Behavior, and Social Networking,18(2), 93–100. https://doi.org/10.1089/cyber.2014.0438.

Latham, G. P., & Locke, E. A. (1991). Self-regulation through goal setting. Organizational Behavior and Human Decision Processes,50(2), 212–247.

Locke, E. A. (1991). The motivation sequence, the motivation hub, and the motivation core. Organizational Behavior and Human Decision Processes,50(2), 288–299. https://doi.org/10.1016/0749-5978(91)90023-M.

Locke, E. A., & Latham, G. P. (1990). A theory of goal setting and task performance. Englewood Cliffs, NJ: Prentice Hall.

Locke, E. A., & Latham, G. P. (2002). Building a practically useful theory of goal setting and task motivation: A 35-year odyssey. American Psychologist,57(9), 705–717. https://doi.org/10.1037/0003-066X.57.9.705.

Locke, E. A., & Latham, G. P. (2006). New directions in goal-setting theory. Current Directions in Psychological Science,15(5), 265–268. https://doi.org/10.1111/j.1467-8721.2006.00449.x.

Matkin, G. W. (2012). The opening of higher education. Change: The Magazine of Higher Learning,44(3), 6–13. https://doi.org/10.1080/00091383.2012.672885.

McDaniel, R., & Fanfarelli, J. (2016). Building better digital badges: Pairing completion logic with psychological factors. Simulation and Gaming,47(1), 73–102. https://doi.org/10.1177/1046878115627138.

Miles, M. B., Huberman, A. M., & Saldaña, J. (2014). Qualitative data analysis: A methods sourcebook. Los Angeles: SAGE.

Morrison, B. B., & DiSalvo, B. (2014). Khan academy gamifies computer science. In Proceedings of the 45th ACM technical symposium on computer science education—SIGCSE’14 (September 2015) (pp. 39–44). https://doi.org/10.1145/2538862.2538946.

Newby, T., Wright, C., Besser, E., & Beese, E. (2016). Passport to designing, developing and issuing digital instructional badges. In D. Ifenthaler, N. Bellin-Mularski, & D.-K. Mah (Eds.), Foundation of digital badges and micro-credentials: demonstrating and recognizing knowledge and competencies (pp. 179–201). Cham: Springer. https://doi.org/10.1007/978-3-319-15425-1_10.

Ostashewski, N., & Reid, D. (2015). A history and frameworks of digital badges in education. In T. Reiners & L. Woods (Eds.), Gamification in education and business (pp. 187–200). Cham: Springer. https://doi.org/10.1007/978-3-319-10208-5_10.

Polit, D. F., & Beck, C. T. (2006). The content validity index: Are you sure you know what’s being reported? Critique and recommendations. Research in Nursing & Health,29(5), 489–497. https://doi.org/10.1002/nur.20147.

Randall, D. L., Harrison, J. B., & West, R. E. (2013). Giving credit where credit is due: Designing open badges for a technology integration course. TechTrends,57(6), 88–95. https://doi.org/10.1007/s11528-013-0706-5.

Reader, M. J., & Dollinger, S. J. (1982). Deadlines, self-perceptions, and intrinsic motivation. Personality and Social Psychology Bulletin,8(4), 742–747. https://doi.org/10.1177/0146167282084022.

Reid, A. J., Paster, D., & Abramovich, S. (2015). Digital badges in undergraduate composition courses: effects on intrinsic motivation. Journal of Computers in Education,2(4), 377–398. https://doi.org/10.1007/s40692-015-0042-1.

Rughiniș, R. (2013). Talkative objects in need of interpretation: Re-thinking digital badges in education. In CHI’13 extended abstracts on human factors in computing systems, 2099–2108. https://doi.org/10.1145/2468356.2468729.

Salancik, G. R. (1977). Commitment is too easy! Organizational Dynamics,6(1), 62–80. https://doi.org/10.1016/0090-2616(77)90035-3.

Schunk, D. H. (1990). Goal setting and self-efficacy during self-regulated learning. Educational Psychologist,25(1), 71–86. https://doi.org/10.1207/s15326985ep2501_6.

Schunk, D. H., Meece, J. L., & Pintrich, P. R. (2014). Motivation in education: Theory, research and applications. London: Pearson.

Stetson-Tiligadas, S. (2016). The impact of digital achievement badges on undergraduate learner motivation (Doctoral dissertation). Retrieved from ProQuest Dissertations and Theses (ProQuest No. 10037460).

Strecher, V. J., Seijts, G. H., Kok, G. J., Latham, G. P., Glasgow, R., Devellis, B., et al. (1995). Goal setting as a strategy for health behavior change. Health Education and Behavior,22(2), 190–200. https://doi.org/10.1177/109019819502200207.

Vansteenkiste, M., Simons, J., Lens, W., Sheldon, K. M., & Deci, E. L. (2004). Motivating learning, performance, and persistence: The synergistic effects of intrinsic goal contents and autonomy-supportive contexts. Journal of Personality and Social Psychology,87(2), 246–260. https://doi.org/10.1037/0022-3514.87.2.246.

Wallis, P., & Martinez, M. S. (2013). Motivating skill-based promotion with badges. In Proceedings of the 2013 ACM annual conference on Special interest group on university and college computing services—SIGUCCS’13 (pp. 175–180). New York: ACM Press. https://doi.org/10.1145/2504776.2504805.

Williams, J. E., & Coombs, W. T. (1996). An analysis of the reliability and validity of Bandura’s multidimensional scales of perceived self-efficacy. In Annual meeting of the American Educational Research Association (Vol. 671). New York, NY. https://doi.org/10.1037/t06802-000.

Yin, R. K. (2018). Case study research and applications: Design and methods (6th ed.). Thousand Oaks, CA: SAGE Publications.

Zimmerman, B. J. (1989). A social cognitive view of self-regulated academic learning. Journal of Educational Psychology,81(3), 329–339. https://doi.org/10.1037/0022-0663.81.3.329.

Zimmerman, B. J. (2000). Attaining self-regulation: A social cognitive perspective. In M. Boekaerts, P. R. Pintrich, & M. Zeidner (Eds.), Handbook of self-regulation (pp. 13–40). Amsterdam: Elsevier.

Zimmerman, B. J., Bandura, A., & Martinez-Pons, M. (1992). Self-motivation for academic attainment: The role of self-efficacy beliefs and personal goal setting. American Educational Research Journal,29(3), 663–676.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Cheng, Z., Richardson, J.C. & Newby, T.J. Using digital badges as goal-setting facilitators: a multiple case study. J Comput High Educ 32, 406–428 (2020). https://doi.org/10.1007/s12528-019-09240-z

DOI: https://doi.org/10.1007/s12528-019-09240-z