Abstract

Anthropomorphism, the attribution of humanlike characteristics to nonhuman entities, may result from a dual process: first, a fast and intuitive (Type 1) process permits an object to be quickly classified as humanlike and results in implicit anthropomorphism. Second, a reflective (Type 2) process may moderate the initial judgment based on conscious effort and result in explicit anthropomorphism. In this study, we manipulated both participants’ motivation for Type 2 processing and a robot’s emotionality to investigate the role of Type 1 versus Type 2 processing in forming judgments about the robot Robovie R2. We did so by having participants play the “Jeopardy!” game with the robot. Subsequently, we directly and indirectly measured anthropomorphism by administering self-report measures and a priming task, respectively. Furthermore, we measured treatment of the robot as a social actor to establish its relation to implicit and explicit anthropomorphism. The results suggested that the model of dual anthropomorphism can explain when responses are likely to reflect judgments based on Type 1 and Type 2 processes. Moreover, we showed that the social treatment of a robot, as described by the Media Equation theory, is related to implicit, but not explicit, anthropomorphism.

1 Introduction

Anthropomorphism, the attribution of “humanlike properties, characteristics, or mental states to real or imagined nonhuman agents and objects” [14, p. 865], is a common phenomenon. Anthropomorphic inferences can already be observed in infants. To illustrate, infants perceive actions as goal-directed, and this perception is not limited to human actions, but also includes object actions [25, 26]. This suggests that as soon as they are able to interpret behavior as intentional and social, infants are also able to attribute mental states to objects [3]. However, children’s attribution of human features to objects is not a result of insufficient cognitive development: depending on the context and their subjective interpretation of an interaction, children may treat the same object as a living being or as merely an inanimate thing. For example, a child may play with LEGO figures as if they were real knights, but in the next second drop them in a box.

Furthermore, it has been shown that adults make anthropomorphic inferences when describing a wide range of targets, such as weather patterns [28], animals [12] or moving geometrical figures [19, 30]. Humanlike form is also widely used to sell products [1, 2]. Although people commonly treat technology in a social manner, they are reluctant to admit any anthropomorphic attributions when they are explicitly debriefed about it [36]. Nass and Moon [36] have argued that treating technological gadgets as social actors occurs independently of the degree to which we anthropomorphize them. However, there are alternative interpretations of social responses to technology that have not been previously considered. Firstly, if people perceive anthropomorphism as socially undesirable, they may be unwilling to disclose that they attribute humanlike qualities to objects. Secondly, people may anthropomorphize objects without being consciously aware of it. Thirdly, both these processes may occur simultaneously. This issue could be a concern especially in the field of Human–Robot Interaction (HRI), since people tend to anthropomorphize robots more than other technology [22]. Therefore, the question of how to adequately measure anthropomorphism remains to be examined, since different measures may produce inconsistent outcomes.

1.1 Anthropomorphism in HRI

The notion of anthropomorphism has received a significant amount of attention in HRI research. From a methodological perspective, various measurements of anthropomorphism have been proposed, such as questionnaires [7], cognitive measures [54], physiological measures [34] or behavioral measures [6]. Empirical work has focused on identifying factors that affect the extent to which a robot is perceived as humanlike. These studies have proposed that anthropomorphic design of a robot can be achieved by factors such as movement [46], verbal communication [42, 45], gestures [41], emotions [18, 55] and embodiment [21]. These factors affect not only the perception of robots, but also human behavior during HRI (for an overview of positive and negative consequences of anthropomorphic robot appearance and behavior design please see [56]).

To go beyond the investigation of single determinants of anthropomorphic inferences, several comprehensive theoretical accounts of anthropomorphism have been proposed. For example, in their Three-Factor Theory of Anthropomorphism, [14] suggest three core psychological determinants of anthropomorphic judgments: elicited agent knowledge (i.e., the accessibility and use of anthropocentric knowledge), effectance motivation (i.e., the motivation to explain and understand the behavior of other agents), and sociality motivation (i.e., the desire for engaging in social contact).

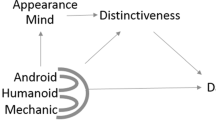

Focusing on HRI in particular, von Zitzewitz et al. [53] have introduced a network of appearance and behavior related parameters, such as smell, visual appearance or movement, that affect the extent to which a robot is perceived as humanlike. Furthermore, they have suggested that the importance of appearance related parameters increases with decreased proximity between a human and a robot, while the importance of behavior related parameters increases for social HRI. Another model proposed by Lemaignan and colleagues [35] has focused on cognitive correlates that may explain the non-monotonic nature of anthropomorphism in long-term HRI. In particular, they argue that three phases of anthropomorphism can be distinguished: initialization (i.e., an initial peak of anthropomorphism due to the novelty effect that lasts from a couple of seconds to a couple of hours), familiarization (i.e., a human building a model of a robot’s behavior, which lasts up to several days) and stabilization (i.e., the long-lasting, sustained level of anthropomorphism).

While these theories provide insights into the range of factors that may affect anthropomorphic judgments and changes in judgments as a function of an ongoing interaction, previous work has not yet considered whether anthropomorphism is the result of a single cognitive process or of multiple processes that can lead to distinct attributions of humanlike characteristics. However, understanding the nature of this phenomenon is necessary for choosing appropriate measurement tools and can also influence the design of robotic platforms. Previous studies have indicated that anthropomorphic conceptualizations of robots differ interindividually on varying levels of abstraction. According to [23], people were more willing to exhibit anthropomorphic responses when freely describing a robot’s behavior in a specific context or when attributing properties to the robot performing these actions. They were reluctant, though, to ascribe properties that would characterize robots in general. However, we suggest that interindividual differences in anthropomorphism could depend not only on levels of abstraction, but also on the distinct cognitive processes involved.

1.2 Dual Process Theories and Anthropomorphism

Some researchers have proposed that anthropomorphism is a conscious process [36, 48]. However, currently, there is no empirical evidence supporting this claim. Since anthropomorphism has cognitive, social, and affective correlates, it is possible that anthropomorphic attributions, like attributions in general, are formed as a result of distinct processes. According to [16], attributions can be interpreted as the result of two core cognitive processes: Type 1 and Type 2 (see also [11, 24, 44]). The key distinction between the two processes is their use of working memory and cognitive decoupling [16]. A so-called Type 1 process is autonomous and does not require working memory. Its typical correlates are being fast and unconscious, having high capacity, and involving parallel processing, which results in automatic and biased responses. On the other hand, a Type 2 process involves cognitive decoupling and requires working memory. Its typical correlates are being slow and conscious, having limited capacity, and involving serial processing, which results in controlled and normative responses.

Accounts differ in how Type 1 and Type 2 processes are thought to work together. The parallel-competitive form [43] proposes that Type 1 and Type 2 processing occur in parallel, with both processes affecting an outcome and conflict being resolved if necessary. On the other hand, default-interventionist theories propose that a Type 1 process generates an initial response and a Type 2 process may or may not modify it [16]. To date, there is no agreement as to which form predominates. Moreover, there are individual differences regarding dispositions to engage in rational thinking, which may affect Type 2 processes. However, there are fewer dispositional correlates of Type 1 processes [16]. It has also been proposed that there could be multiple different Type 1 processes [17].

There are three major sources of evidence supporting the existence of Type 1 and Type 2 processes: experimental manipulations (e.g., providing instructions to increase motivation for Type 2 processing or suppressing Type 2 processing by using a concurrent task that limits capacity of working memory), neural imaging and psychometric approaches that show a relationship between Type 2, but not Type 1 processing and cognitive ability [16].

Humans are hardwired to interpret an ambiguous object as human [5], and this may be a result of Type 1 processing. However, when people are motivated to provide accurate judgments and Type 2 processing is activated, they may not attribute human characteristics to these objects. Based on this assumption, we predicted that both Type 1 and Type 2 processes are involved in anthropomorphic judgments about robots.

In order to distinguish between anthropomorphism that is a result of Type 1 and Type 2 processing, we will refer to implicit anthropomorphism as an outcome of a Type 1 process and explicit anthropomorphism as an outcome of a Type 2 process. Furthermore, we will use the distinction proposed by [13] for direct and indirect measures. Measures that are self-assessments of participants’ thoughts represent direct measures. On the other hand, indirect measures involve cognition that is inferred from behavior other than self-assessments of participants. Due to the higher controllability of direct measure responses, our hypotheses are based on the assumption that direct measures predominantly reflect explicit anthropomorphism while indirect measures reflect implicit anthropomorphism.

From the work by [55] we know that a robot’s capability to express emotions during HRI affects direct measures of anthropomorphism. However, in that study, no indirect measures of anthropomorphism were administered. In order to conclude that anthropomorphism would be a result of dual processing, it is necessary to show that implicit and explicit anthropomorphism are independently affected, which should be exhibited by an independent effect of the experimental manipulation on direct and indirect measures of anthropomorphism. We therefore hypothesized that the manipulation of a robot’s anthropomorphism through its ability to express emotions would affect both direct and indirect measures of anthropomorphism. On the other hand, the motivation for Type 2 processing would solely affect direct measures of anthropomorphism (Hypothesis 1).

1.3 Social Responses to a Robot

From the work of Nass et al. [37, 40] on the Media Equation effect and the “Computers are Social Actors” (CASA) paradigm, we know that people interact with technology in a social way. Thus far, social responses to a robot have been interpreted as evidence that the robot is being anthropomorphized [6]. However, Nass and Moon [36] have argued that treating technology as a social actor does not equate to anthropomorphizing it. This is because participants in their studies explicitly stated that they did not attribute humanlike characteristics to technology, while at the same time behaving socially towards it. A distinction between implicit and explicit anthropomorphism could explain this paradox: it is possible that people’s social reactions toward technology (the Media Equation effect [40]) are driven by implicit anthropomorphism. On the other hand, when people are asked to explicitly declare that they perceive a machine as a human, a Type 2 process becomes involved and people deny that they behave toward a machine as if it were a human.

This potential interpretation is supported by the work of Fischer [20], who found that there are individual differences in the extent to which verbal communication in HRI is similar to human–human communication and that only some participants respond socially to robots. If their responses were fully mindless and automatic, as proposed by Nass, people’s subjective interpretation of an interaction context should not affect their social responses. Since these subjective interpretations presumably reflect Type 2 processing, which is exhibited on direct measures, we formulated Hypothesis 2 as follows: social responses toward robots are related to indirect, but not direct, measures of anthropomorphism.

1.4 Summary and Current Study

Our review of the existing literature on anthropomorphism has shown that multiple factors potentially have an impact on the extent to which robots are humanized. However, to date, the process of anthropomorphism has been under-researched. Therefore, the current study aimed to explore further whether anthropomorphism, just like many other cognitive and social processes, may be a result of dual processing (i.e., Type 1 and Type 2 processing). To do so, we investigated whether the manipulation of participants’ motivation for Type 2 processing and a robot’s ability to express emotions affect direct and indirect measures of anthropomorphism. Furthermore, we analyzed the relationship between social responses toward robots, and implicit and explicit anthropomorphism.

2 Methods

2.1 Participants

We recruited 40 participants (14 females and 26 males) who were undergraduate students of various universities and departments from the Kansai area in Japan. They were all native Japanese speakers with a mean age of 21.52 years, ranging in age from 18 to 30 years. They were paid ¥2000 (approximately €17). The study took place on the premises of Advanced Telecommunications Research Institute International (ATR). Ethical approval was obtained from the ATR Ethics Committee and informed consent forms were signed by the participants.

2.2 Materials and Apparatus

All questionnaires and the priming task were presented on a computer that was placed 50 cm in front of the participants. The robot used in this experiment was Robovie R2 [52], a machinelike robot that has humanlike features, such as arms and a head (see Fig. 1).

2.3 Direct Measures

In the present research, we used several questionnaires as direct measures. Japanese versions of the questionnaires were used when available; otherwise, the questionnaires were translated from English into Japanese using back-translation.

2.3.1 IDAQ

We measured individual differences in anthropomorphism using the Individual Differences in Anthropomorphism Questionnaire (IDAQ) [47], as they could affect Type 2 processing. Participants reported the extent to which they would attribute humanlike characteristics to non-human agents including nature, technology and the animal world (e.g., “To what extent does a car have free will?”). An 11-point Likert scale ranging from 0 (not at all) to 10 (very much) was used to record responses.

2.3.2 Rational-Experiential Inventory

In order to establish if people would have a preference for either Type 1 or Type 2 processing, we administered the Rational-Experiential Inventory [15] that consists of two subscales: need for cognition (NFC) and faith in intuition (FII). Items such as “I prefer complex to simple problems” (NFC) or “I believe in trusting my hunches” (FII) were rated on 5-point Likert scales ranging from 1 (completely false) to 5 (completely true).

2.3.3 Humanlikeness

We included the 6-item humanlikeness scale by [31] to measure perceived humanlikeness of Robovie R2. Items such as inanimate—living or human-made—humanlike, were rated on a 5-point semantic differential scale.

2.3.4 Human Nature: Anthropomorphism

To directly measure anthropomorphism we used the Japanese version of human nature attribution [33] that is based on [29]. We used Human Nature (HN) traits that distinguish people from automata. Human Nature implies what is natural, innate, and affective. [55] have found that only this dimension of humanness was affected by a robot’s emotionality. Thus, 10 HN traits were rated on a 7-point Likert scale from 1 (not at all) to 7 (very much) (e.g., “The robot is...sociable”).

2.4 Indirect Measures

2.4.1 Rating of the Robot’s Performance

We measured the extent to which Robovie R2 was treated as a social actor by having the robot ask the participants to rate its performance on a scale from 1 (the worst) to 100 (the best). Nass et al. [37] showed that people rate a computer’s performance higher if they are requested to rate it using the very same computer rather than another computer, which Nass and colleagues interpret as an indication that people adhere to norms of politeness and treat computers as social actors. Therefore, the rating of a technology’s performance is a measure of the extent to which it is being treated as a social actor when the evaluation is provided directly to that technology. In our study, the robot performed exactly the same in all experimental conditions, and any differences in the rating are interpreted as a result of changed treatment as a social actor.

2.4.2 Priming Task

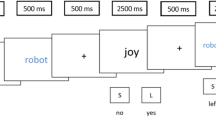

We measured anthropomorphism indirectly using a priming task [27, 39] implemented in PsychoPy v1.80.05. In this computerized task participants were instructed that their speed and accuracy would be evaluated. They were informed that they would see pairs of images appear briefly on the screen. The first picture (i.e., the prime) always depicted a robot and indicated the onset of the second picture (i.e., the target), which showed the silhouette of either a human or an object. Participants had to classify this target image as either a human or an object by pressing one of two keys on the keyboard. The experimenter explained that speed and accuracy would be important, but that if participants made a mistake, they should not worry and should continue with the task. Participants completed 48 practice trials prior to the critical trials to familiarize themselves with the task. During practice trials, no primes (i.e., images of robots) appeared on the screen.

In the critical trials, the prime was either a picture of Robovie R2 or PaPeRo (see Fig. 2). We used PaPeRo as a stimulus in order to explore whether participants would implicitly anthropomorphize only the robot with which they interacted or whether the effect would generalize to other robots. We used four silhouettes of humans and objects as the target images. Therefore, there were four possible prime \(\times \) target combinations (Robovie \(\times \) human, Robovie \(\times \) object, PaPeRo \(\times \) human, PaPeRo \(\times \) object). The prime image was displayed for 100 ms and was immediately replaced by the target image, which remained visible until a participant classified it or for a maximum of 500 ms (see Fig. 3). If participants did not classify the target image within that threshold, a screen with a message stating that they would have to respond faster appeared. For each trial, there was a 500 ms gap before the next prime appeared on the screen. There were 192 trials in total, and the order of pairs of images was random.
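The trial structure described above can be sketched as follows. This is only an illustration, not the original PsychoPy script: all names are hypothetical, and the sketch assumes that the 192 critical trials were split evenly across the four prime \(\times \) target combinations (48 each), which the text does not state.

```python
import random

# Timing parameters as reported in the text (milliseconds).
PRIME_MS = 100      # prime display duration
RESPONSE_MS = 500   # maximum response window for the target
GAP_MS = 500        # gap before the next prime appears

PRIMES = ["Robovie", "PaPeRo"]
TARGETS = ["human", "object"]
N_TRIALS = 192


def build_trials(seed=None):
    """Return a randomly ordered list of (prime, target) pairs.

    Assumption (not stated in the paper): trials are divided
    evenly across the four prime x target combinations.
    """
    combos = [(p, t) for p in PRIMES for t in TARGETS]
    per_combo = N_TRIALS // len(combos)
    trials = combos * per_combo
    random.Random(seed).shuffle(trials)
    return trials


trials = build_trials(seed=1)
```

Passing a fixed seed makes the order reproducible for debugging; in an actual session each participant would presumably receive a fresh random order.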

2.5 Design

We conducted an experiment based on a 2 \(\times \) 2 between-subjects design with the following factors: first, we manipulated a robot’s emotionality (unemotional vs. emotional) to change the degree of the robot’s anthropomorphism [55]. Second, we manipulated participants’ motivation for Type 2 processing (low vs. high) [16].

We implemented an experimental setup similar to the one described in [55]. The robot emotionality manipulation took place after the robot received feedback on its response to each question during the “Jeopardy!” game. In the emotional condition, the robot reacted to positive or negative feedback with a positive or negative emotional reaction, respectively. In the unemotional condition, the robot always reacted unemotionally. The robot we used in this experiment does not have the capability to convey facial expressions. However, according to [4], body cues are more important than facial expressions when it comes to discriminating intense positive and negative emotions. Therefore, we implemented positive reactions by having the robot make characteristic gestures, such as raising its hands, and sounds, such as “Yippee”, to indicate a positive emotional state. Similarly, negative reactions were expressed verbally (e.g., by exclaiming “Ohh”) and by using gestures (e.g., lowering the head). Previous studies have shown that body language [8], speech [50] and their combination [32] are suitable and recognizable means for robot emotion expression. In the unemotional condition the robot said in Japanese “Wakarimasu” (literally “I understand”, meaning “OK” in this context) and moved its hands randomly in order to ensure a similar level of animacy compared with the other condition. In both conditions, the robot’s reactions varied slightly each time.

The manipulation of participants’ motivation to engage in Type 2 processing was realized by changing the instructions provided to participants after they finished interacting with the robot. In the high motivation condition, participants were instructed that their task performance and responses to the subsequent questionnaires would be discussed after completing them, and that they would be asked to explain them (see [16]). In the low motivation condition, participants were told that the responses would be anonymized. No actual discussion took place in any condition.

2.6 “Jeopardy!” Game

In the “Jeopardy!” game contestants are presented with general knowledge clues that are formed as answers and they are asked to formulate an adequate question for these clues. In the present study, participants were assigned the role of the host and Robovie R2 served as the contestant in the interaction. For example, if a participant read a clue “There are no photographs, only illustrations of this symbol of Mauritius”, the robot should respond “What is a dodo?”.

A table with cards that featured clues and names of categories of these clues was placed next to the participants. The cards were presented upside-down, with the bottom side showing clues and the correct response. On the top side, each card featured a money value that served to reflect the alleged difficulty of the item. There were six categories and five questions within each category. All participants were told that they would be assigned to read five questions from the category “National Animals”. We assigned participants to one category in order to ensure that there would be no differences in difficulty between the categories. Participants were asked to read the clues at a normal pace and to provide feedback regarding the robot’s response. After that they were asked to proceed to the next question. Within the assigned category, they were allowed to ask questions in any order desired.

2.7 Procedure

Participants were told that they would participate in two ostensibly unrelated studies. In the alleged first study they were asked to report demographics and they completed the IDAQ and the Rational–Experiential Inventory. Upon completion, they were taken to the experimental room where the ostensible second study took place (Fig. 4). They were seated 1.2 m away from Robovie R2, facing it. Participants were instructed that they would play a game with the robot that was based on the “Jeopardy!” TV show. After ensuring that participants had understood the instructions, the experimenter started the robot and left the room.

To ensure that the robot’s responses and actions were in line with the respective condition, we used a Wizard of Oz approach. This implies that the robot was controlled by a research assistant who was sitting in an adjacent room. The responses were prepared before the experiment and they were identical for all participants. The robot would always answer three questions correctly and would get two other questions wrong. Incorrect answers would still appear logically possible as the robot named a wrong animal. After the fifth question, the robot asked participants to rate its performance on a scale from 1 (the worst performance) to 100 (the best performance). After that, it thanked them and asked them to call the experimenter. The experimenter took participants back to the computer where they completed the priming task and remaining questionnaires (humanlikeness and anthropomorphism). Although the order in which direct and indirect measures were administered is believed to have little impact on the measures [38], at least in some situations self-reports can activate the concepts evaluated by indirect measures [9]. Therefore, in our study, questionnaires were administered after the priming task. After filling out the questionnaires, participants were debriefed and dismissed. The entire study took approximately 20 min including a 5-min interaction time with the robot.

3 Results

3.1 Preliminary Analyses

Firstly, we checked the reliability of the scales used and then computed mean scores on participants’ responses to form indices for further statistical analyses. Higher mean scores reflect higher endorsement of the measured construct. Reliability analyses revealed that internal consistency was very low for FII, given a Cronbach’s \(\alpha = .38\). Due to this fact, we did not analyze this dimension further. Moreover, we removed one item from each of several scales so that they would reach adequate levels of reliability (see Table 1): NFC (i.e., “Thinking hard and for a long time about something gives me little satisfaction.”), humanlikeness (i.e., “Without Definite Lifespan - Mortal”) and HN (i.e., “nervous”). IDAQ had excellent reliability (Cronbach’s \(\alpha = .92\)). In the priming task, the internal consistency in recognition accuracy for human and object target images was high, Cronbach’s \(\alpha = .98\) and \(\alpha = .97\), respectively.
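For readers unfamiliar with the reliability index used here, the following minimal sketch computes Cronbach’s \(\alpha \) from a participants-by-items score table using the standard formula \(\alpha = \frac{k}{k-1}\left( 1 - \frac{\sum _i s_i^2}{s_T^2}\right) \), where \(k\) is the number of items, \(s_i^2\) the variance of item \(i\) and \(s_T^2\) the variance of the summed scores. The function name and the demonstration data are made up for illustration and are not the study data.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for a list of per-participant item scores.

    `rows` holds one list per participant, one score per item.
    Sample variances (n - 1 denominator) are used throughout.
    """
    k = len(rows[0])                                 # number of items
    item_var = sum(variance(col) for col in zip(*rows))
    total_var = variance([sum(r) for r in rows])     # variance of sum scores
    return k / (k - 1) * (1 - item_var / total_var)


# Made-up ratings for illustration only (4 participants, 3 items).
demo = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0], [3.0, 4.0, 5.0], [4.0, 5.0, 6.0]]
alpha = cronbach_alpha(demo)
```

In the made-up data the three items rise and fall together perfectly, so the index reaches its maximum of 1; items that share little variance, as for FII above, push the value toward zero.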

Secondly, we explored the role of demographic variables and individual difference measures as covariates for humanlikeness, HN, and the robot rating. In order to do that, we included gender, IDAQ and NFC as covariates in two-way ANCOVAs with emotionality and motivation as between-subjects factors and humanlikeness, HN and robot rating as dependent variables. None of these covariates had a statistically significant effect on dependent variables and they were dropped from further analyses.

3.2 Direct Measures

A two-way ANOVA with emotionality and motivation as between-subjects factors showed that there were no statistically significant main effects of emotionality (\(F(1,36) = 0.83, p = .37, \eta _G^2 = .02\)), motivation (\(F(1,36) = 0.11, p = .74, \eta _G^2 <.01\)) or an interaction effect on perceived humanlikeness (\(F(1,36) = 0.83, p = .37, \eta _G^2 = .02\)), see Fig. 5.

A two-way ANOVA with emotionality and motivation as between-subjects factors revealed a main effect of emotionality on attribution of HN traits to the robot, \(F(1,36) = 5.26, p = .03, \eta _G^2 = .13\) (see Fig. 6). In the emotional condition, participants attributed more HN to the robot (\(M = 3.70, SD = 0.46\)) than in the unemotional condition (\(M = 3.11, SD = 1.06\)). The main effect of motivation (\(F(1,36) = 1.71, p = .20, \eta _G^2 = .05\)) and the interaction effect were not statistically significant (\(F(1,36) = 0.04, p = .85, \eta _G^2 <.01\)).
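As a plausibility check on the reported effect sizes, the sketch below recovers an effect size from an \(F\) statistic and its degrees of freedom. It relies on the assumption that, in a between-subjects design in which all factors are manipulated, generalized eta squared coincides with partial eta squared, \(\eta ^2 = \frac{F \cdot df_{effect}}{F \cdot df_{effect} + df_{error}}\). The function name is hypothetical.

```python
def eta_squared_from_f(f_value, df_effect, df_error):
    """Partial eta squared implied by an F statistic.

    Assumption: for a fully manipulated between-subjects design,
    this equals the generalized eta squared reported in the text.
    """
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# Values reported above for the attribution of HN traits.
hn_emotionality = eta_squared_from_f(5.26, 1, 36)
hn_motivation = eta_squared_from_f(1.71, 1, 36)
```

Rounded to two decimals, both values agree with the effect sizes reported for the main effects of emotionality and motivation on HN.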

3.3 Indirect Measures

A two-way ANOVA with emotionality and motivation as between-subjects factors indicated a significant main effect of robot emotionality on the rating of its performance, \(F(1,36) = 5.67, p = .02, \eta _G^2 = .14\), see Fig. 7. Participants rated the performance of the robot in the emotional condition (\(M = 72.60, SD = 10.02\)) higher than in the unemotional condition (\(M = 63.85, SD = 12.87\)). The main effect of motivation (\(F(1,36) = 1.47, p = .23, \eta _G^2 = .04\)) and the interaction effect were not statistically significant (\(F(1,36)<0.01, p = .95, \eta _G^2 <.01\)).

In the priming task, responses with reaction times of less than 100 ms or more than 500 ms were excluded from the analyses. Two-way ANOVAs with emotionality and motivation as between-subjects factors revealed that neither the main effects of emotionality, motivation nor an interaction effect were statistically significant for any prime \(\times \) target pair, see Table 2.
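The exclusion rule can be expressed compactly in code. The sketch below is an assumption about how the data might be organized, not the authors’ analysis script: each trial is a dictionary with hypothetical keys, and recognition accuracy is scored as the proportion of correct classifications per prime \(\times \) target pair after exclusion.

```python
MIN_RT_MS = 100   # faster responses are excluded
MAX_RT_MS = 500   # slower responses are excluded


def recognition_accuracy(trials):
    """Proportion correct per (prime, target) pair after RT exclusion.

    Each trial is a dict with hypothetical keys:
    'prime', 'target', 'rt_ms' and 'correct' (bool).
    """
    kept = {}
    for t in trials:
        if not (MIN_RT_MS <= t["rt_ms"] <= MAX_RT_MS):
            continue  # outside the accepted reaction-time window
        kept.setdefault((t["prime"], t["target"]), []).append(t["correct"])
    return {pair: sum(v) / len(v) for pair, v in kept.items()}


# Made-up trials for illustration only.
demo = [
    {"prime": "Robovie", "target": "human", "rt_ms": 320, "correct": True},
    {"prime": "Robovie", "target": "human", "rt_ms": 80, "correct": False},   # too fast
    {"prime": "Robovie", "target": "human", "rt_ms": 410, "correct": False},
    {"prime": "PaPeRo", "target": "object", "rt_ms": 650, "correct": True},   # too slow
]
accuracy = recognition_accuracy(demo)
```

A pair whose trials are all excluded simply does not appear in the result, which avoids a division by zero.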

Table 3 shows that there was a weak, negative and statistically non-significant correlation between recognition accuracy for the Robovie \(\times \) human pair, and direct and indirect measures of anthropomorphism. There was also a statistically significant positive correlation for recognition accuracy between Robovie \(\times \) human and Robovie \(\times \) object pairs, \(r(38) = .34, p = .03\).

Priming tasks are robust against faking responses and we deliberately administered a task that would only allow for a response window of 500 ms. We did so to undermine controlled responses. However, to make sure that our results were not due to participants who had artificially slowed down their responses, we analyzed reaction times and did not find statistically significant differences for any prime \(\times \) target pairs. Therefore, this alternative explanation can be discarded.

3.4 The Role of Motivation for Type 2 Processing on Anthropomorphism

Despite the lack of statistically significant effects of the motivation for Type 2 processing manipulation on the anthropomorphism measures, it is still possible that participants in the high motivation condition provided more normative responses than those in the low motivation condition. Therefore, we expected that the relation between the direct measures of anthropomorphism and robot rating would be stronger in the low motivation than in the high motivation condition, i.e., the direct measures of anthropomorphism would be stronger predictors of robot rating in the low motivation than in the high motivation condition. We tested this by fitting linear regression lines separately for the low and high motivation conditions with HN and humanlikeness as predictors of robot rating.

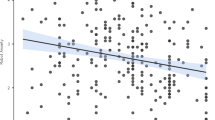

A simple linear regression indicated that there was a statistically significant effect of HN on robot rating in the low motivation condition, \(F(1,18) = 5.00, p = .04, R^2 = .22\) with \(\beta = 7.74\). However, the same linear regression in the high motivation condition was not statistically significant, \(F(1,18)<0.01, p = .95, R^2 <.01\) with \(\beta = -0.2\) (Fig. 8).
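To make the per-condition analysis concrete, the following sketch fits a simple least-squares regression and derives \(R^2\) and \(F\) from it, using \(F = \frac{R^2}{1 - R^2}(n - 2)\) for a single predictor. The residual degrees of freedom of 18 reported above are consistent with 20 participants per motivation condition. The function name and the data are made up for illustration; this is not the authors’ analysis code.

```python
def simple_regression(x, y):
    """Least-squares fit of y on a single predictor x.

    Returns (slope, intercept, r_squared, f_statistic), where the
    F statistic has 1 and n - 2 degrees of freedom.
    """
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_total = sum((yi - mean_y) ** 2 for yi in y)
    ss_resid = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    r_squared = 1 - ss_resid / ss_total
    f_statistic = r_squared / (1 - r_squared) * (n - 2)
    return slope, intercept, r_squared, f_statistic


# Made-up predictor and outcome values for illustration only.
slope, intercept, r2, f = simple_regression([1, 2, 3, 4], [2, 4, 5, 8])
```

Running the function once per motivation condition, with HN or humanlikeness as `x` and robot rating as `y`, would mirror the structure of the analysis described here.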

A simple linear regression indicated that there was no statistically significant effect of humanlikeness on robot rating in the low motivation condition, \(F(1,18) = 5.25, p = .14, R^2 = .12\) with \(\beta = 5.25\). Similarly, a simple linear regression in the high motivation condition was not statistically significant for humanlikeness as a predictor of robot rating, \(F(1,18) = 1.03, p = .32, R^2 = .05\) with \(\beta = 3.91\) (Fig. 8).

4 Discussion

In the present research we investigated whether anthropomorphism would be a result of Type 1 and Type 2 processing. Furthermore, we explored the relationship between treatment of a robot as a social actor and anthropomorphizing it. To do so, we manipulated a robot’s capability to express emotions in order to affect the extent to which it is being anthropomorphized and participants’ motivation for Type 2 processing to differentiate between implicit and explicit anthropomorphism.

In particular, we hypothesized that a manipulation of a robot’s anthropomorphism through its ability to express emotions would affect both direct and indirect measures of anthropomorphism, but that motivation for Type 2 processing would affect solely the direct measures of anthropomorphism (Hypothesis 1). This hypothesis was not supported by our results. We did not find statistically significant differences in responses between participants in the high and the low motivation conditions. Neither the attribution of HN traits nor perceived humanlikeness was affected by this manipulation. Similarly, we did not find statistically significant differences on the indirect measure of anthropomorphism between the low and high motivation conditions.

We found that the robot emotionality manipulation affected the extent to which the robot was anthropomorphized, with people attributing more HN traits when the robot was capable of expressing emotions. This is consistent with previous research [55], which shows that a robot’s capability to express emotions during HRI affects direct measures of anthropomorphism. However, in contrast to that study, we did not find a significant difference in the rating of Robovie R2’s humanlikeness. Nevertheless, the robot in the emotional condition had a higher mean humanlikeness score than in the unemotional condition, which was expected based on the previous findings [55]. On the other hand, Robovie R2 was not significantly more strongly associated with the human category in the emotional than in the unemotional condition, as shown by the lack of difference for the Robovie x human pair between these two conditions. Based on our hypothesis, this would suggest that people did not anthropomorphize the robot implicitly.

Hypothesis 2 stated that social responses toward robots would be related to indirect, but not direct, measures of anthropomorphism. This hypothesis was not supported. The indirect measure of anthropomorphism (priming task) was not correlated with the robot rating. On the other hand, the direct measure of anthropomorphism was linearly related to robot rating in the low motivation, but not the high motivation condition. If people treat technology as a social actor but do not see it as human, as suggested by Nass and Moon [36] for the Media Equation theory, the relation between anthropomorphism and social treatment of a robot should not be affected by the motivation manipulation. Moreover, manipulation of a robot’s emotionality should not affect its social treatment. However, our results contradict these assumptions. This led us to consider whether our focus on direct and indirect measures, instead of explicit and implicit anthropomorphism, was too simplistic.

The above discussion is based on the assumption that direct measures reflect only explicit anthropomorphism while indirect measures reflect only implicit anthropomorphism. However, some of the obtained results do not fit this interpretation. If anthropomorphism were driven purely by the Type 2 process, the relation between explicit anthropomorphism and participants’ social responses toward robots should be the same in both motivation conditions. Although participants’ motivation for Type 2 processing did not have a statistically significant effect on the direct measures, we observed that participants’ social responses toward robots were related to direct measures of anthropomorphism only in the low motivation condition. The effect was stronger for the attribution of HN traits, which was a significant predictor of robot rating in the low motivation condition, while HN and robot rating were unrelated in the high motivation condition. While not statistically significant, a similar pattern can be observed for the relationship between humanlikeness and robot rating as a function of the motivation manipulation.

4.1 Model of Dual Anthropomorphism

We suggest that differentiating between direct/indirect measures and implicit/explicit anthropomorphism provides a better explanation of past and current research findings. In particular, the model of dual attitudes proposed by Wilson et al. [51] can be seen as analogous to the model of anthropomorphism that we propose. The following four properties of their model are relevant to the current discussion:

1. Explicit and implicit attitudes toward the same object can coexist.

2. The implicit attitude is activated automatically, while the explicit attitude requires more capacity and motivation. A motivated person with cognitive capacity can override an implicit attitude and report an explicit attitude. However, when people do not have capacity or motivation, they report the implicit attitude.

3. Even when an explicit attitude has been retrieved, the implicit attitude affects implicit responses (uncontrollable responses or those viewed as not representing one’s attitude).

4. Implicit attitudes are more resistant to change than explicit attitudes.

Parallel properties of a model of dual anthropomorphism would lead to the following interpretation of our findings. People can anthropomorphize an object explicitly and implicitly at the same time. Implicit anthropomorphism is activated automatically and is what people report, unless a person has the cognitive capacity and motivation to retrieve explicit anthropomorphism. The important implication is that the direct measures of anthropomorphism can reflect either implicit or explicit anthropomorphism. In the low motivation condition, participants were not motivated to engage in the effortful task of retrieving explicit anthropomorphism and reported implicit anthropomorphism even in questionnaires. On the other hand, these measures in the high motivation condition reflect explicit anthropomorphism.

If we entertain this idea, it becomes possible to understand why social responses to a robot are related to direct measures of anthropomorphism only in the low motivation condition, but not in the high motivation condition. The indirect responses are still controlled by implicit anthropomorphism even when explicit anthropomorphism has been retrieved. In the low motivation condition, the direct measures reflect implicit anthropomorphism, which also drives the uncontrolled responses (robot rating), so a positive relationship between the direct measures of anthropomorphism and social treatment of the robot can be observed. On the other hand, in the high motivation condition, the direct measures reflect explicit anthropomorphism, while the robot rating is still driven by implicit anthropomorphism. As a result, the relation between the direct measures and social treatment of the robot is weaker or non-existent.
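The reasoning in this paragraph can be made concrete with a toy simulation. It is only a sketch under strong assumptions that go beyond what we measured: implicit and explicit anthropomorphism are modelled as independent standard normal variables, motivated participants report only the explicit value, unmotivated participants report only the implicit value, and the robot rating always follows the implicit value. All names (`simulate_condition`, `pearson_r`) and noise levels are hypothetical.

```python
import random
from math import sqrt


def pearson_r(a, b):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((ai - mean_a) * (bi - mean_b) for ai, bi in zip(a, b))
    var_a = sum((ai - mean_a) ** 2 for ai in a)
    var_b = sum((bi - mean_b) ** 2 for bi in b)
    return cov / sqrt(var_a * var_b)


def simulate_condition(motivated, n, rng):
    """Return (direct_measure, robot_rating) lists for n simulated participants."""
    direct, rating = [], []
    for _ in range(n):
        # Assumption: two independent latent judgments about the same robot.
        implicit = rng.gauss(0.0, 1.0)
        explicit = rng.gauss(0.0, 1.0)
        # Assumption: motivated participants retrieve and report the explicit
        # judgment; unmotivated participants report the implicit one.
        reported = explicit if motivated else implicit
        direct.append(reported + rng.gauss(0.0, 1.0))   # questionnaire noise
        # Assumption: the uncontrolled response (robot rating) is always
        # driven by the implicit judgment, whatever is reported.
        rating.append(implicit + rng.gauss(0.0, 1.0))   # behavioural noise
    return direct, rating


rng = random.Random(0)  # fixed seed so the run is reproducible
r_low = pearson_r(*simulate_condition(motivated=False, n=10_000, rng=rng))
r_high = pearson_r(*simulate_condition(motivated=True, n=10_000, rng=rng))
print(f"low motivation r = {r_low:.2f}, high motivation r = {r_high:.2f}")
```

Under these assumptions the direct measure and the robot rating share a common source only in the low motivation condition, so a clearly positive correlation appears there and a correlation near zero appears in the high motivation condition. If implicit and explicit anthropomorphism were allowed to correlate, the high motivation relation would be weaker than the low motivation one without vanishing.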

The last aspect to consider is the meaning of the indirect measure of anthropomorphism (priming task). Wilson et al. [51] proposed that implicit attitudes are harder to change than explicit attitudes, which would translate into explicit anthropomorphism being easier to change than implicit anthropomorphism. On the surface our results are consistent with this hypothesis: the emotionality manipulation affected the direct, but not the indirect, measures of anthropomorphism. However, if social responses to robots are driven by implicit anthropomorphism, there should be no effect of the emotionality manipulation on the robot rating, and that is inconsistent with our data. Alternatively, Wilson and colleagues [51] hinted that a person can have several implicit attitudes at the same time, so social responses to a robot could be related to a different implicit anthropomorphism than the one measured with the priming task. A third possibility that should not be discarded concerns the priming task as a measure itself. In the present experiment we used a priming task with stimuli that featured silhouettes of humans to measure implicit associations. The validity of this indirect measurement remains an open question. Currently, there are no validated indirect measurement tools with which we could compare our measurement. We expected to find some positive correlations between the direct and indirect measures, at least in the low motivation condition, and between the priming task and robot rating. However, the correlations were weak and negative, which makes the construct validity of our measurement questionable. Future work should focus on the development of indirect measures that can help to disentangle the influence of implicit and explicit anthropomorphism.

After discussing how the model of dual anthropomorphism can explain the results of our study, we would like to place it in the broader context of the anthropomorphism literature and the Media Equation theory. In her discussion of anthropomorphism, Airenti [3] gives the example of ELIZA [49], a program that took the role of a psychotherapist when interacting with a user. It had rather simple functionality, yet users interacted with it at length. Interestingly, these users were students and researchers from the same laboratory as the author of the program and were fully aware of the nature of the program. Airenti reasoned that “people may act toward objects as if they were endowed with mental states and emotions but they do not believe that they really have mental states and emotions” [3, p. 9]. She argued that the interaction context is key for the activation of anthropomorphic attributions and responses. This view is also echoed in the writings of Damiano et al. [10]. The results of our study and the model we propose are in line with their proposal that treating a robot during an interaction as if it had mental states can differ from believing that it has them, as long as these beliefs represent explicit anthropomorphism. According to Airenti [3] and Damiano et al. [10], people should not anthropomorphize images of objects, since they do not engage in an interaction with them. However, numerous studies show that people attribute human characteristics and mind to images and videos of different types of robots, e.g. [33, 54]. We argue that the interaction context enables people to exhibit anthropomorphic judgments, but is not necessary for anthropomorphism to occur. Responses during an interaction are driven by implicit anthropomorphism, whereas both implicit and explicit anthropomorphism can drive the attribution of human characteristics to images of robots. We find this interpretation more plausible since it can explain the anthropomorphization of non-interactive objects.

The findings of this study also shed light on the Media Equation theory [40]. Nass and Moon [36] refuted the idea that social treatment of technology is a result of anthropomorphism. They based their discussion on the fact that the participants in their studies were adult, experienced computer users who denied anthropomorphizing computers when they were debriefed. As shown in our study, even the mere expectation that participants would have to justify their ratings of anthropomorphism was sufficient to weaken the relation between anthropomorphism and social responses. It is likely that participants in the “Computers are Social Actors” studies had an even higher motivation to provide responses that represent explicit anthropomorphism when they were asked directly and verbally by an experimenter. Instead, Nass and Moon [36] termed social responses ethopoeia, which involves direct responses to an object as if it were human while knowing that the object does not warrant human treatment. However, while ethopoeia accurately describes human behavior, it does not explain why the behavior occurs. This supposedly paradoxical behavior can, on the other hand, be explained by the model of dual anthropomorphism.

5 Conclusions

To our knowledge, this study is the first empirical research to investigate the involvement of Type 1 and Type 2 processing in the anthropomorphization of robots. The results thus represent a unique and relevant contribution to the existing literature on anthropomorphism of objects in general. The proposed model of dual anthropomorphism serves best in explaining the present findings as well as previous results regarding anthropomorphism and the Media Equation. In particular, we propose that people anthropomorphize non-human agents both implicitly and explicitly. Implicit and explicit anthropomorphism may differ from each other. Implicit anthropomorphism is activated automatically through a Type 1 process, while activation of explicit anthropomorphism requires more cognitive capacity and motivation and is a result of a Type 2 process. In situations when a person does not have the capacity or motivation to engage in deliberate cognitive processes, implicit anthropomorphism will be reported. On the other hand, a motivated person with cognitive capacity can override implicit anthropomorphism and report explicit anthropomorphism. Nevertheless, even if a person retrieves explicit anthropomorphism, uncontrollable responses or those viewed as not representing a person’s beliefs will still be driven by implicit anthropomorphism.

The proposed model of dual anthropomorphism can explain anthropomorphic judgments about non-interactive objects, which was not possible based on previous ideas that regarded interaction as a key component of anthropomorphism. Furthermore, we were able to show that social responses to a robot are related to anthropomorphism as long as people are not motivated to provide ratings based on explicit anthropomorphism. This distinction between implicit and explicit anthropomorphism also provides an explanation for the Media Equation. The previously refuted link [36] between the Media Equation and anthropomorphism is an artefact of narrowing the latter to a conscious, mindful process. Considering the ease with which people attribute human characteristics to objects, it becomes improbable that such a process of anthropomorphism is an accurate representation of human cognition. According to our model, social treatment of technology reflects implicit anthropomorphism. This can also explain why even people who develop robotic platforms regularly describe them in anthropomorphic terms despite knowing that these machines do not feel or have emotions.

Finally, the proposed model has implications for the measurement of anthropomorphism. There is a clear need for the development of valid indirect measures of implicit anthropomorphism. These measures will permit researchers to go beyond the existing direct measures and to shed light on the unique consequences of both types of anthropomorphism for human–robot interaction.

References

Aaker JL (1997) Dimensions of brand personality. J Market Res 34(3):347–356 http://www.jstor.org/stable/3151897

Aggarwal P, McGill AL (2007) Is that car smiling at me? Schema congruity as a basis for evaluating anthropomorphized products. J Consum Res 34(4):468–479

Airenti G (2015) The cognitive bases of anthropomorphism: from relatedness to empathy. Int J Soc Robot 7(1):117–127

Aviezer H, Trope Y, Todorov A (2012) Body cues, not facial expressions, discriminate between intense positive and negative emotions. Science 338(6111):1225–1229. https://doi.org/10.1126/science.1224313

Barrett J (2004) Why would anyone believe in God? AltaMira Press, Lanham

Bartneck C, Verbunt M, Mubin O, Al Mahmud A (2007) To kill a mockingbird robot. In: HRI 2007—Proceedings of the 2007 ACM/IEEE conference on human-robot interaction—robot as team member, Arlington, VA, United States, pp 81–87. https://doi.org/10.1145/1228716.1228728

Bartneck C, Kulic D, Croft E, Zoghbi S (2009) Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int J Soc Robot 1(1):71–81. https://doi.org/10.1007/s12369-008-0001-3

Beck A, Cañamero L, Bard KA (2010) Towards an affect space for robots to display emotional body language. In: 19th International symposium in robot and human interactive communication, pp 464–469. https://doi.org/10.1109/ROMAN.2010.5598649

Bosson JK, Swann WB, Pennebaker JW (2000) Stalking the perfect measure of implicit self-esteem: the blind men and the elephant revisited? J Pers Soc Psychol 79(4):631–643

Damiano L, Dumouchel P, Lehmann H (2015) Towards human-robot affective co-evolution overcoming oppositions in constructing emotions and empathy. Int J Soc Robot 7(1):7–18

Darlow A, Sloman S (2010) Two systems of reasoning: architecture and relation to emotion. Wiley Interdiscip Rev Cogn Sci 1(3):382–392. https://doi.org/10.1002/wcs.34

Darwin C (1872) 1998. The expression of the emotions in man and animals. Oxford University Press, New York

De Houwer J (2006) What are implicit measures and why are we using them? In: Wiers RW, Stacy AW (eds) The handbook of implicit cognition and addiction. Thousand Oaks, CA, pp 11–29

Epley N, Waytz A, Cacioppo JT (2007) On seeing human: a three-factor theory of anthropomorphism. Psychol Rev 114(4):864–886. https://doi.org/10.1037/0033-295X.114.4.864

Epstein S, Pacini R, Denes-Raj V, Heier H (1996) Individual differences in intuitive–experiential and analytical–rational thinking styles. J Pers Soc Psychol 71(2):390–405. https://doi.org/10.1037/0022-3514.71.2.390

Evans J, Stanovich K (2013) Dual-process theories of higher cognition: advancing the debate. Perspect Psychol Sci 8(3):223–241. https://doi.org/10.1177/1745691612460685

Evans JSB (2008) Dual-processing accounts of reasoning, judgment, and social cognition. Annu Rev Psychol 59:255–278

Eyssel F, Hegel F, Horstmann G, Wagner C (2010) Anthropomorphic inferences from emotional nonverbal cues: a case study. In: Proceedings of the IEEE international workshop on robot and human interactive communication, Viareggio, Italy, pp 646–651. https://doi.org/10.1109/ROMAN.2010.5598687

Eyssel FA, Schiffhauer B, Dalla Libera F, Yoshikawa Y, Złotowski J, Wullenkord R, Ishiguro H (2016) Mind perception: from simple shapes to social agents. In: Proceedings of the 25th IEEE international symposium on robot and human interactive communication (RO-MAN 2016), pp 916–917

Fischer K (2011) Interpersonal variation in understanding robots as social actors. In: Proceedings of the 6th international conference on human-robot interaction, ACM, New York, NY, USA, pp 53–60. https://doi.org/10.1145/1957656.1957672

Fischer K, Lohan KS, Foth K (2012) Levels of embodiment: Linguistic analyses of factors influencing HRI. In: HRI’12—Proceedings of the 7th annual ACM/IEEE international conference on human-robot interaction, pp 463–470. https://doi.org/10.1145/2157689.2157839

Friedman B, Kahn PH Jr, Hagman J (2003) Hardware companions?: what online AIBO discussion forums reveal about the human-robotic relationship. In: Proceedings of the SIGCHI conference on human factors in computing systems, ACM, New York, NY, USA, CHI ’03, pp 273–280. https://doi.org/10.1145/642611.642660

Fussell SR, Kiesler S, Setlock LD, Yew V (2008) How people anthropomorphize robots. In: Proceedings of the 3rd ACM/IEEE international conference on human-robot interaction: living with robots, Amsterdam, Netherlands, pp 145–152. https://doi.org/10.1145/1349822.1349842

Gawronski B, Bodenhausen GV (2006) Associative and propositional processes in evaluation: an integrative review of implicit and explicit attitude change. Psychol Bull 132(5):692–731

Gergely G, Csibra G (2003) Teleological reasoning in infancy: the naïve theory of rational action. Trends Cogn Sci 7(7):287–292

Gergely G, Nádasdy Z, Csibra G, Bíró S (1995) Taking the intentional stance at 12 months of age. Cognition 56(2):165–193

Greenwald AG, Banaji MR (1995) Implicit social cognition: attitudes, self-esteem, and stereotypes. Psychol Rev 102(1):4–27

Hard R (2004) The Routledge handbook of Greek mythology: based on HJ Rose’s “Handbook of Greek Mythology”. Psychology Press, London

Haslam N (2006) Dehumanization: an integrative review. Pers Soc Psychol Rev 10(3):252–264. https://doi.org/10.1207/s15327957pspr1003_4

Heider F, Simmel M (1944) An experimental study of apparent behavior. Am J Psychol 57(2):243–259. https://doi.org/10.2307/1416950

Ho C, MacDorman K (2010) Revisiting the uncanny valley theory: developing and validating an alternative to the Godspeed indices. Comput Hum Behav 26(6):1508–1518. https://doi.org/10.1016/j.chb.2010.05.015

Johnson DO, Cuijpers RH, Pollmann K, van de Ven AA (2016) Exploring the entertainment value of playing games with a humanoid robot. Int J Soc Robot 8(2):247–269

Kamide H, Eyssel F, Arai T (2013) Psychological anthropomorphism of robots. In: Herrmann G, Pearson M, Lenz A, Bremner P, Spiers A, Leonards U (eds) Social robotics, lecture notes in computer science, vol 8239. Springer International Publishing, pp 199–208. https://doi.org/10.1007/978-3-319-02675-6_20

Kuchenbrandt D, Riether N, Eyssel F (2014) Does anthropomorphism reduce stress in HRI? In: Proceedings of the 2014 ACM/IEEE international conference on human-robot interaction, ACM, New York, NY, USA, HRI ’14, pp 218–219. https://doi.org/10.1145/2559636.2563710

Lemaignan S, Fink J, Dillenbourg P, Braboszcz C (2014) The cognitive correlates of anthropomorphism. In: 2014 Human-robot interaction conference, workshop “HRI: a bridge between robotics and neuroscience”. http://infoscience.epfl.ch/record/196441

Nass C, Moon Y (2000) Machines and mindlessness: social responses to computers. J Soc Issues 56(1):81–103. https://doi.org/10.1111/0022-4537.00153

Nass C, Steuer J, Tauber ER (1994) Computers are social actors. In: Proceedings of the SIGCHI conference on human factors in computing systems, ACM, New York, NY, USA, CHI ’94, pp 72–78. https://doi.org/10.1145/191666.191703

Nosek BA, Greenwald AG, Banaji MR (2005) Understanding and using the implicit association test: II. Method variables and construct validity. Pers Soc Psychol Bull 31(2):166–180

Payne B (2001) Prejudice and perception: the role of automatic and controlled processes in misperceiving a weapon. J Pers Soc Psychol 81(2):181–192. https://doi.org/10.1037//0022-3514.81.2.181

Reeves B, Nass C (1996) How people treat computers, television, and new media like real people and places. CSLI Publications and Cambridge University Press, Cambridge

Salem M, Eyssel F, Rohlfing K, Kopp S, Joublin F (2011) Effects of gesture on the perception of psychological anthropomorphism: a case study with a humanoid robot. In: Mutlu B, Bartneck C, Ham J, Evers V, Kanda T (eds) Social robotics, Lecture Notes in Computer Science, vol 7072. Springer, Berlin Heidelberg, pp 31–41. https://doi.org/10.1007/978-3-642-25504-5_4

Sims VK, Chin MG, Lum HC, Upham-Ellis L, Ballion T, Lagattuta NC (2009) Robots’ auditory cues are subject to anthropomorphism. Proc Hum Factors Ergonom Soc 3:1418–1421. https://doi.org/10.1177/154193120905301853

Smith E, DeCoster J (2000) Dual-process models in social and cognitive psychology: conceptual integration and links to underlying memory systems. Pers Soc Psychol Rev 4(2):108–131. https://doi.org/10.1207/S15327957PSPR0402_01

Strack F, Deutsch R (2004) Reflective and impulsive determinants of social behavior. Pers Soc Psychol Rev 8(3):220–247

Walters ML, Syrdal DS, Koay KL, Dautenhahn K, Te Boekhorst R (2008) Human approach distances to a mechanical-looking robot with different robot voice styles. In: Proceedings of the 17th IEEE international symposium on robot and human interactive communication, RO-MAN, pp 707–712. https://doi.org/10.1109/ROMAN.2008.4600750

Wang E, Lignos C, Vatsal A, Scassellati B (2006) Effects of head movement on perceptions of humanoid robot behavior. In: HRI 2006: proceedings of the 2006 ACM conference on human-robot interaction, vol 2006. pp 180–185. https://doi.org/10.1145/1121241.1121273

Waytz A, Cacioppo J, Epley N (2010) Who sees human? The stability and importance of individual differences in anthropomorphism. Perspect Psychol Sci 5(3):219–232. https://doi.org/10.1177/1745691610369336

Waytz A, Klein N, Epley N (2013) Imagining other minds: Anthropomorphism is hair-triggered but not hare-brained. In: Taylor M (ed) The Oxford handbook of the development of imagination, pp 272–287

Weizenbaum J (1966) Eliza-a computer program for the study of natural language communication between man and machine. Commun ACM 9(1):36–45

Wesson CJ, Pulford BD (2009) Verbal expressions of confidence and doubt. Psychol Rep 105(1):151–160

Wilson TD, Lindsey S, Schooler TY (2000) A model of dual attitudes. Psychol Rev 107(1):101–126

Yoshikawa Y, Shinozawa K, Ishiguro H, Hagita N, Miyamoto T (2006) Responsive robot gaze to interaction partner. In: Proceedings of robotics: science and systems

von Zitzewitz J, Boesch PM, Wolf P, Riener R (2013) Quantifying the human likeness of a humanoid robot. Int J Soc Robot 5(2):263–276. https://doi.org/10.1007/s12369-012-0177-4

Złotowski J, Bartneck C (2013) The inversion effect in HRI: Are robots perceived more like humans or objects? In: Proceedings of the 8th ACM/IEEE international conference on human-robot interaction, IEEE Press, Tokyo, Japan, HRI ’13, pp 365–372. https://doi.org/10.1109/HRI.2013.6483611

Złotowski J, Strasser E, Bartneck C (2014) Dimensions of anthropomorphism: from humanness to humanlikeness. In: Proceedings of the 2014 ACM/IEEE international conference on human-robot interaction, ACM, Bielefeld, Germany, HRI ’14, pp 66–73. https://doi.org/10.1145/2559636.2559679

Złotowski J, Proudfoot D, Yogeeswaran K, Bartneck C (2015) Anthropomorphism: opportunities and challenges in human-robot interaction. Int J Soc Robot 7(3):347–360

Acknowledgements

The authors would like to thank Kaiko Kuwamura, Daisuke Nakamichi, Junya Nakanishi, Masataka Okubo and Kurima Sakai for their help with data collection. The authors are very grateful for the helpful comments from the anonymous reviewers. This work was partially supported by JST CREST (Core Research for Evolutional Science and Technology) research promotion program “Creation of Human-Harmonized Information Technology for Convivial Society” Research Area, ERATO, ISHIGURO symbiotic Human–Robot Interaction Project and the European Project CODEFROR (FP7-PIRSES-2013-612555).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Złotowski, J., Sumioka, H., Eyssel, F. et al. Model of Dual Anthropomorphism: The Relationship Between the Media Equation Effect and Implicit Anthropomorphism. Int J of Soc Robotics 10, 701–714 (2018). https://doi.org/10.1007/s12369-018-0476-5

DOI: https://doi.org/10.1007/s12369-018-0476-5