Abstract

Interactive sonification is a well-known guidance method in navigation tasks. Researchers have repeatedly suggested the use of interactive sonification in neuronavigation and image-guided surgery. The hope is to reduce clinicians’ cognitive load by relieving the visual channel, while preserving the precision provided by image guidance. In this paper, we present a surgical use case, simulating a craniotomy preparation with a skull phantom. Using auditory, visual, and audiovisual guidance, non-clinicians successfully found targets on a skull that provides hardly any visual or haptic landmarks. The results show that interactive sonification enables novice users to navigate through three-dimensional space with high precision. The precision along the depth axis is highest in the audiovisual guidance mode, but adding audio leads to longer task durations and longer motion trajectories.

1 Introduction

Image-guided surgery is an umbrella term for surgical interventions in which planning and execution are supported by pre-operative patient images, such as magnetic resonance imaging or computed tomography [1,2,3]. Image guidance is necessary for complicated interventions, for example, when a tumor lies close to a risk structure, such as a large vessel, sensitive membrane, or important nerve, or when an ablation needle has to reach a tumor past impenetrable bones. To plan the intervention, the image is segmented into parts that belong together, like muscles, vessels, bones, nerves, tumor, and surrounding healthy tissue. Segmented images are augmented, e.g., by giving structures individual colors and opacity levels. With the help of such an augmented, three-dimensional image, the surgical procedure is planned. For example, the locations of burr holes are planned such that a very specific part of the skull can be removed during a craniotomy. In many interventions, visual, computer-assisted guidance tools are used: the position of the surgical tool is tracked in relation to the patient anatomy. A computer screen displays a pseudo-3D image of the augmented patient anatomy, overlaid with the planned points or path and a depiction of the surgical tool.

Auditory display has been suggested as a navigation aid for computer-assisted surgical procedures for decades [4,5,6,7,8,9]. It is well recognized that computer-assisted navigation enables clinicians to carry out complicated interventions with high precision, even in minimally invasive surgery, where there is little or no direct view of the lesion. One shortcoming of visual guidance is that visual attention is captured by the screen instead of the patient. This can cause a non-ergonomic posture and may reduce the precision of natural hand-eye coordination. Moreover, extracting three-dimensional navigation information from a two-dimensional computer screen causes a high cognitive load [10]. Interactive sonification has the potential to overcome these issues.

Experiments under laboratory conditions [11,12,13,14,15,16,17] as well as case reports of clinical implementations [18,19,20] exist. An overview is provided in [21]. These studies have led to similar conclusions: interactive sonification is a successful guidance tool for image-guided surgery. Auditory guidance tends to take significantly longer than visual and audiovisual guidance, and leads to lower precision and a higher subjective workload. In turn, it leaves the visual channel completely unoccupied. Audiovisual guidance often enables the highest precision, while the workload is similar to that of visual guidance. It allows users to shift their visual focus from the monitor to the patient and their own hands from time to time. These are very promising results that clearly show the potential of sonification in image-guided surgery.

However, all these studies used sonifications that provide only very little navigation information. None of them provides (1) three dimensions that are (2) orthogonal and (3) continuous, and that (4) exhibit a high resolution, (5) two polarities (e.g., left and right, front and back), and (6) an absolute coordinate origin.

For example, [15, 19, 20] provide three discrete distance cues to assist clinicians in marking a cutting trajectory for cancer resection and in avoiding critical nerves and membranes. But these sonifications tend to have discontinuous dimensions, only one polarity, a low resolution, and no absolute coordinate origin. [18] provides a one-dimensional sonification with one polarity, indicating the distance to the nearest critical structure to help clinicians avoid harming sensitive membranes, important nerves, or large vessels. [17] describe multiple one-dimensional sonifications with one polarity that should serve as distance indicators for many kinds of surgical interventions. [14] provide two-dimensional sonifications with one polarity each and no absolute coordinate origin, which is why they added a confirmation earcon that sounds whenever the target is reached. One sonification helped find the location on the patient’s back (simplified to a two-dimensional surface), and a second sonification helped identify the two incision angles in a pedicle screw placement task. [13] implemented a two-dimensional sonification that has to alternate with a reference tone in order to provide an absolute coordinate origin. Alternating with a reference tone interrupts the navigation. Furthermore, one of its two dimensions provides only discrete steps rather than a continuous scale. That sonification aims at navigating a clinician towards the incision point of a needle on a (more or less) two-dimensional surface, like the abdomen. [22] provide two continuous dimensions, both with two polarities and an absolute coordinate origin. Again, the intended use case is to guide a clinician towards a pre-planned needle-insertion point on a comparatively flat surface, like the abdomen.

Motivated by the results of the presented studies, we implemented and experimentally evaluated our psychoacoustic sonification. This is the first study in which the sonification simultaneously provides continuous navigation information about three dimensions, each with two polarities, a high resolution and an absolute coordinate origin. Some surgical procedures cannot be narrowed down to one- or two-dimensional tasks. For such procedures, a three-dimensional sonification is needed.

The surgical use case is: finding bone drilling points on the skull for a craniotomy. In a craniotomy, a constellation of burr holes is drilled at specific points on the skull. After drilling, the holes are connected via manually pulled craniotomy saw wires, so that a region of the skull can be removed. This procedure is often image-guided to ensure that the opening enables removal of the correct portion of the skull, e.g., right above a cerebral edema, or at the optimal location to reach a tumor from a pre-planned angle. This procedure is a three-dimensional (three degrees of freedom) task, where the three-dimensional location on the skull needs to be found, while the orientation of the drill is not crucial.

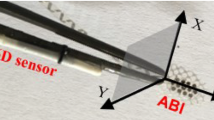

Fig. 1 Experiment setup with a styrofoam skull and an electromagnetic transmitter glued on a board. The position of the stylus tip is tracked. In the auditory guidance mode, the direction from the tip position to the target position is sonified through loudspeakers, and the screen is black. In the visual guidance mode, the tip position and the target are visualized together with a pseudo-3D model of the skull. In the audiovisual guidance mode, both sonification and visualization are active. Photo taken from [7]

1.1 Aim and research question

In the study at hand, we evaluate how well a three-dimensional sonification can guide users towards a target in comparison to visual and audiovisual guidance.

The potential danger of adding more guidance information to a sonification is that it may overwhelm users, preventing them from navigating to the desired target. The potential benefit is that it enables clinicians to carry out procedures that cannot easily be reduced to one- or two-dimensional tasks, such as navigation through three-dimensional space. Even though they are quite different in nature, the results of previous studies serve as a reference for judging whether the three-dimensional sonification is an effective guidance tool.

2 Method

We invited 24 non-clinicians to a phantom experiment (mostly computer science and digital media students and staff, aged 19 to 42, median age 28, 19 male, 5 female). All participants reported normal hearing and normal vision. We explained the task to the participants, showed and described the setup and the auditory, visual, and audiovisual guidance modes, and let them explore freely. This introduction and training phase took about one hour, most of which was spent learning how to interpret the sonification.

The experiment setup can be seen in Fig. 1. A styrofoam skull is glued on a board, together with a transducer of the electromagnetic tracking system Polhemus Fastrak. The tracking system is certified for use in operating rooms. We produced a virtual model of the skull using a Siemens MRI scanner. Participants held a stylus whose tip position was tracked in relation to the skull. Just like a real skull, the phantom provides hardly any visual landmarks that could serve as orientation cues. We distributed 30 targets, arranged in six groups of five, on the surface of the skull, as illustrated in Fig. 2. The participants’ main task was to move the stylus from one target to the next and to click the button on the stylus when they thought they had reached it. The path was pseudo-random, but it was the same for every participant. The participants were not aware of the distribution of targets. They started at one tiny mark on the skull. The guidance method indicated only the position of the next target to reach.

Three guidance modes existed: visual guidance (visualization), auditory guidance (sonification), and audiovisual guidance (both). These are described in detail in the following subsections. In the visual mode (v), the target was indicated as a small green sphere on the computer model of the skull, while the sonification was muted. In the auditory mode (a), the target location was indicated by the interactive sonification, while the computer screen was black. In the audiovisual mode (av), both the green sphere and the sonification were active. Each participant started with one of the three guidance modes, and the guidance mode changed every 10 trials (one decade). To eliminate effects of the order of guidance modes, we divided the participants into 6 groups: a–v–av, a–av–v, av–a–v, av–v–a, v–a–av, and v–av–a. This way, for each decade of targets, 8 participants navigated using auditory guidance, 8 using visual guidance, and 8 using audiovisual guidance. We instructed them to reach the targets as quickly and precisely as possible, which is a common task in surgical experiments [23]. We tracked the location of the stylus tip throughout the experiment.
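
The counterbalancing scheme above can be checked with a short Python sketch. It enumerates the 3! = 6 orders of the modes a, v, and av. It then counts how many participants use each mode in each decade, assuming four participants per order. The round-robin allocation by participant index is purely illustrative, because the paper does not state how participants were assigned to groups.

```python
from collections import Counter
from itertools import permutations

MODES = ("a", "v", "av")  # auditory, visual, audiovisual


def mode_orders():
    """All 3! = 6 orders in which the three guidance modes can be presented."""
    return list(permutations(MODES))


def assign_orders(n_participants):
    """Illustrative round-robin allocation of participant indices to the six orders."""
    orders = mode_orders()
    return [orders[i % len(orders)] for i in range(n_participants)]


def modes_per_decade(assignment):
    """For each decade (1st, 2nd, 3rd block of 10 targets), count the modes in use."""
    counts = [Counter() for _ in MODES]
    for order in assignment:
        for decade, mode in enumerate(order):
            counts[decade][mode] += 1
    return counts


if __name__ == "__main__":
    for decade, counts in enumerate(modes_per_decade(assign_orders(24)), start=1):
        print(f"decade {decade}:", {m: counts[m] for m in MODES})
```

Because each mode occupies each position in exactly two of the six orders, 24 participants yield 8 users per mode in every decade, matching the numbers given above.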

Fig. 2 Distribution of targets on the skull. Six groups of five circularly arranged targets have to be found in pseudo-random order. Only the current target is sonified and/or visualized. The participants never got to see or hear this distribution. Graphic taken from [24]

We compare six objective measures between the three navigation modes for each decade of targets:

1. Trajectory length.
2. Task duration.
3. Absolute precision and the precision along each of the three dimensions.
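
The following Python sketch illustrates how such measures could be derived from the tracked stylus data for one trial. It assumes that each trial is a sequence of timestamped tip positions (x, y, z) in cm ending at the button click. It also assumes that precision is quantified as the absolute deviation between the clicked position and the target, both overall (Euclidean) and per axis. The data layout and function names are hypothetical, because the paper does not specify how the measures were computed.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float, float]  # stylus-tip position (x, y, z) in cm


def trajectory_length(positions: Sequence[Point]) -> float:
    """Sum of the Euclidean distances between consecutive tracked positions."""
    return sum(math.dist(p, q) for p, q in zip(positions, positions[1:]))


def task_duration(timestamps: Sequence[float]) -> float:
    """Elapsed time (s) between the first tracked sample and the click."""
    return timestamps[-1] - timestamps[0]


def deviation(click: Point, target: Point) -> Tuple[Tuple[float, ...], float]:
    """Per-axis absolute deviation and overall Euclidean distance to the target."""
    per_axis = tuple(abs(c - t) for c, t in zip(click, target))
    return per_axis, math.dist(click, target)


if __name__ == "__main__":
    path = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (3.0, 4.0, 12.0)]
    print(trajectory_length(path))                       # 17.0
    print(task_duration([0.0, 1.5, 4.0]))                # 4.0
    print(deviation((1.0, 2.0, 2.0), (0.0, 0.0, 0.0)))   # ((1.0, 2.0, 2.0), 3.0)
```

`math.dist` requires Python 3.8 or later.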

2.1 Visual guidance

The visualization can be seen in the photo in Fig. 1 and in the screenshot in Fig. 2. The computer screen shows a two-dimensional projection of the three-dimensional skull model obtained with magnetic resonance imaging. The screen shows a top view of the pseudo-three-dimensional skull, comparable to the transverse plane. This was the only viewpoint from which no target was occluded by any part of the skull. Targets were represented by green spheres. The tip of the stylus was represented by a slightly larger, semi-transparent, orange sphere. When the stylus was moved to the left or right, the orange sphere moved to the left or right, too. When the stylus was moved to the front or back, the orange sphere moved up or down on the screen. When the stylus was moved up or down, i.e., along the viewing direction, the orange sphere moved only slightly along the horizontal and vertical screen directions and changed its size, due to the virtual perspective. Participants knew that they had reached the target when the green sphere lay completely within the orange sphere. During visual guidance, the sonification was automatically muted.

2.2 Auditory guidance

A three-dimensional, interactive sonification serves as the auditory guidance tool. The sonification is based on our psychoacoustic sonification, introduced for two dimensions in [25] and implemented in the Tiltification spirit level app [26]. In [27], we described how to extend it to three dimensions, and in [28] we modified it and provided theoretical and experimental evidence that the three sonification dimensions are in fact continuous, linear, and orthogonal, and provide a clear coordinate origin. The sonification is in mono to ensure compatibility with common devices in the operating room, and to ensure that clinicians can use it without wearing headphones or being restricted to the sweet spot of a stereo triangle. The sound is based on additive synthesis, frequency modulation synthesis, amplitude modulation, and subtractive synthesis. At its core, the sonification is a Shepard-Tone [29], as illustrated in Fig. 3. Here, the vertical black bars indicate the carrier frequencies, and the colorful envelope represents the frequency-dependent amplitude. Arrows indicate how the frequencies and the envelope can change. These changes affect the perception of chroma, loudness fluctuation (beats), brightness, roughness, and fullness. Each of these characteristics stands for a different spatial direction, with chroma covering both left and right. The intensity of the characteristic, or the rate at which it varies, indicates how far the target lies along the respective direction. The sonification design and the resulting sound are most easily described with reference to Fig. 4, which represents the perceptual result of the signal changes.

Fig. 3 Magnitude spectrum of the sonification. Five sound characteristics represent the six orthogonal directions in three-dimensional space. The arrows indicate how the spectrum changes when the target lies in any of the six directions. The legend indicates how these changes affect auditory perception. Graphic modified from [27]

Fig. 4 Sound impression of the sonification depending on the location of the target. Chroma changes indicate that the target lies to the left or right. Loudness fluctuation indicates that the target lies above, roughness indicates that the target lies below. Brightness indicates that the target lies to the rear, a narrow bandwidth indicates that the target lies to the front. Graphic taken from [30]

The current position is represented by a cursor in the center of the coordinate system. The left/right dimension is represented by the blue spring, whose coils get denser with increasing distance. When the target lies to the right, all frequencies rise. When the target lies just slightly to the right, the frequencies rise slowly; the further the target lies to the right, the faster the frequencies rise. When rising slowly, this sounds like an ever-rising pitch. When rising faster, you can hear that something cyclic is happening. This is referred to as a clockwise movement of chroma. Accordingly, when the target lies to the left, all frequencies fall: slowly when the target lies just slightly to the left, and faster the further it lies to the left. When falling slowly, this sounds like an ever-falling pitch; when falling faster, the cyclic nature becomes audible. This is the counter-clockwise movement of chroma. Only when the target lies neither to the left nor to the right do pitch and chroma remain steady. The exact pitch is not important: any steady pitch indicates that the target has been reached along the left/right dimension. In Fig. 3, a clockwise motion of chroma looks like a continuous motion of the vertical black bars towards the right, indicated by the blue double arrows (\(\omega \)). Their height is bounded by the colorful envelope. Whenever a bar leaves the plot on the right, it is re-introduced on the left-hand side of the plot. The further a target lies to the right, the faster the bars move.
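
A generic Shepard-tone sketch in Python can illustrate the chroma mapping. It is not the authors' implementation, whose formulas are given in [25] and [27] and whose Pure Data code is in [32]. The octave-spaced partials, the Gaussian envelope over log-frequency, and the constants `f_min`, `sigma`, and `octaves_per_s_per_cm` are assumptions chosen for illustration. The signed left/right offset sets the speed and direction at which all partials glide. Partials that leave the top of the range re-enter at the bottom, as described for Fig. 3.

```python
import math


def shepard_partials(phase, n_octaves=8, f_min=40.0, sigma=1.5):
    """Frequencies (Hz) and amplitudes of an octave-spaced Shepard tone.

    phase: accumulated chroma position in octaves; only its fractional part
    matters, so phase and phase + 1 produce the same set of partials.
    """
    center = n_octaves / 2  # peak of the Gaussian envelope, in octaves above f_min
    frac = phase % 1.0
    partials = []
    for k in range(n_octaves):
        x = k + frac  # log2(f / f_min), in [0, n_octaves)
        amp = math.exp(-0.5 * ((x - center) / sigma) ** 2)
        partials.append((f_min * 2.0 ** x, amp))
    return partials


def advance_phase(phase, dx_cm, dt_s, octaves_per_s_per_cm=0.05):
    """Glide the partials: up when the target is to the right (dx > 0), down when
    it is to the left, at a speed proportional to the distance (hypothetical gain)."""
    return phase + octaves_per_s_per_cm * dx_cm * dt_s


if __name__ == "__main__":
    phase = 0.0
    for _ in range(3):  # target 4 cm to the right, 0.5 s steps
        phase = advance_phase(phase, dx_cm=4.0, dt_s=0.5)
        lowest = shepard_partials(phase)[0][0]
        print(f"phase={phase:.2f} oct, lowest partial={lowest:.1f} Hz")
```

Because the envelope is nearly zero at both ends of the log-frequency range, the wrap-around of individual partials is barely audible. The listener mainly perceives the direction and speed of the glide.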

The up/down dimension is divided in two. When the target lies above, the gain of the Shepard-Tone is modulated sinusoidally. This sounds like a loudness fluctuation, also referred to as beats. The further the target lies above, the faster the beating. In Fig. 3, this is indicated by the purple double arrow (g), which scales the height of the envelope by a factor that fluctuates sinusoidally between 0.5 and 1. The frequency of that fluctuation is proportional to the distance to the target. When the target lies below, all frequencies are modulated with an 80 Hz modulation frequency. This frequency modulation produces sidebands that give the Shepard-Tone a rough timbre. The further the target lies below, the higher the modulation depth, i.e., the rougher the sound. In Fig. 3, this is indicated by the orange arrows (\(\beta \)) that appear between the vertical bars. In the sonification, these sidebands occur around all carrier frequencies (not only around one frequency, as in the figure). The further the target lies below, the more sidebands occur and the higher their amplitudes become, increasing the roughness. Only at the target height does the Shepard-Tone exhibit a steady loudness and no roughness. In addition, a click is triggered every time the target height is crossed.
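
The split vertical mapping can be sketched minimally in Python. The 80 Hz modulation frequency and the gain range of 0.5 to 1 come from the text. The linear scaling constants `beats_hz_per_cm` and `index_per_cm` are hypothetical placeholders. For sinusoidal frequency modulation, the sidebands lie at the carrier frequency plus and minus integer multiples of 80 Hz. A larger modulation index spreads energy into more of them, which is what makes the sound rougher.

```python
import math

FM_RATE_HZ = 80.0  # modulation frequency used when the target lies below


def vertical_params(dz_cm, beats_hz_per_cm=1.0, index_per_cm=0.5):
    """Map the vertical offset of the target (dz > 0: above) to
    (beat rate in Hz, FM modulation index). At dz == 0 both are zero."""
    if dz_cm > 0:
        return beats_hz_per_cm * dz_cm, 0.0
    if dz_cm < 0:
        return 0.0, index_per_cm * -dz_cm
    return 0.0, 0.0


def beat_gain(t, beat_hz):
    """Gain factor that fluctuates sinusoidally between 0.5 and 1 (1 if beat_hz == 0)."""
    return 0.75 + 0.25 * math.cos(2 * math.pi * beat_hz * t)


def fm_partial(t, carrier_hz, index):
    """One frequency-modulated partial; sidebands appear at carrier_hz +/- n * 80 Hz."""
    return math.sin(2 * math.pi * carrier_hz * t
                    + index * math.sin(2 * math.pi * FM_RATE_HZ * t))


def sample(t, partials, dz_cm):
    """Sum (frequency, amplitude) partials with the vertical cue applied."""
    beat_hz, index = vertical_params(dz_cm)
    tone = sum(a * fm_partial(t, f, index) for f, a in partials)
    return beat_gain(t, beat_hz) * tone
```

At the target height, both the beat rate and the modulation index are zero, so the tone has a steady gain of 1 and no sidebands.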

The front/back dimension is also divided in two. When the target lies to the front, the bandwidth of the signal is reduced. This decreases the fullness of the Shepard-Tone, i.e., the sound becomes thinner. The further the target lies to the front, the thinner the sound gets. In Fig. 3, this is indicated by the dashed envelope and the colored double arrows between them. When the target lies far to the front, the Gaussian bell shape becomes much steeper. At the same time, the peak becomes higher. This is necessary because reducing the sound’s bandwidth would reduce its loudness, which could be confused with beats. Increasing the peak amplitude counterbalances this effect. When the target lies to the back, the amplitude envelope of the Shepard-Tone is shifted towards higher frequencies, making the sound brighter. The further the target lies to the rear, the brighter the sound gets. In Fig. 3, this is indicated by the gray arrow. Only at the target depth is the sound both full and dull. In addition, a major chord is triggered every time the target depth is crossed.
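
The front/back mapping can be sketched as a change of the Gaussian amplitude envelope over log-frequency. In this Python sketch, a target to the front narrows the envelope, and a target to the back shifts its center upwards. To counterbalance the loudness drop of a narrower envelope, the sketch raises the peak so that the integrated squared amplitude, a rough proxy for signal energy, stays constant. This compensation rule and all constants are assumptions, not the authors' formulas.

```python
import math


def envelope_params(dy_cm, n_octaves=8, sigma0=1.5,
                    narrow_per_cm=0.5, shift_oct_per_cm=0.25):
    """Return (center, width, peak) of the Gaussian amplitude envelope.

    dy > 0: target to the front -> narrower envelope (thinner sound).
    dy < 0: target to the back  -> center shifted upwards (brighter sound).
    The peak is scaled by sqrt(sigma0 / sigma), so the integral of the squared
    envelope, peak**2 * sigma * sqrt(pi), is the same for every width.
    """
    center = n_octaves / 2
    sigma = sigma0
    if dy_cm > 0:
        sigma = sigma0 / (1.0 + narrow_per_cm * dy_cm)
    elif dy_cm < 0:
        center += shift_oct_per_cm * -dy_cm
    peak = math.sqrt(sigma0 / sigma)
    return center, sigma, peak


def envelope(x_oct, dy_cm):
    """Amplitude at log2-frequency position x_oct (octaves above the lowest partial)."""
    center, sigma, peak = envelope_params(dy_cm)
    return peak * math.exp(-0.5 * ((x_oct - center) / sigma) ** 2)
```

At the target depth (dy = 0), the envelope keeps its default width and center, which corresponds to the full, dull sound described above.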

Altogether, you know that you have reached the target when the chroma and the loudness are steady and the Shepard-Tone is neither rough nor thin nor very bright. In addition, pink noise is triggered as soon as the target is closer than 3 cm. During auditory guidance, the computer screen automatically turned black.
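
Unlike the continuous cues, the discrete cues (the click at the target height, the major chord at the target depth, and the pink noise within 3 cm) are event-based. The Python sketch below shows one possible form of such event logic. It assumes that the offset from the stylus tip to the target is updated at every tracking sample as (right, front, up) in cm. The class name, the event labels, and the choice to also report when the noise should stop are hypothetical.

```python
import math
from typing import List, Optional, Tuple

Offset = Tuple[float, float, float]  # target minus tip: (right, front, up), in cm


def crossed(prev: float, curr: float) -> bool:
    """True when the signed offset changes sign, i.e., the target plane was passed."""
    return (prev > 0 and curr <= 0) or (prev < 0 and curr >= 0)


class CueEvents:
    """Hypothetical tracker that turns offset updates into discrete cue events."""

    def __init__(self, noise_radius_cm: float = 3.0):
        self.noise_radius_cm = noise_radius_cm
        self._prev: Optional[Offset] = None
        self._inside = False

    def update(self, offset: Offset) -> List[str]:
        """Return the discrete cues triggered by this tracking sample."""
        events = []
        if self._prev is not None:
            if crossed(self._prev[2], offset[2]):
                events.append("click")        # target height crossed
            if crossed(self._prev[1], offset[1]):
                events.append("major_chord")  # target depth crossed
        inside = math.hypot(*offset) < self.noise_radius_cm
        if inside and not self._inside:
            events.append("pink_noise_on")
        elif self._inside and not inside:
            events.append("pink_noise_off")
        self._prev, self._inside = offset, inside
        return events
```

The three-argument form of `math.hypot` requires Python 3.8 or later.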

The three-dimensional sonification used in this experiment can be explored in the CURAT sonification game [31] (Footnote 1). A demo video of the sonification can be found at https://youtu.be/CbLoQ8LECGw. The signal processing formulas are given in [25] and [27], and their implementation in Pure Data is provided in the open-source code of Sonic Tilt [32].

2.3 Audiovisual guidance

The audiovisual guidance mode was a combination of the visual and the auditory guidance.

3 Results

A visual inspection of the calculated measures showed that the data were not normally distributed but exhibited outliers that led to a large variance, as indicated already in [24]. Therefore, outliers were removed for the data analysis.

We considered data points above \(\textrm{Q3} + 1.5\,\text{IQR}\) and below \(\textrm{Q1} - 1.5\,\text{IQR}\) as outliers and excluded them from further analyses. Here, Q1 is the lower quartile, i.e., the 25th percentile, Q3 is the upper quartile, i.e., the 75th percentile, and IQR is the interquartile range, defined as \(\text{IQR} = \textrm{Q3} - \textrm{Q1}\). For each measure, between 0 and 4 values have been excluded this way. Results of the filtered data are presented in the following.
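
This outlier rule corresponds to Tukey's fences. The Python sketch below implements it with the standard library's `statistics.quantiles` (Python 3.8+). Quartile definitions differ between tools, and the paper does not state which one was used, so the `method="inclusive"` choice here is an assumption.

```python
import statistics


def remove_outliers(values, k=1.5):
    """Keep values within [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences)."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if low <= v <= high]


if __name__ == "__main__":
    durations = [1, 2, 3, 4, 5, 6, 7, 8, 100]  # illustrative values, not study data
    print(remove_outliers(durations))  # [1, 2, 3, 4, 5, 6, 7, 8]
```

Here Q1 = 3 and Q3 = 7, so the fences lie at -3 and 13, and only the value 100 is removed.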

3.1 Results of filtered data

For each decade, a MANOVA with a significance level of 0.05 and a 95% confidence interval is carried out to identify whether the performance differences are significant. Tables 1, 2, and 3 show the mean values ± standard deviation of all measures for targets 1 to 10 (decade 1), 11 to 20 (decade 2), and 21 to 30 (decade 3). The best performance is highlighted in bold font. The superscripts indicate which guidance modes achieved a significantly better result. With the cleaned data, all MANOVAs revealed significant differences between the guidance modes.

The cleaned decade 1 data showed a significant difference (\(F(12,22)=3.26\), \(p<0.01\), Wilks’ \(\Lambda =0.031\), partial \(\eta ^2=0.824\)) between the guidance modes. For the tests of between-subject effects, we apply a Bonferroni alpha correction for the six measures, i.e., the \(p<0.01\) significance level is reduced to \(p<0.0017\) and the \(p<0.05\) significance level to \(p<0.008\). The tests of between-subject effects revealed that the guidance mode had a significant effect not only on the time needed (\(F(2,16)=26.54\), \(p<0.0017\) after Bonferroni correction, partial \(\eta ^2=0.77\)), but also on the trajectory length (\(F(2,16)=15.369\), \(p<0.0017\), partial \(\eta ^2=0.66\)), the precision along the x-dimension (\(F(2,16)=23.51\), \(p<0.0017\), partial \(\eta ^2=0.75\)), the precision along the y-dimension (\(F(2,16)=17.72\), \(p<0.0017\), partial \(\eta ^2=0.69\)), the precision along the z-dimension (\(F(2,16)=15.43\), \(p<0.0017\), partial \(\eta ^2=0.66\)), and the overall precision (\(F(2,16)=24.54\), \(p<0.0017\), partial \(\eta ^2=0.754\)). Tukey post-hoc analysis revealed that the time difference is significant between auditory and audiovisual guidance (\(p<0.01\)) and between auditory and visual guidance (\(p<0.01\)), but not between audiovisual and visual guidance (\(p=0.558\)). The length difference is significant between auditory and audiovisual guidance (\(p<0.01\)) and between auditory and visual guidance (\(p<0.01\)), but not between audiovisual and visual guidance (\(p=0.453\)). The difference in precision along the x-dimension is significant between auditory and audiovisual guidance (\(p<0.01\)), between auditory and visual guidance (\(p<0.01\)), and between audiovisual and visual guidance (\(p<0.01\)). The difference in precision along the y-dimension is significant between auditory and audiovisual guidance (\(p<0.01\)) and between visual and audiovisual guidance (\(p<0.01\)), but not between auditory and visual guidance (\(p=0.987\)). The difference in precision along the z-dimension is significant between auditory and visual guidance (\(p<0.01\)) and between auditory and audiovisual guidance (\(p<0.01\)), but not between visual and audiovisual guidance (\(p=0.893\)). The difference in overall precision is significant between all guidance modes (\(p<0.01\)).
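
The reported effect sizes and corrected thresholds can be cross-checked from the test statistics. The Python sketch below uses the standard relation between partial \(\eta^2\) and a univariate F statistic. For the MANOVA, it uses \(1-\Lambda^{1/s}\) with \(s=\min(p, k-1)\), which gives s = 2 for p = 6 measures and k = 3 guidance modes. This is the convention used by common statistics packages; the software used by the authors is not stated. The Bonferroni thresholds follow from dividing by the six measures.

```python
def partial_eta_sq_from_f(f, df_effect, df_error):
    """Partial eta squared of a univariate effect, recovered from its F statistic."""
    return f * df_effect / (f * df_effect + df_error)


def partial_eta_sq_from_wilks(wilks_lambda, n_measures, n_groups):
    """Multivariate partial eta squared, 1 - Lambda**(1/s), with s = min(p, k - 1)."""
    s = min(n_measures, n_groups - 1)
    return 1.0 - wilks_lambda ** (1.0 / s)


def bonferroni(alpha, n_tests):
    """Per-test significance level after Bonferroni correction."""
    return alpha / n_tests


if __name__ == "__main__":
    # Decade 1 values from the text: time F(2,16)=26.54, Wilks' Lambda=0.031
    print(round(partial_eta_sq_from_f(26.54, 2, 16), 2))     # 0.77
    print(round(partial_eta_sq_from_wilks(0.031, 6, 3), 3))  # 0.824
    print(round(bonferroni(0.01, 6), 4), round(bonferroni(0.05, 6), 4))  # 0.0017 0.0083
```

The text uses 0.008 as the corrected 5% threshold, which is 0.05/6 rounded down and therefore slightly more conservative.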

Decade 2 revealed a significant difference between the guidance modes (\(F(12,24)=6.59\), \(p<0.01\), Wilks’ \(\Lambda =0.054\), partial \(\eta ^2=0.767\)). The tests of between-subject effects revealed that the guidance mode had a significant effect on the time needed (\(F(2,17)=7.65\), \(p<0.008\), partial \(\eta ^2=0.47\)) and the trajectory length (\(F(2,17)=10.883\), \(p<0.0017\), partial \(\eta ^2=0.56\)). The difference in precision along the y-axis is close to the corrected significance level (\(F(2,17)=5.16\), \(p=0.018\), partial \(\eta ^2=0.38\)). Tukey post-hoc analysis revealed that the time difference is significant between auditory and visual guidance (\(p<0.01\)), but neither between auditory and audiovisual guidance (\(p=0.176\)) nor between visual and audiovisual guidance (\(p=0.118\)). The length difference is significant between auditory and visual guidance (\(p<0.01\)), but neither between auditory and audiovisual guidance (\(p=0.157\)) nor between visual and audiovisual guidance (\(p=0.453\)). The difference in precision along the y-axis is significant between auditory and audiovisual guidance (\(p<0.05\)) and between visual and audiovisual guidance (\(p<0.05\)), but not between auditory and visual guidance (\(p=0.95\)).

Decade 3 showed a significant difference (\(F(12,18)=15.31\), \(p<0.01\), Wilks’ \(\Lambda =0.008\), partial \(\eta ^2=0.91\)) between the guidance modes. The tests of between-subject effects revealed that the guidance mode had a significant effect on the time needed (\(F(2,14)=178.74\), \(p<0.0018\), partial \(\eta ^2=0.96\)), the trajectory length (\(F(2,14)=27.28\), \(p<0.0018\), partial \(\eta ^2=0.80\)), and the precision along the y-axis (\(F(2,14)=11.13\), \(p<0.0018\), partial \(\eta ^2=0.61\)). Tukey post-hoc analysis revealed that the time difference is significant between auditory and audiovisual guidance (\(p<0.01\)) and between auditory and visual guidance (\(p<0.01\)), but not between audiovisual and visual guidance (\(p=0.055\)). The length difference is significant between auditory and audiovisual guidance (\(p<0.01\)) and between auditory and visual guidance (\(p<0.01\)), but not between audiovisual and visual guidance (\(p=0.081\)).

3.2 Summary of results

Auditory guidance was significantly slower than visual guidance in all three decades, and its trajectories were significantly longer. In one decade, auditory guidance was significantly less precise than visual guidance. In another decade, it was even more precise, although not significantly.

Audiovisual guidance was significantly more precise along the y-axis than visual guidance in all decades, and not significantly slower. In one decade, the precision was significantly higher than in the other guidance modes. In one decade, the trajectory was significantly longer compared to visual guidance.

All significant findings were supported by a large effect size (partial \(\eta ^2\ge 0.38\)).

4 Discussion

From the analysis of the results, three aspects turned out to be important to reflect on. These aspects are discussed in the following three sections.

4.1 Individual performance versus guidance method

The large variance between individuals in the six measures, together with the qualitatively observed performance differences discussed in [24], indicates that, for naive users, individual skills matter much more than the guidance mode. Almost no significant differences between guidance modes could be observed.

However, it is likely that the individual performances of clinicians would vary less, at least for the visual guidance, as they have expertise in the presented task. We take this into consideration by eliminating outliers. Arguably, results of the cleaned data, as discussed in the following sections, transfer better to clinicians.

4.2 Effectiveness of auditory guidance

All participants found all targets, regardless of the guidance mode. Participants tended to perform significantly worse with auditory guidance than with audiovisual guidance and, less often, than with visual guidance. This holds for the time it takes to find the targets, for the length of the trajectories, and for the overall precision. One exception is the precision along the y-axis, which is hardly recognizable on the screen due to the virtual viewing angle. Here, audiovisual guidance leads to significantly higher precision than pure auditory or pure visual guidance. A close look at the trajectories of the audiovisual guidance mode reveals why. As can be seen in Fig. 5, many participants carried out micro-corrections near the target. Apparently, participants realized that their current position looked right but did not sound right, so they adjusted it.

Note that the inferior performance using auditory guidance can have various reasons. Maybe the sonification is less informative. But maybe the participants just lack auditory experience and education and can improve their performance with more training. One should keep in mind that users have a life-long experience interpreting visualizations, such as graphical user interfaces on computers, smartphones and TVs, rail network plans and maps and/or pseudo 3D graphics in computer games, but less than one hour of experience using sonification. Longitudinal studies may reveal how much the performance improves after weeks of training.

4.3 Usefulness of three-dimensional sonification

In image-guided surgery, some tasks are two-dimensional, such as finding the two desired angles for inserting a needle. Some three-dimensional problems can be simplified to two-dimensional tasks, such as finding a position on a fairly flat surface, like the abdomen. Previous studies have investigated the usefulness of two-dimensional sonifications for such purposes [13, 14].

The fact that the presented results are in line with the results of these studies indicates that adding even more navigation information to the sonification does not degrade its usefulness. Consequently, sonification can be used even for three-dimensional guidance tasks, without simplifying or subdividing them into two-dimensional subtasks.

One can draw two quite contrary conclusions from the results: (1) As adding the sonification mostly improved the precision along the y-dimension, it may be beneficial to reduce the sonification to one dimension and add it to the existing visualization. This may improve the navigation precision without the need for much training. After all, the most striking benefit of the presented three-dimensional sonification is the same as that of the one- or two-dimensional sonifications presented earlier. (2) The three-dimensional sonification guided all participants through three-dimensional space with a precision fairly similar to that of visual guidance. This has not been reported before. It allows us to speculate that sonifying all six degrees of freedom (e.g., three-dimensional location of a needle tip, two-dimensional incision angles, and one-dimensional incision depth) will one day be possible. Until then, longitudinal studies with three-dimensional sonification should reveal how much performance improves with a given amount of training.

5 Conclusion

In this paper, we described a three-dimensional sonification as an auditory guidance tool for image-guided surgery. In a phantom study, novice users were able to find targets through auditory, visual, and audiovisual guidance. Visual guidance tended to enable the users to find the targets fastest, on the shortest path, and with the highest precision. Adding sonification to the visualization mostly improved the precision along the y-dimension. When the visualization was replaced completely by sonification, all targets were still found, with a precision that was significantly lower in only one out of three decades.

The result is in line with previous studies that showed the suitability of sonification as a guidance tool in image-guided surgery. The novelty here is that the presented sonification is the first to enable continuous guidance through three-dimensional space. The results show that this additional sonified information can be interpreted well by novice users. The results indicate that sonification may become not only a useful supplement to, but even a substitute for, graphical guidance methods. Yet it is unclear whether, and with what amount of training, sonification can become as effective a guidance tool as visual guidance. Furthermore, the results clearly show that some people can use sonification more readily than others. It is possible that some clinicians may master sonification-guided surgery after a short intensive course and some practice, while others cannot. Ultimately, longitudinal studies with clinicians are necessary to reveal the true potential of three-dimensional sonification as an assistance tool for image-guided surgery. Such studies should also include qualitative assessments, such as subjective confidence, stress, and cognitive demand, and should address the integration of sonification into the surgical workflow.

Notes

Available for Android, Windows, Mac and Linux.

References

Arnolli MM, Hanumara NC, Franken M, Brouwer DM, Broeders IAMJ (2015) An overview of systems for CT- and MRI-guided percutaneous needle placement in the thorax and abdomen. Int J Med Robot Comput Assist Surg 11(4):458–475. https://doi.org/10.1002/rcs.1630

Azagury DE, Dua MM, Barrese JC, Henderson JM, Buchs NC, Ris F, Cloyd JM, Martinie JB, Razzaque S, Nicolau S, Soler L, Marescaux J, Visser BC (2015) Image-guided surgery. Curr Probl Surg 52(12):476–520. https://doi.org/10.1067/j.cpsurg.2015.10.001

Perrin DP, Vasilyev NV, Novotny P, Stoll J, Howe RD, Dupont PE, Salgo IS, del Nido PJ (2009) Image guided surgical interventions. Curr Probl Surg 46(9):730–766. https://doi.org/10.1067/j.cpsurg.2009.04.001

Wegner CM, Karron DB (1997) Surgical navigation using audio feedback. In: Morgan KS, Hoffman HM, Stredney D, Weghorst SJ (eds) Medicine meets virtual reality: global healthcare grid. Studies in health technology and informatics, vol 39. IOS Press, Ohmsha, Washington D.C., pp 450–458. https://doi.org/10.3233/978-1-60750-883-0-450

Jovanov E, Starcevic D, Wegner K, Karron D, Radivojevic V (1998) Acoustic Rendering as Support for Sustained Attention During Biomedical Procedures. In: ICAD, Glasgow. http://hdl.handle.net/1853/50712

Vickers P, Imam A (1999) The use of audio in minimal access surgery. In: Alty J (ed) XVIII European annual conference on human decision making and manual control. Group D Publications, Loughborough, pp 13–22. https://doi.org/10.5281/zenodo.398801

Ziemer T, Nuchprayoon N, Schultheis H (2020) Psychoacoustic sonification as user interface for human-machine interaction. International Journal of Informatics Society 12(1):3–16. http://www.infsoc.org/journal/vol12/12-1

Black D, Plazak J (2017) Guiding clinicians with sound: investigating auditory display in the operating room. In: ISCAS cutting edge. http://news.iscas.co/guiding-clinicians-sound-investigating-auditory-display-operating-room/ Accessed 2019-02-19

Miljic O, Bardosi Z, Freysinger W (2019) Audio guidance for optimal placement of an auditory brainstem implant with magnetic navigation and maximum clinical application accuracy. In: International Conference on Auditory Display (ICAD2019), Newcastle Upon Tyne, pp 313–316. https://doi.org/10.21785/icad2019.075

Vajsbaher T, Ziemer T, Schultheis H (2020) A multi-modal approach to cognitive training and assistance in minimally invasive surgery. Cogn Syst Res 64:57–72. https://doi.org/10.1016/j.cogsys.2020.07.005

Black D, Ziemer T, Rieder C, Hahn H, Kikinis R (2017) Auditory display for supporting image-guided medical instrument navigation in tunnel-like scenarios. In: Proceedings of image-guided interventions (IGIC), Magdeburg, Germany

Black D, Issawi JA, Hansen C, Rieder C, Hahn H (2013) Auditory Support for Navigated Radiofrequency Ablation. In: Freysinger W (ed) CURAC—12. Jahrestagung der Deutschen Gesellschaft für Computer- und Roboterassistierte Chirurgie, Innsbruck, pp 30–33

Black D, Hettig J, Luz M, Hansen C, Kikinis R, Hahn H (2017) Auditory feedback to support image-guided medical needle placement. Int J Comput Assist Radiol Surg 12(9):1655–1663. https://doi.org/10.1007/s11548-017-1537-1

Matinfar S, Salehi M, Suter D, Seibold M, Navab N, Dehghani S, Wanivenhaus F, Fürnstahl P, Farshad M, Navab N (2023) Sonification as a reliable alternative to conventional visual surgical navigation. Sci Rep 13:5930

Hansen C, Black D, Lange C, Rieber F, Lamadé W, Donati M, Oldhafer KJ, Hahn HK (2013) Auditory support for resection guidance in navigated liver surgery. Int J Med Robot Comput Assist Surg 9(1):36–43. https://doi.org/10.1002/rcs.1466

Plazak J, DiGiovanni DA, Collins DL, Kersten-Oertel M (2019) Cognitive load associations when utilizing auditory display within image-guided neurosurgery. Int J Comput Assist Radiol Surg 14(8):1431–1438. https://doi.org/10.1007/s11548-019-01970-w

Plazak J, Drouin S, Collins L, Kersten-Oertel M (2017) Distance sonification in image-guided neurosurgery. Healthc Technol Lett 4(5):199–203

Strauß G, Schaller S, Zaminer B, Heininger S, Hofer M, Manzey D, Meixensberger J, Dietz A, Lüth TC (2011) Klinische Erfahrungen mit einem Kollisionswarnsystem [Clinical experiences with a collision warning system]. HNO 59(5):470–479. https://doi.org/10.1007/s00106-010-2237-0

Cho B, Matsumoto N, Komune S, Hashizume M (2014) A surgical navigation system for guiding exact cochleostomy using auditory feedback: a clinical feasibility study. Biomed Res Int 2014:769659–7. https://doi.org/10.1155/2014/769659

Cho B, Oka M, Matsumoto N, Ouchida R, Hong J, Hashizume M (2013) Warning navigation system using real-time safe region monitoring for otologic surgery. Int J Comput Assist Radiol Surg 8(3):395–405. https://doi.org/10.1007/s11548-012-0797-z

Black D, Hansen C, Nabavi A, Kikinis R, Hahn H (2017) A survey of auditory display in image-guided interventions. Int J Comput Assist Radiol Surg 12(9):1665–1676. https://doi.org/10.1007/s11548-017-1547-z

Ziemer T, Black D, Schultheis H (2017) Psychoacoustic sonification design for navigation in surgical interventions. Proc Meet Acoust 30:1. https://doi.org/10.1121/2.0000557

Batmaz AU, de Mathelin M, Dresp-Langley B (2016) Getting nowhere fast: trade-off between speed and precision in training to execute image-guided hand-tool movements. BMC Psychol. https://doi.org/10.1186/s40359-016-0161-0

Ziemer T (2023) Three-dimensional sonification for image-guided surgery. In: International Conference on Auditory Display (ICAD2023), Norrköping, Sweden, pp 8–14. https://doi.org/10.21785/icad2023.2324

Ziemer T, Schultheis H (2018) A psychoacoustic auditory display for navigation. In: 24th International conference on auditory displays (ICAD2018), Houghton, MI, pp 136–144. https://doi.org/10.21785/icad2018.007

Asendorf M, Kienzle M, Ringe R, Ahmadi F, Bhowmik D, Chen J, Huynh K, Kleinert S, Kruesilp J, Lee YY, Wang X, Luo W, Jadid NM, Awadin A, Raval V, Schade EES, Jaman H, Sharma K, Weber C, Winkler H, Ziemer T (2021) Tiltification—an accessible app to popularize sonification. In: Proceedings of the 26th international conference on auditory display (ICAD2021), Virtual Conference, pp 184–191. https://doi.org/10.21785/icad2021.025

Ziemer T, Schultheis H (2019) Psychoacoustical signal processing for three-dimensional sonification. In: 25th international conference on auditory displays (ICAD2019), Newcastle, pp 277–284. https://doi.org/10.21785/icad2019.018

Ziemer T, Schultheis H (2019) Three orthogonal dimensions for psychoacoustic sonification. arXiv preprint. https://doi.org/10.48550/arXiv.1912.00766

Shepard RN (1964) Circularity in judgments of relative pitch. J Acoust Soc Am 36(12):2346–2353. https://doi.org/10.1121/1.1919362

Ziemer T, Schultheis H (2020) Psychoakustische Sonifikation zur Navigation in bildgeführter Chirurgie [Psychoacoustic sonification for navigation in image-guided surgery]. In: Jaeschke N, Grotjahn R (eds) Freie Beiträge zur Jahrestagung der Gesellschaft für Musikforschung 2019, vol 1, pp 347–358. https://doi.org/10.25366/2020.42

Ziemer T, Schultheis H (2021) The CURAT sonification game: gamification for remote sonification evaluation. In: 26th international conference on auditory display (ICAD2021), Virtual conference, pp 233–240. https://doi.org/10.21785/icad2021.026

Asendorf M, Kienzle M, Ringe R, Ahmadi F, Bhowmik D, Chen J, Huynh K, Kleinert S, Kruesilp J, Wang X, Lin YY, Luo W, Mirzayousef Jadid N, Awadin A, Raval V, Schade EES, Jaman H, Sharma K, Weber C, Winkler H, Ziemer T (2021) Tiltification/sonic-tilt: first release of sonic tilt. Zenodo. https://github.com/Tiltification/sonic-tilt. https://doi.org/10.5281/zenodo.5543983

Acknowledgements

Many thanks to my colleagues for fruitful discussions and advice: Holger Schultheis, Ron Kikinis, David Black, Peter Haddawy and Nuttawut Nuchprayoon

Funding

Open Access funding enabled and organized by Projekt DEAL.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ziemer, T. Three-dimensional sonification as a surgical guidance tool. J Multimodal User Interfaces 17, 253–262 (2023). https://doi.org/10.1007/s12193-023-00422-9

DOI: https://doi.org/10.1007/s12193-023-00422-9