Abstract

Background

Despite straightforward guidelines on brain death determination by the American Academy of Neurology (AAN), substantial practice variability exists internationally, between states, and among institutions. We created a simulation-based training course on proper determination based on the AAN practice parameters to address and assess knowledge and practice gaps at our institution.

Methods

Our intervention consisted of a didactic course and a simulation exercise, bookended by pre- and post-course multiple-choice tests. The 40-min didactic course, including a video demonstration, covered all aspects of the brain death examination. Simulation sessions used a SimMan 3G manikin and involved a complete examination, including an apnea test. Possible confounders and signs incompatible with brain death were embedded throughout. Facilitators evaluated performance with a 26-point checklist based on the most recent AAN guidelines. A senior neurologist conducted all aspects of the course, including the didactic session, simulation, and debriefing session.

Results

Ninety physicians from multiple specialties have participated in the didactic session, 38 of whom have completed the simulation. Pre-test scores were poor (41.4 %), with attendings scoring higher than residents (46.6 vs. 40.4 %, p = 0.07), and attending neurologists and neurosurgeons significantly outperforming attendings in other specialties (53.9 vs. 38.9 %, p = 0.003). Among the 38 simulation participants, post-test scores (73.3 %) were significantly higher than pre-test scores (45.4 %, p < 0.001). Participant feedback has been uniformly positive.

Conclusion

Baseline knowledge of brain death determination among providers was low but improved greatly after the course. Our intervention represents an effective model that can be replicated at other institutions to train clinicians in the determination of brain death according to evidence-based guidelines.

Introduction

The American Academy of Neurology (AAN) published updated practice parameters in 2010 [1] to guide clinicians in the determination of brain death. To make this applicable in clinical practice, the AAN created a four-step protocol for providers specifying (1) clinical prerequisites for beginning the determination process, (2) the appropriate neurological examination (including apnea testing), (3) ancillary testing (if needed), and (4) documentation in the medical record.

Despite these guidelines, significant variations in policies exist across US hospitals. In 2008, Greer et al. [2] evaluated differences in brain death guidelines among leading US hospitals and found that many centers did not adhere to the AAN guidelines with respect to the clinical examination, apnea testing, and ancillary tests. Furthermore, the clinical competence of physicians performing brain death examinations has not been well studied [3], and chart audits of patients diagnosed with brain death reveal inadequate documentation [4, 5]. Given the complexity of the examination and the medical-legal ramifications of declaring death, this laxity is alarming.

We created a two-part training course—with both didactic and simulation sessions—to help close this knowledge gap. We chose a simulation-based approach given its superiority over traditional medical education techniques in achieving specific clinical skills goals [6]. It also allowed us to evaluate competence in performing the clinical exam under varied circumstances. We hypothesized that baseline knowledge of brain death determination among providers would be low but would improve substantially after the intervention.

Methods

We implemented a program to train physicians in the determination of brain death according to the 2010 AAN Practice Parameters. The intervention consisted of a two-part training course: a didactic session and a simulation exercise.

Evaluation

Knowledge was assessed using 20-question, multiple-choice pre- and post-tests. The pre-test was given immediately before the didactic session to assess baseline knowledge, and the post-test was given immediately after the simulation to assess the course’s efficacy in improving knowledge. Questions formulated by experts at our institution were based on the AAN practice parameters as well as common pitfalls described in the literature.

Simulation performance was evaluated according to a 26-point checklist that closely mirrors the AAN practice parameters. In addition to completion of a standard brain death examination, points were awarded for recognizing and responding to several embedded confounders and signs, as described below.
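To show how a checklist total translates into the percentage scores reported in the Results, the Python sketch below scores one participant against a 26-item checklist. It assumes one point per item, which fits the "26-point" description but is not spelled out in the text; the item labels are hypothetical placeholders for the actual items in Figs. 2 and 3.

```python
from dataclasses import dataclass, field

# Hypothetical labels standing in for the 26 checklist items shown in Figs. 2 and 3.
CHECKLIST_ITEMS = [f"item_{i:02d}" for i in range(1, 27)]


@dataclass
class ChecklistResult:
    """One participant's checklist, assuming one point per item."""

    completed: set[str] = field(default_factory=set)

    def mark(self, item: str) -> None:
        """Record that the participant performed a checklist item."""
        if item not in CHECKLIST_ITEMS:
            raise ValueError(f"unknown checklist item: {item}")
        self.completed.add(item)

    def omissions(self) -> list[str]:
        """Items not performed, in checklist order (useful during debriefing)."""
        return [item for item in CHECKLIST_ITEMS if item not in self.completed]

    def percent_score(self) -> float:
        """Points earned as a percentage of the 26 available."""
        return 100.0 * len(self.completed) / len(CHECKLIST_ITEMS)


result = ChecklistResult()
for item in CHECKLIST_ITEMS[:19]:  # a participant who completed 19 of 26 items
    result.mark(item)
print(f"{result.percent_score():.1f} %")  # 73.1 %
```

Keeping the omissions as an ordered list, rather than only a total, mirrors how the checklist was used to give item-level feedback in the analysis phase of the debriefing.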

Didactic

The didactic session covered the following aspects of brain death determination: (1) history and definition, (2) clinical examination, (3) apnea testing, (4) ancillary testing, (5) confounders, and (6) common pitfalls. To illustrate the technical aspects of the examination, a video of a proper brain death exam was shown.

Simulation

Preparation and Equipment

We used the SimMan 3G simulation manikin (SimMan 3G®, Laerdal Medical, Wappingers Falls, NY), selected for its pupil reactivity and seizure functions, both of which were used in our scenario. We adapted the manikin with an onlaid earpiece that allowed water to be injected into the ear canal to assess the oculovestibular reflex (OVR) without compromising the electronics. The manikin was intubated with a 7.0 mm cuffed endotracheal tube with an in-line suction catheter in place. The monitor displayed heart rate, oxygen saturation, blood pressure, temperature, respiratory rate, and end-tidal CO2. We provided ice water and a 60 cc syringe with tubing to assess the OVR; cotton swabs for corneal reflex testing; a reflex hammer to assess deep tendon reflexes, Babinski sign, and responsiveness to noxious stimuli; a flashlight for pupillary assessment; and a suction catheter and oxygen tubing for the apnea test. The scenario was scripted and programmed using Laerdal SimMan 3G software and progressed based on the participant performing critical actions and/or the facilitator offering cues to move forward.

Staff

A simulation technician prepared the environment and controlled the simulator from a control room. The facilitator—a senior neurologist versed in brain death—conducted the session, including the orientation, simulation, and debriefing. Facilitators were required to participate in an 8-h faculty development course run by our institution’s simulation center (SYN:APSE Center for Learning, Transformation and Innovation).

The Script

We created a three-page document containing a checklist alongside necessary prompts and laboratory values (Figs. 1, 2, and 3). This allowed the facilitator to simultaneously grade performance and provide necessary prompting and instruction.

Orientation and Initial Prompt

Participants were read a scripted orientation to the simulator's capabilities and limitations, the environment and equipment, and the process and expectations for the session. The facilitator then presented the case of a 54-year-old man who had suffered a prolonged cardiac arrest 48 h earlier and had not been treated with therapeutic hypothermia. Vital signs, oxygen saturation, ventilator settings, a recent chest X-ray, head computed tomography results, and arterial blood gas (ABG) values were provided. Rather than having participants seek this information independently, we immediately provided information excluding several confounders, confirming the absence of paralytics, prior therapeutic hypothermia, sedating medications, cervical spine injury, hyperammonemia, and significant acid–base, endocrine, or electrolyte disorders. In practice, excluding these confounders is of critical importance. We excluded them up front only to save time, so that the simulation could focus on the technical aspects of the clinical exam and apnea test, which lend themselves better to simulation-based learning.

Participants were told to perform a complete brain death exam, including an apnea test. They were informed that time was adjusted for the purposes of the exercise and that the patient might not be brain dead on initial evaluation. They were asked to verbalize their examination and thought process.

The Clinical Exam

Participants were allowed to complete the examination in whatever order they preferred, although the apnea test was to be performed last. A checklist adapted from the most recent AAN guidelines was used to track and evaluate performance (Fig. 2). If a participant omitted a component of the clinical exam, we did not notify them until after the exercise was completed.

The manikin was fully covered with a sheet. The physician was expected to uncover the extremities (while maintaining the manikin's modesty) to allow observation of any movement in response to stimulation. Three findings on the examination precluded an initial declaration of brain death. The first was communicated in the initial prompt: the patient's temperature was 34 °C, requiring warming to at least 36 °C. The second was a seizure, manifested by spontaneous vigorous shaking of the manikin 1 min into the exercise. If the physician correctly recognized that a seizure is incompatible with a determination of brain death, we then stated that 1 day had passed without any further witnessed seizures. The last incompatible finding was a reactive pupil. If the examiner correctly chose to stop the examination, we indicated that 1 day had passed with no evidence of pupillary reactivity; on re-examination, the pupil no longer reacted. The remainder of the clinical examination was consistent with a clinical diagnosis of brain death. The expected components of a complete examination, along with their associated findings, are outlined in the script (Fig. 2).
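The scripted progression of the clinical examination can be summarized as a small state machine in which each of the three findings incompatible with brain death must be recognized before the facilitator advances simulated time. The Python sketch below is a hypothetical model of that logic, not the SimMan 3G scenario software; the enum names and prompt strings are our own labels, and because the text does not say how unrecognized findings were handled, the sketch simply records them for the debriefing.

```python
from enum import Enum, auto


class Finding(Enum):
    """Findings that preclude the initial declaration, in scenario order."""

    HYPOTHERMIA = auto()     # 34 °C on the initial prompt
    SEIZURE = auto()         # vigorous shaking 1 min into the exercise
    REACTIVE_PUPIL = auto()  # pupil reacts on the initial exam


# What the facilitator states once a finding is correctly recognized.
RESOLUTION = {
    Finding.HYPOTHERMIA: "patient warmed to at least 36 °C",
    Finding.SEIZURE: "1 day passes with no further witnessed seizures",
    Finding.REACTIVE_PUPIL: "1 day passes; pupil no longer reactive",
}


def run_clinical_exam(recognized: set[Finding]) -> tuple[list[str], list[Finding]]:
    """Step through the blocking findings in order.

    Returns the facilitator prompts issued and the findings the participant
    missed. Missed findings are only recorded for debriefing; how the real
    script handled them is not described, so this is an assumption.
    """
    prompts: list[str] = []
    missed: list[Finding] = []
    for finding in Finding:  # Enum iteration follows definition order
        if finding in recognized:
            prompts.append(RESOLUTION[finding])
        else:
            missed.append(finding)
    return prompts, missed


prompts, missed = run_clinical_exam({Finding.HYPOTHERMIA, Finding.REACTIVE_PUPIL})
print(prompts)
print([f.name for f in missed])  # ['SEIZURE']
```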

After the clinical examination was completed, we provided an additional prompt: the urine bag was filling rapidly, suggesting possible central diabetes insipidus. The correct response was to give intravenous fluids and/or DDAVP to correct hypovolemia. If the participant did not respond appropriately, we explained the correct response so that they could proceed to apnea testing.

The Apnea Test

The script and evaluation checklist for the apnea test (Fig. 3) were also adapted from the AAN guidelines. The facilitator began by reorienting the participant with the most recent ventilator settings, blood pressure, and ABG values. The ABG reflected any changes in minute ventilation or FiO2 the participant may have made earlier, such as pre-oxygenation or establishing normocarbia. The initial ABG values were pH 7.54, pCO2 30 mm Hg, and pO2 110 mm Hg (henceforth abbreviated as pH/pCO2/pO2), with the ventilator set on assist control ventilation (respiratory rate 20/min, tidal volume 750 mL, FiO2 50 %, PEEP 5 cm H2O). The facilitator asked the participant if they wished to modify the ventilator settings before the apnea test. We listed three potential ABG values in the script based on these modifications (Fig. 1). If the participant chose to decrease the minute ventilation, the ABG would be 7.38/42/90, and if they also chose to increase the FiO2 to 100 %, the ABG would be 7.38/42/270. The appropriate action was to decrease minute ventilation via respiratory rate and/or tidal volume reduction, and to increase the FiO2 to 100 % for pre-oxygenation.
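The ventilator arithmetic and the scripted ABG branching can be made explicit in a short Python sketch. Minute ventilation is the product of respiratory rate and tidal volume, so the initial settings (20/min × 750 mL) deliver 15 L/min, consistent with the hypocarbic initial ABG. The lookup table contains only the ABG values stated in the text; the value for raising FiO2 alone appears in the script (Fig. 1) but not here, so it is left as `None`. The names `ABG`, `minute_ventilation`, and `pre_apnea_abg` are illustrative.

```python
from typing import NamedTuple, Optional


class ABG(NamedTuple):
    """Arterial blood gas in the pH/pCO2/pO2 shorthand (gases in mm Hg)."""

    ph: float
    pco2: int
    po2: int


def minute_ventilation(rate_per_min: float, tidal_volume_ml: float) -> float:
    """Minute ventilation in L/min: respiratory rate x tidal volume."""
    return rate_per_min * tidal_volume_ml / 1000.0


# Initial ABG on assist control: RR 20/min, VT 750 mL, FiO2 50 %, PEEP 5 cm H2O.
INITIAL_ABG = ABG(7.54, 30, 110)

# Pre-apnea ABG keyed by (minute ventilation decreased?, FiO2 raised to 100 %?).
# No changes: assumed to leave the initial ABG unchanged.
# FiO2 only: listed in the script (Fig. 1) but not in the text, hence None.
SCRIPTED_ABG: dict[tuple[bool, bool], Optional[ABG]] = {
    (False, False): INITIAL_ABG,
    (True, False): ABG(7.38, 42, 90),
    (True, True): ABG(7.38, 42, 270),
    (False, True): None,
}


def pre_apnea_abg(decreased_ve: bool, fio2_100: bool) -> Optional[ABG]:
    """Look up the ABG the facilitator reads out before the apnea test."""
    return SCRIPTED_ABG[(decreased_ve, fio2_100)]


print(minute_ventilation(20, 750))  # 15.0
print(pre_apnea_abg(True, True))    # ABG(ph=7.38, pco2=42, po2=270)
```

Keying the table on the two recommended changes, rather than on arbitrary ventilator settings, reflects the design choice described below of offering only ABG values that follow from correct adjustments.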

At the equivalent of 3 min into the apnea test, the participant was informed that the blood pressure was slightly lower but still within an acceptable range; no action was warranted. At the equivalent of 6 min, the participant was notified that the patient's blood pressure had dropped to 98/50 mm Hg. The correct response was not to terminate the test but to administer a vasopressor or fluid bolus. At the equivalent of 10 min, the participant was notified that the pulse oximetry reading was 88 %. Because this reading is within the acceptable range, the correct response was to request an ABG and reconnect the ventilator. The final ABG was 7.10/66/65; because the pCO2 of 66 mm Hg exceeded the AAN threshold of 60 mm Hg, the participant was expected to declare brain death.
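The final interpretation rests on the AAN criterion for a positive apnea test: no respiratory movements, with an arterial pCO2 of at least 60 mm Hg or, as an option, a rise of at least 20 mm Hg over a normal baseline pCO2. A minimal Python check follows; the 35 to 45 mm Hg range used to decide whether the baseline is "normal" is our assumption, not a value taken from the text.

```python
def apnea_test_positive(
    respiratory_movements: bool,
    final_pco2: float,
    baseline_pco2: float,
    normal_range: tuple[float, float] = (35.0, 45.0),  # assumed normocarbia range
) -> bool:
    """Return True if the apnea test supports brain death under the AAN criterion.

    Positive when no respiratory movements occur and either the final arterial
    pCO2 is >= 60 mm Hg or (optional criterion) it rose >= 20 mm Hg above a
    normal baseline pCO2. All pressures are in mm Hg.
    """
    if respiratory_movements:
        return False
    if final_pco2 >= 60.0:
        return True
    low, high = normal_range
    baseline_is_normal = low <= baseline_pco2 <= high
    return baseline_is_normal and final_pco2 - baseline_pco2 >= 20.0


# Scenario: pre-apnea pCO2 42 mm Hg, final ABG 7.10/66/65, no breathing observed.
print(apnea_test_positive(False, 66, 42))  # True
```

In the scenario both criteria are met (66 ≥ 60, and 66 − 42 = 24 ≥ 20), so the result does not depend on which one a participant applies.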

Whereas omissions in the clinical examination were not flagged until the debriefing, the facilitator guided participants through the apnea test whenever they were unsure of the next step, so that every participant had the experience of conducting a full apnea test. Furthermore, in a simulation exercise, anticipating and realistically simulating the results of an incorrectly conducted apnea test would have been tedious and of little educational value, since the results of an apnea test are meaningful only if it is conducted according to established guidelines. For these reasons, we offered only three possible ABG values, based on the correct changes in ventilator settings (i.e., decreasing minute ventilation, increasing FiO2 to 100 %, or both).

Debriefing

Following the simulation, the participant and facilitator debriefed according to the three-phase approach prominent in the simulation literature: (1) a description phase, during which participants offered initial reactions and their understanding of the clinical facts of the case; (2) an analysis phase, during which the facilitator and participant discussed performance gaps; and (3) a synthesis phase, during which the facilitator and participant summarized key take-home points to apply to clinical practice [7–9]. The checklist was used during the analysis phase to provide specific feedback on performance. After the debriefing, participants completed an evaluation of the session and facilitator for quality improvement purposes, followed by the 20-question post-test.

Statistical Analysis

Test and simulation scores between groups were compared using Student’s t test for continuous variables. Statistical significance was established at p < 0.05 (2-tailed). The Pearson product-moment correlation coefficient was calculated based on matched pre-test and simulation scores of all simulation participants.
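For readers replicating the analysis, the Python sketch below computes the two statistics named above using only the standard library: a two-sample Student's t statistic with pooled variance, and the Pearson product-moment correlation for matched score pairs. It stops at the test statistic; two-tailed p-values would come from the t distribution, for example via `scipy.stats.ttest_ind` (which pools variances by default) or `scipy.stats.pearsonr`. The numbers in the usage lines are toy values, not study data.

```python
import math
import statistics


def student_t(a: list[float], b: list[float]) -> tuple[float, int]:
    """Two-sample Student's t statistic (pooled variance) and its degrees of freedom."""
    n1, n2 = len(a), len(b)
    df = n1 + n2 - 2
    # statistics.variance uses the n - 1 (sample) denominator.
    pooled = ((n1 - 1) * statistics.variance(a) + (n2 - 1) * statistics.variance(b)) / df
    se = math.sqrt(pooled * (1 / n1 + 1 / n2))
    return (statistics.mean(a) - statistics.mean(b)) / se, df


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation of matched pairs (e.g., pre-test vs. simulation score)."""
    if len(x) != len(y):
        raise ValueError("scores must be matched pairs")
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    return sxy / math.sqrt(sxx * syy)


# Toy values only.
t, df = student_t([1.0, 2.0, 3.0], [4.0, 5.0, 6.0])
print(round(t, 3), df)  # -3.674 4
print(pearson_r([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))  # 1.0
```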

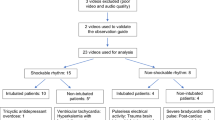

Results

Thus far, 90 clinicians have taken the course (Table 1), 38 of whom have completed the simulation. Our highest participation rates came from neurology attendings (16/32 practicing faculty), trauma and surgical critical care attendings (10/11 practicing faculty), neurology residents (19/23 from PGY2-4), neurosurgery residents (9/14 from PGY1-7), and emergency medicine residents (13/38 from PGY2-4).

Overall, pre-test scores (Fig. 4) were higher among attendings (n = 37) than residents (n = 42), at 46.6 versus 40.4 %, respectively (p = 0.07). Among physicians in neurology and neurosurgery, attendings scored significantly higher than residents (53.9 vs. 42.1 %, p = 0.008). Attendings in neurology and neurosurgery scored significantly higher than attendings in other specialties (53.9 vs. 38.9 %, p = 0.003), and the same comparison among residents showed a similar but non-significant trend (42.1 vs. 37.1 %, p = 0.24). Interestingly, the 38 participants who have completed the simulation exercise had significantly higher pre-test scores than the 52 who have not (45.3 vs. 38.6 %, p = 0.04).

Simulation performance (Fig. 5) was weakly correlated with pre-test scores (r = 0.30), and differences between groups were not significant. Attendings (n = 21) scored higher than residents (n = 15) on the 26-point evaluation (72.2 vs. 64.4 %, p = 0.15), and physicians in neurology and neurosurgery scored higher than those in other fields (69.8 vs. 65.7 %, p = 0.47). Common omissions (Fig. 6) included failing to uncover the extremities during the clinical exam (79 % of participants), failing to uncover the chest and abdomen during the apnea test (79 %), and failing to test blink to visual threat (76 %).

Fig. 6 Common omissions in the simulation exercise. Uncover ext: uncovers extremities during clinical exam; Uncover torso: uncovers torso during apnea test; Blink: tests blink to visual threat; Position HOB: positions head of bed at 30° for oculovestibular reflex testing; Bolus/DDAVP: provides fluid bolus or DDAVP to correct central diabetes insipidus.
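Omission rates such as those in Fig. 6 are per-item tallies across participants' checklists. A minimal Python sketch, assuming each participant's record is the set of checklist items they completed; the item labels and the four-participant example are hypothetical.

```python
from collections import Counter


def omission_rates(items: list[str], completed: list[set[str]]) -> dict[str, float]:
    """Percentage of participants who omitted each checklist item.

    `completed` holds one set per participant: the items that participant performed.
    """
    if not completed:
        raise ValueError("no participants")
    omitted = Counter(item for done in completed for item in items if item not in done)
    return {item: 100.0 * omitted[item] / len(completed) for item in items}


# Hypothetical records for four participants.
items = ["uncover_extremities", "blink_to_threat"]
records = [{"blink_to_threat"}, set(), {"uncover_extremities", "blink_to_threat"}, set()]
print(omission_rates(items, records))  # {'uncover_extremities': 75.0, 'blink_to_threat': 50.0}
```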

The simulation cohort’s post-test scores were significantly higher than their pre-test scores (Fig. 7), improving from a mean of 45.4 % to a mean of 73.3 % (p < 0.001). Participants improved significantly in all categories, with the exception of ancillary testing, where there was a non-significant decrease in scores.

On the post-course feedback form, participants gave the course an average rating of “excellent,” and selected “strongly agree” in response to the statements “the course was realistic” and “I will apply what I learned to my job.”

Discussion

Results

Herein we describe a didactic and simulation-based intervention to increase clinicians' competence in clinical brain death determination. The need for this intervention was evidenced by an average score of 41.5 % (n = 90) on the pre-course test, which assessed the fundamental knowledge required to perform an accurate brain death evaluation. These results are even more striking given that most participants are specialists in fields in which the brain death examination features prominently.

Based on pre-course tests, attendings in neurology and neurosurgery are more familiar with brain death concepts and guidelines than specialists in other fields commonly involved in brain death determination. This discrepancy is particularly important when considering that most brain death examinations are not performed by neurologists or neurosurgeons [5], and that many leading US hospitals’ guidelines do not require these specialists to perform the examination [2]. Level of training also seems to play an important role: despite scoring higher than residents in other specialties, neurology and neurosurgery residents scored significantly lower than attending physicians in their field. Again, this is not reflected in hospital policies: among the above-mentioned hospitals that require a neurologist or neurosurgeon to perform the brain death examination, the majority do not specify that an attending physician must perform the examination [2].

No group performed significantly better than another in the simulation exercise, and simulation scores were only weakly correlated with pre-test scores (r = 0.30). A likely explanation is that the pre-test, administered without warning, captured baseline knowledge, whereas before the simulation all participants had attended the didactic session and had ample time to prepare, which narrowed the initial knowledge gap.

Overall, the success of our intervention is evidenced by a 27.9-percentage-point improvement in mean score from pre-test to post-test (45.4 to 73.3 %), and by the uniformly positive feedback from our participants, who routinely emphasized the importance and utility of training in brain death determination.
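The 27.9 figure is an absolute difference between the simulation cohort's mean pre-test and post-test scores; expressed relative to the pre-test mean, the gain is larger:

$$\Delta_{\text{abs}} = 73.3\,\% - 45.4\,\% = 27.9 \text{ percentage points}, \qquad \Delta_{\text{rel}} = \frac{27.9}{45.4} \approx 0.615 = 61.5\,\%$$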

Methods

A critical issue throughout the implementation of this training course was that the number of completed simulations lags behind the number of didactic attendees. This happened for several reasons. First, the didactic was usually scheduled during a lecture slot requiring attendance, including grand rounds, noon conference, or special invited lectures to a specific group. However, simulation required participants to schedule separate 30-min sessions. Furthermore, the prospect of being evaluated by a senior physician can be daunting, and it is possible that fear of criticism—especially among those unfamiliar with the brain death exam—may have led to avoidance of the simulation session, a point supported by significantly lower pre-test scores among those who did not sign up for the simulation. We have also been limited by our ability to provide enough time slots for participants. At 30 min per participant, it was unrealistic to train all 90 participants within a few months. This problem could be overcome by involving more instructors in the training course. At our institution, a single physician administered the entire training course, including didactics and simulations, in order to ensure uniformity across the study population. Implementing this course at other institutions would require much less time and effort if additional instructors participate, opening up more simulation time slots and expediting the training process.

In considering how to best implement our intervention at other sites, we should contextualize it within existing programs. At present, there are two emerging training courses representing opposite ends of the spectrum in terms of rigor and generalizability. The free, online Cleveland Clinic course [10] covers all aspects of brain death determination—ranging from the examination to relevant laws to family discussions—clearly and concisely and takes about 1 h to complete. Such a web-based approach has potential for wide impact at minimal cost, but it cannot allow participants hands-on experience in the context of an evolving clinical scenario.

On the opposite end of the spectrum is the University of Chicago Brain Death Simulation Workshop [11]. This yearly course accepts 20 physicians for a full day of highly structured simulation stations, lectures, and case studies staffed by expert faculty members who provide personalized feedback. While the course is very comprehensive and provides extensive hands-on learning, it can only train a small number of physicians, at a cost of $500–1000 each. We believe, however, that these workshop participants can have the greatest impact by operating smaller courses—such as the one described here—at their home institutions.

Our course lies between these two with regard to resource and learning intensity, as it combines a short, easily replicable didactic session with a hands-on learning experience in a manner that can be delivered to substantial numbers of participants at once. However, limitations to our intervention include that it was implemented at a major teaching hospital with resources such as a staffed simulation center, which may limit applicability to other institutions. We hope that other institutions can draw from aspects of our training course and consider partnerships with neighboring hospitals with these resources.

Our immediate goal is to complete the training of all physicians involved in brain death determination at our institution. In doing so, we will work to further hone our intervention to ensure its optimal effectiveness, with the ultimate hope of implementing it widely to help fill existing knowledge gaps.

References

Wijdicks EFM, Varelas PN, Gronseth GS, Greer DM. Evidence-based guideline update: determining brain death in adults: report of the Quality Standards Subcommittee of the American Academy of Neurology. Neurology. 2010;74:1911–8.

Greer DM, Varelas PN, Haque S, Wijdicks EF. Variability of brain death determination guidelines in leading U.S. neurologic institutions. Neurology. 2008;70:284–9.

Wijdicks EF. The diagnosis of brain death. N Engl J Med. 2001;344:1215–21.

Wang M, Wallace P, Gruen JP. Brain death documentation: analysis and issues. Neurosurgery. 2002;51:731–6.

Shappell CN, Frank JI, Husari K, Sanchez M, Goldenberg F, Ardelt A. Practice variability in brain death determination: a call to action. Neurology. 2013;81:2009–14.

McGaghie WC, Issenberg SB, Cohen ER, Barsuk JH, Wayne DB. Does simulation-based medical education with deliberate practice yield better results than traditional clinical education? A meta-analytic comparative review of the evidence. Acad Med. 2011;86:706–11.

Zigmont JJ, Kappus L, Sudikoff S. The 3D model of debriefing: defusing, discovering, and deepening. Semin Perinatol. 2011;35:52–8.

Dismukes RK, Gaba DM, Howard SK. So many roads: facilitated debriefing in healthcare. Simul Healthc. 2006;1:23–5.

Fanning RM, Gaba DM. The role of debriefing in simulation-based learning. Simul Healthc. 2007;2:115–25.

The Cleveland Clinic Death by Neurologic Criteria course website: https://www.cchs.net/onlinelearning/cometvs10/dncPortal/default.htm.

University of Chicago International Brain Death Simulation Workshop website: http://events.uchicago.edu/cal/event/showEventMore.rdo;jsessionid=C50E5D92211CB65C99BED86509B148FB.bw06.

Conflict of interest

Benjamin MacDougall, Jennifer Robinson, Liana Kappus, Stephanie Sudikoff, and David Greer declare that they have no conflicts of interest.

Additional information

All simulation expertise and resources, including but not limited to simulation-enhanced curricular construction, scenario design, simulator adaptation, and technical support, were provided by the SYN:APSE Center for Learning, Transformation and Innovation at Yale-New Haven Health System.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Supplementary material 1 (MOV 7874 kb)

Cite this article

MacDougall, B.J., Robinson, J.D., Kappus, L. et al. Simulation-Based Training in Brain Death Determination. Neurocrit Care 21, 383–391 (2014). https://doi.org/10.1007/s12028-014-9975-x