Abstract

A large proportion of cardiovascular events occur in individuals classified by traditional risk factors as “low-risk.” Efforts to improve early detection of coronary artery disease among low-risk individuals, or to improve risk assessment, might be justified by this large population burden. The most promising tests for improving risk assessment, or early detection, include the coronary artery calcium (CAC) score, the ankle-brachial index (ABI), and the high-sensitivity C-reactive protein (hsCRP). Data regarding the role of additional testing in low-risk populations to improve early detection or to enhance risk assessment are sparse but suggest that CAC and ABI may be helpful for improving risk classification and detecting the higher-risk people from among those at lower risk. However, in the absence of clinical trials in this patient population, such as has recently been proposed, we do not recommend routine use of any additional testing or screening in low-risk individuals at this time.

Introduction

Despite considerable progress in developing new treatment strategies for coronary heart disease (CHD) and better prevention of incident disease, CHD continues to be the leading cause of death in the United States [1–3]. Approximately one-half of the improvement in CHD mortality during the past 30 years is attributed to improved preventive measures including medications (e.g., statins) and risk factor control [1]. Given the importance of CHD as a public health problem and the widely recognized effectiveness of available preventive measures, it is logical to consider early detection, or screening for coronary artery disease, in order to begin prevention measures earlier. Yet, in its most recent review of the role of testing for CHD risk and early detection of CHD, the United States Preventive Services Task Force (USPSTF) concluded that evidence available in 2009 was insufficient to make any recommendations about testing for such “non-traditional” risk factors as high-sensitivity C-reactive protein (hsCRP), carotid intima-media thickness (c-IMT), and coronary artery calcium (CAC) score [4]. In some circles, the topic of screening for subclinical atherosclerosis to improve cardiovascular risk assessment has been vigorously debated, with experts claiming that the time for early disease detection is already here [5], while others contend that the case for screening for asymptomatic CHD is unproven and requires further research [6].

Risk assessment, as contrasted with screening for early signs of CHD, has been an accepted component of preventive cardiology for at least 20 years. In 2001, the Third Report of the Adult Treatment Panel (ATP III) of the National Cholesterol Education Program [7] in the U.S. recommended use of the Framingham risk score (FRS) to estimate the 10-year risk of CHD in all adults [8]. Following this traditional risk factor-based calculation, the ATP III guidelines recommended initiation of lipid-lowering therapy for individuals classified as high-risk (>20 % risk of hard CHD over 10 years) but only selective use of lipid-lowering therapy in people with a 10-year risk estimate between 10 % and 20 %. These guidelines did not recommend use of lipid-lowering drugs when the risk estimate is low, i.e., less than 10 % risk in ten years. Such treatment decisions are largely based on judgments about the cost-effectiveness of lipid-lowering and other drugs when used among the lower-risk members of the general population, and no further risk-related testing is called for.
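The ATP III thresholds described above amount to a simple decision rule. The following is a minimal Python sketch for illustration only, not part of the guideline itself; the function name `atp3_risk_category` is hypothetical, and treating a risk of exactly 20 % as intermediate is an assumption of this sketch.

```python
def atp3_risk_category(ten_year_risk_pct: float) -> str:
    """Map a 10-year hard-CHD risk estimate (in percent) to a risk category.

    Thresholds follow the text: <10 % low, 10-20 % intermediate, >20 % high.
    Treating exactly 20 % as intermediate is an assumption of this sketch.
    """
    if ten_year_risk_pct < 0 or ten_year_risk_pct > 100:
        raise ValueError("risk must be a percentage between 0 and 100")
    if ten_year_risk_pct < 10:
        return "low"
    if ten_year_risk_pct <= 20:
        return "intermediate"
    return "high"
```

Under this rule, only the "high" category receives a routine recommendation for lipid-lowering therapy, and the "low" category receives none.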

The rationale for allocating drug interventions according to patient risk (“matching intensity of treatment to severity of the risk” [9]) is based on a cost-benefit approach. Providing preventive therapies that have cost and risk will be more readily justified in higher-risk patients who also have the greatest potential for benefit from risk-reducing treatments. However, high-risk individuals (>20 % 10-year risk of hard CHD) comprise a relatively small proportion of the population, so a prevention strategy that is limited to high-risk individuals fails to address the potential benefits of preventive interventions to the large segment of the population estimated to be at lower risk [10].

In the Systematic Coronary Risk Evaluation (SCORE) project in Europe, far more than half of cardiovascular deaths among men aged 50–59 years occurred in individuals with an estimated 10-year risk of ≤10 % [11, 12] (Fig. 1). This somewhat unexpected finding arises because lower-risk individuals remain susceptible to CHD and, despite their lower individual risk, their sheer numbers account for a high disease burden [13]. In addition, the way we conventionally define CHD risk is weighted heavily by chronologic age rather than biological age. This large disease burden in apparently “low-risk” people raises the question of whether there are better methods of detecting risk than traditional risk factor scores alone.
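The arithmetic behind this population burden can be illustrated with a short Python sketch. The cohort sizes and risks below are hypothetical values chosen for illustration; they are not SCORE data, and the helper `expected_events` is an assumed name.

```python
def expected_events(population: int, ten_year_risk: float) -> float:
    """Expected number of events = group size x average 10-year risk."""
    return population * ten_year_risk


# Hypothetical cohort (illustrative numbers only, not taken from SCORE):
# a large low-risk group and a small high-risk group.
groups = {
    "low": (9000, 0.04),   # 9,000 people at 4 % average risk
    "high": (1000, 0.25),  # 1,000 people at 25 % average risk
}

events = {name: expected_events(n, risk) for name, (n, risk) in groups.items()}
total_events = sum(events.values())
share_of_events = {name: e / total_events for name, e in events.items()}
```

With these assumed inputs, the low-risk group contributes more events than the high-risk group even though each of its members is at much lower individual risk.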

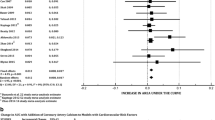

A comparison of the added discrimination for risk assessment using selected tests beyond the Framingham Risk Score (FRS). CAC plus FRS is the most useful combination in this comparison from the Multi-Ethnic Study of Atherosclerosis. Reprinted with permission from: Yeboah J, McClelland RL, et al. Comparison of novel risk markers for improvement in cardiovascular risk assessment in intermediate-risk individuals. JAMA. 2012;308(8):792

Various guidelines differ on the best approach to screening and risk assessment in lower-risk individuals. In the 2009 statement from the USPSTF, while not recommending any tests for screening or “non-traditional testing,” the Task Force did recommend the routine use of traditional risk factors for risk assessment in all adults [4]. In contrast, recent guidelines from the American Heart Association/American College of Cardiology Foundation (AHA/ACCF) [14••] endorsed both traditional risk factor assessment, such as the Framingham Risk Score (FRS) as well as use of additional biomarkers such as CAC and hsCRP as “reasonable” for improving risk stratification in individuals at intermediate risk for CHD (10 %–20 % ten-year risk). The 2012 updated Canadian Prevention Guidelines [15••] also recommended that a cardiovascular risk assessment, using the “10-Year Risk” provided by the global FRS model (looking at total CVD events rather than MI alone as was done in the ATP III FRS) be completed every 3–5 years for men age 40–75, and women age 50–75 years.

Under special circumstances, the FRS was also advised in other kinds of patients, including in younger individuals with at least one risk factor for premature cardiovascular disease. The Canadian guideline stated that additional testing could be considered for more refined risk assessment in patients with intermediate (10 %–19 % ten-year) FRS, especially when treatment decisions are “uncertain.” They noted that use of additional tests should be viewed as optional and only to be used where decision-making will be directly affected (i.e., not in those in the high risk or lower risk groups [<5 %]). The choice of which test to use was judged to depend on the clinical situation and local expertise. In appropriate situations, the Canadian guideline stated that hsCRP can be helpful, is safe and inexpensive, and should be considered. For noninvasive testing, a clinical suspicion of peripheral vascular disease “should prompt” ankle brachial index (ABI) testing. In regard to CAC testing with computed tomography, the Canadian guideline concluded that CAC is superior to c-IMT for risk assessment. However, given expense and radiation exposure from CAC, until further data are available, its widespread use was not advocated.

Since the publication of these guidelines in 2009–2012, additional data from prospective cohort studies such as the Multi-Ethnic Study of Atherosclerosis (MESA) in the United States and the Heinz Nixdorf Recall (HNR) study in Germany have provided additional insights into the predictive capacity of various risk assessment strategies. In the balance of this review, we present an update of the most promising clinical, laboratory, and imaging tests that have been studied for CHD risk assessment or for early testing/screening of asymptomatic adults.

Traditional Risk Factors and Limitations of Risk Prediction Scoring Systems

In addition to age and sex, the factors that currently comprise the foundation of cardiovascular risk assessment are blood pressure, diabetes, cholesterol, smoking status, and family history of premature CHD. Risk scores such as the FRS incorporate all of these “traditional” risk factors and are useful for discriminating CHD risk within populations [8]. Therefore, the rationale for using CHD risk factors and risk scores to estimate an individual’s risk as the initial basis for preventive treatment is widely recognized, well-established over many years, and generally agreed upon [4, 7]. But, as discussed previously, using traditional risk factors alone to estimate CHD risk classifies large numbers of at-risk people as “low risk” which suggests the rationale for better risk assessment and/or better screening approaches.

Potential Biomarkers for Improved Identification of Early Coronary Artery Disease or CHD Risk

hsCRP

Inflammation is known to play an important role in the initiation and progression of atherosclerosis, and hsCRP is considered to be a useful marker of systemic inflammation [16]. Analysis of the Air Force/Texas Coronary Atherosclerosis Prevention Study showed that reduction in coronary events with lovastatin was proportional to the reduction in baseline hsCRP [17]. However, this effect was not observed in the Anglo-Scandinavian Cardiac Outcomes Trial [18]. The JUPITER trial (Justification for the Use of Statins in Primary Prevention: an Intervention Trial Evaluating Rosuvastatin [19]) randomly assigned individuals with no prior history of coronary artery disease, low-density lipoprotein cholesterol less than 130 mg/dL, and hsCRP greater than 2.0 mg/L to receive rosuvastatin or placebo. Over a median follow-up of 1.9 years, assignment to the rosuvastatin group was associated with a 54 % relative risk reduction in the incidence of fatal or nonfatal myocardial infarction. Based on these data, the 2010 AHA/ACCF guidelines concluded that measurement of hsCRP for consideration of statin therapy in patients who meet the entry criteria for the JUPITER trial was reasonable (class IIa recommendation) [14••]. The AHA/ACCF did not recommend use of hsCRP testing in otherwise low-risk individuals.

In terms of risk assessment or screening, a key question is whether the addition of hsCRP has utility in improving risk prediction beyond traditional risk factors, whether measured by the C-statistic (discrimination) or by the net reclassification improvement (NRI) index [20••]. The addition of hsCRP to prediction models based on traditional risk factors is associated with only modest improvement in risk prediction, with C-statistic changes ranging from 0.0039 in a pooled cohort of over 252,000 patients [21] to 0.015 in a single study of 3,435 European men [22]. The NRI with addition of hsCRP to traditional risk factors was also relatively modest, at 1.5 % in the large pooled cohort analysis [21]. Therefore, we would agree with the AHA/ACCF recommendation that hsCRP should not be used as a potential means of modifying risk assessment in asymptomatic low-risk individuals.
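For readers less familiar with the NRI, the category-based version can be sketched in a few lines of Python. This is a minimal illustration of the standard definition (net upward reclassification among people with events plus net downward reclassification among people without events); the function name and the integer coding of risk categories are assumptions of this sketch, and it is not the code used in any of the cited analyses.

```python
def categorical_nri(events, nonevents):
    """Category-based net reclassification improvement.

    `events` and `nonevents` are lists of (old_category, new_category)
    pairs, with categories coded as integers (higher = higher risk).
    NRI = [P(up | event) - P(down | event)]
        + [P(down | nonevent) - P(up | nonevent)]
    """
    def net_up(pairs):
        up = sum(1 for old, new in pairs if new > old)
        down = sum(1 for old, new in pairs if new < old)
        return (up - down) / len(pairs)

    # Moving up is correct for events; moving down is correct for nonevents.
    return net_up(events) - net_up(nonevents)
```

A positive value means that adding the new marker moved more people in the correct direction than in the wrong one; the continuous NRI used in some of the studies discussed later drops the categories and counts any upward or downward change in predicted risk.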

Ankle-Brachial Index (ABI)

As noted above, the Canadian guideline [15••] stated that a clinical suspicion of peripheral vascular disease (PVD) “should prompt” ankle-brachial index (ABI) testing for risk assessment purposes. The guideline did not specifically address the role of ABI in low-risk individuals but offered the view that any additional testing in low-risk individuals was not recommended. The AHA/ACCF guideline [14••] also assigned a class IIa recommendation to measurement of ABI for cardiovascular risk assessment in asymptomatic adults at intermediate risk. The ABI was also not recommended by AHA/ACCF for use in low-risk individuals. The ABI is an office-based test to check for the presence of PVD. It is performed by Doppler measurement of blood pressure in all four extremities at the brachial, posterior tibial, and dorsalis pedis arteries. The highest lower-extremity blood pressure is divided by the highest of the upper-extremity blood pressures, with a value of <0.9 indicating the presence of PVD.
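The calculation described above can be written as a short Python sketch. It follows the description in the text (highest ankle pressure divided by highest brachial pressure, with <0.9 taken as abnormal); the function names are hypothetical, and the sketch is illustrative rather than a clinical tool.

```python
def ankle_brachial_index(brachial_mmhg, ankle_mmhg):
    """ABI as described in the text: highest lower-extremity systolic
    pressure divided by the highest upper-extremity systolic pressure.

    brachial_mmhg: systolic pressures from both arms (mm Hg).
    ankle_mmhg: posterior tibial and dorsalis pedis pressures (mm Hg).
    """
    if not brachial_mmhg or not ankle_mmhg:
        raise ValueError("at least one brachial and one ankle pressure required")
    if max(brachial_mmhg) <= 0:
        raise ValueError("brachial pressure must be positive")
    return max(ankle_mmhg) / max(brachial_mmhg)


def suggests_pvd(abi, threshold=0.9):
    """True when the ABI falls below the 0.9 cutoff cited in the text."""
    return abi < threshold
```

For example, brachial pressures of 120 and 124 mm Hg with a highest ankle pressure of 104 mm Hg give an ABI of 104/124, which is below 0.9.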

Many epidemiological studies have demonstrated that an abnormal ABI in otherwise asymptomatic individuals is associated with cardiovascular events. A collaborative study [23] combined data from 16 studies and included a total of nearly 25,000 men and 23,000 women without a history of CHD. The study included a wide representation of various components of the general population, including blacks, American Indians, persons of Asian descent, and Hispanics as well as whites. The mean age in the studies ranged from 47 to 78 years, and the FRS-predicted rate of CHD ranged from 11 % (intermediate risk) to 32 % (high-risk) in men and from 7 % (low-risk) to 15 % (intermediate risk) in women.

For an ABI of <0.9 compared to an ABI in the normal range (1.11 to 1.4), the unadjusted hazard ratios for cardiovascular mortality and major cardiovascular events were 3.3 for men and 2.7 for women. When adjusted for the FRS, the hazard ratios were attenuated, but still elevated, at 2.3 in both men and women. The greatest incremental benefit of ABI for predicting risk in men was in those with a high FRS (>20 %), in whom a normal ABI reduced risk to intermediate. In women the greatest risk assessment benefit was in those with a low FRS (<10 %), in whom an abnormally low or high ABI reclassified them as high risk, and in those with an intermediate FRS, who were reclassified as high risk with a low ABI. Based on these impressive data, especially in lower risk individuals, the ABI appears to be a reasonable test to employ when there is any suspicion of vascular disease in otherwise asymptomatic individuals [23].

Coronary Artery Calcification (CAC)

CAC has demonstrated consistent improvement in risk prediction when added to traditional risk factors across multiple studies and cohorts. An analysis of the HNR study examined the reclassification improvement with CAC and hsCRP [24]. The addition of CAC and hsCRP individually to traditional risk factors resulted in an NRI of 0.238 and 0.105, respectively. The addition of CAC to a model that included hsCRP further improved reclassification, whereas when CAC was already included in the model, there was no further improvement with hsCRP. Thus, although both markers improved risk prediction, this important recent analysis showed that the vast majority of the improvement was driven by CAC.

An analysis from the MESA Study compared the improvement in prediction of six different risk markers when added to traditional risk factors for CHD risk prediction in intermediate-risk individuals (FRS 10 %–20 % in ten years) [25••]. The tests included CAC, c-IMT, ABI, brachial artery flow-mediated dilation, hsCRP, and family history of premature CHD [25••]. This study has the major strength of comparing the various risk markers within a single population, allowing for head-to-head evaluations, but the analysis was restricted to intermediate-risk individuals. After multivariable adjustment, c-IMT and brachial flow-mediated dilation were not associated with incident CHD. The area under the receiver operating characteristic curve (AUC) with the traditional risk prediction model was 0.623. The AUC values with the addition of CAC, ABI, flow-mediated dilation, and hsCRP were 0.784, 0.650, 0.639, and 0.640, respectively. The continuous NRI [26] was used to measure the improvement in risk prediction; CAC was associated with the greatest improvement in risk prediction (NRI = 0.659) and the greatest number of correctly reclassified MESA participants (Fig. 2).
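The AUC (equivalently, the C-statistic for a binary outcome) can be read as the probability that a randomly chosen person who had an event was assigned a higher predicted risk than a randomly chosen person who did not. The Python sketch below computes it by direct pairwise comparison. It is a simplified illustration that ignores follow-up time and censoring, which survival analyses of cohort data must handle, and the function name is an assumption.

```python
def c_statistic(risk_events, risk_nonevents):
    """Concordance probability for a binary outcome.

    Counts, over all (event, nonevent) pairs, how often the person with
    the event had the higher predicted risk; ties count as one half.
    """
    if not risk_events or not risk_nonevents:
        raise ValueError("need at least one event and one nonevent")
    concordant = 0.0
    for r_event in risk_events:
        for r_nonevent in risk_nonevents:
            if r_event > r_nonevent:
                concordant += 1.0
            elif r_event == r_nonevent:
                concordant += 0.5
    return concordant / (len(risk_events) * len(risk_nonevents))
```

A value of 0.5 corresponds to chance and 1.0 to perfect separation, which gives a sense of scale for the increase from 0.623 to 0.784 reported when CAC was added.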

More than half of CVD events were noted in men aged 50–59 years with a CVD risk of <10 % in 10 years. Reprinted with permission from: Cooney MT, Dudina A, Whincup P, et al. Re-evaluating the Rose approach: comparative benefits of the population and high-risk preventive strategies. Eur J Cardiovasc Prev Rehabil. 2009;16(5):541–9

A similar analysis was conducted in the Rotterdam (Netherlands) study of older adults to compare the effectiveness of 12 non-traditional risk markers for improvement in CHD risk prediction when added to traditional risk models [27••]. CAC, c-IMT, and hsCRP were among the risk markers that were evaluated when added to traditional risk factors. CAC was again associated with the greatest improvement in risk prediction, with an overall NRI of 0.193. There was no statistically significant improvement in risk prediction beyond traditional risk factors with the addition of hsCRP or c-IMT [27••]. This analysis was conducted across the entire spectrum of the Rotterdam cohort and was not restricted to intermediate-risk individuals.

Very few studies have evaluated the role of non-traditional tests for risk assessment in low-risk individuals (FRS < 10 % in ten years). An analysis of women in MESA with a 10-year risk for CHD of < 10 % found that CAC was present in 32 % of these individuals [28]. Women with CAC > 0 had a greater than 6-fold increased risk of CHD during a relatively short follow-up period of 3.75 years, compared to women with no CAC. In addition, advanced CAC (CAC score >300) was highly predictive of future CHD and total cardiovascular disease (CVD) events compared with nondetectable CAC, and it identified a group of “low-risk” women with a 6.7 % and 8.6 % absolute CHD and CVD risk, respectively, over a 3.75-year period. Other risk factors were not specifically evaluated in this report [28].

The HNR Study also evaluated the potential role of CAC for modifying risk prediction in a low-risk sub-cohort defined by lack of indication for statin therapy according to Canadian Guidelines [29]. In 1,934 participants, traditional CHD risk variables and CAC were measured and outcomes determined over eight years. In multiple Cox regression analysis including age, sex, total-/HDL-cholesterol ratio, and antihypertensive medication, log2(CAC + 1) remained an independent predictor of cardiovascular events (HR = 1.21 [1.09–1.33], p < 0.001). Measures of discrimination improved with the addition of CAC to the model: the integrated discrimination improvement was 0.0167, p = 0.014. Net reclassification improvement using risk categories of 0–< 3 %, 3–10 %, and >10 % was 0.251, p = 0.01, largely driven by a 32 % correct up-classification in persons with events. Yet, only 38 (2 %) of participants were identified as being at high risk using CAC imaging in addition to traditional risk factor assessment.
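Because CAC entered the Cox model as log2(CAC + 1), the reported hazard ratio of 1.21 applies to each doubling of (CAC + 1). The Python sketch below shows how that per-doubling estimate would translate into a relative hazard for a given score, compared with a score of zero. It assumes the log-linear relationship holds across the whole range and that other covariates are held fixed; the function name is hypothetical.

```python
import math


def relative_hazard_vs_zero_cac(cac_score, hr_per_doubling=1.21):
    """Relative hazard versus CAC = 0 under a log2(CAC + 1) Cox term.

    hr_per_doubling is the hazard ratio per one-unit increase in
    log2(CAC + 1), i.e., per doubling of (CAC + 1); 1.21 is the point
    estimate quoted in the text.
    """
    if cac_score < 0:
        raise ValueError("CAC score cannot be negative")
    return hr_per_doubling ** math.log2(cac_score + 1)
```

Under these assumptions a CAC score of 1 corresponds to a relative hazard of 1.21, and a score of 100 to roughly 3.6, since log2(101) is about 6.66 doublings.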

Our group is currently completing a meta-analysis of low-risk women in MESA, HNR, the Rotterdam Study, and the Dallas Heart Study to further determine the utility of CAC for CHD risk improvement in low-risk women. In preliminary (presented [30]) results, the addition of CAC to a baseline prediction model containing traditional risk factors resulted in a continuous NRI of 0.40 (P < 0.001). Thus, among the currently available biomarkers for coronary artery disease screening, CAC provides the greatest improvement in risk prediction and appears to have the greatest potential to identify individuals at higher risk for CHD, even among those initially considered to be low-risk.

Effectiveness of Treatment in Individuals with Subclinical Coronary Atherosclerosis

Among the World Health Organization’s classical criteria for evaluating the value of disease screening, one of the most important is that the outcome of disease treatment is better when detected earlier rather than later [31]. At present, there are no randomized, controlled trial data that have examined clinical outcomes with drug treatment interventions based on CAC testing or any other approach to risk assessment in low risk individuals. However, there are observational data that provide insight into this question.

In a retrospective analysis, 849 patients at intermediate risk for CHD, with a mean CAC score of 336 Agatston units, were treated with aggressive risk factor modification in a preventive cardiology clinic [32]. Treatment of risk factors resulted in 60 % of patients meeting a low-density lipoprotein cholesterol goal of <70 mg/dL and 94.2 % meeting a blood pressure goal of <140/90 mm Hg. There was no significant difference in the 10-year adjusted mortality rates between this cohort and 850 age-matched and sex-matched controls (9.3 % and 10.6 %, P = 0.80). The rate of major adverse coronary events (including revascularization) in the study group was 7.9 %, for an annual rate of <1 %, which is substantially lower than what would be expected based on the CAC score. These data suggest that aggressive risk factor modification may attenuate the risk of CHD associated with a high CAC score.

Other data that suggest a benefit of treating low-risk individuals with statins derive from the Cholesterol Treatment Trialists collaborative group [33]. In a meta-analysis that included individual participant data from 22 trials of statin versus control (n = 134,537) and five trials of more versus less statin (n = 39,612), overall risk reduction was estimated at 21 % for major vascular events in the statin-treated individuals. Importantly, the proportional reduction in major vascular events was at least as great in the two lowest risk categories (<5 % and ≥5 % to <10 %) as in the higher risk categories. Specifically, the relative risk per 1.0 mmol/L reduction in low-density lipoprotein cholesterol in the lowest (<5 % risk) category was 0.62 [99 % CI 0.47–0.81] compared to 0.81 [99 % CI 0.77–0.86] in the traditionally high-risk category (≥20 %). There was no evidence that reduction of low-density lipoprotein cholesterol with a statin increased cancer incidence.
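A similar or larger proportional reduction in low-risk people does not by itself imply a similar absolute benefit, which is why cost-effectiveness remains an open question. The Python sketch below applies the relative risks quoted above to hypothetical baseline risks; the baseline values of 3 % and 25 % are illustrative assumptions and are not taken from the meta-analysis, and the function names are hypothetical.

```python
def absolute_risk_reduction(baseline_risk, relative_risk):
    """ARR = baseline risk x (1 - relative risk)."""
    return baseline_risk * (1.0 - relative_risk)


def number_needed_to_treat(arr):
    """NNT = 1 / ARR, defined only for a positive ARR."""
    if arr <= 0:
        raise ValueError("ARR must be positive")
    return 1.0 / arr


# Hypothetical baseline risks (assumptions for illustration only),
# combined with the relative risks per 1.0 mmol/L quoted in the text.
arr_low = absolute_risk_reduction(0.03, 0.62)   # low-risk category
arr_high = absolute_risk_reduction(0.25, 0.81)  # high-risk category
nnt_low = number_needed_to_treat(arr_low)
nnt_high = number_needed_to_treat(arr_high)
```

With these assumed baselines, the absolute reduction is smaller and the number needed to treat is larger in the low-risk group, despite its more favorable relative risk.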

Future Directions

There is a paucity of evidence to support use of widespread testing for coronary artery disease presence (screening) or risk assessment in low-risk individuals. The current evidence, as reviewed above, favors the selective use of CAC and ABI in low-risk individuals, but there is no definitive evidence that this approach will lead to improved patient outcomes from earlier treatment or that it will be cost-effective. A large-scale trial of CAC testing in low-risk individuals has been proposed but has not yet been launched [34•]. A cost-effectiveness analysis would be an essential component of such a trial.

Conclusions

Strong evidence suggests that a large proportion of all cardiovascular events occur in the segment of the population classified by traditional risk factors as “low-risk” [12]. In our opinion, the available evidence regarding the role of additional testing in low-risk populations to improve early detection or to enhance risk assessment suggests that CAC and ABI may be helpful for improving risk classification and detecting the higher-risk people from among those at lower risk. In the absence of a clinical trial, such as that proposed by Ambrosius et al. [34•], we do not recommend routine use of any additional testing or screening in low-risk individuals at this time. We strongly favor a clinical trial to address this large population burden.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

1. Ford ES, Ajani UA, Croft JB, et al. Explaining the decrease in U.S. deaths from coronary disease, 1980–2000. N Engl J Med. 2007;356(23):2388–98.

2. Ford ES, Capewell S. Coronary heart disease mortality among young adults in the U.S. from 1980 through 2002: concealed leveling of mortality rates. J Am Coll Cardiol. 2007;50(22):2128–32.

3. Lozano R, Naghavi M, Foreman K, et al. Global and regional mortality from 235 causes of death for 20 age groups in 1990 and 2010: a systematic analysis for the Global Burden of Disease Study 2010. Lancet. 2012;380(9859):2095–128.

4. U.S. Preventive Services Task Force. Using nontraditional risk factors in coronary heart disease risk assessment: U.S. Preventive Services Task Force recommendation statement. Ann Intern Med. 2009;151(7):474–82.

5. Shah PK. Screening asymptomatic subjects for subclinical atherosclerosis: can we, does it matter, and should we? J Am Coll Cardiol. 2010;56(2):98–105.

6. Lauer MS. Screening asymptomatic subjects for subclinical atherosclerosis: not so obvious. J Am Coll Cardiol. 2010;56(2):106–8.

7. Expert Panel on Detection, Evaluation, and Treatment of High Blood Cholesterol in Adults. Executive summary of the third report of the National Cholesterol Education Program (NCEP) expert panel on detection, evaluation, and treatment of high blood cholesterol in adults (Adult Treatment Panel III). JAMA. 2001;285(19):2486–97.

8. Wilson PW, D’Agostino RB, Levy D, Belanger AM, Silbershatz H, Kannel WB. Prediction of coronary heart disease using risk factor categories. Circulation. 1998;97(18):1837–47.

9. Califf RM, Armstrong PW, Carver JR, D’Agostino RB, Strauss WE. 27th Bethesda Conference: matching the intensity of risk factor management with the hazard for coronary disease events. Task Force 5. Stratification of patients into high, medium and low risk subgroups for purposes of risk factor management. J Am Coll Cardiol. 1996;27(5):1007–19.

10. Rose G. Sick individuals and sick populations. Int J Epidemiol. 2001;30(3):427–32; discussion 433–4.

11. Conroy RM, Pyorala K, Fitzgerald AP, et al. Estimation of ten-year risk of fatal cardiovascular disease in Europe: the SCORE project. Eur Heart J. 2003;24(11):987–1003.

12. Cooney MT, Dudina A, Whincup P, et al. Re-evaluating the Rose approach: comparative benefits of the population and high-risk preventive strategies. Eur J Cardiovasc Prev Rehabil. 2009;16(5):541–9.

13. Rose G. Sick individuals and sick populations. Int J Epidemiol. 1985;14(1):32–8.

14. •• Greenland P, Alpert JS, Beller GA, et al. 2010 ACCF/AHA guideline for assessment of cardiovascular risk in asymptomatic adults: a report of the American College of Cardiology Foundation/American Heart Association Task Force on Practice Guidelines. Circulation. 2010;122(25):e584–636. This is an important review of all potential approaches to risk assessment in asymptomatic patients. It is a good source of detailed background on this subject.

15. •• Anderson TJ, Gregoire J, Hegele RA, et al. 2012 update of the Canadian Cardiovascular Society guidelines for the diagnosis and treatment of dyslipidemia for the prevention of cardiovascular disease in the adult. Can J Cardiol. 2013;29(2):151–67. This update to previous Canadian Guidelines is an important reference source on the topics of prevention and risk assessment.

16. Libby P, Ridker PM, Hansson GK; Leducq Transatlantic Network on Atherothrombosis. Inflammation in atherosclerosis: from pathophysiology to practice. J Am Coll Cardiol. 2009;54(23):2129–38.

17. Ridker PM, Rifai N, Clearfield M, et al. Measurement of C-reactive protein for the targeting of statin therapy in the primary prevention of acute coronary events. N Engl J Med. 2001;344(26):1959–65.

18. Sever PS, Poulter NR, Chang CL, et al. Evaluation of C-reactive protein before and on-treatment as a predictor of benefit of atorvastatin: a cohort analysis from the Anglo-Scandinavian Cardiac Outcomes Trial lipid-lowering arm. J Am Coll Cardiol. 2013;62(8):717–29.

19. Ridker PM. High-sensitivity C-reactive protein and cardiovascular risk: rationale for screening and primary prevention. Am J Cardiol. 2003;92(4B):17K–22K.

20. •• Yousuf O, Mohanty BD, Martin SS, et al. High-sensitivity C-reactive protein and cardiovascular disease: a resolute belief or an elusive link? J Am Coll Cardiol. 2013;62(5):397–408. This is a comprehensive review of the utility of C-reactive protein for cardiovascular risk. It supports a limited role for hsCRP in risk assessment.

21. Emerging Risk Factors Collaboration, Kaptoge S, Di Angelantonio E, et al. C-reactive protein, fibrinogen, and cardiovascular disease prediction. N Engl J Med. 2012;367(14):1310–20.

22. Koenig W, Lowel H, Baumert J, Meisinger C. C-reactive protein modulates risk prediction based on the Framingham Score: implications for future risk assessment: results from a large cohort study in southern Germany. Circulation. 2004;109(11):1349–53.

23. Ankle Brachial Index Collaboration, Fowkes FG, Murray GD, et al. Ankle brachial index combined with Framingham Risk Score to predict cardiovascular events and mortality: a meta-analysis. JAMA. 2008;300(2):197–208.

24. Mohlenkamp S, Lehmann N, Moebus S, et al. Quantification of coronary atherosclerosis and inflammation to predict coronary events and all-cause mortality. J Am Coll Cardiol. 2011;57(13):1455–64.

25. •• Yeboah J, McClelland RL, Polonsky TS, et al. Comparison of novel risk markers for improvement in cardiovascular risk assessment in intermediate-risk individuals. JAMA. 2012;308(8):788–95. This is one of a small number of analyses from a large cohort comparing risk assessment approaches using different tests. It supports a conclusion that CAC is the leading contender for improving risk assessment in intermediate risk populations.

26. Pencina MJ, D’Agostino Sr RB, Steyerberg EW. Extensions of net reclassification improvement calculations to measure usefulness of new biomarkers. Stat Med. 2011;30(1):11–21.

27. •• Kavousi M, Elias-Smale S, Rutten JH, et al. Evaluation of newer risk markers for coronary heart disease risk classification: a cohort study. Ann Intern Med. 2012;156(6):438–44. This paper is similar to reference 26 but applies across the entire Rotterdam cohort of mostly elderly men and women.

28. Lakoski SG, Greenland P, Wong ND, et al. Coronary artery calcium scores and risk for cardiovascular events in women classified as “low risk” based on Framingham risk score: the Multi-Ethnic Study of Atherosclerosis (MESA). Arch Intern Med. 2007;167(22):2437–42.

29. Mohlenkamp S, Lehmann N, Greenland P, et al. Coronary artery calcium score improves cardiovascular risk prediction in persons without indication for statin therapy. Atherosclerosis. 2011;215(1):229–36.

30. Desai CS, Ayers CR, Budoff M, et al. Improved coronary heart disease risk prediction with coronary artery calcium in low-risk women: a meta-analysis of four cohorts. J Am Coll Cardiol. 2013;61(10):E995.

31. Wilson JM. The evaluation of the worth of early disease detection. J R Coll Gen Pract. 1968;16 Suppl 2:48–57.

32. Bhatti SK, Dinicolantonio JJ, Captain BK, Lavie CJ, Tomek A, O’Keefe JH. Neutralizing the adverse prognosis of coronary artery calcium. Mayo Clin Proc. 2013;88(8):806–12.

33. Cholesterol Treatment Trialists’ (CTT) Collaborators, Mihaylova B, Emberson J, et al. The effects of lowering LDL cholesterol with statin therapy in people at low risk of vascular disease: meta-analysis of individual data from 27 randomised trials. Lancet. 2012;380(9841):581–90.

34. • Ambrosius WT, Polonsky TS, Greenland P, et al. Design of the value of imaging in enhancing the wellness of your heart (VIEW) trial and the impact of uncertainty on power. Clin Trials. 2012;9(2):232–46. This is a provocative proposal for a large-scale trial of coronary artery calcium testing (screening) in asymptomatic low-risk individuals. It is an important “thought-piece” on this topic.

Compliance with Ethics Guidelines

Conflict of Interest

Chintan S. Desai, Roger S. Blumenthal, and Philip Greenland declare that they have no conflict of interest.

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Additional information

This article is part of the Topical Collection on Coronary Heart Disease

Cite this article

Desai, C.S., Blumenthal, R.S. & Greenland, P. Screening Low-Risk Individuals for Coronary Artery Disease. Curr Atheroscler Rep 16, 402 (2014). https://doi.org/10.1007/s11883-014-0402-8