Abstract

Summary

Automated screening for vertebral fractures could improve outcomes. We achieved an AUC-ROC = 0.968 for the prediction of moderate to severe fracture using a GAM with age and three maximal vertebral body scores of fracture from a convolutional neural network. Maximal fracture scores resulted in a performant model for subject-level fracture prediction. Combining individual deep learning vertebral body fracture scores and demographic covariates for subject-level classification of osteoporotic fracture achieved excellent performance (AUC-ROC of 0.968) on a large dataset of radiographs with basic demographic data.

Purpose

Osteoporotic vertebral fractures are common and morbid. Automated opportunistic screening for incidental vertebral fractures from radiographs, the highest volume imaging modality, could improve osteoporosis detection and management. We consider how to form patient-level fracture predictions and summarization to guide management, using our previously developed vertebral fracture classifier on segmented radiographs from a prospective cohort study of US men (MrOS). We compare the performance of logistic regression (LR) and generalized additive models (GAM) with combinations of individual vertebral scores and basic demographic covariates.

Methods

Subject-level LR and GAM models were created retrospectively using all fracture predictions or summary variables such as order statistics, adjacent vertebral interactions, and demographic covariates (age, race/ethnicity). The classifier outputs for 8663 vertebrae from 1176 thoracic and lumbar radiographs in 669 subjects were divided by subject to perform stratified fivefold cross-validation. Models were assessed using multiple metrics, including receiver operating characteristic (ROC) and precision-recall (PR) curves.

Results

The best model (AUC-ROC = 0.968) was a GAM using the top three maximum vertebral fracture scores and age. Using top-ranked scores only, rather than all vertebral scores, improved performance for both model classes. Adding age, but not ethnicity, to the GAMs improved performance slightly.

Conclusion

Maximal vertebral fracture scores resulted in the highest-performing models. While combining multiple vertebral body predictions risks decreasing specificity, our results demonstrate that subject-level models maintain good predictive performance. Thresholding strategies can be used to control sensitivity and specificity as clinically appropriate.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

In 2010, an estimated 158 million individuals were at high risk of osteoporotic fracture worldwide, doubling by 2040 [1]. Despite the US Preventive Services Task Force recommendations, osteoporosis screening is underutilized [2]. Incident osteoporotic vertebral compression fractures increase the risk of future fractures, including hip fractures which have a 20–40% 1-year mortality [3, 4].

Given the morbidity and mortality associated with osteoporotic fractures and the high prevalence of disease, better detection could benefit many subjects. Fractures are often incidental, subtle, and under-reported, resulting in under-diagnosis and under-treatment [5]. Automated opportunistic screening of existing data could markedly improve the standard of care by improving screening rates and osteoporosis diagnosis at minimal added cost. Unlike prior predictive models that use covariates to predict future risk of fracture based on risk factors, or use CT data to predict BMD and osteopenia, or use CT data to screen for existing fracture, we seek to use radiographs to screen large populations for the presence of current or prior osteoporotic fracture [6,7,8]. In this body of work, we take fracture scores for each vertebra on a given radiograph from a deep learning model and subject data to predict whether there are one or more fractures on a radiograph.

Previously, we used a dataset from The Osteoporotic Fractures in Men (MrOS) study consisting of images of individual vertebra from each radiograph (vertebral patches) and a dichotomized Genant Semiquantitative method classification system to train a vertebral fracture detecting deep learning model [9, 10]. Final AUC-ROC performance was > 0.990 at the vertebral level. However, for clinical use as an automated screening tool, the decision to alert a clinician must be calculated at the subject (not vertebral) level. Careful consideration is needed to minimize false positives and the associated unnecessary workup, cost, effort, and stress.

One strategy for combining vertebral fracture scores into a binary subject-level output (zero versus one or more vertebral fractures) is to threshold the predicted fracture probabilities and then aggregate all vertebrae for a subject. The drawback of this approach is that the predictive accuracy established for a single vertebral model could be decreased in the subject-level model, because multiple outputs are combined into a summary prediction. For example, if we consider a ten-vertebrae model with an initial fracture model that controlled the false-positive rate at 1%, then using a rule of “any fractures” across the ten vertebrae would at best preserve the 1% error rate if outcomes are highly correlated across individual vertebra, or it could inflate the subject-level false-positive rate to nearly 10% if false-positive predictions across multiple vertebrae were independent (Supplementary Information Fig. SI1). Therefore, a subject-level analysis is needed to quantify and control the subject-level error rate to minimize false positives.

With the demographic data available for the MrOS subjects, separate vertebral fracture scores can be combined into subject-level fracture predictions. For clinical use, we focused on two objectives: (1) comparison of vertebral fracture score modeling strategies for subject-level fracture prediction and (2) incremental predictive value of readily available demographic covariates.

Methods

Data

The MrOS study, a prospective longitudinal cohort study, began in 2000 [11,12,13]. Six US academic medical centers contributed data under local IRB approval with written consent. A total of 5994 males, 65 and older, contributed clinical, laboratory, and imaging data at an initial (Visit 1) and follow-up visit (Visit 2), 4.5 years apart on average. Since there are roughly two visits for every subject, there are approximately double the number of vertebral patches compared to vertebral bodies. Covariates (age, ethnicity) were self-reported and collected at baseline. Summary data and demographics for the 669 subjects in the randomly selected test dataset (from MrOS) used in this study are shown in Table 1. The binarized form of classification used is Class 0 defined as Genant SQ class 0, Class 1 everything else.

This secondary analysis uses individual vertebral fracture scores for the randomly selected 15% held-out test set from a deep learning (GoogLeNet) model described in a separate body of work [10, 14]. That vertebral model was trained and validated on the segmented thoracic and lumbar lateral radiographs from the other 85% of MrOS subjects with transfer learning from ImageNet [15], where training data was subsampled to enrich the ratio of class 0 to class 1 vertebrae to 2.5:1. Each radiograph was converted to a 16-bit image and, if necessary, flipped so the subject faced left. For each lateral spine radiograph, each vertebral body and a margin of surrounding pixels, often including the adjacent endplates, were extracted to create vertebral patches. The patches were resized to 224 × 224 pixels. Pixel intensities were normalized. The pre-processed vertebral patches were then used to train a GoogLeNet model using several techniques including image augmentation, transfer learning, Adam learning rate optimization, and early stopping. Hyper-parameters were tuned using a random search.

Vertebra above T4 and below L4 are excluded by MrOS, resulting in 13 usable levels. Ground truth for the presence of one or more fractures in a subject was defined by the maximum SQ annotation across these 13 vertebrae. Details on the Genant semiquantitative fracture classification system and the distribution of anatomic fractures across vertebral levels are in Fig. 1.

The Genant semiquantitative osteoporotic fracture classification system classifies osteoporotic vertebral compression fractures by the degree of height loss and the change in vertebral morphology with either loss of height anteriorly, centrally, or posteriorly. The degree of height loss is estimated visually and is complicated by the narrow range of height loss inherent to the different rows (20 to 25%, 25 to 40%, or > 40%). To focus on the clinically relevant endpoint of whether there is confidence that an osteoporotic fracture is present, this classification system is simplified into a binary classification system by grouping the moderate and severe deformities together (orange class 0: SQ 3, 4, 4.5, 5, 6, 7) and grouping the normal and mild deformity classes together (yellow class 1: SQ 0, 1, 2, 2.5). The frequency of different Genant class fractures (SQ 1, 2, 2.5, 3, 4, 5, 6, 7) is plotted by spinal anatomic level. A bimodal distribution of fractures can be seen centered around T12-L1 and T7. The division of anatomic levels into five distinct regions used in model development is demonstrated on the radiograph

Missing data

Due to technical or anatomic factors, 111 (0.73%) of the vertebrae did not have associated scores. To build subject-level models, missing vertebral fracture scores were imputed [16]. Multiple strategies were compared: random forests and either predictive mean matching (PMM) or Bayesian linear regression through multivariate imputation via chained equations (Supplementary Information Fig. SI2) [17,18,19].

Model classes

There are many ways to combine the individual vertebral scores into a subject-level prediction of fracture. To increase generalizability and interpretability, simpler methods with fewer inputs and less flexibility were attempted first.

We fit logistic regression (LR) and generalized additive models (GAMs) using selected inputs [20, 21]. That is, for each subject \(i\) and \(p\) inputs \({x}_{i1},\dots .,{x}_{ip}\), we fit models of the form:

where \(P\left({y}_{i}=1|{x}_{i1},\dots ,{x}_{ip}\right)\) is the conditional probability of any vertebral fracture given the inputs, \(g\left(\bullet \right)\) is the logit function, and \({f}_{1},\dots ,{f}_{p}\) are the nonlinear functions estimated using scatterplot smoothers. LR is the most common model for binary outcomes. GAMs are a popular extension, where the assumption that the log-odds are linear is replaced by the more general form above [22, 23]. By relaxing the linearity constraint, GAMs increase model flexibility and capture nonlinearities in the covariate effects. We focused on regression models because of the low dimensionality of the predictor space, which avoids overfitting and facilitates explainability and ease of implementation.

Model inputs

For inputs, we compared performance using the individual vertebral scores or their order statistics (the top k fracture scores at the vertebral level). Order statistics, like the maximum, are nonlinear functions of the individual scores. Interaction terms for neighboring anatomic levels were also modeled, since fractures can be subtle and adjacent intermediate predictions should increase the confidence of a fracture as multiple osteoporotic compression fractures typically are adjacent. Fracture adjacency is explored in Fig. 1 and Supplementary Information Fig. SI3.

The effect on model performance by incorporation of demographic covariates was explored. When working with medical imaging data, basic subject demographics are embedded within the DICOM metadata of the file. Thus, subjects’ age and gender are readily available. MrOS subjects are exclusively male, so age but not gender was explored as a covariate for prediction. The incidence of osteoporotic fractures has been shown to vary by race [24, 25]. Race/ethnicity was extracted (GIERACE) and tested as a predictive covariate.

Model assessment

This dataset is 15% of the original MrOS dataset and comprises 1176 radiographs on 669 subjects, with 83 subjects having one or more moderate to severe fractures. To fairly report model performance and maximize the limited data available, fivefold cross-validation was performed [26]. The dataset contains two sets of radiograph images (lumbar and thoracic radiographs from two visits for a total of four radiographs per subject) and demographic covariates for each subject. These measurements are not independent across visits. To perform fivefold cross-validation, radiographs were split into five “folds” or non-overlapping subsets by subject (Supplementary Information Fig. SI4), so measurements between the distinct subsets remained independent. Folds were stratified to ensure (1) class balance within the folds (similar rates of vertebral fracture in each subset) and (2) test sets represent the population as closely as possible. Models were then fit five times, holding out each subset (or fold) in turn as test data. Performance metrics were collected, and their means and standard errors were reported.

Several metrics were computed, but AUC-ROC was used for summary comparison. AUC-ROC was chosen, as it more completely describes a classification model’s performance and tradeoff between true-positive and false-positive rates, and is threshold- and scale-invariant. The AUC-ROC can be optimistic or misleading in settings with large class imbalances such as the one considered here, where about 12% of subjects had moderate to severe fractures. Therefore, both ROC and PR curves were calculated for models of interest. The PR curve, which considers the tradeoff between sensitivity and positive predictive value (PPV), is useful for “rare events,” as it can be more challenging to balance PPV and sensitivity appropriate for a given application of the model [27, 28]. Simply put, large differences in false positives result in more noticeable changes to the PR than the ROC curve.

Implementation

Imputation through random forests was generated with R package “missForest,” PMM, or Bayesian linear regression used “mice,” and missingness was simulated as missing completely at random using code from “R-miss-tastic” [X, X, X]. LR models were fit using the R “stats” package [X]. GAMs were built using the “gam” package [X]. Smoothing splines were fit with default parameters. ROC and PR curves were generated with the “precrec” package [X]. The code for this project is available as an R package on github.

Results

Imputation

The optimal imputation method was determined by evaluating the mean squared error on the complete data with simulated missingness (Supplementary Information Fig. SI2). PMM averaged over five imputations was selected and used to impute the small number of missing vertebral fracture scores.

Model performance

Table 2 provides an overview of our results for subject fracture prediction models using fivefold cross-validation, with multiple classification metrics listed. We report the mean and standard errors of the AUC-ROC, AUC-PR, precision or PPV, sensitivity (recall/true-positive rate), specificity, and model accuracy. In addition, the same AUC-ROC for all models across each of the fivefolds is shown to provide full transparency and capture test performance. Figures 2, 3, and 4 afford additional insights into model performance by comparing ROC and PR curves between models of interest.

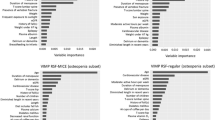

Average ROC and PR curves for LR models using as input fracture scores from all vertebrae, the maximum vertebral fracture score, all vertebral fracture scores ranked, and the maximum of each vertebral region (as described in Fig. 1). Overall, we can see the best performance was achieved with the maximum vertebral score as input, which had better false-positive rates (FPR = 1-specificity) and precision across all levels of sensitivity

The average ROC and PR curves for the top models of both classes, LR with maximum vertebral body score and GAM with the top three maximum vertebral body scores plus age as inputs. The ROC curves are nearly indistinguishable, but slight performance differences are more noticeable on the PR curve. While the GAM was the top model in ROC-AUC, the LR with only the maximum score is worth considering for its simplicity and interpretability

ROC and PR curves for the fivefolds of the top model, a GAM using the top three maximum vertebral body fracture scores and age as input. We used fivefold cross-validation to better assess model performance, as we see here predictive performance is relatively consistent across the folds for the top model

Model inputs determined performance more than model class (LR or GAM). The increased complexity of the GAMs allowed them to match the AUCs of the top LR models, but this flexibility resulted in lower AUCs for the less informative inputs (Table 2).

The baseline model using all vertebral fracture scores as input achieved an average AUC-ROC of 0.908 with LR and 0.816 with a GAM and AUC-PR of 0.672 and 0.615, respectively. Summary statistics of those same inputs proved even more informative; using only the maximum vertebral fracture score increased the AUC-ROC to 0.966 (LR) and 0.965 (GAM) and AUC-PR to 0.859 and 0.857, respectively. Adding the next one to two highest vertebral fracture scores resulted in comparably high-performing GAMs, but adding additional order statistics beyond k = 3 showed diminishing returns. In both model classes, using all ranked vertebral body fracture predictions outperformed the baseline, but did not match models using fewer order statistics as seen in Table 2 and Fig. 2. The maximum fracture score of each vertebral region (upper thoracic, mid thoracic, lower thoracic, thoraco-lumbar junction, and lower lumbar) was also a more informative input than the baseline of all vertebral predictions, but, in LR models, was less informative than order statistics that ignored vertebral location. Specifically including vertebral location information (in the form of an indicator variable for the anatomic level of the maximum vertebral body fracture score) decreased model performance, dropping the AUC-ROC from 0.966 to 0.923. This demonstrated that the rank of the vertebral fracture predictions may be more important than the specific vertebral location.

Although the models based on all individual vertebral fracture scores were not the highest performing, incorporating interaction terms for neighboring vertebral pairs into our linear model showed minor improvements above the baseline input of all vertebra, increasing the AUC-ROC from 0.908 to 0.909 and AUC-PR from 0.672 to 0.695 (curves attached in Supplementary Information Fig. SI5 and SI6). Likewise, models based on top maximum vertebral scores, including interactions between maximum scores, produced improvement in AUC-PR but not in AUC-ROC. In the LR model using the top three maximum vertebral fracture scores including interaction terms between maxima, AUC-ROC dropped slightly from 0.960 to 0.958 but brought up AUC-PR from 0.792 to 0.828. The improvement can be most easily seen on the PR curve in Supplementary Information Fig. SI6.

Demographic covariates (age and race/ethnicity) were incorporated into multiple models. In both model classes with all vertebrae as input or in GAMs with multiple maximum vertebral body scores, adding age improved AUC-ROCs slightly but also decreased AUC-PR. While the top-performing model included age as an input, the additional benefit of including age was minimal. Incorporating race/ethnicity decreased performance in both model classes. As the dataset is composed exclusively of males over the age of 65, and mostly white, these covariates may be more informative in other populations.

Top models

The results demonstrate high performance using the top-ranked predicted vertebral body scores, with the maximum vertebral body score producing one of the best models of either class in terms of the largest average area under the receiver operating characteristic or precision-recall curves (AUC-ROC or AUC-PR). The highest AUC-ROC overall was a GAM with the top three maximum predictions and age as inputs, and the performance of the GAMs using the top one to three maximum vertebral bodies with or without age all achieved similar predictive ability. For both model classes, the ranked vertebral body predictions or the maximum prediction by region as inputs outperformed all vertebrae, but were notably lower than the maximum.

The highest average AUC-ROC (0.968) was achieved by the GAM with the top three maximum vertebral fracture scores and age as inputs (Fig. 3). The experimental results for this model across all fivefolds are shown in Fig. 4 and demonstrated similar performance across all test sets. We saw almost identical AUC-ROCs in the GAMs with the highest one to three maximum vertebral fracture scores, either with or without age as an input, and LR with the single maximum vertebra. This is the best-case scenario discussed above, where misclassification error rates are controlled at the subject-level models to nearly match the performance of the original vertebral body fracture prediction model. ROC and especially PR curves are useful here to evaluate or select the best models, as many of the top models achieved nearly identical ROCs but have distinguishable PR curves. This is most notable in the models using top-ranked vertebral fracture scores, where the addition of interaction terms in the LR models showed improved PR curves while age in the GAMs did not. In clinical settings, the ROC and PR curves can also help to determine classification thresholds to achieve desirable error rates.

Discussion

Compared to other medical AI models, we achieved a high predictive performance (AUC-ROC) of 0.97 at the subject level compared to the vertebral level of 0.99 for the use case of osteoporotic vertebral fracture screening. While the upstream vertebral model is highly accurate, baseline subject models simply combining those vertebral scores proved less so (AUC-ROC 0.91). a problem not solved with increased model complexity (AUC-ROC 0.82). The decreased performance of the baseline subject-level classifier compared to the vertebral level demonstrates the concern described in the introduction and a motivation for this work. Simply combining multiple model outputs, even from highly predictive models, propagates error.

Key experimental takeaways are as follows: (1) Using maximal vertebral fracture scores produced superior predictive performance with good subject-level error control, (2) additional performance gains in the GAMs were provided by using the top three vertebral fracture scores, although (3) simple LR using only maximum score performed comparably, and (4) incorporating age and ethnicity had minimal impact.

The improved performance of the maximal vertebral fracture scores compared to using all vertebrae is unsurprising, as a practitioner diagnosing fracture in an individual subject would weigh more heavily the information gained from the most abnormal vertebra for that case. This also simplifies the application and development of the model, as imputation for missing vertebra can be avoided.

Age may have a role in predicting fracture, as experience and data would confirm, but the relationship may be nonlinear and was not particularly strong here after accounting for the maximum vertebral score. The predictive utility of demographic covariates, however, was not adequately evaluated in this study because of the limited diversity of the MrOS dataset (age > 65, male, mostly white). Additional MrOS covariates were not used in model development because clinical data has a paucity of readily available, reliable, high-quality covariates.

Strengths and limitations

This study has additional limitations. The use of multicenter data from a prior clinical trial may not generalize well due to differences in clinical and research imaging techniques, or in acquired images due to new modalities or imaging hardware. Clinical acquisitions are also performed in a variety of settings including inpatient and emergency departments where patients suffering acute illness are less able to cooperate with instructions and there can be superimposed instruments and objects, which were rare in MrOS data. The lack of publicly annotated spinal radiograph datasets limited the scope of this work, but future work could further evaluate performance and demographic predictors on additional, external datasets.

This work targeted moderate to severe fractures, which means that mild fractures may be missed. However, it should be noted that there is often poor clinical agreement on mild fractures and the severity of the fracture does not necessarily correlate with bone mineral density.

We focused on AUC-ROC for model evaluation, but recognize that model selection and thresholding are part of decision-making, which is outside the context of this paper and should incorporate specific population and domain information. For example, partial AUCs may be more clinically useful for model selection by thresholding false-positive rates, and the simplicity and ease of interpretability of LR may be worth minor performance decreases. In the prior vertebral fracture model, metrics were calculated with thresholds to balance PPV and specificity. This is appropriate in a setting where sample prevalence resembles the population and the focus is controlling the false discovery rate. Using the same strategy, the subject model here resulted in 0.624 sensitivity, 0.994 specificity, 0.94 PPV, and 0.955 NPV. Creating downstream models that rely on outputs from other models runs the risk of propagating errors. In the worst case, they may be additive when aggregating multiple sources of error. That is, even with an extremely accurate vertebral body fracture prediction model as input, the subject-level models may not result in comparable performance. Additionally, bias may be introduced upstream, for example subsampling to enrich the ratio of fracture in the training procedure for vertebral fracture scores, which could affect generalizability. To quantify performance most accurately, we used a 15% (unenriched) held-out test dataset, which by necessity limits the data size. Extensions of this work could quantify the subject model improvement with better vertebral body models. These methods of subject-level modeling can also be extended, incorporating additional structured or unstructured data sources like electronic medical records or using outputs from multiple models as a form of ensembling.

This project’s fundamental approach generalizes well in various healthcare AI settings. While many published projects perform a task on a complex piece of data (entire radiograph, entire CT scan, etc.), we show that by breaking the task into smaller units (vertebral patch classification) and achieving good performance on each subcomponent, you can further improve performance by thoughtfully combining predictions for a clinical task. By taking the maximum predicted value, we minimize error from our individual predictions and also facilitate explainable AI results by knowing the region of the radiograph responsible for the prediction of fracture. Supplementary Fig. SI7 shows how the output from this work could be displayed in a manner to facilitate easy interpretation by providers. Future work tying all components together by incorporating an external dataset and decision-making can further quantify pipeline performance, as well as provide insight into model failure cases.

Deep learning in medical imaging is in a nascent state. As the complexity of models and multi-model pipelines grows to solve more difficult image analysis problems, there needs to be nuanced manipulation and a combination of model outputs and covariates to reach useful clinical endpoints. The task of classifying spinal radiographs for osteoporotic compression fracture is challenging even for humans to attempt in a single classification step, and the performance may not be good enough for screening large populations [29]. With thoughtful incorporation of model inputs and covariates, careful analysis, and some clinical insight, error rates for individual steps can be mitigated and overall performance improved, as demonstrated here.

Conclusion

Using the outputs from a deep learning fracture classification of individual vertebral bodies from radiographs and basic demographic data, multiple methods for modeling patient-level outcomes were tested to find the most performant mechanism while keeping errors small. The best strategy demonstrates high performance by classifying patients with fractures. This research draws attention to one of the challenges and mitigation strategies for future clinical AI, where researchers wanting to improve the performance of predictive models break complex predictions into smaller tasks and downstream models must minimize error propagation while using smaller portions of reserved testing data.

Data availability

Data is available through collaboration and data use agreement, please contact authors.

Abbreviations

- AUC:

-

Area under the curve

- GAM:

-

Generalized additive model

- LR:

-

Logistic regression

- PPV:

-

Positive predictive value

- PR:

-

Precision recall

- PMM:

-

Predictive mean matching

- ROC:

-

Receiver operating characteristic

References

Meadows ES, Whangbo A, McQuarrie N, Gilra N, Mitchell BD, Mershon JL (2011) Compliance with mammography and bone mineral density screening in women at least 50 years old. Menopause 18(7):794–801. https://doi.org/10.1097/gme.0b013e3182083f28

Center JR, Nguyen TV, Schneider D, Sambrook PN, Eisman JA (1999) Mortality after all major types of osteoporotic fracture in men and women: an observational study. Lancet 353(9156):878–882. https://doi.org/10.1016/S0140-6736(98)09075-8

Guzon-Illescas O, Perez Fernandez E, Crespi Villarias N, Quiros Donate FJ, Pena M, Alonso-Blas C, Garcia-Vadillo A, Mazzucchelli R (2019) Mortality after osteoporotic hip fracture: incidence, trends, and associated factors. J Orthop Surg Res 14(1):203. https://doi.org/10.1186/s13018-019-1226-6

Pickhardt PJ, Pooler BD, Lauder T, del Rio AM, Bruce RJ, Binkley N (2013) Opportunistic screening for osteoporosis using abdominal computed tomography scans obtained for other indications. Ann Intern Med 158(8):588–595. https://doi.org/10.7326/0003-4819-158-8-201304160-00003

Carberry GA, Pooler BD, Binkley N, Lauder TB, Bruce RJ, Pickhardt PJ (2013) Unreported vertebral body compression fractures at abdominal multidetector CT. Radiology 268(1):120–126. https://doi.org/10.1148/radiol.13121632

Kanis JA, Johnell O, Odén A, Johansson H, McCloskey EF (2008) FRAX™ and the assessment of fracture probability in men and women from the UK. Osteoporos Int 19:385–397

Sebro R, De la Garza-Ramos C (2022) Machine learning for the prediction of osteopenia/osteoporosis using the CT attenuation of multiple osseous sites from chest CT. Eur J Radiol 1(155):110474

Lee SJ, Binkley N, Lubner MG, Bruce RJ, Ziemlewicz TJ, Pickhardt PJ (2016) Opportunistic screening for osteoporosis using the sagittal reconstruction from routine abdominal CT for combined assessment of vertebral fractures and density. Osteoporos Int 27:1131–1136

Genant HK, Wu CY, van Kuijk C, Nevitt MC (1993) Vertebral fracture assessment using a semiquantitative technique. J Bone Miner Res 8(9):1137–1148. https://doi.org/10.1002/jbmr.5650080915

Dong Q, Luo G, Lane NE, Lui LY, Marshall LM, Kado DM, Cawthon P, Perry J, Johnston SK, Haynor D, Jarvik JG (2022) Deep learning classification of spinal osteoporotic compression fractures on radiographs using an adaptation of the Genant semiquantitative criteria. Acad Radiol 29(12):1819–1832

Cawthon PM, Haslam J, Fullman R, Peters KW, Black D, Ensrud KE, Cummings SR, Orwoll ES, Barrett-Connor E, Marshall L, Steiger P, Schousboe JT, Osteoporotic Fractures in Men Research G (2014) Methods and reliability of radiographic vertebral fracture detection in older men: the osteoporotic fractures in men study. Bone 67:152–155. https://doi.org/10.1016/j.bone.2014.06.039

Orwoll E, Blank JB, Barrett-Connor E, Cauley J, Cummings S, Ensrud K, Lewis C, Cawthon PM, Marcus R, Marshall LM, McGowan J, Phipps K, Sherman S, Stefanick ML, Stone K (2005) Design and baseline characteristics of the osteoporotic fractures in men (MrOS) study–a large observational study of the determinants of fracture in older men. Contemp Clin Trials 26(5):569–585. https://doi.org/10.1016/j.cct.2005.05.006

Blank JB, Cawthon PM, Carrion-Petersen ML, Harper L, Johnson JP, Mitson E, Delay RR (2005) Overview of recruitment for the osteoporotic fractures in men study (MrOS). Contemp Clin Trials 26(5):557–568

Szegedy C, Liu W, Jia Y, Sermanet P, Reed S, Anguelov D, Erhan D, Vanhoucke V, Rabinovich A (2015) Going deeper with convolutions. Proceedings of the IEEE conference on computer vision and pattern recognition 1–9. https://doi.org/10.1109/CVPR.2015.7298594

Deng J, Dong W, Socher R, Li L-J, Li K, Fei-Fei L (2009) Imagenet: a large-scale hierarchical image database. In: 2009 IEEE conference on computer vision and pattern recognition 248–55

Mayer I, Sportisse A, Josse J, Tierney N, Vialaneix N (2019) R-miss-tastic: a unified platform for missing values methods and workflows. arXiv preprint arXiv:1908.04822

Donders AR, Van Der Heijden GJ, Stijnen T, Moons KG (2006) A gentle introduction to imputation of missing values. J Clin Epidemiol 59(10):1087–1091

Stekhoven DJ, Bühlmann P (2012) MissForest—non-parametric missing value imputation for mixed-type data. Bioinformatics 28(1):112–118. https://doi.org/10.1093/bioinformatics/btr597

van Buuren S, Groothuis-Oudshoorn K (2011) Mice: multivariate imputation by chained equations in R. J Stat Softw 45(3):1–67. https://doi.org/10.18637/jss.v045.i03

R Core Team (2021) R: a language and environment for statistical computing (Version 4.1.1). R Foundation for Statistical Computing, Vienna, Austria. https://www.R-project.org/

Hastie T(2020) GAM: generalized additive models (Version 1.20). R package. https://cran.r-project.org/web/packages/gam/gam.pdf1

Hastie T, Tibshirani R (1995) Generalized additive models for medical research. Stat Methods Med Res 4(3):187–196. https://doi.org/10.1177/096228029500400302

Hastie TJ, Tibshirani R (1990) Generalized Additive Models. 1st ed. Boca Raton: Routledge 352

Bow CH, Cheung E, Cheung CL, Xiao SM, Loong C, Soong C, Tan KC, Luckey MM, Cauley JA, Fujiwara S, Kung AW (2012) Ethnic difference of clinical vertebral fracture risk. Osteoporos Int 23(3):879–885. https://doi.org/10.1007/s00198-011-1627-9

Cauley JA, Chalhoub D, Kassem AM, Fuleihan GH (2014) Geographic and ethnic disparities in osteoporotic fractures. Nat Rev Endocrinol 10(6):338–351. https://doi.org/10.1038/nrendo.2014.51

Kohavi R (1995) A study of cross-validation and bootstrap for accuracy estimation and model selection. InIjcai 14(2):1137–1145

Saito T, Rehmsmeier M (2021) Precrec: calculate accurate precision-recall and ROC (receiver operator characteristics) curves (Version 0.12.7). R package. https://cran.r-project.org/web/packages/precrec/precrec.pdf

Davis J, Goadrich M (2006) The relationship between Precision-Recall and ROC curves. Proceedings of the 23rd international conference on machine learning. Pittsburgh, Pennsylvania, USA: Association for Computing Machinery 233–240. https://doi.org/10.1145/1143844.1143874

Murata K, Endo K, Aihara T, Suzuki H, Sawaji Y, Matsuoka Y, Nishimura H, Takamatsu T, Konishi T, Maekawa A, Yamauchi H, Kanazawa K, Endo H, Tsuji H, Inoue S, Fukushima N, Kikuchi H, Sato H, Yamamoto K (2020) Artificial intelligence for the detection of vertebral fractures on plain spinal radiography. Sci Rep 10(1):20031. https://doi.org/10.1038/s41598-020-76866-w

Acknowledgements

Lingga Adidharma

Funding

Research reported in this publication was supported by the University of Washington Clinical Learning, Evidence and Research (CLEAR) Center for Musculoskeletal Disorders Methodological Core, and the National Institute of Arthritis and Musculoskeletal and Skin Diseases (NIAMS)/NIH P30AR072572. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Institutes of Health. Research is supported in part by the GE-Association of University Radiologists Radiology Research Academic Fellowship (GERRAF), which is a career development award co-sponsored by GE Healthcare and the Association of University Radiologists. The Osteoporotic Fractures in Men (MrOS) Study is supported by the National Institutes of Health funding. The following institutes provide support: the National Institute on Aging (NIA), the National Institute of Arthritis and Musculoskeletal and Skin Diseases (NIAMS), the National Center for Advancing Translational Sciences (NCATS), and NIH Roadmap for Medical Research under the following grant numbers: U01 AG027810, U01 AG042124, U01 AG042139, U01 AG042140, U01 AG042143, U01 AG042145, U01 AG042168, U01 AR066160, R01 AG066671, and UL1 TR002369. Research and results reported in this publication were partially facilitated by the generous contribution of computational resources from the Department of Radiology of the University of Washington.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

Nathan M. Cross MD MS reports financial support provided by the GE-Association of University Radiologists Radiology Research Academic Fellowship (GERRAF).

Jeffrey G. Jarvik MD MPH reports Springer Publishing: Royalties as a book co-editor; GE Healthcare for the GE-Association of University Radiologists Radiology Research Academic Fellowship (GERRAF): Travel reimbursement for Faculty Board of Review; Wolters Kluwer/UpToDate: Royalties as a chapter author.

Jessica Perry MS, Qifei Dong MS, Gang Luo PhD, Sandra K Johnston PhD RN, David R Haynor MD PhD, and Patrick J. Heagerty PhD report support from the University of Washington Clinical Learning, Evidence and Research (CLEAR) Center for Musculoskeletal Disorders Methodological Core and the National Institute of Arthritis and Musculoskeletal and Skin Diseases (NIAMS)/NIH P30AR072572.

Jonathan Renslo was supported by institutional funding through the Dean’s Research Scholars program at the Keck School of Medicine at the University of Southern California.

Brian C. Chang MD reports grants from the National Library of Medicine training grant T15LM007442 during the conduct of the study.

Nancy E. Lane MD reports financial support from NIH/NIA and NIH/NIAMS during the conduct of the study.

Lynn Marshall ScD declares that she has no conflict of interest.

Nathan Cross, Jeffrey Jarvik, David Haynor, Gang Luo, Sandra Johnston, Qifei Dong, Jonathan Renslo, Brian Chang, and Jessica Perry have patent #AUTOMATED OSTEOPOROTIC FRACTURE SCREENING PIPELINE FOR RADIOGRAPHS 63/463,823 pending to the University of Washington.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Nathan M. Cross and Jessica Perry are co-first authors.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Cross, N.M., Perry, J., Dong, Q. et al. Subject-level spinal osteoporotic fracture prediction combining deep learning vertebral outputs and limited demographic data. Arch Osteoporos 19, 87 (2024). https://doi.org/10.1007/s11657-024-01433-z

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11657-024-01433-z