Abstract

The purpose of this study was (a) to develop an instructional design model for preservice teachers’ learning of technological pedagogical content knowledge (TPACK) in multidisciplinary technology integration courses and (b) to apply the model and investigate its effects in a preservice teacher education setting. The model was applied in a technology integration course with fifteen participants from diverse majors. Data included individual participants’ written materials and TPACK survey responses, group lesson plans, and the researchers’ field notes. The data analysis revealed that (1) the participants had difficulty understanding pedagogical knowledge (PK), which hindered their learning of the integrated knowledge of TPACK, and (2) their learning of TPACK reflected a combination rather than an integration of PK, technological knowledge, and content knowledge. Suggestions for refining the model, implications, and possibilities for future research are discussed.

Introduction

Many argue that the use of technology is a promising way to enhance effective teaching and learning (Sandholtz et al. 1997; Voogt et al. 2009; Williams et al. 2004). Educational associations have acknowledged the importance of technology and set forth standards for the use of technology to enhance teaching and learning (e.g., Association for Educational Communications and Technology 2012; International Society for Technology in Education 2008; National Science Teachers Association 2003). However, research shows that technology-equipped classrooms do not always lead to effective applications of technology (Kim et al. 2013; Polly et al. 2010b). For instance, many teachers use interactive whiteboards to project the content of a lesson without interacting with students (Hall 2010). Even when technology is used in teaching, some teachers tend to have students use technology for low-level searches rather than for inquiry-based learning activities (Kim et al. 2007). Technology is often considered an add-on instrument rather than a critical element integrated into teaching activities (Davis and Falba 2002; Jimoyiannis 2010).

The limited use of technology for teaching (e.g., as a mere tool for content presentation or classroom management), as opposed to effective technology integration for learning (e.g., as a tool that facilitates students’ inquiry-based learning), has been attributed to numerous factors, such as teachers’ inadequate pedagogical beliefs (Ertmer 2005; Kim et al. 2013), their lack of motivation and volition (Kim and Keller 2011), and low teacher efficacy (Tschannen-Moran and Woolfolk Hoy 2001). Recently, many researchers have turned their attention to teacher knowledge (Hew and Brush 2007; Koehler and Mishra 2009). Specifically, they are considering not just technological knowledge but the integrative knowledge that effective technology integration requires. Recent research indicates that teachers’ lack of content knowledge, content-supported pedagogical knowledge, and knowledge of technology integration leads to poor use of technology in education (Kim et al. 2007; Polly et al. 2010a, b). It has also been acknowledged that teacher training programs should give teachers the opportunity to develop integrated knowledge of subject matter, technology, and pedagogy (Niess 2005; Polly et al. 2010a).

Along these lines, researchers have attempted to provide a theoretical foundation that highlights the need for the integrated development of teacher knowledge for technology integration: Technological Pedagogical Content Knowledge (TPCK; Mishra and Koehler 2006). The framework, later renamed TPACK for easier pronunciation (Thompson and Mishra 2007), is designed to facilitate teachers’ understanding of how to use technology constructively to support students’ learning. Within the framework, teachers’ professional development in technology integration should go beyond technology alone and emphasize the integration of technology, pedagogy, and content (Koehler and Mishra 2009; Mishra and Koehler 2006).

The learning by design approach has been proposed as a way to develop teachers’ TPACK (Koehler and Mishra 2005; Koehler et al. 2007). In Koehler et al. (2007), university faculty members and graduate students worked together to design technology-infused programs to be taught in the following years. Koehler and Mishra (2005) described this small-group design and collaboration process as an approach to TPACK learning:

The Learning by Design approach requires teachers to navigate the necessarily complex interplay between tools, artifacts, individuals and contexts. This allows teachers to explore the ill-structured domain of educational technology and develop flexible ways of thinking about technology, design and learning and, thus, develop Technological Pedagogical Content Knowledge. (p. 25).

Several studies have drawn on a design-based approach to improve preservice teachers’ TPACK or technology integration in specific subject areas (Angeli 2005; Angeli and Valanides 2005; Jang and Chen 2010; Jimoyiannis 2010). However, according to the National Center for Education Statistics (2008), around 51% of teacher education programs offer three- or four-credit stand-alone (Footnote 1) educational technology courses in which student teachers from diverse majors learn to use technologies in teaching. In other words, a large percentage of teacher training programs do not offer technology courses tailored to teaching particular content (e.g., technology for mathematics teaching). Instructors of such multidisciplinary technology integration courses therefore need guidelines for using the learning by design approach so that preservice teachers from diverse majors can learn TPACK.

An instructional design (ID) model that builds on the TPACK framework and integrates the learning by design approach can be useful in promoting preservice teachers’ TPACK. Because an ID model offers explicit and systematic directions for instructors (Gustafson and Branch 2002), it could amplify the effectiveness of the learning by design approach and promote TPACK learning. Thus, the current study was conducted to (a) develop an ID model for preservice teachers’ learning of TPACK in multidisciplinary technology integration courses, (b) apply the model to investigate its effects in an actual preservice teacher training setting, and (c) identify ways to improve the model. The following research questions guided the study:

1. What are the effects of the initial TPACK-based ID model on preservice teachers’ TPACK?

2. How do the results of the initial TPACK-based ID model inform the revision of the model?

The case study approach was applied to address Research Question 1, and the analysis of the findings from the case study was used to address Research Question 2. In the following sections, we first introduce the theoretical foundations of the study and the TPACK-based ID model developed from them. We then report on a study in which the model was implemented.

Developing a TPACK-based ID model for multidisciplinary technology integration courses

This study was grounded in the TPACK framework, the learning by design approach, and ID models. A few studies have used ID models to teach TPACK in specific subject areas such as earth science and mathematics (Angeli 2005; Angeli and Valanides 2005; Jang and Chen 2010; Jimoyiannis 2010; Polly et al. 2010a). However, the use of ID models to teach TPACK in multidisciplinary technology integration courses has rarely been studied. Thus, in this study, we developed an ID model that can be used to teach TPACK in a multidisciplinary technology integration course. To do so, we reviewed existing ID models (Angeli 2005; Angeli and Valanides 2005; Jang and Chen 2010), synthesized their components, and integrated TPACK and the learning by design approach into the resulting model.

The theoretical foundation

TPACK

TPACK is a framework that describes the interplay of three knowledge bases: content, pedagogy, and technology (Mishra and Koehler 2006). The interplay of these bases yields seven types of knowledge: content knowledge (CK), pedagogical knowledge (PK), technological knowledge (TK), pedagogical content knowledge (PCK), technological content knowledge (TCK), technological pedagogical knowledge (TPK), and technological pedagogical content knowledge (TPCK). Teachers with TPACK understand how to apply suitable technologies to teach specific content with appropriate pedagogy (Mishra and Koehler 2006).
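The count of seven follows from taking every non-empty combination of the three knowledge bases, that is, 2³ − 1 = 7. The short Python sketch below is illustrative only: it enumerates those combinations, and the single-letter labels and the function name `tpack_domains` are our own shorthand, not part of the framework.

```python
from itertools import combinations

# The three base knowledge components of the TPACK framework
# (single-letter labels are illustrative shorthand).
BASES = ("T", "P", "C")  # technological, pedagogical, content


def tpack_domains():
    """Return every non-empty combination of the bases as a domain label.

    Letters keep the order T, P, C and "K" (knowledge) is appended,
    e.g. ("T", "C") -> "TCK".
    """
    domains = []
    for size in range(1, len(BASES) + 1):
        for combo in combinations(BASES, size):
            domains.append("".join(combo) + "K")
    return domains


if __name__ == "__main__":
    print(tpack_domains())  # ['TK', 'PK', 'CK', 'TPK', 'TCK', 'PCK', 'TPCK']
```

The three single-base domains (TK, PK, CK) are the ones the model later treats as prerequisites; the remaining four are the integrated domains.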

The learning by design approach

Design is a process of solving complex, ill-structured problems (Jonassen 2008). Such problems involve a series of cognitive tasks that require designers to identify and analyze problems, explore and evaluate solutions, and make decisions (Jonassen 2008). The learning by design approach allows teachers to take the role of designers of learning activities (Kalantzis and Cope 2005; Yoon et al. 2006). This approach has been used to teach teachers how to integrate digital technologies into the classroom (e.g., Kalantzis and Cope 2005). Through learning by design, teachers’ pedagogical repertoires can be expanded (Güler and Altun 2010; Hjalmarson and Diefes-Dux 2008; Koehler and Mishra 2005). During the design process, teachers engage in an authentic environment and experience the complexity of learning and teaching contexts. For example, in Koehler and Mishra (2005), when faculty members and their graduate students designed an online course, they had to grapple with the complexity of online teaching, especially the complexity of integrating technology, pedagogy, and content in an online context. The learning by design approach also facilitates learning through collaboration that supports learner-centered activities. In Jimoyiannis (2010), when teachers in a professional development workshop worked in small groups to design technology-integrated lessons, the workshop instructor rarely provided direct instruction; instead, teachers’ discussions with the instructor and with other teachers were emphasized.

The advantages of using the learning by design approach can be summarized as follows: (a) it responds to the call for educational innovation concerning new approaches for students to learn in a digital environment (Kalantzis and Cope 2005); (b) it promotes teachers’ professional development by allowing them to create artifacts for students’ learning needs (Bers et al. 2002; Güler and Altun 2010; Hjalmarson and Diefes-Dux 2008; Kalantzis and Cope 2005); (c) it creates an authentic learning environment for teachers to experience the complexity of learning and teaching (Koehler and Mishra 2005; Koehler et al. 2007); and (d) it encourages collaborative work between researchers and teachers and among teachers (Jimoyiannis 2010).

ID models for technology integration

Whereas instructional design (ID) is a set of systematic procedures for developing instructional programs, an ID model describes how to carry out these procedures (Gustafson and Branch 2002). Many ID models have been developed for different purposes, but they are usually too generic to provide explicit guidance for teaching technology integration (Angeli and Valanides 2005). Gustafson and Branch (2002) classified ID models into three types: classroom, product, and system. Classroom models are designed with teachers’ classroom environments in mind. In 2011, when developing the TPACK-based ID model described in the current study, we searched educational databases such as EBSCO, ERIC, and PsycINFO for classroom-type ID models. We found only three studies (i.e., Angeli 2005; Angeli and Valanides 2005; Jang and Chen 2010) in which an ID model was applied to improve preservice teachers’ technology integration, and each was conducted in a science teaching context. Thus, as of 2011, we found no evidence of an existing ID model for a multidisciplinary technology integration course. To provide systematic teaching procedures for such courses, we analyzed the three ID models (Angeli 2005; Angeli and Valanides 2005; Jang and Chen 2010) to synthesize the elements of each that are critical for teaching technology integration. We also drew on the characteristics of a traditional ID model consisting of analysis, design, development, implementation, and evaluation elements. In this section, we first review the three ID models used to support preservice science teachers’ technology integration: Angeli’s (2005) ID model, Angeli and Valanides’ (2005) ID model, and Jang and Chen’s (2010) TPACK-COPR model. We then synthesize the characteristics of the three models and describe how we revised their elements to meet the needs of a multidisciplinary technology integration course.
Table 1 summarizes the comparison of the three ID models in terms of theoretical frameworks and model elements, and it specifies their similarities and the adjustments made in developing our model.

Angeli’s (2005) ID model and Angeli and Valanides’ (2005) ID model were both grounded in an ID model framework and PCK (Shulman 1987) but have different foci. Angeli’s (2005) ID model specifies practical steps for applying technology in teaching, whereas Angeli and Valanides’ (2005) ID model offers conceptual guidance focused on theoretical principles. Specifically, Angeli’s (2005) ID model was built on an expanded view of PCK, in which teachers’ understanding of pedagogy should include technology so as to provide digital support for transforming content. Based on this expanded view of PCK, Angeli presented a nine-stage ID model that guided preservice teachers in developing technology-integrated lesson plans (see Table 1). Before implementing the model, she suggested that instructors model the use of technology and explain its pedagogical potential, that is, how the technology can represent particular learning content. In contrast, Angeli and Valanides’ (2005) ID model includes four instructional principles that consider both the individual and the contextual factors that can affect technology integration (see Table 1). In short, Angeli’s (2005) ID model specifies explicit instructional stages, whereas Angeli and Valanides’ (2005) ID model focuses on the conceptual elements that designers should consider when supporting teachers’ technology integration.

These two models were applied only in science education courses. Because technology and pedagogy are selected according to the content being taught (Mishra and Koehler 2006), we modified elements of the two ID models so that they were not science-specific. Two adjustments were made to develop an ID model for teaching TPACK in multidisciplinary technology integration courses. First, a stage called introducing TPACK was set as the first stage of the model, because preservice teachers need the underlying knowledge bases (e.g., TK, PK, and CK) before identifying and selecting suitable topics for technology integration. Second, more emphasis was placed on helping preservice teachers develop, implement, and revise educational technology products (the 3rd and 4th principles of Angeli and Valanides’ (2005) model) than on asking them to consider school contexts, previous classroom experiences, personal beliefs, and learners’ backgrounds (the 1st and 2nd principles). This was because preservice teachers’ lack of prior teaching experience would limit their understanding of how such contextual factors affect their teaching and student learning (Kagan 1992).

The third ID model reviewed is Jang and Chen’s (2010) TPACK-COPR model, which highlights four elements: Comprehension TPACK, Observation of instruction, Practice of instruction, and Reflection on TPACK. In this model, the first element, comprehension, provides teachers with a theoretical foundation before they engage in the practical activities of the later stages; this aligns with introducing TPACK, the stage we included as the first stage of the model in this study. However, in the TPACK-COPR model, the TPACK learning process ends with the reflection stage. In Jang and Chen’s (2010) study, preservice teachers were not required to revise their lesson plans after the practice and reflection stages. Yet the revision process promotes the refinement of a lesson plan and a digital design, facilitates another cycle of design-based activities (Fernández 2005, 2010), and helps preservice teachers carry their reflections into the revising activity so as to deepen their understanding of TPACK. Thus, we added a revision stage after practice and reflection to the model for the current study.

We also found that a crucial element of ID models, their iterative character, was not practiced when these three models were implemented, although all three models included an iterative feature. Participants in the implementation studies went through the process of the model only once. However, iterative design and practice are necessary to enhance preservice teachers’ learning of TPACK (Jimoyiannis 2010; Kalantzis and Cope 2005; Koehler and Mishra 2005).

The design guidelines that emerged from the review of the three ID models for teaching TPACK are summarized below. These guidelines were used to develop an ID model for a multidisciplinary technology integration course in the current study:

(1) Explicit, systematic procedures should be included in the ID model to provide practical solutions for teacher training programs to enhance preservice teachers’ TPACK.

(2) Stages to introduce the TPACK framework and to demonstrate TPACK examples should be included in the ID model to build preservice teachers’ knowledge base of technology integration and to prepare them to design technological artifacts for teaching.

(3) Design-based learning activities such as creating a lesson plan and associated digital artifacts should be included in the ID model to prompt preservice teachers to analyze the content and student learning needs.

(4) A cyclic design-based learning process should be included in the ID model to offer opportunities for preservice teachers to go through the design process more than once.

The TPACK-IDDIRR model

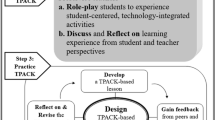

Based on the four design guidelines discussed above, we developed the TPACK-IDDIRR (Introduce, Demonstrate, Develop, Implement, Reflect, and Revise) model shown in Fig. 1. The IDDIRR model serves as a practical framework that embodies these design guidelines and illustrates procedures that can be used in a multidisciplinary technology integration course.

When the TPACK-IDDIRR model is applied in a multidisciplinary technology integration course, the instructor begins with the Introduce (I) stage to help preservice teachers understand TPACK (Jang and Chen 2010). The purpose of this stage is to build preservice teachers’ knowledge base of TPACK to support their learning in the later design activities. The instructor explains the meaning of the seven domains of TPACK and provides examples for each domain. However, this stage focuses mainly on familiarizing preservice teachers with CK, PK, and TK, because mastery of these three domains is the basis for an integrated understanding of TPACK (Footnote 2). In the second stage, the instructor demonstrates (D) a TPACK-based teaching example to preservice teachers, who are expected to enhance their understanding of TPACK by observing it (Bandura 1977; Jang and Chen 2010; Merrill 2007).

The next four stages, Develop, Implement, Reflect, and Revise, are carried out mainly by preservice teachers. These stages are iterative learning activities that together make up Learning TPACK by Design, as shown in Fig. 1 (Angeli 2005; Angeli and Valanides 2005; Fernández 2005, 2010; Jimoyiannis 2010). In the third stage, preservice teachers are divided into small groups, and each group develops (D) a TPACK-based lesson plan based on what they learned in the previous two stages. They are expected to encounter multifaceted difficulties, such as identifying suitable subject topics, selecting technological tools together with pedagogical methods, and foreseeing possible problems. In the fourth stage, a member of each group implements (I) the lesson while the session is videotaped. The other preservice teachers act as students and provide feedback to the member who teaches the lesson. Next, after reviewing the videotape, each group reflects (R) on the lesson and discusses its strengths and weaknesses. Finally, each group revises (R) the lesson plan based on this collective reflection. The next member of each group then implements (I) the revised lesson, and the group goes through the Reflect (R) and Revise (R) stages again. The IRR stages repeat until every member of each group has had a chance to implement the lesson (Fernández 2005, 2010). We set no criterion for which group member should implement first, although the order could be important, because our intention was to give students some autonomy in carrying out the required tasks.
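As a reading aid, the order of stages that one group experiences can be written down as a short Python sketch. The function `iddirr_sequence` and its parameter are hypothetical illustrations, not part of the model’s specification; `num_implementations` stands for the number of times the group implements the lesson (one per member in the model as described above).

```python
def iddirr_sequence(num_implementations):
    """Return the ordered stages one group goes through under IDDIRR.

    Introduce and Demonstrate (instructor-led) and Develop happen once;
    Implement-Reflect-Revise then repeats once per implementation.
    """
    if num_implementations < 1:
        raise ValueError("a group must implement the lesson at least once")
    stages = ["Introduce", "Demonstrate", "Develop"]
    for _ in range(num_implementations):
        # One IRR cycle: teach the (revised) lesson, reflect on it, revise it.
        stages += ["Implement", "Reflect", "Revise"]
    return stages


if __name__ == "__main__":
    for step, stage in enumerate(iddirr_sequence(3), start=1):
        print(step, stage)
```

With three implementations the sequence has 12 steps; the point of the sketch is simply that only the last three stages loop, while the first three are one-time stages.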

The systematic stages of IDDIRR address the first design guideline by providing practical procedures for learning TPACK. The Introduce and Demonstrate stages address the second design guideline by helping preservice teachers understand the TPACK concept. The Learning TPACK by Design activities (Develop, Implement, Reflect, and Revise) are design-based and are carried out iteratively, addressing the third and fourth design guidelines, respectively.

Implementation study

Methodology

The purposes of this study were not only to develop a TPACK-based ID model but also to apply the model and investigate how it could be used to improve preservice teachers’ TPACK. A case study approach was chosen because “case study is an in-depth exploration from multiple perspectives of the complexity and uniqueness of a particular project, policy, institution, programme or system in a ‘real life’ context” (Simons 2009, p. 21). Because this study sought an in-depth understanding of the model as implemented in the target context, a case study was considered an appropriate methodology. Following Simons (2009), mixed methods were used, drawing on the following data sources: small-scale surveys, participants’ written documents, groups’ lesson plans, and the researchers’ observation notes.

Context and participants

The IDDIRR model was applied in a technology integration course, with students from diverse majors, at a university in the southeastern United States. Because one of the researchers was also the instructor of the class, the setting allowed direct access and a semester-long investigation. This context satisfied the criteria for choosing a setting recommended by Spradley (1980): simplicity, accessibility, unobtrusiveness, permissibleness, and frequently recurring activities. In the fall semester of 2011, the course was modified to include the IDDIRR stages of the TPACK-based ID model to teach TPACK. The course was 15 weeks long, and the class met three times per week for one hour. Fifteen of the twenty students enrolled in the course voluntarily participated in the study. The participants ranged in age from 19 to 21, and ten of them were female.

Although the course title indicated that the course was for preservice teachers, the course was also open to students who were not in education programs. During the semester in which the current study was conducted, the participants’ majors included child and family development, communication science and disorders, pre-nursing, and recreation and leisure studies. Only three of the fifteen participants had previously taken education-related courses, and none had practicum experience in a preK-12 classroom.

Technological tools taught in the course included (a) communication and collaboration tools (Google Docs, in2Books, podcasting tools, the Globe Program, blogging tools, etc.), (b) graphic software (Floorplanner), (c) a social bookmarking tool (e.g., Delicious), (d) video-making tools, (e) concept-mapping tools (Inspiration and Kidspiration), (f) Web 2.0 tools (Google Sites, WebQuest, etc.), and (g) presentation tools (PowerPoint games). Students were informed that they would learn not only technology but also how to use technologies in teaching activities.

Data collection

Data included (1) the mid- and post-TPACK survey responses, (2) students’ written materials, (3) groups’ lesson plans and corresponding digital products, and (4) the researchers’ field observation notes. For data collection, the six stages of IDDIRR were grouped into two phases: the Introduce and Demonstrate TPACK stages and the Learning TPACK by Design activities.

The TPACK survey was adapted from four TPACK-related surveys: (a) Survey of Pre-service Teachers’ Knowledge of Teaching and Technology (Schmidt et al. 2009), (b) Survey of Technological Pedagogical and Content Knowledge (Sahin 2011), (c) Assessing Students’ Perceptions of College Teachers’ PCK (Jang et al. 2009), and (d) TPACK in Science Survey Questions (Graham et al. 2009). Schmidt et al.’s (2009) survey provided the foundational structure for the survey in this study, and items from the other three surveys were adopted to supplement the items assessing the seven TPACK domains. For example, Schmidt et al.’s (2009) survey has only one PCK item for each subject area (mathematics, literacy, science, and social studies). We therefore adopted PCK-related items from Sahin’s (2011) and Jang et al.’s (2009) surveys to supplement the PCK domain (e.g., “I have knowledge in making connections between my content area and other related courses” (Sahin 2011) and “I can use a variety of teaching approaches to transform content into comprehensible knowledge” (Jang et al. 2009)). Similarly, items from Graham et al.’s (2009) survey were adopted to supplement the TCK domain (e.g., “I know about technologies that allow me to represent things that would otherwise be difficult to teach”).

The modified TPACK survey contains 55 items measuring the seven knowledge domains of TPACK: 16 TK items, 8 CK items, 9 PK items, 7 PCK items, 6 TCK items, 5 TPK items, and 4 TPACK items. Participants rated each item on a 5-point Likert scale ranging from (1) “strongly disagree” to (5) “strongly agree”. In this study, Cronbach’s alpha values for the subscales ranged from .55 to .91 for the mid-survey and from .73 to .89 for the post-survey. Cronbach’s alpha for all 55 items was .94 for the mid-survey and .93 for the post-survey. Table 2 presents the Cronbach’s alpha values for the items in each TPACK domain.
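For readers less familiar with the reliability coefficient reported above, Cronbach’s alpha for a scale of k items is α = k/(k − 1) × (1 − Σσᵢ² / σ²_total), where σᵢ² is the variance of item i and σ²_total is the variance of respondents’ total scores. The Python sketch below computes this standard textbook formula from a respondents-by-items score matrix and checks that the domain item counts above sum to 55. It is not necessarily the software procedure used in this study, and `cronbach_alpha` is a hypothetical helper name.

```python
from statistics import variance

# Items per TPACK domain in the modified survey, as reported in the text.
ITEMS_PER_DOMAIN = {"TK": 16, "CK": 8, "PK": 9, "PCK": 7,
                    "TCK": 6, "TPK": 5, "TPACK": 4}
# Sanity check: the domain counts add up to the 55 items reported.
assert sum(ITEMS_PER_DOMAIN.values()) == 55


def cronbach_alpha(responses):
    """Cronbach's alpha for a list of respondents' item scores.

    responses: one list per respondent, each holding k item scores
    (e.g., 1-5 Likert ratings). Sample variances are used throughout;
    the n - 1 denominators cancel, so population variances give the same alpha.
    """
    k = len(responses[0])
    if k < 2 or len(responses) < 2:
        raise ValueError("need at least two items and two respondents")
    items = list(zip(*responses))  # transpose: one tuple of scores per item
    item_var_sum = sum(variance(item) for item in items)
    total_var = variance([sum(r) for r in responses])  # variance of total scores
    return k / (k - 1) * (1 - item_var_sum / total_var)
```

As a hypothetical illustration, two perfectly consistent items (every respondent gives both items the same score, e.g. `[[1, 1], [2, 2], [3, 3]]`) yield α = 1, while less consistent responses pull α toward 0.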

Students’ written materials, groups’ lesson plans, and the researchers’ field observation notes served as qualitative data. Students’ written materials included class assignments and discussion worksheets related to TPACK. Groups’ lesson plans were the teaching plans that each group developed, implemented, and revised following the procedures of the model. Because one of the researchers was also the instructor, field notes were written after each class and reviewed with the other researcher. The field notes included descriptive information (e.g., settings, participants’ reactions) and reflective information (e.g., thoughts, ideas) about what happened in the classroom (Bogdan and Biklen 2003).

Procedures

Introduce and demonstrate TPACK

Data collected during these stages were the instructor’s field notes, students’ written materials, and the mid-TPACK survey. The introduction of TPACK began in Week 2, after the participants received an introduction to the course in Week 1. The instructor used videos and PowerPoint presentations to introduce (I-Introduce) TPACK. As described in the TPACK-IDDIRR model section, the Introduce stage focused on the three core domains of TPACK: TK, CK, and PK. The participants were given definitions of TK, CK (Footnote 3), and PK (Footnote 4) to learn the concepts. TK was defined as the knowledge that enables a teacher to use a variety of educational technologies. Examples of TK, such as a teacher using an interactive whiteboard, were then introduced. These examples were deliberately simple and basic and did not reach higher expectations, such as using technology to support higher-order thinking skills, because the purpose of this stage was to help preservice teachers grasp the basic concepts of TPACK and gain the confidence to learn more.

In Week 7, the instructor demonstrated (D-Demonstrate) a TPACK-integrated teaching example to show how the integrated domains of TPACK (e.g., TPK, TCK, and TPACK) are applied in practice. The example was demonstrated after the participants had learned Microsoft Photo Story, a free tool for creating slideshows. The instructor demonstrated a lesson on the American Civil War using two technological tools: Microsoft Photo Story to tell the story of the American Civil War and Wikipedia to show the relevant history. The participants were then asked to compare how the two tools presented the content (TCK) and to evaluate which tool could better help students learn the content (TPK and TPACK). After the Introduce and Demonstrate stages, a mid-TPACK survey was administered to assess the effects of these two stages on the participants’ TPACK.

Learning TPACK by design activities: develop (D), implement (I), reflect (R) on, and revise (R) a TPACK-based lesson plan

To facilitate both the participants’ discussion of TPACK with group members during the Learning TPACK by Design activities and data collection, an online learning environment was created in Google Docs (i.e., Google Drive) in which the participants could develop, discuss, reflect on, and revise their lesson plans. Members of every group submitted the group’s lesson plan and associated digital products to their Google Sites pages, which also served as spaces for submitting digital assignments and building individual portfolios for the semester. The participants’ written materials regarding TPACK were also collected. Finally, the post-TPACK survey was administered when these activities were completed.

Following the model, four Learning TPACK by Design activities were carried out: every group developed (D) a TPACK-based lesson plan; groups implemented (I) their lesson plans in class; members of every group discussed the implementation and reflected on (R) the lesson plan; and every group revised (R) the lesson plan accordingly. The students formed four five-member groups themselves. Because the group members had different majors, each group chose a subject or topic that its members felt they could best integrate with technology. The topics chosen by the four groups were days of the week, holidays, and months of the year; living and non-living things; cells; and multiplication.

The groups then took turns going through the stages of the IDDIRR model. Groups 1 and 2 developed (D) their lesson plans in Week 7 and went through the IRR stages from Week 7 to Week 9; Groups 3 and 4 developed (D) their lesson plans in Week 11 and went through the IRR stages from Week 12 to Week 15. Each group’s TPACK-based lesson plan was 25–30 min long and was divided into three sections of approximately 10 min each for implementation. Each member of every group was required to teach one section in class. Because each five-member group had only three sections to teach, some sections were taught by one member and others by pairs. The teaching was videotaped for all groups. After implementing (I) the first section of the lesson plan, members of every group watched the teaching video, reviewed the feedback provided by the group members, and reflected (R) on the lesson plan. Each group then revised (R) its lesson plan, after which the next group member(s) implemented (I) the second section in class, and the group went through the Reflect (R) and Revise (R) stages again. In this study, the IRR cycle was carried out three times in every group, giving the participants multiple opportunities to experience learning by design.

Data analysis

Only data from students who agreed to participate in this study were analyzed. To examine how the implementation of the IDDIRR model affected the participants’ TPACK learning, the data were analyzed in two ways. First, we used the straightforward description approach (Wolcott 1994) to present the researchers’ observation field notes collected during the Introduce and Demonstrate stages. Simons (2009) identified description, analysis, and interpretation as the methods for presenting qualitative data. We applied the description method, in which data are presented as the observation field notes were originally recorded, without further analysis or interpretation (Simons 2009, p. 121). In this preliminary study of a TPACK-based ID model for multidisciplinary technology integration courses, we mainly intended to describe “what is going on” (Simons 2009, p. 121) in class. Thus, the researchers’ observations of the participants’ learning process are presented as the events occurred in class. The participants’ written materials, also collected during these two stages, were used as supplementary sources to enrich the observation data. Finally, descriptive statistics were used to summarize the results of the mid-TPACK survey, which was administered after the Introduce and Demonstrate stages, to understand the effects of these two stages on the participants’ TPACK learning.

Second, we applied content analysis to the data collected from the Learning TPACK by Design activities, including groups’ lesson plans, digital artifacts, and individual students’ written materials. Because this study aimed to understand the participants’ learning processes of TPACK, a precoding strategy was considered suitable for analyzing the data (Simons 2009). Precoding is also known as deductive category application (Mayring 2000), in which precodes are generated from the theoretical framework. Accordingly, the categories in this study were derived from the seven domains of TPACK. The definitions, examples, and coding rules for each deductive category are shown in Table 3. After the data were coded into these categories, we examined and compared the categories carefully to identify themes and patterns. The post-TPACK survey was then used to triangulate the findings from the qualitative data. Finally, we used a paired t test to compare the participants’ responses to the mid- and post-TPACK surveys to examine the effects of the Learning TPACK by Design activities on their TPACK learning.
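Deductive category application amounts to assigning each coded excerpt to one of a fixed set of predefined categories and rejecting anything outside the scheme. The sketch below is a hypothetical illustration, not the authors' procedure: `tally_codes` and the `(source, excerpt, code)` tuple layout are assumptions, and although the three excerpts are quoted from Group 4's lesson plan, the codes attached to them here are illustrative rather than the study's actual coding (the real definitions and coding rules are those in Table 3).

```python
from collections import Counter

# The seven TPACK domains used as deductive (a priori) categories.
TPACK_DOMAINS = ("TK", "PK", "CK", "PCK", "TCK", "TPK", "TPACK")


def tally_codes(coded_segments):
    """Count coded segments per domain, rejecting codes outside the scheme.

    coded_segments: iterable of (source, excerpt, code) tuples (hypothetical layout).
    """
    counts = Counter({domain: 0 for domain in TPACK_DOMAINS})
    for source, excerpt, code in coded_segments:
        if code not in TPACK_DOMAINS:
            raise ValueError(f"{code!r} in {source} is not a deductive category")
        counts[code] += 1
    return counts


# Excerpts quoted from a group lesson plan; the codes are illustrative only.
segments = [
    ("Group 4, lesson plan", "Technology was the video and PowerPoint", "TK"),
    ("Group 4, lesson plan", "teaching the tricks to multiplication", "PK"),
    ("Group 4, lesson plan", "Content was the multiplication tables", "CK"),
]
counts = tally_codes(segments)
# Domains with no coded evidence, e.g., the integrated domains here.
print([d for d in TPACK_DOMAINS if counts[d] == 0])
```

Listing the categories with zero counts is one simple way to surface absent evidence, such as an excerpt set that touches TK, PK, and CK separately but never an integrated domain.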

Validity and reliability

To strengthen the internal validity and reliability (dependability) of this study, we applied three strategies: data triangulation, peer examination, and a statement of the researchers’ subjectivity (Merriam 1995; Simons 2009). First, data triangulation refers to the use of multiple data sources; we triangulated qualitative data, such as lesson plans and written materials, with the TPACK surveys. Second, peer examination served as a confirmatory approach to strengthen internal validity: over 2 years, the two researchers regularly discussed the theoretical foundation of the study, the design and implementation of the model, and the plausibility of emerging findings. Third, we reflected on our roles in this study; specifically, one of the researchers played a dual role as both researcher and instructor. The interpretation of the case therefore likely reflected both perspectives, because the two roles emanated from the same person and neither could be set aside during the study. Simons (2009) suggested that a colleague examine the values the researcher brings to the research in order to reveal possible biases. Accordingly, the other researcher examined the roles and subjectivity that the instructor brought to the study, which helped the research team identify possible biases.

Findings

The findings are organized around the two main learning activities of the IDDIRR model: one is the Introduce and Demonstrate stages and the other is the Learning TPACK by Design activities (see Fig. 1).

The Introduce and Demonstrate stages

Introduce

The TPACK figure, definitions, examples, and brief explanations of the seven domains of TPACK were introduced in this stage. However, instruction focused mainly on CK, PK, and TK because most of the participants had not taken education-related courses before this class. Helping them understand these three core domains was intended to facilitate their later learning of the integrated knowledge of TPACK (e.g., TCK and TPK).

Although the instructor had planned to teach these concepts within 2 weeks (Weeks 2–3), instruction extended over 4 weeks (Weeks 2–5) because the participants had difficulty understanding PK. The participants had no difficulty understanding TK: they could easily relate the concept of TK to their everyday technologies and to the technologies they were learning at that time, such as Web 2.0 tools, Internet search engines, and Google Sites.

With regard to CK, the participants were taught to connect CK to the content of their majors. State performance standards were used to explain how a teacher draws on CK to teach specific content. Questions relevant to CK were then asked to assess the participants’ learning, such as “When you apply state performance standards to design a lesson, what knowledge do you need to use, and why?” Ten of the 15 participants demonstrated their understanding of CK by answering the question accurately. For example, “When a teacher finds standards they use content knowledge because they are finding the content they wish to address” (Participant 12, written material).

The participants demonstrated an incomplete understanding of PK. The meaning of PK was explained along with a list of teaching strategies such as the jigsaw method, class discussions, and group discussions. The participants were prompted to discuss why different teaching strategies could result in different learning outcomes and how appropriate strategies could be applied to promote student learning. However, the discussion did not gain traction. They were therefore asked to select teaching strategies they had experienced in class and to reflect on and discuss those experiences. An assignment in which they later designed a teaching activity illustrated their basic understanding of PK, as the following excerpts show:

I will first introduce fractions, decimals, and percentages to the students…I will also be sure to make it clear that not only do I want the students to be able to accomplish these tasks, but I want them to be able to explain their solutions to me and to their peers… They will each be expected to explain their thought processes to their partner so that each student can understand how others might think about a particular problem. (Participant 6, written material)

I introduce the lesson by showing a news clip on the health issue at hand. After the clip is finished I explain to the class what the news clip was trying to say to clarify any loose ends. Then, I assign the class into groups to research different parts of the health issue to prepare for the debate [activity]. As the students are in groups researching, I will walk around the class asking the students what they are researching and answer any questions they may have. (Participant 10, written material)

Nonetheless, a deep understanding of PK and CK was not observed in class discussions. For instance, after the participants had gained a basic understanding of PK and CK, the instructor selected a state performance standard from mathematics (e.g., recognize and apply mathematics in contexts outside of mathematics) and explained that, to be proficient in teaching this standard, a teacher should possess not only CK but also PK, using appropriate teaching strategies to help students apply mathematics knowledge in non-mathematical contexts. However, the participants were confused by the pedagogical elements (PK) within a content standard (CK). In other words, they had difficulty differentiating PK from CK when the two were integrated in one example (i.e., in the PCK format).

Demonstrate

Integrated knowledge of TPACK (e.g., TCK and TPK) was covered in the Demonstrate stage in Week 7. In addition to definitions of the integrated domains of TPACK, the instructor offered a technology-integrated teaching example: a comparison of using Photo Story and Wikipedia as tools to teach the American Civil War. Photo Story was chosen because the participants had just completed a Photo Story project, in which they created a story about themselves, to acquire TK.

After demonstrating the example, the instructor asked the participants to compare and evaluate the two tools with regard to how each could support student learning of the content. During the class discussion, no evaluation of the two tools in light of students’ learning needs (i.e., connecting the tool to pedagogy and content) was observed. For example, with regard to Wikipedia, the participants mentioned that the tool provided comprehensive information about the American Civil War. With regard to Photo Story, they tended to evaluate it based on characteristics that were not necessarily relevant to the pedagogy and content at hand (e.g., Photo Story being a more fun tool than Wikipedia). None of the discussion addressed the pedagogical affordances of the tools (relevant to the TCK, TPK, and TPACK domains); for example, Photo Story could be more effective than Wikipedia because it can be used to tailor the content to students’ grade levels and interests and to engage students in a virtual context of a historical event aligned with their existing knowledge. We therefore concluded that integrated knowledge of TPACK was not evident among the participants in the Demonstrate stage.

Quantitative data from the mid-TPACK survey, administered after the Introduce and Demonstrate stages, were analyzed to examine the effects of these stages and to triangulate the qualitative data. The means and standard deviations of the mid-survey scores are listed in Table 4. However, the scores seemed to measure the participants’ perceptions of their knowledge rather than their actual knowledge. The mean scores for all seven domains were high (around 4 out of 5), yet these scores were not consistent with the participants’ TPACK performance observed in their written materials and class discussions. As mentioned above, the participants had a basic understanding of PK and CK but failed to differentiate the two concepts, and their integrated knowledge was not observed during class discussions.
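The per-domain summaries in Table 4 are means and standard deviations of Likert-type scores. The Python sketch below shows that computation on made-up numbers; the `describe` function, the assumption that each participant's domain score is already the average of that domain's items, and all values are hypothetical and should not be read as the study's results. It uses the sample standard deviation (n − 1 denominator). As argued above, such a summary describes self-perceived knowledge only.

```python
from statistics import mean, stdev


def describe(responses):
    """Return {domain: (mean, sample SD)} for 5-point Likert domain scores."""
    return {
        domain: (round(mean(scores), 2), round(stdev(scores), 2))
        for domain, scores in responses.items()
    }


# Hypothetical per-participant domain scores (each assumed to be an item average).
mid_survey = {
    "PK": [4.0, 3.5, 4.5, 4.0],
    "TPK": [3.5, 4.0, 3.5, 4.0],
}
print(describe(mid_survey))
```

A high mean with a small SD indicates only that participants consistently rated themselves highly; it cannot by itself show that the rated knowledge was present, which is why the qualitative data were used alongside the survey.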

The Learning TPACK by Design activities

The class was divided into four groups of five people each to carry out the Learning TPACK by Design activities: Develop, Implement, Reflect, and Revise. The findings reported here focus on the participants’ learning of TCK, TPK, and TPACK, that is, of the integrated knowledge.

1. The majority of the participants chose to teach content that could be taught without technology (TCK was not observed).

In the coding scheme, the TCK category covers whether the participants identify content that can be taught effectively using technology (Note 5) and whether they apply appropriate technologies to teach that content. Table 5 shows the content that the four groups identified for their teaching practice and the technologies used in teaching. The topics chosen by Group 1 (days of the week, holidays, and months of the year), Group 2 (living and non-living things), and Group 4 (multiplication tables for five, nine, and ten) were not difficult to teach in traditional classrooms without technology. Group 2 could have used technology to support higher-order thinking had it designed questions such as “Why is water a non-living thing even though it can flow?” or “Why are trees living things even though they cannot move?” Instead, the group used technology, such as simple online games for distinguishing living from non-living objects, for lower-level cognitive activities.

Group 3 chose to teach the cell structures of plants and animals, a topic that could be taught effectively with technology. However, evidence of TCK learning was not observed in this group. The group played videos to introduce the content on cell structures and used PowerPoint slides to summarize the comparison of plant and animal cell structures, although these abstract concepts could have been taught more effectively with other technologies, such as concept-mapping tools or animations. In its reflection on the lesson, the group noted, “because of the content, it was difficult to find interactive activities and games for the students.”

2. All groups used teacher-centered strategies when using technology (TPK was not observed).

In the coding scheme, the TPK category covers whether the participants apply appropriate technology in teaching based on students’ learning needs. Table 5 shows the technology each group used when it implemented its lesson in class. The technologies used by the four groups were limited to videos, PowerPoint, online games, and online quizzes. Moreover, the four groups applied these technologies in a similar pattern: videos to gain students’ attention, PowerPoint slides to introduce the content, and online games and quizzes to assess students’ low-level knowledge (e.g., verbal information). Students in the participants’ lessons were not given opportunities to manipulate technologies to develop higher-order cognitive skills or to create artifacts showing their learning processes and outcomes. In other words, the lessons were characterized by teacher-centered strategies, with technologies used to present content or to make lecturing more efficient.

3. The participants’ understanding of TPACK was the combination rather than the integration of knowledge.

The lesson plans created by the four groups demonstrated the combination of technology, pedagogy, and content, instead of the integration of the three. Examples are shown as follows:

I think we incorporated all three aspects: technology clearly in the video and game, pedagogy in the mind mapping together and content knowledge in the teaching of the months, seasons, and holidays. (Group 1, lesson plan)

We had students use technology by watching a video and identifying living and nonliving things. Pedagogy was addressed in that we taught the students through technology and an interactive game. (Group 2, lesson plan)

The first two videos were a great way to help the students get interested in the subject and to immediately capture their attention… They seemed to add a lighthearted and engaging mood to the lesson, which is definitely needed in 7th grade students. (Group 3, lesson plan)

Technology was the video and PowerPoint which all parts of the group had. Pedagogy was teaching the tricks to multiplication and using a worksheet to review. Content was the multiplication tables. (Group 4, lesson plan)

[TPK is] having students use Inspiration [tool] to make a mind-map. (Participant 14, written material)

[TCK is] using PPT to teach content lesson. (Participant 11, written material)

[TPACK is] having students work in groups to make a PowerPoint about the content they’re learning. (Participant 14, written material)

These excerpts suggest that the participants’ TPACK was at the stage of combining the core knowledge of TK, PK, and CK. Questions such as why and how technologies could help students understand the content were not addressed. With regard to the quantitative data, most of the means for the seven knowledge domains increased (see Table 4); however, a paired t test indicated that only the increase in TPK was significant (t(15) = −3.075, p = .005). The self-report TPACK survey may have assessed the participants’ perceptions of TPACK rather than their actual acquisition of TPACK. For example, their mid- and post-survey scores for TPK (M = 3.81 on the mid-survey and M = 4.2 on the post-survey) and TPACK (M = 3.92 on the mid-survey and M = 4.32 on the post-survey) were high. However, as discussed above, the qualitative findings indicate that such high levels of TPK and TPACK were not evident in the participants’ class discussions, lesson plans, and implementations.
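A paired t test compares each participant's mid- and post-survey scores through their within-person differences d, using t = mean(d) / (s_d / √n) with n − 1 degrees of freedom. The sketch below computes that statistic with the standard library only; the function name `paired_t` and the scores are hypothetical, not data from this study. Differences are taken as mid minus post, so an increase yields a negative t, consistent with the sign of the reported value. The p-value then comes from the t distribution with n − 1 degrees of freedom; SciPy's `scipy.stats.ttest_rel(mid, post)` returns the same statistic together with a p-value.

```python
import math
from statistics import mean, stdev


def paired_t(mid, post):
    """Return (t, df) for a paired t test on matched mid/post scores."""
    if len(mid) != len(post) or len(mid) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    # Differences are mid - post, so an increase from mid to post gives t < 0.
    diffs = [m - p for m, p in zip(mid, post)]
    n = len(diffs)
    standard_error = stdev(diffs) / math.sqrt(n)  # sample SD of differences / sqrt(n)
    if standard_error == 0:
        raise ValueError("all differences are identical; t is undefined")
    return mean(diffs) / standard_error, n - 1


# Hypothetical TPK scores for five participants (not data from this study).
mid = [3.5, 4.0, 3.5, 4.0, 3.0]
post = [4.0, 4.5, 4.0, 4.0, 3.5]
t, df = paired_t(mid, post)
print(f"t({df}) = {t:.3f}")
```

Because the test works on within-person differences, it answers only whether self-ratings rose between the two surveys; it says nothing about whether the rated knowledge appeared in lesson plans or teaching.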

Discussion

This study drew on the learning by design approach and attempted to provide preservice teachers with iterative opportunities to design, develop, implement, reflect on, and revise a lesson plan in order to help them acquire TPACK. The findings are summarized as follows.

1. In the Introduce and Demonstrate stages, a basic understanding of TK, PK, and CK was observed among the participants rather than integrated knowledge of TPACK (e.g., TCK, TPK, and TPACK).

2. In the Learning TPACK by Design activities, the participants’ understanding of TCK and TPK was not observed; their understanding of TPACK was a combination of TK, PK, and CK rather than an integration of the three.

These findings from the implementation of the TPACK-based ID model did not match our expectations. However, this study provides initial guidelines not only for revising the model but also for teaching TPACK in multidisciplinary technology integration courses, and it informs future research about barriers in similar settings. First, if the participants had had prior knowledge of pedagogy, the effects of the model might have been different. Yet when most students in a technology integration course are not education majors, their pedagogy-related knowledge can vary. As described earlier, more than 50% of teacher training programs have offered technology integration courses that are not based on educational methods or subject matter (National Center for Education Statistics (NCES) 2008), which implies that education majors from different subject areas, with different levels of pedagogy-related knowledge, can be mixed in such courses. Thus, the disparity in pedagogy-related knowledge among learners should be considered in such settings (e.g., multidisciplinary technology integration courses) in order to provide appropriate learning activities.

Second, the presupposition of IDDIRR was that TPACK acquisition should be built on mastery of the seven domains of TPACK; that is, learners should first clearly understand the isolated domains and can then understand the interplay among them. The findings suggest that this presupposition may hold, because the preservice teachers’ lack of pedagogy-related knowledge seemed to affect their TPACK learning. For example, as reported earlier, the preservice teachers tended to evaluate technological tools (i.e., Photo Story vs. Wikipedia) by their external characteristics rather than by their pedagogical affordances for the content. These findings suggest that pedagogy-related knowledge is critical to acquiring TPACK and that TPACK acquisition requires a progressive process of learning from isolated knowledge to integrated knowledge.

Finally, data from the lesson plans created and implemented by the participants were not consistent with data from the TPACK surveys. It is likely that the participants’ lack of pedagogy-related knowledge limited their ability to assess their actual TPACK capacity. The assessment of learning should include opportunities for learners to apply their new knowledge or skills in actual settings (Gagné et al. 2005; Gustafson and Branch 2002; Merrill 2002, 2007, 2009). In this study, because the participants had opportunities to develop and teach lessons, their actual understanding of TPACK could be examined empirically.

Re-design of the model

In addition to investigating how the IDDIRR model could be applied to TPACK teaching, this study aimed to refine the model based on the empirical findings. The participants’ teaching-related background and knowledge were not taken into account when the model was developed, which seemed to affect their learning of the interrelated knowledge of TPACK. To refine the model, each stage of IDDIRR will be modified to include pedagogy-enhancing elements that facilitate learning of the TPACK domains. For example, during the Introduce stage, instead of telling preservice teachers the meanings and examples of TPACK, the instructor could encourage them to discuss the meanings actively and create their own examples. During the Demonstrate stage, more examples, such as lesson plans and TPACK-integrated teaching examples for different subject areas, could be added (Seel 2003; Shute et al. 2009). This would give preservice teachers the opportunity to identify and discuss how technology affords teaching the content in the examples. This revision aligns with the principle that effective instruction should demonstrate both how to do the tasks that learners will engage in (how-to) and what happens when those tasks are carried out (what-happens) (Merrill 2007). In the Learning TPACK by Design activities, preservice teachers could develop a lesson plan integrating one technology into a subject of their choice each time they learn a new tool in class. By developing several lesson plans that involve a variety of technologies, preservice teachers may be able to improve their teaching-related knowledge (Hew and Brush 2007).

Limitations of the study and future research directions

There are limitations in the present study that should be considered in future research. First, as mentioned in the Re-design of the model section, the participants’ insufficient teaching-related knowledge was not considered when designing the IDDIRR model. Had the model included stages with activities to enhance pedagogy-related knowledge, the participants’ difficulties with TPACK learning in each IDDIRR stage might have been identified more clearly. Future research should include pedagogy-enhancing elements in the model and its implementation to facilitate the acquisition of TPACK.

Second, this study did not address the shortage of target participants (i.e., preservice teachers) or the mix of education and non-education majors. To better understand the effects of the model in a multidisciplinary technology integration course, future research should apply other sampling approaches. For example, sampling could be conducted across different sections of a multidisciplinary course to group participants by same subject majors, different subject majors, different grade levels, inclusion of non-education majors, and so on. The focus of such a design would be to respond to the various conditions that may arise in multidisciplinary courses (e.g., the inclusion of non-education majors). Such a sampling approach would also help improve the validity of the study.

Third, the mid-TPACK survey was used to examine the effects of the Introduce (I) and Demonstrate (D) stages, and the post-TPACK survey was used to examine the Develop (D), Implement (I), Reflect (R), and Revise (R) stages. However, because the participants had different majors and backgrounds, a pre-TPACK survey should be included to capture individual differences in prior knowledge.

Fourth, this study did not include the component of teacher beliefs, although many studies have found that teacher beliefs affect technology integration (Ertmer 1999; Hew and Brush 2007; Kim et al. 2013). Future research should gradually incorporate beliefs into the model to achieve more thorough improvements in TPACK learning and implementation.

Last, if more course instructors had implemented the model and the student participants had member-checked the data, the validity of the study might have been improved (Merriam 1995). In addition, more peer reviewers or investigators should be involved to examine the plausibility of the emerging findings (Merriam 1995).

Implications for research and practice

Teaching TPACK to preservice teachers is a challenging task when they lack a pedagogy-related background and come from diverse subject majors. Although the model used in this study had limited impact on preservice teachers’ TPACK learning, this study identified critical difficulties in practice and offered potential methods to overcome them, as suggested in the Re-design of the model section. While TPACK is a conceptual framework that emphasizes the interplay of the seven domains of knowledge, the findings suggest that a lack of isolated knowledge in any domain hinders understanding of the integrated whole, TPACK.

Notes

Stand-alone courses are courses for teacher candidates that are not offered as part of specific methods, content, or field experience courses.

The assumption of this study was that preservice teachers should understand the meaning of the three core domains (TK, PK, and CK) well before they can relate that understanding to integrative knowledge, namely the integrated domains of TPACK (i.e., PCK, TPK, TCK, and TPACK).

CK was defined as the knowledge that a teacher possesses for a deep understanding of her/his subject and the content standards of the subject. An example of CK given to participants: knowledge of the events that led to the Civil War.

PK was defined as the knowledge that a teacher possesses to understand and address students’ learning needs. An example of PK: Group discussion.

Technologies can be used as supportive tools in any subject area or topic for efficiency purposes. In this study, however, we emphasized the effective use of technology for student learning rather than the efficient use of technology for teachers.

References

Angeli, C. (2005). Transforming a teacher education method course through technology: Effects on preservice teachers’ technology competency. Computers & Education, 45(4), 383–398.

Angeli, C., & Valanides, N. (2005). Preservice elementary teachers as information and communication technology designers: An instructional systems design model based on an expanded view of pedagogical content knowledge. Journal of Computer Assisted Learning, 21(4), 292–302.

Association for Educational Communications and Technology (2012). AECT Standards, 2012 version. Retrieved June 26, 2014 from http://ocw.metu.edu.tr/pluginfile.php/3298/course/section/1171/AECT_Standards_adopted7_16_2.pdf.

Bandura, A. (1977). Social learning theory. Upper Saddle River, NJ: Prentice Hall.

Bers, M. U., Ponte, I., Juelich, C., Viera, A., Schenker, J., & AACE. (2002). Teachers as designers: Integrating robotics in early childhood education. Information Technology in Childhood Education Annual, 1, 123–145.

Bogdan, R. C., & Biklen, S. K. (2003). Qualitative research for education: An introduction to theories and methods (4th ed.). New York: Pearson Education Group.

Davis, K. S., & Falba, C. J. (2002). Integrating technology in elementary preservice teacher education: Orchestrating scientific inquiry in meaningful ways. Journal of Science Teacher Education, 13(4), 303–329.

Ertmer, P. A. (1999). Addressing first- and second-order barriers to change: Strategies for technology integration. Educational Technology Research and Development, 47(4), 47–61.

Ertmer, P. A. (2005). Teacher pedagogical beliefs: The final frontier in our quest for technology integration? Educational Technology Research and Development, 53(4), 25–40.

Fernández, M. L. (2005). Exploring “lesson study” in teacher preparation. In H. L. Chick & J. L. Vincent (Eds.), Proceedings of the 29th PME International Conference (Vol. 2, pp. 305–310). Melbourne.

Fernández, M. L. (2010). Investigating how and what prospective teachers learn through microteaching lesson study. Teaching and Teacher Education, 26, 351–362.

Gagné, R. M., Wager, W. W., Golas, K. C., & Keller, J. M. (2005). Principles of instructional design. Belmont, CA: Wadsworth/Thomson Learning.

Graham, C. R., Burgoyne, N., Cantrell, P., Smith, L., St. Clair, L., & Harris, R. (2009). Measuring the TPCK confidence of inservice science teachers. TechTrends, 53(5), 70–79.

Güler, C., & Altun, A. (2010). Teacher trainees as learning object designers: Problems and issues in learning object development process. Turkish Online Journal of Educational Technology - TOJET, 9(4), 118–127.

Gustafson, K. L., & Branch, R. M. (2002). What is instructional design? In R. A. Reiser & J. V. Dempsey (Eds.), Trends and issues in instructional design and technology (pp. 16–25). Upper Saddle River, NJ: Merrill/Prentice-Hall.

Hall, G. E. (2010). Technology’s Achilles heel: Achieving high-quality implementation. Journal of Research on Technology in Education, 42(3), 231–253.

Hew, K., & Brush, T. (2007). Integrating technology into K-12 teaching and learning: Current knowledge gaps and recommendations for future research. Educational Technology Research and Development, 55(3), 223–252.

Hjalmarson, M. A., & Diefes-Dux, H. A. (2008). Teacher as designer: A framework for the analysis of mathematical model-eliciting activities. The Interdisciplinary Journal of Problem-based Learning, 2(1), 57–78.

International Society for Technology in Education (ISTE) (2008). ISTE Standards. Retrieved June 26, 2014 from http://www.iste.org/docs/pdfs/20-14_ISTE_Standards-T_PDF.pdf.

Jang, S. J., & Chen, K. C. (2010). From PCK to TPACK: Developing a transformative model for preservice science teachers. Journal of Science Education and Technology, 19(6), 553–564.

Jang, S. J., Guan, S. Y., & Hsieh, H. F. (2009). Developing an instrument for assessing college students’ perceptions of teachers’ pedagogical content knowledge. Procedia Social and Behavioral Sciences, 1(1), 596–606.

Jimoyiannis, A. (2010). Developing a technological pedagogical content knowledge framework for science education: Implications of a teacher trainers’ preparation program. Proceedings of the Informing Science & IT Education Conference (InSITE 2010) (pp. 597–607). Cassino, Italy.

Jonassen, D. H. (2008). Instructional design as design problem solving: An iterative process. Educational Technology, 48(3), 21–26.

Kagan, D. M. (1992). Professional growth among preservice and beginning teachers. Review of Educational Research, 62, 129–170.

Kalantzis, M., & Cope, B. (2005). Learning by design. Melbourne: Victorian Schools Innovation Commission.

Kim, C., & Keller, J. M. (2011). Towards technology integration: The impact of motivational and volitional email messages. Educational Technology Research and Development, 59(1), 91–111.

Kim, M. C., Hannafin, M. J., & Bryan, L. A. (2007). Technology-enhanced inquiry tools in science education: An emerging pedagogical framework for classroom practice. Science Education, 91(6), 1010–1030.

Kim, C., Kim, M., Lee, C., Spector, J. M., & DeMeester, K. (2013). Teacher beliefs and technology integration. Teaching and Teacher Education, 29, 76–85.

Koehler, M. J., & Mishra, P. (2005). Teachers learning technology by design. Journal of Computing in Teacher Education, 21(3), 94–102.

Koehler, M. J., & Mishra, P. (2009). What is technological pedagogical content knowledge? Contemporary Issues in Technology and Teacher Education, 9(1), 60–70.

Koehler, M. J., Mishra, P., & Yahya, K. (2007). Tracing the development of teacher knowledge in a design seminar: Integrating content, pedagogy, & technology. Computers & Education, 49(3), 740–762.

Mayring, P. (2000). Qualitative content analysis. Forum: Qualitative Social Research, 1(2). Retrieved June 26, 2014 from http://217.160.35.246/fqs-texte/2-00/2-00mayring-e.pdf.

Merriam, S. B. (1995). What can you tell from an N of 1? Issues of validity and reliability in qualitative research. PAACE Journal of Lifelong Learning, 4, 51–60.

Merrill, M. D. (2002). First principles of instruction. Educational Technology Research and Development, 50(3), 43–59.

Merrill, M. D. (2007). The future of instructional design: The proper study of instructional design. In R. A. Reiser & J. V. Dempsey (Eds.), Trends and issues in instructional design and technology (2nd ed., pp. 336–341). Upper Saddle River, NJ: Pearson Education Inc.

Merrill, M. D. (2009). First principles of instruction. In C. M. Reigeluth & A. Carr (Eds.), Instructional design theories and models: Building a common knowledge base (Vol. III). New York: Routledge Publishers.

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A new framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

National Center for Education Statistics (NCES). (2008). Educational technology in teacher education programs for initial licensure. Retrieved June 26, 2014 from http://nces.ed.gov/pubs2008/2008040.pdf.

National Science Teachers Association (NSTA). (2003). Standards for science teacher preparation. Retrieved June 26, 2014 from http://www.nsta.org/preservice/docs/NSTAstandards2003.pdf.

Niess, M. L. (2005). Preparing teachers to teach science and mathematics with technology: Developing a technology pedagogical content knowledge. Teaching and Teacher Education, 21, 509–523.

Polly, D., McGee, J. R., & Sullivan, C. (2010a). Employing technology-rich mathematical tasks to develop teachers’ technological, pedagogical, and content knowledge (TPACK). Journal of Computers in Mathematics and Science Teaching, 29(4), 455–472.

Polly, D., Mims, C., Shepherd, C. E., & Inan, F. (2010b). Evidence of impact: Transforming teacher education with preparing tomorrow’s teachers to teach with technology. Teaching and Teacher Education, 26, 863–870.

Sahin, I. (2011). Development of survey of technological pedagogical and content knowledge (TPACK). Turkish Online Journal of Educational Technology, 10(1), 97–105.

Sandholtz, J. H., Ringstaff, C., & Dwyer, D. C. (1997). Teaching with technology: Creating student-centered classrooms. New York: Teachers College Press.

Schmidt, D., Baran, E., Thompson, A., Koehler, M. J., Shin, T., & Mishra, P. (2009). Technological pedagogical content knowledge (TPACK): The development and validation of an assessment instrument for preservice teachers. Paper presented at the 2009 Annual Meeting of the American Educational Research Association, April 13–17, San Diego, California.

Seel, N. M. (2003). Model-centered learning and instruction. Technology, Instruction, Cognition and Learning, 1(1), 59–85.

Shulman, L. (1987). Knowledge and teaching: Foundations of the new reform. Harvard Educational Review, 57(1), 1–22.

Shute, V. J., Jeong, A. C., Spector, J. M., Seel, N. M., & Johnson, T. E. (2009). Model-based methods for assessment, learning, and instruction: Innovative educational technology at Florida State University. In M. Orey, V. J. McClendon, & R. Branch (Eds.), Educational media and technology yearbook (p. 61). Westport, CT: Greenwood Publishing Group.

Simons, H. (2009). Case study research in practice. Los Angeles, CA: Sage.

Spradley, J. P. (1980). Participant observation. Fort Worth, TX: Harcourt Brace College Publishers.

Thompson, A. D., & Mishra, P. (2007). Breaking news: TPCK becomes TPACK! Journal of Computing in Teacher Education, 24(2), 38.

Tschannen-Moran, M., & Woolfolk Hoy, A. (2001). Teacher efficacy: Capturing an elusive construct. Teaching and Teacher Education, 17, 783–805.

Voogt, J., Tilya, F., & Van den Akker, J. (2009). Science teacher learning for MBL-supported student-centered science education in the context of secondary education in Tanzania. Journal of Science Education and Technology, 18, 428–429.

Williams, M., Linn, M. C., Ammon, P., & Gearhart, M. (2004). Learning to teach inquiry science in a technology-based environment: A case study. Journal of Science Education and Technology, 13(2), 189–206.

Wolcott, H. (1994). Transforming qualitative data: Description, analysis and interpretation. Thousand Oaks, CA: Sage.

Yoon, F. S., Ho, J., & Hedberg, J. G. (2006). Teachers as designers of learning environments. Computers in the Schools, 22(3/4), 145–157.

Cite this article

Lee, CJ., Kim, C. An implementation study of a TPACK-based instructional design model in a technology integration course. Education Tech Research Dev 62, 437–460 (2014). https://doi.org/10.1007/s11423-014-9335-8
