Abstract

Purpose

Carbon monoxide (CO) is one of the most important toxic gases in the atmosphere. Its high affinity for hemoglobin has made carboxyhemoglobin (COHb) the most appropriate biomarker for CO poisoning. COHb is measured using spectrophotometric (ultraviolet-spectrophotometry, CO-oximetry) or gas chromatographic (GC) methods combined with flame ionization or mass spectrometry (MS) detectors. However, inconsistencies in many cases have been reported between measured values and reported symptoms, raising doubts as to the suitability of COHb as a biomarker and the accuracy and reliability of its measurement methods. Therefore, we aimed to review the accuracy of current methods used to measure CO and to determine their sources of error and their effects on the interpretation process.

Methods

A detailed search of PubMed was performed in November 2018 using relevant keywords. After exclusion criteria were applied, 46 articles out of 191 initial hits were carefully reviewed.

Results

While optical methods are highly influenced by changes in blood quality due to degradation of samples during storage, GC methods are less affected. However, measurement of COHb does not quantify free CO, which is mainly responsible for toxicity mechanisms other than hypoxia, such as inhibition of hemoproteins, thus underestimating the true CO burden. Therefore, measurement of COHb is not sufficiently accurate for diagnosis of CO poisoning.

Conclusions

An alternative biomarker is needed, such as determining the total amount of CO in blood. Although further research is required, we recommend that toxicologists consider all sources of error that can alter COHb concentrations, and in more challenging cases, they should use GC–MS methods to confirm the results obtained by spectrophotometry.

Introduction

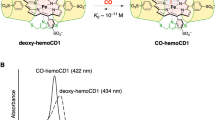

Carbon monoxide (CO) concentrations may be measured in exhaled breath, ambient air or blood. Because of the high affinity of CO for hemoglobin (Hb), it has been assumed that the majority, if not all, of CO binds with Hb when introduced into the blood circulation. As a result, carboxyhemoglobin (COHb) has traditionally been considered the most appropriate clinical marker of exposure in CO poisoning [1]. However, COHb does not represent the only reservoir of CO in the human body; CO may be found in a free state dissolved in blood and can bind to other heme-containing respiratory globins, such as myoglobin in muscle, neuroglobin in the nervous system and, to a lesser extent, cytoglobin [2]. Although CO dissolved in blood in free form is acknowledged to have a role in the pathophysiology of CO poisoning [3, 4], its influence may be more substantial than what has been revealed in studies thus far. This would result in under- or overestimation of the true level of CO present in the analyzed blood sample, potentially elucidating some of the cases where inconsistencies between measured COHb levels and reported symptoms were found. However, there is currently little data available on free CO.

COHb in blood is measured directly or indirectly using either optical methods, such as CO-oximetry, ultraviolet (UV)-spectrophotometry and pulse oximetry, or gas chromatography (GC) in combination with a variety of detectors (flame ionization detector, mass spectrometer). In clinical cases, the “gold standard” for the measurement of COHb in blood is CO-oximetry (or pulse oximetry), performed either with a separate instrument or with one integrated into what is commonly known as a blood gas analyzer (BGA) or radiometer [5]. Although UV-spectrophotometry remains the most frequently used method in forensic cases, CO-oximetry and GC methods are also widely employed in this field.

Like any biomarker, the quantitative measurement of COHb is subject to a variety of factors that influence the measurement. Measurement error in analytical studies is defined as “uncertainty” or “bias”. Uncertainty originates when several predictable, but not always controllable factors affect the measured values and potentially alter the values obtained, resulting in a deviation from the true value. In medical practice, and especially for toxicologists, the correct and accurate determination of a biomarker is crucial in order to make the correct diagnosis and initiate the proper treatment in clinical cases, and to determine the correct cause of death in forensic cases. Failure to do so can have severe clinical and legal consequences. Therefore, in this paper, we aim to review the accuracy of current methods for measuring CO and to determine their potential sources of error and effects on the interpretation process.

Method of literature search

PubMed was searched in November 2018 using the keywords (“carbon monoxide” or “carboxyhemoglobin”) and (“poisoning”) and (“measurement” or “determination” or “quantification” or “analysis” or “breath” or “blood” or “oximet*” or “spectro*” or “gas chromatography” or “storage”); this produced 191 hits. Systematic reviews, meta-analyses, general review articles, case reports, and retrospective, prospective, observational and clinical cohort studies were excluded, limiting the included articles to those that focused specifically on describing a method for analysis of CO or COHb in various tissues and those describing issues related to the analysis of samples (storage, sample pretreatment, etc.). This left 46 relevant articles on measurement methods and sources of error.

Measurement of CO in breath

Analytical techniques

Analysis of CO in exhaled breath was evaluated as a measurement method for clinical cases, because a good correlation between alveolar breath CO and COHb was found by several research groups [6,7,8,9]. Portable devices, called MicroCOmeters or CO monitors, are often used in smoking cessation programs [8, 10] and may be useful when a rapid on-site assessment of multiple casualties is necessary, enabling the most severe cases to be identified [11]. This measurement is based on an electrochemical fuel cell sensor, which works through the reaction of CO with an electrolyte on one electrode and oxygen (from ambient air) on the other. This reaction generates an electrical current proportional to the CO concentration. The output from the sensor is monitored by a microprocessor, which detects the peak expired concentration of CO in the alveolar gas [12]. This value is then converted to COHb% using the mathematical relationships described by Jarvis et al. [8] for concentrations below 90 parts per million (ppm) and by Stewart et al. [13] for higher levels.

Sources of error

Measurement of CO in breath cannot account for the total CO concentration present in the blood at the time of exposure. The method is very susceptible to a variety of factors that can easily alter the result, leading to under- or overestimation of the true concentration (Table 1). One major factor is the variability in subjects' breath-holding ability. To obtain alveolar gas, the breath needs to be held for 20 s, and only the end-tidal expired air is then used for CO measurement. Given the individual differences in pulmonary function, capillary diffusion surface, and inspiration and expiration rates, coupled with the inability to fully control whether a subject is properly holding their breath, the portion of expired alveolar gas sampled and the results obtained can have a high degree of variability [6, 8, 13]. This can also pose an issue in susceptible groups of the population, such as the elderly, children or those with respiratory diseases. Furthermore, because they were initially designed for smoking cessation programs, CO monitors are more accurate at lower CO concentrations and might therefore not be sufficiently accurate for acute intoxication [14]. Nevertheless, CO monitors are highly useful at mass-casualty sites and for first responders. They are portable and can provide an indication of the severity of the case, enabling both the appropriate treatment of the patient and proper precautions to be taken by first responders.

Measurement of CO in blood: optical techniques CO-oximetry and spectrophotometry

Analytical techniques

Spectrophotometric or optical methods measure the concentration of COHb based on the quantity of light absorbed when the compound is exposed to light of different wavelengths. Early methods involved single-beam UV or double-wavelength spectrophotometry and exploited the spectral absorbance of the Hb species, in particular the distinct spectral differences between oxyhemoglobin (O2Hb) and COHb [15,16,17]. A similar method measures differences in absorbance in the visible spectrum between reduced Hb (HHb) and COHb; a reducing agent that reduces O2Hb but not COHb is added to the blood sample [18, 19].

However, double-wavelength spectrophotometry was not a very accurate and specific method [16], because results were based on the measurement of only two wavelengths. Automated differential spectrophotometry was later developed, which uses double-laser beams to determine the difference in absorbance of a sample compared to a negative sample; thus with this method, matrix effects are accounted for, resulting in better accuracy.

CO-oximetry is a measurement technique based on multiple-wavelength spectrophotometry, which can use up to the full range of wavelengths for analysis, allowing for more accurate measurement of COHb [20,21,22]. CO-oximeters are currently the standard analytical instruments for measurement of COHb, used either as separate instruments or, for hospital cases, integrated into a BGA [18, 23, 24].

Despite the advantages of CO-oximetry, for the sake of cost-efficiency, UV and double-wavelength spectrophotometers are currently still used in many developing countries and are also listed in the International Organization for Standardization (ISO) standard 27368:2008, “Analysis of blood for asphyxiant toxicants—carbon monoxide and hydrogen cyanide” [25].

Sources of error

Several issues can alter the measurement results from optical methods, mainly due to the susceptibility of these methods to changes in sample quality as a result of poor sample handling techniques and storage conditions (e.g., temperature, preservative) and biochemical alterations that occur over time [26]. Some of the most important potential errors for COHb determination are as follows:

1. Type of preservative: the preservative in the blood tube used to store the sample can alter the results through biochemical reactions that either increase or decrease the concentration of CO [27, 28].

2. Storage temperature: the use of different storage temperatures was shown to alter the results; storage over prolonged periods of time can lead to degradation of the sample, which can lead to in vitro CO production, resulting in overestimation of the concentration; storage at room or elevated temperatures leads to faster degradation than storage in the fridge or freezer [26, 28, 29].

3. Dead volume: the amount of headspace (HS) volume in the sampling tube (known as dead volume) can alter the results because of the reversibility of the bond between CO and Hb; the more dead volume in the tube, the more likely the dissociation of CO from Hb and its release into the HS [30].

4. Freeze-and-thaw cycles: whether a sample has been frozen and then thawed one or more times can also alter the resulting measurement, due to the breakdown of the erythrocytes [28].

5. Reopening of the sampling tubes: repeated opening of the tube can lead to substance loss (in the gaseous state, when CO is not bound to Hb), which increases with the number and duration of reopenings, as well as to increased exposure of the sample to oxygen [23, 28].

6. Postmortem (PM) changes: thermocoagulation, putrefaction and PM CO production are all known sources of error, but they cannot be quantified due to their biologically unpredictable nature [27, 31, 32].

7. Instrument and personal error: errors due to the instrument or the operator are random, but they can be minimized by using an internal standard when possible [33].

These factors are applicable to both optical measurements of COHb and GC measurements of CO. Specifically for spectrophotometric methods, several of the factors listed in Fig. 1 have been investigated and are described in more detail as follows.

Studies performed earlier by Chace et al. [28] and later by Kunsman et al. [27] evaluated a number of storage conditions, including the amount of air present in the sampling tube (known as dead volume, which can alter the results because of the reversibility of the bond between CO and Hb and potential dissociation of the gas into the HS of the tube), storage temperatures, preservatives and initial COHb saturation levels. They observed that decreased COHb levels were related to the ratio of the exposed surface area to the volume of blood (the higher the exposed surface area, the greater the loss), the storage temperature (the higher the temperature, the greater the loss) and the initial COHb% saturation level (the higher the COHb level, the greater the loss). A hypothesis was proposed whereby the influence of the HS volume in the sampling tube was explained by the formation of an equilibrium between CO in the blood and the air above the blood sample in the tube [28]. Storage of blood at room temperature or higher leads to faster degradation and lower sample stability, affecting spectrophotometric measurement of CO, which was also confirmed by other research groups [26, 34]. Additionally, they found no effect from the preservative used; however, only two preservatives were tested, namely sodium fluoride (NaF) and ethylenediaminetetraacetic acid (EDTA), which were compared to samples with no preservative, and only on samples stored frozen immediately after sampling over a period of 2 years. Analysis of the samples at only two widely separated time points might fail to detect preservative-related changes during short-term storage, which is more relevant than long-term storage, since in the majority of cases samples are analyzed within a few hours to days. Nevertheless, these findings are especially relevant for forensic or legal cases, where retrospective analyses can still provide sufficiently reliable information. The apparent lack of impact from the preservative might, however, be biased because the measurements were performed with optical methods only, which are known to be influenced by the blood state. Therefore, smaller changes due to the preservatives might not have been detected by this less sensitive measurement method. However, Vreman et al. [35] were able to determine that the use of EDTA as preservative led to falsely increased COHb values when measured by CO-oximetry. Nevertheless, these findings would have been more significant with confirmation by another measurement method, such as GC.

Furthermore, these conditions may influence not only the CO levels present in the blood, but also the blood quality [28]. For samples that cannot be readily analyzed and are not stored under optimal conditions, degradation of the sample occurs, which was confirmed to hamper optical measurement methods used to determine COHb levels [36]. This can be a major issue for many laboratories where optical techniques are routinely used for sample analysis.

Additional factors influencing the measurement of COHb levels that have been reported in the literature include the presence and amount of oxygen in air [23] and, in PM samples, thermocoagulation in fire victims [34], putrefaction during a prolonged PM interval (PMI) [37], and contamination due to hemolysis, high lipid concentrations or thrombocytosis, all of which result in turbidity of the sample, hampering measurements performed with optical techniques. Another frequent and significant phenomenon to consider when evaluating the results is the PM production of CO in the organism [32, 38]. CO was found to be produced in significant quantities in cases that were not related to fire or CO exposure; however, most of these were cases of putrefied bodies. It was confirmed that CO is formed through decomposition processes in the body, such as erythrocyte catabolism, a phenomenon that also occurs in living organisms [32]. Therefore, it is important to differentiate those cases from real CO intoxication cases, which can be done with the help of the autopsy-determined cause of death, even though it is not always simple to completely exclude a role played by CO in these cases [23]. As a result, PM decomposition remains a field with open questions that require further investigation.

Antemortem COHb measurement by pulse CO-oximetry

Analytical techniques

In clinical settings and generally for living patients, a noninvasive alternative to venous or arterial blood COHb measurement by BGA or CO-oximetry that has been widely investigated is pulse CO-oximetry [39,40,41,42,43]. Similarly to standard CO-oximetry, pulse CO-oximetry is a spectrophotometric method that quantifies multiple types of hemoglobin, including COHb, based on the absorbance of light after exposure to different wavelengths [43]. As opposed to regular CO-oximeters, pulse CO-oximeters have the ability to measure COHb continuously and without the need for blood sampling, thus allowing the monitoring of COHb levels in real time and simultaneously with the administration of treatment.

Sources of error

Noninvasiveness and cost- and time-efficiency are some evident advantages of using pulse CO-oximeters. However, for CO poisoning diagnosis, there are more important factors from a medical perspective, such as accuracy, precision and reliability. The ability to diagnose a CO poisoning case quickly is necessary, but if the results obtained over- or underestimate the true COHb levels, this can have severe and potentially fatal consequences. Several studies have reported low precision and accuracy as well as elevated false-positive and false-negative rates in comparison with regular blood measurements [5, 39,40,41,42]. Especially for COHb levels above 10%, pulse CO-oximeters significantly underestimated the COHb levels [39].
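Accuracy, precision and false-positive or false-negative rates in such method comparisons are commonly summarized from paired readings: the mean difference (bias), the standard deviation of the differences, and counts of discordant classifications at a decision threshold. The following minimal sketch illustrates that arithmetic only. The function name, the paired values used with it and the 10% threshold are illustrative assumptions, not data or criteria taken from the cited studies.

```python
from statistics import mean, stdev


def compare_methods(pulse, blood, threshold=10.0):
    """Summarize agreement between paired COHb% readings.

    pulse     -- readings from the noninvasive device (hypothetical values)
    blood     -- reference readings from blood CO-oximetry (hypothetical values)
    threshold -- COHb% decision limit, assumed here for illustration only
    """
    if len(pulse) != len(blood) or len(pulse) < 2:
        raise ValueError("need at least two paired readings")

    diffs = [p - b for p, b in zip(pulse, blood)]
    bias = mean(diffs)        # accuracy: mean difference (device minus reference)
    precision = stdev(diffs)  # precision: sample SD of the differences

    # Discordant classifications relative to the reference method.
    false_pos = sum(p >= threshold and b < threshold for p, b in zip(pulse, blood))
    false_neg = sum(p < threshold and b >= threshold for p, b in zip(pulse, blood))

    return {
        "bias": bias,
        "precision": precision,
        "limits_of_agreement": (bias - 1.96 * precision, bias + 1.96 * precision),
        "false_positives": false_pos,
        "false_negatives": false_neg,
    }
```

A negative bias in this convention means that the device reads lower than the blood reference, which corresponds to the underestimation described above.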

Furthermore, factors such as blood pressure, oxygen saturation and body temperature also appear to affect the accuracy of pulse CO-oximeters [42]. Feiner et al. [40] reported that the pulse CO-oximeter always gave low signal quality errors and did not report COHb levels when oxygen saturation decreased below 85%, which is indicative of hypoxia. Considering that hypoxia is one of the main effects of CO poisoning, it is a severe disadvantage not to be able to accurately measure COHb in hypoxic states. However, a more recent study by Kulcke et al. [43] reported good accuracy in measuring COHb even during hypoxemia with an upgraded version of the pulse CO-oximeter, although slightly greater underestimation of COHb levels was reported for COHb concentrations above 10%. This suggests that pulse CO-oximeters can be useful for monitoring exposure to low CO levels, but accuracy and precision are not guaranteed for more severe poisoning or for smokers, whose baseline COHb levels generally range from 3 to 8% but can easily reach 10–15% in heavy smokers [1, 2].

In contrast to postmortem CO-oximetry, antemortem COHb measurement by pulse CO-oximetry is not affected by storage or sampling parameters, thus reducing the potential sources of error. Additionally, based on what is reported in the literature, no laborious and time-consuming calibration of the device seems to be needed, which simplifies routine analysis, although information regarding device maintenance is scarce. As with CO-oximetry in general, even where accuracy and precision are good, measurement of only the CO bound to Hb can lead to underestimation of the total CO burden and thus to misdiagnosis. Another relevant point from a legal perspective is that pulse CO-oximetry does not provide samples that can be used for confirmation or counter-expertise in legal disputes.

Measurement of CO in blood: gas chromatography

Analytical techniques

GC detection of CO is based on measuring the CO released from blood by a liberating agent after red cell lysis, which comprises both the CO dissolved in blood and the CO bound to Hb. The sample is therefore first treated with a hemolytic agent, such as saponin, Triton X-100 or other detergents, and subsequently acidified to liberate the CO [34, 44,45,46,47]. The reaction of COHb with a powerful acid/oxidizing agent was found to efficiently release CO and water as products. The releasing agents commonly used are sulfuric acid (H2SO4), hydrochloric acid (HCl) and potassium ferricyanide (K3Fe(CN)6). Other acids, including lactic acid [48], citric acid [48, 49] and phosphoric acid [49], have also been tested.

In earlier studies (1970s, 1980s and 1990s), potassium ferricyanide was introduced for the release of CO and became very popular because it was readily available, having already been used as a hemolytic agent in spectrophotometric methods. It was also found to liberate CO efficiently, and, in contrast to the acids tested, the extent of its reaction was not influenced by the presence of O2 or O2Hb over a wide pH range [30, 46, 48, 50, 51]. However, in more recent studies, sulfuric acid has been preferred, mostly because it is more readily available and cheaper than other acids of the same efficiency, and because it allows the simultaneous liberation of CO and production of 13CO from the formic acid-13C used as internal standard [4, 30, 31, 47, 49, 52,53,54]. After successful liberation, CO is separated by GC and then detected with one of the detectors described below.

For the GC separation, a capillary column with a 5 Å molecular sieve has been found to be specific for the separation of CO from other interfering gases such as nitrogen (N2), oxygen (O2) and methane (CH4) [51]. Various packed columns were used previously, but they have been replaced by capillary columns because of their significantly smaller size.

To enhance sensitivity and accuracy and increase the range of analysis, GC methods have been studied with various types of detection, such as thermal conductivity detection (TCD), flame ionization detection (FID), mass spectrometry (MS) and reduction gas analyzers (RGA) [55,56,57,58,59,60,61,62,63,64,65,66]. The most commonly used and investigated detector was FID, first reported in relation to CO determination in 1968 [51]. After GC separation, the CO was chemically reduced to methane (CH4) with a methanizer and subsequently analyzed via FID.

Sources of error

The most important sources of error for GC techniques are found in the process of calibration before analysis and the methods for correlating measured CO concentrations to COHb levels that have previously been linked to the symptomatology. Generally, calibration of the instrument is performed either with pure CO gas, which is diluted to obtain the desired CO concentrations, or with fortification of blood with CO to reach different COHb% saturation levels. Additionally, excess CO has been removed by performing a “flushing” step, in which the calibrators are flushed with a stream of inert gas (usually N2). This step enabled the removal of unbound CO from the sample, thus leaving only CO bound to Hb to be analyzed, but thereby deliberately neglecting the potential toxicity of free CO.

The first changes in the calibration method were made in 1993, when Cardeal et al. [49] took advantage of the reaction of formic acid with sulfuric acid to form CO for calibration. However, no details were given on how the analyzed blood was saturated with CO, nor was it explained how the formula was created for back-calculation of the measured CO concentration to a COHb level.

Czogala and Goniewicz [67] proposed a GC–FID-based method which directly correlated the CO levels in air to COHb in blood through back-calculation and extrapolated it to the other factors assessed (exposure time, smoking frequency, number of smoked cigarettes and ventilation conditions). The technique was designed to ensure complete release of CO from the blood samples by performing the reaction and subsequent analysis in an airtight reactor. Similarly, the air samples were directly transferred from the room to the analysis instrument, thus avoiding time delays and possible loss of CO, and allowing for direct correlation of the results to the other measurements. However, no details about the procedure for obtaining 100% CO-saturated blood used for calibration were described, which is necessary to assess whether the method is reliable and reproducible. Furthermore, the formula used to back-calculate the COHb saturation levels from the measured CO concentrations contained a Hüfner factor of 1.51, which differs from the factor reported by other studies [30, 46]. The Hüfner factor expresses the maximum amount of CO that can be bound to 1 g of Hb [68, 69]. A detailed list of additional pitfalls of GC methods is found in Table 1.
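The sensitivity of such a back-calculation to the chosen Hüfner factor can be illustrated with a generic form of the relationship, in which the CO-binding capacity of the blood is the Hüfner factor multiplied by the Hb concentration. The sketch below is an assumption-laden illustration and not the formula used in the cited studies: the function name, the units (mL of CO per 100 mL of blood, g of Hb per 100 mL) and the example values are hypothetical, and the default factor of 1.39 mL/g is one of the values commonly quoted in the literature.

```python
def cohb_percent(co_ml_per_dl, hb_g_per_dl, hufner=1.39):
    """Back-calculate COHb% saturation from a measured CO content.

    co_ml_per_dl -- measured CO, in mL per 100 mL of blood (hypothetical units)
    hb_g_per_dl  -- total hemoglobin, in g per 100 mL of blood
    hufner       -- assumed Hüfner factor, in mL of CO per g of Hb
    """
    if hb_g_per_dl <= 0 or hufner <= 0:
        raise ValueError("hemoglobin and Hüfner factor must be positive")
    capacity = hufner * hb_g_per_dl  # maximum CO that the Hb present can bind
    return 100.0 * co_ml_per_dl / capacity


# Same measurement, two different factors (illustrative values only).
with_1_39 = cohb_percent(4.17, 15.0, hufner=1.39)
with_1_51 = cohb_percent(4.17, 15.0, hufner=1.51)
print(f"{with_1_39:.1f}% vs {with_1_51:.1f}%")
```

Because the factor sits in the denominator, a larger Hüfner factor yields a lower back-calculated COHb% for the same measured CO, which is why the value chosen needs to be reported.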

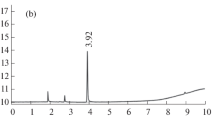

Measurement of CO in blood: GC–MS and HS–GC–MS

Analytical techniques

MS is the method of choice for detecting CO because identification is based on both the retention time and the mass spectrum. Middleberg et al. [31] developed a method which combined GC–MS with flame atomic absorption spectroscopy (FAAS). CO was determined by GC–MS after release with sulfuric acid and heating, while FAAS was used to determine the total iron content of the blood, which was used to calculate a more precise total amount of available Hb. It should be mentioned that with this assay, it was assumed that all the iron present in blood was part of the heme protein and was capable of binding to CO; however, this is not completely true, as it depends on the state of the organs, tissues and possible diseases present. Therefore, the obtained values may not accurately reflect the real CO levels.

Sources of error

Similar to other GC methods, the main errors in MS also derive from calibration of the methods, the subsequent back-calculation of COHb from CO, and extrapolation of already existing COHb% saturation–symptom correlation (Table 1).

Hao et al. [37] published an approach built on an HS–GC–MS method for analysis of CO in putrefied PM blood. The standard curve was constructed from putrefied blood, which was saturated by CO-bubbling to reach 100% COHb and then flushed to remove excess CO. COHb% levels were then calculated from the ratio of the CO measured in untreated blood to that measured in saturated blood. In PM cases, direct blood saturation was performed to prevent the variation in Hb levels from affecting the results. The authors reported that 30 min of pure CO exposure was necessary to fully saturate blood, although the procedures used to assess complete saturation, putrefied blood state and PMI were not described [37]. Furthermore, according to the results for the storage condition tests (possible loss of sealing parts of the HS vial, water bath temperature, stability, interval and temperature), the storage temperature did not affect COHb% levels. This appears to contradict findings in the majority of previously published studies, although those were obtained using other approaches, such as optical methods and GC with other detectors.
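The saturation-ratio calculation can be sketched as follows, assuming that the detector response is linear in the amount of CO and that the untreated and saturated aliquots have the same volume and come from the same blood sample. The function name and the peak-area inputs are hypothetical and are not taken from the cited method.

```python
def cohb_from_saturation(area_untreated, area_saturated):
    """Estimate COHb% as the CO signal of the untreated aliquot relative
    to the same blood after full CO saturation and flushing.

    Both arguments are CO peak areas (arbitrary units) measured on equal
    volumes of the same blood; a linear detector response is assumed.
    """
    if area_saturated <= 0:
        raise ValueError("saturated aliquot must give a positive CO signal")
    if area_untreated < 0:
        raise ValueError("peak area cannot be negative")
    return 100.0 * area_untreated / area_saturated
```

Because each sample serves as its own 100% reference, the Hb concentration cancels out of the ratio, but the result is only as reliable as the assumption that saturation was complete.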

Varlet et al. [52] developed and validated a new method which used isotopically labeled formic acid (H13COOH) to produce 13CO as internal standard for HS–GC–MS. This was very advantageous, because formic acid (HCOOH) was already used for the calibration, and sulfuric acid could be used to react with both types of formic acid, forming a mixture of CO and 13CO, from which the CO concentration could be derived mathematically and correlated to the COHb levels using previously published formulae [46, 49]. However, these formulae for back-calculating COHb from CO concentrations measured by GC are debatable, because the good correlation found between the spectrophotometrically measured COHb levels and the CO levels measured by GC–MS may have been coincidental [52]. Varlet et al. [36] later improved their method and compared its results with those obtained by CO-oximetry. They were able to obtain cutoff values for different categories of back-calculated COHb% levels as compared to those directly measured by the CO-oximeter. However, while this approach seems to show reliability for both clinical and forensic cases, only a limited number of cases was tested. Oliverio and Varlet [4, 70] further developed this approach by validating, for both clinical and PM settings, the measurement of the total amount of CO in blood (TBCO) by GC–MS, using an airtight gas syringe for sampling, which minimized any potential loss that could occur with a normal syringe or HS sampler. Application to PM samples showed relevant differences between the content of CO and COHb when applying formulae in the literature for back-calculation. Significant differences were also observed between flushed and non-flushed samples from a clinical cohort exposed to CO [70]. This demonstrates the presence of free CO and confirms the weaknesses of COHb for accurate CO poisoning determination, even though the number of subjects in the cohort was limited. Thus, TBCO should be measured as an alternative to COHb and to the spectrophotometric methods currently in routine use for the determination of CO.
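Quantification with an isotopically labeled internal standard generally relies on the ratio of the analyte signal to the internal-standard signal (for CO and 13CO, for example, the ions at m/z 28 and m/z 29), calibrated against standards of known amount. The sketch below shows this generic calculation with an ordinary least-squares line. It is not the validated procedure of the cited studies, and the function names, calibrator amounts, peak areas and units are hypothetical.

```python
def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need at least two calibration points")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("calibrator amounts must not all be equal")
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def quantify_co(area_co, area_13co, slope, intercept):
    """Convert a CO / 13CO peak-area ratio into a CO amount using the
    calibration line (area ratio as a function of CO amount)."""
    if area_13co <= 0 or slope == 0:
        raise ValueError("invalid internal-standard area or calibration slope")
    ratio = area_co / area_13co
    return (ratio - intercept) / slope


# Hypothetical calibrators: CO amounts (arbitrary units) and the area ratios
# measured with a fixed amount of 13CO internal standard in every vial.
amounts = [1.0, 2.0, 4.0, 8.0]
ratios = [0.5, 1.0, 2.0, 4.0]
slope, intercept = fit_line(amounts, ratios)
print(quantify_co(300.0, 200.0, slope, intercept))
```

Using the area ratio rather than the raw CO peak area compensates for losses and injection variability that affect CO and 13CO equally, which is the purpose of the internal standard.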

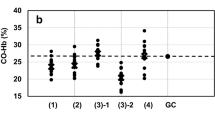

Interpretation of results and choice of biomarker

After analysis of the samples, an important and challenging aspect in CO determination is the interpretation of the results. There is no consensus on cutoff values for the different levels of exposure and severity of poisoning. According to the World Health Organization (WHO), COHb levels in blood of the healthy non-smoking population should not exceed 2.5–3%, while for smokers, levels above 10% are considered abnormal [11, 71,72,73]. Values of 30–35% COHb are the upper extreme reportedly found in clinical poisoning cases. Above this limit, irreversible damage to the organs is expected, thus initiating a cascade of events eventually leading to death.

However, these values are interpreted differently from case to case. Various parameters can affect COHb% levels perimortem and in the agonal period before death, including the presence of oxidative smoke or other gases that can interfere and/or compete with the CO absorption mechanism, such as nitrogen dioxide (NO2) (increased methemoglobin), and the formation of other toxic gases such as hydrogen cyanide (HCN) [74]. Pre-existing cardiovascular, hemolytic and respiratory diseases can also alter the mechanism and magnitude of CO absorption, with the potential to both decrease and increase the resulting COHb% levels [11, 23]. Therefore, each case must be analyzed and interpreted individually, based on all relevant information available. For example, a COHb level of 25% in a PM case may be considered a contributing factor to the cause of death, but should not be considered the sole cause of death. Similarly, in clinical cases, 15% COHb can be considered a poisoning case, but in heavy smokers, levels up to 18% have been found [72] in individuals who showed no symptoms of CO poisoning. Overall, there seem to be some significant discrepancies between COHb values and reported symptoms, which makes the correct diagnosis of CO poisoning in clinical cases and the determination of the cause of death in forensic cases challenging.

A possible explanation for these phenomena is that a diagnosis of CO poisoning based only on COHb% levels might actually underestimate the real CO burden. There may be an unknown amount of CO that on the one hand dissociates back from COHb, and on the other hand is dissolved in the blood without being bound to Hb, resulting in higher total CO content than that determined by CO-oximetry. The conventional assumption that the part of CO bound to Hb causes the most significant adverse health effects has been repeatedly debated [3, 4, 75,76,77,78]. Free CO in blood could constitute a toxic reservoir of CO for the organism and could also have major implications for the central nervous system (CNS) by the known binding to other globins such as myoglobin, neuroglobin and cytoglobin [79, 80]. The ratio of COHb to dissolved and dissociated CO is also probably subject to interpersonal variability, which includes factors such as metabolic rate and age [11], and needs to be taken into account when interpreting the results obtained by CO-oximetry.

Another issue is that GC assays, with the exception of those of Varlet et al. [36, 52] and Oliverio and Varlet [4, 70], include a “flushing” step in their sample preparation procedure. Excess CO that is not bound to Hb is flushed away with inert gas, so that only CO bound to Hb is determined. This procedure is performed under the assumption that only CO bound to Hb is relevant and responsible for the adverse effects of CO poisoning. However, this point has been widely debated, raising the possibility that additional CO found in the blood and not bound to Hb could have an effect on an intoxicated individual. Furthermore, in routine clinical COHb analysis, blood samples are not flushed, because flushing is usually considered inconsistent with the pathophysiology of CO poisoning. In general, the use of formulae to back-calculate COHb from GC-measured CO may be prone to additional errors and could lead to misestimation of the true amount of CO present in the blood of an individual.
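As a rough illustration of how back-calculation can introduce additional error, the following minimal sketch assumes a simple capacity-based formula in which COHb% is the measured CO content divided by the CO-binding capacity of the total Hb in the sample. The binding capacity of 1.39 mL CO per g Hb, the function name and the sample values are assumptions chosen for illustration; they are not taken from any of the assays cited here.

```python
# Illustrative sketch only: not the formula of any specific cited assay.
# Assumed theoretical CO-binding capacity of Hb (mL CO per g Hb).
ASSUMED_CAPACITY_ML_CO_PER_G_HB = 1.39


def cohb_percent(co_ml_per_100ml: float, hb_g_per_dl: float,
                 capacity: float = ASSUMED_CAPACITY_ML_CO_PER_G_HB) -> float:
    """Back-calculate COHb% from GC-measured CO content and total Hb."""
    if hb_g_per_dl <= 0:
        raise ValueError("total Hb must be positive")
    # CO content the sample would hold if all Hb were saturated with CO
    # (mL CO per 100 mL blood).
    co_capacity = capacity * hb_g_per_dl
    return 100.0 * co_ml_per_100ml / co_capacity


# Hypothetical sample: 4.0 mL CO per 100 mL blood, true total Hb 15.0 g/dL.
co = 4.0
nominal = cohb_percent(co, hb_g_per_dl=15.0)
# Same CO content, but total Hb underestimated by 10% (e.g. degraded sample).
low_hb = cohb_percent(co, hb_g_per_dl=13.5)

print(f"COHb with correct Hb:        {nominal:.1f}%")
print(f"COHb with underestimated Hb: {low_hb:.1f}%")
```

In this hypothetical case, a 10% underestimate of total Hb raises the back-calculated COHb from about 19% to about 21%, although the measured CO content is unchanged. Any error in the Hb determination or in the assumed binding capacity is carried directly into the reported COHb value.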

All these issues raise doubt as to whether the measurement of COHb is the most appropriate method for determination of CO poisoning. It seems plausible that a more accurate biomarker of CO poisoning may be found. Several alternative biomarkers have been proposed, including lactate [81,82,83], bilirubin [84], S100β [85] and troponin concentrations in blood. Some of these showed good positive correlations with COHb and were reported to be potentially helpful for diagnosing CO poisoning. However, none of these biomarkers is specific to CO poisoning; rather, they are indirect biomarkers of CO toxicity in the cardiovascular system, in the nervous system and at the cellular level, and changes in them can also be attributable to other diseases.

The development of an alternative biomarker specific to CO should be directed toward finding a novel measurement approach that not only focuses on the CO bound to Hb, but also takes into consideration the role and toxicity of CO at the cellular level, by measuring the total amount of CO present in the sample, such as TBCO. Mainly because spectrophotometric methods depend on good-quality samples, which are not always available, particularly in forensic cases, GC methods currently seem to be the most suitable techniques to be further explored. With regard to detectors, MS is the most versatile, accurate and user-friendly, and is nowadays routinely present in the majority of laboratories. The ability to determine the true CO exposure and to correlate this with the symptoms reported by patients would allow for more conclusive and comprehensive CO poisoning determination, reducing the number of misdiagnosed cases and falsely determined causes of death.

Conclusions

Although COHb is routinely measured by spectrophotometric methods, several issues concerning sample stability and the dependence of optical methods on sample quality have led to the search for alternative ways to measure CO, such as GC. In addition, there is evidence that a significant amount of CO present in blood is in free form. Free CO has major toxic effects at a cellular level, affecting not only the respiratory system but also, and especially, the CNS. However, it is not quantified with current methods, which focus only on COHb; hence the back-calculation of COHb from CO leads to misestimation. Therefore, an alternative approach that quantifies the total amount of CO in blood directly, such as the proposed TBCO measurement by GC–MS, should be used for determination of CO poisoning instead of CO in breath or COHb in blood. Although blood CO concentration cutoffs and their correlation with symptomatology are not yet available, and GC–MS is more time-consuming, we recommend that toxicologists use GC–MS methods to verify the results obtained by CO-oximetry or spectrophotometry, especially for doubtful or very challenging cases. This leads to results closer to the true CO burden, reducing the underestimation caused by COHb measurement and thus the risk and number of misdiagnoses. We further recommend that toxicologists document the sampling time, analysis time and storage conditions, especially when analysis is delayed after sampling and storage is required, as these factors can significantly influence the final interpretation.
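The documentation recommended above could be captured in a small structured record. The following is a minimal sketch under stated assumptions: the class name, field names and example values are hypothetical, and the particular storage conditions listed (temperature, preservative, headspace) are only examples of what a laboratory might choose to record.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class SampleRecord:
    """Hypothetical record of pre-analytical details for one blood sample."""
    sample_id: str
    sampled_at: datetime       # time the blood was collected
    analyzed_at: datetime      # time the CO/COHb analysis was performed
    storage_temp_c: float      # storage temperature in degrees Celsius
    preservative: str          # anticoagulant or preservative in the tube
    headspace_present: bool    # whether air was left above the blood

    def __post_init__(self) -> None:
        # Reject records whose timestamps are inconsistent.
        if self.analyzed_at < self.sampled_at:
            raise ValueError("analysis time precedes sampling time")

    @property
    def storage_delay(self) -> timedelta:
        """Elapsed time between sampling and analysis."""
        return self.analyzed_at - self.sampled_at


# Hypothetical example values, for illustration only.
record = SampleRecord(
    sample_id="EXAMPLE-001",
    sampled_at=datetime(2019, 1, 7, 9, 30),
    analyzed_at=datetime(2019, 1, 10, 14, 0),
    storage_temp_c=4.0,
    preservative="EDTA",
    headspace_present=False,
)
print(record.storage_delay)
```

Keeping the sampling and analysis times as timestamps, rather than as a single free-text note, lets the storage delay be derived consistently and reported alongside the measured value.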

References

Bleecker ML (2015) Carbon monoxide intoxication. Handb Clin Neurol 131:191–203. https://doi.org/10.1016/B978-0-444-62627-1.00024-X

Raub JA, Mathieu-Nolf M, Hampson NB, Thom SR (2000) Carbon monoxide poisoning: a public health perspective. Toxicology 145:1–14. https://doi.org/10.1016/S0300-483X(99)00217-6

Ouahmane Y, Mounach J, Satte A, Bourazza A, Soulaymani A, Elomari N (2018) Severe poisoning with carbon monoxide (CO) with neurological impairment, study of 19 cases. Toxicol Anal Clin 30:50–60. https://doi.org/10.1016/j.toxac.2017.10.003

Oliverio S, Varlet V (2018) Carbon monoxide analysis method in human blood by airtight gas syringe-gas chromatography-mass spectrometry (AGS-GC-MS): relevance for postmortem poisoning diagnosis. J Chromatogr B 1090:81–89. https://doi.org/10.1016/j.jchromb.2018.05.019

Piatkowski A, Ulrich D, Grieb G, Pallua N (2009) A new tool for the early diagnosis of carbon monoxide intoxication. Inhal Toxicol 21:1144–1147. https://doi.org/10.3109/08958370902839754

Ogilvie CM, Forster RE, Blakemore WS, Morton JW (1957) A standardized breath holding technique for the clinical measurement of the diffusing capacity of the lung for carbon monoxide. J Clin Invest 36:1–17. https://doi.org/10.1172/JCI103402

Jarvis MJ, Russell M, Salojee Y (1980) Expired air carbon monoxide: a simple breath test of tobacco smoke intake. Br Med J 281:484–485. https://doi.org/10.1136/bmj.281.6238.484

Jarvis MJ, Belcher M, Vesey C, Hutchison DCS (1986) Low cost carbon monoxide monitors in smoking assessment. Thorax 41:886–887. https://doi.org/10.1136/thx.41.11.886

Middleton ET, Morice AH (2000) Breath carbon monoxide as an indication of smoking habit. Chest 117:758–763. https://doi.org/10.1378/chest.117.3.758

MacIntyre N, Crapo RO, Viegi G, Johnson DC, van der Grinten CPM, Brusasco V, Burgos F, Casaburi R, Coates A, Enright P, Gustafsson P, Hankinson J, Jensen R, McKay R, Miller MR, Navajas D, Pedersen OF, Pellegrino R, Wanger J (2005) Standardisation of the single-breath determination of carbon monoxide uptake in the lung. Eur Respir J 26:720–735. https://doi.org/10.1183/09031936.05.00034905

Penney DG (2007) Carbon monoxide poisoning, 1st edn. CRC Press, Boca Raton

Yan H, Liu CC (1994) A solid polymer electrolyte-based electrochemical carbon monoxide sensor. Sens Actuators B Chem 17:165–168. https://doi.org/10.1016/0925-4005(94)87045-4

Stewart RD, Stewart RS, Stamm W, Seelen RP (1976) Rapid estimation of carbon monoxide level in fire fighters. J Am Med Assoc 235:390–392. https://doi.org/10.1001/jama.1976.03260300016021

Vreman HJ, Stevenson DK, Oh W, Fanaroff AA, Wright LL, Lemons JA, Wright E, Shankaran S, Tyson JE, Korones SB (1994) Semiportable electrochemical instrument for determining carbon monoxide in breath. Clin Chem 40:1927–1933 (PMID: 7923774)

Wigfield DC, Hollebone BR, MacKeen JE, Selwin JC (1981) Assessment of the methods available for the determination of carbon monoxide in blood. J Anal Toxicol 5:122–125. https://doi.org/10.1093/jat/5.3.122

Ramieri A Jr, Jatlow P, Seligson D (1974) New method for rapid determination of carboxyhemoglobin by use of double-wavelength spectrophotometry. Clin Chem 20:278–281 (PMID: 4813007)

Winek CL, Prex DM (1981) A comparative study of analytical methods to determine postmortem changes in carbon monoxide concentration. Forensic Sci Int 18:181–187. https://doi.org/10.1016/0379-0738(81)90158-4

Boumba VA, Vougiouklakis T (2005) Evaluation of the methods used for carboxyhemoglobin analysis in postmortem blood. Int J Toxicol 24:275–281. https://doi.org/10.1080/10915810591007256

Fukui Y, Matsubara M, Takahashi S, Matsubara K (1984) A study of derivative spectrophotometry for the determination of carboxyhemoglobin in blood. J Anal Toxicol 8:277–281. https://doi.org/10.1093/jat/8.6.277

Fujihara J, Kinoshita H, Tanaka N, Yasuda T, Takeshita H (2013) Accuracy and usefulness of the AVOXimeter 4000 as routine analysis of carboxyhemoglobin. J Forensic Sci 58:1047–1049. https://doi.org/10.1111/1556-4029.12144

Bailey SR, Russell EL, Martinez A (1997) Evaluation of the avoximeter: precision, long-term stability, linearity, and use without heparin. J Clin Monit 13:191–198. https://doi.org/10.1023/A:1007308616686

Olson KN, Hillyer MA, Kloss JS, Geiselhart RJ, Apple FS (2010) Accident or arson: is CO-oximetry reliable for carboxyhemoglobin measurement postmortem? Clin Chem 56:515–519. https://doi.org/10.1373/clinchem.2009.131334

Widdop B (2002) Analysis of carbon monoxide. Ann Clin Biochem 39:122–125. https://doi.org/10.1258/000456302760042146

Mahoney JJ, Vreman HJ, Stevenson DK, Van Kessel AL (1993) Measurement of carboxyhemoglobin and total hemoglobin by five specialized spectrophotometers (CO-oximeters) in comparison with reference methods. Clin Chem 39:1693–1700 (PMID: 8353959)

International Organization for Standardization (2008) Analysis of blood for asphyxiant toxicants—carbon monoxide and hydrogen cyanide. ISO 27368:2008. https://www.iso.org/standard/44127.html. Accessed 30 May 2019

Hampson NB (2008) Stability of carboxyhemoglobin in stored and mailed blood samples. Am J Emerg Med. https://doi.org/10.1016/j.ajem.2007.04.028

Kunsman GW, Presses CL, Rodriguez P (2000) Carbon monoxide stability in stored postmortem blood samples. J Anal Toxicol 24:572–578. https://doi.org/10.1093/jat/24.7.572

Chace DH, Goldbaum LR, Lappas NT (1986) Factors affecting the loss of carbon monoxide from stored blood samples. J Anal Toxicol 10:181–189. https://doi.org/10.1093/jat/10.5.181

Ocak A, Valentour JC, Blanke RV (1985) The effects of storage conditions on the stability of carbon monoxide in postmortem blood. J Anal Toxicol 9:202–206. https://doi.org/10.1093/jat/9.5.202

Vreman HJ, Wong RJ, Stevenson DK, Smialek JE, Fowler DR, Li L, Vigorito RD, Zielke HR (2006) Concentration of carbon monoxide (CO) in postmortem human tissues: effect of environmental CO exposure. J Forensic Sci 51:1182–1190. https://doi.org/10.1111/j.1556-4029.2006.00212.x

Middleberg RA, Easterling DE, Zelonis SF, Rieders F, Rieders MF (1993) Estimation of perimortal percent carboxy-heme in nonstandard postmortem specimens using analysis of carbon monoxide by GC/MS and iron by flame atomic absorption spectrophotometry. J Anal Toxicol 17:11–13. https://doi.org/10.1093/jat/17.1.11

Wu L, Wang R (2005) Carbon monoxide: endogenous production, physiological functions, and pharmacological applications. Pharmacol Rev 57:585–630. https://doi.org/10.1124/pr.57.4.3

Tworoger SS, Hankinson SE (2006) Use of biomarkers in epidemiologic studies: minimizing the influence of measurement error in the study design and analysis. Cancer Causes Control 17:889–899. https://doi.org/10.1007/s10552-006-0035-5

Seto Y, Kataoka M, Tsuge K (2001) Stability of blood carbon monoxide and hemoglobins during heating. Forensic Sci Int 121:144–150. https://doi.org/10.1016/S0379-0738(01)00465-0

Vreman HJ, Stevenson DK (1994) Carboxyhemoglobin determined in neonatal blood with a CO-oximeter unaffected by fetal oxyhemoglobin. Clin Chem 40:1522–1527 (PMID: 7519133)

Varlet V, De Croutte EL, Augsburger M, Mangin P (2013) A new approach for the carbon monoxide (CO) exposure diagnosis: measurement of total CO in human blood versus carboxyhemoglobin (HbCO). J Forensic Sci 58:1041–1046. https://doi.org/10.1111/1556-4029.12130

Hao H, Zhou H, Liu X, Zhang Z, Yu Z (2013) An accurate method for microanalysis of carbon monoxide in putrid postmortem blood by head-space gas chromatography–mass spectrometry (HS/GC/MS). Forensic Sci Int 229:116–121. https://doi.org/10.1016/j.forsciint.2013.03.052

Kojima T, Nishiyama Y, Yashiki M, Une I (1982) Postmortem formation of carbon monoxide. Forensic Sci Int 19:243–248. https://doi.org/10.1016/0379-0738(82)90085-8

Zaouter C, Zavorsky GS (2012) The measurement of carboxyhemoglobin and methemoglobin using a non-invasive pulse CO-oximeter. Respir Physiol Neurobiol 182:88–92. https://doi.org/10.1016/j.resp.2012.05.010

Feiner JR, Rollins MD, Sall JW, Eilers H, Au P, Bickler PE (2013) Accuracy of carboxyhemoglobin detection by pulse CO-oximetry during hypoxemia. Anesth Analg 117:847–858. https://doi.org/10.1213/ANE.0b013e31828610a0

Weaver LK, Churchill SK, Deru K, Cooney D (2013) False positive rate of carbon monoxide saturation by pulse oximetry of emergency department patients. Respir Care 58:232–240. https://doi.org/10.4187/respcare.01744

Wilcox SR, Richards JB (2013) Noninvasive carbon monoxide detection: insufficient evidence for broad clinical use. Respir Care 58:376–379. https://doi.org/10.4187/respcare.02288

Kulcke A, Feiner J, Menn I, Holmer A, Hayoz J, Bickler P (2016) The accuracy of pulse spectroscopy for detecting hypoxemia and coexisting methemoglobin or carboxyhemoglobin. Anesth Analg 122:1856–1865. https://doi.org/10.1213/ANE.0000000000001219

Coburn RF, Danielson GK, Blakemore WS, Forster RE 2nd (1964) Carbon monoxide in blood: analytical method and sources of error. J Appl Physiol 19:510–515. https://doi.org/10.1152/jappl.1964.19.3.510

Ayres SM, Criscitiello A, Giannelli S Jr (1966) Determination of blood carbon monoxide content by gas chromatography. J Appl Physiol 21:1368–1370. https://doi.org/10.1152/jappl.1966.21.4.1368

Vreman HJ, Kwong LK, Stevenson DK (1984) Carbon monoxide in blood: an improved microliter blood-sample collection system, with rapid analysis by gas chromatography. Clin Chem 30:1382–1386 (PMID: 6744592)

Walch SG, Lachenmeier DW, Sohnius E-M, Madea B, Musshoff F (2010) Rapid determination of carboxyhemoglobin in postmortem blood using fully-automated headspace gas chromatography with methaniser and FID. Open Toxicol J 4:21–25. https://doi.org/10.2174/1874340401004010021

Rodkey FL, Collison HA (1970) An artifact in the analysis of oxygenated blood for its low carbon monoxide content. Clin Chem 16:896–899 (PMID: 5473549)

Cardeal ZL, Pradeau D, Hamon M, Abdoulaye I, Pailler FM, Lejeune B (1993) New calibration method for gas chromatographic assay of carbon monoxide in blood. J Anal Toxicol 17:193–195. https://doi.org/10.1093/jat/17.4.193

Oritani S, Zhu B-L, Ishida K, Shimotouge K, Quan L, Fujita MQ, Maeda H (2000) Automated determination of carboxyhemoglobin contents in autopsy materials using head-space gas chromatography/mass spectrometry. Forensic Sci Int 113:375–379. https://doi.org/10.1016/S0379-0738(00)00227-9

Collison HA, Rodkey FL, O’Neal JD (1968) Determination of carbon monoxide in blood by gas chromatography. Clin Chem 14:162–171

Varlet V, De Croutte EL, Augsburger M, Mangin P (2012) Accuracy profile validation of a new method for carbon monoxide measurement in the human blood using headspace–gas chromatography–mass spectrometry (HS–GC–MS). J Chromatogr B 880:125–131. https://doi.org/10.1016/j.jchromb.2011.11.028

Sundin A-M, Larsson JE (2001) Rapid and sensitive method for the analysis of carbon monoxide in blood using gas chromatography with flame ionisation detection. J Chromatogr B 766:115–121. https://doi.org/10.1016/S0378-4347(01)00460-1

Anderson CR, Wu W-H (2005) Analysis of carbon monoxide in commercially treated tuna (Thunnus spp.) and mahi-mahi (Coryphaena hippurus) by gas chromatography/mass spectrometry. J Agric Food Chem 53:7019–7023. https://doi.org/10.1021/jf0514266

Lewis RJ, Johnson RD, Canfield DV (2004) An accurate method for the determination of carboxyhemoglobin in postmortem blood using GC/TCD. J Anal Toxicol 28:59–62. https://doi.org/10.1093/jat/28.1.59

Luchini PD, Leyton JF, Strombech MdLC, Ponce JC, Jesus MdGS, Leyton V (2009) Validation of a spectrophotometric method for quantification of carboxyhemoglobin. J Anal Toxicol 33:540–544. https://doi.org/10.1093/jat/33.8.540

Dubowski KM, Lu JL (1973) Measurement of carboxyhemoglobin and carbon monoxide in blood. Ann Clin Lab Sci 3:53–65 (PMID: 4691500)

Costantino AG, Park J, Caplan YH (1986) Carbon monoxide analysis: a comparison of two CO-oximeters and headspace gas chromatography. J Anal Toxicol 10:190–193. https://doi.org/10.1093/jat/10.5.190

Levine B, Green D, Saki S, Symons A, Smialek JE (1997) Evaluation of the IL-682 CO-oximeter; comparison to the IL-482 CO-oximeter and gas chromatography. J Can Soc Forensic Sci 30:75–78. https://doi.org/10.1080/00085030.1997.10757089

Lee C-W, Yim L-K, Chan DTW, Tam JCN (2002) Sample pre-treatment for CO-oximetric determination of carboxyhaemoglobin in putrefied blood and cavity fluid. Forensic Sci Int 126:162–166. https://doi.org/10.1016/S0379-0738(02)00052-X

Lee C-W, Tam JCN, Kung L-K, Yim L-K (2003) Validity of CO-oximetric determination of carboxyhaemoglobin in putrefying blood and body cavity fluid. Forensic Sci Int 132:153–156. https://doi.org/10.1016/S0379-0738(03)00011-2

Brehmer C, Iten PX (2003) Rapid determination of carboxyhemoglobin in blood by oximeter. Forensic Sci Int 133:179–181. https://doi.org/10.1016/S0379-0738(03)00066-5

Marks GS, Vreman HJ, McLaughlin BE, Brien JF, Nakatsu K (2002) Measurement of endogenous carbon monoxide formation in biological systems. Antioxid Redox Signal 4:271–277. https://doi.org/10.1089/152308602753666325

Van Dam J, Daenens P (1994) Microanalysis of carbon monoxide in blood by head-space capillary gas chromatography. J Forensic Sci 39:473–478 (PMID: 8195758)

Oritani S, Nagai K, Zhu B-L, Maeda H (1996) Estimation of carboxyhemoglobin concentrations in thermo-coagulated blood on a CO-oximeter system: an experimental study. Forensic Sci Int 83:211–218. https://doi.org/10.1016/S0379-0738(96)02039-7

Guillot JG, Weber JP, Savoie JY (1981) Quantitative determination of carbon monoxide in blood by head-space gas chromatography. J Anal Toxicol 5:264–266. https://doi.org/10.1093/jat/5.6.264

Czogala J, Goniewicz ML (2005) The complex analytical method for assessment of passive smokers’ exposure to carbon monoxide. J Anal Toxicol 29:830–834. https://doi.org/10.1093/jat/29.8.830

Vaupel P, Zander R, Bruley DF (eds) (1994) Oxygen transport to tissue XV. Springer Science and Business Media, New York

Penney DG (ed) (2000) Carbon monoxide toxicity, 1st edn. CRC Press, Boca Raton

Oliverio S, Varlet V (2019) Total blood carbon monoxide: alternative to carboxyhemoglobin as biological marker for carbon monoxide poisoning determination. J Anal Toxicol 43:79–87. https://doi.org/10.1093/jat/bky084

Pojer R, Whitfield JB, Poulos V, Eckhard IF, Richmond R, Hensley WJ (1984) Carboxyhemoglobin, cotinine, and thiocyanate assay compared for distinguishing smokers from non-smokers. Clin Chem 30:1377–1380 (PMID: 6744590)

Wald NJ, Idle M, Boreham J, Bailey A (1981) Carbon monoxide in breath in relation to smoking and carboxyhaemoglobin levels. Thorax 36:366–369. https://doi.org/10.1136/thx.36.5.366

Turner A, McNicol MW, Sillet RW (1986) Distribution of carboxyhaemoglobin concentrations in smokers and non-smokers. Thorax 41:25–27. https://doi.org/10.1136/thx.41.1.25

Einhorn IN (1975) Physiological and toxicological aspects of smoke produced during the combustion of polymeric materials. Environ Health Perspect 11:163–189. https://doi.org/10.1289/ehp.7511163

Roderique JD, Josef CS, Feldman MJ, Spiess BD (2015) A modern literature review of carbon monoxide poisoning theories, therapies, and potential targets for therapy advancement. Toxicology 334:45–58. https://doi.org/10.1016/j.tox.2015.05.004

Hampson NB, Hauff NM (2008) Carboxyhemoglobin levels in carbon monoxide poisoning: do they correlate with the clinical picture? Am J Emerg Med 26:665–669. https://doi.org/10.1016/j.ajem.2007.10.005

Hampson NB (2016) Myth busting in carbon monoxide poisoning. Am J Emerg Med 34:295–297. https://doi.org/10.1016/j.ajem.2015.10.051

Weaver LK, Hopkins RO, Churchill SK, Deru K (2008) Neurological outcomes 6 years after acute carbon monoxide poisoning. In: Abstracts of the Undersea and Hyperbaric Medical Society 2008 Annual Scientific Meeting, Salt Lake City. http://archive.rubicon-foundation.org/7823. Accessed 30 May 2019

Hankeln T, Ebner B, Fuchs C, Gerlach F, Haberkamp M, Laufs TL, Roesner A, Schmidt M, Weich B, Wystub S, Saaler-Reinhardt S, Reuss S, Bolognesi M, De Sanctis D, Marden MC, Kiger L, Moens L, Dewilde S, Nevo E, Avivi A, Weber RE, Fago A, Burmester T (2005) Neuroglobin and cytoglobin in search of their role in the vertebrate globin family. J Inorg Biochem 99:110–119. https://doi.org/10.1016/j.jinorgbio.2004.11.009

Raub JA, Benignus VA (2002) Carbon monoxide and the nervous system. Neurosci Biobehav Rev 26:925–940. https://doi.org/10.1016/S0149-7634(03)00002-2

Sokal JA, Kralkowska E (1985) The relationship between exposure duration, carboxyhemoglobin, blood glucose, pyruvate and lactate and the severity of intoxication in 39 cases of acute carbon monoxide poisoning in man. Arch Toxicol. https://doi.org/10.1007/BF00290887

Moon JM, Shin MH, Chun BJ (2011) The value of initial lactate in patients with carbon monoxide intoxication: in the emergency department. Hum Exp Toxicol 30:836–843. https://doi.org/10.1177/0960327110384527

Cervellin G, Comelli I, Rastelli G, Picanza A, Lippi G (2014) Initial blood lactate correlates with carboxyhemoglobin and clinical severity in carbon monoxide poisoned patients. Clin Biochem 47:298–301. https://doi.org/10.1016/j.clinbiochem.2014.09.016

Cervellin G, Comelli I, Buonocore R, Picanza A, Rastelli G, Lippi G (2015) Serum bilirubin value predicts hospital admission in carbon monoxide-poisoned patients. Active player or simple bystander? Clinics (Sao Paulo) 70:628–631. https://doi.org/10.6061/clinics/2015(09)06

Cakir Z, Aslan S, Umudum Z, Acemoglu H, Akoz A, Turkyilmaz S, Öztürk N (2010) S-100β and neuron-specific enolase levels in carbon monoxide-related brain injury. Am J Emerg Med 28:61–67. https://doi.org/10.1016/j.ajem.2008.10.032

Acknowledgements

The authors are grateful to Dr Ariana Zeka from Brunel University London for providing assistance in reviewing the manuscript and Dr Giovanni Leonardi from Public Health England for fruitful discussion on the topic as part of a collaborative project on carbon monoxide measurement error. This research received funding from the Gas Safety Trust, a UK-based grant-giving charity, grant number 2015-GST-01.

Ethics declarations

Conflict of interest

There are no financial or other relations that could lead to a conflict of interest.

Ethical approval

No ethical approval was required for the preparation of this review article.

Cite this article

Oliverio, S., Varlet, V. What are the limitations of methods to measure carbon monoxide in biological samples?. Forensic Toxicol 38, 1–14 (2020). https://doi.org/10.1007/s11419-019-00490-1

DOI: https://doi.org/10.1007/s11419-019-00490-1