Abstract

Artificial neural network (ANN) mathematical models, such as the radial basis function neural network (RBFNN), have been used successfully in different environmental engineering applications to provide a reasonable match between the measured and predicted concentrations of certain important parameters. In the current study, two RBFNNs (one conventional and one based on particle swarm optimization (PSO)) are employed to accurately predict the removal of chemical oxygen demand (COD) from polluted water streams using submerged biofilter media (plastic and gravel) under the influence of different variables such as temperature (18.00–28.50 °C), flow rate (272.16–768.96 m3/day), and influent COD (55.50–148.90 ppm). The results of the experimental study showed that the COD removal ratio had the highest value (65%) when two plastic biofilter media were used at the minimum flow rate (272.16 m3/day). The mathematical model results showed that the closeness between the measured and obtained COD removal ratios using the RBFNN indicates that the neural network model is valid and accurate. Additionally, the proposed RBFNN trained with the PSO method helped to reduce the difference between the measured and network outputs, leading to a very small relative error compared with that using the conventional RBFNN. The deviation error between the measured value and the output of the conventional RBFNN varied between + 0.20 and − 0.31. However, using PSO, the deviation error varied between + 0.058 and − 0.070. Consequently, the performance of the proposed PSO model is better than that of the conventional RBFNN model, and it is able to reduce the number of iterations and reach the optimum solution in a shorter time. Thus, the proposed PSO model performed well in predicting the removal ratio of COD to improve the drain water quality. Improving drain water quality could help in reducing the contamination of groundwater which could help in protecting water resources in countries suffering from water scarcity such as Egypt.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Currently, water scarcity is one of the most serious problems facing Egypt. The limited availability of supply resources is the main challenge facing the water resource system in Egypt, where the total water supply is 66 Billion Cubic Meters (BCM) while the total current water demand for different sectors is 79.5 BCM/year (MWRI 2014). Thus, the gap between the demand and availability of water is approximately 13.5 BCM/year. This gap could be decreased by rationing consumption and searching for alternative sources of water, such as the reuse and recycling of drainage water and wastewater (Mohie El Din and Moussa 2016).

In Egypt, the Nile River represents the main source of freshwater, with a share of more than 95%, while agriculture is the main consumer of fresh water resources; agriculture and irrigation in Egypt are virtually fully dependent on Nile water (80% of the irrigation requirements) (Osman et al. 2016). On the other hand, the increase in the Egyptian population and changes in lifestyle in recent decades represent the main causes of the rise in water consumption for drinking and health uses (Elbana et al. 2017). Furthermore, developments in Sudan, Ethiopia, and other riparian countries may reduce the availability of water for Egypt (Abdel-Dayem 2011; Abdel-Gawad 2008; MWRI 2015).

On the other hand, the extensive use of chemical fertilizer and sand drainage contributing to surface and groundwater sources along the Nile, in addition to the presence of nitrogen and phosphorous salts, puts drinking water at risk (Asempa 2010). Furthermore, the heavy implementation of pesticides poses serious environmental risks, and some pesticide residues have been found in canals and drains (Di Baldassarre et al. 2011). Moreover, the discharge of poorly treated wastewater and uncontrolled mixing of water from polluted drains deteriorates the water quality (Gad 2017). Abd-Elhamid et al. (2019) studied the effect of polluted drains on groundwater resources and how to protect it using different lining materials. Therefore, high levels of several pollutants have been found in drains, including fecal bacteria, heavy metals, and pesticides (Gad 2017).

A submerged biofilter, usually made of gravel or plastic media, used to improve the water quality of polluted water streams was recommended by several investigators (Abdel daiem et al. 2019; Abd-Elhamid et al. 2017; El-Shatoury et al. 2014; El Monayeri et al. 2007a, 2007b; Galal et al. 2018; Loutfy 2010). In addition, artificial neural networks (ANNs) are a mathematical model that can find missing values, support multiple inputs and model nonlinear/complex relationships. Moreover, they have the ability to recognize patterns in input/output datasets and find the nonlinear relations between the input and output variables (Kumar 2004).

Many models of ANNs have been successfully used in environmental engineering applications, such as water and wastewater treatments and water resources (Akratos et al. 2009; Çinar et al. 2006; Çoruh et al. 2014; Ezzeldin and Hatata 2018). Akratos et al. (2009) applied the ANN backpropagation algorithm to derive the design equation for total nitrogen removal in constructed wetlands (CWs). Moreover, it was used to compare the performance of surface and subsurface flow CWs. Furthermore, Naz et al. (2009) proposed an ANN model to provide a reasonable match between the measured and predicted concentrations of pollutants, such as the biological oxygen demand (BOD) and chemical oxygen demand (COD). Yalcuk (2012) modeled phenol removal in horizontal and vertical planned and unplanned CWs using an ANN. Tufaner et al. (2017) used an ANN to estimate the biogas production from laboratory-scale (UASB) reactors treating cattle manure with codigestion of different organic wastes. The results indicated that the proposed ANN was able to effectively predict biogas production. Tufaner and Demirci (2020) used four machine learning techniques, an ANN, linear regression (LR), a support vector machine (SVM), and a decision tree (DT), to determine the Palmer drought Z index values from meteorological data. The best R value and minimum mean-square error (MSE) were obtained using the ANN technique. Three-layer feed forward ANNs were introduced to estimate the biogas production performance of an anaerobic hybrid reactor (AHR) (Tufaner and Özbeyaz 2020). The results indicated that the proposed ANN model has better performance in predicting the biogas production rate of an AHR.

The publications related to the application of the ANN model to accurately predict different parameters to improve drain water quality are limited. To increase the knowledge in this field, an ANN (RBFNN) will be applied in this study for the accurate prediction of COD removal from polluted water streams under the influence of different variables. The COD removal data are collected according to a field experimental model using submerged biofilter media to improve drain water quality. The proposed RBFNN is trained using two methods: the conventional method (trial and error (TE)) and the optimization method (the particle swarm optimization (PSO) algorithm). The results of the two neural networks are obtained and then compared using different indices.

Generating the training data

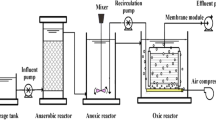

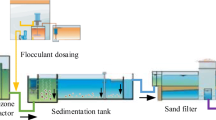

The experimental model used in this study was constructed for Bilbeas drain, Egypt, as shown in Fig. 1 and detailed in previous work (El-Gohary 2007). The drain water contains a mixture of treated and untreated wastewater (domestic, industrial, and agricultural). Three different biofilter media (star-shaped, pall ring, and gravel) were used in the model to improve the drain water quality; they had cross-sections of (0.37 m × 0.20 m) and different lengths (10, 20, and 26 m, respectively). The plastic media was supplied from the Egyptian Packaging and Plastic System (Makka), Cairo, Egypt. The characteristics of these media are presented in Table 1.

The drain water quality was evaluated in terms of the COD as an important indicator of pollution. The influent COD (Li), flow rate (Q), and temperature (T) were investigated using the three different media biofilters with different volumes (V) and specific surface areas (S), but the three biofilters had the same total surface area of 130 m2. These different types were mutually compared with a reference channel (without any media) to obtain the optimum conditions used in this study. In the present study, four experimental channels with dimensions of 40 m length × 0.38 m width × 0.75 m height were used, as shown in Fig. 1. Table 2 shows all the variables studied during the experiment and the conditions over 78 runs. Statistical analysis of the data was performed using Statistica software release 7 and is presented in Table 3. The T values ranged between 18.00 and 28.50 °C because the experiments were performed in the summer and winter; moreover, the Q values varied between 272.16 and 148.96 m3/day for each medium to investigate its effect on the COD removal ratio. The Li concentration measurements ranged from 55.50 to 148.90 ppm with a mean value of 105.61 ppm.

Radial basis function neural network model

The RBFNN is an FFNN type where the network is seen as a curve-fitting problem in a high-dimensional space. It consists of three layers: the input layer, hidden layer, and output layer (Kumar 2004). An RBF is used as the activation function of the hidden layer. Generally, the Gaussian function is used in the hidden layer. The RBF can be learned using supervised and unsupervised methods in the training process (Jia et al. 2014).

The RBF is represented by a width and a center position parameter. When the width and center are obtained, the input vectors are assigned to the hidden space. The neurons in the input layer represent the input pattern of the network, while the hidden layer in the network performs a nonlinear transformation from the input variable space to the hidden space. The output layer processes the output of the neurons from the hidden layer and calculates the final network output (Kasiviswanathan and Agarwal 2012; Mahanta et al. 2016a, b). Figure 2 illustrates the proposed RBFNN scheme. It has five neurons in the input layer (temperature (T), flow rate (Q), influent concentration of COD to the biofilter (Li), volume of the media used (V), and specific surface area of the media used (S)), one neuron in the output layer (COD removal ratio, RR), and k neurons in the hidden layer.

The outputs of the hidden layer are based on the distance between the input vector and the center of each RBF. The output of the network can be defined as in Kasiviswanathan and Agarwal (2012):

where Zl is the lth output of the neural network, k is the number of hidden neurons, \( \overline{X} \) is the input vector of the neural network, γm is the center of the mth radial basis function, and ∅m is the radial basis function for the mth hidden neuron. The hidden neurons use the Gaussian function as an activation function (Dash et al. 2016). However, the output neurons implement a linear summation function.

where σm is the width of the mth radial basis function and \( \left\Vert \overrightarrow{X}-{\overrightarrow{\gamma}}_m\right\Vert \) represents the norm. Thus, Equation (1) can be expressed by the following equation (Dash et al. 2016):

The output of the hidden layer is weighted by ωml and transmitted to the output layer. Hence, m (m ∈{1, 2, …, k}) represents the number of hidden neurons. The number of hidden neurons affects the accuracy of the RBFNN. Using a small number of neurons leads to an inability to reach the exact solution, and using a large number of neurons may cause overlearning and greater complexity for the network. The number of neurons can be determined by the CTE method, starting from a low number and increasing it until the maximum accuracy for the neural network is reached. Recently, meta-heuristic optimization methods were used to determine the optimal values of the neural network parameters to obtain the maximum performance or minimum error (Talaat et al. 2020). The parameters that were been optimized were the number of neurons in the hidden layer, the connected weights between the input layer and hidden layer, the connected weights between the hidden layer and the output layer, the center of the radial basis function, and the width of the radial basis function. Therefore, the parameters and the structure of the proposed RBFNN are simultaneously determined using the PSO algorithm.

To obtain a good performance for the neural network, the MSE is calculated by finding the difference between the RBFNN and the experimental data (ED) and then modifying the network parameters. Hence, the MSE can be calculated using the following equation (Talaat et al. 2020):

where N is the number of training patterns, th is the actual value of the output, and Zh is the RBFNN output. The fitness value of the training pattern is computed using the following equation (Talaat et al. 2020):

Training of the Proposed RBFNN

The RBFNN is trained to obtain the optimum values of its parameters, including the number of neurons in the hidden layer, the width and center of the RBF, and the weights of the networks, using two methods. These methods are the conventional method (TE) and PSO.

RBFNN training based on the TE method

The number of neurons in the hidden layer and their weights can be obtained by the TE method. In this method, initially low numbers of neurons are selected, and then, they are increased until the maximum accuracy (minimum MSE) for the neural network or the maximum number of iterations is reached. The procedures for training the RBFNN using the TE method include the following steps: first, data patterns are linearly normalized to the range [− 1, 1] using the command “mapminmax” in the neural network toolbox in MATLAB. Then, the data patterns are divided into three parts, training, validating, and testing, and the center list of the RBF is selected from the data patterns randomly. Moreover, the width parameter of the center is determined using Equation (6) (Dash et al. 2016) the ωnm and ωml weights are generated randomly depending on the number of selected centers in the hidden layer, the Gaussian function (RBF),∅m, is computed using Equation (2), the RBFNN output is calculated using Equation (3), and the RBFNN is trained under supervised techniques. Therefore, the error is calculated using the actual and computed output values. Equation (4) readjusts the weights with the computed error using the expression from Mahanta et al. (2016b), the center set is updated using the new weights and error expression from Mahanta et al. (2016a), and the steps related to Equations (2), (3), (4), (7), and (8) are repeated until the terminating conditions are reached. The terminating conditions are the number of iterations and the error value; finally, the error is measured using the mean absolute error.

where C is the number of centers.

where r is the learning parameter and iter is the iteration number.

RBFNN training based on particle swarm optimization

The performance of the RBFNN depends on the network parameters and structure: the center of the RBF (\( {\overrightarrow{\gamma}}_m \)), width of the RBF (σm), number of neurons in the hidden layer (M), connection weights between the input and hidden layers (ωnm), and connection weights between the hidden and output layers (ωml). Optimizing these parameters will improve the performance and minimize the network error. In this study, the PSO algorithm is applied to obtain the optimal network parameters to minimize the error (Mahanta et al. 2016a, 2016b).

PSO is an evolutionary algorithm, that is, a population-based stochastic technique for solving optimization problems. It has been used in many applications for solving engineering problems due to its implementation ability and high performance. The concept of the technique is to mimic swarm intelligence based on the observation of the congregation habits of birds and fish and the field of evolutionary computation (Mahanta et al. 2016a, 2016b). The candidate solution in this technique is applied to determine the objective function. A possible solution to the optimization problem or the fitness function appears as a particle located at a point.

The PSO algorithm uses a random number for each variable. A combination of the random numbers for all variables is called a population. Therefore, each particle in the population is considered a possible solution to the optimization problem. Additionally, a set of particles is obtained in the PSO algorithm and then ordered according to the fitness value of the objective function. For each iteration, the value of the objective function is calculated for each particle. If the value of the objective function for particle i (M, ωnm, ωml, \( {\overrightarrow{\upgamma}}_{\mathrm{m}} \), and σm) is better than the value of the previous iteration, this particle (variable) is taken as the local best solution (Pbest, i(Pi)); otherwise, the previous Pbest, i(Pi) is kept unchanged. The best position of the ith particle is stored and expressed as Pi = [ pi1, pi2, …., pin].

The best value of the particles in the population is checked. If this value is better than the value of the previous iteration, it is taken as the global best (gbest(G)); otherwise, the previous gbest(G) is kept unchanged. Therefore, the best position of the best particle in the swarm is expressed as G = [ g1, g2, …., gn]. Finally, the particles’ positions and velocities are updated in the next iteration (t + 1) by the following equations:

where \( {V}_{\mathrm{i}}^{\mathrm{t}} \) and \( {V}_{\mathrm{i}}^{\mathrm{t}+1} \) are the old and updated velocities of particle i, \( {X}_{\mathrm{i}}^{\mathrm{t}} \) and \( {X}_{\mathrm{i}}^{\mathrm{t}+1} \) are the old and updated positions of particle i, and c1 and c2 are the cognitive and social parameters, respectively. rand1 and rand2 are random numbers [0,1]. I is the inertia coefficient. In this study, both c1 and c2 are set to 2, and the inertia coefficient values are set to 0.4 and 0.9 for the minimum and maximum values, respectively. Additionally, the maximum number of iterations is 100.

The procedures of the PSO algorithm can be summarized in the following steps. First, set a random number for the RBFNN and PSO parameters as the population or search space. Then, initialize the swarm size and each particle’s position and velocity. Additionally, initialize the range of weights (ωnm and ωml) and the center and width parameters (γm and σm) of the RBFNN as a particle. Second, determine the individual fitness and the error according to Equations (4) and (5) and determine whether the parameter values meet the condition limits. Third, if they do not meet the condition limits assigned, this solution is the best solution; modify the values of the RBF parameters and recalculate the fitness functions. Finally, if the parameter values reach the condition limits, assign the solution as the global best solution and return it. Figure 3 explains the RBFNN-PSO procedures as a flowchart.

Results and discussion

Field experiment

Table 4 shows the effluent COD (Le) and removal ratio (RR) of the COD values obtained from the experiments (ED) due to the variation of the different parameters and the influent values of COD, which ranged between 50 and 150 ppm. The removal ratios of COD showed higher values at low influent values of the flow rate due to their low load of organic matter, which could help in increasing removal efficiency. The highest removal ratio of COD occurred at the lowest flow rate (272.16 m3/day). It was 48.7% in the case of gravel biofilters, whereas it reached a higher value of 56.6% in the case of the star-shaped media. The pall ring biofilter recorded the highest removal ratio of COD (65%) among the different media used.

Proposed RBFNN results

The proposed RBFNN for modeling the removal ratio of COD in this study is trained using two methods: the conventional method (TE) and PSO. The software used for implementing and executing the proposed RBFNN is MATLAB. The training datasets consist of approximately 72 patterns representing the different cases, so that a wide range of possible cases are included in the study. These datasets are used to train the proposed RBFNN and are divided into three groups:

-

The training group was 66% of the dataset (48 patterns).

-

The validation group was 17% of the dataset (12 patterns).

-

The testing group was 17% of the dataset (12 patterns).

Figures 4 and 5 show the results of the regression factor R for the RBFNN with the two training methods, the TE method and the PSO method, respectively. The figures show the regression factors for the training, validation, and test datasets. They show that the conversion and fitting results of the models are good based on the illustrated regression factors. Moreover, using the PSO method to optimize the parameters of the RBFNN results in regression factors that are closer to one than those obtained using the TE method.

Figure 6 shows a comparison between the measured removal ratio of COD and the RBFNN removal ratio of COD output when all the data sets are used (72 patterns). This figure highlights the closeness between the measured and the obtained removal ratio of COD using the RBFNN, indicating that the neural network model is valid and accurate. Additionally, the proposed RBFNN trained with the PSO method helps to reduce the difference between the measured and network outputs, leading to a very small relative error compared with that of using the conventional RBFNN (TE training method), as illustrated in Fig. 6 and 7.

Figure 7 shows the value of the relative error between the measured COD removal ratio and that obtained with the RBFNN. The deviation error between the measured values and the output of the conventional RBFNN varied between 0.2 and − 0.31. However, by using the PSO to train the RBFNN, the deviation error is reduced to between 0.058 and − 0.07.

Once the desired performance was achieved, the number of neurons in the hidden layer and their weights was frozen. Then, the RBFNN was tested with different independent test patterns. The proposed RBFNN was tested using different measured data than the datasets used to train the network (72 patterns). To validate the extrapolation capacity of the proposed ANN model, we selected 8% (6 patterns) of the total patterns (78%) to test the proposed RBFNN. These test patterns were excluded from the training process of the RBFNN. They were chosen to represent different cases of the problem (experiments no. 7, 15, 32, 46, 62, and 77), as shown in Table 5. Figure 8 shows a comparison between the conventional and optimized RBFNN, which indicate the ability of the optimized RBFNN to predict the true values of the COD removal ratio.

The statistical measures used to check the accuracy of the proposed RBFNN for modeling the removal ratio of COD are the MSE, root-mean-square error (RMSE), mean absolute error (MAE), and mean absolute percentage error (MAPE), which can be expressed as follows (Ezzeldin and Hatata 2018):

The performance of the proposed RBFNN removal ratio of the COD model is analyzed for different media, flow rates, and temperatures with the four mentioned indices (RMSE, MAE, and MAPE (%)). From the results presented in Table 6, it can be seen that the proposed RBFNN based on the PSO model performance is better than that of the conventional RBFNN model. This is because the PSO can obtain the optimal centers of the RBF, the number of hidden neurons, and the appropriate weight factors and biases. Moreover, the minimum MSE is achieved after 38 and 19 iterations for the conventional and PSO-based RBFNNs, respectively, so the PSO is able to reduce the number of iterations and reach the optimum solution in less time. Moreover, the proposed PSO model performs well in predicting the removal ratio of COD to improve the drain water quality.

Conclusion

The reuse of treated drainage water and wastewater in irrigation could be a feasible option for solving water scarcity problems in Egypt. The use of submerged biofilters for improving the drain water quality has been recommended by several studies. ANN mathematical models such as an RBF can be applied to provide reasonable matches between the measured and predicted variables and can find missing values, support multiple inputs, and model nonlinear or complex relationships. As an important indicator of water pollution, the COD of drain water is measured before and after treatment using plastic and gravel biofilter media. The RBF model is employed for accurate prediction of the removal ratio of the COD under the influence of different variables, such as Q (272.16–768.96 m3/day) and Li (50–150 ppm). The highest removal ratio of the COD (65%) was obtained using plastic biofilter media (pall ring shape) at a minimum Q value (272.16 m3/day). The results showed that the neural network model using an RBFNN is valid and accurate. Moreover, the proposed RBFNN trained with the PSO method helped to decrease the difference between the measured and network outputs, leading to a very small relative error compared with that using the conventional RBFNN (trial-and-error training method). The deviation error between the measured value and the output of the conventional RBFNN varied between 0.2 and − 0.31. However, by using the PSO to train the RBFNN, the deviation error is reduced to between 0.058 and − 0.07. Consequently, the proposed RBFNN based on the PSO model performance is better than that of the conventional RBFNN model. Although this study focused on the COD as an important indicator of water pollution, it is recommended to study and apply the model to other biochemical parameters related to the quality of reused drain water for different purposes. Also, such studies could help to reduce surface and groundwater contamination which protects the available water resources.

References

Abdel daiem MM, Said N, El-Gohary EH, Mansi AH, Abd-Elhamid HF (2019) The effects of submerged biofilter on water quality of polluted drains in Egypt. Int J Civ Struct Eng 6(2):52–55

Abdel-Dayem S (2011) Water quality management in Egypt. Int J Water Resour Dev 27:181–202

Abdel-Gawad S (2008) Actualizing the right to water: an Egyptian perspective for an action plan. Water as a Hum. right Middle East North Africa. Int Dev Res Centre, Ottawa, Canada, pp 133–146

Abd-Elhamid HF, Abdel daiem MMA, El-Gohary EH, Abou Elnaga Z (2017) Safe reuse of treated wastewater for agriculture in Egypt. EXCEED-SWINDON EXPERT WORKSHOP, Water Efficient Cities", Marrakesh, Morocco, November 03-08, 2017

Abd-Elhamid HF, Abdelaal GM, Abd-Elaty I, Said AM (2019) Efficiency of using different lining materials to protect groundwater from leakage of polluted streams. J Water Supply: Res Technol AQUA 68(6):448–459

Akratos CS, Papaspyros JNE, Tsihrintzis VA (2009) Total nitrogen and ammonia removal prediction in horizontal subsurface flow constructed wetlands: use of artificial neural networks and development of a design equation. Bioresour Technol 100:586–596

Asempa (2010) The Battle of the Nile, North-East Africa. Afr Confid 51:6–8

Çinar Ö, Hasar H, Kinaci C (2006) Modeling of submerged membrane bioreactor treating cheese whey wastewater by artificial neural network. J Biotechnol 123:204–209

Çoruh S, Geyikçi F, Kılıç E, Çoruh U (2014) The use of NARX neural network for modeling of adsorption of zinc ions using activated almond shell as a potential biosorbent. Bioresour Technol 151:406–410

Dash CSK, Behera AK, Dehuri S, Cho S-B (2016) Radial basis function neural networks: a topical state-of-the-art survey. Open Comput Sci 1

Di Baldassarre G, Elshamy M, van Griensven A, Soliman E, Kigobe M, Ndomba P, Mutemi J, Mutua F, Moges S, Xuan Y (2011) Future hydrology and climate in the River Nile basin: a review. Hydrol Sci J 56:199–211

El Monayeri DS, Atta NN, El Mokadem S, Aboul-Fotoh AM (2007a) Effect of organic loading rate and temperature on the performance of horizontal biofilters, in: Proceedings of Eleventh International Water Technology Conference, IWTC11.

El Monayeri DS, Atta NN, El Mokadem S, Gohary E (2007b) Enhancement of bilbeas drain water quality using submerged biofilters (SBS). In: Eleventh International Water Technology Conference, IWTC11

Elbana TA, Bakr N, Elbana M (2017) Reuse of treated wastewater in Egypt: challenges and opportunities, in: Unconventional Water Resources and Agriculture in Egypt. Springer, pp 429–453

El-Gohary EH (2007) Enhancement of streams water quality using in-situ filters, master’s thesis, Zagazig University, Zagazig, Egypt

El-Shatoury S, El-Baz A, Abdel Daiem M, El-Monayeri D (2014) Enhancing wastewater treatment by commercial and native microbial Inocula with factorial design. Life Sci J 11

Ezzeldin R, Hatata A (2018) Application of NARX neural network model for discharge prediction through lateral orifices. Alexandria Eng J 57:2991–2998

Gad WA (2017) Water scarcity in Egypt: causes and consequences. IIOAB J 8:40–47

Galal TM, Eid EM, Dakhil MA, Hassan LM (2018) Bioaccumulation and rhizofiltration potential of Pistia stratiotes L. for mitigating water pollution in the Egyptian wetlands. Int J Phytoremediation 20:440–447

Jia W, Zhao D, Shen T, Su C, Hu C, Zhao Y (2014) A new optimized GA-RBF neural network algorithm. Comput Intell. Neurosci 2014

Kasiviswanathan KS, Agarwal A (2012) Radial basis function artificial neural network: spread selection. Int J Adv Comput Sci 2:394–398

Kumar S (2004) Neural networks: a classroom approach. Tata McGraw-Hill Education

Loutfy NM (2010) Reuse of wastewater in Mediterranean region, Egyptian experience, in: Waste Water Treatment and Reuse in the Mediterranean Region. Springer, pp 183–213

Mahanta B, Singh TN, Ranjith PG (2016a) Influence of thermal treatment on mode I fracture toughness of certain Indian rocks. Eng Geol 210:103–114

Mahanta R, Pandey TN, Jagadev AK, Dehuri S (2016b) Optimized radial basis functional neural network for stock index prediction, in: 2016 International Conference on Electrical, Electronics, and Optimization Techniques (ICEEOT). IEEE, pp 1252–1257

Mohie El Din MO, Moussa AMA (2016) Water management in Egypt for facing the future challenges. J Adv Res 7:403–412

MWRI: Ministry of Water Resources and Irrigation (2014) Water scarcity in Egypt: the urgent need for regional cooperation among the Nile Basin countries, Technical report Egypt Water Resources Management Program.

MWRI: Ministry of Water Resources and Irrigation (2015) EGYPT 2012 State of the water report, monitoring evaluation for water in North Africa (MEwina) project, resources & irrigation. Egypt Water Resources Management Program

Naz M, Uyanik S, Yesilnacar MI, Sahinkaya E (2009) Side-by-side comparison of horizontal subsurface flow and free water surface flow constructed wetlands and artificial neural network (ANN) modelling approach. Ecol Eng 35:1255–1263

Osman R, Ferrari E, McDonald S (2016) Water scarcity and irrigation efficiency in Egypt. Water Econ Policy 2:1650009

Talaat M, Farahat MA, Mansour N, Hatata AY (2020) Load forecasting based on grasshopper optimization and a multilayer feed-forward neural network using regressive approach. Energy 196:117087

Tufaner F, Demirci Y (2020) Prediction of biogas production rate from anaerobic hybrid reactor by artificial neural network and nonlinear regressions model. Clean Techn Environ Policy 22:713–724

Tufaner F, Özbeyaz A (2020) Estimation and easy calculation of the Palmer Drought Severity Index from the meteorological data by using the advanced machine learning algorithms. Environ Monit Assess 192:576–1–14

Tufaner F, Avsar Y, Gonullu MT (2017) Modeling of biogas production from cattle manure with co-digestion of different organic wastes using an artificial neural network. Clean Techn Environ Policy 19:2255–2264

Yalcuk A (2012) The macro nutrient removal efficiencies of a vertical flow constructed wetland fed with demineralized cheese whey powder solution. Int J Phytoremediation 14:114–127

Funding

This study is funded by the Zagazig University and the National Organization for Potable Water and Sanitary Drainage (NOPWASD) of Egypt and the Ministry of Housing, Utilities and Urban Communities of the Egyptian government.

Author information

Authors and Affiliations

Contributions

Abdel daiem, Said, and Hatata planned and designed the research; Hatata and El-Gohary conducted the field experiments and modeling; Said, Abdel daiem, and Hatata conducted the statistical analysis and wrote the first draft; Abdel daiem, Hatata, Said, Abd-Elhamid, and El-Gohary wrote, reviewed, and edited the manuscript. All authors read and approved the manuscript.

Corresponding author

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

Not applicable

Consent to participation

Not applicable

Consent to publish

Not applicable

Additional information

Responsible Editor: Marcus Schulz

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Abdel daiem, M.M., Hatata, A., El-Gohary, E.H. et al. Application of an artificial neural network for the improvement of agricultural drainage water quality using a submerged biofilter. Environ Sci Pollut Res 28, 5854–5866 (2021). https://doi.org/10.1007/s11356-020-10964-0

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11356-020-10964-0