Abstract

Facial expression detection (FED) and extraction play a most important role in face recognition. In this research, we propose a new algorithm for automatic live FED in which the Haar Wavelet Transform is used for feature extraction and a radial basis function support vector machine (RBF-SVM) for classification. Edges of the facial image are detected by a genetic algorithm combined with fuzzy C-means. The facial expression experiments use the CK+ and JAFFE databases. The face detection experiments use the FEI, LFW-a and CMU + MIT databases and our own database. In this algorithm, the face is detected by the fdlibmex technique, whose limitations we address using contrast enhancement. In the pre-processing stage, median filtering is applied to remove noise from the image, which improves the feature extraction process. Finding an image from the image components is a typical task in pattern recognition. The detection rate reaches approximately 100% for expression recognition. The precision and recall of the proposed system are estimated. Compared with previous algorithms, the proposed algorithm performs better.

1 Introduction

Face detection is the technique used to detect human faces in a given image or video. Most human–computer interaction systems use face detection as a pre-processing step. Currently, four face detection techniques are available. The first is the knowledge-based technique, which relies on defined rules about human facial features but has trouble with varying head poses. The second is the feature-based technique, which detects a human face from features such as the eyebrows or eyes. This method generally gives good detection, but it depends on head and background variation and may fail on images of poor resolution [1, 2]. Most of the time, face detection is restricted to data from still images or short videos. These approaches do not incorporate 3D information, so they are susceptible to such changes. This problem is overcome by generating 3D models from various still images or video data, which can then be used to test any probe image. Most of these algorithms produce a low-quality image even if the input was of high quality; this approach is not used for facial recognition because the face model it generates is not accurate enough [3]. To obtain a better face detection algorithm, this article uses a cascade classifier in which the AdaBoost algorithm selects among existing Haar-like features together with new structural features that resemble facial features more closely. These new features form good classifiers that eventually remove the non-face parts and also try to preserve the original resolution by recognizing texture features in ordinary human faces [4,5,6].

2 Related Work

Dey et al. [7] proposed using the Roberts edge detector together with a set of arithmetic operations to detect face edges between an initial frame and its nearest frames. In the pre-processing step, Gaussian filtering removes undesired edges and noise. The algorithm is applied to a face image or video to identify the face region, but it cannot handle complex backgrounds or varying lighting conditions [7]. Lin et al. [8] described a multi-scale histogram algorithm for face recognition. It achieves precision comparable to that of LBP-based algorithms and runs 10 times faster than previous techniques [8]. Liuliu et al. [9] proposed a novel skin color algorithm to identify the human face. The algorithm achieves a high detection rate together with a lower false detection rate, and it can detect real faces over a certain range of pose angles [9]. Chihaoui et al. [10] described a combination of neural network, skin color and Gabor filter techniques to identify the human face. The main idea is to apply skin color segmentation before the Gabor filters and neural network in order to reduce computation time. The algorithm is computationally fast, but its face detection rate is poor [10]. Muttu et al. [11] proposed an algorithm for identifying parts of the human face as well as the complete face, using the Viola–Jones technique for face detection [11]. Kulkarni et al. [12] proposed a Weighted Least Squares filtering algorithm for recognizing human facial expressions. Gabor and log-Gabor functions are applied to the facial components, and an SVM classifier is used for recognition [12].

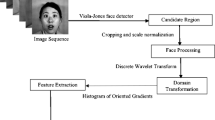

In the proposed work, there are five stages of processing:

1. Image acquisition
2. Face detection
3. Pre-processing
4. Feature extraction
5. Classification

1. Image acquisition. In this stage, the input is taken from a webcam or as a still image from a folder. If a webcam is selected, an image frame is returned by the getsnapshot function; otherwise, an image is browsed from one of the databases [2, 3]. MathWorks MATLAB provides live video capture as a library function, which can be used to access a camera or webcam. MATLAB provides this library for use in applications [13].

2. Face detection. After image acquisition, image enhancement is applied to the face image to compensate for low illumination, poor contrast and long distance, which improves image visibility. fdlibmex is then used to detect the face [2]. In this research, we address the limitations of this library. The final step is to crop the face image and save it to the database. This algorithm can detect human faces rotated by up to 60° [14].

3. Pre-processing. After face detection, the image must be resized to a standard size. Noisy pixels are removed from the image by median filtering. With the help of the genetic algorithm and fuzzy C-means (GAFCM), we can identify neighboring class levels whose pixel values are nearly the same [6, 15, 16].

4. Feature extraction. In this stage, the filtered image is used for feature extraction. The features of the image are extracted using the discrete wavelet transform (DWT). The DWT decomposes the image into four sub-bands by applying low-pass and high-pass filters. It is a lossless method, which means that it does not degrade the quality of the image [15].

5. Classification. In this stage, the data are classified using RBF-SVM. It enables pattern recognition between two classes by searching for a decision surface that has the maximum distance from the closest points in the training set, which are termed support vectors.
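As a minimal sketch of how an RBF-SVM decides between two classes, the snippet below evaluates the standard Gaussian kernel and the usual SVM decision function. It is written in Python for illustration (the paper works in MATLAB), and the support vectors, coefficients, bias and `gamma` are hypothetical hand-picked values, not parameters from the paper; a trained model would obtain them from the training set.

```python
import math

def rbf_kernel(x, z, gamma):
    """Gaussian RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x, z))
    return math.exp(-gamma * sq_dist)

def svm_decision(x, support_vectors, labels, alphas, bias, gamma):
    """Return the sign of f(x) = sum_i alpha_i * y_i * K(s_i, x) + b as +1 or -1."""
    score = sum(a * y * rbf_kernel(s, x, gamma)
                for s, y, a in zip(support_vectors, labels, alphas))
    score += bias
    return 1 if score >= 0 else -1

# Hypothetical values chosen only to make the example runnable.
support_vectors = [(0.0, 0.0), (2.0, 2.0)]
labels = [-1, 1]
alphas = [1.0, 1.0]
bias = 0.0
gamma = 0.5

near_positive = svm_decision((1.9, 1.9), support_vectors, labels, alphas, bias, gamma)
near_negative = svm_decision((0.1, 0.1), support_vectors, labels, alphas, bias, gamma)
```

A query point is assigned to the class of the support vectors it lies closest to in kernel space, which is why the two sample points above fall on opposite sides of the decision surface.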

3 Proposed Methodology

3.1 Contrast Enhancement

This is one of the most important stages in face recognition and detection, because it improves the face image before face detection is performed, as shown in Fig. 1. Face images show some variations when compared to other methods. In the proposed work, contrast stretching is used to adjust the brightness of the objects in the image for clearer visibility. In a high-contrast image, the gray-level values span the full range. Remapping the gray values of a low-contrast image so that they span the full range therefore turns it into a high-contrast image [17].
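The remapping described above can be sketched as a linear min-max stretch. This is an illustrative Python version under the assumption of an 8-bit grayscale image stored as a list of rows; the function name `contrast_stretch` and the sample pixel values are hypothetical, and the paper's exact stretching function may differ.

```python
def contrast_stretch(image, out_min=0, out_max=255):
    """Linearly remap gray levels so that they span [out_min, out_max]."""
    flat = [p for row in image for p in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:
        # A constant image has no contrast to stretch; return a copy.
        return [list(row) for row in image]
    scale = (out_max - out_min) / (hi - lo)
    return [[round((p - lo) * scale + out_min) for p in row] for row in image]

# Low-contrast sample: gray levels only cover 100..150.
low_contrast = [[100, 110], [120, 150]]
stretched = contrast_stretch(low_contrast)
```

After stretching, the darkest input level maps to 0 and the brightest to 255, so the output uses the full gray-level range.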

3.2 Face Detector

The fdlibmex [6, 18] algorithm is based on the Nilsson algorithm and is provided as a DLL library containing its methods. Successive Mean Quantization Transform (SMQT) and Sparse Network of Winnows (SNoW) are the methods available in this library. The split-up SNoW classifier makes good use of the results of the original SNoW classifier and performs rapid detection by creating a cascade classifier. The approach is threefold. First, local SMQT features are proposed for illumination- and sensor-insensitive operation in object recognition. Second, the split-up SNoW is used to speed up the original classifier. Finally, the combination of the features and the classifier performs frontal face and face pose detection [19].

3.3 Median Filtering

The noise found in the image is reduced by using a median filter [14, 20]. The median is the middle value of all the pixel values in the neighborhood once they are sorted, as shown in Fig. 2. The median filter replaces the value of the center pixel with the median of the values in its neighborhood, for example:

- Neighbourhood values: 05, 12, 19, 28, 31, 49, 69, 75, 87
- Median value: 31
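The example values above can be checked with a short Python sketch of a 3 x 3 median filter. The function name `median_filter` and the arrangement of the nine values into a patch are assumptions made for illustration; border pixels here simply use the neighbours that fall inside the image, which is one of several common border policies.

```python
from statistics import median_low

def median_filter(image, size=3):
    """Replace each pixel with the median of its size x size neighbourhood.

    Border pixels use only the neighbours inside the image. median_low keeps
    the result an actual pixel value when a window has an even element count.
    """
    rows, cols = len(image), len(image[0])
    r = size // 2
    out = [[0] * cols for _ in range(rows)]
    for i in range(rows):
        for j in range(cols):
            window = [image[y][x]
                      for y in range(max(0, i - r), min(rows, i + r + 1))
                      for x in range(max(0, j - r), min(cols, j + r + 1))]
            out[i][j] = median_low(window)
    return out

# The nine neighbourhood values from the text, with the outlier 87 at the centre.
patch = [[5, 12, 19],
         [28, 87, 49],
         [69, 75, 31]]
filtered = median_filter(patch)
centre_value = filtered[1][1]
```

The centre pixel, originally the outlier 87, is replaced by 31, the median of the nine values.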

Noise is any undesirable or unwanted signal. The electrical systems used for storage, transmission and processing of the data are the main contributors of noise in the data. Most of the time, we want to improve the quality of an image that has been corrupted by noise.

3.4 Genetic Algorithm (GA)

GA is a population-based optimization technique that acts as an alternative to traditional optimization techniques [21, 22]. Each candidate solution to the problem is represented by a chromosome in the population. GA generates a series of populations, one for each succeeding generation, and uses crossover and mutation as the operators of its principal search mechanism. The main aim of the algorithm is to optimize a given objective or fitness function. An encoding mechanism maps each feasible solution to a chromosome. Each chromosome that provides a candidate solution to the problem is evaluated by the objective function [23].
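The loop of selection, crossover and mutation described above can be sketched as follows. This is a generic Python illustration, not the paper's implementation: the bit-string encoding, the toy fitness `one_max`, tournament selection, elitism and all parameter values are assumptions chosen to keep the example small.

```python
import random

def one_max(chromosome):
    """Toy fitness: number of 1-bits (a stand-in for a real objective function)."""
    return sum(chromosome)

def tournament(population, fitness, rng):
    """Binary tournament: draw two individuals and keep the fitter one."""
    a, b = rng.sample(population, 2)
    return a if fitness(a) >= fitness(b) else b

def run_ga(fitness, n_bits=16, pop_size=20, generations=40,
           crossover_rate=0.9, mutation_rate=0.02, seed=0):
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(n_bits)]
                  for _ in range(pop_size)]
    best = max(population, key=fitness)
    history = [fitness(best)]
    for _ in range(generations):
        offspring = [best[:]]  # elitism: carry the best chromosome over unchanged
        while len(offspring) < pop_size:
            p1 = tournament(population, fitness, rng)
            p2 = tournament(population, fitness, rng)
            if rng.random() < crossover_rate:
                cut = rng.randint(1, n_bits - 1)  # single-point crossover
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Bit-flip mutation applied independently to every gene.
            child = [bit ^ 1 if rng.random() < mutation_rate else bit
                     for bit in child]
            offspring.append(child)
        population = offspring
        best = max(population, key=fitness)
        history.append(fitness(best))
    return best, history

best, history = run_ga(one_max)
```

Because the best chromosome is always copied into the next generation, the best fitness recorded in `history` never decreases from one generation to the next.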

3.5 Fuzzy C-Means (FCM) Methods

In the proposed method, the membership function is calculated by using GA with the FCM method [24]. The main procedures of the FCM algorithm are the calculation of the membership degrees and the update of the cluster centers. The membership degree indicates the extent to which each data point belongs to each cluster, and it is used to update the values of the cluster centers.

Here $u_{ik}$ denotes the degree of membership of $x_k$ in the $i$th cluster. The degree of fuzziness is controlled by the parameter $m > 1$. This means that every data pattern has a degree of membership in every cluster.
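The two FCM procedures can be sketched with the textbook update rules, which are assumed here because the paper's own equations are not reproduced in this text: the membership is u_ik = 1 / sum_j (d_ik / d_jk)^(2/(m-1)) and the center is v_i = sum_k u_ik^m x_k / sum_k u_ik^m, where d_ik is the distance between x_k and center v_i. The Python sketch below applies them to one-dimensional gray levels; the function names, the deterministic initialization and the sample data are hypothetical, and the GA coupling used in the paper is not shown.

```python
def memberships(data, centers, m, eps=1e-9):
    """u[i][k] = 1 / sum_j (d_ik / d_jk)^(2/(m-1)), with d_ik = |x_k - v_i|."""
    power = 2.0 / (m - 1.0)
    u = []
    for v_i in centers:
        row = []
        for x in data:
            d_i = abs(x - v_i) + eps  # eps avoids division by zero
            row.append(1.0 / sum((d_i / (abs(x - v_j) + eps)) ** power
                                 for v_j in centers))
        u.append(row)
    return u

def fcm(data, n_clusters=2, m=2.0, n_iter=50):
    """Alternate membership and center updates for a fixed number of iterations."""
    lo, hi = min(data), max(data)
    # Deterministic start: centers spread evenly over the data range.
    centers = [lo + (hi - lo) * (i + 0.5) / n_clusters
               for i in range(n_clusters)]
    for _ in range(n_iter):
        u = memberships(data, centers, m)
        # v_i = sum_k u_ik^m * x_k / sum_k u_ik^m
        centers = [sum(w ** m * x for w, x in zip(row, data)) /
                   sum(w ** m for w in row)
                   for row in u]
    return centers, memberships(data, centers, m)

gray_levels = [10, 12, 11, 200, 205, 198]  # hypothetical dark and bright pixels
centers, u = fcm(gray_levels)
```

With two well-separated groups of gray levels, the centers settle near the mean of each group, and the memberships of every data point sum to 1 across clusters.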

3.6 Feature Extraction Using Haar Wavelet Features

Two-dimensional Haar wavelets are frequently used for feature extraction in digital image processing. The 2-D Haar wavelets can take on several types, each used to detect edges along a different direction. We use different types of Haar wavelets to compute the feature vector [25]. The wavelet feature vector of a sub-image is calculated by convolving these wavelets with the cropped sub-image. A number of Haar feature vectors are obtained for each sub-image. The SVM receives this feature vector for classification [10, 26,27,28].
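A single-level 2-D Haar decomposition illustrates how different wavelet types respond to edges in different directions. The Python sketch below assumes the orthonormal Haar transform on non-overlapping 2 x 2 blocks; the function name `haar_dwt2`, the band names and the way the bands are concatenated into a feature vector are illustrative assumptions rather than the paper's exact procedure.

```python
def haar_dwt2(image):
    """One level of the 2-D Haar transform on non-overlapping 2x2 blocks.

    Returns four sub-bands: approximation, horizontal-edge detail,
    vertical-edge detail and diagonal detail.
    """
    rows, cols = len(image), len(image[0])
    if rows % 2 or cols % 2:
        raise ValueError("image dimensions must be even")
    approx, horiz, vert, diag = [], [], [], []
    for i in range(0, rows, 2):
        ra, rh, rv, rd = [], [], [], []
        for j in range(0, cols, 2):
            a, b = image[i][j], image[i][j + 1]
            c, d = image[i + 1][j], image[i + 1][j + 1]
            ra.append((a + b + c + d) / 2)  # low-pass in both directions
            rh.append((a + b - c - d) / 2)  # top row minus bottom row
            rv.append((a - b + c - d) / 2)  # left column minus right column
            rd.append((a - b - c + d) / 2)  # diagonal difference
        approx.append(ra)
        horiz.append(rh)
        vert.append(rv)
        diag.append(rd)
    return approx, horiz, vert, diag

# Hypothetical 4x4 sub-image with a horizontal edge between rows 0 and 1.
sub_image = [[0, 0, 0, 0],
             [8, 8, 8, 8],
             [8, 8, 8, 8],
             [8, 8, 8, 8]]
approx, horiz, vert, diag = haar_dwt2(sub_image)

# Concatenate all coefficients into one feature vector for the classifier.
feature_vector = [v for band in (approx, horiz, vert, diag)
                  for row in band for v in row]
```

Only the horizontal-edge band is non-zero in the blocks that contain the edge, while the vertical and diagonal bands stay at zero, which is the directional selectivity the text refers to.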

4 Result Analysis

MATLAB is a powerful tool for analyzing and visualizing the operations. The proposed method was evaluated in two different steps:

- Face detection
- Face recognition

In face detection, the proposed methodology was evaluated on five databases: JAFFE [29], FEI [30,31,32,33,34], LFW-a [35,36,37], CMU + MIT [38,39,40,41,42] and our own database, as shown in Figs. 3, 4, 5, 6, 7, 8, 9, 10, 11 and 12. In face recognition, the proposed methodology was evaluated on the extended Cohn–Kanade (CK+) database [43] and the JAFFE database [29], as shown in Figs. 13, 14, 15, 16, 17 and 18. Performance was evaluated using 5-fold cross-validation. Finally, we trained the SVM classifier on the concatenated Haar wavelet features, which are extracted from the discriminative characteristics between a given pair of expression classes.
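The 5-fold protocol can be sketched as follows: the samples are shuffled once, split into five folds, and each fold serves as the test set exactly once while the other four are used for training. The Python helper `k_fold_indices`, the seed and the sample count are hypothetical; the paper does not state how its folds were drawn.

```python
import random

def k_fold_indices(n_samples, k=5, seed=0):
    """Return k (train_indices, test_indices) pairs covering every sample once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # near-equal fold sizes
    splits = []
    for i in range(k):
        test_idx = folds[i]
        train_idx = [idx for j, fold in enumerate(folds) if j != i
                     for idx in fold]
        splits.append((train_idx, test_idx))
    return splits

splits = k_fold_indices(n_samples=23, k=5)
```

The reported score would then be the average of the per-fold results, with the classifier retrained from scratch for each split.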

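The average precision (P) and recall (R) of the system are calculated using the formulas below:

$$P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}$$

The matching rate (MR) of the system is calculated using this formula:

$$MR = \frac{TP + TN}{N}$$

where TP stands for true positives, TN for true negatives, FP for false positives, FN for false negatives, and N is the size of the data set.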
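As a worked example, the snippet below computes the three measures from confusion counts, assuming the standard definitions: precision is TP / (TP + FP), recall is TP / (TP + FN), and the matching rate is (TP + TN) / N. The counts are made-up illustrative numbers, not results from the paper, and the Python function names are hypothetical.

```python
def precision(tp, fp):
    """Fraction of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0

def recall(tp, fn):
    """Fraction of actual positives that are found."""
    return tp / (tp + fn) if (tp + fn) else 0.0

def matching_rate(tp, tn, n):
    """Fraction of all n samples that are classified correctly."""
    return (tp + tn) / n

# Illustrative confusion counts for a data set of 100 samples.
tp, fp, fn, tn = 45, 5, 3, 47
n = tp + fp + fn + tn

p = precision(tp, fp)
r = recall(tp, fn)
mr = matching_rate(tp, tn, n)
```

For these counts, precision is 45/50, recall is 45/48 and the matching rate is 92/100.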

4.1 Face Recognition Result

Figure 16 shows that the proposed methodology outperforms the existing model on the CK+ dataset. Taking the best of the results, the highest classification rate of the proposed method reaches approximately 100%. The experimental results in Tables 1, 2 and 3 likewise show that the proposed methodology outperforms the existing model on the CK+ dataset.

Figure 18 shows that the proposed methodology outperforms the existing methodology on the JAFFE dataset. In the same manner, the experimental results in Tables 4 and 5 show that the proposed methodology performs better on the JAFFE dataset than the existing methodology.

5 Conclusion

In this paper, we proposed an algorithm for face detection and recognition. In this methodology, the Haar Wavelet Transform (HWT) with GAFCM is used for feature extraction and an RBF-SVM classifier for classification. The RBF-SVM classifier uses attributes such as the left eye, right eye, mouth, face and nose to identify the various expressions of a particular face. We obtained better results on both datasets: 95.6% on CK+ and 88.08% on JAFFE. The average time is also lower than that of the existing methodology. In addition, face detection reaches approximately 100% on all the standard databases. In future work, we will apply this algorithm to feature extraction and classification on various other datasets.

References

Sheikh, C.-S., & Sharma, S. (2016). A Survey paper on face detection and recognization with genetic algorithm. International Journal of Research in Applied Science and Engineering Technology (IJRASET), 4(6), 370–375.

Kumar, S., Singh, S., & Kumar, J. (2017). A study on face recognition techniques with age and gender classification. In IEEE international conference on computing, communication and automation (ICCCA).

Ma, S., & Bai, L. (2016). A face detection algorithm based on AdaBoost and new Haar-Like feature. In 7th IEEE international conference on software engineering and service science (ICSESS) (pp. 651–654).

Saad, I. A., George, L. E., & Tayyar, A. A. (2014). Accurate and fast pupil localization using contrast stretching, seed filling, and circular geometrical constraints. Journal of Computer Science, 10(2), 305–315.

Kumar, S., Singh, S., & Kumar, J. (2017). A comparative study on face spoofing attacks. In IEEE international conference on computing, communication and automation (ICCCA).

Kumar, S., Singh, S., & Kumar, J. (2017). Automatic face detection using genetic algorithm for various challenges. International Journal of Scientific Research and Modern Education, 2(1), 197–203.

Dey, A. (2016). A contour-based procedure for face detection and tracking from the video. In 3rd IEEE international conference on recent advances in information technology (RAIT) (pp. 483–488).

Lin, C.-Y., Fu, J. T., Wang, S.-H., & Huang, C.-L. (2016). New face detection method based on multi-scale histograms. In Second IEEE international conference on multimedia big data (BigMM) (pp. 229–232).

Liuliu, W., & Mingyang, L. (2016). Multi-pose face detection research based on AdaBoost. In Eighth IEEE international conference on measuring technology and mechatronics automation (ICMTMA) (pp. 409–412).

Chihaoui, M., Elkefi, A., Bellil, W., & Amar, C. B. (2015). Implementation of skin color selection prior to Gabor filter and neural network to reduce the execution time of face detection. In 15th IEEE international conference on intelligent systems design and applications (ISDA) (pp. 341–346).

Muttu, Y., & Virani, H. G. (2015). Effective face detection, feature extraction & neural network based approaches for facial expression recognition. In IEEE international conference on information processing (ICIP) (pp. 102–107).

Kulkarni, K. R., & Bagal, S. B. (2015). Facial expression recognition. In IEEE international conference on information processing (ICIP) (pp. 535–539).

Das, S., & De, S. (2016). Multilevel color image segmentation using modified genetic algorithm (MfGA) inspired fuzzy c-means clustering. In Second IEEE international conference on research in computational intelligence and communication networks (ICRCICN) (pp. 78–83).

Kumar, S., Deepika, & Kumar, M. (2017). An improved face detection technique for a long distance and near-infrared images. International Journal of Engineering Research and Modern Education, 2(1), 176–181.

Ren, X. (2009). An optimal image thresholding using a genetic algorithm. In IEEE international forum on computer science-technology and applications (IFCSTA’09) (pp. 169–172).

Xie, S., & Nie, H. (2013). Retinal vascular image segmentation using genetic algorithm Plus FCM clustering. In Third IEEE international conference on intelligent system design and engineering applications (ISDEA) (pp. 1225–1228).

Face Detection. http://www.mathworks.com/matlabcentral/fileexchange/20976.

Sangeetha, Y., Latha, P. M., Narasimhan, Ch., & Prasad, R. S. (2012). Face detection using SMQT techniques. International Journal of Computer Science and Engineering Technology (IJCSET), 2(1), 780–783.

Hwang, H., & Haddad, R. A. (1995). Adaptive median filters: New algorithms and results. IEEE Transactions on Image Processing, 4(4), 499–502.

Srinivas, M., & Patnaik, L. M. (1994). Genetic algorithms: A survey. Computer, 27(6), 17–26.

Goldberg, D. E. (1989). Genetic algorithms in search, optimization, and machine learning. Boston: Addison-Wesley.

Halder, A., Kar, A., & Pramanik, S. (2012). Histogram-based evolutionary dynamic image segmentation. In 4th international conference on electronics computer technology (pp. 585–589).

Wikaisuksakul, S. (2014). A multi-objective genetic algorithm with fuzzy c-means for automatic data clustering. Applied Soft Computing, 24(3), 679–691.

Li, J., Yan, X., & Zhang, D. (2010). Optical braille recognition with haar wavelet features and support-vector machine. In International conference on computer, mechatronics, control and electronic engineering (CMCE) (pp. 64–67).

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Hsu, C.-W., & Lin, C.-J. (2002). A comparison of methods for multiclass support vector machines. IEEE Transactions on Neural Networks, 13(2), 415–425.

Happy, S. L., & Routray, A. (2015). Automatic facial expression recognition using features of salient facial patches. IEEE Transactions on Affective Computing, 6(1), 1–12.

Viola, P., & Jones, M. (2004). Robust real-time face detection. International Journal of Computer Vision, 57(2), 137–154.

Lyons, M. J., Akamatsu, S., Kamachi, M., & Gyoba, J. (1998). Coding facial expressions with Gabor wavelets. In 3rd IEEE international conference on automatic face and gesture recognition (pp. 200–205).

Tenorio, E. Z., & Thomaz, C. E. (2011). Analise multilinear discriminate de formas frontalis de imagens 2D deface. In Proceedings of the X Simposio Brasileiro de Automacao Inteligente (SBAI) (pp. 266–271), Universidade Federal de Sao Joao del Rei, Sao Joao del Rei, Minas Gerais, Brazil, 18th–21st 2011.

Thomaz, C. E., & Giraldi, G. A. (2010). A new ranking method for principal components analysis and its application to face image analysis. Image and Vision Computing, 28(6), 902–913.

Amaral, V., Figaro-Garcia, C., Gattas, G. J. F., & Thomaz, C. E. (2009). Normalizacao espacial de imagens frontais de face em ambientes controlados e nao-controlados (Vol. 1, no. 1). Periodico Cientifico Eletronico da FATEC Sao Caetano do Sul (FaSCi-Tech) (in Portuguese).

Amaral, V., & Thomaz, C. E. (2008) Normalizacao Espacial de Imagens Frontais de Face. Technical report 01/2008, Department of Electrical Engineering, FEI, São Bernardo do Campo, São Paulo, Brazil (in Portuguese).

L. L. de Oliveira Jr., & Thomaz, C. E. (2006). Captura e Alinhamento de Imagens: Um Banco de Faces Brasileiro. Undergraduate technical report, Department of Electrical Engineering, FEI, São Bernardo do Campo, São Paulo, Brazil (in Portuguese).

Wolf, L., Hassner, T., & Taigman, Y. (2011). Effective face recognition by combining multiple descriptors and learned background statistics. IEEE Transactions on Pattern Analysis and Machine Intelligence (TPAMI), 33(10), 1978–1990.

Wolf, L., Hassner, T., & Taigman, Y. (2009). Similarity scores based on background samples. In Asian conference on computer vision (ACCV), Xi’an.

Taigman, Y., Wolf, L., & Hassner, T. (2009). Multiple one-shots for utilizing class label information. In The British machine vision conference (BMVC), London.

Schneiderman, H., & Kanade, T. (2000). A statistical method for 3D object detection applied to faces and cars. In IEEE conference on computer vision and pattern recognition (pp. 746–751).

Schneiderman, H., & Kanade, T. (2000). A histogram-based method for detection of faces and cars. In International conference on image processing (pp. 504–507).

Schneiderman, H., & Kanade, T. (1998). Probabilistic modeling of local appearance and spatial relationships for object recognition. In IEEE computer society conference on computer vision and pattern recognition (pp. 45–51).

Rowley, H. A., Baluja, S., & Kanade, T. (1998). Neural network-based face detection. IEEE Transactions on Pattern Analysis and Machine Intelligence, 20(1), 23–38.

Rowley, H. A., Baluja, S., & Kanade, T. (1998) Rotation invariant neural network-based face detection. In IEEE computer society conference on computer vision and pattern recognition (pp. 38–44).

Lucey, P., Cohn, J. F., Kanade, T., Saragih, J., Ambadar, Z., & Matthews, I. (2010). The extended Cohn–Kanade dataset (ck +): A complete dataset for action unit and emotion-specified expression. In IEEE computer society conference on computer vision and pattern recognition-workshops (pp. 94–101).

Lajevardi, S. M., & Wu, H. R. (2012). Facial expression recognition in perceptual color space. IEEE Transactions on Image Processing, 21(8), 3721–3732.

Lekdioui, K., Messoussi, R., Ruichek, Y., Chaabi, Y., & Touahni, R. (2017). Facial decomposition for expression recognition using texture/shape descriptors and SVM classifier. Signal Processing: Image Communication. https://doi.org/10.1016/j.image.2017.08.001.

Vo, D. M., & Le, T. H. (2016). Deep generic features and SVM for facial expression recognition. In 2016 3rd national foundation for science and technology development conference on information and computer science (pp 80–84). IEEE.

Zhi, R., Flierl, M., Ruan, Q., & Kleijn, W. B. (2011). Graph-preserving sparse nonnegative matrix factorization with application to facial expression recognition. IEEE Transactions on Systems, Man, and Cybernetics, Part B (Cybernetics), 41(1), 38–52.

Zhong, L., Liu, Q., Yang, P., Liu, B., Huang, J., & Metaxas, D. N. (2012). Learning active facial patches for expression analysis. In 2012 IEEE conference on computer vision and pattern recognition (CVPR) (pp. 2562–2569).

Cite this article

Kumar, S., Singh, S. & Kumar, J. Automatic Live Facial Expression Detection Using Genetic Algorithm with Haar Wavelet Features and SVM. Wireless Pers Commun 103, 2435–2453 (2018). https://doi.org/10.1007/s11277-018-5923-y

DOI: https://doi.org/10.1007/s11277-018-5923-y