Abstract

In this study, seven types of first-order, one-variable grey differential equation model (abbreviated as GM (1, 1) model) were used to predict hourly concentrations of particulate matter (PM), including PM10 and PM2.5, in Banciao City of Taiwan, and their prediction performance was compared. When predicting PM10, the minimum mean absolute percentage error (MAPE), mean squared error (MSE), and root mean squared error (RMSE) and the maximum correlation coefficient (R) were 14.10%, 25.62, 5.06, and 0.96, respectively. When predicting PM2.5, the minimum MAPE, MSE, and RMSE and the maximum R were 15.24%, 11.57, 3.40, and 0.93, respectively. All statistical values revealed that GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) outperformed the other GM (1, 1) models. The results indicate that GM (1, 1) can serve as an efficient early warning tool for providing PM information to the inhabitants.

1 Introduction

In the past two decades, air pollution has improved in most cities in Western Europe, North America, and Asia. These reductions have resulted mainly from greater efficiency and pollution-control technologies in factories, power plants, and other facilities (Cunningham and Cunningham 2006). However, serious air pollution is still frequently reported in many countries.

Among all air pollutants, particulate matter (PM) is of particular concern because high PM concentrations can cause human health problems (Pisoni and Volta 2009). Epidemiological studies have shown an association between ambient PM concentrations and adverse effects on human health (Pope 2000; Jaecker-Voirol and Pelt 2000). Pope (2000) reported that when 24-h average ambient PM10 concentrations increased by 10 μg m−3, daily all-cause mortality increased by approximately 1%, especially for cardiovascular and respiratory mortality. Therefore, developing an efficient forecasting and early warning system to provide air-quality information to the inhabitants is important.

Environmental data are typically difficult to model because the interrelations among various components form a complicated combination of relations. Models with reasonable accuracy must simultaneously consider the physical and chemical relations between PM and other pollutants under various meteorological conditions. However, such mechanistic approaches are subject to uncertainty. One of the most important sources of uncertainty is the input data, including source identification, meteorological conditions, and relevant reaction mechanisms. No matter how thoroughly the emission inventory is investigated in a large-scale modeling analysis, the uncertainties of input data in the mechanistic modeling process cannot be eliminated in practice.

Many other attempts to model these interrelations have also been made. Linear regression methods, for instance, have been widely employed for decades (Ryan 1995; Shi and Harrison 1997; Slini et al. 2006). Additionally, to model complex, nonlinear phenomena and chemical processes, artificial neural networks (ANN) and fuzzy logic approaches have been widely applied because they can simulate nonlinear data well (Pérez et al. 2000; Kolehmainen et al. 2001; Wang et al. 2003; Slini et al. 2006).

Although ANN can predict air pollutant concentrations successfully, it requires a large quantity of data to construct the model. To reduce statistical complexity and obtain consistent results from limited monitoring data when predicting air pollutants, grey system theory (GST) is a suitable method.

GST can resolve the problem of incomplete data (Deng 2002, 2005) and has been applied in our previous works (Pai et al. 2007a, b, 2008a, b, c). GST focuses on relational analysis, model construction, and prediction for indefinite and incomplete information. It requires only a small amount of data while still providing good prediction results.

GST comprises many analysis methods, including the grey model (GM). A GM can be used to establish the relationship among multiple data sequences, and its coefficients can be used to evaluate which sequence significantly affects the system. If an efficient prediction method can be developed, a better control strategy can be sought.

The objectives of this study are listed as follows: (1) construct seven types of first-order and one-variable grey differential equation model (abbreviated as GM (1, 1) model) for predicting hourly PM10 and PM2.5 concentrations in Banciao City of Taiwan; (2) compare the prediction performance of seven types of GM (1, 1) model.

2 Materials and Methods

2.1 Dataset

Monitoring data from the air-quality monitoring station located in Banciao City were selected for this study (Fig. 1). Hourly concentrations of PM10 and PM2.5 from 1 to 19 April 2008 were investigated, giving a total of 456 data points. Of these, the first 384 data points were used to obtain the model coefficients, and the remaining 72 were used as observed values to evaluate model performance. The mean, standard deviation, maximum, and minimum of PM10 were 61.43, 28.90, 150.00, and 6.00 μg m−3, respectively; those of PM2.5 were 40.68, 20.36, 102.00, and 5.00 μg m−3, respectively. Meteorological conditions were not considered in this study.
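The chronological hold-out scheme described above can be sketched as follows. This is a minimal illustration, assuming the 456 hourly values are stored in time order in one array; the placeholder series, the function names, and the use of the sample standard deviation (ddof = 1) are assumptions, since the paper does not state which estimator was used.

```python
import numpy as np

N_HOURS = 19 * 24   # 1-19 April 2008, one value per hour -> 456 values
N_FIT = 384         # first 384 hours are used to estimate the grey parameters

def split_hourly_series(values, n_fit=N_FIT):
    """Chronological hold-out split (no shuffling): fitting part, evaluation part."""
    values = np.asarray(values, dtype=float)
    return values[:n_fit], values[n_fit:]

def describe(values):
    """Mean, standard deviation (ddof=1 is an assumption), maximum and minimum."""
    values = np.asarray(values, dtype=float)
    return values.mean(), values.std(ddof=1), values.max(), values.min()

series = np.arange(N_HOURS, dtype=float)   # placeholder series, not the monitoring data
fit_part, eval_part = split_hourly_series(series)
print(len(fit_part), len(eval_part))       # 384 72
print(describe(fit_part))
```

Keeping the split chronological (rather than random) matches the setup above, where the last 72 hours act as unseen future observations.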

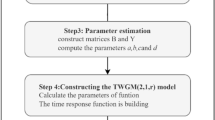

2.2 Grey Modeling Process

When information is lacking, a GM can be created from only a few data points (at least four) to describe the behavior of the system outputs. Through the accumulated generating operation (AGO), disordered and unsystematic data may come to follow an approximately exponential pattern, so that a first-order differential equation can characterize the system behavior. Solving the differential equation yields a time response solution for prediction. Through the inverse accumulated generating operation (IAGO), the forecast can be transformed back to the original series. The grey modeling process is described as follows.

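Assume that a series of data with n samples is expressed as:

\[ X^{(0)} = \left( x^{(0)}(1), x^{(0)}(2), \ldots, x^{(0)}(n) \right) \tag{1} \]

where the superscript (0) of \( X^{(0)} \) denotes the original series. Let \( X^{(1)} \) be the first-order AGO of \( X^{(0)} \), whose elements are generated from \( X^{(0)} \):

\[ X^{(1)} = \left( x^{(1)}(1), x^{(1)}(2), \ldots, x^{(1)}(n) \right) \tag{2} \]

where \( x^{(1)}(k) = \sum_{i=1}^{k} x^{(0)}(i) \) for \( k = 1, 2, \ldots, n \). Further AGO operations yield the r-order AGO series, \( X^{(r)} \):

\[ X^{(r)} = \left( x^{(r)}(1), x^{(r)}(2), \ldots, x^{(r)}(n) \right) \tag{3} \]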

where \( x^{(r)}(k) = \sum_{i=1}^{k} x^{(r-1)}(i) \) for \( k = 1, 2, \ldots, n \). The IAGO is the inverse operation of AGO: it transforms an AGO series back to one of lower order. For a first-order series, IAGO is defined as \( x^{(0)}(1) = x^{(1)}(1) \) and \( x^{(0)}(k) = x^{(1)}(k) - x^{(1)}(k-1) \) for \( k = 2, 3, \ldots, n \). Extending this to an r-order series gives \( x^{(r-1)}(1) = x^{(r)}(1) \) and \( x^{(r-1)}(k) = x^{(r)}(k) - x^{(r)}(k-1) \) for \( k = 2, 3, \ldots, n \). The AGO series tends to follow an exponential trend, and its dynamic behavior resembles that of a differential equation. The GM (1, 1) model therefore fits the AGO series with a first-order differential equation:

\[ \frac{dx^{(1)}}{dt} + a x^{(1)} = b \tag{4} \]

where the parameter a is the developing coefficient and b is the grey input. By definition, the grey differential equation of GM (1, 1), whose order is 1, is:

\[ x^{(0)}(k) + a z^{(1)}(k) = b \tag{5} \]

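where \( z^{(1)}(k) = 0.5x^{(1)}(k-1) + 0.5x^{(1)}(k) \) for \( k = 2, 3, \ldots, n \). Expanding Eq. 5, we have

\[ \begin{aligned} x^{(0)}(2) + a z^{(1)}(2) &= b \\ x^{(0)}(3) + a z^{(1)}(3) &= b \\ &\;\;\vdots \\ x^{(0)}(n) + a z^{(1)}(n) &= b \end{aligned} \tag{6} \]

Transforming Eq. 6 into matrix form, we have

\[ Y = Bp \tag{7} \]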

Then, the coefficients can be estimated as \( p = \begin{bmatrix} a \\ b \end{bmatrix} = (B^{T}B)^{-1}B^{T}Y \), where \( B = \begin{bmatrix} -z^{(1)}(2) & 1 \\ -z^{(1)}(3) & 1 \\ \vdots & \vdots \\ -z^{(1)}(n) & 1 \end{bmatrix} \) and \( Y = \begin{bmatrix} x^{(0)}(2) \\ x^{(0)}(3) \\ \vdots \\ x^{(0)}(n) \end{bmatrix} \).
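A minimal NumPy sketch of the steps above (AGO, IAGO, the background values \( z^{(1)}(k) \), and the estimate of a and b) is given below, assuming the original series is held in a plain array. The function names are hypothetical and this is not the authors' code. Solving with numpy.linalg.lstsq is a choice made here for numerical robustness; it returns the same p as \( (B^{T}B)^{-1}B^{T}Y \) whenever \( B^{T}B \) is invertible.

```python
import numpy as np

def ago(x0):
    """First-order AGO: x1(k) = x0(1) + ... + x0(k)."""
    return np.cumsum(np.asarray(x0, dtype=float))

def iago(x1):
    """Inverse AGO: x0(1) = x1(1) and x0(k) = x1(k) - x1(k-1) for k >= 2."""
    x1 = np.asarray(x1, dtype=float)
    return np.concatenate(([x1[0]], np.diff(x1)))

def estimate_grey_parameters(x0):
    """Least-squares estimate of (a, b) in x0(k) + a*z1(k) = b for k = 2..n."""
    x0 = np.asarray(x0, dtype=float)
    if x0.size < 4:
        raise ValueError("GM(1, 1) needs at least four data points")
    x1 = ago(x0)
    z1 = 0.5 * x1[:-1] + 0.5 * x1[1:]             # background values z1(2..n)
    B = np.column_stack((-z1, np.ones_like(z1)))  # rows [-z1(k), 1]
    Y = x0[1:]                                    # x0(2..n)
    # lstsq minimises ||Bp - Y||, i.e. it solves (B^T B) p = B^T Y
    # without forming the inverse explicitly.
    p, *_ = np.linalg.lstsq(B, Y, rcond=None)
    return float(p[0]), float(p[1])

# Illustrative values only, not the Banciao monitoring data.
x0 = [52.0, 60.0, 58.0, 65.0, 61.0, 57.0]
print(ago(x0))
print(iago(ago(x0)))            # recovers the original series
print(estimate_grey_parameters(x0))
```

If the data follow the grey difference equation exactly, the estimate recovers the generating a and b; with real hourly data it gives the best fit in the least-squares sense.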

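Singularity may sometimes be encountered when the accumulated data become large, in which case \( B^{T}B \) cannot be inverted. In this study, the accumulated data did not cause singularity because neither their values nor the number of data points was too large. The whitening type of GM (1, 1) model (GM (1, 1, W)), which can be used for prediction, is described as:

\[ \hat{x}^{(1)}(k+1) = \left( x^{(0)}(1) - \frac{b}{a} \right) e^{-ak} + \frac{b}{a}, \quad k = 0, 1, 2, \ldots \]

with the predicted original series recovered by IAGO, \( \hat{x}^{(0)}(k+1) = \hat{x}^{(1)}(k+1) - \hat{x}^{(1)}(k) \).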
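A minimal sketch of the whitening-type forecast follows, assuming \( a \neq 0 \) and that the time response is started from the first observed value \( x^{(0)}(1) \). The function name is hypothetical; it simply evaluates the exponential time response of Eq. 4 and applies IAGO.

```python
import numpy as np

def gm11_whitening_forecast(x0_first, a, b, n_points):
    """Whitening-type GM(1, 1) forecast of the original series.

    hat_x1(k+1) = (x0(1) - b/a) * exp(-a*k) + b/a for k = 0..n_points-1,
    then IAGO: hat_x0(1) = hat_x1(1), hat_x0(k) = hat_x1(k) - hat_x1(k-1).
    Assumes a != 0.
    """
    k = np.arange(n_points)
    x1_hat = (x0_first - b / a) * np.exp(-a * k) + b / a
    return np.concatenate(([x1_hat[0]], np.diff(x1_hat)))

pred = gm11_whitening_forecast(20.0, 0.1, 10.0, 6)   # illustrative parameters
print(pred)
```

From the second value onward, consecutive forecasts differ by the constant factor \( e^{-a} \), so this form produces a smooth exponential trend and cannot follow hour-to-hour fluctuations on its own.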

Several other types of GM (1, 1) model can also be derived from Eq. 4, as follows.

Connotation type of GM (1, 1): GM (1, 1, C)

Grey difference type of GM (1, 1): GM (1, 1, x (1))

where \( \beta = \frac{b}{{1 + 0.5a}} \) and \( \alpha = \frac{a}{{1 + 0.5a}} \).
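To make the origin of β and α explicit, the following rearrangement of Eq. 5 uses only the definitions above, namely \( x^{(1)}(k) = x^{(1)}(k-1) + x^{(0)}(k) \) and the background value \( z^{(1)}(k) \). It is offered as a hedged sketch; the exact expressions used for each model type are those summarized in Table 1 and in Deng (2002, 2005).

```latex
\begin{aligned}
z^{(1)}(k) &= 0.5x^{(1)}(k-1) + 0.5x^{(1)}(k) = x^{(1)}(k-1) + 0.5x^{(0)}(k) \\
x^{(0)}(k) + a\left[x^{(1)}(k-1) + 0.5x^{(0)}(k)\right] &= b \\
(1 + 0.5a)\,x^{(0)}(k) &= b - a\,x^{(1)}(k-1) \\
x^{(0)}(k) &= \beta - \alpha\,x^{(1)}(k-1) \\
x^{(1)}(k) = x^{(1)}(k-1) + x^{(0)}(k) &= \beta + (1 - \alpha)\,x^{(1)}(k-1) \\
x^{(0)}(2) &= \beta - \alpha\,x^{(0)}(1) \\
x^{(0)}(k) - x^{(0)}(k-1) &= -\alpha\left[x^{(1)}(k-1) - x^{(1)}(k-2)\right] = -\alpha\,x^{(0)}(k-1) \\
x^{(0)}(k) &= (1 - \alpha)\,x^{(0)}(k-1) = \frac{1 - 0.5a}{1 + 0.5a}\,x^{(0)}(k-1), \qquad k \ge 3
\end{aligned}
```

The last line only holds from k = 3 onward, so a recursion of this kind needs \( x^{(0)}(2) \) as a separate starting value.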

IAGO type of GM (1, 1): GM (1, 1, x (0))

Parameter-a type of GM (1, 1): GM (1, 1, a)

Parameter-b type of GM (1, 1): GM (1, 1, b)

Exponent type of GM (1, 1): GM (1, 1, e)

When adopting GM (1, 1, x (0)), GM (1, 1, a), GM (1, 1, b), and GM (1, 1, e), \( x^{(0)}(2) \) must be calculated as follows:

All seven types of GM (1, 1) model and their notation are summarized in Table 1. Details of the derivation of these GM (1, 1) models can be found in Deng (2002, 2005).
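The recursive model types that need \( x^{(0)}(2) \) can be sketched as one-step-ahead forecasts. This sketch assumes, as one possible reading, that \( x^{(0)}(2) = \beta - \alpha x^{(0)}(1) \) (the k = 2 case of Eq. 5 rearranged with the β and α defined above) and that each later hour is forecast from the observed previous hour through \( x^{(0)}(k) = (1 - \alpha)x^{(0)}(k-1) \). The function names and this feeding scheme are hypothetical and may differ from the exact GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) expressions in Table 1.

```python
import numpy as np

def connotation_coefficients(a, b):
    """beta = b / (1 + 0.5a) and alpha = a / (1 + 0.5a)."""
    denom = 1.0 + 0.5 * a
    return b / denom, a / denom

def one_step_forecast(x0_obs, a, b):
    """Hypothetical one-step-ahead forecasts for hours k = 2..n.

    k = 2:  hat_x0(2) = beta - alpha * x0(1)
    k >= 3: hat_x0(k) = (1 - alpha) * x0(k-1), using the observed previous hour.
    """
    x0_obs = np.asarray(x0_obs, dtype=float)
    if x0_obs.size < 2:
        raise ValueError("need at least two observations")
    beta, alpha = connotation_coefficients(a, b)
    preds = np.empty(x0_obs.size - 1)
    preds[0] = beta - alpha * x0_obs[0]
    preds[1:] = (1.0 - alpha) * x0_obs[1:-1]
    return preds

obs = [52.0, 60.0, 58.0, 65.0]          # illustrative values only
print(one_step_forecast(obs, a=0.00032527, b=65.452))
```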

2.3 Error Analysis

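To evaluate the prediction accuracy of GM (1, 1), the mean absolute percentage error (MAPE), mean squared error (MSE), root mean squared error (RMSE), and correlation coefficient (R) were employed:

\[ \mathrm{MAPE} = \frac{1}{n}\sum_{i=1}^{n}\left|\frac{\mathrm{obs}_i - \mathrm{pre}_i}{\mathrm{obs}_i}\right| \times 100\% \]

\[ \mathrm{MSE} = \frac{1}{n}\sum_{i=1}^{n}\left(\mathrm{obs}_i - \mathrm{pre}_i\right)^2 \]

\[ \mathrm{RMSE} = \sqrt{\mathrm{MSE}} \]

\[ R = \frac{\sum_{i=1}^{n}\left(\mathrm{obs}_i - \overline{\mathrm{obs}}\right)\left(\mathrm{pre}_i - \overline{\mathrm{pre}}\right)}{\sqrt{\sum_{i=1}^{n}\left(\mathrm{obs}_i - \overline{\mathrm{obs}}\right)^2 \sum_{i=1}^{n}\left(\mathrm{pre}_i - \overline{\mathrm{pre}}\right)^2}} \]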

where n is the number of data points, \( \mathrm{obs}_i \) is the observed value, \( \mathrm{pre}_i \) is the predicted value, and \( \overline{\mathrm{obs}} \) and \( \overline{\mathrm{pre}} \) are the averages of the observed and predicted values, respectively.
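The four error measures can be computed in a few lines. This NumPy sketch assumes all observed values are non-zero (MAPE is undefined otherwise) and uses numpy.corrcoef for the Pearson correlation coefficient R; the function name is hypothetical.

```python
import numpy as np

def error_metrics(obs, pre):
    """Return MAPE (%), MSE, RMSE and Pearson R between observed and predicted values."""
    obs = np.asarray(obs, dtype=float)
    pre = np.asarray(pre, dtype=float)
    if np.any(obs == 0):
        raise ValueError("MAPE is undefined when an observed value is zero")
    err = obs - pre
    mse = np.mean(err ** 2)
    return {
        "MAPE": 100.0 * np.mean(np.abs(err / obs)),
        "MSE": mse,
        "RMSE": np.sqrt(mse),
        "R": np.corrcoef(obs, pre)[0, 1],
    }

m = error_metrics([10, 20, 30, 40], [12, 18, 33, 40])   # toy numbers
print(m)
```

For the toy numbers the MAPE is about 10% and the MSE is 4.25. Because RMSE is the square root of MSE, the reported pairs can be cross-checked: for example, \( \sqrt{91.03} \approx 9.54 \) and \( \sqrt{25.62} \approx 5.06 \) for PM10.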

3 Results and Discussion

3.1 Determination of Grey Parameters

To determine the parameters of GM (1, 1), the observed PM10 and PM2.5 data were substituted into Eq. 6, and the grey parameters were calculated by solving Eq. 7. For PM10, a = 0.00032527 and b = 65.452; for PM2.5, a = 0.0010902 and b = 49.911. According to Eq. 4, the developing coefficient a determines the predicted trend, whereas the grey input b determines the intercept of Eq. 4.
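As a worked check on the reported parameters, the coefficients β = b/(1 + 0.5a) and α = a/(1 + 0.5a) defined in Section 2.2 can be evaluated directly. This is simple arithmetic on the values above, not output from the authors' software; the function name is hypothetical.

```python
def implied_coefficients(a, b):
    """Return beta, alpha and the one-step ratio 1 - alpha for given grey parameters."""
    denom = 1.0 + 0.5 * a
    beta = b / denom
    alpha = a / denom
    return beta, alpha, 1.0 - alpha

reported = {"PM10": (0.00032527, 65.452), "PM2.5": (0.0010902, 49.911)}
for name, (a, b) in reported.items():
    beta, alpha, ratio = implied_coefficients(a, b)
    print(f"{name}: beta = {beta:.3f}, alpha = {alpha:.3e}, 1 - alpha = {ratio:.5f}")
```

Because a is very small for both pollutants, 1 − α is about 0.9997 for PM10 and 0.9989 for PM2.5, so any model form that multiplies the previous hour's value by 1 − α stays very close to that value.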

3.1.1 Simulation of PM10

Table 2 shows the values of MAPE, MSE, RMSE, and R obtained using the seven types of GM (1, 1) model. The 1st to 384th data points were used to construct the models, and the 385th to 456th data points were used to evaluate the predictions. All statistical values revealed that GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) performed best. Figure 2a–c depicts the prediction results for PM10 using the seven types of GM (1, 1) model.

As shown in Table 2, when constructing, MAPEs between the predicted and observed values of PM10 were between 13.77% and 13.98% using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but they were 54.33–55.05% using other GM (1, 1) models. When predicting, the MAPEs were 14.10–14.11% when adopting GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but they were between 56.27% and 151.14% when using other GM (1, 1) models.

The MSE values of 91.03–91.17 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were lower than those of 826.57–2069.41 using other GM (1, 1) models during model construction. When predicting, the values of 25.62–25.69 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were also lower than those of 405.33–882.76 using other GM (1, 1) models. When constructing, the RMSE values of 9.54–9.55 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were lower than those of 28.75–45.49 using other GM (1, 1) models. The RMSE values of 5.06–5.07 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were also lower than those of 20.13–29.71 using other GM (1, 1) models when predicting.

When constructing, the R value between the predicted and observed values of PM10 was 0.95 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but ranged from −0.01 to 0.08 using other GM (1, 1) models. When predicting, R was 0.96 when adopting GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but ranged from −0.03 to −0.01 when using other GM (1, 1) models.

Related results were reported by Slini et al. (2006), who adopted linear regression analysis (LRA), classification and regression trees (CART), principal component analysis (PCA), and neural networks (NN) to predict daily PM10 concentration levels; the RMSE values for LRA, CART, PCA, and NN were 11.236, 33.55, 8.142, and 7.126, respectively. Díaz-Robles et al. (2008) used four types of model, namely multiple linear regression (MLR), a Box–Jenkins time series (ARIMA) model, ANN, and a hybrid ARIMA-ANN model, to forecast PM in urban areas; the RMSE values for MLR, ARIMA, ANN, and the hybrid model were 28.39, 28.46, 28.57, and 8.80, respectively. In our study, an RMSE of 5.06 was obtained for hourly PM10 concentrations using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), although these studies differ in site and, in the case of Slini et al. (2006), in time resolution (daily rather than hourly), so the values are not strictly comparable.

3.1.2 Simulation of PM2.5

The prediction results for PM2.5 using the seven types of GM (1, 1) model are shown in Table 3. As with PM10, all statistical values revealed that GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) outperformed the other models. Figure 3a–c shows the results.

As shown in Table 3, when constructing, MAPEs between the predicted and observed values of PM2.5 were between 13.62% and 13.86% using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but they were 51.36–54.38% using other GM (1, 1) models. When predicting, the MAPE was 15.24–15.25% when adopting GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but they were between 41.53% and 109.94% when using other GM (1, 1) models.

The MSE values of 42.00–42.46 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were lower than those of 386.32–1033.61 using other GM (1, 1) models during model construction. When predicting, the values of 11.57–11.60 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were also lower than those of 152.55–232.98 using other GM (1, 1) models. When constructing, the RMSE values of 6.48–6.52 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were lower than those of 19.66–32.15 using other GM (1, 1) models. The RMSE values of 3.40–3.41 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) were also lower than those of 12.35–15.26 using other GM (1, 1) models when predicting.

When constructing, the R value between the predicted and observed values of PM2.5 was 0.95 using GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but ranged from 0.16 to 0.25 using other GM (1, 1) models. When predicting, R was 0.93 when adopting GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b), but ranged from 0.04 to 0.05 when using other GM (1, 1) models.

The superior predictive performance of GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) over the other GM (1, 1) models can be explained as follows. In the structure of these three models, the value at time k is strongly influenced by the value at time k − 1. Because hourly PM concentrations did not vary significantly from one hour to the next, these three models outperformed the other GM (1, 1) models.
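The argument above can be illustrated with a toy example. The sketch below assumes a smooth synthetic diurnal series (not the Banciao data) and compares a forecast that scales the previous hour's value by a factor close to 1 with a flat forecast at the series mean. It illustrates why dependence on the k − 1 value helps when hour-to-hour changes are small; it is not a re-implementation of the paper's models.

```python
import numpy as np

# Synthetic diurnal-like series (an assumption for illustration only):
# 73 hourly values so that the 72 one-step pairs span three full 24-h cycles.
hours = np.arange(73)
series = 60.0 + 20.0 * np.sin(2.0 * np.pi * hours / 24.0)

ratio = 0.99967                                   # a factor close to 1, like 1 - alpha
observed = series[1:]                             # hours to be forecast
lagged_forecast = ratio * series[:-1]             # forecast from the previous hour
flat_forecast = np.full_like(observed, series.mean())   # smooth, trend-like forecast

def rmse(obs, pre):
    return float(np.sqrt(np.mean((obs - pre) ** 2)))

r_lagged = float(np.corrcoef(observed, lagged_forecast)[0, 1])
rmse_lagged = rmse(observed, lagged_forecast)
rmse_flat = rmse(observed, flat_forecast)
print(f"lagged: R = {r_lagged:.3f}, RMSE = {rmse_lagged:.2f}")
print(f"flat:   RMSE = {rmse_flat:.2f}")
```

For this series, R of the lagged forecast equals cos(15°), about 0.97, and its RMSE is well below that of the flat forecast, because consecutive hours differ only slightly.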

Additionally, in mechanistic modeling, source identification, meteorological conditions, and relevant reaction mechanisms are adopted as input variables, and the mechanistic models, which consist of many differential equations and kinetic reactions, compute their outputs from mass balances. In GM (1, 1)-based modeling, source identification, meteorological conditions, and reaction mechanisms are not used as input variables. Although the underlying mechanisms remain unclear, the whitening part of the GM (1, 1)-based model can serve as a useful reference to help observers understand PM variation.

In the study by Pérez et al. (2000), ANN, a linear perceptron model, and a persistence model were used to predict hourly average PM2.5 concentrations, with MAPE values between 20% and 80%. In our study, the MAPEs for predicting hourly PM2.5 concentrations were 15.24–15.25% when adopting GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b).

The GM (1, 1) model requires only a small sample size (in principle, as few as four data points), yet in this study it achieved high predictability. Furthermore, parameter estimation in the GM (1, 1) model is simply a least-squares fit of a linear regression. Therefore, GM can be applied successfully to predict PM when information is insufficient.

4 Conclusions

Seven types of GM (1, 1) model were used to predict hourly PM10 and PM2.5 concentrations in Banciao City of Taiwan, and their prediction performance was compared. The following conclusions can be drawn. All statistical values revealed that GM (1, 1, x (0)), GM (1, 1, a), and GM (1, 1, b) outperformed the other models. When predicting PM10, the minimum MAPE, MSE, and RMSE and the maximum R were 14.10%, 25.62, 5.06, and 0.96, respectively. When predicting PM2.5, the minimum MAPE, MSE, and RMSE and the maximum R were 15.24%, 11.57, 3.40, and 0.93, respectively. The results indicate that GM (1, 1) predicted hourly PM variation well compared with the MLR and ANN results reported in the literature. Additionally, GM (1, 1) can serve as an efficient early warning tool for providing PM information to the inhabitants.

References

Cunningham, W. P., & Cunningham, M. A. (2006). Principles of environmental science. Inquiry & applications. New York: McGraw-Hill.

Deng, J. (2002). The foundation of grey theory. Wuhan: Huazhong University of Science and Technology Press.

Deng, J. (2005). The primary methods of grey system theory. Wuhan: Huazhong University of Science and Technology Press.

Díaz-Robles, L. A., Ortega, J. C., Fu, J. S., Reed, G. D., Chow, J. C., Watson, J. G., et al. (2008). A hybrid ARIMA and artificial neural networks model to forecast particulate matter in urban areas: the case of Temuco, Chile. Atmospheric Environment, 42(35), 8331–8340.

Jaecker-Voirol, A., & Pelt, P. (2000). PM10 emission inventory in Ile de France for transport and industrial sources: PM10 re-suspension, a key factor for air quality. Environmental Modelling and Software, 15(6–7), 575–581.

Kolehmainen, M., Martikainen, H., & Ruuskanen, J. (2001). Neural networks and periodic components used in air quality forecasting. Atmospheric Environment, 35, 815–825.

Pai, T. Y., Hanaki, K., Ho, H. H., & Hsieh, C. M. (2007). Using grey system theory to evaluate transportation on air quality trends in Japan. Transportation Research Part D: Transport and Environment, 12(3), 158–166.

Pai, T. Y., Tsai, Y. P., Lo, H. M., Tsai, C. H., & Lin, C. Y. (2007). Grey and neural network prediction of suspended solids and chemical oxygen demand in hospital wastewater treatment plant effluent. Computers & Chemical Engineering, 31(10), 1272–1281.

Pai, T. Y., Chiou, R. J., & Wen, H. H. (2008). Evaluating impact level of different factors in environmental impact assessment for incinerator plants using GM (1, N) model. Waste Management, 28(10), 1915–1922.

Pai, T. Y., Chuang, S. H., Ho, H. H., Yu, L. F., Su, H. C., & Hu, H. C. (2008). Predicting performance of grey and neural network in industrial effluent using online monitoring parameters. Process Biochemistry, 43(2), 199–205.

Pai, T. Y., Chuang, S. H., Wan, T. J., Lo, H. M., Tsai, Y. P., Su, H. C., et al. (2008). Comparisons of grey and neural network prediction of industrial park wastewater effluent using influent quality and online monitoring parameters. Environmental Monitoring and Assessment, 146(1–3), 51–66.

Pérez, P., Trier, A., & Reyes, J. (2000). Prediction of PM2.5 concentrations several hours in advance using neural networks in Santiago, Chile. Atmospheric Environment, 34(8), 1189–1196.

Pisoni, E., & Volta, M. (2009). Modeling Pareto efficient PM10 control policies in Northern Italy to reduce health effects. Atmospheric Environment, 43(20), 3243–3248.

Pope, C. A. (2000). What do epidemiological findings tell us about health effects of environmental aerosols? Journal of Aerosol Medicine, 55, 1350–1354.

Ryan, W. F. (1995). Forecasting severe ozone episodes in the Baltimore metropolitan area. Atmospheric Environment, 29(17), 2387–2398.

Shi, J. P., & Harrison, R. M. (1997). Regression modelling of hourly NOx and NO2 concentrations in urban air in London. Atmospheric Environment, 31(24), 4081–4094.

Slini, T., Kaprara, A., Karatzas, K., & Moussiopoulos, N. (2006). PM10 forecasting for Thessaloniki, Greece. Environmental Modelling and Software, 21, 559–565.

Wang, W., Lu, W., Wang, X., & Leung, A. Y. T. (2003). Prediction of maximum daily ozone level using combined neural network and statistical characteristics. Environment International, 1049, 1–8.

Cite this article

Pai, TY., Ho, CL., Chen, SW. et al. Using Seven Types of GM (1, 1) Model to Forecast Hourly Particulate Matter Concentration in Banciao City of Taiwan. Water Air Soil Pollut 217, 25–33 (2011). https://doi.org/10.1007/s11270-010-0564-0
