Abstract

Inertial sensors based on micro-electro-mechanical systems (MEMS) technology, such as accelerometers and angular rate sensors, are cost-effective solutions used in inertial navigation systems in a broad spectrum of applications that estimate position, velocity and orientation of a system with respect to an inertial reference frame. Although they present several advantages in terms of cost and form factor, they are prone to various disturbances such as noise, biases, and random walk that degrade their orientation estimation. The task of an orientation filter is to compute an optimal solution for the attitude state, consisting of roll, pitch and yaw, through the fusion of angular rate, accelerometer, and magnetometer measurements, regardless of the underlying environmental constraints. The aim of this paper is threefold: first, it serves researchers and practitioners in the signal processing community seeking the most appropriate attitude estimators that fulfills their needs, shedding light on the drawbacks and the advantages of a wide variety of designs. Second, it serves as a survey and tutorial for existing estimator designs in the literature, assessing their design aspects and components, and dissecting their hidden details for the benefit of researchers. Third, a comprehensive list of algorithms is discussed for a fully functional inertial navigation system, starting from the navigation equations and ending with the filter equations, keeping in mind their suitability for power-limited embedded processors. The source code of all algorithms is published, with the aim of it being an out-of-box solution for researchers in the field. The reader will take away the following concepts from this article: understand the key concepts of an inertial navigation system; be able to implement and test a complete stand alone solution; be able to evaluate and understand different algorithms; understand the trade-offs between different filter architectures and techniques; and understand efficient embedded processing techniques, trends and opportunities.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

An integrated navigation system exploits the complementary characteristics of different navigation sensors, such as gyroscopes, accelerometers, magnetometers, and global navigation satellite systems GNSS, to increase the precision of the navigation solution. For example, it can compute a high quality pose estimate of a vehicle’s position and orientation (up to an accuracy of 0.00l degrees per roll, pitch and yaw axis [1]). Specifically, the underlying attitude (orientation) estimation problem is common to a wide area of applications, ranging from unmanned aerial vehicles (UAVs), virtual reality applications, underwater submersible systems, robots and ground vehicles, to medical instruments and surveying equipment. In addition, recent advances in MEMS have led to a very wide range of low-cost, light-weight and accurate components that increase the reliability of the navigation solution significantly. Nowadays, the navigation technique common to almost all integrated navigation systems is the strapdown inertial navigation technique. In a strapdown inertial navigation system (INS), an inertial measurement unit (IMU), mounted to the vehicle, senses accelerations and angular rates for all six degrees of freedom of the vehicle. From this data, a strapdown algorithm (SDA) can compute a navigation solution, assuming that initial position, velocity and attitude are known [2]. INS-SDAs must be reliable and computationally-efficient in order to suit low-end applications with power-limited processing capabilities [3].

The brain of a typical robot vehicle is called a navigation computer or controller [4]. The controller uses on-board sensors to estimate its current position and orientation. Figure 1 shows a simplified block diagram of a generic autonomous vehicle computer, including a mission controller, a state estimator and a command controller. State estimation is implemented by fusing the raw measurements from a set of state-observing sensors, and forming an estimate of the vehicle’s state (position and attitude) [5]. The vehicle controller algorithms automatically manipulate the actuators on-board the vehicle to achieve a set of trajectory commands using the system states (position and orientation) as feedback. The trajectory commands are generated by the mission controller, which could be a human operator or a set of algorithms that convert mission objectives into trajectory commands [6] (Fig. 1).

This paper aims at providing an overview of various attitude estimation techniques, building up the knowledge of the reader from basic principles, as well as providing insight in an intuitive manner for concepts hidden between the lines of sophisticated equations governing the system as a whole. The rest of the article is organized as follows:

-

Section 2 provides background on the context of why attitude estimation filters are important.

-

Section 3 gives a basic overview of attitude estimators currently in use, their components and applications.

-

Section 4 describes various coordinate systems used in inertial navigation systems and defines the transformation of coordinates from one frame to another. Of primary concern is their relative orientation (Fig. 2).

-

Section 5 discusses and elaborates on applied inertial navigation algorithms, and presents efficient pseudo-codes of various INS algorithms.

-

Section 6 develops the basic INS error equations and gives insights on deriving a simplified system model of the same error equations without delving deeply into rigorous mathematical proofs. This aims at giving the reader intuition into one of the most basic components of an inertial navigation system.

-

Section 7 discusses the components and the computational aspects of Kalman filters. Also efficient algorithms targeted for embedded processors are presented.

-

Section 8 is dedicated to insights of the Kalman filter implementations. It points the readers’ attention to some of the practical aspects to be considered when designing an attitude estimation filter.

-

Section 9 presents different approaches to solving the attitude estimation problem, which are very efficient in terms of computational load and power consumption.

2 Background on Filters

In this section, we provide a background introduction of the position of attitude estimation filters in the context of inertial navigation systems in general. We also demonstrate the development phases, and provide a brief description of the major mile-stones in its history.

2.1 Attitude Estimation Filters and Inertial Systems

The heart of any inertial navigation system is a fully calibrated and embedded inertial measurement unit (IMU) like the 3DM-CV5 from LORD MicroStrain or the series of IMUs from XSens Technologies BV (Fig. 3.). IMUs should deliver accurate temperature-compensated (see Section 8 for sensor calibration and compensation) inertial sensor data from three gyroscopes and three accelerometers to a navigation floating-point digital signal processor (DSP) [7, 8].

Commercial attitude and heading reference systems with built-in IMUs from XSens Technologies BV and LORD MicroStrain, with three accelerometers, three gyroscopes, three magnetometers and three temperature sensors for sensor calibration. These IMUs include coning and sculling compensation (see Section 5), which enables them to deliver attitude data at low rates without loosing accuracy.

Small angular increments (dϕFootnote 1) terms obtained from gyroscope sensors are compensated for fine gyro-biasFootnote 2 corrections and then integrated using fast quaternion algorithms (Section 5) to derive a 3 × 3 (9 element) direction cosine matrix (Fig. 4), which defines the instantaneous orientation of the vehicle relative to the local level earth-centered coordinates (North, East, Down) [9, 10]. Sensed velocity increments (dVFootnote 3) obtained from accelerometers are then transformed into delta velocity incremental components (with the aid of the direction cosine matrix) in the local-level earth-referenced coordinate frame.

The inertial measurement unit is the basic element in any inertial navigation system. Raw data acquired from the IMU in the form of delta velocities and delta angles are integrated and converted to the navigation frame. When in the navigation frame, delta velocities can be added to estimated wind speeds to predict true airspeed and thus predict air-distances. Air distances are essential in estimating the amount of energy consumption (fuel or battery) for any flying vehicle.

Since the IMU also senses gravity, the delta velocities contain components of integrated gravity [11]. To compensate for this gravity component in the down direction, we subtract it from the delta-velocity components and then integrate to give velocity components in the local-earth referenced coordinate frame (Vn, Ve and Vd), which are subsequently integrated further to produce updated values of latitude, longitude and altitude. Using the direction cosine matrix, heading, roll and pitch values are computed [12].

Usually a high speed Kalman filter propagated and updated at a predefined computation rate is used to estimate, align, and correct system computed states and residual fine inertial sensor bias values. This is done using measurement aiding from multiple sources including, GPS-receiver’s position and velocity, barometric pressure sensors and magnetic heading. Velocity measurements is required to enable the system to maintain a mathematical representation of horizontal [13]. When the vehicle is moving, and especially when velocity is changing, you have no means of separating sensed gravitational acceleration from true acceleration, and a slight mathematical misalignment in your horizontal model will result in erroneous measurements of acceleration (see Section 6) since you will be sensing a component of gravity. If you have a velocity reference (from airspeed sensors, wheel-encoders or GPS, etc.) you can correct the misalignment, maintain a true representation of horizontal, and integrate acceleration and velocity correctly [14]. The way one maintains alignment in modern day navigation systems is to use a Kalman filter [15].

2.2 Development History

The fundamental principles (laws of mechanics and gravitation) on which inertial navigation is based was discovered by Isaac Newton in the seventeenth century [16]. Despite of this, it was about another two centuries before inertial navigation techniques could be demonstrated. A brief chronology of the history of inertial navigation systems is given as follows:

-

1852 - The gyroscopic effect was discovered by Foucault who was the first to use this word.

-

1923 - Schuler invents a device that enables a vertical reference to be defined [17].

-

1920 - Directional gyroscopes and artificial horizon instruments were produced for aircrafts.

-

1930 Boykow introduced the idea of using accelerometers and gyroscopes to build a functional inertial navigation system.

-

1949 - The first publication suggesting the strapdown inertial navigation concepts.

-

1950’s - The accuracy of gyroscopes increases incredibly, reducing their errors from 15∘/hour to about 0.01∘/hour.

-

1960’s - The start of the ring laser gyroscope and wide spread of the so-called stable platform technology.

-

1961 - NASA awarded MIT laboratory (later to become the Charles Stark Draper Laboratory), a contract for preliminary design study of a guidance and navigation system for Apollo [18, 19].

-

1970’s - Advances in technology converged to make strapdown systems available [20].

-

1980’s - Developments of higher-order gravity models enabled trajectory accuracy improvements of approximately an order of magnitude [21, 22]

-

1990’s -A non-gyroscopic inertial measurement unit was proposed that consisted of a triad of accelerometers mounted on three orthogonal platforms rotating at constant angular velocities [23].

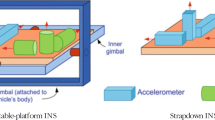

Nearly all IMUs fall into one of the two categories; stable platform systems Fig. 5b or, strapdown systems, Fig. 5a. The difference between the two categories is the frame of reference in which the rate-gyroscopes and accelerometers operate.

The strapdown system replaces gimbals with a computer that simulates their presence electronically. In the strapdown system, the gyroscopes and accelerometers are rigidly mounted to the vehicle structure so that they move with the vehicle. In a three axis gimballed platform, the gyros alone will try to maintain the platform aligned in inertial space. If the platform is operating in local-level coordinates, the navigation computer must keep the platform horizontal. It does this by sending command signals to the gyros that otherwise would fight the gimbals motion.

2.2.1 Stable Platform Systems

In stable platform system types, the inertial sensors are mounted on a platform which is isolated from any external rotational motion. In other words the platform is held in alignment with the global frame. This is achieved by mounting the platform using gimbals (frames) which allow the platform freedom in all three axes. The platform mounted gyroscopes detect any platform rotations. These signals are fed back to torque motors which rotate the gimbals in order to cancel out such rotations, hence keeping the platform aligned with the global frame. To track the orientation of the device the angles between adjacent gimbals can be read using angle pick-offs. To calculate the position of the device the signals from the platform mounted accelerometers are double integrated. Note that it is necessary to subtract acceleration due to gravity from the vertical channel before performing the integration.

2.2.2 Strapdown Systems

In strapdown systems, the inertial sensors are mounted rigidly onto the device, and therefore output quantities are measured in the body frame rather than the global frame. To keep track of orientation the signals from the rate gyroscopes are ‘integrated’, as described in Section 5. To track position the three accelerometer signals are resolved into global coordinates using the known orientation, as determined by the integration of the gyro signals. The global acceleration signals are then integrated as in the stable platform algorithm.

Stable platform and strapdown systems are both based on the same underlying principles. Strapdown systems have reduced mechanical complexity and tend to be physically smaller than stable platform systems. These benefits are achieved at the cost of increased computational complexity. As the cost of computation has decreased strapdown systems have become the dominant type of INS.

More recently, there has been significant developments in inertial sensors, especially gyroscopes with large dynamic range giving the strapdown principles opportunity to be realized. This has enabled the complexity and size of inertial navigation systems to be reduced, as well as enabling reliable [24] inertial sensors to be produced at a relatively inexpensive price, which led to significant advancements in a diversity of applications (see Section 3).

3 Aided Inertial Navigation Systems

The inspiration of any system integration concept, is to get superior execution than would be conceivable by any of the stand-alone systems. This section starts with a look at the qualities of GNSS and INS systems that make them so appropriate to combine together [25]. The subtleties for combining the systems together will be examined afterward within this section.

One of the imperative points of interest of inertial (gyroscopes and accelerometers) systems is that they require no interaction with the environment past the client. This is attractive particularly to clients where outside supporting cannot be depended upon or is rare. In another sense, no external interference besides the client is needed for the INS to work properly. On the contrary, GNSS systems, which depend on signals transmitted from satellites, obviously, can’t be ensured in all cases, and transitory blackouts going from seconds to minutes might be conceivable, contingent upon the application and working environment (see Fig. 6).

The only restriction concerning the output rate of the inertial system, is the computational power of the INS host computer. Some INS’s are able of delivering the navigation state vector at 100 Hz or more. On the other hand, most GNSS receivers have data rates of 1 to 20 Hz, in spite of the fact that a few specialized receivers can give yield up to 100 Hz. Expressed in an another way, the bandwidth of the navigation states delivered by inertial system is regularly much higher than with GNSS, which is vital in guidance and control and for high-dynamic applications.

GNSS and INS are complementary in terms of the information they provide. In particular, despite the fact that GNSS can provide an attitude solution, this is usually dodged in practice because it includes employing multiple receiver antennas and expensive equipment, while attitude is the main output of INS algorithms.

Most critically, GNSS and INS systems are also complementary in terms of their errors (Table 1). While low-cost INS inertial sensors are error unbounded, GNSS provides velocity and position estimates that are limited and bounded in terms of their errors. Also, GNSS systems are dominated by high-frequency errors while INS systems are susceptible to low-frequency errors due to the integration (effectively a low-pass filter) of the mechanization equations (see Section 6).

With respect to what has been mentioned, whenever GNSS and INS systems are fused, the GNSS can deliver high-fidelity position and velocity measurements that can bound the INS system generated errors, which in turn delivers high frequency navigation states (attitude, position and velocity) needed for guidance and control of vehicles. The INS system can also maintain good accuracy in case of outages of GNSS during temporary blockage of receiver antennas. These are the main reasons that motivate the integration of both systems nowadays.

In this article, we will demonstrate to the reader how both systems (INS and GNSS) can be fused together for an optimal navigation solution. It is helpful to always remember that the navigation solution is obtained by integrating the acceleration readings to obtain velocity and by double integrating the sensed accelerometers to obtain position.

3.1 Applications of Inertial Navigation Systems

Inertial navigation systems are used extensively in every day applications, covering aircraft navigation, spacecrafts, robots, unmanned aerospace vehicles and ships. As well, many novel applications include, active suspension of high performance racing cars, Stewart platform simulators and surveying of underground oil pipelines and wells. They can also be applied to many advanced medical equipment, such as MRI devices, surgical robots and intelligent beds. The use of inertial navigation systems is widely spreading in the medical field, for example, in the manufacturing of wheel chairs based on inertial systems. They have been placed on head trackers of disabled people where they can choose where to go and in what direction solely by moving their heads.

Due to such diversity of fields where inertial systems may be applied, a broad range of inertial sensor accuracy is required (especially for gyroscopes, see Fig. 7).

Also, since inertial systems differ in the amount of time they will be required to report accurate data, it is necessary to choose the sensors accordingly. For example, many airborne systems may need to provide accurate position and attitude data for several hundreds of kilometers or several hours. In this instance, it is necessary to rely on inertial sensors having very low residual gyroscope biases, having the order of 0.001 degrees per hour. Other cases involving marine or space applications may be required to provide accurate data for weeks or even months. In these extreme cases, gyroscopes having bias errors on the order of 0.0001 degrees per hour are mandatory. In some cases such as torpedo guidance operating for a few minutes, it is sufficient to rely on sensors with moderate accuracy (0.1 to 100 degrees per hour, see Fig. 7).

4 Coordinate Frames and Transformations

The attitude of a vehicle is defined as its orientation with respect to a reference frame. It is substantial to understand the different coordinate frames used in inertial navigation systems and their transformations to grasp its concepts. In this section we will discuss the basic coordinate frames that have three orthogonal unit vectors and that follow the right-hand rule [29].

4.1 Coordinate Frames

The measurement sensed by an Inertial Measurement Unit (IMU) are three orthogonal components of the body rotation rates and three accelerations in a coordinate frame, which is not directly related to any coordinate frame. These measurements have to be analytically integrated and transformed through several coordinate frames. It is important therefore that all coordinate frames involved in the transformation of the measurements, and results of integration are well defined before any discussion of an inertial navigation system is presented.

4.1.1 Earth-Centered Inertial (ECI) Frame

Newton defines the inertial frame as the frame of reference that does not rotate or accelerate.

Such a frame is not practically realized although, theoretically well defined. It is best approximated as one that is fixed with respect to the distant stars. For all practical purposes the inertial frame can be treated as the frame that has the following (see Fig. 8):

-

xi-axis towards the mean vernal equinox.

-

yi-axis completes a right handed system.

-

zi-axis towards the north celestial pole.

4.1.2 Earth-Centered, Earth-Fixed (ECEF) Frame

It is a right-handed coordinate system that rotates with and is attached to the earth, which is why it is called earth fixed. This frame is not inertial since, it revolves around the sun at an average orbital speed of 29.78 km/sec and rotates at a rate of 7.292115.10− 5 rad/sec. The Earth-fixed frame can be defined as follows:

-

Its origin at the mass center of the earth.

-

xe-axis pointing towards the Greenwich meridian in the equatorial plane.

-

ye-axis 90 degrees of Greenwich meridian, in the equatorial plane.

-

ze-axis is the axis of rotation of the earth and passes through the north pole.

In fact, it is important to note that the Global Positioning System (GPS) reports the position and velocity of the satellites in the ECEF coordinates system (Fig. 9).

4.1.3 Local-Level or Navigation Frame

It is a non-inertial frame that is commonly used to describe the navigation of a vehicle in a local-level frame. Its axes are aligned along the geodetic directions defined by the earth’s surface. Is is defined as follows:

-

Its origin is at the mass center of the vehicle under study.

-

The xn-axis points north parallel to the geoid surface.

-

The ye-axis points east parallel to the geoid surface, along a latitude curve.

-

The zd-axis points downward, toward the Earth surface, anti-parallel to the surface outward normal N.

4.1.4 Body Frame

The body frame is a non-inertial reference frame, in which the measurements of a strapdown inertial navigation system are reported. Its axes are aligned with the output axes of the gyroscopes and accelerometers of the Inertial Measurement Unit (IMU). Thus, the raw data composed of the rotation rates and the accelerations experience by the body are coordinatized along the body axes. It is noted that the navigation frame can be rotated to the body frame by three consecutive right-handed rotations about its three axes (see Fig. 10). The definition of the body frame of an inertial navigation system can be summarized a follows:

-

Its origin is at the mass center of the inertial navigation system.

-

The xb-axis points towards the front of the INS.

-

The yb-axis points towards the right of the INS.

-

The zb-axis points downwards and perpendicular to the x-y plane.

4.1.5 Platform Frame

The platform frame is a virtual frame created mainly for the derivation of the error equations. It is an image of the navigation frame which is recognized on an on-board computer using the outputs from the sensors. Since these sensors are dominated by noise, the platform frame does not coincide with the navigation frame and has a small deviation error from the navigation frame. The definition of the platform frame is as follows:

-

Its origin at the mass center of the vehicle under study.

-

xp-axis slightly misaligned due to attitude errors with the xn-axis of the navigation frame.

-

yp-axis slightly misaligned with the xe-axis of the navigation frame and perpendicular to the xp-axis.

-

zp-axis completes an orthogonal right-handed system.

4.1.6 Sensor Frame

Due to installation errors, the body frame does not coincide with the sensitivity axes of the sensors (accelerometers and gyros) used in our inertial navigation system. These errors can be compensated during manufacturing by appropriate calibration. For this reason, we assume here that the body frame and the sensor frame coincide (they are interchangeable).

4.2 Transformations

In this section, the basic mathematical tools that define the transformation between orthogonal coordinate systems are introduced. We will focus mainly on the concepts of Rotation Matrix, Quaternions, and Rotation Vectors.

4.2.1 Rotation Matrix

A common coordinate transformation in this article is the rotation from the North-East-Down coordinate frame to the body x-y-z coordinate frame via the ordered Euler angles (see BOX A) yaw (ψ), pitch (𝜃), and roll (ϕ).

A sequence of such distinctive rotations is often called a Euler angle sequence of rotations. The restriction stated above that successive axes of rotations be distinct still permits at least 12 Euler angle sequences. The sequence xzy means a rotation about the x-axis, followed by a rotation about the new z-axis, followed by a rotation about the newer y-axis.

Specifically the first rotation is ψ about z which is denoted here as Cz(ψ). The second rotation is Cy(𝜃), or 𝜃 about y. Finally, the third rotation is Cx(ϕ) or ϕ about x (see Fig. 11). These three single axis rotations are written as:

Thus, the transformation from body x-y-z coordinate frame coordinate frame to the n-frame (North-East-Down) is written as a cascade of the three single-axis rotations above, which can be solved using standard matrix multiplication:

4.2.2 Quaternions

The rotation matrix describes the rotation of 3 degrees of freedom with 9 quantities, with redundancy. Euler angles and rotation vectors are compact but with singularity as mentioned before.

Normal complex numbers can describe rotations in a plane. Recall that in order to rotate a two degrees-of-freedom vector represented be complex number in the plane by an angle 𝜃, we multiply by ei𝜃. It can be written in the usual form

A quaternion q has a real part and three imaginary parts. Usually the real part is written first and the three imaginary parts next, as

where i,j,k are three imaginary parts of the quaternion. These imaginary parts satisfy the following equations:

Alternatively, quaternions are often represented using a scalar and a vector as:

Here s is the real part of the quaternion and v is its imaginary part. If the imaginary part of the quaternion is 0 it is called a real quaternion and if the real part is 0 it is called imaginary quaternion.

4.2.3 Rotation Vector

In fact, a rotation can be described by a rotation vector and a rotation angle. Thus we can use a vector whose direction is parallel to the axis of rotation and whose magnitude is equal to the angle of rotation.

Let us introduce a rotation vector Φ, which is directed along the axis of rotation and has a magnitude equal to the rotation angle in radians. The equation of the rotation vector can be defined as

where n is the unit vector in the direction of the rotation vector. α,β,γ are the angles between the rotation vector and the coordinate frame axis. The quaternion elements can be represented through the parameters of the rotation vector Φ as

We can show using Fig. 14 that the image of the vector v under rotation around the vector part of the quaternion q, and through an angle “2𝜃” where \(q_{0}=\cos \limits \theta \) (ϕ = 2𝜃 in Eq. 9) is the scalar part of the quaternion, q, to be the vector w.

The vector v can be resolved into a vector a along the quaternion and a vector n perpendicular to a, such that, v = a + n. Since a is aligned with q it is invariant under rotation. On the other hand, it can be easily proved geometrically that \(\boldsymbol {m}= \cos \limits 2\theta \boldsymbol {n} + \sin \limits 2\theta \boldsymbol {n}_{\perp }\).

5 Applied Inertial Navigation

The main steps in obtaining a solution for a navigation system problem are as follows (Fig. 15):

-

Gyro bias corrections

-

Quaternion integration

-

Direction cosine computation

-

Heading, Roll and Pitch computation

-

Delta velocity transformations to earth coordinates

-

Sensed gravity component removal

-

Velocity integration

-

Position and altitude integration

5.1 Gyro Bias Corrections

When looking at the output of inertial sensors like gyroscopes and accelerometers, you observe that there is a small offset in the average signal output even if the sensors are not moving. This phenomena is known as sensor bias. This bias is the result of physical properties of the sensors that change over time which often lead to increase in sensor bias. The physical properties of sensors are different and so each sensor needs to be calibrated individually. Usually, gyroscopes are factory calibrated for coarse biases but even though, residual biases still remain in the gyroscope outputs. Remember that bias changes so there is no constant value that can be used to compensate for bias. Advanced algorithms are run in real-time to estimate and adjust for these biases.

If not corrected for bias, the output orientation of the system will drift with time. Consider a bias of 0.1 deg/s in the gyroscope output. This means we would have a drift of, 0.1 × 60 = 6 degrees in the orientation after one minute. Thus, it is crucial to estimate theses biases and compensate for them in the host computer of your inertial navigation system. On-board filters use sensor fusion to predict the biases and correct for them. The dϕ terms extracted from the IMU are compensated for gyroscope biases by a simple subtraction operation as shown in Algorithm 1.Footnote 4

5.2 Coning Correction

In old navigation systems, the inertial measurement unit (IMU) was mounted on a gimballed platform that was maintained in a horizontal position whenever the vehicle rotated, so that the gyroscopes and the accelerometers did not rotate with the vehicle. Nowadays, in strap-down systems, the gyroscopes and accelerometers are attached and rotate with the vehicle. To obtain velocities and angles you have to time integrate the reported acceleration and angular rates which is a highly non-linear operation. In particular, if you have high speed motion occurring you have to do the integration really fast to prevent errors from creeping in. This implies the IMU should be sampled at a really high rate (e.g., 1000 Hz) to provide raw angular rates and accelerometer data for the integration process. Since this integration is part of the Kalman filter (see Section 7) process, it places a heavy burden on your processor. This forms a major confliction. On the one hand you need to get data a high rate to preserve integration accuracy. On the other hand you can’t afford dealing with this high throughput of data. So what the coning algorithm does is to reduce the heavy burden on the navigation processor by performing accurate high speed integration on-board the IMU processor. The output is in the form of delta theta which is the integration of the raw angular rate data. The benefit is that the delta theta quantity has already captured the integration non-linearities using a high speed coning algorithm. The resulting output still retains accuracy even at a slow rate (e.g., 100 Hz). These delta theta quantities are used by the quaternion integration block of the navigation processor to find the attitude of the system.

In order to implement the quaternion integration, the delta theta quantities which form components of the rotation vector ϕ = [dϕx, dϕy, dϕz] for one time step should be calculated. The general equation for the dynamics of this vector \(\dot {\boldsymbol {\phi }}\) can be expressed by the following equation:

where ϕ is the rotation vector that defines the attitude of the body frame B at general time t relative to frame B at time tm− 1, and ω is the angular rotation rate of frame B relative to inertial space coordinatized in frame B.

A more convenient form for practical implementation would require writing the sine and cosine terms as series expansions and ignoring any terms higher than third order. For example, through a series expansion, the scalar multiplier of the ϕ × (ϕ ×ω) term in Eq. 14 can be written as:

Hence, the rate of change of the rotation vector is given by

It can be shown through analysis that, to second order accuracy of ϕ,

with

where α is the integral of ω from time tm− 1 to time t. Thus, Eq. 14 becomes to second order accuracy:

Using Eq. 19, it is possible to determine the attitude rotation vector that relates the body B frame attitude at time tm relative to time tm− 1

with

where βm is by definition, the coning attitude motion from time tm− 1 to time tm. The variable βm has been named coning term since it measures the effects of coning motion present in ω. Coning motion is the condition where the angular velocity vector is itself rotating. As can be easily seen from Eq. 21, α and ω remain parallel when the angular velocity vector does not rotate. Hence, the βm terms zeroes out since the cross product in its integrand is zero. In this case, Eq. 20 reduces to

This condition can also be seen directly from Eq. 14 since the second and third terms on the right-hand-side zero out.

5.2.1 Coning algorithm

In this section, we will develop an efficient digital algorithm for calculating the coning term. The integration time in Eq. 22 can be divided into a time up to and after tl− 1, where tl− 1 is between tm− 1 and tm. From Eq. 22,

.

A similar process can be utilized to digitize (21) giving the following

Substituting α = αl− 1 + Δα(t) in Δβl of Eq. 24 we obtain

Equations 25 and 26 form the basis for a recursive digital algorithm at the high l rate of the on-board IMU processor to calculate the αm and the coning term βm of the low m rate of Eq. 20. What remains is to determine a digital integration algorithm for the integral term in Eq. 26.

In order to digitize the integral term in Eq. 26, it is wise to consider an linear analytical form for the angular rate vector ω between any two time steps tl− 1 and tl. Approximating ω profile as a constant a added to a linear build-up in time having rate b, we obtain

where both a and b are constant vectors. Therefore, both constants can be determined from current and previous values of Δαl

Substituting (28) in Eq. 27 and the integral part of Eq. 26 gives

When substituted in Eq. 26, the final result is

The overall digital algorithm for αm and the coning term βm is determined from the above results and abbreviated in Algorithm 2.

5.3 Sculling Compensation

Sculling on the other hand is basically analogous to coning but it has to do with the accelerometers instead of the gyroscopes. Coning relates specifically to an error in your angle measurement and so fundamentally it is coming from gyro data. On the other hand, sculling happens when you have a cyclic linear acceleration in combination with cyclic rotation. We call this sculling because it results in an apparent but erroneous velocity, and the characteristic motion that gives you this erroneous velocity looks like the sculling type of oar, where the oar sweeps back and forth. Without compensation, this would come out in the delta velocity quantity and the output would have that corruption built into it. For example, if you have very fast motion, especially a vibration-like oscillating motion at the same time that you have a slow sampling rate, you will be in trouble without the sculling compensation provided.

Therefore, in order to prevent delta velocity errors from creeping in, it is convenient to account for the body frame rotation \(C_{B_{ (t)}}^{B_{m-1}}\) during the m th computer cycle index period. To find delta velocities, we integrate the reported accelerometer measurements according to the following

where aSF is the accelerometer reported values and \(C_{B_{(t)}}^{B_{m-1}}\) the general direction cosine matrix defining the attitude of Frame B relative to Frame Bm− 1 for time t greater than tm− 1.

The \(C_{B_{(t)}}^{B_{m-1}}\) term in Eq. 31 can be expressed as:

where ϕ(t) = Rotation vector that defines the attitude of the body frame B at general time t relative to frame Bm− 1 at time tm− 1, and ϕ(t) = Magnitude of ϕ(t).

A first order approximation for Eq. 32 neglects \({\left [\boldsymbol {\phi }(t)\times \right ]}^{2}\) and approximates \({\sin \limits \phi (t)}/{\phi (t)}\) by unity. Assuming that the m cycle rate is selected fast enough to maintain ϕ(t) small, e.g., less that 0.05 radians, we can write ϕ(t) ≈α(t). In this case (32) becomes

Substituting (33) in Eq. 31 then yields to first order

Equation 34 can be further synthesized if we work on the integral term by first noting that:

Upon re-arranging this equation, we obtain

Trivially,

We now substitute for one of the terms on the right to obtain

Knowing that \(\dot {\boldsymbol {\alpha }}(t) = \boldsymbol \omega \) and \(\dot {\boldsymbol {v}}(t)= \boldsymbol {a}_{SF}\), Eq. 38 becomes

Substituting (37) for the integrand in Eq. 34 yields the following

It is easily verified that the integrand in Eq. 40 vanishes for the cases where the angular velocity term ω and the specific force aSF are non-rotating and having constant magnitudes. We conclude that the integral term in Eq. 40 represents a contribution from rotating high frequency components in Δvm.

The integral term in Eq. 40, denoted as “sculling”, measures the “constant” contribution to Δv under classical sculling motion (mariners propel boats using a single oar with an undulating motion) where the α(t) angular excursion term about one body frame axis is at the same frequency and in phase with the specific force aSF along another axis.

The other terms in Eq. 40, \(\boldsymbol {v}_{m}+\frac {1}{2}\left (\boldsymbol {\alpha }_{m} \times \boldsymbol {v}_{m} \right )\), represent a combination of both low-frequency and high frequency effects. In particular, \(\frac {1}{2}\left (\boldsymbol {\alpha }_{m} \times \boldsymbol {v}_{m} \right )\) is denoted as velocity rotation compensation term. With this terminology, Eq. 40 can be re-written as

and

where \({\Delta }\boldsymbol {v}_{Rot_{m}}=\) “Velocity Rotation Compensation” term, and \({\Delta }\boldsymbol {v}_{Scul_{m}}= \) “Sculling” term. In order to develop a digital algorithm for calculating the terms in Eq. 41, we follow an identical procedure to that used for the coning algorithm. We consider the integration in Eq. 41 as divided into portions up to and after a general time tl− 1 within the tm− 1 to tminterval so that it becomes

Now let us define the next l cycle time within the tm− 1 to tm interval so that at tl we can write

Substituting for the terms α and v using Eq. 34 and incorporating the definition for Δαl and Δvl, Eq. 44 becomes

As in the coning algorithm design process, we base our development on an assumed form for the angular rate and specific-force vectors during the tl− 1 to tl time interval. In this case, we propose a linearly changing angular rate and specific-force vector over the tl− 1 to tl time interval, where its coefficients are computed from current and past l cycle sensor samples.Thus we have:

where a.b,c,d = Constant vectors. With Eq. 46 and the Δα and Δv definitions in Eq. 44

Substituting (46) and (47) for the integrand in Eq. 45 yields:

where Tl = time interval tl − tl− 1, i.e., the l cycle computation period. The constants a,b,c, and d can be calculated for each tl− 1 to tl time interval using successive measurements of integrated angular rate and specific force acceleration increments from the inertial sensors. To determine the constants a,b,c, and d uniquely, it is required to take sample measurements from two successive intervals. For sensor samples taken at the l cycle rate the results are as follows:

Substituting the terms in Eq. 49 in Eq. 48 we obtain the desired equation for \(\delta \boldsymbol {v}_{Scul_{l}}\):

A digital algorithm from the above results and from the coning equations yields the sculling Algorithm 3.

5.4 Velocity Increments Transformation

The velocity increments (dvx, dvy, dvz) output from the sculling compensation algorithm are described in the body frame. In order to perform the velocity integration in the navigation coordinate frame, it is essential that we transform their values to the NED frame. This can be easily done with help of the direction-cosine-matrix \({C_{b}^{n}}\) as shown in Algorithm 4.

5.5 Quaternion Integration

Quaternion integration deals with the determination of the quaternion between the body and the navigation frame. A primary advantage of using the quaternion technique lies in the fact that only four unknowns are necessary for calculation of the transformation matrix, while the direction cosine method requires nine. The quaternion can also be expressed as a 4x4 matrix. Thus

where, as before, q0, q1, q2, q3 are quaternion components.

It can be shown that the quaternion analog of Puasson equation (see Section 6 (64)) has the form

where [w×] is the skew-symmetric form of the angular velocity vector w. The recurrent solution of the above equation can be determined (to first order) as

or

where dT is the sampling period and \(d{Q}=(I+ \frac {1}{2}[w\times ] dT)\) is usually called the update quaternion. It is the quaternion of a small rotation that can be represented using Eq. 9 as follows

Substituting in Eq. 54 the expression obtained is:

But \(\sin \limits \frac {{\|d\mathbf {\Phi }\|}}{2}\) and \(\cos \limits \frac {{\|d\mathbf {\Phi }\|}}{2}\) can be approximated using a third order expansion of the Taylor series:

The series expansion of Eq. 55 gives the following formula for the quaternion components:

where \(r=\frac {1}{2}-\frac {{\|d\mathbf {\Phi }\|}^{2}}{48}+\frac {{\|d\mathbf {\Phi }\|}^{4}}{3840}\). Substituting (58) into Eq. 51 we obtain Algorithm 5.

5.6 Normalizing Quaternion Parameters

According to the quaternion properties, its norm should be always equal to one, which means:

But unfortunately, the above condition can be violated due to calculation errors or rounding approximations. In order to remove this effect it is necessary to apply a normalization procedure.Since \( {q_{0}^{2}}+{q_{1}^{2}}+{q_{2}^{2}}+{q_{3}^{2}}\approx 1\) then we have:

is a very small number. Then normalizing each quaternion parameter by dividing by \(\sqrt {1-{\Delta }}\), and expanding using a Taylor’s series formula we obtain:

5.7 Direction Cosine Matrix Computation

The quaternions compose a four-element unit vector (q0, q1, q2, q3) obtained from the quaternion integration step. They can be efficiently used to find the elements of the 3-by-3 Direction Cosine Matrix (DCM). The outputted DCM performs the coordinate transformation of a vector in body axes to a vector in local-level navigation frame axes. The following algorithm will be used in the embedded processor to find the 9-elements of the DCM:

5.7.1 Roll, Pitch, and Heading Calculation (Euler Angles)

The Euler angles are three angles introduced by Leonhard Euler to describe the orientation of a rigid body with respect to a fixed coordinate system. Leonard Euler (1707-1783) was one of the giants inn mathematics [30]. Euler stated and proved a theorem that states that:

Any two independent orthonormal coordinate frames can be related by a sequence of rotations (not more than three) about coordinate axes, where no two successive rotations may be about the same axis.

When we say that two independent frames are related, we mean that a sequence of rotations about successive coordinate axes will rotate the first frame into the second. The angle of rotation about a coordinate axis is called an Euler angle. A sequence of such rotations is often called a Euler angle sequence of rotations. The restriction stated in the above theorem that successive axes of rotations be distinct still permits at least 12 Euler angle sequences. The sequence xzy means a rotation about the x-axis, followed by a rotation about the new z-axis, followed by a rotation about the newer y-axis.

We are specifically interested in the well-known Euler sequence called the Aerospace sequence. This sequence (zyx) is commonly used in aircraft and aerospace applications. For example, a primary flight instrument used in aircrafts, continuously relates the orientation of the aircraft to the local-level n-frame mentioned above.

From the n-frame,first a rotation through the angle ψ about the z-axis defines the aircraft heading. This is followed by a rotation about the new y-axis through an angle 𝜃 which defines the aircraft pitch. Finally, the aircraft roll angle ϕ, is a rotation about the newest x-axis. These three Euler angle rotations relate the body coordinate frame of the aircraft to the local-level n-frame.

6 Principles of Inertial Navigation

6.1 Navigation Equations

It is desirable to formulate the navigation equations in the earth-centered, earth-fixed frame (e-frame), since usually the measurements of the GNSS receiver are given in the e-frame. But usually we are more comfortable in dealing with n-frame coordinates since it is more trivial to deal with the North-East-Down directions. Recall that the coordinate directions of the n-frame are defined by the local horizon and by the vertical, and centered on the vehicle center of gravity (cog). Strictly speaking, no horizontal motion takes place in this frame since it is attached and fixed to the vehicle. Therefore, the navigation equations are not coordinatized in the n-frame because no horizontal motion takes place in this frame. Nevertheless, we will still refer to the n-frame coordinatization of the navigation equations as an Earth-referenced formulation in which the velocity components are transformed along the n-frame coordinate directions. This concept is rarely discussed in the literature and usually is a source of confusion.

A vector in the e-frame (navigation frame) has coordinates in the i-frame (inertial frame) given by

where \({C_{e}^{i}}\) is the transformation matrix from the e-frame to the i-frame. The time derivative of this matrix is given by [31]

where \({\Omega }_{ie}^{e}\) denotes a skew-symmetric matrix with elements from \(\boldsymbol {\omega }_{ie}^{e}=(\omega _{1}, \omega _{2}, \omega _{3})\)Footnote 5 is then given by

We also need the second time derivative which from Eq. 64 and the chain rule for differentiation is given by

Now differentiating (63) twice with respect to time yields

Solving for \({\ddot {\boldsymbol {x}}}^{n}\) and combining with \({\ddot {\boldsymbol {x}}}^{i}= {\boldsymbol {g}}^{i}+{\boldsymbol {a}}^{i}\) gives the system dynamics for position in the e-frame:

Since the earth has a constant angular velocity with respect to the inertial frame, then \({\dot {\Omega }}_{ie}^{e}=0\) and we obtain

We can transform the navigation equation above into the n-frame merely by substituting \({\dot {\boldsymbol {x}}}^{e} = {C}_{n}^{e}{\boldsymbol {v}}^{n}\) on the right-hand side of Eq. 69

and on the right hand side of Eq. 69 we use the formula \({\Omega }_{ie}^{n}={C_{e}^{n}}{\Omega }_{ien}^{e}{C_{n}^{e}}\), and the result is:

The last two terms are, respectively, the gravitational vector and the centrifugal acceleration due to the Earth’s rotation, coordinatized in the n-frame. Together they define the gravity vector:

The distinction between the terms gravitation and gravity, refers to the difference between the acceleration due to mass attraction, alone, and the total acceleration, gravitational and centrifugal, that is measured at a fixed point on the rotating earth. Gravity has a direction that coincides with the direction of a plumb line at any given point in space. The direction of a plumb line coincides with the direction of a string to which a freely suspended weight, or plumb bob, is attached.

Finally, by writing \({\Omega }_{en}^{n}={\Omega }_{in}^{n}+{\Omega }_{ei}^{n}={\Omega }_{in}^{n}-{\Omega }_{ie}^{n}\)Footnote 6 and by manipulating the subscripts, we also obtain

Substituting (73) and (72) into Eq. 71, the desired form of the n-frame navigation equations becomes:

The components of the Earth-referenced velocity, vn, the sensed acceleration, an, and the gravity vector, ḡn, can be described by their north, east, and down components in the n-frame as follows:

6.2 Error Dynamic Equations in the n-Frame

The equations of motion depict the time development of the user’s position, speed, and attitude under perfect conditions. The way in which errors proliferate in an INS can be computed by applying a first-order Taylor series development (linearization), or perturbation investigation, to the equations of motion derived in the previous sub-section. The coordinatization of the error dynamics in the n-frame represents the traditional and most intuitive scheme of analyzing INS errors.

6.2.1 Orientation Error Dynamics in the n-frame

The perturbation δan is interpreted as the error in the expression in the n-frame of the sensed acceleration. It represents not only accelerometer errors but also orientation errors that are committed when transforming sensed accelerations from the sensor frame (s-frame) to the n-frame. Taking differentials of the relation \(\boldsymbol {a}^{n}={C_{s}^{n}}\boldsymbol {a}^{s}\), we obtain:

The differential \(\delta {C_{s}^{n}}\) is caused by errors in the orientation of the s-frame with respect to the n-frame. It is convenient to represent \(\delta {C_{s}^{n}}\) in terms of small error angles, one for each of the n-frame axes: ψn = (ψn, ψe, ψd)T. This can be represented in the equivalent form of a skew-symmetric matrix:

Since ψn, ψe and ψd represent small angle rotation errors, the transformation matrix from the true n-frame to the erroneously computed n-frame (inside the INS computer), can be written as I −Ψn. This can be easily achieved by substituting the small angle errors (see Fig. 16) inside the DCM (4) on page 7, and approximating it to first order. Therefore, the computed transformation may be represented as a sequence of two transformations comprising first the true transformation from the body frame (n-frame or s-frame ) to the erroneously computed n-frame followed by another transformation from the true n-frame to the erroneously computed n-frame:

The platform frame \(\hat {N}\hat {E}\hat {D}\) is a virtual frame that is slightly misaligned from the true navigation frame. It is mainly created for the derivation of the error equations. It is only recognized by the INS on-board computer and it results from the erroneous gyroscope sensors that are integrated to give this false navigation frame.

It is now clear that:

Substituting (79) in Eq. 76, we obtain

We now establish the dynamic behavior of the error angles as in the form of a differential equation. Taking the differential of \(\dot {C}_{s}^{n}={C}_{s}^{n} {\Omega }_{ns}^{s} \)

where the perturbation in angular rate, \(\delta {\Omega }_{ns}^{s}\), is interpreted as the error in the corresponding computed value, denoted by \(\hat {\Omega }_{ns}^{s}\):

Differentiating the second line of Eq. 80 with respect to time and setting the result equal to the right side of Eq. 81, we get

Substituting for \(\boldsymbol {\Psi }^{n} {C_{s}^{n}}\) and solving for \(\dot {\boldsymbol {\Psi }}^{n}\) yields

in terms of vectors, it is easily verified that this is equivalent to

where \(\delta \boldsymbol \omega _{ns}^{s}\) is the error in the rotation rate of the s-frame with respect to the n-frame. For small vehicle velocities, the angular velocity of the navigation frame is negligible,Footnote 7 consequently, \(\boldsymbol \omega _{ns}^{s}\approx \boldsymbol \omega _{is}^{s} \), where \(\boldsymbol \omega _{is}^{s}\) is the angular velocity vector delivered by the gyroscope.Footnote 8

Equation 85 can be discovered directly with no need for any rigorous derivation, simply by recognizing that, the errors in the orientation angular rates, \(\dot \psi _{n}, \dot \psi _{e}\), and \(\dot \psi _{d}\) are nothing but the transformations of the gyroscope angular rate errors, \(\delta \omega _{Gx}^{s}, \delta \omega _{Gy}^{s}\), and \(\delta \omega _{Gz}^{s}\),Footnote 9 (gyroscope biases in practice) from the body frame to the navigation frame, which when expanded can be written in the form (for convenience the body frame and the sensor frame are considered coincident):

In Eq. 86 the 3 × 3 matrix can be determined by using Algorithm 7, and the δω terms represent the gyroscope biases along the three axes x, y, and z of the body frame.

Since we are interested in implementing our algorithms on an embedded processor we need to discretize (86). With the help of BOX B on page 9, we obtain the following discretized version:

6.2.2 Velocity Error Dynamics in the n-Frame

The objective in the n-frame coordinatization is to formulate the error dynamics with respect to the geodetic coordinates (ϕ,λ,h). We write the perturbation from the compact form of the velocity navigation (74).

Of note in the above equation are the errors in the computation of the local gravity vector, ḡ. This vector is not a constant, and varies as a function of location as identified in the matrix of gravity gradients, Γ̄n = ∂ḡn/∂pn. Position errors, δpn, lead to errors in ḡ which, in turn, lead to velocity errors. Using Eqs. 12 and 13 in Box B on page 9 it can be easily shown that:

Since our intention is to give a hands-on experience for the reader of this manuscript, it is instructive to provide the approximations usually applied in commercial integrated navigation systems to the error equations. Typically, for general applications where n-frame velocities don’t exceed, say, 120 m/s, the term \(\delta ({{\Omega }}_{in}^{n}+{{\Omega }}_{ie}^{n}){\boldsymbol {v}}^{n}\) (in view (89) is lower than 10− 5m/s2. This can be neglected in case of low-cost MEMS are being used in the INS, since the the acceleration errors, δan, due to accelerometer biases and gyro drift are much higher. It is important to stress that gyro drifts lead to an erroneous transformation of sensed acceleration from body to navigation frame as shown later in this section. For small navigational velocities, the angular rate of the vehicle \({\Omega }_{en}^{n}\), is relatively much smaller than the angular rate of the Earth \({\Omega }_{ie}^{n}\), which is already lower than the noise level found in typical MEMS sensors. Thus for an elementary analysis we may consider the second term on the right hand side to be zero in the error dynamics of Eq. 88. Neglecting the gravity relatedFootnote 10 terms in Eq. 88 we obtain:

Taking the second part of Eq. 80 and using an ≈ (an, ae,−ḡ) since in case of low vehicle velocity in the navigation frame the accelerometer in the down direction is overshadowed by the gravity vector, \(a_{d}\approx -\bar g\), we obtain:

where we have used the notation \( \delta \boldsymbol {a}_{A}={C_{s}^{n}} \delta \boldsymbol {a}^{s}\) (notation adopted from [33]) to identify the accelerometer error vector produced in the body frame, but projected on the navigation frame.

It is instructive to prove the velocity error dynamics, simply by relying on insights into Fig. 17. We shall re-prove the error dynamics for the first component in Eq. 91, δvn. The interested reader is invited to prove the error dynamic equations of the other terms. From a geometrical viewpoint of Fig. 17a, we have:

For ψe ≈ 0 and \(a_{d} \approx -\bar g\), Eq. 92 becomes:

which implies that:

Similarly, looking at Fig. 17b, we have:

For ψd ≈ 0, Eq. 95 becomes to first order:

which implies that:

So the total error in an, is the superposition of the terms produced by ψd and ψe, thus we have:

Adding to Eq. 98 the error in acceleration caused by the bias term δaAn we obtain:

which is nothing but the first row in Eq. 91.Footnote 11

Discretizing (91) with the aid of BOX C, we can easily obtain the following discrete velocity error equations

6.2.3 Position Error Dynamics in the n-Frame

Referring to footnote 7 on page 19 we can write:

but since \(\lambda = { v_{e}}/{R\cos \limits \phi }\) and \(\dot \lambda \approx 0\) (for small vehicle velocity), then Eq. 101 becomes:

The radius of the Earth, R, is taken to be constant neglecting the ellipsoidal nature of the Earth.

We should also include altitude h in our position error dynamic equations. To do so we can simply write:Footnote 12

In order to discretize (102) we approximate the derivatives by a finite difference, resulting in:

7 Kalman Filter

The Kalman filter is an estimation strategy, instead of being a filter. The fundamental strategy was designed by R. E. Kalman in 1960 [34], and has been improved further by various researchers since. The filter refreshes the estimates of the state vector which is persistently changing. These estimates are then updated using a set of measurements which are subject to noise [35]. The measurements should be written in terms of the parameters estimated, yet the measurements at a given time need not contain adequate information to uniquely decide the values of the state vector at the time. This is related to the concept of observability of the system. It closely mimics the case of solving a set of equations where the number of unknown variables exceeds the number of equations.

The Kalman filter utilizes information of statistical properties of the system in order to get ideal estimates of the data available. It maintains a set of uncertainties about the estimates that is carried from one iteration to another. It also carries a measure of correlation between the errors in the estimates of the states from iteration to iteration.

The Kalman filter is an efficient algorithm from computing point of view, since it is a recursive algorithm that only processes the latest measurements and forgets the old ones. In contrast, non-recursive algorithms waits until all measurements are available before beginning any estimate which is time and memory consuming.

7.1 Components of the Kalman Filter

The calculation scheme of the Kalman filter algorithm is shown in Fig. 18. The Kalman filter has five major components:

-

The state vector and its covariance matrix

-

The system model

-

The measurement vector and its covariance

-

The measurement model

-

The algorithm

The state vector is a set of parameters that the filter estimates. It is usually composed of the position, velocity and other navigation states or their errors. In our demonstration of the Kalman filter algorithm, we will estimate the errors in the parameters of an INS system, δ(⋅), instead of the parameters them self, (⋅). This implementation is called an error-state implementation. In contrast, when estimating absolute states of the system such as position, velocity, and orientation, the system is known as a total-state implementation. The error-state implementation separates the state into a “large” nominal state \(\hat {x}\), and a “small” error state, δx, such that \(x=\hat {x}+\delta x\). The error-state implementation can perform better due to the fact that the error dynamic equations we derived are linearized versions of their true equations (due to approximations) and therefore are more accurately evolved in time for small quantities.

The error covariance matrix P, represents the expectation of the square of the deviation of the state vector estimate from the true value of the state vector. The diagonal elements are the variance of the state estimates, while the off-diagonal elements represent the correlation between the errors in the different state estimates. In a Kalman filter, it is required to initialize the state vector and the covariance matrix. Usually, in error-state implementations the state vector is initialized to zero, while the covariance matrix elements are chosen by the designer to reflect the level of confidence of his a priori estimates of the initial state vector.

Each complete iteration of a Kalman filter consists of a propagation and an update step. The state vector and covariance matrix after being propagated in time and before updating, are denoted by, \(\hat {\boldsymbol {x}}_{k}^{-}\), and \(P_{k}^{-}\), respectively. Their counterparts following the measurement update are denoted by \(\hat {\boldsymbol {x}}_{k}^{+}\), and \(P_{k}^{+}\).

The vector z consists of a set of measurements related to the state-vector through a deterministic matrix H and with added noise v:

The measurement innovation, δz−, is the difference between the true measurement vector and the one computed from the state vector before a measurement update:

The measurement residual, δz+, is the difference between the true measurement vector and the one computed from the updated state-vector:

The standard Kalman filter assumes that the measurement errors form a zero-mean Gaussian distribution, uncorrelated in time, and with a noise covariance matrix R. The covariance matrix R is nothing but the expectation of the square of the measurement noise:

7.2 Kalman Filter Algorithm

The data flow of the Kalman filter algorithm is shown in Fig. 19

The following steps constitute the Kalman filter algorithm [36]:

-

1.

Calculate the transition matrix, Φk− 1;

-

2.

Compute the noise covariance matrix, Qk− 1;

-

3.

Propagate the state vector estimate from \(\hat {\boldsymbol {x}}_{k-1}^{+}\) to \(\hat {\boldsymbol {x}}_{k}^{-}\);

-

4.

Propagate the error covariance matrix from \(P_{k-1}^{+}\) to \(P_{k}^{-}\);

-

5.

Compute the measurement matrix, Hk;

-

6.

Calculate the measurement noise covariance matrix, Rk;

-

7.

Calculate the Kalman gain matrix Kk;

-

8.

Extract the measurement, zk;

-

9.

Update the state vector estimate from \(\hat {\boldsymbol {x}}_{k}^{-}\) to \(\hat {\boldsymbol {x}}_{k}^{+}\);

-

10.

Update the error covariance matrix from \(P_{k}^{-}\) to \(P_{k}^{+}\);

The first four steps comprise the system propagation phase of the Kalman filter. The last two steps comprise the update phase of the Kalman filter. Later we will see that it is not necessary to execute the update phase of the Kalman filter with every propagation step of the state-vector. On the other hand, the system propagation phase should be executed in every iteration of the Kalman filter.

7.2.1 Transition matrix

The transition matrix describes the dynamics of the system. It defines how the state-vector is propagated with time. It is not a function of any of the state vector parameters. If its elements are a function of time, then it should be updated with every iteration of the Kalman filter. Since we will derive the equations of an error-state Kalman filter, the elements of the state-vector x, will take the form of error terms (see Section 6).

The first three terms are gyroscope biases in the x,y, and z directions of the IMU sensor frame. They are considered as random constants, and they are easily modeled as unchanging elements in the state vector. For this reason, we have not derived their error dynamics in the previous section. The fourth term in the state vector is the accelerometer bias in the z direction. It is also modeled as a random constant. We have deleted the accelerometer biases in the x and y directions since they did not improve the accuracy of our estimated state vector. The collected set of transition elements are collected in one transition matrix (110) in a convenient form to directly observe which parameters are correlated, simply by looking at the first row and the first column entries. In contrast, it is very important to estimate the bias in the z direction to prevent the vertical channel, “h”, in our Kalman filter from diverging. The transition matrix as seen in BOX C is written as Φk− 1 = I + A ∗ dT. It is wise to write the transition matrix without the identity matrix due to the efficiency in calculations achieved in our Kalman filter as will be seen in this section. The elements of the transition matrix will written in Algorithm 9.

7.2.2 Error Propagation Matrix

In order to propagate the covariance matrix we have to apply the following equation:

In order to compute the propagation matrix efficiently, it is beneficial to consider the sparsity of the transition matrix. We shall apply a divide-and-conquer strategy where only the matrix multiplications involving non-zero elements of the transition matrix are executed. We will first compute the matrix, \(l={\Phi }_{k-1} P_{k-1}^{+}\), and then multiply the resulting matrix (we call it intermediate matrix) with \({\Phi }_{k-1}^{T}\).

To elaborate on the matrix multiplication issue, let us consider that we want to multiply two matrices, T and P, where T is sparse and P is not, such as:

The entry t12 contributes only to the first row of the resulting matrix, since the first row of the product uses the terms, t12 ∗ p21, t12 ∗ p22, and t12 ∗ p23, respectively, in its computations.

This strategy decreases the number of accesses to memory where the matrices are stored, since the relevant entries are only loaded once for each non-zero transition matrix entry. This greatly reduces the execution time for the Kalman filter on low-cost embedded processors where resources are limited.

The detailed steps involved in the computation of l, will be shown in Algorithm 10.

To continue the propagation computation of the covariance matrix we shall write the algorithm for the second part of the matrix multiplication and then finally add the system noise covariance matrix, Qk− 1. We apply the same strategy as above in Algorithm 11. The final stage in propagating the error covariance matrix is adding system noise as seen in Algorithm 12.

7.2.3 Measurement Matrix and Kalman Gain

The measurement matrix defines how the measurement vector varies with the state vector. This relation in Eq. 105 is repeated here for convenience:

The measurement noise vector in most applications is considered white with a few exceptions. It has a measurement noise covariance matrix, Rk, that may be assumed constant. In our typical implementation we are directly measuring some state vector elements (GNSS positions and velocities).

The Kalman gain matrix is used to determine the weighting of the measurement information in updating the state estimates. It is a function of the ratio of the uncertainty of the true measurement, zk to the uncertainty of the measurements predicted from the state estimates, \(H\boldsymbol {x}_{k}^{-}\).

The Kalman gain matrix is:

7.2.4 State Vector and Error Covariance Update

When ever we obtain a measurement the state vector is updated by the measurement vector using this formula:

Similarly, the error covariance matrix is updated with:

As the updated state vector estimate is based on more information, the updated state uncertainties are smaller than before the update.

We will continue this section by providing the detailed algorithms for the second (update) phase of the Kalman filter. This phase is comprised of calculating the matrix gain, Kk, updated state-vector \(\hat {\boldsymbol {x}}_{k}^{+}\), and updated error covariance matrix, \(P_{k}^{+}\). These three steps will be implemented for each new measurement obtained (theoretical details deferred to Section 8).

8 Filter Insights

8.1 Inverse Matrix Calculation

The most complex and time consuming part of the Kalman filter algorithm is finding the inverse of the matrix in the Kalman filter gain, Kk. In order to avoid this tedious calculation, we will prove that it is possible to avoid this inverse matrix calculation by a small trick.

We can write the Kk and the Hk matrices as follows:

and

Using this notation, we have, KkHk = K1H1 + K2H2 + ⋯. Thus, it is easily shown that the error covariance matrix can be written as follows:

Note that the sum of the first two terms is nothing but the updated error covariance matrix associated with one measurement. Adding the third term to it, we obtain the error covariance matrix after the second measurement is manipulated. As shown, it is possible to update the error covariance matrix after each reported measurement. This is legitimate as long as we do not propagate the error covariance matrix while manipulating the measurements. It is only required to compute the Kalman gain and correct the state vector after each update of the covariance matrix. The benefit of following this strategy is that the matrix inversion in the Kalman gain computation is transformed to a simple scalar inversion. So by taking any single measurement element only, we can update the covariance matrix and derive and apply system corrections for that single measurement element, much simpler than we can with multiple measurements. In this case, we avoid matrix inversion. It is important that we update everything with a single measurement before using the next measurement, and that we use the updated status before applying the next measurement. All updates must be performed before the next navigation integration cycle. We should always propagate the Kalman filter after every integration of the navigation equations, and only update the Kalman filter (and also make system corrections) whenever we have measurements. The frequency of these updates could be the same or less than the Kalman filter propagation frequency. It depends on the source of our measurements.

8.2 Error Dynamics Approximations

It is also beneficial to address the approximations we made in deriving the error dynamic equations, Eqs. 85 and 90, for those readers who may be concerned. With low-grade IMU we simply forget the Earth’s rotation and consider a local flat Earth. It is impossible to detect the very slow rotation of the earth with all the noise and random drift of the gyroscopes. There is no harm in including these factors but they are unlikely to make any improvements.

8.3 Error-State Kalman Filter

It is necessary to remember that we are performing an error-state Kalman filter where the estimated states are INS errors. After each Kalman filter iteration, the estimated states are applied to the corresponding navigation parameters, and thus the state vectors are reset to zero. Consequently, the state vector itself does not need to be propagated forward in time. This type of implementation is called a closed-loop implementation. It is still critical however that the state covariance be propagated using the following equation:

In this closed-loop technique, since the estimated errors are fed back every iteration, the Kalman filter states are zeroed which keeps the errors of the filter small. This has the effect of minimizing the errors introduced in linearizing the system or process model, since higher order terms in the Taylor series expansion gets smaller and smaller. This is in contrast to the open-loop implementation where there is no feedback, and thus, the states will get larger as time progresses.

We shall also explain a concept which is not very well common between Kalman filter practitioners, related to the idea of how velocity measurements (vn, ve, vd) are essential in estimating tilt errors ψn, ψe, and ψd.

Suppose that the INS is stationary (zero-velocity), and that we have a positive ψn error, which means that the system model does not have an exact representation of the rotation of the IMU relative to the local north pointing axis. Instead, it is rotated clockwise by the angle ψn about the north pointing axis. Then we will not have the correct transformation value for dve after performing the direction cosine transformation of dvx, dvy and dvz (Algorithm 4). The effect is that the east velocity will increment by an error equal to (ψn ∗ g ∗ dT). This is the same term relating the error δve to ψn in the transition matrix (110). Thus, the system computed velocity ve is no longer zero. But we know it is zero, so we give a measurement to the Kalman filter equal to − ve. This generates a whole set of corrections for the system, including highly weighted corrections to ve, ψn and gyroscope biases. The other terms are only weakly coupled to δve in the Kalman filter and have only very small, almost negligible corrections (for these corrections, you need the vn and vd measurements). We then apply quaternion correction, which reduces the error in ψn (as well as the other terms) so that, next time round, the error in ve is smaller. This way, after several cycles, we reduce the error in ψn until the roll and pitch values and ve are correct (gyroscope biases take a bit longer).

So as we can see, it is the accelerometers (delta velocities) which determine the alignment and not the gyroscopes. The gyroscopes are only there so that sensed rotation can be immediately applied to the attitude solution instead of waiting until the Kalman filter, aided by velocity measurements, eventually finds the new attitude angles (direction cosine matrix).

These are some recommendations for Kalman filter designers:

-

Check that you can read and display the IMU data with your software.

-

Prove that quaternion integration, body to navigation direction-cosine-matrix computation and roll-pitch-heading routines work without the Kalman filter, by starting level and manually rotating for a short time. To do this you do not need to transform and integrate delta velocities.

-

Transform, integrate and display velocities; they should rapidly become very large.

-

Develop your Kalman filter by displaying all the variances adjacent to their respective state matrix elements. Note that the typical error of the of a state element “i” (squared) should approximately equal to its corresponding i’th diagonal entry in the covariance matrix Pk.

8.4 System Model Error Sources

In the last few years, Microelectromechanical system (MEMS) gyroscopes and accelerometers are beginning to take market away from traditional inertial sensors like fiber optic gyroscopes (FOG) and ring laser gyroscopes (RLG). This take over has been occurring due to improved error characteristics, environmental stability, better bandwidth, enhanced g-sensitivity, and the plethora of of embedded computational power that can run advanced fusion and sensor error modeling algorithms. This transition couldn’t have been achieved if not for the advancements in MEMS technology which had remarkable improvements on the error characteristics of the sensors [37,38,39].

In system modeling in general, we only consider the main error sources. We ignore the terms which have little effect on system performance, but allow for these small effects as additional noise in other terms. This is not perfectly correct, but its is a good compromise, since it simplifies the mathematics significantly, minimises the size of the Kalman filter and works surprisingly well.

For example dϕx, dϕy, and dϕz are actual measurements and their values would be absolutely correct if there were no errors in scale factors, biases and mis-alignments, and therefore we do not consider errors in these terms (corrected for by rate-table Fig. 20). However, if there were additional errors in dϕx, dϕy, and dϕz, then these errors would come from other sources such as measurement electronics, g-sensitivity and non-linearity, which we have ignored. We have not modelled these errors in the mathematics but instead we do account for these additional errors by having larger values of system noise in the other terms.

Rate-tables are used for gyroscopes/IMU calibration or hardware-in-loop (HWIL) testing. Payloads are mounted on the table top platen. A pattern of threaded holes accept a variety of test loads. It is often equipped with high speed optical or electrical slip-rings to transmit data to and from the payload under test. It contains a direct drive brushless motor with dedicated amplifiers, controllers and a heavy duty power supply. Some of the main error sources that may be acquired from the calibration process include: sensor-to-axis misalignments, gyroscope and accelerometer scale factor errors, and bias errors. The rate-table is usually mounted inside a temperature chamber in order for the payload unit to be calibrated at equally spaced temperatures ranging from − 40∘C to + 85∘C [40].

A good example where we add ”unnecessary” noise in the Kalman filter is in δvn, δve, and δvd. Where could noise appear in these terms? A very minute amount could come from computing noise (e.g., loss of numerical values which are smaller than the least-significant-bit when integrating), but that’s all. So why do we add velocity noise in the Kalman filter? The answer is, it compromises for errors caused by non-considered effects and thus prevents the mathematics in the Kalman filter from exploding due to a reduced mathematical model.

8.5 IMU and GNSS Time Synchronization