Abstract

Learning to reliably perceive and understand the scene is an integral enabler for robots to operate in the real world. This problem is inherently challenging due to the multitude of object types as well as appearance changes caused by varying illumination and weather conditions. Leveraging complementary modalities can enable learning of semantically richer representations that are resilient to such perturbations. Despite the tremendous progress in recent years, most multimodal convolutional neural network approaches directly concatenate feature maps from individual modality streams, rendering the model incapable of focusing only on the relevant complementary information for fusion. To address this limitation, we propose a multimodal semantic segmentation framework that dynamically adapts the fusion of modality-specific features while being sensitive to the object category, spatial location and scene context in a self-supervised manner. Specifically, we propose an architecture consisting of two modality-specific encoder streams that fuse intermediate encoder representations into a single decoder using our proposed self-supervised model adaptation fusion mechanism, which optimally combines complementary features. As intermediate representations are not aligned across modalities, we introduce an attention scheme for better correlation. In addition, we propose a computationally efficient unimodal segmentation architecture termed AdapNet++ that incorporates a new encoder with multiscale residual units and an efficient atrous spatial pyramid pooling that has a larger effective receptive field with more than \(10\,\times \) fewer parameters, complemented with a strong decoder with a multi-resolution supervision scheme that recovers high-resolution details. 
Comprehensive empirical evaluations on Cityscapes, Synthia, SUN RGB-D, ScanNet and Freiburg Forest benchmarks demonstrate that both our unimodal and multimodal architectures achieve state-of-the-art performance while simultaneously being efficient in terms of parameters and inference time as well as demonstrating substantial robustness in adverse perceptual conditions.


1 Introduction

Humans have the remarkable ability to instantaneously recognize and understand a complex visual scene, which has piqued the interest of computer vision researchers in modelling this ability since the 1960s (Fei-Fei et al. 2004). There are numerous ever-expanding applications of this capability, ranging from robotics (Xiang and Fox 2017; Boniardi et al. 2019; Radwan et al. 2018b) and remote sensing (Audebert et al. 2018) to medical diagnostics (Ronneberger et al. 2015) and content-based image retrieval (Noh et al. 2017). However, several challenges arise from the multifaceted nature of this problem, including the large variation in types and scales of objects, clutter and occlusions in the scene, as well as outdoor appearance changes that take place throughout the day and across seasons.

Deep Convolutional Neural Network (DCNN) based methods (Long et al. 2015; Chen et al. 2016; Yu and Koltun 2016) modelled as a Fully Convolutional Network (FCN) have dramatically increased the performance on several semantic segmentation benchmarks. Nevertheless, they still face challenges due to the diversity of real-world scenes, which gives rise to mismatched relationships and inconspicuous object classes. Figure 1 shows two example scenes from real-world scenarios in which misclassifications are produced due to a decal on a train that is falsely predicted as a person and a traffic sign (first row), and due to overexposure of the camera caused by the vehicle exiting a tunnel (second row). In order to accurately predict the elements of the scene in such situations, features from complementary modalities such as depth and infrared can be leveraged to exploit properties such as geometry and reflectance, respectively. Moreover, the network can exploit complex inter-modal dependencies more effectively by directly learning to fuse visual appearance information from RGB images with learned features from complementary modalities in an end-to-end fashion. This not only enables the network to resolve inherent ambiguities and improve reliability, but also to obtain a more holistic scene segmentation.

Example real-world scenarios where current state-of-the-art approaches produce misclassifications. The first row shows an issue of mismatched relationships as well as inconspicuous classes, where a decal on the train is falsely predicted as a person and the decal text is falsely predicted as a sign. The second row shows misclassifications caused by overexposure of the camera due to the car exiting a tunnel (Color figure online)

While most existing work focuses on where to fuse modality-specific streams topologically (Hazirbas et al. 2016; Schneider et al. 2017; Valada et al. 2016c) and what transformations can be applied to the depth modality to enable better fusion with visual RGB features (Gupta et al. 2014; Eitel et al. 2015), it remains an open question how to enable the network to dynamically adapt its fusion strategy based on the nature of the scene, such as the types of objects, their spatial location in the world and the present scene context. This is a crucial requirement in applications such as robotics and autonomous driving, where these systems operate in continually changing environmental contexts. For example, an autonomous car navigating in ideal weather conditions can primarily rely on visual information, but when it enters a dark tunnel or exits an underpass, the cameras might experience under- or overexposure, whereas the depth modality will be more informative. Furthermore, the strategy to be employed for fusion also varies with the types of objects in the scene. For instance, infrared might be more useful for detecting categories such as people, vehicles, vegetation and boundaries of structures, but it provides little information on object categories such as the sky. Additionally, the spatial location of objects in the scene also has an influence: for example, the depth modality provides rich information on objects at nearby distances, but its quality degrades very quickly for objects that are several meters away. More importantly, the approach employed should be robust to sensor failure and noise, as constraining the network to always depend on both modalities and use noisy information can worsen the actual performance and lead to disastrous situations.

Due to these complex interdependencies, naively treating modalities as multi-channel input data or concatenating independently learned modality-specific features does not allow the network to adapt to the aforementioned situations dynamically. Moreover, due to the nature of this dynamicity, the fusion mechanism has to be trained in a self-supervised manner in order to make the adaptivity emergent and to generalize effectively to different real-world scenarios. As a solution to this problem, we present the Self-Supervised Model Adaptation (SSMA) fusion mechanism that adaptively recalibrates and fuses modality-specific feature maps based on the object class, its spatial location and the scene context. The SSMA module takes intermediate encoder representations of modality-specific streams as input and fuses them probabilistically based on the activations of individual modality streams. As we model the SSMA block in a fully convolutional fashion, it yields a probability for each activation in the feature maps which represents the optimal combination to exploit complementary properties. These probabilities are then used to amplify or suppress the representations of the individual modality streams, followed by the fusion. As we base the fusion on modality-specific activations, the fusion is intrinsically tolerant to sensor failure and noise such as missing depth values.
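The recalibrate-then-fuse idea can be sketched in a few lines of NumPy. This is a minimal illustration, not the published SSMA block: the bottleneck of two 1×1 convolutions, the reduction ratio `r`, the sigmoid gating and the parameter names are all assumptions chosen to show how a weight in (0, 1) per activation can amplify or suppress each modality before a final 1×1 fusion convolution.

```python
import numpy as np

def conv1x1(x, w, b):
    """1x1 convolution on an (H, W, C_in) map with weights (C_in, C_out)."""
    return np.einsum("hwc,cd->hwd", x, w) + b

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def ssma_like_fusion(x_rgb, x_depth, params):
    """Hypothetical SSMA-style fusion: gate every activation, then fuse.

    The bottleneck layout and parameter names are illustrative assumptions.
    """
    x = np.concatenate([x_rgb, x_depth], axis=-1)                        # (H, W, 2C)
    h = np.maximum(conv1x1(x, params["w_down"], params["b_down"]), 0.0)  # bottleneck + ReLU
    gates = sigmoid(conv1x1(h, params["w_up"], params["b_up"]))          # one weight per activation
    recalibrated = x * gates                                             # amplify or suppress
    fused = conv1x1(recalibrated, params["w_fuse"], params["b_fuse"])    # back to C channels
    return fused, gates

rng = np.random.default_rng(0)
H, W, C, r = 4, 5, 8, 4  # toy sizes; r is the assumed bottleneck reduction ratio
params = {
    "w_down": rng.normal(size=(2 * C, 2 * C // r)), "b_down": np.zeros(2 * C // r),
    "w_up": rng.normal(size=(2 * C // r, 2 * C)), "b_up": np.zeros(2 * C),
    "w_fuse": rng.normal(size=(2 * C, C)), "b_fuse": np.zeros(C),
}
fused, gates = ssma_like_fusion(rng.normal(size=(H, W, C)), rng.normal(size=(H, W, C)), params)
print(fused.shape, gates.shape)  # (4, 5, 8) (4, 5, 16)
```

Because the gates are computed from the activations of both streams at every spatial position, the weighting can differ per pixel and per channel, which is what allows the fusion to depend on object class and location rather than on a single global rule.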

Our proposed architecture for multimodal segmentation consists of individual modality-specific encoder streams which are fused both at mid-level stages and at the end of the encoder streams using our SSMA blocks. The fused representations are input to the decoder at different stages for upsampling and refining the predictions. Note that only the multimodal SSMA fusion mechanism is self-supervised; the semantic segmentation is trained in a supervised manner. We employ a combination of mid-level and late fusion, as several experiments have demonstrated that fusing semantically meaningful representations yields better performance in comparison to early fusion (Eitel et al. 2015; Valada et al. 2016b; Hazirbas et al. 2016; Xiang and Fox 2017; Radwan et al. 2018a). Moreover, studies of the neural dynamics of the human brain have also shown evidence of late fusion of modalities for recognition tasks (Cichy et al. 2016). However, intermediate network representations are not aligned across modality-specific streams. Hence, integrating fused multimodal mid-level features into high-level features requires explicit prior alignment. Therefore, we propose an attention mechanism that weighs the fused multimodal mid-level skip features with spatially aggregated statistics of the high-level decoder features for better correlation, followed by channel-wise concatenation.
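A minimal sketch of such skip-feature attention, assuming the decoder features have already been upsampled to the skip resolution and that the spatially aggregated statistic is a global average followed by a 1×1 convolution and a sigmoid. The function name and the parameters `w` and `b` are hypothetical.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def attend_and_concat(skip, decoder, w, b):
    """Hypothetical attention: weigh skip channels by global decoder statistics.

    skip:    (H, W, C_skip) fused multimodal mid-level features
    decoder: (H, W, C_dec) high-level decoder features at the same resolution
    w, b:    assumed 1x1-conv parameters mapping C_dec -> C_skip
    """
    stats = decoder.mean(axis=(0, 1))           # spatial average pooling -> (C_dec,)
    weights = sigmoid(stats @ w + b)            # one weight per skip channel
    weighted_skip = skip * weights              # broadcast over H and W
    return np.concatenate([weighted_skip, decoder], axis=-1)  # channel-wise concatenation

rng = np.random.default_rng(0)
skip, dec = rng.normal(size=(6, 6, 4)), rng.normal(size=(6, 6, 8))
out = attend_and_concat(skip, dec, w=rng.normal(size=(8, 4)), b=np.zeros(4))
print(out.shape)  # (6, 6, 12)
```

Deriving the weights from the decoder rather than from the skip features themselves lets high-level semantics decide which mid-level channels are passed on before the two are concatenated.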

As our fusion framework necessitates individual modality-specific encoders, the architecture that we employ for the encoder and decoder should be efficient in terms of the number of parameters and computational operations, as well as be able to learn highly discriminative deep features. State-of-the-art semantic segmentation architectures such as DeepLab v3 (Chen et al. 2017) and PSPNet (Zhao et al. 2017) employ the ResNet-101 (He et al. 2015a) architecture, which consumes 42.39M parameters and 113.96B FLOPs, as the encoder backbone. Training such architectures requires a large amount of memory and synchronized training across multiple GPUs. Moreover, their slow run-times render them impractical for resource-constrained applications such as robotics and augmented reality. More importantly, it is infeasible to employ them in multimodal frameworks that require multiple modality-specific streams, as we do in this work.

With the goal of achieving the right trade-off between performance and computational complexity, we propose the AdapNet++ architecture for unimodal segmentation. We build the encoder of our model based on the full pre-activation ResNet-50 (He et al. 2016) architecture and incorporate our previously proposed multiscale residual units (Valada et al. 2017) to aggregate multiscale features throughout the network without increasing the number of parameters. The proposed units are more effective in learning multiscale features than the commonly employed multigrid approach introduced in DeepLab v3 (Chen et al. 2017). In addition, we propose an efficient variant of the Atrous Spatial Pyramid Pooling (ASPP) (Chen et al. 2017) called eASPP that employs cascaded and parallel atrous convolutions to capture long range context with a larger effective receptive field, while simultaneously reducing the number of parameters by 87% in comparison to the originally proposed ASPP. We also propose a new decoder that integrates mid-level features from the encoder using multiple skip refinement stages for high resolution segmentation along the object boundaries. In order to aid the optimization and to accelerate training, we propose a multiresolution supervision strategy that introduces weighted auxiliary losses after each upsampling stage in the decoder. This enables faster convergence, in addition to improving the performance of the model along the object boundaries. Our proposed architecture is compact and trainable with a large mini-batch size on a single consumer grade GPU.
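The parameter savings of a bottlenecked ASPP can be estimated with simple arithmetic. The sketch below assumes a 2048-channel encoder output, 256 filters per branch, an image-pooling branch and a final 1×1 projection for both modules, and, for the efficient variant, atrous branches that first reduce to 64 channels, apply two cascaded 3×3 atrous convolutions and expand back to 256 channels. These widths are illustrative assumptions, and biases and batch-normalization parameters are ignored.

```python
def conv_params(k, c_in, c_out):
    """Weights of a k x k convolution (biases and batch norm ignored)."""
    return k * k * c_in * c_out

def aspp_params(c_in=2048, c=256, n_atrous=3):
    # 1x1 branch + n_atrous 3x3 atrous branches + image-pooling 1x1 branch
    branches = conv_params(1, c_in, c) + n_atrous * conv_params(3, c_in, c) + conv_params(1, c_in, c)
    # 1x1 projection of the concatenated branch outputs
    return branches + conv_params(1, (n_atrous + 2) * c, c)

def easpp_params(c_in=2048, c=256, c_mid=64, n_atrous=3):
    # Assumed atrous branch: 1x1 reduce -> two cascaded 3x3 atrous -> 1x1 expand
    branch = conv_params(1, c_in, c_mid) + 2 * conv_params(3, c_mid, c_mid) + conv_params(1, c_mid, c)
    branches = conv_params(1, c_in, c) + n_atrous * branch + conv_params(1, c_in, c)
    return branches + conv_params(1, (n_atrous + 2) * c, c)

a, e = aspp_params(), easpp_params()
print(a, e, f"{1 - e / a:.1%}")  # 15532032 2039808 86.9%
```

Under these assumptions the reduction is roughly 87%, and nearly all of it comes from replacing the 3×3 atrous convolutions that read all 2048 input channels, which dominate the cost of the original ASPP.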

Motivated by the recent success of compressing DCNNs by pruning unimportant neurons (Molchanov et al. 2017; Liu et al. 2017; Anwar et al. 2017), we explore pruning entire convolutional feature maps of our model to further reduce the number of parameters. Network pruning approaches utilize a cost function to first rank the importance of neurons, followed by removing the least important neurons and fine-tuning the network to recover any loss in accuracy. Thus far, these approaches have only been employed for pruning convolutional layers that do not have an identity or a projection shortcut connection. Pruning residual feature maps (the third convolutional layer of a residual unit) also necessitates pruning the projected feature maps in the same configuration in order to maintain the shortcut connection. This leads to a significant drop in accuracy; therefore, current approaches omit pruning convolutional filters with shortcut connections. As a solution to this problem, we propose a network-wide holistic pruning approach that employs a simple yet effective strategy for pruning convolutional filters invariant to the presence of shortcut connections. This enables our network to further reduce the number of parameters and computing operations, making our model efficiently deployable even in resource-constrained applications.
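The constraint that makes shortcut connections awkward to prune is that the residual branch and the shortcut are summed element-wise, so both must keep exactly the same output channels. The sketch below illustrates this for a bottleneck unit with a projection shortcut by scoring each output channel jointly over both paths and removing the same indices from each. The joint L1-norm criterion and the function name are assumptions made for illustration; they are not the ranking criterion used in this work.

```python
import numpy as np

def prune_residual_unit(w_res, w_proj, n_prune):
    """Hypothetical joint pruning of a residual branch and its projection shortcut.

    w_res:  (1, 1, C_mid, C_out) third (1x1) conv of the bottleneck unit
    w_proj: (1, 1, C_in, C_out) projection shortcut
    Both tensors lose the same output channels so that their sum stays valid.
    """
    # Assumed criterion: L1 norm per output channel, summed over both paths.
    scores = np.abs(w_res).sum(axis=(0, 1, 2)) + np.abs(w_proj).sum(axis=(0, 1, 2))
    keep = np.sort(np.argsort(scores)[n_prune:])  # drop the n_prune lowest-scoring channels
    return w_res[..., keep], w_proj[..., keep], keep

rng = np.random.default_rng(1)
w_res, w_proj = rng.normal(size=(1, 1, 16, 64)), rng.normal(size=(1, 1, 32, 64))
w_res_p, w_proj_p, keep = prune_residual_unit(w_res, w_proj, n_prune=16)

x_mid, x_in = rng.normal(size=(8, 8, 16)), rng.normal(size=(8, 8, 32))
out = x_mid @ w_res_p[0, 0] + x_in @ w_proj_p[0, 0]  # element-wise sum still has matching shapes
print(out.shape)  # (8, 8, 48)
```

With an identity shortcut, the kept indices would instead have to be propagated to the unit's input, that is, to the output channels of the preceding unit.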

Finally, we present extensive experimental evaluations of our proposed unimodal and multimodal architectures on benchmark scene understanding datasets including Cityscapes (Cordts et al. 2016), Synthia (Ros et al. 2016), SUN RGB-D (Song et al. 2015), ScanNet (Dai et al. 2017) and Freiburg Forest (Valada et al. 2016b). The results demonstrate that our model sets a new state of the art on all these benchmarks, considering the computational efficiency and the fast inference time of 72 ms on a consumer-grade GPU. More importantly, our dynamically adapting multimodal architecture demonstrates exceptional robustness in adverse perceptual conditions such as fog, snow, rain and night-time, thus enabling it to be employed in critical resource-constrained applications such as robotics, where not only accuracy but also robustness, computational efficiency and run-time are equally important. To the best of our knowledge, this is the first multimodal segmentation work to benchmark on such a wide range of datasets containing several modalities and diverse environments ranging from urban city driving scenes to indoor environments and unstructured forested scenes.

In summary, the following are the main contributions of this work:

1. A multimodal fusion framework incorporating our proposed SSMA fusion blocks that adapts the fusion of modality-specific features dynamically according to the object category, its spatial location as well as the scene context and learns in a self-supervised manner.

2. The novel AdapNet++ semantic segmentation architecture that incorporates our multiscale residual units, a new efficient ASPP, a new decoder with skip refinement stages and a multiresolution supervision strategy.

3. The eASPP for efficiently aggregating multiscale features and capturing long range context, while having a larger effective receptive field and over \(10\,\times \) reduction in parameters compared to the standard ASPP.

4. An attention mechanism for effectively correlating fused multimodal mid-level and high-level features for better object boundary refinement.

5. A holistic network-wide pruning approach that enables pruning of convolutional filters invariant to the presence of identity or projection shortcuts.

6. Extensive benchmarking of existing approaches with the same input image size and evaluation setting along with quantitative and qualitative evaluations of our unimodal and multimodal architectures on five different benchmark datasets consisting of multiple modalities.

7. Implementations of our proposed architectures are made publicly available at https://github.com/DeepSceneSeg and a live demo on all the five datasets can be viewed at http://deepscene.cs.uni-freiburg.de.

2 Related Works

In the last decade, there has been a sharp transition in semantic segmentation approaches from employing hand engineered features with flat classifiers such as Support Vector Machines (Fulkerson et al. 2009), Boosting (Sturgess et al. 2009) or Random Forests (Shotton et al. 2008; Brostow et al. 2008), to end-to-end DCNN-based approaches (Long et al. 2015; Badrinarayanan et al. 2015). We first briefly review some of the classical methods before delving into the state-of-the-art techniques.

Semantic Segmentation. Semantic segmentation is one of the fundamental problems in computer vision. Some of the earlier approaches for semantic segmentation use small patches to classify the center pixel using flat classifiers (Shotton et al. 2008; Sturgess et al. 2009), followed by smoothing the predictions using Conditional Random Fields (CRFs) (Sturgess et al. 2009). Rather than only relying on appearance-based features, structure-from-motion features have also been used with randomized decision forests (Brostow et al. 2008; Sturgess et al. 2009). View-independent 3D features from dense depth maps have been shown to outperform appearance-based features, and they also enable the classification of all the pixels in an image, as opposed to only the center pixel of a patch (Zhang et al. 2010). Plath et al. (2009) propose an approach that combines local and global features using a CRF and an image classification method. However, the performance of these approaches is largely bounded by the expressiveness of handcrafted features, which are highly scenario-specific.

The remarkable performance achieved by CNNs in classification tasks led to their application to dense prediction problems such as semantic segmentation, depth estimation and optical flow prediction. Initial approaches that employed neural networks for semantic segmentation still relied on patch-wise training (Grangier et al. 2009; Farabet et al. 2012; Pinheiro and Collobert 2014). Pinheiro and Collobert (2014) use a recurrent CNN to aggregate several low-resolution predictions for scene labeling. Farabet et al. (2012) transform the input image through a Laplacian pyramid and feed each scale to a CNN for hierarchical feature extraction and classification. Although these approaches demonstrated improved performance over handcrafted features, they often yield a grid-like output that does not capture the true object boundaries. One of the first end-to-end approaches that learns to directly map the low-resolution representations from a classification network to a dense prediction output was the Fully Convolutional Network (FCN) model (Long et al. 2015). FCN proposed an encoder-decoder architecture in which the encoder is built upon the VGG-16 (Simonyan and Zisserman 2014) architecture with the inner-product layers replaced by convolutional layers. The decoder consists of successive deconvolution and convolution layers that upsample and refine the low-resolution feature maps by combining them with the encoder feature maps. The last decoder layer then yields a segmented output with the same resolution as the input image.
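The step of replacing inner-product layers with convolutions can be checked numerically: a fully connected layer applied to a flattened K×K×C feature map computes exactly the same scores as a K×K convolution with the same weights, while the convolutional form also accepts larger inputs and then produces a spatial map of scores. The toy sizes and random weights below are arbitrary.

```python
import numpy as np

rng = np.random.default_rng(0)
C, K, n_out = 4, 3, 5
feat = rng.normal(size=(K, K, C))            # final K x K x C feature map
w_fc = rng.normal(size=(K * K * C, n_out))   # fully connected (inner-product) weights
fc_out = feat.reshape(-1) @ w_fc             # inner-product layer on the flattened map

w_conv = w_fc.reshape(K, K, C, n_out)        # same weights viewed as n_out K x K filters

def valid_conv(x, w):
    """Naive 'valid' convolution (cross-correlation) of (H, W, C) with (kh, kw, C, O)."""
    kh, kw = w.shape[:2]
    H, W = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.empty((H, W, w.shape[-1]))
    for i in range(H):
        for j in range(W):
            out[i, j] = np.einsum("hwc,hwco->o", x[i:i + kh, j:j + kw], w)
    return out

conv_out = valid_conv(feat, w_conv)          # (1, 1, n_out)
print(np.allclose(conv_out[0, 0], fc_out))   # True

big = rng.normal(size=(K + 4, K + 2, C))     # a larger input no longer fits the FC layer
print(valid_conv(big, w_conv).shape)         # (5, 3, 5): a coarse spatial map of scores
```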

DeconvNet (Noh et al. 2015) proposes an improved architecture containing stacked deconvolution and unpooling layers that perform non-linear upsampling; it outperforms FCNs but at the cost of a more complex training procedure. The SegNet (Badrinarayanan et al. 2015) architecture eliminates the need for learning to upsample by reusing pooling indices from the encoder layers to perform upsampling. Oliveira et al. (2016) propose an architecture that builds upon FCNs, introduces more refinement stages and incorporates spatial dropout to prevent overfitting. The ParseNet (Liu et al. 2015) architecture models global context directly instead of only relying on the largest receptive field of the network. Recently, there has been more focus on learning multiscale features, which was initially achieved by providing the network with multiple rescaled versions of the image (Farabet et al. 2012) or by fusing features from multiple parallel branches that take different image resolutions (Long et al. 2015). However, these networks still use pooling layers to increase the receptive field, thereby decreasing the spatial resolution, which is not ideal for a segmentation network.

In order to alleviate this problem, Yu and Koltun (2016) propose dilated convolutions, which allow for an exponential increase in the receptive field without a decrease in resolution or an increase in parameters. DeepLab (Chen et al. 2016) and PSPNet (Zhao et al. 2017) build upon dilated backbones and propose pyramid modules that aggregate multiscale global context, using dilated convolutions of different rates in DeepLab and pooling at several grid scales in PSPNet. DeepLab additionally uses fully connected CRFs in a post-processing step for structured prediction. However, a drawback of these approaches is their computational complexity and substantially long inference time, even on modern GPUs, which hinders their deployment on robots that often have limited resources. In our previous work (Valada et al. 2017), we proposed an architecture that introduces dilated convolutions parallel to the conventional convolution layers, along with multiscale residual blocks that incorporate them, which enables the model to achieve competitive performance at interactive frame rates. Our proposed multiscale residual blocks are more effective at learning multiscale features compared to the widely employed multigrid approach from DeepLab v3 (Chen et al. 2017). In this work, we propose several new improvements for learning multiscale features, capturing long-range context and improving the upsampling in the decoder, while simultaneously reducing the number of parameters and maintaining a fast inference time.
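The receptive-field argument can be made concrete with a short calculation, assuming a stack of stride-1 3×3 convolutions: each layer with dilation rate r adds (k − 1)·r = 2r to the receptive field, so doubling the rate at every layer grows it exponentially, whereas the number of weights per layer is unchanged because dilation only spaces out the existing taps.

```python
def receptive_field(dilations, k=3):
    """Receptive field of a stack of stride-1 k x k convolutions with the given dilation rates."""
    rf = 1
    for r in dilations:
        rf += (k - 1) * r  # each layer widens the field by (k - 1) * r; weights stay k * k
    return rf

# Rates 1, 2, 4, 8 (doubling per layer) versus plain convolutions (rate 1 everywhere)
print([receptive_field([2 ** i for i in range(n)]) for n in range(1, 5)])  # [3, 7, 15, 31]
print([receptive_field([1] * n) for n in range(1, 5)])                     # [3, 5, 7, 9]
```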

Overview of our proposed AdapNet++ architecture. Given an input image, we use the full pre-activation ResNet-50 architecture augmented with our proposed multiscale residual blocks to yield a feature map that is 16-times downsampled with respect to the input image resolution; our proposed efficient atrous spatial pyramid pooling (eASPP) module is then employed to further learn multiscale features and to capture long-range context. Finally, the output of the eASPP is fed into our proposed deep decoder with skip connections for upsampling and refining the semantic pixel-level prediction (Color figure online)

Multimodal Fusion. The availability of low-cost sensors has encouraged novel approaches to exploit features from alternate modalities in an effort to improve robustness as well as the granularity of segmentation. Silberman et al. (2012) propose an approach based on SIFT features and MRFs for indoor scene segmentation using RGB-D images. Subsequently, Ren et al. (2012) propose improvements to the feature set by using kernel descriptors and by combining MRF with segmentation trees. Munoz et al. (2012) employ modality-specific classifier cascades that hierarchically propagate information and do not require one-to-one correspondence between data across modalities. In addition to incorporating features based on depth images, Hermans et al. (2014) propose an approach that performs joint 3D mapping and semantic segmentation using Randomized Decision Forests. There has also been work on extracting combined RGB and depth features using CNNs (Couprie et al. 2013; Gupta et al. 2014) for object detection and semantic segmentation. In most of these approaches, hand engineered or learned features are extracted from individual modalities and combined together in a joint feature set which is then used for classification.

More recently, there has been a series of DCNN-based fusion techniques (Eitel et al. 2015; Kim et al. 2017; Li et al. 2016) proposed for end-to-end learning of fused representations from multiple modalities. These fusion approaches can be categorized into early, hierarchical and late fusion methods. An intuitive early fusion technique is to stack data from multiple modalities channel-wise and feed it to the network as a four- or six-channel input. However, experiments have shown that this often does not enable the network to learn complementary features and cross-modal interdependencies (Valada et al. 2016b; Hazirbas et al. 2016). Hierarchical fusion approaches combine feature maps from multiple modality-specific encoders at various levels (often at each downsampling stage) and upsample the fused features using a single decoder (Hazirbas et al. 2016; Kim et al. 2017). Alternatively, Schneider et al. (2017) propose a mid-level fusion approach in which NiN layers (Lin et al. 2013) with depth as input are used to fuse feature maps into the RGB encoder in the middle of the network. Li et al. (2016) propose a Long Short-Term Memory (LSTM) context fusion model that captures and fuses contextual information from multiple modalities, accounting for the complex interdependencies between them. Qi et al. (2017) propose an interesting approach that employs 3D graph neural networks for RGB-D semantic segmentation, which accounts for both 2D appearance and 3D geometric relations while capturing long-range dependencies within images.

In the late fusion approach, identical network streams are first trained individually on a specific modality, and the feature maps are fused towards the end of the network using concatenation (Eitel et al. 2015) or element-wise summation (Valada et al. 2016b), followed by learning deeper fused representations. However, this does not enable the network to adapt the fusion to changing scene context. In our previous work (Valada et al. 2016a), we proposed a mixture-of-experts CMoDE fusion scheme for combining feature maps from late fusion based architectures. Subsequently, in (Valada et al. 2017), we extended the CMoDE framework for probabilistic fusion accounting for the types of object categories in the dataset, which enables more flexibility in learning the optimal combination. Nevertheless, there are several real-world scenarios in which class-wise fusion is not sufficient, especially in outdoor scenes where different modalities perform well in different conditions. Moreover, the CMoDE module employs multiple softmax loss layers for each class to compute the probabilities for fusion, which does not scale to datasets such as SUN RGB-D, which has 37 object categories. Motivated by this observation, in this work, we propose a multimodal semantic segmentation architecture incorporating our SSMA fusion module that dynamically adapts the fusion of intermediate network representations from multiple modality-specific streams according to the object class, its spatial location and the scene context, while learning the fusion in a self-supervised fashion.
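The fixed fusion schemes discussed above can be contrasted in terms of tensor shapes. In the sketch below, early fusion stacks raw RGB and depth into a four-channel input, while late fusion combines modality-specific feature maps by concatenation or element-wise summation; random arrays stand in for encoder outputs and all sizes are arbitrary. In both late variants every pixel is combined by the same fixed rule regardless of scene content, which is the limitation that motivates adaptive fusion.

```python
import numpy as np

rng = np.random.default_rng(0)
rgb, depth = rng.normal(size=(4, 4, 3)), rng.normal(size=(4, 4, 1))

# Early fusion: stack the raw modalities into a single 4-channel network input
early = np.concatenate([rgb, depth], axis=-1)

# Late fusion: random stand-ins for features from two modality-specific encoders
f_rgb, f_depth = rng.normal(size=(4, 4, 8)), rng.normal(size=(4, 4, 8))
late_concat = np.concatenate([f_rgb, f_depth], axis=-1)  # channels double
late_sum = f_rgb + f_depth                               # channels unchanged, equal weighting

print(early.shape, late_concat.shape, late_sum.shape)  # (4, 4, 4) (4, 4, 16) (4, 4, 8)
```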

3 AdapNet++ Architecture

In this section, we first briefly describe the overall topology of the proposed AdapNet++ architecture and our main contributions motivated by our design criteria. We then detail each of the constituting architectural components and our model compression technique.

Our network follows the general fully convolutional encoder-decoder design principle as shown in Fig. 2. The encoder (depicted in blue) is based on the full pre-activation ResNet-50 (He et al. 2016) model as it offers a good trade-off between learning highly discriminative deep features and the computational complexity required. In order to effectively compute high resolution feature responses at different spatial densities, we incorporate our recently proposed multiscale residual units (Valada et al. 2017) at varying dilation rates in the last two blocks of the encoder. In addition, to enable our model to capture long-range context and to further learn multiscale representations, we propose an efficient variant of the atrous spatial pyramid pooling module known as eASPP which has a larger effective receptive field and reduces the number of parameters required by over \(87\%\) compared to the originally proposed ASPP in DeepLab v3 (Chen et al. 2017). We append the proposed eASPP after the last residual block of the encoder, shown as green blocks in Fig. 2. In order to recover the segmentation details from the low spatial resolution output of the encoder section, we propose a new deep decoder consisting of multiple deconvolution and convolution layers. Additionally, we employ skip refinement stages that fuse mid-level features from the encoder with the upsampled decoder feature maps for object boundary refinement. Furthermore, we add two auxiliary supervision branches after each upsampling stage to accelerate training and improve the gradient propagation in the network. We depict the decoder as orange blocks and the skip refinement stages as gray blocks in the network architecture shown in Fig. 2. In the following sections, we discuss each of the aforementioned network components in detail and elaborate on the design choices.
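A weighted multiresolution loss of this kind can be sketched in plain Python. The sketch assumes per-pixel cross-entropy at every output, nearest-neighbour subsampling of the ground truth to each auxiliary resolution, and auxiliary weights of 0.6 and 0.5; these weights and all function names are hypothetical placeholders rather than values taken from this section.

```python
import math

def pixel_ce(probs, labels):
    """Mean cross-entropy; probs[i][j] is a list of class probabilities for pixel (i, j)."""
    total, n = 0.0, 0
    for prow, lrow in zip(probs, labels):
        for p, y in zip(prow, lrow):
            total -= math.log(max(p[y], 1e-12))  # clamp to avoid log(0)
            n += 1
    return total / n

def downsample_labels(labels, factor):
    """Nearest-neighbour subsampling of a label map to an auxiliary output's resolution."""
    return [row[::factor] for row in labels[::factor]]

def multires_loss(main_probs, aux_outputs, labels, aux_weights):
    """Hypothetical main loss plus weighted auxiliary losses.

    aux_outputs: list of (probs, factor) pairs predicted at coarser resolutions.
    """
    loss = pixel_ce(main_probs, labels)
    for (probs, factor), lam in zip(aux_outputs, aux_weights):
        loss += lam * pixel_ce(probs, downsample_labels(labels, factor))
    return loss

def uniform(h, w, n_classes=2):
    """Toy prediction that assigns equal probability to every class."""
    return [[[1.0 / n_classes] * n_classes for _ in range(w)] for _ in range(h)]

labels = [[0, 1, 1, 0], [1, 0, 0, 1], [0, 0, 1, 1], [1, 1, 0, 0]]
loss = multires_loss(uniform(4, 4), [(uniform(2, 2), 2), (uniform(1, 1), 4)], labels,
                     aux_weights=[0.6, 0.5])  # hypothetical weights
print(round(loss, 4))  # 1.4556, i.e. (1 + 0.6 + 0.5) * ln 2
```

Supervising the coarser outputs directly gives the early decoder stages their own gradient signal, which is the mechanism behind the faster convergence described above.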

The proposed encoder is built upon the full pre-activation ResNet-50 architecture. Specifically, we remove the last downsampling stage in ResNet-50 by setting the stride from two to one; therefore, the final output of the encoder is 16-times downsampled with respect to the input. We then replace the residual units that follow the last downsampling stage with our proposed multiscale residual units. The legend enclosed in red lines shows the original pre-activation residual units in the bottom left (yellow, light green and dark green), while our proposed multiscale residual units are shown in the bottom right (cyan and purple) (Color figure online)

3.1 Encoder

Encoders are the foundation of fully convolutional neural network architectures. Therefore, it is essential to build upon a good baseline that has a high representational ability within the computational budget. Our critical requirement is to achieve the right trade-off between the accuracy of segmentation and inference time on a consumer-grade GPU, while keeping the number of parameters low. As we also employ the proposed architecture for multimodal fusion, our objective is to design a topology with a reasonable model size, so that two individual modality-specific networks can be trained in a fusion framework and deployed on a single GPU. Therefore, we build upon the ResNet-50 architecture with the full pre-activation residual units (He et al. 2016) instead of the originally proposed residual units (He et al. 2015a), as they have been shown to reduce overfitting, improve convergence and yield better performance. The ResNet-50 architecture has four computational blocks with varying numbers of residual units. We use bottleneck residual units in our encoder, as they are computationally more efficient than the basic residual units and enable us to build more complex models that remain easily trainable. The output of the last block of the ResNet-50 architecture is 32-times downsampled with respect to the input image resolution. In order to increase the spatial density of the feature responses and to prevent signal decimation, we set the stride of the convolution layer in the last block (res4a) from two to one, which makes the resolution of the output feature maps 1/16 of the input image resolution. We then replace the residual blocks that follow this last downsampling stage with our proposed multiscale residual units that incorporate parallel atrous convolutions (Yu and Koltun 2016) at varying dilation rates.
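The effect of setting the last downsampling stride from two to one can be traced with a little arithmetic. The stride list below (stem convolution, max pooling, then the four residual blocks, the first of which does not downsample) follows the standard ResNet-50 layout; the 1024×2048 input size and the 'same'-style rounding are illustrative assumptions.

```python
import math

# Downsampling factors: stem conv, max pool, then the four residual blocks
RESNET50_STRIDES = [2, 2, 1, 2, 2, 2]
modified = RESNET50_STRIDES[:-1] + [1]  # last downsampling stage: stride 2 -> 1

def output_stride(strides):
    return math.prod(strides)

def feature_size(h, w, strides):
    """Spatial size after each strided stage, assuming 'same'-style (ceil) rounding."""
    for s in strides:
        h, w = math.ceil(h / s), math.ceil(w / s)
    return h, w

print(output_stride(RESNET50_STRIDES), output_stride(modified))  # 32 16
print(feature_size(1024, 2048, RESNET50_STRIDES), feature_size(1024, 2048, modified))  # (32, 64) (64, 128)
```

Halving the output stride quadruples the number of spatial positions in the final feature map, which is why the subsequent units rely on dilation rather than further downsampling to enlarge the receptive field.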

A naive approach to compute the feature responses at the full image resolution would be to remove the downsampling and replace all the convolutions with atrous convolutions having a dilation rate \(r\ge 2\), but this would be both computationally and memory intensive. Therefore, we propose a novel multiscale residual unit (Valada et al. 2017) to efficiently enlarge the receptive field and aggregate multiscale features without increasing the number of parameters or the computational burden. Specifically, we replace the \(3\times 3\) convolution in the full pre-activation residual unit with two parallel \(3\times 3\) atrous convolutions with different dilation rates and half the number of feature maps each. We then concatenate their outputs before the following \(1\times 1\) convolution.

By concatenating their outputs, the network additionally learns to combine feature maps of different scales. By setting the dilation rate of one of the \(3\times 3\) convolutional layers to one and that of the other to a rate \(r\ge 2\), we can preserve the original scale of the features within the block while simultaneously adding a larger context. Moreover, by varying the dilation rates of the parallel \(3\times 3\) convolutions across units, we enable the network to effectively learn multiscale representations at different stages of the network. The topology of the proposed multiscale residual units and the corresponding original residual units is shown in the legend of Fig. 3. The lower left two units show the original configuration, while the lower right two units show the proposed configuration. Figure 3 shows our entire encoder structure with the full pre-activation residual units and the multiscale residual units.

We incorporate the first multiscale residual unit, with \(r_1=1, r_2=2\), before the third block at res3d (the unit preceding the block in which we remove the downsampling, as mentioned earlier). Subsequently, we replace the units res4c, res4d, res4e and res4f with our proposed multiscale units with \(r_1=1\) in all the units and \(r_2=2, 4, 8, 16\) respectively. In addition, we replace the last three units of block four, res5a, res5b and res5c, with multiscale units with increasing rates in both \(3\times 3\) convolutions: \((r_1=2, r_2=4)\), \((r_1=2, r_2=8)\) and \((r_1=2, r_2=16)\) respectively. We evaluate our proposed configuration in comparison to the multigrid method of DeepLab v3 (Chen et al. 2017) in Sect. 5.5.
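The dilation configuration above can be written down as a small lookup table. The dictionary below is a hypothetical encoding (its name and layout are ours, not the released implementation), and it also reports the receptive field of each parallel branch, which for a \(3\times 3\) kernel with dilation rate r spans \(2r+1\) pixels per axis.

```python
# (r1, r2): dilation rates of the two parallel 3x3 atrous convolutions per unit
MULTISCALE_UNITS = {
    "res3d": (1, 2),
    "res4c": (1, 2),
    "res4d": (1, 4),
    "res4e": (1, 8),
    "res4f": (1, 16),
    "res5a": (2, 4),
    "res5b": (2, 8),
    "res5c": (2, 16),
}


def rf_3x3(rate):
    """Receptive field (pixels per axis) of one 3x3 atrous convolution."""
    return 2 * rate + 1


for unit, (r1, r2) in MULTISCALE_UNITS.items():
    print(f"{unit}: branch receptive fields {rf_3x3(r1)} and {rf_3x3(r2)}")
```

Keeping \(r_1=1\) in the res3d and res4 units preserves a branch at the original scale, while the second branch grows from 5 to 33 pixels.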

Fig. 4 Depiction of the ASPP module from DeepLab v3 and our proposed efficient eASPP module. eASPP reduces the number of parameters by \(86.87\%\) and the number of FLOPS by \(89.53\%\), while simultaneously achieving improved performance. Note that all the convolution layers have batch normalization and we change the corresponding dilation rates in the \(3\,\times \,3\) convolutions in ASPP to 3, 6, 12 as the input feature map to the ASPP is of dimensions \(24\,\times \,48\) in our network architecture

3.2 Efficient Atrous Spatial Pyramid Pooling

In this section, we first describe the topology of the Atrous Spatial Pyramid Pooling (ASPP) module, followed by the structure of our proposed efficient Atrous Spatial Pyramid Pooling (eASPP). ASPP has become prevalent in most state-of-the-art architectures due to its ability to capture long-range context and multiscale information. Inspired by spatial pyramid pooling (He et al. 2015c), the initially proposed ASPP in DeepLab v2 (Liang-Chieh et al. 2015) employs four parallel atrous convolutions with different dilation rates. Concatenating the outputs of multiple parallel atrous convolutions aggregates multiscale context with different receptive field resolutions. However, as shown in the subsequent DeepLab v3 (Chen et al. 2017), applying extremely large dilation rates inhibits capturing long-range context due to image boundary effects. Therefore, an improved version of ASPP was proposed (Chen et al. 2017) that adds global context information by incorporating image-level features.

The resulting ASPP shown in Fig. 4a consists of five parallel branches: one \(1\times 1\) convolution, three \(3\times 3\) atrous convolutions with different dilation rates, and an image-level branch that applies global average pooling on the input feature map, followed by a \(1\times 1\) convolution and bilinear upsampling to yield an output with the same spatial dimensions as the input feature map. All the convolutions have 256 filters and are followed by batch normalization layers to improve training. Finally, the resulting feature maps from each of the parallel branches are concatenated and passed through another \(1\times 1\) convolution with batch normalization to yield 256 output filters. The ASPP module is appended after the last residual block of the encoder, where the feature maps are of dimensions \(65\times 65\) in the DeepLab v3 architecture (Chen et al. 2017); therefore, dilation rates of 6, 12 and 18 were used in the parallel \(3\times 3\) atrous convolution layers. However, as we use a smaller input image, the input feature map to the ASPP has dimensions of \(24\times 48\); therefore, we reduce the dilation rates to 3, 6 and 12 in the \(3\times 3\) atrous convolution layers respectively.

Fig. 5 Our decoder consists of three upsampling stages that recover segmentation details using deconvolution layers and two skip refinement stages that fuse mid-level features from the encoder to improve the segmentation along object boundaries. Each skip refinement stage consists of concatenation of mid-level features with the upsampled decoder feature maps, followed by two \(3\,\times \,3\) convolutions to improve the discriminability of the high-level features and the resolution of the refinement

The biggest caveat of employing the ASPP is the extremely large number of parameters and floating point operations (FLOPS) that it consumes. Each of the \(3\times 3\) convolutions has 256 filters, which for the entire ASPP amounts to 15.53 M parameters and 34.58 B FLOPS, a prohibitive cost. To address this problem, we propose an equivalent structure called eASPP that substantially reduces the computational complexity. Our proposed topology is based on two principles: cascading atrous convolutions and the bottleneck structure. Cascading atrous convolutions effectively enlarges the receptive field, as the latter atrous convolution takes the output of the former atrous convolution as its input. The receptive field size F of an atrous convolution can be computed as

\[F = (r - 1)\cdot (N - 1) + N\]

where r is the dilation rate of the atrous convolution and N is the filter size. When two atrous convolutions with receptive field sizes \(F_1\) and \(F_2\) are cascaded, the effective receptive field size is computed as

\[F_{\text {eff}} = F_1 + F_2 - 1\]

For example, if two atrous convolutions with filter size \(N=3\) and dilation rate \(r=3\) are cascaded, each convolution individually has a receptive field size of 7, while the effective receptive field size of the cascade is 13. Moreover, cascading atrous convolutions samples the pixels within this receptive field more densely than a single atrous convolution in a parallel branch with an equally large receptive field. Therefore, by using both parallel and cascaded atrous convolutions in the ASPP, we can efficiently aggregate dense multiscale features with very large receptive fields.
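The two receptive field formulas and the denser-sampling argument can be checked numerically. The sketch below works along a single axis; `sampled_offsets` and `cascaded_offsets` are illustrative helpers that enumerate which input positions (relative to the leftmost tap) can influence one output pixel.

```python
def receptive_field(rate, size):
    """F = (r - 1)(N - 1) + N for one atrous convolution."""
    return (rate - 1) * (size - 1) + size


def cascaded_receptive_field(*fields):
    """Effective receptive field of cascaded convolutions: F1 + F2 - 1 for two."""
    return 1 + sum(f - 1 for f in fields)


def sampled_offsets(rate, size):
    """Input offsets touched by one atrous convolution along one axis."""
    return {k * rate for k in range(size)}


def cascaded_offsets(*layers):
    """Input offsets reachable through a cascade of (rate, size) convolutions."""
    offsets = {0}
    for rate, size in layers:
        offsets = {o + s for o in offsets for s in sampled_offsets(rate, size)}
    return offsets


f = receptive_field(rate=3, size=3)
print(f, cascaded_receptive_field(f, f))
print(sorted(cascaded_offsets((3, 3), (3, 3))), sorted(sampled_offsets(6, 3)))
```

Both the cascade of two rate-3 convolutions and a single rate-6 convolution span 13 pixels, but the cascade reaches 5 distinct offsets per axis instead of 3, which is the denser sampling referred to above.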

In order to reduce the number of parameters in the ASPP topology, we employ a bottleneck structure in the cascaded atrous convolution branches. The topology of our proposed eASPP shown in Fig. 4b consists of five parallel branches similar to ASPP, but the branches with the \(3\times 3\) atrous convolutions are replaced with our cascaded bottleneck branches. If c is the number of channels in the \(3 \times 3\) atrous convolution, we add a \(1\times 1\) convolution with c / 4 filters before the atrous convolution to squeeze only the most relevant information through the bottleneck. We then replace the \(3 \times 3\) atrous convolution with two cascaded \(3 \times 3\) atrous convolutions with c / 4 filters, followed by another \(1\times 1\) convolution to restore the number of filters to c. The proposed eASPP only has 2.04 M parameters and consumes 3.62 B FLOPS, which amounts to a reduction of \(86.87\%\) of parameters and \(89.53\%\) of FLOPS in comparison to the ASPP. We evaluate our proposed eASPP in comparison to ASPP in the ablation study presented in Sect. 5.5.2 and show that it achieves improved performance while requiring roughly 7.6 times fewer parameters.
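The reported parameter counts can be reproduced from the branch descriptions above. The sketch below counts convolution weights only (biases and batch normalization are ignored) and assumes 2048 input channels, i.e. the output width of the last ResNet-50 block, with c = 256.

```python
def conv(kernel, c_in, c_out):
    """Weights of a kernel x kernel convolution; biases and BN ignored."""
    return kernel * kernel * c_in * c_out


def aspp(c_in=2048, c=256):
    # 1x1 branch + three 3x3 atrous branches + image-level pooling branch
    branches = conv(1, c_in, c) + 3 * conv(3, c_in, c) + conv(1, c_in, c)
    return branches + conv(1, 5 * c, c)  # 1x1 fusion after concatenation


def easpp(c_in=2048, c=256):
    b = c // 4
    # 1x1 squeeze -> two cascaded 3x3 atrous convs -> 1x1 expand
    cascaded = conv(1, c_in, b) + 2 * conv(3, b, b) + conv(1, b, c)
    branches = conv(1, c_in, c) + 3 * cascaded + conv(1, c_in, c)
    return branches + conv(1, 5 * c, c)


p_aspp, p_easpp = aspp(), easpp()
print(p_aspp, p_easpp)
print(f"reduction: {100 * (1 - p_easpp / p_aspp):.2f}%")
```

Under these assumptions the counts come out at 15,532,032 and 2,039,808 weights, consistent with the 15.53 M and 2.04 M figures in the text, and correspond to a reduction of about 86.9%. Almost all of the saving comes from the three \(3\times 3\) branches, which no longer operate on the 2048-channel input directly.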

3.3 Decoder

The output of the eASPP in our network is 16-times downsampled with respect to the input image and therefore it has to be upsampled back to the full input resolution. In our previous work (Valada et al. 2017), we employed a simple decoder with two deconvolution layers and one skip refinement connection. Although the decoder was more effective in recovering the segmentation details in comparison to direct bilinear upsampling, it often produced disconnected segments while recovering the structure of thin objects such as poles and fences. In order to overcome this impediment, we propose a more effective decoder in this work.

Fig. 6 Depiction of the two auxiliary softmax losses that we add before each skip refinement stage in the decoder, in addition to the main softmax loss at the end of the decoder. The two auxiliary losses are weighted to balance the gradient flow through all the previous layers. During testing, the auxiliary branches are removed and only the main stream as shown in Fig. 5 is used

Our decoder shown in Fig. 5 consists of three stages. In the first stage, the output of the eASPP is upsampled by a factor of two using a deconvolution layer to obtain a coarse segmentation mask. The upsampled coarse mask is then passed to the second stage, where the feature maps are concatenated with the first skip refinement from Res3d. The skip refinement consists of a \(1\times 1\) convolution layer that reduces the feature depth so as not to outweigh the encoder features. We experiment with a varying number of feature channels in the skip refinement in the ablation study presented in Sect. 5.5.3. The concatenated feature maps are then passed through two \(3\times 3\) convolutions to improve the resolution of the refinement, followed by a deconvolution layer that again upsamples the feature maps by a factor of two. This upsampled output is fed to the last decoder stage, which resembles the previous stage and consists of concatenation with the feature maps from the second skip refinement from Res2c, followed by two \(3\times 3\) convolution layers. All the convolutional and deconvolutional layers up to this stage have 256 feature channels; therefore, the output of the two \(3\times 3\) convolutions in the last stage is fed to a \(1\times 1\) convolution layer that reduces the number of feature channels to the number of object categories C. This output is finally fed to the last deconvolution layer, which upsamples the feature maps by a factor of four to recover the original input resolution.
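The decoder description can be followed as a sequence of tensor shapes. The trace below assumes a \(768\times 384\) input, a hypothetical C = 12 classes, and 24 channels per skip refinement (the value used for the skip refinements of the fusion model in Sect. 4.2; the unimodal choice is the subject of an ablation). It only tracks shapes and does not implement any layer.

```python
def decoder_shapes(height=384, width=768, num_classes=12, skip_channels=24):
    """Trace (channels, height, width) through the three decoder stages."""
    trace = []
    c, h, w = 256, height // 16, width // 16  # eASPP output at 1/16 resolution
    trace.append(("eASPP output", (c, h, w)))
    h, w = 2 * h, 2 * w  # stage 1: deconvolution, x2
    trace.append(("stage 1 deconv", (c, h, w)))
    trace.append(("concat Res3d skip", (c + skip_channels, h, w)))  # 1/8
    h, w = 2 * h, 2 * w  # two 3x3 convs back to 256 channels, deconvolution x2
    trace.append(("stage 2 deconv", (c, h, w)))
    trace.append(("concat Res2c skip", (c + skip_channels, h, w)))  # 1/4
    trace.append(("1x1 conv to classes", (num_classes, h, w)))  # after two 3x3 convs
    h, w = 4 * h, 4 * w  # final deconvolution, x4
    trace.append(("output", (num_classes, h, w)))
    return trace


for name, shape in decoder_shapes():
    print(f"{name:22s} {shape}")
```

The factors 2, 2 and 4 exactly undo the output stride of 16, so the final scores align pixel-for-pixel with the input.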

3.4 Multiresolution Supervision

Deep networks are often difficult to train due to the intrinsic instability associated with learning using gradient descent, which leads to exploding or vanishing gradients. As our encoder is based on the residual learning framework, the shortcut connections in each unit help propagate the gradient more effectively. Another technique that mitigates this problem to a certain extent is initializing the layers with pretrained weights; however, our proposed eASPP and decoder layers still have to be trained from scratch, which could lead to optimization difficulties. Recent deep architectures have proposed employing an auxiliary loss in the middle of the encoder network (Lee et al. 2015; Zhao et al. 2017), in addition to the main loss towards the end of the network. However, as shown in the ablation study presented in Sect. 5.5.1, this does not improve the performance of our network, although it helps the optimization converge faster.

Unlike previous approaches, in this work, we propose a multiresolution supervision strategy to both accelerate the training and improve the resolution of the segmentation. As described in the previous section, our decoder consists of three upsampling stages. We add two auxiliary loss branches at the end of the first and second stages, after the deconvolution layer, in addition to the main \(\mathsf {softmax}\) loss \(\mathcal {L}_{main}\) at the end of the decoder, as shown in Fig. 6. Each auxiliary loss branch decreases the feature channels to the number of category labels C using a \(1\times 1\) convolution with batch normalization and upsamples the feature maps to the input resolution using bilinear upsampling. We use simple bilinear upsampling, which does not contain any weights, instead of a deconvolution layer in the auxiliary loss branches, as our aim is to force the main decoder stream to improve its discriminativeness at each upsampling resolution so that it embeds multiresolution information while learning to upsample. We weigh the two auxiliary losses \(\mathcal {L}_{aux1}\) and \(\mathcal {L}_{aux2}\) to balance the gradient flow through all the previous layers. During testing, the auxiliary loss branches are discarded and only the main decoder stream is used. We experiment with different loss weightings in the ablation study presented in Sect. 5.5.3, and in Sect. 5.5.1 we show that each of the auxiliary loss branches improves the segmentation performance in addition to speeding up the training.
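To make the weight-free upsampling in the auxiliary branches concrete, the sketch below applies bilinear interpolation to a single 2-D score map. It uses a corner-aligned sampling grid, which is an assumption on our part: frameworks differ in how they align input and output pixel centers, and the paper does not state which convention is used.

```python
def bilinear_upsample(grid, out_h, out_w):
    """Weight-free bilinear upsampling of a 2-D map (corner-aligned grid)."""
    in_h, in_w = len(grid), len(grid[0])
    out = []
    for i in range(out_h):
        y = i * (in_h - 1) / (out_h - 1) if out_h > 1 else 0.0
        y0 = int(y)
        y1 = min(y0 + 1, in_h - 1)
        dy = y - y0
        row = []
        for j in range(out_w):
            x = j * (in_w - 1) / (out_w - 1) if out_w > 1 else 0.0
            x0 = int(x)
            x1 = min(x0 + 1, in_w - 1)
            dx = x - x0
            # interpolate horizontally on the two neighbouring rows, then vertically
            top = grid[y0][x0] * (1 - dx) + grid[y0][x1] * dx
            bottom = grid[y1][x0] * (1 - dx) + grid[y1][x1] * dx
            row.append(top * (1 - dy) + bottom * dy)
        out.append(row)
    return out


print(bilinear_upsample([[0.0, 1.0], [2.0, 3.0]], 3, 3))
```

Since this operation has no parameters, any gradient from an auxiliary loss must be absorbed by the preceding \(1\times 1\) convolution and the main decoder layers, which is the intent of the multiresolution supervision described above.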

3.5 Network Compression

As we strive to design an efficient and compact semantic segmentation architecture that can be employed in resource constrained applications, we must ensure that the utilization of convolutional filters in our network is thoroughly optimized. Often, even the most compact networks have redundant neurons in deeper layers that do not significantly contribute to the overall performance of the model. Excessive convolutional filters not only increase the model size but also the inference time and the number of computing operations. These factors critically hinder the deployment of models in resource constrained real-world applications. Pruning of neural networks can be traced back to the work of LeCun et al. (1990), who introduced a technique called Optimal Brain Damage for selectively pruning weights with a theoretically justified measure. Recently, several new techniques have been proposed for pruning the weight matrices (Wen et al. 2016; Anwar et al. 2017; Liu et al. 2017; Li et al. 2016) of convolutional layers, as these layers account for most of the computation during inference.

These approaches rank neurons based on their contribution and remove the low-ranking neurons from the network, followed by fine-tuning of the pruned network. While the simplest neuron ranking method computes the \(\ell ^1\)-norm of each convolutional filter (Li et al. 2016), more sophisticated techniques have recently been proposed (Anwar et al. 2017; Liu et al. 2017; Molchanov et al. 2017). Some of these approaches are based on sparsity-based regularization of network parameters, which additionally increases the computational overhead during training (Liu et al. 2017; Wen et al. 2016). Techniques have also been proposed for structured pruning of entire kernels with strided sparsity (Anwar et al. 2017) that demonstrate impressive results for pruning small networks. However, their applicability to complex networks that are to be evaluated on large validation sets has not been explored due to their heavy computational cost. Moreover, until recently these techniques were only applied to simpler architectures such as VGG (Simonyan and Zisserman 2014) and AlexNet (Krizhevsky et al. 2012), as pruning complex deep architectures such as ResNets requires a holistic approach. Thus far, pruning of residual units has only been performed on convolutional layers that do not have an identity or shortcut connection, as pruning them additionally requires pruning the added residual maps in the exact same configuration. Attempts to prune them in the same configuration have resulted in a significant drop in performance (Li et al. 2016). Therefore, often only the first and the second convolutional layers of a residual unit are pruned.
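The simplest ranking mentioned above, the \(\ell ^1\)-norm of each filter in the spirit of Li et al. (2016), fits in a few lines. In this sketch each output channel's weights are given as one flattened list; the helper names are illustrative.

```python
def l1_filter_ranking(filters):
    """Return output-channel indices sorted from least to most important.

    `filters` holds one flattened list of weights per output channel; the
    importance of a filter is the l1-norm (sum of absolute values) of its weights.
    """
    norms = [sum(abs(w) for w in f) for f in filters]
    return sorted(range(len(filters)), key=lambda k: norms[k])


def keep_after_pruning(filters, num_prune):
    """Indices of the filters that survive removing the `num_prune` lowest-ranked ones."""
    order = l1_filter_ranking(filters)
    return sorted(order[num_prune:])


layer = [[0.5, -0.5], [0.01, 0.0], [2.0, 1.0]]
print(l1_filter_ranking(layer), keep_after_pruning(layer, 1))
```

This criterion only looks at the weights; data-dependent criteria such as the Taylor-expansion ranking adopted below also take the activations and gradients into account.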

Our proposed AdapNet++ architecture has shortcut and skip connections both in the encoder and in the decoder. Therefore, in order to efficiently maximize the pruning of our network, we propose a holistic network-wide pruning technique that is invariant to the presence of skip or shortcut connections. Our proposed technique first prunes all the convolutional layers of a residual unit and then masks out the pruned indices of the last convolutional layer of the residual unit with zeros before the residual maps from the shortcut connection are added. As masking is performed after pruning, we efficiently reduce the parameters and computing operations in a holistic fashion, while optimally pruning all the convolutional layers and preserving the shortcut or skip connections. After each pruning iteration, we fine-tune the network to recover any loss in accuracy. We illustrate this strategy by adopting a recently proposed greedy criterion-based oracle pruning technique that incorporates a novel ranking method based on a first-order Taylor expansion of the network cost function (Molchanov et al. 2017). The pruning problem is framed as a combinatorial optimization problem: the network is pruned to pruned weights \(\mathcal {W}'\) with at most B non-zero parameters such that the change in the cost value is minimal,

\[\min _{\mathcal {W}'} \big |\mathcal {C}(\mathcal {T} \mid \mathcal {W}') - \mathcal {C}(\mathcal {T} \mid \mathcal {W})\big | \quad \text {s.t.} \quad \Vert \mathcal {W}'\Vert _0 \le B\]

where \(\mathcal {T}\) is the training set, \(\mathcal {W}\) are the network parameters and \(\mathcal {C}(\cdot )\) is the negative log-likelihood function. Based on a Taylor expansion, the change in the loss function caused by removing a specific parameter can be approximated. Let \(h_i\) be the output feature maps produced by parameter i, with \(h_i=\{z_{0}^{1},z_{0}^{2},\cdots ,z_{L}^{C_l}\}\). The output \(h_i\) can be pruned by setting it to zero, and the ranking is given by

\[\Theta (h_i) = \big |\mathcal {C}(\mathcal {T}, h_i = 0) - \mathcal {C}(\mathcal {T}, h_i)\big |\]

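Approximating this difference with a first-order Taylor expansion, we can write

\[\Theta _{TE}(z_l^{k}) = \left| \frac{1}{M}\sum _{m=1}^{M} \frac{\partial \mathcal {C}}{\partial z_{l,m}^{k}}\, z_{l,m}^{k}\right| \]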

where M is the length of the vectorized feature map. This ranking can be easily computed during standard back-propagation, as it only requires the product of the activation and the gradient of the cost function with respect to that activation. Furthermore, in order to achieve adequate rescaling across layers, a layer-wise \(\ell ^2\)-norm of the rankings is computed as

\[\hat{\Theta }(z_l^{k}) = \frac{\Theta (z_l^{k})}{\sqrt{\sum _{j} \big (\Theta (z_l^{j})\big )^2}}\]

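The Taylor criterion and its layer-wise rescaling reduce to simple arithmetic once the activations and their gradients are available from back-propagation. The sketch below takes both as flattened lists for a single feature map; the function names are ours. In practice such scores are typically accumulated over many training examples before ranking.

```python
import math


def taylor_rank(activations, gradients):
    """Theta_TE = |(1/M) * sum_m dC/dz_m * z_m| for one vectorized feature map."""
    m = len(activations)
    return abs(sum(z * g for z, g in zip(activations, gradients)) / m)


def layer_normalize(ranks):
    """Divide each rank by the l2-norm of all ranks in the same layer."""
    norm = math.sqrt(sum(r * r for r in ranks))
    return [r / norm for r in ranks] if norm > 0 else list(ranks)


# two feature maps of one layer, each with M = 2 activations
ranks = [
    taylor_rank([1.0, 2.0], [0.5, 0.5]),   # contributes to the cost
    taylor_rank([1.0, -1.0], [1.0, 1.0]),  # contributions cancel out
]
print(ranks, layer_normalize(ranks))
```

The normalization makes scores comparable across layers of very different size, so a single global threshold can be applied in each pruning stage.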
The entire pruning procedure can be summarized as follows: first the AdapNet++ network is trained until convergence using the training protocol described in Sect. 5.1. Then the importance of the feature maps is evaluated using the aforementioned ranking method and subsequently the unimportant feature maps are removed. The pruned convolution layers that have shortcut connections are then masked at the indices where the unimportant feature maps are removed to maintain the shortcut connections. The network is then fine-tuned and the pruning process is reiterated until the desired trade-off between accuracy and the number of parameters has been achieved. We present results from pruning our AdapNet++ architecture in Sect. 5.4, where we perform pruning of both the convolutional and deconvolutional layers of our network in five stages by varying the threshold for the rankings. For each of these stages, we quantitatively evaluate the performance versus number of parameters trade-off obtained using our proposed pruning strategy in comparison to the standard approach.
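The masking step that keeps shortcut connections intact can be illustrated at a single spatial position. In this sketch (a hypothetical helper, not the authors' code) the pruned residual branch only produces values for the surviving channels; they are scattered back into a zero vector of the original width before the shortcut is added, so the shortcut passes through unchanged at pruned indices.

```python
def masked_residual_add(branch_out, kept_indices, shortcut):
    """Add a pruned residual branch to a full-width shortcut at one pixel.

    branch_out:   outputs of the channels that survived pruning
    kept_indices: positions of those channels in the original channel layout
    shortcut:     full-width shortcut (identity) features
    """
    full = [0.0] * len(shortcut)  # pruned channel positions stay zero (masked)
    for value, idx in zip(branch_out, kept_indices):
        full[idx] = value
    return [s + b for s, b in zip(shortcut, full)]


print(masked_residual_add([1.0, 2.0], [0, 2], [10.0, 10.0, 10.0, 10.0]))
```

The last convolution of the residual unit therefore only computes the kept channels, while the output of the unit retains its original width.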

4 Self-Supervised Model Adaptation

In this section, we describe our approach to multimodal fusion using our proposed self-supervised model adaptation (SSMA) framework. Our framework consists of three components: a modality-specific encoder as described in Sect. 3.1, a decoder built upon the topology described in Sect. 3.3 and our proposed SSMA block for adaptively recalibrating and fusing modality-specific feature maps. In the following, we first formulate the problem of semantic segmentation from multimodal data, followed by a detailed description of our proposed SSMA units and finally we describe the overall topology of our fusion architecture.

We represent the training set for multimodal semantic segmentation as \({\varvec{\mathcal {T}}} = \{(I_n,K_n,M_n) \mid n = 1,\dots ,N\}\), where \(I_n=\{u_r \mid r=1,\ldots ,\rho \}\) denotes the input frame from modality a, \(K_n=\{k_r \mid r=1,\ldots ,\rho \}\) denotes the corresponding input frame from modality b and the groundtruth label is given by \(M_n=\{m_{r} \mid r=1,\ldots ,\rho \}\), where \(m_{r} \in \{1,\ldots ,C\}\) is the semantic class label of pixel r and C is the number of semantic classes. The image \(I_n\) is only shown to the modality-specific encoder \(E_a\) and, similarly, the corresponding image \(K_n\) from a complementary modality is only shown to the modality-specific encoder \(E_b\). This enables each modality-specific encoder to specialize in a particular sub-space, learning its own hierarchical representations individually. We assume that the input images \(I_n\) and \(K_n\), as well as the label \(M_n\), have the same dimensions \(\rho = H\times W\) and that the pixels are drawn as i.i.d. samples following a categorical distribution. Let \(\theta \) be the network parameters consisting of weights and biases. Using the classification scores \(s_j\) at each pixel \(u_r\), we obtain probabilities \(\mathbf{P } = (p_1, \dots , p_C)\) with the \(\mathsf {softmax}\) function such that

\[p_j(u_r, \theta \mid I_n, K_n) = \frac{\exp \big (s_j(u_r, \theta \mid I_n, K_n)\big )}{\sum _{k=1}^{C} \exp \big (s_k(u_r, \theta \mid I_n, K_n)\big )}\]

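denotes the probability of pixel \(u_r\) being classified with label j. The optimal \(\theta \) is estimated by minimizing

\[\mathcal {L}_{seg}(\mathcal {T}, \theta ) = -\sum _{n=1}^{N}\sum _{r=1}^{\rho }\sum _{j=1}^{C} \delta _{m_r, j} \log p_j(u_r, \theta \mid I_n, K_n)\]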

for \((I_n, K_n, M_n) \in {\varvec{\mathcal {T}}}\), where \(\delta _{m_r, j}\) is the Kronecker delta.
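The softmax and the Kronecker-delta loss above reduce, per pixel, to the negative log-probability of the ground-truth class. The sketch below computes it in log space for numerical stability; classes are indexed from 0 here rather than 1, and the function names are illustrative.

```python
import math


def log_softmax(scores):
    """Numerically stable log of the softmax probabilities p_j."""
    m = max(scores)
    log_norm = m + math.log(sum(math.exp(s - m) for s in scores))
    return [s - log_norm for s in scores]


def pixel_loss(scores, label):
    """-sum_j delta(label, j) * log p_j: the delta keeps only the true class term."""
    return -log_softmax(scores)[label]


def segmentation_loss(pixel_scores, pixel_labels):
    """Sum of per-pixel losses over all pixels (and, by extension, all samples)."""
    return sum(pixel_loss(s, m) for s, m in zip(pixel_scores, pixel_labels))


print(segmentation_loss([[2.0, 0.0, 0.0], [0.0, 3.0, 0.0]], [0, 1]))
```

Working with log-probabilities avoids evaluating \(\log 0\) when a non-target class probability underflows, which a direct implementation of the formula could hit for large scores.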

4.1 SSMA Block

In order to adaptively recalibrate and fuse feature maps from modality-specific networks, we propose a novel architectural unit called the SSMA block. The goal of the SSMA block is to explicitly model the correlation between the two modality-specific feature maps before fusion so that the network can exploit the complementary features by learning to selectively emphasize more informative features from one modality, while suppressing the less informative features from the other. We construct the topology of the SSMA block in a fully-convolutional fashion which empowers the network with the ability to emphasize features from a modality-specific network for only certain spatial locations or object categories, while emphasizing features from the complementary modality for other locations or object categories. Moreover, the SSMA block dynamically recalibrates the feature maps based on the input scene context.

The structure of the SSMA block is shown in Fig. 7. Let \(\mathbf{X }^{a} \in \mathbb {R}^{C \times H\times W}\) and \(\mathbf{X }^{b} \in \mathbb {R}^{C \times H\times W}\) denote the modality-specific feature maps from modality A and modality B respectively, where C is the number of feature channels and \(H \times W\) is the spatial dimension. First, we concatenate the modality-specific feature maps \(\mathbf{X }^{a}\) and \(\mathbf{X }^{b}\) to yield \(\mathbf{X }^{ab} \in \mathbb {R}^{2\cdot C \times H\times W}\). We then employ a recalibration technique to adapt the concatenated feature maps before fusion. To achieve this, we first pass the concatenated feature map \(\mathbf{X }^{ab}\) through a bottleneck consisting of two \(3\times 3\) convolutional layers for dimensionality reduction and to improve the representational capacity of the concatenated features. The first convolution has weights \(\mathcal {W}_1 \in \mathbb {R}^{\frac{1}{\eta } \cdot C\times H\times W}\) with a channel reduction ratio \(\eta \) and is followed by a non-linearity \(\delta (\cdot )\). We use ReLU for the non-linearity, similar to the other activations in the encoders, and experiment with different reduction ratios in Sect. 5.10.2. Note that we omit the bias term to simplify the notation. The subsequent convolutional layer with weights \(\mathcal {W}_2 \in \mathbb {R}^{2 \cdot C\times H\times W}\) increases the dimensionality of the feature channels back to the concatenation dimension 2C, and a sigmoid function \(\sigma (\cdot )\) scales the dynamic range of the activations to the [0, 1] interval. This can be represented as

\[\mathbf{s } = \sigma \big (\mathcal {W}_2\, \delta (\mathcal {W}_1\, \mathbf{X }^{ab})\big )\]

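The resulting output \(\mathbf{s }\) is used to recalibrate or emphasize/de-emphasize regions in \(\mathbf{X }^{ab}\) as

\[\hat{\mathbf{X }}^{ab} = F_{scale} (\mathbf{X }^{ab},\mathbf{s }) = \mathbf{s } \circ \mathbf{X }^{ab}\]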

where \(F_{scale} (\mathbf{X }^{ab},\mathbf{s })\) denotes the Hadamard product of the feature maps \(\mathbf{X }^{ab}\) and the tensor of scalars \(\mathbf{s }\), such that each element \(x_{c,i,j}\) in \(\mathbf{X }^{ab}\) is multiplied with the corresponding activation \(s_{c,i,j}\) in \(\mathbf{s }\), with \(c \in \{1,2,\dots ,2C\}\), \(i \in \{1,2,\dots ,H\}\) and \(j \in \{1,2,\dots ,W\}\). The activations \(\mathbf{s }\) adapt to the concatenated input feature map \(\mathbf{X }^{ab}\), enabling the network to weigh features element-wise, spatially and across the channel depth, based on the multimodal inputs \(I_n\) and \(K_n\). With new multimodal inputs, the network dynamically weighs and reweighs the feature maps in order to optimally combine complementary features. Finally, the recalibrated feature maps \(\hat{\mathbf{X }}^{ab}\) are passed through a \(3\times 3\) convolution with weights \(\mathcal {W}_3 \in \mathbb {R}^{C \times H\times W}\) and a batch normalization layer to reduce the feature channel depth and yield the fused output \(\mathbf{f }\) as

\[\mathbf{f } = \mathrm {BN}\big (\mathcal {W}_3\, \hat{\mathbf{X }}^{ab}\big )\]

Fig. 8 Topology of our AdapNet++ encoder for multimodal fusion. The encoder employs a late fusion technique to fuse feature maps from modality-specific streams using our proposed SSMA block. The SSMA block is employed to fuse the latent features from the eASPP as well as the feature maps from the skip refinements

As described in the following section, we employ our proposed SSMA block to fuse modality-specific feature maps both at intermediate stages of the network and towards the end of the encoder. Although we utilize a bottleneck structure to conserve the number of parameters consumed, further reduction in the parameters can be achieved by replacing the \(3\times 3\) convolution layers with \(1\times 1\) convolutions, which yields comparable performance. We also remark that the SSMA blocks can be used for multimodal fusion in other tasks such as scene classification as shown in Sect. 5.9.
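The data flow of the SSMA block can be sketched with the \(1\times 1\) convolution variant mentioned above, which reduces every convolution to a per-pixel matrix-vector product. This is a minimal sketch under stated assumptions: tensors are nested lists indexed [h][w][c], batch normalization and biases are omitted, and the weight matrices (rows = output channels) are supplied by the caller; it is not the released implementation.

```python
import math


def conv1x1(x, weights):
    """1x1 convolution: weights[o][i] maps input channel i to output channel o."""
    return [[[sum(w * v for w, v in zip(row, px)) for row in weights]
             for px in line] for line in x]


def ssma_1x1(xa, xb, w1, w2, w3):
    # concatenate the modality-specific features along the channel axis (2C)
    xab = [[pa + pb for pa, pb in zip(la, lb)] for la, lb in zip(xa, xb)]
    # bottleneck: reduce with ReLU, expand back to 2C, squash with a sigmoid
    z = [[[max(0.0, v) for v in px] for px in line] for line in conv1x1(xab, w1)]
    s = [[[1.0 / (1.0 + math.exp(-v)) for v in px] for px in line]
         for line in conv1x1(z, w2)]
    # element-wise recalibration (Hadamard product with the gates s)
    x_hat = [[[g * v for g, v in zip(gp, px)] for gp, px in zip(gl, xl)]
             for gl, xl in zip(s, xab)]
    # fuse back to C channels (batch normalization omitted in this sketch)
    return conv1x1(x_hat, w3)


# one pixel, C = 1: zero bottleneck weights give gates of 0.5 for both modalities
print(ssma_1x1([[[1.0]]], [[[3.0]]], [[0.0, 0.0]], [[0.0], [0.0]], [[1.0, 1.0]]))
```

Because the gates are computed per pixel and per channel from both modalities jointly, the same weights can favour modality A at some locations and modality B at others.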

4.2 Fusion Architecture

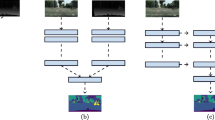

We propose a framework for multimodal semantic segmentation using a modified version of our AdapNet++ architecture and the proposed SSMA blocks. For simplicity, we consider the fusion of two modalities, but the framework can be easily extended to an arbitrary number of modalities. The encoder of our framework shown in Fig. 8 contains two streams, where each stream is based on the encoder topology described in Sect. 3.1. Each encoder stream is modality-specific and specializes in a particular sub-space. In order to fuse the feature maps from both streams, we adopt a combination of mid-level and late fusion strategies in which we fuse the latent representations of both encoders using the SSMA block and pass the fused feature map to the first decoder stage. We denote this as latent SSMA fusion, as it takes the output of the eASPP from each modality-specific encoder as input. We set the reduction ratio \(\eta = 16\) in the latent SSMA. As the AdapNet++ architecture contains skip connections for high-resolution refinement, we employ an SSMA block at each skip refinement stage after the \(1\times 1\) convolution, as shown in Fig. 8. As the \(1\times 1\) convolutions reduce the feature channel depth to 24, we only use a reduction ratio \(\eta = 6\) in the two skip SSMAs, as identified from the ablation experiments presented in Sect. 5.10.2.

Fig. 9 Topology of the modified AdapNet++ decoder used for multimodal fusion. We propose a mechanism to better correlate the fused mid-level skip refinement features with the high-level decoder features before integrating them into the decoder. The correlation mechanism is depicted following the fused skip connections

In order to upsample the fused predictions, we build upon our decoder described in Sect. 3.3. The main stream of our decoder resembles the topology of the decoder in our AdapNet++ architecture, consisting of three upsampling stages. The output of the latent SSMA block is fed to the first upsampling stage of the decoder. Following the AdapNet++ topology, the outputs of the skip SSMA blocks would be concatenated into the decoder at the second and third upsampling stages (skip1 after the first deconvolution and skip2 after the second deconvolution). However, we find that concatenating the fused mid-level features into the decoder does not improve the resolution of the segmentation as substantially as it does in the unimodal AdapNet++ architecture. We hypothesise that directly concatenating the fused mid-level features and fused high-level features causes a feature localization mismatch, as each SSMA block adaptively recalibrates at a different stage of the network, where the feature maps differ in spatial resolution by a factor of two between consecutive stages and also differ in channel depth. Moreover, training the fusion network end-to-end from scratch also contributes to this problem: without initializing the encoders with modality-specific pre-trained weights, concatenating the uninitialized mid-level fused encoder feature maps into the decoder does not yield any performance gains; rather, it hampers convergence.

With the goal of mitigating this problem, we propose two strategies. First, in order to facilitate better fusion, we adopt a multi-stage training protocol where we first initialize each encoder in the fusion architecture with pre-trained weights from the unimodal AdapNet++ model. We describe this procedure in Sect. 5.1.2. Second, we propose a mechanism to better correlate the mid and high-level fused features before concatenation in the decoder. We propose to weigh the fused mid-level skip features with the spatially aggregated statistics of the high-level decoder features before the concatenation. Following the notation convention, we define \(\mathbf{D } \in \mathbb {R}^{C \times H\times W}\) as the high-level decoder feature map before the skip concatenation stage. A feature statistic \(\mathbf{s } \in \mathbb {R}^C\) is produced by projecting \(\mathbf{D }\) along the spatial dimensions \(H\times W\) using a global average pooling layer as

\[s_c = \frac{1}{H \times W}\sum _{i=1}^{H}\sum _{j=1}^{W} \mathbf{D }_c(i,j)\]

where \(s_c\) represents a statistic or a local descriptor of the \(c^{th}\) channel of \(\mathbf{D }\). We then reduce the number of feature channels in \(\mathbf{s }\) using a \(1\times 1\) convolution layer with weights \(\mathcal {W}_{4} \in \mathbb {R}^{C \times H\times W}\), batch normalization and a ReLU activation function \(\delta \) to match the channels of the fused mid-level feature map \(\mathbf{f }\), where \(\mathbf{f }\) is computed as shown in Eq. (12). We can represent the resulting output as

\[\mathbf{z } = \delta \big (\mathrm {BN}(\mathcal {W}_4\, \mathbf{s })\big )\]

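Finally, we weigh the fused mid-level feature map \(\mathbf{f }\) with the reduced aggregated descriptors \(\mathbf{z }\) using channel-wise multiplication as

\[\hat{\mathbf{f }} = F_{scale}(\mathbf{f }, \mathbf{z }), \qquad \hat{f}_{c,i,j} = z_c\, f_{c,i,j}\]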

As shown in Fig. 9, we employ the aforementioned mechanism to the fused feature maps from skip1 SSMA as well as skip2 SSMA and concatenate their outputs with the decoder feature maps at the second and third upsampling stages respectively. We find that this mechanism guides the fusion of mid-level skip refinement features with the high-level decoder feature more effectively than direct concatenation and yields a notable improvement in the resolution of the segmentation output.
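The correlation mechanism amounts to a global average pool of the decoder features, a channel projection and a channel-wise rescaling of the fused skip features. The sketch below follows that order on nested lists indexed [h][w][c]; batch normalization is omitted, the \(1\times 1\) convolution on the pooled vector is written as a matrix-vector product, and the helper names are hypothetical.

```python
def global_avg_pool(d):
    """s_c = mean of channel c over all H x W positions; d is indexed [h][w][c]."""
    h, w, channels = len(d), len(d[0]), len(d[0][0])
    return [sum(d[i][j][c] for i in range(h) for j in range(w)) / (h * w)
            for c in range(channels)]


def weight_skip(f, d, w4):
    """Scale the fused skip features f channel-wise by z = ReLU(W4 s)."""
    s = global_avg_pool(d)
    # 1x1 convolution on a 1x1 map == matrix-vector product (BN omitted)
    z = [max(0.0, sum(w * v for w, v in zip(row, s))) for row in w4]
    # channel-wise multiplication: every position of channel c is scaled by z_c
    return [[[zc * v for zc, v in zip(z, px)] for px in line] for line in f]


decoder = [[[1.0, 2.0], [3.0, 4.0]]]  # H = 1, W = 2, C = 2
fused_skip = [[[2.0], [4.0]]]         # H = 1, W = 2, one channel
print(weight_skip(fused_skip, decoder, [[0.25, 0.0]]))
```

Since \(\mathbf{z }\) depends only on global decoder statistics, the skip features are re-weighted per channel rather than per pixel, which complements the spatially varying gating inside the SSMA blocks.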

5 Experimental Results

In this section, we first describe the datasets that we benchmark on, followed by comprehensive quantitative results for unimodal segmentation using our proposed AdapNet++ architecture in Sect. 5.3 and the results for model compression in Sect. 5.4. We then present detailed ablation studies that describe our architectural decisions in Sect. 5.5, followed by the qualitative unimodal segmentation results in Sect. 5.6. We present the multimodal fusion benchmarking experiments with the various modalities contained in the datasets in Sect. 5.7 and the ablation study on our multimodal fusion architecture in Sect. 5.10. We finally present the qualitative multimodal segmentation results in Sect. 5.11 and in challenging perceptual conditions in Sect. 5.12.

All our models were implemented using the TensorFlow (Abadi et al. 2015) deep learning library and the experiments were carried out on a system with an Intel Xeon E5 at 2.4 GHz and an NVIDIA TITAN X GPU. We primarily use the standard Jaccard index, also known as the intersection-over-union (IoU) metric, to quantify the performance. The IoU for each object class is computed as IoU = TP/(TP + FP + FN), where TP, FP and FN correspond to true positives, false positives and false negatives respectively. We report the mean intersection-over-union (mIoU) metric for all the models and also the pixel-wise accuracy (Acc), average precision (AP), global intersection-over-union (gIoU) metric, false positive rate (FPR) and false negative rate (FNR) in the detailed analysis. All the metrics are computed as defined in the PASCAL VOC challenge (Everingham et al. 2015) and, additionally, the gIoU metric is computed as \(\text {gIoU} = \sum _{\text {C}} \text {TP}_{\text {C}}/\sum _{\text {C}} (\text {TP}_{\text {C}} + \text {FP}_{\text {C}} + \text {FN}_{\text {C}})\), where the sums run over all C object categories. The implementations of our proposed architectures are publicly available at https://github.com/DeepSceneSeg and a live demo can be viewed at http://deepscene.cs.uni-freiburg.de.
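The difference between mIoU and gIoU is easiest to see from a confusion matrix: mIoU averages per-class ratios, whereas gIoU pools the counts over classes before dividing. The sketch below assumes a square confusion matrix with rows as groundtruth and columns as predictions, and that every class occurs at least once (otherwise a per-class IoU would divide by zero).

```python
def iou_metrics(conf):
    """Per-class IoU, mIoU and gIoU from conf[true][pred] pixel counts."""
    n = len(conf)
    tp = [conf[c][c] for c in range(n)]
    fp = [sum(conf[t][c] for t in range(n)) - tp[c] for c in range(n)]  # column minus TP
    fn = [sum(conf[c]) - tp[c] for c in range(n)]                        # row minus TP
    ious = [tp[c] / (tp[c] + fp[c] + fn[c]) for c in range(n)]
    miou = sum(ious) / n
    giou = sum(tp) / sum(tp[c] + fp[c] + fn[c] for c in range(n))
    return ious, miou, giou


print(iou_metrics([[3, 1], [1, 5]]))
```

Because gIoU pools counts, classes that cover many pixels dominate it, while mIoU gives every class equal weight.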

5.1 Training Protocol

In this section, we first describe the procedure that we employ for training our proposed AdapNet++ architecture, followed by the protocol for training the SSMA fusion scheme. We then detail the various data augmentations that we perform on the training set.

5.1.1 AdapNet++ Training

Since we train our network with input images of resolution \(768\times 384\) pixels, we resize the RGB images using bilinear interpolation, and the other modalities as well as the groundtruth labels using nearest-neighbor interpolation. We initialize the encoder section of the network with weights pre-trained on the ImageNet dataset (Deng et al. 2009), and we use He initialization (He et al. 2015b) for the other convolutional and deconvolutional layers. We use the Adam solver for optimization with \(\beta _1=0.9, \beta _2=0.999\) and \(\epsilon =10^{-10}\). We train our model for 150K iterations using an initial learning rate of \(\lambda _0 = 10^{-3}\), a mini-batch size of 8 and a dropout probability of 0.5. We use the cross-entropy loss function and set the weights \(\lambda _1 =0.6\) and \(\lambda _2 =0.5\) to balance the auxiliary losses, which gives the final loss function \(\mathcal {L} = \mathcal {L}_{main} + \lambda _1 \mathcal {L}_{aux1} + \lambda _2 \mathcal {L}_{aux2}\).
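As a minimal sketch of how the weighted objective combines the three heads, the snippet below evaluates a pixel-averaged softmax cross-entropy for each head and sums them with \(\lambda _1 = 0.6\) and \(\lambda _2 = 0.5\). It is written in plain Python rather than TensorFlow for readability; the function names are hypothetical, and each head's logits are assumed to already match the resolution of its (possibly downsampled) label map.

```python
import math
from typing import Sequence, Tuple

Head = Tuple[Sequence[Sequence[float]], Sequence[int]]  # (per-pixel logits, per-pixel labels)


def softmax_cross_entropy(logits: Sequence[Sequence[float]], labels: Sequence[int]) -> float:
    """Mean cross-entropy over pixels; logits[i] holds the class scores of pixel i."""
    total = 0.0
    for scores, y in zip(logits, labels):
        m = max(scores)  # subtract the max score for numerical stability
        log_z = m + math.log(sum(math.exp(s - m) for s in scores))
        total += log_z - scores[y]  # -log softmax(scores)[y]
    return total / len(labels)


def total_loss(main: Head, aux1: Head, aux2: Head,
               lambda1: float = 0.6, lambda2: float = 0.5) -> float:
    """L = L_main + lambda1 * L_aux1 + lambda2 * L_aux2."""
    return (softmax_cross_entropy(*main)
            + lambda1 * softmax_cross_entropy(*aux1)
            + lambda2 * softmax_cross_entropy(*aux2))
```

With uniform logits over two classes, each head contributes \(\ln 2\), so the total is \((1 + 0.6 + 0.5)\ln 2\); the auxiliary terms only add gradient signal to intermediate layers and do not change which head is used at inference.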

5.1.2 SSMA Training

We employ a multi-stage procedure for training the multimodal models using our proposed SSMA fusion scheme. We first train each modality-specific AdapNet++ model individually using the training procedure described in Sect. 5.1.1. In the second stage, we leverage transfer learning to train the joint fusion model in the SSMA framework by initializing only the encoders with the weights of the individual modality-specific encoders trained in the previous stage. We then set the learning rate of the encoder layers to \(\lambda _0 = 10^{-4}\) and that of the decoder layers to \(\lambda _0 = 10^{-3}\), and train the fusion model with a mini-batch size of 7 for a maximum of 100K iterations. This enables the SSMA blocks to learn the optimal combination of multimodal feature maps from the well-trained encoders, while slowly adapting the encoder weights to improve the fusion. In the final stage, we freeze the encoder layers by setting their learning rate to \(\lambda _0 = 0\) and train only the decoder and the SSMA blocks with a learning rate of \(\lambda _0 = 10^{-5}\) and a mini-batch size of 12 for 50K iterations. This enables us to train the network with a larger batch size, while focusing more on the upsampling stages to yield the high-resolution segmentation output.
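The three-stage schedule can be summarized as a small configuration structure with one learning rate per parameter group, where a rate of zero marks a frozen group. This is a hypothetical sketch of the hyperparameters stated above, not the released training code; in particular, the text does not state the SSMA learning rate in the second stage, so it is assumed here to equal the decoder rate.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Stage:
    name: str
    learning_rates: Dict[str, float]  # learning rate per parameter group
    batch_size: int
    max_iterations: int

    def trainable_groups(self) -> List[str]:
        return [g for g, lr in self.learning_rates.items() if lr > 0]

    def frozen_groups(self) -> List[str]:
        # A learning rate of zero means the group is not updated in this stage.
        return [g for g, lr in self.learning_rates.items() if lr == 0]


SSMA_SCHEDULE = [
    # Stage 1: each modality-specific AdapNet++ model is trained on its own.
    Stage("unimodal", {"encoder": 1e-3, "decoder": 1e-3}, batch_size=8, max_iterations=150_000),
    # Stage 2: encoders initialized from stage 1; SSMA rate assumed equal to the decoder rate.
    Stage("fusion", {"encoder": 1e-4, "decoder": 1e-3, "ssma": 1e-3}, batch_size=7, max_iterations=100_000),
    # Stage 3: encoders frozen; only the decoder and SSMA blocks are fine-tuned.
    Stage("finetune", {"encoder": 0.0, "decoder": 1e-5, "ssma": 1e-5}, batch_size=12, max_iterations=50_000),
]
```

Freezing the encoders in the last stage frees the memory otherwise needed for their gradients, which is consistent with the larger mini-batch size of 12.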

5.1.3 Data Augmentation

The training of deep networks can be significantly improved by expanding the dataset to introduce more variability. In order to achieve this, we apply a series of augmentation strategies randomly on the input data while training. The augmentations that we apply include rotation (\(-\,13^{\circ }\) to \(13^{\circ }\)), skewing (0.05–0.10), scaling (0.5–2.0), vignetting (210–300), cropping (0.8–0.9), brightness modulation (\(-\,40\) to 40), contrast modulation (0.5–1.5) and flipping.
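A minimal sketch of drawing one random set of augmentation parameters per training sample from the ranges listed above is given below. The text does not specify how often each augmentation is applied, so the sketch assumes each one is enabled independently with a hypothetical probability `p`; it also only samples parameters and leaves their application (and the units of, e.g., vignetting) to the image-processing code.

```python
import random
from typing import Dict

# Ranges as listed in the text; units follow the original description.
AUGMENTATION_RANGES = {
    "rotation_deg": (-13.0, 13.0),
    "skew": (0.05, 0.10),
    "scale": (0.5, 2.0),
    "vignetting": (210.0, 300.0),
    "crop": (0.8, 0.9),
    "brightness": (-40.0, 40.0),
    "contrast": (0.5, 1.5),
}


def sample_augmentation(rng: random.Random, p: float = 0.5) -> Dict[str, object]:
    """Draw one parameter set; each augmentation is enabled with probability p (assumed)."""
    params: Dict[str, object] = {}
    for name, (low, high) in AUGMENTATION_RANGES.items():
        if rng.random() < p:
            params[name] = rng.uniform(low, high)
    params["flip"] = rng.random() < p  # horizontal flip on/off
    return params
```

For pixel-wise segmentation, the geometric parameters (rotation, skew, scale, crop and flip) have to be shared between the input modalities and the label map so that they stay aligned; restricting the photometric ones (brightness, contrast, vignetting) to the RGB image is a plausible choice but an assumption here.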

5.2 Datasets

We evaluate our proposed AdapNet++ architecture on five publicly available and diverse scene understanding benchmarks ranging from urban driving scenarios to unstructured forested scenes and cluttered indoor environments. We chose these datasets specifically because they contain scenes with challenging perceptual conditions, including rain, snow, fog, night-time, glare, motion blur and other seasonal appearance changes. Each of the datasets contains multiple modalities that we utilize for benchmarking our fusion approach. We briefly describe the datasets and their constituent semantic categories in this section.

Cityscapes The Cityscapes dataset (Cordts et al. 2016) is one of the largest labeled RGB-D datasets for urban scene understanding. It is one of the standard benchmarks and is highly challenging, as it contains images of complex urban scenes collected from over 50 cities during varying seasons, lighting and weather conditions. The images were captured using an automotive-grade stereo camera with a 22 cm baseline at a resolution of \(2048\times 1024\) pixels. The dataset contains 5000 finely annotated images, of which 2975 are provided for training, 500 for validation and 1525 for testing. As a supplementary training set, 20,000 coarsely annotated images are also provided. The annotations of the test images are not publicly released; they are used by the evaluation server for benchmarking on 19 semantic object categories. We report results on the full 19 class label set for both the validation and test sets. Additionally, in order to facilitate comparison with previous fusion approaches, we also report results on a reduced label set consisting of 11 classes plus background: sky, building, road, sidewalk, fence, vegetation, pole, car/truck/bus, traffic sign, person, rider/bicycle/motorbike and background.

In our previous work (Valada et al. 2017), we directly used the colorized depth image as input to our network. We converted the stereo disparity map to a three-channel colorized depth image by normalizing it and applying the standard jet color map. Figure 10a, c show an example image and the corresponding colorized depth map from the dataset. However, as seen in the figure, the depth maps contain a considerable amount of noise and missing depth values due to occlusion, which are undesirable, especially when depth maps are used as an input modality for pixel-wise segmentation. Therefore, in this work, we employ a recently proposed state-of-the-art fast depth completion technique (Ku et al. 2018) to fill any holes that may be present. The resulting filled depth map is shown in Fig. 10d. The depth completion algorithm can easily be incorporated into our pipeline as a preprocessing step, as it requires only 11 ms on a CPU and can be further parallelized using a GPU implementation. Additionally, Gupta et al. (2014) proposed an alternate representation of the depth map known as the HHA encoding to enable DCNNs to learn more effectively. The authors demonstrate that the HHA representation encodes properties of geocentric pose that emphasize complementary discontinuities in the image, which are extremely hard for the network to learn, especially from limited training data. This representation also yields a three-channel image consisting of: horizontal disparity, height above ground, and the angle between the local surface normal of a pixel and the inferred gravity direction. The resulting channels are then linearly scaled and mapped to the 0 to 255 range. However, it is still unclear whether this representation enables the network to learn features complementary to those learned from visual RGB images, as different works report contradicting results (Hazirbas et al. 2016; Gupta et al. 2014; Eitel et al. 2015). 
In this paper, we perform in-depth experiments with both the jet colorized and the HHA encoded depth maps on a larger and more challenging dataset than previous works to investigate the utility of these encodings.
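The jet colorization step can be sketched as a min-max normalization followed by a colormap lookup. The snippet below uses the common piecewise-linear approximation of the MATLAB-style jet colormap (dark blue for near values, dark red for far ones); it is an illustration under stated assumptions, not the exact preprocessing used in our previous work. In particular, the function names are hypothetical, and marking invalid pixels with a sentinel value and rendering them black is an assumption.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def _clip01(v: float) -> float:
    return min(1.0, max(0.0, v))


def jet(x: float) -> RGB:
    """Piecewise-linear jet approximation: x in [0, 1] -> RGB in 0..255."""
    r = _clip01(1.5 - abs(4.0 * x - 3.0))
    g = _clip01(1.5 - abs(4.0 * x - 2.0))
    b = _clip01(1.5 - abs(4.0 * x - 1.0))
    return round(255 * r), round(255 * g), round(255 * b)


def colorize_depth(depth: Sequence[float], invalid: float = 0.0) -> List[RGB]:
    """Min-max normalize the valid values and map them through jet; invalid pixels become black."""
    valid = [d for d in depth if d != invalid]
    lo, hi = min(valid), max(valid)
    span = (hi - lo) or 1.0  # avoid division by zero for a constant depth map
    return [(0, 0, 0) if d == invalid else jet((d - lo) / span) for d in depth]
```

For example, `colorize_depth([0.0, 2.0, 4.0])` leaves the invalid first pixel black and maps the nearest and farthest valid values to `(0, 0, 128)` and `(128, 0, 0)`. Because the normalization is per image, the same color can correspond to different absolute depths in different images, which is one motivation for geocentric encodings such as HHA.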

Synthia The Synthia dataset (Ros et al. 2016) is a large-scale urban outdoor dataset that contains photorealistic images and depth data rendered from a virtual city built using the Unity engine. An example image and the corresponding modalities from this dataset are shown in Fig. 11. It consists of several annotated label sets. In this work, we use the Synthia-Rand-Cityscapes set and the video sequences, which have images of resolution \(1280\times 760\) with a \(100^{\circ }\) horizontal field of view. This dataset is of particular interest for benchmarking fusion approaches, as it contains diverse traffic situations under different weather conditions. Synthia-Rand-Cityscapes consists of 9000 images and the sequences contain 8000 images with groundtruth labels for 12 classes. The object categories are the same as those of the reduced Cityscapes label set described above.

SUN RGB-D The SUN RGB-D dataset (Song et al. 2015) is one of the most challenging indoor scene understanding benchmarks to date. It contains 10,335 RGB-D images that were captured with four different types of RGB-D cameras (Kinect V1, Kinect V2, Xtion and RealSense) with different resolutions and fields of view. This benchmark also incorporates several other datasets, including 1449 images from NYU Depth v2 (Silberman et al. 2012), 554 images from Berkeley B3DO (Janoch et al. 2013) and 3389 images from SUN3D (Xiao et al. 2013). An example image and the corresponding modalities from this dataset are shown in Fig. 12. We use the original split consisting of 5285 images for training and 5050 images for testing. We use the refined in-painted depth images from the dataset, which were processed using a multi-view fusion technique. However, some refined depth images still have missing depth values at distances larger than a few meters. Therefore, following previous work (Hazirbas et al. 2016), we exclude the 587 training images that were captured using the RealSense RGB-D camera, as they contain a significant amount of invalid depth measurements that are further intensified by the in-painting process.

This dataset provides pixel-level semantic annotations for 37 categories, namely: wall, floor, cabinet, bed, chair, sofa, table, door, window, bookshelf, picture, counter, blinds, desk, shelves, curtain, dresser, pillow, mirror, floor mat, clothes, ceiling, books, fridge, tv, paper, towel, shower curtain, box, whiteboard, person, night stand, toilet, sink, lamp, bathtub and bag. Although we benchmark on all the object categories, 16 of the 37 classes are rarely present in the images, which makes the dataset extremely imbalanced, and about \(0.25\%\) of the pixels are not assigned to any of the classes. Moreover, as each scene contains many different types of objects, the objects are often partially occluded and may appear completely different in the test images.

ScanNet The ScanNet RGB-D video dataset (Dai et al. 2017) is a recently introduced large-scale indoor scene understanding benchmark. It contains 2.5M RGB-D images from 1512 scans acquired in 707 distinct spaces. The data was collected using an iPad Air2 mounted with a depth camera similar to the Microsoft Kinect v1. The iPad camera and the depth camera were hardware synchronized, and frames were captured at 30 Hz. The RGB images were captured at a resolution of \(1296\times 968\) pixels and the depth frames at \(640\times 480\) pixels. The semantic segmentation benchmark contains 16,506 labelled training images and 2537 testing images. The example depth image in Fig. 13b shows a number of missing depth values at object boundaries and at large distances. Therefore, similar to the preprocessing that we perform on the Cityscapes dataset, we use the fast depth completion technique (Ku et al. 2018) to fill the holes. The corresponding filled depth image is shown in Fig. 13c. We also compute the HHA encoding of the depth maps and use it as an additional modality in our experiments.

The dataset provides pixel-level semantic annotations for 21 object categories, namely: wall, floor, chair, table, desk, bed, bookshelf, sofa, sink, bathtub, toilet, curtain, counter, door, window, shower curtain, refrigerator, picture, cabinet, other furniture and void. Similar to the SUN RGB-D dataset, many object classes are rarely present making the dataset unbalanced. Moreover, the annotations at the object boundaries are often irregular and parts of objects at large distances are unlabelled as shown in Fig. 13e. These factors make the task even more challenging on this dataset.

Freiburg Forest In our previous work (Valada et al. 2016b), we introduced the Freiburg Multispectral Segmentation benchmark, which is a first-of-a-kind dataset of unstructured forested environments. Unlike urban and indoor scenes, which are highly structured with rigid objects that have distinct geometric properties, objects in unstructured forested environments are extremely diverse, and their appearance changes completely from month to month due to seasonal variations. The primary motivation for introducing this dataset is to enable robots to distinguish obstacles that can be driven over, such as tall grass and bushes, from obstacles that should be avoided, such as tall trees and boulders. Therefore, we proposed to exploit the presence of chlorophyll in these objects, which can be detected in the Near-InfraRed (NIR) wavelength. NIR images provide a high-fidelity description of the presence of vegetation in the scene and, as demonstrated in our previous work (Valada et al. 2017), they enhance the border accuracy of segmentation.

The dataset was collected over an extended period of time using our Viona autonomous robot, equipped with a Bumblebee2 camera to capture stereo images and a modified camera in which the NIR-cut filter was replaced with a Wratten 25 A filter for capturing the NIR wavelength in the blue and green channels. The dataset contains over 15,000 images that were sub-sampled at 1 Hz, corresponding to traversing over 4.7 km each day. In order to extract consistent spatial and global vegetation information, we computed vegetation indices such as the Normalized Difference Vegetation Index (NDVI) and the Enhanced Vegetation Index (EVI) using the approach presented by Huete et al. (1999). NDVI is resistant to noise caused by changing sun angles, topography and shadows, but is susceptible to errors due to variable atmospheric and canopy background conditions (Huete et al. 1999). EVI was proposed to compensate for these defects, with improved sensitivity in high-biomass regions and improved detection through decoupling of the canopy background signal and reduction of atmospheric influences. Figure 14 shows an example image from the dataset and the corresponding modalities. The dataset contains hand-annotated segmentation groundtruth for six classes: sky, trail, grass, vegetation, obstacle and void. We use the original train and test splits provided by the dataset.
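For reference, the per-pixel form of the two vegetation indices is sketched below. The NDVI formula is standard; for EVI, the sketch assumes the commonly used MODIS coefficients (gain G = 2.5, aerosol coefficients C1 = 6 and C2 = 7.5, canopy background term L = 1) and reflectance inputs in [0, 1]. The text above does not state which coefficients or which camera channels serve as the red, blue and NIR bands, so both are assumptions of this illustration, and the function names are hypothetical.

```python
def ndvi(nir: float, red: float) -> float:
    """Normalized Difference Vegetation Index: (NIR - Red) / (NIR + Red)."""
    denom = nir + red
    return 0.0 if denom == 0 else (nir - red) / denom


def evi(nir: float, red: float, blue: float,
        gain: float = 2.5, c1: float = 6.0, c2: float = 7.5, l: float = 1.0) -> float:
    """Enhanced Vegetation Index with MODIS-style coefficients (assumed values).

    The blue band corrects for aerosol influences in the red band, and the
    term l adjusts for the canopy background.
    """
    denom = nir + c1 * red - c2 * blue + l
    return 0.0 if denom == 0 else gain * (nir - red) / denom
```

For a pixel with NIR reflectance 0.5, red 0.1 and blue 0.05, NDVI is 0.4/0.6 ≈ 0.667, while EVI is 2.5 · 0.4/1.725 ≈ 0.580; the additional terms in the EVI denominator are what dampen the saturation of NDVI over dense canopy. Applying either function to every pixel yields a single-channel image that can serve as an input modality.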

5.3 AdapNet++ Benchmarking