Abstract

Recently, graph databases have received much attention in the research community due to their extensive applications in practice, such as social networks, biological networks, and the World Wide Web, which bring forth many challenging data management problems, including subgraph search, shortest path queries, reachability verification, and pattern matching. Among them, graph pattern matching, which asks for all matches in a data graph G of a given pattern graph Q, is more general and flexible than the other problems mentioned above. In this paper, we address a kind of graph matching, the so-called graph matching with δ, in which an edge in Q is allowed to match a path of length ≤ δ in G. In order to reduce the search space when exploring G for matches, we propose a new index structure and a novel pruning technique that eliminate many unqualified vertices before join operations are carried out. Extensive experiments show that our approach greatly improves running time compared to existing ones.

1 Introduction

Nowadays, in numerous applications, including social networks, biological networks, the WWW, and geographical networks, data are normally organized into graphs with vertices for objects and edges for their relationships. The burgeoning size and heterogeneity of networks have inspired extensive interest in querying graphs in different ways, such as subgraph search [1,2,3,4], shortest path queries [5], reachability queries [6,7,8,9], and pattern matching queries [10,11,12,13,14,15]. Among them, pattern matching is very challenging: we are asked to find all matches of a given pattern graph Q in a data graph G, each of which is isomorphic to Q or satisfies certain conditions related to Q. As a key ingredient of many advanced applications in large networks, graph matching arises in many different domains: (1) in traditional relational database research, a schema is often represented as a graph, and to check updated data for consistency, a schema graph must be mapped to part of a data graph by matching data instances [16]; (2) in a large metabolic network, it is desirable to find all protein substructures that contain an α–β-barrel motif, specified as a cycle of β strands embraced by an α-helix cycle [17]; (3) in computer vision, a scene is naturally represented as a graph G(V, E), where each vertex in V is a feature and an edge in E stands for the geographical adjacency of two features [18]. Scene recognition then amounts to matching a graph representing part of a scene against another graph stored in a database.

The first two applications mentioned above are typically exact matching, which requires graph isomorphism or subgraph isomorphism checking. In other words, the mapping between two graphs must be both vertex-label preserving and edge preserving, in the sense that if two vertices in the first graph are linked by an edge, they are mapped to two vertices in the second graph that are also linked by an edge. It is well known that subgraph isomorphism checking is NP-complete [19]. A lot of work has been done on this problem, but most of it either targets special kinds of graphs, such as [20] for planar graphs and [21] for graphs of bounded valence, or builds indexes [17, 22,23,24,25,26,27], or uses heuristics [28, 29] to speed up the process. The third application is a kind of inexact matching. First, two matching features from two graphs may disagree in some way due to different observations of the same object. Second, two features that are adjacent in one graph may be separated by additional features in another graph obtained from a more detailed description [18]. This leads to a new kind of query, called pattern matching with δ (or graph matching with δ), where δ is a number and an edge in a query graph is allowed to match a path of length ≤ δ in a data graph. More specifically, two adjacent vertices v and v′ in a query graph Q can match two vertices u and u′ in a data graph G with label(v) = label(u) and label(v′) = label(u′) if the distance between u and u′ is ≤ δ. Here, the distance between u and u′ is defined to be the weight of the shortest path connecting these two vertices, denoted as dist(u, u′). Note that when δ = 1 and every edge has weight 1, an edge in Q can only match an edge in G, so the problem reduces to ordinary subgraph isomorphism.

Tong et al. [11] proposed the first algorithm, based on joins, for evaluating such queries with δ = 2. Its worst-case time complexity is bounded by O(∏i|Di|), where Di is the subset of vertices in G with the same label as label(vi) (vi ∈ Q). Cheng et al. [12] extended this algorithm to evaluate the same kind of queries with δ unlimited. For these more general and more useful queries, an index structure called a 2-hop cover [30] is introduced, which facilitates the construction of relations Rij, each corresponding to an edge (vi, vj) ∈ Q, such that for each <u, u′> ∈ Rij the shortest distance between u and u′ is bounded by δ. But its running time is also bounded by O(∏i|Di|).

Cheng’s algorithm has been greatly improved by Zou et al. [13]. They map all vertices in G(V, E) to points in a k-dimensional vector space by using the so-called LLR embedding technique discussed in [31, 32], where k is selected to be O(log²|V|) to save space. In this way, the distance between each pair of vertices can be computed somewhat faster. However, the worst-case time complexity remains unchanged.

The main disadvantage of [12, 13] is their use of 2-hop covers as indexes, which requires first constructing the whole transitive closure of G(V, E) as a preliminary data structure and needs O(|V|³) time and O(|V|²) space in the worst case; this obviously does not scale to the large graphs that are common in contemporary applications. For example, in our experiments, creating the 2-hop cover of the real graph roadNetPA, with 1,088,092 vertices and 1,541,898 edges, on a desktop with a 2.40-GHz Intel Xeon E5-2630 processor and 32 GB RAM took 33.4 h, and the space requirement was about 27.023 GB. For another real graph, roadNetTX, with 1,379,917 vertices and 1,921,660 edges, we were not able to get the result within 3 days.

In this paper, we address this limitation and propose a new method to evaluate pattern matching with δ, based on a new concept, the δ-transitive closure of G, used as an index, together with a filtering method that removes useless data before a join is conducted. In addition, a bit-mapping technique is integrated into our filtering method to speed up the computation. The main ingredients of our approach are the following:

1. (δ-transitive closure) For a weighted (directed or undirected) graph G and a given constant δ, we construct an index over G, called the δ-transitive closure of G and denoted as Gδ, which is a graph with V(Gδ) = V(G) that has an edge between vertices u and u′ if and only if the shortest distance between them is ≤ δ.

2. (relation filtering) Let e = (vi, vj) be an edge in Q, and let Rij be the relation corresponding to <label(vi), label(vj)> such that each <u, u′> in Rij satisfies label(u) = label(vi), label(u′) = label(vj), and the shortest distance from u to u′ in G is ≤ δ. A tuple <u, u′> in Rij is said to be triangle consistent if, for any vk ∈ Q incident to vi, vj, or both, there exists at least one vertex u′′ ∈ Dk such that <u′, u′′> ∈ Rjk (resp. <u′′, u′> ∈ Rkj) and <u, u′′> ∈ Rik (resp. <u′′, u> ∈ Rki). If vi (or vj) is not incident to vk, we assume that a virtual relation between vi (or vj) and vk exists, containing <u, u′′> (or <u′, u′′>) for every u ∈ Di (or u′ ∈ Dj) and every u′′ ∈ Dk. For example, if (vj, vk) is not in Q, then u′ need not be checked against any vertex u′′ in Dk, since <u′, u′′> always exists in the corresponding virtual relation Rjk. Our goal is to remove all tuples that are not triangle consistent before join operations are actually conducted, which enables us to improve efficiency by one order of magnitude or more.

3. (bit mapping) Relation filtering works in a propagational way; that is, eliminating a tuple from one Rij may cause further tuples to be removed from other relations. To expedite this process, a bit-mapping technique is used, which further decreases the time complexity.

The remainder of the paper is organized as follows. In Sect. 2, we give the basic concepts of the problem. In Sect. 3, we discuss related work. Section 4 is devoted to index construction. In Sects. 5 and 6, we discuss relation filtering and join ordering, respectively. In Sect. 7, we report the experimental results. Finally, a short conclusion is set forth in Sect. 8.

2 Problem statements

In this section, we give a formal definition of pattern matching queries with δ over directed weighted graphs G. First, we assume that G is weakly connected, i.e., its undirected version is connected; otherwise, we can decompose G into a collection of weakly connected components (WCCs) and perform pattern matching over each WCC in turn. Second, we use the shortest path length to measure the distance between two vertices. However, our approach is not restricted to this distance function and can also be applied to other metrics.

Definition 2.1

(Data Graph G) A data graph G = (V(G), E(G), Σ) is a vertex-labeled, directed, weighted, and weakly connected graph. Here, V(G) is a set of labeled vertices, E(G) is a set of edges (ordered pairs), each with a nonnegative weight, and Σ is a set of vertex labels. Each vertex u ∈ V(G) is assigned a label l ∈ Σ, denoted as label(u) = l.

Definition 2.2

(Query Graph Q) A query Q is a vertex-labeled and directed graph, Q = (V(Q), E(Q)). Here, V(Q) is a set of labeled vertices, and E(Q) is a set of edges. Each vertex v ∈ V(Q) is also assigned a label l ∈ Σ.

Definition 2.3

(List D[l]) Given a data graph G, we use D[l] to represent a list that includes all those vertices u in G whose labels are l ∈ Σ, i.e., label(u) = l for each u ∈ D[l].

Sometimes we also use the notation D, instead of D[l], if its label l is clear from the context. Let v be a vertex in Q with label(v) = l. We also call D[l] the domain of v.

Definition 2.4

(Edge Query) Given a data graph G, an edge e = (vi, vj) in a query graph Q, and a parameter δ, the evaluation of e reports all pairs <ui, uj> in G that satisfy the following conditions:

(1) label(ui) = label(vi) and label(uj) = label(vj);

(2) the distance from ui to uj in G is not larger than δ, i.e., Dist(ui, uj) ≤ δ.

Definition 2.5

(Pattern Matching Query with δ) Given a data graph G, a query graph Q with n vertices {v1, …, vn}, and a parameter δ, the evaluation of Q reports all matching results <u1, …, un> in G that satisfy the following conditions:

1. label(ui) = label(vi) for 1 ≤ i ≤ n;

2. For any edge (vi, vj) ∈ Q, the distance between ui and uj in G is no larger than δ, i.e., Dist(ui, uj) ≤ δ.
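To make Definition 2.5 concrete, the following minimal Python sketch checks whether a candidate tuple <u1, …, un> satisfies both conditions. It assumes the shortest distances are already available through a callable `dist`; the function name `is_match`, its parameters, and the toy data are hypothetical illustrations, not part of the method described in this paper.

```python
import math


def is_match(candidate, q_labels, q_edges, label, dist, delta):
    """Check the two conditions of Definition 2.5 for candidate = (u1, ..., un).

    Hypothetical helper: q_labels[i] is the label of query vertex v_{i+1};
    q_edges holds pairs (i, j) of 0-based query-vertex indices; label(u)
    returns the label of data vertex u; dist(u, w) returns the shortest
    distance from u to w (math.inf if w is unreachable from u).
    """
    if len(candidate) != len(q_labels):
        return False
    # Condition 1: label(u_i) = label(v_i) for every i
    if any(label(u) != l for u, l in zip(candidate, q_labels)):
        return False
    # Condition 2: Dist(u_i, u_j) <= delta for every query edge (v_i, v_j)
    return all(dist(candidate[i], candidate[j]) <= delta for i, j in q_edges)


# Hypothetical toy data (not taken from Fig. 1)
labels = {8: "A", 9: "B", 10: "C"}
distances = {(8, 9): 3, (9, 10): 2, (10, 8): 5}
lookup = lambda u, w: distances.get((u, w), math.inf)
print(is_match((8, 9, 10), ("A", "B", "C"), [(0, 1), (1, 2), (2, 0)],
               labels.get, lookup, 5))  # True
```

With δ = 4 the same candidate fails, because the query edge (v3, v1) would need Dist(u10, u8) = 5 ≤ 4.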

In Fig. 1a, we show a simple graph, in which the numbers inside the vertices are their IDs and the letters attached to them are their labels. There are altogether 4 labels: Σ = {A, B, C, D}. Each edge is also associated with a number, representing its weight. In Fig. 1b, we show a simple query Q.

Since Q contains three vertices labeled A, B, and C ∈ Σ, respectively, three lists, D[A], D[B], and D[C], are constructed from G. For example, D[A] = {u6, u7, u8}.

In addition, the query Q has 3 query edges, {(v1, v2), (v2, v3), (v3, v1)}. If δ = 5, the pairs matching the query edge (v1, v2) (with labels (A, B)) are {<u6, u3>, <u7, u9>, <u8, u9>, <u8, u5>}, and the matching result of the whole query Q is {<u8, u9, u10>, <u7, u9, u4>}. If δ = 6, the pairs matching (v1, v2) are the same as for δ = 5, but the matching result of the whole query Q is augmented with <u8, u9, u4>.

The common symbols used in this paper are summarized in Table 1.

In a similar way, we can also define the problem for undirected, weighted graphs, by simply removing directions of edges when calculating the distance between two vertices.

Finally, note that if G contains negative-weight cycles, the shortest path from one vertex to another may not be well defined. A negative-weight cycle C is a cycle whose edge weights sum to a negative value; a path passing through C can always be made lighter by traversing C once more. In this paper, negative-weight cycles are not considered.

3 Related work

The first algorithm for handling pattern matching with δ was discussed in [11], in which Tong et al. proposed a method called G-Ray (or Graph X-ray) to find subgraphs that match a query pattern Q either exactly or inexactly. If the matching is inexact, an edge (vi, vj) in Q is allowed to match a path of length 2; that is, vi and vj can match, respectively, two vertices u and u′ that are separated by an intermediate vertex. This algorithm is based on basic graph search, guided by two heuristics:

- Seed selection. Each time G is searched, a set of starting points is determined. Normally, these are vertices in G having the same label as the vertex v with the largest degree in Q.

- Goodness score. A goodness score function g(u) (u ∈ G) is used to guide the search of G so that only subgraphs with good scores are explored.

Although G-Ray can efficiently find best-effort subgraphs that qualify for Q, it is not as general and flexible as graph pattern matching with δ as defined in the previous section.

The method discussed in [12] is general; it uses an index called a 2-hop cover to speed up the computation of the distance between each pair of vertices u and u′ with u ↝ u′, where u ↝ u′ means that u can reach u′ through a path. By the 2-hop labeling method, each vertex u in G is assigned a label L(u) = (Lin(u), Lout(u)), where Lin(u), Lout(u) ⊆ V(G), and u ↝ u′ if and only if Lout(u) ∩ Lin(u′) ≠ ∅. Finding a 2-hop cover of minimum size is NP-hard [6]. Therefore, in practice, only a nearly optimal solution is used [6, 18].

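The vertices in Lin(u) and Lout(u) are called centers. By using the centers, Dist(u, u′) can be computed as follows [12]:

$$\mathrm{Dist}(u, u') = \min_{w \in L_{out}(u) \cap L_{in}(u')} \left( \mathrm{Dist}(u, w) + \mathrm{Dist}(w, u') \right) \qquad (1)$$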

Clearly, the distances between a vertex u and a center w (i.e., Dist(u, w) or Dist(w, u)) can be pre-computed. As shown in [6], the average size of the distance label of a vertex is bounded by O(√|E(G)|). Thus, by using Eq. (1), we need O(√|E(G)|) time to compute the distance for each pair of reachable vertices in the worst case.
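The 2-hop distance lookup computes Dist(u, u′) as the minimum of Dist(u, w) + Dist(w, u′) over the centers w shared by Lout(u) and Lin(u′). A minimal Python sketch follows, assuming each label is stored as a dictionary from center to its pre-computed distance; the function name `two_hop_distance` and this storage layout are hypothetical, not the data structures of [12].

```python
import math


def two_hop_distance(l_out, l_in, u, v):
    """Dist(u, v) via 2-hop labels (hypothetical layout).

    l_out[u] maps each center w in Lout(u) to Dist(u, w);
    l_in[v] maps each center w in Lin(v) to Dist(w, v).
    Returns math.inf when the labels share no center (v unreachable from u).
    """
    out_label, in_label = l_out[u], l_in[v]
    # Scan the smaller label and probe the larger one by dictionary lookup
    if len(out_label) <= len(in_label):
        common = (w for w in out_label if w in in_label)
    else:
        common = (w for w in in_label if w in out_label)
    return min((out_label[w] + in_label[w] for w in common), default=math.inf)


# Toy labels: every vertex is a center of itself at distance 0
l_out = {"a": {"a": 0, "c": 2}, "b": {"b": 0}}
l_in = {"a": {"a": 0}, "b": {"b": 0, "c": 3}}
print(two_hop_distance(l_out, l_in, "a", "b"))  # 5, via center c
```

Scanning the smaller label keeps the cost proportional to the label sizes, which is why the average label size bounds the lookup time.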

Based on Eq. (1), a join algorithm was discussed in [12]. The main idea of this algorithm is as follows. Based on the 2-hop labeling method [6], for each center w, two clusters F(w) and T(w) of vertices are defined such that, via w, every vertex u in F(w) can reach every vertex u′ in T(w). An index structure is then built on these clusters: for each vertex label pair (l, l′), the centers w such that F(w) contains a vertex labeled l and T(w) contains a vertex labeled l′ are stored in a W-table. Thus, when a query Q is submitted, for each edge (v, v′) in Q labeled, for example, with (A, B), all centers w are retrieved such that, according to the W-table, F(w) contains at least one vertex labeled A and T(w) contains at least one vertex labeled B. The Cartesian product of the vertices labeled A in F(w) and the vertices labeled B in T(w) forms matches of (v, v′) in Q. This operation is called an R-join (reachability join). When Q has more than one edge, a series of R-joins needs to be conducted. The worst-case time complexity of this method is bounded by O(∏i|Di|), where Di is the subset of vertices in G with the same label as label(vi) with vi ∈ Q (i = 1, …, n).

Zou et al. [13] extended the idea of [12] by proposing a general framework for handling pattern matching queries with δ. In this method, for each edge (vi, vj) in Q with labels (A, B), a D-join (distance-based join) is conducted to get all the matches in G according to Eq. (2), where Di and Dj are the lists for the two vertices vi and vj in Q, and u and u′ are vertices in the two lists, respectively:

$$D_i \bowtie_{\delta} D_j = \{ \langle u, u' \rangle \mid u \in D_i,\; u' \in D_j,\; \mathrm{Dist}(u, u') \le \delta \} \qquad (2)$$

In order to reduce the cost of this join, the so-called LLR embedding technique discussed in [31, 32] is utilized to map all vertices of G to points of a k-dimensional vector space, where k is selected to be O(log²|V(G)|) to save space. Then, the Chebyshev distance [33] between each pair of points u and u′ in the vector space, denoted L∞(u, u′), is computed. In comparison with the approach discussed in [12], this method is more efficient since the Chebyshev distance is easy to calculate. Furthermore, the k-medoids algorithm [34] is used to divide each Di (more exactly, the points corresponding to Di) into clusters Cik (k = 1, …, l for some l). For each cluster Cik, a center cik is determined, and the radius of Cik, denoted r(Cik), is defined to be the maximal L∞-distance between cik and a point in Cik. During a D-join between lists Di and Dj, these clusters can be used to reduce computation by checking whether L∞(cik, cjk′) > r(Cik) + r(Cjk′) + δ. If so, the corresponding join (i.e., Cik ⋈ Cjk′) need not be carried out, since by the triangle inequality the L∞-distance between any two points u ∈ Cik and u′ ∈ Cjk′ must be larger than δ. By using this main pruning method along with Neighbor Area Pruning and Triangle Inequality Pruning [13], candidate matching results are obtained, which are further checked by the 2-hop labeling technique to get the final results. In addition, when Q has multiple edges, another procedure, called the MD-join (distance-based multi-way join), is invoked to filter out unmatched vertices each time a new query edge is visited. The worst-case time complexity of this method is the same as that of [12].
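The cluster-level pruning test of [13] can be sketched in a few lines of Python. The sketch assumes the vertices have already been embedded as k-dimensional points and grouped into clusters (the LLR embedding and k-medoids steps are not shown); the function names `chebyshev`, `radius`, and `can_prune` are hypothetical.

```python
def chebyshev(p, q):
    """L-infinity (Chebyshev) distance between two k-dimensional points."""
    return max(abs(a - b) for a, b in zip(p, q))


def radius(center, points):
    """r(C): the maximal L-infinity distance between the center and a member."""
    return max(chebyshev(center, p) for p in points)


def can_prune(c_i, r_i, c_j, r_j, delta):
    """True if no pair (u in C_i, u' in C_j) can be within L-infinity distance delta.

    By the triangle inequality, L(c_i, c_j) <= r_i + L(u, u') + r_j, so
    L(c_i, c_j) > r_i + r_j + delta forces L(u, u') > delta for every pair.
    """
    return chebyshev(c_i, c_j) > r_i + r_j + delta


c_i, c_j = (0, 0), (10, 0)
r_i = radius(c_i, [(1, 0), (0, 1)])  # 1
r_j = radius(c_j, [(10, 2)])         # 2
print(can_prune(c_i, r_i, c_j, r_j, 5))  # True: 10 > 1 + 2 + 5
```

With δ = 7 the test no longer applies (10 is not larger than 10), so the two clusters would have to be joined point by point.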

Although a lot of work has been done on subgraph search [1,2,3,4], shortest path queries [5], and reachability queries [6,7,8], none of it can be used or extended to evaluate pattern matching queries with δ, since all the pruning techniques developed for these problems are based on exact edge matching, i.e., δ = 1. In addition, graph simulation is a kind of graph matching quite different from graph isomorphism [35]: a pattern vertex is allowed to map to multiple vertices in a data graph, so the problem can be solved in polynomial time. More recently, the so-called bounded simulation has been discussed in [36, 37], which imposes additional constraints on a match yet can still be solved in cubic time [37]. The techniques developed for both of them cannot be employed for our purpose.

4 Construction of indexes

In this section, we begin to describe our method. In a nutshell, our algorithm comprises three steps. In the first step, we construct offline a δ-transitive closure of G for a certain δ-value as an index, from which a set of binary relations, as illustrated in Fig. 2b, is constructed. (In general, the value of δ can be determined from historical query logs.) Then, when a query Q is submitted, for each edge e = (vi, vj) in Q, the relation corresponding to <label(vi), label(vj)>, referred to as Rij, is loaded from disk into main memory. In the second step, we perform relation filtering. Finally, in the third step, a series of equi-joins is conducted on the reduced relations to get the final results.

In the subsequent discussion, we mainly concentrate on index construction. The second and third steps are deferred to Sects. 5 and 6, respectively.

4.1 Constructing δ-transitive closures

As with [12], we will construct an index for graphs to speed up the computation. However, instead of 2-hop covers, we will construct δ-transitive closures for them.

Definition 4.1

(δ-Transitive closures) Let G = (V, E, Σ) be a directed, weighted graph. Let δ > 0 be a positive number. A δ-transitive closure of G, denoted Gδ, is a graph such that V(Gδ) = V, and there is an edge <u, u′> ∈ E(Gδ) if and only if dist(u, u′) ≤ δ.

Example 1

Consider the graph shown in Fig. 1a again. For δ = 5, its δ-transitive closure G5 is the graph shown in Fig. 2a, which can be stored as the set of binary relations shown in Fig. 2b, in which each tuple is a pair of vertices annotated, for computational convenience, with their shortest distance (≤ 5).

This concept is motivated by the observation that δ tends to be small in practice. For instance, in a social network where each vertex represents a person and each edge represents a relationship such as parent-of, brother-of, sister-of, or uncle-of, if the weight of each edge is set to 1, then setting δ = 6 or larger is not very meaningful when checking whether a person is a relative of somebody else. As another example, consider an application in forensics: a detective may want to investigate an individual who is related to a known criminal through relationships such as money laundering and human trafficking. Here, setting δ > 5 is unlikely to help the detective find significant evidence.

Clearly, for different applications, we can adjust the value of δ used to pre-compute Gδ. One choice for this task is an algorithm for all-pairs shortest paths [38]. However, since such an algorithm stores its output in a matrix, it is not suitable for very large graphs. In this case, we can instead run a single-source shortest path algorithm [39] to generate Gδ. Specifically, the following two steps are conducted:

1. Remove from G(V, E) all edges whose weight is > δ.

2. Run the single-source shortest path algorithm |V| times, each time with a different vertex as the source. (The algorithm is slightly modified so that the process stops as soon as every value in its heap is larger than δ.)

If all edge weights are nonnegative, the best algorithm for this problem uses a Fibonacci heap and needs only O(|V|² lg δ + |V||E|) time [39].
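The two construction steps can be sketched in Python as a truncated Dijkstra search run once per source. This is a minimal sketch under stated assumptions: vertices are numbered 0..n−1, edges are (u, v, w) triples with w ≥ 0, and Python's `heapq` binary heap stands in for the Fibonacci heap, so the sketch does not attain the bound stated above. The function name `delta_transitive_closure` is hypothetical.

```python
import heapq
from collections import defaultdict


def delta_transitive_closure(n, edges, delta):
    """Return {(u, v): dist(u, v)} for all u != v with dist(u, v) <= delta.

    n: number of vertices, numbered 0..n-1; edges: iterable of (u, v, w), w >= 0.
    """
    adj = defaultdict(list)
    for u, v, w in edges:
        if w <= delta:                 # Step 1: drop edges heavier than delta
            adj[u].append((v, w))
    closure = {}
    for s in range(n):                 # Step 2: one truncated Dijkstra per source
        dist = {s: 0}
        heap = [(0, s)]
        while heap:
            d, u = heapq.heappop(heap)
            if d > delta:              # smallest key exceeds delta: stop this source
                break
            if d > dist[u]:
                continue               # stale heap entry
            if u != s:
                closure[(s, u)] = d
            for v, w in adj[u]:
                nd = d + w
                if nd < dist.get(v, float("inf")):
                    dist[v] = nd
                    heapq.heappush(heap, (nd, v))
    return closure


print(delta_transitive_closure(4, [(0, 1, 2), (1, 2, 2), (2, 3, 3), (0, 3, 10)], 5))
```

For this toy graph the edge (0, 3) of weight 10 is dropped in step 1, and the pair (0, 3) is excluded because its shortest distance is 7 > 5.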

In comparison with 2-hop covers, the main advantage of δ-transitive closures is threefold:

- Less space is required to store a δ-transitive closure than a 2-hop cover as long as δ is not set too large.

- Much less time is needed to create a δ-transitive closure of G than a 2-hop cover of G. This is because generating a 2-hop cover requires first creating the entire transitive closure of G, which takes O(|V|³) time in the worst case, much higher than the time complexity of creating Gδ.

- No online time is needed to form the Rij's, whereas methods based on 2-hop covers have to generate the Rij's on the fly, from the 2-hop data structures for the relevant edges in Q, when a query Q arrives, which can greatly delay query responses.

4.2 Relation signatures and vertex counters

For each relation in a δ-transitive closure, we also establish two extra data structures for efficiency: the first is a pair of bit strings associated with each Rij, called the relation signature of Rij; the second is a set of counters for each single vertex in G.

4.2.1 Relation signatures

Consider G(V, E, Σ), and let Σ = {l1, …, lk}. We divide all the vertices into |Σ| disjoint lists D[l1], …, D[lk] such that D[l1] ∪ … ∪ D[lk] = V, D[li] ∩ D[lj] = ∅ for i ≠ j, and all the vertices in each D[li] have the same label li. Then, we sort the vertices in each D[li] in ascending order of their vertex IDs and refer to each vertex by its order number. For example, for the graph shown in Fig. 1a, we have D[A] = {u6, u7, u8}, so u6 is the first vertex, u7 the second, and u8 the third in D[A]. In this way, a vertex in G can be referred to as a pair (l, i), where l is a label and i is the order number of the vertex in D[l]. For example, u6 can be represented as (A, 1). Let R be a relation in Gδ corresponding to a pair of vertex labels (l, l′), and denote by R[1] and R[2] the sets of vertices in its first and second columns, respectively. Then, the vertices in R[1] (resp. R[2]) can be represented by a bit string s of length |D[l]| (resp. |D[l′]|) such that s[i] = 1 if the ith vertex of D[l] (resp. D[l′]) appears in R[1] (resp. R[2]); otherwise, s[i] = 0. Let s and s′ be the bit strings for R[1] and R[2], respectively. We call S = [s | s′] the signature of R, and write R·S[1] = s and R·S[2] = s′.
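The construction of order numbers and relation signatures can be sketched in Python, assuming vertex IDs are integers and each bit string is stored as a Python integer in which bit i−1 plays the role of s[i]. The helper names `order_numbers`, `signature`, and `as_bits`, as well as the toy labels, are hypothetical.

```python
def order_numbers(labels):
    """Map each vertex ID to (label, i), where i is its 1-based position in
    D[label] and every list D[l] is sorted by ascending vertex ID."""
    position, seen = {}, {}
    for v in sorted(labels):
        l = labels[v]
        seen[l] = seen.get(l, 0) + 1
        position[v] = (l, seen[l])
    return position


def signature(relation, position):
    """Return (R.S[1], R.S[2]) as ints; bit i-1 of each int stands for s[i]."""
    s = s2 = 0
    for u, w in relation:
        s |= 1 << (position[u][1] - 1)
        s2 |= 1 << (position[w][1] - 1)
    return s, s2


def as_bits(x, length):
    """Render an int signature as the string s[1] s[2] ... s[length]."""
    return "".join("1" if (x >> i) & 1 else "0" for i in range(length))


# Hypothetical toy labels: D[A] = [6, 7, 8], D[B] = [3, 5, 9]
pos = order_numbers({3: "B", 5: "B", 6: "A", 7: "A", 8: "A", 9: "B"})
s, s2 = signature([(6, 3), (8, 9), (8, 5)], pos)
print(as_bits(s, 3), as_bits(s2, 3))  # 101 111
```

Storing signatures as machine integers lets the later filtering steps combine two columns with a single bitwise AND.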

Example 2

Continuing Example 1, in Fig. 3a we show all the lists, each associated with a label in Gδ. In Fig. 3b, we redraw the relations shown in Fig. 2b with each vertex replaced by its order number in the corresponding list. Their signatures are shown in Fig. 3c.

4.2.2 Counters

By using relation signatures, we are able to indicate whether a vertex appears in a column of a certain relation. However, the information on how many times it appears in that column is missing. So, we associate each vertex u in a D[l] with a set of counters, one for each column of each relation in which it occurs. Assume that u is a vertex appearing in the first column of some Ri. Then, it has a counter, denoted u·Ci[1], recording how many times it appears in that column of Ri. In general, if it appears in k columns, it is associated with k counters. For example, u6 (represented by (A, 1)) appears in 6 columns: R1[1], R3[1], R4[2], R6[2], R8[1], and R8[2] (see Fig. 3b). Thus, u6 is associated with 6 counters: u6·C1[1] = 1, u6·C3[1] = 1, u6·C4[2] = 1, u6·C6[2] = 1, u6·C8[1] = 1, and u6·C8[2] = 1. But u8 (represented by (A, 3)) appears in only 5 columns. Thus, it has 5 counters: u8·C1[1] = 2, u8·C4[2] = 1, u8·C6[2] = 1, u8·C8[1] = 1, and u8·C8[2] = 1.
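The vertex counters can be built in one pass over the relations. In this minimal Python sketch, `counters[u][(r, c)]` plays the role of u·Cr[c]; the function name `build_counters` and the toy relations are hypothetical and do not reproduce Fig. 3b.

```python
from collections import Counter


def build_counters(relations):
    """counters[u][(r, c)] = number of tuples of relation r with u in column c.

    relations maps a relation name to a list of (u, u') tuples; columns are 1 and 2.
    A vertex gets one counter per relation column in which it occurs.
    """
    counters = {}
    for r, tuples in relations.items():
        for t in tuples:
            for c in (1, 2):
                counters.setdefault(t[c - 1], Counter())[(r, c)] += 1
    return counters


# Hypothetical toy relations (not the ones in Fig. 3b)
counters = build_counters({"R1": [(6, 3), (8, 9), (8, 5)], "R8": [(6, 8), (8, 6)]})
print(counters[8][("R1", 1)])  # 2: vertex 8 appears twice in column 1 of R1
```

As in the text, a vertex occurring in both columns of the same relation (here R8) receives two separate counters.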

Obviously, both relation signatures and counters for vertices can be established offline as part of indexes.

5 Relation filtering

In this section, we present our algorithm for relation filtering. First, we give the algorithm in Sect. 5.1. Then, we prove its correctness and analyze its computational complexity in Sect. 5.2.

5.1 Algorithm description

When a query Q with parameter δ′ (δ′ ≤ δ) arrives, a simple way to evaluate it is as follows. First, we locate all the relevant relations in Gδ. Then, for each edge (vi, vj) ∈ Q, we remove all tuples <u, u′> with dist(u, u′) > δ′ from the corresponding relation Rij = R(label(vi), label(vj)). Finally, we join these Rij's to form the final result.
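The simple evaluation strategy can be sketched in Python: filter each relation by δ′ and then join the filtered relations by backtracking over the query edges. This is a nested-loop join chosen for brevity, not the join ordering of Sect. 6; the function name `naive_evaluate`, the relation layout keyed by query-edge index pairs, and the toy data are hypothetical.

```python
def naive_evaluate(n, q_edges, relations, delta_prime):
    """Filter each R_ij by delta' and join the results by backtracking.

    n: number of query vertices, indexed 0..n-1 (each assumed to lie on some edge);
    q_edges: list of (i, j); relations[(i, j)]: dict {(u, u2): dist} from G_delta.
    Returns a sorted list of n-tuples (u_0, ..., u_{n-1}).
    """
    # Keep only tuples whose stored shortest distance is within delta'
    filtered = {e: [t for t, d in relations[e].items() if d <= delta_prime]
                for e in q_edges}
    results = []

    def extend(assign, k):
        if k == len(q_edges):              # every query edge has been matched
            if len(assign) == n:
                results.append(tuple(assign[x] for x in range(n)))
            return
        i, j = q_edges[k]
        for u, u2 in filtered[(i, j)]:
            # The tuple must agree with vertices bound by earlier edges
            if assign.get(i, u) == u and assign.get(j, u2) == u2:
                extend({**assign, i: u, j: u2}, k + 1)

    extend({}, 0)
    return sorted(results)


# Hypothetical toy relations for a triangle query v0 -> v1 -> v2 -> v0
rels = {(0, 1): {(8, 9): 3, (7, 9): 4, (6, 3): 2},
        (1, 2): {(9, 10): 2, (9, 4): 5, (3, 2): 1},
        (2, 0): {(10, 8): 5, (4, 7): 5, (4, 8): 6}}
print(naive_evaluate(3, [(0, 1), (1, 2), (2, 0)], rels, 5))  # [(7, 9, 4), (8, 9, 10)]
```

Every surviving tuple is still tried during the join, which is exactly the work that relation filtering aims to cut down.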

However, we can do better by filtering all those tuples which cannot contribute to the final result before the joins are carried out. To this end, we need a new concept of triangle consistency.

Definition 5.1

(Triangle consistency) Let Q be a query with parameter δ′, and let (vi, vj) be an edge in Q. A tuple t = <u, u′> ∈ Rij in Gδ with δ ≥ δ′ is said to be triangle consistent with respect to a vertex vk (k ≠ i, j) in Q if, for the relations Rik (or Rki) and Rjk (or Rkj) in Gδ, the applicable one of the following conditions is satisfied:

1. if vk is incident to vj but not to vi, then there exists u′′ such that <u′, u′′> ∈ Rjk if (vj, vk) ∈ Q, or <u′′, u′> ∈ Rkj if (vk, vj) ∈ Q;

2. if vk is incident to vi but not to vj, then there exists u′′ such that <u, u′′> ∈ Rik if (vi, vk) ∈ Q, or <u′′, u> ∈ Rki if (vk, vi) ∈ Q;

3. if vk is incident to both vi and vj, then there exists u′′ such that

   - <u, u′′> ∈ Rik and <u′′, u′> ∈ Rkj if (vi, vk), (vk, vj) ∈ Q; or

   - <u, u′′> ∈ Rik and <u′, u′′> ∈ Rjk if (vi, vk), (vj, vk) ∈ Q; or

   - <u′′, u> ∈ Rki and <u′′, u′> ∈ Rkj if (vk, vi), (vk, vj) ∈ Q; or

   - <u′′, u> ∈ Rki and <u′, u′′> ∈ Rjk if (vk, vi), (vj, vk) ∈ Q.

In each of the above three cases, u′′ is said to be consistent with t. The motivation of this concept is that if <u, u′> in Rij is not triangle consistent with some vk (k ≠ i, j) in Q incident to vi or vj, it cannot be part of any answer to Q. Thus, <u, u′> can be part of an answer only if it is triangle consistent with every vertex in Q incident to vi, vj, or both; in this case, we say that <u, u′> is triangle consistent. Notice that if neither vi nor vj is incident to any other vertex in Q, all the tuples in Rij are trivially considered to be triangle consistent.
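Definition 5.1 can be checked directly, if naively, with Python sets. The sketch below assumes relations are stored as sets of pairs keyed by the directed query edge (i, j) they belong to; it scans whole relations instead of using the bit-mapping procedure described later, and the names `neighbors_via` and `triangle_consistent` are hypothetical.

```python
def neighbors_via(relations, x, k, w):
    """Vertices u'' of D_k linked to data vertex w (matching v_x) through the
    relation of the query edge between v_x and v_k, respecting its direction.
    Returns None when v_x and v_k are not adjacent in Q (virtual relation)."""
    if (x, k) in relations:
        return {b for a, b in relations[(x, k)] if a == w}
    if (k, x) in relations:
        return {a for a, b in relations[(k, x)] if b == w}
    return None


def triangle_consistent(relations, i, j, k, t):
    """Is t = <u, u'> in R_ij triangle consistent with respect to v_k?"""
    u, u2 = t
    via_i = neighbors_via(relations, i, k, u)
    via_j = neighbors_via(relations, j, k, u2)
    if via_i is None and via_j is None:
        return True                  # v_k is adjacent to neither v_i nor v_j
    if via_i is None:
        return bool(via_j)           # case 1: only the edge between v_j and v_k
    if via_j is None:
        return bool(via_i)           # case 2: only the edge between v_i and v_k
    return bool(via_i & via_j)       # case 3: one u'' must serve both edges


# Hypothetical toy relations for the directed triangle v0 -> v1 -> v2 -> v0
rels = {(0, 1): {(8, 9)}, (1, 2): {(9, 10), (3, 2)}, (2, 0): {(10, 8)}}
print(triangle_consistent(rels, 0, 1, 2, (8, 9)))  # True, with u'' = 10
print(triangle_consistent(rels, 1, 2, 0, (3, 2)))  # False
```

The second call mirrors the situation of Example 3 below: no tuple leaves the second vertex toward the domain of v0, so the tuple cannot be part of any answer.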

The following example illustrates this concept.

Example 3

Consider the query with parameter δ′ = 5 shown in Fig. 1b. To evaluate this query against the graph shown in Fig. 1a, we will first load three relations into main memory: R1, R5, and R6 (shown in Fig. 3b) from Gδ. For illustration, we show the data in the three relations as a graph in Fig. 4a.

In this graph, the tuple represented by edge (u8, u9) ∈ R12 (= R1 in Fig. 3b) is triangle consistent with respect to v3 in Q. But edge (u3, u2) ∈ R23 (= R5 in Fig. 3b) is not triangle consistent with v1: Q has an edge (v3, v1) labeled <C, A>, but there is no edge going from u2 to a vertex in D[label(v1)] = D[A]. (Note that edge (u6, u2) in Fig. 4a points in the reverse direction of edge (v3, v1) in Q.)

In fact, only the tuples represented by the edges in the subgraph shown in Fig. 4b are triangle consistent; all the other edges are not. This example shows that relation filtering can dramatically decrease the sizes of relations. Our purpose is to find an efficient way to remove all triangle-inconsistent data from the relevant relations before the joins over them are actually performed.

In the following, we will first devise a procedure to check the triangle consistency of each tuple t = <u, u′> in every Rij with respect to a single vertex vk (k ≠ i, j) in Q. Then, a general algorithm for removing all the useless data will be presented.

Below is the formal description of these algorithms. Besides the data structures described in Sect. 4.2, each tuple t in every relation Rij (corresponding to (vi, vj) ∈ Q) is additionally associated with two extra data structures for efficient computation:

- α[t, k]: the number of vertices in D[k] (for each k ≠ i, j) that are consistent with t. Initially, each α[t, k] = 0.

- β[t]: the set of all vertices u in D[k] (for each k ≠ i, j) that are consistent with t. Each element of β[t] is written as (k, u), indicating that it is a vertex in D[k]. Initially, each β[t] = ∅ (empty).

In addition, a global variable L is used to store all the tuples found not to be triangle consistent with some v in Q, to facilitate the propagation of inconsistency.

The above algorithm provides the general control for checking the triangle consistency of all the tuples in Rij (the relation for edge (vi, vj) ∈ Q) with respect to a certain vertex vk ∈ Q. We distinguish between two cases: (1) vk is incident to only one of the two vertices vi and vj (see line 1), and (2) vk is incident to both vi and vj (see line 7). Case 1 has four sub-cases (see lines 2, 3, 4, and 5, respectively). For each of them, a sub-procedure check-1( ) is invoked (see line 6), which performs three tasks:

- compute the bitwise AND of the corresponding relation signatures,

- update the counters of the corresponding vertices according to the result of the bitwise AND, and

- update the relevant relation signatures themselves.

For case 2, we also distinguish among four sub-cases (see lines 8, 9, 10, and 11, respectively), but for each of them we call a different sub-procedure, check-2( ), which performs the same tasks as check-1( ) in a different way. Finally, in line 12, Δ-consistency is invoked to check the triangle consistency involving vi, vj, and vk.

As mentioned above, check-1( ) first calculates s = R1·S[b] ∧ R2·S[a] (see line 1), where R1 and R2 represent the two relations corresponding to two edges incident to a common vertex in Q. Then, based on s, it updates R1 and R2 as described above by calling tuple-removing( ), given below.

Note also the modulo operation in line 2, y = (x mod 2) + 1, which gives y = 1 if x = 2 and y = 2 if x = 1.

In the following algorithm, R(l, a) denotes the value in the l-th tuple and a-th column of relation R. For a tuple t, t(i) (i = 1, 2) denotes its i-th value.

In tuple-removing(s, R, R′, a, b, c), we first eliminate the inconsistent tuples from R according to s (lines 2–5). Then, for each removed tuple <x, y>, we decrement the counters associated with x and y, respectively (see lines 6 and 8). If the counter of a vertex becomes 0, the bit for that vertex in the corresponding relation signature is also set to 0 (see lines 7 and 9).
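To make the interplay of signatures, counters, and tuple removal concrete, the following is a minimal Python sketch of check-1( ) with a tuple-removing step. It is not the authors' implementation: the class `Relation` and the function `remove_unsupported` are hypothetical names, each data vertex is assumed to be encoded as its bit position within its domain D[k], and Python integers stand in for the bit-vector relation signatures.

```python
class Relation:
    """Relation for one query edge. Columns are numbered 1 and 2 as in the text;
    each data vertex is an integer giving its bit position in its domain D[k]."""

    def __init__(self, tuples):
        self.tuples = set(tuples)
        self.sig = {1: 0, 2: 0}    # relation signatures S[1] and S[2]
        self.cnt = {1: {}, 2: {}}  # cnt[col][u]: occurrences of u in column col
        for t in self.tuples:
            for col in (1, 2):
                u = t[col - 1]
                self.cnt[col][u] = self.cnt[col].get(u, 0) + 1
                self.sig[col] |= 1 << u

    def remove(self, t):
        """Delete tuple t, decrement its counters, clear bits that drop to 0."""
        self.tuples.remove(t)
        for col in (1, 2):
            u = t[col - 1]
            self.cnt[col][u] -= 1
            if self.cnt[col][u] == 0:
                self.sig[col] &= ~(1 << u)


def remove_unsupported(s, rel, col):
    """Remove every tuple of rel whose vertex in column col has no bit set in s."""
    doomed = [t for t in rel.tuples if not s & (1 << t[col - 1])]
    for t in doomed:
        rel.remove(t)
    return doomed


def check_1(r1, b, r2, a):
    """Column b of r1 and column a of r2 refer to the same query vertex."""
    s = r1.sig[b] & r2.sig[a]  # bitwise AND of the two relation signatures
    return remove_unsupported(s, r1, b) + remove_unsupported(s, r2, a)
```

For example, with `R1 = Relation({(0, 1), (2, 1)})` and `R2 = Relation({(1, 3), (0, 0)})` sharing their first column, `check_1(R1, 1, R2, 1)` computes s = 0b101 & 0b011 = 0b001, removes (2, 1) from R1 and (1, 3) from R2, and clears the signature bits whose counters drop to 0.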

In check-2( ), three bitwise AND operations are needed, each corresponding to a pair of adjacent edges within a triangle (see lines 1, 4, and 7). Each of them is done simply by calling check-1( ) (see lines 3, 6, and 12). Note, however, the difference between the third case (line 7) and the first two cases (lines 1 and 4): the third case distinguishes four sub-cases (lines 8–11), while each of the first two handles only two sub-cases (see lines 2 and 5).

We now give the formal description of Δ-consistency(i, j, k), which is invoked in line 12 of tcControl( ). For simplicity, we only show the algorithm for the case (vj, vk), (vk, vi) ∈ Q. In this case, for each l with s[l] = 1, where s = Rij·S[2] ∧ Rjk·S[1], we check, for each pair of tuples <u′, l> ∈ Rij and <l, u′′> ∈ Rjk, whether <u′′, u′> ∈ Rki. For each of the other three cases (i.e., (vi, vk), (vj, vk) ∈ Q; (vi, vk), (vk, vj) ∈ Q; and (vk, vi), (vk, vj) ∈ Q), a similar process can be established.

The above algorithm mainly comprises two steps. In the first step (lines 1–7), we compute s := Rij·S[2] ∧ Rjk·S[1] and then scan s bit by bit. For each s[l] = 1, we check, for each pair of tuples <u′, l> ∈ Rij and <l, u′′> ∈ Rjk, whether <u′′, u′> appears in Rki. If such a tuple exists, α[ ] and β[ ] are updated accordingly (lines 6–7). In the second step (lines 8–15), for each α[t, x] = 0 (for some x), we remove t from the corresponding relation (see lines 10–12) and update the relevant counters and relation signatures accordingly (see lines 13–14).
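The first step can be sketched in Python for the case (vj, vk), (vk, vi) ∈ Q. This is an illustration under simplifying assumptions, not the paper's listing: relations are plain sets of vertex pairs, a set intersection replaces the bitwise AND Rij·S[2] ∧ Rjk·S[1], each tuple is tagged with its query edge so that equal pairs in different relations stay distinct, and the name `delta_consistency` is hypothetical. Instead of removing tuples, the sketch returns the list L of tuples that close no triangle.

```python
from collections import defaultdict


def delta_consistency(r_ij, r_jk, r_ki, i, j, k):
    """Triangle check for the case (v_i, v_j), (v_j, v_k), (v_k, v_i) in Q.

    Each relation is a set of pairs <first, second> of data vertices. A tuple
    is identified by (query edge, pair). Returns alpha, beta and the list L
    of tuples for which no triangle was found.
    """
    alpha = defaultdict(int)  # alpha[(tuple_id, x)]: supporting vertices for v_x
    beta = defaultdict(set)   # beta[tuple_id]: set of (x, supporting vertex)
    # Stand-in for R_ij.S[2] AND R_jk.S[1]: candidate data vertices for v_j
    s = {l for (_, l) in r_ij} & {l for (l, _) in r_jk}
    ending_at, starting_at = defaultdict(list), defaultdict(list)
    for t in r_ij:
        ending_at[t[1]].append(t)    # tuples <u', l> of R_ij, grouped by l
    for t in r_jk:
        starting_at[t[0]].append(t)  # tuples <l, u''> of R_jk, grouped by l
    for l in s:
        for t1 in ending_at[l]:
            for t2 in starting_at[l]:
                t3 = (t2[1], t1[0])  # <u'', u'> must appear in R_ki
                if t3 not in r_ki:
                    continue
                id1, id2, id3 = ((i, j), t1), ((j, k), t2), ((k, i), t3)
                alpha[(id1, k)] += 1
                beta[id1].add((k, t2[1]))
                alpha[(id2, i)] += 1
                beta[id2].add((i, t1[0]))
                alpha[(id3, j)] += 1
                beta[id3].add((j, l))
    rels = (((i, j), r_ij, k), ((j, k), r_jk, i), ((k, i), r_ki, j))
    L = [(e, t) for e, rel, x in rels for t in sorted(rel)
         if alpha[((e, t), x)] == 0]
    return alpha, beta, L
```

Applied to the subset of tuples used in Example 4 below (t8, t11, t9 in R23; t12, t5 in R31; t10, t7 in R12) via `delta_consistency(R23, R31, R12, 2, 3, 1)`, the sketch yields, for instance, β[t11] = {(1, u7)} and β[t5] = {(2, u9)}, and only t8 ends up in L.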

Example 4

When Δ-consistency(2, 3, 1) is applied to the three edges of Q shown in Fig. 1b against the δ-transitive closure shown in Fig. 3b, three relations, R5, R6, and R1, are loaded into main memory, and the following computation is conducted. (Since R5, R6, and R1 correspond to the three edges in Q, they are also referred to as R23, R31, and R12, respectively.)

-

1.

s := R23·S[2] ∧ R31·S[1] = R5·S[2] ∧ R6·S[1] = 111 ∧ 011 = 011.

-

2.

s[2] = 1. For t8 = <2, 2> (<u5, u4>) ∈ R23 = R5 and t12 = <2, 2> (<u4, u7>) ∈ R31 = R6, we check whether <2, 2> (<u7, u5>) is in R12 = R1. Since <2, 2> (<u7, u5>) ∉ R12, lines 6 and 7 are not executed; therefore, at this point, β[t8] = β[t12] = ϕ and α[t8, 1] = α[t12, 2] = 0.

For t11 = <3, 2> (<u9, u4>) ∈ R23 = R5 and t12 = <2, 2> (<u4, u7>) ∈ R31 = R6, we will check whether <2, 3> (<u7, u9>) is in R12 = R1. Since t10 = <2, 3> (<u7, u9>) ∈ R12, lines 6 and 7 will be executed and therefore β[t11] = {(1, u7)}, β[t12] = {(2, u9)}, β[t10] = {(3, u4)}; and α[t11, 1] = α[t12, 2] = α[t10, 3] = 1.

-

3.

s[3] = 1. For t9 = <3, 3> (<u9, u10>) ∈ R23 = R5 and t5 = <3, 3> (<u10, u8>) ∈ R31 = R6, we will check whether <3, 3> (<u8, u9>) is in R12 = R1. Since t7 = <3, 3> (<u8, u9>) ∈ R12, lines 6 and 7 will be executed and therefore β[t9] = {(1, u8)}, β[t5] = {(2, u9)}, β[t7] = {(3, u10)}; and α[t9, 1] = α[t5, 2] = α[t7, 3] = 1.

After these steps, we have:

-

α[t5, 2] = 1, β[t5] = {(2, u9)}; α[t2, 3] = 0, β[t2] = ϕ

-

α[t7, 3] = 1, β[t7] = {(3, u10)}; α[t3, 1] = 0, β[t3] = ϕ

-

α[t9, 1] = 1, β[t9] = {(1, u8)}; α[t4, 2] = 0, β[t4] = ϕ

-

α[t10, 3] = 1, β[t10] = {(3, u4)}; α[t6, 3] = 0, β[t6] = ϕ

-

α[t11, 1] = 1, β[t11] = {(1, u7)}; α[t8, 1] = 0, β[t8] = ϕ

-

α[t12, 2] = 1, β[t12] = {(2, u9)}

Then, all the tuples whose α-values equal 0 are first stored in L (see line 9) and checked to propagate inconsistency before they are finally removed (see lines 10–13). Accordingly, the counters of the corresponding vertices, and possibly the relation signatures, are updated (see lines 13–14).

In this way, the graph shown in Fig. 4a will be reduced to the graph shown in Fig. 4b.

Based on tcControl( ), a general relation filtering algorithm, rFiltering( ), can easily be designed. It works in two phases.

In the first phase (lines 1–5), we check the triangle consistency for each edge in Q. In the second phase (lines 6–14), we propagate inconsistency among all the triangles that share an edge. To see this, consider the larger query shown in Fig. 5a. By checking the consistency with respect to triangle Δ143, tuple t5 ∈ R31 (= R6 shown in Fig. 3c) will be removed. Then, (2, u9) in β[t5] is checked (see line 9), which leads to the elimination of t7 = <u8, u9> from R12 (= R1) and t9 = <u9, u10> from R23 (= R5) (see lines 11–14). For this query, only five edges, <u7, u9>, <u9, u4>, <u4, u7>, <u7, u1>, and <u1, u4> (in Fig. 2b), survive the relation filtering (see Fig. 5b for an illustration).
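The propagation phase can be pictured as a worklist algorithm over the β-sets, in the spirit of support counting. The Python sketch below is only an illustration under our own assumptions, not rFiltering( ) itself: tuples are identified as (edge, pair), the hypothetical map `edge_of` gives the oriented query edge for an unordered pair of query vertices, and α and β are assumed to have been filled by the triangle checks. When a tuple is removed, each (k, w) in its β-set identifies a triangle, and the two other tuples of that triangle each lose one supporting vertex.

```python
from collections import deque


def propagate(removed, alive, alpha, beta, edge_of):
    """Propagate inconsistency after the tuples in `removed` have been deleted.

    Tuple ids are (edge, pair): edge = (x, y) is a query edge and pair holds
    the data vertices matched to v_x and v_y. beta[tid] holds the (k, w) that
    closed a triangle with tid; alpha[(tid, k)] counts them. edge_of maps
    frozenset({x, y}) to the oriented query edge between v_x and v_y.
    """
    queue = deque(removed)
    alive.difference_update(removed)
    while queue:
        tid = queue.popleft()
        (x, y), (a, b) = tid
        for k, w in list(beta.get(tid, ())):
            assign = {x: a, y: b, k: w}  # vertex assignment of this triangle
            # The two other tuples of the triangle each lose one supporter.
            for p, q, third in ((x, k, y), (y, k, x)):
                e = edge_of[frozenset((p, q))]
                pid = (e, (assign[e[0]], assign[e[1]]))
                if pid not in alive:
                    continue
                beta[pid].discard((third, assign[third]))
                alpha[(pid, third)] -= 1
                if alpha[(pid, third)] == 0:
                    alive.discard(pid)
                    queue.append(pid)
    return alive
```

Starting from the α- and β-values of Example 4 and removing t5 first, as in the Fig. 5a scenario, the sketch also removes t9 and t7 and leaves t10, t11, and t12 alive.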

5.2 Correctness and computational complexity

In this section, we prove the correctness of the algorithm and analyze its computational complexity.

Lemma 1

Let (vi, vj) be an edge in Q, and let vk be a vertex of Q incident to vi or vj, but not to both of them. Let <u, u′> ∈ Rij be a tuple surviving the relation filtering. Then there exists at least one tuple <u′, u′′> in Rjk (or <u′′, u′> in Rkj) if vk is incident to vj, or at least one tuple <u, u′′> in Rik (or <u′′, u> in Rki) if vk is incident to vi.

Proof

The lemma follows directly from Algorithm check-1( ).□

Lemma 2

Denote by Rij the relation corresponding to (vi, vj) ∈ Q, and let Rij′ be the relation obtained from Rij by applying rFiltering( ) to Q and all the relevant relations. Then, for each <u, u′> ∈ Rij′ and each vk ∈ Q (k ≠ i, j), there exists at least one vertex u′′ satisfying the conditions stated in Definition 5.1.

Proof

We prove the lemma for the case (vj, vk), (vk, vi) ∈ Q; the other cases can be established in a similar way. In this case, we first call check-2(i, j; k, i; j, k) (see line 11 in tcControl()) to remove from Rjk all the tuples <u1, u2> such that u1 does not appear in Rij[2] or u2 does not appear in Rki[1]. The same applies to the tuples in Rki and Rij. Then, by executing Δ-consistency(i, j, k), we further remove from Rij, Rki, and Rjk all the tuples that are not triangle consistent.□

Proposition 1

Let Rij be a relation reduced by executing rFiltering(Q, Rij′s). A tuple t = <u, u′> is in Rij if and only if t is triangle consistent.

Proof

If part. If a tuple is triangle consistent, it will definitely not be removed according to Lemma 1, Lemma 2, and the inconsistency propagation process (the second phase, lines 6–14) in rFiltering().

Only-if part. Any tuple which is not triangle consistent will be removed by check-1( ), check-2( ), Δ-consistency( ), or through the inconsistency propagation done in the second phase in rFiltering( ).□

The time complexity of rFiltering( ) can easily be analyzed.

We mainly estimate the cost of tcControl( ), which comprises three parts: the running times of check-1( ), check-2( ), and Δ-consistency, referred to as c1, c2, and c3, respectively. Denote by r the largest size among all the Rij’s and by s the maximum length of the relation signatures. First, c1 = O(max{r, s}), because check-1( ) performs only one bitwise AND over two relation signatures, which takes O(s) time, and one for-loop in tuple-removing( ), which takes O(r) time.

Since check-2( ) makes only three calls to check-1( ), c2 is also bounded by O(max{r, s}).

Let d be the largest degree of a vertex in Gδ. Then, the cost of line 1 in Δ-consistency is bounded by O(s·d²), so c3 = O(s·d²). Since rFiltering( ) calls tcControl( ) O(n·m) times, the total cost of the whole process is bounded by

$$O\big(n \cdot m \cdot (c_1 + c_2 + c_3)\big) = O\big(n \cdot m \cdot (\max\{r, s\} + s \cdot d^2)\big).$$

The cost of the inconsistency propagation is also bounded by O(n·m·s·d²), since at most that many elements can be put into L.

Now we estimate the space complexity of the algorithm. First, we need space to accommodate all the relevant relations from Gδ and their signatures, as well as the vertex counters; this part is bounded by O(mr). The dominant space requirement is due to the storage of the β[t]’s, which is bounded by

$$\sum_{(v_j, v_k) \in E(Q)} |R_{jk}| \sum_{v_i \in Q} |D_i| \le n \cdot m \cdot r \cdot |D|,$$

where Di is D[l(vi)] and D = maxi{D[l(vi)] | vi ∈ Q}. This is because, for each tuple t in a relation Rij, β[t] can contain up to O(|Dk|) elements for each vk ∈ Q. The space for storing the α[t, k]’s is much smaller and is bounded by

$$\sum_{(v_j, v_k) \in E(Q)} |R_{jk}| \cdot n \le n \cdot m \cdot r,$$

since each tuple t has at most n α-values, one for each vertex in Q. Therefore, the total space overhead is bounded by O(nmr|D|).

6 On join ordering

After the relation filtering, we join all the reduced Rij’s to get the final results. To do this efficiently, we first need to determine an optimal join order.

6.1 Cost model

It is well known that different join orders lead to different performance. Join order selection is normally based on cost estimates for every join involved, which require both the cardinality of each Rij and the selectivity of each join, where Rij is the relation after relation filtering. We assume that intermediate results are stored in a temporary table on disk, and we use Bij to denote the number of disk pages holding the tuples of Rij. The cost of joining Rij and Rjk can then be estimated as follows [40]:

where M is the number of pages available in main memory, and σijk is the selectivity of the join between Rij and Rjk, calculated as follows:

Here, u·Cij[x] is a counter recording the number of times u appears in the x-th column of Rij.
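One natural way to obtain σijk from these counters, under the assumption that the selectivity is the fraction of tuple pairs in Rij × Rjk that agree on the shared vertex vj, is sketched below in Python. This is our illustration, not necessarily the exact formula used by the authors, and `join_selectivity` is a hypothetical name.

```python
from collections import Counter


def join_selectivity(r_ij, r_jk):
    """Estimate sigma_ijk for the join of R_ij and R_jk on query vertex v_j.

    Assumes sigma_ijk = |R_ij join R_jk| / (|R_ij| * |R_jk|), where the join
    size is obtained from the per-vertex counters u.C_ij[2] and u.C_jk[1].
    """
    c_ij = Counter(v for _, v in r_ij)  # u.C_ij[2]: u's count in column 2 of R_ij
    c_jk = Counter(v for v, _ in r_jk)  # u.C_jk[1]: u's count in column 1 of R_jk
    joined = sum(n * c_jk[u] for u, n in c_ij.items())
    return joined / (len(r_ij) * len(r_jk))
```

For Rij = {(a, x), (b, x), (c, y)} and Rjk = {(x, p), (y, q), (y, r), (z, s)}, the counters give 2·1 + 1·2 = 4 joining pairs out of 3·4 = 12, so the estimate is 1/3. Because only counters are needed, the estimate costs time linear in the number of distinct vertices rather than in the join size.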

In (5), we assume that Rjk is the smaller relation and serves as the inner operand, while Rij serves as the outer operand. This cost comprises two parts. The first part is for the join computation:

The second part is for storing the result:

6.2 Join order selection

Join order selection can be performed with the traditional dynamic programming algorithm [41] using the cost model described above. However, this solution is inefficient because a very large solution space has to be searched, especially when |E(Q)| is relatively large. Instead, we adapt the linearizing algorithm proposed in [42] to this task, using the above cost model.

We treat Q as an undirected graph Q′ and explore Q′ depth-first to generate a spanning tree (or forest) T. With respect to T, every edge in Q′ is classified as either a tree edge (an edge on T) or a forward edge (any other edge). The order of all joins between relations represented by tree edges can be determined with the linearizing algorithm proposed in [42], but the forward edges must be incorporated into the estimates of the costs and sizes of intermediate results.
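A minimal Python sketch of the classification step, assuming Q′ is a simple undirected graph given as a vertex list and an edge list; the function name `classify_edges` is hypothetical. An iterative depth-first search builds the spanning forest, and every edge not used by the search is reported as a forward edge, following the terminology above (in standard DFS terminology, such non-tree edges of an undirected graph are usually called back edges).

```python
def classify_edges(vertices, edges):
    """Split the edges of Q' (Q viewed as undirected) into tree and forward edges."""
    adj = {v: [] for v in vertices}
    for u, v in edges:
        adj[u].append(v)
        adj[v].append(u)
    visited, tree = set(), []
    for root in vertices:  # one DFS tree per connected component -> forest T
        if root in visited:
            continue
        visited.add(root)
        stack = [(root, iter(adj[root]))]
        while stack:
            v, neighbours = stack[-1]
            for w in neighbours:  # resumes where this vertex left off
                if w not in visited:
                    visited.add(w)
                    tree.append((v, w))
                    stack.append((w, iter(adj[w])))
                    break
            else:  # all neighbours explored: backtrack
                stack.pop()
    tree_set = {frozenset(e) for e in tree}
    forward = [e for e in edges if frozenset(e) not in tree_set]
    return tree, forward
```

For a query with edges (1, 2), (2, 3), (3, 1), and (3, 4), a search starting at vertex 1 yields the tree edges (1, 2), (2, 3), (3, 4) and the single forward edge (3, 1). The explicit stack avoids Python's recursion limit, although query graphs are usually small.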

Let vi be a vertex in T, and let vi1, …, vik be the children of vi. Assume that there is a forward edge from vi to a vertex vl in T[vij], the subtree rooted at vij (1 ≤ j ≤ k; see Fig. 6 for an illustration).

Denote by sij the estimated size of the intermediate result obtained by joining all the relations in T[vij]. Then, to estimate the cost and the size for T[vi] (as suggested in [42]), sij should be changed to γ·sij, where

In general, assume that there are r forward edges from vi to r different vertices in T[vij]. Then, sij should be changed to γ1·…·γr·sij, where γp (p = 1, …, r) is the γ-value of the p-th forward edge from vi to a vertex in T[vij]. Since each forward edge also corresponds to a join operation, we form a subsequence of joins for these forward edges, sorted in increasing order of their γ-values, and insert it just after the subsequence generated for T[vij] in the whole join sequence generated for T.
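The size adjustment and the placement of the forward-edge joins can be sketched in Python as follows. The γ-values are taken as inputs, and the function `adjust_and_order` and its arguments are hypothetical; the sketch only shows the local step for one child vij, not the full linearizing algorithm of [42].

```python
import math


def adjust_and_order(s_ij, forward_gammas, subtree_joins):
    """Adjust the size estimate s_ij of T[v_ij] and append forward-edge joins.

    forward_gammas maps each forward edge from v_i into T[v_ij] to its gamma
    value; subtree_joins is the join sequence already generated for T[v_ij].
    """
    # gamma_1 * ... * gamma_r * s_ij (math.prod of no gammas is 1)
    adjusted = s_ij * math.prod(forward_gammas.values())
    # Forward-edge joins in increasing order of their gamma values
    forward_joins = sorted(forward_gammas, key=forward_gammas.get)
    return adjusted, subtree_joins + forward_joins
```

For example, with sij = 1000 and two forward edges whose γ-values are 0.5 and 0.1, the adjusted size is 50, and the join for the edge with γ = 0.1 is placed first, directly after the joins of T[vij].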

7 Experiments

In our experiments, we tested three methods altogether:

-

2-hop-based [12] (2Hb for short),

-

LLR-embedding-based [13] (LLRb for short),

-

Our method discussed in this paper.

All three methods have been implemented in C++ and built with the GNU make utility at optimization level 2. All experiments are performed on a 64-bit Ubuntu operating system, running on a single core of a 2.40-GHz Intel Xeon E5-2630 processor with 32 GB RAM.

We divide the experiments into two groups. In the first group, we test all the methods against real data, while the second group is on synthetic data. The first group is further divided into three parts. In the first part, we report the offline time for constructing indexes over all the real graphs for all the three methods. In the second part, we compare the query times of the three methods over the real graphs for different query patterns. In the third part, we study in depth the performance using a specific query pattern, but with different δ values.

The second group is likewise divided into three parts. As in the first group, we first measure the indexing time for two types of synthetic graphs: the Erdős–Rényi (ER) model and the scale-free (SF) model. Then, in the second part, we vary the number of vertices and the δ value to study the effect of these two parameters. Finally, in the third part, we study the effect of the number of labels in a graph.

Datasets We used both real and synthetic datasets. Table 2 provides an overview of the real datasets used in the experiments, which have been taken from various sources. Among them, yeast is a protein-protein interaction network in budding yeast, taken from vlado (http://vlado.fmf.uni-lj.si/pub/networks/d-atas/); citeseer is from KONECT (http://konect.uni-koblenz.de/networks/citeseer); all the other real graphs are taken from SNAP (http://snap.stanford.edu/data/index.html). Six of the graphs, e.g., webStanford, already have natural vertex labels; the last column of Table 2 indicates whether or not we generated labels synthetically. In addition, six graphs are undirected; for them, edge direction is ignored when computing the shortest distance between two vertices.

None of these real graphs is weighted. We therefore choose six of them and assign each of their edges a weight of 1. In each of the remaining graphs, each edge is randomly assigned a weight from {1, …, 10}.

Two types of synthetic datasets are used in our experiments. The first is the ER model, a random graph with |V| vertices and |E| edges. To create the edges, we randomly select two vertices u and v from V, create a directed edge from u to v, and assign each of them a random label from a label set Σ. When creating edges, we guarantee that no edge is generated twice and, if multiple weights are used for a graph, that the weight assigned to an edge differs from that of its parent edge. This method simulates many real-world problems, and the resulting graphs may contain many large strongly connected components (SCCs). For all the created ER graphs, |Σ| is set to 10. The second is the SF model, another random graph, in which the vertex out-degrees d follow the power-law distribution P(d) = α·d^(−l), where α and l are two constants. This dataset is created with the graph generator gengraphwin (http://fabien.viger.free.fr/liafa/generation/). For the SF graphs, we fix α to 1 but set l to 2.2, 2.4, 2.6, or 2.8 to vary the distribution of out-degrees; the larger l is, the fewer edges a graph has. To study scalability on both kinds of graphs, we vary the graph size from 100K to 400K vertices. In addition, the density |E|/|V| of the ER graphs varies from 2 to 5, while the density of the SF graphs is varied by changing l.
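The ER construction described above can be sketched in a few lines of Python. This is an illustrative generator, not the authors' tool: it assumes labels are attached to vertices, excludes self-loops (an assumption not stated in the text), omits edge weights, and uses the hypothetical name `er_graph`. Storing the edges in a set guarantees that no edge is generated twice.

```python
import random


def er_graph(n, m, labels, seed=None):
    """Random directed graph with n vertices and m distinct edges.

    Assumptions: labels are attached to vertices, self-loops are excluded,
    and edge weights are omitted (all equal to 1).
    """
    if m > n * (n - 1):
        raise ValueError("m exceeds the number of possible directed edges")
    rng = random.Random(seed)
    label = {v: rng.choice(labels) for v in range(n)}
    edges = set()  # a set ensures that no edge is generated twice
    while len(edges) < m:
        u, v = rng.randrange(n), rng.randrange(n)
        if u != v:
            edges.add((u, v))
    return label, edges
```

For instance, `er_graph(100_000, 200_000, list(range(10)), seed=0)` would produce a graph with density |E|/|V| = 2 and |Σ| = 10. Rejection sampling is cheap here because the graphs are sparse, so duplicate draws are rare.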

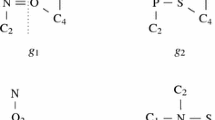

Pattern queries In the experiments, we use two kinds of pattern queries, trees and graphs, as shown in Fig. 7. Although they may look somewhat arbitrarily selected, tree pattern queries differ substantially from graph patterns in our method: for tree patterns only check-1( ) is useful, while for graph patterns check-1( ), check-2( ), and Δ-consistency( ) all work together to eliminate useless tuples. We therefore expect more speedup for graph pattern queries than for trees, since more constraints are checked.

7.1 Tests on real data

In this subsection, we report the test results on real data. First, we show the indexing time in Sect. 7.1.1. Then, we show the query time in Sect. 7.1.2. In Sect. 7.1.3, we study in depth the performance by testing a specific query pattern against all the real graphs, but with different δ values.

7.1.1 Indexing time and space

The indexing time and space of our method for all the real graphs are shown in Table 3. Both the indexing time and the index space vary with δ; in general, both grow gradually as δ increases.

In Table 4, we show the indexing time and space of the 2-hop-based method [12] and the LLR-embedding-based method [13]. The LLR embedding needs more time and space than the 2-hop method, because transforming 2-hop covers into vectors requires extra time and space. In particular, after the LLRb creates the vectors, the 2-hop covers cannot simply be discarded, since they must be kept to verify the final join results.

Comparing Table 4 with Table 3, we also see that the 2Hb (and the LLRb) generally need much more time and space than our method. In addition, we tried to build 2-hop covers for roadNetTX and citePatterns, but could not obtain results within 3 days.

7.1.2 Query time over tree patterns and graph patterns

In this subsection, we show the query times of all the tested methods over the real graphs. Figs. 8 and 9 show the average times over the four tree pattern queries shown in Fig. 7a and the four graph pattern queries shown in Fig. 7b, respectively. From these two figures, we can see that our method uniformly outperforms both the 2Hb and the LLRb, for two reasons:

-

1.

With the 2Hb and LLRb, relations have to be constructed on the fly, either from 2-hop covers or from vectors built by mapping a 2-hop cover into a vector space with the LLR embedding; with our method, the relations are loaded directly from disk.

-

2.

In our method, relation filtering greatly reduces the size of the relations before they take part in joins.

We also notice that the LLRb can be much better than the 2Hb: with LLR embeddings, not only can a relation be established more quickly than with 2-hop covers, but less time is also spent on joins, which are done by checking Chebyshev distances and clustering points in the vector space constructed from a 2-hop cover by the LLR embedding.

Finally, comparing Figs. 8 and 9, we see that evaluating a tree pattern query generally takes much longer than evaluating a graph pattern query with the same number of vertices. This is because a tree pattern normally has many more matching subgraphs than a graph pattern, as shown in Table 5.

Table 5 shows not only the space overhead of evaluating the two kinds of queries, but also the power of tuple filtering based on triangle consistency checking.

7.1.3 Query time over a specific query pattern

To give deeper insight into the performance of all the tested methods, we report a breakdown of their query times for a specific query pattern: the complete graph on five vertices shown in Fig. 7b. The results are shown in Table 6 for small graphs and in Table 7 for large graphs. For both the 2Hb and the LLRb, the query time comprises two parts: RC time (for constructing relations from 2-hop covers or vectors) and NJ time (for computing natural joins to get the final results). For ours, the query time consists of RF time (for relation filtering) and NJ time. The relation filtering itself takes little time, but dramatically reduces the time of the subsequent joins for almost all the tested graphs, generally yielding a speedup of 2 to 10 times.

In Table 7, we show the test results for large graphs.

7.1.4 Tests on large δ

For small δ, our method is clearly superior to the other strategies. For large δ, however, its performance inevitably degrades because of the large size of the generated δ-transitive closures. To observe how quickly performance degrades as δ increases, we tested the graph roadNetPA with every edge weight set to 1 and δ varied from 8 to 24. The results are shown in Table 8.

From Table 8, we can see that for large δ both the indexing time and the index space of our method increase significantly. However, they are still somewhat better than those of the 2Hb and LLRb (see Table 4), because the generated δ-transitive closures are in general smaller than the full transitive closures that those methods have to create. The query time also increases, but this is mitigated to some extent by tuple filtering.

7.2 Tests on synthetic data

In our second experiment, we compare the performance of our method with the 2Hb and LLRb on synthetic data graphs. We use n = 100,000 vertices for the ER graphs, but vary the number of edges m from 200,000 up to 1,000,000. Accordingly, the average out-degree of a vertex in the ER graphs increases from 2 to 10. For the SF graphs, we also use 100,000 vertices, but set l to 2.2, 2.4, 2.6, or 2.8 with α = 1 to generate different out-degree distributions P(d) = α·d^(−l). For simplicity, the weight of every edge in both the ER and SF graphs is set to 1.

Our aim here is to study the impact of graph density on performance using two significantly different synthetic graph models, as density is a basic property of graphs. We expect that building indexes on denser graphs will cost relatively more time and space for all methods, as the number of possible paths to explore increases with density.

Fig. 10a and b show the indexing time and space for the ER graphs, where G(i) denotes a δ-transitive closure with δ = i. The LLRb needs slightly more time than the 2Hb to build its indexes, but its index is smaller. For δ ≤ 8, however, both the construction time and the size of our δ-transitive closures are much smaller than those of the 2Hb and LLRb.

The same claim applies to the SF graphs, as shown in Fig. 11, in which we report the indexing time and space for the generated graphs.

Figs. 12, 13, 14, and 15 show the average time for evaluating all the tree patterns and graph patterns shown in Fig. 7 against the ER and SF graphs. We draw three findings from them: (1) our method is much better than both the 2Hb and the LLRb in query time, owing to the direct loading of relations and the relation filtering; (2) as δ increases, the query time of all three methods grows dramatically; (3) the LLRb generally works better than the 2Hb, owing to the efficient checking of Chebyshev distances and the clustering of vertices.

Finally, Fig. 16 shows the impact of join ordering. In this test, we run two versions of our algorithm, with and without join ordering, to find matches of the four graph patterns shown in Fig. 7b (referred to as P1, P2, P3, and P4, respectively) in an SF graph with |V| = 100,000, |E| = 500,000, and l = 2.8. Although relation filtering has already greatly reduced the sizes of the relations, the effect of join ordering can still be observed.

8 Conclusion

In this paper, we have proposed a novel algorithm for the pattern matching problem over large data graphs G. The main idea behind it is the concept of δ-transitive closures, together with a relation filtering method based on triangle consistency. As part of an index, a δ-transitive closure Gδ of G is constructed offline for a given δ-value. Then, for a query with δ′ ≤ δ, the relevant relations in Gδ can be loaded directly into main memory instead of being constructed on the fly from auxiliary data structures such as 2-hop covers [30] and LLR-embedding vectors [31]. In particular, useless tuples are filtered out of the relations before they take part in joins, which enables high performance. In addition, a bit-mapping technique has been integrated into the relation filtering to speed up the process. Extensive experiments have been conducted, which show that our method is promising.

References

Shasha D, Wang JTL, Giugno R (2002) Algorithmics and applications of tree and graph searching. In: ACM SIGMOD-SIGACT-SIGART Symposium Principles Database Systems, p 39

Cheng J, Ke Y, Ng W, Lu A (2007) FG-index: towards verification-free query processing on graph databases. In: Proceedings of the ACM SIGMOD International Conference on Management of Data, pp 857–872

Jiang H, Wang H, Yu PS, Zhou S (2007) GString: a novel approach for efficient search in graph databases. In: Proceedings of the 23rd International Conference on ICDE, pp 566–575. IEEE

Tian Y, McEachin RC, Santos C, States DJ, Patel JM (2007) SAGA: a subgraph matching tool for biological graphs. Bioinformatics 23(2):232–239

Cheng J, Yu JX (2009) On-line exact shortest distance query processing. In: Proceedings of the 12th International Conference on Extending Database Technology Advances Database Technology, EDBT 09, pp 481–492

Cohen E, Halperin E, Kaplan H, Zwick U (2003) Reachability and distance queries via 2-Hop labels. SIAM J Comput 32:1338–1355

Chen Y, Chen Y (2008) An efficient algorithm for answering graph reachability queries. In: Proceedings of the ICDE, pp 893–902

Wang H, He H, Yang J, Yu PS, Yu JX (2006) Dual labeling: answering graph reachability queries in constant time. In: Proceedings of the International Conference on ICDE, pp 75–86

Chen Y, Chen YB (2011) Decomposing DAGs into spanning trees: a new way to compress transitive closures. In: Proceedings of the 27th International Conference on Data Engineering (ICDE 2011), IEEE, April 2011, pp 1007–1018

Moustafa WE, Kimmig A, Deshpande A, Getoor L (2014) Subgraph pattern matching over uncertain graphs with identity linkage uncertainty. In: Proceedings of the International Conference on ICDE, pp 904–915

Tong H, Gallagher B, Faloutsos C, Eliassi-Rad T (2007) Fast best-effort pattern matching in large attributed graphs. In: Proceedings of the 13th ACM SIGKDD International Conference on Knowledge Discovery Data Mining, pp 737–746

Cheng J, Yu JX, Ding B, Yu PS, Wang H (2008) Fast graph pattern matching. In: Proceedings of the International Conference on ICDE, pp 913–922

Zou L, Chen L, Özsu M (2009) Distance-join: pattern match query in a large graph. VLDB 2(1):886–897

Tian Y, Patel JM (2008) TALE: a tool for approximate large graph matching. In: Proceedings of the International Conference on ICDE, pp 963–972

Conte D, Foggia P, Sansone C, Vento M (2004) Thirty years of graph matching in pattern recognition. Int J Pattern Recognit Artif Intell 18(3):265–298

Melnik S, Garcia-Molina H (2002) Similarity flooding: a versatile graph matching algorithm and its application to schema matching. In: Proceedings of the ICDE

He H, Singh AK (2008) Closure-tree: an index structure for graph queries. In: Proceedings of the ICDE, pp 405–418

Toresen S (2007) An efficient solution to inexact graph matching with applications to computer vision. Ph.D. thesis, Department of Computer and Information Science. Norwegian University of Science and Technology

Garey MR, Johnson DS (1990) Computers and intractability: a guide to the theory of NP-completeness. W.H. Freeman & Co, New York

Hopcroft JE, Wong J (1974) Linear time algorithm for isomorphism of planar graphs. In: Proceedings of the 6th Annual ACM Symposium Theory of Computing, pp 172–184

Luks EM (1982) Isomorphism of graphs of bounded valence can be tested in polynomial time. J Comput Syst Sci 25:42–65

Yan X, Yu PS, Han J (2004) Graph indexing: a frequent structure-based approach. In: Proceedings of the ACM SIGMOD, pp 335–346

Zhang S, Hu M, Yang J (2007) TreePi: a novel graph indexing method. In: Proceedings of the International Conference on Data Engineering, pp 966–975

Williams DW, Huan J, Wang W (2007) Graph database indexing using structured graph decomposition. In: Proceedings of the 23rd International Conference on ICDE, pp 976–985

Zhao P, Yu JX, Yu PS (2007) Graph indexing: tree + delta >= graph. In: Proceedings of the International Conference on VLDB, October 2007, pp 938–949

Zhao P, Han J (2010) On graph query optimization in large networks. In: Proceedings of the VLDB, pp 340–351

Trißl S, Leser U (2007) Fast and practical indexing and querying of very large graphs. In: Proceedings of the SIGMOD’2007, pp 845–856

Cordella LP, Foggia P, Sansone C, Vento M (2000) Fast graph matching for detecting CAD image components. In: Proceedings of the 15th International Conference Pattern Recognition, pp 1034–1037

Cordella LP, Foggia P, Sansone C, Tortorella F, Vento M (1998) Graph matching: a fast algorithm and its evaluation. In: Proceedings of the 15th International Conference on Pattern Recognition, pp 1852–1854

Cohen E, Halperin E, Kaplan H, Zwick U (2003) Reachability and distance queries via 2-hop labels. SIAM J Comput 32(5):1338–1355

Linial N, London E, Rabinovich Y (1995) The geometry of graphs and some of its algorithmic applications. Combinatorica 15(2):215–245

Shahabi C, Kolahdouzan MR, Sharifzadeh M (2003) A road network embedding technique for K-nearest neighbor search in moving object databases. Geoinformatica 7(3):255–273

Abello JM, Pardalos PM, Resende MGC (eds) (2002) Handbook of massive data sets. Springer, Berlin

Han J, Kamber M, Pei J (2012) Data mining: concepts and techniques. Elsevier/Morgan Kaufmann, Amsterdam

Henzinger MR, Henzinger T, Kopke P (1995) Computing simulations on finite and infinite graphs. In: Proceedings of the FOCS

Li J, Cao Y, Ma S (2017) Relaxing graph pattern matching with explanations. In: Proceedings of the International Conference CIKM’17, November 6–10, Singapore

Fan W, Wang X, Wu Y (2013) Incremental graph pattern matching. ACM Trans Database Syst 38(3):18.1–18.44

Fredman ML (1976) New bounds on the complexity of the shortest path problem. SIAM J Comput 5(1):83–89

Ahuja RK, Mehlhorn K, Orlin JB, Tarjan RE (1990) Faster algorithms for the shortest path problem. J ACM 37:213–223

Steinbrunn M, Moerkotte G, Kemper A (1997) Heuristic and randomized optimization for the join ordering problem. VLDB J 6(3):191–208

Wu Y, Patel JM, Jagadish HV (2003) Structural join order selection for xml query optimization. In: Proceedings of the ICDE

Krishnamurthy R, Boral H, Zaniolo C (1986) Optimization of non-recursive queries. In: Proceedings of the VLDB, Kyoto, Japan, pp 128–137


About this article

Cite this article

Chen, Y., Guo, B. & Huang, X. δ-Transitive closures and triangle consistency checking: a new way to evaluate graph pattern queries in large graph databases. J Supercomput 76, 8140–8174 (2020). https://doi.org/10.1007/s11227-019-02762-4


DOI: https://doi.org/10.1007/s11227-019-02762-4