Abstract

In this work, we present an efficient and portable sorting operator for GPUs. Specifically, we propose an algorithmic variant of the bitonic merge sort which reduces the number of processing stages and internal steps, increasing the workload per thread and focusing on a multi-batch execution for multiple problems of a small size. This proposal is well matched to current GPU architectures and we apply different CUDA optimizations to improve performance. For portability, we use a library based on tuning building blocks. Thanks to this parametrization, the library can easily be tuned for different CUDA GPU architectures. Our proposals obtain competitive performance on two recent NVIDIA GPU architectures, providing an improvement of up to 11,794\(\times \) over CUDPP and up to 6467\(\times \) over ModernGPU.


1 Introduction

Sorting is a computational building block of high importance, being one of the most studied algorithms due to its impact. Many algorithms rely on the efficiency of sorting routines as core pillars of their own efficiency. For example, sorting is widely used in computer graphics and geographic information systems for building spatial data structures, and also acts as a basis for solving sparse matrix operations or MapReduce patterns [4].

There are several parallel sorting algorithms, such as Radix sort [18], Mergesort [8], Bitonic sort [1], and Quicksort [7], and many of them have been developed for GPUs. Radix sort for GPUs was efficiently implemented in [6]. Quicksort on the GPU was first implemented in [16] and later improved in [3]. A hybrid algorithm that combines Mergesort and Bucketsort [2] was presented in [17], whereas new implementations based on Radix sort and Mergesort were developed in [15]. Several accelerated libraries also integrate sorting within a broader set of algorithms, for example CUDPP [13], CUB [14] and ModernGPU [12]. Among these sorting primitives, ModernGPU is currently the fastest on small problem sizes, although all of these libraries were developed with large problem sizes in mind.

In [5], we proposed an algorithmic variant of bitonic merge sort (BMS), called bitonic merge comb sort (BMCS), for solving limited-size problems that fit directly into the high-bandwidth GPU scratchpad memory (called shared memory in CUDA). Nowadays, the simultaneous execution of several problems of small size has a large impact on the most complicated simulations. For example, combustion, chemical and high-order finite-element models take advantage of this kind of execution. On the other hand, BPLG [11] is a tuned template library with a simple and unified interface for parallel prefix algorithms [9], built on a set of skeletons or building blocks. These building blocks are predefined generic components that are parameterized, so that efficient implementations and specializations can be provided for given CUDA architectures and algorithms, while programmers are constrained to using only the given set of skeletons. In this work, we propose a new version called BPLG–BMCS, which has been built from tuning building blocks with BPLG and improved by applying different CUDA optimizations. Our experimental results demonstrate that our implementation is faster than all previously published GPU sorting techniques for a multi-batch execution of small problem sizes. Future work will entail extending our library to deal with many problems of medium and large sizes.

The remainder of this paper is organized as follows: Sect. 2 presents BMCS and its CUDA implementation. Section 3 presents our new BMCS proposal using the BPLG library. Experimental results are discussed in Sect. 4, whereas the main conclusions of our work are explained in Sect. 5.

2 Bitonic merge comb sort (BMCS)

In this section, we present an algorithmic variant of bitonic merge sort (BMS), called bitonic merge comb sort (BMCS), which achieves high parallelism and efficiency in GPU using CUDA. An initial version of BMCS was proposed in [5], matching well to current NVIDIA GPU architectures. Furthermore, it adapts thread workload to available registers, obtains coalescing accesses, avoids bank conflicts, reduces synchronization barriers and uses shuffle instructions.

BMS is a parallel algorithm for sorting [1]. Its classic complexity is \(O(N\cdot (\log {N})^2)\). Figure 1 shows the classic algorithm for \(N=16\), where each horizontal line represents a key value, starting on the left and finishing at the outputs on the right. Vertical segments are comparators, which compare the two selected keys and swap their values if necessary. The sorting is processed along \(\log _2 N\) stages (grey boxes), where stage k has k internal steps (marked by rectangles inside each stage).
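The stage and step structure described above can be sketched on the CPU. This is a minimal reference version, assuming the common XOR-partner formulation of the bitonic network (the wiring drawn in Fig. 1 may be laid out differently but is equivalent); the function name `bitonic_sort` is illustrative and not taken from the paper.

```python
def bitonic_sort(keys):
    """Sort a power-of-two-length list with the classic bitonic network.

    Returns the sorted list and the number of internal steps executed.
    """
    a = list(keys)
    n = len(a)
    assert n > 0 and n & (n - 1) == 0, "length must be a power of two"
    steps = 0
    k = 2                          # stage s merges bitonic runs of length k = 2**s
    while k <= n:
        j = k // 2                 # comparator distance; stage s has s internal steps
        while j >= 1:
            for i in range(n):     # on a GPU each comparator is handled in parallel
                partner = i ^ j
                if partner > i:
                    ascending = (i & k) == 0
                    # Swap when the pair violates the direction of its run.
                    if (a[i] > a[partner]) == ascending:
                        a[i], a[partner] = a[partner], a[i]
            steps += 1
            j //= 2
        k *= 2
    return a, steps
```

For \(N=16\) the sketch runs \(1+2+3+4=10\) internal steps of \(N/2\) comparators each, which is where the \(N\cdot (\log N)^2\) growth comes from.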

The Node operator is responsible for performing computation in parallel prefix algorithms. In more detail, the node \({ Node}_{{ ALG}}\) is a computational operator defined by four aspects: fan_in, which is the number of input data; fan_out, the number of output data; sizeof_data, which represents the size in bytes of each data element; and the specific operation, which depends on the ALG algorithm. Furthermore, R is a factor specific to each algorithm where \(\frac{N}{R}\) indicates the number of nodes per stage.

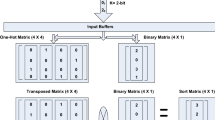

In BMS, the node fan_in and fan_out are both 2, so each thread processes two elements. The best results are achieved when each thread works with four elements to increase thread workload, hence we have modified the algorithm so that fan_in and fan_out are both 4. We propose an algorithm with \(\log _2 N -1 \) external stages, each with half the number of internal steps. In the first external stage, four consecutive elements are read from global memory and computed directly in registers, so the first two stages of the classic algorithm are reduced to one. Figure 2 contains a scheme of our algorithm for a problem of \(N=16\) with four threads, where each thread computes a node of fan_in \(=\) fan_out \(=\) 4. Reducing the number of stages and steps reduces the number of synchronization barriers. Furthermore, increasing fan_in and fan_out reduces the number of threads needed per problem. Nevertheless, a fan_in or fan_out in excess of four results in the use of more registers, decreasing occupancy and performance.
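The fusion of the first two classic stages into a single fan_in = fan_out = 4 node can be illustrated with a small CPU sketch. It assumes the XOR-partner formulation of the bitonic network; the helper names (`cmp_swap`, `node4_first_stages`, `fused_first_stages`, `reference_two_stages`) are hypothetical, and the list `r` merely stands in for four private registers of one thread.

```python
def cmp_swap(r, i, j, ascending):
    """Compare-exchange r[i] and r[j] in the requested direction."""
    if (r[i] > r[j]) == ascending:
        r[i], r[j] = r[j], r[i]

def node4_first_stages(chunk, ascending):
    """One fan-4 node: classic stages 1 and 2 applied to four keys in registers."""
    r = list(chunk)
    cmp_swap(r, 0, 1, True)        # stage 1: first pair ascending
    cmp_swap(r, 2, 3, False)       # stage 1: second pair descending
    cmp_swap(r, 0, 2, ascending)   # stage 2, step 1 (distance 2)
    cmp_swap(r, 1, 3, ascending)
    cmp_swap(r, 0, 1, ascending)   # stage 2, step 2 (distance 1)
    cmp_swap(r, 2, 3, ascending)
    return r

def fused_first_stages(keys):
    """Each group of four consecutive keys is handled by one node, no exchange."""
    out = []
    for base in range(0, len(keys), 4):
        out += node4_first_stages(keys[base:base + 4], (base & 4) == 0)
    return out

def reference_two_stages(keys):
    """Stages 1 and 2 of the fan-2 network, step by step, for comparison."""
    a = list(keys)
    for k in (2, 4):
        j = k // 2
        while j >= 1:
            for i in range(len(a)):
                p = i ^ j
                if p > i and (a[i] > a[p]) == ((i & k) == 0):
                    a[i], a[p] = a[p], a[i]
            j //= 2
    return a
```

Because all comparators of the first two stages stay inside a group of four consecutive keys, no communication between threads is needed, which is why the fused node needs no barrier.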

To develop this algorithm, we have taken care to avoid dynamic indexing, to achieve coalesced global memory accesses and to prevent shared memory bank conflicts. When working with arrays, as in this case, attention needs to be paid to accesses. The highest performance is achieved with static accesses, where the compiler can derive constant indices in all accesses and place the elements into registers. If the compiler cannot determine the index at compile time, array elements are placed in local memory in a process known as dynamic indexing. Our proposal avoids dynamic indexing by using index calculations that the compiler can resolve as static accesses.

On the other hand, if each thread computes several elements, it is also necessary to specify which elements are accessed per thread to avoid bank conflicts or uncoalesced accesses (see Fig. 2). The first and last steps are designed to enable the use of customized data types such as Int4 which reduce the number of memory transactions and allow coalescing accesses.

The matching of threads with elements is not trivial in the remaining stages if the number of internal steps is to be reduced and shared memory bank conflicts are to be avoided. Depending on the specific external stage, the number of internal steps may not be a power of two. In this case, a mixed computation with nodes of fan_in 2 is necessary in the first step of stage k, whereas the remaining steps are processed by nodes of fan_in 4. Furthermore, each thread has to operate on the corresponding four elements, which makes it possible to reduce two steps of the naive fan_in 2 approach to one step in the fan_in 4 approach. This is shown clearly in Fig. 2 when \(k=3\). In the first step of stage \(k=3\), the first, fifth, twelfth and sixteenth elements have to be processed by thread zero to reduce the first two steps of the naive algorithm to just one in our algorithm. Thread zero could select other elements to perform the computation in other steps; however, this pattern is selected to reduce the number of shared memory bank conflicts. Figure 2 clearly shows how the pattern used in the internal steps allows consecutive threads to access adjacent shared memory banks (without bank conflicts), except in the last internal step, where each thread loads four consecutive elements.

Figure 3 represents BMCS using nodes \({ Node}_{{ BMCS}}=\{4,4,4,{ operator}\}\). In this figure, the “comb” configuration can easily be seen. Increasing fan_in and fan_out reduces the number of stages and, therefore, the number of synchronization barriers. The naive algorithm has \(n+\frac{n(n+1)}{2}\) synchronization barriers, where \(N=2^n\), whereas our proposal has \(n-1 + \frac{n/2 (n/2+1)}{2}\). In general, performance increases as the number of synchronization barriers is reduced. This is especially relevant on Fermi CUDA architectures, where each SM has only 32 SPs: synchronizing large thread blocks implies many warp-context switches per SM, which wastes a considerable amount of time, so reducing the number of barriers has a greater impact.
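To make the two barrier counts concrete, the expressions above can be evaluated directly. The sketch below only transcribes the formulas as stated; it assumes an even \(n\) so that \(n/2\) is an integer, and the function names are illustrative.

```python
def naive_barriers(n):
    """Barriers of the naive fan-2 algorithm: n + n(n+1)/2, with N = 2**n."""
    return n + n * (n + 1) // 2

def bmcs_barriers(n):
    """Barriers of the fan-4 proposal: n - 1 + (n/2)(n/2 + 1)/2 (even n assumed)."""
    assert n % 2 == 0, "this sketch only evaluates the formula for even n"
    half = n // 2
    return n - 1 + half * (half + 1) // 2

if __name__ == "__main__":
    for n in (4, 6, 8, 10):
        print(f"N = {2 ** n:5d}: naive = {naive_barriers(n):3d}, BMCS = {bmcs_barriers(n):3d}")
```

For \(N=1024\) (\(n=10\)) the formulas give 65 barriers for the naive algorithm and 24 for the proposal.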

Finally, it is also possible to reduce synchronization barriers and memory latencies with shuffle instructions, which allow information to be exchanged between threads in the same warp using registers instead of shared memory. This approach avoids synchronization barriers, although the exchange is limited to the warp scope. BMCS follows a hybrid strategy in which the 6 initial stages are computed using shuffle instructions, sorting \({ fan}\_in \times { warpSize} \) elements in each warp, and the remaining stages use shared memory as a communication channel between warps. Therefore, 128-data chunks are sorted without synchronization barriers. Thus, if \(N \le 128\), no synchronization barrier is needed in the execution. For larger sizes, this technique saves nine synchronization barriers, as this is the number of barriers in the six initial stages. Even though it was also possible to use shuffle instructions in internal steps with chunks of up to 128 elements, empirically greater performance was obtained working with shared memory.
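The warp-level exchange can be emulated on the CPU to show why no barrier is required. This is a simplified sketch, not the BMCS kernel: it keeps one key per lane (the paper keeps fan_in = 4 keys per lane, giving 128 keys per warp), and `shfl_xor` is a hypothetical Python stand-in for a butterfly shuffle such as CUDA's `__shfl_xor_sync`.

```python
WARP_SIZE = 32

def shfl_xor(values, mask):
    """Emulated butterfly shuffle: lane i receives the value held by lane i ^ mask."""
    return [values[lane ^ mask] for lane in range(len(values))]

def warp_bitonic_sort(values):
    """Bitonic sort of one key per lane using only register-to-register exchanges."""
    v = list(values)
    assert len(v) == WARP_SIZE
    k = 2
    while k <= WARP_SIZE:
        j = k // 2
        while j >= 1:
            partner = shfl_xor(v, j)          # every lane reads its partner's key
            for lane in range(WARP_SIZE):
                ascending = (lane & k) == 0   # direction of the run this lane is in
                is_lower = (lane & j) == 0    # lower or upper end of the comparator
                if ascending == is_lower:
                    v[lane] = min(v[lane], partner[lane])
                else:
                    v[lane] = max(v[lane], partner[lane])
            j //= 2
        k *= 2
    return v
```

Each lane decides locally whether to keep the minimum or the maximum of the pair, so both ends of a comparator stay consistent without going through shared memory.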

3 BPLG–BMCS

We are currently developing an efficient library for parallel prefix algorithms based on tuning building blocks, called butterfly processing library for GPUs (BPLG) [11]. This library enables algorithms to be designed with flexibility and adaptability; so far, other parallel algorithms, such as FFT or tridiagonal system solvers, have been implemented in BPLG [10]. To this end, we use a set of functions as building blocks: high-level blocks of code organized in several layers, where each function computes a small part of the whole work. This flexibility does not compromise performance, as a tuning strategy is followed to obtain the set of parameters that achieves the optimal level of parallelism on each GPU architecture.

The idea behind tuning building blocks is to write the same kernel, or a very similar one, for all parallel prefix algorithms using skeletons: the body of the kernel remains constant across different algorithms, and only the implementation of the compute building block changes. Both computation and manipulation building blocks have common features, such as the use of templates, which allows generic programming and template metaprogramming. Thanks to this, many optimizations take place at compile time, for example fully unrolling static loops. Additionally, all functions have been designed to operate in any GPU memory space. Manipulation blocks move data: the copy function loads data from one buffer to another. In contrast, computing blocks modify their input data. The fundamental function of computing building blocks is the compute function, which performs the computations in each stage. The interface of the compute function is implemented by the different algorithms, each one with its corresponding operation, which can be defined either recursively or using specializations depending on N.

The current BPLG version focuses on a multi-batch execution for multiple problems of small size. Specifically, G problems of size N are computed simultaneously. A kernel is invoked with B blocks, where each block is executed with L threads. Each thread computes Q nodes per stage; therefore, each problem is processed by \(\frac{N}{R \times Q}\) threads, where R is an algorithm-specific factor such that \(\frac{N}{R}\) indicates the number of nodes per stage. However, instead of executing only one problem per block, \(L_G\) problems are computed per block using batch execution to increase the thread parallelism. Thus, the total number of threads per block is expressed as \( L=\frac{N}{R \times Q} \times L_G\). In addition to the aforesaid definitions, threads within a block have access to S data stored in shared memory and P data stored in private registers. These parameters are related as follows: \(P= { fan}\_{ in} \times { sizeof}\_{ data} \times R \times Q\), whereas \(S=P \times L\) and \(B= \frac{G}{L_G}\). In contrast to other libraries, multi-batch problems are solved by a single kernel invocation, where each thread performs the computation associated with its nodes. Data are processed and stored in registers. After computation, threads collaborate with each other by exchanging data prior to the next stage.
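The relations between these parameters can be checked with a few lines of arithmetic. The sketch below transcribes the expressions exactly as written above; the function name and the example values (including \(R=4\), \(Q=1\) and \(L_G=4\)) are illustrative assumptions, not tuned values reported in the paper.

```python
def launch_parameters(N, G, R, Q, L_G, fan_in, sizeof_data):
    """Evaluate L, P, S and B from the relations given in the text."""
    threads_per_problem = N // (R * Q)   # N / (R x Q) threads cooperate on one problem
    L = threads_per_problem * L_G        # threads per block
    P = fan_in * sizeof_data * R * Q     # private register data per thread
    S = P * L                            # shared memory data per block
    B = G // L_G                         # number of blocks in the single kernel launch
    return {"L": L, "P": P, "S": S, "B": B}

if __name__ == "__main__":
    # Hypothetical configuration: 2**24 / 256 problems of 256 four-byte keys.
    print(launch_parameters(N=256, G=2 ** 24 // 256, R=4, Q=1, L_G=4,
                            fan_in=4, sizeof_data=4))
```

With these assumed values the block runs 256 threads and the whole batch is covered by 16,384 blocks in one kernel invocation.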

Algorithm 1 presents a first version of the code for BMS in terms of tuning building blocks, called BPLG–BMS–Naive. The read-only buffers are declared as const __restrict__ pointers, allowing the memory accesses to be optimized using the texture cache. This code uses two tuning building blocks, copy and compute. The code can be divided into four main sections:

- Initialization section (lines 3–7). Defines a number of identifiers and some memory offsets for loading and storing operations. Furthermore, registers and shared memory are also allocated.

- Load data from global memory (lines 8–9) and first compute stage (lines 10–11). Loads data with coalesced accesses, using a 64-bit load to obtain two consecutive elements instead of accessing a single data element per memory request. In the code, \({ copy}{<}2,Q{>}(\ldots )\) loads two consecutive elements Q times per thread. Then, the elements are processed directly in registers by the compute function. This building block compares and swaps values.

- Compute stages of the algorithm (lines 13–32). The loop computes the remaining stages of the algorithm with their internal steps. To this end, the loop loads the corresponding data into registers using shared memory and synchronization barriers. The synchronization barrier in line 17 is avoided in the first iteration, as data are already in registers. The same behaviour occurs in the internal loop on line 26, where results are returned in registers.

- Store data to global memory (lines 33–34). The final iteration of the loop leaves the results in registers, so the final result is moved from registers to global memory using 64-bit stores, reducing the number of memory transactions.
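The four sections listed above can be mirrored in a sequential CPU emulation. This is only a structural sketch for \(Q=1\) and fan_in 2, not the authors' Algorithm 1: `copy`, `compute`, `pair_indices` and `sort_block` are hypothetical stand-ins, the `shared` list plays the role of shared memory, and every step goes through it (the real kernel skips the exchange when the data are already in registers).

```python
def copy(src, offset, count):
    """Stand-in for the copy block: load `count` consecutive elements into registers."""
    return list(src[offset:offset + count])

def compute(regs, ascending):
    """Stand-in for the compute block: compare and swap the two keys of a node."""
    lo, hi = min(regs), max(regs)
    return [lo, hi] if ascending else [hi, lo]

def pair_indices(t, j):
    """Element indices (i, i + j) of the t-th comparator at distance j."""
    i = 2 * j * (t // j) + (t % j)
    return i, i + j

def sort_block(global_in, N):
    # Initialization: one emulated thread per fan-2 node, plus shared memory.
    threads = N // 2
    shared = [None] * N
    # Load two consecutive elements per thread and run the first stage in registers.
    regs = []
    for t in range(threads):
        i, _ = pair_indices(t, 1)
        regs.append(compute(copy(global_in, i, 2), (i & 2) == 0))
    held = 1                         # distance of the pairs currently held in registers
    # Remaining stages: exchange through shared memory, then compute.
    k = 4
    while k <= N:
        j = k // 2
        while j >= 1:
            for t in range(threads):             # write phase (a barrier follows on a GPU)
                a, b = pair_indices(t, held)
                shared[a], shared[b] = regs[t]
            for t in range(threads):             # read phase and compare-exchange
                a, b = pair_indices(t, j)
                regs[t] = compute([shared[a], shared[b]], (a & k) == 0)
            held = j
            j //= 2
        k *= 2
    # Store: the last step has distance 1, so each thread writes two consecutive keys.
    out = [None] * N
    for t in range(threads):
        a, _ = pair_indices(t, 1)
        out[a:a + 2] = regs[t]
    return out
```

Because every stage ends with a step at distance 1, each emulated thread finishes holding two adjacent keys, which is what makes the final 64-bit store possible.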

To achieve high portability and efficiency on GPUs, this code has been slightly modified. We generalize the data type to be sorted from integers to any generic DTYPE using a new template class parameter. The code structure remains unchanged: sorting another data type only requires changing the DTYPE comparator implementation inside the compute function, if necessary. Furthermore, our building blocks, such as copy or compute, have been carefully designed to avoid dynamic indexing.

The effectiveness of BMCS has been demonstrated in [5]. Thus, BMCS is adapted to BPLG, also providing specialized kernels for some DTYPE and Q values, with specific code for each case which exploits the maximum parallelism of each proposal. For example, specialized kernels that enable the use of customized data types such as Float2 or Int4 have been implemented, reducing the number of memory requests and improving performance. This approach has also been optimized with a hybrid implementation: the initial stages are computed using shuffle instructions, sorting \({ fan}\_{ in} \times { warpSize}\) elements in each warp, and the other stages use shared memory as a communication channel between warps. If \(N \le 128\), then there is no synchronization barrier in the execution. Finally, BPLG–BMCS is the result of adapting BMCS to BPLG and applying all previous optimizations.

4 Experimental results

In this section, we present the results of our proposals on different NVIDIA GPU architectures. All tests were run using integers as the data type. All the data initially reside in GPU memory, so there are no data transfers to or from the CPU during benchmarks. The test platforms used in our experiments are described in Table 1, where Platform 1 has a representative GPU of the Kepler architecture, whereas Platform 2 has a Maxwell GPU. All these algorithms were developed to take advantage of the read-only data cache, which slightly improves global memory read bandwidth. The performance of these experiments is measured in million data processed per second, MData/s. The size of the batch depends on the input size and is given by the expression \(G=2^{24}/N\). Thus, the MData/s value is computed using the expression \(N \times G \times 10^{-6}/t\), where t is the execution time in seconds. To show how much of the performance is due to the algorithm, to the tuning building blocks and to the other optimizations, all proposals were tuned for each architecture.
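The metric can be reproduced with a short helper. This is a minimal sketch of the two expressions above; the function names and the timing used in the example are hypothetical and do not correspond to any measurement in the paper.

```python
def batch_size(N):
    """Number of problems per run, G = 2**24 / N."""
    return 2 ** 24 // N

def mdata_per_second(N, G, seconds):
    """Throughput in millions of keys per second: N * G * 1e-6 / t."""
    return N * G * 1e-6 / seconds

if __name__ == "__main__":
    N = 256
    G = batch_size(N)
    # Hypothetical timing of 10 ms, used only to show the unit conversion.
    print(G, mdata_per_second(N, G, 0.010))
```

Since \(N \times G\) is always \(2^{24}\) keys, runs for different N are directly comparable: only the elapsed time changes.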

Firstly, Fig. 4 depicts a performance comparison on Platform 1 of all the proposals presented in Sects. 2 and 3. The BMS tag refers to an optimized bitonic merge sort implementation, whereas BMCS represents the implementation of our algorithmic variant with shuffle communications presented in Sect. 2. BPLG–BMS–Naive denotes a naive implementation for \(Q=1\) in terms of tuning building blocks. BPLG–BMCS is a kernel specialization for \(Q=2\), with the optimizations presented in Sect. 3. As this implementation obtains the best performance, it is the one which we use in our BPLG library. In general, as long as shared memory is not a scarce resource, the implementations based on tuning building blocks are better, since they execute several batches per block. Up to \(N=256\), BPLG–BMCS is better than BMCS, as each block executes several batches in parallel. The number of batches per block is obtained by tuning, and it guarantees a high occupancy. As N increases, the number of batches per block is reduced in BPLG, and performance becomes very similar to that of BMCS. In the case of BMCS, peak performance is obtained with \(N=256\) or \(N=512\), as occupancy is maximum with these values. For problem sizes larger than \(N=512\), both BMCS and BPLG–BMCS can only execute one problem per block, owing to resource consumption. Even in this case, BPLG–BMCS is slightly better than BMCS. Tuning building blocks offer a simple way of programming, obtaining the same (or higher) performance than other complex, heavily optimized and verbose kernels for the same task, such as BMCS. In general, shared memory becomes a limiting factor.

Figure 5 shows the same comparison on Platform 2, maintaining the same nomenclature. The MData/s achieved on this platform is higher, which can be ascribed to Maxwell delivering higher performance per CUDA core than Kepler, owing to its new datapath organization, improved instruction scheduler, and new memory hierarchy and bandwidth, and to its higher number of active blocks per Streaming Multiprocessor (SM). The architecture allows up to 32 blocks per SM (double that of Kepler), although the available shared memory per block remains the same. Owing to this behaviour, BMCS occupancy is maximum with \(N=128\) in Maxwell (\(N=256\) in Kepler).

Figure 6 compares BPLG–BMCS with the CUDPP implementation [13], CUB and the ModernGPU library, all of them developed by NVIDIA, with ModernGPU being the reference library for sorting when small problem sizes are considered. It should be noted that this comparison is made in terms of execution time for only one batch. CUDPP shows the worst results for small problem sizes that can be directly processed in shared memory. Our proposal, BPLG–BMCS, provides highly competitive results compared to ModernGPU, obtaining an improvement of up to 10\(\times \) over CUDPP, up to 8.26\(\times \) over CUB and up to 2.6\(\times \) over ModernGPU. On the other hand, Fig. 7 presents the same comparison on Platform 2 (Maxwell architecture). Results are similar to those on Platform 1, with improvements of up to 40\(\times \) over CUDPP, up to 20.9\(\times \) over CUB and up to 4.8\(\times \) over ModernGPU.

Many applications need to solve G batch problems in parallel. Therefore, we used a batch execution to compute G problems each time. Table 2 compares our best proposal, BPLG–BMCS, with CUDPP, CUB and ModernGPU. These three libraries prove to be extremely inefficient when many batches of small size are processed in parallel, since they were designed for solving just one large-size problem: to solve G problems of size N, they have to launch G light kernels. Our proposal is up to 11,794\(\times \) faster than CUDPP, up to 7581\(\times \) faster than CUB and up to 5307\(\times \) faster than ModernGPU on Platform 1. Table 2 shows the MData/s obtained in Platform 2. The MData/s for Platform 2 are higher because Maxwell delivers higher performance per CUDA core than Kepler, thanks to its new datapath organization, improved instruction scheduler, new memory hierarchy and bandwidth, and more active blocks per SM. On this platform, our proposal is up to 6467\(\times \) faster than CUDPP, up to 5352\(\times \) faster than CUB and up to 3609\(\times \) faster than ModernGPU.

5 Conclusions

Sorting is a highly important kernel which takes part in many scientific and engineering applications. An efficient GPU implementation of this operator is not a simple task, since hardware requirements have to be considered. In this paper, we provide a new proposal for solving sorting problems that matches well to the new GPU architectures. Specifically, this proposal is an algorithmic variant of bitonic merge sort (BMS), called bitonic merge comb sort (BMCS). Furthermore, we obtain a new proposal, BPLG–BMCS, using a tuning methodology based on tuning building blocks for parallel prefix algorithms. In addition to tuning building blocks, BPLG–BMCS is also optimized with different CUDA techniques such as specialized templates, shuffle instructions and customized data types.

Despite its mathematical complexity, the resulting proposal obtains very competitive results compared to other well-known sorting libraries, such as ModernGPU, CUB and CUDPP, achieving an improvement of up to 40\(\times \) for single-batch execution and up to 11,794\(\times \) for multi-batch execution.

As future work, our primary focus will be to extend our proposal for large-size arrays that cannot be stored in shared memory.

References

[1] Batcher KE (1968) Sorting networks and their applications. In: Proceedings of spring joint computer conference, AFIPS ’68 (Spring), pp 307–314

[2] Corwin E, Logar A (2004) Sorting in linear time - variations on the bucket sort. J Comput Sci Coll 20(1):197–202

[3] Cederman D, Tsigas P (2010) GPU-quicksort: a practical quicksort algorithm for graphics processors. J Exp Algorithmics 14:4:1.4–4:1.24

[4] Dean J, Ghemawat S (2008) MapReduce: simplified data processing on large clusters. Commun ACM 51(1):107–113

[5] Diéguez AP, Amor M, Doallo R (2015) BS-Comb: an efficient sorting algorithm for GPUs. In: Proceedings of the 15th international conference on computational and mathematical methods in science and engineering, CMMSE 2015, pp 461–473

[6] Harris M, Sengupta S, Owens JD (2007) Parallel prefix sum (scan) with CUDA. GPU Gems 3(39):851–876

[7] Hoare CAR (1961) Algorithm 64: Quicksort. Commun ACM 4(7):321

[8] Kipfer P, Westermann R (2005) GPU Gems 2-Chapter 46. Improved GPU Sorting

[9] Ladner RE, Fischer MJ (1980) Parallel prefix computation. J ACM 27(4):831–838

[10] Lobeiras J, Amor M, Doallo R (2015) Designing efficient index-digit algorithms for CUDA GPU architectures. IEEE Trans Parallel Distrib Syst. doi:10.1109/TPDS.2015.2450718

[11] Lobeiras J, Amor M, Doallo R (2015) BPLG: a tuned butterfly processing library for GPU architectures. Int J Parallel Prog 43(6):1078–1102

[12] Nvidia Comp. (2013) Modern GPU library. https://github.com/NVlabs/moderngpu

[13] Nvidia Comp. (2014) CUDPP: CUDA data parallel primitives library. http://cudpp.github.io/

[14] Nvidia Comp. (2015) CUB library. http://nvlabs.github.io/cub/

[15] Satish N, Harris M, Garland M (2009) Designing efficient sorting algorithms for manycore GPUs. In: Proceedings of the 2009 IEEE international symposium on parallel and distributed processing, IPDPS ’09, pp 1–10

[16] Sengupta S, Harris M, Zhang Y, Owens JD (2007) Scan primitives for GPU computing. In: Proceedings of the 22nd ACM SIGGRAPH/EUROGRAPHICS symposium on graphics hardware, GH ’07, pp 97–106

[17] Sintorn E, Assarsson U (2008) Fast parallel GPU-sorting using a hybrid algorithm. J Parallel Distrib Comput 68(10):1381–1388

[18] Zagha M, Blelloch GE (1991) Radix sort for vector multiprocessors. In: Proceedings Supercomputing ’91, pp 712–721

Acknowledgments

This research has been supported by the Galician Government (Xunta de Galicia) under the Consolidation Program of Competitive Reference Groups, cofunded by FEDER funds of the EU (Ref. GRC2013/055); by the Ministry of Economy and Competitiveness of Spain and FEDER funds of the EU (Project TIN2013-42148-P) and by EU under the COST Program Action IC1305: Network for Sustainable Ultrascale Computing (NESUS).

Cite this article

Diéguez, A.P., Amor, M. & Doallo, R. BPLG–BMCS: GPU-sorting algorithm using a tuning skeleton library. J Supercomput 73, 4–16 (2017). https://doi.org/10.1007/s11227-015-1591-9

DOI: https://doi.org/10.1007/s11227-015-1591-9