Abstract

Today’s smartphones, equipped with a rich set of cheap but powerful embedded sensors, offer a variety of novel and efficient ways to collect data opportunistically, and enable numerous mobile crowdsourced sensing (MCS) applications. Incentive is one of the fundamental issues in MCS. By appropriately integrating three popular incentive methods (reverse auction, reputation, and gamification), this paper proposes QuaCentive, a quality-aware incentive framework for MCS that pertains to all components in MCS: it motivates the crowd to provide high-quality sensed contents, stimulates crowdsourcers to give truthful feedback about the quality of sensed contents, and makes the platform profitable. Specifically, first, we utilize the reverse auction and reputation mechanisms to incentivize the crowd to bid truthfully for sensing tasks and then provide high-quality sensed contents. Second, to encourage crowdsourcers to provide truthful feedback about the quality of sensed data, the verification of that feedback is itself crowdsourced in a gamified way. Finally, we theoretically show that QuaCentive satisfies the following properties: individual rationality, cost-truthfulness for the crowd, feedback-truthfulness for crowdsourcers, and platform profitability.

1 Introduction

Crowdsourcing [1] is a newly emerging service platform and business model on the Internet. In contrast to outsourcing, where jobs are performed by designated workers or companies, in crowdsourcing jobs are outsourced to an undefined, large, anonymous crowd of workers, the so-called human cloud, in the form of an open call. Those jobs can be decomposed into small tasks that are easy for individuals to solve. For instance, Amazon Mechanical Turk (www.mturk.com) provides on-demand access to task forces for micro-tasks such as image recognition and language translation.

Nowadays, the proliferation of smartphones provides a new opportunity for extending existing web-based crowdsourcing applications to a larger contributing crowd, making contribution easier and omnipresent. Smartphones are programmable and equipped with a set of cheap but powerful embedded sensors, such as accelerometer, digital compass, gyroscope, GPS, microphone, and camera, etc. These sensors can collectively monitor a diverse range of human activities and surrounding environment. Especially, when a large number of mobile device users are involved in the sensing procedure, many new applications are enabled by mobile crowdsourced sensing (MCS).

Mobile crowdsourced sensing applications can be broadly classified into two categories, personal and community sensing, based on the type of phenomena being monitored [2]. In personal sensing applications, the phenomena pertain to an individual, for instance the monitoring of an individual’s movement patterns (e.g., running, walking, exercising) for personal record-keeping or healthcare reasons. Community sensing, on the other hand, pertains to the monitoring of large-scale phenomena that cannot easily be measured by a single individual. For example, intelligent transportation systems may require traffic congestion monitoring and air pollution level monitoring. These phenomena can be measured accurately only when many individuals provide speed and air quality information from their daily commutes, which is then aggregated spatio-temporally to determine congestion and pollution levels in cities.

Community sensing is also popularly called participatory sensing or opportunistic sensing [3]. In participatory sensing, the participating user is directly involved in the sensing action, e.g., photographing certain locations or events. In opportunistic sensing, the user is not aware of active applications and is not involved in making decisions; instead, the smartphone itself makes decisions according to the sensed and stored data. In this paper, MCS is used to refer to this broad range of community-sensing paradigms.

There exist a great many MCS applications in numerous fields, especially in environmental monitoring and urban sensing (leveraging the sensors in mobile devices to collect data from the urban landscape and then make decisions accordingly). For example, a mobile crowdsourcing service for improving road safety in cities was presented in Aubry et al. [4]; it allows users to report traffic offences they witness in real time and to map them on a city plan. Regardless of how the various MCS applications are characterized, three common components are present: the crowdsourcers, who have sensing tasks to be outsourced to the mobile crowd; the mobile crowd, who complete sensing tasks and offer sensed contents to crowdsourcers; and the crowdsourcing platform (usually cloud-based), which facilitates the interactions between the mobile crowd and crowdsourcers, including publishing tasks, selecting the working crowd for crowdsourcers (e.g., through reverse auction), monitoring the crowd’s work, and auditing crowdsourcers’ feedback.

While participating in crowdsourced tasks, smartphone users consume their own resources, which incurs a heterogeneous variety of costs: data processing and transmission, charges by the mobile operator for the bandwidth needed to transmit data, battery energy for sensing, processing power, and the discomfort of manual effort to submit data. Furthermore, users expose themselves to potential security threats by sharing their sensed data with location tags. Therefore, a user is usually reluctant to contribute her smartphone’s local resources unless she receives a satisfying reward that compensates for her resource consumption and the potential security breach. Besides adequate user participation, sensing services also depend crucially on the quality of users’ sensed data.

There exists much work on participation incentives in MCS. Two system models are considered for economic-based incentive mechanisms in MCS [5]: the platform/crowdsourcer-centric model (focusing on maximizing the platform’s profit) and the user-centric model (focusing on eliciting the crowd’s truthful valuation of tasks). Based on online reverse auction, several online incentive mechanisms were designed in Zhang et al. [6], named threshold based auction (TBA), truthful online incentive mechanism (TOIM), and TOIM with non-zero arrival-departure (TOIM-AD). TBA is designed to pursue platform utility maximization, while TOIM and TOIM-AD achieve the crucial property of truthfulness.

However, those works have two weak points. First, they investigate the utilities of only some roles in MCS, i.e., the crowd’s utility and the platform’s profit; the behavior of the third component, the crowdsourcer, is not examined at all. Second, they deal only with the users’ participation incentive, ignoring how to incentivize the crowd to provide high-quality sensed contents.

Zhang and Schaar [7] proposed a novel class of incentive protocols for crowdsourcing applications based on social norms, integrating reputation mechanisms into the pricing schemes currently implemented on crowdsourcing websites (e.g., Yahoo! Answers, Amazon Mechanical Turk). In particular, they addressed the problem of “free-riding” by the crowd under ex-ante payment: if the crowdsourcer pays before the task starts, a worker always has the incentive to take the payment and provide no effort to solve the task. An incentive platform named TruCentive was presented by Hoh et al. [8]; it addressed the problems of low user participation rate and low data quality in mobile crowdsourced parking systems (in which smartphone users are relied on to collect real-time information about parking availability).

However, those works mainly relate to the crowd side (incentivizing users to participate and provide high-quality contents). We argue that quality-aware incentive mechanisms should pertain to all MCS components: crowd, crowdsourcer, and crowdsourcing platform. Specifically, it is the crowdsourcers that publish tasks to be outsourced to the crowd, so the quality of the contents provided by the crowd is naturally judged and reported by the crowdsourcer. Furthermore, the report on content quality significantly affects the reward that the crowd can obtain. Thus, there exists a social dilemma between the crowd and crowdsourcers: the crowd should be stimulated to provide sensed contents of higher quality to get more reward, which depends on the quality reports fed back by crowdsourcers; the crowdsourcers want to use the high-quality contents provided by the crowd but pay the crowd as little as possible, possibly by falsely reporting the quality of the sensed contents.

To address the above social dilemma, through appropriately integrating three popular incentive methods: reverse auction, reputation and gamification, this paper proposes a quality-aware incentive framework for MCS, QuaCentive, which can motivate crowd to provide high-quality sensed contents, stimulate crowdsourcers to give truthful feedback about quality of sensed contents, and make platform profitable.

The paper is organized as follows. Section 2 summarizes the existing incentive categories for MCS, including extrinsic (e.g., economic) incentives, intrinsic (e.g., entertainment or game based) incentives, and internalized extrinsic incentives (e.g., reputation-based social incentives), and discusses several key factors of quality control in mobile crowdsourcing. Section 3 presents the general quality-aware crowdsourced sensing framework, with emphasis on an incentive engine that spans all components in the framework. Section 4 describes the detailed operations in QuaCentive, and formally characterizes the properties of QuaCentive from the viewpoints of the three components: individual rationality, cost-truthfulness for the crowd, feedback-truthfulness for crowdsourcers, and platform profitability. Finally, we briefly conclude this paper.

2 Existing incentive categories and quality control methodology

An incentive is a kind of stimulus or encouragement to stimulate one to take action, work harder, etc. In mobile crowdsourcing, incentives could be classified into three categories. Probably, the most prominent incentive in today’s crowdsourcing market is the financial incentive. Some crowdsourcing markets, such as Amazon Mechanical Turk, provide payments (sometimes also referred to as micro payments) for the completion of an advertised task. Another type of incentives is entertainment based. The crowdsourcer may provide a form of enjoyment or fun in the crowdsourced activity or may design a game around the crowdsourced activity. The third form of incentives is the social incentive. Some participants may take part in crowdsourcing activities to gain reputation or public recognition.

2.1 Extrinsic (economic) incentives

Economic incentives are the real money or any other commodity that the users consider valuable. They are probably the most straightforward way to motivate participants. It has been reported that in most of the application domains the paid crowdsourcing can produce better completion rate and processing time than the free crowdsourcing [9].

As described above, two system models are considered for economic-based incentive mechanisms in mobile crowdsourcing: the platform/crowdsourcer-centric model and the user-centric model [5]. For the platform-centric model, an incentive mechanism using a Stackelberg game, was designed, where the platform is the leader while the users are the followers. The authors showed how to compute the unique Stackelberg equilibrium, at which the utility of the platform is maximized, and none of the users can improve its utility by unilaterally deviating from its current strategy. For the user-centric model, an auction-based incentive mechanism was designed, which is computationally efficient, individually rational, profitable, and truthful.

However, once money is involved, quality control becomes a major issue due to the anonymous and distributed nature of crowd workers. Although the quantity of work performed by participants can be increased, the quality cannot, since crowd workers may tend to cheat the system in order to increase their overall rate of pay. For example, it has been shown that monetary incentives do not affect the quality of work, but merely affect the number of times a worker is willing to do a task [10]. Another drawback of economic incentives is that they might destroy pre-existing intrinsic motivations in a process known as “crowding out” [11].

2.2 Intrinsic (entertainment or game based) Incentives

The idea of taking entertaining and engaging elements from computer games and using them to incentivize participation in other contexts is increasingly studied in a variety of fields. In education, the approach is known as “serious games”, and in human computing it is sometimes called “games with a purpose”. Most recently, digital marketing and social media practitioners have adopted this approach under the term “gamification”. The idea is to make a task entertaining, like an online game, thus making it possible to engage people to perform tasks conscientiously [12].

Ahn’s [13] work on games with a purpose has shown that making work into a game spurs people to complete even challenging tasks. A game prototype, Art Collector, was deployed for digital archives (archiving cultural heritage) on social networking platforms [14]. In this game, players compete for “art pieces” from the game’s photo collection. The gameplay is centered around two main activities: annotating image with keywords (“tags”) and guessing tags of other players. As a side effect of gameplay, user-generated metadata are gathered. In addition, validation of metadata takes place when two or more players agree on a tag for the same image.

In gamification, ideally, the valuable output data itself is actually generated as a by-product by playing game. However, the difficulty of designing such a game is also a well-known problem. In many situations the tasks can be too boring or complicated to turn into any game that is actually enjoyable or fun to play.

Other intrinsic incentive factors can include mental satisfaction gained from performing a crowdsourced activity, self-esteem, personal skill development, knowledge sharing through crowdsourcing (e.g., Wikipedia), and love of the community in which a crowdsourced task is being performed (e.g., open-source communities), etc. Like gamification, there is no consensus on how to quantitatively measure the effect of those elements on MCS.

2.3 Internalized extrinsic incentives (e.g., reputation based): social incentives

Social psychological factors are another widely harnessed non-monetary incentive mechanism for promoting contributions to online systems. For example, the social facilitation effect refers to the tendency of people to perform better on simple tasks while being watched by someone else than while they are alone or working alongside other people. In contrast, the social loafing effect is the phenomenon of people making less effort to achieve a goal when they work in a group than when they work alone, since they feel their contributions do not count or are not evaluated or valued. Antin [15] suggested ways to take advantage of positive social facilitation and avoid negative social loafing in online data collection systems: individuals’ efforts should be prominently displayed, individuals should know that their work can easily be evaluated by others, and the unique value of each individual’s contribution should be displayed.

Specifically, an anonymity preserving reputation scheme was proposed in Christi et al. [16], which utilizes blind signature to provide a secure transfer of reputation scores between pseudonyms. Wang et al. [17] also addressed the problem of trust without identity by proposing a framework to compute the trustworthiness of sensing reports based on anonymous user reputation levels.

Although social psychological incentives like historical reminders of past behaviour or ranking of contributions can significantly increase repeated contributions, the required identity management and reputation measurement are extremely challenging, especially in dynamic MCS environment.

2.4 Key factors of quality control in mobile crowdsourcing

The overall outcome quality in crowdsourcing systems can be characterized along two main dimensions: crowd profiles (e.g., reputation and expertise) and task design (including task definition, user interface, granularity, and incentives and compensation policy) [18]. Specifically, the trust relationship between a crowdsourcer and a particular participant (mediated by the crowdsourcing platform) reflects the probability with which the crowdsourcer expects to receive a quality contribution from the participant. In a large MCS community, because members might have no experience of or direct interactions with other members, they can rely on reputation to indicate the community-wide judgment of a given participant’s capabilities and attitude. Reputation scores are mainly built on community members’ feedback about participants’ activities in the system. Sometimes this feedback is explicit: community members explicitly cast feedback on a worker’s quality or contributions by rating or ranking the content the worker has created. In other cases feedback is cast implicitly, as in Wikipedia, when subsequent editors preserve the changes a particular worker has made.

3 Architectures of quality-aware MCS with built-in incentive

Generally, to crowdsource a task, its owner, the crowdsourcer, submits the task to a crowdsourcing platform. Members of the crowd who can accomplish the task, called participants, can choose to work on it and devise solutions. Participants then submit these contributions to the crowdsourcer via the crowdsourcing platform. The crowdsourcer assesses the quality of the posted contributions and might reward those participants whose contributions have been accepted. This reward can be monetary, material, psychological, and so on. There exist four main pillars in any MCS system: the crowdsourcer, the crowd, the crowdsourced task, and the crowdsourcing platform [19, 20].

- The crowdsourcer is the entity (a person, a for-profit organization, a non-profit organization, etc.) who seeks the power and wisdom for solving some tasks.

- The crowdsourcing task is the problem (e.g., noise pollution in a specific urban area, etc.), which is published to a mobile crowd through an open call for solutions.

- The crowd consists of the people who use their smartphones to contribute to the problem’s solution by generating, processing, or sensing data of interest.

- The crowdsourcing platform is the system (software or non-software) within which a crowdsourcing task is performed.

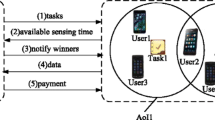

Inspired by [21], we present a detailed quality-aware mobile crowdsourced sensing framework composed of seven modules (as shown in Fig. 1).

- Task management module characterizes the sensing specifications and use cases, including the data format for heterogeneous types of sensors, the types of participants, the required sampling rate for each type of sensor, the requirements of data visualization and representation, etc.

- Mobile crowdsourced sensing frontend module provides the crowd (participants) with a cross-platform user interface for reporting crowdsourced data. It incorporates the concept of “human-as-a-sensor” into the sensing scheme, where a participant can submit his/her in-situ observations and opinions. A user can also choose whether to expose his/her location information, which helps protect user privacy.

- Crowdsourcer module has the following responsibilities: publishing the appropriate tasks to the platform by interacting with the task management module; providing quality feedback about the sensed contents offered by the crowd; and paying the crowdsourcing platform and/or the crowd for using the service.

- Incentive engine has two general purposes: stimulating the crowd to actively participate and contribute high-quality data, and encouraging crowdsourcers to provide truthful feedback about the quality of crowdsourced data. It may reward or punish the crowd and crowdsourcers through monetary, ethical, entertainment, or priority-based means, according to their behaviors.

- Identity and reputation management, together with the incentive engine, manages the identities of participants and crowdsourcers and builds reputations for them based on their past behaviors (possibly in a crowdsourced way itself), so as to enhance the quality of the sensed data provided by the crowd and the trustworthiness of the feedback given by crowdsourcers.

- Knowledge discovery module. In a mobile crowdsourcing system, the submitted data may be unstructured, noisy, and falsified. This module therefore provides intelligent data processing capability to extract and reconstruct useful information from the raw sensing data submitted by participants. Furthermore, the quality of crowdsourced data can also be evaluated and fed back to the incentive engine (module 4) and identity and reputation management (module 5) to optimize the reward/punishment given to the crowd and crowdsourcers.

- External datasets. As some government authorities or agencies have provided open data malls, this module allows sensing data from external datasets to be incorporated into the system to enrich its functionalities and services.

It should be explicitly pointed out that, unlike existing work [21], in which the incentive engine focuses only on the crowd’s side (stimulating users’ participation and the provision of high-quality contents), in our proposed MCS architecture the incentive engine runs through the whole architecture, pertaining to three MCS components: crowd, crowdsourcer, and crowdsourcing platform. The underlying design rationale is natural. It is the crowdsourcer that publishes tasks to be outsourced to the crowd, so the quality of the contents provided by the crowd is naturally decided and reported by the crowdsourcer. Furthermore, the report on content quality significantly affects the reward that the crowd can obtain. Thus, there exists an intrinsic social dilemma between the crowd and crowdsourcers: the crowd should be stimulated to provide sensed contents of higher quality to get more reward, which depends on the quality feedback given by the crowdsourcer; the crowdsourcers want to use the high-quality contents provided by the crowd but pay the crowd as little as possible, possibly by falsely reporting the quality of the sensed contents.

Our proposed quality-aware incentive QuaCentive for MCS has the following characteristics:

- Incentivizing the crowd to bid truthfully for sensing tasks and provide high-quality sensed contents. In QuaCentive, each participant is assigned a reputation ranking based on her past behaviors in MCS. Participants are selected according to the combination of their reputation rankings and their bids in a reverse auction, so that not only is truthful bidding achieved, but a differential reputation-based reward scheme can also be applied by the crowdsourcing platform: a participant with a higher reputation is given a larger chance to participate in tasks and receives more payment upon the completion of a task.

- Encouraging crowdsourcers to provide truthful quality feedback. If a crowdsourcer gives a bad rating to sensed contents offered by the crowd, then the task of cross-checking the rating is crowdsourced to the crowd as a game. Specifically, in this game, a group of randomly selected players are shown the task, the sensed data, and the crowdsourcer’s rating, and are asked to verify whether the quality rating truthfully reflects the relationship between the task and the sensed data. The players in the verification game are awarded small points based on the number of other players who answer in agreement. The verification game harnesses collective human intelligence to produce valuable output as a side effect of enjoyable gameplay. It has been shown that cross-checking quality with other players tends to produce more predictable and less unique results [25].

4 Operation and theoretical analysis of QuaCentive

4.1 Detailed operation of QuaCentive scheme

The whole process of QuaCentive scheme is described in Fig. 2, which explicitly deals with two scenarios: good rating and bad rating on service (sensed data) reported by crowdsourcer. It mainly consists of 9 steps:

1. A crowdsourcer “buys” a sensing service from the crowdsourcing platform by depositing \(N\) points in the platform. It may give a short description of the task, explaining its nature, time limitations, and so on. Note that the payment from the crowdsourcer will be refunded if and only if the crowdsourcer reports that the service failed (a bad rating on the sensed data) AND the report (bad rating) is successfully verified by the platform through gamification. The deposit itself is only for fairness and voucher purposes;

2. The crowdsourcing platform distributes the task details to the crowd to recruit participants, and receives the bids of the participants;

3. The platform selects participants based on their reputation rankings and bids, and then assigns the task to the selected participants (contributors);

4. The contributors submit messages (sensed contents) to the platform and get a static reward \(D\);

5. The platform combines external datasets and/or knowledge discovery modules with the sensed data provided by participants, and then provides the result to the crowdsourcer;

6. The crowdsourcer receives this result and sends back a report on whether the sensed contents led to a successful service;

7. If the crowdsourcer reports that the service is successful, then contributor \(i\) gets a bonus \(p_i \);

8. Otherwise, the verification of the crowdsourcer’s bad rating (report of unsuccessful service) is crowdsourced to the crowd in a gamified way. If the result of the majority vote is positive (i.e., the crowdsourcer’s bad rating is the ground truth), then the crowdsourcer receives a refund \(R\); otherwise, no refund is given;

9. The platform updates the reputation rankings of both contributors and crowdsourcers.
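The point flows in steps 4, 7 and 8 can be summarized in a minimal Python sketch. The names `Settlement` and `settle` are hypothetical and are not part of QuaCentive; the sketch only encodes what the steps state (static reward always, bonus on a good rating, refund only when a bad rating is upheld by the verification game).

```python
from dataclasses import dataclass


@dataclass
class Settlement:
    contributor_points: float   # static reward D plus any bonus p_i
    crowdsourcer_refund: float  # R if a bad rating is upheld, else 0


def settle(D: float, p_i: float, R: float,
           rated_good: bool, bad_rating_upheld: bool = False) -> Settlement:
    """Hypothetical helper that mirrors steps 4, 7 and 8 for one contributor."""
    points = D            # step 4: static reward on submission
    refund = 0.0
    if rated_good:
        points += p_i     # step 7: bonus after a good rating
    elif bad_rating_upheld:
        refund = R        # step 8: refund only if the bad rating is verified
    return Settlement(points, refund)


good = settle(D=1.0, p_i=8.0, R=5.0, rated_good=True)
upheld = settle(D=1.0, p_i=8.0, R=5.0, rated_good=False, bad_rating_upheld=True)
rejected = settle(D=1.0, p_i=8.0, R=5.0, rated_good=False, bad_rating_upheld=False)
print(good.contributor_points, upheld.crowdsourcer_refund, rejected.crowdsourcer_refund)
```

In the last case the crowdsourcer gave a bad rating that the game did not confirm, so neither a bonus nor a refund is paid.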

Here, we described some key steps in more detail.

Selecting participants based on their reputation ranking and bids (step 3)

The concept of reputation ranking is used to represent the trustworthiness (quality) of a participant. The smaller the value of a user’s reputation ranking, the higher her trustworthiness. By analogy, a student ranked No. 1 in a school is considered the best student in that school. Representing a participant’s quality in this way lets us choose the participants with minimum valuation. Of course, the reputation rankings of participants and crowdsourcers should be computed according to their past behaviors and their current performance when the service is completed.

Formally, mobile users who want to take part in a task present their bids (which are private information) in a sealed-bid manner, and the winning participants are chosen through a reverse auction based on the bid multiplied by the reputation ranking. We formulate the process and properties of the selection method in the following subsection.

Rewarding contributors (step 7)

To incentivize users to share their sensed contents, QuaCentive pays a contributor \(i\) two types of rewards: \(D\) points of static reward and a bonus of \(p_i \) points that is dynamically determined. The static reward is granted immediately after the sensed data is accepted by QuaCentive. This incentive is provided irrespective of whether the crowdsourcer later gives the message a good or a bad rating. Such a participation incentive helps ensure a steady stream of available participants, which makes the crowdsourcing platform attractive to crowdsourcers. The constant reward \(D\) is expected to be a small amount. The bonus reward \(p_i \) is granted right after the service is reported as successful. The bonus can be significantly larger than the constant reward \(D\), since the sensed message may lead to a successful service needed by crowdsourcers. Furthermore, QuaCentive collects statistics on the successful service rate, infers each participant’s reputation ranking, and increases the bonus accordingly to encourage contributors to offer high-quality data.

Verifying crowdsourcers’ bad ratings on the quality of the sensed contents (step 8)

As described above, if the crowdsourcer gives positive confirmation of a sensing service, the contributor receives a bonus of \(p_i \). However, if the crowdsourcer reports that the quality of the contents offered by the crowd is bad, the crowdsourcer has a chance to obtain a refund \(R\). Thus, without verification, the crowdsourcer has a strong incentive to obtain the refund by lying about the outcome of the task, so-called “false-reporting”. To determine whether the failed service results from genuinely useless contributed data or from the crowdsourcer’s malicious attempt to pay less to the platform, the QuaCentive framework distributes the original task, its associated sensed contents, and the crowdsourcer’s feedback through the crowdsourcing platform, and recruits the crowd to vote, in a gamified way, on whether the task succeeded or failed. If the result of the majority vote is positive (i.e., the rating is truthful), the crowdsourcer receives the refund \(R\); otherwise, no refund is given.
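The majority vote in the verification game can be sketched as follows. This is an illustration only: the function names are hypothetical, ties are assumed to go against the refund, and the scoring rule of one point per agreeing player is an assumption (the text only says that points depend on the number of other players who answer in agreement).

```python
from collections import Counter


def majority_upholds_bad_rating(votes: list[bool]) -> bool:
    """True when a strict majority of players judge the bad rating truthful."""
    return sum(votes) * 2 > len(votes)


def player_points(votes: list[bool]) -> list[int]:
    """Assumed scoring: one point for every other player with the same answer."""
    counts = Counter(votes)
    return [counts[v] - 1 for v in votes]


# True means "the crowdsourcer's bad rating is truthful".
votes = [True, True, False, True, False]
print(majority_upholds_bad_rating(votes))  # True, so the refund R is granted
print(player_points(votes))                # [2, 2, 1, 2, 1]
```

Rewarding agreement rather than a particular answer keeps the game independent of the crowdsourcer's own interest in the outcome.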

Updating reputation rankings of participants and crowdsourcers

In the QuaCentive system, after each service and verification process is completed, the platform rates each participant and crowdsourcer with a neutral, negative, or positive feedback. As a simple example, it could assign 0 points for each neutral feedback, \(-\)1 point for each negative feedback, and 1 point for each positive feedback, and the reputation of a participant could be computed as the sum of her points over a certain period. Users with high reputation are more likely to be selected as winning participants to take on the task, and obtain more payment than participants with low reputation. For crowdsourcers, a lower reputation leads to a higher cost when buying the crowdsourced service from the platform.
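A minimal sketch of this simple scoring example is given below. The conversion from summed points to a reputation ranking (position 1 for the highest score, ties broken by input order) is an assumption made for illustration; the text does not specify how rankings are derived from scores.

```python
def reputation_scores(feedback: dict[str, list[int]]) -> dict[str, int]:
    """Sum the +1 / 0 / -1 feedback points collected over the chosen period."""
    return {user: sum(points) for user, points in feedback.items()}


def reputation_rankings(scores: dict[str, int]) -> dict[str, int]:
    """Assumed mapping: the highest score gets ranking 1 (most trusted)."""
    ordered = sorted(scores, key=lambda user: -scores[user])  # stable on ties
    return {user: position for position, user in enumerate(ordered, start=1)}


feedback = {
    "alice": [1, 1, 0],    # two positive, one neutral
    "bob": [1, -1, 0],     # one positive, one negative, one neutral
    "carol": [-1, -1, 1],  # two negative, one positive
}
scores = reputation_scores(feedback)
print(scores)                       # {'alice': 2, 'bob': 0, 'carol': -1}
print(reputation_rankings(scores))  # {'alice': 1, 'bob': 2, 'carol': 3}
```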

4.2 Theoretical analysis of QuaCentive

In this subsection, we theoretically analyse the properties of QuaCentive from the three players’ viewpoints in MCS: crowd, crowdsourcing platform, crowdsourcer. Table 1 shows the notations and their meanings used in our analysis.

From crowd side: individual rationality, incentive of providing high-quality sensed contents, and truthful bid

Ideally, the users who provide high-quality data and require low payment should be selected as participants to complete sensing tasks. Here we integrate reverse auction and reputation mechanisms to select appropriate participants. Specifically, assume there are \(n\) users in the auction, and user \(i\) takes part in the reverse auction by placing its bid in a sealed-bid manner. The valuation of participant \(i\) in the auction, \(\nu _i \), is the bid multiplied by the reputation ranking, as defined in Eq. (1):

\[\nu _i = b_i \cdot c_i \qquad (1)\]

where \(b_i \) and \(c_i \) respectively represent the bid price and the reputation ranking of participant \(i\). Note that the smaller the value of \(c_i \), the higher the trustworthiness of the participant. Representing a participant’s trustworthiness in this way lets us choose the participants with minimum valuation.

All users are sorted in ascending order of the valuation defined above. Then, going down the sorted list from the top, the required number of bidders with the minimum valuations are selected as the winners to complete the task. A payment is then given to each winner, which is an important step in QuaCentive for compensating each participant’s cost and attracting more users.
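The selection rule can be sketched in a few lines of Python, taking the valuation as the bid multiplied by the reputation ranking. The `Bid` type, the function names, and the sample numbers are hypothetical; only the sorting rule comes from the text.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bid:
    user: str
    price: float  # b_i, the sealed bid
    rank: int     # c_i, the reputation ranking (1 is the most trusted)


def valuation(bid: Bid) -> float:
    """Valuation of a bidder: bid price times reputation ranking (smaller is better)."""
    return bid.price * bid.rank


def select_winners(bids: list[Bid], k: int) -> list[Bid]:
    """Sort by ascending valuation and keep the k bidders with the lowest values."""
    return sorted(bids, key=valuation)[:k]


bids = [
    Bid("alice", price=4.0, rank=1),  # valuation 4.0
    Bid("bob", price=3.0, rank=2),    # valuation 6.0
    Bid("carol", price=1.0, rank=9),  # valuation 9.0
    Bid("dave", price=5.0, rank=3),   # valuation 15.0
]
print([w.user for w in select_winners(bids, k=2)])  # ['alice', 'bob']
```

Note that carol places the lowest bid but is not selected, because her poor reputation ranking inflates her valuation.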

Assume that user (\(i-1)\) and \(i\) are the two consecutive participants in the rank list, and user \(i\) is above \((i-1)\), the final payment \(p_i \) to participant \(i\) can be intentionally inferred through Eq. (2).

Theorem 1

The payment scheme in QuaCentive incentivizes users to actively participate in crowdsourced tasks (i.e., individual rationality), to provide high-quality sensed contents, and to bid truthfully.

A sketch of the proof follows.

where the last inequality follows from the ranking rule of QuaCentive.

Obviously, \(p_i \ge b_i \). This implies that the total payment to winning participant \(i\), \((D+p_i )\), is strictly larger than the bid price \(b_i \). Thus QuaCentive satisfies individual rationality; that is, users will actively participate in crowdsourced tasks.

Furthermore, Eq. (3) illustrates that the smaller the value of \(c_i \), the higher the payment participant \(i\) obtains. Therefore, winning participant \(i\) has an incentive to provide high-quality sensing service and build a higher reputation (i.e., to be ranked near the front), so that she can earn a larger payment.

Intuitively, an auction is truthful if the payment offered to a bidder is independent of that bidder’s bid value. Formally, let \(\overrightarrow{b}_{-i}\) denote the sequence of all users’ bids except that of user \(i\), i.e., \(\overrightarrow{b}_{-i}=(b_1,\ldots ,b_{i-1},b_{i+1},\ldots ,b_n)\). Then a deterministic bid-independent auction can be defined as follows:

- The auction constructs a payment (price) schedule \(p(\overrightarrow{b}_{-i})\);

- If \(p(\overrightarrow{b}_{-i})\ge b_i\), player \(i\) wins at price \(p_i =p(\overrightarrow{b}_{-i})\); otherwise player \(i\) is rejected and \(p_i =0\).

According to the theorem of Goldberg et al. [22], a deterministic auction is truthful if and only if it is equivalent to a deterministic bid-independent auction. The payment mechanism in QuaCentive is a deterministic bid-independent auction; thus, it incentivizes users to bid truthfully.
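The definition above can be exercised with a small sketch. It implements the generic bid-independent rule, not QuaCentive's own payment formula; the schedule used here (pay the lowest competing bid) and the names `bid_independent_auction` and `utility` are hypothetical choices for illustration.

```python
def bid_independent_auction(bids, schedule):
    """Run a deterministic bid-independent auction.

    `schedule(others)` maps the bids of all other players to a price
    p(b_{-i}). Player i wins at that price if p(b_{-i}) >= b_i and is
    otherwise rejected with payment 0.
    """
    payments = []
    for i, bid in enumerate(bids):
        others = bids[:i] + bids[i + 1:]
        price = schedule(others)
        payments.append(price if price >= bid else 0.0)
    return payments


def utility(cost, bid, others, schedule):
    """Payoff of a player with true cost `cost` who submits `bid`."""
    price = schedule(others)
    return price - cost if price >= bid else 0.0


# Hypothetical schedule for illustration: pay the lowest competing bid.
schedule = min
others = [5.0, 7.0, 9.0]
true_cost = 4.0
truthful = utility(true_cost, true_cost, others, schedule)

# No misreport, above or below the true cost, beats bidding truthfully.
for deviation in [1.0, 3.0, 4.5, 6.0, 10.0]:
    assert utility(true_cost, deviation, others, schedule) <= truthful
```

Because the offered price never depends on the player's own bid, misreporting can only change whether the offer is accepted, never how much is paid, which is why truthful bidding is a dominant strategy in this class of auctions.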

From crowdsourcing platform’s side: condition of being profitable

Under what conditions is QuaCentive a profitable business enterprise? We analyze this question to narrow down the range of values for the average bonus \(X\) paid to participants.

We consider a single task in which \(n\) contributors submit messages, the probability that a crowdsourcer reports a successful service is \(p\), and the probability that the verification game infers the ground truth is \(q\). For each task, the cost to the service platform is as follows:

1. The reward to the contributors is \(n\cdot ( {D+p\cdot X})+n\cdot ( {1-p})\cdot q\cdot X\);

2. The refund to the crowdsourcer is \(( {1-p})\cdot (1-q)\cdot R\), where \(( {1-p})\cdot ( {1-q})\) is the probability that the unsuccessful service reported by a crowdsourcer is confirmed by the verification game;

3. When an unsuccessful service is reported, the cost of recruiting \(m\) participants to vote, \(m\cdot (1-p)\cdot D\), is added. Here we simply take the total payment to all voters to be \(m\cdot D\): since the game is easy to play and players join it mainly for fun, a small number of points \(D\) per participant should be sufficient to incentivize users to play the verification game.
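The three cost terms listed above can be added up directly. The sketch below uses the symbols from the text; the function name `expected_platform_cost` and the numeric values in the example are illustrative assumptions only.

```python
def expected_platform_cost(n, m, p, q, D, X, R):
    """Expected per-task cost of the platform, term by term.

    n: number of contributors
    m: number of voters recruited for the verification game
    p: probability that the crowdsourcer reports a successful service
    q: probability that the verification game infers the ground truth
    D: participation points, X: average bonus, R: refund
    """
    reward = n * (D + p * X) + n * (1 - p) * q * X  # item 1
    refund = (1 - p) * (1 - q) * R                  # item 2
    voting = m * (1 - p) * D                        # item 3
    return reward + refund + voting


# Illustrative values only; they are not taken from the paper.
cost = expected_platform_cost(n=10, m=5, p=0.8, q=0.9, D=1.0, X=2.0, R=20.0)
print(round(cost, 6))
```

Writing the terms separately makes it easy to see that the bonus \(X\) enters only through the reward term, which is what allows the profitability condition to be turned into a bound on \(X\).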

An MCS platform typically has multiple sources of revenue, such as targeted advertisements, so it need not rely solely on income from the hosted MCS service. Assume that the platform has a per-service revenue of \(C\) from these alternate sources. Our goal is to ensure that the platform is profitable. In other words, we have the following constraint:

That is:

To ensure that QuaCentive is a profitable business enterprise, the average bonus to each participant should not be greater than the right-hand side of Eq. (6). So, once a crowdsourcer buys a service with \(N\) points, the platform immediately computes the possible average bonus to all participants. If a participant’s bid \(b_i \) is greater than the average bonus, she is excluded from the candidates.
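As a rough sketch of this filtering step, the code below solves a break-even condition for \(X\) and then drops bids above it. It rests on a loudly stated assumption: that the per-task revenue is \(N + C\) (the crowdsourcer's payment plus the alternate revenue) and that profitability means this revenue covers the three expected cost terms listed earlier. The exact form of Eq. (6) may differ, and the names `max_average_bonus` and `eligible` are hypothetical.

```python
def max_average_bonus(N, C, n, m, p, q, D, R):
    """Largest average bonus X keeping expected profit non-negative.

    ASSUMPTION: per-task revenue is N + C, and the constraint is
    N + C >= n*(D + p*X) + n*(1-p)*q*X + (1-p)*(1-q)*R + m*(1-p)*D.
    """
    fixed_cost = n * D + (1 - p) * (1 - q) * R + m * (1 - p) * D
    bonus_weight = n * (p + (1 - p) * q)  # expected number of bonuses paid
    return (N + C - fixed_cost) / bonus_weight


# Illustrative values only; they are not taken from the paper.
x_max = max_average_bonus(N=40.0, C=5.0, n=10, m=5, p=0.8, q=0.9, D=1.0, R=20.0)

# Bids above the admissible average bonus are excluded from the candidates.
bids = {"u1": 2.5, "u2": 3.4, "u3": 4.0}
eligible = [user for user, bid in bids.items() if bid <= x_max]
print(round(x_max, 4), eligible)
```

The bound shrinks as the refund \(R\) or the number of voters \(m\) grows, so a platform with little alternate revenue \(C\) has to be stricter about which bids it admits.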

From crowdsourcer’s side: truthful feedback about quality of sensed contents

All players in an MCS system are rational, and they may give dishonest feedback out of self-interest. Crowdsourcers may use MCS to complete their tasks but then report that they experienced a failed service, so as to receive a refund from the platform. Furthermore, a crowdsourcer’s dishonesty has the negative consequence that contributors who provided high-quality sensed contents are not rewarded with a bonus, which can discourage contributors’ participation.

We now present a game-theoretical analysis of how QuaCentive encourages a crowdsourcer to give a truthful rating of the crowd’s work. With such a design, rational crowdsourcers would choose to be honest in our system.

The key idea behind our approach lies in the verification game and the effect of reputation on the crowdsourcer’s choice of behavior. In the simplest form, the platform rates each crowdsourcer with neutral (0), negative (\(-\)1), or positive (\(+1\)) feedback for each quality feedback. The reputation of the crowdsourcer is computed as the sum of its points over a certain period. To simplify the analysis, let \(F(c_j -1)\) denote the expected earning of crowdsourcer \(j\) with reputation ranking \(( {c_j -1})\). Note that in QuaCentive, the smaller the reputation ranking, the larger the earning a player can obtain; thus, \(F(c_j -1)\ge F(c_j +1)\).

Table 2 shows, for the case in which a crowdsourcer reports an unsuccessful service (a bad rating on the sensed contents), the gain for the crowdsourcer if (s)he tells the truth vs. lies, and when the verification game is successful (infers the ground truth) vs. unsuccessful (that is, the crowdsourcer’s lie is not detected by the verification game).

Specifically, when crowdsourcer \(j\) truthfully reports that the quality of the sensed data is low, and the verification game successfully confirms the ground truth (with probability \(q\)), the utility of crowdsourcer \(j\) is \(R+F(c_j -1)\), where \(R\) is the refund; if, unfortunately, the verification game draws the wrong conclusion (that is, the crowdsourcer reports the ground truth, but the verification game determines that the crowdsourcer lied), the utility is \(-R+F(c_j +1)\). The other terms in Table 2 can be explained similarly.

To ensure that crowdsourcers maximize their gain by being honest, we need the following constraint to hold:

If \(q\ge 2/3\), then inequality (8) always holds. This implies that if the probability that the verification game successfully infers the ground truth (when crowdsourcers report unsuccessful service) is at least 2/3, then the best choice for a crowdsourcer is to truthfully report the quality of the sensed content.
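The two truthful-report outcomes just described can be combined into an expected utility. The sketch covers only the truthful row, since those are the two payoffs spelled out in the text; the function name and the numeric values are illustrative assumptions, and `F_up` and `F_down` stand for \(F(c_j-1)\) and \(F(c_j+1)\).

```python
def truthful_report_utility(q, R, F_up, F_down):
    """Expected utility of truthfully reporting a failed service.

    q: probability that the verification game confirms the ground truth
    R: refund
    F_up: F(c_j - 1), expected earning after the ranking improves
    F_down: F(c_j + 1), expected earning after the ranking worsens
    """
    confirmed = R + F_up        # game agrees with the truthful report
    overturned = -R + F_down    # game wrongly concludes that the report is a lie
    return q * confirmed + (1 - q) * overturned


# Illustrative values only, chosen so that F_up >= F_down.
for q in (0.5, 2 / 3, 0.9):
    print(round(q, 3), round(truthful_report_utility(q, R=20.0, F_up=12.0, F_down=8.0), 3))
```

Since \(F(c_j-1)\ge F(c_j+1)\) and \(R>0\), this expected utility increases with \(q\): a more accurate verification game makes honest reporting more rewarding.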

Strategies to deal with whitewashing users

Note that reputation rankings are tightly associated with the identities of participants and crowdsourcers. Whitewashing means that when a player (participant or crowdsourcer) has a bad reputation in the MCS system, she leaves the system and returns with a new identity as a newcomer, so as to avoid the disincentives. Since it is hard to distinguish a legitimate newcomer from a whitewasher, whitewashing is a serious problem in the QuaCentive framework. Although the problem can be solved using permanent identities, doing so may take away users’ right to anonymity. Generally, there are two approaches to alleviating whitewashing in the QuaCentive framework.

- First, the initial reputation for newcomers (including potential whitewashers) can be adaptively adjusted according to the level of whitewashing in the current system [23]. That is, if the whitewashing level is low, the initial value is kept high, whereas if the whitewashing level increases, the initial value is decreased adaptively.

- Second, whitewashing can be countered by imposing a penalty on all newcomers, both legitimate newcomers and whitewashers; this penalty is the so-called social cost [24].
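The two strategies above can be sketched as rules for a newcomer's starting reputation. Everything concrete here is a hypothetical choice for illustration: the function names, the \([0, 1]\) scale for the whitewashing level, the linear interpolation, and the default point values are not taken from [23] or [24].

```python
def adaptive_initial_reputation(whitewashing_level, high=0.0, low=-5.0):
    """Initial reputation for a newcomer, decreasing in the whitewashing level.

    `whitewashing_level` is an estimate in [0, 1]; the linear interpolation
    between `high` and `low` is a hypothetical choice.
    """
    level = min(max(whitewashing_level, 0.0), 1.0)  # clamp to [0, 1]
    return high + (low - high) * level


def penalized_initial_reputation(default=0.0, penalty=2.0):
    """Fixed social cost: every newcomer starts `penalty` points below `default`."""
    return default - penalty


print(adaptive_initial_reputation(0.0))   # little whitewashing: start high
print(adaptive_initial_reputation(0.5))   # moderate whitewashing: start lower
print(penalized_initial_reputation())     # same penalty for every newcomer
```

The adaptive rule spares legitimate newcomers when whitewashing is rare, while the fixed penalty is simpler but charges every newcomer the same social cost regardless of how common whitewashing is.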

5 Conclusion

A mobile crowdsourced sensing system usually involves the crowd, the crowdsourcer, and the crowdsourcing platform. To crowdsource a task, its owner, the crowdsourcer, submits the task to a crowdsourcing platform. Members of the crowd who can accomplish the task, called participants, then submit their contributions to the crowdsourcer via the crowdsourcing platform. The crowdsourcer assesses the quality of the posted contributions and might reward those participants whose contributions have been accepted. There exists an intrinsic social dilemma between the crowd and crowdsourcers: the crowd should be stimulated to provide high-quality sensed contents in order to get a large reward, which depends on the quality feedback given by the crowdsourcer; the crowdsourcers want to utilize high-quality contents provided by the crowd but pay the crowd as little as possible, possibly by falsely reporting the quality of the sensed contents.

To address the above social dilemma, this paper proposes a quality-aware incentive framework, QuaCentive, which satisfies the following properties: individual rationality, cost-truthfulness for the crowd, feedback-truthfulness for crowdsourcers, and platform profitability. First, we utilize the reverse auction and reputation mechanisms to incentivize the crowd to bid truthfully for sensing tasks and then provide high-quality sensed content. In particular, when selecting the winning participants in the reverse auction, QuaCentive takes into account both the participants’ bids and their reputation rankings, and gives participants with higher reputation rankings a higher chance to win the auction and receive payment upon the completion of a task. Second, to encourage crowdsourcers to provide truthful feedback about the quality of sensed data, the verification of that feedback is crowdsourced in a gamified way in QuaCentive. This verification game harnesses collective human intelligence to produce valuable output as a side effect of enjoyable gameplay. To the best of the authors’ knowledge, this is among the first works to integrate the three main incentive methodologies, reverse auction, reputation, and gamification, in a rigorous manner to design an incentive framework for MCS systems.

References

Howe J (2006) The rise of crowdsourcing. Wired Magazine

Ganti RK, Ye F, Lei H (2011) Mobile crowdsensing: current state and future challenges. IEEE Commun Mag 49(11):32–39

Khan WZ, Xiang Y, Aalsalem MY, Arshad Q (2013) Mobile phone sensing systems: a survey. IEEE Commun Surv Tutor 15(1)

Aubry E, Silverston T, Lahmadi A, Festor O (2014) CrowdOut: a mobile crowdsourcing service for road safety in digital cities. In: Proc. of the IEEE international conference on pervasive computing and communications workshops (PERCOM Workshops)

Yang D et al (2012) Crowdsourcing to smartphones: incentive mechanism design for mobile phone sensing. In: Proc. of ACM Mobicom, vol 2012. pp 173–184

Zhang X, Yang Z, Zhou Z, Cai H, Chen L, Li X (2014) Free market of crowdsourcing: incentive mechanism design for mobile sensing. IEEE Trans Parallel Distrib Syst 25(12):3190–3200

Yu Z, van der Schaar M (2012) Reputation-based incentive protocols in crowdsourcing applications. In: Proc. of IEEE INFOCOM

Hoh B et al (2012) TruCentive: a game-theoretic incentive platform for trustworthy mobile crowdsourcing parking services. In: Proc. of the 15th International IEEE conference on intelligent transportation systems (ITSC)

Frei B (2009) Paid crowdsourcing: current state & progress toward mainstream business use. In: Whitepaper produced by Smartsheet.com

Mason W, Watts DJ (2010) Financial incentives and the “performance of crowds”. SIGKDD Explor Newsl 11:100–108

Yefeng L, Lehdonvirta V, Alexandrova T, Liu M, Nakajima T (2011) Engaging social medias: case mobile crowdsourcing. In: Proc. of SoME

Yefeng L, Alexandrova T, Nakajima T (2011) Gamifying intelligent environments. In: Proc. of UbiMUI

von Ahn L, Dabbish L (2008) Designing games with a purpose. Commun ACM 51:58–67

Paraschakis D, Friberger MG (2014) Playful crowdsourcing of archival metadata through social networks. In: Proc. of the ASE BIGDATA/SOCIALCOM/CYBERSECURITY conference

Antin J (2008) Designing social psychological incentives for online collective action. In: Directions and implications of advanced computing; conference on online deliberation (DIAC)

Christin D et al (2012) IncogniSense: an anonymity preserving reputation framework for participatory sensing applications. In: Proc. of IEEE conference on pervasive computing and communications (PerCom)

Wang X et al (2013) ARTSense: anonymous reputation and trust in participatory sensing. In: Proc. of the IEEE INFOCOM

Allahbakhsh M et al (2013) Quality control in crowdsourcing systems. IEEE Internet Comput

Chatzimilioudis G, Konstantinidis A, Laoudias C, Zeinalipour-Yazti D (2012) Crowdsourcing with smartphones. IEEE Internet Comput 16(5):36–44

Hosseini M, Phalp K, Taylor J, Ali R (2014) The four pillars of crowdsourcing: a reference model. In: Proc. of the IEEE Eighth international conference on research challenges in information science (RCIS)

Wu F-J, Luo T (2014) Generic participatory sensing framework for multi-modal datasets. In: Proc. of the IEEE ninth international conference on intelligent sensors, sensor networks and information processing (ISSNIP)

Goldberg AV, Hartline JD, Karlin AR, Saks M, Wright A (2006) Competitive auctions. Games Econ Behav 55(2):242–269

Gupta R, Singh YN (2013) A reputation based framework to avoid free-riding in unstructured peer-to-peer network. http://dblp.uni-trier.de/pers/hd/g/Gupta:Ruchir

Feldman M, Chuang J (2005) The evolution of cooperation under cheap pseudonyms. ACM SIGecom Exch 5(4):41–50

Robertson S, Vojnovic M, Weber I (2009) Rethinking the ESP game. In: Proc. of the 27th international conference extended abstracts on human factors in computing systems (CHI EA)

Acknowledgments

The work was sponsored by the NSFC Grant 61171092, JiangSu Educational Bureau Project 14KJA510004, Huawei Innovation Research Program, and Prospective Research Project on Future Networks (JiangSu Future Networks Innovation Institute).

Cite this article

Wang, Y., Jia, X., Jin, Q. et al. QuaCentive: a quality-aware incentive mechanism in mobile crowdsourced sensing (MCS). J Supercomput 72, 2924–2941 (2016). https://doi.org/10.1007/s11227-015-1395-y

DOI: https://doi.org/10.1007/s11227-015-1395-y