Abstract

In the present work we analyze the Country Profiles, open access data from ISI Thomson Reuters’ Science Watch. The country profiles are rankings of a given country’s output (indexed in Web of Science) in different knowledge fields during a determined time span. The analysis of these data permits defining a Country Profile Index, a tool for diagnosing the activity of the scientific community of a country and its possible strengths and weaknesses. Furthermore, such analysis also enables the search for identities among the research patterns of different countries, the time evolution of such patterns, and the importance of adherence to the database journal portfolio in evaluating the productivity in a given knowledge field.

Introduction

In the scientific research activity, publishing the related outputs has always been a key link of a long chain of processes, which should be considered as a whole in a comprehensive analysis of science as a human activity (Bornmann and Daniel 2008). Nevertheless, focusing on the sole link of the research output in the form of scientific articles, for instance, became a prominent analytical framework for the entire chain. The reasons for that are manifold, although the inherent accountability is certainly an appealing factor (Roessner 2000; van Raan 2004).

This accountability is hence based on metadata of the scientific activity, namely the indexed bibliographic databases. Although indexing efforts and measurements of research output date back to the nineteenth century (Godin 2006), a clear convergence of quantitative indicators based on bibliographic retrieval tools with research output evaluation came only in the 1950s and 1960s (Price 1963). This period witnessed the birth of a landmark in bibliographic databases, namely the Science Citation Index of the Institute of Scientific Information (ISI), nowadays popularly referred to as Web of Science (WoS), one of the products of ISI, now part of Thomson Reuters (TR). ISI WoS became the benchmark of bibliographic databases, in spite of more recent competing initiatives, like the Scopus-Elsevier database launched in 2004, and embraces analyses such as the Science and Engineering Indicators undertaken by the National Science Board (Seind10 2010).

One could state that the availability of such frameworks drove the steady development of a new scientific field, Scientometrics, devoted to the quantitative analysis of Science itself, based on bibliometric data. Scientometrics addresses both the questions raised by peers and those raised by external clients, like policymakers and stakeholders in research and knowledge-based development, characterizing an interesting example of mode 2 production of knowledge (Leydesdorff 2005; Neely 2005; van Aken 2005). However, the ever-growing ease of extracting data from WoS leads to the curious scenario in which research evaluation, as well as research policies, are being conducted by different stakeholders, sometimes claiming support from bibliometric data handled without the necessary rigor (Kostoff 1998).

In this context, while access to WoS requires a subscription, ISI TR offers open access to other resources, like Science Watch, in which data and rankings, among other information, are available to the general public. One such resource is the set of country profiles, namely field output rankings for different countries, based on the compilation of bibliometric data (number of papers and citations over a period of approximately 10 to 11 years) across TR databases. The most recent country profiles, starting in 2008, can be found in Science Watch, under data and rankings, while older ones, originally on the in-Cites web page, are now available in the archives of Science Watch. These profiles have been compiled for several countries in the last ten years, including some cases of profiles for the same country separated by a time span of several years.

The aim of the present work is to analyze these open access country profiles by means of a new index, the country profile index (CPI). The main motivation is to offer an interpretation guide for these information sets, which are otherwise released without commentary. Moreover, the underlying fingerprints of research patterns on the macro-level reveal cultural identities and characteristic differences among different scientific fields and countries, as well as the evolution in time of a country’s research profile, taking into account field strengths and database coverage changes.

Methodology: country profiles and activity index

The country profiles released by Science Watch rank the output and citations of a country, within the ISI Thomson Reuters databases, over a time span of approximately ten years in the 22 major fields considered in Essential Science Indicators. Since country profiles are released successively by Science Watch, the cases considered here cover time spans shifted by up to a few years. Although this could be a methodological drawback, we will show that CPIs are in many cases very stable figures of merit, showing only slight changes for shifts of up to a few years. On the other hand, drastic changes in some cases are taken under close scrutiny and will shed further light on the evolution over time of the scientific production of the countries concerned. The time spans of the released country profiles are indicated in the figure captions.

We focus here solely on the output, i.e. the number of papers, as a measure of scientific production, and not on citations, a not uncontroversial proxy for measuring the impact of this production (Bornmann and Daniel 2008). For each country we build a field index by normalizing the number of papers in a given field by the country’s total output in the considered time span. Initially we take the field ranking of the USA, still the largest science producer in the world in all fields, according to ISI TR. Figure 1 shows this normalized ranking for the USA (full squares), and also for England (full circles), where the fields are ordered from the most productive, clinical medicine, to the one with the lowest output, namely multidisciplinary. All other normalized country profiles are ordered the same way, making benchmarking possible, since one can directly compare the relative importance of a given field within a certain country’s total scientific output to the same relative output in the USA. Furthermore, such a procedure makes direct comparisons of the relative importance of a certain field within any group of countries possible, as will be shown below. In Fig. 1 we see that, in spite of the great difference in absolute number of publications, the English and US field profiles are almost identical, a remarkable fact that will be further addressed below. In what follows, we call this indicator the CPI, a measure of the share of different fields within the total scientific effort of a country.
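The normalization and ordering described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the procedure as implemented by the authors: the function names are hypothetical, only four of the 22 fields are listed, and the paper counts are invented placeholders rather than Science Watch data.

```python
from typing import Dict, List, Tuple


def country_profile_index(papers_by_field: Dict[str, int]) -> Dict[str, float]:
    """Share of each field in a country's total output (the CPI of the text)."""
    total = sum(papers_by_field.values())
    if total <= 0:
        raise ValueError("total output must be positive")
    return {field: n / total for field, n in papers_by_field.items()}


def order_like_benchmark(
    cpi: Dict[str, float], benchmark_cpi: Dict[str, float]
) -> List[Tuple[str, float]]:
    """List a country's CPI in the descending field order of the benchmark."""
    ordered_fields = sorted(benchmark_cpi, key=benchmark_cpi.get, reverse=True)
    return [(field, cpi.get(field, 0.0)) for field in ordered_fields]


# Hypothetical paper counts (NOT real data), chosen only to show the mechanics.
benchmark_counts = {
    "clinical medicine": 250,
    "chemistry": 100,
    "physics": 90,
    "social sciences, general": 60,
}
country_counts = {
    "clinical medicine": 40,
    "chemistry": 30,
    "physics": 28,
    "social sciences, general": 2,
}

benchmark_cpi = country_profile_index(benchmark_counts)
country_cpi = country_profile_index(country_counts)
ordered = order_like_benchmark(country_cpi, benchmark_cpi)

for field, share in ordered:
    print(f"{field:28s} {share:6.1%}")
```

Because every profile is listed in the benchmark's field order, two countries can be compared field by field regardless of their absolute output.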

The present CPI resembles the concept of the Activity Index (AI) currently used in profiling publications on a macro-level. Nevertheless, important differences have to be carefully outlined, although similar analyses are possible for both cases.

The AI was introduced by Frame (1977) and is defined as the ratio of the share of a given field in the publication output of a country to the share of the same field in the world’s total publications. In AI analyses twelve major fields are considered, as in Glänzel et al. (2008). However, while the AI benchmarks a field output from a given country with respect to the world output, the CPI considers field outputs normalized with respect to the country’s total output. Besides that, the CPIs are presented following a specific field ordering, namely that of the largest science producer in the world. Another important difference is that the CPI considers 22 major fields, while the AI considers only 12.
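The two definitions can be written side by side. The notation is an assumption introduced here for illustration and does not appear in the original sources: $n_{c,f}$ denotes the number of papers of country $c$ in field $f$, and sums run over all fields $f'$ or all countries $c'$ of the respective classification.

```latex
\mathrm{CPI}_{c,f} = \frac{n_{c,f}}{\sum_{f'} n_{c,f'}},
\qquad
\mathrm{AI}_{c,f} =
\frac{\, n_{c,f} \big/ \sum_{f'} n_{c,f'} \,}
     {\, \sum_{c'} n_{c',f} \big/ \sum_{c'} \sum_{f'} n_{c',f'} \,}
```

Written this way, the numerator of the AI has the same form as the CPI, so the AI is a country's field share divided by the world's share of the same field, while the CPI leaves the field share unscaled and relies on the benchmark ordering for comparison. The equivalence is formal only, since the two indices are built on different field classifications (12 versus 22 fields).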

Throughout this work we will see that, in spite of such differences, the paradigmatic patterns for publication profiles found for AI, as discussed by Glänzel et al. (2006), are good guidelines for the findings from CPI analysis. On the other hand, considering 22 major fields is not simply a matter of regrouping the same fields, since by using the Science Watch field definitions we are including fields like Social Sciences, not considered in previous AI studies. It should be mentioned here that such a field division may be rather arbitrary, since the fields are composed of subfields that are sometimes very closely related, with probable thematic overlap among the indexed journals (Vinkler 1999). As an example we may quote three fields: Biology & Biochemistry, Microbiology, and Molecular Biology and Genetics. A closer look at the related subfields (Sciencewatch A; Sciencewatch B) suggests that a given publication could be counted in more than one field. Nevertheless, it is important to stress that we are analyzing open access data, delivered by Science Watch, obtained by applying the same methodology in all cases, and our approach permits building a simple tool for a broader audience interested in science indicators.

Country profiles: patterns and groupings

An initial useful guidance for presenting and discussing our results are the four paradigmatic patterns for publication profiles found for AI as described by Glänzel et al. (2006) and Glänzel et al. (2008), among others, which are summarized below.

(1) The so-called “western model”, fingerprint for developed western countries, presenting clinical medicine and biomedical research as dominant fields.

(2) Predominance in chemistry and physics and less activity in life sciences is considered a common pattern for former socialist countries, China and present economies in transition.

(3) A “bioenvironmental model”, most typical for developing or more “natural” countries, which present main focus on biology, earth and space sciences.

(4) A “Japanese model”, also typical for other developed Asian economies with predominance of engineering and chemistry.

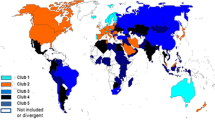

Having in mind that these patterns emerge from AIs and not from CPIs, we see that they partially apply to our purpose. In fact, looking at the CPI for the USA and England, we clearly identify a “western like model”, with a remarkable predominance in clinical medicine, which corresponds, actually, to roughly a quarter of the total scientific output of these countries. Considering other developed western countries, i.e., continental Europe, a more involved picture comes out. Fig. 2 shows the CPIs for seven European countries.

All seven profiles in Fig. 2 are very similar and the “western model” can be identified by the clear predominance of clinical medicine, although the share of this field in the total output of each country ranges from 18% (Spain) up to 30% (Austria). At the other extreme, it is noticeable that agricultural sciences are by far more relevant in Spain, representing circa 4% of the total output, while for the other developed countries considered this share is below 2%.

Further important differences appear among western developed countries. In continental Europe, the share of Physics and Chemistry is considerably higher than in the USA and England. Evidently, a higher share of some fields in the total output is concomitant with a lower share of others. What is rather surprising is that all seven European countries show a pronounced dip for the same fields: social sciences, general; psychiatry/psychology; and economics and business. In the case of social sciences, general, this field represents over 6% of the total number of publications in the USA and England (comparable to physics, chemistry, biology and engineering), while in continental Europe this share drops drastically to less than 2%, in some cases even less than 1%.

The low share of social sciences is in fact a common characteristic of the CPIs of all non-English-speaking countries investigated. Although the data show this particularly pronounced bias, clearly differentiating low consensus sciences (social sciences, psychology, economics) from high consensus sciences (hard and applied sciences), any discussion of the cause of such a bias has to be conducted very carefully. The “western model-like” CPIs presented in Figs. 1 and 2 are thus divided into two subgroups, within each of which a research profile similarity appears, the meaning of which has to be addressed elsewhere.

An evident similarity in research profile can also be identified within Latin American countries, as shown in Fig. 3. Argentina, Brazil and Mexico seem not to fit into the main paradigms described above, showing clinical medicine, chemistry, physics and plant and animal sciences as dominant fields. Besides that, the relatively high importance of agricultural sciences is noticeable, reaching up to 4% of the total output in Brazil. We consider here only the most prolific Latin American (LA) countries, judging, for instance, by the number of publications indexed in WoS in the 2006–2010 period: Brazil circa 158,000, Mexico 50,000 and Argentina 38,000 (data retrieved in September 2011).

Two more examples of CPI similarity or diversity will be considered in the next figures. Figure 4 depicts the CPIs for Japan, and two other Asian developed economies, Taiwan and South Korea. Here the “Japanese model” is less clear than the definition of the “Western model” applied to USA and Europe. The CPI of Japan, for instance, resembles very much the CPIs of Continental Europe, with a clear dominance of clinical medicine and an important “plateau” in chemistry and physics and a very low share in social sciences, general. Interestingly, Taiwan shows dominance in engineering together with clinical medicine and two other noticeable fields: materials science and computer science. Therefore, from the point of view of the presently shown CPI, only Korea fits into the “classical” “Japanese model”, with predominance of chemistry and engineering.

Hence, inspecting the CPIs for the available data for Japan and other Asian countries, including the slightly more recent data for China, Fig. 5, a great diversity is observed, hampering the definition of an unambiguous “Japanese model” from the point of view of CPIs. Nevertheless, an important common feature stands out, namely the high share in materials science for all these countries.

As a last example of CPI clustering, Fig. 5 shows the profiles for the BRICS countries. This group of emerging economies, namely Brazil, Russia, India, China and South Africa (the last added to the original BRIC group in 2010), labeled by the acronym BRICS, would represent an economic identity in the sense that these countries would characterize the shift in global economic power in the next decades. The real meaning of this economic grouping is rather controversial and, from the point of view of research output profile, no clear identity can be found. Nevertheless, a closer look into such dissimilar profiles reveals important features. South Africa shows plant and animal sciences as a dominant field together with clinical medicine, which in this country has a share comparable to that of developed countries. These two fields alone represent 40% of the total output, but other relatively important fields appear, especially geosciences and environment and ecology. It should be noticed that social sciences, general, has in South Africa the highest share after the USA and England among the countries analyzed here. Russia and China, although both classifiable under pattern 2 (predominance in chemistry and physics and less activity in life sciences), present some important differences, like the already mentioned activity in materials science in China. On the other hand, India presents a hybrid profile (Glänzel and Gupta 2008): besides the dominance of physics and chemistry, important shares are observed for two very different field groups, engineering and materials sciences on one side, and plant and animal sciences and agricultural sciences on the other.

CPIs, as shown here, can be correlated to other indicators beyond the comparison to AI undertaken so far. The consistency of the patterns suggested here is corroborated by a closer look at the Mean Structure Difference index (MSD) presented by Vinkler (2008). In that work, Vinkler ranks the share of science fields for two groups of countries: the first composed of 14 European Community member states together with the US and Japan (EUJ), and the second composed of 10 Central and Eastern Central European countries (CEE). Neither group can be directly related to a single profile pattern derived from AI, but it is enlightening to verify that within the EUJ group Clinical Medicine ranks first, followed by Chemistry and Physics. Engineering, Biology and Biochemistry, and Plant and Animal Sciences also show positions similar to those in the ordering considered here. Two main differences are revealed by the MSD for EUJ: Social Sciences drop to 14th position (6th in the US and England) and Material Sciences rise to 7th (13th in the US and England). Both differences are consistent with the CPIs shown here, since the EUJ group includes countries where the Social Sciences share is very low compared to the US and England, and countries where Material Sciences are more relevant. Furthermore, for the CEE countries, Chemistry ranks first, followed by Physics, with Clinical Medicine only in 3rd position, similar to the pattern of former socialist countries mentioned above.

Discussion on profile patterns

The main motivation in gathering open access data from ISI TR into an additional index is to propose a tool for analyzing scientific activity aimed at a general informed audience. The CPI shows evidence of how a country’s different scientific communities compare to each other, considering a given form of output: source items in publications indexed by ISI TR. The CPIs for different countries can be compared, and the benchmark chosen here is the CPI for the USA, since its outputs lead in all 22 fields considered and the descending ordering of its field outputs, Fig. 1, delivers a smooth curve which provides a useful guideline for the other countries.

The CPIs depicted in the present paper show gross signatures of the four publication patterns deduced from AIs (Glänzel et al. 2006), while also revealing finer fingerprints, which deserve further investigation beyond the freely available data used so far. For the sake of clarity in the remainder of the paper, we recall two main questions raised by inspecting the figures above, bearing in mind that the data correspond to the ISI WoS coverage.

(1) Cultural identity and the social sciences bottleneck. Figure 1 shows a remarkable identity between the USA and England regarding the scientific output share of all the considered fields, while developed continental European countries show a very different profile for social sciences, economics and business and, although less pronounced, psychiatry and psychology. Similar profiles for the same fields are also seen in Latin American and Asian countries, Figs. 3 and 4, respectively.

(2) Field strengths and profile time evolution: changing both the scientific productivity and the database coverage. Some rather old profiles, like the Brazilian one, suggest important relative strengths in certain fields, mainly plant and animal sciences (a co-predominance also shown for Argentina and Mexico) and agricultural sciences. The relative strength of agricultural sciences continued to grow in recent years, while other fields also show important variations in their world shares, according to a featured analysis released by Science Watch in 2009. These aspects make it necessary to investigate the stability of CPIs over time, and to identify whether relevant share modifications among fields are due to changes within the country’s scientific community or to modifications in the database itself.

Cultural identity and the social sciences bottleneck

An important point of view concerning the differences among country profile patterns may be to consider the deviations from what is sometimes called the “standard model”, represented by the USA. There is a vast literature claiming, for instance, that developing countries show different publication patterns because of the low coverage in WoS of local and regional journals, as well as of grey literature (Jeffery 2000; Sancho 1992). In the same sense, one should also consider nationally, instead of internationally, oriented journals, as analyzed by Meneghini et al. (2006) for the case of Brazilian journals.

However, including the field of social sciences, absent in previous works on AI (Glänzel et al. 2006), reveals evidence that WoS coverage may also be a concern for western developed countries. Our results show a strong imbalance in the social sciences output when comparing the USA and England, Fig. 1, to continental European countries, Fig. 2. A pronounced dip in the CPI curves also appears for social sciences (with less pronounced dips for psychology/psychiatry and economics and business) in the Asian and Latin American countries. The relatively large share of social sciences in South Africa, Fig. 5, is remarkable, suggesting that journal coverage and linguistic bias should be considered (van Raan et al. 2011) in analyzing a country’s output in social sciences and humanities (Nederhof 2006), irrespective of its economic development.

In this context, a further insight in the scientific country profiles may be given by the WoS indexed journal distribution profile. Figure 6a compares the CPI for the USA with the WoS indexed journals distribution among the 22 fields considered in Science Watch master journal list (Sciencewatch B). The correlation between both data sets is depicted in Fig. 6b. The linear fit, suggesting a good correlation, is obtained by excluding the point corresponding to social sciences, added afterwards in Fig. 6b for comparison. The correlations between WoS journals distribution among fields and the scientific outputs of the other countries are always lower than the one shown for USA and England, as illustrated for the Indian case, Fig. 6c.

Fig. 6 (a) Comparison between the USA CPI (squares) and the field distribution of WoS indexed journals (circles). (b) Correlation between the data sets in (a). Each point represents one of the 22 knowledge fields considered by ScienceWatch, showing a high correlation coefficient. (c) The same correlation between the Indian CPI, Fig. 5, and the distribution of WoS indexed journals, revealing a much lower correlation coefficient.
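The comparison between a CPI and the field distribution of indexed journals, with one field left out of the fit, can be sketched as follows. This is a hedged illustration only: the text does not state which correlation measure was used, so the Pearson coefficient is an assumption here, the function names are hypothetical, and the shares are invented placeholders for five fields rather than the 22-field data of the figure.

```python
import math
from typing import Dict, Iterable, Sequence


def pearson_r(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def journal_cpi_correlation(
    journal_share: Dict[str, float],
    cpi: Dict[str, float],
    excluded: Iterable[str] = (),
) -> float:
    """Correlate journal shares with CPI values over the fields not excluded."""
    skip = set(excluded)
    fields = [f for f in cpi if f in journal_share and f not in skip]
    return pearson_r([journal_share[f] for f in fields], [cpi[f] for f in fields])


# Hypothetical shares (NOT real data): many journals but few papers in social sciences.
journal_share = {
    "clinical medicine": 0.20,
    "chemistry": 0.08,
    "physics": 0.07,
    "engineering": 0.09,
    "social sciences, general": 0.15,
}
cpi = {
    "clinical medicine": 0.25,
    "chemistry": 0.09,
    "physics": 0.08,
    "engineering": 0.08,
    "social sciences, general": 0.05,
}

r_all = journal_cpi_correlation(journal_share, cpi)
r_without = journal_cpi_correlation(journal_share, cpi, excluded=["social sciences, general"])
print(f"all fields: r = {r_all:.2f}; social sciences excluded: r = {r_without:.2f}")
```

With these placeholder numbers the coefficient rises once the social sciences point is left out, which is the kind of effect the exclusion in panel (b) is meant to expose.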

An even better correlation between the CPI of the most prolific country and the number of journals in each field should not be expected, since the publication, co-authorship and citation cultures vary among fields (Garfield 1979; McAllister et al. 1983; Pudovkin and Garfield 2002; Rafols and Leydesdorff 2009; Moed 2010). However, the concomitance of a “western model” and the predominance of journals in clinical medicine is noticeable, as is a strong discrepancy between the high number of journals and a relatively low output in number of papers for the general social sciences, Fig. 6a. Hence, aspects of a given CPI may indicate, among other factors, the adherence of a given scientific community to the WoS journals as proper publishing vehicles. The adherence may change both ways: a given community may be driven towards publishing in indexed journals, or non-indexed journals relevant to the community may become indexed. Therefore, changes in the indexed journal portfolio can change the visible scientific profile of a given country. Here Brazil represents an interesting case study, since important changes occurred in the past decade concerning Brazilian science as seen from the ISI WoS, which will be discussed in the following section.

Field strengths and profile time evolution: changing both the scientific productivity and the database coverage

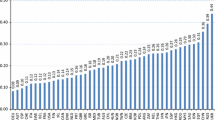

A document recently released by TR, Global research report-Brazil (Adams and King 2009), helps to map the possibilities of CPI pattern evolution, concentrating on the Brazilian output in the years 1998–2007. Besides stressing a tenfold growth in the number of papers from 1980 to 2008, this report already ranks clinical medicine (14,408) first, considering the production from 2003 to 2007, followed in order by physics, biology and chemistry, all of them with an output of the order of 10,000 papers in the period. Furthermore, the leading world shares are in plant and animal sciences and agriculture, a trend already indicated by the CPI based on the 1993–2003 data discussed in the present work and also identified in the AI analysis by Glänzel et al. (2006). The more recent data, ranking clinical medicine first, already point to a significant shift towards the “western model”, although closer to the continental European countries than to the USA–England benchmark. However, this report still does not completely capture the changes due to the regional journal expansion, with the inclusion of 105 Brazilian journals in the WoS database within the period 2007–2009, an outstanding record considering that previously only 27 Brazilian journals were indexed (Testa 2011). The total number of source items evolved from circa 14,000 in 2001 to over 36,000 in 2009 (data retrieved in September 2011), with a clear inflection in 2007–2008 due to the database expansion, Fig. 7.

It is worth mentioning that this expansion in the indexation of the Brazilian scientific output within WoS represents a further drive towards the “Western model”. In particular, the share of Physics in the output dropped from circa 15% in 2001, a number close to the one presented by the 1993–2003 CPI, to 6.5% in 2009, resembling the USA–England benchmark, Fig. 7. The number of source items in Physics during the 2001–2009 period was rather stable; therefore, the decrease in share was due to a growing adherence of other fields to the database, together with the expansion of the database towards regional journals related to these other fields, particularly clinical medicine, plant and animal sciences and agriculture.
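A quick consistency check of this argument, using only the rounded figures quoted in the text (circa 14,000 and over 36,000 source items, shares of circa 15% and 6.5%), is sketched below. The function name is hypothetical, and because the inputs are approximate the results should be read as orders of magnitude rather than exact counts.

```python
def share_with_constant_count(
    share_before: float, total_before: float, total_after: float
) -> float:
    """Share a field would reach if its paper count stayed fixed while the total grew."""
    if total_before <= 0 or total_after <= 0:
        raise ValueError("totals must be positive")
    field_count = share_before * total_before
    return field_count / total_after


# Rounded figures quoted in the text for Brazil (approximate by construction).
TOTAL_2001 = 14_000          # "circa 14,000" source items
TOTAL_2009 = 36_000          # "over 36,000" source items
PHYSICS_SHARE_2001 = 0.15    # "circa 15%"
PHYSICS_SHARE_2009 = 0.065   # "6.5%"

expected_share_2009 = share_with_constant_count(
    PHYSICS_SHARE_2001, TOTAL_2001, TOTAL_2009
)
implied_count_2001 = PHYSICS_SHARE_2001 * TOTAL_2001
implied_count_2009 = PHYSICS_SHARE_2009 * TOTAL_2009

print(f"share expected for an unchanged Physics count: {expected_share_2009:.1%}")
print(f"implied Physics items: {implied_count_2001:.0f} (2001), {implied_count_2009:.0f} (2009)")
```

An unchanged Physics count would by itself bring the share down to roughly 5.8%, close to the reported 6.5%, and the implied counts of roughly 2,100 and 2,340 items are compatible with a rather stable Physics output.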

Bearing in mind this evidence of important changes over time in the Brazilian case, the time evolution of CPIs has to be further investigated. Since the methodology used by Science Watch considers 10 to 11 years of indexed scientific output in building up country profiles, we consider them robust with respect to the time shifts of a few years among the CPIs compared within each of the figures shown above. However, Glänzel et al. (2006) point out some important changes in the AIs of some Latin American countries when comparing non-overlapping 5-year outputs, 1991–1995 and 1999–2003. Since we are analyzing country profiles released by Science Watch since 1991, there are not yet profiles for a given country that do not overlap in time. Nevertheless, cases with relatively smaller time separations are available and were analyzed, Fig. 8. It is rather surprising that the CPIs for India, from 1993–2003 and 2000–2010; Russia, from 1995–2005 and 2000–2010 (not shown here); and England, from 1995–2005 and 1999–2009, show no remarkable differences as a function of time.

In spite of such negative results, changes in country patterns should be expected; time-overlapping 11-year periods are too robust to clearly reveal such changes, although dramatic changes may occur, as suggested, for instance, by the Brazilian case. From this particular case we also learn that a complete understanding of the evolution of a given CPI has to take into account changes in the indexed journal portfolio of the database considered.

Conclusions

The availability of open access data on science indicators, such as the Country profiles in TR’s Science Watch, demands continuous discussion beyond the construction of bare rankings; otherwise the relevance of such data collections is reduced, or they may even mislead the general informed public, bearing in mind the warning that one should not “avoid the question of what is being measured and why” (Leydesdorff 2005).

The present analysis of the Country profiles is organized within the framework of the CPI proposed here, an index complementary to the pioneering AI, which is widely used in reports on science indicators such as the Third European Report on Science and Technology Indicators (TERSTI 2003). Notwithstanding the global view provided by the AI, the inclusion of social sciences, as well as the comparative study of different countries with the US as a benchmark, leads to a wider questioning of the behavior of the scientific communities. Indeed, cultural identities, as well as differences, could be inferred from the data panels shown here, as depicted in Figs. 1–5, along with a revisiting of the patterns for publication profiles, suggesting a fine tuning of the four paradigmatic patterns in current use (e.g. Hammarfelt 2010; Andersen et al. 2011).

A deeper insight is obtained by further analyzing the adherence of a given community to the database, actually a quantifiable characteristic, as illustrated here by two examples in Fig. 6. The adherence is both country and field dependent and can be changed both ways: inducing changes in a given field community or by modifying the coverage of the database, as suggested by analyzing the Brazilian case, Fig. 7. On the other hand, relevant stability examples, Fig. 8, should also be kept in mind.

Further work could be undertaken in order to better understand, for instance, certain field strengths and their time evolution. Such further study can be conducted within the chosen database, ISI TR, by looking at the time evolution on the micro level within each field. More important is the comparison between databases. Here we do not restrict ourselves to comparing ISI TR with, for instance, a competing database like Scopus-Elsevier, an intensively studied and still inconclusive issue (Archambault et al. 2009). An important insight may be gained by comparing the representation of a given field output within ISI TR and within a field-specialized database.

Finally, we should keep in mind that open access data have to be considered with care and thoroughly interpreted. The importance embedded in this consideration is based on a widely known scenario, but worth recalling: science indicators are the prominent common data to the three helixes in the triple helix model (Leydesdorff 2010; Yuan and Masamitsu 2010) for a knowledge based economy.

References

Adams, J., & King, C. (2009). Global research Report—Brazil-research and collaboration in the new geography of science. http://researchanalytics.thomsonreuters.com/m/pdfs/GRR-Brazil-Jun09.pdf.

Andersen, J. P., & Hammarfelt, B. (2011). Price revisited: On the growth of dissertations in eight research fields. Scientometrics, 88(2), 371–383. doi:10.1007/s11192-011-0408-8.

Archambault, E., Campbell, D., Gingras, Y., & Larivière, V. (2009). Comparing bibliometric statistics obtained from the web of science and scopus. Journal of the American Society for Information Science and Technology, 60(7), 1320–1326.

Bornmann, L., & Daniel, H. D. (2008). What do citation counts measure? A review of studies on citing behavior. Journal of Documentation, 64, 45–80.

Frame, J. D. (1977). Mainstream research in Latin America and the Caribbean. Interciencia, 2, 143–148.

Garfield, E. (1979). Is citation analysis a legitimate evaluation tool? Scientometrics, 1(4), 359–375.

Glänzel, W., & Gupta, B. M. (2008). Science in India. A bibliometric study of national research performance in 1991–2006. ISSI Newsletter, 4(3), 42–48.

Glänzel, W., Leta, J., & Thijs, B. (2006). Science in Brazil. Part 1: A macro-level comparative study. Scientometrics, 67, 67–86.

Glänzel, W., Debackere, K., & Meyer, M. (2008). ‘Triad’ or ‘tetrad’? On global changes in a dynamic world. Scientometrics, 74(1), 71–88.

Godin, B. (2006). On the origin of Bibliometrics. Scientometrics, 68(1), 109–133.

Hammarfelt, B. (2010). Interdisciplinarity and the intellectual base of literature studies: Citation analysis of highly cited monographs. Scientometrics, 86, 705–725.

Jeffery, K. G. (2000). Architecture for grey literature in a R&D context. International Journal on Grey Literature, 1(2), 64–72.

Kostoff, R. N. (1998). The use and misuse of citation analysis in research evaluation. Scientometrics, 43(1), 27–43.

Leydesdorff, L. (2005). Evaluation of research and evolution of science indicators. Current Science, 89, 1510–1517.

Leydesdorff, L. (2010). A knowledge-based economy and the triple-helix model. Annual Review of Information Science and Technology, 44, 367–417.

McAllister, P. R., Narin, F., & Corrigan, J. G. (1983). Programmatic evaluation and comparison based on standardized citation scores. IEEE Transactions on Engineering Management, 30(4), 205–211.

Meneghini, R., Mugnaini, R., & Packer, A. L. (2006). International versus National Oriented Brazilian Scientific Journals: A scientometric analysis based on SciELO and JCR-ISI databases. Scientometrics, 69(3), 529–538.

Moed, H. F. (2010). Measuring contextual citation impact of scientific journals. Journal of Informetrics, 4(3), 265–277. doi:10.1016/j.joi.2010.01.002.

Nederhof, A. J. (2006). Bibliometric monitoring of research performance in the social sciences and the humanities: A review. Scientometrics, 66, 81–100.

Neely, A. (2005). The evolution of performance measurement research: Developments in the last decade and a research agenda for the next. International Journal of Operations & Production Management, 25(12), 1264–1277.

Price, D. D. S. (1963). Little Science, Big Science. New York: Columbia University Press.

Pudovkin, A. I., & Garfield, E. (2002). Algorithmic procedure for finding semantically related journals. Journal of the American Society for Information Science and Technology, 53(13), 1113–1119.

Rafols, I., & Leydesdorff, L. (2009). Content-based and algorithmic classifications of journals: Perspectives on the dynamics of scientific communication and indexer effects. Journal of the American Society for Information Science and Technology, 60(9), 1823–1835.

Roessner, D. (2000). Quantitative and qualitative methods and measures in the evaluation of research. Research Evaluation, 8(2), 125–132.

Sancho, R. (1992). Misjudgments and shortcomings in the measurement of scientific activities in less developed countries. Scientometrics, 23(1), 221–233.

Sciencewatch A. http://sciencewatch.com/about/met/fielddef/.

Sciencewatch B. http://ip-science.thomsonreuters.com/mjl/.

Seind10. (2010). Science and engineering indicators, published by the National Science Board. http://www.nsf.gov/statistics/seind10/.

TERSTI. (2003). Third European report on science and technology indicators. ftp://ftp.cordis.europa.eu/pub/indicators/docs/3rd_report.pdf.

Testa, J. (2011). The globalization of Web of Science 2005–2010. http://wokinfo.com/media/pdf/globalwos-essay.pdf.

van Aken, J. E. (2005). Management research as a design science: Articulating the research products of mode 2 knowledge production in management. British Journal of Management, 16, 19–36.

van Raan, A. F. J. (2004). Measuring science. Capita selecta of current main issues. In H. F. Moed, W. Glänzel & U. Schmoch (Eds.), Handbook of quantitative science and technology research. The use of publication and patent statistics in studies of S&T systems (pp. 19–50). Dordrecht: Kluwer.

van Raan, T., van Leeuwen, T., & Visser, M. (2011). Non-English papers decrease rankings. Nature, 469, 34.

Vinkler, P. (1999). Ratio of short term and long term impact factors and similarities of chemistry journals represented by references. Scientometrics, 46(3), 621–633.

Vinkler, P. (2008). Correlation between the structure of scientific research, scientometric indicators and GDP in EU and non-EU countries. Scientometrics, 74(2), 237–254.

Yuan, S., & Masamitsu, N. (2010). Measuring the relationships among university, industry and other sectors in Japan’s national innovation system: a comparison of new approaches with mutual information indicators. Scientometrics, 82(3), 677–685.

Cite this article

Schulz, P.A., Manganote, E.J.T. Revisiting country research profiles: learning about the scientific cultures. Scientometrics 93, 517–531 (2012). https://doi.org/10.1007/s11192-012-0696-7

DOI: https://doi.org/10.1007/s11192-012-0696-7