Abstract

The purpose of this study is to investigate social science doctoral students’ perceptions and attitudes toward written feedback about their academic writing and towards those who provide it. The study culminates in an explanatory model to describe the relationships between students’ perceptions and attitudes, their revision decisions, and other relevant factors in their written feedback practices. The investigation used a mixed methods approach involving 276 participants from two large mountain west public universities. The main purpose of the qualitative phase was to develop a background for a questionnaire and provisional model to be used in the quantitative phase. Structural Equation Modeling analysis during the quantitative phase provided an eight-factor model that shows the relationships of different factors regarding feedback practices as they relate to doctoral students.

Introduction

Doctoral student preparation deals with the broad range of academic practice, including research, teaching, and service (Walker et al. 2008). In doctoral programs there is a growing demand for improvement in research, specifically the quality and quantity of academic writing products (Caffarella and Barnett 2000; Lavelle and Bushrow 2007) such as dissertations, journal articles, and grant proposals. There are two interrelated rationales behind this attention. First, developing scholars with good academic writing skills is one of the main goals of doctoral education (Eyres et al. 2001). Doctoral programs require students to engage actively in academic writing, and look towards improving their writing through the application of quality standards and evaluation. Students are expected not only to satisfy degree requirements by writing course assignments and a dissertation, but they are also expected to contribute to their discipline by writing professional and publishable products (Kamler and Thomson 2006; Lovitts 2001) that add to their field of study.

Second, the quality and quantity of publications facilitate both a doctoral student’s career and a university’s reputation (Blaxter et al. 1998; Pageadams et al. 1995). Current academic job entry requirements include items such as a list of publications or grants (Walker et al. 2008) and, similarly, academic job requirements include researching and writing in addition to teaching (Blaxter et al. 1998). Once an academic position is obtained, promotion, tenure, and other reward systems within doctoral/research extensive universities are largely related to research activities (Blaxter et al. 1998; Young 2006) that depend heavily on academic writing. A measure of research productivity of an institution as well as an individual is the quality and quantity of publications (Toutkoushian et al. 2003). From both an institutional perspective and the perspective of individual students, the stakes for acquiring academic writing skills are high.

Despite these demands and expectations, problems are reported regarding doctoral students’ writing and graduate level writing as a whole. Several reports and research studies indicated that many graduate students start writing research papers and even their dissertations underprepared, without adequate writing skills (Surratt 2006; DeLyser 2003; Alter and Adkins 2006; Aitchison and Lee 2006; Caffarella and Barnett 2000). Social science doctoral students are far less successful in refereed publication compared to science doctoral students (Kamler 2008; Lee and Kamler 2008). Studies specified writing problems such as students’ adoption of ineffective and inefficient writing strategies, problems with planning and organizing the writing task, and difficulties in putting their ideas into written form (Torrance et al. 1994; Torrance and Thomas 1994; Torrance et al. 1992); problems with writing focused papers with persuasive arguments (Alter and Adkins 2006); inexperienced approaches to revision (DeLyser 2003; Torrance et al. 1994; Torrance and Thomas 1994; Torrance et al. 1992); problems with organization (Alter and Adkins 2006; Surratt 2006); and difficulty with grammar, punctuation, and word choice (Surratt 2006). Studies also reported students’ anxieties toward the writing process (Kamler 2008) and negative self-perceptions of their writing skills (Torrance et al. 1994; Torrance and Thomas 1994; Torrance et al. 1992).

Documented problems and increasing demands to produce underscore the need to effectively help and support students with their writing (Lavelle and Bushrow 2007). Several approaches to supporting doctoral students exist, including special academic writing courses, writing centers, and writing groups (Biklen and Casella 2007; Bolker 1998; DeLyser 2003; Kamler and Thomson 2006; Pageadams et al. 1995; Parker 2009; Phelps et al. 2007; Torrance and Thomas 1994; Wilkinson 2005). All of these interventions and approaches are based on the provision of effective feedback to students.

Providing feedback not only helps improve teaching skills (Brinko 1993), but is also an essential factor in helping doctoral students understand the academic writing process and improve both their academic writing skills and their final products (Caffarella and Barnett 2000; Kumar and Stracke 2007). Based on Gagné’s theory, providing effective feedback depends not only on the design of the feedback, but also on other conditions that lie within the learner (Gagné 1985; Gagné et al. 1992). One of these conditions is the receiver’s perceptions and attitudes toward feedback. Considering the centrality of the feedback process in doctoral supervision (Caffarella and Barnett 2000), it is important to understand students’ reactions toward feedback for their academic writing. While Gagné’s work about feedback is critical, it ignores the fundamentally social nature (Aitchison and Lee 2006; Kamler and Thomson 2006) of academic writing. Feedback practices occur in the broader context of an academic community, discipline, and as part of an institution’s practices. There is a clear need for an understanding of doctoral students’ perceptions and attitudes toward feedback practices in the social context of academic writing.

Only a limited number of studies addressed the perceptions and attitudes of doctoral students toward feedback practices in academic writing (Caffarella and Barnett 2000; Crossouard and Pryor 2009; Eyres et al. 2001; Kumar and Stracke 2007; Li and Seale 2007). Although they provide a valuable foundation, these studies have some limitations as well. The source of the feedback tends to be supervisors (Kumar and Stracke 2007; Li and Seale 2007), other faculty (Eyres et al. 2001), or instructors (Caffarella and Barnett 2000; Crossouard and Pryor 2009). Almost no work examined how students’ revision decisions are related to their perceptions and attitudes toward different characteristics of feedback and feedback providers. Parallel work in feedback on teaching practices suggests that the source may play a critical role in how feedback is received (Brinko 1993). Moreover, available research on academic writing focused on specific programs or disciplines, rendering generalizability to other areas of social science suspect. Although some of these studies (Kumar and Stracke 2007) acknowledged the feedback process as a part of the academic communities’ writing practices, their results provided mostly discrete information without sufficient reference to different dynamics in the students’ feedback practices. There is no empirically supported model to explain doctoral students’ perceptions and attitudes toward feedback practices in regard to their academic writing, much less one that accounts for social context.

The purpose of this study is to describe doctoral students’ perceptions and attitudes toward different types and sources of feedback and provide an explanatory model to understand the relationship of these perceptions and attitudes, their revision decisions, and other relevant factors in their feedback practices. Because academic writing practices (Torrance et al. 1992) and the opportunities for academic and social interactions with the members of the academic community are mainly different in science and social science programs (Lovitts 2001), this work focuses on social science doctoral students and their written feedback practices to provide more homogenous results.

Review of Literature

Of the existing research literature in graduate and professional degree settings, many studies focused on a qualitative approach (Caffarella and Barnett 2000; Crossouard and Pryor 2009; Eyres et al. 2001; Kumar and Stracke 2007; Li and Seale 2007; Li and Flowerdew 2007; Pageadams et al. 1995; Parker 2009) in which interviews or content analyses of written feedback were the primary mechanisms for data collection. Investigations cover a range of disciplines including nursing (Eyres et al. 2001), science (Li and Flowerdew 2007), and social science (Parker 2009) including applied linguistics (Kumar and Stracke 2007), sociology (Li and Seale 2007), social work (Pageadams et al. 1995), educational leadership (Caffarella and Barnett 2000), and education (Crossouard and Pryor 2009; Lavelle and Bushrow 2007). Studies had a variety of aims, investigating perceptions about helpfulness of different feedback types (Eyres et al. 2001; Kumar and Stracke 2007), management of criticism (Li and Seale 2007), perceptions toward the critiquing process (Caffarella and Barnett 2000), perceptions of formative assessment practices (Crossouard and Pryor 2009), writing processes and beliefs about writing (Lavelle and Bushrow 2007), opinions and experiences regarding different feedback sources (Li and Flowerdew 2007), and opinions about feedback in scholarly writing groups (Pageadams et al. 1995; Parker 2009). Most studies focused on feedback from doctoral supervisors, other faculty members, or instructors (Caffarella and Barnett 2000; Crossouard and Pryor 2009; Eyres et al. 2001; Kumar and Stracke 2007; Li and Seale 2007) although some included feedback from peers (Caffarella and Barnett 2000; Pageadams et al. 1995; Parker 2009). Key findings from these studies are discussed below.

Perceptions and Attitudes Toward Different Characteristics of Feedback

Expressed opinions and perspectives in written feedback were perceived as very helpful in building student membership in the academic community and developing necessary skills (Kumar and Stracke 2007). However, critical comments and receiving low quality or contradictory feedback led to negative emotions (Caffarella and Barnett 2000). Evidence suggests that, to help students to manage negative emotions caused by critical feedback, the feedback may be mediated when supplied via email as opposed to face to face (Crossouard and Pryor 2009). Eyres et al. (2001) found that participants, especially those who had limited academic writing experience, reported their preference of receiving positive and encouraging feedback along with critical comments. The positive feedback gave the feeling of encouragement, confidence, and acceptance of the students’ writing (Kumar and Stracke 2007). However, the students find it more helpful when these encouraging comments are coupled with specific comments about their written work (Eyres et al. 2001).

The doctoral students expressed their appreciation for detailed feedback (Crossouard and Pryor 2009); in-depth feedback that stimulated their thinking, improved their arguments, and helped them to connect ideas (Eyres et al. 2001); and feedback regarding the conceptual framework of their papers (Pageadams et al. 1995). Eyres et al. (2001) further found that one of the foremost preferences of the students was to receive feedback about the clarity of their writing, especially regarding the language and conventions used in a specific discipline. Regarding editing, punctuation, grammar, syntax, and organization aspects of writing, most of the students in the research study perceived having editorial feedback in their first draft as irrelevant or offensive (Kumar and Stracke 2007).

Perceptions and Attitudes Toward Different Characteristics of Feedback Providers

Students valued feedback when they perceived that the feedback providers believed in their potential, cared about their improvement of skills, and tried to be helpful. Students presented positive attitudes toward feedback providers who listened and respected students’ ideas. However, they did not like feedback providers who criticized and corrected their writing without carefully examining the ideas on the paper (Eyres et al. 2001). They found it helpful when there is open dialogue toward making decisions about their paper (Eyres et al. 2001; Kumar and Stracke 2007) and similarly they liked the peer feedback they received in scholarly writing groups (Parker 2009).

The feedback provider who acknowledged and respected the opinions of the student while presenting their own opinions from a different perspective was found to be the most helpful regarding students’ revisions and improvement (Kumar and Stracke 2007). However the power relationship with such a feedback provider is also influential to students’ perceptions of the authority of the feedback comments. Although intended to be suggestive by the feedback provider, some comments were perceived as “obligatory” to be addressed in the revision due to power differences between a student and a tutor (Crossouard and Pryor 2009).

Revision Decisions

The doctoral students who had positive attitudes toward critical feedback had a considerable amount of revisions in the draft, examined the draft with a new perspective, and developed a more critical stance for future writings (Kumar and Stracke 2007). As might be expected, their decisions regarding the incorporation of feedback were rendered difficult when faculty contradicted each other (Caffarella and Barnett 2000).

Considering the influence of authority on a student’s revision process, Li and Flowerdew (2007) found that their participants usually trusted and accepted their supervisor’s corrections and rarely disagreed with their comments. However, written feedback in the form of dialogue was perceived as most helpful compared to other feedback forms in the revision process (Kumar and Stracke 2007). For managing disagreements and emotional effects of criticism, sustaining a comfortable and open communication based on mutual respect and politeness; providing praise and advice with criticism; refraining from situations that may cause embarrassment; and managing power relations were found to be helpful (Li and Seale 2007).

Relevant Factors in Feedback Practices

Doctoral students’ goals, self-efficacy, and self-confidence concerning their writing were examined in relation to their feedback practices. Eyres et al. (2001) found that there is a relationship between students’ long-term goals toward improving themselves as scholars and their level of interest and investment in different written assignments. Lavelle and Bushrow (2007) suggested several strategies for providing feedback specific to students with low self-efficacy concerning their writing, which implies that these students need different types of feedback. Lack of confidence in writing ability affected the revision process negatively; however, as students engage in giving and receiving ongoing feedback, their self-confidence as academic writers improves (Caffarella and Barnett 2000).

Although these efforts are noteworthy and each makes a valuable contribution, they are parsimonious, focusing on a specific area of a much larger feedback process, investigating within specific disciplines or among specific populations, or narrowing focus to a single type of feedback provider. The largely discrete and focused prior work may not accurately represent the fundamentally systemic nature of feedback in relation to the writing process. This study aims to provide an explanatory model of that systemic process, drawn from a cross-disciplinary base of empirical data, which can function as a scaffold for further research as well as recommendations for feedback providers.

Conceptual Framework of the Study

This study uses two theoretical stances in a complementary way: the Principles of Instructional Design and Conditions of Learning (Gagné 1985; Gagné et al. 1992), and Situated Learning and Communities of Practice (Lave and Wenger 1991). According to the first theoretical stance (Gagné et al. 1992), during instructional planning, external learning conditions should take into consideration a learner’s internal conditions. This is because the effectiveness of the design of instruction and feedback depends on how well that design fits learners’ individual characteristics (Dempsey and Sales 1993), including their attitudes. In the context of this research, the design of feedback provided to doctoral students for their academic writing practices requires the understanding of these students’ internal learning conditions; specifically, their perceptions and attitudes toward feedback and other external conditions related to the feedback process.

However, the examination of doctoral students’ perceptions and attitudes toward feedback practices for their academic writing should not be limited to individual perspectives. Learning is a social process as well as a cognitive one (Vygotsky 1978), and academic writing is a social practice as well as an individual one (Kamler and Thomson 2006). As part of a fundamentally social academic writing process, feedback needs to be examined as an ongoing dialogue between doctoral students and various members of their “community of practice.” Such communities (Lave and Wenger 1991) are not necessarily constrained to individual units within an institution. Nor are communities confined to a single institution. Communities are inherently cross-disciplinary, including members from several subject areas, and several defined roles, such as beginning doctoral students, post-doctoral students, and faculty. Within each community, feedback helps convey the community’s goals and criteria for success, conventions, procedures, tools, and language (Lave and Wenger 1991).

Methodology

This study uses a mixed methods research approach (Creswell et al. 2003; Johnson and Onwuegbuzie 2004) that is both sequential and exploratory (Creswell 2003). Qualitative data preceded quantitative, but the emphasis is on quantitative data collection and analysis. The objective of qualitative data collection was to discover constructs, themes, and their relationships regarding doctoral students’ perceptions and attitudes toward the characteristics of written feedback and written feedback providers, their revision decisions, and other relevant issues in their feedback practices. These qualitative data and associated analysis were then used to inform a questionnaire for the quantitative phase. The main objective of the quantitative data collection, and the primary focus of the study itself, was to confirm and model these relationships with a larger sample. That objective is embodied in the following research questions, all of which are tied to the quantitative phase:

1. What are the perceptions and attitudes of doctoral students toward different characteristics of written feedback in regard to their academic writing?

2. What are doctoral students’ perceptions and attitudes toward different characteristics of written feedback providers with regard to their academic writing?

3. How are the doctoral students’ revision decisions influenced by these perceptions, attitudes, and other relevant factors in their feedback practices?

4. What other kinds of relationships exist between doctoral students’ perceptions and attitudes toward different characteristics of written feedback and written feedback providers, their revision decisions, and other relevant factors in their feedback practices?

Qualitative Phase

Because the qualitative portion of the study informed the instrument design to collect quantitative data, this phase is described here along with the results. Standardized open-ended interviews were conducted (Gall et al. 2003). To ensure the content validity of the interviews, two researchers who had cogent expertise reviewed the interview guide. Pilot interviews were also conducted with two doctoral students from the defined population of this research study.

Purposeful sampling was used for participant selection. Department heads were used as key informants to identify doctoral students (N = 28) who had comparatively more academic writing experience than their peers from the same program, with representation from a variety of social science disciplines in a large public university. A total of 15 doctoral students (54% participation rate), 8 women (53%) and 7 men (47%) from eight academic departments, participated. As shown in Table 1, interviews generally took about an hour; the location varied, but each interview was private. Participants were asked to bring sample papers with previously obtained feedback to the interview, both to aid their recall of past experiences during the interview and as a source of authentic feedback examples in the eventual questionnaire.

Although this is far from Grounded Theory research (Strauss and Corbin 1998) the constant comparative analysis technique from grounded theory was employed. This has been done outside the context of grounded theory in the past (Brill et al. 2006; Kramer et al. 2007) and is especially appropriate when emergent themes are desired, themes which may go beyond the existing literature base. In this particular study, the approach was used to account for the fundamentally systemic nature of the feedback process, which by the nature of their scope prior studies could not account for. Specific qualitative goals included discovering common themes, constructs, concepts, and dimensions; exploring the relationships between these constructs; building a provisional model based on these analyses; and building the foundation for a subsequent questionnaire.

Analysis was conducted in three phases: open, axial, and selective coding (Strauss and Corbin 1998). While open coding is for discovering the concepts in raw data, axial coding is for relating these concepts to each other in a categorized way. These two coding processes are usually carried out together (Strauss and Corbin 2008). During the open coding process, characteristics of the discovered concepts and the variations within these characteristics, which are called properties and dimensions, were also examined. The open and axial coding analyses were stopped when (a) a meaningful categorization was developed after many iterative examinations of the transcripts; (b) subcategories, properties, and dimensions were repeated; and (c) no novel information was coming from additional transcripts.

The following example illustrates one vignette of the chain of evidence, including the open and axial coding processes. In line with the research questions, several questions were asked of the participants during the interviews regarding feedback providers, such as “whom do you mostly get feedback from?” and “what are the important characteristics of this person that lead you to ask for feedback?” The participants answered the questions with a variety of responses. For example one participant answered the second question as: “His incredible knowledge. He is brilliant. He knows his field…and he can give me resources, references, he can name names of the top of his head.” Through iterative examination of transcripts, subcategories started to emerge such as feedback providers’ knowledge and expertise level, willingness to help, personality, writing style, and so forth. After several thorough examinations of the transcripts at paragraph, sentence, and idea level, repetitive occurrences of these subcategories were observed.

By the end of the analysis, twelve main categories and their subcategories were defined along with their properties and dimensions. The main categories include: Perceptions and attitudes toward different feedback characteristics, perceptions and attitudes toward different feedback providers, examination of written feedback, revision decisions, perceptions and attitudes toward discipline or program, motivations for academic writing, asking for written feedback, perceptions and attitudes toward academic writing, general perceptions and attitudes toward feedback, author’s characteristics, types of academic writing, and specific academic writing instances.

Selective coding is for examining the relationships between categories and for integrating the data for a meaningful representation or theory. In this study, the interview transcripts were reviewed again and the sentences or idea units representing relationships between both main categories and subcategories were located. Then, using concept mapping software, these categories and subcategories were visually linked. With the help of this software, the frequencies of relationships in the data were also calculated by counting the number of such sentences or idea units in the transcripts. Based on the results of these analyses, a provisional model that represents frequent relationships between main categories was developed (see Fig. 1). Some of the categories were not included in the provisional model because of their low frequency of relationships with other categories.
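The frequency count described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the coded idea units are invented, the function names are hypothetical, relationships are treated as unordered pairs, and the cutoff of two idea units is arbitrary. The study itself used concept mapping software and does not report a specific threshold.

```python
from collections import Counter

# Hypothetical coded idea units (not data from the study): each tuple names
# the two categories that one sentence or idea unit was judged to relate.
coded_units = [
    ("motivations for academic writing", "revision decisions"),
    ("feedback providers", "revision decisions"),
    ("feedback providers", "feedback characteristics"),
    ("revision decisions", "feedback providers"),
    ("feedback characteristics", "revision decisions"),
    ("types of academic writing", "revision decisions"),
]


def relationship_frequencies(units):
    """Count idea units per category pair, ignoring the order of the pair."""
    return Counter(tuple(sorted(pair)) for pair in units)


def frequent_relationships(units, min_count):
    """Keep only the pairs linked by at least min_count idea units."""
    counts = relationship_frequencies(units)
    return {pair: n for pair, n in counts.items() if n >= min_count}


frequencies = relationship_frequencies(coded_units)
# min_count=2 is an assumed cutoff chosen for this illustration only.
retained = frequent_relationships(coded_units, min_count=2)
```

With the invented data, only the link between feedback providers and revision decisions meets the cutoff, which mirrors how low-frequency categories would fall out of a provisional model.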

According to the provisional model developed from the qualitative data analysis results, doctoral students’ motivations for academic writing are influenced by their perceptions and attitudes toward their discipline or program. In turn, their motivations affect their attitudes related to asking for written feedback. Moreover, these motivations influence their revision decisions after receiving the feedback. Students’ perceptions and attitudes toward the feedback provider influence students’ revision decisions and their perceptions and attitudes toward the characteristics of feedback given by these individuals. Students’ perceptions and attitudes toward the different feedback characteristics play a role in their revision decisions.

Trustworthiness Criteria

Lincoln and Guba (1985) suggest credibility, transferability, dependability and confirmability as trustworthiness criteria for qualitative research. Several precautions were taken to meet these criteria. To address credibility, the same standardized open-ended interview questions were used for all participants after examination by content experts in the field. Data were triangulated by asking participants not only for their recollections of their feedback experience but by asking them to bring and discuss examples of feedback. Comments were triangulated among research participants as part of the axial coding process. The consistency of participants’ answers was verified by examining transcripts at different levels (paragraph, page, and whole transcript). The chain of evidence and thick descriptions from the data above addresses the transferability, dependability, and confirmability criteria. Finally, the nature of the mixed methods research is a form of trustworthiness, since the goal of the quantitative findings is to further refine and confirm the qualitative portion of the work.

Instrument Design

The questionnaire was designed based on the qualitative data analysis results using established guidelines for survey research and questionnaire design (Bradburn et al. 2004; Dillman 2007; Oppenheim 1966; Rea and Parker 2005). A concerted effort was made to keep the questionnaire as authentic as possible with most of the items directly quoted from interview transcripts. Quotes were also pulled from actual feedback provided to doctoral students on manuscripts they brought to the interviews. Iterative pilot tests were conducted with ten doctoral students from the sampling frame. Two faculty members who specialize in Technical Writing reviewed the questionnaire for content validity. The final online questionnaire included 17 demographic and general questions about participants, 1 screening question, 2 multiple choice questions, and 135 4-point Likert scale items regarding participants’ perceptions and attitudes toward academic writing, their program, types of written feedback, feedback providers, and their revision decisions.

Quantitative Phase

The purpose of this phase of the study was to identify complex relationship patterns, and test the strength of the relationships between categories. The sampling frame consists of two large universities that provide doctoral programs in the same state. Both are large state funded research extensive institutions with an ample emphasis on doctoral studies and preparation. One is the state’s land-grant institution in a rural setting, the other a state funded school in an urban location. Considering the unclear boundary and definition of social sciences (Becher and Trowler 2001; MacDonald 1994), the Social Science Citation Index Subject Categories (Thomson-Reuters 2009) were used to classify relevant programs. The urban university had several (N = 22) social science departments with doctoral programs, more than twice the number at the land grant (N = 10). All department heads were sent e-mails requesting that they forward the invitation letter to their current and recently graduated doctoral students. In the urban university, 20 of 22 selected departments (91%) participated in the research, and all of the selected departments in the land grant (100%) participated. The questionnaire was administered completely online. After the initial notice and data collection, reminders were sent to departments and data were collected again. As a token of appreciation and incentive, four participants were given $100 gift cards after a computerized drawing.

The sample consisted of 276 participants, 160 from the urban university (58%) and 116 from the land grant university (42%). Participation rates for the questionnaire were 21% of the number of doctoral students in the selected programs at the urban university and 35% at the land grant. The participation rates from different programs were 26% for Education programs, 28% for Social Sciences and Humanities programs; 24% for Health Related Programs; 12% for Business and Economics Programs.

Forty-seven percent of the participants were doctoral students in Education programs. Students in Social Science and Humanities programs constituted 30%, Health related programs 17%, with Business and Economics programs comprising 6%. Most of the participants were in the second, third, and fourth years of their doctoral programs. The participants’ ages ranged from 22 to 83 (M = 37, SD = 10.17). Sixty percent of the participants were women (n = 165) while 40% of them were men (n = 110). Most of the participants (87%, n = 239) reported that they consider English their native language. In terms of work, 81% (n = 223) of the participants were employed but only 56% (n = 124 out of 223) of these participants’ jobs required them to engage in academic writing. Participants engaged in writing mostly conference proposals or proceedings. On average, doctoral students had 5.01 conference proposals/proceedings, 2.43 journal articles, and 2.06 grant proposals. Twenty-nine percent of the participants were currently writing their dissertations, all of which were potential sources for feedback on academic writing.
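The reported sample percentages can be reproduced from the counts given in the text. The short Python check below is a reader's aid rather than part of the original analysis; the helper name `share` is hypothetical. Note that the reported gender counts sum to 275, one fewer than the 276 participants.

```python
def share(part, whole):
    """Return part/whole as a percentage rounded to the nearest integer."""
    return round(100 * part / whole)


total = 276                   # participants in the quantitative phase
urban, land_grant = 160, 116  # participants per university
women, men = 165, 110         # reported gender counts

sample_shares = {
    "urban": share(urban, total),             # reported as 58%
    "land_grant": share(land_grant, total),   # reported as 42%
    "women": share(women, women + men),       # reported as 60%
    "men": share(men, women + men),           # reported as 40%
    "native_english": share(239, total),      # reported as 87%
    "employed": share(223, total),            # reported as 81%
    "job_requires_writing": share(124, 223),  # reported as 56%
}

# Participants whose gender is not among the reported counts.
gender_unreported = total - (women + men)
```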

Descriptive data analyses were conducted for the first two research questions, and multivariate correlational data analyses for the remaining two. The multivariate correlational analysis was conducted in three main steps. First, an Exploratory Factor Analysis (EFA) was used to identify the latent constructs underlying the group of measured variables. Next, a Confirmatory Factor Analysis (CFA) was conducted to establish the “construct validity” of the factors (Brown 2006, p. 2) and as an a priori step for SEM analysis. Finally, Structural Equation Modeling (SEM) was utilized because of its ability to analyze and test theoretical models (Schumacker and Lomax 2004). In this case, SEM is essential for testing and refining the provisional model developed in the qualitative phase.

Based on the factor analyses and provisional model, a hypothetical model was developed. The connections between the factors were adapted from the provisional model while the factors themselves were derived from the EFA. The resulting hypothetical model was then tested using SEM analysis to determine its level of support from the quantitative data. As part of the testing process, the model was revised several times based on the significance values and fit indexes. Two of the factors, feedback provider-knowledge and feedback provider-skill, were dropped from the model due to their weak association with other factors or due to their negative effect on fit indexes. A modified model with satisfactory indexes and significant relationships between its constructs was identified.

Although there is no consensus on a single fit index or set of criteria for evaluating model fit (Hu and Bentler 1999), researchers usually consider the following conventional fit indexes: Chi-square (χ2), Comparative Fit Index (CFI), Tucker Lewis Index (TLI), and Root Mean Square Error of Approximation (RMSEA). In this study, the model fit criteria suggested by Schumacker and Lomax (2004), Hu and Bentler (1999), Browne and Cudeck (1993), and Hatcher (1994) were all considered, and the indexes were at a level indicating that the resulting model appropriately represents the data on which it is based.

Results

Descriptive Data Analysis Results for Research Question 1 and 2: Perceptions and Attitudes Toward Different Characteristics of Written Feedback and Written Feedback Providers

The items related to participants’ perceptions and attitudes toward different feedback characteristics were presented in the questionnaire in five main groups: participants’ need for feedback for different aspects of their paper, their preferences for the delivery of the feedback, their general feedback preferences, their specific feedback preferences, and their attitudes toward critical or negative feedback.

The participants needed feedback most frequently for arguments and justifications in their paper, clarity and understandability of the statements, inclusion and exclusion of information, introduction, and conclusion parts of their papers. In terms of communication mode, 45% had a preference for receiving feedback electronically, 17% preferred to have handwritten comments, and 37% had no preference for either method.

Table 2 shows participants’ preferences for several general as well as specific feedback types. Students tended to favor straightforward, clear, and detailed feedback. They also favored feedback that provides content related resources that support the direction of their papers instead of feedback that tries to change the direction of their paper. The participants preferred feedback that is both positive and critical, and they favored suggestive tones over directive tones. Their preferences for feedback content covered all three areas: content and arguments, organization and flow, and mechanical issues.

Regarding their attitudes toward critical or negative feedback, most participants were more open to rewriting their paper rather than abandoning their paper when they receive very critical or negative feedback. Although more than half of the participants responded that critical or negative feedback affects them emotionally, most participants disagreed that they lose their self-confidence or motivation when they receive critical or negative feedback.

The doctoral students had clear preferences for the kind of feedback they want to receive and tended to favor feedback that gives them clear direction about how to improve their paper. They seek out comments about important elements, such as arguments and justifications.

Participants’ perceptions and attitudes toward the characteristics of feedback providers were examined under two main question groups: the perceived importance of the feedback provider characteristics regarding the participants’ decision to ask for their feedback and their perceptions of the feedback providers in relation to the types of feedback they give. They responded that the feedback providers’ willingness to help; their thinking, organizing, analyzing, and writing skills; and the trust they have for the feedback provider are important when deciding to ask for their feedback. Age and the location of the feedback providers had little importance when participants decided to ask for their feedback. Eighty-eight percent of the participants agreed with the statement that providers’ feedback is influenced by their personality. Most of them (80%) also perceived that the feedback providers have high expectations of the participants when they give critical or negative feedback.

In another question group the participants were asked more questions about their attitudes toward asking for feedback. Nearly all (91%) of the participants responded that they ask others for feedback on their academic papers. Regarding whom to ask for feedback, most participants felt more comfortable asking for feedback from the professors on their committee (91%) and from other doctoral students (75%). By comparison, only 63% felt comfortable asking for feedback from professors outside of their committee. Seventy-two percent of the participants looked for several people to give them feedback. As for when they ask for feedback, 65% of the participants indicated that they look for several feedback occasions at different stages of their papers, and 37% indicated that they ask for feedback only when they cannot improve their paper any further by themselves.

These results show that most of the participants have positive attitudes toward asking for feedback. However, some characteristics of feedback providers are important to the participants when they decide whether to ask for feedback, and the participants perceive the providers’ feedback as linked to characteristics such as personality and expectations.

Multivariate Correlational Data Analysis Results for Research Questions 3 and 4: Relationships Between Factors

A total of 108 items were included at the beginning of data analysis. Questions regarding participants’ demographic and general information (17 items) and the screening question (1 item) were not relevant to this part of the analysis. Also, some items regarding the participants’ written feedback preferences (29 items) were not included either because these items were too specific individually to form meaningful factors by themselves or to form a meaningful factor item under other resulting factors. These were confirmed by conducting several pre-analyses.

According to West et al. (1995, p. 68), an important benchmark is multivariate normality of the variables. Values exceeding a skewness of 2 or a kurtosis of 7 indicate that the normality assumption may be violated. Across all 108 items, only one was severely non-normal (skewness = 2.42, kurtosis = 5.73) and it was excluded from further analyses. Internal estimates of reliability were computed for the remaining 107 standardized items, all of which had 4-point scales. The Cronbach’s alpha level for subscales ranged from 0.84 to 0.87, indicating good reliability.
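The screening rule above can be sketched in a few lines of Python. This is a minimal illustration, not the procedure the authors ran: the function names are hypothetical, the moments are simple population moments, and kurtosis is assumed to be reported as excess kurtosis (0 for a normal distribution), which the text does not state.

```python
from statistics import fmean


def central_moment(values, order):
    """Population central moment of the given order."""
    mean = fmean(values)
    return sum((v - mean) ** order for v in values) / len(values)


def skewness(values):
    """Third standardized moment (assumes the item is not constant)."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Fourth standardized moment minus 3 (assumed convention)."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3.0


def violates_normality(skew, kurt, max_skew=2.0, max_kurt=7.0):
    """Flag an item when either benchmark cited from West et al. is exceeded."""
    return abs(skew) > max_skew or abs(kurt) > max_kurt


# The excluded item reported in the text is flagged through its skewness alone.
print(violates_normality(skew=2.42, kurt=5.73))
```

Because the rule is an "or" condition, an item can be flagged even when its kurtosis stays below 7, as is the case for the excluded item.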

Item analysis using the reliability procedure was conducted with 107 standardized items according to the guidelines of Green and Salkind (2005). Cronbach’s alpha if item deleted values ranged from 0.83 to 0.84, and Corrected Item Total Correlations values ranged from −1.46 to 0.43. Items with the lowest Corrected Item Total Correlations and items with communality scores lower than 0.4 were detected. Based on these analyses, 16 items that negatively affected the internal reliability and communality scores were marked for further examination in the next analysis phase.
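For readers unfamiliar with the statistics named above, the following sketch shows how Cronbach’s alpha and the “alpha if item deleted” value are commonly computed. It uses the standard textbook formula and a tiny made-up response matrix; it is an assumption that the statistical package used in the study applies the same formula, and the function names are hypothetical.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for rows of respondents and columns of items."""
    k = len(responses[0])
    item_variances = [variance([row[j] for row in responses]) for j in range(k)]
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


def alpha_if_item_deleted(responses, index):
    """Recompute alpha after dropping the item at the given column index."""
    reduced = [row[:index] + row[index + 1:] for row in responses]
    return cronbach_alpha(reduced)


# Made-up 4-point responses (5 respondents, 4 items), for illustration only.
toy = [
    [1, 2, 1, 2],
    [2, 2, 3, 2],
    [3, 3, 3, 4],
    [4, 3, 4, 4],
    [4, 4, 4, 3],
]
print(round(cronbach_alpha(toy), 2))
print([round(alpha_if_item_deleted(toy, j), 2) for j in range(4)])
```

An item whose removal raises alpha is a candidate for closer examination, which is the logic behind marking items for the next analysis phase.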

Exploratory Factor Analysis (EFA)

There were three different scales in the questionnaire (agreement, frequency, and importance scales). Although all of them were 4-point Likert scales, they relied on different continua. Since the scales were fundamentally different, separate exploratory factor analyses were necessary to assure internal consistency of factors. The principal components method was used for factor extraction with a total of 107 items. Multiple criteria, specifically a Scree test (Cattell 1966) and parallel analysis (O’Connor 2000, 2009), were used to determine the number of factors to rotate for each group of items (Fabrigar et al. 1999). Ten factors for the agreement scale, four factors for the importance scale, and three factors for the frequency scale were rotated using an oblique rotation procedure (Fabrigar et al. 1999). Factor extraction incorporated the Maximum Likelihood method and rotation employed Direct Oblimin with Delta = 0. Total variance explained was 47% for the agreement scale, 50% for the importance scale, and 58% for the frequency scale, with Goodness of Fit χ2 = 2695.18, df = 1820, p = .00; χ2 = 394.96, df = 167, p = .00; and χ2 = 84.72, df = 42, p = .00, respectively.

After the examination of the pattern matrix and factor loadings, some factors were eliminated based on the number of items in the factors, their items’ cross-loadings on other factors, their meaningfulness and interpretability as factors, and the item loadings of the factors (Brown 2006). The remaining factors were further examined and at least three representative items for each factor were selected (MacCallum et al. 1999). The Appendix shows the selected factors and their representative items that were included in the SEM analysis.

In this study, the ratio of sample size to number of measured variables is 276/35 ≈ 7.9 and the ratio of measured variables to number of factors is 35/10 = 3.5. Therefore the sample size was adequate for the SEM analysis and the factors were overdetermined (Fabrigar et al. 1999; MacCallum et al. 1999). The communalities ranged from 0.45 to 0.81, with a mean value of 0.69, under principal components analysis; they ranged from 0.09 to 1, with a mean value of 0.57, under the maximum likelihood method.

Confirmatory Factor Analysis (CFA)

As an a priori step for SEM, CFA was conducted to establish the construct validity of the factors, regarding both convergent and discriminant validity (Brown 2006). Based on the results of the EFA, a 10-factor model was specified and 35 measured variables were included in the CFA. Because ordinal, noncontinuous indicator variables were included in the model, the Weighted Least Squares (WLS) estimator with theta parameterization was specified during both CFA and SEM analyses. Standardized parameter estimates are presented in the Appendix. Model parameters were all significant (p < .01) and explained substantial amounts of item variance (R2 ranged from 0.36 to 0.94). Composite reliability of a factor, which is a measure of the reliability of a group of similar items that measure a construct, ranged from 0.69 to 0.93. Except for Factor 6, which had a composite reliability of 0.69, all factors had composite reliability higher than 0.7 as recommended by Hair et al. (1998).
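Composite reliability is often computed from standardized factor loadings as the squared sum of the loadings divided by that same quantity plus the summed error variances. The sketch below uses this common formula; that the authors used exactly this variant is an assumption, and the loadings shown are hypothetical placeholders rather than values from the Appendix.

```python
def composite_reliability(loadings):
    """Composite reliability from standardized loadings.

    Assumes uncorrelated errors, so each error variance is 1 - loading**2.
    """
    squared_sum = sum(loadings) ** 2
    error_variance = sum(1 - loading ** 2 for loading in loadings)
    return squared_sum / (squared_sum + error_variance)


# Hypothetical loadings for a three-item factor (not taken from the study).
example_loadings = [0.7, 0.7, 0.7]
cr = composite_reliability(example_loadings)
print(round(cr, 2), cr >= 0.7)
```

Under this formula, three items that each load at 0.7 sit just above the 0.7 recommendation, which gives a sense of how close a value of 0.69 is to the threshold.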

According to these results it can be suggested that the factors found in the EFA may exist. Moreover, the model fit criteria are satisfied. Specifically, CFI = 0.97, TLI = 0.97 (Hu and Bentler 1999), RMSEA = 0.06 (Browne and Cudeck 1993; Hu and Bentler 1999), χ2 = 214.822, df = 108, p = .00 (Hatcher 1994), allowing SEM to proceed. Following is a discussion of the relationship between the factors regarding the nature of the feedback and the student responses to it, the feedback providers, and decisions to act on feedback and engage in revisions. A sample item from each factor is provided as a frame of reference.

The factor with the highest composite reliability score is Attitudes-Critical Feedback. This factor represents the students’ attitudes toward critical feedback, especially the negative effect of the critical feedback on their emotions, self-confidence, and motivation (e.g. “I lose self-confidence when I receive critical/negative written feedback”). The students’ different motivations to write academic papers also formed a factor. The items in this factor are related to students’ desires to contribute to the field and develop their skills and knowledge as an academician (e.g. “My motivation for academic writing is to contribute knowledge to the field”). Department is another factor formed after the factor analyses. Its items are relevant to the perceived opportunities for collaborative writing with faculty members in the department (e.g. “The faculty members often write academic papers with their students”). Beyond these factors, CFA identified factors about students’ attitudes toward Asking for Feedback (e.g. “I look for several people to give me written feedback for my papers”) and the sources of feedback.

Four factors, Provider-Help, Provider-Knowledge, Provider-Skill, and Provider-Personality, all dealt with students’ perceptions and attitudes toward feedback providers’ different characteristics. The items in these factors answered the following main question: “How important are the following characteristics of a person to you when deciding whether or not to ask for their written feedback?” Provider-Help as a factor contains items regarding the feedback providers’ willingness and time to give feedback to students (e.g. “Whether I feel that I won’t be a burden to him/her”) while Provider-Knowledge factor items are about the feedback providers’ knowledge and interest level in the content area of the students’ paper (e.g. “His/her knowledge level in the content area that my paper is about”). Provider-Skill factor items are about the feedback providers’ skills in writing, thinking, organizing, and analyzing (e.g. “His/her writing skills”). Provider-Personality factor items addressed the question from the perspective of both student trust for and personality alignment with feedback providers (e.g. “Whether I trust him/her as a person”). While reactions to feedback providers are important, what students do with feedback is equally important.

The final two factors were related to the students’ revision decisions when they do not agree with feedback. The Revision Decision-External factor included items about students’ consideration of punishment–reward and authority–power relationships when they are revising their papers (e.g. “If I don’t agree with a written feedback comment, before deciding to ignore or use that comment for my revisions I ask myself: Will there be some kind of punishment for not revising this way?”). Revision Decision-Justification, on the other hand, represents a trade-off between student confidence in what they wrote and the merits of the feedback provided (e.g. “If I don’t agree with a written feedback comment, before deciding to ignore or use that comment for my revisions I ask myself: Is there any justification for that feedback?”).

Structural Equation Modeling (SEM)

Based on the provisional model, a hypothetical model was developed with all 10 factors that resulted from the EFA, to be tested in the SEM. Whereas the provisional model’s factors were based on the results of the qualitative data analyses, the hypothetical model’s factors were based on the EFA results and included specific questionnaire items. Direct and mediating relationships between factors were identified. The hypothetical model was then tested with Structural Equation Modeling (SEM) analysis. Only seven of the hypothesized direct effects from the hypothetical model were found to be significant. Several other connections were explored and found not to be statistically significant. The CFI and TLI indexes satisfied the criteria (CFI = 0.95; TLI = 0.95), and according to the RMSEA index, this model has a fair fit (RMSEA = 0.08). The Chi-square criterion was not satisfied (χ2 = 258.122, df = 91, p = .00, χ2/df = 2.84). It was concluded that although the model has a fair fit, it can be improved.
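The two chi-square based quantities reported for the hypothetical model can be checked with a short calculation. The sketch below uses the conventional point-estimate formula for RMSEA with N − 1 in the denominator; this is an assumption, since software that uses weighted least squares estimators for ordinal indicators may apply adjusted formulas, so published values need not be reproduced exactly. The function names are hypothetical.

```python
from math import sqrt


def chi_square_ratio(chi2, df):
    """Relative chi-square: the chi-square statistic per degree of freedom."""
    return chi2 / df


def rmsea(chi2, df, n):
    """Conventional RMSEA point estimate (assumed formula, N - 1 variant)."""
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Values reported in the text for the hypothetical model; N = 276 participants.
print(round(chi_square_ratio(258.122, 91), 2))
print(round(rmsea(258.122, 91, 276), 2))
```

With the reported statistics, both results agree with the χ2/df = 2.84 and RMSEA = 0.08 given above after rounding to two decimals.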

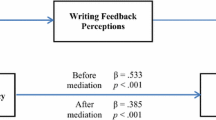

With the provisional model in mind, the model was modified several times by deleting weak connections between factors and by observing the effect of the changes on the model modification indices and the statistical significance of the parameter estimates. The CFI and TLI indexes of the modified model satisfied the criteria, CFI = 0.96; TLI = 0.96 (Hu and Bentler 1999). According to the RMSEA index, this model has a fair fit, RMSEA = 0.08 (Browne and Cudeck 1993). The Chi-square criterion, however, was not satisfied, χ2 = 1345.002, df = 448, p = .00 (Hatcher 1994; Schumacker and Lomax 2004). Based on the satisfaction of most of the model fit criteria, the model’s strong relationship with the qualitative data analysis, and the statistical significance of the estimates, the model presented in Fig. 2 is deemed representative of the data.

In SEM terminology, exogenous variables or factors are similar to independent variables. They originate paths but they do not receive them. Endogenous variables or factors, on the other hand, are similar to dependent variables in that they receive paths or influence from exogenous variables. They differ from dependent variables in that they may also originate paths to other variables and factors (Raykov and Marcoulides 2000). In this study, the exogenous factors were Department, Attitudes-Critical Feedback, and Provider-Personality. The endogenous factors were Motivations, Asking for Feedback, Provider-Help, Revision Decision-Justification, and Revision Decision-External.

The model is recursive, which means the arrows lead in one direction rather than showing bi-directional relationships. There is a mediated relation between Department, Motivations, Asking for Feedback, and Provider-Help, in which Motivations mediates the effect of Department on Asking for Feedback, and Asking for Feedback mediates the effect of Motivations on Provider-Help. Therefore, Motivations and Asking for Feedback are mediating factors, although only the mediating effect of the former is significant. However, in the mediated relation between the factors Attitudes-Critical Feedback and Provider-Help, the Asking for Feedback factor serves as a significant mediator.
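In path models, a mediated (indirect) effect is conventionally quantified as the product of the coefficients along the chain of paths. The sketch below illustrates only that arithmetic. The coefficient values are hypothetical placeholders chosen to match the signs described in the text, not estimates from this study, and the function name is an assumption.

```python
from math import prod


def indirect_effect(path_coefficients):
    """Indirect effect along a chain: the product of its path coefficients."""
    return prod(path_coefficients)


# Hypothetical standardized coefficients (placeholders, not study estimates):
# Attitudes-Critical Feedback -> Asking for Feedback (negative)
# Asking for Feedback -> Provider-Help (negative)
critical_to_asking = -0.3
asking_to_help = -0.2

effect = indirect_effect([critical_to_asking, asking_to_help])
print(effect > 0)  # two negative paths yield a positive indirect effect
```

The sign logic is the useful part: when both links in a chain are negative, the implied indirect effect is positive, whatever the magnitudes turn out to be.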

According to this model, participants’ perceptions toward the opportunities to write academic papers with faculty members in their departments directly and positively influence their motivations for academic writing, specifically regarding improving and contributing to the field and improving themselves as academicians. Moreover, it positively and indirectly influences participants’ attitudes toward asking for written feedback for their academic papers from several people and several times, which is also positively affected by their described motivations.

Participants’ attitudes toward critical written feedback, especially the negative effect of the critical feedback on their emotions, self-confidence, and motivation, negatively influence their attitudes toward asking for feedback. Moreover, these attitudes positively influence their revision decisions related to the frequency of their consideration of punishment–reward issues, authority–power issues, and the underlying motivation issues in the feedback. These revision decisions are also influenced by the participants’ attitudes toward the feedback providers’ personality when considering asking for their feedback.

Furthermore, doctoral students’ tendency to ask for more written feedback negatively influences their attitudes toward the feedback providers’ ability to dedicate time and their willingness to help. The latter is also indirectly influenced by participants’ attitudes toward critical or negative written feedback. Participants’ revision decisions, specifically the frequency of their consideration of the justification in the feedback, the need for revision, and their confidence level in what they wrote when they do not agree with the feedback, are positively influenced by their motivations for improving the field and improving themselves as academicians. The model will be described in detail in the next section.
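The structure described in the preceding paragraphs can be written down as a small directed graph to check the exogenous and endogenous classification and the recursive (acyclic) property. The edge list below is one reading of the prose description, not a transcription of the authors’ figure, and the function names are hypothetical.

```python
# Directed paths as read from the prose description (an interpretation).
PATHS = [
    ("Department", "Motivations"),
    ("Motivations", "Asking for Feedback"),
    ("Motivations", "Revision Decision-Justification"),
    ("Attitudes-Critical Feedback", "Asking for Feedback"),
    ("Attitudes-Critical Feedback", "Revision Decision-External"),
    ("Provider-Personality", "Revision Decision-External"),
    ("Asking for Feedback", "Provider-Help"),
]


def classify(paths):
    """Exogenous factors only originate paths; endogenous factors receive them."""
    sources = {source for source, _ in paths}
    targets = {target for _, target in paths}
    return sources - targets, targets


def is_recursive(paths):
    """True when the graph has no cycles (checked with Kahn's algorithm)."""
    nodes = {node for edge in paths for node in edge}
    indegree = {node: 0 for node in nodes}
    for _, target in paths:
        indegree[target] += 1
    ready = [node for node in nodes if indegree[node] == 0]
    visited = 0
    while ready:
        node = ready.pop()
        visited += 1
        for source, target in paths:
            if source == node:
                indegree[target] -= 1
                if indegree[target] == 0:
                    ready.append(target)
    return visited == len(nodes)


exogenous, endogenous = classify(PATHS)
print(sorted(exogenous))
print(sorted(endogenous))
print(is_recursive(PATHS))
```

Under this reading, the three factors without incoming paths are the same three that the text names as exogenous, and no path leads back to an earlier factor.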

Discussion

Limitations of the Study

Before discussing the findings, the following limitations of this study should be considered.

1. The use of convenience sampling procedure decreases the generalizability of the findings. The data were collected in only two large universities, which were both research institutions with extensive social science graduate programs.

2. The participation rates for the questionnaire are 21% for the urban university and 35% for the land-grant university. The participation rate for the interviews is 54%. Some students may have chosen not to respond to the questionnaire. There is no information that the remaining doctoral students have the same perceptions and attitudes as the participants in this study.

3. The validity and reliability of the results of this study are limited to the honesty of the participants’ responses to the interviews and the questionnaire.

4. Although the SEM analysis results indicated adequate fit, the final model is not the only model that fits the data. Chi-Square Test of Model Fit criterion was not satisfied although CFI and TLI indexes met the specified criteria. RMSEA value also showed that the model has a fair fit but not a close fit. Therefore, improvements can be made to the final model.

5. Students from the fields of business and economics were left out of the interview stage to make the qualitative data collection and analysis tasks more parsimonious. As a result, the questionnaire and SEM model built on the questionnaire data may not accurately reflect the feedback practices from these disciplines.

Perceptions and Attitudes Toward Different Characteristics of Written Feedback and Written Feedback Providers

Some of the descriptive analysis results were parallel to the findings of Eyres et al. (2001). Most of the doctoral students participating in this study also preferred to have specific comments to support their arguments and to increase clarity. In addition to the content or nature of the feedback, students frequently considered the tone of the feedback, preferring a balance of positive and critical feedback. This aligns with Bolker’s (1998) suggestions that doctoral advisors be careful about the tone of the feedback, start their feedback with positive comments before the critical ones, and give very negative feedback in a gentle way.

Even though 62% of the students reported that critical and negative feedback affects them emotionally, only about half of these participants lose their self-confidence or motivation after receiving such feedback. This is an indication that some of these students might be able to control their emotions toward critical or negative feedback; however, the remaining students might need additional support. One factor that can improve students’ self-confidence in their writing and reduce the negative effects of critical and negative feedback is the practice of giving and receiving critiques over a period of time (Caffarella and Barnett 2000).

Results showed that most participants wanted to feel comfortable soliciting feedback, and did not want to feel like a burden to feedback providers. Students sought potential feedback providers with time and who were willing to help. They feel more comfortable asking for feedback from professors in their committees than those who are not in their committees. Although this describes a majority of the participant reactions to and perceptions of feedback providers, it does not describe all of them. SEM analysis results indicated that active seekers of feedback are less concerned about the feedback providers’ willingness or time to give feedback.

According to previous studies in the literature, doctoral students want feedback for multiple drafts (Eyres et al. 2001) and their confidence improves as they receive ongoing feedback (Caffarella and Barnett 2000). In this study, however, about 35% of the participants reported that they do not look for different feedback occasions at different stages of their writing and they wait until they cannot improve their paper any further by themselves. Based on the SEM results, this situation might be related to perceived opportunities to write academic papers with faculty members and their attitudes toward critical feedback. Students perceiving few opportunities to engage in collaborative writing activities with faculty members may lose their motivation to contribute to the field with academic writing, improve themselves as academicians, and have recognition in the field. This, in turn, negatively influences their feedback seeking attitudes. Such students see sharing their writing as a risk. As one interview participant explained, “I tend not to show my work if I feel like it’s not ready…because I don’t want to expose myself to that level.”

Doctoral students placed value in more general characteristics of feedback providers. Based on the descriptive data analysis, students seek feedback from providers who exhibit high quality thinking, organizational, analytical, and general writing skills. In fact, the skills of feedback providers were more important than content knowledge when students considered potential feedback providers. The lack of emphasis on content knowledge makes sense given that some of the valued forms of feedback were much more content neutral. Students valued comments about clarity of writing, consistency of the overall paper, and organization or flow of the paper. This is encouraging for those seeking to collaborate across departments or programs, and suggests that interdisciplinary feedback opportunities can be useful for students’ academic writing alongside feedback from the students’ own program.

The Influence of Perceived Opportunities to Write Academic Papers with Faculty Members

According to the SEM analysis results, the doctoral students’ perceptions toward available opportunities to write academic papers with faculty members influence their motivations for academic writing to contribute to their field, improve themselves as academicians, and have recognition in the field. This relationship can be explained in the framework of Situated Learning and Communities of Practice (Lave and Wenger 1991). Participation in the actual practices and the activities of the full participants can help doctoral students to improve their knowledge and skills, learn the criteria and conventions of the discipline, and build their identity in the academic community. The available opportunities to write academic papers with faculty members imply the available opportunities to participate with the support of experienced community members to produce publishable products. Perceived availability of this support, therefore, might shift their motivations to write academic papers toward becoming a full participant by contributing knowledge to the field; sharing their ideas and findings with other members; gaining experiences, skills, and knowledge as academicians; and building recognition in the field.

Peters (1992) also listed several advantages of graduate students coauthoring with their advisors. Aside from providing guidance for producing good quality papers, coauthoring may make it easier for the students’ papers to be published and may increase the students’ reputations if their advisors are well-known in the field. However, when there are problems with students’ integration into the academic communities, it not only becomes harder to obtain these outcomes, but it can even lead to student attrition (Lovitts 2001). The following interview participant’s opinions illustrate this relationship:

It’s [academic writing] obviously enjoyable because I get to do the research that I want with certain faculty members, and then of course the products out of that are writing and publication and also presentations at conferences…. I think they really do a good job here preparing us for that mentality of, really, if you wanna academic position then, you need to publish, so, that’s really what they push here…having those opportunities, we’re very productive department, so you know, everyone’s always writing some sort of grant or some sort of publication, do some sort of research, and so, just asking to be part of that is really nice.

In the process of developing their identity in the academic community, doctoral students can make use of the opportunities to write with more experienced writers and researchers who have similar motivations and interests. This will further increase their motivations to integrate into the academic communities of their disciplines.

SEM analysis results also showed that doctoral students’ motivations for engaging in academic writing activities to contribute to the field, improve themselves as academicians, and have recognition in the field positively influence their attitudes toward actively asking for written feedback. The implication is that students see feedback as a valuable preliminary step in achieving their goal of academic recognition through publication. One participant explained his purpose of asking for written feedback from his committee members and chair as follows:

Maybe because I wanna get through like preliminary peer review. If my committee and mentors, if they like it and they stamp it and say “yep, this is good”, then when I submit it for publication, it has a better chance of being accepted or published.

The perceived opportunities to write academic papers with faculty members were also found to have a significant but indirect influence on the participants’ attitudes toward actively asking for feedback. It can be suggested that faculty members’ perceived attitudes toward doctoral students’ collaborative participation in academic practice might also encourage students to ask for more help in the form of written feedback. One of the interview participants stated:

I have two professors now that have said “we’ve got to write an article together as soon as you get this [dissertation] edited. And so, I am learning humility I think. I am learning from them how to say this “I really need feedback on it” and…when they start the crossing out and the revising in that, I am learning to think “wow, you really did make this better, this is really great!”

Another possible reason is that since collaborative writing requires a lot of communication between authors, it is expected that the doctoral students ask for more frequent feedback from their coauthors. Furthermore, these collaborative writing activities and feedback practices might help to build the collaborative relationship between the student and the faculty, and the student might feel comfortable asking for the faculty’s feedback frequently, as one of the interview participants explained below.

I think the more, the better relationship you have, then the more closely you work with those people and that just kind of sets in motion that cycle of going to those people for more feedback and more feedback and then you forge those relationships.

Consequently, it is possible that developing a colleague-to-colleague relationship with the students, coauthoring academic papers with them, and giving and receiving feedback might encourage students to ask for feedback more actively and frequently when students are motivated to contribute to the field, improve themselves as academicians, and develop their reputation in the field.

The Influence of Doctoral Students’ Attitudes Toward Critical or Negative Written Feedback

Based on SEM analysis results, the participants’ attitudes toward critical or negative feedback regarding their emotions, self confidence, and motivations influence their attitudes of actively asking for feedback. When doctoral students are scared to have critical or negative feedback, and when they feel embarrassed, lose self confidence and motivation after receiving critical or negative feedback, they refrain from actively asking for feedback. As described by one participant, this may be associated with the beginning phases of study: “When I was a new graduate student…. I was scared to ask for feedback, ‘cause…. I was scared about getting negative feedback.” In the reverse case, when students feel that they are less affected by critical or negative feedback they actively ask for feedback from several people and on several drafts. One such interview participant explained the following:

…you just need to learn to take it and say, “ok what can I take away from this, how can I improve?”…. I think, as the more feedback I get the happier I am because…it’s just, it makes me a better writer and even if I reject some of the feedback, it’s still useful, because, I can see where they’re coming from, and I can see why they’ve said it.

Although further research is needed, the implication is that the emotional reactions to negative feedback diminish with experience.

Most of the interview participants in Lovitts’ study (2001) who did not complete their doctoral degree reported that they had limited academic and social interaction with the faculty and their advisors. Their interaction with other doctoral students also diminished over time. They reported that faculty were not open to students and not interested in building relationships with them. Faculty were seen as cold and intimidating, and as too busy or not available for them. On the other hand, degree completers reported good experiences with faculty with whom they had academic and social interactions, who were friendly and open, who were interested in students’ progress and cared about them, and who were available and had time for the students. The degree completers found their advisors very helpful. Among their reasons for satisfaction with their advisors, some noted the useful and quick feedback they received from them.

Consequently, just as some students’ emotions, self confidence, and motivations are negatively affected by critical or negative feedback, it is possible that they are also affected by the feedback providers’ attitudes toward them, especially their helpfulness or negligence. This, in turn, may bear on students’ decisions to ask a given person for feedback and therefore may influence their future academic relationship with that person.

The Influence of Doctoral Students’ Feedback Seeking Attitudes

Regarding the significant but negative influence of students’ attitudes toward asking for feedback on their attitudes toward the feedback providers’ willingness and time to provide feedback (an SEM analysis finding), it can be suggested that students with an active feedback-seeking attitude give less weight to potential feedback providers’ willingness or available time when they decide whether to ask for feedback.

I would like more, I don’t think there is ever enough feedback, and you know, if there is a way to get more, I would be open to it, but everybody gets really busy with their lives and I think in their eyes, we get to a point where you know, they wanna set us free and have us fly on our own eventually and so the feedback tends to, well actually to the dissertation process, it has been great. You know, but I know that that will end soon.

Walker et al. (2008) reported that some of the faculty who participated in their study explained that they spend more time with students who are “proactive” (p. 107). Considering this, it can be suggested that faculty might spend more time with students who actively seek feedback.

The Influence on Revision Decisions

According to the SEM analysis results, the participants’ motivations for academic writing to contribute to the field and improve themselves as academicians positively and significantly influence some of their revision decisions when they do not agree with the feedback. When students’ motivations for academic writing are focused on contributing to the field and improving themselves as academicians, this leads them to frequently question the justification in the feedback, the need for revision, and their confidence in what they wrote. As an example, an interview participant whose motivations for academic writing are to share his ideas with others and to improve his vita for job applications described the issues that affect his decisions to accept or reject a feedback comment as follows:

Do I agree with their justification. Because if I do, then the cause is good, and then I’ll take a look at what their specific suggestion is, if they, cause they may say “revise this” and then they’ll say, “and this is how”. Well, those are two separate things. So, their justification will help me accept whether or not it needs revision, then I can evaluate their suggestion to see if I want to do it that way or not.

Foss and Waters (2007) stated that how students manage feedback affects their professional images. They suggested that doctoral students listen to critiques and suggestions and accept feedback; however, they also suggested that students respond to these critiques and suggestions, defend their ideas, and negotiate for revisions when necessary. Accordingly, the result of this study can be interpreted to mean that as students become more motivated to develop their academic identity through their writing, they increase their competence in defending their ideas and thus engage in “scholarly behavior”.

The SEM analysis results also showed that participants’ attitudes toward critical or negative written feedback, especially the effect of feedback on their emotions, self confidence, and motivations, influence their revision decisions when they do not agree with the feedback. When the negative effect of the critical or negative feedback on their emotions, self confidence, and motivations is stronger and when they receive feedback that they do not agree with, this influences them to more frequently consider the issues of punishment–reward, authority–power relationships with the feedback provider and feedback providers’ motivation for giving that feedback. This suggests that the negative emotional effect of feedback might lead students to think more about the conditions under which the feedback is given. As an example, a participant stated:

Sometimes journal reviewers give you snotty feedback (laughing). It’s very, kind of rude, kind of harsh, more putting you down and, you know, kind of makes you wonder if there is some other agenda.

Similarly, Eyres et al. (2001) reported that doctoral students considered the feedback providers’ motivations while examining feedback. They appreciated feedback when they perceived that the feedback provider was trying to be helpful. As for managing criticism and disagreement with feedback, Li and Seale (2007) suggested that the supervisor and the supervisee minimize situations that may cause embarrassment, manage power relations, and communicate on the basis of mutual respect and politeness.

Descriptive data analysis showed that trust in the feedback provider and the provider’s perceived responsibility were important to participants. Personality may in fact both assist and hinder the reception of feedback: “For example, the person’s characteristics, personality will affect what I will fix in the paper. And perhaps, sometimes I don’t like some feedback because I don’t like the person who gave me the feedback.” Perceptions of the feedback provider can also establish punishment–reward, authority–power relationships that call into question the motivations for the feedback itself. At times, these can be quite damaging:

I think intimidation by that person causes you to be a little bit more defensive. And maybe that mentally affects how you consider fixing what they’re telling you to fix. And if you feel more intimidated, or you feel like they’re sort of trying to put you down, or put you in your place then, then maybe you become, you become more of a person, that’s offended by that.

In short, the conditions under which the feedback is given, the feedback provider, and students’ attitudes affect the students’ revision decisions.

As presented in this study, several factors affect doctoral students’ written feedback practices, including their attitudes toward different types of feedback and their relationships with the feedback providers. Some of the relationships among these factors were explored and discussed in this article. These findings have important implications for theory and practice, and further research would be useful to retest or extend them, as discussed in the following sections.

Implications

Written feedback is one of the most important instructional communication methods between doctoral students and other members of the academic community. It is part of the dialogue that holds messages about the practices of this community. Doctoral students may perceive these messages differently, they may attach other messages to feedback through their attitudes, and they may use feedback in various ways in their revisions. The theory and practice of effective written feedback design for doctoral students’ academic writing require an understanding of how these factors relate to each other, so that feedback can support students in improving their writing and increase their motivation to participate in the practices of the community. This study has been conducted to provide an explanatory model that shows the relationships of several factors related to students’ written feedback practices. It has important implications for research and theory.

While important contributions have already been made in the literature regarding the perceptions and attitudes of doctoral students toward written feedback, they have been piecemeal, examining only part of the complex relationships examined in this study. The presented model provides a clearer and more comprehensive picture of the relationships between the identified factors. Researchers investigating one or more of the constructs in the model can utilize this information for several kinds of analyses, including correlation, regression, the selection of meaningful covariates for ANCOVA, or extensions of the SEM done in this study. The instrument used proved both reliable and valid for this particular sampling frame. It is available to those wishing to measure the same constructs, but replication work is needed to determine the extent to which both the instrument and the model remain robust with different populations.

In addition to exploring the relationships between factors previously studied in isolation, this work is informed by the pragmatic solutions that have evolved over time, as judged by those receiving the feedback, meaning the doctoral students themselves. The results are practically significant to those providing the feedback. Faculty, supervisors, journal editors, and peers have no doubt developed their own intuitive and pragmatic frameworks about how to support doctoral students’ academic writing. However, by examining the resulting model, they might be led to consider carefully how they craft their feedback so that it leads to meaningful revisions and furthers the shared goal of improving academic writing. Feedback providers may consider the list of feedback characteristics that most of the participating doctoral students preferred to receive in this study. The list will be useful when feedback providers do not know much about their students’ preferences for written feedback. They may individualize their feedback by using the questionnaire developed in this study to learn more about their students’ written feedback preferences.

An important implication of the model for practice concerns the effect of doctoral students’ co-authorship with faculty and the subsequent increase in students’ motivations to contribute to the field with their writing. Considering the positive effect of co-authorship on motivation, faculty should be encouraged to write with their students in a colleague-to-colleague relationship to motivate them to engage in academic writing toward becoming full participants in the academic community. They should inform the students about the collaborative writing opportunities in departments and invite students to contribute. Engaging in collaborative writing may influence students to become more active feedback seekers, not only to improve their knowledge and skills but also to improve the co-authored piece. One suggestion for faculty is to allocate certain time blocks each week to provide regular feedback on students’ writing. This will not only help individual faculty to organize and regulate feedback activities for these students, but may also give hesitant students the courage to ask for feedback, such as students who are not yet active feedback seekers, who are negatively affected by critical or negative feedback, or who perceive that the faculty do not have time for them or are not willing to help. Setting such time blocks will allow and encourage students to get regular feedback from different sources.

In these sessions, faculty may provide feedback incrementally, focusing on the most important issues first and addressing other issues in subsequent revisions. This way, students who are negatively affected by critical or negative feedback will not be overwhelmed by the number of critical comments. Examining subsequent versions of the paper regularly and providing one-on-one oral feedback sessions based on written feedback will also allow faculty to detect how their feedback is being used and to identify the patterns of effective revision.

Aside from these feedback sessions, there should be support structures at the department, discipline, or institution level for students who are negatively affected by critical or negative feedback. As these students are usually not active feedback seekers and, during their revisions, frequently consider the external conditions under which the feedback is given, safe environments should be provided for them early in their program to receive regular feedback and to revise their papers. Writing groups are useful support systems that provide such safe environments for them to practice giving and receiving feedback. Involvement in the activities of writing groups can also broaden the opportunities for collaborative writing with peers. Departmental and administrative support is necessary to organize written feedback time blocks for each faculty member and to manage writing groups.