Abstract

Objectives

The objectives of the present study are to investigate the precision of static (fixed-length) short forms versus computerized adaptive testing (CAT) administration, response pattern scoring versus summed score conversion, and test–retest reliability (stability) of the Patient-Reported Outcomes Measurement Information System (PROMIS®) pediatric self-report scales measuring the latent constructs of depressive symptoms, anxiety, anger, pain interference, peer relationships, fatigue, mobility, upper extremity functioning, and asthma impact with polytomous items.

Methods

Participants (N = 331) between the ages of 8 and 17 were recruited from outpatient general pediatrics and subspecialty clinics. Of the 331 participants, 137 were diagnosed with asthma. Three scores based on item response theory (IRT) were computed for each respondent: CAT response pattern expected a posteriori estimates, short-form response pattern expected a posteriori estimates, and short-form summed score expected a posteriori estimates. Scores were also compared between participants with and without asthma. To examine test–retest reliability, 54 children were selected for retesting approximately 2 weeks after the first assessment.

Results

A short CAT (maximum 12 items with a standard error of 0.4) was found, on average, to be less precise than the static short forms. The CAT appears to have limited usefulness over and above what can be accomplished with the existing static short forms (8–10 items). Stability of the scale scores over a 2-week period was generally supported.

Conclusion

The study provides further information on the psychometric properties of the PROMIS pediatric scales and extends the previous IRT analyses to include precision estimates of dynamic versus static administration, test–retest reliability, and validity of administration across groups. Both the positive and negative aspects of using CAT versus short forms are highlighted.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

Introduction

The Patient-Reported Outcomes Measurement Information System (PROMIS®) is a National Institutes of Health (NIH) initiative created to advance the assessment of patient-reported outcomes (PROs) in chronic diseases. Items are evaluated using item response theory (IRT) to derive item banks with scores that are theoretically reliable and valid along the full spectrum of the latent trait [1]. A primary objective is to develop item banks and computerized adaptive testing (CAT) potentially applicable across a variety of chronic disorders [2]. An additional objective has been to develop multiple unidimensional static (fixed-length) short forms in addition to dynamic CAT administration of the item banks.

During the past 10 years, the PROMIS Pediatric Cooperative Group has developed pediatric self-report item banks with polytomous items for ages 8–17 years across five generic health domains (physical functioning, pain, fatigue, emotional health, and social health) consistent with the larger PROMIS network [3]. It was anticipated that measures of these five generic health domains would be applicable across pediatric chronic health conditions, so generic or non-disease-specific scales were developed [4–10]. These five generic health domains have thus far been further delineated into the eight latent constructs of depressive symptoms, anxiety, anger, pain interference, peer relationships, fatigue, mobility, and upper extremity functioning [4–10]. An asthma-specific measure has also been created [11, 12].

The items were initially developed through an extensive review of the literature, expert review, and qualitative methods (focus groups and cognitive interviewing) [13, 14]. Subsequent quantitative methods utilized IRT procedures to develop item banks on a common metric with polytomous items, minimizing local dependence of items and differential item functioning, in addition to creating unidimensional static (fixed-length) short forms of the latent constructs [4–11]. All of the static short forms created consist of 8 items, except for fatigue (10 items) and anger (6 items). However, to date, the precision of different administration and scoring methods (static short forms versus CAT) and test–retest reliability (stability) have not been reported for these PROMIS pediatric self-report scales.

Consequently, the objectives of the present study are to investigate the precision of different scoring and administration options, including the measurement properties of static short forms versus dynamic CAT administration of the item banks and response pattern scoring versus summed score conversion. We also evaluated the test–retest reliability of these recently developed PROMIS pediatric scales measuring the nine latent constructs of depressive symptoms, anxiety, anger, pain interference, peer relationships, fatigue, mobility, upper extremity functioning, and asthma impact.

Method

Data collection

Participants (N = 331) were recruited between September 2009 and March 2010 from outpatient general pediatrics and subspecialty clinics of two public universities in North Carolina (N = 267) and Texas (N = 64). To be eligible for the study, participants had to be between the ages of 8 and 17; able to speak and read English; and have the ability to interact with a computer screen, keyboard, and mouse. Children were excluded from the study if the researcher determined they had a medical or psychiatric condition that precluded participation or a cognitive or other impairment that would interfere with completing the survey. Children with asthma comprised approximately 40 % of the sample (N = 137). Children’s asthma status was self-reported by their parents or caregivers. To be considered, children had to receive a physician diagnosis of asthma and to be using asthma medication at the time of the study.

Research assistants approached parents of children between the ages of 8 and 17. In addition, informational recruitment fliers, brochures, and a study poster were placed in clinic waiting rooms. Researchers provided a brief explanation of the study, and if the participant was willing and eligible to participate, administered consent and assent forms.

All participants completed the majority of the survey on a computer. Surveys were usually completed before and/or after the child’s clinic visit; however, an appointment could also be scheduled for another time if the participant preferred. Parents were asked to answer a few demographic items and for the asthma sample, questions about their children’s asthma status. Children with asthma completed the Asthma Control Test [15, 16] and the Pediatric Asthma Quality of Life Questionnaire [17] on paper while their parent answered the computer-based questions. Then, the child completed the remainder of the survey (i.e., all PROMIS items) on the computer. It took participants approximately 15–30 min to complete the survey, and they received a gift card for participation.

To examine test–retest reliability (stability), a subset of the children (N = 130) were invited for retesting. Participation in the follow-up study was not required, and a target sample size of approximately 50 was set, after which recruitment was discontinued. Fifty-four children completed a second assessment (Time 2) approximately 2 weeks after the first assessment (Time 1). Children were ineligible to participate in the follow-up if they were experiencing an acute illness at enrollment. Research assistants contacted parents of eligible children by phone to initiate the follow-up. If children were not sick at the time of the follow-up phone call, they were asked to complete the online survey by accessing it from their home computers.

Domains and items

All children responded to items from eight of the PROMIS pediatric scales: depressive symptoms, anxiety, anger, pain interference, peer relationships, fatigue, mobility, and upper extremity. In addition, the asthma impact scale was administered to children with asthma. All items used a 7-day recall period and one of the two sets of standardized 5-point response options: never, almost never, sometimes, often, and almost always, for all scales except the physical functioning scales (mobility and upper extremity); with no trouble, with a little trouble, with some trouble, with a lot of trouble, not able to do for the latter scales. The scoring directions of all scales are suggested by their names; higher scores on peer relations, mobility, and upper extremity indicate better functioning, whereas higher scores on depressive symptoms, anxiety, anger, pain interference, fatigue, and asthma indicate poorer functioning.

Administration and scoring

Because one goal of this study was to compare the results obtained with fixed or static short forms with results from CAT administration, item administration was arranged so that the participants received both short form and CAT scores. This was accomplished by administering the CAT first; then any items on the recommended short forms that had not been included in the child’s adaptive test were administered as well. Thus, for each measure, the participants first answered the items that were administered using CAT, and then they were also administered all of the items on the previously published static short forms. If they were administered one of the short-form items as part of the CAT, they were not administered this item again, but their response to this item was used to create both their CAT and short-form scores. All short forms contained 8 items, except fatigue (10) and anger (6), in the published static short forms [4–11].

The CAT was administered using the PROMIS Assessment Center software [18], with maximum posterior weighted information item selection, and stopped when the posterior standard deviation dropped below 0.4 standard units; the CAT administered a minimum of 5 and a maximum of 12 items. Short forms were those recommended in the original development of the scales [4–11]. There was no CAT for the anger scale, because that bank only has six items, all of which were administered as its short form [8]. The second test administration for the retest sample had the same structure as the first; the CAT was completed first, followed by items to complete the short form for each domain.

Three scores based on IRT were computed for each respondent: CAT response pattern expected a posteriori (CATuEAP) estimates, short-form response pattern expected a posteriori (SFuEAP) estimates, and short-form summed score expected a posteriori (SFxEAP) estimates; all are estimates of the latent domain score (see [19] for the IRT scoring algorithms).

Statistical and psychometric analysis plan

The precision of the three types of scores was examined using the posterior standard deviations that are reported as the standard errors of measurement of the scores and root mean square errors (RMSE), the square root of the average error variance across all participants. These values were examined graphically to determine how the precision varied at different levels of the latent construct.

To check the extent to which the CAT system administered fewer or more items than are on the short forms, the distribution of CAT test lengths was summarized with descriptive statistics. The overlap of item administration between the CAT system and the short forms was investigated by computing the proportions of CAT items that were also short-form items, and the proportion of short-form items administered by the CAT system.

Test–retest reliability was assessed by computing the correlations between scores from Time 1 and Time 2 for all three scoring methods for the nine PROMIS pediatric domains. For comparison, simulation was used to compute an IRT analog to classical internal consistency reliability. There are several approximate methods to estimate reliability for scales built with IRT [20]; however, a method more straightforward than any formula is to simulate item response data and compute the squared correlation of the IRT score estimates with the generating values of the underlying score. That was done for these scales, using simulation sample sizes of 30,000.

Correlations between CAT and short-form scores were computed for the Time 1 forms to investigate their comparability. Relationships among the nine scales were examined by computing correlation coefficients among the scores. Group differences analyses using t statistics for the comparison of children diagnosed with asthma versus the other children were computed as standardized effect size estimates, for the three scoring methods, to investigate whether one scoring method might be more responsive to group differences than others.

Results

Participants

A total of 331 children were recruited and administered the questionnaires at Time 1. Of these 331 children, 137 were diagnosed with asthma. Table 1 describes the background characteristics of the study participants.

Of the final study sample, a slight majority were female (n = 170, 51.4 %) and between the ages of 8–12 years (n = 184, 55.6 %), with an average age of 12.1. The largest racial group was White (n = 162, 48.9 %), with representative numbers from Black (n = 130, 39.3 %) and Hispanic (n = 35, 10.6 %) participants. The asthma and non-asthma groups did not differ in gender or age. However, the proportions of African-American and Hispanic participants were higher in the asthma group (p = 0.04 and 0.03, respectively).

The majority of guardians who completed the demographic items were the children’s parents (n = 311, 94.0 %), with most of these being the child’s mother (n = 275, 83.1 %). Most of the parents had either some college education (n = 122, 36.9 %) or a college or advanced degree (n = 108, 32.6 %). Majority of children (n = 185, 55.9 %) were described by their parents as having health problems other than or in addition to asthma. The most commonly reported other health problems were attention deficit disorder (n = 73, 22.1 %), being overweight (n = 46, 13.9 %), being born prematurely (n = 34, 10.3 %), and mental health disorders (n = 33, 10.0 %). On average, children with asthma experienced more additional health problems (0.99 compared to 0.75 for children without asthma, p = 0.03). However, there were no significant differences in the numbers experiencing the most common other health problems.

Score distributions

Figure 1 displays histograms of the CAT and short-form scores across all nine domains. Several results are clear from Fig. 1. First, all scoring distributions are roughly the same for the three types of scores within each domain. The two types of scores based on the short forms (response pattern EAPs and summed score EAPs) are most similar; this is to be expected, because these scores are computed from the same responses. Second, all domains show either a floor or ceiling effect. Domains in which higher scores indicate higher functioning (peer relations, mobility, and upper extremity) display a ceiling, whereas domains in which higher scores indicate lower functioning have a floor. Third, the adaptive nature of the CAT is evident from the histograms. The score range tends to be greater for the CAT than the short forms; this is because an adaptive test is better able to measure the functioning of people falling at the low or high ends of the scale. Using the short forms, frequencies are more likely to accumulate at the maximum (or minimum) scores, whereas using the CAT allows for more nuanced measurement at the extreme ends of the scale.

Short form versus CAT scores

Correlations between CAT and the fixed-length short-form scores were computed from the Time 1 data (see Table 2). All correlations were very high. As expected, the short-form scores were more highly correlated with each other than they were with the CAT scores, but all correlations exceed 0.93.

Precision of measurement

Figure 2 shows the IRT standard errors for each of the three types of scores plotted against the scores. RMSE (the square root of the average squared standard errors) is shown in the lower right hand corner of each plot. RMSE for summed score EAPs is only slightly greater than that for the response pattern EAPs, indicating that minimal precision is lost in using the summed score EAPs for the short form.

In the middle range of the latent constructs, the standard errors for the CAT scores are greater than those for the summed score and response pattern EAPs for the short forms. This is due to the stopping rule of the CAT, which meant that very few items were administered to people falling in this mid-range. However, at the extremes of some scales, the CAT standard errors drop below the short-form scores, indicating that the CAT has adapted to the participant’s level on the latent construct and provided slightly more precise measurement for people scoring at the high or low ends.

Items administered by the CAT system

Table 3 describes the item selection of the CATs relative to the short forms in more detail. The first four columns of Table 3 summarize the numbers of items administered by the CAT system. The minimum number of items was usually 5, as prescribed (there was one participant who only completed 4 items, for pain interference), and the maximum was always the upper limit, 12 items. The range of average CAT length was 6.2–10.7 items, so on average the CAT administered about the same number of items as are on the short forms (8, except for the 10-item fatigue short form).

However, for most scales, the distribution of CAT lengths was distinctly bimodal, as shown in columns five and six of Table 3 that list the proportions of CATs that were 5 or 12 items long; for all scales except fatigue, CATs of minimum and maximum length account for the majority of the administrations. Either the CAT system achieved the precision of a standard error lower than 4 points on the T score scale in 5 items and stopped, or it administered the maximum number of items (12) without obtaining a score of that level of precision.

The next eight columns of Table 3 show the minimum, maximum, mean, and standard deviation of the proportion of CAT-administered items that were also on the short forms, and then the proportion of short-form items administered by the CAT system. The former, on average, ranges from 0.67 to 0.93, meaning that most CAT-administered items were short-form items; and the latter, on average, ranges from 0.66 to 0.88, meaning that the CAT system often administered a fraction of the short form.

The rightmost column of Table 3 lists the proportions of CAT administrations that were exactly subsets of the short forms. That happened often, more than half of the administrations for depressive symptoms, pain interference, fatigue, and asthma impact. When the CAT is a subset of the short form, the CAT score is necessarily less precise than the short-form score; this explains why the RMSE values for CAT scores for those scales are larger than those for the short forms.

At the other extreme, for upper extremity functioning, the CAT was rarely a subset of the short form (0.07 of the administrations), but it most often administered the maximum number of items (0.74 of the administrations). So for upper extremity, the CAT provided more precise measurement, but it did so by becoming (selectively) longer than the short form to measure in the higher score range (see Fig. 1). The mobility scale behaved similarly, but not so extremely. That is, for mobility, the CAT was slightly longer than the short form and administered the maximum number of items to a fairly high proportion of participants (0.42) compared to other domains, but not as high as the upper extremity scale.

The anxiety and peer relationships scales illustrate another pattern: The CAT system adapted to measure the lower/upper ranges of the score range more precisely, but that was more than counterbalanced by less precision for the shorter CATs in the middle of the range, so in aggregate the short form outperformed the CAT in precision.

Test–retest reliability

Fifty-four participants completed the 2-week follow-up survey. Twenty-three of the 54 retested children were from the asthma group. The time interval between completion of the first assessment and 2 week follow-up survey ranged from 11 to 17 days, with a mean of 14.9 days (SD = 2.06).

The group of children who completed the follow-up survey was compared to the children who were initially invited but did not complete the follow-up. No significant differences were found between the two groups in child gender, χ 2 (1, N = 130) = 2.47, p = 0.12; race, χ 2 (2, N = 127) = 2.84, p = 0.24; ethnicity, χ 2 (1, N = 120) = 1.17, p = 0.28; or age, t = 1.50, p = 0.14.

As a measure of test–retest reliability, correlations were computed for the short-form scores and CAT scores between Time 1 and Time 2 (see Table 4). Test–retest correlations were generally high, between 0.7 and 0.8 for the depressive symptoms, anxiety, peer relationships, fatigue, and asthma impact scales. Stability was slightly lower for the physical functioning scales (upper extremity and mobility), with correlations around 0.7, and lower yet for the pain interference and anger scores, with correlations around 0.6.

Test–retest reliability was also compared with internal consistency reliability, calculated using IRT simulation-based reliability for the two short-form scores. While test–retest measures reliability within items across time, internal consistency measures reliability across items within time. Table 4 also shows the internal consistency reliability coefficients. Internal consistency was generally higher than test–retest reliability, with the exception of upper extremity functioning for which test–retest reliability was higher.

Domain correlations

Correlations across scales were calculated using the short-form response pattern scores (see Table 5).

Group difference

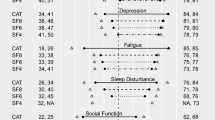

Table 6 shows the standardized effect size estimates and t statistics for the comparison of children diagnosed with asthma versus the non-asthma groups, for the three scoring methods. For this comparison, the only significant difference between the groups is for the mobility score; for the SFuEAPs, the average mobility scores were 46.9 (SD = 8.3) for the asthma group and 50.0 (D = 8.5) for the others. The effect sizes are generally similar for all of the between-group comparisons, again indicating that the scoring method makes little difference.

Discussion

This study demonstrates that scores on the PROMIS pediatric measures are highly correlated regardless of the scoring or administration technique (CAT versus static short forms, response pattern versus summed score conversion). It is especially notable that the correlations between response pattern scores and summed score conversions are either 0.99 or round to 1.00 to two decimal places, indicating that very little loss of information occurs when the convenient summed score calculations are used. This facilitates the ability to score these PROMIS pediatric short-form scales administered on paper or in systems other than Assessment Center since IRT software is not necessary for scoring these scales. It is important to note, however, that there was item overlap between the static and adaptive forms. Items were not administered more than once to a child; the child’s response to an item on the CAT was used in computing the short-form scores. Therefore, high correlation between the two static forms and the CAT is to be expected.

The patterns of measurement precision of the nine scales were very much as expected from the IRT analyses used in the original construction of the scales [4–11]. All of the measures, to a greater or lesser extent, provide more precise measurement on the less-healthy side of the latent continua they measure. It should be noted that for measurement instruments intended for use with patients with chronic health conditions, less precision at the healthy or less severe end of the latent trait spectrum may not be problematic [21].

This study was conducted to determine how well measures would perform if the CAT was designed to be short. We found that if we shortened the CAT to a 12 item maximum with a standard error of 0.4, across most domains it was not as precise as the static short form. These CAT parameters had limited usefulness over and above what was accomplished with the static short forms (8–10 items in this case). For the physical functioning scales, upper extremity and mobility, the CAT system provided greater precision compared to short-form scores (as measured in aggregate by RMSE), but at the expense of longer tests (more items than the short forms). This result occurred because the precision associated with a CAT stopping criterion of a standard error of 4 is greater than could be achieved given the average levels of precision for the short forms. For the other scales, the short-form scores outperformed the CAT system on the average, although the CAT provided slightly more precise measurement at one end of the scale or the other. It should be noted that it is often the case that CAT administration outperforms static short forms in adults [21, 22]. For example, in adults, polytomous item CAT has demonstrated better precision than static short forms in measuring fatigue, but the short forms also demonstrated good precision for most participants [23] and have been found in a measure of depressive symptoms to perform only marginally worse than CAT [22]. Whether CAT would have performed better than the static short forms if a greater number of items had been dynamically administered was not the objective of the present study since we were primarily concerned with reducing participant burden by testing a short CAT versus static short forms. It is quite possible that CAT would have performed equally to or better than the static short forms if the CAT parameters were adjusted to administer more items (or a stopping rule at a more precise level). In this study, we were unable to test this hypothesis. Future research should investigate how the CAT algorithm might be improved. For example, the SE cut point could be lowered, which most likely would result in the administration of more items in the middle range of the latent trait and increase precision. Additionally, the total number of items administered at the extremes of the measurement continuum could be lowered in order to reduce respondent burden for some populations.

Although CAT administration has several notable hypothesized advantages over static measurement instruments, such as the potential for brief scales which may reduce respondent burden and the possibility for greater precision over the full range of the latent trait continuum, unidimensional short forms also have several practical advantages. For example, with paper and pencil administration of short forms, the need for computer access for participants is eliminated, and thus, the available administration options may be increased for some pediatric populations. On the other hand, electronic administration is often preferred since it potentially reduces data entry errors and youth are quite comfortable with computers and other mobile devices [24]. In this case, both electronic administration of static short forms and CAT administration have practical advantages over paper and pencil administration.

The stability of the scale scores over a 2-week period was very much in line with expectation, that is, there was some variability in stability; with stability coefficients lower than internal consistency reliability for all scales, except for the upper extremity scale. The scale scores were moderately correlated for the most part and show an expected pattern of relationships with patients with asthma.

There are several limitations of this study, which suggest future directions for the next generation of PROMIS pediatric scale development. Specifically in this study, the minimum number of items selected for the polytomous CAT was 5 with a maximum of 12 items. This was a decision made at the initiation of the present study, and these analyses are thus limited by this earlier decision. On average, the short forms outperformed the CAT because of how the standard error stopping rule was set (at 0.4). While this stopping rule increased the precision of the CAT, it also effectively increased the minimum number of items administered. Because the short forms have standard errors lower than 0.4 for much of the measured continua, the CAT was stopped before getting to a precision as good as the short forms. Future studies should explore using different cut points for the standard error stopping rule and changing the minimum and maximum number of items CAT administered when comparing static short forms to CAT administration, as well as methods for the administration of polytomous items such as a two-stage semi-adaptive testing strategy [22]. A further potential limitation was that not all participants selected for the test–retest reliability phase completed the second administration, which may limit the generalizability of the stability findings.

In conclusion, this study provides further support for the psychometric properties of the PROMIS pediatric scales and extends the previous IRT analyses [4–11] to include the additional measurement properties of precision estimates of dynamic versus static administration, test–retest reliability, and validity of administration across groups.

Abbreviations

- PROMIS:

-

Patient-Reported Outcomes Measurement Information System

- HRQOL:

-

Health-related quality of life

- NIH:

-

National Institutes of Health

References

Ader, D. N. (2007). Developing the Patient-Reported Outcomes Measurement Information System (PROMIS). Medical Care, 45(Suppl 1), S1–S2.

Reeve, B. B., Hays, R. D., Bjorner, J. B., Cook, K. F., Crane, P. K., Teresi, J. A., et al. (2007). Psychometric evaluation and calibration of health-related quality of life item banks: Plans for the Patient-Report Outcomes Measurement Information System (PROMIS). Medical Care, 45(Suppl 1), S22–S31.

Cella, D., Yount, S., Rothrock, N., Gershon, R., Cook, K., Reeve, B., et al. (2007). The Patient-Reported Outcomes Measurement Information System (PROMIS): Progress of an NIH roadmap cooperative group during its first 2 years. Medical Care, 45(Suppl 1), S3–S11.

Irwin, D. E., Stucky, B. D., Thissen, D., DeWitt, E. M., Lai, J. S., Yeatts, K., et al. (2010). Sampling plan and patient characteristics of the PROMIS pediatrics large-scale survey. Quality of Life Research, 19, 585–594.

Irwin, D. E., Stucky, B. D., Langer, M. M., Thissen, D., DeWitt, E. M., Lai, J. S., et al. (2010). An item response analysis of the pediatric PROMIS anxiety and depressive symptoms scales. Quality of Life Research, 19, 595–607.

Varni, J. W., Stucky, B. D., Thissen, D., DeWitt, E. M., Irwin, D. E., Lai, J. S., et al. (2010). PROMIS Pediatric Pain Interference Scale: An item response theory analysis of the pediatric pain item bank. Journal of Pain, 11, 1109–1119.

DeWitt, E. M., Stucky, B. D., Thissen, D., Irwin, D. E., Langer, M., Varni, J. W., et al. (2011). Construction of the eight-item patient-reported outcomes measurement information system pediatric physical function scales: Built using item response theory. Journal of Clinical Epidemiology, 64, 794–804.

Irwin, D. E., Stucky, B. D., Langer, M. M., Thissen, D., DeWitt, E. M., Lai, J. S., et al. (2012). PROMIS Pediatric Anger Scale: An item response theory analysis. Quality of Life Research, 21, 697–706.

DeWalt, D. A., Thissen, D., Stucky, B. D., Langer, M. M., DeWitt, E. M., Irwin, D. E., Lai, J. S., Yeatts, K. B., Gross, H. E., Taylor, O., & Varni, J. W. PROMIS pediatric peer relationships scale: Development of a peer relationships item bank as part of social health measurement. Health Psychology (in press).

Lai, J.-S., Stucky, B. D., Thissen, D., Varni, J. W., DeWitt, E. M., Irwin, D. E., Yeatts, K. B., & Dewalt, D. A. Development and psychometric properties of the PROMIS® pediatric fatigue item banks. Quality of Life Research. doi:10.1007/s11136-013-0357-1.

Yeatts, K., Stucky, B. D., Thissen, D., Irwin, D. E., Varni, J. W., DeWitt, E. M., et al. (2010). Construction of the Pediatric Asthma Impact Scale (PAIS) for the Patient-Reported Outcomes Measurement Information System (PROMIS). Journal of Asthma, 47, 295–302.

Thissen, D., Varni, J. W., Stucky, B. D., Liu, Y., Irwin, D. E., & DeWalt, D. A. (2011). Using the PedsQL™ 3.0 Asthma Module to obtain scores comparable with those of the PROMIS Pediatric Asthma Impact Scale (PAIS). Quality of Life Research, 20, 1497–1505.

Walsh, T. R., Irwin, D. E., Meier, A., Varni, J. W., & DeWalt, D. A. (2008). The use of focus groups in the development of the PROMIS pediatrics item bank. Quality of Life Research, 17, 725–735.

Irwin, D. E., Varni, J. W., Yeatts, K., & DeWalt, D. A. (2009). Cognitive interviewing methodology in the development of a pediatric item bank: a patient reported outcomes measurement information system (PROMIS) study. Health and Quality of Life Outcomes, 7(3), 1–10.

Nathan, R. A., Sorkness, C. A., Kosinski, M., Schatz, M., Li, J. T., Marcus, P., et al. (2004). Development of the asthma control test: A survey for assessing asthma control. Journal of Allergy and Clinical Immunology, 113, 59–65.

Liu, A. H., Zeiger, R., Sorkness, C., Mahr, T., Ostrom, N., Burgess, S., et al. (2007). Development and cross-sectional validation of the childhood asthma control test. Journal of Allergy and Clinical Immunology, 119, 817–825.

Juniper, E. F., Guyatt, G. H., Feeny, D. H., Ferrie, P. J., Griffith, L. E., & Townsend, M. (1996). Measuring quality of life in children with asthma. Quality of Life Research, 5, 35–46.

Cella, D., Gershon, R., Bass, M., & Rothrock, N. (2012). Assessment center user manual, version 8.7. Chicago, IL: Northwestern University, Department of Medical Social Sciences.

Thissen, D., Nelson, L., Rosa, K., & McLeod, L. D. (2001). Item response theory for items scored in more than two categories. In D. Thissen & H. Wainer (Eds.), Test scoring (pp. 141–186). Mahwah, NJ: Lawrence Erlbaum Associates.

Kim, S. (2012). A note on the reliability coefficients for item response model-based ability estimates. Psychometrika, 77, 153–162.

Choi, S. W., & Swartz, R. J. (2009). Comparison of CAT item selection criteria for polytomous items. Applied Psychological Measurement, 33, 419–440.

Choi, S. W., Reise, S. P., Pilkonis, P. A., Hays, R. D., & Cella, D. (2010). Efficiency of static and computer adaptive short forms compared to full-length measures of depressive symptoms. Quality of Life Research, 19, 125–136.

Lai, J. S., Cella, D., Choi, S. W., Junghaenel, D. U., Christodoulou, C., Gershon, R., et al. (2011). How item banks and their application can influence measurement practice in rehabilitation medicine: A PROMIS fatigue item bank example. Archives of Physical Medicine and Rehabilitation, 92(1 Suppl), S20–S27.

Varni, J. W., Limbers, C. A., Burwinkle, T. M., Bryant, W. P., & Wilson, D. P. (2008). The ePedsQL™ in Type 1 and Type 2 diabetes: Feasibility, reliability and validity of the Pediatric Quality of Life Inventory™ internet administration. Diabetes Care, 31, 672–677.

Acknowledgments

This work was funded by the National Institutes of Health through the NIH Roadmap for Medical Research, Grant U01AR052181. Information on the Patient-Reported Outcomes Measurement Information System (PROMIS®) can be found at http://nihroadmap.nih.gov/ and http://www.nihPROMIS.org.

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Varni, J.W., Magnus, B., Stucky, B.D. et al. Psychometric properties of the PROMIS® pediatric scales: precision, stability, and comparison of different scoring and administration options. Qual Life Res 23, 1233–1243 (2014). https://doi.org/10.1007/s11136-013-0544-0

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11136-013-0544-0