Abstract

To ensure litchi fruit yield and quality, reasonable blooming period management such as flower thinning is required during the early flowering period. A combination of the number of litchi flowers and their density map can provide a reference for blooming period management decisions during the flowering period. Flowering intensity is currently largely estimated manually by humans observing the trees in the orchard. Although some automatic computer vision systems have been proposed for estimating flowering intensity, their overall performance is inadequate to meet current flower-thinning needs, and these systems are weak in some situations such as in varying environments and when the target object has a low-density distribution. With male litchi flowers as the research object, the goal of this study was to design a method for calculating the number of flowers. By using an image of the male litchi flower as input to a multicolumn convolutional neural network, a final density map and the number of flowers were generated. Experimental results using a self-constructed male litchi flower dataset demonstrated the feasibility of outputting a density map and flower count. The flower number was estimated from the model with a mean absolute error (MAE) that reached 16.29 and a mean square error (MSE) reaching 25.40 on the test set, which was better than counting by target detection. The proposed method can be used to perform time-saving analyses to help estimate yield and implement follow-up orchard management, and it demonstrates the potential of using density maps as outputs for estimating flowering intensity.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

Litchi is an important fruit crop in South China. According to the estimation of the National Industrial Technology System of Litchi, the litchi production area in mainland China has remained relatively unchanged in recent years, accounting for approximately 52.60% of the world’s litchi production area; in 2018, litchi production on the Chinese mainland was approximately 3.02 billion tons, an increase of 54.82% over 2017, which represented almost 61.34% of global litchi production (Qi et al., 2019). The fruit growers’ diligent maintenance of the litchi orchards during the production process is responsible for the high fruit yield.

In the production of litchi trees, its fruit yield is affected by various factors, such as the climate, chemical fertilizer and irrigation (Huang and Chen, 2014; Yang et al., 2015; Zhu, 2020). Many studies have shown that together with external factors above, the internal factor of flowering intensity directly affects litchi fruit yields as well as fruit color and weight (Link, 2000). Defects in flowering habits are the reason why the yield of litchi easily decreases. During the flowering season, litchi trees show the characteristics of flowering by batch orderly and a large quantity of flowers. Male and female flowers bloom in different batches and female flowers usually open only two days, which make pollination and fertilization time urgent (Cai et al., 2011). Plenty of flowers leads to the excessive consumption of carbohydrate reserve, leaving little for fruit set (Jiang et al., 2012). Therefore, in the early litchi tree blooming stage, excess flowers particularly male flowers are removed, which weakens the competition between florets for nutrition and water, while the female/male flower ratio is increased, thereby enhancing litchi fruit yield (Luo et al., 2019). Flowering intensity information largely determines the degree of thinning. During the early florescence stage, farmers thin the blooms appropriately based on previous experience. Too much or too little thinning also affects the yield of this fruit. Therefore, flowering intensity information is important for guiding flower thinning and can be an index for estimating the flowering rate. Efficient and reliable technology for estimating the number of litchi flowers is an important basis for flower period management, which helps enhance the market value of litchi.

Although acquiring flowering intensity is highly important, there are still relatively few studies on automatically detecting and estimating the numbers of litchi flowers, and progress toward this goal is still relatively slow. At present, estimation still relies more on manual work by experienced growers. However, the following problems inevitably arise: (1) manual counting work is labor intensive and time consuming; (2) manual estimation errors are large, and it is easy to acquire qualitative statistics rather than quantitative statistics regarding flower numbers.

Thanks to the development of computer vision, convolutional neural networks (CNNs) have been successful in many fields, such as autonomous driving and face recognition (Feng et al. 2021; Srinivas et al., 2017; Wu and Jiang, 2018). In agriculture, computer vision technology is used for fruit classification and detection, plant growth monitoring, field harvesting operations, agricultural product processing, weed and pest control and other tasks of precision agriculture (Mavridou et al., 2019). Inspired by the convolutional neural network applied in agriculture, the objectives of this study were: (a) to build a convolutional neural network for calculating the number of flowers based on density maps, (b) to establish a dataset of male litchi flowers based on a density map, and (c) to evaluate the model with other counting methods based on target detection and analyze its limitations.

The research idea was guided by a heuristic engineering method outlined by Koen (1988), which involves defining a problem, proposing a solution and testing that solution. Aiming at the task of counting massive male litchi flowers, a method that building an end-to-end CNN algorithm was developed. To test the solution, the evaluation results were also compared with the mainstream method of target detection and the limitations of the method proposed were analyzed.

Background

At present, researchers mainly adopt two different ideas for distinguishing types of flowers: image processing technology and various types of image sensors. The former includes traditional image processing methods and recent popular deep CNNs. In this section, the most relevant works on automated flower counting are reviewed, followed by the concept of this paper.

Flower quantification

In early flower counting research, Adamsen et al. (2000) developed a concept that automatically identified, for example, all lesquerella pixels in an image and calculated the points. They converted the image format, eliminated nonyellow colors, obtained binary images and finally according flower percentage and Euler number using the linear regression function, the number of flower spots was counted. The whole process took 3.5 min for an image of lesquerella and at least 45 min to calculate each tree by hand.

Aggelopoulou et al. (2011) proposed a segmentation idea based on a color threshold. Images of apple blossoms were acquired during certain daylight hours. By setting a black cloth screen behind the tree, they achieved a predicted yield error rate of 18%, which indicated the probability of the correctness of the flower distribution map used in the final yield. However, this method required control of the environmental scene. Thorp and Diering (2011) converted acquired images to the hue, saturation and intensity (HSI) color space to extract the characteristic information of lesquerella flowers and estimated the number of flowers based on the coverage percentage of the flowers in the image. This method achieved a root mean squared error (RMSE) between measured and predicted flower counts ranging from 159 to 194.

Similar to Thorp, Hočevar et al. (2014) proposed a method based on hue, saturation, and brightness (HSL) image analysis to predict apple flowering intensity. They estimated the number of flower clusters on individual fruit trees in a high-density apple orchard and finally determined whether the trees needed to be thinned.

With the development of CNNs, Dias et al. (2018) proposed a flower recognition technology that is robust to clutter and illumination changes and proposed using a pretrained CNN and fine-tuning it to increase its sensitivity to flowers. The resulting model was able to accurately identify flowers of different types under varying lighting conditions, achieving recall and precision rates higher than 90%.

Liu et al. (2018) proposed a robust system to estimate the number of vine flowers. The system includes an improved flower candidate detection algorithm that performs stem or shaft segmentation, uses an unsupervised learning method to achieve flower classification, and relies on a simple linear model to convert the number of classified visible flowers into estimated flower numbers. Xu et al. (2018) used aerial images to detect and count the number of newly bloomed cotton flowers. By constructing and training a CNN to classify flowers, using the structure motion method to construct a dense point cloud of aerial images and using those images to calculate the three-dimensional positions of cotton plants, the trained model was able to correctly classify more than 97% of the training and test images. It was also able to extract the number of potential flowers from a single dataset, reaching a correct classification rate of more than 90% for monitoring cotton plant flowering. Farjon et al. (2020) used the Faster Region-Based CNN (R-CNN) model to detect apple flowers. To make the model more suitable for the flower detection task, they modified the anchor sizes, the percentages of positive and negative examples, and the tolerance to pose deviation. Finally, the model achieved flower detection with an average precision (AP) score of 0.683. Lin et al. (2020) developed a deep-level object detection framework using R-CNN, which could process images through several layers to visually represent the instances of strawberry flowers in outdoor fields. The detection accuracy of the Faster R-CNN model was 86.1%, which showed the effectiveness of the deep-level Faster R-CNN framework for representing strawberry flower instances in shadow, overlapped by other strawberry flowers, etc.

Using technology to estimate the number of flowers based on special sensors, Xiao et al. (2014) proposed a method for calculating the number of apple tree blossoms based on aerial multispectral images. They proposed an algorithm to estimate the number of apple tree blossoms, analyze the spectral characteristics of three target elements (flowers, leaves and soil) in multispectral images, and then use a support vector machine to perform classification, achieving an accuracy rate of over 86.67%. Finally, the pixels in the positive classification category were counted to calculate the ratio of the flower area to the leaf area, which was used as an indicator of the amount of required flower thinning. Liu et al. (2016) collected visible-near-infrared hyperspectral images of the plant canopy during the blooming period. They counted the number of flowers in the trees selected and comparatively analyzed the effects of the partial least-squares (PLS) models based on the original reflectance spectra (OS) and the spectra pretreated by five kinds of methods—PLS, a backpropagation neural network (BPNN) and a least squares support vector machine (LS-SVM) based on characteristic wavelengths obtained by the x-loading weight (x-LW)—on the accuracy of the real-time estimation of the number of flowers per unit area per tree. They finally concluded that the PLS model based on standardized preprocessing achieved the best prediction effect for flower number per unit area on tall spindle-shaped apple tree canopies. Horton et al. (2017) conducted image processing on images from alternative cameras (near infrared, green, blue). The image processing involved separating the three bands and analyzing their color distributions; a basic threshold segmentation method was applied to segment and detect the flowers.

Comas et al. (2019) explored the potential of using UAV-acquired RGB high-resolution images to measure flowering intensity and found a correlation between the percentage of white pixels in each tree and the flowering intensity; they also analyzed the percentage of white pixels in each tree and the correlations between flower clusters. However, the obtained correlations were quite low, demonstrating the complexity involved in processing UAV images but revealing some ideas that could be studied, such as automatic segmentation of white pixels and running tests in orchards with low tree density and a large distance between trees.

Proposed approach

The literature review reveals that image processing technology has been applied to flower estimation with both RGB images and other kinds of images, especially with the proposal of CNNs in recent years. For male litchi flower quantification, the existing methods have some limitations that should be discussed.

Traditional methods based on color thresholds are easily affected by variations in illumination intensity, occlusions, etc., and they are highly dependent on the scene environment (Aggelopoulou et al., 2011).

Detection of male litchi flowers for flower quantification is challenging since litchi trees have small flowers that bloom in large numbers and are more difficult to recognize in limited-resolution images. Target detection does not deliver satisfactory results: too many missed detections occur, and the detection time is excessive. Male litchi flower quantification does not require exact sizes of bounding boxes or high-precision locations.

The shapes of litchi flowers differ during the flowering period, and there are obvious differences in flower scales, making it imperative to use models that can adapt to different scales to achieve better detection results.

Lempitsky and Zisserman (2010) proposed a new supervised learning framework for visual object counting tasks by directly mapping from global image characteristics to the number of objects instead of focusing on the difficult task of learning to detect and localize individual object instances. This kind of counting approach by regression is used well in crowd counting. Zhang et al. (2016) developed a method that can accurately estimate the number of people in images acquired from any angle with any crowd density using a CNN, which has achieved great success in crowd counting.

Therefore, a multicolumn CNN was proposed based on density maps for counting male litchi flowers, which uses five different convolution kernel sizes to map an input image to a flower density map; then, it estimates the number of litchi flowers in the image through integral calculation. To test the performance of the model, a self-constructed male litchi flower dataset was used. In addition, the proposed method of counting by regression was compared with other popular models based on target detection. Finally, with the trained model, the capability of the approach was analyzed.

Materials and methods

Study area and dataset

Data acquisition

In southwestern, southern and southeastern China, the flowering period of litchi occurs primarily from February to May each year, but different varieties or planting areas of litchi have different beginnings of the flowering period. The dataset used in this study was acquired at the Litchi Garden Base of the College of Horticulture, South China Agricultural University, Guangzhou, Guangdong (23°10′ N 113°21′ E). The orchard was 56 m above sea level, with an annual average temperature of 22.3 °C and an area of 0.9 hm2. The main litchi varieties were “Feizixiao” and “Guiwei”, with a row and plant spacing of 5.3 m × 6.4 m.

This study was conducted from February 28th to March 2nd, 2019, when male litchi flowers were in full bloom. Male litchi flowers develop completely with developed stamens and slender filaments. The filaments are arranged around the center of the flower; they can be long or short and vary depending on the type. A Canon EOS 80D camera with a resolution of 6000 × 4000 was used for data collection. The images were taken under different lighting conditions (natural light, direct sunlight, and low light) and had different size, shape, and illumination characteristics.

Data processing and labeling

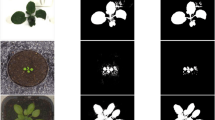

In the captured images, only parts of the images whose flowers could be clearly identified with the naked eye were selected. Then, based on the image clarity, multiple images of 1024 × 768 pixels were randomly cropped from the original 6000 × 4000-pixel image. LabelMe (Kentaro, 2016) is a graphical image annotation tool written in Python that can annotate images with polygons, rectangles, circles, lines and points. In this study, it was used to mark litchi flowers with a point. The flowers in the natural growth state showed different morphologies in the image. Therefore, the labeling principle mainly focused on litchi flowers whose filaments had begun to extend, as illustrated in Fig. 1a. Whether the flower was complete or occluded, the center of the flower was marked. Figure 1b shows difficult cases marked using LabelMe. Figure 1c shows an example of how the flowers were finally labeled. Finally, a JSON file containing all flower coordinates was obtained.

Data augmentation

After completing the annotations, 304 image fragments and their tag files were obtained. In unconstrained scenes, the imaging environments changed greatly, and the counting and labeling task was heavy because the location of each flower in crowded scenes had to be marked manually. To diversify labeling data, researchers (Li et al., 2018; Shorten and Khoshgoftaar, 2019) have applied data augmentation techniques. These methods were also applied to this dataset, including random translations, random cropping, flipping (both horizontal and vertical) and rotation, as illustrated in Fig. 2. In addition, image brightness was randomly altered. The final dataset contained 482 samples in total.

Ground truth generation

Due to the network training requirements, the flower density maps of the input image needed to be obtained. The method proposed by Boominathan et al. (2016) provides a simple way to generate a density map. Each goal is represented by a two-dimensional Gaussian distribution with fixed variance. This type of fixed Gaussian kernel simply blurs the labeled points of each flower without obtaining the precise flower position, making it easier for a CNN to learn. For example, suppose the position of each flower is \({x}_{i}\); its distribution in the image is expressed as a Gaussian kernel \({G}_{\sigma }\left({x}_{i}\right)\), and its corresponding position is expressed as \(\delta \left(x-{x}_{i}\right)\). Then, an image corresponding to \(N\) flowers can be expressed as the following function:

To convert this function to a continuous density function, it was convolved with a Gaussian kernel. The corresponding density map can be expressed as the function \(F\left(x\right)=H(x)*{G}_{\sigma }\left({x}_{i}\right)\).

The fixed standard deviation used in the experiment was 4.0, and the final flowchart for converting the annotation file into a density map is shown in Fig. 3. In other word, in the annotation process, each flower was marked with a point, and its corresponding position was mapped to the value 1 in a binary density map. When all pixel values of the whole binary density map were added, the total number of flowers labeled was obtained. After blurring the binary density map, the ground truth density map was obtained. The sum of the whole density map became the final total number of flowers, as shown in Fig. 4. The exemplar images and corresponding density maps in the dataset are shown in Fig. 5.

Dataset of male litchi flowers

Finally, a labeled dataset of male litchi flowers was obtained. The details of this dataset are shown in Table 1. Figure 6 shows a histogram of the quantitative distribution of flowers in the images in this dataset.

Multicolumn convolutional neural networks

During the experiment, to better reduce the dependence on color features and the computing time, the grayscale images were input to the CNN. Overall, the network accepted a gray image as input, created a corresponding density map, and then estimated the number of flowers through integration. Litchi flowers can have different shapes and sizes within the same image. Therefore, if only a filter with a single receptive field is used, flowers of different shapes and sizes cannot be captured well. Because of the properties of the multicolumn CNN (Ciregan et al., 2012) and the contribution of Zhang et al. (2016), a five-columns CNN to learn a density map for flower detection was adopted. Convolution kernels of different sizes were used in each column to allow the network to adapt to flowers with different scales. Thus, a better-performing CNN network model was obtained.

Figure 7 shows the overall structure of the proposed network, which included five groups of parallel subnetworks, each of which used a different convolution kernel size—these corresponded to flowers with different morphological scales. This figure shows that as the size of the convolution kernel of each subnetwork decreased, the network became deeper. However, the front end of each network basically adopted a convolution-pooling-convolution-pooling structure. After two 2 × 2 pooling ReLU activation functions whose good performance for CNNs has been demonstrated (Zeiler et al., 2013), the output resolution was reduced to 1/4 of the original. The back-end network used the same convolution kernel size as the front-end network, and each column used a different number of convolution kernels and then merged the feature maps from all subnetworks and applied a 1 × 1 convolution kernel to map the feature maps to the density map. Then, the Euclidean distance was used to measure the difference between the true density and the predicted density. The loss function was defined as follows:

where \(\theta\) is a set of learnable parameters in the network; \(N\) is the number of training images; \({x}_{i}\) is the input image; \({F}_{i}\) is the true density of the image; \(F\left({x}_{i};\theta \right)\) represents the density predicted by the model, which varies with the sample and the parameter θ; and \(L\left(\theta \right)\) is the loss between the predicted density and the true density.

Assessment criteria

In research on estimating flowering intensity, no universal standard exists for evaluating the number of flowers. Xiao et al. (2014) divided the flower area by the leaf area to acquire a rough indicator of the number of flowers to be thinned. Hočevar et al. (2014) used the number of flower clusters from a single fruit tree in an apple orchard. Comas et al. (2019) used the correlation between the percentage of white pixels in each tree and the number of flower clusters and finally obtained the number of flower clusters as an evaluation index. As in most crowd counting research (Thorp and Dierig, 2011; Viola et al., 2005; Zhang et al., 2016), two evaluation indexes were applied: mean absolute error (MAE) and mean square error (MSE), both of which can reflect the true prediction error situation. Their formulas appear below.

where \(N\) is the number of test images, \({z}_{i}\) is the actual number of flowers in the \(i\) th image, and \({\widehat{z}}_{i}\) is the predicted number of flowers in the \(i\) th image. \(MAE\) represents the accuracy of the prediction, while \(MSE\) represents the robustness of the prediction.

Results and discussion

Evaluation

The goal of this study was to obtain an improved model for estimating the number of litchi flowers that does not rely on specific datasets or image preprocessing operations. To quickly obtain a reliable CNN training model, the hardware for the model training used on the male litchi flower dataset consisted of an Intel Xeon Gold 64-bit 5218 CPU @ 2.3 GHz, a GeForce RTX 2080Ti GPU, CUDA 9.2, cuDNN 7.6.3 and the Ubuntu 16.04 operating system. The entire program was implemented in Python 3.7 using PyTorch 1.3. The input images were 1024 × 768-pixel grayscale images, the momentum was set to 0.9, and the initial learning rate was 0.00001.

In the homemade litchi flower dataset, the training set was composed of nine image blocks selected from different positions in each image: each block represented 1/4 of the original image size. The first four image blocks contained four nonoverlapping images, while the other five image blocks were randomly cropped from the input image. The error in the training process was obtained, as shown in Fig. 8, which showed that the errors decreased with increasing training and that the results gradually converged. In the 56th epoch, both MAE and MSE obtained the best results and then continued to fluctuate.

Finally, after training, a model that could be used to estimate the number of flowers was obtained. The image shows the performance results of the model in the test set for each picture. According to \(Error=\frac{{\widehat{p}}_{i}-{p}_{i}}{{p}_{i}}\times 100\mathrm{\%}\), \({\widehat{p}}_{i}\) is the predicted number of flowers, and \({p}_{i}\) is the actual number of flowers. The error rate on the test set is shown in Fig. 9.

Figure 10 shows a histogram of the number of images corresponding to the error rate in the test set. Although the maximum prediction error reached 48%, the overall error was between 0 and 20%. The trained model achieved an MAE of 16.29 and an MSE of 25.40 in the test set. Two examples are shown in Fig. 11. They show that the distribution of male litchi flowers in the images were basically the same in both the real and predicted density maps, which indicated the following: (1) the algorithm could express the distribution characteristics of male litchi flowers well from the images; (2) it was feasible to estimate the number of litchi flowers from images.

The ground truth density maps and estimated density maps of two test images in dataset. The images in a show a case of fewer flowers in the image, where the actual number was 64 and the estimated number was 59.49. The images in b show a case of many flowers that have obvious distribution characteristics. A total of 458 flowers were in the ground-truth images, while 410 (409.59) flowers were estimated

Ablation experiment

In the previous multicolumn convolutional neural network, Zhang et al. (2016) adopted three convolution kernels of different sizes, 5 × 5, 7 × 7 and 9 × 9, for density prediction. On the basis of these three convolution kernels, smaller 3 × 3 convolution kernels and larger 11 × 11 convolution kernels were added in this study. To better verify the effectiveness of these two convolution kernels, an ablation experiment of these two convolution kernels was added in the study to better judge the convolutional neural network proposed. Table 2 shows the evaluation result of the ablation experiment. As seen from the table, the three-columns convolutional neural network could still got a relatively satisfied result by using only the subnetworks with convolutional kernels of sizes 5 × 5, 7 × 7 and 9 × 9. The addition of subnetworks with 3 × 3 convolution kernels played a role in improving the accuracy and robustness of the results than results of the three-columns network. The accession of subnetwork with 11 × 11 convolution kernels could improve the robustness of the model but reduce the accuracy, and the number of model parameters does not increase much. When these two subnetworks were added simultaneously, the convolutional neural network had a good statistical effect on the image of male litchi flowers. Compared with the three-columns convolutional neural subnetwork, it could better adapt to the male litchi flowers of different sizes and could achieve a smaller count error in the test set.

Effectiveness of data augmentation

To verify the effectiveness of data augmentation, this study conducted relevant experiments with data augmentation and nonaugmentation. A total of188 pairs of training data for non-augmentation (images and corresponding labels) in the original male litchi flower dataset was collected, and the above data augmentation methods were randomly used to expand the samples. Finally, 300 pairs of training data for data augmentation experiment were obtained. At the same time, 100 pairs of data pairs were selected from the original dataset as the test set which were different from those in training set. Two groups of experiments with the five-columns model were trained with the same network hyperparameters, and the results in the test set were as follows: As seen from Table 3, compared with nonaugmentation, the gap between the flower quantity prediction of the model and the actual flower quantity result was lower after using data augmentation, which could improve the accuracy of flower quantification. This indicates that for regression by a density map, data augmentation does not change the labels of the images, and it can significantly improve the final effect of the model, reduce the structural risk of the model, and improve the generalization ability of the model.

Comparison of the developed method with two different methods

To judge the effect of conventional counting methods based on detection and regression by density map, the performance of multiple models in the test set is shown in the table below. For the detection method, two advanced target detection algorithms are adopted, which are based on the single-stage target detectors YOLOv3 (Redmon & Farhadi, 2018) and YOLOv4 (Bochkovskiy et al., 2020) and on Faster-RCNNs (Ren et al., 2015) whose backbones are Resnet50 based on the candidate region. Faster-RCNN(a) is the initial algorithm, and its anchor areas are 1282, 2562 and 5122, with aspect ratios of 1:1, 1:2 and 2:1, which are too large for small flowers. Therefore, aiming at making the anchor more suitable for the size of the flowers, after manual selection, the areas of the anchors in Faster RCNN(b) are adjusted to 82, 162 and 322, and the aspect ratios are adjusted to 0.5:1, 1:1 and 1:1.2. As shown in Table 4, with the need for target detection methods for each object feature extraction and the sizes and locations of boundary boxes, the target detection algorithms did not perform well in the face of small and dense targets such as male litchi flowers. Among the target detection methods adopted, the YOLOv4 algorithm had the highest precision and minimum counting error. The improved Faster RCNN(b) had better accuracy than YOLOv3, but the result of counting error was worse than that of YOLOv3. The method based on density map regression can better solve the problem of counting male litchi flower. MCNN proposed by Zhang et al. (2016) for Crowd counting and the five-columns network in this study were applied to male litchi flower counting, and the result may quite suitable for the counting task. For the two different counting methods, the methods of regression based on the density map show better advantages, and the number of parameters in the model is far less than that of advanced target detection method models.

The counting results of the six different algorithms of male litchi flowers on an image in test set are shown in Fig. 12. Figure 12c, d showed both YOLOv3 and YOLOv4 could detect male litchi flowers with good results and the counting results are close to the real results, but there were still many false negatives detected (the yellow circles mark in the two figures) showing their low performance. In Fig. 12e, f, although the improved region-based Faster-RCNN had been greatly improved, its counting result was still less than the correct result with more missing detection (the blue circles mark in Fig. 12f). The methods of regression showed advantages in counting, as shown in Fig. 12g, h. And the five-columns model proposed achieved a smaller distance from the right flower number.

Counting result examples of different models: a the original image; b The ground truth density map; c The detection and counting results of YOLOv3 model; d the detection and counting results of YOLOv4 model; e the detection and counting results of Faster RCNN(a) model; f the detection and counting results of Faster RCNN(b) model; g the estimation of MCNN proposed by Zhang et al. (2016); h the estimation of the five-columns model proposed in the research. (GT count means the number of flowers labeled in the image and estimation means the number of flowers predicted by corresponding model.)

Comparison of different single flower pixel size

The model can receive images with different resolutions under sufficient computing power, but different image sizes still affect the results due to the image details. To better judge the performance of the network model, in this study, the resolution of a single flower was used as a comparison object, which could serve as a reference in model deployment.

The qualitative estimation results are illustrated in Fig. 13. More instances are shown to compare the performance using three different single-flower resolutions. Figure 13a, b show that the average resolution per flower was approximately 20 × 20 to 60 × 60 pixels when the best results were obtained. The model can not only better calculate the number of flowers but also better show the distribution of flowers in the image, which means that visual field of a camera with higher resolution can be larger. This can help cameras farther from the tree to capture the appearance of the whole tree, as shown in Fig. 13e. Figure 13c, d show some failure cases of the model on the male litchi flower at sizes below 10 × 10 pixels due to the loss of details. The flowers in the image were too small to achieve good results because the background and distribution of the flowers were wrongly estimated. In future work, more instances for training the network will be needed to estimate lower-resolution images such as adding more flowers to limited-resolution images.

Comparison of the estimated results with resolutions per flower. Images a and b with resolutions of 1024 × 768 pixels show the results of single-flower resolutions ranging from 20 × 20 to 60 × 60. Images c and d with resolutions of 1024 × 768 pixels show the results of single-flower resolutions ranging below 20 × 20. Image e shows an example of a large visual field image with 2500 × 1666 pixels and its estimated result

Comparison of the time consumed by a single image at different resolutions

Because the model accepted input images of various sizes and resolutions, the output density map was more accurate when high-resolution images were input, but the detection required more time and much computing power. In contrast, using low-resolution images as input required less time and fewer hardware resources but performed poorly in calculating the number.

To better demonstrate the performance of the model, images in the test set were resized to the other two resolutions and were then input to the model. The time consumed by the images at each resolution was obtained, as shown in Table 5. Table 5 shows that images at the three different resolutions achieved average times of 0.0363, 0.2230 and 0.0099 s. A shorter time of 0.0099 s was obtained at a resolution of 385 × 512 pixels, which was half that in the test set, while the errors (MAE, MSE) increased. Although the time consumed by images with 1024 × 768 pixels was longer, the model could have a small deviation compared with the time consumed by the other two resolutions, so the time required for the model to predict is acceptable. From the table, the lower the image resolution is, the less prediction time required and the lower the hardware resources. This suggests that the model could balance the ability of the device, the resolution of the input image, the consumption of time and the accuracy of estimation, especially in video applications.

Visualization application

Based on the characteristics of the density map of male litchi flowers, a heatmap can be used to show the flowers distribution information in the density map. For better visualization of flower distribution features, a heatmap based on bivariate kernel density estimation was made. From the heatmap, the distribution state of the density of male litchi flowers—from high to low in the density map—was determined. This information could provide a reference for blooming period management. The heatmap displayed the density of litchi flowers in a special highlighted form, as shown in Fig. 14.

From the visualization of the heatmap, the high-density and low-density areas were displayed. The low-density from the density indicator was closer to a single spike that could be located and calculated. Growers usually often judge whether litchi flowers need to be thinned and whether the thinning rate should be increased according to the number of flowers on each flower spike. Therefore, locating low-density areas will help farmers make judgements regarding chemical and mechanical thinning. In addition, obtaining the whole heatmap from the density map and calculating the number of flowers in it can provide reference for the calculation of the flowering rate and fruit yield measurement in the future.

Conclusion

In the management of litchi orchards, information on flower density is very important in guiding the prediction of litchi fruit thinning and the flowering rate. However, research on flower counts is very limited, and most of methods rely on a target detection algorithm to count flowers, which makes it difficult to show good results in the counting of small and dense male litchi flowers. Aiming at addressing this problem, this study is based on density map regression, which can be further used to count the flowers per spike and to monitor the flowering rate, and the obtained heatmap can be used as a reference for flowering management. The main conclusions of this study are summarized as follows:

-

(1)

The counting task based on a density map had the advantages of being end-to-end, avoiding the work of the accurate positioning and recognition of flowers, and being able to count flowers in an image directly.

-

(2)

To obtain a more robust model, an ablation experiment was carried out on a multicolumn convolution network with convolutional kernel sizes of 3 × 3 and 11 × 11and it is found that the subnetwork with a convolution kernel size of 11 × 11 makes a smaller improvement to the model, but when combined with a subnetwork of 3 × 3 convolutional kernels, it had a stronger generalization ability.

-

(3)

In density map-based counting, traditional data augmentation could improve the effect of the model on male litchi flower counting and could reduce the counting errors of the model.

-

(4)

To better reveal the effectiveness of the proposed method, the model based on density map regression was compared with the counting method based on the existing advanced target detection algorithm. The regression counting method based on the density map has a smaller counting error than the target detection method, and the proposed method achieves the lowest counting error, with an MAE of 16.29 and an MSE of 25.40 in the test set, showing a better detection performance.

-

(5)

The proposed model is very lightweight, with 1.03 M parameters, far lower than that of the target detection method, and it has more advantages in model deployment.

Although the algorithm exhibited differences in resolution and efficiency, it was also shown that it was possible to use a CNN to estimate the number of flowers based on a density map. In future work, more high-density datasets and additional flower morphology data at different blooming periods will be generated. To improve the network model, grayscale image inputs will no longer be used. Color information will be used to better estimate the number of flowers to enhance the robustness of the model and improve the network structure so that it takes less time during training and estimates higher-resolution litchi flower images. By counting based on density map, the number of flowers in images can be used to speculate the flower number per spike through mathematical statistics methods such as Monte Carlo, which is useful in the flowering period.

References

Adamsen, F. J., Coffelt, T. A., Nelson, J. M., Barnes, E. M., & Rice, R. C. (2000). Method for using images from a color digital camera to estimate flower number. Crop Science, 40(3), 704–709. https://doi.org/10.2135/cropsci2000.403704x

Aggelopoulou, A. D., Bochtis, D., Fountas, S., Swain, K. C., Gemtos, T. A., & Nanos, G. D. (2011). Yield prediction in apple orchards based on image processing. Precision Agriculture, 12(3), 448–456. https://doi.org/10.1007/s11119-010-9187-0

Bochkovskiy, A., Wang, C. Y., & Liao, H. Y. M. (2020). YOLOv4: Optimal speed and accuracy of object detection. arXiv preprint. arXiv:2004.10934

Boominathan, L., Kruthiventi, S. S., & Babu, R. V. (2016). CrowdNet: A deep convolutional network for dense crowd counting. In Proceedings of the 24th ACM international conference on Multimedia. https://doi.org/10.1145/2964284.2967300

Cai, C., Chen, J., Ou, L., Xi, X., & Sun, Q. (2011). Study on Litchi blooming habits. Guangdong Agricultural Sciences, 38(21), 20–24. https://doi.org/10.16768/j.issn.1004-874x.2011.21.059

Ciregan, D., Meier, U., & Schmidhuber, J. (2012). Multi-column deep neural networks for image classification. 2012 IEEE Conference on Computer Vision and Pattern Recognition (3642–3649). https://doi.org/10.1109/CVPR.2012.6248110

Comas, A., Valente, J., & Kooistra, L. (2019). Automatic apple tree blossom estimation from UAV rgb imagery. International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, XLII-2/W13, 631–635. https://doi.org/10.5194/isprs-archives-XLII-2-W13-631-2019

Dias, P. A., Tabb, A., & Medeiros, H. (2018). Apple flower detection using deep convolutional networks. Computers in Industry, 99, 17–28. https://doi.org/10.1016/j.compind.2018.03.010

Farjon, G., Krikeb, O., Hillel, A. B., & Alchanatis, V. (2020). Detection and counting of flowers on apple trees for better chemical thinning decisions. Precision Agriculture, 21, 503–521. https://doi.org/10.1007/s11119-019-09679-1

Feng, D., Haase-Schütz, C., Rosenbaum, L., Hertlein, H., Glaeser, C., Timm, F., Wiesbeck, W., & Dietmayer, K. (2021). Deep multi-modal object detection and semantic segmentation for autonomous driving: Datasets, methods, and challenges. IEEE Transactions on Intelligent Transportation Systems. https://doi.org/10.1109/TITS.2020.2972974

Hočevar, M., Širok, B., Godeša, T., & Stopar, M. (2014). Flowering estimation in apple orchards by image analysis. Precision Agriculture, 15(4), 466–478. https://doi.org/10.1007/s11119-013-9341-6

Horton, R., Cano, E., Bulanon, D., & Fallahi, E. (2017). Peach flower monitoring using aerial multispectral imaging. Journal of Imaging. https://doi.org/10.3390/jimaging3010002

Huang, X., & Chen, H. (2014). Studies on shoot, flower and fruit development in litchi and strategies for improved litchi production. Acta Horticulturae, 1029, 127–136. https://doi.org/10.17660/ActaHortic.2014.1029.14

Jiang, S., Xu, H., Wang, H., Hu, G., Li, J., Chen, H., & Huang, X. (2012). A comparison of the costs of flowering in “Feizixiao” and “Baitangying” litchi. Scientia Horticulturae, 148, 118–125. https://doi.org/10.1016/j.scienta.2012.09.035

Kentaro, W. (2016) labelme: Image polygonal annotation with python. Git Code. Retrieved from https://github.com/wkentaro/labelme

Koen, B. V. (1988). Toward a definition of the engineering method. European Journal of Engineering Education, 13(3), 307–315. https://doi.org/10.1080/03043798808939429

Lempitsky, V., & Zisserman, A. (2010). Learning to count objects in images. Advances in Neural Information Processing Systems, 23, 1324–1332. https://doi.org/10.5555/2997189.2997337

Li, Y., Zhang, X., & Chen, D. (2018). CSRNet: Dilated convolutional neural networks for understanding the highly congested scenes. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition (1091–1100). https://doi.org/10.1109/CVPR.2018.00120

Lin, P., Lee, W. S., Chen, Y. M., Peres, N., & Fraisse, C. (2020). A deep-level region-based visual representation architecture for detecting strawberry flowers in an outdoor field. Precision Agriculture, 21, 387–402. https://doi.org/10.1007/s11119-019-09673-7

Link, H. (2000). Significance of flower and fruit thinning on fruit quality. Plant Growth Regulation, 31(1–2), 17–26. https://doi.org/10.1023/A:1006334110068

Liu, S., Li, X., Wu, H., Xin, B., Tang, J., Petrie, P. R., & Whitty, M. (2018). A robust automated flower estimation system for grape vines. Biosystems Engineering, 172, 110–123. https://doi.org/10.1016/j.biosystemseng.2018.05.009

Liu, Y., Wang, K. J., & Xie, R. J. (2016). Estimating the number of apple tree flowers based on hyperspectral information of a canopy. Scientia Agricultura Sinica, 49(18), 3608–3617. https://doi.org/10.3864/j.issn.0578-1752.2016.18.015

Luo, J., He, F., Wang, X., Hu, F., Wang, Z., & Li, J. (2019). Analyzing the physiological causes of the chemical combined with hand and machine flower-thinning technique to increase Feizixiao litchi yield. South China Fruits, 48(01), 20–24. https://doi.org/10.13938/j.issn.1007-1431.20180126

Mavridou, E., Vrochidou, E., Papakostas, G. A., Pachidis, T., & Kaburlasos, V. G. (2019). Machine vision systems in precision agriculture for crop farming. Journal of Imaging, 5(12), 89. https://doi.org/10.3390/jimaging5120089

Qi, W., Chen, H., Luo, T., & Song, F. (2019). Development status, trend and suggestion of litchi industry in mainland China. Guangdong Agricultural Sciences, 46(10), 132–139. https://doi.org/10.16768/j.issn.1004-874X.2019.10.020

Redmon, J., & Farhadi, A. (2018). YOLOv3: An incremental improvement. Retrieved August 30, 2020, from https://arxiv.org/pdf/1804.02767.pdf

Ren, S., He, K., Girshick, R., & Sun, J. (2015). Faster R-CNN: Towards real-time object detection with region proposal networks. Advances in Neural Information Processing Systems, 28, 91–99.

Shorten, C., & Khoshgoftaar, T. M. (2019). A survey on image data augmentation for deep learning. Journal of Big Data. https://doi.org/10.1186/s40537-019-0197-0

Srinivas, S., Sarvadevabhatla, R. K., Mopuri, K. R., Prabhu, N., Kruthiventi, S. S., & Babu, R. V. (2017). An introduction to deep convolutional neural nets for computer vision. Deep Learning for Medical Image Analysis. https://doi.org/10.1016/B978-0-12-810408-8.00003-1

Thorp, K. R., & Dierig, D. A. (2011). Color image segmentation approach to monitor flowering in lesquerella. Industrial Crops and Products, 34(1), 1150–1159. https://doi.org/10.1016/j.indcrop.2011.04.002

Viola, P., Jones, M. J., & Snow, D. (2005). Detecting pedestrians using patterns of motion and appearance. International Journal of Computer Vision, 63, 153–161. https://doi.org/10.1007/s11263-005-6644-8

Wu, Y., & Jiang, M. (2018). Face recognition system based on CNN and LBP features for classifier optimization and fusion. The Journal of China Universities of Posts and Telecommunications, 25(01), 37–47. https://doi.org/10.19682/j.cnki.1005-8885.2018.0004

Xiao, C., Zheng, L., & Sun, H. (2014). Estimation of the apple flowers based on aerial multispectral image. 2014 ASABE Annual International Meeting. https://doi.org/10.13031/aim.20141912593

Xu, R., Li, C., Paterson, A. H., Jiang, Y., Sun, S., & Robertson, J. S. (2018). Aerial images and convolutional neural network for cotton bloom detection. Frontiers in Plant Science, 8, 2235. https://doi.org/10.3389/fpls.2017.02235

Yang, B., Li, G., Yang, S., He, Z., Zhou, C., & Yao, L. (2015). Effect of application ratio of potassium over nitrogen on litchi fruit yield, quality, and storability. Hortscience a: Publication of the American Society for Horticultural Science, 50(6), 916–920. https://doi.org/10.21273/HORTSCI.50.6.916

Zeiler, M. D., Ranzato, M., Monga, R., Mao, M., Yang, K., Le, Q. V., Nguyen, P., Senior, A., Vanhoucke, V., Dean, J., & Hinton, G. E. (2013). On rectified linear units for speech processing. 2013 IEEE International Conference on Acoustics, Speech and Signal Processing (3517–3521). https://doi.org/10.1109/ICASSP.2013.6638312

Zhang, Y., Zhou, D., Chen, S., Gao, S., & Ma, Y. (2016). Single-image crowd counting via multi-column convolutional neural network. 2016 IEEE Conference on Computer Vision and Pattern Recognition. https://doi.org/10.1109/CVPR.2016.70

Zhu, X. (2020). Analysis of suitable climate resources for the growth of high quality litchi in Yulin City. Journal of Agricultural Catastrophology, 10(03), 126–127. https://doi.org/10.19383/j.cnki.nyzhyj.2020.03.052

Acknowledgements

This research was supported by the earmarked fund for the Special Project of the Rural Vitalization Strategy of Guangdong Academy of Agricultural Sciences (Accession No. TS-1-4), the Key-Area Research and Development Program of Guangdong Province (Accession No. 2019B020223002), and the China Agriculture Research System (Accession No. CARS-32-14).

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Lin, J., Li, J., Yang, Z. et al. Estimating litchi flower number using a multicolumn convolutional neural network based on a density map. Precision Agric 23, 1226–1247 (2022). https://doi.org/10.1007/s11119-022-09882-7

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11119-022-09882-7