Abstract

This paper is concerned with the solution of severely ill-conditioned linear tensor equations. These kinds of equations may arise when discretizing partial differential equations in many space-dimensions by finite difference or spectral methods. The deblurring of color images is another application. We describe the tensor Golub–Kahan bidiagonalization (GKB) algorithm and apply it in conjunction with Tikhonov regularization. The conditioning of the Stein tensor equation is examined. These results suggest how the tensor GKB process can be used to solve general linear tensor equations. Computed examples illustrate the feasibility of the proposed algorithm.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

This paper is concerned with the numerical solution of severely ill-conditioned tensor equations. We are particularly interested in the solution of Sylvester and Stein tensor equations. The proposed iterative schemes also can be used to solve equations of the form

where \({\mathcal{L}}:\mathbb {R}^{I_{1}\times I_{2}\times {\ldots } \times I_{N}} \to \mathbb {R}^{I_{1}\times I_{2}\times {\ldots } \times I_{N}}\) is a linear tensor operator. Severely ill-conditioned tensor equations arise in color image restoration, video restoration, and when solving certain partial differential equations in several space-dimensions by collocation methods; see, e.g., [3, 21,22,23,24]. Throughout this work, vectors and matrices are denoted by lowercase and capital letters, respectively, and tensors of order three (or higher) are represented by Euler script letters.

Before discussing the problems to be solved, we recall the definition of an n-mode product from [19]:

Definition 1

The n-mode (matrix) product of a tensor \({\mathcal{X}}\in \mathbb {R}^{I_{1}\times I_{2}\times {\ldots } \times I_{N}}\) with a matrix \(U\in \mathbb {R}^{J\times I_{n}}\) is denoted by \({\mathcal{X}} \times _{n} U\). It is of size

and has the elements

The n-mode (vector) product of a tensor \({\mathcal{X}}\in \mathbb {R}^{I_{1}\times I_{2}\times {\ldots } \times I_{N}}\) with a vector \(v \in \mathbb {R}^{I_{n}}\) is of order N − 1 and is denoted by \({\mathcal{X}} \bar {\times }_{n} v\); its size is I1 ×… × In− 1 × In+ 1 ×… × IN.

The Sylvester and Stein tensor equations are given by

and

respectively, where the right-hand side tensors \({\mathcal{D}}, {\mathcal{F}} \in \mathbb {R}^{I_{1}\times I_{2} \times {\ldots } \times I_{N}}\) and the coefficient matrices \(A^{(n)}\in \mathbb {R}^{I_{n}\times I_{n}}\) (n = 1,2,…,N) are known, and \({\mathcal{X}}\in \mathbb {R}^{I_{1}\times I_{2} \times {\ldots } \times I_{N}}\) is the unknown tensor to be determined.

Many discretized linear partial differential equations in several space-dimensions by finite differences [2, 3, 9] or spectral methods [3, 21,22,23, 27] can be expressed with the aid of a Sylvester tensor equation. A discussion on the conditioning of (2) under certain conditions is provided by Najafi et al. [24], who proposed the application of Tikhonov regularization in conjunction with the global Hessenberg process in tensor form to solve (2) with a perturbed right-hand side. Some perturbation results for (3) are provided by Liang and Zheng [20] and by Xu and Wang [28], who solve (3) by using the tensor forms of the BiCG and BiCR iterative methods. Liang and Zheng [20] present perturbation results for (3) for the case when N is even and A(1) = ⋯ = A(N) = A is Schur stable, i.e., when all eigenvalues of A lie in the open unit disc in the complex plane. These results rely on the matrix spectral norm of (I − A(N) ⊗⋯ ⊗ A(2) ⊗ A(1))− 1.

Recently, Huang et al. [16] proposed to apply the global form of well-known iterative methods in their tensor forms to the solution of a class of tensor equations via the Einstein product. The iterative methods in the present work are well suited to solve problems discussed in [16] when they are severely ill-conditioned; Huang et al. [16] do not consider this situation.

This paper first establishes some results on the conditioning of (3) motivated by [20, 28]. Then the tensor form of the Golub–Kahan bidiagonalization (GKB) process for the solution of severely ill-conditioned tensor equations is described. In particular, we consider the solution of severely ill-conditioned tensor equations of the forms (2) and (3). To this end, we apply results in [3] and generalize techniques described in [5]. We remark that the results discussed in Section 3 also can be applied to the solution of severely ill-conditioned problems of the form (1).

The remainder of this section introduces notation used in throughout this paper. We also recall the concept of the contracted product between two tensors. Section 2 presents some results on the sensitivity of the solution of (3), and in Section 3 we describe a tensor form of the GKB process and discuss the use of Gauss-type quadrature to determine quantities of interest for Tikhonov regularization. Section 4 presents some numerical results, and Section 5 contains concluding remarks.

1.1 Notation

Let \({\mathcal{X}}\in \mathbb {R}^{I_{1}\times I_{2}\times \cdots \times I_{N}}\) be an N-mode tensor, and let \(x_{i_{1}i_{2}{\ldots } i_{N}}\) denote the element (i1,i2,…,iN) of \({\mathcal{X}}\). For a real square matrix A with real eigenvalues, \({\lambda }_{\min \limits }(A)\) and \({\lambda }_{\max \limits }(A)\) stand for its smallest and largest eigenvalues, respectively. The set of all eigenvalues of A is denoted by σ(A). The symmetric and skew-symmetric parts of A are given by

respectively, where the superscript T denotes transposition. The condition number of an invertible matrix A is defined by

where ∥⋅∥2 stands for the spectral norm. The largest and smallest singular values of a matrix A are denoted by \({\sigma }_{\max \limits }(A)\) and \({\sigma }_{\min \limits }(A)\), respectively. In particular, for an invertible matrix it holds

We use the notation \(\underset {i = 1}{\overset {{\ell }}{\bigotimes }} x_{i}:= x_{1}\otimes x_{2}\otimes \cdots \otimes x_{\ell }\) for the multi-dimensional Kronecker product. The vector \(\text {vec}({\mathcal{X}})\) is obtained by using the standard vectorization operator with respect to frontal slices of \({\mathcal{X}}\). The mode-n matricization of a tensor \({\mathcal{X}}\) is denoted by X(n); it arranges the mode-n fibers to be the columns of the resulting matrix. Recall that a fiber of a tensor is defined by fixing all indices but one; see [19] for more details.

1.2 Contracted product

The \(\boxtimes ^{N}\) product between two N-mode tensors

is defined as the \(I_{N} \times \tilde {I}_{N}\) matrix, whose (i,j)th entry is given by

where

and tr(⋅) denotes the trace of its argument. The \(\boxtimes ^{N}\) product is a special case of the contracted product [10]. Specifically, \({\mathcal{X}} \boxtimes ^{N} {\mathcal{Y}}\) is the contracted product of the N-mode tensors \({\mathcal{X}}\) and \({\mathcal{Y}}\) along the first N − 1 modes. For \({\mathcal{X}}, {\mathcal{Y}} \in \mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\), we have

and \(\left \| {\mathcal{X}} \right \|^{2}= \text {tr} ({\mathcal{X}} \boxtimes ^{N} {\mathcal{X}})= {\mathcal{X}} \boxtimes ^{(N+1)} {\mathcal{X}}\) for \({\mathcal{X}}\in \mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\). We conclude this section by recalling the following two results from [3].

Lemma 1

Let \({\mathcal{X}}\in \mathbb {R}^{I_{1}\times \cdots \times I_{n}\times {\cdots } \times I_{N}}\), \(A\in \mathbb {R}^{J_{n}\times I_{n}}\), and \(y\in \mathbb {R}^{J_{n}}\). Then

Proposition 1

Let \({\mathcal{B}}\in \mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}\times m}\) be an (N + 1)-mode tensor with the column tensors \({\mathcal{B}}_{1},{\mathcal{B}}_{2},\ldots ,{\mathcal{B}}_{m}\in \mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\) and \(z=(z_{1},z_{2},\ldots ,z_{m})^{T}\in \mathbb {R}^{m}\). Then for an arbitrary (N + 1)-mode tensor \({\mathcal{A}}\) with N-mode column tensors \({\mathcal{A}}_{1},{\mathcal{A}}_{2},\ldots ,{\mathcal{A}}_{m}\), we have

2 Sensitivity analysis of the Stein tensor equation

This section mainly discusses the conditioning of the Stein tensor (3). To this end, we first consider a linear system of equations that is equivalent to (3), and then derive lower and upper bounds for the condition number of the matrix of this linear system.

It is well-known that (2) is equivalent to the linear system of equations

with \(x=\text {vec} ({\mathcal{X}})\), \(b=\text {vec} ({\mathcal{D}})\), and

Moreover, we have (see [19])

As a result, it follows that (3) corresponds to the linear system of equations

We use the tensor norm

Therefore, the sensitivity analyses of (2) and (3) are closely related to deriving bounds for the condition number of the matrices \(\tilde {\mathcal {A}}\) and \(\mathcal {A}\). For linear systems of equations \(\mathcal {A}x=b\) and \(\mathcal {A}({x+\varDelta x})={b+\varDelta b}\) with a non-singular matrix, it is well known that

Moreover, if \(\left \|{\mathcal {A}^{- 1}}\right \|_{2}\left \|\varDelta \mathcal {A} \right \|_{2}<1\), then

see, e.g., [13] for further details on perturbation analysis for linear systems of equations.

Lower and upper bounds for \(\tilde {\mathcal {A}}\) have been derived in [24] under suitable conditions. Therefore, we limit our discussion to the tensor \(\mathcal {A}\), which we will assume to be invertible. It is shown in [28] that

and

where the latter bound requires the inequality ∥A(N) ⊗⋯ ⊗ A(2) ⊗ A(1)∥2 < 1 to hold. The following proposition presents an alternative upper bound.

Proposition 2

Assume that \(\prod \nolimits _{i = 1}^{N} {{\sigma }_{{\min \limits } }} ({A^{(i)}})>1\). Then

Proof

Define \(\mathcal {F}={A^{(N)}} \otimes {\cdots } \otimes {A^{(1)}}\) and let ρ(M) denote the spectral radius of the matrix M. Then

Since \((I-\mathcal {F})^{-1} = -(I-\mathcal {F}^{-1})^{-1}\mathcal {F}^{-1}\) and

we obtain

and

which shows the proposition. □

Remark 1

We note that the assumption in Proposition 2 differs from the one used in [28]. Because of the importance of determining upper bounds in perturbation analysis, we report the upper bounds provided by (4) and Proposition 2 for two matrices \({\mathcal A}\). The bounds and the exact condition numbers are plotted in Fig. 1. We used the MATLAB function “\(\text {cond}(\text {full}(\mathcal {A}))\).” This allowed us to calculate the condition number of \(\mathcal {A}\) for small n only due to lack of computer memory.Footnote 1 When the matrix \(\mathcal {A}\) is large and sparse, we can compute an estimate of the condition number with MATLAB function “condest(\(\mathcal {A}\)).”

- Case I::

-

We let the matrices A(i), i = 1,2,3, be ill-conditioned “prolate” Toeplitz matrices. This kind of Toeplitz matrix can be generated with the MATLAB command A = gallery(′prolate′,n,w), which returns the n-by-n prolate Toeplitz matrix with parameter w. We set w = 0.11 for A(1), w = 0.12 for A(2), and w = 0.13 for A(3). Then ∥A(3) ⊗ A(2) ⊗ A(1)∥ < 1. Notice that A(1), A(2), and A(3) are full matrices. We therefore do not report “condest(\(\mathcal {A}\))” for this case.

- Case II::

-

For i = 1,2,3, consider the matrices

$$ A^{(i)} =\frac{\nu}{h^{2}} \left[\begin{array}{lllll} 2&-1&&&\\ -1&2&-1&&\\ &\ddots&\ddots&\ddots&\\ &&-1&2&-1\\ &&&-1&2 \end{array}\right]+\frac{c_{i}}{4h} \left[\begin{array}{lllll} 3&-5&1&&\\ 1&3&-5&1&\\ &\ddots&\ddots&\ddots&1\\ &&1&3&-5\\ &&&1&3 \end{array}\right] \in \mathbb{R}^{n\times n}, $$(6)that are the sum of a symmetric tridiagonal matrix and a banded upper Hessenberg Toeplitz matrix with ν = 0.1, c1 = 1, c2 = 2, c3 = 3, and h = 1/(n + 1). It can be verified that \(\prod \limits _{i=1}^{3}{\sigma }_{\min \limits }(A^{(i)})>1\).

We next derive new bounds for \(\text {cond}(\mathcal {A})\). This requires the following two propositions.

Proposition 3

Let \(A^{(i)}\in \mathbb {R}^{n_{i}\times n_{i}}\) and \(x_{i}\in \mathbb {R}^{n_{i}}\) for i = 1,2,…,ℓ. Then

Proof

We show the assertion by induction. Let ℓ = 2. Using the fact that \({x_{i}^{T}}\mathcal {S}(A^{(i)})x_{i}=0\) for i = 1,2, we obtain (7) from the following equality (see [29]):

Now assume that (7) holds for ℓ = k. Let ℓ = k + 1 and define

Then

The proposition now follows from the induction hypothesis. □

Proposition 4

Let \(\mathcal {A}=I - {A^{(N)}} \otimes {\cdots } \otimes {A^{(2)}} \otimes {A^{(1)}}\). Then

and

where the yi and zi are unit eigenvectors such that, for i = 1,2,…,N,

Proof

It is easy to verify that

Let \(\mathcal {Y}=(y_{N}\otimes {\cdots } \otimes y_{1})\) and \(\mathcal {Z}=(z_{N}\otimes {\cdots } \otimes z_{1})\). Then it follows from Proposition 3 that

and

This shows the desired result. □

Remark 2

If the matrices A(i), for i = 1,2,…,N, are positive definite, then

We note that the matrices A(i) are not required to be symmetric. Positive definiteness of the matrix A(i), i = 1,2,…,N, implies that \({\mathcal{H}}(A^{(i)})\) is symmetric positive definite. Furthermore, if

then the following upper bound follows from Proposition 4,

Under additional assumptions, we can derive an alternative upper bound for the condition number. To this end, we need the following result, which is a consequence of Weyl’s Theorem [14, Theorem 4.3.1].

Proposition 5

Let the matrices \(A,B\in \mathbb {R}^{n\times n}\) be symmetric. Then

Remark 3

Let \(\mathcal {F}={A^{(N)}} \otimes {\cdots } \otimes {A^{(1)}}\) and \(\lambda \in \sigma ({\mathcal{H}}(\mathcal {F}))\). Let \(\mathcal {E}_{N}\) denote the set of non-negative even numbers less than or equal to N. Then

where

The result can be shown by considering the symmetric part of \(\mathcal {F}\). For simplicity, let N = 3. Then

Using Proposition 5, we have

It follows from the above discussions that if \(1 + \prod \limits _{i = 1}^{N} {{\sigma }_{{\min \limits } }^{2}} ({A^{(i)}}) -2 {({M_{S}} + {M_{H}})^{N}}>0\), then we can derive an upper bound for \(\|\mathcal {A}^{-1}\|_{2}\) in the following manner: We obtain from (8) that

Therefore,

Combining the inequalities (5) and (9) yields

To illustrate the bound (10), we let \(A^{(1)}=A^{(2)}=A^{(3)}=\tilde {A}\), where the matrix \(\tilde {A}\in {\mathbb R}^{n\times n}\) is defined by

with M = tridiag(− 1,2,− 1) and L = tridiag(0.5,0,− 0.5). We note that the matrix \(\tilde {A}\) is taken from [29,30,31]. The condition

holds for suitable choices of r and even values of n. Figure 2 displays graphs for the exact condition number cond(\(\mathcal {A}\)) and the bound (10). The computations are carried out on the same computer as for Fig. 1. In particular, the function cond(⋅) can be evaluated for fairly small values of n, only.

Computed bounds by (10) versus the exact condition numbers for different values of n; r = 70 (left), r = 100 (center), and r = 500 (right)

We conclude this section by considering the situation when all the matrices A(i) are diagonalizable.

Remark 4

Let the matrices A(i) be diagonalizable, i.e., there are non-singular matrices Si and diagonal matrices Di such that \(A^{(i)}=S_{i}D_{i}S_{i}^{-1}\) for i = 1,2,…,N. Introduce

Then \(\mathcal {A}=\mathcal {S}(I-D_{N} \otimes {\cdots } \otimes D_{1})\mathcal {S}^{-1}\). Hence, if 1∉σ(A(N) ⊗⋯ ⊗ A(1)), then

As a result, we get

where

We obtain the inequality

Let \(\prod \limits _{i = 1}^{N} \|D_{i}^{-1}\|_{2} <1\). Then analogously to the proof of Proposition 2, we have

If \(\prod \limits _{i = 1}^{N} \|D_{i}\|_{2} <1\), then

Finally, we note that if the matrices Di, i = 1,2,…,N, are all positive definite, then

3 The tensor form of GKB and Tikhonov regularization

We first describe the implementation of the Golub–Kahan bidiagonalization (GKB) process in the tensor framework. Subsequently, we discuss an application of the GKB process to Tikhonov regularization. For notational simplicity, we introduce the two linear operators \(\tilde {{\mathcal{M}}}, {{\mathcal{M}}}:\mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}} \to \mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\) defined by

The adjoint operators of \(\tilde {{\mathcal{M}}}\) and \({\mathcal{M}}\) are given by

for \({\mathcal{Y}} \in \mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\). The tensor equations (2) and (3) can be expressed as

We remark that the results and methods of this section also can be applied to other linear operators from \(\mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\) to \(\mathbb {R}^{I_{1}\times I_{2} \times {\cdots } \times I_{N}}\). For notational convenience, we discuss in the sequel results and methods for (11).

Consider for the moment the linear system of equations Ax = b with a non-singular matrix \(A\in \mathbb {R}^{n\times n}\). Application of k steps of the GKB process to A with initial vector b produces the decompositions

where the matrices \(V_{k+1}\in \mathbb {R}^{n\times (k+1)}\) and \(U_{k}\in \mathbb {R}^{n\times k}\) have orthonormal columns, the matrix Vk is made up of the first k columns of Vk+ 1, the first column of Vk+ 1 is b/∥b∥2, the matrix \(\bar {T}_{k}\in \mathbb {R}^{(k+1)\times k}\) is lower bidiagonal with all diagonal and subdiagonal entries positive, and Tk is the leading k × k submatrix of \(\bar {T}_{k}\). We assume that k is small enough so that the decompositions (12) with the stated properties exist. This is the generic situation. Otherwise, the GKB process is said to break down. In the latter event, the computations simplify. We will not dwell on the handling of breakdowns. Thorough discussions on the GKB process can be found in [13, 25].

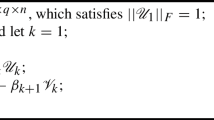

It is natural to extend the GKB process to tensor equations. Algorithm 1 describes the application of the GKB process to (11). We refer to the process so defined as the GKB based on tensor format (GKB−BTF) process.

Assume that the first k steps of Algorithm 1 can be carried out without breakdown, i.e., without any coefficients αj and βj vanishing. The analogue of the lower bidiagonal matrix \(\bar {T}_{k}\in \mathbb {R}^{(k+1)\times k}\) in (12), which we also refer to as \(\bar {T}_{k}\), has the diagonal entries α1,α2,…,αk. They are computed in line 6 of Algorithm 1. The subdiagonal elements β2,β3,…,βk+ 1 of \(\bar {T}_{k}\) are computed in line 12 of the algorithm. We can express the matrix \(\bar {T}_{k}\) in the form

Theorem 1

Let \({\tilde {{\mathcal{V}}}}_{k}\), \({\tilde {{\mathcal{U}}}}_{k}\), \({\tilde {{\mathcal{W}}}}_{k}\), and \({\tilde {{\mathcal{W}}}}^{*}_{k}\) be (N + 1)-mode tensors with frontal slices \({{{\mathcal{V}}}}_{j}\), \({{{\mathcal{U}}}}_{j}\), \({\mathcal{W}}_{j}:={\mathcal{M}}({\mathcal{U}}_{j})\), and \({\mathcal{W}}_{j}^{*}:={\mathcal{M}}^{*}({\mathcal{V}}_{j})\), respectively, for j = 1,2,…,k, computed by Algorithm 1. Then

where \({\mathcal{Z}}\) is an (N + 1)-mode tensor with k column tensors \(0,\ldots ,0,{\mathcal{V}}_{k+1}\). The last column of the matrix \(E_{k}=[0,\ldots ,0,e_{k}]\in {\mathbb R}^{k\times k}\) is the last column of the identity matrix of order k.

Proof

From lines 11 and 16 of Algorithm 1, we have

Note that the (j − 1)st frontal slice of (13) is given by

Equation (13) now follows from (15) and the above relation.

To show (14), we first note that lines 2, 5, and 10 of Algorithm 1 yield

where \({\mathcal{U}}_{0}\) is defined to be zero. Equation (14) now follows by comparing the above equation and the j th frontal slice of the right-hand side of (14). □

We turn to the situation when the operator \({\mathcal M}\) in (11) is severely ill-conditioned and the right-hand side tensor \({{\mathcal{F}}}\) is contaminated by error. Let \(\hat {{\mathcal{F}}}\) denote the unknown error-free tensor associated with \({{\mathcal{F}}}\), and assume that \(\hat {{\mathcal{F}}}\) is in the range of \({\mathcal M}\). We would like to determine the solution of minimal norm, \(\hat {{\mathcal{X}}}\), of

Straightforward solution of (11) may not give a meaningful approximation of \(\hat {{\mathcal{X}}}\) due to a large propagated error in the solution of (11) stemming from the error in \({\mathcal{F}}\). A common way to address this difficulty is to replace (11) by a nearby problem, whose solution is less sensitive to the error in \({\mathcal{F}}\). This replacement is known as regularization. One of the most popular regularization methods is due to Tikhonov. This regularization method replaces the solution of (11) by the minimization problem

The parameter μ > 0 is referred to as a regularization parameter. Its purpose is to balance the influence of the first term (the fidelity term) and the second term (the regularization term).

Let \({\mathcal{X}}_{k,\mu _{k}}={\tilde {{\mathcal{U}}}}_{k}\bar {\times }_{(N+1)} y_{k,\mu _{k}}\) be an approximate solution of (16), where \({\tilde {{\mathcal{V}}}}_{k}\) is defined as above. We obtain from (13), by using Lemma 1 and Proposition 1, that

This shows that (16) is equivalent to the following low-dimensional minimization problem

The minimization problem on the right-hand side can be solved in only \({\mathcal O}(k)\) arithmetic floating point operations for each value of μ > 0; see Eldén [11] for details.

We turn to the choice of the regularization parameter and assume that an upper bound 𝜖 > 0 for the norm of the error in the right-hand side \({\mathcal F}\) is explicitly known. Then the discrepancy principle can be applied to determine the regularization parameter μ. The discrepancy principle prescribes that μ > 0 be chosen so that

for some parameter η > 1 that is independent of 𝜖. This is a non-linear equation for μ > 0; see, e.g., Engl et al. [12] for a discussion on the discrepancy principle. Of course, other techniques for determining a suitable value of μ also can be applied; see, e.g., Kindermann and Raik [17, 18] for discussions.

It is not advisable to use the normal equations associated with the right-hand side of (18) in computations. However, the normal equations are convenient to apply when deriving expressions for determining a value of μ > 0 that approximately satisfies (19). Let yk,μ denote the solution of (18). Using the normal equations associated with the right-hand side of (18), yk,μ can be expressed as

Consequently,

Introduce the functions

Proposition 6

Let η > 1 and 𝜖 > 0 be constants, and let the function ϕk(μ) be defined by (21). If μ > 0 satisfies

then the associated solution yk,μ of (18) is such that

and \({\mathcal{X}}_{k,\mu }= {\tilde {{\mathcal{V}}}}_{k}\bar {\times }_{(N+1)} y_{k,\mu }\) fulfills

Moreover,

Proof

It can be shown that ϕk(μ) ≤ ψk(μ) for μ ≥ 0. A proof based on interpreting ϕk(μ) as a Gauss quadrature rule and ψk(μ) as a Gauss–Radau quadrature rule with a fixed node at the origin is provided in [8] in the context of solving large linear systems of equations with a severely ill-conditioned matrix and an error-contaminated right-hand side. Equation (24) follows from (17). □

The following result is easy to show. A proof can be found in [8].

Proposition 7

Let ϕk(μ) be defined by (21). Then the function μ → ϕk(1/μ) is strictly decreasing and convex for μ > 0. Moreover,

In particular, Newton’s method applied to compute the solution μk of the equation

with an initial approximate solution μ0 ≥ 0 to the left of the solution converges monotonically and quadratically. For instance, one may choose μ0 = 0 when the function \(\mu \rightarrow \phi _{k}(1/\mu )\) and its derivative are suitably defined at μ = 0.

It follows from Proposition 7 that the use of the Newton method to solve (25) is easy to implement, because the method does not have to be safe-guarded when starting with μ0 = 0. This is discussed in [8]. However, a cubically convergent zero-finder described in [26] and applied in [7, 26] requires fewer iterations and less CPU-time.

The most expensive part of the computations with Algorithm 1 is the evaluation of \({\mathcal M}^{*}({\mathcal V}_{j})\) and \({\mathcal M}({\mathcal U}_{j})\) in lines 5 and 11 of the algorithm. With the aim of keeping the computational effort required by Algorithm 1 as small as possible, we would like to choose the number of steps, k, of the algorithm small, but large enough to be able to satisfy (23). To achieve this, we proceed as follows: Carry out a few steps k > 0 with Algorithm 1, say k = 2, and compute the solution μk > 0 of ϕk(1/μ) = 𝜖2. If ψk(1/μk) ≤ η2𝜖2, then (23) holds for

where \(y_{k,\mu _{k}}\) is defined by (20) with μ = μk. If, instead, ψk(1/μk) > η2𝜖2, then we increase k by one, i.e., we set k = k + 1 and carry out one more step with Algorithm 1. We increase the number of steps until (23) holds. Typically, only a few steps of Algorithm 1 are required to satisfy (23). The required number of evaluations of the expressions \({\mathcal M}^{*}({\mathcal V}_{j})\) and \({\mathcal M}({\mathcal U}_{j})\) typically is fairly small. This is illustrated in Section 4. Algorithm 2 summarizes the computations required for Tikhonov regularization based on the GKB−BTF process.

This section focused on the solution of equation (11). However, the solution method described can be applied to the solution of more general tensor (1).

4 Numerical examples

This section shows a few numerical experiments that illustrate the performance of the method described in Section 3. We limit ourselves to the case N = 3 in (2) and (3). For notational simplicity, we write (2) and (3) in the form \({\mathcal{L}}({\mathcal{X}})={\mathcal{C}}\). The right-hand side tensor is in all test problems contaminated by an error tensor \({\mathcal{E}}\) with normally distributed random entries with zero mean. The entries are scaled to yield a specified noise level

All computations were carried out using the Tensor Toolbox [1] in MATLAB version R2018b with an Intel Core i7-4770K CPU @ 3.50-GHz processor and 24-GB RAM.

We report the relative errors

where \(\hat {{\mathcal{X}}}\) denotes the desired solution of the problem with error-free right-hand side tensor \(\hat {{\mathcal{C}}}\) associated with \({\mathcal{C}}\), and \({\mathcal{X}}_{{\mu _{k}},k}\) denotes the k th computed approximation determined by the algorithms.

In the computations for Tables 1, 5, and 7, the iterations were terminated as soon as an approximate solution \({\mathcal{X}}_{{\mu _{k}},k}\) was found such that the discrepancy principle

was satisfied, where η = 1.01 is a user-chosen constant and ε is the norm of error in \({\mathcal{C}}\), i.e., \(\varepsilon =\|{\mathcal{E}}\|\). Our numerical results illustrate that the performance of the algorithms is not very sensitive to the choice of η(≥ 1); we illustrate the convergence behavior of the algorithms for several values of η in Example 5. We remark that the left-hand side of (27) can be computed inexpensively by using (17) with \({\mathcal{M}}\) and \({\mathcal{F}}\) replaced by \({\mathcal{L}}\) and \({\mathcal{C}}\), respectively. We compare Algorithm 2 of the present paper to methods that apply the Hessenberg and flexible Hessenberg processes based on tensor format to reduce the given large problem to smaller ones. These methods are used together with Tikhonov regularization and are described in [24]. The discrepancy principle is used to determine the regularization parameter. We refer to the method that uses the Hessenberg process based on tensor format together with Tikhonov regularization as the HT−BTF method; when the Hessenberg process based on tensor format is replaced by the flexible Hessenberg process based on tensor format, the resulting method is referred to as the FHT−BTF method.

When the coefficient matrices are dense and not very large, the FHT−BTF method outperforms the other methods in our comparison. However, for large and sparse coefficient matrices, FHT−BTF requires more CPU time than Algorithm 2. For large problems, the FHT−BTF method requires many iterations to satisfy the stopping criterion (27). We therefore for the results reported in Tables 2, 3, 4, 6, 8, 9, and 10 used the alternative stopping criterion

for a user-specified value of the parameter τ > 0. Moreover, at most 300 iterations were allowed. In the FHT−BTF method, we used two steps of the stabilized biconjugate gradient method based on tensor format (BiCGSTAB−BTF) [9] as inner iteration; see [24] for further details. Choosing a smaller value of τ results in that a larger number of iteration is required to satisfy (28). We illustrate the performance of Algorithm 2 for several values of τ in Example 5.

We report the number of iterations and the CPU-time (in seconds) required by the methods in our comparison to compute approximate solutions that satisfy the specified stopping criteria. Section 4.1 discusses the solution of severely ill-conditioned problems of the form (2) and Section 4.2 considers severely ill-conditioned problems of the form (3). The blurring matrices used in Section 4.1 can be expressed as

while the blurring matrices applied in Section 4.2 can be written as

where the A(ℓ) are a Gaussian Toeplitz matrix A = [aij] given by

or a Toeplitz matrix with entries

We further present some experiments for Sylvester and Stein tensor equations with the coefficient matrices given in Case II of Remark 1 at the end of each subsection. Blurring matrices of type (29) and (30) have been used in the literature to test iterative schemes for image deblurring; see [4,5,6, 15].

4.1 Experimental results for severely ill-conditioned Sylvester tensor equations

We consider (2) with coefficient matrices that are dense and very ill-conditioned. This kind of equation arises from the discretization of a fully three-dimensional microscale dual-phase lag problem by a mixed-collocation finite difference method; see [21,22,23] for details.

Example 1

Consider (2) with the matrices \(A^{(\ell )}=[a_{ij}]\in \mathbb {R}^{n\times n}\) for ℓ = 1,2,3 defined by

where \(x_{i}=\frac {2\pi (i-1)}{n}\), \(\xi _{j}=\frac {(j-1)L}{n}\), \(i,j=1,2,\dots ,n\), and L = 300. When n is odd, the coefficient matrices A(ℓ) are well-conditioned and the problem can be solved successfully with a block iterative method; see [3]. However, when n is even, the coefficient matrices are very ill-conditioned. This is illustrated in [24, Example 5.4]. The error-free right-hand side of (2) is constructed so that \(\hat {{\mathcal{X}}}=\text {randn}(n,n,n)\) is the exact solution, i.e., \(\hat {{\mathcal{X}}}\) has normally distributed random entries with mean zero and variance one. Table 1 shows the numerical results obtained. Computed approximate solutions and the exact solution are displayed in Fig. 3.

Table 1 shows the FHT−BTF method to perform better than the other methods. This is typical for problems with dense coefficient matrices.

We next turn to an image restoration problem, in which the error-free right-hand side in (2) is constructed so that the exact solution is a hyperspectral image. Here, the matrices A(i), i = 1,2,3, are sparse and we will see that Algorithm 2 performs the best.

Example 2

We consider the situation when the exact solution of (2) is a tensor of order 1019 × 1337 × 33 that represents a hyperspectral image of a natural scene.Footnote 2 The coefficient matrices A(1), A(2), and A(3) are defined by (30) with suitable dimensions and with r = 2 for A(1) and A(2), and r = 3 for A(3). This gives cond(A(1)) = 5.26 ⋅ 1016, cond(A(2)) = 1.75 ⋅ 1017, and cond(A(3)) = 4.75 ⋅ 1016. Thus, all the coefficient matrices are numerically singular.

As mentioned above, the (F)HT−BTF methods can not be efficiently used with the stopping criterion (27). Therefore, we used the stopping criterion (28) for all algorithms. The results are reported in Table 2. Algorithm 2 can be seen to perform better than the HT−BTF and FHT−BTF methods. Table 7 illustrates that the computational effort increases as the error in the tensor \({\mathcal{C}}\) decreases. Here, Algorithm 2 was terminated as soon as (27) was satisfied. The contaminated and restored images are displayed in Figs. 4 and 5.

Example 2. a Exact image, b blurred and noisy image with noise level ν = 0.01, and c restored image by Algorithm 2 using the stopping criterion (27)

Example 2. a blurred and noisy image with noise level ν = 0.01, restored images by b HT−BTF, c FHT−BTF, and d Algorithm 2 using the stopping criterion (28)

Example 3

Consider the Sylvester tensor equation (2) whose coefficient matrices A(1), A(2), and A(3) are defined by (30) with r = 30 for A(1), r = 20 for A(2), and r = 20 for A(3). We examine the performance of algorithms for the following cases:

- Case I :

-

Let the exact solution of (2) be hyperspectral image of order 1019 × 1337 × 33 in the above example. Here, we have cond(A(1)) = 1.66 ⋅ 1018,cond(A(2)) = 4.13 ⋅ 1019, and cond(A(3)) = 5.59 ⋅ 1018.

- Case II :

-

Let \(\hat {{\mathcal{X}}}=\text {randn}(1000,500,100)\) be the exact solution of (2), i.e., \(A^{(1)}\in \mathbb {R}^{1000\times 1000}\), \(A^{(2)}\in \mathbb {R}^{500\times 500}\) and \(A^{(3)}\in \mathbb {R}^{100\times 100}\) for which cond(A(1)) = 1.74 ⋅ 1018,cond(A(2)) = 8.07 ⋅ 1017, and cond(A(3)) = 3.66 ⋅ 1018.

Results for these cases are reported in Table 3. The table shows Algorithm 2 to converge faster for Case I. However, the HT−BTF method outperforms the other approaches for the noise level 0.01 for Case II. We remark that the performance of the methods when applied to the Stein tensor equation is different when increasing r in the coefficient matrices; see Example 7 for more details.

We turn to results for the Sylvester tensor equation with the coefficient matrices given in Case II of Remark 1. This equation arises from the discretisation of a three-dimensional convection-diffusion equation on a uniform grid using a standard finite difference for the diffusion term and a second order convergent scheme (Fromm’s scheme) for the convection term with mesh size h = 1/(n + 1); see [2, 3]. This problem was examined in [3] for n × n × n grids with n ≤ 110, for which the corresponding matrix \(\mathcal {A}\) is not severely ill-conditioned. However, the condition number increases with the value of n.

Example 4

Consider the Sylvester tensor equation for N = 3 with the coefficient matrices A(ℓ) for ℓ = 1,2,3 given in the second case of Remark 1. Table 4 shows that Algorithm 2 is an efficient solver. When the noise level is small, FHT−BTF requires more CPU-time than HT−BTF and produces slightly more accurate approximate solutions.

4.2 Experimental results for severely ill-conditioned Stein tensor equations

In this subsection, we consider the solution of three severely ill-conditioned problems of the form (3). For the first two examples, error-free right-hand sides are constructed so that the exact solutions are color images. The iterations with the algorithms were terminated with the stopping criteria (27) or (28). We conclude this subsection by reporting the results for Stein tensor equations with the coefficient matrices given in Case II of Remark 1.

Example 5

The “exact” imageFootnote 3 is represented by a 576 × 787 × 3 tensor and is displayed in Fig. 6a. The coefficient matrices of (3) are A(1), which is defined by (29), and A(2) and A(3), which are given by (30), and have suitable dimensions. We set r = 7,σ = 2 for A(1), and r = 2 for A(2) and A(3). Then cond(A(1)) = 1.79 ⋅ 106, cond(A(2)) = 4.05 ⋅ 1017, and cond(A(3)) = 6.45 ⋅ 1049. We found that when using the stopping criterion (28), the performance of Algorithm 2 is not very sensitive to small changes in η(> 1) and τ; see Fig. 7 for details.

Example 5. a Exact image, b blurred and noisy image with noise level ν = 0.01, restored image by c HT−BTF, d FHT−BTF, and e Algorithm 2 using the stopping criterion (27)

Example 6

Let the exact solution of (3) be of order 1019 × 1337 × 33; it represents the hyperspectral image shown in Fig. 8. The coefficient matrices A(1), A(2), and A(3) of suitable dimensions are defined by (30) with r = 12 for A(1), r = 2 for A(2), and r = 6 for A(3). Then cond(A(1)) = 2.05 ⋅ 1018, cond(A(2)) = 1.75 ⋅ 1017, and cond(A(3)) = 2.44 ⋅ 1017.

Example 6. a Exact image, b blurred and noisy image with noise level ν = 0.01, and c restored image by Algorithm 2 using the stopping criterion (27)

Tables 5, 6, 7, and 8 show results for Examples 5 and 6. Algorithm 2 can be seen to be superior to the other methods examined. The exact, contaminated, and restored images are shown in Figs. 6, 8, and 9.

Example 6. a Blurred and noisy image, restored images by b HT−BTF, c FHT−BTF, and d Algorithm 2 using the stopping criterion (28) with τ = 3 ⋅ 10− 2

Similarly to Example 3, we consider coefficient matrices (30) with larger values of r. Differently from Sylvester tensor equations, all algorithms perform better when increasing the value of r. For the Stein tensor equation, we note that Algorithm 2 can be competitive with the (F)HT−BTF method.

Example 7

Consider the Stein tensor (3) with the matrices A(ℓ) given by (30) for ℓ = 1,2,3. Let r = 40 for A(1), r = 50 for A(2), and r = 30 for A(3). Table 9 reports results for the following two cases:

- Case I :

-

Let the exact solution of (3) be the hyperspectral image of order 1019 × 1337 × 33 mentioned above. We have cond(A(1)) = 1.18 ⋅ 1018,cond(A(2)) = 4.87 ⋅ 1018, and cond(A(3)) = 3.12 ⋅ 10114.

- Case II :

-

Let \(\hat {{\mathcal{X}}}=\text {randn}(1000,500,100)\) be the exact solution of (3); i.e., \(A^{(1)}\in \mathbb {R}^{1000\times 1000}\), \(A^{(2)}\in \mathbb {R}^{500\times 500}\), and \(A^{(3)}\in \mathbb {R}^{100\times 100}\). We have cond(A(1)) = 2.48 ⋅ 1019, cond(A(2)) = 6.70 ⋅ 1017, and cond(A(3)) = 5.70 ⋅ 1018.

The results reported in Table 9 show Algorithm 2 to perform better than (F)HT−BTF for larger values of r.

We conclude this subsection by reporting results for a Stein tensor equation, whose coefficient matrices are given by (6).

Example 8

Let \(\hat {{\mathcal{X}}}=\text {randn}(n,n,n)\) be the exact solution of equation (3) and let the coefficient matrices A(1), A(2), and A(3) be defined by (6). We observe that the (F)HT−BT methods perform less well when increasing the problem size. Therefore, we used a slightly larger value of τ for n = 200. Table 10 shows that HT−BT is superior to Algorithm 2 for n = 120. When n = 200, Algorithm 2 outperforms (F)HT−BT.

5 Conclusions

This paper first presents some results on the conditioning of the Stein tensor equation. Then it introduces the Golub–Kahan bidiagonalization process with application to solving severely ill-conditioned linear tensor equations, such as Sylvester and Stein tensor equations. The iterative scheme also can be applied to the solution of general linear tensor equations with an operator over \(\mathbb {R}^{n_{1}\times n_{2}\times {\cdots } \times n_{k}}\). We provide new theoretical results and present some numerical examples with applications to high-dimensional PDEs and color image restoration to illustrate the applicability and effectiveness of the proposed iterative method.

Notes

All computations for this section were carried out on a 64-bit 2.50-GHz core i5 processor with 8.00-GB RAM using MATLAB version 9.4 (R2018a).

The image is available at https://www.hlevkin.com/TestImages/Boats.ppm

References

Bader, B.W, Kolda, T.G.: MATLAB tensor toolbox version 2.5. http://www.sandia.gov/tgkolda/TensorToolbox

Ballani, J., Grasedyck, L.: A projection method to solve linear systems in tensor format. Numer. Linear Algebra Appl. 20, 27–43 (2013)

Beik, F.P.A., Movahed, F.S., Ahmadi-Asl, S.: On the Krylov subspace methods based on tensor format for positive definite Sylvester tensor equations. Numer. Linear Algebra Appl. 23, 444–466 (2016)

Bentbib, A.H., El Guide, M., Jbilou, K., Reichel, L.: A global Lanczos method for image restoration. J. Comput. Appl. Math. 300, 233–244 (2016)

Bentbib, A.H., El Guide, M., Jbilou, K, Reichel, L.: Global Golub–Kahan bidiagonalization applied to large discrete ill-posed problems. J. Comput. Appl. Math. 322, 46–56 (2017)

Bouhamidi, A., Jbilou, K., Reichel, L., Sadok, H.: A generalized global Arnoldi method for ill-posed matrix equations. J. Comput. Appl. Math. 236, 2078–2089 (2012)

Buccini, A., Pasha, M., Reichel, L.: Generalized singular value decomposition with iterated Tikhonov regularization. J. Comput. Appl. Math. 373, 112276 (2020)

Calvetti, D., Reichel, L.: Tikhonov regularization of large linear problems. BIT 43, 263–283 (2003)

Chen, Z., Lu, L.Z.: A projection method and Kronecker product preconditioner for solving Sylvester tensor equations. Sci. China Math. 55, 1281–1292 (2012)

Cichocki, A., Zdunek, R., Phan, A. H., Amari, S. I.: Nonnegative matrix and tensor factorizations: Applications to exploratory multi-way data analysis and blind source separation. John Wiley & Sons, Chichester (2009)

Eldén, L.: Algorithms for the regularization of ill-conditioned least squares problems. BIT 17, 134–145 (1977)

Engl, H.W., Hanke, M., Neubauer, A.: Regularization of inverse problems. Kluwer, Dordrecht (1996)

Golub, G.H., Van Loan, C.F.: Matrix computations. The Johns Hopkins University Press, Batimore (1996)

Horn, R.A., Johnson, C.R.: Matrix analysis. Cambridge University Press, Cambridge (1985)

Huang, G., Reichel, L., Yin, F.: On the choice of subspace for large-scale Tikhonov regularization problems in general form. Numer. Algorithms 81, 33–55 (2019)

Huang, B., Xie, Y., Ma, C.: Krylov subspace methods to solve a class of tensor equations via the Einstein product. Numer. Linear Algebra Appl. 26, e2254 (2019)

Kindermann, S.: Convergence analysis of minimization-based noise level-freeparameter choice rules for linear ill-posed problems. Electron. Trans. Numer. Anal. 38, 233–257 (2011)

Kindermann, S., Raik, K.: A simplified L-curve method as error estimator. Electron. Trans. Numer. Anal. 53, 217–238 (2020)

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51, 455–500 (2009)

Liang, L., Zheng, B.: Sensitivity analysis of the Lyapunov tensor equation. Linear Multilinear Algebra 67, 555–572 (2019)

Malek, A., Bojdi, Z.K., Golbarg, P.N.N.: Solving fully three-dimensional microscale dual phase lag problem using mixed-collocation finite difference discretization. J. Heat Transf. 134, 094504 (2012)

Malek, A., Masuleh, S.H.M.: Mixed collocation-finite difference method for 3D microscopic heat transport problems. J. Comput. Appl. Math. 217, 137–147 (2008)

Masuleh, S.H.M., Phillips, T.N.: Viscoelastic flow in an undulating tube using spectral methods. Comput. Fluids 33, 1075–1095 (2004)

Najafi-Kalyani, M., Beik, F.P.A., Jbilou, K.: On global iterative schemes based on Hessenberg process for (ill-posed) Sylvester tensor equations. J. Comput. Appl. Math. 373, 112216 (2020)

Paige, C.C., Saunders, M.A.: LSQR: An algorithm for sparse linear equations and sparse least squares problems. ACM Trans. Math. Softw. 8, 43–71 (1982)

Reichel, L., Shyshkov, A.: A new zero-finder for Tikhonov regularization. BIT 48, 627–643 (2008)

Sun, Y.S., Jing, M., Li, B.W.: Chebyshev collocation spectral method for three-dimensional transient coupled radiative-conductive heat transfer. J. Heat Transf. 134, 092701–092707 (2012)

Xu, X., Wang, Q.-W.: Extending BiCG and BiCR methods to solve the Stein tensor equation. Comput. Math. Appl. 77, 3117–3127 (2019)

Zak, M.K., Toutounian, F.: Nested splitting conjugate gradient method for matrix equation AXB = C and preconditioning. Comput. Math. Appl. 66, 269–278 (2013)

Zak, M.K., Toutounian, F.: Nested splitting CG-like iterative method for solving the continuous Sylvester equation and preconditioning. Adv. Comput. Math. 40, 865–880 (2014)

Zak, M.K., Toutounian, F.: An iterative method for solving the continuous Sylvester equation by emphasizing on the skew-Hermitian parts of the coefficient matrices. Internat. J. Comput. Math. 94, 633–649 (2017)

Acknowledgments

The authors would like to thank the anonymous referees for their suggestions and comments.

Funding

Research by LR was supported in part by NSF grants DMS-1720259 and DMS-1729509.

Author information

Authors and Affiliations

Corresponding author

Additional information

Dedicated to Gérard Meurant on the occasion of his 70th birthday.

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Beik, F.P.A., Jbilou, K., Najafi-Kalyani, M. et al. Golub–Kahan bidiagonalization for ill-conditioned tensor equations with applications. Numer Algor 84, 1535–1563 (2020). https://doi.org/10.1007/s11075-020-00911-y

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11075-020-00911-y

Keywords

- Linear tensor operator equation

- Ill-posed problem

- Tikhonov regularization

- Golub–Kahan bidiagonalization