Abstract

In this paper, a new adaptive thresholding based sub-histogram equalization (ATSHE) scheme is proposed for contrast enhancement and brightness preservation with retention of basic image features. The histogram of an input image is divided into several sub-histograms using adaptive threshold intensity values. The number of threshold values, and hence of sub-histograms, is not fixed but depends on the peak signal-to-noise ratio (PSNR) of the thresholded image. Histogram clipping is also used to control the enhancement rate and thus avoid over-enhancement of the resultant image. The median value of the original histogram gives the clipping threshold. The main objective of the proposed method is to improve contrast while preserving the mean brightness, the structural similarity index (SSIM), and the information content of the images. Contrast enhancement is assessed with well-known parameters such as contrast per pixel and the modified measure of enhancement. Mean brightness preservation is evaluated using the absolute mean brightness error, and feature preservation is checked through SSIM and PSNR values. With the proposed routine, the enhanced images achieve a good trade-off between feature enhancement, low-contrast boosting, and brightness preservation while retaining the natural feel of the original image. In particular, owing to its adaptive threshold selection, the proposed ATSHE scheme can successfully enhance images captured under a wide range of weak illumination conditions such as backlighting, non-uniform illumination, low contrast, and darkness.


1 Introduction

Because of capture conditions, device limitations, operational errors, and user inexperience, digital images often represent information poorly, and the contrast of captured images may not be satisfactory. To recover the relevant information in the captured scene, a commonly applied procedure in low-level computer vision is to enhance the image contrast. Contrast enhancement approaches actively improve visual image quality and are increasingly essential in the design of consumer electronic devices and digital multimedia systems. Contrast describes the pixel intensity differences between structures and distinct objects in the image. A region of interest (ROI) or an object is easily observed in an image with good contrast.

Substantial research has been undertaken to develop robust contrast enhancement techniques. In general, image enhancement techniques are used in many areas as a pre-processing tool to improve the pictorial appearance of images (Sidike et al. 2018; Priyadharsini et al. 2018; Bhandari et al. 2017, 2018). These algorithms are categorized into two domains: the spatial domain and the transform domain (Gonzalez and Woods 2011). Spatial domain-based methods work directly on the pixels of an input image, whereas transform domain-based methods work on a transformed representation of the image. Histogram equalization (HE) (Gonzalez and Woods 2011) based approaches are important techniques for improving both the subjective and the objective quality of an image. The objective quality relates to the statistical analysis of the enhancement, and the subjective quality deals with the naturalness of the image. HE based techniques (Kim 1997; Wan et al. 1999; Chen and Ramli 2003a; b; Sim et al. 2007; Shanmugavadivu and Balasubramanian 2014; Singh and Kapoor 2014; Singh et al. 2015; Tang and Isa 2017; Liu et al. 2011; Lai et al. 2017; Abdullah-Al-Wadud 2007; Ooi and Isa 2010; Huang and Yeh 2013; Santhi and Wahida Banu 2015; Singh et al. 2016; Xiao et al. 2014; Niu et al. 2016; Wong et al. 2016) have been frequently used to improve image quality because of their simplicity, speed, and effective outcomes. These algorithms manipulate the histogram of the input image to yield an image with better quality and improved interpretability.

The histogram equalization (HE) method uses the probability density function, the cumulative distribution function (CDF), and a mapping function for contrast enhancement of images (Gonzalez and Woods 2011). It stretches the dynamic range and flattens the input histogram. The CDF of the image is used to map input gray levels to new transformed gray levels. The drawbacks of this framework are undesirable visual artifacts, alteration of the mean brightness, over-enhancement, and under-enhancement in the enhanced image. Furthermore, it fails for weakly illuminated images and produces abrupt changes in the enhanced images with loss of basic image features.
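The CDF-based mapping described above can be illustrated with a minimal sketch. It is not taken from the paper: the function name `equalize_histogram`, the flat-list image representation, and the rounding rule are assumptions chosen to keep the example dependency-free.

```python
def equalize_histogram(pixels, levels=256):
    """Globally equalize a flat list of integer gray levels in [0, levels - 1]."""
    # Histogram: number of pixels at each gray level.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Cumulative distribution function of the gray levels.
    total = len(pixels)
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running / total)

    # Map each input level through the CDF onto the full dynamic range
    # (round half up; the rounding rule is an assumption of this sketch).
    mapping = [int((levels - 1) * c + 0.5) for c in cdf]
    return [mapping[p] for p in pixels]


dark = [100, 100, 101, 102]
bright = equalize_histogram(dark)
```

In this toy input the four pixels occupy a narrow range around 100, while the equalized output is spread up to the top of the range, so the mean brightness rises sharply. This is the brightness shift that the sub-histogram methods discussed later try to limit.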

In this paper, the mean brightness shifting, domination of high-frequency bins, and washed-out appearance suffered by the HE approach are addressed. The objective is to design a high-performance contrast enhancement framework that provides the best possible visual result for natural and satellite images. A good contrast enhancement algorithm should specifically address the following important properties.

1. Uniform contrast: The method should generate uniform contrast over the whole image.

2. Brightness preservation: The technique should improve the contrast of the image while preserving its brightness.

3. Noise tolerance: The algorithm should avoid noise-enhancing artifacts during the enhancement process or exhibit appropriate noise immunity.

4. Convenient implementation: The approach should be simple, efficient, quick, and easy to implement.

The rest of the paper is arranged as follows. Section 2 presents a comprehensive review of the relevant works in the image contrast enhancement area that focus on HE based methods. The proposed adaptive thresholding based sub-histogram equalization (ATSHE) scheme is presented in Sect. 3. Simulation results and discussions are provided in Sect. 4, which includes a comparison with numerous contrast enhancement methods using quality measurement parameters. Section 5 concludes the paper by emphasizing the merits and future scope of the proposed work.

2 Related work

In the literature, there are several rigorous definitions of contrast. Generally, each defines contrast as the ratio of the luminance variation to the mean background luminance. Among contrast enhancement techniques, HE based algorithms have drawn the attention of many researchers. Kim (1997) introduced a concept that segments the original histogram into two parts and equalizes each sub-histogram independently. This technique, known as brightness preserving bi-histogram equalization (BBHE) (Kim 1997), was the first approach to partition the input histogram using the mean intensity value. BBHE preserves the mean brightness and improves the contrast of the image. A few years later, Wan et al. (1999) presented a method named dualistic sub-image histogram equalization (DSIHE). This framework uses the median brightness value in place of the mean brightness, and DSIHE has been claimed to be superior to BBHE (Kim 1997) with respect to entropy and brightness preservation. To avoid excessive brightness change and to improve basic features in the enhanced image, several algorithms based on the traditional HE scheme have been introduced in the last two decades.

Chen and Ramli (2003) proposed an approach that partitions the input histogram using the threshold value that gives the minimum absolute mean brightness error (AMBE). Thereafter, Chen and Ramli (2003) designed a technique in which the histogram is divided at the mean value, as in BBHE (Kim 1997). In BBHE the partition is done only once, whereas in this algorithm the division is performed recursively. Many methods have exploited recursion to find optimal threshold value(s) for segmenting the input histogram. Sim et al. (2007) introduced an algorithm known as recursive sub-image histogram equalization (RSIHE), in which median values are used as the partition criterion. RSIHE is similar to the Chen and Ramli (2003) method, but it uses the median value instead of the mean value. The most difficult task for these methods (Sim et al. 2007) is to generate the optimum number of sub-histograms for effective contrast enhancement. Most of these techniques fail to maintain basic image features such as mean brightness after the enhancement process.

Shanmugavadivu and Balasubramanian (2014) presented a technique that segments the original histogram into two sub-histograms using Otsu’s threshold. Singh and Kapoor (2014) proposed a method (ESIHE) in which the histogram is divided into over-exposed and under-exposed sub-histograms. Singh et al. (2015) improved ESIHE (Singh and Kapoor 2014) by using recursive partition of the histogram and claimed that it is very effective for night vision and underwater images. Recently, Tang and Isa (2017) introduced a framework based on a sub-histogram mechanism that controls the enhancement rate by minimizing the dominance of high-frequency bins. Furthermore, in local histogram equalization (LHE) schemes, an input image is divided into a number of non-overlapping sub-blocks. Liu et al. (2011) proposed an LHE approach in which histogram projection is performed separately on each sub-block. Lai et al. (2017) proposed another LHE technique that uses the bilateral Bezier curve for histogram modification and gradient information for the transformation function.

Abdullah-Al-Wadud (2007) presented a novel approach in which the local minima of the histogram are used to partition it. Ooi and Isa (2010) proposed quadrants dynamic histogram equalization (QDHE) for better contrast enhancement. In this method, dynamic histogram equalization (DHE) (Abdullah-Al-Wadud 2007) and a clipping process are combined to improve the contrast of gray scale images. Subsequently, Huang and Yeh (2013) introduced a technique of image contrast enhancement for preserving the mean brightness (ICEPMB) without losing image features. This technique uses the mean, standard deviation, and PSNR values for contrast enhancement. Santhi and Wahida Banu (2015) proposed the adaptive contrast enhancement using modified histogram equalization (ACMHE) framework and observed that it overcomes the drawbacks of change in mean brightness, saturation artifacts, and over-enhancement by exploiting the general histogram equalization algorithm.

Recently, Singh et al. (2016) proposed a contrast enhancement method that applies histogram equalization to texture regions and reported several quality parameters for performance analysis. Xiao et al. (2014) designed an approach based on the gray-level and gradient magnitude (GLGM) histogram, in which the spatial distribution of the GLGM histogram is used with the Fibonacci-quantized gradient magnitude to describe spatial information effectively. Niu et al. (2016) introduced a method based on tone-preserving entropy maximization, in which high entropy is retained in the simulated image while the loss of tone continuity is controlled. Wong et al. (2016) presented another HE-based scheme in which color channel stretching, color space conversion, histogram equalization, equalization compression, optimization of the compression profile, and restoration of color saturation are used to improve image quality.

In recent years, several fuzzy-based enhancement techniques (Cheng and Xu 2000; Sheet et al. 2010; Shakeri et al. 2017; Hasikin and Isa 2014) have been proposed. Cheng and Xu (2000) presented an algorithm that exploits fuzzy sets and the fuzzy entropy principle to control the uncertainty in images. Sheet et al. (2010) introduced a method for brightness-preserving contrast enhancement that uses fuzzy statistics and dynamic histogram equalization. Shakeri et al. (2017) proposed a system that includes histogram analysis, clustering of brightness levels, and a transfer function for image enhancement. Hasikin and Isa (2014) presented an approach with image fuzzification, modification of membership values, and image defuzzification stages. These methods are regarded as HE-based approaches for image enhancement.

The review above covers spatial domain-based algorithms that build on the traditional HE method. A few important frequency or transform domain techniques (Bhandari et al. 2011, 2014, 2016; Demirel et al. 2010) and other methods (He et al. 2011; Fu et al. 2015, 2016) that address image contrast and brightness enhancement are also discussed. Bhandari et al. (2011) proposed an approach for low-contrast satellite image enhancement based on singular value decomposition (SVD) and the discrete cosine transform (DCT). This algorithm transforms the input image into the SVD-DCT domain, and the enhanced image is generated from the normalized singular value matrix. Subsequently, Bhandari et al. (2014) introduced another approach that applies the cuckoo search algorithm to obtain contrast and brightness enhancement of satellite images using DWT–SVD (Demirel et al. 2010). Bhandari et al. (2016) presented an approach for dark satellite image enhancement using a knee transfer function and gamma correction with DWT–SVD. In this method, the knee function and gamma correction are used to boost dark satellite images.

He et al. (2011) introduced a technique known as single image haze removal using dark channel prior (DCP). This method uses a simple but effective image prior, the dark channel prior, to remove haze from a single input image. Other well-known algorithms are based on the Retinex theory (Fu et al. 2015, 2016). Multiscale fusion (MF) (Fu et al. 2016), which uses Retinex theory as its base, enhances the observed image by adjusting the illumination component with a Laplacian pyramid operator. The enhanced image represents a trade-off among preserving the natural feel of the image, detail enhancement, and local contrast improvement. Fu et al. (2015) presented a probabilistic method for image enhancement (PIE), which is based on simultaneous estimation of illumination and reflectance in the linear domain.

This paper focuses on achieving a good trade-off between mean brightness preservation and contrast enhancement along with other basic quality parameters. To this end, a new adaptive thresholding based sub-histogram equalization (ATSHE) scheme is introduced, which preserves the brightness of the image and improves its contrast effectively without introducing structural artifacts.

3 Proposed methodology

In this section, the proposed ATSHE enhancement scheme is discussed in detail. It contains three modules: adaptive multiple thresholding based histogram partition, histogram clipping, and intensity transformation. The first module has two parts: sharp adaptive multiple threshold selection and optimum PSNR calculation. After the histogram is partitioned, the median value is used for the clipping process. In the final stage of the proposed method, the intensity transformation module is applied to each generated sub-image to obtain a feature-preserved enhanced image.

3.1 Adaptive multiple thresholding approach for histogram partition

In this paper, a straightforward and effective adaptive thresholding based histogram modification framework is proposed for the enhancement of weakly illuminated images, which exploits several mature image processing methods. Let the input image Q be a gray scale or color image. The proposed algorithm is applied directly to a gray scale image because it is a two-dimensional matrix of size A × B. A color image, however, is represented as a discrete three-dimensional matrix in the red–green–blue (RGB) color space. It can be treated as three two-dimensional matrices, one for each color channel. The proposed method is applied to each RGB channel of a color image individually.

1. Sharp adaptive multiple threshold selection: An input image Q is used for the proposed algorithm, where the total number of pixels and the number of discrete intensity levels are A × B and D, respectively. The input histogram G of a gray scale image or of any channel of a color image is expressed as

$$ G(l) = h_{l} \quad {\text{where}}\;l = 0,1,2, \ldots , D - 1 $$ (1)

The number of pixels at a specific intensity l of G is represented by \( h_{l} \). Based on G, the mean value µ and the standard deviation σ are defined as

$$ \mu = \frac{{\sum\nolimits_{l = s}^{e} {l \times G(l)} }}{{\sum\nolimits_{l = s}^{e} {G(l)} }} $$ (2)

$$ \sigma = \left( {\frac{{\sum\nolimits_{l = s}^{e} {(l - \mu )^{2} \times G(l)} }}{{\sum\nolimits_{l = s}^{e} {G(l)} }}} \right)^{1/2} $$ (3)

where [s, e] denotes the interval of calculation, which takes the initial value [0, 255] for both the mean and the standard deviation. Three threshold values are generated using µ − σ, µ, and µ + σ; these values can be used for further partition of the input histogram.

2. Optimum PSNR calculation: In this part, the peak signal-to-noise ratio (PSNR) is computed at each recursion level. The interval at each recursion level lies between s and e, where the lower boundary s is set to µ − σ and the upper boundary e is set to µ + σ. The mean, the standard deviation, and two new threshold values µ − σ and µ + σ are computed from the initial interval. The newly calculated interval [s, e] then replaces the previous interval, and a new mean and standard deviation are computed to set the next threshold values. Thus, a number of sub-histograms are generated in this recursive process. The PSNR at each recursion level is used to select the appropriate number of sub-histograms (Huang and Yeh 2013; Arora et al. 2008). The PSNR is given by

$$ PSNR = 20\log_{10} \left( {\frac{D - 1}{RMSE}} \right) $$ (4)

where RMSE is the root-mean-square error, expressed as

$$ RMSE = \left( {\frac{1}{A \times B}\sum\limits_{x = 0}^{A - 1} {\sum\limits_{y = 0}^{B - 1} {[Q_{o} (x,y) - Q(x,y)]^{2} } } } \right)^{1/2} $$ (5)

where Q and Qo are the input and thresholded enhanced images, respectively, D is equal to \( 2^{b} \) for a b-bit digital image format (D = 256 for b = 8), and (x, y) represents the spatial coordinates of a pixel in the image. A higher PSNR value indicates better image quality. The optimum recursion level, or optimum PSNR, is reached when the increase in PSNR between successive levels falls below 0.1 dB (Huang and Yeh 2013; Arora et al. 2008); beyond this point, further splitting of the histogram into sub-histograms yields no meaningful gain.
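The recursive threshold selection of Eqs. (2) and (3) can be sketched as follows. This is an illustrative sketch rather than the authors' implementation: the function names are hypothetical, thresholds are rounded to integer gray levels as an assumption, and a fixed `max_levels` cap stands in for the PSNR-based stopping rule.

```python
import math


def interval_stats(hist, s, e):
    """Histogram-weighted mean and standard deviation over levels s..e (Eqs. 2-3)."""
    total = sum(hist[l] for l in range(s, e + 1))
    if total == 0:
        return None  # empty interval: nothing to measure
    mu = sum(l * hist[l] for l in range(s, e + 1)) / total
    var = sum((l - mu) ** 2 * hist[l] for l in range(s, e + 1)) / total
    return mu, math.sqrt(var)


def nested_thresholds(hist, max_levels=5):
    """Collect mu - sigma and mu + sigma from successively narrower intervals.

    max_levels is a stand-in for the PSNR stopping rule (assumption).
    """
    s, e = 0, len(hist) - 1
    thresholds = []
    for _ in range(max_levels):
        stats = interval_stats(hist, s, e)
        if stats is None:
            break
        mu, sigma = stats
        # Rounding to integer gray levels is an assumption of this sketch.
        lo = max(round(mu - sigma), s)
        hi = min(round(mu + sigma), e)
        if hi - lo < 2 or (lo == s and hi == e):
            break  # the interval can no longer shrink
        thresholds += [lo, hi]
        s, e = lo, hi  # the new interval replaces the previous one
    return sorted(set(thresholds))


# A flat 256-bin histogram, two recursion levels.
flat_thresholds = nested_thresholds([1] * 256, max_levels=2)
```

Each recursion level contributes one pair of thresholds, which is why the thresholds come in even numbers.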

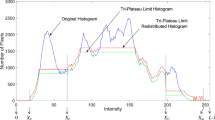

3.2 Histogram clipping

For contrast enhancement, histogram clipping has been found to be one of the best operations for general-purpose images with respect to preserving color features (natural look) (Singh and Kapoor 2014). Histogram clipping is the process in which the input histogram is clipped using a statistical measurement of the image. The core idea behind histogram clipping is to control the enhancement rate and thereby produce a natural-looking enhanced image. Over-enhancement is a major problem in HE-based methods; it causes loss of natural color appearance, change of textures, destruction of edges, and undesirable artifacts. The clipping threshold value Tc is calculated as the median value of the non-empty histogram bins of the gray scale image (Kong et al. 2009; Singh and Kapoor 2014) or of each channel of the color image.

where Gc(l) and G(l) are the clipped and input histograms, respectively.
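A minimal sketch of the clipping step is given below. The threshold follows the text (median of the non-empty bins); the rule that bins above Tc are simply set to Tc is an assumption of this sketch, as are the function name and the list representation.

```python
import statistics


def clip_histogram(hist):
    """Clip every bin at the median of the non-empty bins.

    Returns the clipped histogram Gc and the threshold Tc.
    Setting bins above Tc to exactly Tc is an assumption of this sketch.
    """
    occupied = [h for h in hist if h > 0]
    if not occupied:
        return list(hist), 0
    t_c = statistics.median(occupied)
    return [min(h, t_c) for h in hist], t_c


clipped, t_c = clip_histogram([0, 2, 10, 4, 0, 6])
```

In this toy histogram the non-empty bins are 2, 10, 4, and 6, so the two tallest bins are capped at their median while the smaller bins are left unchanged. Capping dominant bins limits the slope of the subsequent CDF mapping, which is how clipping controls the enhancement rate.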

3.3 Intensity transformation

Based on the histogram separation, the optimum number t of sub-images can be expressed as follows:

where Qxy is defined as Q(x, y), k = 0, 1, 2, …, t − 1, \( R_{k} \) denotes the threshold value of the sub-images, and \( Q_{k}(x, y) \) represents each sub-image.

The probability density function of each sub-histogram (sub-image) is expressed as

where \( l = R_{k} + 1, R_{k} + 2, \ldots , R_{k + 1} \), and \( pd_{k}(l) \) is related to the histogram of the kth sub-image and gives the frequency of a specific input gray level or intensity l. The cumulative distribution function (CDF) is defined as

where \( cd_{k} \) is the CDF of the sub-image \( Q_{k} \) for the kth partition.

Then, the transformation function is expressed as

where \( T_{k}(l) \) represents the transformation function, k = 0, 1, 2, …, t − 1, and \( l = R_{k} + 1, R_{k} + 2, \ldots , R_{k + 1} \).
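The per-sub-image equalization can be sketched as a single lookup table. This is a hedged illustration, not the paper's exact transformation: it assumes outer boundaries of −1 and D − 1, a linear stretch of each sub-range by its own CDF, and half-up rounding. The name `sub_histogram_mapping` is hypothetical.

```python
def sub_histogram_mapping(hist, thresholds):
    """Build a gray-level lookup table that equalizes each sub-range separately.

    hist        -- (optionally clipped) histogram, one entry per gray level
    thresholds  -- increasing interior thresholds R_1 < ... < R_{t-1}
    """
    levels = len(hist)
    # Assumed outer boundaries so that sub-range k covers R_k + 1 .. R_{k+1}.
    bounds = [-1] + list(thresholds) + [levels - 1]
    mapping = list(range(levels))  # identity for empty sub-ranges
    for k in range(len(bounds) - 1):
        lo, hi = bounds[k] + 1, bounds[k + 1]
        total = sum(hist[lo:hi + 1])
        if total == 0:
            continue
        running = 0
        for l in range(lo, hi + 1):
            running += hist[l]
            cdf = running / total  # CDF of the kth sub-histogram
            # Stretch within the sub-range only (assumed form of T_k).
            mapping[l] = lo + int((hi - lo) * cdf + 0.5)
    return mapping


lut = sub_histogram_mapping([4, 0, 0, 0, 1, 1, 1, 1], thresholds=[3])
```

Because every level is mapped back into its own sub-range, dark pixels stay dark and bright pixels stay bright, which is the mechanism that limits the mean brightness shift.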

Finally, the proposed algorithm generates the enhanced image Qo. For a color image, three enhanced channel images are produced, and the enhanced red, green, and blue channels are combined to form the enhanced color output image.

The detailed steps of the proposed method are described below, and the complete flowchart of this framework is shown in Fig. 1.

Step 1: An input image Q is taken. If Q is a color image, it is separated into three channels (red, green, and blue); if Q is a gray scale image, it is used directly. The size of the input image is A × B. Each channel of a color image is treated as a gray scale image in the subsequent steps.

Step 2: Calculate the mean value µ and the standard deviation σ of the image in the interval [s, e], with the initial value [0, 255].

Step 3: Set two threshold values, µ − σ and µ + σ, for the new interval [s, e], where s = µ − σ and e = µ + σ.

Step 4: Replace the previous interval with the newly calculated interval [s, e], and again find the mean and standard deviation for the new interval.

Step 5: Repeat steps 3 and 4 to divide the image into several sub-images and obtain the threshold values TH1, TH2, …, THt−1 using the stop condition.

Step 6: Apply histogram clipping to control the enhancement rate, using the median value as the clipping threshold, i.e. Tc = median [G(l)].

Step 7: After clipping, and with t sub-images obtained from the histogram separation, apply the intensity transformation (histogram equalization) to generate the thresholded enhanced sub-images.

Step 8: Find the peak signal-to-noise ratio at each recursion level; the optimum recursion level is reached when the increase in PSNR is lower than 0.1 dB.

Step 9: Obtain the final enhanced gray scale image or the enhanced image of each color channel; for a color image, the three enhanced RGB channels are combined to form the final enhanced color image Qo.

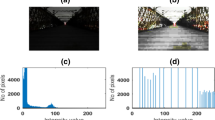

For a better understanding of the proposed method, two gray images are used in this paper, and the results are shown in Figs. 2 and 3. The details of the test images are given in Table 1. The proposed ATSHE technique generates an even number of thresholds because each recursion level adds the pair µ − σ and µ + σ. The number of thresholds or sub-images depends on the optimum PSNR. Table 2 shows the thresholds, sub-range boundaries, and PSNR values for the gray images. The difference in PSNR between two successive threshold numbers (th) must be lower than 0.1 dB to terminate the iteration process. For example, for Gray1 the difference of the PSNR values (th(4) − th(2)) is not less than the defined limit, so the iteration continues. The optimum recursion level or optimum PSNR is achieved (th(10) − th(8) < 0.1 dB) at the 10th threshold. For Gray2, the optimum PSNR value is obtained and the stop condition is met at the 6th threshold, th(6).

In Fig. 4, the encircled portions of the input images show weakly illuminated areas such as low-contrast regions, darker regions, backlighting effects, and non-uniform illumination. The encircled regions in the enhanced images, in turn, show the balance between enhancement and brightness preservation achieved by the proposed ATSHE approach without loss of information. The colors and features in the original and enhanced images show the brightness difference and the feature preservation ability with a natural feel.
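The stopping rule can be expressed compactly. In the sketch below, the function name `optimum_level` is hypothetical, and the PSNR values are invented purely for illustration; they are not the values reported in Table 2.

```python
def optimum_level(psnr_by_level, min_gain_db=0.1):
    """Index of the first level whose PSNR gain over the previous one is below min_gain_db.

    psnr_by_level[i] is the PSNR obtained with 2 * (i + 1) thresholds.
    Falls back to the last level if the gain never drops below the limit.
    """
    for i in range(1, len(psnr_by_level)):
        if psnr_by_level[i] - psnr_by_level[i - 1] < min_gain_db:
            return i
    return len(psnr_by_level) - 1


# Hypothetical PSNR values (dB) for th(2), th(4), th(6), th(8), th(10).
illustrative_psnr = [20.0, 22.5, 23.4, 23.9, 23.95]
level = optimum_level(illustrative_psnr)
threshold_count = 2 * (level + 1)
```

With these illustrative numbers the gain first drops below 0.1 dB between th(8) and th(10), so the recursion stops at ten thresholds, mirroring the behaviour described for Gray1.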

4 Experiments and discussion

To demonstrate the effectiveness of the proposed ATSHE method, it is compared with several conventional and state-of-the-art techniques discussed in the introduction and related work sections. Comparing the quantitative results of the implemented approaches with the proposed framework requires several performance evaluation metrics. For this purpose, the absolute mean brightness error (AMBE), structural similarity index (SSIM), contrast per pixel (CPP), peak signal-to-noise ratio (PSNR), modified measure of enhancement (MEME), and discrete entropy (DE) are computed and listed in the tables.

Quantitative assessment of contrast enhancement is necessary along with qualitative assessment. Visual inspection allows annoying artifacts, over-enhancement, and unnatural enhancement to be judged. The quality of the enhanced images, as judged by human eyes, reflects the capability of the techniques. However, qualitative judgement varies from person to person. The human eye is sensitive not only to features at both extremes of the pixel intensity range but also to features at mid-range intensities (Arora et al. 2008). For a fair comparison of the methods, quantitative analysis is therefore very important. The parameters used here are standard and well known for comparison purposes, and they support the qualitative analysis. The different objective assessment parameters are discussed below.

4.1 Absolute mean brightness error (AMBE)

Absolute mean brightness error is defined as the absolute difference between the mean brightness of the original image and that of the enhanced image (Huang and Yeh 2013). A lower AMBE value indicates better brightness preservation in the enhanced image.

$$ AMBE = \left| {\mu_{Q} - \mu_{{Q_{o} }} } \right| $$

where µQ and µQo are the mean brightness of the original and enhanced images, respectively.
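The AMBE definition above translates directly into code. The sketch below assumes images are given as flat lists of gray values; the function name is illustrative.

```python
def ambe(original, enhanced):
    """Absolute difference between the mean brightness of two images (flat pixel lists)."""
    mean_original = sum(original) / len(original)
    mean_enhanced = sum(enhanced) / len(enhanced)
    return abs(mean_original - mean_enhanced)


# A dark patch and a strongly brightened version of it.
shift = ambe([100, 100, 101, 102], [128, 128, 191, 255])
```

A value of zero means the mean brightness is fully preserved; the large value for this toy pair reflects a strong brightness shift.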

4.2 Structural similarity index (SSIM)

Structural similarity index (SSIM) is a parameter used for perceptual quality measurement of an enhanced digital image with respect to a reference digital image (Bhandari et al. 2014). In the best case, the structure of the enhanced or simulated digital image is identical to that of the reference or original digital image. The value of the SSIM varies from 0 to 1; it is 1 in the best case and 0 in the worst case.

$$ SSIM = \frac{{(2\mu_{Q} \mu_{{Q_{o} }} + c_{1} )(2\sigma_{{QQ_{o} }} + c_{2} )}}{{(\mu_{Q}^{2} + \mu_{{Q_{o} }}^{2} + c_{1} )(\sigma_{Q}^{2} + \sigma_{{Q_{o} }}^{2} + c_{2} )}} $$

where σQ and σQo are the square roots of the variances of the original image ‘Q’ and the enhanced image ‘Qo’, respectively, µQ and µQo are the mean intensities of the original and enhanced images, respectively, and σQQo is the covariance of images ‘Q’ and ‘Qo’. \( c_{1} = (k_{1} L)^{2} \) and \( c_{2} = (k_{2} L)^{2} \) are constants, with k1 = 0.01, k2 = 0.03, and L = 255. The constants are included to avoid instability in the SSIM quality parameter (Bhandari et al. 2014; Li and Bovik 2010).
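A single-window (global) SSIM built from the quantities defined above can be sketched as follows. This assumes the standard SSIM form computed once over the whole image; practical implementations usually compute it over local windows and average the results, so the numbers from this sketch are not comparable to those of library implementations. The function name is illustrative.

```python
def global_ssim(q, qo, k1=0.01, k2=0.03, dynamic_range=255):
    """Global SSIM between two equally sized images given as flat pixel lists."""
    n = len(q)
    mu_q = sum(q) / n
    mu_o = sum(qo) / n
    var_q = sum((a - mu_q) ** 2 for a in q) / n
    var_o = sum((b - mu_o) ** 2 for b in qo) / n
    cov = sum((a - mu_q) * (b - mu_o) for a, b in zip(q, qo)) / n
    # Stabilizing constants c1 and c2 as defined in the text.
    c1 = (k1 * dynamic_range) ** 2
    c2 = (k2 * dynamic_range) ** 2
    numerator = (2 * mu_q * mu_o + c1) * (2 * cov + c2)
    denominator = (mu_q ** 2 + mu_o ** 2 + c1) * (var_q + var_o + c2)
    return numerator / denominator


same = global_ssim([10, 20, 30, 40], [10, 20, 30, 40])
flipped = global_ssim([10, 20, 30, 40], [40, 30, 20, 10])
```

Identical images score 1 (up to floating-point error), while reversing the intensity order destroys the structural correlation and lowers the score.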

4.3 Contrast per pixel (CPP)

Contrast per pixel is a performance metric that measures the level of contrast in an image. CPP computes the average intensity difference between a pixel and its neighboring pixels in the digital image (Santhi and Wahida Banu 2015; Sangee et al. 2010). The value of the CPP describes the local contrast of the image, and it is defined in Eq. (14)

where γ(i, j) is the gray value of pixel (i, j) and γ(m, n) is the gray value of a neighboring pixel of (i, j) in a 3 × 3 window.
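The idea behind CPP can be sketched as below. The exact normalization of Eq. (14) is not reproduced in this text, so averaging the absolute differences over the neighbors that actually exist inside the image (fewer at the borders) is an assumption of this sketch, as is the function name.

```python
def contrast_per_pixel(img):
    """Average absolute difference between each pixel and its 3 x 3 neighbors.

    img is a list of rows of gray values. Border pixels use only the
    neighbors that lie inside the image (assumption of this sketch).
    """
    rows, cols = len(img), len(img[0])
    total = 0.0
    for i in range(rows):
        for j in range(cols):
            diffs = [
                abs(img[i][j] - img[m][n])
                for m in range(max(0, i - 1), min(rows, i + 2))
                for n in range(max(0, j - 1), min(cols, j + 2))
                if (m, n) != (i, j)
            ]
            if diffs:
                total += sum(diffs) / len(diffs)
    return total / (rows * cols)


flat = contrast_per_pixel([[5, 5], [5, 5]])
checker = contrast_per_pixel([[0, 10], [10, 0]])
```

A uniform image has zero local contrast, and stretching the intensity differences of an image increases its CPP proportionally.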

4.4 Peak signal to noise ratio (PSNR)

Peak signal-to-noise ratio (PSNR) is a feature measurement factor that depends on the root-mean-square error (RMSE) between the images. RMSE is defined as the square root of the mean squared error between the original and enhanced images, where the error measures the deviation between the enhanced and original images. PSNR is the ratio of the maximum possible power of the signal to the power of the corrupting noise (Celik and Tjahjadi 2010). This quantity approximates human perception of reconstruction quality. The PSNR and RMSE are computed according to Eqs. (4) and (5).
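Eqs. (4) and (5) can be written out as a short sketch. Images are assumed to be flat lists of gray values of equal length, and returning infinity for identical images is a convention chosen here, not something stated in the paper.

```python
import math


def rmse(q, qo):
    """Root-mean-square error between two images given as flat pixel lists (Eq. 5)."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(q, qo)) / len(q))


def psnr(q, qo, levels=256):
    """Peak signal-to-noise ratio in dB (Eq. 4), with D = levels."""
    err = rmse(q, qo)
    if err == 0:
        return math.inf  # identical images (convention of this sketch)
    return 20 * math.log10((levels - 1) / err)


worst_case = psnr([0, 0, 0, 0], [255, 255, 255, 255])
```

The worst case for an 8-bit image, where every pixel is off by the full range, gives 0 dB; smaller errors give larger PSNR values.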

4.5 Modified measure of enhancement (MEME)

Modified measure of enhancement (MEME) (Wang and Chen 2017) is an advanced version of the measure of enhancement (EME); the MEME overcomes the drawbacks or limitations of the EME quality measurement parameter that are mentioned in (Agaian et al. 2007; Wang and Chen 2017; Sundaram et al. 2011; Kong and Isa 2017). An image Q is partitioned into d1 × d2 sub-images, \( Q_{i,j}^{w} \) represents the sub-image with spatial co-ordinate (i, j), and MEME is defined as

where QDC represents a thumbnail image of Q whose size is the same as the number of sub-images, that is, exactly d1 × d2. Each pixel of the thumbnail image has the mean value of \( Q_{i,j}^{w} \), and α = 100. \( C_{i,j}^{w} \) and \( C_{{Q_{DC} }} \) are the intra-block contrast and the inter-block contrast, respectively. Peli (1990) defines the square root contrast C.

where A × B represents the size of the image Q of interest and \( Q_{m,n} \) denotes an image with size \( A \times B \).

4.6 Discrete Entropy (DE)

Discrete entropy (DE) measures the average information content or the degree of randomness in a digital image (Thum 1984). The DE depends on the PDF of the image. A low-entropy image has a lower degree of randomness, while a high-entropy image has a higher degree of randomness. The Shannon entropy measures the richness of information associated with the gray levels in the image.

$$ DE = - \sum\limits_{l = 0}^{D - 1} {pd(l)\log_{2} pd(l)} $$

where pd(l) represents the PDF at gray level l of the image and D is the number of discrete intensity levels.
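The Shannon entropy of the gray-level distribution can be sketched as below, assuming a flat list of integer gray values and a base-2 logarithm (entropy in bits). The function name is illustrative.

```python
import math


def discrete_entropy(pixels, levels=256):
    """Shannon entropy (in bits) of the gray-level distribution of an image."""
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    n = len(pixels)
    # Empty bins contribute nothing (p * log p -> 0 as p -> 0).
    return -sum((h / n) * math.log2(h / n) for h in hist if h > 0)


two_levels = discrete_entropy([0, 0, 255, 255])
four_levels = discrete_entropy([0, 1, 2, 3])
```

An image that uses more gray levels with equal frequency carries more information per pixel, so the four-level example has higher entropy than the two-level one, and a constant image has zero entropy.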

4.7 Comparisons with HE based methods

The proposed ATSHE approach is compared with ten other approaches, which include conventional and state-of-the-art histogram-based image enhancement techniques: HE (Gonzalez and Woods 2011), BBHE (Kim 1997), DSIHE (Wan et al. 1999), RSIHE (Sim et al. 2007), QDHE (Ooi and Isa 2010), ICEPMB (Huang and Yeh 2013), ESIHE (Singh and Kapoor 2014), ACMHE (Santhi and Wahida Banu 2015), ETHE (Singh et al. 2016) and DOTHE (Singh et al. 2016). The simulation results are arranged in separate tables and graphical plots for each parameter, each of which examines a different aspect of the output images. All experiments were performed on an Ubuntu Linux personal computer with a 3.1 GHz Intel Core i7 processor and 6 GB of memory. These methods were selected because they build on the traditional histogram-based approach. They mainly focus on sub-histogram, histogram clipping, texture histogram equalization, and dynamic histogram equalization schemes.

4.7.1 Qualitative evaluation

For the performance evaluation of contrast enhancement and brightness preservation, input images captured under weak illumination conditions such as backlighting, non-uniform illumination, low contrast, and darkness have been selected from different databases. The original images and their corresponding histograms are shown in Figs. 5 and 6, and the enhanced images obtained for each test image are presented in Figs. 7, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18 and 19, respectively. From the visual analysis of the output images, it can be noticed that the proposed method provides the best results for almost all sample images and that the presented technique is robust for low-contrast images. Generally, a particular state-of-the-art algorithm works best only for a special kind of image and may not be useful more widely, for example for both dark and low-contrast images.

The histogram plots of a particular image (Img1) for each algorithm are shown in Fig. 8. In the HE method, the enhanced image has a changed mean brightness and shows both over-enhancement and under-enhancement. The reason for such artifacts is that HE stretches the dynamic range and flattens the histogram, as illustrated in Fig. 8b. Similar artifacts in the enhanced images can be noticed in Figs. 7b, 9b, 10, 11, 12, 13, 14, 15, 16, 17, 18 and 19b. For the test image Img1, undesirable artifacts, over-enhancement, and under-enhancement can be easily observed in the background and hair regions of the HE-based enhanced image, as depicted in Fig. 7b.

The enhancement results of the BBHE and DSIHE approaches are visually similar because both methods follow the bi-histogram equalization technique, as shown in panels c and d of Figs. 7 and 9–19. The histogram plots of the enhanced Img1 image in Fig. 8c, d also show the similarity of the two methods. In fact, both methods improve contrast globally and degrade pictorial features due to over-enhancement. These algorithms can also reduce the information content and change the natural color appearance of the images. BBHE improves on the limitations of the HE method, and DSIHE in turn overcomes some demerits of BBHE.
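The shared structure of the two bi-histogram methods can be sketched as follows. This is a simplified illustration in the spirit of BBHE (split at the mean) and DSIHE (split at the median), not the reference implementations of either paper; the helper names and the toy data are hypothetical.

```python
def equalize_range(pixels, lo, hi):
    """Return a level -> level map that equalizes `pixels` onto [lo, hi]."""
    if not pixels:
        return {}
    hist = {}
    for p in pixels:
        hist[p] = hist.get(p, 0) + 1
    mapping, running = {}, 0
    for level in sorted(hist):
        running += hist[level]
        mapping[level] = lo + round((hi - lo) * running / len(pixels))
    return mapping


def bi_histogram_equalize(pixels, split, levels=256):
    """Equalize the sub-images below and above `split` independently."""
    lower = [p for p in pixels if p <= split]
    upper = [p for p in pixels if p > split]
    mapping = equalize_range(lower, 0, split)
    mapping.update(equalize_range(upper, split + 1, levels - 1))
    return [mapping[p] for p in pixels]


dark = [10, 10, 12, 12, 12, 15, 15, 20, 30, 40]
mean_split = int(sum(dark) / len(dark))          # BBHE-style split at the mean
median_split = sorted(dark)[len(dark) // 2 - 1]  # DSIHE-style split (lower median)
bbhe_like = bi_histogram_equalize(dark, mean_split)
dsihe_like = bi_histogram_equalize(dark, median_split)
print(bbhe_like)
print(dsihe_like)
```

Since pixels at or below the split can never be mapped above it, the output brightness stays anchored around the split point, which is why both methods look alike and differ only in where that anchor is placed.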

The RSIHE, QDHE, and ICEPMB methods improve the contrast impressively, but the images enhanced by RSIHE (using recursion level r = 2) suffer from over-brightness, undesirable artifacts, and unnatural color appearance, as shown in panel e of Figs. 7 and 9–19. The images enhanced using QDHE and ICEPMB are likewise over-bright. As a result, these algorithms generate unwanted artifacts, which are clearly visible in the enhanced images depicted in Figs. 7f, g, 12f, g, 15f, g, and 17f, g. ICEPMB produces slightly over-enhanced outcomes, which can be visually confirmed in Figs. 9e, 10g, 12g, 13g, 15g, and 17g. The histogram plots of all three methods do not follow the original histogram shape properly, as shown in Fig. 8e–g.

ESIHE and ACMHE produce images with well-enhanced contrast, but the detail information in these images is still not clear. With the ESIHE method, the enhanced images are affected by undesirable artifacts and are slightly over-enhanced because the method amplifies noise along with contrast, as shown in Figs. 7h, 9h, 13h, 17h and 18h. The ACMHE method (with amount of emphasis δ = 5) uses the dynamic histogram equalization technique for contrast enhancement; as a consequence, it changes the natural look of color images or generates artifacts in the form of unwanted color, which can be perceived in Figs. 7i, 11i, 12, 14, 15i and 17i. The histogram plots of ESIHE and ACMHE do not follow the pattern of the original histogram properly, as shown in Fig. 8h, i. Other well-known algorithms such as ETHE and DOTHE improve the image contrast, but they change the natural color appearance and brightness of the images. The detail information in their enhanced images is still not clear, and these techniques produce over-enhancement, undesirable artifacts, and noise, as shown in panels j and k of Figs. 7 and 9–19.

The proposed ATSHE method produces balanced contrast-enhanced images while preserving brightness, as illustrated in panel l of Figs. 7 and 9–19. The information content of the enhanced images is superior to that of all the compared HE-based techniques, and the histogram of the ATSHE-enhanced image is shown in Fig. 8l. The histogram pattern closely resembles the original image histogram with an extended range, and its bins are dense in the gray-level range from 60 to 160. This indicates that most of the information lies in the middle portion of the histogram, so the enhanced image is shifted toward neither the dark nor the bright region. The algorithm was tested on two types of images, daily-life and remote sensing images, to examine the robustness and natural color preservation capability of the proposed methodology.

In brief, the qualitative analysis of the processed Img1 and Img2 images shows that HE, BBHE, DSIHE, RSIHE, QDHE, ICEPMB, ESIHE, ACMHE, ETHE, and DOTHE produce undesirable artifacts and over-brightness in the face, hair, and background regions, as shown in Figs. 7 and 9. For Img3, the details in the output images are not very clear: the tree, roof, car, and homogeneous sky regions are not well enhanced by the HE, BBHE, DSIHE, RSIHE, QDHE, ETHE, and DOTHE algorithms, as illustrated in Fig. 10. In particular, the images enhanced using the ICEPMB, ESIHE, and ACMHE techniques show slight over-enhancement in the above-mentioned regions. The proposed ATSHE framework produces superior enhancement results for each of these test images, i.e. Img1, Img2, and Img3.

Most HE-based methods consistently introduce a mean shift in the enhanced output because gray-level values are rearranged during the normalization of intensity values. The proposed ATSHE scheme, on the other hand, preserves the mean brightness and the essential features of the input image. The colors of the ATSHE-enhanced images maintain the natural feel of the original and avoid over-enhancement problems. For effective color preservation, the contrast enhancement techniques are applied to the intensity component of the weakly illuminated images.
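One common way to restrict enhancement to the intensity component is sketched below. This section does not state which color space supplies the intensity, so the sketch assumes the simple average I = (R + G + B)/3 and rescales all three channels by the same gain; the function names and the stand-in `stretch` mapping are hypothetical and do not represent the ATSHE mapping itself.

```python
def enhance_color(rgb_pixels, enhance_intensity):
    """Apply a gray-level mapping to the intensity of each RGB pixel only."""
    out = []
    for r, g, b in rgb_pixels:
        intensity = (r + g + b) / 3.0
        if intensity == 0:
            out.append((0, 0, 0))  # pure black has no color ratio to preserve
            continue
        gain = enhance_intensity(intensity) / intensity
        # Scaling all three channels by the same gain keeps their ratios,
        # and therefore the hue, unchanged (up to clipping at 255).
        out.append(tuple(min(255, round(c * gain)) for c in (r, g, b)))
    return out


def stretch(intensity):
    """Hypothetical stand-in for any intensity enhancement: a 1.5x stretch."""
    return min(255.0, 1.5 * intensity)


result = enhance_color([(40, 20, 10), (0, 0, 0)], stretch)
print(result)
```

Any gray-level enhancement, including a sub-histogram equalization, could be passed in place of `stretch`; the color ratios of each pixel are left untouched, which is the property that helps keep the natural color appearance.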

For Img4, all existing methods produce an over-enhanced image whose color appearance does not match the original in the wall and window regions, as depicted in Fig. 11. Figure 12 shows Img5 and the corresponding enhanced images, in which all HE-based algorithms generate a non-homogeneous sky region and over-brightness in the water and boat regions. The original Img6 and its processed images are presented in Fig. 13. For this image, the existing methods yield undesirable artifacts in the flower and window regions, which clearly indicates that traditional techniques fail to preserve the mean brightness and basic image features with natural colors. The output images of ICEPMB, ACMHE, and ESIHE are quite good for a few standard test images, but the proposed ATSHE scheme is additionally able to maintain proper brightness after contrast stretching, which is essential for applications such as remote sensing and medical imaging.

Satellite images carry much sensitive information in their color content. In other words, the color composition of a satellite image gives more detailed information about the context. Contrast enhancement with brightness preservation of satellite images is therefore required in various applications. All compared HE-based methods change the color appearance and texture information of satellite images, and their output images do not preserve the mean brightness and entropy, as shown in Figs. 14–19. The proposed ATSHE framework gives the most natural color appearance and well-enhanced results compared with the other HE-based methods for each satellite and standard test image.

4.7.2 Quantitative evaluation

The main objective of the proposed method is to improve the contrast of a weakly illuminated image while preserving its mean brightness and naturalness. Contrast enhancement is examined by enhancement assessment parameters such as CPP and MEME. Mean brightness preservation is evaluated by the AMBE value, and feature preservation is measured by SSIM and PSNR. Tables 3 and 4 report the AMBE and SSIM image quality metrics, respectively, for the twelve images, where the gray-scale versions of the color input and color output images are used. The SSIM value of the proposed algorithm is close to 1 for most of the images. Tables 5 and 6 present the enhancement assessment factors CPP and MEME, respectively. PSNR and entropy measurements are reported in Tables 7 and 8, respectively.
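As a reminder of what the two simplest measures compute, the sketch below evaluates AMBE and PSNR on flat lists of gray levels using their standard textbook definitions (absolute difference of means, and 10 log10 of squared peak over mean squared error). It assumes 8-bit images with a peak value of 255; the function names and the sample values are hypothetical and are not taken from the reported tables.

```python
import math


def ambe(original, enhanced):
    """Absolute mean brightness error: |mean(original) - mean(enhanced)|."""
    return abs(sum(original) / len(original) - sum(enhanced) / len(enhanced))


def psnr(original, enhanced, peak=255):
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    mse = sum((a - b) ** 2 for a, b in zip(original, enhanced)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10 * math.log10(peak ** 2 / mse)


original = [50, 100, 150, 200]
enhanced = [40, 100, 160, 220]
print(ambe(original, enhanced))  # mean moves from 125 to 130
print(psnr(original, enhanced))
```

A lower AMBE means the mean brightness is better preserved, while a higher PSNR means the enhanced image deviates less from the input.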

Image numbers 1 to 12 denote the twelve test images Img1, Img2, Img3, Img4, Img5, Img6, Sat1, Sat2, Sat3, Sat4, Sat5, and Sat6, respectively. For quick analysis of the fidelity parameters, Figs. 20–25 show the comparison plots of AMBE, SSIM, CPP, MEME, PSNR, and entropy for each method, respectively. The qualitative evaluation above showed that the proposed ATSHE method yields the best-enhanced images. The proposed method produces a low AMBE value for almost all the images, which indicates better mean brightness preservation. In the graph of AMBE values in Fig. 20, the plot of the proposed method lies at the bottom, which represents the best values.

The SSIM value of the proposed algorithm is close to the maximum value of 1 for almost all enhanced images, which can be verified from the graphical plot of Table 4 in Fig. 21. For CPP, the proposed method does not reach the highest level because it produces a balanced level of contrast and brightness while controlling over-enhancement. The CPP values of the ETHE and DOTHE algorithms are the highest in almost all cases, but their resultant images are over-enhanced.

The CPP value of the images enhanced by the proposed method is comparatively low, as shown in Table 5 and Fig. 22, so the MEME parameter is additionally used to assess local enhancement. A MEME value that is too high indicates over-enhancement in the resultant image, whereas a very low value denotes that hidden detail is not considerably enhanced. An optimal MEME value must therefore be neither too high nor too low and should be close to that of the test image (Agaian et al. 2007; Wang and Chen 2017). By this criterion, the MEME value of the proposed method is the best among all methods, as shown in Table 6 and Fig. 23.

Table 7 reports the PSNR values of the enhanced images; PSNR is mostly used to verify that contrast is enhanced without excessive change to the image. The PSNR value of the proposed method is either the largest or the second largest, as demonstrated in Fig. 24, where a higher PSNR value signifies better enhancement. The entropy value of the proposed method for each enhanced image is close to the original value and greater than the values for all compared HE-based methods, as shown in Table 8 and Fig. 25. Overall, the proposed ATSHE technique outperforms the existing methods in image contrast enhancement and brightness preservation.
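The entropy comparison can be illustrated with the standard Shannon entropy of the gray-level histogram, sketched below. The function name and the toy pixel lists are hypothetical; the sketch only shows why a mapping that merges gray levels lowers the measured information content.

```python
import math


def entropy(pixels):
    """Shannon entropy (bits per pixel) of the gray-level distribution."""
    counts = {}
    for p in pixels:
        counts[p] = counts.get(p, 0) + 1
    n = len(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())


original = [0, 64, 128, 255]   # four equally likely levels
merged = [0, 0, 128, 255]      # an enhancement that merged two levels
print(entropy(original), entropy(merged))
```

For a gray-scale image, a point-wise gray-level mapping can merge histogram bins but cannot split them, so the entropy of its output cannot exceed that of the input. An entropy close to the original value therefore indicates that few gray levels were merged during enhancement.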

4.8 Comparisons with other methods

For a broader comparison, the proposed framework is also compared with the aforementioned techniques that are not based on HE (Demirel et al. 2010; He et al. 2011; Fu et al. 2015, 2016). Figures 26, 27 and 28 show the original input image and the enhanced images generated by the different approaches for Img7, Img8, and Img9, respectively. Visual analysis of the output images shows that the proposed ATSHE technique provides the best visual results for all three sample images. In Fig. 27 for Img8, DWT-SVD over-enhances the water region and the skin of the child. In all three cases, DCP darkens the input image, indicating a loss of contrast, which can be seen in panel c of Figs. 26–28. DWT-SVD over-enhances every input image, as can be seen in panel b of Figs. 26–28. MF and PIE enhance contrast, but with lower quality than the proposed ATSHE framework, as shown in Figs. 26–28. The proposed technique generates informative images that the human eye can readily interpret, whereas the other methods fail to make the image more informative and their outputs suffer from artifacts and over-enhancement. The images enhanced by the proposed method maintain the natural color appearance with minimal artifacts compared with the other techniques.

The numerical analysis of the Img7, Img8, and Img9 test images supports the superiority of the proposed method. Tables 9 and 10 present the enhancement assessment factors AMBE, SSIM, CPP, PSNR, MEME, and entropy, with the best value in each case indicated in bold. The quantitative analysis shows that the proposed method is superior to all compared methods.

5 Conclusion

In this paper, a new adaptive thresholding based sub-histogram equalization scheme has been introduced for image contrast enhancement and brightness preservation. The proposed ATSHE method exploits a clipping process to prevent over-brightness problems. The main advantage of the scheme is that the number of threshold values, or sub-histograms, of the image is adaptive and depends on the PSNR of the thresholded image. To demonstrate the effectiveness of the ATSHE algorithm, it has been compared with well-known HE-based approaches and other standard enhancement techniques. Experimental results show that the proposed framework produces the highest SSIM, entropy, and PSNR values and optimal AMBE, CPP, and MEME values among all the techniques for almost every image. ATSHE thus produces high-quality images with respect to both contrast enhancement and brightness preservation. The conventional methods introduce artifacts during the enhancement process, whereas the proposed method maintains the naturalness of the image without losing basic features. The proposed ATSHE technique is also very effective for feature-preserving satellite image enhancement.

As future work, the proposed algorithm can be used as an objective function with various evolutionary and swarm-based optimization techniques to obtain better enhancement results. Additionally, different combinations of the presented method can be used in multi-objective optimization problems to improve poor-quality images.

References

Abdullah-Al-Wadud, M. (2007). A dynamic histogram equalization for image contrast enhancement. IEEE Transactions on Consumer Electronics, 53, 593–600.

Agaian, S. S., Silver, B., & Panetta, K. A. (2007). Transform coefficient histogram-based image enhancement algorithms using contrast entropy. IEEE Transactions on Image Processing, 16(3), 741–758.

Arora, S., Acharya, J., Verma, A., & Panigrahi, P. K. (2008). Multilevel thresholding for image segmentation through a fast statistical recursive algorithm. Pattern Recognition Letters, 29(2), 119–125.

Bhandari, A. K., Kumar, A., Chaudhary, S., & Singh, G. K. (2017). A new beta differential evolution algorithm for edge preserved colored satellite image enhancement. Multidimensional Systems and Signal Processing, 28(2), 495–527.

Bhandari, A. K., Kumar, A., & Padhy, P. K. (2011). Enhancement of low contrast satellite images using discrete cosine transform and singular value decomposition. World Academy of Science, Engineering and Technology, 55, 35–41.

Bhandari, A. K., Kumar, A., Singh, G. K., & Soni, V. (2016). Dark satellite image enhancement using knee transfer function and gamma correction based on DWT-SVD. Multidimensional Systems and Signal Processing, 27(2), 453–476.

Bhandari, A. K., Maurya, S., & Meena, A. K. (2018). Social spider optimization based optimally weighted Otsu thresholding for image enhancement. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing. https://doi.org/10.1109/JSTARS.2018.2870157.

Bhandari, A. K., Singh, V. K., Kumar, A., & Singh, G. K. (2014a). Cuckoo search algorithm and wind driven optimization based study of satellite image segmentation for multilevel thresholding using Kapur’s entropy. Expert Systems with Applications, 41(7), 3538–3560.

Bhandari, A. K., Soni, V., Kumar, A., & Singh, G. K. (2014b). Cuckoo search algorithm based satellite image contrast and brightness enhancement using DWT-SVD. ISA Transactions, 53(4), 1286–1296.

Celik, T., & Tjahjadi, T. (2010). Unsupervised colour image segmentation using dual-tree complex wavelet transform. Computer Vision and Image Understanding, 114(7), 813–826.

Chen, S.-D., & Ramli, A. R. (2003a). Minimum mean brightness error bi-histogram equalization in contrast enhancement. IEEE Transactions on Consumer Electronics, 49(4), 1310–1319.

Chen, S.-D., & Ramli, A. R. (2003b). Contrast enhancement using recursive-mean-separate histogram equalization for scalable brightness preservation. IEEE Transactions on Consumer Electronics, 49(4), 1301–1309.

Cheng, H.-D., & Xu, H. (2000). A novel fuzzy logic approach to contrast enhancement. Pattern Recognition, 33(5), 809–819.

Demirel, H., Ozcinar, C., & Anbarjafari, G. (2010). Satellite image contrast enhancement using discrete wavelet transform and singular value decomposition. IEEE Geoscience and Remote Sensing Letters, 7(2), 333–337.

Fu, X., Liao, Y., Zeng, D., Huang, Y., Zhang, X.-P., & Ding, X. (2015). A probabilistic method for image enhancement with simultaneous illumination and reflectance estimation. IEEE Transactions on Image Processing, 24(12), 4965–4977.

Fu, X., Zeng, D., Huang, Y., Liao, Y., Ding, X., & Paisley, J. (2016). A fusion-based enhancing method for weakly illuminated images. Signal Processing, 129, 82–96.

Gonzalez, R. C., & Woods, R. E. (2011). Digital image processing (3rd ed.). Upper Saddle River: Pearson Prentice Hall.

Hasikin, K., & Isa, N. A. M. (2014). Adaptive fuzzy contrast factor enhancement technique for low contrast and nonuniform illumination images. Signal, Image and Video Processing, 8(8), 1591–1603.

He, K., Sun, J., & Tang, X. (2011). Single image haze removal using dark channel prior. IEEE Transactions on Pattern Analysis and Machine Intelligence, 33(12), 2341–2353.

Huang, S.-C., & Yeh, C.-H. (2013). Image contrast enhancement for preserving mean brightness without losing image features. Engineering Applications of Artificial Intelligence, 26(5), 1487–1492.

Kim, Y. T. (1997). Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Transactions on Consumer Electronics, 43, 1–8.

Kong, N. S. P., Ibrahim, H., Ooi, C. H., & Chieh, D. C. J. (2009). Enhancement of microscopic images using modified self-adaptive plateau histogram equalization. In International conference on computer technology and development, 2009 (pp. 308–310).

Kong, T. L., & Isa, N. A. M. (2017). Bi-histogram modification method for non-uniform illumination and low-contrast images. Multimedia Tools and Applications, 77, 8955–8978.

Lai, Y.-R., Tsai, P.-C., Yao, C.-Y., & Ruan, S.-J. (2017). Improved local histogram equalization with gradient-based weighting process for edge preservation. Multimedia Tools and Applications, 76, 1585–1613.

Li, C., & Bovik, A. C. (2010). Content-partitioned structural similarity index for image quality assessment. Signal Processing: Image Communication, 25(7), 517–526.

Liu, B., Jin, W., Chen, Y., Liu, C., & Li, L. (2011). Contrast enhancement using non-overlapped sub-blocks and local histogram projection. IEEE Transactions on Consumer Electronics, 57(2), 583–588.

Niu, Y., Wu, X., & Shi, G. (2016). Image enhancement by entropy maximization and quantization resolution upconversion. IEEE Transactions on Image Processing, 25, 4815–4828.

Ooi, C. H., & Isa, N. A. M. (2010). Quadrants dynamic histogram equalization for contrast enhancement. IEEE Transactions on Consumer Electronics, 56, 2552–2559.

Peli, E. (1990). Contrast in complex images. JOSA A, 7(10), 2032–2040.

Priyadharsini, R., Sharmila, T. S., & Rajendran, V. (2018). A wavelet transform based contrast enhancement method for underwater acoustic images. Multidimensional Systems and Signal Processing, 29(4), 1845–1859.

Sangee, N., Sangee, A., & Choi, H. K. (2010). Image contrast enhancement using bi-histogram equalization with neighbourhood metrics. IEEE Transactions on Consumer Electronics, 56(4), 2552–2559.

Santhi, K., & Wahida Banu, R. S. D. (2015). Adaptive contrast enhancement using modified histogram equalization. Optik, 126, 1809–1814.

Shakeri, M., Dezfoulian, M. H., Khotanlou, H., Barati, A. H., & Masoumi, Y. (2017). Image contrast enhancement using fuzzy clustering with adaptive cluster parameter and sub-histogram equalization. Digital Signal Processing, 62, 224–237.

Shanmugavadivu, P., & Balasubramanian, K. (2014). Thresholded and optimized histogram equalization for contrast enhancement of images. Computers & Electrical Engineering, 40, 757–768.

Sheet, D., Garud, H., Suveer, A., Mahadevappa, M., & Chatterjee, J. (2010). Brightness preserving dynamic fuzzy histogram equalization. IEEE Transactions on Consumer Electronics, 56, 2475–2480.

Sidike, P., Sagan, V., Qumsiyeh, M., Maimaitijiang, M., Essa, A., & Asari, V. (2018). Adaptive trigonometric transformation function with image contrast and color enhancement: Application to unmanned aerial system imagery. IEEE Geoscience and Remote Sensing Letters, 15(3), 404–408.

Sim, K. S., Tso, C. P., & Tan, Y. Y. (2007). Recursive sub-image histogram equalization applied to gray scale images. Pattern Recognition Letters, 28(10), 1209–1221.

Singh, K., & Kapoor, R. (2014a). Image enhancement using exposure based sub image histogram equalization. Pattern Recognition Letters, 36, 10–14.

Singh, K., & Kapoor, R. (2014b). Image enhancement via median-mean based sub-image-clipped histogram equalization. Optik, 125, 4646–4651.

Singh, K., Kapoor, R., & Sinha, S. K. (2015). Enhancement of low exposure images via recursive histogram equalization algorithms. Optik, 126, 2619–2625.

Singh, K., Vishwakarma, D. K., Walia, G. S., & Kapoor, R. (2016). Contrast enhancement via texture region based histogram equalization. Journal of Modern Optics, 63(15), 1444–1450.

Sundaram, M., Ramar, K., Arumugam, N., & Prabin, G. (2011). Histogram modified local contrast enhancement for mammogram images. Applied Soft Computing, 11(8), 5809–5816.

Tang, J. R., & Isa, N. A. M. (2017). Bi-histogram equalization using modified histogram bins. Applied Soft Computing, 55, 31–43.

Thum, C. (1984). Measurement of the entropy of an image with application to image focusing. Optica Acta: International Journal of Optics, 31(2), 203–211.

Wan, Y., Chen, Q., & Zhang, B. M. (1999). Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Transactions on Consumer Electronics, 45, 68–75.

Wang, X., & Chen, L. (2017). An effective histogram modification scheme for image contrast enhancement. Signal Processing: Image Communication, 58, 187–198.

Wong, C. Y., Jiang, G., Rahman, M. A., Liu, S., Lin, S. C.-F., Kwok, N., et al. (2016). Histogram equalization and optimal profile compression based approach for colour image enhancement. Journal of Visual Communication and Image Representation, 38, 802–813.

Xiao, Y., Cao, Z., & Yuan, J. (2014). Entropic image thresholding based on GLGM histogram. Pattern Recognition Letters, 40(15), 47–55.

Acknowledgements

The authors wish to thank the editors and anonymous referees for their constructive criticism and valuable suggestions.

Cite this article

Kandhway, P., Bhandari, A.K. An optimal adaptive thresholding based sub-histogram equalization for brightness preserving image contrast enhancement. Multidim Syst Sign Process 30, 1859–1894 (2019). https://doi.org/10.1007/s11045-019-00633-y
