Abstract

Quality scalability is an important feature of interactive imaging, as it provides better perceptual quality at a specified target bit rate. In JPEG 2000, it is achieved using quality layers obtained by Rate-Distortion (R-D) optimization techniques in Tier-II coding. Two important concerns here are: (i) the inefficiency of conventional Post-Compression Rate-Distortion (PCRD) optimization algorithms, and (ii) the lack of quality scalability for strings with few quality layers or a single quality layer. This paper takes these concerns into account and proposes a Blind Quality Scalable (BlinQS) algorithm that provides scalability with low computational complexity. The novelty of the method is that it eliminates Tier-II coding and adds a blind string selection (i.e., transcoding) algorithm based on a normal distribution function for efficient rate control. The results suggest that the proposed method outperforms JPEG-2000 with a single quality layer and comes close to JPEG-2000 with quality layers, without using PCRD optimization algorithms.


1 Introduction

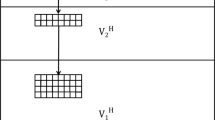

Scalability is one of the main features of any interactive device. It may refer to adaptation in size, shape, quality, rate, etc. In the field of image compression, scalability refers to adaptation in resolution, rate, quality and component. Among these, rate scalability and quality scalability must achieve a good trade-off to maintain the image quality at a specified target rate. This trade-off has been addressed in the JPEG-2000 image compression standard using the concept of quality layers [38, 40]. These layers are generated iteratively using Post-Compression Rate-Distortion (PCRD) optimization algorithms over all the individual code-blocks. To implement the R-D optimization algorithms, JPEG-2000 uses a Tier-II coding module, as shown in Fig. 1 [38]. This module takes the block summary of each code-block as input and arranges the code-block contributions in increasing order of quality for the specified target rates. Two important concerns with this coding are: (i) the inefficiency of conventional PCRD optimization algorithms, which are iterative in nature, and (ii) the lack of quality scalability for strings with few quality layers or a single quality layer. Research communities around the globe have been working to address these key issues in scalable compression.

For interactive imaging in the entertainment and broadcast sectors, scalability has two targets: (i) low computational complexity for fast processing, and (ii) highly independent data rearrangement to achieve optimal quality at any required rate. JPEG-2000 has attempted to achieve this and has gained popularity in the field of interactive imaging, especially in cloud-based content distribution applications. Despite the praise for its flexibility, JPEG-2000 has its own limitations pertaining to the computational complexity of the block coding (PCRD) algorithms. This has been the prime obstacle to the widespread adoption of JPEG-2000 in the entertainment and broadcast sectors [41]. To overcome these obstacles and make JPEG-2000 more flexible, researchers throughout the world are continuing their work with the following prime targets:

1. low computational complexity for fast processing, and

2. highly independent data rearrangement to achieve optimal quality at any required rate.

To achieve scalability at low computational complexity, choosing an effective optimization algorithm is necessary. This challenge has been taken up in [14, 17, 23, 48] by coding the data and obtaining the rate-distortion information simultaneously. However, these algorithms fail to decrease the computational load on the encoder. To further reduce the computational complexity, wavelet-data-based and step-size-based rate-distortion algorithms have been presented in [30] and [20], respectively. Later, other approaches, including ones based on the Lagrange multiplier, have been proposed in [1, 49]. In [47], the authors proposed three rate control methods (successive bit-plane rate allocation (SBRA), priority scanning rate allocation (PSRA) and priority scanning with optimal truncation (PSOT)) over PCRD algorithms for reducing computational complexity and memory usage. These rate control methods provide different trade-offs among quality, complexity, memory utilization and coding delay. In [19], a low-complexity R-D optimization method based on a reverse order for resolution levels and coding passes has been proposed. This method attains quality comparable to that of the conventional method while maintaining low complexity. These algorithms have proven to be efficient, but high throughput has not been achieved. In [41], D. Taubman et al. coined the term FBCOT (Fast Block Coding with Optimized Truncation), with the aim of widening the adoption of JPEG-2000 in the entertainment and broadcast sectors. This JPEG-2000-compatible algorithm has the sole target of increasing the throughput by reducing the computational complexity. It offers a speed-up of 10× or more compared to the previous algorithms, with a slight sacrifice in coding efficiency. To optimize JPEG-2000 for image transmission through wireless networks, Joint Source-Channel Coding (JSCC) has been proposed in [10], and congestion control for interactive applications over SDN networks has been proposed in [28]. A further detailed study of rate-distortion optimization for JPEG-2000 is found in [9, 29]. These R-D optimization algorithms have been significant in reducing the computational complexity, but the problem of scalability for any required rate remains unsolved.

To solve this problem of scalability for any bit rate, there is a need to develop algorithms that do not rely on rate-distortion algorithms at the encoder, but instead calculate the quality layers at the transcoder without knowledge of the code-block information. One such method has been proposed in [6], in which the characterisation of code-blocks does not depend on distortion measures related to the original image. The method proposed in [6] has been inspired by the algorithms presented in [5, 8], which also address achieving better reconstruction quality when the compressed string has a single quality layer or only a few quality layers. As this method is computationally expensive, another method called Block-Wise Layer Truncation (BWLT) has been proposed in [7]. The main insight behind BWLT is to dismantle and reassemble the to-be-fragmented layer by selecting the most relevant codestream segments of the code-blocks within that layer. All these methods are targeted at achieving optimum scalability for strings with a single quality layer or few quality layers. In [3] and [4], the authors focused on proposing new estimators to approximate the distortion produced by the successive coding of transform coefficients in bitplane image coders. Recently, CNN-based lossy image compression with multiple bit rates has been proposed in [13]. That paper focuses on learning multiple bit rates from a single CNN using a Tucker Decomposition Network (TDNet). The pace of research on scalable image compression has picked up rapidly in recent years. Some of the latest literature on scalable image compression using machine learning includes [15, 21, 24, 25, 37, 42, 46]. Along with these approaches, a new modification to JPEG-2000 has been introduced to achieve high throughput and to reduce latency. This new approach is termed High-throughput JPEG-2000 (HTJ2K) [18, 26, 27, 31, 45]. Taking all these contributions into account, the authors propose a new blind quality scalable algorithm in this paper.

Bit-plane coding strategies have been investigated and modified using several theoretical and practical mechanisms derived from rate-distortion theory. The research work presented in this paper is mainly focused on two goals:

- Reducing the encoding complexity and increasing the throughput.

- Achieving scalability for even a single-layered string without using PCRD optimization algorithms.

To achieve this, strong decision making is necessary at the transcoder to optimally choose the code-blocks and the truncation points.

The rest of the paper is organized as follows: Section 2 discusses R-D optimization and the quality scalable algorithm used in JPEG-2000, Section 3 introduces the proposed method for achieving Blind Quality Scalable image compression, Section 4 presents a comparative analysis of BlinQS with the JPEG-2000 standard, and finally, Section 5 draws the conclusion of the work, followed by the references.

2 Quality scaling in JPEG-2000

In JPEG-2000, quality scalability is achieved by arranging the obtained bit streams in the form of layers, as shown in Fig. 2 [11]. For a clear interpretation of a quality layer, the basic terms code-block and sub-band are illustrated in Fig. 3. Each quality layer contains the truncation point for each code-block, and thus carries an interpretation of the overall image quality. Experimentation with JPEG-2000 has found that the number of quality layers should be approximately twice the number of sub-bit-planes to achieve optimal quality scalability. A larger number of layers may produce reconstructions of the same quality at approximately the same rates, which only increases the overhead [11]. Hence, using a larger number of quality layers is not common practice in JPEG-2000.

2.1 Rate-distortion (R-D) optimization algorithm in JPEG-2000

Rate (R) and Distortion (D) in JPEG-2000 should satisfy the equations (1) and (2) [11],

where i represents the current code-block number and ni is the truncation point for the code-block Bi. Here, the main target is to find the values of ni that minimize D subject to the rate constraint R ≤ Rmax. This optimization problem can be solved by minimizing (3), which is obtained by using the well-known method of Lagrange multipliers,

where λ is the Lagrange multiplier, which should be varied until the target rate is achieved with minimum distortion. To obtain the values of λ, Algorithm-1 needs to be followed [11]. This algorithm is an iterative method to obtain the best possible value of λ, which achieves optimum distortion for a required target rate. To form progressive quality layers, the ni obtained for the calculated values of λ are taken as the truncation points and the bits are arranged accordingly.
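
The iterative search for λ can be illustrated with a minimal sketch. It assumes the standard PCRD form, i.e., a total rate \(R = \sum_{i} R_{i}^{n_{i}} \le R_{max}\), a total distortion \(D = \sum_{i} D_{i}^{n_{i}}\), and a Lagrangian cost \(\sum_{i} (D_{i}^{n_{i}} + \lambda R_{i}^{n_{i}})\); the exact form of (1) to (3) and of Algorithm-1 is not reproduced here. The function names, the bisection strategy and the toy data are illustrative assumptions, not the procedure from [11].

```python
from typing import List, Sequence, Tuple

# Assumed data model: each code-block is a list of candidate truncation points,
# given as (rate_in_bits, distortion) pairs with rate increasing along the list.
Block = Sequence[Tuple[float, float]]


def choose(blocks: Sequence[Block], lam: float) -> List[int]:
    """For each block, pick the truncation index minimising D + lam * R."""
    points = []
    for block in blocks:
        costs = [d + lam * r for r, d in block]
        points.append(costs.index(min(costs)))  # ties go to the lower rate
    return points


def total_rate(blocks: Sequence[Block], points: Sequence[int]) -> float:
    """Sum of the rates at the chosen truncation points."""
    return sum(block[n][0] for block, n in zip(blocks, points))


def pcrd_truncation_points(blocks: Sequence[Block], r_max: float,
                           iterations: int = 50) -> List[int]:
    """Bisect on lambda until the total rate fits within r_max."""
    lo, hi = 0.0, 1.0
    # Grow hi until the constraint holds (a larger lambda favours lower rates).
    # If no feasible lambda is found, the result may still exceed r_max.
    for _ in range(64):
        if total_rate(blocks, choose(blocks, hi)) <= r_max:
            break
        hi *= 2.0
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        if total_rate(blocks, choose(blocks, mid)) > r_max:
            lo = mid  # too many bits: penalise rate more strongly
        else:
            hi = mid  # feasible: try a smaller penalty
    return choose(blocks, hi)


toy_blocks = [
    [(0, 100), (10, 40), (20, 10)],
    [(0, 50), (10, 30), (20, 25)],
]
print(pcrd_truncation_points(toy_blocks, r_max=30))
```

Every bisection step re-evaluates all code-blocks, which is the iterative cost that BlinQS aims to avoid.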

3 BlinQS: Blind quality scalability algorithm

This section introduces the proposed BlinQS image compression algorithm, which achieves optimum quality at a target rate without using PCRD algorithms. As discussed in Section 2, the R-D optimization algorithms used for generating quality layers are iterative in nature and thus increase the computational complexity. BlinQS aims to bypass the R-D optimization algorithm and achieve near-optimal quality, thus reducing the computational load on the encoder. This is achieved by a blind selection of the code-blocks based on the Gaussian (normal) distribution, which has shown a good approximation in choosing the code-blocks optimally for the required target rate. This is because the code-blocks are arranged in descending order of information content and selected depending on the variance boundary in which each code-block falls. As this operation is computationally inexpensive, it does not create any significant load on the transcoder, where the BlinQS algorithm is placed. Thus, the aims of reducing the computational complexity and achieving memoryless scalability are met by the BlinQS algorithm. The complete algorithm is explained in three parts: (i) Encoding, (ii) Inclusion map, and (iii) Decoding. BlinQS is a part of the transcoder, which forms the quality layers blindly from the encoded string using the inclusion map. The main tasks to be performed by the BlinQS transcoder on the received string are:

1. Getting the value of δb for each sub-band.

2. Calculating the truncation point of the code-blocks.

3.1 BlinQS: Encoding

The encoding module consists of three sub-modules: (i) Image Transformation, (ii) Image Compression using Set Partitioning in Hierarchical Trees (SPIHT), and (iii) String arrangement along with the header. The encoding procedure is briefly explained in Fig. 4a.

3.1.1 Image transformation

As shown in Fig. 4a, the first step of the BlinQS encoding algorithm is to transform the image using the Discrete Wavelet Transform (DWT). The ‘Biorthogonal 4.4 (bior4.4)’ wavelet family has been used to decompose the image [12]. Before decomposition, an intensity level shift of -127 is applied to the image, which is then decomposed into sub-bands using DWT.

After decomposition, the sub-bands are divided into code-blocks as illustrated in Fig. 3, which can be considered the building blocks of the coding. They are encoded using the SPIHT algorithm. To improve the efficiency of SPIHT, DWT is applied to each code-block in the high-pass region using the same ‘bior4.4’ wavelet family before encoding. This has shown a substantial improvement in terms of compression ratio.

3.1.2 Image compression using SPIHT

SPIHT is an improvement over the Embedded Zerotree Wavelet (EZW) algorithm. Its main characteristics include efficiency, self-adaptiveness, precise rate control, simplicity and speed, and a fully embedded output [32,33,34]. SPIHT directly provides binary output; hence, there is no need for another algorithm to convert bits to symbols [22].

3.1.3 Quantization factor (δb)

The SPIHT algorithm is applied to each code-block (CB) after quantizing it by a predefined quantization parameter (δb) (obtained from Algorithm-2), to obtain the compressed string of the corresponding code-block. Here, the quantization parameter (δb) is a function of the encoding sub-band, δb = f(SB), i.e., the quantization factor depends on the sub-band in which the code-block is present, as shown in Fig. 5. Let δb denote the quantization parameter for sub-band SBb, where b denotes the DWT level of the sub-band. Each sub-band has a different δb value, which is calculated as per Algorithm-2. The higher the value of δb, the shorter the string length (Li) generated for the code-block Bi of the sub-band SBb and the higher the quantization error (Qe), i.e., \(\delta_{b} \propto \frac{1}{L_{i}} \propto Q_{e}\). Therefore, to maintain the reconstruction quality of the image, the LL components are not quantized before coding (i.e., δb = 1) and the rest of the image is quantized with δb > 1 up to a maximum of δmax. Hence, δb is adapted to the sub-band in which quantization is taking place, which is termed adaptive delta (δadap).
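
The trade-off between δb and the quantization error can be illustrated with a small sketch. It assumes plain uniform scalar quantization with truncation toward zero, which is an assumption made for illustration; the actual δb values come from Algorithm-2, which is not reproduced here, and the function names and sample coefficients are hypothetical.

```python
from typing import List


def quantize(coeffs: List[float], delta: float) -> List[int]:
    """Divide each coefficient by delta and truncate toward zero (assumed rule)."""
    return [int(c / delta) for c in coeffs]


def dequantize(indices: List[int], delta: float) -> List[float]:
    """Reconstruct coefficients by multiplying the indices by delta."""
    return [q * delta for q in indices]


def max_abs_error(coeffs: List[float], delta: float) -> float:
    """Largest reconstruction error after a quantize/dequantize round trip."""
    restored = dequantize(quantize(coeffs, delta), delta)
    return max(abs(c - r) for c, r in zip(coeffs, restored))


sample = [37.0, -12.0, 5.0, 0.0]  # hypothetical wavelet coefficients
for delta in (1, 4, 8):
    # delta = 1 mirrors the unquantized LL band; larger deltas lose more detail.
    print(delta, quantize(sample, delta), max_abs_error(sample, delta))
```

With δb = 1 the integer coefficients survive unchanged, while larger steps shrink the magnitudes that SPIHT has to code and increase the reconstruction error.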

Let ‘Li’ denote the length of the compressed string obtained from the code-block ‘Bi’, and ‘Lip’ denote the length of the compressed string obtained from bit plane ‘p’ of code-block ‘Bi’. For a given code-block ‘Bi’, the total compressed string length ‘Li’ is the summation of the individual ‘Lip’ from each bit plane, as given in equation (4), and the complete string length ‘L’ is given by (5). Before transmitting or storing this string, a header is formed for ease of access and flexibility in operation, details of which are given in Section 3.1.4.

\(L_{i} = \sum_{p=1}^{N_{p}} L_{ip}\)  (4)

\(L = \sum_{i=1}^{N_{c}} L_{i}\)  (5)

where Np represents the number of bit planes in the transformed image and Nc represents the number of code-blocks.

3.1.4 String arrangement along with header

The strings of lengths Lip obtained from the SPIHT encoder are arranged in the order of their sub-bands, i.e., LL2, HL2, LH2 and so on. While arranging the string, the string obtained from each bit plane ‘p’ of code-block ‘Bi’ is treated as a separate entity and is placed along with marker bytes, which store the length of the string, i.e., Lip. Therefore, the effective length of the stored string is ‘bits occupied by string + marker bytes’. Along with this information, basic information such as the size of the image, the level of DWT applied to the image, the code-block size, etc., is appended to the header, which forms the basic information header. Using the basic information header, the marker byte lengths are extracted first at the transcoder to form the quality layers before decoding, as discussed in Section 3.2.
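
The idea of prefixing each bit-plane string with a length marker, so that the transcoder can read the lengths without decoding the payload, can be sketched as follows. The 2-byte big-endian marker and the function names are hypothetical choices for illustration; the paper does not specify the marker size or byte order, and lengths are counted in bytes here for simplicity.

```python
import struct
from typing import List

MARKER = ">H"  # assumed 2-byte big-endian length marker (hypothetical)


def pack_segments(segments: List[bytes]) -> bytes:
    """Concatenate segments, each preceded by a marker holding its length."""
    out = bytearray()
    for seg in segments:
        out += struct.pack(MARKER, len(seg))
        out += seg
    return bytes(out)


def read_lengths(stream: bytes) -> List[int]:
    """Walk the stream marker by marker and collect lengths, skipping payloads."""
    lengths = []
    pos = 0
    marker_size = struct.calcsize(MARKER)
    while pos < len(stream):
        (length,) = struct.unpack_from(MARKER, stream, pos)
        lengths.append(length)
        pos += marker_size + length
    return lengths


# Three hypothetical bit-plane strings of one code-block.
planes = [b"\x01\x02\x03", b"", b"\xff" * 5]
packed = pack_segments(planes)
print(read_lengths(packed), len(packed))
```

The packed size equals the payload plus one marker per segment, which matches the ‘string + marker bytes’ accounting described above.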

3.2 BlinQS: Inclusion map

Blind Quality Scalability refers to “obtaining the inclusion map and truncation points for the optimum reconstruction of an image at a specified bit rate.” An overview of this algorithm is given in Fig. 6. The inclusion map (Im) is a matrix that holds the information about the code-blocks needed for decoding the image at the specified rate. The procedure for generating the inclusion map is briefly discussed in the following steps.

3.2.1 Calculation of string contribution: Step-1

Calculate the percentage contribution (PLi) of each compressed code-block (CB) using (6), where \(i = 1, 2, \dots, N_{c}\) and \(N_{c}\) is the number of code-blocks.

3.2.2 Calculation of Normal Distribution Coefficients: Step-2

Find the normal distribution coefficients (X) of the array ‘PL’ from the mean (μ) and variance (σ2) of the elements in the array, using (7), (8) and (9).
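
Steps 1 and 2 can be sketched as follows. The sketch assumes that the percentage contribution is \(PL_{i} = 100 \cdot L_{i} / L\), that the population variance is used, and that the coefficient \(X_{i}\) is the Gaussian probability density evaluated at \(PL_{i}\). These readings of (6) to (9) are assumptions, since the equations themselves are not reproduced here, and the function names are hypothetical.

```python
import math
from typing import List, Tuple


def percentage_contributions(lengths: List[int]) -> List[float]:
    """Share of each code-block string in the total string length, in percent."""
    total = sum(lengths)
    return [100.0 * length / total for length in lengths]


def normal_coefficients(pl: List[float]) -> Tuple[float, float, List[float]]:
    """Return mean, population variance and Gaussian density at each PL value.

    Assumes the PL values are not all equal (variance must be non-zero).
    """
    mu = sum(pl) / len(pl)
    var = sum((x - mu) ** 2 for x in pl) / len(pl)
    sigma = math.sqrt(var)
    coeffs = [
        math.exp(-((x - mu) ** 2) / (2.0 * var)) / (sigma * math.sqrt(2.0 * math.pi))
        for x in pl
    ]
    return mu, var, coeffs


lengths = [10, 20, 30, 40]  # hypothetical code-block string lengths
pl = percentage_contributions(lengths)
mu, var, x = normal_coefficients(pl)
print(pl, mu, var)
```

Both steps are single passes over the Nc string lengths, so no access to the image or to distortion values is needed.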

3.2.3 Obtaining the code-blocks: Step-3

Plot the values of ‘Xi’ against the percentage lengths (PLi) obtained from (6), i.e., Xi vs PLi, and divide the plot using a step-size of xsσ. This plot for the ‘Lena’ image is shown in Fig. 7. Here, the bit rates in the set Rstd = {0.0625, 0.125, 0.25, 0.5, 1.0, 2.0} are considered standard bit rates, for which xs = 1, where s ∈ [1,5]. For bit rates Rs < Rnew < Rs+1, a new term xnew is introduced, which can be found using Algorithm-3. xnew ∈ (0,1) acts as the local step-size generator between Rs and Rs+1 to precisely choose the code-blocks Bi for the new rate Rnew. The standard rates are denoted by \(R_{1}, R_{2}, \dots , R_{6}\) and the partition number for each rate is denoted by \(loc_{R_{s}}\). From the normal distribution plot in Fig. 7, the inclusion map for the standard rates can be obtained as indicated by the arrows, i.e., for 0.0625: code-blocks up to μ + 2σ (i.e., \(loc_{R_{1}} = 2\)), for 0.125: code-blocks up to μ + 1σ (i.e., \(loc_{R_{2}} = 3\)), and so on. xnew plays a major role in obtaining the inclusion map for Rnew. If xnew ∈ (0,1), the total number of partitions increases by a factor of ‘k’. Therefore, the value of \(loc_{R_{new}}\) can be obtained from (10a), where s and j are indicated in Algorithm-3.

3.2.4 Calculation of inclusion map: Step-4

After obtaining \(loc_{R_{new}}\) and xnew values, all the code-block strings present ahead of \(loc_{R_{new}}\) are included in the inclusion map (Im). The included code-blocks can be determined from (3.2.4) as indicated below.
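
The selection step for the standard rates can be sketched as a threshold on the percentage lengths. The sketch assumes that “code-blocks up to μ + kσ” means the blocks whose percentage length is at least μ + kσ, with k = 2 at 0.0625 bpp and k = 1 at 0.125 bpp as in the examples above. This reading, the function name and the sample data are assumptions; the refinement with xnew from Algorithm-3 is not covered.

```python
import math
from typing import List


def inclusion_map(pl: List[float], k_sigma: float) -> List[bool]:
    """Mark code-blocks whose percentage length is at least mu + k_sigma * sigma."""
    mu = sum(pl) / len(pl)
    sigma = math.sqrt(sum((x - mu) ** 2 for x in pl) / len(pl))
    threshold = mu + k_sigma * sigma
    return [x >= threshold for x in pl]


# Hypothetical percentage lengths: the first block dominates, as an LL block might.
pl = [40.0, 20.0, 10.0, 10.0, 5.0, 5.0, 5.0, 5.0]
for k in (2, 0, -1):
    # Lowering k moves the boundary down and admits more code-blocks.
    print(k, inclusion_map(pl, k))
```

Moving the boundary one σ at a time admits progressively less significant code-blocks, which is how higher target rates receive a larger inclusion map.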

3.2.5 Calculation of truncation points: Step-5

The truncation points are obtained by solving (12), which considers the code-blocks in the inclusion map (Im).

After obtaining the inclusion map, the truncation points ‘ni’ for each code-block ‘Bi’ in the inclusion map have to be identified for a specified target rate ‘Rmax’. The truncation points obtained here must follow the conditions specified in (2) and (12). On solving (12), \(n_{i} \approx R / \sum_{i} L_{i}\), where i ranges over the code-blocks in the inclusion map. Once the values of ni have been obtained for each code-block in the inclusion map, a new header with the obtained lengths and strings is formed and sent to the decoder for image decoding.
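
One way to read the approximation \(n_{i} \approx R / \sum_{i} L_{i}\) is as a common fraction of each included string that is kept. The sketch below follows that reading: it computes the fraction from a bit budget and applies it to every code-block in the inclusion map. This interpretation, the function name and the omission of marker-byte overhead are assumptions, since equation (12) is not reproduced here.

```python
from typing import List


def truncation_points(lengths: List[int], included: List[bool],
                      budget_bits: int) -> List[int]:
    """Keep the same fraction of every included string so the total fits the budget."""
    total = sum(length for length, inc in zip(lengths, included) if inc)
    if total == 0:
        return [0] * len(lengths)
    fraction = min(1.0, budget_bits / total)  # never keep more than the full string
    return [int(fraction * length) if inc else 0
            for length, inc in zip(lengths, included)]


lengths = [400, 200, 100, 100]         # hypothetical string lengths in bits
included = [True, True, False, False]  # hypothetical inclusion map
print(truncation_points(lengths, included, budget_bits=300))
```

Because the rule is closed-form, the truncation points follow from one pass over the included lengths, with no iteration over candidate slopes.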

3.3 BlinQS: Decoding

First, in the decoding module, the received bits are separated and the required information is extracted from the header formed in Section 3.2. The functionalities of the decoder are summarized in the flowchart given in Fig. 6. The value of δb for each sub-band is calculated as per Algorithm-2. After obtaining the adaptive delta, the truncation points ‘ni’ for each code-block ‘Bi’ have to be identified for a specified target rate ‘Rmax’. The truncation points obtained here must follow the conditions specified in (12).

On solving (12), \(n_{i} \approx R / \sum_{i} L_{i}\). Once the values of ni have been obtained for each code-block, inverse SPIHT (SPIHT decoding) is applied to the blocks in the inclusion map up to the obtained truncation points ‘ni’. The δb obtained from Algorithm-2 is multiplied with the corresponding block values, the Inverse Discrete Wavelet Transform (IDWT) is then applied using the ‘bior4.4’ wavelet family, and the result is arranged in image format. The blocks that are not available in the inclusion map are filled with zeros, and the image is transformed into the spatial domain by applying IDWT. Finally, the transformed image is level shifted by +127 to get the reconstructed image. The general block diagram of the proposed method is presented in Fig. 8.

4 Results and discussions

This section presents the performance comparison of BlinQS with JPEG-2000 standard. Results have been presented at standard and non-standard rates for three (3) standard images: Lena (512 × 512), Barbara (512 × 512) and Elaine (512 × 512). This section is divided into three subsections: (i) Inclusion map and truncation points, (ii) Quantitative and qualitative comparison of BlinQS, and (iii) Computational complexity and trade-off.

4.1 Inclusion map and the truncation points

The inclusion map and the truncation points of the corresponding code-blocks for the Lena image are presented in Fig. 9. In this figure, the white bar indicates the complete length of the string (Li) obtained for code-block Bi and the black bar indicates the truncation point for Bi, i.e., the amount of the string used for reconstruction at the target rate. The Peak Signal to Noise Ratio (PSNR) values obtained for the standard rates in Rstd are shown in Fig. 9. These values clearly show the effect of the inclusion map and the truncation points obtained using BlinQS. For the required rates 0.5 and 1.0, the inclusion map obtained is the same as that shown in Fig. 7, but the truncation points for these rates are different. Therefore, it is clear that the truncation points play a major role in providing good quality at those rates. To maintain the quality optimally, BlinQS does not pick the blocks in sequential order; instead, it picks the blocks in the order of their contribution to the quality, which can be derived using Xi. This can be clearly seen for the target rates 0.125 and 0.25 in Fig. 9, where some of the code-blocks are skipped by the algorithm to achieve optimum quality. For the non-standard rates Rnew, the local step size xnew, which is obtained from Algorithm-3, plays a vital role in obtaining the inclusion map Im and hence the optimum quality.

4.2 Tabular and graphical results

To evaluate the performance of BlinQS on the same platform, its results are compared in Table 1 against JPEG-2000 with and without quality layers at the standard rates (bpp). BlinQS clearly outperforms JPEG-2000 without quality layers and achieves near-optimal values compared to JPEG-2000 with quality layers. This shows that the estimation used by BlinQS to optimize the quality is very good. In JPEG-2000, the variation in PSNR between layered and non-layered strings is around 10 dB [6]; with BlinQS this gap is reduced to a large extent, and satisfactory results in terms of visual quality and PSNR are obtained.
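
For reference, the PSNR used in these comparisons can be computed as in the following sketch, which uses the standard definition for 8-bit images (peak value 255) over flattened pixel lists. The function name and the sample values are illustrative and are not taken from the experiments.

```python
import math
from typing import Sequence


def psnr(original: Sequence[float], reconstructed: Sequence[float],
         peak: float = 255.0) -> float:
    """Peak Signal to Noise Ratio in dB between two equally sized pixel sequences."""
    mse = sum((a - b) ** 2 for a, b in zip(original, reconstructed)) / len(original)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


# Hypothetical 4-pixel example with an error of one grey level per pixel.
print(round(psnr([100, 100, 100, 100], [101, 99, 101, 99]), 2))
```

Because PSNR is logarithmic in the mean squared error, a gap of about 10 dB corresponds to roughly a tenfold difference in mean squared error.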

To further investigate the proposed method, test images with various resolutions and textures have been selected for comparison, and some of the sample test images are presented in Table 2. The detailed PSNR values of around 100 images are presented in Appendix A. The PSNR and Structural Similarity (SSIM) index values obtained for these images using BlinQS and JPEG-2000 are presented in Tables 3 and 4, respectively. From the PSNR values in Table 3, it can be observed that even at lower rates BlinQS gives higher quality than JPEG-2000 without quality layers (J2K#), and the PSNR is ≈ 30 dB (but below the J2K value), which indicates good visual quality. From the SSIM values in Table 4, it can be seen that BlinQS gives almost the same results as J2K and very good results when compared to J2K#. The comparison graph of PSNR (dB) versus rate for Lena (512×512) and Barbara (512×512) is depicted in Fig. 10. From this graph, it can be seen that BlinQS closely follows JPEG-2000 in terms of PSNR. It is even closer at lower rates such as 0.0625 bpp and 0.125 bpp than at other rates.

4.3 Visual quality representation

For qualitative analysis, images reconstructed by BlinQS at various standard rates are presented in Figs. 11, 12 and 13. In each figure, sub-figure (a) shows the original image used for encoding and sub-figures (b) to (g) show the images reconstructed at the standard rates mentioned in the figure captions. The maximum possible rate that can be obtained from the compressed string is shown in sub-figure (g). The visual quality is flawless at rates Rstd > 0.5 and quite good even at lower rates. The PSNR values for the respective rates are given in Table 1 and are also mentioned in the figures along with the obtained rate. Hence, in both qualitative and quantitative analysis, BlinQS provides nearly the same results as JPEG-2000.

4.4 Computational complexity and trade-off

The basic idea of BlinQS is to reduce the computational load by removing the iterative R-D optimization algorithm and to achieve blind scalability. The computational complexity of the R-D optimization algorithm is given by \(\mathcal {O}(N_{crv} \times N_{pt})\), where Ncrv represents the number of code-blocks and Npt represents the average number of points in each code-block [2]. Hence, BlinQS reduces the computational load by the order of \(\mathcal {O}(N_{crv} \times N_{pt})\) at a sacrifice of ≈ 7% in PSNR compared to JPEG-2000.

To achieve optimal quality, a non-iterative and computationally less complex algorithm based on the Gaussian (normal) distribution has been added to the decoder for standard rates. For non-standard rates, iterations are used to achieve optimal quality. This adds a computational complexity of \(\mathcal {O}(N_{depth})\), where Ndepth represents the precision of the target rate, which depends on the threshold and the target rate selected by the user. It also adds a new degree of freedom: any required rate can be chosen at the decoder, rather than only the rates calculated at the encoder. Hence, a loss of ≈ 7% in PSNR buys decoder independence for optimal reconstruction at the target rate and a reduced computational load.

5 Conclusion

This paper addresses the necessity of blind quality scalability for image compression and its implementation. The main concerns of quality scalability, namely iterative coding and the lack of scalability for single-layered strings, are taken into consideration in developing BlinQS (Blind Quality Scalable) image compression. The normal distribution of percentage lengths is used to obtain the inclusion map for the target rate, and this map is used to generate the truncation points (ni) for the respective blocks. An algorithm to obtain the inclusion map for achieving optimum reconstruction quality without an iterative process at the decoder has been introduced. This reduces the computational complexity by the order of \(\mathcal {O}(N_{crv} \times N_{pt})\). The PSNR values obtained by the proposed algorithm have been presented in the comparison table, which shows that BlinQS obtains nearly the same results using a single string without quality layers. The results for standard images show that the visual quality of the reconstructed image is flawless at higher rates and quite good even at lower rates. On average, the PSNR values obtained for BlinQS are 7% lower than those of JPEG-2000, in exchange for a reduced computational load on the encoder and a single string that is scalable to any desired target rate.

Data Availability

The data used in this work were downloaded from repositories that have already been cited in the manuscript. Data are available on request from the authors.

References

Aminlou A, Fatemi O (2005) A novel efficient rate control algorithm for hardware implementation in jpeg2000. In: Proceedings. (ICASSP’05), IEEE International conference on acoustics, speech, and signal processing, 2005, vol 5, pp v/21–v/24

Aminlou A, Fatemi O, Homayouni M, Hashemi MR (2006) A non-iterative rd optimization algorithm for rate-constraint problems. In: Image processing, 2006 IEEE international conference on, pp 2493–2496, IEEE

Auli-Llinas F, Marcellin MW (2009) Distortion estimators for bitplane image coding. IEEE Trans Image Process 18(8):1772–1781

Auli-Llinas F, Marcellin MW (2011) Scanning order strategies for bitplane image coding. IEEE Trans Image Process 21(4):1920–1933

Auli-Llinas F, Serra-Sagrista J (2007) Low complexity JPEG2000 rate control through reverse subband scanning order and coding passes concatenation. IEEE Signal Process Lett 14(4):251–254

Auli-Llinas F, Serra-Sagrista J (2008) Jpeg2000 quality scalability without quality layers. IEEE Trans Circuits Syst Video Technol 18(7):923–936

Auli-Llinas F, Serra-Sagrista J, Bartrina-Rapesta J (2010) Enhanced JPEG2000 quality scalability through block-wise layer truncation. EURASIP J Adv Signal Process 2010:56

Auli-Llinas F, Serra-Sagrista J, Monteagudo-Pereira JL, Bartrina-R J (2006) Efficient rate control for JPEG2000 coder and decoder. In: Data Compression Conference (DCC’06), pp 282–291 IEEE

Aulí-llinàs F, Serra Sagristà J (2007) Model-based JPEG2000 rate control methods Universitat Autònoma de Barcelona

Bi C, Liang J (2017) Joint source-channel coding of jpeg 2000 image transmission over two-way multi-relay networks. IEEE Trans Image Process 26(7):3594–3608

Boliek M (2002) Jpeg 2000 image coding system: Core coding system, ISO/IEC

Bruni V, Cotronei M, Pitolli F (2020) A family of level-dependent biorthogonal wavelet filters for image compression. J Comput Appl Math 367:112467

Cai J, Cao Z, Zhang L (2020) Learning a single tucker decomposition network for lossy image compression with multiple bits-per-pixel rates. IEEE Trans Image Process 29:3612–3625

Chebil F, Kurceren R (2006) Pre-compression rate allocation for jpeg2000 encoders in power constrained devices. In: Visual Communications and image processing 2006, vol 6077, p 607720 International society for optics and photonics

Choi H, Bajić IV (2022) Scalable image coding for humans and machines. IEEE Trans Image Process 31:2739–2754

Chung R (2019) Pictorial color reference images, http://rycppr.cias.rit.edu/cms.html

Du W, Sun J, Ni Q (2004) Fast and efficient rate control approach for jpeg2000. IEEE Trans Consum Electron 50:1218–1221

Edwards T, Smith MD (2021) High throughput jpeg 2000 for broadcast and ip-based applications. SMPTE Motion Imaging J 130(4):22–35

Etesaminia A, Mazinan A (2017) An efficient rate control algorithm for jpeg2000 based on reverse order. J Cent South Univ 24(6):1396–1405

Gaubatz MD, Hemami SS (2007) Robust rate-control for wavelet-based image coding via conditional probability models. IEEE Trans Image Process 16:649–663

Hu Y, Yang S, Yang W, Duan L-Y, Liu J (2020) Towards coding for human and machine vision: A scalable image coding approach. In: 2020 IEEE International Conference on Multimedia and Expo (ICME), pp 1–6 IEEE

Kanchi (2011) Spiht (set partitioning in hierarchical trees), https://in.mathworks.com/matlabcentral/fileexchange/4808-spiht?s_tid=prof_contriblnk. Accessed: 06 March 2020

Kim T, Kim HM, Tsai P-S, Acharya T (2005) Memory efficient progressive rate-distortion algorithm for jpeg 2000. IEEE Trans Circuits Syst Video Technol 15:181–187

Kim SH, Park JH, Ko JH (2021) Target-dependent scalable image compression using a reconfigurable recurrent neural network. IEEE Access 9:119418–119429

Mei Y, Li L, Li Z, Li F (2021) Learning-based scalable image compression with latent-feature reuse and prediction. IEEE Transactions on Multimedia

Naman AT, Taubman D (2019) Decoding high-throughput jpeg2000 (htj2k) on a gpu. In: 2019 IEEE International Conference on Image Processing (ICIP), pp 1084–1088, IEEE

Naman AT, Taubman D (2020) Encoding high-throughput jpeg2000 (htj2k) images on a gpu. In: 2020 IEEE International Conference on Image Processing (ICIP), pp 1171–1175, IEEE

Naman AT, Wang Y, Gharakheili HH, Sivaraman V, Taubman D (2018) Responsive high throughput congestion control for interactive applications over sdn-enabled networks. Comput Netw 134:152–166

Ortega A, Ramchandran K (1998) Rate-distortion methods for image and video compression. IEEE Signal Process Mag 15(6):23–50

Parisot C, Antonini M, Barlaud M (2002) High performance coding using a model-based bit allocation with ebcot. In: Signal Processing Conference, 2002 11th European, pp 1–4, IEEE

Rajput A, Li J, Akhtar F, Hussain Khand Z, Hung JC, Pei Y, Börner A (2022) A content awareness module for predictive lossless image compression to achieve high throughput data sharing over the network storage. Int J Distrib Sens Netw 18(3):15501329221083168

Said A, Pearlman WA (1996) A new, fast, and efficient image codec based on set partitioning in hierarchical trees. IEEE Trans Circuits Syst Video Technol 6:243–250

Shapiro JM (1993) Embedded image coding using zerotrees of wavelet coefficients. IEEE Trans Signal Process 41(12):3445–3462

Shapiro JM (1993) Smart compression using the embedded zerotree wavelet (ezw) algorithm. In: Signals, systems and computers 1993. 1993 Conference record of the 27th Asilomar Conference on, pp 486–490, IEEE

Sheikh H (2005) Live image quality assessment database release 2, http://live.ece.utexas.edu/research/quality

Sheikh HR, Sabir MF, Bovik AC (2006) A statistical evaluation of recent full reference image quality assessment algorithms. IEEE Trans Image Process 15(11):3440–3451

Su R, Cheng Z, Sun H, Katto J (2020) Scalable learned image compression with a recurrent neural networks-based hyperprior. In: 2020 IEEE International Conference on Image Processing (ICIP), pp 3369–3373, IEEE

Taubman D (2000) High performance scalable image compression with ebcot. IEEE Trans Image Process 9(7):1158–1170

Taubman D (2000) Kakadu software, http://www.kakadusoftware.com. Accessed 14 Jun 2000

Taubman DS, Marcellin MW (2002) Jpeg2000: Standard for interactive imaging. Proc IEEE 90(8):1336–1357

Taubman DS, Naman AT, Mathew R, Smith MD (2018) High throughput jpeg 2000 (htj2k): New algorithms and opportunities. SMPTE Motion Imaging J 127(9):1–7

Tu H, Li L, Zhou W, Li H (2021) Semantic scalable image compression with cross-layer priors. In: Proceedings of the 29th ACM International conference on multimedia, pp 4044–4052

University S (2015) Scien test images and videos, https://scien.stanford.edu/index.php/test-images-and-videos/

Wang Z, Bovik AC, Sheikh HR, Simoncelli EP (2004) Image quality assessment: from error visibility to structural similarity. IEEE Trans Image Process 13(4):600–612

Watanabe O, Taubman D (2019) A matlab implementation of the emerging htj2k standard. In: 2019 IEEE 8th Global Conference on Consumer Electronics (GCCE), pp 491–495, IEEE

Yan N, Gao C, Liu D, Li H, Li L, Wu F (2021) Sssic: Semantics-to-signal scalable image coding with learned structural representations. IEEE Trans Image Process 30:8939–8954

Yeung YM, Au OC (2005) Efficient rate control for jpeg2000 image coding. IEEE Trans Circuits Syst Video Technol 15(3):335–344

Yu W, Sun F, Fritts JE (2006) Efficient rate control for jpeg-2000. IEEE Trans Circuits Syst Video Technol 16:577–589

Zhang YZ, Xu C (2006) Analysis and effective parallel technique for rate-distortion optimization in jpeg2000. In: International conference on image processing, pp 2465–2468

openjpeg (2019) Official repository of the openjpeg project: openjpeg-v2.3.1, https://github.com/uclouvain/openjpeg. Accessed 15 Jun 2019

Funding

No funding has been received from external sources. The research was carried out purely as part of academic work.

Ethics declarations

• This research was carried out as part of academic work. No external funding was received to carry out this research.

• The research does not involve any human participants and/or animals.

Conflict of Interests

The authors declare that they have no conflict of interest.

Appendix

This appendix presents, in Table 5, the comparison results of the BlinQS algorithm against JPEG-2000 with and without quality layers. The images presented here comprise standard images and other images downloaded from standard datasets: the SCIEN test images and videos [43], the Laboratory for Image & Video Engineering, Texas [35, 36, 44], and the colour management database of Robert Chung, Roger K. Fawcett Distinguished Professor [16]. In Table 5, J2K# and J2K stand for JPEG-2000 without and with quality layers, respectively.

About this article

Cite this article

Cheggoju, N., Satpute, V. BlinQS: Blind quality scalable image compression algorithm without using PCRD optimization. Multimed Tools Appl 83, 5251–5275 (2024). https://doi.org/10.1007/s11042-023-15454-2

DOI: https://doi.org/10.1007/s11042-023-15454-2