Abstract

The histogram equalization technique used for image enhancement reduces the number of distinct pixel intensities, resulting in loss of detail and an artificial appearance. This paper proposes a two-dimensional histogram equalization technique based on edge detail to increase contrast while conserving information and maintaining the image’s natural appearance. First, the total variational (TV)/L1 decomposition method retrieves the detailed information present in the low contrast image. The decomposition problem uses an augmented Lagrangian approach to address constraints and an alternating direction technique to determine solutions iteratively. Following that, a two-dimensional histogram is constructed using the detail image created by the iterative method to determine the cumulative distribution function (CDF). Then the CDF is used as a transfer function to distribute the intensities over the whole dynamic range to yield the improved image. The algorithm’s effectiveness is tested on seven databases, including LIME, CSIQ, Dresden, and others, and validated using standard deviation (SD), contrast improvement index (CII), discrete entropy (DE), and the natural image quality evaluator (NIQE). Experimental results show that the proposed method provides better results than the other algorithms. Furthermore, it achieves higher uniformity than existing strategies for all seven databases, as determined by the Kullback-Leibler distance.


1 Introduction

The features noticed in a scene provide vital information for a variety of human tasks. This also holds for machines as the number of autonomous robots deployed for industrial purposes increases. Images are used in computerised intelligent systems in a variety of applications, including robotic welding, object detection [13], aerial surveillance, remote sensing of the environment, medical diagnosis [10] and data security.

Despite the advancements in recent visualization techniques, the acquired photos may fail to retain image information or may exhibit poor contrast due to a reduced number of intensities and unequal intensity dispersion [16]. Contrast enhancement (CE) techniques may help with these issues by modifying the pixel intensities. They enhance the visual content of the image by making it easier to differentiate the required features from their surroundings. Estimating the brightness, colour restoration, and retention of detailed information are essential aspects of the enhancement process.

Issues such as over enhancement, failure to sustain mean brightness, and information loss affect contrast-enhanced images [38]. Though numerous techniques have been proposed for contrast enhancement in the literature, developing an artifact-free approach in low-level image processing is difficult. Strategies for enhancing contrast are being developed in both the spatial and frequency domains [34, 37].

Due to lower processing costs, spatial domain methods are preferred over frequency-domain methods. The spatial domain enhancement techniques can be classified into histogram-based and retinex-based approaches [22]. Histogram-based methods are simple, easy to implement, and suitable for enhancing dark and low contrast images. These methods take the intensity distribution of the input image and redistribute it uniformly with the help of a mapping function. But the enhanced images are affected by over enhancement and information loss due to histogram peaks and intensity merging [21]. Retinex-based methods predominantly preserve structural details and colour consistency. These methods process either the illuminance or the reflectance component to enhance the images. But they suffer from graying out and loss of naturalness in the processed images [18].
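For context, the classic one-dimensional mapping that histogram-based methods build on can be sketched as follows. This is a minimal illustration of global histogram equalization, not the method proposed in this paper; the function name `equalize_histogram` and the 8-bit default are assumptions made for the example.

```python
import numpy as np

def equalize_histogram(gray, levels=256):
    """Classic global histogram equalization for an integer-valued image."""
    gray = np.asarray(gray)
    # Count how often each intensity occurs, then accumulate into a CDF.
    hist = np.bincount(gray.ravel(), minlength=levels)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]              # CDF at the darkest occupied level
    denom = max(gray.size - cdf_min, 1)    # guard against a constant image
    # Map each level so that the occupied levels spread over [0, levels - 1].
    mapping = np.round((cdf - cdf_min) / denom * (levels - 1))
    mapping = np.clip(mapping, 0, levels - 1).astype(np.uint8)
    return mapping[gray]
```

Because the mapping depends only on the intensity, all pixels with the same input level receive the same output level, which is where the merging of intensities and the sensitivity to histogram peaks come from.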

1.1 Motivation and objectives

Contrast enhancement is achieved by amplifying the difference between a pixel’s intensity and its neighbourhood. During the amplification process, the nature of the pixel has to be determined with respect to its neighbourhood. In turn, the neighbourhood information helps to decide the level of amplification. This motivates us to use the spatial information provided by the neighbouring pixels, together with the intensity occurrences, for the enhancement process. This approach is termed two-dimensional histogram equalization.

Two-dimensional histogram-based techniques produce enhanced images by distributing the intensities over the dynamic range. But these methods suffer from over enhancement due to the absence of controlling parameters. The proposed algorithm addresses the issue mentioned above by pursuing the following objectives.

- To ensure the retention of gray levels in the processed image, the information content must be preserved.
- By regulating the enhancement rate, the artificial appearance created by histogram peaks can be minimised.
- The use of the entire dynamic range of gray scale enables the separation of diverse objects within the processed image.

The remainder of the paper is structured as follows. In Section 2, a literature survey on spatial domain enhancement methods is presented. Section 3 describes the proposed methodology. An experimental analysis of the proposed technique in comparison with some of the existing contrast enhancement algorithms is summarized in Section 4. Finally, Section 5 concludes the paper.

2 Related work

In this section, some of the one-dimensional and two-dimensional histogram-based methods and retinex-based methods are discussed in detail. Global histogram equalization (GHE) methods consider the whole image for enhancement, which amplifies the noise in homogeneous regions. To address this issue, local histogram equalization (LHE) methods were proposed. Contrast limited adaptive histogram equalization (CLAHE) [46] is an LHE technique that equalizes pixel intensities by partitioning the complete image into small neighbourhoods. It increases the contrast in local regions, which helps to distinguish the objects from the background.

To overcome the drawbacks of the HE method, histogram division based equalization was proposed. Brightness preserving bi-histogram equalization (BBHE) [23] splits the histogram into two parts at the mean intensity and equalizes them individually. Later, median based separation was proposed in the equal area dualistic sub-image histogram equalization technique (DSIHE) [42]. BBHE and DSIHE fail to enhance images whose mean and median values lie in the lower side of the grayscale. To improve the performance of brightness preservation, recursive histogram segmentation methods (RMSHE) [3] were proposed. The number of recursions plays a vital role in these algorithms: a larger number of histogram divisions leads to only minor enhancement in the processed image.

The above-mentioned methods concentrate only on brightness preservation. Other factors, such as histogram spikes, result in unnatural processed images. To reduce the effect of histogram peaks, histogram clipping limits (CL) are suggested along with histogram segmentation (BHEPL) [31]. Adaptive clipping limits are suggested (AIEBHE) [36] to improve the efficiency of the histogram clipping process. Further improvement in bi-histogram equalization is achieved by segmenting the histogram using the exposure value (CEF) [2, 41]. By employing guided filtering in the transformation function, the structural details and the edges are sharpened in bi-histogram equalization methods (EEBHE) [29].

The above-stated bi-histogram equalization methods suffer from poor utilization of the dynamic grayscale and information loss due to the use of a clipping limit, so the processed images have less perceived contrast. Two-dimensional histogram equalization methods, on the other hand, use spatial information along with the occurrence of pixel intensities, which increases the contrast of the processed image significantly. The contrast of an image can be increased by magnifying the difference between pixels and their neighbours (LDR) [25]. But this over enhances the over-exposed regions. Based on the two-dimensional histogram, T. Celik suggested spatial entropy based enhancement (SECE) [5] and residual entropy based enhancement (RESE) [6]. SECE utilizes the spatial location along with the number of occurrences, which helps the pixels to occupy the entire dynamic range. However, it does not control the rate of improvement, which can lead to over enhancement. RESE uses non-linear mapping based on residual entropy, which may result in less improvement in contrast. To overcome the limitations of SECE and RESE, joint histogram equalization (JHE) [1] can be used. The joint histogram (JH) counts the pixel intensities and the spatial neighbourhood information that occur together. The JHE method suffers from loss of detail near the edges because the neighbourhood average is used to construct the two-dimensional histogram.

To maintain colour consistency and naturalness in the processed image, retinex-based methods were suggested for image enhancement. Most retinex-based methods remove the illumination part and keep the reflectance part to get an enhanced image. Later, enhancement was achieved by modifying the illumination component. Fu et al. suggested that the illumination component can be modified by combining the initially estimated illumination map with inputs derived from it (MF) [18]. Though it produces clear enhanced images, the absence of illumination structure in MF may reduce the realism of images with rich textures. To resolve this limitation, simultaneous estimation of the reflectance and illumination components (SRIE) [17] has been proposed, where the illumination component is modified according to the information obtained from the reflectance part of the image. For further improvement, Gamma transformation is employed in retinex-based methods. Low light image enhancement via illumination map estimation (LIME) [20] estimates the illumination component using the augmented Lagrangian method and applies a Gamma transformation to the estimated illumination, which results in an enhanced image. But it needs filtering as post processing to get a clear enhanced image [43]. Later, noise removal was also employed in retinex-based methods along with enhancement (STRU) [26, 45]. Table 1 summarizes the various existing contrast enhancement methods with their purpose and limitations.

From the above discussion, it is observed that the bi-histogram based techniques do not cover the whole dynamic range, which causes only minor enhancement of the resultant images. The two-dimensional histogram-based methods use the whole dynamic range; however, the spreading of intensities is uneven in the processed image, which results in a distorted look in the enhanced image. The retinex-based methods improve the overall brightness of the image, but they may fail to improve the visual quality of images that have a small number of intensities. The contributions of the proposed methodology are as follows:

- Total variation-based (TV) L1 minimization is used to decompose an image into smooth and detail images.
- The minimization problem is solved by using the augmented Lagrangian approach and alternate direction methods.
- Significant detail information is used along with pixel intensities for transformation which controls the level of enhancement in order to reduce the effect of over enhancement.
- The subjective evaluation of the proposed technique’s effectiveness over traditional state-of-the-art algorithms is validated by reference and non-reference quantitative parameters.

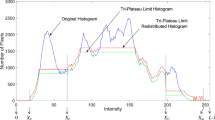

To overcome the limitations observed in the literature, the proposed method employs variational minimization to extract the significant edge information from the image. Decomposition of an image into smooth and edge components can be done effectively by global variational minimization models. The main objective of this decomposition is to extract the detail part, as it contains the meaningful pattern of the input image, while suppressing the undesirable intensity transitions caused by non-uniform illumination. Using the detail part of the input image, a two-dimensional histogram is computed for enhancement. The two-dimensional histogram aids in removing histogram spikes and pits by redistributing the intensities over the entire dynamic grayscale. The redistribution is based on the detail image obtained from decomposition [8, 11], which helps to preserve the information details. The mapping function helps to control the enhancement rate by limiting the number of pixel occurrences for a particular intensity. The mathematical description of the proposed technique is presented in the next section.

3 Methodology

The proposed methodology consists of two steps for enhancement. First, the given input image is decomposed into two parts namely smooth and detail image with the help of the total variational (TV)/L1 algorithm. Then the detail image is used for finding the joint occurrence with respect to image intensity. Using the joint occurrence, histogram equalization is performed on the low contrast image to get an enhanced image.

3.1 Total variational (TV)/L1 decomposition

Figure 1 shows the steps followed in the decomposition process. First, the RGB colour image is converted into HSV colour space. The luminance component of the image is considered for the extraction of the edge information. Then TV/L1 algorithm is applied to the luminance component of the image. The algorithm helps to identify the smooth part of the given image by continuously updating the parameters. Finally, the obtained smooth part is subtracted from the luminance component of the input image, resulting in the given image’s edge information.
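The colour handling described above can be sketched without a full HSV conversion, since the HSV value channel is simply the per-pixel maximum of R, G and B, and scaling a pixel's RGB triple by a common factor changes V while leaving H and S unchanged. The helper names `rgb_to_value` and `replace_value` are hypothetical, and treating integer input as 8-bit is an assumption of this sketch.

```python
import numpy as np

def _to_unit(rgb):
    """Convert an RGB array to float in [0, 1]; integer input is assumed 8-bit."""
    rgb = np.asarray(rgb)
    if np.issubdtype(rgb.dtype, np.integer):
        return rgb.astype(np.float64) / 255.0
    return rgb.astype(np.float64)

def rgb_to_value(rgb):
    """Return the HSV value channel V = max(R, G, B) in [0, 1]."""
    return _to_unit(rgb).max(axis=-1)

def replace_value(rgb, new_v):
    """Return RGB whose HSV value is new_v, keeping hue and saturation."""
    rgb = _to_unit(rgb)
    v = rgb.max(axis=-1)
    new_v = np.asarray(new_v, dtype=np.float64)
    # Scaling all three channels by new_v / v preserves H and S.
    scale = np.divide(new_v, v, out=np.zeros_like(v), where=v > 0)
    out = rgb * scale[..., None]
    # Black pixels have S = 0 by convention, so they become gray at new_v.
    black = v == 0
    out[black] = new_v[black][..., None]
    return np.clip(out, 0.0, 1.0)
```

In this sketch the decomposition and enhancement would operate on the output of `rgb_to_value`, and `replace_value` would put the processed luminance back into the colour image.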

Consider an image I that consists of a smooth part and an edge (detail) part, denoted as Is and Ie, respectively.

Here TV/L1 algorithm is used to find the smooth regions of the image by minimizing the following objective function which is defined as

where \({{\left \| Is \right \|}_{TV}}=\left \| \nabla Is \right \|\). ∇ represents the forward difference in both horizontal and vertical directions. λ is the regularization parameter. The TV/L1 minimization problem after substituting the TV term is as follows:

To convert the unconstrained problem into a constrained one, the above minimization is rewritten as

where G is an auxiliary variable. To solve the above minimization problem, it is again modified into

where Ir is a residual obtained from Is − I. The objective function is solved by using augmented Lagrangian function [4]. After adding the necessary regularization and Lagrange multipliers, the objective function becomes

where z is the Lagrangian multiplier, and μo is the regularization parameter associated with L1 normalization.

where y is the Lagrangian multiplier, and μr is the regularization parameter associated with TV normalization.
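The displayed equations of this subsection are not reproduced in the text above. A reconstruction consistent with the surrounding definitions is sketched below, written with explicit subscripts; it should be read as a plausible form, not as a verbatim copy of the original formulas. Two points are assumptions: λ is placed on the L1 data term (which agrees with Section 3.3.1, where a small λ leaves more edge information in the detail image), and the signs of the multiplier terms follow one common augmented Lagrangian convention.

\[
I = I_s + I_e
\]

\[
\min_{I_s}\ \lambda \left\| I_s - I \right\|_1 + \left\| I_s \right\|_{TV}
\quad\Longrightarrow\quad
\min_{I_s}\ \lambda \left\| I_s - I \right\|_1 + \left\| \nabla I_s \right\|
\]

\[
\min_{I_s,\,G}\ \lambda \left\| I_s - I \right\|_1 + \left\| G \right\|
\quad \text{s.t.} \quad G = \nabla I_s
\]

\[
\min_{I_s,\,G,\,I_r}\ \lambda \left\| I_r \right\|_1 + \left\| G \right\|
\quad \text{s.t.} \quad I_r = I_s - I,\ \ G = \nabla I_s
\]

\[
\mathcal{L}(I_s, G, I_r, y, z) =
\lambda \left\| I_r \right\|_1 - z^{T}\left( I_r - I_s + I \right) + \frac{\mu_o}{2} \left\| I_r - I_s + I \right\|_2^2
+ \left\| G \right\| - y^{T}\left( G - \nabla I_s \right) + \frac{\mu_r}{2} \left\| G - \nabla I_s \right\|_2^2
\]

The first three terms of the Lagrangian correspond to the L1 part (multiplier z, parameter μo) and the last three to the TV part (multiplier y, parameter μr), matching the two "where" clauses above.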

3.1.1 Is-subproblem

Collecting the terms involving Is, differentiating with respect to Is, and equating the result to zero gives

From the above equation, it is evident that updating Is requires a matrix inversion. To simplify the matrix inversion, the Fourier transform is used.

where \(F\) and \(F^{-1}\) indicate the Fourier and inverse Fourier transform, respectively.
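The update itself is not shown in the text above. As a hedged sketch, assume the augmented Lagrangian contains the terms \(- z^{T}(I_r - I_s + I) + \frac{\mu_o}{2}\| I_r - I_s + I \|_2^2 - y^{T}(G - \nabla I_s) + \frac{\mu_r}{2}\| G - \nabla I_s \|_2^2\) (the sign convention is an assumption). Setting the derivative with respect to \(I_s\) to zero then gives the normal equation

\[
\left( \mu_o + \mu_r \nabla^{T} \nabla \right) I_s = \mu_o \left( I + I_r \right) - z + \nabla^{T}\left( \mu_r G - y \right)
\]

and, because \(\nabla^{T}\nabla\) is diagonalized by the Fourier transform under periodic boundary conditions (a further assumption),

\[
I_s = F^{-1}\left[ \frac{F\left( \mu_o \left( I + I_r \right) - z + \nabla^{T}\left( \mu_r G - y \right) \right)}{\mu_o + \mu_r \left| F(\nabla) \right|^{2}} \right]
\]

where \(\left| F(\nabla) \right|^{2}\) denotes the sum of the squared magnitudes of the Fourier transforms of the horizontal and vertical difference operators, and the division is element-wise.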

3.1.2 G-subproblem

Collecting the terms involving G gives the update for G

The above expression can be solved by using the shrinkage formula [27]

3.1.3 Ir-subproblem

Collecting the terms involving Ir gives the update for Ir

By applying the shrinkage formula
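The shrinkage (soft-thresholding) operators used in the G and Ir updates can be sketched as follows. The function names are hypothetical, and the isotropic form for the gradient pair is an assumption about how the TV norm is taken in this work.

```python
import numpy as np

def shrink_l1(v, tau):
    """Element-wise soft threshold: argmin_x tau*|x| + 0.5*(x - v)**2."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)

def shrink_isotropic(vx, vy, tau):
    """Soft threshold applied to the magnitude of each (vx, vy) gradient pair."""
    mag = np.sqrt(vx ** 2 + vy ** 2)
    # Shorten each vector by tau, never below zero; avoid division by zero.
    scale = np.maximum(mag - tau, 0.0) / np.maximum(mag, 1e-12)
    return vx * scale, vy * scale
```

Under the sign convention in which the multiplier terms enter the augmented Lagrangian as \(-z^{T}(I_r - I_s + I)\) and \(-y^{T}(G - \nabla I_s)\) (an assumption), the updates would read `Ir = shrink_l1(Is - I + z / mu_o, lam / mu_o)` and `Gx, Gy = shrink_isotropic(dx + yx / mu_r, dy + yy / mu_r, 1.0 / mu_r)`, where `dx, dy` are the forward differences of Is.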

3.1.4 Lagrange multiplier

The iteration is stopped when the following condition is met or the maximum number of iterations is reached:

where δ represents the tolerance value.

The smooth image Is obtained from the TV/L1 algorithm is subtracted from the low contrast input image I to compute the detail information.
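The whole decomposition loop can be sketched as below. This is a minimal illustration under stated assumptions, not the authors' implementation: λ is taken to weight the L1 data term, the multiplier signs follow one common augmented Lagrangian convention, boundaries are periodic, the stopping rule is the relative change of Is, μr is multiplied by ρ whenever the constraint gap fails to shrink by the factor β, and the default `mu_o` is a placeholder because no value for μo is stated in the text. The function and variable names are hypothetical.

```python
import numpy as np

def _grad(u):
    """Forward differences (horizontal, vertical) with periodic boundaries."""
    return np.roll(u, -1, axis=1) - u, np.roll(u, -1, axis=0) - u

def _grad_adjoint(gx, gy):
    """Adjoint of _grad (the negative divergence)."""
    return (np.roll(gx, 1, axis=1) - gx) + (np.roll(gy, 1, axis=0) - gy)

def tv_l1_decompose(image, lam=10.0, mu_o=100.0, mu_r=2.0, rho=2.0,
                    beta=0.7, tol=1e-3, max_iter=100):
    """Split a 2-D image into a smooth part Is and a detail part Ie = I - Is."""
    I = np.asarray(image, dtype=np.float64)
    H, W = I.shape
    wy = 2.0 * np.pi * np.fft.fftfreq(H)[:, None]
    wx = 2.0 * np.pi * np.fft.fftfreq(W)[None, :]
    # Fourier eigenvalues of grad^T grad for periodic forward differences.
    lap = (2.0 - 2.0 * np.cos(wx)) + (2.0 - 2.0 * np.cos(wy))

    Is = I.copy()
    Ir = np.zeros_like(I)
    z = np.zeros_like(I)
    Gx, Gy = np.zeros_like(I), np.zeros_like(I)
    yx, yy = np.zeros_like(I), np.zeros_like(I)
    prev_gap = np.inf

    for _ in range(max_iter):
        # Is-subproblem: linear system solved in the Fourier domain.
        rhs = mu_o * (I + Ir) - z + _grad_adjoint(mu_r * Gx - yx, mu_r * Gy - yy)
        Is_new = np.real(np.fft.ifft2(np.fft.fft2(rhs) / (mu_o + mu_r * lap)))
        dx, dy = _grad(Is_new)

        # G-subproblem: isotropic shrinkage with threshold 1 / mu_r.
        vx, vy = dx + yx / mu_r, dy + yy / mu_r
        mag = np.sqrt(vx ** 2 + vy ** 2)
        scale = np.maximum(mag - 1.0 / mu_r, 0.0) / np.maximum(mag, 1e-12)
        Gx, Gy = vx * scale, vy * scale

        # Ir-subproblem: element-wise shrinkage with threshold lam / mu_o.
        v = Is_new - I + z / mu_o
        Ir = np.sign(v) * np.maximum(np.abs(v) - lam / mu_o, 0.0)

        # Lagrange multiplier updates.
        yx = yx - mu_r * (Gx - dx)
        yy = yy - mu_r * (Gy - dy)
        z = z - mu_o * (Ir - Is_new + I)

        # Adaptive mu_r: increase when the constraint gap does not shrink enough.
        gap = np.sqrt(np.sum((Gx - dx) ** 2 + (Gy - dy) ** 2))
        if gap >= beta * prev_gap:
            mu_r *= rho
        prev_gap = gap

        # Stop on small relative change of the smooth image.
        change = np.linalg.norm(Is_new - Is) / max(np.linalg.norm(Is), 1e-12)
        Is = Is_new
        if change <= tol:
            break

    return Is, I - Is
```

A call such as `Is, Ie = tv_l1_decompose(V)` on the luminance channel returns the smooth image and the detail image used in the next stage.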

The detail image Ie obtained from the above equation is subjected to two-dimensional histogram equalization (2DHE). The mathematical procedure for 2DHE is mentioned below.

3.2 Contrast enhancement

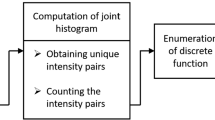

Figure 2 shows the various steps involved in two-dimensional histogram equalization. The obtained edge information and the luminance component of the input image are used for constructing the two-dimensional histogram by counting the joint occurrences of the intensity values. From the two-dimensional histogram, the cumulative distribution function is computed as a discrete function. Histogram equalization is performed based on this discrete function to produce an enhanced image. The enhanced luminance component of the image is combined with the unprocessed hue and saturation to produce an RGB colour image. The two-dimensional histogram is computed using the input image’s (I) unique intensity values and the detail image’s (Ie) individual pixel values.

3.2.1 Computation of two dimensional histogram

where U and V indicate, respectively, the number of independent intensities in the input (I) and detail (Ie) image.

The two-dimensional histogram’s size is determined by the number of distinct pixel values appearing in the low contrast input and detail images. This count is used for computing the CDF.
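The joint counting step can be sketched as follows. The histogram has U rows and V columns, one per distinct value of I and of Ie. Rounding the detail values to integers before counting is an assumption of this sketch, as is the function name `joint_histogram`.

```python
import numpy as np

def joint_histogram(intensity, detail):
    """Count joint occurrences of (input intensity, quantized detail value)."""
    i_flat = np.asarray(intensity).ravel()
    d_flat = np.rint(np.asarray(detail)).ravel().astype(np.int64)
    # Map every pixel to the index of its distinct value in each image.
    i_vals, i_idx = np.unique(i_flat, return_inverse=True)
    d_vals, d_idx = np.unique(d_flat, return_inverse=True)
    hist = np.zeros((i_vals.size, d_vals.size), dtype=np.int64)
    # Unbuffered accumulation, so repeated (i, j) pairs are all counted.
    np.add.at(hist, (i_idx, d_idx), 1)
    return hist, i_vals, d_vals
```

Indexing by distinct values keeps the table at U × V entries instead of allocating a row and column for every possible gray level.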

3.2.2 Computation of CDF

The CDF is calculated from the two-dimensional histogram

where \(F\left (i,j \right )\) is discrete in nature and can be utilized as a cumulative distribution function. This function is used for determination of equalized histogram.

3.2.3 Determination of equalized histogram

A transfer function is created using the discrete function as follows:

where \(\left \lfloor . \right \rfloor \) denotes rounding to the nearest integer. \({{ht}_{eq}}\left (i,j \right )\) denotes the equivalent intensity that is substituted whenever \(I\left (m,n \right )=i\) and \(Ie\left (m,n \right )=j\). \(F{{\left (i,j \right )}_{min}}\) represents the minimal value of the CDF and L represents the total number of pixel intensities. H and W represent the number of rows and columns of the image, respectively.

The transfer function is used to generate the equalized image. It is represented as follows:

The improved image is obtained by substituting the specified intensities with their corresponding equivalent intensities of Hteq. This includes all corresponding intensities based on expected, combined occurrences in the input and detail image.
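The CDF and transfer-function steps can be sketched end to end as below. Several details are assumptions of this sketch, since the displayed formulas are not reproduced in the text: F(i, j) is taken as the two-dimensional cumulative sum of the histogram, the normalization is the standard histogram equalization form \((L-1)\,(F - F_{min}) / (HW - F_{min})\), \(F_{min}\) is taken over occupied cells only, and detail values are rounded to integers. The function name `equalize_2d` is hypothetical.

```python
import numpy as np

def equalize_2d(intensity, detail, levels=256):
    """Two-dimensional histogram equalization driven by a detail image."""
    I = np.asarray(intensity)
    d_flat = np.rint(np.asarray(detail)).ravel().astype(np.int64)
    _, i_idx = np.unique(I.ravel(), return_inverse=True)
    _, d_idx = np.unique(d_flat, return_inverse=True)

    # Joint histogram over the distinct (intensity, detail) values.
    hist = np.zeros((i_idx.max() + 1, d_idx.max() + 1), dtype=np.int64)
    np.add.at(hist, (i_idx, d_idx), 1)

    # F(i, j): cumulative sum over both axes.
    cdf = hist.cumsum(axis=0).cumsum(axis=1)
    f_min = cdf[hist > 0].min()            # smallest CDF value at an occupied cell
    denom = max(I.size - f_min, 1)         # guard against a single occupied cell

    # Transfer function ht_eq(i, j), rounded to the nearest integer.
    ht_eq = np.rint((levels - 1) * (cdf - f_min) / denom)
    ht_eq = np.clip(ht_eq, 0, levels - 1).astype(np.uint8)

    # Substitute every pixel by the equivalent intensity of its (i, j) pair.
    return ht_eq[i_idx, d_idx].reshape(I.shape)
```

Unlike one-dimensional equalization, two pixels with the same input intensity can receive different output intensities when their detail values differ, which is how the detail image influences the redistribution.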

The theoretical time complexity of the proposed algorithm is estimated using the procedure described in Cao et al. [7]. The computation required to calculate the two-dimensional histogram for a \(b\)-bit grayscale image of size M × N is O(2MN). Calculating the two-dimensional CDF needs \(O(2^{b})\) operations, while the mapping function requires O(MN) operations to identify similar intensities. As a result, the total time complexity is \(O(3MN + 2^{b})\).

3.3 Parameter selection

3.3.1 Regularization parameter λ

The regularization parameter plays a vital role in the minimization of the objective function. Experiments are carried out with different values of λ to choose an optimum value. Figure 3 shows the smooth and edge information resulting from varying λ. From the figure, it is observed that for small values of λ more edge information is retained in the detail image, whereas only significant details are obtained as λ increases. The main aim of the proposed algorithm is to find the prominent edge details, which in turn help the histogram equalization by focusing on the differences between various regions of the image. With this in mind, λ is chosen as 10 in this work.

3.3.2 Regularization parameter μr

The regularization parameter associated with the total variation term helps to speed up the convergence of the minimization. Studies show that an adaptive parameter converges faster than a constant one. To make μr adaptive, the following condition is used. The initial value of μr is set to 2 based on literature studies.

The penalty term is reduced in accordance with the objective term to reach faster convergence. Constraint violation can be kept in check by a proper selection of ρ and β. Studies show that β in [0,1] and ρ greater than one help to prevent violation of the constraint. An experiment is performed with various values of ρ to select a proper value.
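The update condition is not reproduced in the text above. A form commonly used in augmented Lagrangian TV solvers, and consistent with the roles of ρ and β described here, is given below as an assumption and not as the authors' exact rule:

\[
\mu_r^{k+1} =
\begin{cases}
\rho\, \mu_r^{k}, & \text{if } \left\| G^{k+1} - \nabla I_s^{k+1} \right\|_2 \ge \beta \left\| G^{k} - \nabla I_s^{k} \right\|_2 \\
\mu_r^{k}, & \text{otherwise}
\end{cases}
\]

Here k is the iteration index. The parameter μr grows by the factor ρ > 1 only when the constraint residual \(G - \nabla I_s\) has not decreased by at least the factor β since the previous iteration.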

Figure 4 shows the rate of convergence for the different values of ρ by considering the number of iterations in x-axis and objective value in y-axis. From Fig. 4 it is evident that the smaller values of ρ result in faster convergence compared to the larger values. Empirically, the ρ is chosen as ’2’ in this work.

3.3.3 Effect of μr, ρ and β

An experiment is conducted to study the performance of the algorithm as a function of the tunable parameters μr, ρ, and β. In this experiment, the values of the tunable parameters are organized into seven cases, which are tabulated in Table 2.

The different cases are tested on the Kodak [32] database, which consists of 24 true colour images. The performance of the algorithm is evaluated using quantitative metrics: standard deviation (SD) [29] to measure the global contrast, discrete entropy (DE) [30, 35] to examine the preservation of information, the natural image quality evaluator (NIQE) [28] to measure the visual distortion, and the Kullback-Leibler (KL) distance [39] to assess how uniformly the intensities are distributed.

Figure 5 shows the effect of the tunable parameters on the contrast enhancement. Higher values of standard deviation and discrete entropy indicate that the information is preserved in the enhanced image. Similarly, lower values of NIQE and KL-distance are desirable for a distortion-free enhanced image. It is evident from Fig. 5 that Case 1, i.e. μr = 2, ρ = 2 and β = 0.7, gives the best values of all four metrics. So, in this work the tunable parameters are chosen according to Case 1.
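Three of the four metrics can be computed directly from the gray-level image, as sketched below. The exact definitions used in this work are given in Table 3 and may differ in detail; here the entropy is in bits and the KL-distance is taken between the image's intensity distribution and the uniform distribution, which equals \(\log_2 L\) minus the entropy. NIQE requires a trained model and is not sketched. The function names are hypothetical.

```python
import numpy as np

def _pdf(gray, levels=256):
    """Normalized intensity histogram of an integer-valued image."""
    hist = np.bincount(np.asarray(gray).ravel(), minlength=levels)
    return hist.astype(np.float64) / hist.sum()

def standard_deviation(gray):
    """Global contrast measure: standard deviation of the intensities."""
    return float(np.std(np.asarray(gray, dtype=np.float64)))

def discrete_entropy(gray, levels=256):
    """Shannon entropy of the intensity distribution, in bits."""
    p = _pdf(gray, levels)
    p = p[p > 0]                      # 0 * log(0) is treated as 0
    return float(-np.sum(p * np.log2(p)))

def kl_distance_to_uniform(gray, levels=256):
    """KL(p || uniform) = log2(levels) - entropy, in bits."""
    return float(np.log2(levels) - discrete_entropy(gray, levels))
```

With these definitions an image that uses all 256 levels equally often has an entropy of 8 bits and a KL-distance of 0, while a constant image has an entropy of 0 and a KL-distance of 8.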

4 Experimental analysis

Experiments are performed to validate the effectiveness of the proposed algorithm against other algorithms, namely bi-histogram equalization, two-dimensional histogram based methods and retinex-based algorithms. EEBHE (2019) and CEF (2018) methods in bi-histogram equalization, CLAHE (1994), LDR (2014) and JHE (2019) in two-dimensional histogram based methods, MF (2016), SRIE (2016), LIME (2017) and STRU (2018) in retinex-based methods are considered for comparison.

The proposed and the other state-of-the-art algorithms are tested on various datasets, namely the Kodak [32], CSIQ [24], Dresden [19], Flickr [33], LIME [20], MF [18], and DICM [25] datasets. The RGB images from the datasets are converted to HSV colour space, and their luminance (V) component is considered for processing. After the enhancement, the processed luminance component is combined with the unprocessed hue (H) and saturation (S) components for conversion back to the RGB colour space. This process is used for EEBHE, CEF, CLAHE, JHE and the proposed algorithm. For the LDR, MF, SRIE, LIME and STRU methods, however, the codes available on the authors’ websites are used, which accept RGB colour images directly. All the experiments are conducted in MATLAB 2021A running on a Windows 10 PC with 8GB RAM and a 2.4 GHz CPU.

4.1 Visual analysis

Figure 6 depicts a low contrast image from the CSIQ database and its enhanced versions produced by various algorithms and the proposed method. The first and third rows of Fig. 6 show the low contrast input image and the corresponding resultant images derived by representative techniques, whereas the second and fourth rows show zoomed portions of the input and enhanced images. Figure 6 (a) and (f) show the input image and its zoomed portion, respectively. Due to the poor dynamic range of the pixel intensities, the details are not clear. Figure 6 (b) and (g) depict the enhanced image and zoomed portion obtained by the EEBHE algorithm, respectively. EEBHE stretches the intensities between the minimum and maximum pixel values of the input image, which may be the reason that the enhanced image has little or no improvement in contrast. Figure 6 (c) and (h) display the enhanced image and zoomed portion resulting from the CLAHE algorithm, respectively. There is a minor improvement in the enhanced image as compared with the input image. CLAHE divides the whole image into small neighbourhoods and equalizes the intensities within each neighbourhood; the limited improvement could be because CLAHE fails to use the full dynamic gray scale.

Figure 6 (d) and (i) display the enhanced image and zoomed portion resulting from the LDR algorithm, respectively. Compared to EEBHE and CLAHE, LDR results in a better contrast enhanced image. But there is no significant improvement in the water and grass present in the background. Figure 6 (e), (k), (l), (m) show the enhanced images resulting from MF, SRIE, LIME and STRU, respectively. Their zoomed portions are shown in Fig. 6 (j), (o), (p) and (q). From the enhanced images and their zoomed portions, it is observed that the overall brightness of the image has been improved, but the differences between the various objects within the scene are small, which may cause a foggy appearance in the resulting images.

Figure 6 (n) and (r) display the enhanced image and its zoomed portion resulting from the proposed algorithm. From the figure, it is observed that the water, the grass in the background and the various objects are clearly visible. This could be because the proposed approach fully utilizes the dynamic gray scale. The two-dimensional histogram obtained from the detail and the input image helps to distribute the intensity levels over the entire dynamic range.

Figure 7 shows a low contrast image from the Flickr database and its enhanced versions produced by various algorithms, including the proposed method. As in Fig. 6, the first and third rows show the input and the enhanced images, whereas the second and fourth rows show the zoomed portions. Figure 7 (a) and (f) show the input image and its zoomed portion, respectively. Figure 7 (b) and (g) depict the enhanced image and zoomed portion obtained by EEBHE, which demonstrate the poor utilization of the dynamic gray scale. Figure 7 (c) and (h) show the enhanced image and zoomed portion obtained by CEF. It is inferred that the local regions are enhanced but the overall contrast improvement is less significant. This may be related to the choice of the exposure parameter used to divide the histogram for equalization.

Figure 7 (d) and (i) illustrate the enhanced image and zoomed portion resulting from the CLAHE algorithm, respectively. The enhanced picture is slightly better than the input image. The enhanced image and its zoomed portion obtained from LDR are shown in Fig. 7 (e) and (j), respectively. The perceived contrast is low in the resulting images.

Figure 7 (k), (l), (m), (n) display the enhanced images resulting from MF, SRIE, LIME and STRU, respectively. Their zoomed portions are shown in Fig. 7 (p), (q), (r) and (s). It is noticed that the retinex-based methods produce enhanced images with significant contrast improvement compared to bi-histogram equalization and the layered difference representation. Still, an improvement is required for the background, such as the building wall in the image.

Figure 7 (o) and (t) illustrate the enhanced image and its zoomed portion resulting from the proposed algorithm. From the figure, it is noticed that the proposed method enhances both the objects and their background by stretching the intensities to occupy the dynamic range of the gray scale.

A similar effect can be noticed in Fig. 8, where the sample image is taken from the Dresden database.

The sample image taken from the LIME database and its enhanced images are depicted in Fig. 9. The low contrast image and the zoomed view of a particular portion are shown in Fig. 9 (a) and (f), respectively. Fig. 9 (b), (c), (d) and (e) show the enhanced images obtained from EEBHE, LDR, LIME and the proposed method, respectively. Their zoomed versions are depicted in Fig. 9 (g), (h), (i) and (j). From the figure, it is observed that the improved images resulting from LIME and the suggested technique have better clarity in comparison with the other methods. It is clearly seen from the zoomed view that the shadow region present in the newspaper is completely removed by the proposed method compared with the other techniques.

Figure 10 shows sample images obtained from the MF and Flickr databases. In these images, sample regions are marked with red rectangles to illustrate the effect of the enhancement algorithms on these regions.

Figure 10 (a) shows the highlighted regions of the roof arch and glass. Figure 10 (b), (c), (d) and (e) show the enhanced images resulting from EEBHE, LDR, LIME and the proposed method. It is inferred from the figure that the roof arch and glass are better recognised with the proposed method than with the other methods discussed in the literature. A similar effect can be observed in the second row of Fig. 10, where the shadow regions under the tree are well enhanced by the proposed method in comparison with the other methods.

Figure 11 shows sample low contrast images and their enhanced outputs. The first and second rows display the sample and enhanced images from the Kodak database. Similarly, the third and fourth rows show the images obtained from the DICM database. From the figure, it is observed that the proposed method enhances the images by differentiating various regions of the image.

The proposed method is also compared with fast local Laplacian filtering (FLL), and the enhanced images are shown in Fig. 12.

Fig. 12 Qualitative analysis of the sample images taken from CSIQ and Kodak databases. (a) input from CSIQ, (b) enhanced image by FLL, (c) enhanced image by proposed method, (d) input from CSIQ, (e) enhanced image by FLL, (f) enhanced image by proposed method, (g) input from Kodak, (h) enhanced image by FLL, (i) enhanced image by proposed method

Figure 12 shows the sample input images from the Kodak and CSIQ databases and their enhanced images resulting from the fast local Laplacian filter (FLL) and the proposed method. Due to the lower dynamic range and the uneven distribution of the pixel intensities, the scene information is not conveyed correctly in the inputs. After enhancement, the scene information is more visible in the images processed by the proposed method than in those processed by the FLL technique. This could be due to the utilization of the entire dynamic grayscale.

The visual analysis indicates that the proposed algorithm produces enhanced images by utilizing the complete dynamic gray scale, which helps to distinguish the various objects present in the scene.

4.2 Quantitative analysis

Quantitative analysis validates the visual analysis. It can be used to quantify the performance of the enhancement methods. A performance metric estimates an image’s quality automatically. An ideal metric should agree with subjective quality judgements.

The suggested method’s major goal is to enhance the contrast of the image while maximizing the image’s information content and retaining the edge features. To quantify these goals, the contrast improvement index (CII) [44] is used to evaluate local contrast improvement, while standard deviation (SD) [29] is used to study global contrast improvement. Discrete entropy (DE) [35, 40] is a metric that assesses the amount of information present in an image. The natural image quality evaluator (NIQE) [28] is a no-reference metric used for measuring the naturalness of the image after processing. A uniform distribution of pixel intensities characterizes a high contrast image. To measure the deviation of the enhanced image’s pixel distribution from the uniform distribution, the Kullback-Leibler (KL) distance [39] is used in this paper. The approaches described in the literature, as well as the suggested method, are tested on the entire datasets to improve the accuracy of the evaluation. Table 3 gives the details of the performance metrics used in this work, including the mathematical representation, significance and range of each metric. Tables 4, 5, 6, 7 and 8 list the mean values of the performance measures obtained from the different databases; for each measure, the best values are indicated in boldface.

Table 4 lists the CII values obtained from the seven databases using the representative methods discussed in the literature along with the proposed method. CII measures the improvement in contrast within local neighbourhoods. The table shows that the proposed algorithm produces a better CII value for the databases considered in the experiment than the other state-of-the-art algorithms. The CLAHE, EEBHE, CEF, LDR and JHE methods produce CII values comparable with that of the proposed algorithm. CLAHE considers a local neighbourhood for histogram equalization, which may help to improve local regions. The EEBHE and CEF methods employ a guided filter and histogram addition, respectively, which may lead to local contrast improvement. The use of spatial information in LDR and JHE may help to enhance small regions in the image. MF, SRIE, LIME, and STRU may increase the overall brightness but yield only a minor improvement in local regions, as indicated by their low CII values for all seven databases. Owing to the edge information present in the detail image, the proposed algorithm can improve the information in local regions, which may explain its better CII value for all the databases compared to the representative methods.
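To make the notion of local contrast improvement concrete, the following is a minimal sketch of a CII computation. It assumes a commonly used definition, in which CII is the ratio of the mean local contrast of the processed image to that of the input, with local contrast taken as (max − min)/(max + min) over 3×3 windows; the definition used in this paper is the one given in Table 3 and may differ. The function names `local_contrast` and `cii`, the window size, and the synthetic test image are assumptions made for illustration only.

```python
import numpy as np

def local_contrast(img, win=3, eps=1e-12):
    """Mean of (max - min) / (max + min) over all sliding win x win windows."""
    img = np.asarray(img, dtype=np.float64)
    # Shape (H - win + 1, W - win + 1, win, win): one window per valid position.
    windows = np.lib.stride_tricks.sliding_window_view(img, (win, win))
    w_max = windows.max(axis=(-2, -1))
    w_min = windows.min(axis=(-2, -1))
    # eps avoids division by zero in windows that are entirely black.
    return float(np.mean((w_max - w_min) / (w_max + w_min + eps)))

def cii(original, enhanced, win=3):
    """Assumed CII: ratio of mean local contrast, enhanced over original.

    Raises ZeroDivisionError if the original image is perfectly flat.
    """
    return local_contrast(enhanced, win) / local_contrast(original, win)

# Synthetic low contrast image: intensities confined to [100, 140].
rng = np.random.default_rng(0)
low = rng.integers(100, 141, size=(32, 32))
# Linear stretch of [100, 140] onto [0, 255] as a stand-in for an enhancer.
stretched = (low - 100) / 40 * 255

print(cii(low, low))        # an unchanged image gives a ratio of 1
print(cii(low, stretched))  # a ratio above 1 indicates improved local contrast
```

Under this definition a value above 1 means that the processed image has, on average, more contrast within small neighbourhoods than the input.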

The standard deviation (SD) values are listed in Table 5. An image whose intensities occupy the entire grayscale has high contrast, and this spread can be measured with the statistical parameter SD. The table shows that the proposed method produces a better SD value for all the databases except the Flicker database. The retinex-based methods such as MF, SRIE, LIME and STRU follow the distribution of the input image, which may explain the small improvement in global contrast indicated by their smaller SD values in Table 5. In contrast, the histogram-based methods such as CLAHE, CEF, EEBHE, LDR and JHE produce enhanced images by utilizing the complete dynamic grayscale, resulting in higher SD values that indicate global contrast improvement. The mapping function of the proposed method, which considers the detail information when transforming the intensities, causes the enhanced image to occupy the entire dynamic grayscale. This helps the proposed algorithm achieve a high SD value compared to the other state-of-the-art algorithms presented in the literature.
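As a small illustration of why SD tracks global contrast, the sketch below compares an image confined to a narrow intensity band with the same image stretched over the full grayscale. It assumes the population standard deviation of the pixel intensities; the helper name `global_contrast_sd` and the synthetic images are hypothetical and not taken from the paper.

```python
import numpy as np

def global_contrast_sd(img):
    """Population standard deviation of the pixel intensities."""
    return float(np.std(np.asarray(img, dtype=np.float64)))

# Intensities confined to [100, 140], then stretched linearly to [0, 255].
rng = np.random.default_rng(0)
narrow = rng.integers(100, 141, size=(64, 64))
full = (narrow - 100) / 40 * 255

sd_narrow = global_contrast_sd(narrow)
sd_full = global_contrast_sd(full)
# A linear stretch scales SD by the same factor as the intensities (255 / 40).
print(sd_narrow, sd_full, sd_full / sd_narrow)
```

Because SD depends only on the spread of the intensities, a method that merely follows the input distribution leaves it nearly unchanged, whereas one that occupies the whole dynamic range raises it.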

Table 6 lists the mean discrete entropy (DE) value of the input images and of the processed images obtained from the techniques described in the literature and from the proposed algorithm. DE measures the amount of randomness in an image; in other words, it indicates the amount of visible information detail present in the image. A higher DE value signifies higher clarity in the processed image. The table shows that the proposed algorithm yields a high DE value compared to the other algorithms, which demonstrates that it enhances the image while maximizing the information. The CEF and EEBHE methods produce entropy values lower than the input entropy, indicating a loss of information during enhancement; this may be due to the histogram clipping employed in these methods. LDR and JHE produce lower entropy than the input for the Dresden, LIME and DICM databases owing to the merging of intensities. The table also shows that the retinex-based methods preserve the information during enhancement. The use of edge information obtained from the TV/L1 decomposition helps to preserve and maximize the information present in the image, which may explain the higher DE value of the proposed algorithm.
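The effect of intensity merging on DE can be illustrated with a short sketch. It assumes DE is the Shannon entropy (in bits) of the normalized gray-level histogram of an 8-bit image, which is the usual definition; the function name `discrete_entropy` and the synthetic images are chosen here for illustration.

```python
import numpy as np

def discrete_entropy(img, levels=256):
    """Shannon entropy (bits) of the gray-level histogram of an integer image."""
    hist = np.bincount(np.asarray(img).ravel(), minlength=levels).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # empty bins contribute nothing (0 * log 0 is taken as 0)
    return float(-np.sum(p * np.log2(p)))

# 32 x 32 image in which each of the 256 gray levels occurs exactly 4 times.
img = np.arange(256, dtype=np.uint8).repeat(4).reshape(32, 32)
# Merge every 16 neighbouring levels into one, leaving 16 occupied levels.
merged = (img // 16) * 16

print(discrete_entropy(img))     # 8 bits: all 256 levels equally likely
print(discrete_entropy(merged))  # 4 bits: only 16 equally likely levels remain
```

Merging gray levels can only lower the histogram entropy, which is consistent with the entropy loss reported for methods that clip or merge intensities.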

Table 7 lists the mean naturalness image quality evaluator (NIQE) value of the processed images obtained from the techniques described in the literature and from the proposed algorithm. NIQE is a no-reference metric used to quantify the amount of distortion present in a processed image, and it correlates highly with human judgements. A low NIQE value indicates a visually pleasing image with little distortion. Table 7 shows that the proposed algorithm has a low NIQE value for the seven databases used in the experiment in comparison with the other techniques, which implies that the suggested technique yields an improved image with less distortion.

The mean Kullback-Leibler (KL) distance obtained from the representative and the proposed methods for the seven databases is listed in Table 8. For a high contrast image, the histogram should be uniform and the pixel values should spread over the whole dynamic range. How evenly the pixel intensities of the processed image are dispersed can be measured by the KL distance: a low KL distance indicates better uniformity of the distribution. The table shows that the proposed algorithm yields a low KL value, meaning that its distribution of pixel intensities is more uniform than that of the other methods.
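A minimal sketch of the KL distance from the uniform distribution is given below. It assumes the divergence is taken from the image histogram p to the uniform distribution u over 256 gray levels, measured in bits; the direction of the divergence, the logarithm base, and the function name `kl_from_uniform` are assumptions, since the paper's exact formula is given in Table 3. With this choice the distance equals log2(256) minus the histogram entropy, so a uniform histogram gives zero.

```python
import numpy as np

def kl_from_uniform(img, levels=256):
    """KL(p || u) in bits, with p the gray-level histogram and u uniform."""
    hist = np.bincount(np.asarray(img).ravel(), minlength=levels).astype(np.float64)
    p = hist / hist.sum()
    nz = p > 0  # empty bins contribute nothing to the sum
    # p / u = p * levels because u assigns 1 / levels to every gray level.
    return float(np.sum(p[nz] * np.log2(p[nz] * levels)))

# Perfectly uniform histogram: every gray level occurs 4 times.
uniform_img = np.arange(256, dtype=np.uint8).repeat(4).reshape(32, 32)
# Low contrast image: only levels 96..159 are used, 16 times each.
narrow_img = (96 + np.arange(64, dtype=np.uint8)).repeat(16).reshape(32, 32)

print(kl_from_uniform(uniform_img))  # 0 bits: histogram is already uniform
print(kl_from_uniform(narrow_img))   # 2 bits: only a quarter of the range is used
```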

Table 9 shows the mean value of the various performance metrics resulting from the FLL and the proposed method. The contrast improvement index (CII) and standard deviation (SD) values are higher for the proposed method than for the FLL technique, indicating that both the local and the global contrast improvement are greater for the proposed method. The discrete entropy value indicates the amount of information content after processing, and the suggested method yields more information content than the FLL technique. Low values of the naturalness image quality evaluator (NIQE) and the Kullback-Leibler (KL) distance represent less visual distortion and more uniformity in the distribution, respectively. The table shows that the proposed method yields lower NIQE and KL distance values than the FLL method.

Figure 13 shows a comparative plot of the mean values of the various performance metrics obtained from the seven databases. The figure shows that, for all the databases, the proposed technique achieves the highest CII, SD and DE values and the lowest NIQE and KL values. These results suggest that the suggested method gives a greater improvement in contrast and preserves more information in the processed image than the existing methods.

4.3 Evaluation of proposed algorithm for the noisy image

During image acquisition, extrinsic disturbances such as noise, blurring, and lighting conditions have the greatest impact on the photographs [12, 14]. Various sorts of noise may be introduced into the image throughout the acquisition process; in general, noise can be represented by a Gaussian distribution [9, 15]. To test the robustness of the suggested technique in the presence of noise, Gaussian noise is added to the low contrast images. The variance of the added Gaussian noise is calculated from the probability distribution of the pixel intensities in the low contrast input image. The proposed method is then applied to the noisy images, and the performance metrics of the noisy and noise-free enhanced results are compared (Fig. 14).
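The noise injection step can be sketched as follows. The text does not state exactly how the noise variance is derived from the intensity distribution, so this sketch makes an explicit assumption: the noise variance is a fixed fraction (`ratio`, a hypothetical parameter) of the variance of the input image's intensities. The function name `add_gaussian_noise`, the default ratio, and the synthetic image are likewise illustrative and not taken from the paper.

```python
import numpy as np

def add_gaussian_noise(img, ratio=0.01, seed=0):
    """Add zero-mean Gaussian noise to an 8-bit image.

    Assumption: noise variance = ratio * variance of the image intensities.
    """
    img = np.asarray(img, dtype=np.float64)
    rng = np.random.default_rng(seed)
    sigma = np.sqrt(ratio * img.var())
    noisy = img + rng.normal(0.0, sigma, size=img.shape)
    # Round and clip back to the valid 8-bit range.
    return np.clip(np.rint(noisy), 0, 255).astype(np.uint8)

# Synthetic low contrast input with intensities confined to [100, 140].
low = np.random.default_rng(1).integers(100, 141, size=(64, 64))
noisy = add_gaussian_noise(low)

print(noisy.dtype, noisy.shape)
print(np.abs(noisy.astype(np.float64) - low).mean())  # mean absolute perturbation
```

The enhancement method would then be run on both `low` and `noisy`, and the metrics of the two results compared as described above.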

Table 10 shows that the proposed method can enhance the noisy images, with only a small difference between the performance metrics of the noisy and noise-free inputs. This small difference indicates that the TV/L1 algorithm extracts the edge information effectively even in the presence of noise, and that the proposed method is only weakly influenced by external disturbances.

5 Conclusion

This paper proposed a contrast enhancement technique based on TV/L1 decomposition and two-dimensional histogram equalization for low contrast images. The effectiveness of contrast enhancement depends on differentiating the various objects from the background of the scene. TV/L1 decomposition helps to identify the prominent edge details in low contrast images, which are then used to construct a two-dimensional histogram. Equalizing the two-dimensional histogram spreads the intensities uniformly over the entire dynamic grayscale to produce an enhanced image. The uniform distribution removes the histogram peaks and pits that would otherwise cause artifacts in the processed image. The experimental results show that the suggested technique yields an enhanced image with information preservation, as indicated by the highest values of SD and DE compared to the other methods for all the databases used in this study. It also produces visually pleasing images, as measured by lower NIQE and KL distance values than the other existing methods. This approach could be used as a pre-processing stage for machine vision applications such as edge recognition, object detection, and tracking to increase their performance.

Data Availability

The datasets used in this study are publicly available. Their sources are listed in the References section.

References

Agrawal S, Panda R, Mishro P, Abraham A (2019) A novel joint histogram equalization based image contrast enhancement. Journal of King Saud University - Computer and Information Sciences

Acharya UK, Kumar S (2021) Directed searching optimized mean-exposure based sub-image histogram equalization for grayscale image enhancement. Multimed Tools Appl 80(16):24005–24025

Chen S-D, Ramli AR (2003) Contrast enhancement using recursive mean-separate histogram equalization for scalable brightness preservation. IEEE Trans Consum Electron 49(4):1301–1309

Chan SH, Khoshabeh R, Gibson KB, Gill PE, Nguyen TQ (2011) An augmented lagrangian method for total variation video restoration. IEEE Trans Image Process 20(11):3097–3111

Celik T (2014) Spatial entropy-based global and local image contrast enhancement. IEEE Trans Image Process 23(12):5298–5308

Celik T, Li H-C (2016) Residual spatial entropy-based image contrast enhancement and gradient-based relative contrast measurement. J Mod Opt 63(16):1600–1617

Cao G, Tian H, Yu L, Huang X, Wang Y (2018) Acceleration of histogram-based contrast enhancement via selective downsampling. IET Image Process 12(3):447–452

Diwakar M (2020) Blind noise estimation-based CT image denoising in tetrolet domain. Int J Inf Comput Secur 12(2-3):234–252

Diwakar M, Kumar M (2015) CT image denoising based on complex wavelet transform using local adaptive thresholding and bilateral filtering. In: Proceedings of the third international symposium on women in computing and informatics, pp 297–302

Diwakar M, Kumar M (2018) A review on CT image noise and its denoising. Biomed Signal Process Control 42:73–88

Diwakar M, Kumar P (2019) Wavelet packet based CT image denoising using bilateral method and bayes shrinkage rule. In: Handbook of multimedia information security: Techniques and applications, Springer, pp 501–511

Diwakar M, Kumar P, Singh AK (2020) CT image denoising using nlm and its method noise thresholding. Multimed Tools Appl 79(21):14449–14464

Diwakar M, Patel PK, Gupta K, Chauhan C (2013) Object tracking using joint enhanced color-texture histogram. In: 2013 IEEE Second International Conference on Image Information Processing (ICIIP-2013). IEEE, pp 160–165

Diwakar M, Singh P (2020) CT image denoising using multivariate model and its method noise thresholding in non-subsampled shearlet domain. Biomed Signal Process Control 57:101754

Diwakar M, Verma A, Lamba S, Gupta H (2019) Inter- and intra-scale dependencies-based CT image denoising in curvelet domain. In: Soft computing: Theories and applications, Springer, pp 343–350

Feng X, Li J, Hua Z (2020) Low-light image enhancement algorithm based on an atmospheric physical model. Multimed Tools Appl 79(43):32973–32997

Fu X, Zeng D, Huang Y, Zhang XP, Ding X (2016) A weighted variational model for simultaneous reflectance and illumination estimation. pp 2782–2790

Fu X, Zeng D, Huang Y, Liao Y, Ding X, Paisley J (2016) A fusion-based enhancing method for weakly illuminated images. Signal Process 129:82–96

Gloe T, Böhme R (2010) The ‘Dresden Image Database’ for benchmarking digital image forensics. In: Proceedings of the 25th Symposium On Applied Computing (ACM SAC 2010), vol 2, pp 1585–1591

Guo X, Li Y, Ling H (2017) Lime: Low-light image enhancement via illumination map estimation. IEEE Trans Image Process 26(2):982–993

Kansal S, Purwar S, Tripathi RK (2018) Image contrast enhancement using unsharp masking and histogram equalization. Multimed Tools Appl 77(20):26919–26938

Kandhway P, Bhandari AK (2019) An optimal adaptive thresholding based sub-histogram equalization for brightness preserving image contrast enhancement. Multidim Syst Sign Process 30:1859–1894

Kim Y-T (1997) Contrast enhancement using brightness preserving bi-histogram equalization. IEEE Trans Consum Electron 43(1):1–8

Larson E, Chandler D (2010) Most apparent distortion: full-reference image quality assessment and the role of strategy. J Electr Imaging 19(1):1–21

Lee C, Lee C, Kim C (2013) Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans Image Process 22(12):5372–5384

Li M, Liu J, Yang W, Sun X, Guo Z (2018) Structure-revealing low-light image enhancement via robust retinex model. IEEE Trans Image Process 27(6):2828–2841

Li C (2010) An efficient algorithm for total variation regularization with applications to the single pixel camera and compressive sensing. Master’s Thesis Rice University

Mittal A, Soundararajan R, Bovik AC (2013) Making a completely blind image quality analyzer. IEEE Signal Process Lett 20(3):209–212

Mun J, Jang Y, Nam Y, Kim J (2019) Edge-enhancing bi-histogram equalisation using guided image filter. J Vis Commun Image Represent 58:688–700

Nath MK, Dandapat S (2012) Differential entropy in wavelet sub-band for assessment of glaucoma. Int J Imaging Syst Technol 22:161–165

Ooi CH, Kong NSP, Ibrahim H (2009) Bi-histogram equalization with a plateau limit for digital image enhancement. IEEE Trans Consum Electron 55(4):2072–2080

(2013). Online. Available: http://r0k.us/graphics/kodak/. Accessed 19 Dec 2019

(2021). Online. Available: https://sites.google.com/site/vonikakis/datasets. Accessed 10 April 2021

Sengupta D, Biswas A, Gupta P (2021) Non-linear weight adjustment in adaptive gamma correction for image contrast enhancement. Multimed Tools Appl 80(3):3835–3862

Shannon CE (1948) A mathematical theory of communication. Bell Syst Tech J 27(3):379–423

Tang JR, Isa NAM (2014) Adaptive image enhancement based on bi-histogram equalization with a clipping limit. Comput Electr Eng 40(8):86–103

Veluchamy M, Subramani B (2020) Fuzzy dissimilarity contextual intensity transformation with gamma correction for color image enhancement. Multimed Tools Appl 79(27):19945–19961

Vijayalakshmi D, Nath MK, Acharya OP (2020) A comprehensive survey on image contrast enhancement techniques in spatial domain. Sens Imaging 21:1–40

Vijayalakshmi D, Nath MK (2021) A novel contrast enhancement technique using gradient-based joint histogram equalization. Circuits, Systems, and Signal Processing, pp 1–39

Vijayalakshmi D, Nath MK (2021) Taxonomy of performance measures for contrast enhancement. Pattern Recognit Image Anal 30:691–701

Wang X, Chen L (2018) Contrast enhancement using feature-preserving bi-histogram equalization. SIViP 12(4):685–692

Wang Y, Chen Q, Zhang B (1999) Image enhancement based on equal area dualistic sub-image histogram equalization method. IEEE Trans Consum Electron 45(1):68–75

Wang P, Wang Z, Lv D, Zhang C, Wang Y (2021) Low illumination color image enhancement based on gabor filtering and retinex theory. Multimed Tools Appl 80(12):17705–17719

Zeng P, Dong H, Chi J, Xu X (2004) An approach for wavelet based image enhancement. In: 2004 IEEE International conference on robotics and biomimetics. IEEE, pp 574–577

Zhuang P, Ding X (2020) Underwater image enhancement using an edge-preserving filtering retinex algorithm. Multimed Tools Appl 79(25):17257–17277

Zuiderveld K (1994) Contrast limited adaptive histogram equalization. In: Graphics Gems, P. S. Heckbert, Ed. Academic Press, pp 474–485

Acknowledgment

This work was supported by the Department of ECE, National Institute of Technology Puducherry, India.

Ethics declarations

Conflict of Interests

The authors declare that there is no conflict of interest to report.

About this article

Cite this article

Vijayalakshmi, D., Nath, M.K. A strategic approach towards contrast enhancement by two-dimensional histogram equalization based on total variational decomposition. Multimed Tools Appl 82, 19247–19274 (2023). https://doi.org/10.1007/s11042-022-13932-7
