Abstract

Difference between similar feature points is presented in the fine-grained classification, which depends on discriminative in extremely localized regions. Hence, the accurate localization of discriminative regions is the major challenge found in the fine-grained feature extraction and classification. The patch-based framework has been described to address this issue. The accurate patch localization is enhanced by the triplet of patches with the logical constraints, it minimnized the feature set. Therefore, the object bounding boxes are the only need for the proposed approach. This paper presents an effective fine-grained feature extraction and classification schemes for solid materials. The Fuzzy logic-Scale Invariant Feature Transform (FL-SIFT) is introduced for feature extraction. FL-SIFT based key points are taken for the classification is performed by hybrid Multilayer Perceptron with Faster Region Convolutional Neural Networks (MLP-Faster RCNN). A key advantage of fine-grained based MLP-Faster RCNN approach is, on average better in identification with FL-SIFT key points. The model is retrained to play out the recognition of four sorts of metal articles with the whole procedure taking 4 h time to clarify and prepare the new model per strong piece. The simulation is implemented on Python platform and the results are evaluated by several evaluation measures like specificity, accuracy, precision, f-measure, and recall. The performance outcomes are compared with the existing approaches and existing works. It shows that the proposed model achieved maximum outcomes than existing schemes in terms of accuracy 98.3%, Precision 96%, specificity 97.87% and it takes very low execution time 1.46 s.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Fine-grained visualization scheme [5] has gained popularity in perceiving a regularly expanding number of classifications. Before profound learning Bag of Words, SIFT based calculations are utilized for question recognizable proof and characterization. The matching of common structures among images (i.e., features matching) are taken after extracting the features and their descriptors [1, 8]. Presently CNN based outline has immense fame because of its exactness and precision. Execution on one standard dataset has expanded from 23% to 55.7% in just three years. On the information side, there has an advance in growing the arrangement of fine-grained areas, which now incorporates the strong materials pieces, minerals, metal sheets etc. Compared to nonexclusive question acknowledgement, fine-grained acknowledgement [6, 20] benefits more from learning basic parts of the articles that can help adjust objects of a similar class and separate between neighboring classes. A major aspect of the regulated preparing process is current best in class that require part explanations [12]. This represents an issue for scaling up fine-grained acknowledgement to an expanding number of areas.

The objects at the subordinate level are distinguished by fine-grained object detection, for instances various species of cars, pets and birds. In a certain circumstance, an application of object detection is a fine-grained detection. Towards the objective of preparing fine-grained classifiers an imperative objective fact is mentioned without part explanations [19]. A system built by the image classification and object detection methods for alerting the authorities any dangerous object (a gun, a knife, etc.) is detected in the captured image. Protests in a fine-grained class share a high level of shape similitude, enabling them to be adjusted through division alone. In fine-grained acknowledgement, classifications share comparative shapes, which take into account arrangement to be done simply in light of division [18]. In the preparation procedure, we can take in the trademark parts without the comment exertion. In this work, we propose a strategy to create parts which can be recognized in novel pictures and realize which parts are valuable for acknowledgement. Our strategy for producing parts use late advance in co-division to fragment the preparation pictures. We at that point thickly adjust pictures which are comparable in posture, performing arrangement over all pictures as the structure of these more dependable neighborhood arrangements. Besides it can sum up to fine-grained spaces which don’t have part comments, setting up another great discovery plan on the honest to goodness materials dataset by a vast edge. For the purpose of experiment, the COCO dataset [28] has been utilized.

Deep learning algorithms prompt dynamic representations since more theoretical representations are frequently built-in light of less unique ones. A vital favorable position of more conceptual representations is that they can be invariant to the nearby changes in the info information. Adapting such invariant features is a progressing significant objective in design acknowledgement. Past being invariant such representations can unravel the variables of variety in information [17]. The genuine information utilized as a part based modeling technologies confused communications of many sources. For instance, shapes, question materials, light, and protest are the various wellsprings varieties used for creating a picture. The conceptual depictions given by profound learning calculations can separate the diverse varieties of wellsprings in the information. In various vision tasks (semantic segmentation, object detection and image classification), an impressive performance has been obtained by deep learning technologies. Particularly, advanced deep learning approaches produce promising outcomes for the fine-grained image classification, it gives the subordinate-level categories identification [10].

The algorithms of deep learning [9] are suspicious spaces of research into the computerized complex features extraction. CNN [29], RCNN [26] FAST-RCNN [21], FASTER-RCNN [4], Mask-RCNN [33] are various types of these learning algorithms. Laplacian of Gaussian and Canny algorithms are the famous and recent edge detection algorithms. Although Discrete Cosine Transform (DCT) [16] is widely employed to extract proper features for biometric recognition. Utilizing such algorithms more unique features (key feature points) are characterized as far as lower-level features. This system can be extremely valuable in identifying objects, for example, building materials, and mined metals from the surface and general shape [22]. Deep learning algorithms are in-depth multi-layered operational architectures of consecutive layers. A nonlinear transformation is applied by each layer on its input and given the output representation. By transmitting the data by multiple transformation layers, complicated data is learned hierarchically and this is the objective. The first layer contains the sensory data and each output layer is provided as input to its next layer [25].

The main contributions of this research are given below:

-

In this paper, an effecicient fine-grained feature extraction and classification of solid materials is proposed by the hybrid RCNN.

-

Fine-grained feature extraction and classification of sold material is the main task considered in this research. Enhance the accuracy is the foremost problem and is taken as the main objective.

-

To enhance the accuracy, best feature extraction and precise classification are most important. So FL-SIFT approach is introduced for feature extraction. The corner and edge key points are extracted by fuzzy logic and width and height length are extracted by the SIFT approach.

-

To improve the classification accuracy, hybrid the two classification approaches MPL-Faster RCNN. Compared with MLP, the Faster RCNN has maximum outcome performance.

-

Finally, several performance metrics are used to evaluate the performance outcomes of the proposed approach on COCO dataset.

The organization of this research paper is arranged as follows: section 2 gives the recent related research works, section 3 gives the proposed methodology, and it includes feature extraction and classification approach. Section 4 gives the performance results of the proposed approach and finally, the conclusion of research work is given in section 5.

2 Recent related works: A review

2.1 Recent existing research works related to fine-grained feature extraction and classification of solid materials (Table 1)

Wang et al. [23] have introduced a survey about the usage of CNN for hypothesizing object position and division in mobile heterogeneous SoCs from videos/images. Several CNN based object detection schemes were introduced in recent times for rising both speed and accuracy. Real-time object detection schemes and performances were conducted in this task. The frameworks design parameters were adjusted and employed the design space exploration procedure according to the analysis of the results. In mobile GPUs to enhance the energy-efficiency (mAP/Wh) of real-time object detection outcome observation in the investigation was adopted.

Kernel-based SIFT and spherical SVM classifier were introduced by Bindu and Manjunathachari for face recognition [2]. Besides, for classification and feature extraction a Multi Kernel Function (MKF) was introduced and the facial features were extracted by the SIFT approach, which was altered in the descriptor stage by MKF approach. Hence, it was termed as a new approach named KSIFT. Finally, classification was performed by Multi-kernel Spherical SVM classifier.

Fine-Grained Object Detection proposed in [7] by Goswami et al. over the Scientific Document Images using Region Embedding. A region embedding model was also presented in this research, and the convolutional maps of a neighbors proposal were utilized as context to give an embedding for every proposal. The semantic relationships among a surrounding context and its target region were captured by region embedding. For every proposal, an embedding was produced by the end-to-end model, then a multi-head attention model classified each proposal.

A unified CNN model represented M-bCNN as per the convolutional kernel matrix was proposed in [14] by Lin et al. for fine-grained image classification. A substantial link channels, neurons, and data streams gains at a modest rise of computational necessities were the merits of convolutional kernel matrix compared to plain networks. The positive effort over two-parameter growth inhibition, representational ability-enhancing and methodology architecture were described in this paper. For the strong similarities between sub-categories, the winter wheat leaf diseases images were operated.

The object detection improvement with Deep CNN was proposed by Zhang et al. [30] through the Bayesian optimization and structured prediction. The region proposals were refined in a Bayesian optimization by the fine-grained search approach and the localization was improved by CNN classified trained by SVM. The performance of the proposed model was checked and activated over PASCAL VOC 2007 and 2012 datasets. An important improvement in detection outcomes on the RCNN on both PASCAL VOC 2007 and 2012 benchmarks also demonstrated in this research.

An effective detection system was proposed in [32] by Zhang et al. according to the analysis of the terahertz image characteristics. Initially, numerous terahertz images are collected and labeled, then terahertz classification dataset was established and classified by transfer learning-based classification method. Next, the special distribution of the terahertz image was considered. Thus, the efficiency and effectiveness of the proposed method were demonstrated by experimental outcomes for terahertz image detection.

Leng et al. [11] have introduced a light-weight practical system which includes three stages like trait recognition, illumination normalization, and feces detection. The illumination variances were successfully suppressed by Illumination normalization. From training and labeling, the segmentation scheme was free. Using well-organized threshold-based segmentation approach, the feces object was detected by accurately to minimize the background disturbance. Finally, with a light-weight shallow CNN, the preprocessed images were categorized.

Yang et al. [27] have presented a StoolNet for color classification. The laboratorians’ heavy burden was minimized in this paper by deep learning methods and advanced digital image processing technologies. Aautomatically, the region of interest (ROI) was segmented. With a shallow CNN dubbed StoolNet, the segmented images were classified. The robustness of the algorithm was not tested in this paper.

3 New feature extraction and classification of solid materials

Accurate localization is the major problem in the fine-grained classification, in order to overcome this issue several approaches are used in this proposed approach. The best feature extraction is activated using FL-SIFT approach and the accurate classification is processed by hybrid MLP-Faster RCNN.

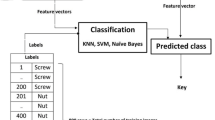

Figure 1 demonstrated the schematic block diagram of proposed methodology. The test image is processed by the FL with SIFT feature extractor, here, the edge points are extracted by the FL and the width and the length points are extracted by the SIFT extractor. Then, the based on the extracted features, features of images are matched. Afterthat, the features of test set is send to the classification, here the solid material is effectively classified by the hybrid MLP with Faster R-CNN. The background of fuzzy logic and the SIFT approaches are explained in the upcoming sections.

3.1 Fuzzy logic theory

The most effective ways to mimic human expertise in a naturalistic way is fuzzy logic [3]. It includes fuzzifier, defuzzifier, inference engine, and knowledge base as shown in Fig. 2. The role of the fuzzifier is to transform real value into a fuzzy system; a number of fuzzy rules (IF–THEN) are formed by rule base, which are presented in the knowlegede base; a decision making according to fuzzy rules is also known as inference engine framework; and finally, the output of the FIS system transformed into a crisp value in the defuzzifier. The crisp values contains a precise data of a real world, which is the input of fuzzy logic system. Furthermore, a database which defines fuzzy set’s membership function (MF) is presented in the knowledge base. How the rules are combined is handled by the inference system. The centroid scheme is used to the transformation in the defuzzification scheme. The robustness of the system is increased by the fuzzy logic.

The Mamdani approach is adopted to implement the FIS system. Thereafter, for the inputs and oucome of FIS the trapezoidal MFs are adopted to form fuzzy sets. The MFs described in the path, a variable matches a fuzzy set and are range from zero to one. The fuzzification system’s input and outcome are then changed into linguistic variables. Besides, the trapezoidal MFs are represented by.

\( {\mu}_F\left(x,a,b,i,j\right)=\left\{\begin{array}{cc}\frac{\left(x-a\right)}{\left(b-a\right)}& a\le x\le b\\ {}1& b<x<j\\ {}\frac{\left(x-i\right)}{\left(j-i\right)}& j\le x\le i\\ {}0& x\le b\boldsymbol{or}i\le x\end{array}\right. \) (1)

Where, a, b, i, j are the MF’s x coordinates and μFrepresented as the MF. For both the fuzzification and defuzzification, the trapezoidal MFs are employed.

Rule: ifI1iisX1andI2iisY1ithenOiisZielse(i = 1, 2, …n).

Where, X1, Y1iandZiare represented as the fuzzy sets corresponding to the MFs; I1iandI2iare represented as the two ith inputs and Oiis the output.

3.2 Background theory of SIFT

In the field of computer vision the SIFT [13] algorithm is widely utilized, which is extracted the local features from images. David Lowe [15] introduced the SIFT approach which was summarized and perfected. Stability, Distinctiveness, Multiplicity and Scalability are the several advantages for features extraction using SIFT. Because of the affine transformation, view angle changing, brightness changes, scale scaling and rotation, the SIFT features are remains stable. The SIFT features are suitable for template match and have abundant information. SIFT is easily interlinked by other eigenvectors using various forms. Viewpoint, scaling, template matching due to its strongness to rotation and 3D modeling, and face recognition are the various field used SIFT algorithm. Descriptor generation, orientation assignment, keypoints localization, and keypoints detection are the four steps in SIFT.

-

Keypoints Detection: The function of Difference-of-Gaussian (DoG) is utilized to determine the local extremes in keypoints detection step, which are orientation in scale space and invariant to scale.

-

Keypoints localization: So many keypoints are produced in scale-space extrema detection, some of which are more complex to know or sensitive to noise.

-

Orientation assignment: Every keypoint is allocated one or more directions to obtain the image rotation invariant and orientations are evaluated by gradient magnitudes of local image.

-

Descriptor generation: For every keypoint, descriptor vector is computed to confirm the invariance to scale and rotation.

3.2.1 Proposed methodology: Feature extraction by FL-SIFT

The width, highest length, edge point, and corner point are considered as feature points in this research. Here, the edge identification is obtained by the fuzzy logic approach and the length and width are obtained by the SIFT approach. Edge detection highlights high-frequency components in the image and is a challenging task. In a digital image, to determine the boundaries of the different object the fine-grained approach is used. Based on the gradients that are present in the image, the edges are searched. According to the value of pixels and intensity, gradients are presented. The SIFT approach extracts features from images of distinctive invariant.

3.2.2 FL based edge detection

In edge detection, one of the better methods is canny edge detection, however, the performance of canny edge detection is very poor due to without post-processing of detected edges and hence, from the large number of falsely accepted edges it will suffer. Therefore, the fuzzy rule-based approach is activated on edge detection.

Fuzzification

Here, one variable is denoted as the output variable and two variables have been denoted as the parameters of input and all the crisp values are changed into fuzzy values. The vertical and horizontal gradients continuing in the input image are represented by the two input variables such as × 2 and × 1 respectively. There are two membership functions are denoted for every output and input variables and the fuzziness in two output and input variables showed by the overlapping section of the membership functions. The triangular membership function is used in this fuzzy logic for output and input variables together.

\( \boldsymbol{triangle}\left(x;a;b;c\right)=\mathit{\max}\left(\mathit{\min}\left(\frac{x-a}{b-a},\frac{c-x}{c-b}\right),0\right) \) (2)

Fuzzy rule construction

It is the next step of fuzzification for the fuzzy system used for the decision-making abilities of the expert scheme. “IF….THEN”, this is the form of rules and is connected or divided using AND in between. There are four rules are generated for one output and two input parameters they are as follows:

-

If (Ix is 0) and (Iy is 0) then (Iout is FALSE)

-

If (Ix is 1) and (Iy is 1) then (Ioutis TRUE)

-

If (Ix is 1) and (Iy is 0) then (Iout is FALSE)

-

If (Ix is 0) and (Iy is 1) then (Iout is FALSE)

Fuzzy inference process

The Mamdani framework has been utilized to improve the proposed expert system. Figure 3 shows the Mamdani inference framework for the proposed approach. One output variable (Iout) and two input variables (IxandIy) are placed here and there are four rules are placed in the inference system.

Defuzzification

In a fuzzy system, it alters whole fuzzy values into crisp value, in this research the centroid approach is utilized for changing the fuzzy values into crisp value.

3.2.3 SIFT based width and length points detection

One of the globally utilized interest points features is also known as SIFT. For large-scale image retrieval, robotic mapping, object detection and tracking this approach is applied. In addition, the width and height length feature points are extracted using SIFT approach. Although, for the rotation and scale variations, the SIFT descriptor is highly robust. The normally used local visual descriptors are also known as SIFT. The image is termed by SIFT whenever the detection wants a multi-scale approach via its interest points. The following steps are involved in the creation of image feature sets:

-

Scale Space Extrema Detection

-

Key point Localization

-

Orientation Assignment

-

Key point Descriptor

Detection of scale space Extrema

For the construction of scale space, the data required a few points i.e., the amount of data isn’t enormous and the parallel degree isn’t high, so they can be set on the host for calculation. The extreme point’s detection is performed independently because the image pixels among the various groups are independent and different from one another. To end the extreme point’s detection in a image data set, a KERNEL function is executed for t times. In the lower and upper points, it is compared with the nearby points after removing the greater part of the unqualified points, which enormously accelerate the efficiency of the algorithms. To implement the extreme points detection, one-dimensional threads and two-dimensional blocks are embraced. Given every block to be answerable for 16 × 16 PIXELS, so for an image with the width×height size want (width + 1) × (stature +1) blocks if its height and width can’t be divisible by 16. To improve the outcomes, the zero elements are added to the outer edge of the image when the width and height of the image can’t be divisible by 16, so the images with height and width can be divisible by 16 can diminish the judgment. The image is rescaled and smoothed at every pyramid level via the Gaussian function way. L(r, s, σ) is represented as scale-space provided by:

L(r, s, σ) = G(r, s, σ) × I(r, s) (3)

D(r, s, σ)which anticipates the minima and maxima’s extracted key points through the function of DoG. the Gaussian function folded the images. The Gaussian function represented as G(), to minimize the two successive scalesD(r, s, σ)is evaluated that are esteemed by a constant scale factor k by \( k=\sqrt{2} \)as the optimal value.

D(r, s, σ) = (G(r, s, kσ) − G(r, s, σ)) × I(r, s) = L(r, s, kσ) − L(r, s, σ) (4)

D(r, s, σ) is equated with scale’s eight neighbors at each point. It is referred to the great if the D(r, s, σ)value is maximum or minimum among the points.

Key point localization

To determine the scale and location, a detailed design is fit at each candidate position. Based on the measure of their stability the key-point selection is done. Key points are poorly localized in the keypoint localization stage which is detached. The great location is evaluated by:

\( \overset{\frown }{r}=-\frac{\partial^2{D}^{-1}}{\partial {r}^2}\frac{\partial D}{\partial r} \) (5)

Orientation assignment

In this phase through the lining up of key points, the SIFT descriptors are generated by offsetting the orientation. Based on local image gradient directions the consistent orientation is given to each key-point location. In the image data, every single future activity is performed which are changed relative to the appointed location, scale and assigned orientations by offering invariance to these transformations for each feature. The modulus m(r, s) and direction θ(r, s)of the gradient at Gaussian smooth image Lat a point (r, s) can be expressed as.

\( M\left(r,s\right)=\sqrt{f_r^2\left(r,s\right)+{f}_s^2\left(r,s\right)} \) (6)

\( \theta \left(r,s\right)={\mathit{\tan}}^{-1}\frac{f_s\left(r,s\right)}{f_r\left(r,s\right)} \) (7)

Where fr(r, s) = L(r + 1, s) − L(r − 1, s), fs(r, s) = L(r, s + 1) − L(r, s − 1).

Key point descriptor

The equivalent SIFT descriptors are implemented according to the nearest neighbors, the closest distance to second closest distance evaluation and the fraction. In the program, the necessary input data has an array of differential pyramid data and feature points. 16 small areas are divided for a 16 × 16 area and the 8-dimensional vector is generated for each small area. So, to get the brightness change invariance through normalization, the 128-dimensional vector’s features point descriptor is utilized. The local image gradients are measured at the selected scale in the region nearby each key-point. They are changed into a demonstration that permits important degrees of local shape illumination and distortion changes.

3.2.4 Feature matching

In the feature matching procedure, the SIFT feature consumes the more time on the KD-tree. The zero-padding process can be executed if the collection of feature points is minimum than 128 and it fulfils the least calculation necessity and decreases the overhead for decision making. Further, the parallel evaluation is accomplished openly using numerous thread patches if the collection of feature points is maximum than 128. The Euclidean distance (ED) is applied to estimate the similarity among them while searching for the adjacent neighbor. While computing the ED between two points it is expected that the both key points warehoused in the global memory are denoted by eight-dimensional vectors like a vector (a0, a1, .…, a7)and b vectorb0, b1, …, b7, individually. For every dimension, the square value of the difference is estimated and warehoused in the shared memory. Through three times the accumulation process, the square root of the outcome is achieved. Therefore, the ED among a and bvectors is gained.

3.3 Classification: Hybrid MLP-faster R-CNN

3.3.1 Brief review of MLP

The MLP is designed to learn the nonlinear spectral feature space at the pixel level irrespective of its statistical properties and is typical non-parametric neural network classifier. It maps the input data sets onto a outputs set in a feedforward manner and is a network. With each layer fully connected to the preceding layer and the succeeding layer, the MLP is composed of interconnected nodes in numerous layers. Followed by a nonlinear activation function, the each nodes output are the weighted units to differentiate the data that are not linearly separable. In remote sensing applications, the MLP has been broadly utilized that includes very fine spatial resolution (VFSR) based land cover classification. The MLP algorithm is simple in model architecture but it is mathematically complicated. Similarly, especially for VFSR imagery through unprecedented spatial detail, a pixel-based MLP classifier make use of or does not consider, the spatial patterns implicit in images. In principle, the pixel’s membership association for each class is predicted by the MLP is a pixel-based classifier through shallow structure.

One of the classical types of neural networks is MLP, it contains three layers: they are, an output, a hidden and an input layer. The following (8) represents the input layer.

a1 = x (8)

Where, a1indicates the networks first layer, input is represented asx, then the weighted output of the preceding layer is represented as the input of each layer and resembles:

a(l + 1) = σ(W(l)a(l) + b(l)) (9)

Where, weight layer indicated by W(l), the specific layer is represented as l, σ is the nonlinear function and the bias symbol in the layerl is represented as b(l). Hyperbolic tangent, sigmoid function etc. are used in the network. Finally, the output layer is represented by (10).

hW, b(x) = a(n) (10)

Reduces the difference among input and the desired output is the foremost objective:

\( J\left(W,b,x,y\right)=\frac{1}{2}{\left\Vert {h}_{\left(W,b\right)}(x)-y\right\Vert}^2 \) (11)

The following section gives a brief description of Faster R-CNN.

3.3.2 Brief review of faster R-CNN

Fast R-CNN and Faster R-CNN has similar architecture, the only change is region proposal i.e. region proposal network (RPN). A system for RPNs + Fast R-CNN detection is known as Faster R-CNN and it produces an accurate and efficient region proposal creation.

In general, for classification and detection, the Fast RCNN framework utilizes the region proposals generated from the RGB images. In order to obtain classification of images by RGB and depth image data, the Fast RCNN network has used. In deep neural network architecture, the VGG-16 is applied as the base learner which is utilized as a feature extractor for the Fast RCNN system which was trained on image-net’s pre-trained weights. For the Fast RCNN, the region proposals and the RGB image are the two inputs. By the combination of depth and RGB images, the region proposals are generated. The model performs better and more quickly in fast RCNN, as the ROI are found using a selective search scheme and all the ROI are found at once for an image, unlike CNN which finds ROI and applies Relu on each ROI individually which is more slower and time-consuming. Initial prediction of Fast RCNN made based on the ROI of the image. The ROI are found by selective search.

For object detection, one of the most accurate deep learning approaches is Faster R-CNN. There are four main parts: classification layer, region of interests (ROI) pooling layer, RPN layer and convolutional layer. The main aim of RPN is to create region proposal by CNN with the full convolution (FC) layer, then the regression is included and to improve the contrast of objects based on the multiple scales of information. Hence, the detection accuracy and region proposal quality are improved. In the input design, the first CNN produce the feature map for RPN and each location in the feature map the RPN achieves k guesses. Hence, 2 × k scores and 4 × k coordinates are produced per location. Several anchors are operated by Faster R-CNN that are pre-selected based on the real-life objects at various scales considerately and have rational aspect ratios as well. The Faster R-CNN training activated by following four key steps:

-

Steps: 1 of 4: Training an RPN

Meanwhile, a VGG16 pre-trained by a large general image dataset can be used to initialize the RPN layer and improve the training speed. The loss function is defined like this:

\( L\left(\left[{PA}_n^{obj}\right],\left[{t}_n\right]\right)=\frac{1}{N_{cls}}\sum \limits_n{L}_{cls}\left({PA}_n^{obj},{PA}_n^{\ast}\right)+\lambda \frac{1}{N_{reg}}\sum \limits_n{PA}_n^{\ast }{L}_{reg}\left({t}_n,{t}_n^{\ast}\right) \) (12)

Where,n represents the anchor index and \( {PA}_n^{obj} \)is the possibility of the anchor contains an object; tn = (xn, yn, wn, hn)describes the anchor; The ground truth label \( {PA}_n^{\ast } \) is 1 if the anchor contains an object, otherwise 0; \( {t}_n^{\ast } \) is the ground truth box; Both the loss functions for classification Lcls and anchor box regression Lreg is normalized by their total numbers Ncls and Nreg. The values, which are based on the batch size specified for balancing the two losses weights and can be indicated as:

\( \frac{\lambda }{N_{reg}}=\frac{1}{\boldsymbol{batch}\kern0.24em \boldsymbol{size}}=\left\{\begin{array}{cc}\raisebox{1ex}{$1$}\!\left/ \!\raisebox{-1ex}{$256$}\right.& PRN\boldsymbol{train}\kern0.24em \boldsymbol{stage}\\ {}\raisebox{1ex}{$1$}\!\left/ \!\raisebox{-1ex}{$128$}\right.& \boldsymbol{Fast}R- CNN\boldsymbol{stage}\end{array}\right. \) (13)

-

2 of 4: Based on the RPN from 1, training a Fast R-CNN Network

-

3 of 4: Re-training RPN by sharing of weight using Fast RCNN.

-

4 of 4: Based on updated RPN, Fast R-CNN is re-trained.

3.3.3 Hybrid MLP-faster R-CNN

The advantages of both Faster R-CNN and MLP are taken for the hybrid network simultaneously. With the same information, two networks are trained together and the test image sends to the input of both networks to this end. Then extract the outcomes and the outcomes are more valid in the hybrid network.

Here, Y = (y1, y2, …yn)is each networks output and n represents the class number. The maximum probability of membership as expected outcomes represented by classification. The deviation in the output vector is represented in (14):

sigma = max (Y) − mean(Y) (14)

Where, the largest number of Yset is known as max(Y)and the average of Y is known asmean(Y). The class existence confidence in an image is calledsigma. The value of sigmais low when the image background heterogeneous background and the sigmavalue is large when the input image background is homogeneous and the Faster R-CNN is more reliable.

The sigmaFasterR − CNNis a larger number in most cases and is more appropriate for images classification, because, compared to MLP the Faster R-CNN network has the highest overall performance, therefore, it is used as a benchmark. For the comparison ofsigma, both the thresholds aand b are used, which amount a ∈ [0.1, 0.4]and amountb ∈ [0.6, 0.9] can be altered. Now, according to sigma the output class criteria is specified as follows:

\( {\boldsymbol{class}}_{MLP-\boldsymbol{Faster}R- CNN}=\left\{\begin{array}{cc}{\boldsymbol{class}}_{MLP}& {\boldsymbol{sigma}}_{\boldsymbol{Faster}R- CNN}<a\\ {}{\boldsymbol{class}}_{MLP}& a\le {\boldsymbol{sigma}}_{\boldsymbol{Faster}R- CNN}<b\&{\boldsymbol{sigma}}_{\boldsymbol{Faster}R- CNN}<{\boldsymbol{sigma}}_{MLP}\\ {}{\boldsymbol{class}}_{\boldsymbol{Faster}R- CNN}& a\le {\boldsymbol{sigma}}_{\boldsymbol{Faster}R- CNN}<b\&{\boldsymbol{sigma}}_{\boldsymbol{Faster}R- CNN}>{\boldsymbol{sigma}}_{MLP}\\ {}{\boldsymbol{class}}_{\boldsymbol{Faster}R- CNN}& {\boldsymbol{sigma}}_{\boldsymbol{Faster}R- CNN}\ge b\end{array}\right. \) (15)

In which, the result of classifications obtained from Faster R-CNN and MLP are represented as classMLP andclassFasterR − CNN respectively. Initially, abegins at the value of 0.1 to 0.05 and b = 0.9for getting the proper value. Maximum accuracy value is considered as the final value. The proposed approach activated in the way of preprocessing, noise removal, then feature extraction and perfect classification and produce better outcomes.

Softmax regression: The softmax classification is the last layer of Faster R-CNN and classify the intellectual features from MLP is the main aim. Through the multilayer stack, the Faster R-CNN network formed a deep network structure and utilized for feature learning. The deep neural network construction combined the Faster R-CNN and softmax regression. The simulation results and discussion is given in the next section 4.

4 Simulation results: Overview

The fine-grain analysis is further experimented using bipolar convolution parameters. A fine-grained Hybrid MLP- Faster RCNN based processing of images is evaluated using FL-SIFT approach after the noise removal by the filter. In this research, initially apply the image preprocessing filter and then the feature key points are extracted using FL-SIFT then activated in the [224 × 224] matrix. The first design has been chosen 2-D images and custom solid objects The COCO dataset is utilized for MLP-Faster RCNN within Python after the initial training. The feature sets are extracted and using big data the feature data is stored and retrieved. In order to show the proposed approach significant performance, several parameters have been done. The performances metrics precision and accuracy hold the best outcomes in fine-grained researches.

Figure 4 shows the feature points which are generated by the FL-SIFT approach, it produce the all feature points in the edge, corner, height and width points. Figures 5 , 6and Fig. 7 are the input image solid metal block and the fruits. The identified image matching points in solid metal block and fruits are shown in Figs. 6 and 8.

4.1 Dataset design: Description

The width, highest length, corner and edge point are considered as feature points. These points are automatically taken considered into Fuzzy logic-SIFT based extraction. The images of solid materials are taken and key points are calculated. On further individual image from COCO is taken and the features are generated. For this purpose, a scalable 2-node fault tolerant big database has been designed using Big Data environment [23, 24]. From this point of view, the dataset is divided into two categories:

-

RAW dataset: It ranges from 30 to 60 GB size and it contains plenty of key points, due to this the size is huge

-

HANDLED dataset: It is obtained from the original dataset, the size is few KB. It covers the data in the form features and the format apt for CNN based analysis. In the training data, there are a few hundred rows and approx. 100 features and the raw dataset is completely resembled by the handled dataset.

4.2 Performance matrices: Overview

The proposed feature extraction and classification of solid materials performances are evaluated by the several statistical measures such as Precision, Recall, F-measure, Specificity, Accuracy and Computation time.

-

Precision: Percentage of entire attacks that are accurately discovered. It is measured with the equation below

\( Pre=\frac{tp}{tp+ fp}\times 100\% \) (16)

-

Recall: Sum of positive predictable correctly is known as recall.

\( \mathit{\operatorname{Re}}\boldsymbol{call}=\frac{tp}{tp+ fn}\times 100\% \) (17)

-

F-Measure: It is the precision and recall’s harmonic mean.

\( F-\boldsymbol{score}=2\frac{pre\times \boldsymbol{recall}}{pre+\boldsymbol{recall}} \) (18)

-

Accuracy: Ratio of summation of true positive and true negative data to the summation of true positive and negative and false positive and negative data. Based on Eq. (17), the accuracy is evaluated, which is explained as the summation of diagonal features in the confusion matrix against total components in the confusion matrix.

\( Acc=\frac{tn+ tp}{tn+ tp+ fn+ fp}\times 100\% \) (19)

-

Specificity: The proportion of real negatives that are properly recognised as such, and it is also known as the true negative rate.

\( \boldsymbol{Specificity}=\frac{TN}{TN+ FP} \) (20)

4.3 Performance results and discussion

The comparison is made with Faster-RCNN fine-grained hybrid approach as shown in Table 2. MLP-Faster RCNN adds only a small overhead to Faster R-CNN and is simple to train, running at 5 fps. Moreover, for easy generalization, the MLP-Faster RCNN used, i.e., in the same system allow to evaluate the human poses. Person keypoint detection, bounding-box object detection [31], and instance segmentation are shown the topmost outcomes in all three COCO suite tracks of challenges. Tables 3 and 4 gives the comparison of introduced scheme with previous methods regarding F-measure, Accuracy, Recall, Precision and specificity.

Figure 9 demonstrates the performance results of the Accuracy of introduced strategy compared using existing methods. From Fig. 9, it propagates that the proposed model achieves maximum accuracy for proposed feature extraction approach FL-SIFT. Compared to Fuzzy and SIFT, the proposed FL-SIFT approach produce maximum accuracy for the proposed classified Hybrid MLP-Faster RCNN. The proposed scheme (MLP-Faster RCNN) achieves maximum accuracy (987.3%) compared to existing approaches such as Faster RCNN, Fast RCNN, RCNN and MLP has 97.8%, 93%, 96.9% and 82% respectively. These results are taken based on the feature extraction such as Fuzzy, SIFT and FL-SIFT.

Figure 10 demonstrates the Recall performance respecting the various classifiers. It shows that the output performance of the proposed classifier achieved maximum (97.87%) recall performance based on the proposed feature extraction FL-SIFT approach. The proposed scheme extracted the optimial features by best extracting schemes. So, the classification provides maximum recall performance. The existing models such as Faster RCNN, Fast RCNN, RCNN and MLP obtains 96.28%, 95.12%, 91.93% and 85.23% recall value respectively. Compared to existing feature extraction approaches named Fuzzy and SIFT, the proposed FL-SIFT based classifier obtains maximum recall. Because the edge points are extracted using Fuzzy approach and the width and height of the solid materials are extracted by the SIFT approach. Hence, it produced a better output than the other extraction approaches.

Performance of precision is shown in Fig. 11 and it produced the comparison of introduced approach with previous terms. It shows, the proposed design (MLP-Faster RCNN) produce better precision (96%) value than the existing models. Optimal feature extracted features are given to the classification. Best classification accuracy is given by the classification scheme named, MLP-Faster RCNN. Hence, the introduced scheme provided the maximum precision than others. The existing schemes such as Faster RCNN, Fast RCNN, RCNN and MLP achieves 94.3%, 91.1%, 89% and 86.4% precision values respectively. FL-SIFT based proposed classifiers correctly classify the solid materials based on the matching effect. FL-SIFT approach produced perfect feature extraction and based on it, the classification also activated in a perfect manner and produce most appropriate outs. Hence, the proposed approach produced better results in accuracy, precision and recall. Compared to MLP based Fuzzy, the proposed model MLP-Faster RCNN based FL-SIFT produce most accurate outcomes.

F-measure is the harmonic amongst the recall and precision, the performance results are shown in Fig. 12. 94.39% f-measure performance is obtained in the proposed model, compared to existing approaches it the maximum outcomes. F-measure is the relation between the recall and the precision vaue. The proposed scheme’s precision and recall values are high and the f-measure value also maximum compared to existing schemes. The existing approaches such as Faster RCNN, Fast RCNN, RCNN and MLP achieves 88.46%, 86.89%, 89.14% and 82% F-measures based on the FL-SIFT feature extraction. The proposed approach has better results in the performance of recall and precision based on this F-measures also achieved maximum results. Python platform is chosen for evaluating the performance of proposed and existing approaches.

Specificity performance comparison is given in Fig. 13 and it displayes the introduced scheme achieved supreme specificity value compared to previous models. The proposed model (MLP-Faster RCNN) achieves 97.87% specificity based on the FL-SIFT approach. The existing approaches named Faster RCNN, Fast RCNN, RCNN and MLP achieves 94.39%, 91.93%, 88.46% and 85.23% specificity value. The comparison is taken for the proposed model with existing classifiers based on the various feature extraction approaches. The values are obtained by implementing the flow on the python platform. The proposed classifier MLP-Faster RCNN produces maximum outcomes with respect to the proposed feature extraction FL-SIFT.

Figure 14 displays the overall performance of the introduced approach (MLP-Faster RCNN) with existing classifiers named Faster RCNN, Fast RCNN, RCNN and MLP based on the proposed feature extraction term FL-SIFT. The outcomes are compared with respect to the performance metrics precision, recall, f-measure, accuracy and specificity.

Table 5 gives the accuracy performance comparison of proposed approach with exiosting works. Compared with existing works, the proposed scheme achieved maximum detection accuracy. Comopared to other sworks, the proposed schme utilized best feature extractor named FL-SIFT, which produced the optimal feature for testing. So, a new hybrid ckassification acheme effectively classified the test sets and produced the maximum Accuracy. The existing schemes like Leng et al. (2020) [11], Yang et al. (2019) [27], and Lin et al. (2019) [14] has 98.40%, 97.5% and 90.1% accuracy, respectively. The proposed scheme (MLP-Faster RCNN) achieved 98.3% accuracy.

A plot of the true positive over the false positive rate is named as ROC Curve shown in Fig. 15 and it is the relationship among specificity and sensitivity. ROC curve is a sensitivity reduction results in specificity increment. The test accuracy is higher in top and less accurate closer to the diagonal. The introduced approach utilized best feature extractor approach FL with SIFT. It produced the optimal features for the classification. So the true positive rate of the proposed scheme is in increased manner.

Table 6 gives the comparison values of the computation time of the proposed approach with existing approaches. Implemenattion processing time of the proposed scheme is compared with existing faster and fast RCNN, RCNN and MPL schemes. The execution time of the existing methods are high compared to the proposed scheme. Actually, the proposed scheme extracted the optimal features by the hybrid FL with SIFT and effectively classified by the hybrid MLP with Faster RCNN schemes. So, the processing is improved by the new approachs and produced the output in quickly. Figure 16 displays the computation time performance comparison of the proposed approach with existing approaches. The proposed scheme execution time is very much low compared to other existing approaches. The proposed feature extraction based classifier takes 1.46 s execution time and the existing approaches named Faster RCNN, Fast RCNN, RCNN and MLP are takes 2.88 s, 5.69 s, 7.22 s and 12 s execution time respectively.

5 Conclusion

The fine-grained feature extraction and classification of solid materials are performed in this research. The test image optimal featutres are extracted with the hybrid feature extractor technique named FL-SIFT. Then the features are matched by the extracted optimal features. Then, the features of test set is given the classification. A new hybrid MLP with Faster RCNN scheme effectively classified the features and provides the better outcomes. The feature extracted classification outcomes of the proposed scheme has been compared with existing approaches and the classification accuracy of proposed scheme is equated with the existing papers. The grained convolution and pooling have made a good improvement in object detection and classification. There are two stages of COCO database are used for the remarkable detection scheme. Tuning of 3–4 convolution layer named FL-SIFT and selective search is the initial layer for extracting the ROI from an input image and next given to the deep neural network part for classification. In this research, the edge points and height and width key points are extracted using FL-SIFT extraction approach and then accurately classified using hybrid MLP-Faster RCNN. Hence, the proposed approach obtained maximum best results based on the proposed feature extraction approach. The proposed model achieved 98.3% accuracy, 96% precision and 97.87% specificity. The classification accuracy of proposed scheme (98.3%) is compared with existing works such as, Leng et al. (2020) [11] (98.40%), Yang et al. (2019) [27] (97.5%) and Lin et al. (2019) [14] (90.1%). The improvements to the feature model will consider in future, for example signify it as a submodel’s mixture, for a subset of classes each one is responsible that are very related to each other but dissimilar as a group from the rest.

Change history

29 July 2024

This article has been retracted. Please see the Retraction Notice for more detail: https://doi.org/10.1007/s11042-024-19934-x

References

Baddeley D, Bewersdorf J (2018) Biological insight from super-resolution microscopy: what we can learn from localization-based images. Annu Rev Biochem 87:965–989

Bindu H, Manjunathachary K (2019) Kernel-based scale-invariant feature transform and spherical SVM classifier for face recognition. J Eng Res 7(3):142–160

Chau NL, Tran NT, Dao TP (2020) A multi-response optimal design of bistable compliant mechanism using efficient approach of desirability, fuzzy logic, ANFIS and LAPO algorithm. Appl Soft Comput 94:106486

Chu J, Guo Z, Leng L (2018) Object detection based on multi-layer convolution feature fusion and online hard example mining. IEEE Access 6:19959–19967

Fang J, Zhou Y, Yu Y, Du S (2017) Fine-grained vehicle model recognition using a coarse-to-fine convolutional neural network architecture. IEEE Trans Intell Transp Syst 18(7):1782–1792

Ge C, Wang J, Wang J, Qi Q, Sun H, Liao J (2020) Towards automatic visual inspection: a weakly supervised learning method for industrial applicable object detection. Comput Ind 121:103232

Goswami A, McGrath J, Peters S, Rekatsinas T (2019) Fine-grained object detection over scientific document images with region Embeddings. arXiv preprint arXiv:1910.12462

Hassaballah M, Awad AI (2016) Detection and description of image features: an introduction. In: Image feature detectors and descriptors (pp. 1–8). Springer: Cham

Hassaballah M, Awad AI (eds) (2020) Deep learning in computer vision: principles and applications. CRC Press

Hsu HK, Yao CH, Tsai YH, Hung WC, Tseng HY, Singh M, Yang MH (2020) Progressive domain adaptation for object detection. In: Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, pp 749–757

Leng L, Yang Z, Kim C, Zhang Y (2020) A light-weight practical framework for feces detection and trait recognition. Sensors 20(9):2644

Li A, Chen J, Kang B, Zhuang W, Zhang X (2019) Adaptive multi-attention convolutional neural network for fine-grained image recognition. In: 2019 IEEE Globecom workshops (GC Wkshps) 1-5

Li J, Shi X, You ZH, Yi HC, Chen Z, Lin Q, Fang M (2020) Using weighted extreme learning machine combined with scale-invariant feature transform to predict protein-protein interactions from protein evolutionary information. IEEE/ACM Trans Comput Biol Bioinforma

Lin Z, Mu S, Huang F, Mateen KA, Wang M, Gao W, Jia J (2019) A unified matrix-based convolutional neural network for fine-grained image classification of wheat leaf diseases. IEEE Access 7:11570–11590

Lowe DG (2002) Object recognition from local scale-invariant features. In: The proceedings of the seventh IEEE international conference on computer vision, 1150

Lu L, Zhang J, Khan MK, Chen X, Alghathbar K (2010) Dynamic weighted discrimination power analysis: a novel approach for face and palmprint recognition in DCT domain. Int J Phys Sci 5(17):2543–2554

Moorthy U, Gandhi UD (2018) A survey of big data analytics using machine learning algorithms. In: HCI Challenges and Privacy Preservation in Big Data Security, IGI Global, 95–123

Pashaei A, Ghatee M, Sajedi H (2020) Convolution neural network joint with mixture of extreme learning machines for feature extraction and classification of accident images. J Real-Time Image Proc 17(4):1051–1066

Qiu H, Li H, Wu Q, Meng F, Shi H, Zhao T, Ngan KN (2020) Language-aware fine-grained object representation for referring expression comprehension. ACM International Conference on Multimedia, 4171-4180

Shariati M, Azar SM, Arjomand MA, Tehrani HS, Daei M, Safa M (2019) Comparison of dynamic behavior of shallow foundations based on pile and geosynthetic materials in fine-grained clayey soils. Geomech Eng 19(6):473–484

Sun X, Wu P, Hoi SC (2018) Face detection using deep learning: an improved faster RCNN approach. Neurocomputing 299:42–50

Tian G, Liu L, Ri J, Liu Y, Sun Y (2019) ObjectFusion: an object detection and segmentation framework with RGB-D SLAM and convolutional neural networks. Neurocomputing 345:3–14

Wang C, Wang Y, Han Y, Song L, Quan Z, Li J, Li X (2017) CNN-based object detection solutions for embedded heterogeneous multicore SoCs. Asia and South Pacific Design Automation Conference (ASP-DAC), pp 105–110

Wang L, Chen M, Wang L, Haoi Y, Hwang K (2017) Disease prediction by machine learning over big data from healthcare communities. IEEE Access

Wei J, He J, Zhou Y, Chen K, Tang Z, Xiong Z (2019) Enhanced object detection with deep convolutional neural networks for advanced driving assistance. IEEE Trans Intell Transp Syst 21(4):1572–1583

Yan J, Wang H, Yan M, Diao W, Sun X, Li H (2019) IoU-adaptive deformable R-CNN: make full use of IoU for multi-class object detection in remote sensing imagery. Remote Sens 11(3):286

Yang Z, Leng L, Kim BG (2019) StoolNet for color classification of stool medical images. Electronics 8(12):1464

Yao Y, Wang Y, Guo Y, Lin J, Qin H, Yan J (2020) Cross-dataset training for class increasing object detection. arXiv preprint arXiv:2001.04621

Yuan Y, Chu J, Leng L, Miao J, Kim BG (2020) A scale-adaptive object-tracking algorithm with occlusion detection. EURASIP J Image Video Process 2020(1):1–15

Zhang Y, Sohn K, Villegas R, Pan G, Lee H (2015) Improving object detection with deep convolutional networks via bayesian optimization and structured prediction. In: Proceedings of the IEEEConference on Computer Vision and Pattern Recognition, pp 249–258

Zhang L, Shen P, Zhu G, Wei W, Song H (2015) A fast robot identification and mapping algorithm based on kinect. Sensor 15(8):19937–19967. https://doi.org/10.3390/s150819937

Zhang J, Xing W, Xing M, Sun G (2018) Terahertz image detection with the improved faster region-based convolutional neural network. Sensors 18(7):2327

Zhang Y, Chu J, Leng L, Miao J (2020) Mask-refined R-CNN: a network for refining object details in instance segmentation. Sensors 20(4y):1010

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article has been retracted. Please see the retraction notice for more detail: https://doi.org/10.1007/s11042-024-19934-x"

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Dalai, R., Das, P. RETRACTED ARTICLE: Effective fine-grained feature extraction and classification of solid materials using hybrid region convolutional neural networks. Multimed Tools Appl 80, 32171–32196 (2021). https://doi.org/10.1007/s11042-021-11189-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-021-11189-0