Abstract

Vectorizing hand-drawn sketches is an important but challenging task. Many businesses rely on fashion, mechanical or structural designs which, sooner or later, need to be converted in vectorial form. For most, this is still a task done manually. This paper proposes a complete framework that automatically transforms noisy and complex hand-drawn sketches with different stroke types in a precise, reliable and highly-simplified vectorized model. The proposed framework includes a novel line extraction algorithm based on a multi-resolution application of Pearson’s cross correlation and a new unbiased thinning algorithm that can get rid of scribbles and variable-width strokes to obtain clean 1-pixel lines. Other contributions include variants of pruning, merging and edge linking procedures to post-process the obtained paths. Finally, a modification of the original Schneider’s vectorization algorithm is designed to obtain fewer control points in the resulting Bézier splines. All the steps presented in this framework have been extensively tested and compared with state-of-the-art algorithms, showing (both qualitatively and quantitatively) their outperformance. Moreover they exhibit fast real-time performance, making them suitable for integration in any computer graphics toolset.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Raw paper sketches are usually the starting point of many creative and fashion workflows. For many artists the choice of drawing with pencil and paper (or pen) grants them the most expressiveness and creative freedom possible. By using these simple tools they can convey ideas in a very fast and natural way. That allows them to propose powerful and innovative designs. Later, the prototypal idea from the hand-drawn sketch must be converted to a real world product.

The de-facto standard for distributing fashion and mechanical designs is the vectorized set of lines composing the raw sketches: formats like SVG, CAD, Adobe Illustrator files are manually created by the designers, and delivered and used by a plethora of departments for many applications, e.g. marketing, production line, end-of-season analyses, etc..

Unfortunately, the vectorization process is still a manual task, which is both tedious and time-consuming. Designers have to click over each line point by point and gain a certain degree of experience with the used tools to create a good representation of the original sketch model.

Therefore, the need for an automated tool arises. A great amount of designers’ time can be saved and re-routed in the creative part of their job. Some tools with this specific purpose are commercially available, such as Adobe Illustrator™TMFootnote 1 Live Trace, WintopoFootnote 2 and PotraceFootnote 3. Anyway, to our experience and knowledge with Adidas designers, none of these tools does this job in a proper or satisfying way.

Vectorization of hand-drawn sketches is a well-researched area, with robust algorithms, such as SPV [6] and OOPSV [28]. However, these methods, as well as others in the literature, fail to work with real scribbles composed of multiple strokes, since they tend to vectorize each single line, while not getting the right semantics of the drawing [1]. Problems to be faced in real cases are mainly the followings: bad/imprecise line position extractions; lines merged together when they should not or split when they were a single one in the sketch; lines extracted as “large” blobs (shapes with their relative widths instead of zero-width line segments); unreliability of detection with varying stroke hardness (dark lines are overly detected, faint lines are not detected at all); resulting “heavy” b-splines (the vectorized shapes are composed of too many control points, making subsequent handling hard).

Some works for a complete vectorization workflow are also present in the literature, even if mainly addressing vectorization of really clean sketches, or obtaining decent artistic results from highly noisy and “sloppy” paintings. None of them is designed to work with “hard” real data trying to retrieve the most precise information of exact lines and produce high quality results.

This paper addresses exactly this issue and provides a complete workflow for automated vectorization of rough and noisy hand-drawn sketches. Two new methods (contributions) are presented:

-

A reliable line extraction algorithm. Being based on Pearson’s Correlation Coefficient, it is able to work under severe variations and inconsistencies of the input sketch.

-

A fast unbiased thinning. The algorithm solves a well-known biasing problem that afflicts most thinning algorithms (where steep angles are not well preserved).

Moreover, many different existing techniques are discussed, improved and evaluated: paths extraction, pruning, edge linking, and Bézier curve approximation.

All of these techniques are used together in a cohesive system and presented as a modular framework that produces accurate vectorizations of difficult, noisy sketches. Valuable properties of the system are: the high quality of its output, in both representation error and simplification strength; the low number of needed parameters; the fast real-time performance; the complete modularity of the system; the full control and maintainability of a classic Computer Vision system (as opposed as a Deep Learning system).

The efficacy of the proposal has been demonstrated on both hand-drawn sketches and images with added artificial noise, showing in both cases excellent performance w.r.t. the state of the art. Numerical benchmarks such as Precision, Recall and ’mean Centerline Distance’ are evaluated. Moreover, feedback from Adidas designers testify the quality of the process and prove that it greatly outperforms existing solutions, in terms of both efficiency and accuracy.

The remainder of this paper is structured as follows. The next Section reports the related work in sketch vectorization. Section 3 describes the different steps of the framework (namely, line extraction, thinning, path creation and vectorization). Experimental results on these steps are reported in Section 4, while Section 5 summarizes the contributions and draws some conclusions.

2 Related work

This section reports the most relevant previous works on sketch vectorization. The work in [1] proposed a line enhancement method, based on Gabor and Kalman filters. It can be used to enhance lines for subsequent vectorization. However, this approach fails to correctly extract all the drawing components when the image is noisy or presents parallel strokes, resulting, for instance, in gaps in the final vectorized result or strokes incorrectly merged. Moreover, experiments are conducted with quite simple images.

The paper in [17] provided the first treatment of ridge extraction at multiple resolutions. Their approach works by applying derivatives of Gaussians to an input image and looking for a very specific response configuration to that derivatives. In particular, they look for what the derivative of an ideal ridge should look like. Their experiments, while being good enough, are limited and obtained using only low resolution images. See the related work in [29] for more details and examples. While this is an interesting approach, we claim that derivatives are better suited for edge detection than line detection. Just looking for derivatives when modeling a line essentially implies that:

-

line surface is uniform (or symmetric along middle bisector);

-

left and right line limits have the same slope to white background.

Moreover, we claim that looking at the edges of a line to locate it, is an unnatural approach, and does not take into account of the information spread over the whole line surface. Instead, this paper proposes to match the image with an opportune kernel, taking into account all the information residing over the whole line area. Also, while they address the scale variation problem, they do not address (or just partially) the contrast variation problem, and the line width problem. Their work also needs more parameters to be used than ours.

The work in [11] reported a first proposal of a framework transforming raw images to full vectorized representations. However, the “binarization” step is not considered at all, by presenting directly the skeleton processing and vectorization steps. In addition to this limitation, this paper also bases the vectorization to the simple fitting of straight lines and circular arcs (instead of using Bézier interpolation), which represents a too simplified and limited representation of the resulting path.

The paper in [20] provided a more complete study of the whole vectorization process. This is another proposal that works with derivatives. In fact, they provide a neat derivation-based algorithm to estimate accurate centerlines for sketches. They also provide a good insight of the problem of correct junction selection. Unfortunately, they work under the assumption of somewhat “clean” lines, that does not hold in many real case scenarios, such as those we are aiming at.

Simo-Serra et al. [27] trained a Convolutional Neural Network to automatically learn how to simplify a raw sketch. No preprocessing or postprocessing is necessary and the network does the whole process of conversion from the original sketch image to a highly-simplified version. This task is related to our needs, since it can be viewed as a full preliminary step, that just needs the vectorization step to provide the final output form. In Section 4.1 we will compare with this work and show that our proposal achieves better results for retrieval (in particular in terms of recall) and is more reliable when working with different datasets and input types. The same author still improved their results in a recent work [26], to which we will compare too.

The paper in [7] provided an overview of the whole process. Anyway, they gave just brief notions of line extraction and thinning, while they concentrated more on the final vectorization part, in which they proposed an interesting global fitting algorithm. Indeed, this paper is the only paper providing guidelines to obtain a full Bézier-curves representation as final output. Representing images with Bézier curves is of paramount importance in our application domain (fashion design), and is moreover important to obtain “lightweight” vectorial representations of the underlying shapes (composed of as few control points as possible). The vectorization task is casted as a graph optimization problem. However, they treated just partially the noisy image problem, focusing on working with somehow clean paintings.

The work in [18] proposes interesting ways for simplifying sketches, giving much focus on multiple strokes merging and paths linking. It also works in detecting and taking into account different, adjacent regions. Still, to our purpose, their solution only works on very clean images, where the main difficulty lies the presence of multiple strokes. It does not take into account varying stroke pressure and width, nor it accounts pencil roughness and paper porosity or non-uniform background.

Another recent work [2] provided a good proposal for a vectorization system. Regarding line extraction, they rely on Vector Fields, which give high quality results with clean images, but fail in presence of noise and fuzzy lines. Still, they dedicated a lot of attention to correctly disambiguate junctions and parallel strokes.

An interesting work regarding vectorization is reported in [8]. Great care is taken into producing faithful and “beautiful” vectorial representations of drawing. In this case, the input is directly a shape produced by a mouse or a drawing tablet, still their process is relevant to obtain good artistic results from inexperienced users inputs.

When working with colored sketches (or cartoons), [32] proposed a robust method for vectorization. The paper also introduces an algorithm called Trapped Ball Segmentation, that can take into account additional detail about the context surrounding each line, and could be very beneficial in each work that expects colored input sketches.

Another interesting variation of the vectorization problem consists in taking advantage of on-line information about the sketch, while it’s being drawn. This is typically done using a PC and a drawing tablet. For example, the PC can measure the order in which the traits are drawn, their direction, the speed and the pressure of the pen, etc.. All of these information greatly help the vectorization process: e.g. crossing lines are automatically disambiguated, multiple strokes are easily merged. Examples of works in this specific area are: [12] and [21].

The sketch vectorization field also partially overlaps with the so-called “Coherence Enhancing” field. For instance, the work in [13] estimated Tangent Vector Fields from images, and used them in order to clean or simplify the input. They do that by averaging a pixel value with its corresponding neighbors along the Vector Fields. This could be integrated as a useful preprocessing step in our system, or could be used as a standalone tool if the objective is just to obtain a simplified representation of the input image.

The same research topic has been explored in the work from [4], that showed remarkable results in the task of disambiguating parallel, almost touching, strokes (a very useful property in the sketch analysis domain).

3 Sketch vectorization framework

We propose a modular workflow for sketch vectorization, differently from other papers (e.g., [27]) that are based on monolithic approaches. Following a monolithic approach usually gives very good results for the specific-task dataset, but grants far less flexibility and adaptability, failing to generalize for new datasets/scenarios. A modular workflow is better from many perspectives: it is easy to add parts to the system, change them or adapt them to new techniques and it is much easier to maintain their implementations, and to parametrize or add options to them (expected error, simplification strength, etc..).

The approach proposed in this paper starts from the assumption that the sketch is a monochromatic, lines-only image. That is, we assume that no “dark” large blob is present in the area, just traits and lines to be vectorized. Fig. 1a shows an example of the sketches we aim to vectorize, while Fig. 1b reports another exemplar sketch that, given the previous assumption, will not be considered in this paper. Moreover, we will also assume a maximum allowed line width to be present in the input image. These assumptions are valid in the vast majority of sketches that would be useful vectorize: fashion designs, mechanical parts designs, cartoon pencil sketches and more.

Our final objective is to obtain a vectorized representation composed by zero-width curvilinear segments (Bézier splines or b-splines), with as few control points as possible.

This is an overview of the modules composing the workflow:

-

First, line presence and locations from noisy data, such as sketch paintings, need to be extracted. Each pixel of the input image will be labeled as either part of a line or background.

-

Second, these line shapes are transformed into 2d paths (each path being an array of points). This can be done via a thinning and some subsequent post-processing steps.

-

Third, these paths are used as input data to obtain the final vectorized b-splines.

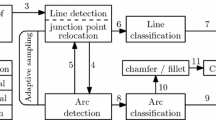

Each of these modules will be extensively described in the next subsections. A visual overview of the system can be seen in Fig. 2.

3.1 Line extraction

Extracting precise line locations is the mandatory starting point for the whole vectorization process. When working with hand-drawn sketches, we usually deal with pencil lines traced over rough paper. Other options are pens, marker pens, ink or PC drawing tables.

The most difficult of these tool traits to be robustly recognized is, by far, pencil. Unlike ink, pens and PC drawing, it presents a great “hardness” (color) variability. Also, it is noisy and not constant along its perpendicular direction (bell shaped). Figure 3 shows a simple demonstration of the reason of this. Moreover, artists may intentionally change the pressure while drawing to express artistic intentions.

In addition, it is common for a “wide” line to be composed by multiple superimposed thinner traits. At the same time, parallel lines that should be kept separated may converge and almost touch in a given portion of the paint, having as the sole delimiter the brightness of the trait (Fig. 4).

With these premises, precise line extraction in this situation represents a great challenge. Our proposed approach is a custom line extraction mechanism that tries to be invariant to the majority of the foretold caveats, and aims to: be invariant to stroke hardness and stroke width; detect bell “shaped” lines, transforming them into classical “uniform” lines; merge multiple superimposed lines, while keeping parallel neighbor lines separated.

3.1.1 Cross Correlation as a similarity measure

The proposed line extraction algorithm is based on Pearson’s Correlation Coefficient (PCC, hereinafter). This correlation coefficient exhibits the properties to identify the parts of the image which resemble a “line”, no matter the line width or strength. This section will briefly introduce the background about PCC.

The “standard” Cross Correlation (or Convolution) operator is known for expressing the similarity between two signals (or images in the discrete 2D space), but it presents several limitations, i.e. dependency on the sample average, the scale and the vector’s sizes. To address these problems, pcc between two samples a and b can be defined as:

where cov(a, b) is the covariance between a and b, and σa and σb are their standard deviations. From the definitions of covariance and standard deviation, Eq. 1 can be written as follows:

∀q0,1 ∧∀m0,1 : m0m1 > 0. Equation 2 implies invariance to affine transformations. Another strong point in favor of PCC is that its output value is of immediate interpretation. In fact, it holds that − 1 ≤ pcc(a, b) ≤ 1. pcc ≈ 1 means that a and b are very correlated, whereas pcc ≈ 0 means that they are not correlated at all. On the other hand, pcc ≈− 1 means that a and b are strongly inversely correlated (i.e., raising a will decrease b accordingly).

PCC has been used in the image processing literature and in some commercial machine vision applications, but mainly as an algorithm for object detection and tracking [15, 31]. Its robustness derives from the properties of illumination and reflectance, that apply to many real-case scenarios involving cameras. Since the main lighting contribution from objects is linear [14], pcc will give very consistent results for varying light conditions, because of its affine transformations invariance (2), showing independence from several real-world lighting issues.

PCC grants much-needed robustness in detecting lines under major changes in illumination conditions, for instance when images can potentially be taken from different devices, such as a smartphone, a satellite, a scanner, an x-ray machine, etc.. In our application domain, at the best of our knowledge, this is the first paper proposing to use PCC for accurate line extraction from hand-drawn sketches. Besides robustness to noise, this also allows to work with different “sources” of the lines: from hand-drawn sketches, to fingerprints, to paintings, to corrupted textbook characters, etc.. In other words, the use of PCC makes our algorithm generalized and applicable to many different scenarios.

3.1.2 Template matching with Pearson’s correlation coefficient

In order to obtain the punctual PCC between an image I and a smaller template T, for a given point p = (x, y), the following equation can be used:

∀j ∈ [−Tw/2; Tw/2] and ∀k ∈ [−Th/2; Th/2], and where Tw and Th are the width and the height of the template T, respectively. Ixy is a portion of the image I with the same size of T and centered around p = (x, y). \(u_{I_{xy}}\) and uT are the average values of Ixy and T, respectively. T(j, k) (and, therefore, Ixy(j, k)) is the pixel value of that image at the coordinates j, k computed from the center of that image.

The punctual PCC from (3) can be computed for all the pixels of the input image I (except for border pixels). This process will produce a new image depicting how well each pixel of image I resembles the template T. In the remainder of the paper, we will call it PCC. In Fig. 5 you see PCC s obtained with different templates. It is worth remembering that PCC(x, y) ∈ [− 1, 1], ∀x, y.

Two examples of PCC images obtained with different kernels. These pictures show that using a line-shaped kernel (KLine) can be detrimental for retrieval quality: b, e; crossing lines are truncated or detected as thinner than they should be. Using KDot can alleviate the problem: c, f; this kernel detects more accurately ambiguous junctions

3.1.3 Choosing a Kernel for line extraction

To achieve line extraction, we will use PCC with a suitable template, or kernel. Intuitively, the best kernel to find lines would be a sample approximating a “generic” line. A good generalization of a line might be a 1D Gaussian kernel replicated over the y coordinate, i.e.:

Since this is a vertical Gaussian kernel, we would also need all of its rotations, in order to match different orientations of lines. This kernel achieves good detection results for simple lines, which are composed of clear (i.e., well separable from the background) and separated (from other lines) points. Unfortunately, this approach can give poor results in the case of multiple overlapping or perpendicularly-crossing lines. In particular, when lines are crossing, just the “stronger” would be detected around the intersection point. If both lines have about the same intensity, both lines would be detected, but with an incorrect width (extracted thinner than they should be). An example is shown in the middle column of Fig. 5.

Considering these limits, a full symmetric 2D Gaussian kernel might be more appropriate:

This kernel would also have the plus of being fully isotropic. Experimental tests have also proven that it solves the concerns raised with KLine, as shown in the rightmost column of Fig. 5. In fact, this kernel resembles a dot, and considering a line as a continuous stroke of dots, it will approximate our problem just as well as the previous kernel. Moreover, it behaves better in line intersections, where intersecting lines become (locally) T-like or plus-like junctions, rather than simple straight lines. Unfortunately, this kernel will also be more sensitive to noise.

3.1.4 Multi-scale size invariance

One of the main objectives of this proposal is to detect lines without requiring many parameters or custom, domain-specific techniques. We also aim to detect both small and large lines that might be mixed together, as it happens in many real drawings. In order to achieve invariance to line widths, we will use kernels of different sizes.

We will generate N Gaussian kernels, each with its σi. In order to find lines of width w a sigma of σi = w/3 would work, since a Gaussian kernel gives a contribution of about 84% of samples at 3 ⋅ σ.

We use a multiple-scale approach similar to the pyramid used by the SIFT detector [19]. Given wmin and wmax as, respectively, the minimum and maximum line width to be detected, we can set σ0 = wmin/3 and σi = C ⋅ σi− 1 = Ci ⋅ σ0, ∀i ∈ [1, N − 1], where N = logC(wmax/wmin), and C is a constant factor or base (e.g., C = 2). Choosing a different base C (smaller than 2) for the exponential and the logarithm will give a finer granularity.

The numerical formulation for the kernel will then be:

where Si is the kernel size and can be set as Si = next_odd(7 ⋅ σi), since the Gaussian can be well reconstructed in 7 ⋅ σ samples.

This generates a set of kernels that we will call KDots. We can compute the correlation image PCC for each of these kernels, obtaining a set of images PCCdots, where PCCdotsi = pcc(Image, KDotsi) with pcc computed using (3).

3.1.5 Merging results

Once the set of images PCCdots is obtained, we will merge them into a single image that will uniquely express the probability of line presence for a given pixel of the input image. This merging is obtained as follows:

where

Given that − 1 ≤ pcc ≤ 1 for each pixel, where ≈ 1 means strong correlation and ≈− 1 means strong inverse correlation, Eq. 5 tries to retain only confident decisions: “it is definitely a line” or “it is definitely NOT a line”.

By thresholding MPCC of (5), we obtain the binary image LinesRegion. The threshold has been set to 0.1 in our experiments and resulted to be stable in different scenarios.

3.1.6 Post-processing filtering

The binary image LinesRegion will unfortunately still contain incorrect lines due to the random image noise. Some post-processing filtering techniques can be used, for instance, to remove too small connected components, or to delete those components for which the input image is too “white” (no strokes present, just background noise).

For post-processing hand-drawn sketches, we first apply a high-pass filter to the original image, computing the median filter with window size s > 2 ⋅ wmax and subtracting the result from the original image value. Then, by using the well-known [22] method, the threshold that minimizes black-white intraclass variance can be estimated and then used to keep only the connected components for which the corresponding gray values are lower (darker stroke color) than this threshold. A typical output example can be seen in Fig. 6.

3.2 Thinning

An extracted line shape is a “clean” binary image. After post-processing (holes filling, cleaning) it is quite polished. Still, each line has a varying, noisy width, and if we want to proceed towards vectorization we need a clean, compact representation. The natural choice for reducing line shapes to a compact form is to apply a thinning algorithm [9].

Several thinning variants are well described in the review [23]. In general terms, thinning algorithms can be classified in one-pass or multiple-passes approaches. The different approaches are mainly compared in terms of processing time, rarely evaluating the accuracy of the respective results. Since it is well-known, simple and extensively tested, we chose [33]’s algorithm as baseline. However, any iterative, single-pixel erosion-based algorithm will work well for simple skeletonizations.

Unfortunately, Zhang and Suen’s algorithm presents an unwanted effect known as “skeletonization bias” [23] (indeed, most of the iterative thinning algorithms produce biased skeletons). In particular, along steep angles the resulting skeleton may be wrongly shifted, as shown in Fig. 7. The skeleton is usually underestimating the underlying curve structure, “cutting” curves too short. This is due to the simple local nature of most iterative, erosion-based algorithms. These algorithms usually work by eroding every contour pixel at each iteration, with the added constraint of preserving full skeleton connectivity (not breaking paths and connected components). They do that just looking at a local 8-neighborhood of pixels and applying masks. This works quite well in practice, and is well suited for our application, where the shapes to be thinned are lines (shapes already very similar to a typical thinning result). The unwanted bias effect arises when thinning is applied to strongly-concave angles. As described by the work of Chen [5], the bias effect appears when the shape to be thinned has a contour angle steeper (lower) than 90 degrees.

To eliminate this problem, we developed our custom unbiased thinning algorithm. The original proposal in [5] is based, first, on the detection of the steep angles and, then, on the application of a “custom” local erosion specifically designed to eliminate the so-called “hidden deletable pixels”. We propose a more rigorous method that generalizes better with larger shapes (where a 8-neighbors approach fails).

Our algorithm is based on this premise: a standard erosion thinning works equally in each direction, eroding one pixel from each contour at every iteration. However, if that speed of erosion (1 pixel per iteration) is used to erode regular portions of the shape, a faster speed should be applied at steep angle locations, if we want to maintain a well proportioned erosion for the whole object, therefore extracting a more correct representation of the shape.

As shown in Fig. 8, an erosion speed of:

should be applied at each angle point that needs to be eroded, where α is the angle size. Moreover, the erosion direction should be opposite to the angle bisector. In this way, even strongly concave shapes will be eroded uniformly over their whole contours.

The steps of the algorithm are the following:

-

First, we extract the contour of the shape to be thinned (by using the border-following algorithm described by [30]). This contour is simply an array of 2d integer coordinates describing the shape outlines. Then, we estimate the curvature (angle) for each pixel in this contour. We implemented the technique proposed in [10], based on cord distance accumulation. Their method estimates the curvature for each pixel of a contour and grants good generalization capabilities. Knowing each contour pixel supposed curvature, only pixels whose angle is steeper than 90 degrees are considered. To find the approximate angle around a point of a contour the following formula is used:

$$\alpha \approx 6I_{L} / L^{2}$$where IL is the distance accumulated over the contours while traveling along a cord of length L. Figure 9a shows an example where concave angles are represented in green, convex in red, and straight contours in blue. Han et al.’s method gives a rough estimate of angle intensity, but does not provide its direction. To retrieve it, we first detect the point of local maximum curvature, called PE. Starting from it, the contours are navigated in the left direction, checking curvature at each pixel, until we reach the end of a straight portion of the shape (zero curvature - blue in Fig. 9a), which presumably concludes the angular structure (see Fig. 9). This reached point is called PL, the left limit of the angle. We do the same traveling right along the contour, reaching the point that we call PR. These two points act as the angle surrounding limits.

-

We then estimate the precise 2D direction of erosion and speed at which the angle point should be eroded. Both values can be computed by calculating the angle between segment \(\overline {P_{L} P_{E}}\) and segment \(\overline {P_{E} P_{R}}\), that we call αE. As already said, the direction of erosion dE is the opposite of αE bisector, while the speed is sE = 1/sin(αE).

-

After these initial computations, the actual thinning can start. Both the modified faster erosion of PE and the classical iterative thinning by [33] are run in parallel. At every classical iteration of thinning (at speed s = 1), the point PE is moved along its direction dE at speed sE, eroding each pixel it encounters on the path. The fact that PE is moved at a higher speed compensates for the concaveness of the shape, therefore performing a better erosion of it. Figure 9c, d and e show successive steps of this erosion process.

-

Additional attention should be posed to not destroy the skeleton topology; as a consequence, the moving PE erodes the underlying pixel only if it does not break surrounding paths connectivity. Path connectivity is checked by applying four rotated masks of the hit-miss morphological operator, as shown in Fig. 10. If the modified erosion encounters a pixel which is necessary to preserve path connectivity, the iterations for that particular PE stop for the remainder of the thinning.

To achieve better qualitative results, the faster erosion is performed not only on the single PE point, but also on some of its neighbor points (those who share similar curvature). We call this neighborhood set of points \(N_{P_{E}}\) and are highlighted in green in Fig. 9b. Each of these neighbor points Pi should be moved at the same time with appropriate direction, determined by the angle αi enclosed by segments \(\overline {P_{L} P_{i}}\) and \(\overline {P_{i} P_{R}}\). In this case, it is important to erode not only Pi, but also all the pixels that connect it (in straight line) with the next Pi+ 1 eroding point. This is particularly important because neighbor eroding pixels will be moving at different speeds and directions, and could diverge during time.

a Curvature color map for the contours. Concave angles (navigating the contour clockwise) are presented in green, convex angles in red. Blue points are zero-curvature contours. b A portion of the contours curvature map, and a real example of the erosion-thinning process steps c, d, e. The green part of b is the neighborhood \(N_{P_{E}}\) that will be eroded at the same time along dE direction

As usual for thinning algorithms, thinning is stopped when, after any iteration, the underlying skeleton has not changed (reached convergence).

3.3 Creating and improving paths

3.3.1 Path creation

The third major step towards vectorization is transforming the thinned image (a binary image representing very thin lines) in a more condensed and hierarchical data format. A good, simple representation of a thinned image is the set of paths contained in the image (also called “contours”). A path is defined as an array of consecutive 2D integer points representing a single “line” in the input thinned image:

In this paper, sub-pixel accuracy is not considered. A simple way to obtain sub-pixel accuracy could be zooming the input image before thinning and extracting real valued coordinates.

The successive steps of skeleton analysis will rely on 8-connectivity, so we need to convert the output of our thinning algorithm to that format. Our algorithm, being based on Zhang-Suen’s thinning, produces 4-connected skeletons (with lines wide 1-2 pixels). A 4-connected skeleton can be transformed into a 8-connected one by applying the four rotations of the mask shown in Fig. 11. Pixels that match that mask must be deleted in the original skeleton (in-place). Applying an in-place hit-miss operator implies reading and writing consecutively on the same input skeleton.

The resulting thinned image is called a “strictly 8-connected skeleton”: this definition does not imply that a pixel can not be connected with its 4-neighbors, but that each 4-neighbor pixel has been erased if not needed for topology preservation.

3.3.2 Path classification

Once the “strictly 8-connected skeleton” is obtained, the path classification step is issued. In order to better understand how this step works, let us define some basic concepts. We first define a junction between paths. A junction is a pixel that connects three or more paths. By deleting a junction pixel, paths that were connected are then split. We can detect all the junctions in the strictly 8-connected skeleton by applying the masks in Fig. 12 (and their rotations) in a hit-miss operator. In this case, the algorithm must be performed with an input image (read only) and an output image (write only) and the order of execution does not matter (i.e., it can be executed in parallel).

Sometimes, for peculiar input conformations, a junction pixel could be neighbor of another junction (see Fig. 13). These specific junctions are merged together for successive analysis. Each path connecting to one of these junctions is treated as connecting to all of them. For simplicity, we choose one of them as representative.

Similarly, an endpoint of a path can be defined as a pixel connected with one and only one other pixel, which needs to be part of the same path. Basically, an endpoint corresponds to either the starting or the ending point of a path. Endpoints can be straightforwardly found by applying the hit-miss morphological operator with the rotated versions of the masks reported in Fig. 14.

Given these definitions and masks, we can detect all the endpoints and the junctions of the strictly 8-connected skeleton. Then, starting from each of these points and navigating the skeletons, we will at the end reach other endpoints or junctions. By keeping track of the traversed pixels we can compose paths, grouped in one of these categories:

-

paths from an endpoint to another endpoint (e ↔ e);

-

paths from a junction to endpoint (j ↔ e);

-

paths from a junction to a junction (j ↔ j).

There exists one last type of path that can be found, i.e. the closed path. Obviously, a closed path has no junctions or endpoints. In order to detect closed paths, all the paths that cannot be assigned to one of the three above groups are assigned to this category.

Examples of all types of paths are reported in Fig. 15. Figure 16 also shows a notable configuration, called “tic-tac-toe” connectivity. In this case, an assumption is made, i.e. paths cannot overlap or cross each other. If this happens, paths are treated as distinct paths. In order to recognize that two crossing paths actually belong to the same “conceptual” path, a strong semantic knowledge of the underlying structures is necessary, but this is beyond the scope of this paper. A treatment of the problem can be found in [3], where they correctly group consecutive intersecting line segments.

3.3.3 Path post-processing

The resulting paths are easy to handle as they contain condensed information about the underlying shapes. However, they can often be further improved using some prior knowledge and/or visual clues from the original sketch image. Examples of these improvements are pruning, merging and linking.

Pruning consists in deleting small, unwanted “branches” from the thinning results. The skeleton is usually composed by many long paths that contain most of the information needed. However, also smaller, unwanted “branches” are often present. They may be artifacts of thinning, or resulting from noise in the original image. By applying the pruning, branches shorter than a given length l can be deleted. Branches are paths of the first or second type: e ↔ e or j ↔ e. They can not belong to the third type, j ↔ j, because we do not want to alter skeleton connectivity by deleting them. An example of pruning is reported in Fig. 17. Pruning can be performed with different strategies, by deciding to keep more or less details. One simple idea is to iteratively prune all the image with increasing branch length threshold l.

Merging is the process of grouping together junctions or paths. Junctions that are close each other could be grouped together in a single junction, simplifying the overall topology. After doing that, the same can be done for paths. Parallel and near paths that start and end in the same junctions are good candidates for merging (see an example in Fig. 18).

Linking (or endpoint linking, or edge linking) is the technique of connecting two paths whose endpoints are close in order to create a single path. Besides the endpoints distance, a good criteria for linking could be the path directions at their endpoints. Incident and near paths are suitable to be linked into a single, more representative, path (an example is reported in Fig. 19).

In order to improve their accuracy, all these post-processing techniques might benefit from the data (pixel values) from the original color image.

3.4 Vectorization process

Once cleaned paths are obtained, they need to be converted in a vectorized version with the minimum number of points. The basic vectorization algorithm used was firstly introduced in [25]. Schneider’s algorithm tries to solve a linear system (with least squares method), fitting a Bézier curve for each of the obtained paths. In detail, it tries iteratively to find the best set of cubic Bézier parameters that minimize the error, defined as the maximum distance of each path point from the Bézier curve. At each iteration, it performs a Newton-Raphson re-parametrization, to adapt the underlying path representation to the Bézier curve, in order to create a more suitable linear system to be optimized.

The algorithm is parametrized with a desired error to be reached, and a maximum number of allowed iterations. Whenever it converges, the algorithm returns the four points representing the best-fitting Bézier curve. If the convergence is not reached within the maximum number of iterations, the algorithm splits the path in two and recursively looks for the best fitting for each of them separately. The path is split around the point which resulted to have the maximum error w.r.t. the previous fitting curve. An additional constraint is related to C1 continuity of the two resulting curves on the splitting point, in order to be able to connect them smoothly.

In order to be faster, the original algorithm skips automatically all the curves which do not correspond to a minimum error (called “iteration-error” Ψ), and proceeds to the splitting phase without trying the Newton-Raphson re-parametrization. However, this simplification also affects accuracy of the vectorization, by generating a more complex representation due to the many split points created. Therefore, since the computational complexity is not prohibitive (worst case is O(n ⋅ log n), with n being the path length), we modified the original algorithm by removing this simplification.

Conversely, another early-stop condition has been introduced in our variant. Whenever the optimization reaches an estimation error lower than a threshold after having run at least a certain number of iterations, the algorithm stops and the estimated Bézier curve is returned. This can be summarized by the following condition:

where “f” is an arbitrary fraction (set to 0.1 in our experiments). This condition speeds up the algorithm if the paths are easily simplified (which is common in our case), while the full optimization process is run for “hard” portions that need more time to get good representations.

We also extended Schneider’s algorithm to work for closed paths. First, C1 continuity is imposed for an arbitrary point of the path, selected as the first (as well as the last) point of the closed path. A first fit is done using the arbitrary point. Then, the resulting point with the maximum error w.r.t. the fitted curve is selected as a new first/last point of the path and the fitting algorithm is run a second time. In this way, if the closed path has to be fitted to two or more Bézier curves, the split point will be the one of highest discontinuity, not a randomly chosen one.

4 Experiments

4.1 Line extraction

Assessing the accuracy of a line extraction method is not an easy task. Results can be subjectively considered as good or bad, depending on the final objective of the overall system. Having in mind our final objective (sketch vectorization of hand-drawn samples for fashion industry), we started by using a large dataset of hand-drawn shoe sketches (courtesy of Adidas AG™TM). These sketches have been drawn from expert designers using different pens/pencils/tools, different styles and different backgrounds (thin paper, rough paper, poster board, etc.). Each image has its peculiar size and resolution, and has been taken from scanners or phone cameras.

However, this dataset does not come with a manual ground truth, preventing us to conduct a quantitative (together with a qualitative or visual) evaluation of the results. Manually segmenting complex hand-drawn images such as that reported in Fig. 20, last row, to obtain the ground truth to compare with, is not only tedious, but also very prone to subjectivity. Therefore, we searched for large and public datasets (possibly with an available ground truth) to be used in this evaluation. One possible solution is the use of the SHREC13 - “Testing Sketches” dataset [16], whereas alternatives are Google Quick Draw and Sketchy dataset [24]. SHREC13 contains very clean, single-stroke “hand-drawn” sketches (created using a touch pad or mouse), such as those reported in Fig. 21a and c. It is a big and representative dataset: it contains 2700 sketches divided in 90 classes and drawn by several different authors. Unfortunately, these images are too clean to really challenge our algorithm, resulting in almost perfect results. The same can be said for Quick Draw and Sketchy datasets.

Comparisons for the line extraction step: an image from our inverse dataset - first column; a random sketch from the internet, author: Michael Bencik - second column; a real Adidas AGTM hand drawn design - third column. Results are obtained from: a commercial tool in second row, two state of the art algorithms ([27] in third row and [26] in fourth row), and our method in the bottom row

Examples of the “inverse dataset” sketches created from SHREC13 [16]

To fairly evaluate the ability of our and other algorithms to extract lines in more realistic situations, we have created a “simulated” dataset, called inverse dataset. The original SHREC13 images are used as ground truth and processed with a specifically-created algorithm with the aim of “corrupting” them and recreating as closely as possible different drawing styles, pencils and paper sheets. More specifically, this algorithm randomly selects portions of each ground truth image, and moves/alters them to generate simulated strokes of different strength, width, length, orientation, as well as multiple superimposed strokes, crossing and broken lines, background and stroke noise. The algorithm for inverse dataset creation works as follows:

-

Select any of the SHREC13 images, resize it to 2000x2000 and convert the image to one-pixel thinned paths. Paths are white, while image background is black.

-

Select a random circle from that image. The circle center is randomly chosen from the whole image coordinates, and circle diameter is selected as random_uniform(135, 195). This should simulate a typical pencil trait length.

-

Choose a random brush type, brush width and pressure. Brush type can be ball shaped (pen) or bell shaped (pencil). Brush width is selected as random_uniform(4.0, 20.0). Pressure is selected as random_uniform(0.3, 1.7)

-

Paint the selected circle to the output image. This is done by blurring the thinned input ground truth with the ball or bell shaped tool (convolution filter). This blurred portion circle of the input image is copied over the output painting, multiplying it with the value of pressure. A lower pressure is applied to the start and end portion of each trait (first-last 10%); usually this is the case with real traits.

-

To better simulate hand strokes uncertainty and multiple strokes, a random shift and rotation is applied before drawing the trait to the output (an x, y translation of random_uniform(− 15, + 15) pixels, a rotation of random_uniform(− 5, + 5) degrees). The random shifted trait is added over the output image.

-

This process is repeated until the whole image has been copied (choosing many random circles). An additional constraint is applied: allowing for a maximum of multiple strokes drawn over the same portion of output image. For example, we choose a maximum of 3-5 overlapping strokes (three as a general rule, five allowed if needed to complete copying the last missing bits of the input image).

-

After the image has been completely copied, a final global phase is performed to apply multiple kinds of global noise. This is done to simulate: pencil tip roughness, paper porosity, paper non-uniform background. We define a Gaussian noise image GNI(σ) as follows: create a uniform noise image assigning a random_uniform(0.0, 1.0) to each pixel. Smooth that image with a Gaussian filter of given σ, and normalize the resulting image to have mean and deviation of 0 and 1. It is worth noting that this noise should challenge our Gaussian correlation matching. Then, we define a exponential Gaussian noise image EGNI(σ, s) pixel per pixel as following:

$$ EGNI(\sigma, s)_{x, y} = s^{GNI(\sigma)_{x, y}} $$(6)∀x, y composing GNI. This form of exponential noise is useful when multiplying different noises together, as s models the noise strength. As a consequence:

-

Pencil roughness is simulated by multiplying the traits with a EGNI(σ = 0.5, s = 1.5). Only traits are multiplied, by applying a threshold in order to detect traits.

-

Paper porosity is simulated by multiplying the image with a EGNI(σ = 1.0, s = 1.2).

-

Paper non-uniform background is simulated by multiplying the image with a EGNI(σ = 101.0, s = 2.0). In this case, before applying EGNI to the image, EGNI is previously eroded with a morphological operator (gray-levels erosion) of diameter 71.0 to achieve more uniform, smoothed results.

-

-

At this point the simulated pencil sketch has been obtained and it just needs to be scaled in the 0-255 domain (128 mean) and inverted (usually we want white background and dark strokes).

The resulting database has the same size as the original SHREC13 (2700 images), and each picture has been resized to 4 MegaPixels to better simulate real world pencil sketches. Example results (used as inputs for our experiments) are reported in Fig. 21b and d.

We performed visual/qualitative comparisons of our system with the state-of-the-art algorithms reported in [27] and in [26], and with the Adobe IllustratorTM’s tool “Live Trace” using different input images. Regarding the algorithm in [27], we used their nice online tool where users can upload their own sketches and get the resulting simplified image back for comparison purposes. For [26] we compared using their github code. Results are reported in Fig. 20, where it is rather evident that our method performs better than the compared methods in complex and corrupted areas.

To obtain quantitative evaluations we used the “inverse dataset”. Precision and recall in line extraction and the mean Centerline Distance, similar to the notion of Centerline Error proposed in [20], are used as performance metrics. The results are shown in Table 1. Our method outperforms Live Trace and strongly beats [27] in terms of recall, while nearly matching its precision. This can be explained by the focus we put in designing a true image color/contrast-invariant algorithm, also designed to work at multiple resolutions and stroke widths. Simo-Serra et al. low recall performance is probably influenced by the selection of training data (somewhat specific) and the data augmentation they performed with Adobe IllustratorTM (less generalized than ours). They changed this method in their second work [26], obtaining better performance mostly by improving recall. Still, their method exhibits a considerably lower recall than our proposed method. This results in a global F-measure of 97.8% for our method w.r.t. 87.0% of [27] and 90.8% of [26].

Finally, we compared the running times of these algorithms. We run our algorithm and “Live Trace” using an Intel Core i7-6700 @ 3.40Ghz, while the performance of [27] are extracted from the paper. The proposed algorithm is much faster than Simo-Serra et al. method (0.64 sec per image on single thread instead of 19.46 sec on Intel Core i7-5960X @ 3.00Ghz using 8 cores), and offers performance within the same order of magnitude of Adobe IllustratorTM Live Trace (which on average took 0.5 sec). The theoretical complexity of our algorithm is \(O({w_{max}}^{2} * log(w_{max}) * n)\), where \(w_{max}\) is the maximum line width to be detected, and n is the total number of pixels of the image. It is also worth noting that [26] work is quite heavy on RAM usage, in our experience the simplification code needed more than 15 GB of dynamic memory, while our proposed method is very memory efficient (< 100 MB).

4.2 Unbiased thinning

In order to assess the quality of the proposed unbiased thinning algorithm, a qualitative comparison with other two thinning algorithms is shown in Fig. 22. The other two algorithms are: [33], as a standard, robust, iterative algorithm, and K3M [23], which represents the state of the art for unbiased thinning. In the literature, the standard testing scenario for thinning algorithms is typewriting fonts.

It is quite evident that the proposed algorithm is able to correctly thin the most difficult parts of the characters, in particular along “N” steep angles, “X” crossing, and “Y” intersection, where it reconstructs the original structure of the letters with much more precision than the other algorithms.

Moreover, in all of the examples our algorithm associates the robustness of a standard algorithm (like Zhang-Suen), with the complete removal of biases, and has the additional benefit of working with shapes of arbitrary dimension. In fact, the test images range from the dimensions of 120 to 200 font points. K3M, on the other hand, has been designed to reduce bias but does not show any appreciable result with these shapes; this is because it has been designed to work with very small fonts (< 20 pts), and provides limited benefits for larger shapes.

For shapes that are already almost thin, with no steep angles (like Dijkstra cursive font - second row), the thinning results are similar for all the algorithms.

The unbiasing correction works better when the shapes to be thinned have a constant width (as in Arial and TwCen fonts). If the width of the line changes strongly within the shape, as in Times New Roman (and in general Serif fonts, that are more elaborated), the unbiasing correction is much more uncertain to perform, and our results are similar to the standard thinning by Zhang-Suen.

We can also note that K3M has some stability problems if the shape is big and has aliased contours, such as the rotated Times New Roman. In that scenario it creates multiple small fake branches.

For every example, our algorithm used a cord length accumulation with size of 15 points.

Finally, thinning speed is very similar to the one from [33], on which our algorithm relies, with just a 1-2% variation.

4.3 Vectorization algorithm

We improved Schneider’s algorithm mainly regarding the quality of the vectorized result. In particular, our objective was to reduce the total number of control points in the vectorized representation, thus creating shapes that are much easier to modify and interact with. This is sometimes called lines “weight”.

Figure 23 shows that we successfully reduced the number of control points w.r.t. Schneider’s original algorithm with a percentage that ranges from 10% to 30% less control points, depending on the maximum desired error. This nice property of fewer control points comes at the cost of increased computational time. In fact, the total run time has increased, but is still acceptable for our purposes: standard Schneider’s algorithms takes about 1 - 4 seconds to vectorize a 5 MegaPixels sketch on a Intel Core i7-6700 @ 3.40Ghz, whereas the improved version takes 2 - 15 seconds. Moreover, Schneider’s algorithm total running time is heavily dependent on the desired error (quality), while our version depends mainly on the specified maximum number of iterations. For our experiments we set the number of iterations dynamically for each path to its own path length, in pixels (short paths are optimized faster than long paths).

Four example portions of vectorization. a and c use Schneider’s stock algorithm, b and d use our improved version. Our algorithm considerably reduces the number of control points for both cases (err = 3 and err = 6), without decreasing result quality. For all the examples the maximum number of iterations has been dynamically set for each path to its own length in pixels (1 iteration per pixel)

Tables 2 and 3 show some quantitative analyses about execution times and number of control points obtained. They also show that we could stick with 0.1 iterations per pixel and achieve execution times very similar to the original Schneider’s version, while still reducing the number of control points considerably.

Finally, Figs. 24 and 25 report some challenging cases in which the final vectorization result of our system is reported.

5 Conclusions

The proposed system proved its correctness and viability at treating different input formats for complex hand-drawn sketches. It has been tested with real fashion sketches, artificial generated pictures with added noise, as well as random subject sketches obtained from the web.

The line extraction algorithm outperforms the state of the art in recall, without sacrificing precision. It is also faster, more accurate and easier to adapt to one’s needs to due to the modular nature of the system.

The unbiased thinning helps in representing shapes more accurately. It has proven to be better than the existing state-of-the-art approaches in retaining detail, in particular with difficult, sharp shapes. Still, it exhibits the same speed and robustness of a classical thinning strategy.

The discussed path extraction algorithm provides a complete treatment of the conversion of thinned images into a more suitable, compact representation.

Finally, Schneider’s algorithm for vectorization has been improved in the quality of its results. Experiments show a noticeable reduction in the number of generated control points (by a 10-30% ratio), while keeping good runtime performance.

In conclusion, the current version of the proposed framework has been made available as an Adobe Illustrator plugin to several designers at Adidas exhibiting excellent results. This further demonstrates its usefulness in real challenging scenarios.

References

Bartolo A, Camilleri KP, Fabri SG, Borg JC, Farrugia PJ (2007) Scribbles to vectors: preparation of scribble drawings for cad interpretation. In: Proceedings of the 4th Eurographics workshop on Sketch-based interfaces and modeling, pp 123–130. ACM

Bessmeltsev M, Solomon J (2018) Vectorization of line drawings via polyvector fields. arXiv:1801.01922

Bo P, Luo G, Wang K (2016) A graph-based method for fitting planar b-spline curves with intersections. J Comput Des Eng 3(1):14–23

Chen J, Guennebaud G, Barla P, Granier X (2013) Non-oriented mls gradient fields. In: Computer graphics forum, vol 32, pp 98–109. Wiley online library

Chen YS (1996) The use of hidden deletable pixel detection to obtain bias-reduced skeletons in parallel thinning. In: Proceedings of the 13th international conference on pattern recognition, vol 2, pp 91–95. IEEE

Dori D, Liu W (1999) Sparse pixel vectorization: an algorithm and its performance evaluation. IEEE Trans Pattern Anal Mach Intell 21(3):202–215

Favreau JD, Lafarge F, Bousseau A (2016) Fidelity vs. simplicity: a global approach to line drawing vectorization. ACM Trans Graph (TOG) 35(4):120

Fišer J, Asente P, Schiller S, Sỳkora D (2016) Advanced drawing beautification with shipshape. Comput Graph 56:46–58

Gonzalez RC, Woods RE (2007) Digital image processing 3rd edition

Han JH, Poston T (2001) Chord-to-point distance accumulation and planar curvature: a new approach to discrete curvature. Pattern Recogn Lett 22(10):1133–1144

Hilaire X, Tombre K (2006) Robust and accurate vectorization of line drawings. IEEE Trans Pattern Anal Mach Intell 28(6):890–904

Igarashi T, Matsuoka S, Kawachiya S, Tanaka H (2006) Interactive beautification: a technique for rapid geometric design. In: ACM SIGGRAPH 2006 courses, p 8. ACM

Kang H, Lee S, Chui CK (2007) Coherent line drawing. In: Proceedings of the 5th international symposium on non-photorealistic animation and rendering, pp 43–50. ACM

Lambert JH (1760) Photometria: sive de mensvra et gradibvs lvminis, colorvm et vmbrae. sumptibus vidvae E. Klett typis CP Detleffsen

Lewis JP (1995) Fast normalized cross-correlation. Vision Interface 10(1):120–123

Li B, Lu Y, Godil A, Schreck T, Aono M, Johan H, Saavedra JM, Tashiro S (2013) SHREC’13 track: large scale sketch-based 3D shape retrieval

Lindeberg T (1998) Edge detection and ridge detection with automatic scale selection. Int J Comput Vis 30(2):117–156

Liu X, Wong TT, Heng PA (2015) Closure-aware sketch simplification. ACM Trans Graph (TOG) 34(6):168

Lowe DG (1999) Object recognition from local scale-invariant features. In: International conference on computer vision, 1999, pp 1150–1157. IEEE

Noris G, Hornung A, Sumner RW, Simmons M, Gross M (2013) Topology-driven vectorization of clean line drawings. ACM Trans Graph (TOG) 32(1):4

Orbay G, Kara LB (2011) Beautification of design sketches using trainable stroke clustering and curve fitting. IEEE Trans Vis Comput Graph 17(5):694–708

Otsu N (1975) A threshold selection method from gray-level histograms. Automatica 11(285-296):23–27

Saeed K, Tabędzki M, Rybnik M, Adamski M (2010) K3m: a universal algorithm for image skeletonization and a review of thinning techniques. Int J Appl Math Comput Sci 20(2):317–335

Sangkloy P, Burnell N, Ham C, Hays J (2016) The sketchy database: Learning to retrieve badly drawn bunnies. ACM Trans Graph (TOG) 35(4):119:1–119:12

Schneider PJ (1990) Graphics gems. chap. An algorithm for automatically fitting digitized curves, pp 612–626. Academic Press Professional, Inc., San Diego

Simo-Serra E, Iizuka S, Ishikawa H (2018) Mastering sketching: adversarial augmentation for structured prediction. ACM Trans Graph (TOG) 37(1):11

Simo-Serra E, Iizuka S, Sasaki K, Ishikawa H (2016) Learning to simplify: fully convolutional networks for rough sketch cleanup. ACM Trans Graph (SIGGRAPH) 35(4):121

Song J, Su F, Tai CL, Cai S (2002) An object-oriented progressive-simplification-based vectorization system for engineering drawings: model, algorithm, and performance. IEEE Trans Pattern Anal Mach Intell 24(8):1048–1060

Steger C (1998) An unbiased detector of curvilinear structures. IEEE Trans Pattern Anal Mach Intell 20(2):113–125

Suzuki S, et al (1985) Topological structural analysis of digitized binary images by border following. Comput Vis Graph Image 30(1):32–46

Tsai DM, Lin CT (2003) Fast normalized cross correlation for defect detection. Pattern Recogn Lett 24(15):2625–2631

Zhang SH, Chen T, Zhang YF, Hu SM, Martin RR (2009) Vectorizing cartoon animations. IEEE Trans Vis Comput Graph 15(4):618–629

Zhang T, Suen CY (1984) A fast parallel algorithm for thinning digital patterns. Commun ACM 27(3):236–239

Acknowledgements

This work is funded by Adidas AG. We are really thankful to Adidas for this opportunity.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

About this article

Cite this article

Donati, L., Cesano, S. & Prati, A. A complete hand-drawn sketch vectorization framework. Multimed Tools Appl 78, 19083–19113 (2019). https://doi.org/10.1007/s11042-019-7311-3

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-019-7311-3