Abstract

In this paper, we propose a fast and easy-to-use projector calibration method that requires only a minimal set of input data, thus reducing the calibration time. The method is based on the Direct Linear Transformation (DLT) mathematical model, which makes it simple and fully automatic. We show the application of this method on cylindrical surfaces, as well as some real application examples. The results show that with the minimum configuration of 6 control points (CPs), the standard deviation in the projector positioning yielded by the calibration process is less than one per cent of the position values.



1 Introduction

Over the last few years, a variety of geometry-based proposals have been made to calibrate projectors, a procedure that is crucial for applications such as multi-projector visualization on large screens [1, 5, 6], videogrammetry [13], and 3D object reconstruction in structured light scanning [11, 12, 18, 19]. The most widespread calibration methods use planar homographies supported by physical planar surfaces with known patterns (e.g. checkerboards, grids of dots) [14, 15, 17, 20]. In these methods, the geometric correction and alignment are carried out by means of planar homography transformations between the planar surface, the projector frame buffers and the images of one or more cameras observing the surface. To that end, a set of physical and projected points is considered, whose 2D and 3D coordinates are either known beforehand or computed during the calibration procedure. The image-based recognition of the known patterns, either alone or in combination with other planar surfaces, is used to automatically measure 2D points and then establish 2D-to-3D correspondences. Consequently, in this kind of method the physical surface with the known pattern has to be moved and rotated into different spatial positions (at least 10 different positions are recommended) to complete the calibration procedure.

Nevertheless, the use of planar surfaces is not always feasible, especially when space or environmental restrictions arise. For instance, calibrating a projector located 3 m high would require a planar surface as large as the projected image at the working distance, which would have to be moved to 10 different positions; this makes such calibration methods impractical. To avoid this kind of restriction, other calibration methods using cylindrical surfaces have been proposed, such as those described in [7, 25, 26]. Nevertheless, projector calibration is still an open issue, since the restrictions encountered in real scenarios often require ad-hoc solutions.

In this paper, we propose a new calibration method based on the well-known Direct Linear Transformation (DLT) equations to calibrate projectors using cylindrical surfaces. For evaluation purposes, we have dealt with a real-case scenario with specific restrictions: a typical “Visionarium”, a room for visualizing 3D environments with a cylindrical projection screen, located on the premises of the University of Valencia. In this facility, the curved image is composed of the projections of three projectors located at a height of 5 m. The image of the central projector is projected entirely onto the screen, whereas the images of the two side projectors are only partially projected onto it. We first show the application of this method on cylindrical surfaces. The calibration results show that with the minimum configuration of 6 control points (CPs), the standard deviation in the projector positioning yielded by the calibration process is less than one per cent of the position values. We also show the application of the proposed method to some real application examples.

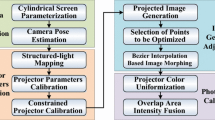

The rest of the paper is organized as follows: Section 2 presents related work. Section 3 introduces the proposed calibration method, and Section 4 presents its validation. Next, Section 5 describes some real use cases of the proposed method. Finally, Section 6 presents some concluding remarks.

2 Related work

Projectors are key elements in many different applications, such as scientific visualization, virtual and augmented reality, structured light techniques and other visually intensive applications. The geometric calibration of a projector can be based either on mechanical and electronic alignment or on vision-based indirect methods, involving one or more cameras that observe a set of projected images [2]. Since the latter strategy requires no special infrastructure or resources apart from one or two cameras, many vision-based techniques have been proposed in recent years for the geometric calibration of projectors. Many of these techniques use planar screens as physical surfaces. In these cases, the automated geometric correction and alignment are simplified by the use of planar homographies between the physical surface, the projector frame buffers, and the images of one or more cameras observing the surface [3, 16, 22].

Other works use non-planar surfaces to achieve calibration. One of the first works in the literature used a 3D calibration pattern with spatially known control points (CPs) and two cameras, which were fully calibrated by means of projective geometry [23]. Other authors incorporate structured light techniques into the calibration process. For example, [27] presents an approach that allows one or more projectors to display an undistorted image on a surface of unknown geometry. In this case, patterns commonly used in structured light techniques are used to compute the relative geometric relationships between camera and projector, thus avoiding an explicit calibration. A different method for projecting images without distortion onto a developable surface (producing a wallpaper effect) is presented in [7]. In this case, the correspondences between a camera and a projector are computed by projecting a sequence of bar code images (between 8 and 12) of increasingly fine spatial frequency, so that the projector coordinates corresponding to the camera pixels are temporally encoded.

It is also worth citing the approach introduced by Sajadi and Majumder [24, 25], where spatial geometric relationships are established to derive the exterior orientation of a camera (whose interior orientation is assumed to be known), from which the interior and exterior orientation of a projector are computed.

Finally, our previous work [21] proposes a calibration method that combines surveying, photogrammetry and image processing approaches, and that was designed by considering the spatial restrictions of virtual reality simulators. However, that method requires prior laboratory work to obtain the internal calibration of the camera. Moreover, the camera usually needs to be recalibrated periodically, and the method requires the optical zoom to be the same as that used in the internal calibration of the camera.

In the next sections, we present a projector calibration method that greatly simplifies the calibration process and can be applied to cylindrical surfaces. The proposed method eliminates the need for prior laboratory work and fully exploits the advantages of the DLT, as shown in the next section. Combined with a fixed calibration bench whose points are measured with a total station, the method allows the calibration of an unlimited number of projectors.

3 Six Control Points Method (SCPM)

The aim of our research is to design a fast and easy-to-use method that provides the geometric calibration of both the camera and the projector from the smallest possible set of input data, in order to reduce the calibration time. Our method combines the ease of establishing 2D-3D correspondences with checkerboard-based patterns from only two images (one of the background and one of a projected pattern) with the simplicity of the DLT formulation. The Direct Linear Transformation has traditionally been used in camera calibration, and our previous experience with the DLT [21] shows that it has several advantages. First, it defines a linear relationship between the image points and the points in the real world, which leads to a simple mathematical formulation. Second, the focal length may be unknown and may vary between images. This is especially advantageous when several projectors with different characteristics must be calibrated simultaneously, as in spatial augmented reality applications. Third, the origin of the image coordinate system can be arbitrary, which means that the system can be calibrated even when only part of the image is used. For projectors, this means that they can be calibrated with zoom applied. Thus, we propose to use the DLT for projector calibration as well. Moreover, we propose a double application of the DLT: once for calibrating the camera and once for calibrating the projector. In this way, the SCPM benefits from the advantages of the DLT twice. In the end, two optical devices are calibrated almost simultaneously, without needing to know the internal calibration of either of them in advance (unlike other calibration methods), with a very simple mathematical formulation and in a short time (since only six points need to be selected, in a semi-automatic way).

The proposed method is based on projective geometry, similar to that presented in [23], but uses one camera instead of two and assumes that the projection surface is a vertical cylinder, a common case in many virtual reality systems. The mathematical background relies on the Direct Linear Transformation (DLT) model [4, 9], which gives both simplicity and robustness to our implementation, because the model is easy to implement and the results can be refined through a least-squares procedure if redundant observations (more CPs than the minimum required) are available.

Our method assumes that the camera and the projector are linear devices with no radial distortion and that the projector can be treated as equivalent to a pin-hole camera. The underlying mathematical formulation is the DLT, applied twice: once to derive the camera parameters and once to derive the projector parameters. The DLT is formulated in (1) and (2), where x and y denote the observed 2D image coordinates of a given point, X, Y, Z denote the 3D spatial coordinates of that point, and the terms ai, bi and ci denote the 11 DLT parameters for a particular image. Since each observed point yields 2 equations, a minimum of 6 points (12 equations) is needed to solve for the 11 unknown DLT parameters.
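For reference, the standard 11-parameter DLT equations are written below in the notation just introduced; we assume that (1) and (2) use this usual normalization, in which the constant term of the common denominator is fixed to 1.

```latex
x = \frac{a_1 X + a_2 Y + a_3 Z + a_4}{c_1 X + c_2 Y + c_3 Z + 1}, \qquad
y = \frac{b_1 X + b_2 Y + b_3 Z + b_4}{c_1 X + c_2 Y + c_3 Z + 1}
```

Counting the parameters gives four a terms, four b terms and three c terms, i.e. the 11 unknowns mentioned above.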

To solve for the 11 unknowns of the DLT, a matrix system can be built as shown in (3) and solved by least-squares fitting. The elements of the matrix A and the vector k for the first CP are given in (4) and (5), respectively; r is the vector of residuals and x is the vector of unknowns, i.e. the 11 DLT parameters.
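The construction of A and k and the least-squares solution can be sketched in Python with NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the function names `solve_dlt` and `project_dlt` are hypothetical, the parameters are ordered a1 to a4, b1 to b4, c1 to c3, and each CP contributes the two rows obtained by multiplying (1) and (2) through by their common denominator.

```python
import numpy as np


def solve_dlt(object_pts, image_pts):
    """Least-squares estimate of the 11 DLT parameters from n >= 6 CPs.

    object_pts: (n, 3) array of X, Y, Z; image_pts: (n, 2) array of x, y.
    Returns (params, residuals); params are ordered a1..a4, b1..b4, c1..c3.
    """
    obj = np.asarray(object_pts, dtype=float)
    img = np.asarray(image_pts, dtype=float)
    if len(obj) < 6:
        raise ValueError("at least 6 control points are required")
    rows, k = [], []
    for (X, Y, Z), (x, y) in zip(obj, img):
        # x * (c1 X + c2 Y + c3 Z + 1) = a1 X + a2 Y + a3 Z + a4, rearranged
        rows.append([X, Y, Z, 1.0, 0.0, 0.0, 0.0, 0.0, -x * X, -x * Y, -x * Z])
        k.append(x)
        # the same rearrangement for the y equation
        rows.append([0.0, 0.0, 0.0, 0.0, X, Y, Z, 1.0, -y * X, -y * Y, -y * Z])
        k.append(y)
    A = np.array(rows)
    k = np.array(k)
    params, *_ = np.linalg.lstsq(A, k, rcond=None)
    residuals = A @ params - k
    return params, residuals


def project_dlt(params, object_pts):
    """Map 3D points to 2D image coordinates with the DLT equations."""
    p = np.asarray(params, dtype=float)
    a, b, c = p[0:4], p[4:8], p[8:11]
    pts = np.asarray(object_pts, dtype=float)
    den = pts @ c + 1.0
    return np.column_stack([(pts @ a[:3] + a[3]) / den, (pts @ b[:3] + b[3]) / den])
```

With exactly six CPs the system has 12 equations for 11 unknowns; additional CPs simply append rows to A and k, and `lstsq` then returns the least-squares solution.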

Once these equations are solved, computing the projector orientation is straightforward, since the 11 DLT parameters are related to the six parameters of exterior orientation (coordinates X0, Y0, Z0 and angles κ, φ, ω) and to the five elements of interior orientation (principal point coordinates x0, y0, focal length c, relative y-scale λ and shear d) [4, 10]. Since each observed point (with a 2D-3D correspondence) gives two equations and there are 11 unknowns, solving these equations for a minimum of 6 observed points yields the interior and exterior orientation of the sensor (camera or projector). If more points are available, the system can be solved with a least-squares procedure. Finally, it is worth mentioning that the DLT fails if all CPs lie in a single plane. This situation cannot occur in our case, because the CPs lie on a vertical cylinder at three horizontal positions that are not collinear, so the six CPs are never coplanar.
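Using the standard relations between the DLT parameters and the central projection, the projection center and the principal point can be recovered in closed form. The sketch below (hypothetical function names) assumes zero shear when computing the focal length along x, returns the focal length along y separately so that the relative y-scale λ can be formed as their ratio, and does not extract the rotation angles κ, φ, ω.

```python
import numpy as np


def projection_center(params):
    """Projection center (X0, Y0, Z0) from the 11 DLT parameters.

    The center is the point where both numerators and the common denominator
    of the DLT equations vanish, i.e. the solution of M @ C = -[a4, b4, 1].
    """
    a1, a2, a3, a4, b1, b2, b3, b4, c1, c2, c3 = params
    M = np.array([[a1, a2, a3], [b1, b2, b3], [c1, c2, c3]], dtype=float)
    return np.linalg.solve(M, -np.array([a4, b4, 1.0]))


def principal_point_and_focal(params):
    """Principal point (x0, y0) and focal lengths (cx, cy) in pixels.

    cx assumes zero shear; cy does not depend on the shear term.
    """
    p = np.asarray(params, dtype=float)
    a, b, c = p[0:3], p[4:7], p[8:11]
    L = c @ c
    x0 = (a @ c) / L
    y0 = (b @ c) / L
    cx = np.sqrt((a @ a) / L - x0 ** 2)
    cy = np.sqrt((b @ b) / L - y0 ** 2)
    return x0, y0, cx, cy
```

The relations follow from writing the left 3×3 block of the DLT matrix as a scaled product of the calibration matrix and a rotation, whose rows are orthonormal.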

The input data of the SCPM calibration method are: four measured distances (a, b, c and h in Fig. 1, from which the coordinates of the six required CPs are obtained), the image of a checkerboard pattern, a camera image of the projected checkerboard pattern, and a camera image of the projection screen. The screen is assumed to be a vertically aligned cylinder.

The first step of the method is to compute the 2D-3D camera correspondences of a minimum of six CPs. The radius r of the cylinder and the 3D coordinates of the six CPs are computed from the four measured distances. To this end, the six CPs must be located as shown in Fig. 1: vertically aligned in pairs and horizontally aligned in threes. In this way, the triangles formed by the three upper and the three lower points are equal, and the vertical distance between the points of each pair is a constant value, h. The radius r of the vertical cylinder is calculated from this triangle. Then, a coordinate system is established with its origin at the center of the circle defining the cylinder, in the same horizontal plane as the three lower CPs, and with the Y axis in the vertical direction. With r, the triangle and h known, computing the 3D coordinates of the CPs is straightforward. On the other hand, the image coordinates of the CPs are measured in the image acquired by the camera. This measurement can be either automatic or manual: the implementation includes a method to automatically find round CPs, but where artificial CPs cannot be placed on the screen, any physical feature that can act as a control point can be selected manually.
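As an illustration, the radius and the CP coordinates can be computed as below. We assume, since Fig. 1 is not reproduced here, that a, b and c are the horizontal chord lengths between the three CP columns, so that r is the circumradius of the triangle (Heron's formula). The rotation of the X and Z axes about the vertical is an arbitrary choice in this sketch, and the function names are hypothetical.

```python
import math


def circumradius(a, b, c):
    """Radius of the circle through a triangle with side lengths a, b, c."""
    s = 0.5 * (a + b + c)
    area = math.sqrt(s * (s - a) * (s - b) * (s - c))  # Heron's formula
    return a * b * c / (4.0 * area)


def control_points(a, b, c, h):
    """Radius and 3D coordinates of the six CPs (origin on the axis, Y up).

    Assumption: a = |P1P2|, b = |P2P3|, c = |P1P3| are horizontal chords and
    h is the vertical distance between the lower and upper rows of CPs.
    """
    # Triangle in the horizontal (X, Z) plane: P1 at the origin, P2 on the X axis.
    x3 = (a * a + c * c - b * b) / (2.0 * a)
    z3 = math.sqrt(c * c - x3 * x3)
    tri = [(0.0, 0.0), (a, 0.0), (x3, z3)]
    # Circumcenter of the triangle, i.e. the trace of the cylinder axis.
    cx = a / 2.0
    cz = (c * c - a * x3) / (2.0 * z3)
    lower = [(x - cx, 0.0, z - cz) for x, z in tri]
    upper = [(x - cx, h, z - cz) for x, z in tri]
    return circumradius(a, b, c), lower + upper
```

For instance, `control_points(3.0, 4.0, 5.0, 1.0)` gives r = 2.5, since a 3-4-5 triangle is right-angled and its circumradius is half the hypotenuse.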

The second step consists of computing the interior and exterior orientation parameters of the camera. In this step, the DLT equations (1) and (2) are applied to the 2D-3D correspondences of the CPs obtained in the first step. Since the 11 resulting parameters are directly related to the equations of central projection, the interior and exterior orientation parameters of the camera can then be computed from them.

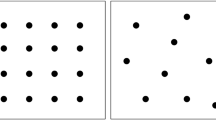

The third step is to compute the camera image coordinates of a projected checkerboard pattern. The checkerboard pattern is projected and captured by the camera. The background image is subtracted from the captured image to remove the CPs and other artifacts, and the result is thresholded. The corners of the squares are then automatically detected with sub-pixel accuracy by an image-processing algorithm based on the OpenCV library [8]. Figure 2 shows the background image and the image of the projected checkerboard-like pattern with the detected points. These points form the array of camera image coordinates of the checkerboard, which is used in the following step to derive the corresponding 3D coordinates.
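A minimal NumPy sketch of the background subtraction and thresholding stage is shown below; the function name and the threshold value are hypothetical. CPs and other features present in both images cancel out, while the bright squares of the projected pattern remain. Corner detection itself would then be delegated to OpenCV, for instance with `cv2.findChessboardCorners` followed by `cv2.cornerSubPix` for sub-pixel refinement, which is not reproduced here.

```python
import numpy as np


def isolate_pattern(background, pattern_img, threshold=40):
    """Subtract the background image and binarize the result.

    background, pattern_img: greyscale uint8 arrays of the same shape.
    Returns a uint8 image that is 255 where the projected pattern is brighter
    than the background by more than `threshold`, and 0 elsewhere.
    """
    # Work in a signed type so that the subtraction cannot wrap around.
    diff = pattern_img.astype(np.int16) - background.astype(np.int16)
    diff = np.clip(diff, 0, 255)
    return np.where(diff > threshold, 255, 0).astype(np.uint8)
```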

Figure 3 illustrates the spatial positioning of devices and 3D points. In this figure, the black dots represent the camera projection center; the cyan dot represents the projector projection center; the red dots represent the CPs at the cylindrical surface; the green dots represent the checkerboard points at Z = 0, and finally the blue dots represent the checkerboard points at the cylindrical surface. The fourth step consists of obtaining the 3D coordinates of the projected checkerboard on the cylinder.

As Fig. 3 shows, each projected point lies on a spatial ray that starts at the camera projection center, passes through the corresponding checkerboard point in the image plane of the camera, and intersects the vertical cylinder (which has already been defined mathematically). Since the interior orientation and the projection center of the camera are known at this point, such rays can be traced from the camera projection center through the image plane of the camera. The points where these rays intersect the vertical cylinder are the 3D coordinates of the projected checkerboard that we are looking for. To trace rays from the camera projection center in a given spatial direction, equations (1) and (2) can be rearranged as the equation system (6). This system can be solved by fixing, e.g., Z = 0 and taking X and Y as the unknowns, which produces the green dots in Fig. 3. Then, the spatial rays traced from the camera projection center through each of these points intersect the cylinder at the blue dots in Fig. 3.
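The two operations of this step can be sketched as follows, assuming that (6) is the usual rearrangement of (1) and (2) into (a1 − x c1) X + (a2 − x c2) Y + (a3 − x c3) Z = x − a4 and (b1 − y c1) X + (b2 − y c2) Y + (b3 − y c3) Z = y − b4, and that the cylinder is X² + Z² = r² in the frame defined in the first step. When the camera is inside the cylinder, or views its concave side from outside, the screen point is the farther of the two intersections, which is the root returned here. Function names are hypothetical.

```python
import numpy as np


def point_on_plane_z0(params, x, y):
    """Solve the rearranged DLT equations for (X, Y) with Z fixed to 0."""
    a1, a2, a3, a4, b1, b2, b3, b4, c1, c2, c3 = params
    M = np.array([[a1 - x * c1, a2 - x * c2],
                  [b1 - y * c1, b2 - y * c2]], dtype=float)
    rhs = np.array([x - a4, y - b4], dtype=float)
    X, Y = np.linalg.solve(M, rhs)
    return np.array([X, Y, 0.0])


def intersect_vertical_cylinder(origin, through, radius):
    """Intersect the ray origin -> through with the cylinder X^2 + Z^2 = r^2.

    Returns the intersection farthest along the ray (the concave screen side),
    or None if the ray misses the cylinder or the hit lies behind the origin.
    """
    origin = np.asarray(origin, dtype=float)
    d = np.asarray(through, dtype=float) - origin
    # Only the horizontal components (X, Z) enter the cylinder equation.
    qa = d[0] ** 2 + d[2] ** 2
    qb = 2.0 * (origin[0] * d[0] + origin[2] * d[2])
    qc = origin[0] ** 2 + origin[2] ** 2 - radius ** 2
    disc = qb * qb - 4.0 * qa * qc
    if qa == 0.0 or disc < 0.0:
        return None
    t = (-qb + np.sqrt(disc)) / (2.0 * qa)
    if t <= 0.0:
        return None
    return origin + t * d
```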

The fifth step of the method is to compute the 2D-3D projector correspondences of the checkerboard points. To this end, we use an approach similar to the one described in the third step. However, in this step there is no need for background subtraction and thresholding, since the corners are detected directly on the digital checkerboard image sent to the projector. Figure 4 depicts an example of the result of this step.

Finally, the last step consists of computing the interior and exterior orientation parameters of the projector, following the same approach as in the second step. In this case, the 2D-3D correspondences are those derived in steps 4 and 5, with a total of 60 points (as shown in Fig. 4). Note that in this case the number of equations (60 × 2 = 120) is much higher than the number of unknowns (11), so a least-squares procedure can be applied.
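With 120 equations and 11 unknowns, the redundancy (2n − 11 = 109) also allows an a posteriori precision estimate. The sketch below is a standard least-squares adjustment, not a procedure described in the text, and its function name is hypothetical. It returns the standard deviation of unit weight sigma0 and the standard deviations of the 11 parameters. Note that the residuals of the linearized equations are image residuals multiplied by the DLT denominator, so sigma0 is only approximately in pixels.

```python
import numpy as np


def dlt_fit_with_precision(object_pts, image_pts):
    """Over-determined DLT fit with a posteriori precision estimates.

    Returns (params, sigma0, param_std) for n >= 6 points.
    """
    P = np.asarray(object_pts, dtype=float)
    p = np.asarray(image_pts, dtype=float)
    n = len(P)
    redundancy = 2 * n - 11
    if redundancy <= 0:
        raise ValueError("at least 6 points are required")
    zeros = np.zeros((n, 4))
    ones = np.ones((n, 1))
    # Rows for the x equations, then rows for the y equations.
    Ax = np.hstack([P, ones, zeros, -p[:, :1] * P])
    Ay = np.hstack([zeros, P, ones, -p[:, 1:2] * P])
    A = np.vstack([Ax, Ay])
    k = np.concatenate([p[:, 0], p[:, 1]])
    params, *_ = np.linalg.lstsq(A, k, rcond=None)
    r = A @ params - k
    sigma0 = np.sqrt(r @ r / redundancy)
    cov = sigma0 ** 2 * np.linalg.inv(A.T @ A)
    return params, sigma0, np.sqrt(np.diag(cov))
```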

4 Method validation

To validate the proposed method (SCPM), we conducted experiments to check how stable the computed interior and exterior orientation parameters of the projector are for different camera positions. We conducted two representative experiments, one in a laboratory and the other on a real Visionarium available at IRTIC, University of Valencia. We refer to the latter as the professional setup.

The laboratory setup consists of a home-made 180° cylindrical surface with a radius of 0.48 m and a height of 1.0 m, a Logitech webcam with a resolution of 640×480 pixels, and a Vivitek DLP multimedia projector with a resolution of 1280×800 pixels. A total of six black circular control points were attached to the surface. Distances a, b, c and h (from which the coordinates of the six CPs are derived, see Fig. 1) were measured manually with a measuring tape.

The camera was moved to 6 different positions, giving a total of 6 different trials. The results are shown in Tables 1 and 2. These tables include the values obtained for parameters X, Y and Z, in meters; for parameters κ, φ, ω, in gon; and for parameters x0, y0 and c, in pixels. As a measure of variability, the standard deviation of each parameter is included in both tables. Note that the exterior orientation parameters of the camera are also given, to show the camera locations in the different trials.

Tables 1 and 2 show that, when the camera is moved to different positions, the highest variability in the projector calibration is reached for the Z direction (14 mm), the κ parameter (0.0214 gon) and the y0 parameter (9.2 pixels). Nevertheless, these standard deviations represent only 2.8%, 0.36% and 0.91%, respectively, of the maximum values shown in the corresponding columns. Therefore, these results show that the SCPM yields a low level of variability while requiring minimal calibration effort.

The professional setup consists of a 180° SGI (Silicon Graphics) cylindrical surface with a radius of 3.75 m and a height of 3.0 m, and a Canon PowerShot G12 camera. The camera has a resolution of up to 10 MP (a 2816×2112 resolution setting was used) and integrated optics with focal lengths from 6.1 to 30.5 mm (the minimum value was used to obtain a wider field of view). Although the commercial cylindrical system has its own projectors, we used the same projector as in the laboratory setup in order to obtain comparable results. The surface had a 40×9 grid of small white circular control points (about 2.5 mm in radius) that were sensitive (and thus visible) under black light. Six of those points were taken as control points. Distances a, b, c and h were measured in the same way as in the laboratory setup.

As in the laboratory setup, the camera was moved to 6 different positions, giving a total of 6 different trials. The results are shown in Tables 3 and 4. These tables show that the projector calibration results obtained with the professional setup are not better than those of the laboratory setup. This is expected, because the professional surface (and thus the working volume) is larger than that of the laboratory setup, even though the camera of the professional setup has a higher resolution. In the professional setup, the largest variability again occurs in the Z direction (31 mm) and in κ (0.0049 gon), whereas x0 and y0 give similar results (4.7 and 4.5 pixels, respectively). It is also noteworthy that the interior orientation (IO) parameters of the camera show greater variability in the professional setup. One way of reducing this variability could be to increase the number of control points.

5 Application examples

5.1 Image warping

Once the projector orientation parameters have been obtained, any point at a known 3D position can be projected onto the cylindrical surface (whose geometry is known). In the same way, images can be mapped onto any known surface. As an example, Fig. 5 shows three different mappings: a wallpaper-like mapping (Fig. 5a), an orthogonal-like mapping (Fig. 5b) and a perspective-like mapping (Fig. 5c).

In all cases, the original image i is first scaled to i’ to fit the aspect ratio of the surface area onto which it is mapped. Then, the mapping transformation is applied. The wallpaper-like mapping is so called because it produces the same effect as if the image were printed on paper and attached to the cylindrical surface following its curvature. Figure 5a shows how the cylindrical surface is first unrolled into a planar surface onto which the image is orthogonally mapped; the surface with the attached image is then bent back into its cylindrical shape. In our implementation, the user can choose the height of the projection on the cylindrical surface and the horizontal angle (w) that it has to cover.
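A possible implementation of the wallpaper-like mapping is sketched below; the function names are hypothetical, the arc is assumed to be centered on the +Z axis, and w is given in radians. Each normalized coordinate (u, v) of i’ is sent to a 3D point on the unrolled arc, so that equal steps in u give equal arc lengths, and then into the projector frame buffer through the projector DLT parameters. The resulting mesh of projector coordinates could then be used to warp i’, e.g. by texture mapping.

```python
import numpy as np


def wallpaper_points(u, v, radius, angle_w, height, y_base=0.0):
    """Wallpaper-like mapping of normalized image coordinates onto the cylinder.

    u, v in [0, 1]: horizontal and vertical coordinates in the scaled image i'.
    angle_w: horizontal angle covered (radians); height: vertical extent.
    """
    u = np.asarray(u, dtype=float)
    v = np.asarray(v, dtype=float)
    theta = (u - 0.5) * angle_w  # arc angle proportional to u (unrolled surface)
    X = radius * np.sin(theta)
    Z = radius * np.cos(theta)
    Y = y_base + v * height
    return np.column_stack([X, Y, Z])


def project_dlt(params, pts):
    """Projector frame-buffer coordinates of 3D points via the DLT equations."""
    p = np.asarray(params, dtype=float)
    a, b, c = p[0:4], p[4:8], p[8:11]
    den = pts @ c + 1.0
    return np.column_stack([(pts @ a[:3] + a[3]) / den, (pts @ b[:3] + b[3]) / den])
```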

The orthogonal-like mapping represented in Fig. 5b produces the same effect as if the projection surface were planar when viewed from a certain distance. This kind of projection can be used to project planar images onto non-regular surfaces so that they do not appear distorted by the surface geometry. The user can choose the height and width that the projection has to cover on the cylindrical surface. Finally, the perspective-like mapping shown in Fig. 5c tries to reproduce human vision, and it is commonly used in virtual reality simulations. In this case, the user has to specify the coordinates of the projection center, the distance to a virtual projection plane, and the dimensions of that plane. Figure 5c shows two projection planes at different distances (d and d’) from the projection center, illustrating how much the projection on the cylindrical surface can change depending on this parameter.
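The orthogonal-like and perspective-like mappings can be sketched in the same way. In this hypothetical version, the orthogonal mapping moves plane points along +Z onto the half of the cylinder with Z > 0, and the perspective mapping casts a ray from the projection center through each point of the virtual plane (perpendicular to a horizontal viewing direction `forward`) and keeps the farther intersection with the cylinder. The resulting 3D points would then be sent to the projector with the DLT equations.

```python
import numpy as np


def orthogonal_points(xs, ys, radius):
    """Orthogonal-like mapping: move plane points (X, Y) along +Z onto the cylinder."""
    xs = np.asarray(xs, dtype=float)
    ys = np.asarray(ys, dtype=float)
    if np.any(np.abs(xs) > radius):
        raise ValueError("the requested width exceeds the cylinder diameter")
    return np.column_stack([xs, ys, np.sqrt(radius ** 2 - xs ** 2)])


def perspective_points(eye, forward, d, s, t, radius):
    """Perspective-like mapping: cast rays from the eye through a virtual plane.

    eye: projection center; forward: horizontal unit viewing direction;
    d: distance from the eye to the virtual plane; (s, t): horizontal and
    vertical offsets of points on that plane. Returns the farther intersection
    of each ray with X^2 + Z^2 = radius^2, or NaN where the ray misses.
    """
    eye = np.asarray(eye, dtype=float)
    fwd = np.asarray(forward, dtype=float)
    up = np.array([0.0, 1.0, 0.0])
    side = np.cross(up, fwd)  # horizontal axis of the virtual plane
    out = []
    for si, ti in zip(np.atleast_1d(s), np.atleast_1d(t)):
        ray = d * fwd + si * side + ti * up
        qa = ray[0] ** 2 + ray[2] ** 2
        qb = 2.0 * (eye[0] * ray[0] + eye[2] * ray[2])
        qc = eye[0] ** 2 + eye[2] ** 2 - radius ** 2
        disc = qb * qb - 4.0 * qa * qc
        if qa == 0.0 or disc < 0.0:
            out.append(np.full(3, np.nan))
            continue
        lam = (-qb + np.sqrt(disc)) / (2.0 * qa)
        out.append(eye + lam * ray if lam > 0.0 else np.full(3, np.nan))
    return np.array(out)
```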

Next, we used the laboratory setup described in Section 4 to compute the distortions to be applied to an image so that it can be projected onto the cylindrical surface. As an example, Fig. 6 shows the warped images of a checkerboard and their projections on the cylindrical surface. The images on the left side of this figure are photographs of the laboratory setup. In the wallpaper-like mapping, the projection covers a vertical distance of 0.3 m and spans 120° horizontally. In the orthogonal-like mapping, the projection is 0.3 m high and 0.4 m wide. Finally, in the perspective-like mapping, the projection center is located at coordinates (0.0, 0.3, 2.0), and the projection plane is located at a distance of 1.5 m from the projection center and is 0.3 m high by 0.4 m wide.

5.2 3-D object reconstruction

Once the camera and the projector have been calibrated, the projector-camera pair can act as a stereoscopic system. That means that for any point projected onto any surface and recovered by the camera, its 3D coordinates can be computed.

Equation (7) can then be used to derive the 3D object coordinates of each image point. These equations are derived from (1) and (2) by assuming that the camera ray and the projector ray intersect at XYZ, where XYZ are the unknown object coordinates of a point, \(a_{1c} {\dots } c_{3c}\) are the 11 DLT parameters calculated for the camera, \(a_{1p} {\dots } c_{3p}\) are the 11 DLT parameters for the projector, xc, yc are the image coordinates of the point in the camera image, and xp, yp are its image coordinates in the projector frame buffer.
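One common way to carry out this intersection, which we assume is equivalent to (7), is sketched below: each device contributes the two DLT equations rearranged to be linear in X, Y and Z, and the resulting four equations in three unknowns are solved by least squares. The function name is hypothetical.

```python
import numpy as np


def triangulate_dlt(params_cam, params_proj, xy_cam, xy_proj):
    """Least-squares intersection of the camera ray and the projector ray.

    Each device contributes the two rearranged DLT equations
        (a1 - x c1) X + (a2 - x c2) Y + (a3 - x c3) Z = x - a4
        (b1 - y c1) X + (b2 - y c2) Y + (b3 - y c3) Z = y - b4
    giving 4 equations in the 3 unknowns X, Y, Z.
    """
    rows, rhs = [], []
    for params, (x, y) in ((params_cam, xy_cam), (params_proj, xy_proj)):
        a1, a2, a3, a4, b1, b2, b3, b4, c1, c2, c3 = params
        rows.append([a1 - x * c1, a2 - x * c2, a3 - x * c3])
        rhs.append(x - a4)
        rows.append([b1 - y * c1, b2 - y * c2, b3 - y * c3])
        rhs.append(y - b4)
    XYZ, *_ = np.linalg.lstsq(np.array(rows, dtype=float),
                              np.array(rhs, dtype=float), rcond=None)
    return XYZ
```

With noise-free correspondences the two rays meet exactly; with real measurements the least-squares solution is a point between the two nearly intersecting rays.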

Therefore, the proposed calibration method can be applied to recover the 3D shape of any object by projecting a grid of points and then automatically identifying the correspondences between each projected point and the point imaged by the camera. Nevertheless, this can be tricky if the 3D surface is complex, as the grid recovered by the camera may be quite different from the projected grid (regular or not), so that some correspondences may be established erroneously. However, correspondences can be assigned unequivocally if the projector illuminates the points sequentially, one after another, and the camera is synchronized to capture each of them. While the first strategy is fast because only one image is needed, the second one is much slower, as it needs as many images as projected points. Therefore, the first strategy can be used in real-time applications, where the object and/or the camera-projector system are usually moving and accuracy is not critical, whereas the second strategy can be used to reconstruct static objects with greater accuracy. Apart from these techniques, other structured light techniques can be applied, but they are out of the scope of this paper.

As an example, Fig. 7 shows the 3D reconstruction of a mannequin body obtained by illuminating the points one after another with the laboratory setup described in Section 4. A total of 5,000 points were recovered, which were then triangulated (Fig. 7b) and meshed for visualization purposes (Fig. 7c).

6 Conclusions

In this paper, we have proposed a fast and easy-to-use projector calibration method that requires only a minimal set of input data, which reduces the required calibration time. The method is based on the Direct Linear Transformation (DLT) mathematical model, which makes it simple and fully automatic. We have shown the application of this method on cylindrical surfaces. The results show that with the minimum configuration of 6 control points (CPs), the standard deviation in the projector positioning yielded by the calibration process is less than one per cent of the position values. We have also used the proposed method in some real application examples, validating it as an efficient method for real cases.

References

Bhasker E, Sinha P, Majumder A (2006) Asynchronous distributed calibration for scalable and reconfigurable multi-projector displays. IEEE Trans Vis Comput Graph 12 (5):1101. https://doi.org/10.1109/TVCG.2006.121

Brown M, Majumder A, Yang R (2005) Camera-based calibration techniques for seamless multiprojector displays. IEEE Trans Visual Comput Graph 11(2):193. https://doi.org/10.1109/TVCG.2005.27

Chen H, Sukthankar R, Wallace G, Li K (2002) Scalable alignment of large-format multi-projector displays using camera homography trees. In: Proceedings of the conference on visualization ’02. IEEE Computer Society, Washington, pp 339–346. http://dl.acm.org/citation.cfm?id=602099.602151

Dermanis A (1994) Free network solutions with the DLT method. ISPRS J Photogramm Remote Sens 49:2. https://doi.org/10.1016/0924-2716(94)90061-2

Garcia-Dorado I, Cooperstock J (2011) Fully automatic multi-projector calibration with an uncalibrated camera. In: 2011 IEEE computer society conference on computer vision and pattern recognition workshops (CVPRW), pp 29–36. https://doi.org/10.1109/CVPRW.2011.5981726

Griesser A, Van Gool L (2006) Automatic interactive calibration of multi-projector-camera systems. In: 2006 CVPRW ’06 conference on computer vision and pattern recognition workshop, p 8. https://doi.org/10.1109/CVPRW.2006.37

Harville M, Culbertson B, Sobel I, Gelb D, Fitzhugh A, Tanguay D (2006) Practical methods for geometric and photometric correction of tiled projector. In: 2006 CVPRW ’06 conference on computer vision and pattern recognition workshop, p 5. https://doi.org/10.1109/CVPRW.2006.161

Itseez (2015) OpenCV 3.0. http://opencv.org/opencv-3-0.html

Karara HM, Abdel-Aziz YI (1979) Accuracy aspects of non-metric imageries. Photogramm Eng 48(7):1107

Kraus K, Waldhäusl P (1997) Photogrammetry: advanced methods and applications. Dümmler. https://books.google.es/books?id=sih2QgAACAAJ

Li T, Zhang H, Geng J (2010) Geometric calibration of a camera-projector 3D imaging system. In: 2010 25th international conference of image and vision computing New Zealand (IVCNZ), pp 1–8. https://doi.org/10.1109/IVCNZ.2010.6148798

Liao J, Cai L (2008) A calibration method for uncoupling projector and camera of a structured light system. In: AIM 2008 IEEE/ASME international conference on advanced intelligent mechatronics, pp 770–774. https://doi.org/10.1109/AIM.2008.4601757

Lin SY, Mills JP, Gosling PD (2008) Videogrammetric monitoring of as-built membrane roof structures. Photogramm Rec 23(122):128. https://doi.org/10.1111/j.1477-9730.2008.00477.x

Moreno D, Taubin G (2012) Simple, accurate, and robust projector-camera calibration. In: 2012 second international conference on 3D imaging, modeling, processing, visualization and transmission (3DIMPVT), pp 464–471. https://doi.org/10.1109/3DIMPVT.2012.77

Nakamura T, de Sorbier F, Martedi S, Saito H (2012) Calibration-free projector-camera system for spatial augmented reality on planar surfaces. In: 2012 21st international conference on pattern recognition (ICPR), pp 85–88

Okatani T, Deguchi K (2006) Autocalibration of an ad hoc construction of multi-projector displays. In: Conference on computer vision and pattern recognition workshop, CVPRW ’06, p 4. https://doi.org/10.1109/CVPRW.2006.35

Park SY, Park GG (2010) Active calibration of camera-projector systems based on planar homography. In: 20th international conference on pattern recognition (ICPR). IEEE Computer Society, pp 320–323

Portalés C, Morillo P, Orduña JM (2014) Towards an improved method of dense 3D object reconstruction in structured light scanning. In: Proceedings of international conference on computational and mathematical methods in science and engineering (CMMSE), pp 992–1001

Portalés C, Orduña JM, Morillo P (2015) Parallelization of a method for dense 3D object reconstruction in structured light scanning. J Supercomputing 71(5):1857. https://doi.org/10.1007/s11227-014-1364-x

Portalés C, Ribes-Gómez E, Pastor B, Gutiérrez A (2015) Calibration of a camera-projector monochromatic system. Photogramm Rec 30(149):82. https://doi.org/10.1111/phor.12094

Portalés C, Casas S, Coma I, Fernández M (2017) A multi-projector calibration method for virtual reality simulators with analytically defined screens. J Imag 3(2):19. https://doi.org/10.3390/jimaging3020019

Raij A, Pollefeys M (2004) Auto-calibration of multi-projector display walls. In: Proceedings of the 17th international conference on pattern recognition, ICPR 2004, vol 1, pp 14–17. https://doi.org/10.1109/ICPR.2004.1333994

Raskar R, Brown M, Yang R, Chen WC, Welch G, Towles H, Scales B, Fuchs H (1999) Multi-projector displays using camera-based registration. In: Proceedings of the visualization ’99, pp 161–522. https://doi.org/10.1109/VISUAL.1999.809883

Sajadi B, Majumder A (2009) Markerless view-independent registration of multiple distorted projectors on extruded surfaces using an uncalibrated camera. IEEE Trans Visual Comput Graph 15(6):1307. https://doi.org/10.1109/TVCG.2009.166

Sajadi B, Majumder A (2010) Auto-calibration of cylindrical multi-projector systems. In: 2010 IEEE virtual reality conference (VR), pp 155–162. https://doi.org/10.1109/VR.2010.5444797

Sun W, Sobel I, Culbertson B, Gelb D, Robinson I (2008) Calibrating multi-projector cylindrically curved displays for “Wallpaper” projection. In: Proceedings of the 5th ACM/IEEE international workshop on projector camera systems. ACM, New York, pp 1:1–1:8. https://doi.org/10.1145/1394622.1394624

Tardif JP, Roy S, Trudeau M (2003) Multi-projectors for arbitrary surfaces without explicit calibration nor reconstruction. In: 2003 3DIM Proceedings of the fourth international conference on 3-D digital imaging and modeling, pp 217–224. https://doi.org/10.1109/IM.2003.1240253


Additional information


This work has been supported by Spanish MINECO and EU ERDF programs under grants TIN2015-66972-C5-5-R, and TIN2016-81850-REDC.


Cite this article

Portalés, C., Orduña, J.M., Morillo, P. et al. An efficient projector calibration method for projecting virtual reality on cylindrical surfaces. Multimed Tools Appl 78, 1457–1471 (2019). https://doi.org/10.1007/s11042-018-6253-5


DOI: https://doi.org/10.1007/s11042-018-6253-5