Abstract

Humans possess an intelligent system which effortlessly detect salient objects with high accuracy in real-time. It is a challenge to develop a computational model which can mimic human behavior such that the model achieves better detection accuracy and takes less computation time. So far the research community have suggested models which achieve better detection accuracy but at the cost of computation time and vice versa. In this paper, we attempted to realize a model that takes less computational time and simultaneously achieves higher detection accuracy. In the proposed model the original image is divided into m superpixels using SLIC superpixels algorithm and then these superpixels are clustered into k regions using k-means algorithm. Thereafter the result of the k-means clustering is used to build Gaussian mixture model whose parameters are refined using Expectation-Maximization algorithm. Finally the spatial variance of the clusters is computed and a center-weighted saliency map is computed. The performance of the proposed model and seventeen related models is evaluated both qualitatively and quantitatively on seven publicly available datasets. Experimental results show that the proposed model outperforms the existing models in terms of precision, recall and F -measure on all the seven datasets and in terms of area under curve on four datasets. Also, the proposed model takes less computation time in comparison to many methods.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Salient object detection [5] refers to the extraction of dominant objects (salient objects) in an image which automatically attracts visual attention. It is a challenging problem in the field of computer vision and has many real-time applications in surveillance systems, remote sensing and image retrieval. It is helpful in automatic target detection, robotics, image and video compression, automatic cropping/ centering to display objects on small portable screens, medical imaging, advertising a design, image enhancement and many more.

Salient object detection involves the transformation of the original image to a saliency map [14] such that the salient objects are highlighted while the background is suppressed. Saliency map generally take the values between [0, 1]. Higher the value of a pixel, higher is its chances to become a salient pixel. The approaches for salient object detection can be broadly classified into two main categories [7]: bottom-up and top-down. Bottom-up approaches involves the extraction of low-level features from the image and then combining them into a saliency map. They are fast, stimulus driven and task independent. While in the top-down approaches, human observation behavior is exploited to accomplish certain goals and is task dependent. Usually top-down approaches are combined with the bottom-up approaches to detect salient objects.

Most of research works mostly focussed on the bottom-up aspect of visual attention. With the advancement of these bottom-up approaches, researchers started distinguishing the two very similar terms: fixation prediction and salient object detection. The fixation prediction models try to mimic the human vision with an objective that the human eyes mainly focus on some of the points in a given scene if shown for a few seconds. These points are helpful in eye movement prediction. The second category of models which are salient object detection models detects the most salient object in an image by segmenting the image into two regions, a salient object and background, by drawing accurate silhouettes of the salient object. Both categories of models construct saliency maps which are useful for different purposes. In literature, the research community has suggested different combination schemes in order to yield a saliency map from a set of low level features. The research work of Itti et al. [14] is motivated by the neuronal activity of the receptive fields in the human visual system. The three features such as intensity, color and orientation were considered equally important and were linearly combined to obtain a saliency map. While Liu et al. [19] proposed a supervised approach to learn a weight vector in order to combine the multi-scale contrast, center-surround histogram and the color spatial distribution features into a saliency map. We also investigated some of the other most popular related models like the one given by Bruce and Tsotsos [6] who modeled visual saliency by utilizing the concept of information maximization. Han et al. [10] applied region growing techniques over the saliency map obtained using the research work of Itti et al. [14] and extracted salient regions. Meur et al. [22] used the subband decomposition based energy for the chromatic as well as the achromatic channels to compute the saliency. Harel et al. [11] extended the work of Itti et al. [14] and gave a graph based visual saliency model. Hou and Zhang [12] gave a simple and fast method for visual saliency detection by extracting the spectral residual of the image. Yu and Wong [29] extracted the salient objects at the grid level instead at the pixel level. Zhang et al. [30] used Bayesian framework to compute the probability of a target at every location in the image. Achanta et al. [2] used an image subtraction technique to generate a frequency tuned saliency model. Achanta and Susstrunk [1] gave the visual saliency model by utilizing the maximum symmetric surround difference for every pixel in the image. Zhang et al. [31] combined position, area and intensity saliency based on the outcome of scalable subtractive clustering, and employed Bayesian framework to classify a pixel into an attention pixel or a background pixel. Goferman et al. [9] proposed a context-aware saliency detection algorithm to detect salient objects. Liu et al. [20] used kernel density estimation method and two-phase graph cut approach to detect salient objects. Shen and Wu [24] incorporated the low rank matrix and a sparse noise in some feature space to detect the salient object. Vikram et al. [27] randomly sampled the image into a number of rectangular regions and computed local saliency over these regions. İmamoğlu et al. [13] proposed a saliency detection model by extracting low-level features based on wavelet transform. Singh and Agrawal [25] modified the Liu et al. [19] model at the feature level and employed a combination of Kullback-Leibler divergence and Manhattan distance to detect salient objects. Liu et al. [21] proposed a novel saliency tree approach to extract salient objects from the image. Zhu et al. [34] used a multisize superpixel approach based on multivariate normal distribution estimation for salient object detection. Peng et al. [23] suggested a saliency-aware image-to-class distances for image classification. Jiang et al. [15] proposed multi-level image segmentation technique which utilizes the supervised learning approach to map the regional feature vector to a saliency score.

Few researchers have extended saliency detection to co-saliency detection, like the one suggested by Fu et al. [8]. They used two layer clustering, where one layer focusses on groups the pixels on each image (single image), and the other layer associates the pixels on all images (multi-image).

Recently researchers have also suggested few models based on deep learning. Zhao et al. [33] proposed a multi-context deep learning framework using deep convolutional neural networks for salient object detection. Lin et al. [18] suggested a model which uses midlevel features on the basis of low-level k-means filters within a unified deep framework in a convolutional manner for saliency detection. Zhang et al. [32] proposed a co-saliency detection method based on intrasaliency prior transfer and deep intersaliency mining. Li and Yu [16, 17] suggested a deep contrast learning method for salient object detection using deep convolutional neural networks.

The common thing that is witnessed from related models is that they explored multiple low-level features of the image and then combined those using different strategies. The features involved were either of the same size of the image or of reduced size. The evaluation of the models is done on publicly available datasets to find their detection accuracy and its computation time. Experimental results demonstrated that most of the models [1, 2, 12, 14, 22, 27, 29,30,31] take less computation time but provide degraded detection accuracy because of either reduced size of image or simpler combination strategies. On the other hand, the models such as [9, 13, 19, 20, 24, 25] achieve better detection accuracy at the cost of higher computation time because they involved either full resolution image or some kind of learning technique is involved in combining the low-level features. However, there is need to develop a model which takes less computation time and simultaneously achieves high detection accuracy. One possible way to realize this objective is to utilize a single dominant feature in a model that is sufficient to describe an image instead of multiple features as commonly used in most of the state-of-the-art methods. In most of the state-of-the-art models dealing with multiple features, we have observed experimentally that color feature is most commonly used and dominates the remaining features. Snowden [26] also suggested that a purely chromatic signal is sufficient to capture visual attention. Color feature can be extracted either at the local level or the global level. Since colors are widely spread in an image, so color as a global feature may be more appropriate.

In this paper we propose an approach which utilizes color feature at the global level to detect the salient object. The motivation of the model came from the fact that image is constructed from several signals (say k), assumed to be Gaussians. Here a signal can be formed from various shades of a color present in the image. Then a mixture of Gaussians needs to build over these signals using a parametric estimation technique. Generally the images present in the datasets consist of thousands of pixels. Estimating the parameters of k Gaussians (strength, mean and covariance) using these large number of pixels will require huge computation time. Instead of this, if these large numbers of pixels are reduced to a smaller number of regions of similar pixels, then the estimation of parameters of k signals will take less computation time.

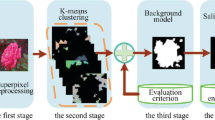

In the proposed model, the original RGB image of size W × H, where of W and H represent the width and height of the image respectively, is first divided into m superpixels using SLIC superpixels algorithm [3] as it is fast and efficient. Since the superpixel comprises of pixels which are similar in color, hence each superpixel is represented by the mean value of its pixels, thereby reducing the image pixels to only m pixels. The colors of these m superpixels are further clustered into k color components using k-means algorithm. The result of the clustering procedure is used to build Gaussian mixture model, whose parameters are further refined using Expectation-Maximization algorithm. Thereafter, spatial variance of these color components is computed and a center-weighted saliency map is formulated.

It is found that the researchers have adopted superpixels for computing saliency at the local level [28] (i.e. in a specific neighborhood of a superpixel) and not at the global level (i.e. considering the complete image as a whole). The problem that arises here is that only smaller objects are captured and gets higher saliency value, while the larger objects are discarded and gets lower saliency value. To capture the details of the larger objects as well, we used superpixels at the global level. So the use of superpixels and GMM to capture saliency at the global level in a computationally efficient manner is the innovation in the proposed method.

In order to check the efficacy of the proposed model, experiments are carried out on seven publicly available image datasets. The performance is evaluated in terms of precision, recall, F -measure, area under curve and computation time and compared with existing seventeen other popular models.

The paper is organized as follows. Section 2 describes the proposed model. The experimental setup and results are included in section 3. Conclusion and future work are presented in Section 4.

2 Proposed model

In general, humans can effortlessly detect salient objects with high accuracy in real-time. It is a challenge to develop a model which can mimic human behavior such that the model achieves high detection accuracy and takes less computation time. One way of accomplishing this task is to utilize a single dominant feature in the model that best characterizes an image. We have investigated a number of features that are used in different state-of-the-art models and have found that the feature computed in terms of color is most commonly used. Also, Snowden [26] has very well suggested that a purely chromatic signal is sufficient to capture visual attention. There are two different ways of extracting a feature in salient object detection, at local or the global level. In the local level a certain region is picked within an image and saliency is computed over it, while in the global level the complete image is considered while computing the saliency. As far as color is concerned, it is widely spread in an image, so using color as a global feature may be more appropriate.

The proposed model employs the concept of SuperPixels and Gaussian Mixture Model (SP-GMM) which is discussed in detail underneath.

2.1 Gaussian mixture model construction

In the color space, clustering of the RGB image I, i.e. I(p) = [R(p) G(p) B(p)]T of size W × H into k regions and then constructing Gaussian mixture model is a time consuming process. But if the number of pixels is decreased to m such that m ≪ W × H, then the computation time can be considerably reduced. So the input RGB image is first divided into m superpixels using SLIC superpixels algorithm [3]. Let SP be the set containing the RGB values of m superpixels given by

where SP i is the RGB value of the i -th superpixel, S i is the set of pixels in the i -th superpixel and |S i | represents its size such that \( \sum_{i=1}^m\left|{\boldsymbol{S}}_i\right|= W\times H \). Now the set SP is partitioned into k clusters using k-means algorithm. The result of the clustering algorithm is used as samples to build Gaussian mixture model (GMM).

The parameters of the GMM include the weights, means, and co-varainces of the Gaussians. The initial weight \( {w}_i^0 \) of the i -th cluster is given as

where n i is the number of superpixels belonging to the i -th cluster. Assuming that the j –th superpixel belongs to the i -th cluster, the initial mean of the i-th cluster \( {\boldsymbol{\mu}}_i^0 \) is given as

where P i is the set of superpixels belonging to the i -th cluster. The initial co-variances \( {\boldsymbol{\varSigma}}_i^0 \) are defined as

Thereafter, the expectation maximization (EM) algorithm is applied to update the parameters of the GMM until convergence is achieved. Using the current parameters of the l -th iteration the probability of a superpixel j to belong to the i -th cluster is calculated as

Then weight, mean and co-variance of the Gaussians are updated as

The log-likelihood for l + 1 iteration is computed as

Eqs. (5–7) are repeated until convergence is achieved. The inequality for the convergence condition is given as

Using the final parameter values of the GMM, each and every pixel p of the original RGB image I of size W × H is assigned to the i -th cluster with a probability given as

where w i , μ i and Σ i are the weight, mean and covariance matrix of the i -th cluster respectively.

2.2 Spatial variance and saliency map computation

The spatial variance measures the distribution of a color component in an image. Lower the spatial variance of a color component better is its chances to be salient and vice-versa. In the spatial domain, variance of the i -th cluster is computed both in the horizontal as well as the vertical direction. The horizontal variance \( {V}_i^h \) of the i -th cluster is given as

where \( {M}_i^h=\frac{\sum_{p\in \boldsymbol{P}}{Pr}^{final}\left( i|\boldsymbol{I}(p)\right).{x}_p}{\sum_{p\in \boldsymbol{P}}{Pr}^{final}\left( i|\boldsymbol{I}(p)\right)} \), x p is the x-coordinate of the p -th pixel and P is the set of all the pixels present in the image. Similarly the vertical variance \( {V}_i^v \) is computed. The total spatial variance is given by

V i is normalized between [0,1] computed as

Thereafter, a center-weighted scheme is applied to give more weightage to the clusters present near the center of the image. The position weight D i of the i -th cluster is given by

where d p is the distance between the pixel p and the image center using the L2 norm. D i is also normalized between [0, 1] computed as

Finally the pixel-wise saliency map SM is given as

The values of the saliency map SM are normalized between [0, 1] computed as

A threshold is applied on the saliency map to generate an attention mask. Fig. 1 depicts the working of the model on certain images.

3 Experimental setup and results

Intensive care has been taken while evaluating the related models. The parameters as suggested in the related papers have been set accordingly and saliency maps are computed. Table 1 list the parameter values of various models. A qualitative as well as a quantitative evaluation is done in order to measure the performance of the proposed model, and is compared with the existing approaches. All the experiments are carried out using Windows 7 environment over Intel (R) Xeon (R) processor with a speed of 2.27 GHz and 4GB RAM.

3.1 Salient object database

The performance of the proposed model and seventeen other related models is examined using the following seven publicly available datasets (Table 2):

The test dataset comprises of all these 12,500 images and is used for performance evaluation.

3.2 Qualitative evaluation

The qualitative evaluation of the proposed model and seventeen other related models can be seen in Fig. 2. We have chosen some of the images from the test data set that contain objects differing in shape, size, position, type etc. It can be clearly seen from Fig. 2 that the proposed model yields better saliency maps in comparison to the related methods.

3.3 Quantitative evaluation

The quantitative evaluation of the proposed model and seventeen other models is done in terms of precision, recall, F measure, area under curve (AUC), and computation time. Using the ground truth G and the detection result R, precision, recall, F -measure are calculated as

where β = 1 as we are giving equal weightage to both precision and recall, and TP (true positives) is the number of salient pixels that are detected as salient pixels. FP (false positives) is the number of background pixels that are detected as salient pixels. FN (false negatives) is the number of salient pixels that are detected as background pixels.

AUC is computed by drawing a receiver operator characteristic (ROC) curve. ROC curve is plotted between the true positive rate (TPR) and the false positive rate (FPR). TPR and FPR are given by

where W and H represents the width and height of the image respectively. The saliency maps corresponding to the proposed model as well as state-of-the-art models are first normalized between [0,255]. Then 256 thresholds are chosen one by one and the values of TPR and FPR are computed and the ROC curve is plotted and finally area under the curve (AUC) is calculated. Table 3 shows the quantitative performance evaluation of the proposed method in comparison to the other state-of-the-art methods on all the seven datasets including their average computation time per image. Their ROC curves are shown in Fig. 3.

The number of superpixels (m) and clusters (k) required to build the Gaussian mixture model play vital role. The number of superpixels were varied from 50 to 500 and it was found that with the increase in the number of superpixels the performance increases till m = 200 and remains constant thereafter. It can be observed from Fig. 4 and Fig. 5 that the best value of performance measures can be obtained at m = 200 and k = 5.

Table 3 shows the quantitative evaluation of the proposed model in comparison with seventeen related models. The best results are shown in bold.

-

MSRA-B

-

The proposed model gives fine shape information that fetches it the highest precision, recall and F-measure.

-

The proposed model has the best AUC value except SA [25] model.

-

ASD

-

The proposed model gives the highest precision, recall, F-measure and AUC values.

-

SAA GT

-

The proposed model gives the highest precision, recall, F-measure and AUC values.

-

SOD

-

The proposed model fetches it the highest precision, recall and F-measure.

-

The proposed model has the best AUC value except COSAL [8] model.

-

SED1

-

The proposed model has the highest precision, recall and F-measure.

-

The proposed model has the best AUC value except COSAL [8] model.

-

SED2

-

The proposed model gives the highest precision, recall, F-measure and AUC values.

-

ECSSD

-

The proposed model fetches the highest precision, recall, F-measure and AUC values.

-

Computation Time

4 Conclusion and future work

Salient object detection can be achieved by either exploring the bottom-up components or its integration with the top-down components. The research community is mostly fascinated by the bottom-up components as these methods are fast and task independent. Researchers have tried to improve the detection accuracy at the cost of complexity of model which is computationally expensive. Some research efforts are made to reduce the computation time but degraded the detection accuracy. In the proposed model, we attempted to improve the salient object detection accuracy with less computation time. The model employed the use of SLIC superpixels, Gaussian mixture model and Expectation-Maximization algorithm to detect a salient object. Generally the images present in the datasets are of size 300 × 400, i.e. around 0.12 million pixels. Estimating the parameters of Gaussians (strength, mean and covariance) using 0.12 million samples is time consuming. The manuscript attempted to reduce the pixels, say to 200, where there is not much of a loss in the estimated values of the parameters, and then the computation time can be reduced to a considerable extent.

Experimental results demonstrate better performance of the proposed model in comparison to the existing methods in terms of precision, recall and F-measure on all the seven datasets and AUC on four datasets. In comparison to many state-of-the-art models, the proposed model requires less computation time.

There are certain more challenges in detecting salient objects. These include partial occlusion, background clutter, articulation, etc. The datasets used in our experiments contain images with only one salient object. Research work may be extended to detect any number of salient objects or no salient object at all.

References

Achanta R, Susstrunk S (2010) Saliency Detection using Maximum Symmetric Surround. Proc. International Conference on Image Processing, pp. 2653–2656

Achanta R, Hemamiz S, Estraday F and Susstrunk S (2009) Frequency-tuned Salient Region Detection. Proc IEEE International Conference on Computer Vision and Pattern Recognition, pp. 1597–1604

Achanta R, Shaji A, Smith K, Lucchi A, Fua P, Susstrunk S (2010) SLIC Superpixels. EPFL Technical Report 2010:149–300

Arya R, Singh N, Agrawal RK A novel hybrid approach for salient object detection using local and global saliency in frequency domain. Multimedia Tools and Applications. doi:10.1007/s11042-015-2750-y

Borji A, Itti L (2013) State-of-the-art in visual attention modeling. Proc IEEE Trans Pattern Anal Mach Intell 35(1):185–207

Bruce NDB, Tsotsos JK (2006) Saliency based on information maximization. Proc Adv Neural Inf Proces Syst 18:155–162

Frintrop S, Rome E and Christensen HI (2010) Computational Visual Attention Systems and their Cognitive Foundation: A Survey. Proc. ACM Transactions on Applied Perception, Vol. 7, no. 1

Fu H, Cao X, and Tu Z (2013) Cluster-based co-saliency detection. IEEE Transactions on Image Processing, 2013.

Goferman S, Zelnik-Manor L, Tal A (2012) Context-aware saliency detection. Proc IEEE Trans Pattern Anal Mach Intell 34(2012):1915–1926

Han J, Ngan KN, Li MJ, Zhang HJ (2006) Unsupervised extraction of visual attention objects in color images. Proc IEEE Trans Circuits Syst Video Technol 16:141–145

Harel J, Koch C and Perona P (2007) Graph Based Visual Saliency. Proc. Advances in Neural Information and Processing Systems, pp. 545–552

Hou X and Zhang L (2007) Saliency Detection: A Spectral Residual Approach, Proc. IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8

İmamoğlu N, Lin W, Fang Y (2013) A saliency detection model using low-level features based on wavelet transform. Proc IEEE Trans Multimedia 15:96–105

Itti L, Koch C, Niebur E (1998) A model of saliency based visual attention for rapid scene analysis. Proc IEEE Trans Pattern Anal Mach Intell 20:1254–1259

Jiang, H., Wang, J., Yuan, Z., Wu, Y., Zheng, N. and Li, S., (2013). Salient object detection: A discriminative regional feature integration approach. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 2083–2090).

Li, G. and Yu, Y., (2016). Deep contrast learning for salient object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (pp. 478–487).

Li G, Yu Y (2016) Visual saliency detection based on multiscale deep CNN features. IEEE Trans Image Process 25(11):5012–5024

Lin, Y., Kong, S., Wang, D. and Zhuang, Y., (2014). Saliency detection within a deep convolutional architecture. In Workshops at the Twenty-Eighth AAAI Conference on Artificial Intelligence.

Liu T, Yuan Z, Sun-Wang J, Zheng N, Tang X, Shum HY (2011) Learning to detect a salient object. Proc IEEE Trans Pattern Anal Mach Intell 33:353–366

Liu Z, Shi R, Shen L, Xue Y, Ngan KN, Zhang Z (2012) Unsupervised salient object segmentation based on kernel density estimation and two-phase graph cut. Proc IEEE Trans Multimedia 14:1275–1289

Liu Z, Zou W, Meur OL (2014) Saliency tree: a novel saliency detection framework. IEEE Trans Image Process 23:1937–1952

Meur OL, Callet PL, Barba D, Thoreau D (2006) A coherent computational approach to model bottom up visual attention. Proc IEEE Trans Pattern Anal Mach Intell 28:802–817

Peng P, Shao L, Han J, Han J (2015) Saliency-aware image-to-class distances for image classification. Neurocomputing 166:337–345

Shen X, Wu Y (2012) A unified approach to salient object detection via low rank matrix recovery. Proc IEEE Conf Comput Vis Pattern Recognit 2012:853–860

Singh N, Agrawal RK (2013) Combination of Kullback–Leibler divergence and Manhattan distance measures to detect salient objects. SIViP 9(2015):427–435

Snowden RJ (2012) Visual attention to color: parvocellular guidance of attentional resources? Proc Psychol Sci 13:180–184

Vikram TN, Tscherepanow M, Wrede B (2012) A saliency map based on sampling an image into random rectangular regions of interest. Proc Pattern Recognit 45(9):3114–3124

Yang, C., Zhang, L., Lu, H., Ruan, X. and Yang, M.H., 2013. Saliency detection via graph-based manifold ranking. In Proceedings of the IEEE conference on computer vision and pattern recognition (pp. 3166–3173).

Yu Z, Wong HS (2007) A rule based technique for extraction of visual attention regions based on real time clustering. Proc IEEE Trans Multimedia 9:766–784

Zhang L, Tong MH, Marks TK, Shan H, Cottrell GW (2008) SUN: a Bayesian framework for saliency using natural statistics. Proc J Vis 8:1–20

Zhang W, Wu QMJ, Wang G, Yin H (2010) An adaptive computational model for salient object detection. IEEE Trans Multimedia 12:300–315

Zhang D, Han J, Han J, Shao L (2016) Cosaliency detection based on intrasaliency prior transfer and deep intersaliency mining. IEEE transactions on neural networks and learning systems 27(6):1163–1176

Zhao, R, Ouyang W, Li H, and Wang X (2015) "Saliency detection by multi-context deep learning." In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1265–1274

Zhu L, Klein DA, Frintrop S, Cao Z, Cremers AB (2014) A Multisize Superpixel approach for salient object detection based on multivariate normal distribution estimation. IEEE Trans Image Process 23:5094–5107

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Singh, N., Arya, R. & Agrawal, R.K. Performance enhancement of salient object detection using superpixel based Gaussian mixture model. Multimed Tools Appl 77, 8511–8529 (2018). https://doi.org/10.1007/s11042-017-4748-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-017-4748-0