Abstract

This paper presents a novel scheme for broadcasting satellite hyperspectral images over wireless channels. First, a simple pre-processing step is performed, in which a new hyperspectral band ordering algorithm that improves the compression performance is applied and the ordered image data is normalized. The discrete wavelet transform with three-level decomposition is then used to divide each hyperspectral image band into ten wavelet sub-bands: nine of them are the details, and the last, LL-LL-LL, is an approximation version of the band. Coset coding based on distributed source coding (DSC) is applied to the LL-LL-LL sub-band to achieve high compression efficiency and low encoding complexity. Then, without syndrome coding or forward error correction (FEC), the transmission power is allocated directly to the band details and coset values according to their distributions and magnitudes. Finally, these data are transformed by the Hadamard matrix and transmitted over a dense constellation. Hyperspectral images from the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor are used to validate the proposed scheme. Experimental results demonstrate that the proposed scheme improves the average image quality by 6.91, 3.00 and 7.68 dB over LineCast, SoftCast-3D, and SoftCast-2D, respectively. It also achieves up to a 5.63 dB gain over JPEG2000 with FEC.

1 Introduction

Wireless image and video communication has been studied for a long time. Shannon identified two main components required to transmit data over wireless channels: source coding (data compression) and channel coding (forward error correction (FEC) and modulation) [33, 34]. The conventional scheme, with separate source coding and channel coding, is based on Shannon’s source-channel separation principle and is the most classic digital scheme: source coding is designed independently of channel coding. With this scheme, the source data can be transmitted without any loss of information if the channel is point-to-point (i.e., unicast communication), because the channel quality is known or can easily be measured at the source, which then selects the optimal transmission rate for the channel and the corresponding FEC and modulation [34]. However, in digital broadcast/multicast, each receiver observes a different channel quality, which gives rise to the cliff effect. The bitrate selected by a conventional wireless image delivery scheme cannot fit all receivers at the same time. If the image is transmitted at a high bitrate, it can be decoded only by the receivers with better quality channels, and not by the receivers with worse quality channels. Conversely, if it is transmitted at a low bitrate supported by all receivers, the receivers with better quality channels cannot benefit from them, and the performance is not optimal. In order to overcome the cliff effect, many researchers have proposed joint source-channel coding (JSCC) frameworks for distributed image/video transmission [27, 42]. Apart from these JSCC works, the transmission of distributed coded video is still similar to that of the conventional scheme. In contrast to the separate design, many joint image/video coding and transmission schemes [15, 22, 23, 41] have been proposed for wireless image/video multicasting. SoftCast [22, 23] is one of the analog approaches designed within the JSCC framework. The SoftCast encoder consists of the following steps: transform, power allocation, and direct dense modulation. A line-based coding and analog-like transmission scheme called LineCast is proposed in [41]. It consists of three steps: reading the image line by line and decorrelating each line by DCT, scalar modulo quantization, and finally power allocation and transmission.

Satellite hyperspectral images are currently used in a wide variety of applications and remote sensing projects, ranging from independent land mapping services to government and military activities [8, 18, 29, 40, 41]. Some of these applications and projects depend critically on wireless communication to transmit satellite images [6, 11, 13, 20, 24, 41]. Therefore, satellite wireless systems have become an important topic of study. In agriculture, observational data from aircraft and satellite remote sensing play an important role in farm management because of the large volume of information contained in the satellite images. Moreover, for meteorologists, satellite images play an essential role in exploring water vapor, cloud properties, aerosols, and absorbing gases.

Satellite hyperspectral images carry significant information for different applications. Only three selected bands need to be transmitted for a true color image, whereas more than three bands can be selected to represent the vegetation features of the Earth’s surface. This paper suggests that all the image bands can be transmitted and the images can then be classified at the receiving end. With receivers of varying channel quality, a separate wireless design is therefore not the best solution. As a result, a new distributed coding and transmission scheme is proposed in this paper for broadcasting satellite hyperspectral images to a large number of receivers. The scheme avoids the cliff effect found in digital broadcasting schemes by using a linear transform between the transmitted image signal and the original pixel luminance. In the proposed scheme, the transform coefficients are transmitted directly through a dense constellation after power allocation, without FEC and digital modulation. In multicasting, each user obtains the best quality matching its channel conditions. Distributed source coding (DSC) based on the Slepian-Wolf theory [35] is applied to achieve efficient compression and low encoding complexity. Moreover, a simple and more efficient algorithm is proposed for satellite hyperspectral band ordering to achieve better compression efficiency.

SoftCast uses the original satellite image band ordering and is thus inefficient in removing spectral redundancy. Furthermore, the 3D DCT that it uses is insufficient for removing most of the redundancy in satellite images. In contrast to SoftCast, the proposed scheme adopts a new band ordering algorithm to remove spectral redundancy in the hyperspectral image. Moreover, DSC provides lower encoding complexity than traditional compression techniques. LineCast does not exploit the spectral correlation between bands, which makes it inefficient for satellite images containing more than one band. However, most satellite images have more than one band, and these bands contain spectral redundancies. LineCast is able to provide better performance than the state-of-the-art 2D broadcasting schemes because of its high efficiency and flexibility of line prediction, but without a spectral decorrelator it is still not efficient enough for 3D satellite images. Unlike LineCast, the proposed scheme removes redundant information in the spectral dimension and introduces a band ordering algorithm for satellite hyperspectral images. Experimental results on the selected hyperspectral datasets demonstrate that the proposed scheme improves the average image quality by 6.91 and 3.00 dB over LineCast and SoftCast-3D, respectively, and that it achieves up to a 5.63 dB gain over JPEG2000 with FEC.

The rest of the paper is organized as follows. Section 2 briefly reviews some related works. Section 3 introduces the proposed scheme with detailed explanations of each component. Section 4 presents the experimental results. Finally, Section 5 concludes this paper.

2 Related works

2.1 Digital broadcasting

Digital broadcasting systems separate source coding and channel coding based on Shannon’s source-channel separation theorem [33, 34].

2.1.1 Source coding

In source coding, one of many lossy compression techniques is selected to achieve the target compression ratio. Recently, several techniques have been used to compress satellite images [8]. One of them is the JPEG2000 standard [37], which is based on wavelet coding. JPEG2000 supports the irreversible 9/7 and the reversible (integer) 5/3 wavelet transforms. After transformation, coefficient quantization is adapted to individual scales and sub-bands, and the quantized coefficients are arithmetically coded. In addition, a common strategy for improving the coding performance on hyperspectral images is to first decorrelate the image in the spectral domain.

Previous research on satellite image compression concluded that JPEG2000 (a wavelet-based algorithm) gives better results than DCT-based algorithms such as JPEG [8, 19, 29]. The research in [19] showed that, for lossy compression of satellite multispectral images, JPEG2000 produces better results than JPEG. The research in [8] compared several compression methods, all of them wavelet-based, and showed that they perform better than other methods. Moreover, the research in [29] showed that, for lossy compression of satellite hyperspectral images, the combination of the discrete wavelet transform (DWT) and Principal Component Analysis (PCA) provides improved compression quality compared with other approaches. In conventional frameworks, JPEG2000 with PCA as the spectral decorrelator [29] is used for digital source coding. The works in [4, 7] present a new hyperspectral image coding scheme using the orthogonal optimal spectral transform (OrthOST). The main drawback of these transforms is their heavy computational complexity. In the presented experiments, JPEG2000 with OrthOST is used for digital source coding. In [39], a general framework for selecting quantizers in each spatial and spectral region of a hyperspectral image is presented. This framework achieves the desired target rate while minimizing distortion. Its rate control algorithm supports lossy compression, near-lossless compression, and any in-between type of compression (e.g., lossy compression with a near-lossless constraint). However, the target bitrate for this framework is greater than 1 bpppb. Recently, a 2-step scalar deadzone quantization (2SDQ) scheme has been presented in [2, 5]. The main insights behind 2SDQ are the use of two quantization step sizes, which approximate wavelet coefficients with more or less precision depending on their density, and a rate-distortion optimization technique that decreases the distortion produced when coding 2SDQ indexes. The applicability and efficiency of 2SDQ are demonstrated within the framework of JPEG2000. Several authors have reported compression algorithms for satellite multispectral and hyperspectral images based on JPEG2000 [8, 9, 12, 19, 28, 29].

2.1.2 Channel coding

In channel coding, the digital scheme uses FEC and digital modulation. However, in a broadcasting scenario the channel conditions of all receivers cannot be accommodated simultaneously, because the transmission rate has to be adapted to the actual channel conditions by adjusting the channel coding rate and modulation. In order to improve digital broadcasting, some layered digital schemes consisting of layered source coding and layered channel coding have been proposed [17, 30, 31, 43–46], such as H.264/SVC [32] with hierarchical modulation (HM) [25] and multi-resolution coding [30]. Although a layered digital scheme performs better than a separate coding scheme, it cannot adapt to users with different classes of channel conditions. Unlike digital broadcasting, the proposed scheme avoids the cliff effect (i.e., in multicast, each user can obtain the best quality matching its channel conditions).

2.2 SoftCast

SoftCast [22, 23] is a JSCC scheme for wireless image/video multicasting. SoftCast transmits a linear transform of the source signal directly over an analog channel, without quantization, entropy coding, or FEC. Such schemes can optimize the received distortion through a linear combination of the original signals, which is then transmitted directly over a dense constellation. This principle naturally enables a transmitter to satisfy multiple receivers with diverse channel qualities.

On the server side, SoftCast first transforms the original image data. In the second step, power allocation minimizes the total distortion by optimally scaling the transform coefficients. Then, SoftCast employs a linear Hadamard transform to create packets with equal power and equal importance. Finally, the data is mapped directly into wireless symbols by a very dense QAM. There are two versions of SoftCast, which differ in the transform: SoftCast-2D [22] and SoftCast-3D [23]. SoftCast-2D uses a 2D discrete cosine transform (2D DCT) to remove the spatial redundancy of an image band (or video frame). SoftCast-3D uses a 3D DCT to remove the spatial and spectral redundancies of a group of image bands (or video frames).

To reconstruct the signal at the receiver, SoftCast uses a linear least squares estimator (LLSE) as the inverse operation of the power allocation and the Hadamard transform. Once the decoder has obtained the DCT components, it reconstructs the original signal by applying the inverse DCT. Almost all the steps in SoftCast are linear operations, and thus the channel noise is transformed directly into reconstruction noise in the signal. Therefore, the transmitter does not need to know a user’s channel conditions, and each receiver obtains a quality matching its channel conditions.

2.3 LineCast

LineCast [41] is a line-based framework that provides a natural way to avoid broadcasting the entire area of the images to all the users. On the server side, the LineCast encoder consists of reading the image line by line, transformation, scalar modulo quantization, power allocation, and transmission. In the first step, LineCast reads the image line by line. In the transform, the lines are decorrelated with the DCT. After that, scalar modulo quantization is performed on the DCT components with the same technique used in [14, 15], which partitions the source space into several cosets and transmits only the coset indices to the decoder. After the scalar modulo quantization, a power allocation technique is employed, in which the coefficients at different frequencies are scaled by different factors to minimize the total distortion. Like SoftCast, LineCast employs a linear Hadamard transform to achieve resilience against packet losses. Finally, the data is mapped directly into wireless symbols by a very dense 64K-QAM, without digital FEC and modulation.

At the receiver, LineCast uses LLSE to reconstruct the line signal from the DCT components. Moreover, side information is generated to aid in the recovery of transform coefficients in the scalar modulo dequantization. Finally, a minimum mean square error (MMSE) approach is used to denoise the reconstructed signal.

3 The proposed scheme

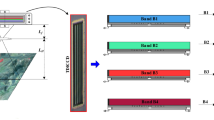

The proposed scheme directly transmits a hyperspectral image over the raw orthogonal frequency-division multiplexing (OFDM) channel without FEC and digital modulation. In the proposed scheme, the first band is compressed and transmitted as in SoftCast, which consists of DCT, power allocation, and Hadamard transform. In the rest of this section, the compression and transmission of the remaining hyperspectral bands is discussed from the second band to the final band. Figure 1 shows the server side of the proposed scheme and the receiver side is depicted in Fig. 2.

On the server side, the proposed scheme adopts simple pre-processing, which consists of two steps: applying a hyperspectral band ordering algorithm to exploit the spectral redundancy in the original image data x, and normalizing the ordered image data r. Then, every band in the normalized data n is coded and transmitted separately. The selected band s is first decomposed by a spatial 2D-DWT with three-level decomposition, and the resulting w is divided into two groups. The differences group $w_d$ contains the nine wavelet sub-bands LH, HL, HH, LL-LH, LL-HL, LL-HH, LL-LL-LH, LL-LL-HL, and LL-LL-HH, whereas the average group $w_{LL}$ contains the third-level average wavelet sub-band LL-LL-LL. The proposed scheme first transforms the $w_{LL}$ group into the DCT domain. Then, it applies coset coding to the DCT coefficients d to get the coset data c. In the next step, the coset data c and the differences group $w_d$ are combined into one signal t. Because the components of t have different distributions, a power allocation technique is employed to protect the signal t against channel noise. Before transmission, the proposed scheme employs a Hadamard transform, as used in communication systems, to redistribute the energy and obtain the weighted frequencies. Finally, the weighted frequencies y are transmitted through a raw OFDM channel without FEC or digital modulation, as shown in Fig. 1.

On the client side, after the physical layer (PHY) returns the list of coded data $y'$, the LLSE is applied to provide a high-quality estimate of the transmitted data. The output $t'$ consists of two groups. The first group, $w'_d$, contains the nine reconstructed wavelet sub-bands LH, HL, HH, LL-LH, LL-HL, LL-HH, LL-LL-LH, LL-LL-HL, and LL-LL-HH, while the second group, $c'$, contains the reconstructed coset values of the LL-LL-LL wavelet sub-band. The coset decoder uses the side information (i.e., the DCT coefficients of the previously reconstructed LL-LL-LL wavelet sub-band) to reconstruct the DCT coefficients $d'$ of the LL-LL-LL wavelet sub-band, and then applies the inverse DCT to $d'$ to obtain the reconstructed LL-LL-LL wavelet sub-band in $w'_{LL}$. Once the decoder has obtained all the reconstructed wavelet sub-bands in $w'$, it reconstructs the band data $s'$ by the inverse 2D-DWT (three-level decomposition). Finally, the reprocessed data is used to reconstruct the entire hyperspectral image $x'$, as shown in Fig. 2.

In the following subsections, each aspect of the proposed scheme is described.

3.1 Pre-processing

Before the wavelet transformation, the hyperspectral image is processed in two steps: hyperspectral band ordering, which exploits the spectral redundancy in the original image data, and normalization, which normalizes the energy of the ordered image data.

3.1.1 Band ordering

Satellite hyperspectral images include several hundred bands. These bands contain spectral redundancies which need to be removed. Therefore, optimal band ordering for satellite images is imperative.

Different from the correlation coefficient algorithms [19, 36, 38], a simple and more efficient algorithm for satellite band ordering is proposed.

This algorithm is based on the mean value of each band. The problem of optimal image band reordering is equivalent to the problem of finding a minimum spanning tree (MST) in a weighted graph, as expressed in [38]. In the proposed algorithm, the nodes of the spanning tree are the hyperspectral bands, and the edge weights are the absolute mean differences between bands, as shown in Fig. 3. Kruskal’s algorithm [26] is used to construct this MST. For example, if there are three bands A, B, and C that are all connected to each other, the edge weights are the absolute mean differences between these three bands, as shown in Fig. 3. After Kruskal’s algorithm is applied, one out of three results is obtained, representing the new ordering of these three bands (i.e., the output is ABC, BAC, or BCA), as shown in Fig. 3.

Kruskal’s algorithm is applied to all hyperspectral image bands and the output of the algorithm is a set of band numbers corresponding to the path that indicates how the image bands should be rearranged in order to achieve a minimum distortion between the bands. Eventually, bands with a similar or close mean value are allocated together.
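The band ordering step can be illustrated with a minimal Python sketch. It is not the authors' implementation: the function name `order_bands_by_mean`, the `(bands, rows, cols)` array layout, and in particular the way the tree is turned into a sequence (a depth-first walk starting from the band with the lowest mean) are assumptions made here for illustration.

```python
import numpy as np

def order_bands_by_mean(cube):
    """Return a band ordering from an MST over absolute mean differences.

    cube: array of shape (bands, rows, cols). Hypothetical helper.
    """
    means = cube.reshape(cube.shape[0], -1).mean(axis=1)
    n = len(means)
    # Complete graph: one weighted edge per pair of bands.
    edges = sorted(
        (abs(means[i] - means[j]), i, j) for i in range(n) for j in range(i + 1, n)
    )
    parent = list(range(n))

    def find(a):  # union-find root lookup with path halving
        while parent[a] != a:
            parent[a] = parent[parent[a]]
            a = parent[a]
        return a

    adjacency = {i: [] for i in range(n)}
    for _, i, j in edges:  # Kruskal: keep the lightest edge joining two trees
        ri, rj = find(i), find(j)
        if ri != rj:
            parent[ri] = rj
            adjacency[i].append(j)
            adjacency[j].append(i)

    # Assumption: linearize the tree by a depth-first walk from the lowest-mean band.
    order, stack, seen = [], [int(np.argmin(means))], set()
    while stack:
        node = stack.pop()
        if node in seen:
            continue
        seen.add(node)
        order.append(node)
        # Push neighbours so that the one with the lowest mean is visited first.
        stack.extend(sorted(adjacency[node], key=lambda k: -means[k]))
    return order
```

Because the edge weights are absolute differences of scalar means, the MST for distinct band means is the chain of bands sorted by mean, so in that case the sketch returns the same order as a plain sort by mean value.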

3.1.2 Data normalization

In the second step of pre-processing, the ordered hyperspectral image r is normalized: for each band s, the integer mean value $m_s$ is subtracted and the result is divided by the scaled value $z_s$,

$$n_s = \frac{r_s - m_s}{z_s},$$

where each band s contains N rows and M columns. For each band s, the mean value $m_s$ and the scaled value $z_s$ need to be transmitted to the receiver as metadata so that band s can be recovered. The first band of the normalized hyperspectral image is coded and transmitted as in SoftCast, using the DCT, power allocation, and the Hadamard transform. The rest of this section focuses on the compression and transmission of the other bands (i.e., the sequence of bands from 2 to λ, where λ is the number of spectral bands).
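As a rough illustration of the normalization and of how the receiver can undo it from the metadata, consider the following Python sketch. The helper names are hypothetical, and the choice of the standard deviation as the scaled value is an assumption made only for this example; the text above does not fix how the scaled value is computed.

```python
import numpy as np

def normalize_band(band):
    """Return (normalized band, m_s, z_s) for one band of N x M pixels."""
    m_s = int(np.rint(band.mean()))      # integer mean value of the band
    z_s = float(band.std()) or 1.0       # ASSUMED scale: standard deviation (1.0 for flat bands)
    return (band - m_s) / z_s, m_s, z_s

def denormalize_band(norm_band, m_s, z_s):
    """Invert the normalization using the metadata (m_s, z_s)."""
    return norm_band * z_s + m_s

band = np.arange(12, dtype=float).reshape(3, 4) * 100.0   # toy 3 x 4 band
n_band, m_s, z_s = normalize_band(band)
restored = denormalize_band(n_band, m_s, z_s)
```

Only two scalars per band are needed for the inversion, which is why sending them as metadata is cheap compared with the image data.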

3.2 Wavelet transform

Each of the remaining bands s in the hyperspectral image is first decomposed by a spatial 2D-DWT with three-level decomposition to obtain ten wavelet sub-bands in w, which comprises $w_d$ and $w_{LL}$. Nine of these wavelet sub-bands, LH, HL, HH, LL-LH, LL-HL, LL-HH, LL-LL-LH, LL-LL-HL, and LL-LL-HH, represent the details of the image band in $w_d$. The tenth wavelet sub-band is LL-LL-LL in $w_{LL}$. The Daubechies 9/7 filter bank, introduced in [1], is used for the transformation.

With the DWT, the wavelet differences (detail sub-bands) and the LL-LL-LL wavelet sub-band are obtained from the image band. Coset values are computed only for the LL-LL-LL wavelet sub-band rather than for the whole image, which decreases the computational complexity. Moreover, compression is gained, because the wavelet differences and the coset indices typically have lower entropy than the original source values.
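The sub-band layout produced by a three-level decomposition can be sketched in Python as follows. To keep the example self-contained, an orthonormal Haar filter is swapped in for the Daubechies 9/7 filter bank used in the scheme, so the coefficient values differ from those of the actual transform; only the structure (nine detail sub-bands plus LL-LL-LL) is illustrated. The function names are hypothetical, the LH/HL labelling follows one of several conventions in use, and the band dimensions are assumed to be divisible by 8.

```python
import numpy as np

def haar_level(x):
    """One orthonormal 2D Haar step; returns (LL, LH, HL, HH)."""
    lo = (x[0::2, :] + x[1::2, :]) / np.sqrt(2)   # low-pass along rows
    hi = (x[0::2, :] - x[1::2, :]) / np.sqrt(2)   # high-pass along rows
    ll = (lo[:, 0::2] + lo[:, 1::2]) / np.sqrt(2)
    lh = (lo[:, 0::2] - lo[:, 1::2]) / np.sqrt(2)
    hl = (hi[:, 0::2] + hi[:, 1::2]) / np.sqrt(2)
    hh = (hi[:, 0::2] - hi[:, 1::2]) / np.sqrt(2)
    return ll, lh, hl, hh

def three_level_subbands(band):
    """Return a dict holding the nine detail sub-bands and LL-LL-LL."""
    subbands, approx, prefix = {}, band.astype(float), ""
    for _ in range(3):
        approx, lh, hl, hh = haar_level(approx)
        subbands[prefix + "LH"] = lh
        subbands[prefix + "HL"] = hl
        subbands[prefix + "HH"] = hh
        prefix += "LL-"                            # the next level splits the LL part
    subbands["LL-LL-LL"] = approx
    return subbands
```

For a 512 x 512 band, the LL-LL-LL sub-band is only 64 x 64, which is why restricting the DCT and coset coding to it keeps the encoder light.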

3.3 Coset coding

The detail sub-bands of the full-size image band s are already obtained in the DWT step in $w_d$, and there remains the average sub-band in $w_{LL}$ (i.e., the LL-LL-LL wavelet sub-band). In the proposed scheme, the coset coding scheme proposed in [16] is used only for the LL-LL-LL wavelet sub-band of each image band. Coset coding is a typical technique used in DSC, and here the coset values represent the details of the LL-LL-LL wavelet sub-band. As mentioned above, coset coding achieves compression because the coset values typically have lower entropy than the source values. Moreover, DSC has a lower encoding complexity than traditional compression techniques.

In the proposed scheme, the DCT is applied to the LL-LL-LL wavelet sub-band of band s, and the DCT coefficients d are encoded to obtain the coset values c using the coset step $q_s$ for band s, which is calculated by estimating the noise of the decoder prediction as shown in [16]. The coset data c and the differences group $w_d$ are then combined into one signal t for transmission over the wireless channel.
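The idea behind coset encoding can be sketched in a few lines of Python. The formula used here, which keeps the offset of each coefficient from the nearest multiple of the coset step, is the common scalar modulo form and is an assumption for illustration; the function name `coset_encode` and the sample values are likewise hypothetical.

```python
import numpy as np

def coset_encode(d, q):
    """Keep only the offset of each coefficient from the nearest multiple of q."""
    return d - np.floor(d / q + 0.5) * q

d = np.array([-7.3, 0.2, 12.9, 100.0])   # toy DCT coefficients
c = coset_encode(d, q=4.0)               # coset values in [-q/2, q/2)
```

Whatever the magnitude of a coefficient, its coset value never exceeds half the coset step in absolute value, so large low-frequency coefficients no longer dominate the transmission power.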

3.4 Power allocation and transmission

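Before transmission, the signal t for band s contains K frequencies with different variances. The signal t is therefore scaled by the factor $g_s$, which allocates the power optimally in the sense of minimizing the distortion caused by channel errors [22]. Following the allocation in [22], $g_s = \mathrm{diag}(g_1, \ldots, g_K)$ with

$$g_i = \upsilon_i^{-1/4} \sqrt{\frac{P}{\sum_{j=1}^{K} \sqrt{\upsilon_j}}},$$

where $\upsilon_i$ is the variance of $t_i$, K is the number of frequencies, and P is the total transmission power. The signal after power allocation is

$$u = g_s \cdot t.$$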

After power allocation, to redistribute energy, the whitening module protects the weighted signal u against packet losses by multiplying it by the Hadamard matrix H. The outputs are then transmitted using the raw OFDM without FEC or modulation.
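The two steps above, scaling followed by Hadamard whitening, can be sketched in Python as follows. The gains follow the SoftCast-style rule in which each gain is proportional to the variance raised to the power -1/4; the function names, the variances, and the power budget are illustrative assumptions, and the Hadamard matrix is built by the Sylvester construction, which requires a power-of-two order.

```python
import numpy as np

def power_scaling(variances, total_power):
    """SoftCast-style gains: g_i proportional to variance_i ** (-1/4)."""
    v = np.asarray(variances, dtype=float)
    return v ** -0.25 * np.sqrt(total_power / np.sqrt(v).sum())

def hadamard(order):
    """Orthonormal Sylvester-Hadamard matrix; order must be a power of two."""
    h = np.array([[1.0]])
    while h.shape[0] < order:
        h = np.block([[h, h], [h, -h]])
    return h / np.sqrt(order)

variances = np.array([400.0, 25.0, 4.0, 1.0])   # illustrative values only
g = power_scaling(variances, total_power=4.0)
H = hadamard(4)
t = np.array([20.0, -5.0, 2.0, 1.0])            # one sample per frequency
y = H @ (g * t)                                  # whitened channel input
```

The gains spend exactly the power budget on average, and because the normalized Hadamard matrix is orthogonal, the whitening step spreads the energy across the outputs without changing the total.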

In addition to the image data, the encoder sends a small amount of metadata, which contains $m_s$, $z_s$, and $\{\upsilon_i\}$, to assist the decoder in inverting the received signal. The metadata is transmitted using a traditional digital method (i.e., OFDM in the 802.11 PHY with FEC and modulation). Here, binary phase shift keying (BPSK) is used for modulation, and a half-rate convolutional code is used for FEC to protect the metadata from channel errors.

3.5 LLSE decoder

At the receiver, for each transmitted signal y, a noisy signal is received:

$$y' = y + n,$$

where n is random channel noise. The LLSE is used to reconstruct the original signal as follows:

$$t' = \Lambda_t R_s^{T} \left( R_s \Lambda_t R_s^{T} + \Lambda_n \right)^{-1} y',$$

where $R_s = H \cdot g_s$ for band s, and $\Lambda_n$ and $\Lambda_t$ are the covariance matrices of n and t, respectively.
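A minimal Python sketch of a linear least squares estimator for a received signal of the form R t + n is given below. The function name `llse_decode` and the toy 2 x 2 setup are assumptions for illustration, and the explicit matrix inverse is used for readability rather than numerical efficiency.

```python
import numpy as np

def llse_decode(y_received, R, cov_t, cov_n):
    """Linear least squares estimate of t from y' = R t + n."""
    gain = cov_t @ R.T @ np.linalg.inv(R @ cov_t @ R.T + cov_n)
    return gain @ y_received

# Toy setup: 2 x 2 orthonormal Hadamard matrix times diagonal power gains.
R = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2) @ np.diag([0.5, 2.0])
cov_t = np.diag([400.0, 4.0])                    # assumed signal covariance
t = np.array([10.0, -1.0])
y_clean = R @ t

t_good = llse_decode(y_clean, R, cov_t, 1e-9 * np.eye(2))   # nearly noiseless channel
t_bad = llse_decode(y_clean, R, cov_t, 1e9 * np.eye(2))     # noise-dominated channel
```

With negligible noise the estimator simply inverts R, while for a very noisy channel it shrinks the estimate toward zero; this is the graceful degradation that replaces the cliff effect.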

3.6 Inverse coset and transforms

The signal $t'$ obtained from the LLSE decoder contains two groups: the reconstructed wavelet differences sub-bands in $w'_d$, and the reconstructed coset values of the LL-LL-LL wavelet sub-band in $c'$.

For $c'$, side information is generated at the receiver to help the coset decoder reconstruct the DCT coefficients of the LL-LL-LL wavelet sub-band. Here, the side information SI consists of the DCT coefficients of the previously reconstructed LL-LL-LL wavelet sub-band, as shown in Fig. 2. Then, the inverse of coset coding is applied to the reconstructed signal $c'$ using $SI_{s-1}$ and $q'_s$, where $SI_{s-1}$ is the side information for band s that comes from the previously reconstructed band s−1, and $q'_s$ is the coset step for band s, calculated based on the noise and the previously received band as shown in [16]. Once the decoder has obtained all the reconstructed DCT coefficients in $d'$, the group $w'_{LL}$ (i.e., the reconstructed LL-LL-LL wavelet sub-band for band s) is recovered by applying the inverse DCT. In the final step, the two groups $w'_{LL}$ and $w'_d$ are combined into one data set $w'$, and the inverse 2D-DWT with three-level decomposition is applied to get the reconstructed band $s'$.
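The role of the side information in coset decoding can be illustrated with a short Python sketch. As an assumption for this example, the decoder picks, among all values that share the received coset value, the one closest to the side information; both function names and all numbers are hypothetical, and the encoder is repeated only to make the example self-contained.

```python
import numpy as np

def coset_encode(d, q):
    """Offset of each coefficient from the nearest multiple of q."""
    return d - np.floor(d / q + 0.5) * q

def coset_decode(c_received, side_info, q):
    """Pick the coset member closest to the side information."""
    return c_received + np.floor((side_info - c_received) / q + 0.5) * q

q = 4.0
d = np.array([-7.3, 0.2, 12.9, 100.0])                  # coefficients of band s
c = coset_encode(d, q)

si_close = d + np.array([1.5, -1.0, 0.9, -1.9])         # prediction error below q/2
si_far = d + 3.0                                        # prediction error above q/2

d_ok = coset_decode(c, si_close, q)
d_wrong = coset_decode(c, si_far, q)
```

Decoding is exact as long as the side information is within half a coset step of the true coefficient; otherwise the decoder lands on a neighbouring coset member, which is why the coset step has to be matched to the expected prediction noise.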

3.7 Reprocessing

After all bands have been reconstructed, they are collected to form the normalized hyperspectral image $n'$. The pre-processing is then inverted to obtain the final reconstructed hyperspectral image $x'$.

Note that the scaled value $z_s$ and the mean value $m_s$ are transmitted as metadata by the encoder for each band s.

4 Experimental results

4.1 Dataset

The proposed scheme has been tested on several hyperspectral images from the Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) sensor, developed by the NASA Jet Propulsion Laboratory in 1987. It provides spectral images with 224 contiguous bands covering the spectral range from 0.41 μm to 2.45 μm in 10 nm bands. The dataset provided by the Consultative Committee for Space Data Systems (CCSDS) [10] comprises five uncalibrated radiance hyperspectral images acquired over Yellowstone, WY in 2006, and is publicly available from the AVIRIS web site [21]. All images have 16 bits per pixel per band (bpppb). Technical names and sizes are provided in Table 1.

4.2 Pre-processing assessment

In this subsection, the performance of the pre-processing step is evaluated and it shows that there is an improvement in the image quality compared to the scheme without pre-processing (see Table 2).

Quality is computed by comparing the original image x(M, N, λ) with the recovered image $x'$(M, N, λ). The methods are evaluated using the peak signal-to-noise ratio (PSNR), a standard metric of image/video quality:

$$\mathrm{PSNR} = 10 \log_{10} \frac{L^2}{\mathrm{MSE}}, \qquad \mathrm{MSE} = \frac{1}{M N \lambda} \sum_{i=1}^{M} \sum_{j=1}^{N} \sum_{k=1}^{\lambda} \left( x(i, j, k) - x'(i, j, k) \right)^2,$$

where λ is the number of satellite image bands, and L is the maximum possible pixel value of the satellite image (i.e., $L = 2^B - 1$, where B is the bit depth). Typical values for the PSNR in lossy hyperspectral image compression are between 60 and 80 dB, provided that the bit depth is 16 bits.
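For reference, the PSNR over a whole hyperspectral cube can be computed with a few lines of Python. This is a minimal sketch using the standard definition (mean squared error over all pixels and bands, peak value 2^B - 1); the function name `psnr` and its `bit_depth` parameter are choices made for this example.

```python
import numpy as np

def psnr(original, recovered, bit_depth=16):
    """PSNR in dB over all pixels and bands of a hyperspectral cube."""
    diff = original.astype(np.float64) - recovered.astype(np.float64)
    mse = np.mean(diff ** 2)
    peak = 2 ** bit_depth - 1                    # L = 2^B - 1
    return float("inf") if mse == 0 else 10.0 * np.log10(peak ** 2 / mse)

x = np.zeros((2, 2, 2), dtype=np.uint16)         # toy cube: rows x cols x bands
x_rec = np.ones((2, 2, 2), dtype=np.uint16)      # every sample off by one
value = psnr(x, x_rec)
```

Converting to floating point before subtracting matters for 16-bit data, since unsigned integer subtraction would wrap around instead of producing negative differences.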

The PSNRs of the reconstructed images are compared in Table 2. The results show that the proposed scheme with the pre-processing step achieves better performance than the variants without pre-processing.

4.3 Broadcasting results

In this subsection, the performance of the proposed scheme in broadcasting channels is evaluated, and is compared to SoftCast-2D, SoftCast-3D, LineCast, and JPEG2000 with different combinations of FEC rates and modulation methods. All frameworks have been implemented using MATLAB R2014a. Five tests were conducted.

In SoftCast-2D [22], the 2D DCT is applied to every band of the selected hyperspectral images separately, whereas SoftCast-3D [23] uses the 3D DCT to remove both spectral and spatial redundancies from the hyperspectral image data. SoftCast-3D divides the image data into groups of bands (each group includes four bands). Unlike SoftCast, LineCast compresses every scanned line of an image by transform-domain scalar modulo quantization without prediction. LineCast [41] has been implemented for every band of the selected hyperspectral images separately. In conventional frameworks based on JPEG2000, DWT and PCA are often used as spectral decorrelators [29]. In the presented experiments, JPEG2000 with PCA as the spectral decorrelator is used for digital source coding and is called JPEG2000-PCA. The hyperspectral image data is first decorrelated with PCA, and then a 2D DWT with five-level decomposition is used for spatial decorrelation. The 9/7 filter is used in the DWT. In addition, JPEG2000 with the OrthOST transform [4, 7] is used for digital source coding and is called JPEG2000-OrthOST, and JPEG2000 with CB-2SDQ [2, 5] is used for digital source coding and is called JPEG2000-CB-2SDQ. The reference software BOI [3] was used for the JPEG2000 implementation.

The bit rates for the conventional framework based on JPEG2000 are shown in Table 3. For SoftCast, LineCast, and the proposed scheme, there is no bit rate but only a channel symbol rate. The channel noise is assumed to be Gaussian and the channel bandwidth is equal to the source bandwidth. All the frameworks consume the same bandwidth and transmission power. The proposed scheme improves the average image quality by 5.63, 5.42, and 4.72 dB over JPEG2000-PCA, JPEG2000-OrthOST, and JPEG2000-CB-2SDQ, respectively.

The PSNRs of the reconstructed satellite images for each scheme under channel SNRs between 5 and 25 dB are given in Fig. 4. It can be seen that the proposed scheme achieves better performance than the other schemes in the broadcasting of satellite hyperspectral images.

In Fig. 4, separate source-channel coding (i.e., JPEG2000 with FEC and modulation) exhibits the cliff effect for all seven conventional transmission approaches. For example, the approach ‘JPEG2000, 3/4FEC, QPSK’ performs well when the channel SNR is between 10 and 12 dB, but performs poorly when the channel SNR is below this range, and its image quality does not improve for SNRs higher than 12 dB.

Note that the result in Fig. 4 does not mean that the proposed scheme outperforms JPEG2000-PCA, JPEG2000-CB-2SDQ, or JPEG2000-OrthOST in compression efficiency. These methods are satellite image coding standards, while the proposed scheme is a wireless satellite image transmission framework. The compression methods have very high compression efficiency, but the bitstream is not very robust to errors. This is why the bitstreams need additional FEC bits for protection. The proposed scheme, however, is robust to channel noise. Thus, it can skip FEC and achieve high transmission efficiency.

4.4 Visual quality

A visual quality comparison is given in Fig. 5. The channel SNR is set to 5 dB, and band 152 of hyperspectral image 2 (YellowstoneUnSc3) is selected for comparison. The proposed scheme shows better visual quality than the others. It delivers a PSNR gain of up to 6.91, 3.00, 7.68 and 5.63 dB over LineCast, SoftCast-3D, SoftCast-2D, and JPEG2000 with FEC and modulation, respectively.

Fig. 5 Visual quality comparison at SNR = 5 dB. (a) Image 2: YellowstoneUnSc3 band 152, full size. (b, c) First and second rectangular regions, respectively. The six results, from left to right and top to bottom, are: Original, JPEG2000 at 0.25 bpppb with 1/2 FEC and BPSK, SoftCast-2D, SoftCast-3D, LineCast, and Proposed

4.5 Encoding complexity

At the encoder of the proposed scheme, coset coding based on DSC is used for the wavelet LL-LL-LL sub-band. DSC provides a lower encoding complexity than traditional compression techniques. Moreover, the proposed scheme does not apply the DCT to the wavelet detail sub-bands. Therefore, the encoding process has low complexity. Figure 6 shows the average encoding time per hyperspectral image for the different schemes (JPEG2000 with FEC and modulation, SoftCast, LineCast and the proposed scheme). The test machine has an Intel(R) Xeon(R) CPU E3-1230 V2 @ 3.30GHz 3.70GHz, 32 GB of memory, and Microsoft Windows 8.1 Enterprise 64-bit. All encoding times in Fig. 6 are measured in seconds.
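To illustrate why coset coding is cheap at the encoder, here is a minimal sketch of a generic integer modulo coset code. It is an assumption-laden illustration, not the paper's exact formulation: the functions `coset_encode` and `coset_decode`, the coset size `m`, and the use of integer samples are all hypothetical. The encoder only computes a remainder; the decoder carries the burden of resolving the ambiguity using side information (for example, a prediction of the sample).

```python
def coset_encode(x, m):
    """Encoder: keep only the coset index of integer sample x.

    This is a single modulo operation, which is where the low
    encoding complexity comes from.
    """
    return x % m


def coset_decode(coset, side_info, m):
    """Decoder: pick the member of the coset closest to the side information.

    The coset is {coset + k*m for integer k}. Recovery is exact as long
    as |x - side_info| < m / 2.
    """
    k = round((side_info - coset) / m)
    return coset + k * m


if __name__ == "__main__":
    x, m = 1003, 16                 # hypothetical sample and coset size
    c = coset_encode(x, m)          # small value sent instead of x
    print(c, coset_decode(c, 1000, m))
```

The design trade-off is visible in `m`: a smaller coset size reduces the magnitude of the transmitted values, but requires more accurate side information at the decoder.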

As shown in Fig. 6, the encoding time of JPEG2000 with FEC and modulation grows as the bitrate increases. The proposed scheme has a shorter encoding time than JPEG2000 when the FEC rate is 3/4 and the modulation is 16QAM, and an approximately equal encoding time when the FEC rate is 1/2 and the modulation is 16QAM. The proposed scheme uses neither FEC nor modulation. SoftCast-3D has the longest encoding time because of the 3D DCT used in its encoding process. Overall, the proposed scheme has a shorter encoding time than the other state-of-the-art schemes (i.e., SoftCast-3D, SoftCast-2D, and LineCast).

5 Conclusions

An efficient joint source-channel coding scheme for the transmission of satellite hyperspectral images is proposed in this paper. The scheme is based on the discrete wavelet transform (DWT) and distributed source coding (DSC). First, a new band ordering algorithm for satellite hyperspectral images is proposed. Furthermore, the wavelet transform is exploited to represent the details of each image band. Coset coding is used to achieve low encoding complexity and efficient compression performance. The proposed scheme avoids the cliff effect found in digital broadcasting schemes by using a linear transform between the transmitted image signal and the original pixel luminance. Experimental results on the selected hyperspectral datasets demonstrate that the proposed scheme is more effective than the other schemes.

References

Antonini M, Barlaud M, Mathieu P, Daubechies I (1992) Image coding using wavelet transform. IEEE Trans Image Process 1(2):205–220

Auli-Llinas F (2013) 2-step scalar deadzone quantization for bitplane image coding. IEEE Trans Image Process 22(12):4678–4688

Aulí-Llinàs F (2014) BOI codec. [Online]. Available: http://www.deic.uab.cat/~francesc/software/boi/

Barret M, Gutzwiller J-L, Hariti M (2011) Low-complexity hyperspectral image coding using exogenous orthogonal optimal spectral transform (orthost) and degree-2 zerotrees. IEEE Trans Geosci Remote Sens 49(5):1557–1566

Bartrina-Rapesta J, Aulí-Llinàs F (2015) Cell-based two-step scalar deadzone quantization for high bit-depth hyperspectral image coding. IEEE Geosci Remote Sens Lett 12(9):1893–1897

Beck RA, Vincent RK, Watts DW, Seibert MA, Pleva DP, Cauley MA, Ramos CT, Scott TM, Harter DW, Vickerman M et al (2005) A space-based end-to-end prototype geographic information network for lunar and planetary exploration and emergency response (2002 and 2003 field experiments). Comput Netw 47(5):765–783

Bita IPA, Barret M, Pham D-T (2010) On optimal transforms in lossy compression of multicomponent images with jpeg2000. Signal Process 90(3):759–773

Blanes I, Magli E, Serra-Sagrista J (2014) A tutorial on image compression for optical space imaging systems. IEEE Geosci Remote Sens Mag 2(3):8–26

Carvajal G, Penna B, Magli E et al (2008) Unified lossy and near-lossless hyperspectral image compression based on jpeg 2000. IEEE Geosci Remote Sens Lett 5(4):593–597

Consultative committee for space data systems (2014). [Online]. Available: http://www.ccsds.org

Crowley MD, Chen W, Sukalac EJ, Sun X, Coronado PL, Zhang G-Q (2006) Visualization of remote hyperspectral image data using google earth. In: Geoscience and Remote Sensing Symposium, 2006. IGARSS 2006. IEEE International Conference on. IEEE, 2006, pp. 907–910

Du Q, Fowler JE (2007) Hyperspectral image compression using jpeg2000 and principal component analysis. IEEE Geosci Remote Sens Lett 4(2):201–205

Evans B, Werner M, Lutz E, Bousquet M, Corazza GE, Maral G (2005) Integration of satellite and terrestrial systems in future multimedia communications. IEEE Wirel Commun 12(5):72–80

Fan X, Wu F, Zhao D, Au OC, Gao W (2012) Distributed soft video broadcast (dcast) with explicit motion. In: Data Compression Conference (DCC), 2012. IEEE, 2012, pp. 199–208

Fan X, Wu F, Zhao D, Au OC (2013) Distributed wireless visual communication with power distortion optimization. IEEE Trans Circuits Syst Video Technol 23(6):1040–1053

Fan X, Wu F, Zhao D (2011) D-cast: Dsc based soft mobile video broadcast. In: Proceedings of the 10th International Conference on Mobile and Ubiquitous Multimedia. ACM, 2011, pp. 226–235

Ghandi MM, Ghanbari M (2006) Layered H.264 video transmission with hierarchical QAM. J Vis Commun Image Represent 17(2):451–466

Hagag A, Amin M, El-Samie FEA (2013) Simultaneous denoising and compression of multispectral images. J Appl Remote Sens 7(1):073511

Hagag A, Amin M, El-Samie FEA (2015) Multispectral image compression with band ordering and wavelet transforms. SIViP 9(4):769–778

Herwitz S, Johnson L, Dunagan S, Higgins R, Sullivan D, Zheng J, Lobitz B, Leung J, Gallmeyer B, Aoyagi M et al (2004) Imaging from an unmanned aerial vehicle: agricultural surveillance and decision support. Comput Electron Agric 44(1):49–61

Hyperspectral datasets available for download from the NASA web site, 2014. [Online]. Available: http://compression.jpl.nasa.gov/hyperspectral/

Jakubczak S, Katabi D (2011) Softcast: one-size-fits-all wireless video. ACM SIGCOMM Comp Commun Rev 41(4):449–450

Jakubczak S, Katabi D (2011) A cross-layer design for scalable mobile video. In: Proceedings of the 17th annual international conference on Mobile computing and networking. ACM, 2011, pp. 289–300

Johnson M, Freeman K, Gilstrap R, Beck R (2004) Networking technologies enable advances in earth science. Comput Netw 46(3):423–435

Kratochvíl T (2009) Hierarchical modulation in dvb-t/h mobile tv transmission. In: Multi-carrier systems & solutions 2009. Springer, 2009, pp. 333–341

Kruskal JB (1956) On the shortest spanning subtree of a graph and the traveling salesman problem. Proc Am Math Soc 7(1):48–50

Liveris AD, Xiong Z, Georghiades CN (2002) Joint source-channel coding of binary sources with side information at the decoder using ira codes. In: Multimedia Signal Processing, 2002 IEEE Workshop on. IEEE, 2002, pp. 53–56

Penna B, Tillo T, Magli E, Olmo G (2006) Progressive 3-d coding of hyperspectral images based on jpeg 2000. IEEE Geosci Remote Sens Lett 3(1):125–129

Penna B, Tillo T, Magli E, Olmo G (2007) Transform coding techniques for lossy hyperspectral data compression. IEEE Trans Geosci Remote Sens 45(5):1408–1421

Ramchandran K, Ortega A, Uz KM, Vetterli M (1993) Multiresolution broadcast for digital hdtv using joint source/channel coding. IEEE J Sel Areas Commun 11(1):6–23

Reznic Z, Feder M, Freundlich S (2011) Apparatus and method for applying unequal error protection during wireless video transmission, Aug. 2 2011, US Patent App. 13/137,263

Schwarz H, Marpe D, Wiegand T (2007) Overview of the scalable video coding extension of the H.264/AVC standard. IEEE Trans Circuits Syst Video Technol 17(9):1103–1120

Shannon CE (2001) A mathematical theory of communication. ACM SIGMOBILE Mob Comput Commun Rev 5(1):3–55

Shannon CE et al (1961) Two-way communication channels. In: Proc. 4th Berkeley Symp. Math. Stat. Prob, vol. 1. Citeseer, 1961, pp. 611–644

Slepian D, Wolf JK (1973) Noiseless coding of correlated information sources. IEEE Trans Inf Theory 19(4):471–480

Tate SR (1997) Band ordering in lossless compression of multispectral images. IEEE Trans Comput 46(4):477–483

Taubman D, Marcellin M (2012) JPEG2000: image compression fundamentals, standards and practice. Springer Science & Business Media, 2012, vol. 642

Toivanen P, Kubasova O, Mielikainen J (2005) Correlation-based band-ordering heuristic for lossless compression of hyperspectral sounder data. IEEE Geosci Remote Sens Lett 2(1):50–54

Valsesia D, Magli E (2014) A novel rate control algorithm for onboard predictive coding of multispectral and hyperspectral images. IEEE Trans Geosci Remote Sens 52(10):6341–6355

Wu S, Chen H, Bai Y, Zhu G (2016) A remote sensing image classification method based on sparse representation. Multimed Tools Appl, pp. 1–18

Wu F, Peng X, Xu J (2014) Linecast: line-based distributed coding and transmission for broadcasting satellite images. IEEE Trans Image Process 23(3):1015–1027

Xu Q, Stankovic V, Xiong Z (2007) Distributed joint source-channel coding of video using raptor codes. IEEE J Sel Areas Commun 25(4):851–861

Yan C, Zhang Y, Dai F, Wang X, Li L, Dai Q (2014) Parallel deblocking filter for hevc on many-core processor. Electron Lett 50(5):367–368

Yan C, Zhang Y, Dai F, Zhang J, Li L, Dai Q (2014) Efficient parallel hevc intra-prediction on many-core processor. Electron Lett 50(11):805–806

Yan C, Zhang Y, Xu J, Dai F, Li L, Dai Q, Wu F (2014) A highly parallel framework for hevc coding unit partitioning tree decision on many-core processors. IEEE Signal Processing Letters 21(5):573–576

Yan C, Zhang Y, Xu J, Dai F, Zhang J, Dai Q, Wu F (2014) Efficient parallel framework for hevc motion estimation on many-core processors. IEEE Trans Circuits Syst Video Technol 24(12):2077–2089

Acknowledgements

This work was supported in part by the National Science Foundation of China (NSFC) under grants 61472101, 61631017 and 61390513, the Major State Basic Research Development Program of China (973 Program 2015CB351804), and the National High Technology Research and Development Program of China (863 Program 2015AA015903). The authors would like to thank Prof. Dr. Michel Barret and Dr. Ibrahim Omara for their support in this work and also the anonymous reviewers for their valuable comments that greatly improved this paper.


Cite this article

Hagag, A., Fan, X. & Abd El-Samie, F.E. Hyperspectral image coding and transmission scheme based on wavelet transform and distributed source coding. Multimed Tools Appl 76, 23757–23776 (2017). https://doi.org/10.1007/s11042-016-4158-8


DOI: https://doi.org/10.1007/s11042-016-4158-8