Abstract

In previous research on human machine interaction, parameters or templates of gestures are always learnt from training samples first and then a certain kind of matching is conducted. For these training-required methods, a small number of training samples always result in poor or user-independent performance, while a large quantity of training samples lead to time-consuming and laborious sample collection processes. In this paper, a high-performance training-free approach for hand gesture recognition with accelerometer is proposed. First, we determine the underlining space for gesture generation with the physical meaning of acceleration direction. Then, the template of each gesture in the underlining space can be generated from the gesture trails, which are frequently provided in the instructions of gesture recognition devices. Thus, during the gesture template generation process, the algorithm does not require training samples any more and fulfills training-free gesture recognition. After that, a feature extraction method, which transforms the original acceleration sequence into a sequence of more user-invariant features in the underlining space, and a more robust template matching method, which is based on dynamic programming, are presented to finish the gesture recognition process and enhance the system performance. Our algorithm is tested in a 28-user experiment with 2,240 gesture samples and this training-free algorithm shows better performance than the traditional training-required algorithms of Hidden Markov Model (HMM) and Dynamic Time Warping (DTW).

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

As computers and mobile devices are becoming increasingly important in our daily life, many researches are focused on human machine interaction. Traditional methods, such as mouse, keyboard or button based remote control, bore the youngsters and, more seriously, trouble the blind and the old, since they can not see the buttons on the keyboard or remoter controller clearly, nor can they track the trail of the mouse. Therefore it is necessary to propose other human machine interaction methods. Hand gesture, which is a natural way of communication between individuals, becomes one of the most suitable ways and attracts a large number of research groups [15, 24, 35, 37, 41].

With the rapid development of sensors, 3D accelerometers are becoming much cheaper and more widely used in mobile devices. Accelerometer based gesture recognition has been highlighted by a number of research groups. For example, Elisabetta Farella, et al., detected the movement of the human body by analyzing the accelerometer network [9]. Wolfgang Hürst et al., chose the accelerometer, together with the compass, to fulfill gesture-based interaction for mobile augmented reality [14]. Andrew Wilson et al., implemented a gesture based interaction device called Xwand [38]. Cho et al., proposed Magic Wand to control home electronic facilities [7]. Chen et al., adopted the gesture-aware device as a presentation tool [6]. There has been numerous researches on the applications of the acceleration sensor in mobile phone platform [3, 5, 8, 19–21, 36]. Also, accelerometer based gesture researches have shown great potential for applications in e-Learning/e-Teaching [11–13].

In previous work, the gesture recognition algorithms can be mainly divided into two categories: template based methods and model based methods, as shown in Table 1. Among the template based methods, which set training examples as gesture templates, Dynamic Time Warping (DTW) is perhaps the most frequently used algorithm. It is easy to implement and requires only one training sample to initiate. DTW based researches have shown satisfied performance in a number of user-dependent systems [2, 17, 40]. However, since people may have various personalities when performing the same gesture, setting gesture samples from one person as templates always results in poor performance when used by others. Thus DTW fails in the user-independent applications [39]. In contrast, model based methods learn the parameters which describe each gesture from a large quantity of training samples. Thus, HMM, which is the most widely used model based method, can perform well in many user-independent applications [16, 23, 27, 30]. Also, Hidden Conditional Random Fields (HCRF) [33], Dynamic Bayesian Networks (DBN) [34], and Self Organizing Networks (SON) [10] have been implemented in some previous gesture recognition systems. However, the determination of the parameters in model based method must require a large number of training samples from different users, which leads to a time-consuming and laborious sample collection process. But with a limited number of training samples, there will be a sharp decline in system performance.

Whether template based or model based methods, the templates or parameters of the gestures are always learnt from training samples first and then certain kind of matching is conducted to output the classification result. For these training-required methods, a small number of training samples always result in poor performance and a large quantity of training samples result in time-consuming and laborious sample collection process. Thus, training-free recognition is becoming a hot research topic in many fields recently [26, 31]. Without useful information of the gestures, training-free gesture recognition seems a task impossible. However, standard gesture trails, the crucial prior information ignored in previous researches, are frequently provided in the instructions for user-independent gesture recognition applications. With this prior knowledge, we can do much more.

In this paper, by exploiting gesture trail information, high-performance training-free hand gesture recognition with accelerometer is fulfilled. First, we determine the underlining space for gesture generation with the physical meaning of acceleration direction. Then, the template of each gesture in underlining space can be generated from gesture trails directly, which are frequently provided in the instructions of gesture recognition devices. Thus, during the gesture template generation process, fulfills the training-free gesture recognition without requiring training samples. After that, a feature extraction method, which transforms the original acceleration sequence into a sequence of more user-invariant features in underlining space, and a more robust template matching method, which is based on dynamic programming, are presented to finish the gesture recognition process and enhance the system performance. To test the algorithm’s performance, 2,280 gesture samples are collected from 28 persons for 8 gestures used in DVD control [18]. An accuracy of 93.1 %, which is even better than standard training-required methods of DTW(an accuracy of 88.7 % for the best template, and an accuracy of 72.9 % on average) and HMM(an accuracy of 91.1 % when using 90 % percentage samples as training ones), is achieved by our training-free method of MMDTW in the user-independent experiment.

The remainder of the paper is organized as follows. First, classical algorithms of DTW and HMM are discussed in Section 2. In Section 3, key points in designing high-performance training-free algorithm are addressed. Then Section 4 proposes the algorithm for training-free accelerometer based gesture recognition. Section 5 tests the performance of the algorithm and some conclusions are drawn in Section 6.

2 Related work

This section discusses the classical algorithms for gesture recognition: DTW and HMM (Fig. 1).

2.1 DTW

DTW is a well-known algorithm for measuring the similarity between two sequences which may vary in length [22] (Fig. 1). For two sequences of X: = (x 1,x 2,...,x N ) of length N ∈ ℕ and Y: = (y 1,y 2,...,y M ) of length M ∈ ℕ, we define a local cost measure c(x n ,y m ) ≥ 0,Footnote 1 which depicts the difference between x n and y m , we can obtain the cost matrix C ∈ ℝN ×M defined by C(n,m): = c(x n ,y m ). Then (N,M)-warping path is a sequence p = (p 1,...,p L ) with p l = (n l ,m l ) ∈ [1:N]×[1:M] for l ∈ [1:L] satisfying the following three conditions.

-

1.

Boundary condition: p 1 = (1,1) and p L = (N,M);

-

2.

Monotonicity condition: n 1 ≤ n 2 ≤ ... ≤ n L and m 1 ≤ m 2 ≤ ... ≤ m L ;

-

3.

Step size condition:p l + 1 − p l ∈ {(1,0),(0,1),(1,1)} for l ∈ [1:L − 1].

The total cost C p (X,Y) of a warping path p between X and Y with respect to the local cost measure c is defined as.

An optimal warping path is a warping path p ∗ having minimal total cost among all possible warping paths. The DTW distance DTW(X,Y) between X and Y is then defined as the total cost of p ∗ ,

With prefix sequences X(1:n): = (x 1,...,x n ) for n ∈ [1:N] and Y(1:m), we can define:

DTW finds an alignment between X and Y with minimal overall cost DTW(X,Y) = D(N,M) by dynamic programming with the following recursive process.

-

1.

Initialization:

\(D(n,1)=\sum_{k=1}^n c(x_k,y_1)\), where n ≤ N;

\(D(1,m)=\sum_{k=1}^m c(x_1,y_k)\), where m ≤ M.

-

2.

Iteration:

$$ D(n,m) = min \left\{ \begin{array}{lll} D(n-1,m) & + & C(n,m)\\ D(n-1,m-1) & + & C(n,m)\\ D(n,m-1) & + & C(n,m)\\ \end{array} \right.; $$where 1 < n ≤ N,1 < m ≤ M.

In summary, the key steps of DTW are

-

1.

determine the local cost measure function c(x n ,y m ) ≥ 0 and obtain the count cost matrix C ∈ ℝN ×M;

-

2.

output overall cost D(N,M) via dynamic programming.

In practical, the above process is performed between the sequence for classification (X of length N) and the delegates of each class (Y i of length M i ). Then the classification result is given as the best matched class C = arg min i D i (N,M i ).

2.2 HMM

2.2.1 Introduction of HMM

HMM is a famous and widely applied model [28] dealing with the sequential data efficiently. In the model, we assume that it is the latent variables x i which form a Markov chain that give rise to the observations z i . As a generative model shown in Fig. 2, HMM is depicted by the following three sets of parameters: (1) Initial probabilities π k ≡ p(z 1k = 1); (2) Transition probabilities A jk ≡ p(z nk = 1| z n − 1,j = 1), determining the relationship between adjacent latent states under Markov assumption, where z nk indicates the kth states of the latent variable state in time n; (3) Emission probabilities p(x n |z n ,φ), determining the relationship between the latent states and the observations in time n, where φ indicates the parameters of the emission distribution.

According to the relationship between variables, we can get the joint distribution of all variables in the model(both latent variable and observations) as follows:

where X = {x 1,...,x N }, Z = {z 1,...,z N }, θ = {π,A,φ} represent all parameters in the model.

2.2.2 Training process of HMM

When using HMM for classification, we should first determine the model parameters for each class i : θ i = {π i , A i , φ i } with the training samples of class i. The (local optimal) maximum likelihood solution can be determined by Expectation Maximum (EM) Algorithm:Footnote 2

-

1.

E-step: θ old = θ new, obtain q(Z) = p(Z|X,θ old);

-

2.

M-step: Maximize \(Q(\theta,\theta^{old})=\sum_\mathbf{Z} p(\mathbf{Z}|\mathbf{X},\theta^{old}) ln p(\mathbf{X},\mathbf{Z}|\theta)\) with respect to model parameters θ, obtain θ new.

When adopting EM algorithm in HMM, by defining

the optimization objective function in M-step can be reformulated as,

The updating function of parameters can be obtained by using Lagrange multipliers,

The updating function of ϕ is related to the formulation emission probabilities p(x n |z n ,ϕ). The quantities \(\gamma(\boldsymbol z_n)\) and \(\xi(\boldsymbol z_{n-1},\boldsymbol z_n)\) in each iteration can be efficiently computed via Sum-Product Algorithm.Footnote 3

2.2.3 Classification process of HMM

Then the likelihood for a sequence belonging to each class P(X|C i ) = P(X|θ i ) can be efficiently determined. According to Bayesian rule, the posterior probabilities P(C i |X) can be obtained and the final recognition result is achieved:

3 Well-performed training-free algorithm design

To recognize a gesture, it’s significantly important to analyze the generation process of the acceleration data and then find out the reason why the traditional methods perform well or not. Based on the analysis results, we can design well-performed and training-free algorithms.

3.1 Gesture generation process

When we are conducting a gesture, the brain analyzes the trail of the gesture first and divides it into a series of hand operations in turn. Then, individual discrepancy is also addressed due to habitual hand motion. Eventually, after the accelerometer senses the data and outputs it, the acceleration sequences are observable. In the above process, as illustrated in Fig. 3, the acceleration sequences are observations obtained from the accelerometer. There are underlining factors determining these observations, namely motion process in our case. For the same gestures performed by different people, though the observations vary greatly, the underlining factors are roughly the same.

3.2 Analysis of traditional algorithms

DTW chooses one or more acceleration samples as templates for each gesture. Then the matching is performed in the observable space of acceleration sequences directly. Since Dynamic Programming based matching can capture long term dependencies of sequences [29], DTW performs well in user dependent applications [17]. However, in the case of user-independent, it is nearly impossible for DTW to get rid of the personal features and thus DTW fails to show satisfied performance.

HMM, in a different way, depicts the underlining factors for gesture generation via latent variables. Consequently, it has displayed a rather desirable performance in user-independent applications. However, HMM depicts the relationship between latent variables via 1-order transition matrix. Thus it can not discriminate gestures with similar transition matrix and fails to model the long term dependencies (high order relations) between underlining factors [25, 32].

3.3 Well-performed training-free algorithm design

3.3.1 Key points for well performance

Inspired by the analysis in the previous Sections of 3.1 and 3.2, to obtain well-performed gesture recognition algorithms, it is important for us to consider the following aspects during the design of the algorithm:

-

Underlining Space: the underlining space should be able to depict the user-invariant underlining factors of gestures.

-

Matching Algorithm: the matching should be able to capture long term dependencies.

3.3.2 Key points for training-free

Assume gesture trails and observations of acceleration sequences are given in the system, to fulfill training-free gesture recognition, the following aspects should be considered during the design of the algorithm:

-

Generation of gesture templates in underlining space: atomic gestures should be defined so that gesture trails can be made full use of and generate the gesture templates in underlining space can be generated without training samples.

-

Observation representation in underlining space: an unsupervised feature extraction should be designed to map the original acceleration sequences into the underlining space.

-

Training-free matching algorithm: the designed matching algorithm should have no parameters to learn.

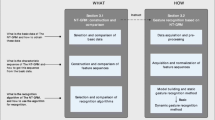

3.3.3 Framework of our well-performed training-free algorithm

It’s convenient and suitable for us to determine the underlining space with the physical meaning of acceleration direction for the following reasons:

-

Provided the gesture trails, their corresponding templates of acceleration direction sequences can be analyzed conveniently;

-

The observations of acceleration sequences can be mapped into acceleration direction sequences conveniently;

-

Acceleration direction sequences enjoy user-invariant property.

Thus in the underlining space, we define the atomic gestures as the acceleration action in certain directions. Then we can obtain the template of each gesture and the mapped sequences of accelerometer data. After that, the modified DTW matching is conducted to obtain the recognition result since this matching strategy of dynamic programming captures long term dependencies better than 1-order transition matrix in HMM [29].

-

The Underlining Space (Physical Meaning of Acceleration Direction)

-

Gesture template generation (no training samples required):

Gesture trails → Templates of gestures (atomic gesture sequences);

-

Observation Feature Extraction (unsupervised):

Acceleration sequences → Mapped sequences (probabilistic atomic gesture sequences);

-

-

Matching via Modified DTW (no parameters to train).

For the process of our algorithm is mainly comprised of template generation, mapping, matching and classification, our algorithm is called Mapping based Modified DTW (MMDTW), which is shown can see seen in Fig. 4.

4 Process of our algorithm

In this section, the process of our algorithm will be discussed in details.

4.1 Gesture template generation

In this gesture recognition process, we make the following definitions: X indicates the right-left direction (left positive), Y indicates the vertical direction (upward positive), and Z indicate the backward-forward direction (forward positive). As shown in Fig. 5, the Nokia gestures defined in [18] will be discussed as an example. Since the gestures are all defined in XY space, the atomic gestures are represented by normalized acceleration vectors with Z dimension always remaining 0: z 1 = (1,0,0), z 2 = ( − 1,0,0), z 3 = (0,1,0), z 4 = (0, − 1,0), \(\mathbf{z}_5=(\sqrt{2}/2,\sqrt{2}/2,0)\), \(\mathbf{z}_6=(\sqrt{2}/2,-\sqrt{2}/2,0)\), \(\mathbf{z}_7=(-\sqrt{2}/2,\sqrt{2}/2,0)\), \(\mathbf{z}_8=(-\sqrt{2}/2,-\sqrt{2}/2,0)\).Footnote 4

Now under the following assumptions, the gesture templates can be generated according to straight forward physical analysis:

-

1.

For basic gestures moving uni-directionally, there exists both an acceleration process and a deceleration process. For example, the left moving gesture in Fig. 5 can be regarded as a left acceleration (corresponding to the underlining state z 1) followed by left deceleration (correspond to the underlining state z 2). Thus the template for this gesture is written as [1 2].Footnote 5

-

2.

For complex gestures originated from basic gestures, assumption 1 follows during each basic gesture process.

For example, gesture square in Fig. 5 is comprised of up [3 4], right [2 1], down [4 3], left [1 2]. Since each basic gesture follows assumption 1, we can give out the total template for square as [3 4 2 1 4 3 1 2].

-

3.

For circle gestures, they contain the following sub-motions,

-

(1)

a uniform motion during the whole gesture process,

-

(2)

an acceleration process when the gesture starts,

-

(3)

an deceleration process at the end of the gesture.

For example, gesture clock-wise circle in Fig. 5 contains (a) Uniform Circular Motion: approximated by underlining states as [3 7 2 8 4 6 1 5 3]; (b) Beginning Acceleration: [1]; (c) Ending Acceleration: [2]. When we adding submotion b to the first state of submotion a, together with submotion c added to the final state of submotion a, we get the template for clock-wise circle: [5 7 2 8 4 6 1 5 7].

-

(1)

Under above assumptions, we can get the template sequences of gestures T = [t 1,...,t m ,...,t M ] as shown in Fig. 5. And the fact the mth element of sequence T, t m , numbered d indicates the underlining state at this time to be z d .

4.2 Feature extraction

This subsection will describe how to map the acceleration sequence of length N into the underlining space and how to present the mapped sequence L = (l 1,...,l i ,...,l N ). In most accelerometers, the raw acceleration sequence contains the influence of gravity. Here we minus g in the vertical direction and get sequence A = (a 1,...,a i ,...,a N ).

In order to minimize the loss in the mapping process, soft mapping is adopted in our algorithm. With the distance between a i and the underlining state z d set, the distance is mapped into probabilities with Softmax function:

where a id is determined by

where a small positive number ϵ is added to avoid a id being infinite.

By assigning l id = p(z d |a i ), we can obtain a probabilistic underlining sequence L, where l id indicates the probability of a i to be underlining state z d .

4.3 Modified DTW based matching

This subsection will describe the matching method between the mapping result L and the template sequence of a gesture T in the underlining space.

4.3.1 Cost matrix

The cost matrix C(i,m) indicates the distances between the ith element in the mapped sequence L: l i and the mth element in the template sequence T: t m decided by.

where w i is the weight of l i and d im is the distance between l i and t m .

Since points with larger amplitudes are more important for recognition, w i is given in proportion to the amplitude of the point as

In particular, if t m = d, then the distance between t m and l i can be measured in proportion to l id = p(z d |a i ). Thus we define the following piecewise function

where 0 < t < 1. The threshold θ indicates that when l id is very small, it’s nearly impossible for l i to be t m and the distance between l i and t m should be very large. In this case, we multiply the distance by k > 1.

So that C(i,m) is given by the following function

where d = t m .

4.3.2 Modified DTW

For each element of a template sequence t m should last a period of time, when given the optimal alignment of DTW matching, there should be at least K adjacent elements of mapped sequence M to be assigned to one element t m in the template. So DTW is modified in the following way. First, each underlining state in template is repeated K times, for example, when K equals 2, the original sequence of [1 2] extends to [1 1 2 2]; then the symmetric traditional DTW is modified into an unsymmetric one as follows

-

Initialization

\(D(i,1)=\sum_{k=1}^n C(x_k,y_1)\), where i ≤ N;

\(D(1,m)=\sum_{k=1}^m C(x_1,y_k)\), where m ≤ KM

-

Modified DTW

$$ D(i,m) = min \left\{ \begin{array}{lll} D(i-1,m-1) & + & C(i,m)\\ D(i-1,m) & + & C(i,m) \end{array} \right. \label{Eq:MMDTW} $$(19)where 1 < i ≤ N,1 < m ≤ KM;

N indicates the length of the mapped sequence;

M indicates the length of the original template,

thus KM indicates the length of extended template

4.3.3 Classification

After matching, we get the tamplate of each gesture C: MDTW(L,T c ) = D(N,K M c ) for templates of each gesture c. Now the sequence should be recognized as the most similar gesture

where C is the recognition result.

5 Experiment

In this section, the environment and results of the experiment will be discussed in details.

5.1 Experiment description

5.1.1 Hardware and software environment

We built a real time recognition system to test the performance of our algorithm. As shown in Fig. 6, the system is mainly comprised of Wii remote, Bluetooth Module and Laptop.

-

1.

Wii remote: collect the acceleration data.

Wii remote [1] has a built-in three-axis accelerometer: ADXL330 from Analog Devices. When operating at 100 HZ, the accelerometer can sense acceleration between − 3 g to 3 g with noise below 3.5 mg. The acceleration data and button action information can be sent from Wii Remote to Laptop via Bluetooth module. When collecting samples, the process of each gesture begins when the participant presses ’A’ button on Wii remote, and it finishes when the ‘A’ button is released.

-

2.

Bluetooth module: communicate between the wii remote and the laptop.

IVT Bluesoleil is utilized to connect the laptop and Wii and the sample rate is 10 Hz.

-

3.

Laptop: recognize the gesture in real time.

The computer is a Thinkpad Laptop with Intel T5870 processer and 2 G memory. Our real time gesture recognition is implemented on the platform of Matlab. The Operation System is Windows XP and the Matlab edition is R2010a. To read the date from the Wii remote by bluetooth communication, we use the toolbox of WiiLab [4].

5.1.2 Samples description

The algorithm is tested on eight gestures arising from research undertaken by Nokia[18], shown in Fig. 5. We collect samples from 28 persons. 15 of them are male while others are female. All of them are graduate students and they have never used gesture recognition devices before. Each of the 8 gestures is repeated 10 times. Consequently we get the database of 28 person ×8 gestures ×10 times, which aggregates totally 2,240 samples.

5.1.3 Parameters setting and experiment process

In our algorithm shown in Algorithm 1, we do not need any training samples. We use cosine metric to measure the distance between the acceleration vectors. The parameters are set as follows, ϵ = 0.001,k = 100,\(N=\lfloor \frac{1}{2}\frac{l}{l_{M_{i}}} \rfloor\). We get our recognition result although the real time gesture recognition system mentioned above.

We also implement HMM and DTW to conduct a comparison. When testing DTW, we take the first sample of each gesture collected by a person as the template. To see the influence of personality, we adopt the templates by different persons in turn, and classify all the other samples according to their similarity with the templates. In the experiment of HMM, since discrete HMM and continual HMM get similar performance [18], we utilize discrete HMM. We employ leave one out strategy and use all other persons’ samples besides the testing person’s as training samples. We use different percentage of training samples to see the recognition performance. The training samples of HMM are randomly selected from the data set and the parameters of HMM are determined according to [18] which sets 8 cluster classes and 5 latent states, In addition we use HMM toolbox by MurphyFootnote 6 to realize our left-to-right HMM test system.

5.2 Result

As displayed in Fig. 7, the comparison between DTW and MMDTW shows the result that the choice of template is closely related to the recognition result of DTW. With the best templates, DTW achieves an accuracy of 88.7 %; while the least accuracy of DTW sharply drops to 26.5 %. The average accuracy for DTW is 72.9 % and 9 out of 28 templates get an accuracy less than 70.0 %, which is intolerable in practical applications. In comparison, the accuracy of MMDTW is 93.1 % and is better than DTW whichever the template is. This improvement just stems from the no-inclination templates and the matching in underlining space.

Then in Fig. 8, we can see the comparison of HMM and MMDTW. The result shows that MMDTW displays a much better performance than HMM when the latter uses relative small percentage of training samples. With increasing of training samples, HMM performs better. However, the accuracy of HMM can not exceed that of MMDTW even when 90 % samples are used for training. Compared with HMM, the improvement of MMDTW may come from the Modified DTW matching, which compares the differences between the template and the mapped sequence more efficiently.

Just we can conclude in Fig. 9, the best accuracy of HMM is 91.1 % and the average accuracy of DTW is 72.9 %. Thus, we can figure out that our algorithm can not only meet the training-free requirement, but also show a better accuracy than the training-required algorithms of DTW and HMM.

6 Conclusion

In this paper, in order to fulfill the training-free gesture recognition with accelerometer for user-independent applications, we make use of the crucial prior knowledge of gesture trail and design the algorithm of MMDTW. The algorithm defines an underlining space with the physical meaning of acceleration direction. After that, template for each gesture is defined in this space according to the gesture trail. Then acceleration sequence, the output of the accelerometer, is mapped into the underlining space to conduct a modified DTW matching with templates. Finally the best matched gesture turns out to be the recognition result. When tested in an user-independent experiment with 2,240 samples, the training-free algorithm even shows better performance than the traditional training-required algorithms of DTW and HMM, which verifies the effectiveness of MMDTW.

We believe our algorithm is the first step toward the training-free gesture recognition. In future work, by exploiting the gesture trail information, more complicated physical analysis can be adopted to generate the templates of general gestures with more general sensors, such as video camera. Also, without the time-consuming and laborious sample collection process, the well-performed training-free gesture recognition makes it much more convenient for users to extend the original gesture vocabulary themselves. Thus, this kind of training-free algorithms enjoys great potential for a large number of applications, such as self-defined vocabulary based gesture recognition, gestures devices to support E-teaching, and so on.

Notes

c(x,y) is smaller (lower cost) when x and y are more similar to each other.

The EM algorithm used in HMM is also called Baum Welch Algorithm.

The Sum-Product Algorithm used in HMM is also called Forward-backward Algorithm.

The lasting time for each atomic gesture to continue will be considered in the step of matching.

Number d here indicates the underlining state of z d for simplicity.

References

Adxl330 datasheet (2006)

Akl A, Valaee S (2010) Accelerometer-based gesture recognition via dynamic-time warping, affinity propagation, & compressive sensing. In: 2010 IEEE international conference on acoustics speech and signal processing (ICASSP), IEEE, pp 2270–2273

Brezmes T, Gorricho JL, Cotrina J (2009) Activity recognition from accelerometer data on a mobile phone. In: Distributed computing, artificial intelligence, bioinformatics, soft computing, and ambient assisted living, pp 796–799

Brindza J, Szweda J, Liao Q, Jiang Y, Striegel A (2009) Wiilab: bringing together the nintendo wiimote and matlab. In: Frontiers in education conference, 2009. FIE’09. 39th IEEE. IEEE, pp 1–6

Byrne D, Doherty AR, Snoek CGM, Jones GJF, Smeaton AF (2010) Everyday concept detection in visual lifelogs: validation, relationships and trends. Multimed Tools Appl 49(1):119–144

Chen Y, Liu M, Liu J, Shen Z, Pan W (2011) Slideshow: Gesture-aware ppt presentation. In: 2011 IEEE international conference on multimedia and expo (ICME), IEEE, pp 1–4

Cho SJ, Oh JK, Bang WC, Chang W, Choi E, Jing Y, Cho J, Kim DY (2004) Magic wand: a hand-drawn gesture input device in 3-d space with inertial sensors

Choi ES, Bang WC, Cho SJ, Yang J, Kim DY, Kim SR (2005) Beatbox music phone: gesture-based interactive mobile phone using a tri-axis accelerometer. In: IEEE international conference on industrial technology, 2005. ICIT 2005. IEEE, pp 97–102

Farella E, Pieracci A, Benini L, Rocchi L, Acquaviva A (2008) Interfacing human and computer with wireless body area sensor networks: the wimoca solution. Multimed Tools Appl 38(3):337–363

Flórez F, García JM, García J, Hernández A (2002) Hand gesture recognition following the dynamics of a topology-preserving network. In: Fifth IEEE international conference on automatic face and gesture recognition, 2002. Proceedings. IEEE, pp 318–323

Holzinger A, Nischelwitzer AK, Kickmeier-Rust MD (2006) Pervasive e-education supports life long learning: some examples of x-media learning objects (xlo). Digital Media, pp 20–26

Holzinger A, Softic S, Stickel C, Ebner M, Debevc M (2009) Intuitive e-teaching by using combined hci devices: experiences with wiimote applications. In: Universal access in human-computer interaction. applications and services, pp 44–52

Holzinger A, Softic S, Stickel C, Ebner M, Debevc M, Hu B (2012) Nintendo wii remote controller in higher education: development and evaluation of a demonstrator kit for e-teaching. Comput Inform 29(4):601–615

Hürst W, van Wezel C (2012) Gesture-based interaction via finger tracking for mobile augmented reality. Multimed Tools Appl 62(1):233–258

Kettebekov S, Sharma R (2000) Understanding gestures in multimodal human computer interaction. Int J Artif Intell Tools 9(2):205–223

Lee HK, Kim JH (1999) An hmm-based threshold model approach for gesture recognition. IEEE Trans Pattern Anal Mach Intell 21(10):961–973

Liu J, Zhong L, Wickramasuriya J, Vasudevan V (2009) uwave: accelerometer-based personalized gesture recognition and its applications. Pervasive Mob Comput 5(6):657–675

Mäntyjärvi J, Kela J, Korpipää P, Kallio S (2004) Enabling fast and effortless customisation in accelerometer based gesture interaction. In: ACM international conference proceeding series

Mantyla VM, Mantyjarvi J, Seppanen T, Tuulari E (2000) Hand gesture recognition of a mobile device user. In: 2011 IEEE international conference on multimedia and expo (ICME), vol 1. IEEE, pp 281–284

Montoliu R, Blom J, Gatica-Perez D (2013) Discovering places of interest in everyday life from smartphone data. Multimed Tools Appl 62(1):179–307

Montoliu R, Gatica-Perez D (2010) Discovering human places of interest from multimodal mobile phone data. In: Proceedings of the 9th international conference on mobile and ubiquitous multimedia. ACM, p 12

Müller M (2007) Ltd MyiLibrary. Information retrieval for music and motion, vol 6. Springer Berlin

Park CB, Roh MC, Lee SW (2008) Real-time 3d pointing gesture recognition in mobile space. In: 8th IEEE international conference on automatic face & gesture recognition, 2008. FG’08. IEEE, pp 1–6

Pavlovic VI, Sharma R, Huang TS (1997) Visual interpretation of hand gestures for human-computer interaction: a review. IEEE Trans Pattern Anal Mach Intell 19(7):677–695

Pei M, Jia Y, Zhu SC (2011) Parsing video events with goal inference and intent prediction. In: 2011 IEEE international conference on computer vision (ICCV), IEEE, pp 487–494

Peng X, Bennamoun M, Mian AS (2011) A training-free nose tip detection method from face range images. Pattern Recogn 44(3):544–558

Quintana GE, Sucar LE, Azcárate G, Leder R (2008) Qualification of arm gestures using hidden markov models. In: 8th IEEE international conference on automatic face & gesture recognition, 2008. FG’08. IEEE, pp 1–6

Rabiner LR (1989) A tutorial on hidden markov models and selected applications in speech recognition. Proc IEEE 77(2):257–286

Rabiner LR, Juang BH (1993) Fundamentals of speech recognition

Rajko S, Qian G (2008) Hmm parameter reduction for practical gesture recognition. In: 8th IEEE international conference on automatic face & gesture recognition, 2008. FG’08. IEEE, pp 1–6

Seo HJ, Milanfar P (2010) Training-free, generic object detection using locally adaptive regression kernels. IEEE Trans Pattern Anal Mach Intell 32(9):1688–1704

Sminchisescu C, Kanaujia A, Li Z, Metaxas D (2005) Conditional models for contextual human motion recognition. In: Tenth IEEE international conference on computer vision, 2005. ICCV 2005, vol 2. IEEE, pp 1808–1815

Song Y, Demirdjian D, Davis R (2011) Multi-signal gesture recognition using temporal smoothing hidden conditional random fields. In: 2011 IEEE international conference on automatic face & gesture recognition and workshops (FG 2011), IEEE, pp 388–393

Suk HI, Sin BK, Lee SW (2008) Recognizing hand gestures using dynamic bayesian network. In: 8th IEEE international conference on automatic face & gesture recognition, 2008. FG’08. IEEE, pp 1–6

Takahashi M, Fujii M, Naemura M, Satoh S (2013) Human gesture recognition system for tv viewing using time-of-flight camera. Multimed Tools Appl 62(3):761–783

Tsukada K, Yasamura M (2002) Ubi-finger: gesture input device for mobile use. In: Asia-Pacific computer and human interaction

Wang D, Xiong Z, Zhang M (2012) An application oriented and shape feature based multi-touch gesture description and recognition method. Multimed Tools Appl 58(3):497–519

Wilson A, Shafer S (2003) Xwand: Ui for intelligent spaces. In: Computer human interaction, pp 545–552

Wilson D, Wilson A (2004) Gesture recognition using the xwand

Wu J, Pan G, Zhang D, Qi G, Li S (2009) Gesture recognition with a 3-d accelerometer. In: Ubiquitous intelligence and computing, pp 25–38

Zhu Y, Xu G, Kriegman DJ (2002) A real-time approach to the spotting, representation, and recognition of hand gestures for human–computer interaction. Comput Vis Image Underst 85(3):189–208

Author information

Authors and Affiliations

Corresponding author

Rights and permissions

About this article

Cite this article

Yin, L., Dong, M., Duan, Y. et al. A high-performance training-free approach for hand gesture recognition with accelerometer. Multimed Tools Appl 72, 843–864 (2014). https://doi.org/10.1007/s11042-013-1368-1

Published:

Issue Date:

DOI: https://doi.org/10.1007/s11042-013-1368-1