A new method for measuring the geometric parameters of objects of complex shape is proposed, and a mathematical model of this photoprojection method is developed. The distortions of a coordinate grid projected onto an object of complex shape are determined relative to a chosen coordinate origin marked by the spot of a laser range finder. The analytic relationships that form the core of the method are identified. A technique is described for creating a digital image (set of points) of a three-dimensional object from a single photograph of the object illuminated by a projector that defines an external coordinate system.


With the development of industry, much less time is now spent on the fabrication of articles (objects) of complex shape than is needed to monitor their geometric parameters. In aviation, exact correspondence of a part to given dimensions is of exceptional importance, since this affects the functionality and reliability of different mechanisms. Thus, the development of a high-speed, high-precision method for the measurement of the geometric parameters of objects of complex shape is an important problem related to safe operation of aircraft.

Analysis of existing measurement methods. Current measurement methods are of two types: contact and noncontact. Contact methods are implemented with standard instruments and coordinate-measuring machines. Such devices can measure with a precision of ~(1–9)·10⁻⁶ m, but they have a number of drawbacks: low speed, large overall dimensions, stationary installation, direct contact of the probe (or template) with the part, and high production and operating costs [1].

Noncontact methods use laser scanning systems, which are free of these drawbacks. The latest laser scanning systems achieve a measurement precision no worse than that of contact devices [2]; however, although each individually selected point is measured quickly, the overall throughput of laser scanners is inadequate. When the part is large and/or has many interface lines that create discontinuities in the functions of the mathematical model, maintaining a given precision requires tens of millions of measurements. Another drawback of laser measuring machines is the mechanism that deflects the laser beam to scan the surface: such systems always contain mechanical elements that wear in use, which degrades the performance of the machine. Yet another drawback stems from the strict requirements on how the surface of the measured part reflects the beam: laser range finders are often unable to measure the distance to an object because of the reflective properties of its surface or unfavorable reflection angles.

In this article, we propose a measurement method that dispenses with scanning the object with a laser beam.

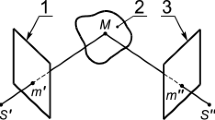

Photoprojection method. The layout of the photoprojection method for measuring the three-dimensional (3D) profile of an object is shown in Fig. 1. During measurement, the object 4 is illuminated by projector 1, which projects a periodic pattern (coordinate grid). The functions describing how the pattern varies along the X and Y coordinates are known. The distance to a single arbitrarily selected point is measured by laser range finder 2. The spot where the range finder's laser beam is reflected is adopted as the coordinate origin, relative to which the set of points of the mathematical model is constructed. Camera 3 records the image of the object with the projected periodic pattern superimposed on it, together with the light spot of the laser range finder.

We adopt the following terms and assumptions: the absolute reading point is the exit point of the laser beam from the range finder's optical system; the reading point of the projector is the focus of the projection optics; the reading point of the camera is the point where the focal axis of its optical system intersects the camera plane; the focal planes of the projection system and the camera coincide and are orthogonal to the beam of the laser range finder; the input/output points of the optical systems of the range finder, projector, and camera are coplanar; light propagates rectilinearly; and the distance to the object is measured along the Z-axis.

Similar designs have been developed abroad, though these designs utilize illumination of the object by parallel rays of light without positioning of the reference point by the beam of a laser range finder [3].

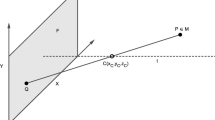

Procedural foundations. In the general case, the problem of contactless remote measurement of the 3D profile of an object reduces to determining the coordinates of a set of points on its surface for which the distance from an absolute reading point and the angles of deviation of the range-finder beam from the Z-axis are known (Fig. 2). Suppose that the measured coordinates of the check points on the object form a set \( \{P_0, \ldots, P_i, \ldots, P_n\} \), where i = 0, ..., n. The coordinates of an arbitrary point \( P_i \) are determined by the formulas
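As an illustration only, assuming a standard spherical parametrization (polar angle θ of the beam measured from the Z-axis and azimuth φ in the XY plane, which is not necessarily the convention of Fig. 2), such coordinates can be computed from a range reading r as follows. The function name `point_from_range` is hypothetical.

```python
import math


def point_from_range(r: float, theta: float, phi: float) -> tuple[float, float, float]:
    """Convert a range-finder reading to Cartesian coordinates.

    Assumed (illustrative) convention: r is the distance from the absolute
    reading point, theta is the deviation of the beam from the Z-axis, and
    phi is the azimuth of the beam in the XY plane, both in radians.
    """
    x = r * math.sin(theta) * math.cos(phi)
    y = r * math.sin(theta) * math.sin(phi)
    z = r * math.cos(theta)
    return (x, y, z)


# A beam along the Z-axis (theta = 0) gives a point straight ahead at z = r.
print(point_from_range(1.0, 0.0, 0.0))  # (0.0, 0.0, 1.0)
```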

In this way, a digital image of an object of complex shape is created. An arbitrary linear dimension, i.e., the distance between points \( P_i \) and \( P_n \) on the object, is determined as
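Assuming this linear dimension is the ordinary Euclidean distance between the two reconstructed points, a minimal sketch is shown below (the name `linear_dimension` is hypothetical):

```python
import math

Point = tuple[float, float, float]


def linear_dimension(p_i: Point, p_n: Point) -> float:
    """Straight-line (Euclidean) distance between two points of the digital image."""
    return math.dist(p_i, p_n)


print(linear_dimension((0.0, 0.0, 0.0), (3.0, 4.0, 12.0)))  # 13.0
```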

Any curvilinear dimension on the controlled surface is determined by successively summing its constituent segments, provided that the scanned points are close enough together. Creating an exact digital image of an object requires a large set of points; obtaining them is laborious and time-consuming and requires a precise system for deflecting the range-finder beam. High-speed systems now exist [4] that measure the geometric parameters of objects of simple shape (figures of revolution) from a small number of reference points. The proposed method for measuring the geometric parameters of objects of complex shape differs in that it yields the set of points of the digital image of a test part in a single measurement cycle, without mechanical systems for deflecting a laser beam.
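The summation of segments described above amounts to measuring a polyline through the scanned points. A minimal sketch (the name `curvilinear_dimension` is hypothetical), checked on a densely sampled quarter circle whose exact length is π/2:

```python
import math
from collections.abc import Sequence

Point = tuple[float, float, float]


def curvilinear_dimension(points: Sequence[Point]) -> float:
    """Approximate the length of a curve on the surface by summing the
    straight segments between successive scanned points (a polyline)."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


# Quarter circle of radius 1 sampled at n + 1 points: length approaches pi/2.
n = 1000
arc = [(math.cos(t), math.sin(t), 0.0)
       for t in (k * (math.pi / 2) / n for k in range(n + 1))]
print(round(curvilinear_dimension(arc), 4))  # 1.5708
```

The approximation always underestimates a smooth curve, and the error shrinks as the spacing between points decreases, which is why the text requires the scanned points to be close enough together.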

Mathematical model of device. Let us consider a projection system consisting of a projector, a laser range finder, and a planar screen (containing the X-axis). We assume that the plane of the screen is parallel to the XY plane of the computational coordinate system and that the pattern is projected by a diverging light flux. Figure 3 (XY plane perpendicular to the plane of the figure) shows a geometric model of the photoprojection measuring device (projector).

As the coordinate origin, we select a point S on the X-axis whose distance is known from the reading of the laser range finder. In this case, the coordinate \( X_0 \) of a point that lies on the X-axis and belongs to the terminator line (light/dark boundary) of the projected grid can be calculated by the formula

where ρ and σ are the widths of the dark and light bands on the screen (physical carrier) of the grid (cf. Fig. 3); \( k_x \) is the ordinal number (coefficient) of the darkened band, counted from the optical axis of the projector toward the laser range finder; D is the distance from the focal plane of the projector to the plane of the screen; and f is the focal length of the projector lens.

Since the grid is periodic, the coordinate \( Y_0 \) can be calculated in a similar way, but without the displacement of the reading point along the X-axis:

Thus, once the distance D is known, the position of any point of the projected terminator line on an ideally planar screen can always be determined. This gives an analytic relationship between the absolute coordinate system (X; Y), the distance D to the screen, and the steps ρ, σ of the projector's coordinate system: Y = F(D; σ; ρ), X = F(D; σ; ρ).
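The sketch below illustrates the kind of dependence X = F(D; σ; ρ), Y = F(D; σ; ρ) under stated assumptions; it is not the authors' formula. It assumes ideal pinhole projection, so that a slide coordinate maps to (slide coordinate)·D/f on a screen at distance D, and takes the terminator position on the slide in the form σ/2 + k(ρ + σ) used for the grid parameters m, n of system (13). The class `ProjectorGrid` and the parameter `laser_offset` (the displacement of the laser spot S from the projector axis along X, which the text mentions but does not name) are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ProjectorGrid:
    sigma: float         # width of a light band on the slide
    rho: float           # width of a dark band on the slide
    focal_length: float  # f, focal length of the projector lens
    laser_offset: float  # hypothetical: X-distance from projector axis to laser spot S

    def slide_coordinate(self, k: int) -> float:
        """Position of the k-th terminator on the slide, measured from the
        optical axis (same form as m = sigma/2 + k(rho + sigma))."""
        return self.sigma / 2 + k * (self.rho + self.sigma)

    def terminator_on_screen(self, kx: int, ky: int, distance: float) -> tuple[float, float]:
        """(X0, Y0) of a terminator node on an ideal planar screen at distance D,
        with X measured from the laser spot S (assumed sign convention)."""
        scale = distance / self.focal_length  # pinhole magnification D/f
        x0 = self.slide_coordinate(kx) * scale - self.laser_offset
        y0 = self.slide_coordinate(ky) * scale  # no offset along Y
        return x0, y0


grid = ProjectorGrid(sigma=0.5e-3, rho=0.5e-3, focal_length=50e-3, laser_offset=0.1)
print(grid.terminator_on_screen(kx=2, ky=0, distance=1.0))
```

Because both coordinates scale with D/f, a single range reading D fixes the whole grid on an ideal screen.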

Mathematical model of photorecorder. We adopt the following initial conditions: the focal plane of the camera's optical system is parallel to the plane of the screen; the axis m of the projector's coordinate system is collinear with the axis μ of the photomatrix coordinate system; and the optical systems of the projector and the camera have the same focal length f. In this case, the axis n of the projector's coordinate system is parallel to the axis η of the photomatrix plane. The coordinates \( x_0 \) and \( \mu_0 \) of terminator points on the controlled object and on the photomatrix are related by [4]

where the coordinate μ of a point in the plane (μ; η) corresponds to the coordinate x of the point in the plane (X; Y). Substituting into (5) the analytic expression (3) obtained for a point with coordinate x, we obtain
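For orientation, under the stated conditions (parallel planes, equal focal lengths f) and assuming ideal pinhole optics, one consistent form of such a relation is μ₀ = f·x₀/D, with x₀ measured from S and μ₀ from the image of S on the photomatrix. The sketch below composes this assumed mapping with a pinhole projection of the grid, x₀ = m·D/f − a; it is illustrative, not the authors' exact expression. The function names and the parameter `laser_offset` (a, the offset of the laser spot from the projector axis along X) are hypothetical.

```python
def screen_to_matrix(x0: float, distance: float, focal_length: float) -> float:
    """Assumed pinhole mapping of a screen coordinate x0 (measured from S)
    to the photomatrix coordinate mu0 (measured from the image of S)."""
    return focal_length * x0 / distance


def terminator_on_matrix(m: float, distance: float, focal_length: float,
                         laser_offset: float) -> float:
    """mu0 of a terminator with slide coordinate m on an ideal screen.

    With x0 = m*D/f - a, the composition simplifies to mu0 = m - f*a/D,
    where a = laser_offset is a hypothetical parameter.
    """
    x0 = m * distance / focal_length - laser_offset
    return screen_to_matrix(x0, distance, focal_length)


f, D, a, m = 50e-3, 1.0, 0.1, 2.25e-3
print(terminator_on_matrix(m, D, f, a))  # approximately -2.75e-3, i.e. m - f*a/D
```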

Since the directions of the axes n, Y, and η coincide, we may write similar expressions for the axis Y as follows:

Thus, the known distribution function F(m; n) of the lines of the projector grid is transformed into the computed function F(X; Y) of the grid lines on an ideally planar screen, and then into the measured function F(μ; η), which locates the corresponding grid lines on the camera matrix. The measurement of the geometric parameters of an object of complex shape begins with selecting a reading point, marked by the spot of the laser range finder, which determines the distance D (cf. Fig. 3). This point S on the object is taken as the coordinate origin for all three reference systems. On the photomatrix, it appears as S′ and serves as the origin of the scale in the image of the object.

Expressions (3)–(9) define analytic relationships between the parameters of the coordinate grid specified by the functions F(m; n) and F(X; Y), which depend on the distance D to the screen, and F(μ; η), whose values are measured by the camera matrix. Where the screen deviates from an ideal plane, the observed lines are shifted relative to their computed positions. Since, from a practical standpoint, any object can be regarded as a nonideal planar screen, recording the displacement of the lines of the projected pattern yields the values \( \Delta X_i \), \( \Delta Y_i \), \( \Delta Z_i \) for any point on the terminator line, that is, information about the shape of the object under study. The number of measurement points available for constructing the digital image of the object equals the number of photomatrix pixels that lie on the grid lines.
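The text does not specify how terminator lines are located in the image. As one illustrative possibility (an assumption, not the authors' procedure), light/dark boundaries along a photomatrix row can be found as threshold crossings with linear sub-pixel interpolation, and their shifts relative to the positions computed for an ideal planar screen then carry the shape information. Both function names are hypothetical.

```python
from collections.abc import Sequence


def terminator_positions(profile: Sequence[float], threshold: float) -> list[float]:
    """Sub-pixel positions where an intensity profile (one photomatrix row)
    strictly crosses the threshold, i.e. light/dark boundaries of the grid."""
    crossings: list[float] = []
    for i in range(len(profile) - 1):
        a, b = profile[i], profile[i + 1]
        if (a - threshold) * (b - threshold) < 0:  # sign change between pixels
            crossings.append(i + (threshold - a) / (b - a))  # linear interpolation
    return crossings


def line_shifts(observed: Sequence[float], expected: Sequence[float]) -> list[float]:
    """Displacement of each observed terminator from its computed position
    on an ideal planar screen; nonzero values indicate surface relief."""
    return [o - e for o, e in zip(observed, expected)]


row = [0, 0, 10, 10, 0, 0]
found = terminator_positions(row, threshold=5)
print(found)                           # [1.5, 3.5]
print(line_shifts(found, [1.5, 3.0]))  # [0.0, 0.5]
```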

A block diagram of the photoprojection measuring device is shown in Fig. 4. It follows from (2) that, to calculate the dimensions of an object relative to a selected reference point, the solution of system (1) must be known for an arbitrarily selected point of the surface. The points where the projector beams intersect the imaginary plane (\( X_0 \); \( Y_0 \)), located at distance \( D_0 \), form the terminator line. Any computed point \( P_i \) on these lines has coordinates \( X_{ob} \), \( Y_{ob} \), \( Z_{ob} \) and appears in the photomatrix plane as \( X_{ob}(\mu; \eta) \), \( Y_{ob}(\mu; \eta) \). We write a system of equations determining the position of the observed points, which form the real terminator line on the object, relative to the imaginary terminator line, which would lie in the imaginary plane (\( X_0 \); \( Y_0 \)):

Viewed from the projector (cf. Fig. 3), the coordinate \( X_0 \) of any point of a projected terminator line is determined from (3).

Recalling that

we find

Comparing (11) and (12), we obtain an equation for the distance \( D_{ob} \) to an observed point, expressed in terms of the projector parameters (σ, ρ) and the photomatrix coordinates (μ, η):
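For orientation, the sketch below uses the standard triangulation relation for a rectified projector-camera pair, which matches the stated assumptions (parallel focal planes, equal focal lengths f) but is not necessarily the authors' expression. Assume, hypothetically, that the camera's optical center is displaced from the projector's by a baseline b along X, and that m and μ are the slide and photomatrix coordinates of the same terminator, each measured from its own optical axis (virtual image planes, no inversion). A surface point at distance Z then appears at μ = m − f·b/Z, so Z = f·b/(m − μ). The function name and the `baseline` parameter are hypothetical.

```python
def depth_from_disparity(m: float, mu: float, focal_length: float, baseline: float) -> float:
    """Distance Z to an observed terminator point for a rectified
    projector-camera pair: mu = m - f*b/Z  =>  Z = f*b / (m - mu).
    `baseline` (b) is a hypothetical parameter not named in the text."""
    disparity = m - mu
    if disparity == 0:
        raise ValueError("zero disparity: point at infinity or zero baseline")
    return focal_length * baseline / disparity


# A point 1 m away with f = 50 mm and b = 0.2 m gives a disparity of 10 mm.
print(depth_from_disparity(m=0.012, mu=0.002, focal_length=0.05, baseline=0.2))
```

The relation also shows why the camera must view the grid from a position offset from the projector: with b = 0, every depth produces the same image coordinate.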

We recall (7) and write

For the Y-axis,

Recalling (8), we find

For the Z-axis,

System (10) may be written in the following form:

where \( m = \sigma/2 + k_x(\rho + \sigma) \) and \( n = \sigma/2 + k_y(\rho + \sigma) \) are parameters of the projection grid, and \( k_x \), \( k_y \) are the node numbers of the projection grid.

The distances between arbitrary points \( P_0, \ldots, P_i, \ldots, P_n \) of the object's surface and points on the terminator lines of the projected coordinate system can be calculated with expressions (13). Determining the set of points of an object of complex shape relative to the grid nodes makes it possible to increase the precision and speed of measurement without continuous scanning of the object.

The errors in measuring the geometric parameters of objects of complex shape depend on the precision with which the coordinates of points on the object's surface are determined. In the present method, the measurement errors are determined by many factors, including the procedural error associated with the step of the projection grid and the instrumental errors of the laser range finder, projection system, and television camera, all of which depend on the resolution of the optical system and the photomatrix. A complete metrological analysis of the new device requires extensive study and falls outside the scope of the present investigation. Here we only outline a plan for metrological analysis, from which a technique for determining the overall metrological characteristics of an article can be developed and the requirements on the equipment components can be derived from the specific requirements on the errors in measuring the dimensions of an article.

In analyzing the geometric parameters of articles of complex shape, by measurement of linear dimensions we mean determining not only the length \( M_{jk} \) of the straight segment connecting two arbitrary points \( P_j \) and \( P_k \) in the image, but also the length \( MS_{jk} \) of the line connecting selected points on a curvilinear surface of the object. In this case, the absolute \( \Delta_{jk} \) and relative \( \varepsilon_{jk} \) measurement errors are determined, respectively, by the formulas [5]

where \( {M}_{jk}^{\mathrm{ref}} \) is the length of the segment \( P_jP_k \) measured by a reference device, and \( M_{jk}(\mu\eta) \) is the length of the same segment measured in the image and computed from its coordinates by formula (2) using (13).
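Assuming these formulas take the usual form of absolute and relative error, Δ_jk = M_jk(μη) − M_jk^ref and ε_jk = Δ_jk / M_jk^ref, they can be evaluated as in the sketch below (function name hypothetical; the sample lengths are illustrative, in millimetres):

```python
def measurement_errors(measured: float, reference: float) -> tuple[float, float]:
    """Absolute and relative error of a dimension measured in the image
    against the value obtained with a reference device."""
    if reference == 0:
        raise ValueError("reference length must be nonzero")
    absolute = measured - reference
    relative = absolute / reference
    return absolute, relative


abs_err, rel_err = measurement_errors(measured=100.4, reference=100.0)
print(f"{abs_err:.2f} mm, {rel_err:.2%}")  # 0.40 mm, 0.40%
```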

In [4, 6], it is shown that in the most unfavorable case, when all the errors in the measurement of the coordinates have the same sign, the error in the measurement of dimensions \( \Delta_{jk} \) and the error in the coordinates of points in space \( \Delta_{\mathrm{co}} \) are related by \( \Delta_{jk}=\sqrt{3}\,\Delta_{\mathrm{co}} \). The actual error may be significantly smaller, lying in the range \( 0<\Delta_{jk}<\sqrt{3}\,\Delta_{\mathrm{co}} \).

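The total error is estimated as

\[ \Delta_\Sigma=\sqrt{\Delta_n^2+\Delta_c^2+\Delta_l^2+\Delta_r^2}, \qquad (15) \]

where \( \Delta_n \) is the error of the grid projection system; \( \Delta_c \), the error of the camera, which includes the optical distortions produced by the lens and the digitization of the matrix; \( \Delta_l \), the error due to the finite size of the laser spot; and \( \Delta_r \), the error of the laser range finder.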

Let us assume that, at a distance of 1 m from the laser to the object, the errors are \( \Delta_n \) = 0.1 mm, \( \Delta_r \) = 0.1 mm, \( \Delta_c \) = 0.3 mm (1024 × 1024 matrix), and \( \Delta_l \) = 0.2 mm (laser spot size), whence by (15) \( \Delta_\Sigma \) = 0.39 mm.
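The arithmetic behind the quoted 0.39 mm can be checked with a root-sum-square combination of the four components (values in millimetres, taken from the text; the function name is hypothetical):

```python
import math


def total_error(*components: float) -> float:
    """Root-sum-square combination of independent error components."""
    return math.sqrt(sum(c * c for c in components))


# Error components at 1 m, in mm: projector, range finder, camera, laser spot.
delta_sigma = total_error(0.1, 0.1, 0.3, 0.2)
print(round(delta_sigma, 2))  # 0.39
```

Squaring makes the 0.3 mm camera term contribute 0.09 of the 0.15 mm² total, i.e. about 60% of the variance.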

Preliminary calculations have shown that all the error components are of comparable magnitude and are directly proportional to the distance from the optical system to the object. The largest contribution comes from the discreteness of the photomatrix, whose parameters are continually improving. Reducing the distance to the object reduces the errors, but it leads to significant optical distortions. When processing the information, it is best for the object to fill the entire frame and to use special software to correct optical distortions. The error values obtained are deliberately overstated, since they refer to the unfavorable case in which all types of error have the same sign and take their maximum values.
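If, as stated, every component is directly proportional to the distance from the optical system to the object, the 1 m error budget can be rescaled to other working distances. A minimal sketch, assuming strict proportionality and the root-sum-square combination that reproduces the 0.39 mm figure (the dictionary keys and function name are hypothetical):

```python
import math

# Error components at the 1 m reference distance, in mm (values from the text).
BUDGET_AT_1M = {"projector": 0.1, "range_finder": 0.1, "camera": 0.3, "laser_spot": 0.2}


def total_error_at(distance_m: float) -> float:
    """Total error in mm at a given distance, scaling each component linearly."""
    scaled = [value * distance_m for value in BUDGET_AT_1M.values()]
    return math.sqrt(sum(c * c for c in scaled))


for d in (0.5, 1.0, 2.0):
    print(f"{d:.1f} m -> {total_error_at(d):.2f} mm")
```

Under this assumption the total also scales linearly, so halving the distance halves the error; in practice the gain is limited by the optical distortions mentioned above.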

Overall, whether the required measurement precision can be attained depends on the shape of the object and the equipment components used, and it calls for an individual approach to different objects and measurement conditions.

Conclusion. The proposed photoprojection method for measuring the geometric parameters of parts of complex shape substantially reduces the time needed for quality control of an article, since it does not require scanning the surface and makes it possible to determine a large set of surface points lying on recognizable elements of the projected coordinate system, such as a terminator line or the projection grid. The method requires no laser-beam deflection system and is less demanding with respect to reflection angles and the reflectance of the part's material, since it uses both the illuminated and the darkened regions of the projected grid as the information signal. Given the substantial capabilities of image processing, the overall time needed to measure the geometric parameters of a part can thus be expected to equal the time needed to take one photograph of the object from each of the required viewing angles. The proposed method can significantly increase the rate of inspection of the geometric parameters of articles of complex shape, increase work efficiency, and reliably reject articles that do not conform to the specified dimensions, including parts of machines and engines and structural elements of aircraft engines produced by additive technologies.

The present study was carried out with the support of the Ministry of Education and Science of Russia in the framework of the State Assignment (Project No. 8.2297.2017/4.6).

References

[1] A. I. Pershev, S. I. Feoktistov, D. G. Kolykhanov, and V. I. Shport, “Coordinate-measuring engines and complexes,” Nauka Tekhnol. Prom., No. 3, 36–48 (2011).

[2] B. V. Skvortsov, I. Yu. Zhiganov, and A. N. Malysheva-Stroikova, Methods for Remote Measurements of the Geometric Parameters of Objects, Lambert, Moscow (2012).

[3] A. Sicardi-Segade, J. C. Estrada, A. Martínez-García, and G. Garnica, “On axis fringe projection: A new method for shape measurement,” Opt. Lasers Eng., 69, 29–34 (2015).

[4] B. V. Skvortsov, A. N. Malysheva-Stroikova, and A. V. Chernykh, “Method of laser television inspection of the geometric parameters of objects of complex shape,” Prib. Tekhn. Eksperim., No. 1, 71–77 (2016).

[5] E. I. Tsvetkov, Foundations of Mathematical Metrology, Politekhnika, St. Petersburg (2005).

[6] B. V. Skvortsov, A. N. Malysheva-Stroikova, and I. Yu. Zhiganov, “An opto-electronic device for remote measurement of the geometric parameters of objects of special shapes,” Izmer. Tekhn., No. 7, 23–26 (2014).


Translated from Metrologiya, No. 3, pp. 14–24, July–September, 2017.


Cite this article

Skvortsov, B.V., Chernykh, A.V. & Malysheva-Stroikova, A.N. Photoprojection Method for the Measurement of the Geometric Parameters of Objects of Complex Shape. Meas Tech 60, 893–900 (2017). https://doi.org/10.1007/s11018-017-1289-x
