Abstract

Hierarchical parametric models consisting of observable and latent variables are widely used for unsupervised learning tasks. For example, a mixture model is a representative hierarchical model for clustering. From the statistical point of view, the models can be regular or singular due to the distribution of data. In the regular case, the models have the identifiability; there is one-to-one relation between a probability density function for the model expression and the parameter. The Fisher information matrix is positive definite, and the estimation accuracy of both observable and latent variables has been studied. In the singular case, on the other hand, the models are not identifiable and the Fisher matrix is not positive definite. Conventional statistical analysis based on the inverse Fisher matrix is not applicable. Recently, an algebraic geometrical analysis has been developed and is used to elucidate the Bayes estimation of observable variables. The present paper applies this analysis to latent-variable estimation and determines its theoretical performance. Our results clarify behavior of the convergence of the posterior distribution. It is found that the posterior of the observable-variable estimation can be different from the one in the latent-variable estimation. Because of the difference, the Markov chain Monte Carlo method based on the parameter and the latent variable cannot construct the desired posterior distribution.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Hierarchical parametric models are employed for unsupervised learning in many data-mining and machine-learning applications. Statistical analysis of the models plays an important role for not only revealing the theoretical properties but also the practical applications. For example, the asymptotic forms of the generalization error and the marginal likelihood are used for model selection in the maximum-likelihood and Bayes methods, respectively (Akaike 1974; Schwarz 1978; Rissanen 1986).

Parametric models generally fall into two cases: regular and singular. The present paper focuses on the models, the function of which are continuous and sufficiently smooth with respect to the parameter. In regular cases, the Fisher information matrix is positive definite, and there is a one-to-one relation between the parameter and the expression of the model as a probability density function. Otherwise, the model is singular, and the parameter space includes singularities. Due to these singularities, the Fisher information matrix is not positive definite, and so the conventional analysis methods that rely on its inverse matrix are not applicable. In this case, an algebraic geometrical approach can be used to analyze the Bayes method (Watanabe 2001, 2009).

Hierarchical models have both observable and latent variables. The latent variables represent the underlying structure of the model, while the observable ones correspond to the given data. For example, unobservable labels in clustering are expressed as the latent variables in mixture models, and the system dynamics of time-series data is a sequence of the variables in hidden Markov models. Hierarchical models thus have two estimation targets: observable and latent variables. The well-known generalization error measures the performance of the prediction of a future observable variable. Combining the two model cases and the two estimation targets, there are four estimation cases, which are summarized in Table 1. We will use the abbreviations shown in the table to specify the target variable and the model case; for example, Reg-OV estimation stands for estimation of the observable variable in the regular case.

In the present paper, we will investigate the asymptotic performance of the Sing-LV estimation. One of the main concerns in unsupervised learning is the estimation of unobservable parts and in practical situations, the ranges of the latent variables are unknown, which corresponds to the singular case. The other estimation cases have already been studied; the accuracy of the Reg-OV estimation has been clarified on the basis of the conventional analysis method, and the results have been used for model selection criteria, such as AIC (Akaike 1974). The primary purpose for using the algebraic geometrical method is to analyze the Sing-OV estimation, and the asymptotic generalization error of the Bayes method has been derived for many models (Aoyagi and Watanabe 2005; Aoyagi 2010; Rusakov and Geiger 2005; Yamazaki and Watanabe 2003a, b, 2005a, b; Zwiernik 2011). Recently, an error function for the latent-variable estimation was formalized in a distribution-based manner, and its asymptotic form was determined for the Reg-LV estimation of both the maximum likelihood and Bayes methods (Yamazaki 2014). Hereinafter, the estimation method will be assumed to be the Bayes method unless it is explicitly stated otherwise.

In the Bayes estimation, parameter sampling from the posterior distribution is an important process for practical applications. The behavior of posterior distributions has been studied in the statistical literature. The convergence rate of the posterior distribution has been analyzed (e.g., Ghosal et al. 2000; Le Cam 1973; Ibragimov and Has’ Minskii 1981). Specifically, the rate based on the Wasserstein metrics is elucidated in finite and infinite mixture models (Nguyen 2013). To avoid singularities, conditions for the identifiability guaranteeing the positive Fisher matrix are necessary. Allman et al. (2009) use algebraic techniques to clarify the identifiability in some hierarchical models. In the regular case, the posterior distribution has the asymptotic normality, which means that it converges to a Gaussian distribution. Because the variance of the distribution goes to zero when the number of data is sufficiently large, the limit distribution is the delta distribution. Then, the sample sequence from the posterior distribution converges to a point. On the other hand, in the singular case, the posterior distribution does not have the asymptotic normality and the sequence converges to some area of the parameter space (Watanabe 2001). Studies on the Sing-OV estimation such as Yamazaki and Kaji (2013) have shown that the convergence area of the limit distribution depends on a prior distribution. The behavior of the posterior distribution has not been clarified in the Sing-LV estimation. The analysis of the present paper enables us to elucidate the relation between the prior and the limit posterior distributions.

The main contributions of the present paper are summarized as follows:

-

1.

The algebraic geometrical method for the Sing-OV estimation is applicable to the analysis of the Sing-LV estimation.

-

2.

The asymptotic form of the error function is obtained, and its dominant order is larger than that of the Reg-LV estimation.

-

3.

There is a case, where the limit posterior distribution in the Sing-LV estimation is different from that in the Sing-OV estimation.

The third result is important for practical applications: in some priors, parameter-sampling methods based on latent variables, such as Gibbs sampling in the Markov chain Monte Carlo (MCMC) method, cannot construct the proper posterior distribution because the sample sequence of the MCMC method follows the posterior of the Sing-LV estimation, which has a different convergence area from the desired one in the Sing-OV estimation.

The rest of this paper is organized as follows. The next section formalizes the hierarchical model and the singular case, and introduces the performance of the Reg-OV and the Sing-OV estimations. Section 3 explains the asymptotic analysis of the free energy function and the convergence of the posterior distribution based on the results of the Sing-OV estimation. In Sect. 4, the latent-variable estimation and its evaluation function are formulated in a distribution-based manner. Section 5 shows the main results: the asymptotic error function of general hierarchical models, and the detailed error properties in mixture models. In Sect. 6, we discuss the limit distribution of the posterior in the Sing-LV estimation and differences from the Sing-OV estimation. Finally, Sect. 7 presents conclusions.

2 The singular case and accuracy of the observable-variable estimation

In this section, we introduce the singular case and formalize the Bayes method for the observable-variable estimation. This section is a brief summary of the results on the Reg-OV and the Sing-OV estimations.

2.1 Hierarchical models and singularities

Let a learning model be defined by

where \(x\in R^M\) is an observable variable, \(y\in \{1,\ldots ,K\}\) is a latent one, and \(w\in W \subset R^d\) is a parameter. For the discrete \(x\) such that \(x\in \{1,2,\ldots ,M\}\), all results hold by replacing \(\int dx\) with \(\sum _{x=1}^M\).

Example 1

A mixture of distributions is described by

where \(f\) is the density function associated with a mixture component, which is identifiable for any \(b_k \in W_b \subset R^{d_c}\). The mixing ratios have constraints \(a_k\ge 0\) and \(\sum _{k=1}^K a_k =1\). We regard \(a_1\) as a function of the parameters \(a_1=1-\sum _{k=2}^K a_k\). The parameter \(w\) consists of \(\{a_2,\ldots ,a_K\}\) and \(\{b_1,\ldots ,b_K\}\), where \(w \in \{[0,1]^{K-1},W_b^{Kd_c}\}\). The latent variable \(y\) is the component label.

Assume that the number of data is \(n\) and the observable data \(X^n=\{x_1,\ldots ,x_n\}\) are independent and identically distributed from the true model, which is expressed as

Note that the value range of the latent variable \(y\) described as \([1,\ldots ,K^*]\) is generally unknown and can be different from the one in the learning model. In the example of the mixture model, the true model is expressed as

We also assume that the true model satisfies the minimality condition:

For example, consider a three-component model such that \(q(x|y=1)\ne q(x|y=2)=q(x|y=3)\). This model does not satisfy the minimality condition. Defining a new label, we obtain the following two-component expression, which satisfies the condition;

where \(y\in \{1,\bar{2}\}\) and \(\bar{2}=\{2,3\}\).

The present paper focuses on the case in which the true model is in the class of the learning model. More formally, there is a set of parameters expressing the true model such that

which is referred to as the true parameter set for \(x\). This means that the latent variable range satisfies \(K=K^*\) or \(K>K^*\). The former relation corresponds to the regular case and the latter one to the singular case. The true parameter set \(W_X^t\) includes \(K!\) isolated points in the regular case due to the symmetry of the parameter space. On the other hand, it consists of an analytic set in the singular case. We explain this structure using the following model settings.

Example 2

Assume that \(K=2\) and \(K^*=1\) in the mixture model. For illustrative purposes, let the learning and the true models be defined by

respectively, where \(x\in R^1\) and \(w=\{a,b_1,b_2\}\) such that \(a\in [0,1]\) and \(b_1,b_2\in W_b \subset R^1\). We can confirm that the true parameter set consists of the following analytic set:

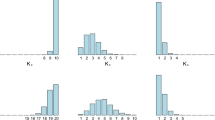

As shown in Fig. 1, let \(W_1\), \(W_2\), and \(W_3\) be the neighborhood of \(W^t_1\), \(W^t_2\), and \(W^t_3\), respectively. The Fisher information matrix is not positive definite in \(W^t_X\). Moreover, the intersections of \(W^t_1\), \(W^t_2\) and \(W^t_3\) are singularities.

When \(K=K^*\), \(W^t_X\) is a set of points, which corresponds to the regular case;

Example 3

If both the learning and the true models have two components,

for \(a^*\ne 0,1\) and \(b^*_1\ne b^*_2\), the estimation will be in the regular case. Due to \(K!=2!=2\), the set consists of two isolated points;

where the Fisher information matrix is positive definite.

2.2 The observable-variable estimation and its performance

In Bayesian statistics, estimation of the observable variables is defined by

where \(\varphi (w;\eta )\) is a prior distribution with the hyperparameter \(\eta \), \(p(w|X^n)\) is the posterior distribution of the parameter, and its normalizing factor is given by

This formulation is available for both the Reg-OV and Sing-OV estimations. In the mixture model, the Dirichlet distribution is often used for the prior distribution of the mixing ratio;

where \(a=\{a_1,\ldots ,a_K\}\), \(b=\{b_1,\ldots ,b_K\}\), \(\eta =\{\eta _1,\eta _2\}\in R^2_{>0}\), and \(\varGamma \) is the gamma function. Since \(a_k\) has the same exponential part for all \(k\), \(\varphi (a;\eta _1)\) is referred to as a symmetric Dirichlet distribution.

The estimation accuracy is measured by the average Kullback–Leibler divergence:

where the expectation is

Let us define the free energy as

which plays an important role in Bayes statistics as a criterion for selecting the optimal model. In the Reg-OV estimation, the Bayesian information criterion (BIC; Schwarz 1978) and the minimum-description-length principle (MDL; Rissanen 1986) are both based on the asymptotic form of \(F(X^n)\). Theoretical studies often analyze the average free energy given by

where the entropy function is defined by

The model that minimizes \(F(X^n)\) is then selected as optimal from among the candidate models. The energy function \(F_X(n)\) allows us to investigate the average behavior of the selection. Note that the entropy term does not affect the selection result because it is independent of the candidate models. According to the definitions, the average free energy and the generalization error have the relation

which implies that the asymptotic form of \(F(n)\) also relates to that of \(G(n)\). The rest of the paper discusses the case \(W_X^t\ne \emptyset \), although it is also important to consider the case \(W_X^t=\emptyset \), where the learning model cannot attain the true model.

The algebraic geometrical analysis (Watanabe 2001, 2009) is applicable to both the regular and singular cases for deriving the asymptotic form of \(F_X(n)\). Its result shows that the form is expressed as

where the coefficients \(\lambda _X\) and \(m_X\) are positive rational and natural, respectively. The reason why the free energy has this form will be explained in the next section. According to the relation Eq. (5), the asymptotic form of the generalization error is given by

Since the learning model can attain the true model, we can confirm that the generalization error converges to zero for \(n\rightarrow \infty \). The coefficients are \(\lambda _X=d/2\) and \(m_X=1\) in the regular case. It is proved that \(\lambda _X<d/2\) in the singular case (Section 7, Watanabe 2009).

3 Asymptotic analysis of the free energy and posterior convergence

This section introduces the asymptotic analysis of \(F_X(n)\) based on algebraic geometry and explains how the prior distribution affects convergence of the posterior distribution. The topics in this section have already been elucidated in the studies on the Sing-OV estimation (e.g., Watanabe 2009).

3.1 Relation between the free energy and the zeta function

Let us define another Kullback–Leibler divergence,

which is assumed to be analytic (Fundamental Condition I, Watanabe 2009). We consider the prior distribution \(\varphi (w;\eta )=\psi _1(w;\eta )\psi _2(w;\eta )\), where \(\psi _1(w;\eta )\) is a positive function of class \(C^{\infty }\) and \(\psi _2(w;\eta )\) is a nonnegative analytic function (Fundamental Condition II, Watanabe 2009). Let the zeta function of a parametric model be given by

where \(z\) is a complex variable. From algebraic analysis, we know that its poles are real, negative, and rational (Atiyah 1970). Let the largest pole and its order be \(z=-\lambda _X\) and \(m_X\), respectively. The zeta function includes the term

where \(f_c(z)\) is a holomorphic function. We define the state density function of \(t>0\) as

The zeta function is its Mellin transform:

Moreover, it is known that the inverse Laplace transform of \(v(t)\) has the same asymptotic form as \(F_X(n)\);

Then, there is the following relation,

Based on the Laplace and the Mellin transforms, the asymptotic forms of all functions are available if one of them is given. Following the transforms from \(\zeta _X(z)\) to \(F_X(n)\) through \(v(t)\), we obtain the asymptotic form

Let us define the effective area of the parameter space, which plays an important role in the convergence analysis of the posterior distribution. According to the results on the Sing-OV estimation, it has been found that the largest pole exists in a restricted parameter space. In Example 2, the parameter space is divided into \(W_1\), \(W_2\), \(W_3\) and the rest of the support of \(\varphi (w;\eta )\). The first three sets are neighborhoods of the analytic sets \(W^t_1\), \(W^t_2\) and \(W^t_3\) constructing \(W^t_X\), respectively. Assume that a pole \(z=-\lambda _e\) of the zeta function

is equal to the largest pole \(z=-\lambda _X\), where \(W_e=W_1\cap W_2\). In the present paper, we refer to \(W_e\) as the effective area. Let the effective area be denoted by the minimum set \(W_1\cap W_2\). In other words, we do not call \(W_1\) the effect area even though \(W_1\) includes \(W_e\). If the largest pole of \(\int _{W_1\setminus W_1\cap W_2} H_X(w)^z\varphi (w;\eta )dw\) is also equal to \(z=-\lambda _X\), the effective area is \(W_1\) since \(W_1\cap W_2\) can not cover the area.

3.2 Phase transition

A switch in the underlying function of the free energy is generally referred to as a phase transition. When the prior of the mixing ratio parameters is the Dirichlet distribution. the phase transition is observed in \(F_X(n)\). Combining the results of Yamazaki et al. (2010) and Yamazaki and Kaji (2013), we obtain the following lemma;

Lemma 1

Suppose that \(K=2\), \(K^*=1\) in the mixture model, where the true and the learning models are given by

respectively. Let the component be expressed as

where \(x\in \{1,\ldots ,M\}\), \(M\) is an integer such that \(K<M\), and \((M\; m)^\top \) is the binomial coefficient. We consider the case \(0<b^*<1\). Let the prior distribution for the mixing ratio be the symmetric Dirichlet distribution, and the one for \(b_k\) be analytic and positive. Then the largest pole of the zeta function \(\zeta _X(z)\) is

Moreover, the effective area \(W_e\) is given by

The proof is in Appendix 3. Lemma 1 indicates that the free energy has the phase transition at \(\eta _1=1/2\).

3.3 Convergence area of the posterior distribution

The asymptotic form of the free energy determines the limit structure of the posterior distribution. In this subsection, we will show that the convergence area is the effective parameter area.

The free energy \(F(X^n)\) has an asymptotic form similar to the average energy \(F_X(n)\) (Watanabe 2009, Main Formula II),

where \(S(X^n)=\frac{1}{n}\sum _{i=1}^n\ln q(x_i)\). According to \(Z(X^n) = \exp (-F(X^n))\), the posterior distribution has the expression,

Let us divide the neighborhood of \(W_X^t\) into \(W_e \cup W_o\), where \(W_e\) is the effective area. Then, there is a pole \(z=-\mu _X\) such that \(\mu _X>\lambda _X\) in the other area \(W_o\), and the posterior value of \(W_o\) is described by

The posterior asymptotically has zero value in \(W_o\), which means that it converges to the effective area.

According to Lemma 1, the effective area depends on the hyperparameter. Therefore, the convergence area changes at the phase transition point \(\eta _1=1/2\). It also shows how the learning model realizes the true one. In \(W_1\cup W_3\), the true model is expressed by one-component model, which means that the redundant component is eliminated. On the other hand, all components of the learning model are used in \(W_2\).

The phase transition is observed in general mixture models;

Theorem 1

Let a learning model and the true one be expressed as Eqs. (1) and (2), respectively. When the prior of the mixing ratio is the Dirichlet distribution of Eq. (4), the average free energy \(F_X(n)\) has at least two phases: the phase that eliminates all redundant components when \(\eta _1\) is small, and the one that uses them when \(\eta _1\) is sufficiently large.

The proof is in Appendix 3.

4 Formal definition of the latent-variable estimation and its accuracy

This section formulates the Bayes latent-variable estimation and an error function that measures its accuracy.

We first consider a detailed definition of a latent variable. Let \(Y^n=\{y_1,\ldots ,y_n\}\) be unobservable data, which correspond to the latent parts of the observable \(X^n\). Then, the complete form of the data is \((x_i,y_i)\), and \((X^n,Y^n)\) and \(X^n\) are referred to as complete and incomplete data, respectively. The true model generates the complete data \((X^n,Y^n)\), where the range of the latent variables is \(y_i\in \{1,\ldots ,K^*\}\). The learning model, on the other hand, has the range \(y_i\in \{1,\ldots ,K\}\). For a unified description, we define that the true model has probabilities \(q(y)=0\) and \(q(x,y)=0\) for \(y>K^*\).

We define the true parameter set for \((x,y)\) as

which is a proper subset of \(W_X^t\). In Example 2,

The subsets \(W_2=\{b_1=b_2=b^*\}\) and \(W_3=\{a=0,b_2=b^*\}\) in \(W_X^t\) are excluded since \(W_{XY}^t\) takes account of the representation with respect to not only \(x\) but also \(y\). Due to the assumption \(W_X^t\ne \emptyset \), \(W_{XY}^t\) is not empty. The set \(W_{XY}^t\) again consists of an analytic set in the singular case, and it is a unique point in the regular case.

While latent-variable estimation falls into various types according to the target of the estimation, the present paper focuses on the Type-I estimation of Yamazaki (2014): the joint probability of \((y_1,\ldots ,y_n)\) is the target and is written as \(p(Y^n|X^n)\). The Bayes estimation has two equivalent definitions:

where the marginal likelihood for the complete data is given by

It is easily confirmed that \(Z(X^n)=\sum _{Y^n} Z(X^n,Y^n)\).

The true probability of \(Y^n\) is uniquely given by

The accuracy of the estimation is measured by the difference between \(q(Y^n|X^n)\) and \(p(Y^n|X^n)\). Thus, we define the error function as the average Kullback–Leibler divergence,

where the expectation is defined as

5 Asymptotic analysis of the error function

In this section, we show that the algebraic geometrical analysis is applicable to the Sing-LV estimation, and present the asymptotic form of the error function \(D(n)\).

5.1 Conditions for the analysis

Before showing the asymptotic form of the error function, we state necessary conditions.

Let us define the zeta function on the complete data \((x,y)\) as

where the Kullback–Leibler divergence \(H_{XY}(w)\) is given by

Let the largest pole of \(\zeta _{XY}(z)\) be \(z=-\lambda _{XY}\), and let its order be \(m_{XY}\).

We consider the following conditions:

-

(A1)

The divergence functions \(H_{XY}(w)\) and \(H_X(w)\) are analytic.

-

(A2)

The prior distribution has the compact support, which includes \(W_X^t\), and has the expression \(\varphi (w;\eta )=\psi _1(w;\eta )\psi _2(w;\eta )\), where \(\psi _1(w;\eta )>0\) is a function of class \(C^{\infty }\) and \(\psi _2(w;\eta )\ge 0\) is analytic on the support of \(\varphi (w;\eta )\).

They correspond to the Fundamental Conditions I and II in Watanabe (2009), respectively. It is known that models with discrete \(x\) such as the binomial mixture satisfy (A1) (Yamazaki et al. 2010). On the other hand, if \(x\) is continuous, there are some models, of which \(H_X(w)\) is not analytic;

Example 4

(Example 7.3 in Watanabe 2009) In the Gaussian mixture, \(H_X(w)\) is not analytic, which means that the mixture model does not satisfies (A1). Let us consider a simple case; \(K=2\) and \(K^*=1\), where the true model and a learning model are given by

respectively, where \(x \in R^1\),

and \(b\in W^1=R^1\). Then,

where the last expression is a formal expansion. Since its convergence radius is zero at \(a=0\), \(H_X(a)\) is not analytic. Based on the similar way, we can find that \(H_X(w)\) is not analytic in a general Gaussian mixture .

The following example shows a prior distribution for the mixture model satisfying (A2).

Example 5

The symmetric Dirichlet distribution satisfies the condition (A2) because Eq. (4) is obviously analytic and non negative in its support. Choosing an analytic distribution for \(\varphi (b;\eta _2)\), we obtain the prior \(\varphi (w;\eta )\) satisfying the condition (A2).

5.2 Asymptotic form of the error function

Now, we show the main theorem on the asymptotic form of the error function:

Theorem 2

Let the true distribution of the latent variables and the estimated distribution be defined by Eqs. (9) and (10), respectively. By assuming the conditions (A1) and (A2), the asymptotic form of \(D(n)\) is expressed as

The proof is in Appendix 1. The theorem indicates that the algebraic geometrical method plays an essential role for the analysis of the Sing-LV estimation because the coefficients consist of the information of the zeta functions such as \(\lambda _{XY}\), \(\lambda _X\), \(m_{XY}\) and \(m_X\). The order \(\ln n/n\) has not ever appeared in the Reg-LV estimation. In the Reg-LV estimation such that \(K=K^*\), the asymptotic error function has the following form (Yamazaki 2014);

where \(w^*\) is the unique point consisting of \(W_{XY}^t\). The dominant order is \(1/n\), and the coefficient is determined by the Fisher information matrices on \(p(x,y|w)\) and \(p(x|w)\). Theorem 2 implies that the largest possible order is \(\ln n/ n\) in the Sing-LV estimation. This order change is adverse for the performance because the error converges more slowly to zero. In singular cases, the probability \(p(Y^n|X^n)\) is constructed over the space \(Y^n \in K^n\) while the true probability \(q(Y^n|X^n)\) is over \(Y^n\in K^{*n}\). The size of the redundant space \(K^n-K^{*n}\) grows exponentially with the amount of training data. For realizing \(p(x,y|w^*)\), where \(w^* \in W_{XY}^t\), we must assign zero to the probabilities on the vast redundant space. The increased order reflects the cost of assigning these values.

Let us compare the dominant order of \(D(n)\) with that of the generalization error. We find that both Reg-OV and Sing-OV estimations have the same dominant order \(1/n\) as shown in Eq. (6) while the redundancy and the hyperparameter affect the coefficients. Thus, changing the order is a unique phenomenon of the latent-variable estimation.

5.3 Asymptotic error in the mixture model

In Theorem 2, the possible dominant order was calculated as \(\ln n/n\). However, there is no guarantee that this is the actual maximum order; the order can decrease to \(1/n\) if the coefficients are zero, where the zeta functions \(\zeta _{XY}(z)\) and \(\zeta _X(z)\) have their largest poles in the same position and their multiple orders are also the same. The result of the following theorem clearly shows that the dominant order is \(\ln n/n\) in the mixture models.

Theorem 3

Let the learning and the true models be mixtures defined by Eqs. (1) and (2), respectively. Assume the conditions (A1) and (A2). The Bayes estimation for the latent variables, Eq. (9), with the prior represented by Eqs. (3) and (4) has the following bound for the asymptotic error:

The proof is in Appendix 1. Due to the definition of the Dirichlet distribution, \(\eta _1\) is positive. Combining this with the assumption \(K^*<K\), we obtain that the coefficient of \((\ln n)/n\) is positive, which indicates that it is the dominant order.

The Dirichlet prior distribution for the mixing ratio is qualitatively known to have a function controlling the number of available components, the so-called automatic relevance determination (ARD); a small hyperparameter tends to have a result with few components due to the shape of the distribution. Theorem 3 quantitatively shows an effect of the Dirichlet prior. The lower bound in the theorem mathematically supports the ARD effect; the redundancy \(K-K^*\) and the hyperparameter \(\eta _1\) have a linear influence on the accuracy.

Theorem 3 holds in a wider class of the mixture models since the error is evaluated as the lower bound. The following corollary shows that the Gaussian mixture has the same bound for the error even though it does not satisfy (A1) as shown in Example 4.

Corollary 1

Assume that in a mixture model, \(H_{XY}(w)\) is analytic, and the prior distribution for the mixing ratio is the symmetric Dirichlet distribution. If there is a positive constant \(C_1\) such that

the error function has the same lower bound as Theorem 3. In the Gaussian mixture, components of which are defined by

where \(x\in R^M\) and \(b\in W^M=R^M\), \(H_{XY}(w)\) is analytic and the inequality holds.

The proof is in Appendix 1.

6 Discussion

Theorem 2 shows that the asymptotic error has the coefficient \(\lambda _{XY}-\lambda _X\), which is the difference of the largest poles in the zeta functions. Based on the free energy of the complete data defined as \(F(X^n,Y^n)=-\ln Z(X^n,Y^n)\), we find that the error is determined by the different properties between \(F(X^n,Y^n)\) and \(F(X^n)\) since their asymptotic forms are expressed as

where \(S(X^n,Y^n) = -\frac{1}{n}\sum _{i=1}^n \ln q(x_i,y_i)\).

In this section, we examine the properties of \(F(X^n,Y^n)\) and indicate that the difference from those of \(F(X^n)\) affects the behavior of the Sing-LV estimation and the parameter sampling from the posterior distribution.

6.1 Effect to eliminate redundant labels

According to Eq. (9), the MCMC sampling of the \(Y^n\)’s following \(p(Y^n|X^n)\) is essential for the Bayes estimation. The following relation indicates that we do not need to calculate \(Z(X^n)\) and that the value of \(Z(X^n,Y^n)\) determines the properties of the estimation:

The expression of \(p(X^n,Y^n)\) can be tractable with a conjugate prior, which marginalizes out the parameter integral (Dawid and Lauritzen 1993; Heckerman 1999).

We determine where the estimated distribution \(p(Y^n|X^n)\) has its peak. Obviously, the label assignment \(Y^n\) minimizing \(F(X^n,Y^n)\) provides the peak due to the definition \(F(X^n,Y^n)=-\ln Z(X^n,Y^n)\) and Eq. (12). Let this assignment be described as \(\bar{Y}^n\);

The following discussion shows that \(\bar{Y}^n\) does not include the redundant labels.

We have to consider the symmetry of the latent variable in order to discuss the peak. In latent-variable models, both the latent variable and the parameter are symmetric. In Example 2, the component \(f(x|b^*)\) of the true model can be attained by the first component \(a_1f(x|b_1)\) or the second one \((1-a_1)f(x|b_2)\) of the learning model. Because the true label \(y=1\), which the true model provides, is unobservable, there are two proper estimation results \(Y^n=\{1,\ldots ,1\}\) and \(Y^n=\{2,\ldots ,2\}\) to indicate that the true model consists of one component. This is the symmetry of the latent variable. In the parameter space, it corresponds to the symmetric structure of \(W_1\) and \(W_3\) shown in Fig. 1. The symmetry makes it difficult to interpret the estimation results, which is known as the label-switching problem.

For the purpose of the theoretical evaluation, the definition of the error function \(D(n)\) selects the true assignment of the latent variable. In the above example, only \(Y^n=\{1,\ldots ,1\}\) is accepted as the proper result. However, there is no selection of the true assignment in the estimation process; other symmetric assignments such as \(Y^n=\{2,\ldots ,2\}\) will be the peak of \(p(Y^n|X^n)\). Then, the true parameter area \(W^t_{XY}\) is not sufficient to describe the peak. Taking account of the symmetry, we define another analytic set of the parameter as

\(\varSigma \) is the set of injective functions from \(\{1,\ldots ,K^*\}\) to \(\{1,\ldots ,K\}\). It is easy to confirm that \(W^t_{XY}\subset W_{XY}^p\). In Example 2, \(W^t_{XY}=W^t_1\subset W^t_1\cup W^t_3=W_{XY}^p\). Note that the redundant components are eliminated in \(p(x|w^*)\), where \(w^*\in W^p_{XY}\).

Let us analyze the location of the peak. Define that

where \(w^* \in W^p_{XY}\). Switching the label based on the symmetry, we can easily prove that \(\max _{w^*,Y^n}S'(X^n,Y^n)=\max _{Y^n}S(X^n,Y^n)\). Moreover, \(-\frac{1}{n}\sum _{i=1}^n \ln p(x_i,y_i|w)\) with \(w\in W_X^t \setminus W_{XY}^p\), such as \(w\in W^t_2\) in Example 2, cannot realize \(S(X^n,Y^n)\) according to a simple calculation as shown in the next paragraph. Because the leading term of the asymptotic \(F(X^n,Y^n)\) is \(nS(X^n,Y^n)\) and \(nS'(X^n,\bar{Y}^n)\) realizes it, the true assignment \(\bar{Y}^n\) follows the parameter \(w^* \in W^p_{XY}\). Recalling that the redundant components are eliminated when \(w\in W^p_{XY}\), we can conclude that the redundant labels are eliminated in \(\bar{Y}^n\). This elimination occurs in any prior distribution if its support includes \(W^p_{XY}\).

Let us confirm the elimination in Example 2. We consider three parameters; \(w^*_1\in W^t_1=\{a=1,b_1=b^*\}\), \(w^*_2\in W^t_2=\{b_1=b_2=b^*\}\) and \(w^*_3\in W^t_3=\{a=0,b_2=b^*\}\). The leading term of the asymptotic \(F(X^n,Y^n)\) is expressed as

for \(j=1,2,3\). This is rewritten as

where \(\delta _{i,j}\) is the Kronecker delta. The assignment \(\bar{Y}^n\) depends on \(w^*_j\). For example, \(\bar{Y}^n=\{1,\ldots ,1\}\) for \(w^*_1\) and \(\bar{Y}^n=\{2,\ldots ,2\}\) for \(w^*_3\). Then, we obtain that

where \(N_1=\sum _{i=1}^n \delta _{y_i,1}\) and \(N_2=\sum _{i=1}^n \delta _{y_i,2}\). The cases \(j=1\) and \(j=3\) have the same value and the case \(j=2\) is smaller than the others due to the first two terms in Eq. (13), which holds for any value of \(0<a<1\) in \(W^t_2\). This means that \(W^t_2\) cannot make \(p(Y^n|X^n)\) maximum. In other words, the assignment \(Y^n\) using both labels \(1\) and \(2\) is not the peak.

6.2 Two approaches to calculate \(p(Y^n|X^n)\) and their difference

It is necessary to emphasize that the calculation of \(p(Y^n|X^n)\) based on sampling from \(p(w|X^n)\) following Eq. (8) can be inaccurate. According to Theorem 1 and Eq. (7), we confirm that \(F(X^n)\) has a phase transition in mixture models due to the hyperparameter of the Dirichlet prior. This means that, when the hyperparameter \(\eta _1\) is large, the Monte Carlo sampling are from the area, in which all the components are used such as \(W^t_2\). In the numerical computation, the integrand of Eq. (8) will be close to \(\prod _{i=1}^n p(x_i,y_i|w^*_2)/p(x_i|w^*_2)\), where \(w^*_2\in W^t_2\). Because \(w^*_2\in W^t_2 \subset W^t_X\),

On the other hand, based on Eq. (9), the desired value of \(p(Y^n|X^n)\) is calculated as

Since \(S'_2(X^n,Y^n)>S(X^n,Y^n)\), the value of Eq. (8) is much smaller than that of Eq. (9). Therefore, the result of the numerical integration in Eq. (8) is almost zero. The parameter area providing non-zero value of integrand in Eq. (8) is located in the tail of the posterior distribution when \(p(w|X^n)\) converges to \(W^t_X\setminus W^p_{XY}\).

6.3 Failure of parameter sampling from the posterior distribution

In the previous subsection, parameter sampling from the posterior distribution can make an adverse effect on the calculation of the distribution of the latent variable. Here, in the other way, we show that latent-variable sampling can construct an undesired posterior distribution.

There are methods to sample a sequence of \(\{w,Y^n\}\) from \(p(w,Y^n|X^n)\). Ignoring \(Y^n\), we obtain the sequence \(\{w\}\). The Gibbs sampling in the MCMC method (Robert and Casella 2005) is one of the representative techniques.

[Gibbs Sampling for a Model with a Latent Variable]

-

1.

Initialize the parameter;

-

2.

Sample \(Y^n\) based on \(p(Y^n|w,X^n)\);

-

3.

Sample \(w\) based on \(p(w|Y^n,X^n)\);

-

4.

Iterate by alternately updating Step 2 and Step 3.

The sequence of \(\{w,Y^n\}\) obtained by this algorithm follows \(p(w,Y^n|X^n)\). The extracted parameter sequence \(\{w\}\) is assumed to be samples from the posterior because \(p_G(w|X^n)=\sum _{Y^n} p(w,Y^n|X^n)\) is theoretically equal to \(p(w|X^n)\). However, in the mixture models, the practical value of \(p_G(w|X^n)\) based on the Monte Carlo method can be different from that of the original posterior \(p(w|X^n)\) when the hyperparameter for the mixing ratio \(\eta _1\) is large.

Let us consider the expression

We determine a location of a pair \((\bar{w},\bar{Y}^n)\) that minimizes this expression in the asymptotic case \(n\rightarrow \infty \) because the relation \(p(X^n,Y^n,w)\propto p(w,Y^n|X^n)\) indicates that the sequence \(\{w,Y^n\}\) is mainly taken from the neighborhood of the pair. The third term of the last expression does not have any asymptotic effect because it has the constant order on \(n\). The first two terms have the same expression as Eq. (13). Based on the calculation of \(S'_j(X^n,Y^n)\), \(\bar{w}\in W^p_{XY}\) and \(\bar{Y}^n=\arg \max _{Y^n} p(X^n,Y^n,\bar{w})\). Therefore, the practical value of \(p_G(w|X^n)\) is calculated by the sequence \(\{w\}\) around \(W_{XY}^p\) for any \(\eta _1\) while the convergence area of the original \(p(w|X^n)\) depends on the phase of \(F(X^n)\) controlled by \(\eta _1\).

In Example 2, the posterior \(p(w|X^n)\) converges to \(W^t_2\) when \(\eta _1\) is large. On the other hand, the sampled sequence based on \(p(X^n,Y^n,w)\) are mainly from \(W_1\cup W_3\) since \(S'_2(X^n,\bar{Y}^n_2)>S'_1(X^n,\bar{Y}^n_1)=S'_3(X^n,\bar{Y}^n_3)\), where \(\bar{Y}^n_j\) stands for the assignment minimizing \(S'_j(X^n,Y^n)\). In order to construct the sequence \(\{w\}\) following \(p(w|X^n)\), we need samples \((w,Y^n)\in W_2\times \bar{Y}^n_2\), which are located in the tail of \(p(w,Y^n|X^n)\). In theory, the sequence \(\{w\}\) from \(p(w,Y^n|X^n)\) realizes the one from \(p(w|X^n)\). However, in practice, it is not straightforward to obtain \(\{w,Y^n\}\) from the tail of \(p(w,Y^n|X^n)\). This property of the Gibbs sampling has been reported in a Gaussian mixture model (Nagata and Watanabe 2009). The experimental results show that the obtained sequence of \(\{w\}\) is localized in the area corresponding to \(W_{XY}^p\). Note that there is no failure of the MCMC method when \(\eta _1\) is sufficiently small, where the peaks of \(p(w|X^n)\) and \(p(w,Y^n|X^n)\) are in the same area. Thus, to judge the reliability of the MCMC sampling, we have to know the phase transition point such as \(\eta _1=1/2\) in Lemma 1.

7 Conclusions

The present paper clarifies the asymptotic accuracy of the Bayes latent-variable estimation. The dominant order is at most \(\ln n/n\), and its coefficient is determined by a positional relation between the largest poles of the zeta functions. According to the mixture-model case, it is suggested that the order is dominant and the coefficient is affected by the redundancy of the learning model and the hyperparameters. The accuracy of prediction can be approximated by methods such as the cross-validation and bootstrap methods. On the other hand, there is no approximation for the accuracy of latent-variable estimation, which indicates that the theoretical result plays a central role in evaluating the model and the estimation method.

References

Akaike, H. (1974). A new look at the statistical model identification. IEEE Transaction on Automatic Control, 19, 716–723.

Allman, E., Matias, C., & Rhodes, J. (2009). Identifiability of parameters in latent structure models with many observed variables. Annals of Statistics, 37, 3099–3132.

Aoyagi, M. (2010). Stochastic complexity and generalization error of a restricted boltzmann machine in Bayesian estimation. Journal of Machine Learning Research, 11, 1243–1272.

Aoyagi, M., & Watanabe, S. (2005). Stochastic complexities of reduced rank regression in Bayesian estimation. Neural Networks, 18, 924–933.

Atiyah, M. F. (1970). Resolution of singularities and division of distributions. Communications on Pure and Applied Mathematics, 23, 145–150.

Dawid, A. P., & Lauritzen, S. L. (1993). Hyper-Markov laws in the statistical analysis of decomposable graphical models. Annals of Statistics, 21(3), 1272–1317.

Ghosal, S., Ghosh, J. K., & Vaart, A. W. V. D. (2000). Convergence rates of posterior distributions. Annals of Statistics, 28, 500–531.

Heckerman, D. (1999). Learning in graphical models. In M. I. Jordan (Ed.), A tutorial on learning with Bayesian networks (pp. 301–354). Cambridge, MA, USA: MIT Press.

Hironaka, H. (1964). Resolution of singularities of an algebraic variety over a field of characteristic zero I. Annals of Mathematics, 79(1), 109–203.

Ibragimov, I. A., & Has’ Minskii, R. Z. (1981). Statistical estimation-asymptotic theory (Vol. 16). Berlin: Springer.

Le Cam, L. (1973). Convergence of estimates under dimensionality restrictions. Annals of Statistics, 1, 38–53.

Nagata, K., & Watanabe, S. (2009). Design of exchange monte carlo method for Bayesian learning in normal mixture models. In: Proceedings of the 15th international conference on advances in neuro-information processing—Volume Part I (pp. 696–706). Berlin, Heidelberg: Springer, ICONIP’08.

Nguyen, X. (2013). Convergence of latent mixing measures in finite and infinite mixture models. Annals of Statistics, 41, 370–400.

Rissanen, J. (1986). Stochastic complexity and modeling. Annals of Statistics, 14, 1080–1100.

Robert, C. P., & Casella, G. (2005). Monte Carlo statistical methods (Springer texts in statistics). Secaucus, NJ: Springer New York Inc.

Rusakov, D., & Geiger, D. (2005). Asymptotic model selection for naive Bayesian networks. Journal of Machine Learning Research, 6, 1–35.

Schwarz, G. E. (1978). Estimating the dimension of a model. Annals of Statistics, 6(2), 461–464.

Watanabe, S. (2001). Algebraic analysis for non-identifiable learning machines. Neural Computation, 13(4), 899–933.

Watanabe, S. (2009). Algebraic geometry and statistical learning theory. New York, NY: Cambridge University Press.

Yamazaki K (2014) Asymptotic accuracy of distribution-based estimation for latent variables. Journal of Machine Learning Research, 13, 3541–3562.

Yamazaki, K., & Kaji, D. (2013). Comparing two Bayes methods based on the free energy functions in Bernoulli mixtures. Neural Networks, 44C, 36–43.

Yamazaki, K., & Watanabe, S. (2003a). Singularities in mixture models and upper bounds of stochastic complexity. International Journal of Neural Networks, 16, 1029–1038.

Yamazaki, K., & Watanabe, S. (2003b). Stochastic complexity of Bayesian networks. In Proceedings of UAI, pp. 592–599.

Yamazaki, K., & Watanabe, S. (2005a). Algebraic geometry and stochastic complexity of hidden Markov models. Neurocomputing, 69(1–3), 62–84.

Yamazaki, K., & Watanabe, S. (2005b). Singularities in complete bipartite graph-type Boltzmann machines and upper bounds of stochastic complexities. IEEE Transactions on Neural Networks, 16(2), 312–324.

Yamazaki, K., Aoyagi, M., & Watanabe, S. (2010). Asymptotic analysis of Bayesian generalization error with Newton diagram. Neural Networks, 23, 35–43.

Zwiernik, P. (2011). An asymptotic behaviour of the marginal likelihood for general Markov models. Journal of Machine Learning Research, 999888, 3283–3310.

Author information

Authors and Affiliations

Corresponding author

Additional information

This research was partially supported by the Kayamori Foundation of Informational Science Advancement and KAKENHI 23500172 and 24700139.

Editor: Peter Flach.

Appendices

Appendix 1

Here, we prove Theorems 2 and 3, and Corollary 1.

1.1 Proof of Theorem 2

Proof

Let us define another average free energy as

where the entropy function is given by

According to the definitions of the error function \(D(n)\) and the Bayes estimation method Eq. (9), it holds that

Based on (A1), (A2), and algebraic geometrical analysis, we obtain the asymptotic forms of \(F_{XY}(n)\) and \(F_X(n)\):

which proves the theorem. \(\square \)

1.2 Outline of the calculation of a pole of the zeta function

We will show the outline of calculation to find a pole. Let us introduce some useful lemmas for the zeta function. The proofs are omitted because they are almost obvious due to the relation between the free energy and the zeta function.

Lemma 2

Let the largest poles of the zeta functions \(\int \!\! H_1(w)^z\varphi (w)dw\) and \(\int H_2(w)^z\varphi (w)dw\) be \(z=-\lambda _1\) and \(z=-\lambda _2\), respectively. It holds that \(\lambda _1\le \lambda _2\) when \(H_1(w)\le H_2(w)\) on the support of \(\varphi (w)\).

Lemma 3

Under the same conditions as Lemma 2, it holds that \(\lambda _1=\lambda _2\) if there exist positive constants \(C_1\) and \(C_2\) such that \(C_1 H_2(w) \le H_1(w) \le C_2 H_2(w)\).

We define an equivalence relation \(H_1(w)\equiv H_2(w)\) due to \(\lambda _1=\lambda _2\) in Lemma 3.

Let us now calculate a general zeta function \(\int H(w)^z\varphi (w)dw\). First, we focus on the restricted area \(W_{res}\), which is the neighborhood of \(\{w: H(w)=0\}\) in the parameter space because poles of the zeta function do not depend on other areas (Watanabe 2001). Next, we need a function \(H^{\text {alg}}(w)\), which is a polynomial of \(w\) and satisfies \(H(w)\equiv H^{\text {alg}}(w)\). Based on Lemma 3, the largest pole of the zeta function \(\int _{W_{res}} H^{\text {alg}}(w)^z \varphi (w)dw\) is the same as that of the original zeta function. According to the resolution of singularities (Hironaka 1964), there is a mapping \(u=\varPhi (w)\) such that

where \(a(u)\) is a non-zero analytic function in \(\{u{:}H(\varPhi (w))=0\}\), and \(\alpha _1,\ldots ,\alpha _d\) are integers. Let \(|\varPhi |=|u_1|^{\beta _1}\ldots |u_d|^{\beta _d}\) be the Jacobian, and the prior distribution is described as \(\varphi (\varPhi (w))=u_1^{\gamma _1}\ldots u_d^{\gamma _d}\), where \(\beta _i\) and \(\gamma _i\) are integers. Then, it holds that

Calculating the integral over \(u_i\) in the last expression, we find that the zeta function has factors \((2\alpha _iz+\beta _i+\gamma _i+1)^{-1}\). This means that there are poles \(z=-(\beta _i+\gamma _i+1)/(2\alpha _i)\).

When it is not straightforward to find the multiple form such as Eq. (14), we can consider a partially-multiple form;

where the function \(g(u\setminus u_1)\) can be a polynomial of \(u\setminus u_1\). The zeta function is written as

Calculating the integral over \(u_1\), we obtain a pole \(z=-(\beta _1+\gamma _1+1)/(2\alpha _1)\).

Assume that we obtain a partially-multiple form as the upper bounds such that

where the Jacobian and the prior include factors \(|u_1|^{\beta _1}\) and \(u_1^{\gamma _1}\), respectively. Due to Lemma 2, a pole of the zeta function with respect to the right-hand side provides the upper bounds \(\lambda \le (\beta _1+\gamma _1+1)/(2\alpha _1)\).

1.3 Proof of Theorem 3

The following lemma shows the calculation of \(\lambda _{XY}\).

Lemma 4

The largest pole of the zeta function \(\zeta _{XY}(z)\) is

Proof

(Lemma 4) We consider a restricted parameter space \(W_1\), which is a neighborhood of \(W^t_{XY}\) given by

This is a generalization of \(W_1\) in Example 2. The Kullback–Leibler divergence has the expression

Based on the shift transformation \(\varPhi _1(w)\), such that

we can find an equivalent polynomial described as

where the detailed derivation is in Appendix 2. Let the right-hand side of Eq. (15) be \(H^{\text {alg}}_{XY}(\varPhi _1(w))\), and consider a zeta function given by

According to Lemma 3, the positions of the poles of \(\zeta _1(z)\) are the same as those of \(\zeta _{XY}(z)\). By using a blow-up \(\varPhi _2\) defined by

we obtain the following expression in the restricted area,

where \(f_1\) is a function consisting of the parameters except for \(u_2\), and a factor on \(|u_2|\) is derived from the Jacobian of \(\varPhi _2\). Note that there is not \(u_1\) as a parameter in \(\varPhi _2\varPhi _1(w)\) since \(w_1\) is already omitted on the basis of the relation \(a_1=1-\sum _{k=2}^K a_k\). The symmetric Dirichlet prior has a factor \(\prod _{k=2}a_k^{\eta _1-1}\) in the original parameter space. According to \(\varPhi _2\varPhi _1(a_k)=u_2^2u_k\) for \(k>K^*\), it has a factor \(u_2^{2(K-K^*)(\eta _1-1)}\) in the space of \(\varPhi _2\varPhi _1(w)\), which indicates that \(\zeta _1(z)\) has a pole at \(z=-(K^*-1+K^*d_c)/2-(K-K^*)\eta _1\). Considering the symmetry of the parameters in \(H_{XY}^{\text {alg}}(w)\), we determine that this pole is the largest and that its order is \(m_{XY}=1\), which proves Lemma 4. \(\square \)

The result for \(\lambda _X\) is shown in the following lemma.

Lemma 5

The largest pole of the zeta function \(\zeta _X(z)\) has the bound

Proof

(Lemma 5) It is known [cf. Yamazaki et al. (2010) ; Section 7.8 of Watanabe (2009)] that, in the restricted area \(W_1\), there are positive constants \(C_1\) and \(C_1'\) such that

Using \(\varPhi _1(w)\), we obtain

Because \(f(x|b_k)\) is a regular model, there is a positive constant \(C_2\) such that

in \(W_1\), where the detailed derivation is in Appendix 2. Let the right-hand side be \(H_X^{\text {alg}}(\varPhi _1(w))\), and consider a zeta function given by

According to Lemma 2, a pole \(z=-\mu \) of the zeta function \(\zeta _2(z)\) provides bounds for the largest pole of \(\zeta _X(z)\), such that \(z=-\lambda _X\ge -\mu \). By using a blow-up \(\varPhi _3\) defined by

we obtain

where \(f_2\) is a function of the parameters except for \(u_2\), and the factor on \(|u_2|\) is derived from the Jacobian of \(\varPhi _3\). It is easy to confirm that the Dirichlet prior has a factor \(u_2^{(K-K^*)(\eta _1-1)}\). Therefore, \(\zeta _2(z)\) has a pole at \(z=-\mu =-(K^*-1+K^*d_c)/2-(K-K^*)\eta _1/2\), which proves Lemma 5. \(\square \)

We are now prepared to prove Theorem 3.

Proof

(Theorem 3) According to Theorem 2, it holds that

Combining Lemmas 4 and 5, we obtain

which completes the proof. \(\square \)

1.4 Proof of Corollary 1

Proof

Since \(H_X(w)\) has the bound,

Lemma 5 immediately holds. Due to the analytic divergence \(H_{XY}(w)\), Lemma 4 also holds. Combining these lemmas, we obtain the same lower bound as Theorem 3. In the Gaussian mixture,

Because \(f(x|b)\) is identifiable, \(H_{XY}(w)\) is analytic. Section 7.8 in (Watanabe 2009) shows that \(H_X(w)\) has the upper bound expressed as Eq. (17) in the Gaussian mixture, which proves the corollary. \(\square \)

Appendix 2

This section shows supplementary proofs for some equations in the proof of Theorem 3.

According to the analysis with the Newton diagram (Yamazaki et al. 2010), the following relations hold;

where \(w=\{w_1,w_2,\ldots ,w_d\}\), and \(h_0\) and \(h_1\) are polynomial. Using these relations, we prove Eqs. (15) and (16).

1.1 Proof of Equation (15)

Recall that the Kullback–Leibler divergence has the following equivalent expression;

Based on the transformation \(\varPhi _1(w)\) and the Taylor expansion of \(\ln (1+\varDelta x)\) around \(|\varDelta x|= 0\), we obtain

where \(h_r(w)\) includes the higher order terms on \(\bar{a}_k\). By applying Eq. (19) to \(\bar{a}_k^2\), it holds that

Due to Eqs. (20) and (21), \(h_r(w)\) is excluded;

Because \(f(x|b_k)\) is regular, it is known that

which proves that

\(\square \)

1.2 Proof of Equation (16)

Recall that the Kullback–Leibler divergence \(H_X(\varPhi _1(w))\) has the following bound;

In the area \(\varPhi _1(W_1)\), there is a positive constant \(C''_1\) such that

The Taylor expansion at \(\bar{b}_k\) yields

The second term of the right-hand side in Eq. (22) has the following bound,

where \(C_b\) is a positive constant and \(\bar{b}_k^2h_r(\bar{b}_k)\) stands for the rest of the terms. Based on Eq. (21), the bound has the equivalent form,

which changes the first term of Eq. (22) into

due to Eq. (19). Then, there is a positive constant \(C_2\) such that

\(\square \)

Appendix 3

1.1 Proof of Lemma 1

Proof

The calculation is based on the way of the proof of Theorem 3. Define the shift transformation \(\varPhi _4\) given by

This corresponds to focusing on the area \(W_1\cup W_2\). Following the calculation of Yamazaki et al. (2010), we obtain

Let the right-hand side be \(H_{X2}^{\text {alg}}(w)\), and consider a zeta function given by

By using a blow-up \(\varPhi _5\) defined by

we obtain the following expression,

where \(f_3\) is a function of the parameter \(v_2\). The prior has a factor \(v_1^{\eta _1-1}\). Therefore, \(\zeta _3(z)\) has poles at \(z=-3/4\) and \(z=-(1+\eta _1)/2\), which are calculated from the factors \(u_1\) and \(v_1\), respectively. Considering the cases \(u_1=0\) and \(v_1=0\), we find that the effective area of the pole \(z=-3/4\) is \(W_2\) and that of \(z=-(1+\eta _1)/2\) is \(W_1\). Due to the symmetry, the area \(W_2\cup W_3\) has the same poles. Then, the largest pole changes at \(\eta _1=1/2\), where the order of the pole is \(m_X=2\). This completes the proof. \(\square \)

1.2 Proof of Theorem 1

First, we introduce tighter upper bounds on \(\lambda _X\).

Lemma 6

Under the same condition as in Theorem 3, it holds that

Proof

Consider the area \(W_2\), which is the neighborhood of

Let us define the shift transformation \(\varPhi _5\) given by

Based on the Taylor expansion of \(f(x|\bar{b}_k+b^*_k)\), there is a positive constant \(C_3\) such that

Let the right-hand side be \(H_{X3}^{\text {alg}}(w)\), and consider a zeta function given by

By using a blow-up \(\varPhi _7\) defined by

we obtain the following expression:

where \(f_4\) is a function consisting of the parameters except for \(u_2\). Therefore, \(\zeta _4(z)\) has a pole at \(z=-(K^*-1+Kd_c)/2\), which shows that

Compared to the result of Lemma 5, we find that the bounds are tighter when \(\eta _1>d_c\), which proves the lemma. \(\square \)

Second, the following lemma shows the lower bound of \(\lambda _X\);

Lemma 7

Under the same condition as in Theorem 3, it holds that

Proof

We can immediately obtain the inequality based on the minimality condition of \(q(x)\) and \(d > K^*-1+K^* d_c\). \(\square \)

Last, using these lemmas, we prove Theorem 1. As shown in the proofs of Lemmas 5 and 6, \(\lambda _X\) is a linear function of \(\eta _1\) due to the factor \(a_k^{\eta _1-1}\) in the Dirichlet prior. The upper and lower bounds imply that, for \(\eta _1\) close to zero, there exists a constant \(\alpha \) such that

where \(\beta =(K^*-1+K^* d_c)/2\). Eliminated components appear in \(\alpha \eta _1\) since their mixing ratio parameters converge to zero in the effective area, and the prior factor \(a_k^{\eta _1-1}\) works on the calculation of the pole of \(\zeta _X(z)\). The phase in the upper bounds eliminates all redundant components, and the constant term \(\beta \) in the above expression is the same value as that of the bounds. This means that the redundant components are all eliminated in this phase. On the other hand, the upper bounds also indicate that \(\lambda _X\) must be a constant function for a sufficiently large \(\eta _1\). When there is no linear factor of \(\eta _1\) in \(\lambda _X\), all mixing ratio parameters converge to nonzero values; all components are used in this phase. Therefore, we have found the two phases, as desired. \(\square \)

Rights and permissions

About this article

Cite this article

Yamazaki, K. Asymptotic accuracy of Bayes estimation for latent variables with redundancy. Mach Learn 102, 1–28 (2016). https://doi.org/10.1007/s10994-015-5482-3

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10994-015-5482-3