Abstract

In this paper, we discuss a class of fractional optimal control problems in which the system dynamical constraint comprises a combination of classical and fractional derivatives. We derive the necessary optimality conditions and show that they are sufficient under certain assumptions. Additionally, we design an algorithm that obtains a numerical solution of the proposed problem using Laguerre polynomials. The key idea of the approach is to convert the fractional optimal control problem into an equivalent standard quadratic programming problem with linear equality constraints. Examples illustrate the computational technique together with its efficiency and accuracy. Graphical representations are provided to analyze the behavior of the state and control variables for different fractional orders.

1 Introduction

Fractional calculus has captured the attention of researchers during the last few decades due to its applicability in diverse fields of applied mathematics and engineering, such as continuum mechanics, fluid mechanics, signal processing, and bioengineering. This development motivates the search for applications and physical properties that integer-order operators (integral or differential) do not capture. Starting with the tautochrone problem and continuing with viscoelasticity, diffusion and wave propagation, and control theory, fractional calculus appears well suited to modeling because fractional operators take the past history of the process into account.

The classical optimal control problem is extended to the fractional optimal control problem (FOCP) by means of fractional calculus. The difference lies in the dynamical constraints, which contain fractional-order derivatives in place of classical derivatives. The existence theorem for FOCPs was first introduced by Bhatt in [1]. Recent work on FOCPs includes a Hamiltonian formulation [2], a central difference numerical scheme [3], a numerical scheme using modified Jacobi polynomials [4], and FOCPs with free terminal time [5].

In this paper, we present a class of FOCPs and investigate it for necessary and sufficient optimality conditions. In the literature, orthogonal polynomials such as Chebyshev polynomials [6], Jacobi polynomials [4, 7], and Legendre polynomials [8] have already been used to handle FOCPs. The representation of a function as a series expansion in orthogonal polynomials is a fundamental concept in approximation and optimization. Orthogonality reduces the complexity of the problem by turning it into a simpler system of algebraic equations. We adopt the Laguerre polynomials to approximate the state variable, the control variable, and thus the objective function. The problem is then transformed into an equivalent standard quadratic programming problem with linear equality constraints. A solution to the latter problem corresponds to a solution of the original FOCP.

2 Problem Statement

Consider a class of FOCPs, designed to find the optimal control u(t), that minimizes the given performance index J

subject to the system dynamic constraints

and the initial condition

where x(t) is the state variable known as the optimal trajectory.
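Read together with the constraint quoted in Theorem 4.2, the terminal conditions of Theorem 4.1, and the Riemann–Liouville setting noted in Sect. 4, problem (P) can be summarized as below. This is a sketch inferred from those later statements: the time interval [0, 1] and the argument order of F are assumptions.

$$\begin{aligned} \mathbf{(P)}\quad \mathrm{Minimize}\quad J(u)&=\int _0^1 F(x,u,t)\,\mathrm{d}t, \\ \mathrm{subject}\,\mathrm{to}\quad G_1\left( x'(t),\,{}_0D_t^{\alpha }x(t)\right)&=G_2(x,u,t), \\ x(0)&=x_0. \end{aligned}$$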

- The functions F and \(G_2\) are assumed to be continuously differentiable in all three arguments.

- To generalize a class of FOCPs already present in the literature (see, e.g., [9, 10]), the function \(G_1\) is taken to be a combination of classical and fractional-order derivatives.

- By taking \(G_1\equiv {}^c_0D^{\alpha }_t x(t)\), (P) reduces to the problem originally introduced by Agrawal [11]. Solution schemes for it have been further investigated by many researchers, such as Baleanu [2], Dehghan [4], and Lotfi [12].

Our aim is to obtain the necessary and sufficient conditions, together with a solution scheme for the proposed problem (P).

3 Preliminaries

In this section, we give some basic definitions of fractional-order derivatives [13,14,15] and Laguerre polynomials, which will be needed in the sequel.

Fractional-Order Derivatives

Let \(f\in C^n [a, b];n\in \mathbb {N} \), where \(C^n [a, b] \) is the space of n times continuously differentiable functions defined over [a, b].

Definition 3.1

For all \(t\in [a, b], n-1\le \alpha <n\), the left Riemann–Liouville fractional derivative of order \(\alpha \) is defined as

Definition 3.2

For all \(t\in [a, b], n-1\le \alpha <n\), the left Caputo fractional derivative of order \(\alpha \) is defined as

Definition 3.3

(Integration by parts) If f, g, and the fractional derivatives \(_aD_t^{\alpha }g, {}_t D_b^{\alpha }f\) are continuous at every point \(t \in [a, b] \), then
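In the standard notation of the fractional calculus literature (see, e.g., [13,14,15]), Definitions 3.1 to 3.3 are usually written as follows. The integration-by-parts identity is stated for \(0<\alpha <1\), which is an assumption made here to match the order used in the remainder of the paper.

$$\begin{aligned} {}_aD_t^{\alpha }f(t)&=\frac{1}{\Gamma (n-\alpha )}\left( \frac{\mathrm{d}}{\mathrm{d}t}\right) ^n\int _a^t (t-\tau )^{n-\alpha -1}f(\tau )\,\mathrm{d}\tau , \\ {}^c_aD_t^{\alpha }f(t)&=\frac{1}{\Gamma (n-\alpha )}\int _a^t (t-\tau )^{n-\alpha -1}f^{(n)}(\tau )\,\mathrm{d}\tau , \\ \int _a^b f(t)\,{}_aD_t^{\alpha }g(t)\,\mathrm{d}t&=\int _a^b g(t)\,{}_tD_b^{\alpha }f(t)\,\mathrm{d}t. \end{aligned}$$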

Laguerre Polynomials

The Laguerre polynomials \(L_n(x)\) (see [16] and references therein) are solutions of the second-order linear differential equation \(xy''+(1-x)y'+ny=0,\,n\in \mathbb {N}.\)

- The power series representation for Laguerre polynomials is given by

$$\begin{aligned} L_n(x)=\sum _{k=0}^n \, \frac{(-1)^k}{k!}\, {\large {{}^nc_k}}\, x^k, \quad \mathrm{where}\,\,{}^nc_k=\frac{n!}{k!(n-k)!}. \end{aligned}\tag{4}$$

- Orthogonal property in \([0,\infty [\) with \(e^{-x}\) as the weight function

$$\begin{aligned} \int _0^{\infty }L_n(x)L_m(x)\, e^{-x}\mathrm{d}x= \delta _{nm}=\left\{ \begin{array}{ll} 1 & \quad \mathrm{if}\,\, n=m, \\ 0 & \quad \mathrm{otherwise.} \end{array} \right. \end{aligned}\tag{5}$$

- In the interval ]0, 1[, the orthogonality relation can be viewed as

$$\begin{aligned} \int _0^{1}L_n\left( -\ln (x)\right) L_m\left( -\ln (x)\right) \, \mathrm{d}x= \delta _{nm}=\left\{ \begin{array}{ll} 1 & \quad \mathrm{if}\,\, n=m, \\ 0 & \quad \mathrm{otherwise.} \end{array} \right. \end{aligned}\tag{6}$$
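The series (4) and the orthogonality relation (5) can be checked exactly with rational arithmetic. The sketch below is illustrative only: the function names are hypothetical, and it relies on the standard moment identity \(\int _0^{\infty }x^k e^{-x}\mathrm{d}x=k!\) to integrate term by term.

```python
from fractions import Fraction
from math import comb, factorial


def laguerre_coeffs(n):
    """Coefficients of L_n in ascending powers of x, taken from Eq. (4)."""
    return [Fraction((-1) ** k * comb(n, k), factorial(k)) for k in range(n + 1)]


def weighted_inner(p, q):
    """Exact integral of p(x) q(x) exp(-x) over [0, inf).

    Uses the moment identity: integral of x**k * exp(-x) over [0, inf) = k!.
    """
    return sum(a * b * factorial(i + j)
               for i, a in enumerate(p)
               for j, b in enumerate(q))


def gram_matrix(max_degree):
    """Matrix of inner products <L_n, L_m> for n, m = 0..max_degree."""
    basis = [laguerre_coeffs(n) for n in range(max_degree + 1)]
    return [[weighted_inner(p, q) for q in basis] for p in basis]


if __name__ == "__main__":
    # Each printed row should be a row of the identity matrix.
    for row in gram_matrix(3):
        print([str(v) for v in row])
```

Because every quantity is a `Fraction`, the Gram matrix is compared with the identity without any floating-point tolerance.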

4 Necessary and Sufficient Conditions

In this section, we derive the necessary optimality conditions of the problem (P). Under appropriate assumptions, it is shown that the obtained necessary optimality conditions become sufficient.

Theorem 4.1

(Necessary conditions) If u is an optimal control of the problem \(\mathbf{(P)}\), then u satisfies the necessary conditions given by

with terminal conditions, \(x(0)=x_0\) and \(\lambda (1)=0.\)

Proof

To find the optimal control u(t), define the modified performance index

where \(\lambda \) is a Lagrange multiplier also known as the costate or adjoint variable. Taking the first variation of the modified performance index \(\bar{J}(u)\), we get

where \(\delta x,\delta u\) and \(\delta \lambda \) are the variations of x, u and \(\lambda \), respectively, with the specified terminal conditions. Using integration by parts, we obtain

provided \(\delta x (0) =0\) or \(\lambda (0) =0\), and \(\delta x(1)=0\) or \(\lambda (1)=0\). As x(0) is specified, we take \(\delta x (0) =0\). Since x(1) is not specified, we require \(\lambda (1)=0\). Thus,

To minimize \(\bar{J}(u)\), we need the first variation \(\delta \bar{J}(u)\) to be zero, i.e., the coefficients of \(\delta x,\delta u\) and \(\delta \lambda \) in Eq. (7) have to be zero. Thus,

Equations (8)–(11) represent the necessary optimality conditions for the posed problem (P). We remark that, once the costate variable \(\lambda \) is obtained by solving (8) and (9), the control variable u(t) can easily be obtained from (10). \(\square \)

Particular Cases

- For \(G_1={}_0D^{\alpha }_t x(t)\), the problem (P) reduces to the simplest FOCP, given by Agrawal in 1989 (see [11]).

- For \(G_1=Ax'(t)+B{}_0D^{\alpha }_t x(t)\,;\, A,B\in \mathbb {R}\), the necessary conditions reduce to

$$\begin{aligned} A\,x'(t)+B\,{}_0D^{\alpha }_t x(t)&= G_2(x,u,t),\\ B\,{}_tD^{\alpha }_1 \lambda -A\,\frac{d\lambda }{dt}&= \frac{\partial F}{\partial x}+\lambda \frac{\partial G_2}{\partial x},\quad \mathrm{and}\quad \frac{\partial F}{\partial u}+\lambda \frac{\partial G_2}{\partial u}=0, \end{aligned}$$

with terminal conditions (11). For solution schemes and sufficient optimality conditions of this problem, we refer the reader to [5, 17].

Note that \(G_1\) involves the left Riemann–Liouville fractional derivative. Similar results are obtained by taking Caputo’s fractional-order derivative, as shown in the next theorem.

Theorem 4.2

If u is an optimal control of performance index (1) subject to the system dynamical constraint \(G_1(x'(t),\, {}^c_0D_t^{\alpha }x(t))=G_2(x,u,t),\) and terminal conditions (3), then u satisfies the necessary conditions given by

with terminal conditions (11).

In the next theorem, we shall show that the necessary optimality conditions (Theorem 4.1) become sufficient under suitable conditions.

Theorem 4.3

(Sufficient conditions) Let \((x^*,u^*,\lambda ^*)\) be a triplet satisfying necessary conditions (8)–(10) of the fractional optimal control problem \((\mathbf{P})\). Moreover, assume that

- (i) F and \(G_2\) are convex in the arguments x and u.

- (ii) \(\lambda (t)\ge 0\) for all \(t\in [0,1]\), or \(G_2\) is linear in x and u.

Then \((x^*,u^*)\) is an optimal solution.

Proof

Since \((x^*,u^*,\lambda ^*)\) satisfies necessary conditions (8)–(10).

Referring to (8), we get

Let (x, u) be an admissible solution of the problem (P). For \((x^*,u^*)\) to be an optimal solution, it is left to show that \(J(x^*,u^*)\le J(x,u)\). According to Eqs. (13) and (14), we observe that

Using integration by parts, we arrive at

\(J(x,u)\ge J(x^*,u^*)\) implies that \((x^*,u^*)\) is an optimal solution of the problem (P), which concludes the proof. \(\square \)

5 Mathematical Formulation of Solution Scheme: Algorithm

We use the Laguerre polynomials to obtain a solution scheme for FOCPs and examine its effectiveness in practice. Expanding the unknown functions in these polynomials reduces the problem to a linear or nonlinear system of algebraic equations. The scheme starts by parameterizing the state variable with a polynomial of degree N; the control u(t) is then determined by a smaller number of parameters, chosen to minimize the performance index J.

Let \(Q\subset C[0,1]\) be the set of all functions satisfying initial condition (3), and let \(Q_N\subset Q\) be the class of all linear combinations of Laguerre polynomials of degree up to N.

Numerical Scheme

- State parameterization. Parameterize the state variable as a linear combination of Laguerre polynomials of degree up to N,

$$\begin{aligned} x(t)\approxeq x_N(t)=\sum _{k=0}^N a_k\,L_k(t),\quad N=1,2,3,\ldots \end{aligned}$$

where the \(L_k\) are Laguerre polynomials of degree k and the \(a_k\) are the unknown coefficients to be determined.

- Expression of the control variable. Dynamical constraint (2) allows the control variable to be rewritten as a function (say \(\phi \)) of time t, the parameterized state variable \(x_N(t)\), and the fractional derivative \({}^{c}_0D_t^{\alpha }x_N(t)\). For \(\alpha ,\beta \in ]0,1[,\)

$$\begin{aligned} u(t)\approxeq u_N(t)&= \phi \left( t,x_N(t),{}^{c}_0D_t^{\alpha }x_N(t)\right) ,\\&= \phi \left( t,\sum _{k=0}^N a_k L_k(t),\sum _{k=0}^{N}a_k\,{}^{c}_0D^{\alpha }_t L_k(t)\right) . \end{aligned}$$

- Approximation of performance index. Transform performance index (1) into a function of the \(N+1\) unknowns \(a_i\,(i=0,1,2,\ldots ,N)\),

$$\begin{aligned} \hat{J}[a_0,\ldots ,a_N]= \int _{t_0}^{t_1}F\left( t,\sum _{k=0}^N a_k L_k(t),\phi \left( t,\sum _{k=0}^N a_k L_k(t),\sum _{k=0}^{N}a_k{}^{c}_0D^{\alpha }_t L_k(t)\right) \right) dt, \end{aligned}\tag{15}$$

$$\begin{aligned} \mathrm{subject}\,\mathrm{to}\quad x_0\approxeq x_N(t_0)=\sum _{k=0}^N a_k\, L_k(t)\,{\large {|}_{t=t_0}}\,. \end{aligned}\tag{16}$$

- Transformation to standard programming problem. With a quadratic performance index, the problem (P) is converted into the minimization of a quadratic function (15) of the unknown parameters \(a_i\) subject to equality constraints (16), written below in the equivalent matrix form

$$\begin{aligned} {Minimize } \,\, \left( \frac{1}{2}\,a'Ha+G'a\right) ,\quad a\in \mathbb {R}^{N+1}, \end{aligned}\tag{17}$$

subject to the linear equality constraints

$$\begin{aligned} Ba=C, \end{aligned}\tag{18}$$

where \(a=[a_0\,\,a_1\ldots \,a_N]'\), \(B=[L_0(t_0)\,\,L_1(t_0)\ldots \,L_N(t_0)]\), and \(C=[x_0]\).

- Obtain the optimal value. The optimal value \(a^*\) of quadratic programming problem (17)–(18) is given by

$$\begin{aligned} a^*=-H^{-1}\,(G+B'\lambda ^*), \quad \mathrm{where} \quad \lambda ^*=-(BH^{-1}B')^{-1}(C+BH^{-1}G). \end{aligned}$$

- Expression for approximated state and control variable. Using \(a^*\), we write the expressions for \(x_N(t)\), \(u_N(t)\) and the optimal value of \(\hat{J}\) that approximates the original performance index J.

Proposed Algorithm

1. Parameterize the state variable x(t) by \(x_N(t)\), using Laguerre polynomials.

2. Approximate u(t) by \(u_N(t)\), as a function of \(x_N(t)\) and \({}^c_{0}D^{\alpha }_t x_N(t)\).

3. Obtain H, G: Approximate J by \(\hat{J}\), and write \(\hat{J}=\frac{1}{2}\,a'Ha+G'a\).

4. Obtain B, C: Write the linear equality constraints as \(Ba=C\).

5. Determine \(\lambda ^*\) and \(a^*\).

6. Deduce \(x_N(t)\), \(u_N(t)\) and find the optimal value of \(\hat{J}\) that approximates J.

We have used Mathematica for all numerical computations and plots. One can also use MATLAB to solve a quadratic programming problem with linear equality constraints by providing the matrices \(H,\,G,\,B,\,C\,\) as input and extracting the column matrix a as output.
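Step 5 of the algorithm can be sketched in a few lines. The code below is a minimal illustration, not the authors' Mathematica implementation: it evaluates the closed-form expressions for \(\lambda ^*\) and \(a^*\) given in Sect. 5, assuming H is symmetric positive definite and B has full row rank. The helper names `solve`, `dot` and `solve_equality_qp` are hypothetical, and exact rational arithmetic is used so that no external library is required.

```python
from fractions import Fraction


def solve(A, b):
    """Solve A z = b by Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        pivot = next(r for r in range(c, n) if M[r][c] != 0)
        M[c], M[pivot] = M[pivot], M[c]
        p = M[c][c]
        M[c] = [v / p for v in M[c]]
        for r in range(n):
            if r != c:
                factor = M[r][c]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[c])]
    return [row[-1] for row in M]


def dot(u, v):
    """Inner product of two equally long sequences."""
    return sum(x * y for x, y in zip(u, v))


def solve_equality_qp(H, G, B, C):
    """Minimize 1/2 a'Ha + G'a subject to Ba = C.

    Assumes H is symmetric positive definite and B has full row rank.
    Returns the minimizer a* and the multipliers lambda*.
    """
    n, m = len(H), len(B)
    hinv_g = solve(H, G)                     # H^{-1} G
    hinv_bt = [solve(H, row) for row in B]   # columns of H^{-1} B'
    schur = [[dot(B[i], hinv_bt[j]) for j in range(m)] for i in range(m)]  # B H^{-1} B'
    rhs = [-(C[i] + dot(B[i], hinv_g)) for i in range(m)]
    lam = solve(schur, rhs)                  # lambda* = -(B H^{-1} B')^{-1} (C + B H^{-1} G)
    a = [-(hinv_g[k] + sum(hinv_bt[j][k] * lam[j] for j in range(m)))
         for k in range(n)]                  # a* = -H^{-1} (G + B' lambda*)
    return a, lam


if __name__ == "__main__":
    # Toy problem: minimize a1^2 + a2^2 - 2 a1 - 5 a2 subject to a1 + a2 = 1.
    a, lam = solve_equality_qp([[2, 0], [0, 2]], [-2, -5], [[1, 1]], [1])
    print([str(v) for v in a], [str(v) for v in lam])
```

For a problem with a single initial condition at \(t_0=0\), B is the row of ones, since \(L_k(0)=1\) for every k, and C contains the prescribed initial state.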

6 Laguerre Orthogonal Approximation: Convergence Criterion

In this section, we compute the \(\alpha {\mathrm{th}}\)-order fractional derivative of Laguerre polynomials for \(\alpha \in ]0,1[\), followed by an approximation formula for the fractional derivative of the state variable. Afterward, we discuss the convergence analysis of the designed approximation.

Theorem 6.1

For \(\alpha \in ]0,1[\), the fractional derivative of Laguerre polynomials of order \(\alpha \) is given by

Proof

By Eq. (4), we have \(L_n(t)=\sum _{k=0}^n \, \frac{(-1)^k}{k!}\, {\large {{}^nc_k}}\, t^k\,\).

For Caputo’s derivative, we know that

We clearly observe that,

\(\square \)
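The computation behind Theorem 6.1 can be sketched numerically. The code below applies the standard Caputo power rule, \({}^c_0D_t^{\alpha }t^k=\frac{\Gamma (k+1)}{\Gamma (k+1-\alpha )}t^{k-\alpha }\) for \(k\ge 1\) and zero for \(k=0\), term by term to series (4). It is an illustration under that assumption, with hypothetical function names, and is not a transcription of Eq. (20).

```python
from math import comb, factorial, gamma


def caputo_power(k, alpha, t):
    """Caputo derivative of order alpha in (0, 1), lower limit 0, of t**k."""
    if k == 0:
        return 0.0  # the Caputo derivative of a constant vanishes
    return gamma(k + 1) / gamma(k + 1 - alpha) * t ** (k - alpha)


def caputo_laguerre(n, alpha, t):
    """Caputo derivative of the Laguerre polynomial L_n at t >= 0.

    Differentiates the power series of L_n term by term, using linearity.
    """
    return sum((-1) ** k * comb(n, k) / factorial(k) * caputo_power(k, alpha, t)
               for k in range(n + 1))


if __name__ == "__main__":
    # As alpha tends to 1 the values approach the classical derivative L_3'(t).
    for alpha in (0.5, 0.9, 0.99, 0.999999):
        print(alpha, caputo_laguerre(3, alpha, 0.5))
```

As \(\alpha \) approaches 1, the values tend to the classical derivative; for instance \(L_3'(t)=-3+3t-t^2/2\) gives \(-1.625\) at \(t=0.5\).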

Next, we parameterize the state variable x(t) as a linear combination of Laguerre polynomials of degree N with unknowns \(\{a_k\}_{k=0}^{N}\),

where \(L_k\)s are Laguerre polynomials of degree k. Using (21) and (6),

Remark 6.1

In (20), one may observe that \(t^{k-\alpha }\) can be expressed approximately in terms of Laguerre polynomials, which is given by

where \(c_{_{kj}}\) can be obtained from (22) with \(x_N(t)=t^{k-\alpha }\,\).

Theorem 6.2

For \(\alpha \in ]0,1[\), the approximation formula for fractional derivative of the state variable is given by

where \(L_k^{\alpha }(t)\) is given by Eq. (20). Or,

Proof

Using (21), we approximate \({}^c_0D_t^{\alpha }x(t)\) as

\(\square \)

We shall now explain the convergence analysis of the proposed algorithm, ensured by the Weierstrass approximation theorem.

Theorem 6.3

(Weierstrass approximation theorem [18]) Let \(f\in C([a, b], \mathbb {R})\). Then, there is a sequence of polynomials \(P_n(x)\) that converges uniformly to f(x) on [a, b].

Theorem 6.4

(see [6]) Let \(\xi _n = \mathrm{inf}_{Q_n}J,\, n\in \mathbb {N}\), where \(Q_n\) is the subset of Q consisting of all polynomials of degree at most n. Then, \(\mathrm{lim}_{n\rightarrow \infty }\xi _n=\xi \), where \(\xi = \mathrm{inf}_QJ\).

Theorem 6.5

Let J have continuous first-order derivatives and, for \(n\in \mathbb {N}\), let \(\beta _n=\mathrm{inf}_{Q_n}J\). Then, \(\mathrm{lim}_{n\rightarrow \infty }\beta _n=\beta \), where \(\beta =\mathrm{inf}_QJ\).

Proof

Let us define the sequence, \(\beta _n=\mathrm{min}_{a_n\in \mathbb {R}^{n+1}}J(a_n).\)

Since \(a_n^*\in \mathrm{Argmin}\{J(a_n);\,a_n\in \mathbb {R}^{n+1}\}\), it follows that \(\beta _n=J(a_n^*).\)

Again, let \(x_n^*\in \mathrm{Argmin}\{J(x(t));\,x(t)\in Q_n\}\)

in which \(Q_n\) is a class of combinations of Laguerre polynomials in t, of degree n, so \(\beta _n = J(x_n^*(t))\). Additionally \(Q_n\subset Q_{n+1}\), thus we have

which shows that \((\beta _n)\) is a nonincreasing sequence. Theorem 6.4 concludes the proof, that is, \(\mathrm{lim}_{n\rightarrow \infty }\beta _n = \mathrm{min}_{x(t)\in Q} J(x(t))\,.\) \(\square \)

Note. Theorem 6.5 has been proved for the cases in which \(Q_n\) is a class of combinations of Chebyshev polynomials or Boubaker polynomials (see, e.g., [6, 19]).
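The monotone behavior of \(\beta _n\) in Theorem 6.5 can be observed on a small test case. The sketch below assumes the classical benchmark (\(\alpha =1\)) of minimizing \(\frac{1}{2}\int _0^1 (x^2+u^2)\,\mathrm{d}t\) subject to \(x'=-x+u\), \(x(0)=1\). This choice is an assumption made here because its optimal cost is the well-known value 0.1929092978; the function names are hypothetical. The state is expanded in Laguerre polynomials, the control is eliminated through \(u=x'+x\), and the resulting quadratic program is solved exactly through its optimality (KKT) system.

```python
from fractions import Fraction
from math import comb, factorial


def laguerre(n):
    """Coefficients of L_n in ascending powers of t (power series of L_n)."""
    return [Fraction((-1) ** k * comb(n, k), factorial(k)) for k in range(n + 1)]


def derivative(p):
    """Coefficients of the classical derivative of the polynomial p."""
    return [k * c for k, c in enumerate(p)][1:] or [Fraction(0)]


def add(p, q):
    """Sum of two polynomials given by coefficient lists."""
    size = max(len(p), len(q))
    p = p + [Fraction(0)] * (size - len(p))
    q = q + [Fraction(0)] * (size - len(q))
    return [x + y for x, y in zip(p, q)]


def inner(p, q):
    """Exact integral of p(t) q(t) over [0, 1]."""
    return sum(x * y / (i + j + 1) for i, x in enumerate(p) for j, y in enumerate(q))


def solve(A, b):
    """Solve A z = b by Gauss-Jordan elimination in exact rational arithmetic."""
    n = len(A)
    M = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for c in range(n):
        pivot = next(r for r in range(c, n) if M[r][c] != 0)
        M[c], M[pivot] = M[pivot], M[c]
        p = M[c][c]
        M[c] = [v / p for v in M[c]]
        for r in range(n):
            if r != c:
                factor = M[r][c]
                M[r] = [vr - factor * vc for vr, vc in zip(M[r], M[c])]
    return [row[-1] for row in M]


def optimal_cost(N):
    """Coefficients a* and cost for the degree-N Laguerre parameterization."""
    basis = [laguerre(k) for k in range(N + 1)]
    ctrl = [add(derivative(p), p) for p in basis]   # u = x' + x, basis-wise
    H = [[inner(basis[i], basis[j]) + inner(ctrl[i], ctrl[j])
          for j in range(N + 1)] for i in range(N + 1)]
    B = [Fraction(1)] * (N + 1)                     # L_k(0) = 1, so x_N(0) = sum of a_k
    # Optimality system: H a + B' lambda = 0 and B a = 1.
    K = [H[i] + [B[i]] for i in range(N + 1)] + [B + [Fraction(0)]]
    rhs = [Fraction(0)] * (N + 1) + [Fraction(1)]
    a = solve(K, rhs)[: N + 1]
    J = sum(a[i] * H[i][j] * a[j] for i in range(N + 1) for j in range(N + 1)) / 2
    return a, J


if __name__ == "__main__":
    for N in range(1, 6):
        print(N, float(optimal_cost(N)[1]))
```

For N = 1 the cost is exactly 1/4, and the printed costs do not increase with N, because each class \(Q_N\) is contained in \(Q_{N+1}\).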

7 Computational Segment: Examples

In this section, we exhibit the applicability of the formulated numerical scheme by considering the time-varying and time-invariant FOCPs. The efficiency and accuracy of the strategy are observed by plotting the absolute error functions, state, and control variable as a function of time t.

Example 7.1

(see [3]) Find the optimal control u(t) that minimizes the time-invariant FOCP with quadratic performance index

subject to the system dynamical constraints

and the initial condition

As elaborated in the proposed algorithm (Sect. 5), we parameterize the state variable x(t) by Laguerre polynomials (\(L_k\)’s) of degree up to \(N=5\), i.e.,

where \(a_k\)s (\(k=0,1,...,5\)) are the unknown coefficients to be determined. Using initial condition (26), we get

We use system dynamical constraint (25) to approximate the control variable u(t) by \(u_5(t)\). Thus, \(u(t)\approxeq u_5(t)\) is considered as a function of \(x_5(t)\) and its \(\alpha {\mathrm{th}}\)-order Caputo derivative \({}^{c}_0D_t^{\alpha }\,x_5(t)\), that is,

In order to make the mechanism evident, we choose \(\alpha =0.99\).

Use \(x_5(t)\) and \(u_5(t)\) to approximate the performance index \(J^*\) in Eq. (24),

where

Finally, fractional optimal control problem (24)–(26) is converted into a quadratic programming problem described below:

subject to \(Ba=C\), where \(a=[a_0\,a_1\,a_2\,a_3\,a_4\,a_5]', B=[1\, 1\,1\,1\,1\,1]\), and \(C=[1]\).

Solving the equivalent quadratic programming problem with linear equality constraints, we obtain \(\mathbf{a}=[1.59173\,\,-3.9641\,\,4.19967\,\,1.9204\,\,-4.98458\,\,2.23688]'.\) Finally, we obtain the approximated state \(x_5(t)\) and optimal control \(u_5(t)\) as functions of time t.

and the approximate minimum value of \(J^*=1.19155077\).

For \(\alpha =1\), the classical case is widely investigated and the analytic solution for this system is given as (see [7] and references therein)

where \(\beta =\frac{2\sqrt{2}-3}{-e^{\sqrt{2}}+2\sqrt{2}-3}\). The exact solution for the performance index in this case is \(J=0.1929092978\). Using \(5{\mathrm{th}}\)-order Laguerre approximation, we obtain the approximate solution for \(\alpha =1\) as \(J=0.1929115932\). The approximated state \(x_5^*(t)\) and control variable \(u_5^*(t)\) of order 5 (\(\alpha =1\)) are given as

Example 7.2

(see [5]) Find the optimal control u(t) that minimizes the time-varying (FOCP) with quadratic performance index

subject to the system dynamic constraints

and the boundary conditions

The analytical solution for this problem is

Following the same way as in Example 7.1, we parameterize the state variable \(x(t)\,\left( \approxeq x_5(t)\right) \) by Laguerre polynomials (see Eq. (27)) with the initial condition given in (34). The approximated control variable \(u(t)\,\left( \approxeq u_5(t)\right) \) can be determined by Eq. (33), that is,

Choose \(\alpha =0.6\), and substitute the approximated state and control variable into the performance index M. Problem (32)–(34) is now transformed into a quadratic programming problem described below

where \(a=[a_0\,\,a_1\,\,a_2\,\,a_3\,\,a_4\,\,a_5]'\), \(B=\left[ \begin{array}{cccccc} 1 & 1 & 1 & 1 & 1 & 1 \\ 1 & 0 & -\frac{1}{2} & -\frac{2}{3} & -\frac{5}{8} & -\frac{7}{15} \\ \end{array} \right] \), \(C=[0\,\,\, 0.538065]'\), \(G'=[1.3\,\,\,1.07743\,\,\,0.703284\,\,\,0.287267\,\,\,-0.0989874\,\,\,-0.412705]\), \(k=0.142857\), and

On simplifying the above quadratic programming problem, we get

Using a, the approximated state variable \(x_5(t)\) and control variable \(u_5(t)\) can now be obtained as a function of time t. The approximate value of performance index is \(M=0.000035032\), for \(\alpha =0.6\).

Absolute error function of state and control variable for \(N=5\) (Example 7.1)

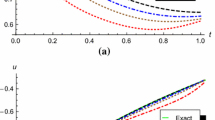

\(x_5(t)\) and \(u_5(t)\) as a function of time t (Example 7.1)

State and control variable, for \(\alpha =0.9, 0.99,1.0\) (Example 7.1)

Absolute error function of state and control variable for \(N=5\) (Example 7.2)

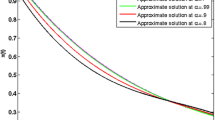

\(x_5(t)\) and \(u_5(t)\) as a function of time t (Example 7.2)

State and control variable (exact and approximated), for \(\alpha =1\) (Example 7.2)

Discussion on Graphical Representation

We report the approximate value of the optimal performance index \(J^*,\,M\) for different values of \(\alpha \in ]0,1[\) and \(N=5\) (Table 1). The absolute errors of the state and control variables (i.e., \(|x(t)-x_5^*(t)|\) and \(|u(t)-u_5^*(t)|\)) are shown in Fig. 1 (Example 7.1) and Fig. 4 (Example 7.2). To examine the behavior of the parameterized state \(x_5(t)\) and control variable \(u_5(t)\) for different values of \(\alpha \), we plot them as functions of time t. The curves come closer together as \(\alpha \) approaches 1 and coincide with the exact solution for \(\alpha =1\). In Fig. 2, the dotted line corresponds to the exact solution, whereas the pink and orange curves correspond to \(\alpha =1\) and \(\alpha =0.99\), respectively. Fig. 3 (Example 7.1) and Figs. 5 and 6 (Example 7.2) show the exact and approximate plots of the state and control variables together.

8 Conclusions

We have investigated a class of FOCPs for necessary and sufficient optimality conditions along with a solution scheme. The presented computational technique is advantageous because the original FOCP is easily converted into a quadratic programming problem. We obtain the state and control variables as continuous functions of time, together with the minimum value of the performance index. The technique is applied systematically to both time-invariant and time-varying FOCPs. Error analysis for other orthogonal polynomials will be the subject of future research.

References

1. Bhatt, S.K.: An existence theorem for a fractional control problem. J. Optim. Theory Appl. 11, 379–385 (1973)

2. Agrawal, O.P., Baleanu, D.: A Hamiltonian formulation and a direct numerical scheme for fractional optimal control problems. J. Vib. Control 13, 1269–1281 (2007)

3. Baleanu, D., Defterli, O., Agrawal, O.P.: A central difference numerical scheme for fractional optimal control problems. J. Vib. Control 15, 583–597 (2009)

4. Dehghan, M., Hamedi, E.A., Khosravian-Arab, H.: A numerical scheme for the solution of a class of fractional variational and optimal control problems using the modified Jacobi polynomials. J. Vib. Control 22, 1547–1559 (2016)

5. Pooseh, S., Almeida, R., Torres, D.F.M.: Fractional order optimal control problems with free terminal time. J. Ind. Manag. Optim. 10, 363–381 (2014)

6. Kafash, B., Delavarkhalafi, A., Karbassi, S.M.: Application of Chebyshev polynomials to derive efficient algorithms for the solution of optimal control problems. Sci. Iran. 19, 795–805 (2012)

7. Doha, E.H., Bhrawy, A.H., Baleanu, D., Ezz-Eldien, S.S., Hafez, R.M.: An efficient numerical scheme based on the shifted orthonormal Jacobi polynomials for solving fractional optimal control problems. Adv. Differ. Equ. 12, 1–17 (2015)

8. Ezz-Eldien, S.S., Doha, E.H., Baleanu, D., Bhrawy, A.H.: A numerical approach based on Legendre orthonormal polynomials for numerical solutions of fractional optimal control problems. J. Vib. Control 20, 16–30 (2015)

9. Agrawal, O.P.: Formulation of Euler–Lagrange equations for fractional variational problems. J. Math. Anal. Appl. 272, 368–379 (2002)

10. Agrawal, O.P.: A general formulation and solution scheme for fractional optimal control problems. Nonlinear Dyn. 38, 323–337 (2004)

11. Agrawal, O.P.: General formulation for the numerical solution of optimal control problems. Int. J. Control 50, 627–638 (1989)

12. Lotfi, A., Dehghan, M., Yousefi, S.A.: A numerical technique for solving fractional optimal control problems. Comput. Math. Appl. 62, 1055–1067 (2011)

13. Kilbas, A.A., Srivastava, H.M., Trujillo, J.J.: Theory and Applications of Fractional Differential Equations. North-Holland Mathematics Studies, Amsterdam (2006)

14. Miller, K.S., Ross, B.: An Introduction to the Fractional Calculus and Fractional Differential Equations. Wiley, New York (1993)

15. Oldham, K.B., Spanier, J.: The Fractional Calculus: Theory and Applications of Differentiation and Integration to Arbitrary Order. Academic Press, New York (1974)

16. Aizenshtadt, V.S., Krylov, V.I., Metel’skii, A.S.: Tables of Laguerre Polynomials and Functions. Mathematical Tables Series. Pergamon Press, Oxford-New York (1966)

17. Almeida, R., Pooseh, S., Torres, D.F.M.: Fractional variational problems depending on indefinite integrals. Nonlinear Anal. 75, 1009–1025 (2012)

18. Rudin, W.: Principles of Mathematical Analysis. McGraw-Hill, New York (1976)

19. Kafash, B., Delavarkhalafi, A., Karbassi, S.M., Boubaker, K.: A numerical approach for solving optimal control problems using the Boubaker polynomials expansion scheme. J. Interpolat. Approx. Sci. Comput. 3, 1–18 (2014)

20. Bryson Jr., A.E., Ho, Y.C.: Applied Optimal Control. Optimization, Estimation, and Control. Hemisphere Publishing Corp., Washington (1975)

Acknowledgements

The authors are grateful to the anonymous reviewers for their valuable comments and suggestions, which improved the presentation of the paper.

Appendix: Singular Fractional Optimal Control Problem

We wish to address some control problems where the performance index is either linear or independent of the control function u. For example, consider the FOCP to find an optimal control u(t) that minimizes the performance index

subject to the system dynamic constraints

and the initial condition \(x(0)=1\). One can clearly observe that:

- The integrand of J is independent of the control function u.

- The necessary optimality conditions (Theorem 4.1) reduce to \({}_0D_t^{\alpha }x=u\), \({}_tD_1^{\alpha }\lambda =x(t)\) and \(\lambda (t)=0\). The condition \(\lambda (t)=0\) provides no information from which the control function can be determined, and hence the control problem is termed singular.

If \(\alpha =1\), the problem stated above corresponds to the classical singular optimal control problem (see [20] and references therein). Such problems require a separate investigation.

Cite this article

Singha, N., Nahak, C. An Efficient Approximation Technique for Solving a Class of Fractional Optimal Control Problems. J Optim Theory Appl 174, 785–802 (2017). https://doi.org/10.1007/s10957-017-1143-y

DOI: https://doi.org/10.1007/s10957-017-1143-y