Abstract

The aim of the current study was to investigate the influence of happy and sad mood on facial muscular reactions to emotional facial expressions. Following film clips intended to induce happy and sad mood states, participants observed faces with happy, sad, angry, and neutral expressions while their facial muscular reactions were recorded electromyographically. Results revealed that after watching the happy clip participants showed congruent facial reactions to all emotional expressions, whereas watching the sad clip led to a general reduction of facial muscular reactions. Results are discussed with respect to the information processing style underlying the lack of mimicry in a sad mood state and with respect to the consequences for social interactions and for embodiment theories.



Introduction

Looking at a vis-à-vis’ facial expression leads to congruent muscular reactions in the observer’s face, a phenomenon called facial mimicry. This behavior occurs quickly (i.e., within 1,000 ms of picture onset) and even in minimal social contexts, for example when participants passively view pictures of strangers with whom they will never interact (e.g., Dimberg 1982) or pictures of computer generated faces (Likowski et al. 2008), or when emotional expressions are presented only subliminally (Dimberg et al. 2000). Thus, facial mimicry is assumed to be an automatic and unconscious reaction (Dimberg et al. 2002).

However, recent research has identified several moderators of facial mimicry, such as attitudes (Likowski et al. 2008), similarity (Gump and Kulik 1997), shared versus opposing goals (McHugo et al. 1991), consciously and unconsciously evoked interaction goals (Lanzetta and Englis 1989; Weyers et al. 2009), or group identity (Bourgeois and Hess 2008; Herrera et al. 1998; Mondillon et al. 2007). These findings fit well with the proposed function of mimicry as a social glue that facilitates the creation and maintenance of relationships and smooths interactions (Chartrand and Bargh 1999; Chartrand et al. 2005; Hess 2001; Lakin and Chartrand 2003; Lakin et al. 2003): The motivation for, and benefit from, creating social relationships and having smooth interactions tend to be higher when dealing with liked individuals, in-group members, and persons with the same goal than when dealing with disliked individuals, out-group members, and persons with opposing goals.

One can easily imagine that one’s current emotional state also influences facial reactions to the emotional facial expressions of interaction partners. Moody et al. (2007) measured facial muscular reactions to neutral, angry, and fearful facial expressions after participants had watched film clips intended to induce a fearful or a neutral state. They showed that the fear condition led to fear expressions in response to angry and fearful but not to neutral faces. Specifically, they found elevated activity over the Frontalis and the Corrugator muscles.

The question remains how positive and negative mood states other than fear affect facial mimicry. Imagine being in a sad mood while your interaction partner shows a happy face. Will you respond with a congruent happy facial expression? From the motivational account just outlined, reciprocating that smile would give you a chance to have a pleasant interaction, thereby repairing your mood. The study by Moody et al. (2007) would likewise suggest pronounced facial reactions. However, the valence of a state alone does not suffice to predict its effect on facial mimicry. Two lines of research indicate that you might not be able to reciprocate a smile in a sad mood.

First, several studies have shown that sadness increases self-focused attention (e.g., Green and Sedikides 1999; Salovey 1992; Silvia et al. 2006; Sedikides 1992; Wood et al. 1990), probably in order to identify the source and meaning of one’s state or to deal with it (Wood et al. 1990). Thus, the capacity to attend to and perceive external stimuli, including social ones, might be reduced. This does not necessarily mean that one does not want to affiliate with the interaction partner at all, as in the case of negative attitudes or ingroup–outgroup relationships, but rather that one simply lacks the current capacity to show affiliative tendencies because of self-focused attention or mood regulation processes. Conversely, individuals in a happy mood should show facial mimicry early after stimulus onset because their attention is more outwardly directed (e.g., Wood et al. 1990).

Second, according to a very different account, the affect-as-information theory, mood serves as a signal conveying information about the friendliness or dangerousness of our environment (cf. Schwarz and Clore 1996). A negative mood is assumed to signal that something might be wrong and that one has to be careful, which results in a more analytical, effortful, and cautious information processing style, and hence in more cautious behavior and fewer automatic processes. Individuals in a happy mood, on the other hand, are expected to execute rather than inhibit automatically evoked tendencies. This prediction rests on the assumption that a positive mood signals that the environment poses no threat, leading to a more heuristic, less analytic, and less effortful information processing style, and hence to more automatic processes (cf. Schwarz and Clore 1996). If mimicry is an automatic behavior, it should therefore occur more readily in a positive than in a negative mood (i.e., there should be less mimicry in a negative mood). This prediction is in line with findings by van Baaren et al. (2006), who investigated the effects of a happy and a sad mood on non-facial mimicry, specifically on the mimicry of pen-playing. The authors found that individuals in a negative mood state hardly mimicked pen-playing, while individuals in a positive mood state did.

Both theoretical accounts, attention focus theories and the affect-as-information theory, lead to the same predictions about facial reactions in happy and sad moods. Therefore, the purpose of the current study was not to differentiate between these mechanisms but to test the derived predictions about the modulation of facial mimicry by specific mood states. Accordingly, we predicted a general lack of facial mimicry in individuals in a sad state. This lack, however, does not indicate a lack of motivation to affiliate, as in the studies cited above, but rather a current lack of capacity to reciprocate the facial expressions of others because of self-focused attention or analytical information processing.

We predicted facial mimicry of happy and sad expressions in a happy but not in a sad mood. Specifically, for the happy group we expected an activation of the Zygomaticus major and a deactivation of the Corrugator supercilii to happy faces, and an activation of the Corrugator supercilii to sad faces. This pattern has been reported several times in the literature (cf. Dimberg 1982; Lundqvist 1995). Conversely, individuals in a sad state should show a general lack of facial mimicry. Furthermore, given the finding by Moody et al. (2007) that emotional states influence even rapid facial reactions, we expected differences between the two mood conditions even for the very early facial reactions, i.e., in the second half of the first second after stimulus onset. In addition, we presented angry facial expressions in order to test whether individuals in a sad mood respond with a fearful expression (Frontalis activation), as has been shown for a fearful mood (Moody et al. 2007). We expected that they would not, however, because sad states direct individuals’ attention not to potential danger cues in the environment but inwards to the self. Finally, we had no clear predictions regarding facial mimicry of angry faces in the happy group. On the one hand, we expected the happy group to be attentive to external stimuli and to have a tendency to show enhanced automatic reactions, including facial mimicry. On the other hand, several studies failed to find anger mimicry, and indeed one can argue that it is not functional for smoothing interactions (see Bourgeois and Hess 2008). Accordingly, finding mimicry in the happy group even for anger would be strong evidence for the tendency to show automatic reactions in a happy mood.

Method

In order to test these assumptions we showed two film clips, one to induce a happy mood state and one to induce a sad mood state. Immediately afterwards we presented happy, sad, angry, and neutral facial expressions and measured participants’ muscular activity over M. Zygomaticus major, M. Corrugator supercilii, and M. Frontalis medialis regions.

To date, the Moody et al. (2007) experiment is the only one investigating the effects of an emotional state on facial mimicry. We therefore kept our procedures fairly similar to those used by Moody et al. so that the results could be compared more easily. This is particularly important for the choice of the interval for data analysis. Thus, we tested our predictions for the same interval as Moody et al. did, i.e., 500–1,000 ms after stimulus onset.

Design and Participants

The experiment is based on a 2 (group: happy vs. sad) × 4 (expression: happy vs. sad vs. angry vs. neutral) × 3 (muscle: M. Zygomaticus major vs. M. Corrugator supercilii vs. M. Frontalis medialis) factorial design with group as a between-subjects factor and expression and muscle as within-subjects factors.

Participants were 60 healthy female students from the University of Wuerzburg. They were recruited by local internet announcements and received 7€ for participation. Recruitment was limited to women because of earlier findings (Dimberg and Lundqvist 1990) indicating that females show more pronounced, but not qualitatively different, mimicry effects than males. Seven participants had to be excluded because of either technical problems or too many EMG artifacts (more than 30% of the trials). Thus, all statistical analyses are based on 53 participants (mean age 23.64 years; SD = 2.85; range 19–35 years), with 27 participants in the happy and 26 participants in the sad condition.

Stimulus Materials and Apparatus

Emotional Facial Expressions

Computer generated artificial faces were used as stimuli. The advantage of employing computer generated faces instead of pictures of real individuals is that they can be modeled completely symmetrically into different emotional facial displays and that they allow full control over the expression’s intensity (cf. Krumhuber and Kappas 2005). In addition, they offer the possibility to use the same prototypical faces for all types of expressions. Spencer-Smith et al. (2001) found that quality and intensity ratings of emotional expressions generated with Poser are comparable to those of human expressions from the Pictures of Facial Affect (Ekman and Friesen 1976). Moreover, there is empirical evidence for the ecological validity of such faces because they have been shown to elicit facial mimicry (Likowski et al. 2008; Weyers et al. 2009), as would be expected from human faces. Thus, computer generated artificial emotional facial expressions provide a useful alternative tool for research on emotional facial expressions.

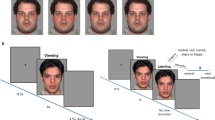

The artificial faces were created with Poser 4 (Curious Labs, Santa Cruz, CA) and the software extension by Spencer-Smith et al. (2001), the latter allowing manipulation of action units according to the Facial Action Coding System (Ekman and Friesen 1978). Two prototypical faces (male and female) were equipped with three different hair colors (blond, brown, and black). Each of these 6 faces was modeled into a happy, sad, angry, and neutral expression (for examples see Fig. 1). The happy faces were doubled to reach a balance of positive (12 happy faces) and negative stimuli (6 sad faces and 6 angry faces) resulting in altogether 30 stimuli (including 6 neutral ones). The faces were presented with a size of 19 cm × 25 cm on a computer screen 1 m in front of the participants.
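The composition of the stimulus set can be made explicit with a short Python sketch. It is an illustration only: the tuples stand in for the actual Poser renderings, and the function and constant names are hypothetical. It shows how 2 faces × 3 hair colors × 4 expressions, with the happy items entered twice, yield 30 stimuli (12 happy, 6 sad, 6 angry, 6 neutral) built from 24 distinct images.

```python
from itertools import product

FACES = ("male", "female")                 # two prototypical faces
HAIR_COLORS = ("blond", "brown", "black")  # three hair colors per face
EXPRESSIONS = ("happy", "sad", "angry", "neutral")

def build_stimulus_set():
    """Return the 30 stimuli as (face, hair color, expression) tuples.

    Each of the 6 face/hair combinations is rendered with all four
    expressions; happy items are entered twice so that 12 positive items
    balance the 6 sad + 6 angry negative items.
    """
    stimuli = []
    for face, hair, expression in product(FACES, HAIR_COLORS, EXPRESSIONS):
        copies = 2 if expression == "happy" else 1
        stimuli.extend([(face, hair, expression)] * copies)
    return stimuli

stimuli = build_stimulus_set()
print(len(stimuli))  # 30
```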

Mood Manipulation

Two film clips with sound were chosen to induce a happy and a sad mood state. Both clips had previously been shown to be highly effective in inducing a happy and a sad mood, respectively (Hewig et al. 2005). Participants in the happy condition watched an amusing segment from the movie When Harry Met Sally, whereas participants in the sad condition watched a sadness-inducing segment from the movie The Champ. Both clips lasted about 3 min and were presented on the same monitor, placed 1 m in front of the participants, that was later used to present the avatar faces. Most participants were familiar with the scene from When Harry Met Sally, whereas the scene from The Champ was rather unknown.

In a pilot study both film clips were presented to Psychology students in group settings, and the students had to fill in an adjective check list immediately afterwards. T-tests for independent groups revealed several significant group differences in the expected direction. Students who had watched the When Harry Met Sally clip (n = 39) described themselves as more joyful, cheerful, sociable, active, and full of the joy of living, while students who had watched the clip from The Champ (n = 36) described themselves as sadder, more distempered, and more afraid of people, all ts > 2.7, all ps < 0.01.

Procedure

Participants were tested individually in a laboratory room. After arrival they were handed a sheet explaining the procedure of the study, and after giving written consent they were told that they would participate in two short, independent experiments. To conceal the recording of facial muscle activity, participants were led to believe that skin conductance was being measured (see Dimberg et al. 2000), first in response to an emotional film clip and afterwards in response to computer generated faces. The experimenter attached the EMG electrodes and equipped the participants with headphones. Next, participants filled in the Positive and Negative Affect Schedule (PANAS; Watson et al. 1988; German version by Krohne et al. 1996) as a baseline measure of current mood and were randomly assigned to watch one of the two film clips. To ensure that participants paid attention to the clip, they were informed that they would be asked about it later. We decided not to assess subjective mood directly after the movie because we did not want to draw participants’ attention to their mood. Our aim was to study the effect of mood per se, not of the conscious labeling, categorizing, and handling of one’s mood that a question might evoke.

On completion of the mood induction procedure, participants were introduced to the second part. They were told that this part concerned the evaluation of avatars with respect to their suitability for the internet. In addition, it was explained that avatars are artificial persons or graphical substitutes of a human in virtual reality, for example in a computer game. They were then instructed to carefully watch the following faces, each of which was preceded by a warning tone and a centrally located fixation cross 3 s before picture onset. Next, 30 stimuli (12 happy, 6 sad, 6 angry, and 6 neutral expressions) were presented for 6 s each in randomized order, with an overall presentation time of about 11 min. During inter-trial intervals (varying from 14 to 16 s), participants saw a white computer screen. Subsequently, participants completed a second PANAS to check for mood manipulation effects, before they answered several questions about the previously presented film clip to maintain the cover story. Finally, the participants filled in the Saarbrücker Persönlichkeits-Fragebogen (SPF; Paulus 2000) to measure empathy, the electrodes were detached, and participants were thanked and received 7€ for taking part in the study.
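A trial sequence with these timing parameters could be generated as in the following Python sketch. It is hypothetical and not the presentation software used in the study: it assumes that the inter-trial interval is drawn uniformly between 14 and 16 s, that it precedes each picture, and that the 3 s warning period falls inside it. Under these assumptions a session lasts 30 × (6 s + 14 to 16 s), i.e., 10 to 11 min, which is compatible with the reported overall presentation time of about 11 min.

```python
import random

STIM_S = 6.0                # each face is shown for 6 s
ITI_RANGE_S = (14.0, 16.0)  # inter-trial interval varies between 14 and 16 s
WARNING_LEAD_S = 3.0        # tone and fixation cross 3 s before picture onset

def build_schedule(stimuli, seed=None):
    """Randomize stimulus order and assign onset times in seconds.

    Assumptions: the ITI is drawn uniformly from ITI_RANGE_S and precedes
    each picture, and the 3-s warning period lies inside that interval.
    """
    rng = random.Random(seed)
    order = list(stimuli)
    rng.shuffle(order)
    schedule, clock = [], 0.0
    for stimulus in order:
        picture_onset = clock + rng.uniform(*ITI_RANGE_S)
        schedule.append({
            "stimulus": stimulus,
            "warning_onset": picture_onset - WARNING_LEAD_S,
            "picture_onset": picture_onset,
            "picture_offset": picture_onset + STIM_S,
        })
        clock = picture_onset + STIM_S
    return schedule

schedule = build_schedule(range(30), seed=1)
print(round(schedule[-1]["picture_offset"] / 60, 1), "min")
```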

Dependent Measures

Facial EMG

Facial muscular responses were assessed electromyographically on the left side of the face. To measure the activity of M. Zygomaticus major (elevates the lips during a smile), M. Corrugator supercilii (knits the eyebrows during a frown), and M. Frontalis medialis (wrinkles the forehead during a frown), two 13/7 mm Ag/AgCl miniature surface electrodes for each muscle were attached to the corresponding muscle sites in line with the guidelines by Fridlund and Cacioppo (1986) with a forehead electrode as common reference. The ground electrode was applied behind the left ear (left mastoid). Impedance for all electrodes was kept below 10 kΩ.

The raw EMG signal was recorded with a digital amplifier (V-Amp 16, Brain Products Inc., Munich, Germany), digitized by a 16-bit analogue-to-digital converter, and stored on a personal computer at a sampling frequency of 1,000 Hz. Before further processing, the difference between the two electrodes over each muscle site was computed. The stored raw EMG signals were filtered offline (30 Hz low cutoff, 500 Hz high cutoff, 50 Hz notch), rectified, and smoothed with a 125 ms moving average. For statistical analysis, EMG data were collapsed over the 6 stimulus faces showing the same emotional facial expression; for the happy expression, 6 of the 12 presented faces were chosen randomly for analysis. Reactions were then averaged separately over the 500–1,000 ms interval after stimulus onset (cf. Moody et al. 2007) and over the 6 s of stimulus exposure, and expressed as mean change scores from baseline. The baseline corresponded to the average muscular activity during the 1 s before each stimulus onset and was set to zero. Artifacts in the baseline, defined as fluctuations of more than ±8 μV, and artifacts during picture presentation, defined as fluctuations of more than ±30 μV, were excluded from data analyses (less than 5%).
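The processing chain just described can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the analysis code used in the study. The function names are hypothetical, the offline high-pass and notch filtering is assumed to have been done beforehand, the 125 ms moving average is assumed to be centred on each sample, and the artifact criterion is read as a deviation from the baseline mean, which is only one possible interpretation of "fluctuations".

```python
import numpy as np

FS = 1000  # sampling frequency in Hz, as reported

def bipolar(site_a, site_b):
    """Difference between the two electrodes placed over the same muscle site."""
    return np.asarray(site_a, dtype=float) - np.asarray(site_b, dtype=float)

def rectify_and_smooth(filtered, window_ms=125, fs=FS):
    """Full-wave rectification followed by a centred moving average.

    Expects a signal that has already been high-pass (30 Hz) and notch (50 Hz)
    filtered; filtering itself is not implemented here. At fs = 1,000 Hz the
    Nyquist frequency is 500 Hz.
    """
    n = int(round(window_ms * fs / 1000))  # 125 samples at 1,000 Hz
    kernel = np.ones(n) / n
    return np.convolve(np.abs(filtered), kernel, mode="same")

def score_trial(smoothed, onset, fs=FS, stim_s=6,
                windows_ms=((500, 1000), (0, 6000)),
                base_limit=8.0, stim_limit=30.0):
    """Baseline-corrected window means (in µV) for one trial, or None if rejected.

    Baseline = mean of the 1 s before picture onset. Assumed artifact rule:
    any baseline sample deviating from the baseline mean by more than
    base_limit, or any stimulus-period sample deviating from it by more
    than stim_limit, rejects the trial.
    """
    x = np.asarray(smoothed, dtype=float)
    base = x[onset - fs:onset]
    base_mean = base.mean()
    if np.any(np.abs(base - base_mean) > base_limit):
        return None
    stim = x[onset:onset + stim_s * fs]
    if np.any(np.abs(stim - base_mean) > stim_limit):
        return None
    scores = {}
    for start_ms, end_ms in windows_ms:
        seg = x[onset + start_ms * fs // 1000:onset + end_ms * fs // 1000]
        scores[(start_ms, end_ms)] = float(seg.mean() - base_mean)  # change score
    return scores
```

Scores from `score_trial` would then be averaged per expression and participant, with a random subset of 6 of the 12 happy trials, to obtain the change scores entering the analyses.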

Baseline Muscular Activity

The baseline (see above) was not only used to correct the measurements during stimulus presentation but was also compared between the groups to determine whether the induced emotional state was reflected in participants’ baseline muscular activity. If so, Zygomaticus activity should be higher in the happy group, and Frontalis (see Footnote 1) and Corrugator activity should be higher in the sad group.

Mood Ratings

Participants judged their current mood state with the Positive and Negative Affect Schedule (PANAS; Watson et al. 1988; German version by Krohne et al. 1996) both before mood induction and following presentation of the facial expressions.

Avatar Ratings

To check whether explicit emotional reactions to the emotional expressions show a pattern similar to that of the implicit (muscular) reactions, we also assessed participants’ ratings of the avatars. Participants rated eight facial stimuli (the blond female and the blond male avatar, each with a happy, sad, angry, and neutral expression) for valence, arousal, and liking on 9-point Likert scales. Each stimulus was presented for 3 s and was followed by the three rating scales, with the corresponding question above each.

Individual Difference Measures

There is empirical evidence that highly empathic individuals show stronger facial reactions than less empathic individuals (Sonnby-Borgström et al. 2003). Thus, the SPF (Saarbrücker Persönlichkeitsfragebogen; Paulus 2000), a German version of the Interpersonal Reactivity Index (IRI; Davis 1980), was used to check for empathy differences between the two experimental groups. The SPF has reliability and validity coefficients comparable to those of the IRI, e.g., Cronbach’s α = 0.78 (see Paulus 2009).

Results

We first tested for pre-existing group differences in the empathy score that might have occurred despite random assignment. Then, we checked for evidence of facial mimicry in each group separately by conducting tests against zero (i.e., against the baseline) for the rapid facial reactions (500–1,000 ms) in the relevant cells. Afterwards, we conducted an ANOVA and follow-up tests to compare these reactions between the groups. We then conducted equivalent analyses for the whole stimulus presentation time (0–6,000 ms) in order to allow comparison with other data sets that used longer time windows (e.g., Bourgeois and Hess 2008; Likowski et al. 2008; Lundqvist 1995). Next, we compared the muscular activity of the two groups during the no-stimulus baseline intervals in order to detect facial signs of their respective emotional states. Last, we compared the mood ratings and the explicit ratings of the avatars between the groups.

Individual Difference Measures

A comparison of the Empathy scores by t-test showed a marginal group difference, t(51) = 1.879, p = 0.066, d = 0.516, with higher scores in the happy (M = 45.89, SEM = 1.024) as compared to the sad group (M = 42.96, SEM = 1.179).
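The group comparison can be checked from the reported summary statistics. The Python sketch below (function name hypothetical) converts each SEM to an SD via SD = SEM·√n, pools the variances, and returns Student's t and Cohen's d, assuming a pooled-variance independent-samples test. With the empathy means and SEMs above it gives approximately t(51) ≈ 1.88 and d ≈ 0.52, which matches the reported values up to rounding of the summary statistics.

```python
import math

def t_and_d_from_summary(m1, sem1, n1, m2, sem2, n2):
    """Student's t (pooled variance) and Cohen's d from group means and SEMs."""
    sd1 = sem1 * math.sqrt(n1)  # SEM = SD / sqrt(n)
    sd2 = sem2 * math.sqrt(n2)
    pooled_sd = math.sqrt(((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2))
    diff = m1 - m2
    t = diff / (pooled_sd * math.sqrt(1 / n1 + 1 / n2))
    d = diff / pooled_sd
    return t, d, n1 + n2 - 2

# Empathy: happy group (M = 45.89, SEM = 1.024, n = 27) vs. sad group (M = 42.96, SEM = 1.179, n = 26)
t, d, df = t_and_d_from_summary(45.89, 1.024, 27, 42.96, 1.179, 26)
print(f"t({df}) = {t:.3f}, d = {d:.3f}")
```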

Facial Mimicry

A descriptive inspection of Figs. 2, 3, 4 reveals that for the relevant Emotion × Muscle combinations the happy group shows stronger congruent muscular reactions to the emotional faces than the sad group. In addition, the sad group’s reactions show hardly any change from zero. These impressions are confirmed by a set of t-tests against zero as specified in our hypotheses.

To test our assumption that the happy group would mimic sad and happy, and possibly also angry, expressions, we first conducted t-tests against zero for the relevant muscles for the rapid facial reactions (500–1,000 ms after stimulus onset). In line with our hypotheses, the t-tests against zero for happy faces showed a significant increase in Zygomaticus major activity only for the happy group, t(26) = 3.022, p = 0.006, d = 0.822, with p = 0.28 for the sad group. Also as expected, the happy group showed a significant decrease in Corrugator supercilii activity to happy faces, t(26) = 3.635, p = 0.001, d = 0.989. Contrary to expectations, however, the sad group also showed a marginal decrease in Corrugator supercilii activity in reaction to happy faces, t(25) = 1.898, p = 0.069, d = 0.527 (see Figs. 2 and 3). In line with hypotheses, for sad faces the tests against zero showed a significant activity increase over the Corrugator supercilii in the happy group, t(26) = 3.214, p = 0.003, d = 0.875, but not in the sad group, p > 0.92. Thus, as expected, we found a clear pattern of facial mimicry on all three indices in the happy group and only one marginal effect of congruent deactivation in the sad group.
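The tests against zero can be sketched as one-sample t-tests on the participants' change scores. In the Python sketch below the function names are hypothetical. The d values reported above are numerically reproduced by d = t·√(2/n) (e.g., 3.022·√(2/27) ≈ 0.82), whereas the conventional one-sample effect size d_z = t/√n gives ≈ 0.58 for the same test; the text does not state which formula was used, so both are shown.

```python
import math
import statistics

def one_sample_t(change_scores, mu=0.0):
    """t statistic and df for change scores tested against mu (baseline = 0)."""
    n = len(change_scores)
    se = statistics.stdev(change_scores) / math.sqrt(n)
    return (statistics.fmean(change_scores) - mu) / se, n - 1

def d_z(t, n):
    """Conventional one-sample effect size, mean / SD, equivalent to t / sqrt(n)."""
    return t / math.sqrt(n)

def d_scaled(t, n):
    """t * sqrt(2 / n): numerically reproduces the d values reported above."""
    return t * math.sqrt(2 / n)

# Zygomaticus major, happy group, happy faces: t(26) = 3.022, n = 27
print(round(d_scaled(3.022, 27), 3), round(d_z(3.022, 27), 3))
```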

For angry faces, the Corrugator supercilii activity of the happy group was marginally increased, t(26) = 1.770, p = 0.088, d = 0.482, while no reactions were observed in the sad group, p = 0.19. Thus, we found some evidence that participants in the happy group mimicked even angry faces. Finally, in line with hypotheses, there was no reaction of the Frontalis muscle to angry faces in either group, ps > 0.28 (see Fig. 4): neither sad nor happy participants showed a fear reaction to angry faces. Next, we tested whether the groups indeed differed from each other in the indices of facial mimicry.

Group Differences

A three-way Group × Emotion × Muscle ANOVA was conducted on the rapid facial reactions (500–1,000 ms after stimulus onset), with group as a between-subjects factor and emotion and muscle as within-subjects factors. Interaction effects were followed up by separate ANOVAs per muscle. In all tests, empathy was included as a covariate because of the marginal group difference in this variable reported above. Greenhouse-Geisser corrections were applied where necessary.

The overall ANOVA with empathy as covariate revealed a significant group effect, F(1, 50) = 6.272, p = 0.016, η²p = 0.111, and a significant Group × Emotion × Muscle interaction, F(6, 45) = 6.249, p = 0.003, η²p = 0.111, with no other effect reaching significance (all ps > 0.10). To further analyze the three-way interaction, separate follow-up ANOVAs were calculated for the Zygomaticus major, the Corrugator supercilii, and the Frontalis medialis.
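Partial η² can be recovered from an F ratio and its degrees of freedom via η²p = F·df_effect / (F·df_effect + df_error). The short helper below (a hypothetical name) applies this identity. For the group effect, F(1, 50) = 6.272 gives approximately 0.111. The same identity reproduces the other η²p values reported for F tests with 1 and 50 degrees of freedom, e.g., 0.200 for the Zygomaticus group difference to happy faces.

```python
def partial_eta_squared(f, df_effect, df_error):
    """Partial eta squared recovered from an F ratio and its degrees of freedom."""
    return (f * df_effect) / (f * df_effect + df_error)

# Group main effect (rapid reactions, empathy as covariate): F(1, 50) = 6.272
print(round(partial_eta_squared(6.272, 1, 50), 3))
```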

Zygomaticus Major

The Group × Emotion ANOVA on the Zygomaticus major data with empathy as covariate revealed a significant group effect, F(1, 50) = 4.665, p = 0.036, η²p = 0.085, and a significant Group × Emotion interaction, F(3, 48) = 7.272, p < 0.01, η²p = 0.127, with no other effect reaching significance (all ps > 0.10). As predicted, a follow-up test with empathy as covariate revealed a significant group difference for happy faces, F(1, 50) = 12.522, p = 0.001, η²p = 0.200, with stronger reactions in the happy than in the sad group (cf. Fig. 2). No other comparison reached significance (all ps > 0.10).

Corrugator Supercilii

The Group × Emotion ANOVA on the Corrugator supercilii data with empathy as covariate showed a marginal group effect, F(1, 50) = 3.859, p = 0.055, η²p = 0.072, and a significant Group × Emotion interaction, F(3, 48) = 4.413, p = 0.021, η²p = 0.081. Follow-up tests with empathy as covariate revealed significant group differences for sad faces, F(1, 50) = 7.644, p = 0.008, η²p = 0.133, and for angry faces, F(1, 50) = 4.703, p = 0.035, η²p = 0.086, with stronger reactions to both emotional expressions in the happy than in the sad group (see Fig. 3). No other comparison reached significance (all ps > 0.10).

Frontalis Medialis

The Group × Emotion ANOVA on the Frontalis data (Fig. 4) did not reveal any significant effect (all ps > 0.10).

To sum up, we found more mimicry of happy, sad, and angry faces in the happy than in the sad group. Furthermore, the sad group did not react to angry faces with more fear expressions (i.e., Frontalis reactions) than the happy group.

Analyses for the Whole Stimulus Duration Time (0–6,000 ms) with Empathy as Covariate

We conducted an equivalent analysis (i.e., with empathy as covariate) on the whole stimulus presentation interval (0–6,000 ms after stimulus onset) to allow comparison with facial mimicry studies that used longer time windows. Because the pattern and results for the whole stimulus duration were essentially the same as for the rapid facial reactions, we report only the differences here. The full results of the analyses can be obtained from the corresponding author.

Facial Mimicry

The Corrugator supercilii activation of the happy group to angry faces and the Corrugator supercilii deactivation of the sad group to happy faces were no longer marginally significant, ps > 0.11. Thus, the sad group showed even less evidence of mimicry when the whole period was considered.

Group Differences

The group difference for Corrugator supercilii reactions to angry faces was now only marginally significant, F(1, 50) = 3.561, p = 0.065. Furthermore, a marginal group difference for Zygomaticus major reactions to neutral faces emerged, F(1, 50) = 2.896, p = 0.095, η²p = 0.055.

Baseline Muscular Activity

Table 1 summarizes the descriptive statistics for baseline muscular activity measured during the 1 s before picture onset.

While the happy group showed higher Zygomaticus major activity, the sad group showed higher Corrugator supercilii and Frontalis medialis activity. T-tests for independent groups, however, revealed only a significant difference for the Frontalis medialis, and a marginal difference for the Zygomaticus major (see Table 1). This means that in the period when no faces were shown, the facial expressions of the happy group already showed signs of happiness (Zygomaticus major activity), whereas the facial expression of the sad group showed signs of sadness (Corrugator supercilii and Frontalis medialis activity).

Mood Ratings

Table 2 shows the descriptive statistics for the mood ratings at the beginning and at the end of the experimental procedure. Contrary to the results from our pilot study (see Footnote 2), the Group × Time ANOVAs with repeated measures for the positive as well as the negative mood ratings did not reveal any significant effects involving the factor group. The only significant effect was a time effect for positive mood, F(1, 51) = 63.233, p < 0.001, η²p = 0.554, indicating a decrease during the experiment that, however, was independent of the experimental manipulation.

Avatar Ratings

The t-tests revealed a significant group difference only for the mean arousal ratings of the sad faces, t(51) = 2.443, p = 0.018, d = 1.303, with higher ratings in the sad group, M = 4.769, SEM = 0.255, than in the happy group, M = 3.833, SEM = 0.285; all other ps > 0.10.

Discussion

The main result of our experiment is that participants in a sad mood showed hardly any facial mimicry of happy and sad facial expressions. As shown by the main effects and post-hoc analyses, those in the sad condition showed significant mimicry neither in the rapid facial muscular reactions nor over the whole stimulus presentation time of 6 s. That is, participants in this group did not mimic the emotional facial expressions at all, while participants in a happy mood mimicked happy and sad, and in part also angry, expressions. Furthermore, the sad group rated the sad faces as more arousing than the happy group did. In addition, we found slightly heightened baseline Zygomaticus major activity in the happy group and heightened baseline Frontalis activity in the sad group, as measured during the 1 s before stimulus onset.

The missing congruent facial reactions in participants who watched the sad movie are well in line with the results by van Baaren et al. (2006), who reported similar results for non-facial mimicry: participants in their experiment’s sad condition hardly mimicked a person who played with a pen. However, it is not clear whether the mechanism proposed by van Baaren et al. (2006) applies to the results of our study. According to van Baaren et al., the results could be explained by the affect-as-information theory (cf. Schwarz and Clore 1996). This theory proposes that a negative mood signals that something might be wrong in the environment, which in turn leads to a more analytical, effortful, and cautious information processing style and thereby disrupts automatic tendencies such as mimicry. Unfortunately, this theory does not differentiate between different qualities of negative mood states. This distinction matters because not all negative emotions lead to an analytical information processing style (Tiedens and Linton 2001). In our opinion, analytic processing fits well with negative mood states that signal threat and require further action, such as anger or fear. Accordingly, Derryberry and Tucker (1994) showed that fearful states direct attention away from the self and toward the environment. Consequently, facial expressions should be perceived and processed easily, especially when they signal danger, as angry and fearful expressions do. This is supported by the study by Moody et al. (2007), which showed that a fearful state evokes a fearful response to an angry face.

However, we do not think that a cautious, analytical processing style would also be functional for negative mood states that do not require further action, such as sadness. For sadness we propose a more specific explanation. According to the proposed mechanism behind mimicry, the Perception-Behavior-Link (Chartrand and Bargh 1999), the perception of a behavior activates the representation of this behavior, which makes the behavior easier to perform. In a sad mood, the attention to and perception of external stimuli is reduced due to an increase in self-focused attention (see “Introduction”). This reduced perception of the counterpart’s behavior should lead to weaker activation of the perception-behavior link and thereby to less mimicry.

Whether the lack of automatic mimicry in a sad mood is specifically mediated by information processing style or by self-focused attention should be investigated in further studies. One possibility would be to record evoked potentials to assess whether attentional processes in visual tasks are modified. Probably the most direct method for assessing selective attention and the time course of attentional deployment is to record the exact position of eye gaze continuously with eye-tracking devices. Eye tracking is an excellent tool for investigating a variety of attention-related processes in visual tasks (for a review see Findlay and Gilchrist 2003), and it allows early and late stages of attentional processing to be distinguished.

Whereas van Baaren et al. (2006) also demonstrated effects of the presented film clips on mood, the two groups in our experiment did not differ significantly in subjective mood. This discrepancy might be explained by procedural differences. We measured mood after the presentation of the facial expressions (i.e., about 15 min after the end of the mood induction procedure). Participants in the van Baaren et al. experiment, by contrast, rated their mood immediately after the film, a time point at which we also found pronounced mood differences in our pilot study. It therefore seems likely that consciously experienced mood changes faded during the presentation of the faces in our experiment. This interpretation is supported by our pilot study (see Footnote 2), in which we found mood differences in the expected direction immediately after the film clips. However, we found no significant mood differences after the presentation of the emotional faces. This held irrespective of whether participants rated their mood once, after the presentation of the emotional faces, or twice, namely after the film clips and again after the presentation of the emotional faces.

Besides the absence of significant facial mimicry in the sad group, which accords with the results of van Baaren et al. (2006), two other results also point to an effective manipulation. First, we found differences in baseline muscular activity during the 1 s before stimulus onset, in the direction one would expect from the contents of the film clips. Baseline Zygomaticus major activity was slightly higher in the happy group than in the sad group, whereas baseline Frontalis activity was higher in the sad group than in the happy group. Thus, implicit measures again indicate that the manipulation was effective. Second, the two groups perceived sad faces differently, as is evident from the higher arousal ratings of the sad group (or, equivalently, the lower arousal ratings of the happy group).

The lack of congruent facial muscular reactions in the sad group on the one hand and the higher intensity ratings for sad faces on the other reflect a clear dissociation between an implicit measure (facial muscular reactions) and an explicit measure (intensity ratings). Recent embodiment theories (for a summary see Niedenthal 2007; for empirical evidence see Niedenthal et al. 2000, 2001), however, postulate that imitating the vis-à-vis’ emotional expression helps us to understand our counterpart. Yet our results show that the vis-à-vis’ sad expression is not imitated at all, while the observer rates exactly this expression as more intense. The question therefore arises how embodiment theories can account for this discrepancy. In the same vein, embodiment theories can hardly explain the results of Moody et al. (2007), who showed that a fearful state evokes a fearful response to an angry face. Furthermore, we have data showing that competition priming also leads to incongruent facial reactions in the very early facial muscular response (i.e., within the first second after the presentation of the facial expressions; Weyers et al. 2010). That is, in some situations the vis-à-vis’ emotional facial expression seems to be perceived and evaluated without being imitated. Depending on the situational context, the result is an absent or even an incongruent reaction. At present, one can only speculate about the underlying mechanisms (cf. Moody et al. 2007).

Our data for the rapid facial reactions show a trend towards congruent facial reactions to angry expressions, specifically an increase in Corrugator supercilii activity, after participants had watched the clip intended to induce a happy mood state, but not after the clip intended to induce a sad mood state. In addition, this marginal effect in the happy condition disappears when the whole presentation time is considered. The trend for the rapid reactions is in line with the results of Dimberg and colleagues, who mostly found an increase in Corrugator supercilii activity in response to anger expressions (e.g., Dimberg 1982, 1988; Dimberg and Petterson 2000). On the other hand, Bourgeois and Hess (2008) measured facial reactions over several seconds and provided evidence that facial reactions to angry faces depend on the social context. They argue that an angry facial expression is mimicked when the anger is assumed to be legitimate (e.g., when it is directed at a shared object of anger). In line with this, van der Velde et al. (2010) recently showed that mimicking anger can have negative consequences: participants in their study reported significantly less liking for an interaction partner when they imitated the partner’s anger expressions than when they did not.

Accordingly, anger mimicry is assumed to be inappropriate when the context provides no social information about its source. An angry face is thought to indicate threat and aggression (Aronoff et al. 1988, 1992) and to be a non-affiliative expression (Bourgeois and Hess 2008). Further research therefore seems necessary to clarify the conditions under which angry facial expressions are imitated. Moreover, suppose further research showed that anger mimicry is a reliable effect in a happy mood state. One could then assume that the more heuristic, less analytical, and less effortful information processing style associated with happy mood (see “Introduction”) gives way to more automatic processes, specifically to more mimicry, also in response to angry faces.

One limitation of our study is the absence of a neutral control condition. Thus, we cannot clearly determine the source of the differences between the two mood conditions. However, other studies mostly reported significant facial mimicry in conditions without context manipulations (e.g., Bourgeois and Hess 2008; Dimberg 1982; Dimberg et al. 2000, 2002; Moody et al. 2007; Weyers et al. 2009). Compared with those conditions, it seems very plausible that the lack of facial reactions in the sad mood condition actually arose from the sadness induction. It is more difficult to conclude whether the happy mood induction increased facial mimicry. One way of addressing this problem is to compare the amplitudes of muscular activation to happy and sad expressions with those in one of our own studies that used the same stimuli and timing parameters (Likowski et al. 2008). In that study, participants viewed emotional facial expressions of positive and negative avatar characters. Amplitudes of Zygomaticus reactions to happy expressions of positive characters were about 0.6 ΔμV, and amplitudes of Corrugator reactions to sad expressions of positive characters were about 0.2 ΔμV. The Zygomaticus reactions to happy faces are very similar to those in the happy mood condition of the present study (0.65 ΔμV). The Corrugator reactions to sad faces, however, appear to be considerably larger in the present study (0.67 ΔμV in the happy mood condition). This suggests that the happy mood induction increased facial mimicry relative to a neutral control level. However, further studies should include a control condition with a neutral film clip, for example one showing a nature scene or a non-arousing documentary, to answer this question unambiguously.

Another potential limitation of our study is the use of computer-generated artificial faces. Although there is empirical evidence that such faces elicit facial mimicry as would be expected from human faces (Likowski et al. 2008; Weyers et al. 2009), one might wonder whether the results for the sad group are related to the artificial nature of the stimuli. This, however, would imply a very specific phenomenon in which the co-occurrence of a sad mood and computer-generated faces leads to hardly any facial reactions. To test this possibility, further research should use photographs of real persons in a similar setting, such as those included in the Pictures of Facial Affect (Ekman and Friesen 1976) or the Karolinska Directed Emotional Faces (Lundqvist et al. 1998). As we (Likowski et al. 2008) and others (Krumhuber and Kappas 2005; Schrammel et al. 2009) have shown, however, computer-generated faces are well suited for experiments that extend our knowledge about the moderating influence of social context variables on nonverbal social interaction. Furthermore, in a real-life setting with real interaction partners the overall amplitudes of facial reactions might, of course, be higher than in this laboratory study. This, however, should manifest itself only as a main effect; the difference between the happy and sad mood conditions should be independent of the nature of the stimuli.

Our finding of a nearly complete lack of congruent facial muscular responses to happy and sad faces in the sad mood condition might contribute to a better understanding of why dysphoric and depressed individuals are frequently rejected by others (e.g., Alloy et al. 1998; Coyne 1976). Wexler et al. (1994) found hardly any congruent facial reactions to emotional faces in depressed individuals, and Sloan et al. (2002) found incongruent reactions to happy faces in a subclinical sample. Reduced mimicry, or even incongruent facial reactions, especially to happy and sad faces, might be interpreted by others as a lack of affiliative tendencies. It might thus be one specific mediator, among others, of reduced sympathy and liking (Bailenson and Yee 2005; Bavelas et al. 1986; Chartrand and Bargh 1999), leading others to withdraw from and avoid dysphoric individuals. Our results point to the possibility of a vicious cycle that starts with ordinary negative mood and leads to social exclusion, which should in turn prolong the negative mood. This could be one mechanism by which prolonged episodes of dysphoria are initiated. The same mechanism may also matter for close relationships: frequent negative mood and poor mood repair skills should negatively predict relationship quality because of a frequent lack of mimicry and its social glue function.

Our data also point to differences between negative mood and dysphoria. Whereas temporarily saddened individuals do not react to facial expressions, dysphoric individuals even react with an increase in Corrugator activity (Sloan et al. 2002), which is usually a sign of negative evaluation (Larsen et al. 2003). Furthermore, dysphoric individuals do not differ from non-dysphoric individuals in baseline muscular activity (Sloan et al. 2002). Saddened individuals, in contrast, show greater Frontalis activity at baseline than happy individuals. Thus, even though both groups experience negative affect and a lack of positive affect, their physiological reactions are quite different.

In summary, in line with van Baaren et al. (2006), our results show in a controlled experimental setting that even short-term sad mood states have a substantial impact on people’s nonverbal social behavior, and specifically on their affiliative behavior. Whether this disruption of automatic imitative processes is specifically mediated by information processing style or by self-focused attention should be investigated in further studies.

Notes

Because of this discrepancy with the pilot study, we suspected that the lack of a mood effect was due to the mood dissipating over the course of the experiment. To verify this assumption, we conducted another study with the same procedure as our main study, but we varied when mood was measured: immediately after the induction or at the end of the experiment. The results confirmed our assumption. We found significant differences immediately after the clips. There were no longer significant differences after the presentation of the facial expressions, however. This was independent of whether mood was measured only after the presentation of the facial expressions or both after the film clips and after the facial expressions. Details of this pilot study are available upon request.

References

Alloy, L. B., Fedderly, S. S., Kennedy-Moore, E., & Cohan, C. L. (1998). Dysphoria and social interaction: An integration of behavioral confirmation and interpersonal perspectives. Journal of Personality and Social Psychology, 74, 1566–1579.

Aronoff, J., Barclay, A. M., & Stevenson, L. A. (1988). The recognition of threatening facial stimuli. Journal of Personality and Social Psychology, 54, 647–665.

Aronoff, J., Woike, B. A., & Hyman, L. M. (1992). Which are the stimuli in facial displays of anger and happiness? Configurational bases of emotion recognition. Journal of Personality and Social Psychology, 62, 1050–1066.

Bailenson, J. N., & Yee, N. (2005). Digital chameleons: Automatic assimilation of nonverbal gestures in immersive virtual environments. Psychological Science, 16, 814–819.

Bavelas, J. B., Black, A., Lemery, C. R., & Mullett, J. (1986). “I show how you feel”: Motor mimicry as a communicative act. Journal of Personality and Social Psychology, 50, 322–329.

Bourgeois, P., & Hess, U. (2008). The impact of social context on mimicry. Biological Psychology, 77, 343–352.

Chartrand, T. L., & Bargh, J. A. (1999). The chameleon effect: The perception–behavior link and social interaction. Journal of Personality and Social Psychology, 76, 893–910.

Chartrand, T. L., Maddux, W. W., & Lakin, J. L. (2005). Beyond the perception–behavior link: the ubiquitous utility and motivational moderators of nonconscious mimicry. In R. R. Hassin, J. S. Uleman, & J. A. Bargh (Eds.), The new unconscious (pp. 334–361). New York: Oxford University Press.

Coyne, J. C. (1976). Depression and the response of others. Journal of Abnormal Psychology, 85, 186–193.

Davis, M. (1980). A multidimensional approach to individual differences in empathy. JSAS Catalogue of Selected Documents in Psychology, 10, 85.

Derryberry, D., & Tucker, D. M. (1994). Motivating the focus of attention. In P. M. Niedenthal & S. Kitayama (Eds.), The heart’s eye: Emotional influences in perception and attention (pp. 167–196). New York: Academic Press.

Dimberg, U. (1982). Facial reactions to facial expressions. Psychophysiology, 19, 643–647.

Dimberg, U. (1988). Facial electromyography and the experience of emotion. Journal of Psychophysiology, 2, 277–282.

Dimberg, U., & Lundqvist, L.-O. (1990). Gender differences in facial reactions to facial expressions. Biological Psychology, 30, 151–159.

Dimberg, U., & Petterson, M. (2000). Facial reactions to happy and angry facial expressions: Evidence for right hemisphere dominance. Psychophysiology, 37, 693–696.

Dimberg, U., Thunberg, M., & Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11, 86–89.

Dimberg, U., Thunberg, M., & Grunedal, S. (2002). Facial reactions to emotional stimuli: Automatically controlled emotional responses. Cognition and Emotion, 16, 449–471.

Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect. Palo Alto: Consulting Psychologists Press.

Ekman, P., & Friesen, W. V. (1978). The Facial Action Coding System. Palo Alto: Consulting Psychologists Press.

Findlay, J. M., & Gilchrist, I. D. (2003). Active vision: The psychology of looking and seeing. Oxford: Oxford University Press.

Fridlund, A. J., & Cacioppo, J. T. (1986). Guidelines for human electromyographic research. Psychophysiology, 23, 567–589.

Friesen, W. V., & Ekman, P. (1984). EMFACS-7. Unpublished manuscript, Human Interaction Laboratory, University of California, San Francisco.

Green, J. D., & Sedikides, C. (1999). Affect and self-focused attention revisited: The role of affect orientation. Personality and Social Psychology Bulletin, 25, 104–119.

Gump, B. B., & Kulik, J. A. (1997). Stress, affiliation, and emotional contagion. Journal of Personality and Social Psychology, 72, 305–319.

Herrera, P., Bourgeois, P., & Hess, U. (1998). Counter mimicry effects as a function of racial attitudes. Poster presented at the 38th Annual Meeting of the Society for Psychophysiological Research, Denver, Colorado.

Hess, U. (2001). The communication of emotion. In A. Kaszniak (Ed.), Emotions, qualia and consciousness (pp. 397–409). Singapore: World Scientific Publishing.

Hewig, J., Hagemann, D., Seifert, J., Gollwitzer, M., Naumann, E., & Bartussek, D. (2005). A revised film set for the induction of basic emotions. Cognition and Emotion, 19, 1095–1109.

Krohne, H. W., Egloff, B., Kohlmann, C.-W., & Tausch, A. (1996). Investigations with a German version of the Positive and Negative Affect Schedule (PANAS). Diagnostica, 42, 139–156.

Krumhuber, E., & Kappas, A. (2005). Moving smiles: The role of dynamic components for the perception of the genuineness of smiles. Journal of Nonverbal Behavior, 29, 3–24.

Lakin, J. L., & Chartrand, T. L. (2003). Using nonconscious behavioral mimicry to create affiliation and rapport. Psychological Science, 14, 334–339.

Lakin, J. L., Jefferis, V. E., Cheng, C. M., & Chartrand, T. L. (2003). The chameleon effect as social glue: Evidence for the evolutionary significance of nonconscious mimicry. Journal of Nonverbal Behavior, 27, 145–162.

Lanzetta, J. T., & Englis, B. G. (1989). Expectations of cooperation and competition and their effects on observers’ vicarious emotional responses. Journal of Personality and Social Psychology, 56, 543–554.

Larsen, J. T., Norris, C. J., & Cacioppo, J. T. (2003). Effects of positive and negative affect on electromyographic activity over zygomaticus major and corrugator supercilii. Psychophysiology, 40, 776–785.

Likowski, K. U., Mühlberger, A., Seibt, B., Pauli, P., & Weyers, P. (2008). Modulation of facial mimicry by attitudes. Journal of Experimental Social Psychology, 44, 1065–1072.

Lundqvist, L.-O. (1995). Facial EMG reactions to facial expressions: A case of facial emotional contagion? Scandinavian Journal of Psychology, 36, 130–141.

Lundqvist, D., Flykt, A., & Öhman, A. (1998). The Karolinska Directed Emotional Faces (KDEF) [CD-ROM]. Department of Clinical Neuroscience, Psychology section, Karolinska Institutet. ISBN 91-630-7164-9.

McHugo, G. J., Lanzetta, J. T., & Bush, L. K. (1991). The effect of attitudes on emotional reactions to expressive displays of political leaders. Journal of Nonverbal Behavior, 15, 19–41.

Mondillon, L., Niedenthal, P. M., Gil, S., & Droit-Volet, S. (2007). Imitation of in-group versus out-group members’ facial expressions of anger: A test with a time perception task. Social Neuroscience, 2, 223–237.

Moody, E. J., McIntosh, D. N., Mann, L. J., & Weisser, K. R. (2007). More than mere mimicry? The influence of emotion on rapid facial reactions to faces. Emotion, 7, 447–457.

Niedenthal, P. M. (2007). Embodying emotion. Science, 316, 1002–1005.

Niedenthal, P. M., Brauer, M., Halberstadt, J. B., & Innes-Ker, A. H. (2001). When did her smile drop? Contrast effects in the influence of emotional state on the detection of change in emotional expression. Cognition and Emotion, 15, 853–864.

Niedenthal, P. M., Halberstadt, J. B., Margolin, J., & Innes-Ker, A. H. (2000). Emotional state and the detection of change in facial expression of emotion. European Journal of Social Psychology, 30, 211–222.

Paulus, C. (2000). Der Saarbrücker Persönlichkeitsfragebogen SPF (IRI). [The Saarbrücker Personality Inventory SPF (IRI)]. [Web document]. Retrieved from http://www.uni-saarland.de/fak5/ezw/abteil/motiv/paper/SPF(IRI).pdf.

Paulus, C. (2009). Der Saarbrücker Persönlichkeitsfragebogen SPF (IRI) zur Messung von Empathie: Psychometrische Evaluation der deutschen Version des Interpersonal Reactivity Index. [The Saarbrueck Personality Questionnaire on Empathy: Psychometric evaluation of the German version of the Interpersonal Reactivity Index]. [Web document]. Retrieved from http://psydok.sulb.uni-saarland.de/volltexte/2009/2363/.

Salovey, P. (1992). Mood-induced self-focused attention. Journal of Personality and Social Psychology, 62, 699–707.

Schrammel, F., Pannasch, S., Graupner, S. T., Mojzisch, A., & Velichkovsky, B. M. (2009). Virtual friend or threat? The effects of facial expression and gaze interaction on psychophysiological responses and emotional experience. Psychophysiology, 46, 922–931.

Schwarz, N., & Clore, G. (1996). Feelings and phenomenal experiences. In E. T. Higgins & A. W. Kruglanski (Eds.), Social psychology: Handbook of basic principles (pp. 433–465). New York: Guilford.

Sedikides, C. (1992). Mood as a determinant of attentional focus. Cognition and Emotion, 6, 129–148.

Silvia, P. J., Phillips, A. G., Baumgaertner, M. K., & Maschauer, E. L. (2006). Emotion concepts and self-focused attention: Exploring parallel effects of emotional states and emotional knowledge. Motivation and Emotion, 30, 229–235.

Sloan, D. M., Bradley, M. M., Dimoulas, E., & Lang, P. J. (2002). Looking at facial expressions: Dysphoria and facial EMG. Biological Psychology, 60, 79–90.

Sonnby-Borgström, M., Jönsson, P., & Svensson, O. (2003). Emotional empathy as related to mimicry reactions at different levels of information processing. Journal of Nonverbal Behavior, 27, 3–23.

Spencer-Smith, J., Wild, H., Innes-Ker, A. H., Townsend, J., Duffy, C., Edwards, C., et al. (2001). Making faces: Creating three-dimensional parameterized models of facial expression. Behavior Research Methods, Instruments & Computers, 33, 115–123.

Tiedens, L. Z., & Linton, S. (2001). Judgment under emotional certainty and uncertainty: the effects of specific emotions on information processing. Journal of Personality and Social Psychology, 81, 973–988.

van Baaren, R. B., Fockenberg, D. A., Holland, R. W., Janssen, L., & van Knippenberg, A. (2006). The moody chameleon: The effect of mood on non-conscious mimicry. Social Cognition, 24, 426–437.

van der Velde, S. W., Stapel, D. A., & Gordijn, E. H. (2010). Imitation of emotion: When meaning leads to aversion. European Journal of Social Psychology, 40, 536–542.

Watson, D., Clark, L. A., & Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology, 54, 1063–1070.

Wexler, B. E., Levenson, L., Warrenburg, S., & Price, L. H. (1994). Decreased perceptual sensitivity to emotion-provoking stimuli in depression. Psychiatry Research, 51, 127–138.

Weyers, P., Likowski, K. U., Seibt, B., Wernecke, S., Pauli, P., Mühlberger, A., & Hess, U. (2010). Facial reactions to emotional facial expressions after subliminal priming for competition and cooperation. Manuscript submitted for publication.

Weyers, P., Mühlberger, A., Kund, A., Hess, U., & Pauli, P. (2009). Modulation of facial reactions to avatar emotional faces by nonconscious competition priming. Psychophysiology, 46, 328–335.

Wood, J. V., Saltzberg, J. A., & Goldsamt, L. A. (1990). Does affect induce self-focused attention? Journal of Personality and Social Psychology, 58, 899–908.

Acknowledgments

The research was supported by the German Research Foundation (DFG WE2930/2-1) and a Postdoc-grant from the German Research Foundation (DFG) to the third author (SE 1121/3-1).


Cite this article

Likowski, K.U., Weyers, P., Seibt, B. et al. Sad and Lonely? Sad Mood Suppresses Facial Mimicry. J Nonverbal Behav 35, 101–117 (2011). https://doi.org/10.1007/s10919-011-0107-4
