Abstract

We propose an efficient numerical method for a non-selfadjoint Steklov eigenvalue problem. The Lagrange finite element is used for discretization and the convergence is proved using the spectral perturbation theory for compact operators. The non-selfadjointness of the problem leads to non-Hermitian matrix eigenvalue problem. Due to the existence of complex eigenvalues and lack of a priori spectral information, we employ the recently developed spectral indicator method to compute eigenvalues in a given region on the complex plane. Numerical examples are presented to validate the effectiveness of the proposed method.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Steklov eigenvalue problems arise in mathematical physics with spectral parameters in the boundary conditions [24]. Applications of Steklov eigenvalues include surface waves, mechanical oscillators immersed in a viscous fluid, the vibration modes of a structure in contact with an incompressible fluid, etc. [13, 14, 24]. Recently, Steklov eigenvalues have been used in the inverse scattering theory to reconstruct the index of refraction of an inhomogeneous medium [12]. Note that most Steklov eigenvalue problems considered in the literature are related to partial differential equations of second order. However, Steklov eigenvalue problems of higher order were also studied, e.g., the fourth order Steklov eigenvalue problem [2].

In contrast to the theoretical study of the Steklov eigenvalue problems, numerical methods, in particular, finite element methods have attracted some researchers rather recently [1, 3, 7, 8, 14, 16, 23, 26, 38]. Various methods have been proposed, including the isoparametric finite element method [3], the virtual element method [26], non-conforming finite element methods [16, 25, 38], the spectral-Galerkin method [2], adaptive methods [7], multilevel methods [18, 35], etc. All of the above works consider the selfadjoint cases. In this paper, we consider a non-selfadjoint Steklov eigenvalue problem arising in the study of inhomogeneous absorbing media in inverse scattering theory [12]. There seems to exist only one paper by Bramble and Osborn [10], which considered the non-selfadjoint Steklov eigenvalue problem. However, the second order non-selfadjoint operator is assumed to be uniformly elliptic and no numerical results were reported in [10]. The current paper contains both finite element theory and numerical examples for a non-selfadjoint Steklov eigenvalue problem. Note that there exist works on other non-selfadjoint eigenvalue problems, e.g., [28, 36, 37]. For the general theory and examples of finite element methods for eigenvalue problems, we refer the readers to the book chapter by Babuška and Osborn [4], the review paper by Boffi [9], and the monograph by Sun and Zhou [34].

Finite element discretizations for non-selfadjoint eigenvalue problems usually lead to non-Hermitian generalized eigenvalues problems. Most classical eigensolvers for large sparse matrices are designed for Hermitian (or symmetric) matrices. For non-Hermitian problems, there exist less methods including the Arnoldi method, Lanczos method and Jacobi–Davidson method [5]. Unfortunately, these methods are still far from satisfactory [30], in particular, when the number and distribution of eigenvalues are not known.

In a recent paper [20], a novel spectral indicator eigenvalue solver RIM (recursive integral method) is developed for non-Hermitian eigenvalue problems. RIM computes all eigenvalues in a region on the complex plane \({\mathbb {C}}\) without any a priori spectral information. Roughly speaking, given a region \(S \subset {\mathbb {C}}\) whose boundary \(\Gamma :=\partial S\) is a simple closed curve, RIM computes an indicator \(\delta _S\) for S using spectral projection defined by a Cauchy contour integral on \(\Gamma \). The indicator is used to decide if S contains eigenvalue(s). In case of positive answers, S is divided into sub-regions and indicators for these sub-regions are computed. The procedure continues until the size of the region is smaller than a specified precision \(d_0\) (e.g., \(d_0 = 10^{-9}\)). The centers of the regions are the approximations of eigenvalues.

Spectral projection (contour integral) is a classical tool in operator theory [22] and has been used extensively in the finite element theory for eigenvalues problem [4, 27]. It became popular recently for matrix eigenvalue problems using invariant subspaces [6, 29, 31]. RIM differs from these integral based methods [29, 31] in the sense that the basic idea is to define an indicator of a region and use bisection-like technique to compute the eigenvalues accurately. Contour integrals are only used in RIM to compute an indicator of the region. The approximation of the integral need not be accurate. Furthermore, no estimation of the number of eigenvalues in the region is needed as a prior.

In this paper, we propose a simple finite element method and extend the spectral indicator method RIM to compute complex Steklov eigenvalues in the region of interest. The contributions of the paper are (1) it provides a finite element analysis for a non-selfadjoint Steklov eigenvalue problem; and (2) it extends a new eigensolver for the resulting non-Hermitian matrix eigenvalue problem. The rest of the paper is organized as follows. In Sect. 2, we introduce the Steklov eigenvalue problem, its adjoint problems, variational formulations, and prove the well-posedness. In Sect. 3, we propose a linear finite element method and prove the convergence. In Sect. 4, we extend the new spectral indicator method for the resulting non-Hermitian matrix eigenvalue problems. Numerical examples are presented in Sect. 5. Finally, some conclusions and future work are discussed in Sect. 6.

2 A Non-selfadjoint Steklov Eigenvalue Problem

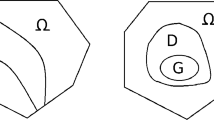

Let \(\Omega \subset {\mathbb {R}}^2\) be a bounded polygon with Lipshitz boundary \(\partial \Omega \). Let \(\nu \) be the unit outward normal to \(\partial \Omega \). Let k be the wavenumber and n(x) be the index of refraction. We consider the Steklov eigenvalue problem to find \(\lambda \in {\mathbb {C}}\) and a nontrivial function \(u \in H^1(\Omega )\) such that

Define

and the continuous sesquilinear form

The weak formulation for (2.1) is to find \((\lambda , u) \in {\mathbb {C}} \times H^1(\Omega )\) such that

The associated source problem is, given \(g \in L^2(\partial \Omega )\), to find \(u \in H^1(\Omega )\) such that

In this paper, we assume that n(x) is a bounded complex valued function given by

where \(i = \sqrt{-1}\), \(n_1(x) > 0\) and \(n_2(x) \ge 0\) are bounded smooth functions.

It is easy to verify that \(a(\cdot , \cdot )\) satisfies the Gårding’s inequality [11], i.e., there exist constants \(K < \infty \) and \(\alpha _0 > 0\) such that

Let K be a positive constant, which is large enough. Define the sesquilinear form \(A: H^1 \times H^1 \rightarrow {\mathbb {C}}\) such that

The following lemma shows that A is \(H^1(\Omega )\)-elliptic [19].

Lemma 2.1

For K large enough, the sesquilinear form A is \(H^1(\Omega )\)-elliptic, i.e., there exists \(\alpha _0 > 0\) such that

Proof

Since \(n_1(x)\) is bounded, there exist a constant B such that \(n_1(x) < B\) for all \(x \in \Omega \). Using the Gårding’s inequality (2.4), we have that

where \(\alpha _0=\min \{1, K-k^2B\}\) for K large enough. \(\square \)

As a consequence, the Fredholm alternative can be used to show the existence of a unique solution for (2.3). To this end, we need to define the (generalized) Neumann eigenvalues.

Definition 2.1

The Neumann eigenvalue problem associated with n(x) is to find \(k^2 \in {\mathbb {C}}\) and a nontrivial \(u \in H^1(\Omega )\) such that

Theorem 2.1

Let \(g \in L^2(\partial \Omega )\). Assuming that \(k^2\) is not a Neumann eigenvalue associated with n(x) on \(\Omega \), there exists a unique solution \(u \in H^1(\Omega )\) to (2.3) such that

Proof

Since \(k^2\) is not a Neumann eigenvalue, then the uniqueness holds for (2.3). By Fredholm Alternative (see e.g., Section 5.3 of [19]), there exists a unique solution u to (2.3) and the regularity follows readily. \(\square \)

Consequently, one can define an operator, which is in fact the Neumann-to-Dirichlet mapping, \(T: L^2(\partial \Omega ) \rightarrow L^2(\partial \Omega )\) [12]

The mapping T is compact since \(Tg \in H^{1/2}(\partial \Omega )\) and \(H^{1/2}(\partial \Omega )\) is compactly embedded in \(L^2(\partial \Omega )\). Denote an eigenpair of T by \((\mu , g)\) such that

It is clear that \(\mu \) and \(\lambda \) are related

We shall also need the adjoint operator \(T^*\) of T for the proof of convergence of the finite element method later. Consider the adjoint problem for (2.3). Given \(g \in L^2(\partial \Omega )\), find \(v \in H^1(\Omega )\) such that

Then (2.9) has a unique solution v. The solution operator for (2.9) is the adjoint operator \(T^*g: L^2(\partial \Omega ) \rightarrow L^2(\partial \Omega )\) such that \(T^*g = v|_{\partial \Omega }\).

3 Finite Element Approximation

In this section, we present a finite element approximation \(T_h\) for T. Let \({\mathcal {T}}_h\) be a regular triangular mesh for \(\Omega \) with mesh size h. Let \(V_h \subset H^1(\Omega )\) be the Lagrange finite element space associated with \({\mathcal {T}}_h\) and \(V^B_h := V_h|_{\partial \Omega }\) be the restriction of \(V_h\) on \(\partial \Omega \). It is clear that \(V^B_h \subset L^2(\partial \Omega )\). The finite element formulation for the Steklov eigenvalue problem is to find \((\lambda _h, u_h) \in {\mathbb {C}} \times V_h\) such that

For the convergence of eigenvalues, we first study the source problem. Given \(g \in L^2(\partial \Omega )\), let \(g_h\) be the projection of g onto \(V^B_h\). The discrete problem is to find \(u_h \in V_h\) such that

In the rest of this section, we assume that \(u \in H^2(\Omega )\) and the same regularity holds for the solution of the adjoint problem. This is the case when \(\Omega \) is convex. In general, when \(\Omega \) is non-convex, u does not belong to \(H^2(\Omega )\). We refer the readers to [17] for further discussions on the regularity of u, which is out of the scope of the current paper.

We have the following estimate for (3.2). For completeness, we provide the proof as well, which is based on Theorem 5.7.6 of [11].

Theorem 3.1

Let u be the solution to (2.3). Assume that k is not a Neumann eigenvalue. There exists a unique solution \(u_h\) to (3.2) such that, for h small enough,

and

Proof

For the finite dimensional problem (3.2), existence of a solution can be established using uniqueness. Assuming that there exists a nontrivial solution \(u_h\) to (3.2) for \(g_h=0\). For the continuous problem, \(g=0\) implies that the solution \(u=0\). Then (3.6) asserts that \(u_h=0\). Thus the uniqueness holds, which implies the existence of the solution \(u_h\) as well.

Using (2.3) and (3.2), one has the Galerkin orthogonality

The Gårding’s inequality (2.4) implies that

Assume that the estimate (3.4) holds, i.e.,

for some constant \(C_1 > 0\). One has that

Then for h small enough, we obtain

The rest of the proof is devoted to verifying (3.4). Let w be the solution to the adjoint problem

Then, for any \(w_h \in V_h\),

where we have used the regularity of the solution for the adjoint problem. Consequently,

\(\square \)

As a result, problem (3.2) defines a discrete operator \(T_h: L^2(\partial \Omega ) \rightarrow V^B_h\) such that

The following theorem shows that \(T_h\) converges to T in norm in \(L^2(\partial \Omega )\).

Theorem 3.2

Assume that \(g \in H^{1/2}(\partial \Omega ) \subset L^2(\partial \Omega )\). Let T and \(T_h\) be defined as in (2.8) and (3.7) using linear Lagrange element, respectively. Then

Proof

Using the approximation property of the linear Lagrange finite element (see Eq. (3.9) of [34]), for \(u \in H^2(\Omega )\), one has that

Therefore, by Theorem 3.1,

One has that

where we have applied Theorem 3.1 and the trace theorem (Theorem 1.6.6 in [11]). Hence (3.8) follows immediately and the proof is complete. \(\square \)

Similarly, the discrete problem for the adjoint problem (2.9) can be defined as follows. Given \(g \in L^2(\partial \Omega )\), find \(v_h \in H^1(\Omega )\) such that

Then all results in this section also hold for the adjoint problem (2.9). In particular, one has that discrete adjoint operator \(T^*_h: L^2(\partial \Omega ) \rightarrow V^B_h\) such that \( T_h g_h = v_h|_{V^B_h} \) where \(v_h\) the solution of (3.10) and

Now we turn to the finite element spectral approximation of Steklov eigenvalues based on the theory in [27]. We shall need some preliminaries on the spectral theory of compact operators (see, e.g., [22]). Let \(T: X \rightarrow X\) be a compact operator on a complex Hilbert space X. Let \(z \in {\mathbb {C}}\). The resolvent operator of T is defined as

The resolvent set of T is

The spectrum of T is \(\sigma (T)={\mathbb {C}} \setminus \rho (T)\).

Since T is compact, each \(\mu \in \sigma (T)\) is an isolated eigenvalue of T and the generalized eigenspace associated with \(\mu \) is finite dimensional. Furthermore, there exists a smallest positive integer \(\alpha \) such that

where \({\mathcal {N}}\) denotes the null space. The integer \(m=\dim {\mathcal {N}}\left( (\mu -T)^\alpha \right) \) is called the algebraic multiplicity of \(\mu \). The functions in \({\mathcal {N}}\left( (\mu -T)^\alpha \right) \) are called the generalized eigenfunctions of T corresponding to \(\mu \). Note that the geometric multiplicity of \(\mu \) is defined as \(\dim {\mathcal {N}}(\mu - T)\).

Let \(\Gamma \) be a simple closed curve on the complex plane \(\mathbb C\) lying in \(\rho (T)\), which contains an eigenvalue \(\mu \) and no other eigenvalues. Let the algebraic multiplicity of \(\mu \) be m. The spectral projection is defined by

It is well-known that E is a projection onto the space spanned by the generalized eigenfunctions \({\phi }_j, j=1,\ldots , m\) associated with \(\mu \), i.e., the range of E, \({\mathcal {R}}(E)\), coincides wth \( {\mathcal {N}}\left( (\mu -T)^\alpha \right) \).

Since \(T_h\) converges to T in norm as \(h \rightarrow 0\), \(\Gamma \subset \rho (T_h)\) for h small enough. In addition, there exists m eigenvalues \(\mu _h^1, \ldots , \mu _h^m\) of \(T_h\) inside \(\Gamma \) such that

The spectral projection

converges to E pointwise and \(\text {dim}{\mathcal {R}}(E_h) = \text {dim}{\mathcal {R}}(E)\).

If \(\mu \) is an eigenvalue of T, then \({\overline{\mu }}\) is an eigenvalue of \(T^*\). Let \(\phi _1, \ldots , \phi _m\) be a basis for \({{\mathcal {R}}}(E)\) and \(\phi _1^*, \ldots , \phi _m^*\) be the dual basis to \(\phi _1, \ldots , \phi _m\) (see Section 1.1 of [34]). The following lemma (Theorem 3 of [27]) will be used to prove the convergence of Steklov eigenvalues.

Lemma 3.1

Let \(\mu \) be an eigenvalue of T with algebraic multiplicity m. Let \(\mu _h^1, \ldots , \mu _h^m\) be the m eigenvalues of \(T_h\) converge to \(\mu \) and define

Then there exists a constant C such that

where \({{\mathcal {R}}}(E^*) = \text {span}\{ \phi _1^*, \ldots , \phi _m^*\}\).

Using the convergence results of the finite element method for the source problem and the above lemma, we have the following theorem.

Theorem 3.3

Let \(\mu \) be an eigenvalue of T with multiplicity m and \(\mu _h^j, j=1,\ldots ,m\) be the m eigenvalues of \(T_h\) approximating \(\mu \). Then there exists a constant C, independent of h, such that

Proof

Let \(u_j\) and \(u_{h,j}\) be the solutions of (2.3) and (3.2) with the right hand side being \(\phi _j\), respectively. Let \(u_j^*\) and \(u_{h,j}^*\) be the solution of the adjoint problem (2.9) and the corresponding finite element solution with the right hand side being \(\phi _j^*\), respectively. In view of Theorem 3.2 and (3.11), we only need to estimate the first term of (3.14).

\(\square \)

As a consequence, we have the following convergence result on the Steklov eigenvalues.

Theorem 3.4

Let \(\lambda \) be a Steklov eigenvalue with multiplicity m and \(\lambda _h^j, j=1,\ldots ,m\) be the m discrete eigenvalues of (3.1) approximating \(\lambda \). Then there exists a constant C, independent of h, such that

4 Spectral Indicator Method

When n(x) is complex, the Steklov eigenvalue problem is non-selfadjoint. The above finite element method leads to a non-Hermitian matrix eigenvalue problem. Due to the lack of a priori spectral information, classical methods might not work effectively. To this end, we extend the spectral indicator method RIM, which was proposed recently in [20] (see also [21]) for the non-selfadjoint transmission eigenvalue problem [15, 32], to compute (complex) Steklov eigenvalues in a given region on the complex plane \(\mathbb C\).

From the finite element approximation, \(T_h: V^B_h \rightarrow V^B_h\) has the following matrix form, which is also denoted by \(T_h\),

where \(I_h\) corresponds the restriction of a function in \(V_h\) onto \(V^B_h\). \(T_h\) is an \(M\times M\) matrix where M is the number of vertices on \(\partial \Omega \). Clearly, \(M \ll N\) and the following problem is much smaller than (4.2)

Unfortunately, one needs to invert the \(N \times N\) matrix \((G-k^2 M_n)\) and the cost is prohibitive.

In the following, we use the matrix form for (3.1) given by

where G is the stiffness matrix, \(M_n\) is the mass matrix, \(M_{\partial \Omega }\) is the mass matrix on \(\partial \Omega \).

We first introduce RIM proposed in [20] for the generalized eigenvalue problem

where \(A=(G-k^2 M_n)\) and \(B = -M_{\partial \Omega }\).

Let \(S \subset {\mathbb {C}}\) be a simply connect domain and \(\Gamma =\partial S\). The problem of interest is to compute all eigenvalues of (4.3) in S. Let \({{\varvec{g}}}\) be a random vector. From the previous section, the spectral projection of \({{\varvec{g}}}\) is defined as

The idea behind RIM is very simple. The spectral projection \(E {{\varvec{g}}}\) can be used to define an indicator \(\delta _S\), which decides if there exist eigenvalues in S or not. If there are no eigenvalues inside \(\Gamma \), \(|E {{\varvec{g}}}| = 0\). Otherwise, if there exist m eigenvalues \(\lambda _j, j = 1, \ldots , m\), \(|E {{\varvec{g}}}| \ne 0\).

Without loss of generality, let S be a square. \(E{{\varvec{g}}}\) can be approximated using a quadrature

where \(\omega _j\)’s are quadrature weights and \({{\varvec{x}}}_j\)’s are the solutions of the linear systems

Recall that if there is no eigenvalue inside \(\Gamma \), then \(E{{\varvec{g}}} = \mathbf{0}\) for all \({{\varvec{g}}} \in \mathbb C^n\). Hence \(|E{{\varvec{g}}}|\) can be used as an indicator of S. However, in practice, it is difficult to distinguish between \(|E{{\varvec{g}}}| \ne 0\) and \(|E{{\varvec{g}}}|= 0\). The solution in [20] is to normalize \(E{{\varvec{g}}}\) and project it again. The indicator \(\delta _S\) is set to be

Since we use numerical quadratures, \(\delta _S \approx 1\) if there exist eigenvalues in S. In this case, S is divided into sub-regions and indicators for these sub-regions are computed. The procedure continues until the size of the region is smaller than a specified precision \(d_0\) (e.g., \(d_0 = 10^{-9}\)). Then the centers of the regions are the approximations of eigenvalues.

According to (4.6), \(\delta _S = 1\) if there exists at least one eigenvalue in S and \(\delta _S = 0\) if there is no eigenvalue in S. Since only “yes” (\(\delta _S = 1\)) or “no” (\(\delta _S = 0\)) is needed in the algorithm and quadrature is used to evaluate \(E{{\varvec{g}}}\), it is natural to use a threshold \(\delta _0\) to distiguish “yes” and “no”. Let \({{\varvec{g}}}\) be a random vector and \(\delta _0, 0< \delta _0 < 1\), be a threshold value. The following is the basic algorithm for RIM.

-

RIM\((A, B, S, d_0, \delta _0, {{\varvec{g}}})\)

-

Input: matrices A, B, region S, precision \(d_0\), threshold \(\delta _0\), random vector \({{\varvec{g}}}\).

-

Output: generalized eigenvalue(s) \(\lambda \) inside S

-

If \(\delta _S < \delta _0\), then exit.

-

Otherwise, compute the size h(S) of S.

-

If \(h(S) > d_0 \),

-

partition S into subregions \(S_j, j=1, \ldots J\).

-

for \(j=1: J\)

-

\(\qquad RIM(A, B, S_j, d_0, \delta _0, {{\varvec{g}}})\).

-

end

-

-

If \(h(S) \le d_0\),

-

set \(\lambda \) to be the center of S.

-

output \(\lambda \) and exit.

-

-

The computational cost of RIM mainly comes from solving the linear system (4.5) at each quadrature point. Note that these matrices are \(N \times N\), where N is the number of vertices of the triangular mesh if the linear Lagrange element is used. Furthermore, for robustness, the strategy of RIM in [20] selects a small threshold \(\delta _0=0.1\), i.e., S contains eigenvalue(s) whenever \(\delta _S > \delta _0\). This choice of threshold for selecting a region systematically leans towards further investigation of regions that may potentially contain eigenvalues. Such a strategy leads to more linear systems.

Since both A and B are large and sparse, to employ RIM in a more efficient way, we resort to a new version of RIM using the Krylov subspace and Cayley transformation [21]. Assume that \(\sigma \) is not an eigenvalue. Multiplying both sides of (4.5) with \((A- \sigma B)^{-1}\), one gets that

Let \(M=(A- \sigma B)^{-1}B\) and \({{\varvec{b}}}=(A- \sigma B)^{-1}{{\varvec{g}}}\). Then (4.5) becomes

Given a matrix M, a vector \({{\varvec{b}}}\), and a non-negative integer m, the Krylov subspace is defined as

which processes the shift-invariant property

where a and b are two scalars. Consequently, \(K_{m}(I+(\sigma -z_j)M; {{\varvec{b}}})=K_{m}(M; {{\varvec{b}}})\).

The idea is to built one Krylov subspace \(K_{m}(M; {{\varvec{b}}})\) for a shift \(\sigma \) to solve (4.5) for as many \(z_j\)’s as possible. As a result, one expects that Krylov subspaces for only a few shifts are needed to solve (4.5) for all quadrature points \(z_j\)’s. We refer the readers to [21] for more details. We simply denote this version of spectral indicator method by SIM in the rest of the paper.

5 Numerical Examples

We present some numerical results in this section. For all examples, we choose \(k=1\). Consider three domains: \(\Omega _1\) is the unit disk, \(\Omega _2\) is the square whose vertices are

and \(\Omega _3\) is an L-shaped domain given by

For the disk with radius R and constant index of refraction n, separation of variables in polar coordinates can be used to obtain exact Steklov eigenvalues. Since u is the solution of the Helmholtz equation (2.1a), it has the expansion

where m’s are integers and \(J_{|m|}\) denotes the Bessel function of order |m|. By the boundary condition (2.1b), the coefficients \(a_m\) satisfy

i.e.,

If \(\lambda \) is a Steklov eigenvalue, there exists at least one m such that \(a_m \ne 0\). Then from (5.2), \(\lambda \) must satisfy

Therefore, the Steklov eigenvalues are given by

On the other hand, it is clear that all \(\lambda _m=-\frac{k\sqrt{n} J_{m}'(k\sqrt{n}R)}{J_{m}(k\sqrt{n}R)},\ m=0, 1, 2, \ldots ,\) are Steklov eigenvalues since \(J_{m}(k\sqrt{n}r)e^{im\theta }\) are non-trivial solutions of the Steklov eigenvalue problem.

From the above discussion, for the unit disk, Steklov eigenvalues are given by

In Fig. 1, we show \(\lambda _m\) against the index of refraction n for \(m=0, 1, 2\).

Using (5.3), when \(n=4\) the 6 largest Steklov eigenvalues are

and when \(n=4+4i\) the 4 complex Steklov eigenvalues with largest imaginary parts are

5.1 Selfadjoint Cases

When the index of refraction n(x) is real, the problem is selfadjoint and all Steklov eigenvalues are real. We compute the six largest Steklov eigenvalues for \(n(x) = 4\) on a series of uniformly refined meshes for each domain. The results are shown in Tables 1, 2, and 3. The mesh sizes are denoted by h. The values are consistent with those in [12].

Note that for the unit disk, the first 3 eigenvalues are given by (5.3) for \(m=0, 1, 2\). The values of the columns 2, 3, 4 in Table 1 approximate the intersections of \(\lambda _m, m=0, 1, 2\) and \(n=4\) in Fig. 1.

In Fig. 2, we show the convergence rates of Steklov eigenvalues of three domains. Since we use the linear Lagrange finite element, the second order convergence is achieved for the unit circle and the square. For the L-shaped domain, which is non-convex, the convergence rate of the second Steklov eigenvalue is lower than 2, while the other 5 eigenvalues have second order convergence.

Note that the convergence rate of the eigenvalues relates to the regularity of the associated eigenfunctions. The result in Fig. 2 implies that the eigenfunction associated with the second eigenvalues does not belong to \(H^2(\Omega )\).

5.2 Non-selfadjoint Cases

When n(x) is complex, the solution operator is non-selfadjoint. Consequently, we end up with non-Hermitian matrix eigenvalue problem. Computation of complex eigenvalues of non-Hermitian matrices are challenging, in particular, when there is no a priori spectral information on the number and distribution of eigenvalues. To this end, we use the new spectral indicator method introduced in the previous section to compute Steklov eigenvalues. For all examples, we take \(n(x) = 4+4i\).

The left picture of Fig. 3 shows the distribution of Steklov eigenvalues for the unit disk on the complex plane. These are eigenvalues computed using Matlab ‘eig’ for the non-Hermitian matrix (\(2097 \times 2097\)) resulting from the finite element method. The mesh size is \(h \approx 0.0613\). Note that ‘eig’ is a direct eigensolver and not suitable for larger matrices.

Since we are interested in eigenvalues close to the origin, we choose the search region S on the complex plane to be the square \([-3, 0.5] \times [0, 3.5]\). The right picture of Fig. 3 shows how SIM explores S and finds the eigenvalues inside S.

The computed complex eigenvalues for the three domains are shown in Tables 4, 5, and 6, respectively. We arrange the eigenvalues according to the decreasing order of their real parts. Again, these values are consistent with the values given in [12], which are reconstructed by some inverse algorithm using scattering data.

In Fig. 4, we show the convergence rates of complex Steklov eigenvalues. The second order convergence is achieved for the unit circle and square. Similar to real n(x), the second eigenvalue of the L-shaped domain has lower convergence rate indicating that the associated eigenfunction has lower regularity.

For the last example, the index of refraction is a function \(n(x)=|x|+4+|x|^2i\). The eigenvalues are shown in Table 7, which are computed with the mesh sizes \(h \approx 0.2341\) for the circle, \(h \approx 0.2441\) for the square, and \(h \approx 0.2383\) for the L-shaped domain, respectively. Since n(x) is a smooth function, the convergence rates are similar to the above constant cases and are thus omitted.

6 Conclusions and Future Work

In this paper, we propose a finite element method of a non-selfadjoint Steklov eigenvalue problem arising from the inverse scattering theory. The current paper contains both convergence theory and numerical examples. An earlier paper by Bramble and Osborn considered a similar but different non-selfadjoint Steklov eigenvalue problem [10]. The second order non-selfadjoint operator is assumed to be uniformly elliptic and no numerical results were reported therein.

The convergence of Lagrange finite elements is proved using the spectral perturbation theory for compact operators. Due to the fact that the problem is non-selfadjoint and no a priori spectral information is available, the recently developed spectral indicator method RIM-C is considered for the resulting non-Hermitian matrix eigenvalue problems.

Non-selfadjoint Steklov eigenvalue problems have many important applications. Numerical computation of these problems is challenging. The problem considered in this paper is related to the Helmholtz equation. Similar problems exist for the Maxwell equation and elasticity equation. In future, we plan to extend the theory and algorithm to these problems.

The current spectral indicator method cannot compute the multiplicity and the eigenspaces. However, it can be done by adding some functions. For example, one can choose a small circle centered at an computed eigenvalue with no other eigenvalues inside. Accurate computation of the spectral projection of several random vectors would lead to the associated eigenspace, which also provides the multiplicity of the eigenvalue [29, 31]. This will be considered in the future version of the method. Note that for the inverse problems considered in [12, 33], the multiplicity is not requried.

References

Armentano, M.G., Padra, C.: A posteriori error estimates for the Steklov eigenvalue problem. Appl. Numer. Math. 58(5), 593–601 (2008)

An, J., Bi, H., Luo, Z.: A highly efficient spectral-Galerkin method based on tensor product for fourth-order Steklov equation with boundary eigenvalue. J. Inequal. Appl. 12, 211 (2016)

Andreev, A.B., Todorov, T.D.: Isoparametric finite-element approximation of a Steklov eigenvalue problem. IMA J. Numer. Anal. 24(2), 309–322 (2004)

Babuška, I., Osborn, J. In: Ciarlet, P.G., Lions, J.L. (eds.) Eigenvalue Problems, Handbook of Numerical Analysis, vol. II, Finite Element Methods (Part 1). Elsevier Science Publishers B.V., North-Holland (1991)

Bai, Z., Demmel, J., Dongarra, J., Ruhe, A., van der Vorst, H. (eds.): Templates for the Solution of Algebraic Eigenvalue Problems: a Practical Guide. Society for Industrial and Applied Mathematics, Philadelphia (2000)

Beyn, W.J.: An integral method for solving nonlinear eigenvalue problems. Linear Algebr. Appl. 436(10), 3839–3863 (2012)

Bi, H., Li, H., Yang, Y.: An adaptive algorithm based on the shifted inverse iteration for the Steklov eigenvalue problem. Appl. Numer. Math. 105, 64–81 (2016)

Bi, H., Li, Z., Yang, Y.: Local and parallel finite element algorithms for the Steklov eigenvalue problem. Numer. Methods Partial Differ. Equ. 32(2), 399–417 (2016)

Boffi, D.: Finite element approximation of eigenvalue problems. Acta Numer. 19, 1–120 (2010)

Bramble, J.H., Osborn, J.E.: Approximation of Steklov eigenvalues of non-selfadjoint second order elliptic operators. In: The Mathematical Foundations of the Finite Element Method with Applications to Partial Differential Equations Proceedings Symposyium, University of Maryland, Baltimore, MD, pp. 387–408. Academic Press, New York (1972)

Brenner, S.C., Scott, L.R.: The mathematical theory of finite element methods. In: Texts in Applied Mathematics, 3rd edn. vol. 15. Springer, New York (2008)

Cakoni, F., Colton, D., Meng, S., Monk, P.: Stekloff eigenvalues in inverse scattering. SIAM J. Appl. Math. 76(4), 1737–1763 (2016)

Canavati, J.A., Minzoni, A.A.: A discontinuous Steklov problem with an application to water waves. J. Math. Anal. Appl. 69(2), 540–558 (1979)

Cao, L., Zhang, L., Allegretto, W., Lin, Y.: Multiscale asymptotic method for Steklov eigenvalue equations in composite media. SIAM J. Numer. Anal. 51(1), 273–296 (2013)

Colton, D., Monk, P., Sun, J.: Analytical and computational methods for transmission eigenvalues. Inverse Probl. 26(4), 045011 (2010)

Dello Russo, A., Alonso, A.E.: A posteriori error estimates for nonconforming approximations of Steklov eigenvalue problems. Comput. Math. Appl. 62(11), 4100–4117 (2011)

Grisvard, P.: Elliptic Problems in Non Smooth Domains. Pitman, Boston (1985)

Han, X., Li, Y., Xie, H.: A multilevel correction method for Steklov eigenvalue problem by nonconforming finite element methods. Numer. Math. Theory Methods Appl. 8(3), 383–405 (2015)

Hsiao, G.C., Wendland, W.L.: Boundary integral equations. In: Applied Mathematical Sciences, vol. 164. Springer, Berlin (2008)

Huang, R., Struthers, A., Sun, J., Zhang, R.: Recursive integral method for transmission eigenvalues. J. Comput. Phys. 327, 830–840 (2016)

Huang, R., Sun, J., Yang, C.: Recursive integral method with Cayley transformation. Numer. Linear Algebr. Appl. 25(6), (2018). https://doi.org/10.1002/nla.2199

Kato, T.: Perturbation Theory of Linear Operators, Classics in Mathematics. Springer, Berlin (1995)

Kumar, P., Kumar, M.: Simulation of a nonlinear Steklov eigenvalue problem using finite-element approximation. Comput. Math. Model. 21(1), 109–116 (2010)

Kuznetsov, N., Kulczycki, T., Kwaśnicki, M., Nazarov, A., Poborchi, S., Polterovich, I., Siudeja, B.: The legacy of Vladimir Andreevich Steklov. Not. Am. Math. Soc. 61(1), 9–22 (2014)

Li, Q., Lin, Q., Xie, H.: Nonconforming finite element approximations of the Steklov eigenvalue problem and its lower bound approximations. Appl. Math. 58(2), 129–151 (2013)

Mora, D., Rivera, G., Rodríguez, R.: A virtual element method for the Steklov eigenvalue problem. Math. Models Methods Appl. Sci. 25(8), 1421–1445 (2015)

Osborn, J.: Spectral approximation for compact operators. Math. Comp. 29(131), 712–725 (1975)

Peng, Z., Bi, H., Li, H., Yang, Y.: A multilevel correction method for convection–diffusion eigenvalue problems. Math. Probl. Eng. 904347 (2015)

Polizzi, E.: Density-matrix-based algorithms for solving eigenvalue problems. Phys. Rev. B 79, 115112 (2009)

Saad, Y.: Numerical Methods for Large Eigenvalue Problems, Classics in Applied Mathematics, vol. 66. SIAM, Philadelphia (2011)

Sakurai, T., Sugiura, H.: A projection method for generalized eigenvalue problems using numerical integration. J. Comput. Appl. Math. 159(1), 119–128 (2003)

Sun, J.: Iterative methods for transmission eigenvalues. SIAM J. Numer. Anal. 49(5), 1860–1874 (2011)

Sun, J.: Estimation of transmission eigenvalues and the index of refraction from Cauchy data. Inverse Probl. 27, 015009 (2011)

Sun, J., Zhou, A.: Finite Element Methods for Eigenvalue Problems. CRC Press, Taylor & Francis Group, Boca Raton (2016)

Xie, H.: A type of multilevel method for the Steklov eigenvalue problem. IMA J. Numer. Anal. 34, 592–608 (2014)

Xie, H., Wu, X.: A multilevel correction method for interior transmission eigenvalue problem. J. Sci. Comput. 72, 586–604 (2017)

Xie, H., Zhang, Z.: A Multilevel Correction Scheme for Nonsymmetric Eigenvalue Problems by Finite Element Methods. arXiv:1505.06288

Yang, Y., Li, Q., Li, S.: Nonconforming finite element approximation of the Steklov eigenvalue problem. Appl. Numer. Math. 59, 2388–2401 (2009)

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

The research of J. Liu was supported by the Guangdong Natural Science Foundation of China (2016A030313074) and the NNSF of China under Grant 11801218. The research of J. Sun was supported in part by NSF Grant DMS-1521555.

Rights and permissions

About this article

Cite this article

Liu, J., Sun, J. & Turner, T. Spectral Indicator Method for a Non-selfadjoint Steklov Eigenvalue Problem. J Sci Comput 79, 1814–1831 (2019). https://doi.org/10.1007/s10915-019-00913-6

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10915-019-00913-6