Abstract

We present two limit theorems for the zero-range process with nonlocal diffusion on inhomogeneous networks. The deterministic model is governed by a reaction–diffusion equation in which the Laplacian is replaced by an integral operator in space. By constructing a reproducing kernel Hilbert space that accounts for the inhomogeneity of the network structure, we prove that the law of large numbers and the central limit theorem hold for our models. Furthermore, for a special class of kernels, we show that the fluctuation limit obtained in the central limit theorem has continuous sample paths.



1 Introduction

The continuum limit is a deterministic or stochastic differential equation obtained as the limit of a difference equation, modeled by an interacting particle system, as the network size tends to infinity. Such limits have been used to understand the dynamics of large networks, for example, questions of existence and stability. Many studies have investigated different models and their continuum limits, such as hydrodynamic and high-density limits. The main difference between the two lies in which parameters are rescaled: the hydrodynamic limit rescales time and space, whereas the high-density limit rescales time, space, and the initial number of particles per site [8]. Although the high-density limit has interesting characteristics, it has been studied less often than the hydrodynamic limit.

To the best of our knowledge, [18, 19] were the first works to investigate the high-density limit of Markov chains approximating ordinary differential equations. Arnold and Theodosopulu [1] considered a stochastic model, a space–time Markov process, of chemical reactions with diffusion. For a network of size N, particles in their model perform simple random walks with jump rate proportional to \(N^{2}\), moving only to neighboring sites (without self-loops), and the number of particles at each site is unbounded. This process is called the zero-range process. They proved a high-density limit, that is, a law of large numbers, for this model and showed that the continuum limit is governed by a standard heat equation:

In [4,5,6, 15, 17], the central limit theorem and the law of large numbers were investigated in various settings, specifically for different boundary conditions and reaction terms. Related results have been obtained for equations such as (1.1), for parabolic partial differential equations [16], and for Lotka–Volterra systems [26].

Recently, discretized models of nonlocal diffusion or interaction, which represent phenomena that cannot be captured by local interactions alone, have attracted attention, and many researchers have developed approximation theories that reveal their continuum limits. For instance, [11] considered a random graph \(\Gamma ^{N}=(V(\Gamma ^{N}),E(\Gamma ^{N}))\), where \(V(\Gamma ^{N})\) and \(E(\Gamma ^{N})\) denote the sets of nodes and edges, respectively. The discrete model on \(\Gamma ^{N}\) is given, for \({i}\in {V(\Gamma ^{N})}\), by

and it was shown that the continuum limit of (1.2) is governed by

where \(W:[0,1]^{2}\rightarrow [0,1]\) is the graphon: a measurable function representing the limiting behavior of \(\Gamma _{N}\) (see [21]).

In [27], we attempted to determine the high-density limit of the nonlocal diffusion model, and two mathematical models of nonlocal diffusion on homogeneous networks were considered. First, the deterministic model u(t, x) was given by the following integro-differential equation proposed in [9]:

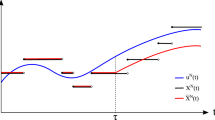

where \(R(\cdot )\) is the reaction term, given by a first-degree polynomial. Second, the stochastic model \(X^{N}(t,x)\) was scaled by a parameter \(l>0\) proportional to the initial average number of particles per site. It was constructed from a simple random walk on the discrete torus \({\mathbb {Z}}/N{\mathbb {Z}}\) that can jump to any site, with jump rate proportional to \(N^{-1}\). Adopting the methods of [3], we compared these models and proved that the law of large numbers and a weak convergence theorem hold for our particle model. To show weak convergence in the \(L^{2}\) framework, we considered the rescaled difference \(\sqrt{l}(X^{N}(t)-u(t))\) and proved only that it converges weakly to a stochastic process that is a mild solution of a stochastic differential equation on the Skorokhod space. In other words, we could not show that it converges weakly to an Ornstein–Uhlenbeck process. For this reason, we called the result a weak convergence theorem rather than a central limit theorem.

The aims of this study are as follows: first, we extend the analysis to a nonlocal diffusion model for inhomogeneous networks. To include the structure of networks, we use the measurable function \(J(x,y):[0,1]\times [0,1]\rightarrow {\mathbb {R}}\) as the distribution of jumps from location x to y. Therefore, the deterministic model is governed by

where \(R(\cdot )\) is as above. By an appropriate discretization of J, we also construct a stochastic model on an inhomogeneous network. Second, we reveal more detailed properties of the limits by examining whether the central limit theorem holds for our models. Our methods build on [3, 4], but there are two main differences between those works and ours. First, in [3, 4], the two limit theorems were considered on Sobolev spaces because of the regularity arguments associated with the Laplacian. Since we consider nonlocal integral operators instead, we must construct a different function space. To this end, we use the reproducing kernel Hilbert space (RKHS) \({\mathscr {H}}_{J}\) determined by the network structure, and we denote by \({\mathscr {H}}_{-J}\) the dual space of \({\mathscr {H}}_{J}\) with respect to the usual \(L^{2}\) inner product. Second, to prove the central limit theorem, we need a convergence rate for the semigroups. In [3, 4], this was obtained from the spectral decomposition of the Laplacian and the discrete Laplacian, using the convergence of their eigenfunctions and eigenvalues. In this study, however, explicit forms of the eigenfunctions and eigenvalues of the integral and summation operators are not available, so we instead use the \(\frac{1}{2}\)-Hölder regularity of functions in \({\mathscr {H}}_{J}\), which follows from the Lipschitz continuity of the kernel J. Using these spaces and techniques, we prove both the law of large numbers and the central limit theorem. Specifically, we prove that the difference \(X^{N}(t)-u(t)\) converges to 0 in probability in \({\mathscr {H}}_{-J}\) and that the rescaled difference \(\sqrt{Nl}(X^{N}(t)-u(t))\) converges in distribution to the Ornstein–Uhlenbeck process U(t) on \(\mathbb {D}_{{\mathscr {H}}_{-J}}[0,T]\) equipped with the Skorokhod topology.
Furthermore, assuming \({\int _{0}^{1}J(x,y)dy}=1\) for every \({x}\in {[0,1]}\), we prove that U(t) has continuous sample paths. However, because \(L^{2}([0,1])\) is not an RKHS and therefore cannot be realized as a space \({\mathscr {H}}_{J}\) (see Remark 2.1), the central limit theorem in the \(L^{2}\) framework remains an open problem.

The remainder of this paper is organized as follows. In Sect. 2, we provide the definition and some properties of the RKHS \({\mathscr {H}}_{J}\) with kernel J. In Sect. 3, we introduce the deterministic model of nonlocal diffusion on inhomogeneous networks, which is governed by Equation (1.3) with periodic boundary conditions. In Sect. 4, we construct a stochastic model of nonlocal diffusion on a discrete torus \({\mathbb {Z}}/N{\mathbb {Z}}\). Finally, in Sects. 5 and 6, we prove the law of large numbers and the central limit theorem.

2 RKHS

In this section, we provide the definition and some well-known results for RKHSs and construct an RKHS using the eigenfunctions of the integral operator.

Definition 2.1

Let H be a Hilbert space of real-valued functions on [0, 1] with an inner product \(\langle \cdot , \cdot \rangle _{H}\).

(i) A function \(J:{[0,1]^{2}}\rightarrow {\mathbb {R}}\) is a reproducing kernel of H if \({J(\cdot ,x)}\in {H}\) for all \({x}\in {[0,1]}\) and the reproducing property

$$\begin{aligned} f(x)=\langle f, J(\cdot ,x) \rangle _{H} \end{aligned}$$

holds for all \({f}\in {H}\) and all \({x}\in {[0,1]}\).

(ii) The space H is an RKHS over [0, 1] if, for every \({x}\in {[0,1]}\), the Dirac functional \(\delta _{x}:H\rightarrow {\mathbb {R}}\) defined by

$$\begin{aligned} \delta _{x}(f):=f(x),\quad {f}\in {H}, \end{aligned}$$

is continuous.

Remark 2.1

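Note that \(L^{2}([0,1])\) is not an RKHS: its elements are equivalence classes of functions defined only almost everywhere, so the point evaluation \(\delta _{x}\) is not a well-defined, let alone continuous, functional on \(L^{2}([0,1])\).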

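Next, we define the integral operator \(T_{J}\) on \(L^{2}([0,1])\) by

$$\begin{aligned} (T_{J}f)(x)=\int _{0}^{1}J(x,y)f(y)dy,\quad {f}\in {L^{2}([0,1])}. \end{aligned}$$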

The following fundamental results allow us to construct a separable RKHS associated with the operator \(T_{J}\) and the kernel J:

Theorem 2.1

(Theorems 4.20 and 4.21 in [25]) Let \(J:{[0,1]}^{2}\rightarrow {\mathbb {R}}\) be symmetric and positive definite; that is, for all \({n}\in {{\mathbb {N}}}\), all \({\alpha _{1},\ldots ,\alpha _{n}}\in {{\mathbb {R}}}\), and all \({x_{1},\ldots ,x_{n}}\in {[0,1]}\),

$$\begin{aligned} \sum _{i=1}^{n}\sum _{j=1}^{n}\alpha _{i}\alpha _{j}J(x_{i},x_{j})\ge 0. \end{aligned}$$

Then, there exists a unique RKHS H whose reproducing kernel is J. Conversely, every RKHS H has a unique reproducing kernel.

Theorem 2.2

(Theorem 3.a.1 in [13]) Let \({J}\in {L^{\infty }([0,1]^{2})}\) be a kernel such that \(T_{J}:{L^{2}([0,1])}\rightarrow {L^{2}([0,1])}\) is positive. Then, the eigenvalues \(\{a_{n}\}\) of \(T_{J}\) are absolutely summable. The eigenfunctions \({e_{n}}\in {L^{2}([0,1])}\) of \(T_{J}\), which form a complete orthonormal basis of \(L^{2}([0,1])\), belong to \(L^{\infty }([0,1])\) with \(\sup _{n}\Vert e_{n}\Vert _{\infty }<\infty \), and

$$\begin{aligned} J(x,y)=\sum _{n}a_{n}e_{n}(x)e_{n}(y), \qquad (2.1) \end{aligned}$$

where the series converges absolutely and uniformly. Representation (2.1) is called a Mercer expansion.

Theorem 2.3

(Lemma 4.33 in [25]) Let J be a continuous kernel. Then, the RKHS with kernel J is separable.

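Using the above results, the RKHS with integral kernel J satisfying the assumptions of Theorems 2.1 and 2.2 is given by

$$\begin{aligned} {\mathscr {H}}_{J}=\Bigl \{{f}\in {L^{2}([0,1])} \Bigm | \sum _{n}a_{n}^{-1}\langle f, e_{n} \rangle ^{2}<\infty \Bigr \}, \qquad (2.2) \end{aligned}$$

where the inner product of \({\mathscr {H}}_{J}\) is

$$\begin{aligned} \langle f, g \rangle _{{\mathscr {H}}_{J}}=\sum _{n}a_{n}^{-1}\langle f, e_{n} \rangle \langle g, e_{n} \rangle \end{aligned}$$

for \({f,g}\in {{\mathscr {H}}_{J}}\) (for details, see Chapter 2 in [24]). We now check the reproducing property. For \({f}\in {{\mathscr {H}}_{J}}\), (2.1) gives \(J(\cdot ,x)=\sum _{n}a_{n}e_{n}(x)e_{n}\), and hence

$$\begin{aligned} \langle f, J(\cdot ,x) \rangle _{{\mathscr {H}}_{J}}=\sum _{n}a_{n}^{-1}\langle f, e_{n} \rangle a_{n}e_{n}(x)=\sum _{n}\langle f, e_{n} \rangle e_{n}(x)=f(x). \end{aligned}$$

Furthermore, for \({f}\in {L^{2}([0,1])}\), let

$$\begin{aligned} \Vert f\Vert _{{\mathscr {H}}_{-J}}^{2}=\sum _{n}a_{n}\langle f, e_{n} \rangle ^{2}, \end{aligned}$$

and let \({\mathscr {H}}_{-J}\) be the completion of \(L^{2}([0,1])\) in the norm \(\Vert \cdot \Vert _{{\mathscr {H}}_{-J}}\). Note that \({\mathscr {H}}_{-J}\) is the dual space of \({\mathscr {H}}_{J}\) with respect to the usual \(L^{2}\) inner product.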
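The dual norm can be checked numerically. The following Python sketch assumes the Brownian motion kernel \(J(x,y)=\min \{x,y\}\) of Example 2.1, whose Mercer pairs are \(a_{n}=((n-\frac{1}{2})\pi )^{-2}\) and \(e_{n}(x)=\sqrt{2}\sin ((n-\frac{1}{2})\pi x)\), and the series norm \(\Vert f\Vert _{{\mathscr {H}}_{-J}}^{2}=\sum _{n}a_{n}\langle f, e_{n} \rangle ^{2}\). Because \(T_{J}e_{n}=a_{n}e_{n}\), the same quantity equals the quadratic form \(\langle T_{J}f, f \rangle \), so the two computations below should agree up to truncation and quadrature error. All function names and truncation levels are illustrative choices.

```python
import math


def bm_eigenpair(n):
    """Mercer pair (a_n, e_n) of the assumed kernel J(x, y) = min(x, y), n >= 1."""
    omega = (n - 0.5) * math.pi
    a_n = 1.0 / omega ** 2
    return a_n, (lambda x: math.sqrt(2.0) * math.sin(omega * x))


def l2_inner(f, g, m=2000):
    """Midpoint-rule approximation of the L^2([0,1]) inner product <f, g>."""
    h = 1.0 / m
    return h * sum(f((j + 0.5) * h) * g((j + 0.5) * h) for j in range(m))


def dual_norm_sq_series(f, n_terms=100, m=2000):
    """Truncated ||f||_{H_{-J}}^2 = sum_n a_n <f, e_n>^2."""
    total = 0.0
    for n in range(1, n_terms + 1):
        a_n, e_n = bm_eigenpair(n)
        total += a_n * l2_inner(f, e_n, m) ** 2
    return total


def dual_norm_sq_quadratic_form(f, m=400):
    """The same quantity as <T_J f, f> = int int min(x, y) f(x) f(y) dx dy."""
    h = 1.0 / m
    xs = [(j + 0.5) * h for j in range(m)]
    fx = [f(x) for x in xs]
    return h * h * sum(
        min(xs[i], xs[k]) * fx[i] * fx[k] for i in range(m) for k in range(m)
    )


if __name__ == "__main__":
    one = lambda x: 1.0
    # Both values should be close to the exact value 1/3.
    print(dual_norm_sq_series(one), dual_norm_sq_quadratic_form(one))
```

For \(f\equiv 1\) both routes approximate \(\int _{0}^{1}\int _{0}^{1}\min \{x,y\}dxdy=\frac{1}{3}\); the series route is the one that generalizes to kernels known only through their Mercer pairs.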

Remark 2.2

Note that functions in the RKHS \({\mathscr {H}}_{J}\) are \(\frac{1}{2}\)-Hölder continuous whenever the kernel J is Lipschitz continuous (see the proof of Lemma 6.6 (ii)).
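For the reader's convenience, the standard argument behind Remark 2.2 can be written out as follows. It assumes that J is symmetric and satisfies \(|J(x,y)-J(x',y')|\le L(|x-x'|+|y-y'|)\), where the Lipschitz constant L is notation introduced here, and it uses only the reproducing property together with \(\langle J(\cdot ,x), J(\cdot ,y) \rangle _{{\mathscr {H}}_{J}}=J(x,y)\). For \({h}\in {{\mathscr {H}}_{J}}\) and \({x,y}\in {[0,1]}\):

```latex
\begin{aligned}
|h(x)-h(y)|
  &= \bigl|\langle h, J(\cdot,x)-J(\cdot,y)\rangle_{\mathscr{H}_J}\bigr|
   \le \|h\|_{\mathscr{H}_J}\,\|J(\cdot,x)-J(\cdot,y)\|_{\mathscr{H}_J} \\
  &= \|h\|_{\mathscr{H}_J}\,\bigl(J(x,x)-2J(x,y)+J(y,y)\bigr)^{1/2} \\
  &\le \|h\|_{\mathscr{H}_J}\,\bigl(|J(x,x)-J(x,y)|+|J(y,y)-J(y,x)|\bigr)^{1/2}
   \le \sqrt{2L}\,\|h\|_{\mathscr{H}_J}\,|x-y|^{1/2}.
\end{aligned}
```

Symmetry of J is used twice: for the cross term in the squared norm and to split \(J(x,x)-2J(x,y)+J(y,y)\) into two increments, each of which is at most \(L|x-y|\).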

Example 2.1

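(Brownian motion kernel) Define the kernel \(J:{[0,1]}\times {[0,1]}\rightarrow [0,1]\) by

$$\begin{aligned} J(x,y)=\min \{x,y\}. \end{aligned}$$

Note that this kernel is symmetric and positive semi-definite. Its eigenfunctions and eigenvalues are given by

$$\begin{aligned} e_{n}(x)=\sqrt{2}\sin \Bigl (\bigl (n-\tfrac{1}{2}\bigr )\pi x\Bigr ),\quad a_{n}=\frac{1}{(n-\frac{1}{2})^{2}\pi ^{2}},\quad {n}\in {{\mathbb {N}}}, \end{aligned}$$

respectively. Furthermore, these give the Mercer expansion of J; that is,

$$\begin{aligned} \min \{x,y\}=\sum _{n=1}^{\infty }\frac{2\sin ((n-\frac{1}{2})\pi x)\sin ((n-\frac{1}{2})\pi y)}{(n-\frac{1}{2})^{2}\pi ^{2}}. \end{aligned}$$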
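As a quick sanity check of Example 2.1, assume \(J(x,y)=\min \{x,y\}\) with eigenpairs \(e_{n}(x)=\sqrt{2}\sin ((n-\frac{1}{2})\pi x)\) and \(a_{n}=((n-\frac{1}{2})\pi )^{-2}\). A truncated Mercer series should then reproduce \(\min \{x,y\}\), and the eigenvalues should sum to the trace \(\int _{0}^{1}J(x,x)dx=\frac{1}{2}\), which illustrates the summability of \(\{a_{n}\}\) in Theorem 2.2. The sketch below is illustrative only; the truncation levels are arbitrary.

```python
import math


def mercer_partial_sum(x, y, n_terms):
    """Truncated Mercer series of J(x, y) = min(x, y) on [0, 1]."""
    total = 0.0
    for n in range(1, n_terms + 1):
        omega = (n - 0.5) * math.pi
        # a_n * e_n(x) * e_n(y) = 2 sin(omega x) sin(omega y) / omega^2
        total += 2.0 * math.sin(omega * x) * math.sin(omega * y) / omega ** 2
    return total


def eigenvalue_partial_sum(n_terms):
    """Partial sum of a_n = ((n - 1/2) pi)^(-2); the full series equals 1/2."""
    return sum(1.0 / ((n - 0.5) * math.pi) ** 2 for n in range(1, n_terms + 1))


if __name__ == "__main__":
    for n_terms in (10, 100, 1000):
        approx = mercer_partial_sum(0.3, 0.7, n_terms)
        print(n_terms, approx, abs(approx - min(0.3, 0.7)))
    print(eigenvalue_partial_sum(100000))
```

Since \(a_{n}\sim (\pi n)^{-2}\), the tail after M terms is of order \(1/(\pi ^{2}M)\), so the printed errors shrink roughly like 1/M.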

3 Deterministic Model

Let \(R(x)=b(x)-d(x)\), where \(b(x)=b_{1}x+b_{0}\) and \(d(x)=d_{1}x\) with \({b_{0},b_{1},d_{1}}\ge {0}\), and assume that there is \(\rho >0\) such that \(R(x)<0\) for \(x>\rho \). We consider the following nonlocal reaction–diffusion equation:

where the kernel J is a nonnegative, symmetric, positive-definite, continuous function that can be interpreted as the distribution of jumps from location x to y. Thus, equation (3.1) can be regarded as describing nonlocal diffusion on inhomogeneous networks. By standard semigroup theory (see, e.g., Chapter 6 in [22]), for initial data with values in \([0,\rho )\), there exists a unique mild solution \({u(t,x)}\in {C\bigl ([0,T];C([0,1])\bigr )}\), and it satisfies \({0}\le {u(t,x)}<\rho \) for \({x}\in {[0,1]}\) and \({t}\in {[0,T]}\).
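Equation (3.1) itself is not reproduced above. Assuming that it reads \(\partial _{t}u=\int _{0}^{1}J(x,y)(u(t,y)-u(t,x))dy+R(u)\), which is consistent with the drift in Lemma 4.1 (a) and with the remark in Sect. 4 that \(I_{J}^{N}\) discretizes its first two terms, a minimal method-of-lines sketch replaces the integral by the midpoint rule and steps forward with explicit Euler. When \(\Delta t\,(\max _{x}\int _{0}^{1}J(x,y)dy+d_{1})<1\) the scheme is monotone, so for \(b_{1}<d_{1}\) it keeps values in \([0,\rho ]\) with \(\rho =b_{0}/(d_{1}-b_{1})\), the zero of R, mirroring the bound \(0\le u<\rho \). The function name and parameters are hypothetical choices for illustration.

```python
import math


def solve_nonlocal_rd(u0, J, b0, b1, d1, T=1.0, N=40, dt=2e-3):
    """Explicit Euler for du/dt = int J(x,y)(u(y)-u(x)) dy + b0 + (b1-d1) u.

    The integral is replaced by the midpoint rule on N cells; returns (xs, u(T)).
    """
    h = 1.0 / N
    xs = [(k + 0.5) * h for k in range(N)]
    weights = [[h * J(x, y) for y in xs] for x in xs]  # h * J(x_k, x_i)
    u = [u0(x) for x in xs]
    for _ in range(int(round(T / dt))):
        # The right-hand side uses the old u for every k (the list is built first).
        u = [
            u[k]
            + dt * (sum(weights[k][i] * (u[i] - u[k]) for i in range(N))
                    + b0 + (b1 - d1) * u[k])
            for k in range(N)
        ]
    return xs, u


if __name__ == "__main__":
    J = lambda x, y: 1.0 + x * y  # nonnegative, symmetric, positive semi-definite
    u0 = lambda x: 0.5 + 0.4 * math.sin(2 * math.pi * x)
    xs, u = solve_nonlocal_rd(u0, J, b0=1.0, b1=0.5, d1=1.5)
    print(min(u), max(u))  # here rho = 1
```

Explicit Euler is chosen only for transparency; the step-size condition above is what makes the discrete comparison argument work.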

4 Stochastic Model

Let \(H^{N}\) denote the space of real-valued step functions on [0, 1] that are constant on each interval \([kN^{-1},(k+1)N^{-1})\), \({0}\le {k}\le {N-1}\). For \({f}\in {H^{N}}\), we define the operator \(I_{J}^{N}\) by

where

Note that this operator is a spatial discretization of the first and second terms of Equation (3.1). To extend the domain of \(I_{J}^{N}\) to \(L^{2}([0,1])\), we define the projection \(P^{N}\) from \(L^{2}([0,1])\) onto \(H^{N}\) by

$$\begin{aligned} P^{N}f(x)=N\int _{kN^{-1}}^{(k+1)N^{-1}}f(y)dy,\quad {x}\in {[kN^{-1},(k+1)N^{-1})},\ {0}\le {k}\le {N-1}, \end{aligned}$$

and consider the operator \(I_{J}^{N}P^{N}\) instead of \(I_{J}^{N}\).
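The projection and the discrete operator can be sketched in a few lines. The sketch takes \(P^{N}\) to be the cell average and reads the form of \(I_{J}^{N}\) off the drift in Lemma 4.1 (a), namely \((I_{J}^{N}g)_{k}=\frac{1}{N}\sum _{i}J^{N}(iN^{-1},kN^{-1})(g_{i}-g_{k})\) on the kth cell. It further assumes, hypothetically, that \(J^{N}\) is simply J evaluated at the grid points, since the actual discretization of J is not reproduced above. For symmetric J the discrete operator conserves mass, \(\sum _{k}(I_{J}^{N}g)_{k}=0\), the discrete counterpart of the fact that jumps only move particles.

```python
import math


def project(f, N, m=32):
    """P^N f as cell averages over [k/N, (k+1)/N), via an m-point midpoint rule."""
    return [
        sum(f((k + (j + 0.5) / m) / N) for j in range(m)) / m
        for k in range(N)
    ]


def apply_discrete_operator(J, g):
    """(I_J^N g)_k = (1/N) sum_i J(i/N, k/N) (g_i - g_k) for the step function g."""
    N = len(g)
    return [
        sum(J(i / N, k / N) * (g[i] - g[k]) for i in range(N)) / N
        for k in range(N)
    ]


if __name__ == "__main__":
    J = lambda x, y: 1.0 + x * y
    g = project(lambda x: math.sin(2 * math.pi * x), 8)
    out = apply_discrete_operator(J, g)
    print(out, sum(out))  # the sum vanishes up to rounding
```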

Let \(n_{k}(t)\) be the number of particles at the kth site of \({{\mathbb {Z}}}/N{{\mathbb {Z}}}\) at time t, and let \(\vec {n}(t) = (n_{0}(t),\ldots ,n_{N-1}(t))\) be the state of a multidimensional Markov chain at time t. Now, we define the stochastic analog as

which has the transition rates

for \({i}\in {\{0,1,\ldots ,k-1,k+1,\ldots ,N-1\}}\), where \(e_{k}\) is the kth unit vector on \({\mathbb {R}}^{N}\). Here \(n_{N}=n_{0}\) and \(n_{-1}=n_{N-1}\). We take n(t) to be a càdlàg process defined on some probability space.
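The transition rates themselves are not reproduced above. Reading them off from the compensators in Lemma 4.1 below, and assuming \(J^{N}\) is symmetric, one consistent choice is: site k sends a particle to site \(i\ne k\) at rate \(N^{-1}J^{N}(iN^{-1},kN^{-1})n_{k}\), a birth at k occurs at rate \(l\,b(n_{k}/l)=b_{1}n_{k}+b_{0}l\), and a death at rate \(l\,d(n_{k}/l)=d_{1}n_{k}\). The following Gillespie-type sketch simulates the chain under these inferred rates, again assuming \(J^{N}=J\) on the grid; it is an illustration, not the authors' code, and the function name is hypothetical.

```python
import random


def simulate(n0, J, l, b0, b1, d1, T, seed=0):
    """Gillespie simulation of the site counts n_k on Z/NZ up to time T."""
    rng = random.Random(seed)
    n = list(n0)
    N = len(n)
    t = 0.0
    while True:
        # Enumerate all events with positive rate: (rate, kind, site, target).
        events = []
        for k in range(N):
            for i in range(N):
                if i != k:
                    events.append((J(i / N, k / N) * n[k] / N, "jump", k, i))
            events.append((b1 * n[k] + b0 * l, "birth", k, k))
            events.append((d1 * n[k], "death", k, k))
        events = [ev for ev in events if ev[0] > 0.0]
        total = sum(ev[0] for ev in events)
        if total == 0.0:
            return n  # absorbing state: no event can occur
        t += rng.expovariate(total)  # exponential waiting time
        if t > T:
            return n
        # Choose an event with probability proportional to its rate.
        threshold = rng.random() * total
        chosen = events[-1]
        acc = 0.0
        for ev in events:
            acc += ev[0]
            if threshold < acc:
                chosen = ev
                break
        _, kind, k, i = chosen
        if kind == "jump":
            n[k] -= 1
            n[i] += 1
        elif kind == "birth":
            n[k] += 1
        else:
            n[k] -= 1
```

With \(b_{0}=b_{1}=d_{1}=0\) only jumps occur, so the total number of particles is conserved; this gives a simple check of the bookkeeping.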

Let \({\mathscr {F}}_{t}^{N}\) be the completion of the \(\sigma \)-algebra \(\sigma (\vec {n}(s);{s}\le {t})\), and let \(\varLambda n(t)=n(t)-n(t-)\) be the jump at time t. Suppose that \(\tau \) is an \({\mathscr {F}}_{t}^{N}\)-stopping time, such that

for all \(T>0\). Then, as in Lemma 2.2 of [3], we obtain the following lemma.

Lemma 4.1

The following are \({\mathscr {F}}_{t}^{N}\)-martingales:

(a) \(\displaystyle n_{k}({t}\wedge {\tau })-n_{k}(0)-{\int _{0}^{{t}\wedge {\tau }}\frac{1}{N}{\sum _{i=0}^{N-1}J^{N}(iN^{-1},kN^{-1})(n_{i}(s)-n_{k}(s))}ds}-\int _{0}^{{t}\wedge {\tau }} lR(n_{k}(s)l^{-1})ds\).

(b) \(\displaystyle {\sum _{{s}\le {{t}\wedge {\tau }}}(\varLambda n_{k}(s))^{2}}-{\int _{0}^{{t}\wedge {\tau }}\frac{1}{N}\sum _{\begin{array}{c} i=0\\ i\ne {k} \end{array}}^{N-1}J^{N}(iN^{-1},kN^{-1})(n_{i}(s)+n_{k}(s))ds}-\int _{0}^{{t}\wedge {\tau }} l|R|(n_{k}(s)l^{-1})ds\), where \(|R|(x)=b(x)+d(x)\).

(c) \(\displaystyle {\sum _{{s}\le {{t}\wedge {\tau }}}(\varLambda n_{k}(s))(\varLambda n_{i}(s))}+{\int _{0}^{{t}\wedge {\tau }}\frac{1}{N}J^{N}(iN^{-1},kN^{-1})(n_{i}(s)+n_{k}(s))ds}\) for all \({i}\ne {k}\).

By Lemma 4.1 (a) and the definition of \(X^{N}(t)\), we can write

where \(Z^{N}({t}\wedge {\tau })\) is an \(H^{N}\)-valued martingale. Furthermore, using Lemma 4.1, we can estimate the quadratic variation of the martingale \(Z^{N}\).

Lemma 4.2

For \({\xi }\in {H^{N}}\),

is a mean-zero martingale.

Proof

Note that the first term in the above formula simply counts the number of jump events between each pair of sites. Hence, the resulting terms involve the transition rate \(J^{N}(\cdot ,\cdot )\) itself rather than its square. The proof follows from a calculation similar to that of Lemma 3.2 in [27], and hence we omit it. \(\square \)

5 Law of Large Numbers

In this section, we consider the behavior of the difference between \(X^{N}\) and u. First, we set \({\overline{J}}=\Vert J(\cdot ,\cdot )\Vert _{L^{\infty }([0,1]^{2})}<\infty \). Under the assumptions on the kernel J made in Sect. 2, we can construct the separable RKHS \({\mathscr {H}}_{J}\) of the form (2.2). Furthermore, suppose that there is a constant a such that

By definition, we immediately obtain

for \({x}\in {[kN^{-1},(k+1)N^{-1})}\), and \({0}\le {k}\le {N-1}\). The following is the main result of this section:

Theorem 5.1

Assume that

(i) \(\Vert X^{N}(0)-u(0)\Vert _{{\mathscr {H}}_{-J}}\rightarrow 0\) in probability as \(N\rightarrow \infty \), and

(ii) \(l(N)\rightarrow \infty \) as \(N\rightarrow \infty \).

Then, for \(T>0\),

To prove this theorem, we first establish some auxiliary results.

Lemma 5.1

(i) For every positive function \({f}\in {{\mathscr {H}}_{-J}}\), there exists a constant \(C_{1}=C_{1}(J)>0\) such that

$$\begin{aligned} {\langle f, 1 \rangle }\le {C_{1}\Vert f\Vert _{{\mathscr {H}}_{-J}}}. \end{aligned}$$

(ii) For \({f}\in {{\mathscr {H}}_{-J}}\), there exists a constant \(C_{2}=C_{2}(J,T)\), independent of N, such that, for all \({t}\in {[0,T]}\),

$$\begin{aligned} {\Vert e^{I_{J}^{N}t}f\Vert _{{\mathscr {H}}_{-J}}}\le {C_{2}\Vert f\Vert _{{\mathscr {H}}_{-J}}}. \end{aligned}$$

Proof

For \({f}\in {{\mathscr {H}}_{-J}}\), by (5.1), we can see that

Next, we consider part (ii). Because both \(I_{J}^{N}\) and \(T_{J}\) are self-adjoint operators, we obtain

Note that for \({f}\in {{\mathscr {H}}_{-J}}\),

hence,

Therefore, applying Gronwall’s inequality gives

\(\square \)

Lemma 5.2

For a continuous function f,

Proof

Utilizing Hölder’s and Young’s inequalities, we have

where \(P^{N}\) is the projection into \(H^{N}\) defined in Sect. 4. By the continuity of f and J, we obtain

finishing the proof. \(\square \)
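The display of Lemma 5.2 is not reproduced above; its proof appears to compare the discrete operator applied to \(P^{N}f\) with the continuous nonlocal operator for continuous f. As a rough numerical illustration, and assuming that the continuous operator is \(I_{J}f(x)=\int _{0}^{1}J(x,y)(f(y)-f(x))dy\) and that \(J^{N}=J\) on the grid (both assumptions), the sketch below estimates \(\Vert I_{J}^{N}P^{N}f-I_{J}f\Vert _{2}\) by quadrature. For smooth J and f, the error should decrease roughly like \(N^{-1}\). All names are hypothetical.

```python
import math


def continuous_op(J, f, x, m=400):
    """Assumed I_J f(x) = int_0^1 J(x, y) (f(y) - f(x)) dy, midpoint rule."""
    h = 1.0 / m
    fx = f(x)
    return h * sum(J(x, (j + 0.5) * h) * (f((j + 0.5) * h) - fx) for j in range(m))


def cell_averages(f, N, m=16):
    """P^N f as cell averages over [k/N, (k+1)/N)."""
    return [sum(f((k + (j + 0.5) / m) / N) for j in range(m)) / m for k in range(N)]


def discrete_op_on_projection(J, cells, x):
    """(I_J^N P^N f)(x) for x in the kth cell, with J^N taken equal to J on the grid."""
    N = len(cells)
    k = min(int(x * N), N - 1)
    return sum(J(i / N, k / N) * (cells[i] - cells[k]) for i in range(N)) / N


def consistency_error(J, f, N, n_eval=200):
    """Midpoint-rule estimate of the L^2 norm of I_J^N P^N f - I_J f."""
    cells = cell_averages(f, N)
    h = 1.0 / n_eval
    pts = [(j + 0.5) * h for j in range(n_eval)]
    sq = sum(
        (discrete_op_on_projection(J, cells, x) - continuous_op(J, f, x)) ** 2
        for x in pts
    )
    return math.sqrt(h * sq)


if __name__ == "__main__":
    J = lambda x, y: 1.0 + x * y
    f = lambda x: math.sin(2 * math.pi * x)
    for N in (8, 16, 32, 64):
        print(N, consistency_error(J, f, N))
```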

Proof of Theorem 5.1

We fix \({\varepsilon }\in {(0,1]}\) and set the stopping time \(\tau \) as

Recall that \({0}\le {u(t,x)}<\rho \) for all \({t}\in {[0,T]}\) and \({x}\in {[0,1]}\). If \(t<\tau \), it is easy to prove that \(\left\| X^{N}(t)\right\| _{{\mathscr {H}}_{-J}}<{\overline{J}}\rho +1\). Furthermore,

for sufficiently large N. Thus, we get

Using the semigroups \(e^{I_{J}t}\) and \(e^{I_{J}^{N}t}\), we obtain

hence, by Lemma 5.1 and Gronwall’s inequality, we have

where

Based on assumption (i), Lemma 5.2, and the Trotter–Kato theorem, the first, third, and last terms of \(E_{N}\) converge to zero in the \({\mathscr {H}}_{-J}\) norm as \(N\rightarrow \infty \). Furthermore, because \(e^{I_{J}^{N}({t}\wedge {\tau }-s)}b_{0}=e^{I_{J}({t}\wedge {\tau }-s)}b_{0}\), the fourth term vanishes. Thus, it suffices to show that the stochastic integral term converges to zero in probability, that is,

By the maximal inequality related to the stochastic convolution-type integral (or the stochastic *-integral) of Theorem 1 in [14], for \(\delta >0\),

where \(C_{2}\) is a constant given by Lemma 5.1 (ii). From Lemmas 4.2 and 5.1 (i), we have

where we have used \({\sum _{n}a_{n}}<\infty \) in the last inequality. This completes the proof. \(\square \)

Remark 5.1

Note that Theorem 5.1 also holds when the reaction term R is a general Lipschitz continuous function with \(R(0)=b_{0}>0\). Indeed, by an estimate similar to that in the proof of Theorem 5.1, we have

where K is a Lipschitz constant of R, and

Because R has linear growth, we observe that

as \(N\rightarrow \infty \). The remaining terms go to zero by an argument similar to the one in Theorem 5.1, and hence we obtain the proof.

6 Central Limit Theorem

Let \(M^{N}(t)=\sqrt{Nl}Z^{N}({t}\wedge {\tau })\) and \(U^{N}(t)=\sqrt{Nl}(X^{N}(t)-u(t))\), where \(\tau \) is the stopping time defined in the proof of Theorem 5.1. We define two operators \({A}_{J}\) and \(A_{J}^{N}\) by \(A_{J}f=I_{J}f+(b_{1}-d_{1})f\) and \(A_{J}^{N}g=I_{J}^{N}g+(b_{1}-d_{1})g\) for \({f}\in {L^{2}([0,1])}\) and \({g}\in {H^{N}}\), respectively. Recall that, to guarantee the existence of the dynamics, we assume that \(R(x)<0\) for large x. Since \(b_{0}\ge 0\), this condition is equivalent to the dissipativity condition \(b_{1}<d_{1}\). From this, we can construct the semigroups \(e^{A_{J}t}\) and \(e^{A_{J}^{N}t}\). In this section, we further assume that the kernel J and the initial data u(0) are globally Lipschitz continuous on \([0,1]^{2}\) and [0, 1], respectively. The main result of this section is as follows:

Theorem 6.1

In addition to the assumptions of Theorem 5.1, we assume that

(a) \(l/N\rightarrow 0\), and

(b) \(U^{N}(0)\rightarrow U_{0}\) in distribution on \({\mathscr {H}}_{-J}\).

Then, there exists a unique \({\mathscr {H}}_{-J}\)-valued Gaussian process M(t) such that \(U^{N}(t) \rightarrow U(t)\) in distribution on \({\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\), where

is the mild solution of the stochastic differential equation

Remark 6.1

If the initial positions \(X^{N}(0)\) of the particles are assumed to be i.i.d., then assumption (b) holds automatically by the classical central limit theorem.

We first sketch the proof. Using the semigroups, we obtain

and we show that the first and second terms on the right-hand side of the above equality converge to the right-hand side of (6.1), while the other terms converge to 0 as \(N\rightarrow \infty \). The convergence of the last three terms follows relatively easily from the semigroup convergence results and the law of large numbers. The main task is therefore to determine the limiting behavior of the stochastic fluctuation \(M^{N}(t)\). Recall that the projection \(P^{N}\) onto \(H^{N}\) is defined by

for any measurable function f, \({x}\in {[kN^{-1},(k+1)N^{-1})}\), and \({0}\le {k}\le {N-1}\). For simplicity, we abbreviate \(P^{N}f\) as \(f^{N}\) for the remainder of this paper. Let \(\lambda ^{N}(X^{N})\) be the waiting-time parameter, and let \(\sigma ^{N}(X^{N},dw)\) be the jump distribution of \(X^{N}\). Then, by Lemma 2.6 in [19], for \({\varphi }\in {{\mathscr {H}}_{J}}\), the variance of \(\langle M^{N}(t), \varphi \rangle \) is given by

Lemma 6.1

For every \(\varepsilon >0\) and \({t}\ge {0}\), there exists a compact set \({K}\subset {{\mathscr {H}}_{-J}}\) such that \({P({M^{N}(t)}\in {K^{\varepsilon }})}\ge {1-\varepsilon }\) for all N, where \(K^{\varepsilon }=\bigl \{{f}\in {{\mathscr {H}}_{-J}} \mid \Vert f-g\Vert _{{\mathscr {H}}_{-J}}<\varepsilon \ \text{for some}\ {g}\in {K}\bigr \}\).

Proof

The proof follows from an argument parallel to that of Lemma 4.3 in [3] (see the Appendix in [27]). \(\square \)

Lemma 6.2

There is a unique (in distribution) \({\mathscr {H}}_{-J}\)-valued Gaussian process \(M(\cdot )\), which has a characteristic functional

for \({\varphi }\in {{\mathscr {H}}_{J}}\).

Proof

Consider the quadratic functional \({\mathscr {E}}_{t}\) defined by

for \({\varphi ,\psi }\in {{\mathscr {H}}_{J}}\). By the Riesz representation theorem, there exists a linear operator \(L:{\mathscr {H}}_{-J}\rightarrow {\mathscr {H}}_{J}\) such that \(\langle \varphi ^{*}, \psi \rangle =\langle \psi , L(\varphi ^{*}) \rangle _{{\mathscr {H}}_{J}}\) for all \({\varphi ^{*}}\in {{\mathscr {H}}_{-J}}\) and \({\psi }\in {{\mathscr {H}}_{J}}\). We define an operator A(t) on \({\mathscr {H}}_{-J}\) by

Note that \(\{a_{n}^{-\frac{1}{2}}e_{n}\}\) is a complete orthonormal system (CONS) in \({\mathscr {H}}_{-J}\), and we have

by a calculation similar to that for \(Z^{N}\) in the proof of Theorem 5.1. This means that A(t) is of trace class; in particular, A(t) is a self-adjoint compact operator on \({\mathscr {H}}_{-J}\). Moreover, by the structure of \({\mathscr {E}}_{t}\), \(\langle A(t)\varphi ^{*}, \varphi ^{*} \rangle _{{\mathscr {H}}_{-J}}\) is a positive-definite quadratic function of \(\varphi ^{*}\) for every t and is continuous and increasing in t for every \(\varphi ^{*}\). Therefore, the claim follows as in the proof of Theorem 4.1 in [10], using Kolmogorov’s extension theorem. \(\square \)

Next, we show that the Gaussian process \(M(\cdot )\) constructed in Lemma 6.2 is the limiting process of martingale \(M^{N}(\cdot )=\sqrt{Nl}Z^{N}(\cdot )\).

Lemma 6.3

For a function \({\varphi }\in {{\mathscr {H}}_{J}}\),

Proof

The proof is essentially the same as that of Lemma 4.4 in [3], but some calculations must be modified because the Laplacian is replaced by an integral operator. Let \(f(w)=e^{iw}\), \(g(w)=\langle u(w), {\int _{0}^{1}J(\cdot ,y)(\varphi (\cdot )-\varphi (y))^{2}dy} \rangle +\langle |R|(u(w)), \varphi ^{2} \rangle \), and \(m^{N}(t)=\langle M^{N}(t), \varphi \rangle \). As in the proof of Lemma 4.4 in [3], it suffices to show that

where \({|\varepsilon _{N}(t,s)|}\le {{\mathbb {E}}[\alpha _{N}(T) \mid {\mathscr {F}}_{s}^{N}]}\), \({\alpha _{N}(T)}\ge {0}\), and \({\mathbb {E}}[\alpha _{N}(T)]\rightarrow 0\) as \(N\rightarrow \infty \). Let \([m^{N}](t)={\sum _{{w}\le {t}}(\varLambda m^{N}(w))^{2}}\) be the quadratic variation of \(m^{N}(t)\), and let

Note that \([m^{N}](t)-{\int _{0}^{{t}\wedge {\tau }}g^{N}(w)dw}\) is a mean-zero martingale by Lemma 4.2. Using Itô’s formula (see [23]), we have

where \(\varepsilon _{N}(t,s)=\varepsilon _{1}(t,s)+\varepsilon _{2}(t,s)\) and

Hence, we have to show that \(\varepsilon _{1}(t,s)\) and \(\varepsilon _{2}(t,s)\) have upper bounds whose expectations converge to 0. Roughly speaking, the jumps of \(m^{N}\) are of order \(1/\sqrt{Nl}\), so the error induced by jumps vanishes as N goes to infinity, which gives the statement for \(\varepsilon _{1}(t,s)\). Furthermore, the law of large numbers (Theorem 5.1) yields the corresponding statement for \(\varepsilon _{2}(t,s)\).

Let us consider the proof in detail. First, we show that the integral of \(g^{N}(w)\) on [0, T] is uniformly bounded in N. Indeed, let \(f^{N}(x)\) be the function defined above. By the reproducing property and the Cauchy–Schwarz inequality, for every \({\varphi }\in {{\mathscr {H}}_{J}}\), we have

Therefore, we obtain

and

Thus, the boundedness of the integral of \(g^{N}\) follows from (5.2). Next, we consider each term of \(\varepsilon _{N}(t,s)\). Applying the Taylor expansion to \(e^{iax}\), we obtain the following inequality:

Hence, by the fact that \({|\varLambda m^{N}(w)|}\le {C(\varphi ,J)/\sqrt{Nl}}\), we obtain

It remains to bound \(\varepsilon _{2}\). Because \(|f''|=1\), we have

We set \(g(w)-g^{N}(w)=G_{1}^{N}(w)+G_{2}^{N}(w)\) where

and

Now, we analyze the two terms separately. For \(G_{1}^{N}(w)\),

By the Cauchy–Schwarz inequality, we obtain

and

Note that for any \({\phi }\in {L^{2}([0,1])}\), we have

Therefore, (6.3) and Theorem 5.1 imply that \({\mathbb {E}}\left[ {\int _{0}^{T}|G_{1}^{N}(w)|dw}\right] \rightarrow 0\) as \(N\rightarrow \infty \). For \(G_{2}^{N}(w)\),

The expectation of the time integral of the second term converges to 0 by an argument similar to the one above. Thus, it remains to show that the first term converges to 0 as \(N\rightarrow \infty \). Indeed, we have

where

It is relatively easy to see that \({\int _{0}^{T}|G_{2,1}^{N}(w)|dw}\rightarrow 0\) as \(N\rightarrow \infty \) by using (6.3) for the kernel J and the \(L^{\infty }\) estimate of \(\varphi \). For \(G_{2,2}^{N}\), because we have

utilizing (6.3) and the \(L^{\infty }\) estimate of \(\varphi \) yields \({\int _{0}^{T}|G_{2,2}^{N}(w)|dw}\rightarrow 0\) as \(N\rightarrow \infty \). Hence, we finally obtain

Choosing the bound \(\alpha _{N}(T)\) for \(\varepsilon _{N}(t,s)\) as

completes the proof. \(\square \)
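The function g used in this proof, \(g(w)=\langle u(w), \int _{0}^{1}J(\cdot ,y)(\varphi (\cdot )-\varphi (y))^{2}dy \rangle +\langle |R|(u(w)), \varphi ^{2} \rangle \) with \(|R|(x)=b(x)+d(x)=(b_{1}+d_{1})x+b_{0}\), is the limit of the integrand \(g^{N}\) of the compensator of \([m^{N}]\), and it can be evaluated by quadrature. The sketch below does so at a fixed time w, with u(w) passed as a function of x. The jump part vanishes for constant \(\varphi \), reflecting that jumps do not change \(\langle X^{N}, \varphi \rangle \) when \(\varphi \) is constant. The function name is hypothetical.

```python
def quadratic_variation_rate(u, phi, J, b0, b1, d1, m=200):
    """Midpoint-rule value of g = <u, int J(., y)(phi(.) - phi(y))^2 dy> + <|R|(u), phi^2>."""
    h = 1.0 / m
    xs = [(j + 0.5) * h for j in range(m)]
    # Jump (nonlocal diffusion) contribution.
    jump_part = 0.0
    for x in xs:
        inner = h * sum(J(x, y) * (phi(x) - phi(y)) ** 2 for y in xs)
        jump_part += h * u(x) * inner
    # Reaction contribution with |R|(v) = b(v) + d(v) = (b1 + d1) v + b0.
    reaction_part = h * sum(((b1 + d1) * u(x) + b0) * phi(x) ** 2 for x in xs)
    return jump_part + reaction_part


if __name__ == "__main__":
    J = lambda x, y: 1.0 + x * y
    print(quadratic_variation_rate(lambda x: 0.5, lambda x: x, J, b0=1.0, b1=0.5, d1=1.5))
```

For \(J\equiv 1\), \(u\equiv 1\), \(\varphi (x)=x\), and no reaction, the value approximates \(\int _{0}^{1}\int _{0}^{1}(x-y)^{2}dxdy=\frac{1}{6}\).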

This lemma implies that \(M^{N}(t)\) converges to M(t) in distribution on \({\mathscr {H}}_{-J}\) for every \({t}\in {[0,T]}\). We now show that the convergence also holds on the Skorokhod space \({\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\) and that the limit is the process M of Lemma 6.2. The following well-known criterion for relative compactness of stochastic processes on the Skorokhod space can be found in [7]:

Theorem 6.2

(Theorem 8.6 in [7]) Let (E, r) be a complete separable metric space, and let \(\{Y^{N}\}\) be a family of processes with sample paths in \({\mathbb {D}}_{E}[0,\infty )\). Then, \(\{Y^{N}\}\) is relatively compact if and only if the following two conditions hold:

(a) For every \(\varepsilon >0\) and \({t}\ge {0}\), there exists a compact set \({K_{\varepsilon }}\subset {E}\) such that

$$\begin{aligned} {\inf _{N}P\left( {Y^{N}(t)}\in {K_{\varepsilon }}\right) }\ge {1-\varepsilon }. \end{aligned}$$

(b) For each \(T>0\), there exist \(\beta >0\) and a family \(\{\gamma _{N}(s)\}\), \(s>0\), of nonnegative random variables satisfying

and \(\lim _{s\rightarrow 0}\sup _{N}{\mathbb {E}}[\gamma _{N}(s)]=0\).

Condition (a) follows from Lemma 6.1, and it remains to verify condition (b) for \(({\mathscr {H}}_{-J},\Vert \cdot \Vert _{{\mathscr {H}}_{-J}})\).

Lemma 6.4

For \({0}\le {s}\le {t}\)

Proof

Because \(M^{N}(\cdot )\) is a martingale, we have

It suffices to show that the expectations on the right-hand side of the above equation are uniformly bounded in N. As in the proof of Theorem 5.1, we obtain

Therefore, for any \({s,t}\in {[0,T]}\) with \({s}\le {t}\), we have

finishing the proof. \(\square \)

Lemma 6.5

The stochastic processes \(M^{N}\) converge to M in distribution on \({\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\), where M is constructed by Lemma 6.2.

Proof

By Lemmas 6.1 and 6.4 and Theorem 6.2, \(\{M^{N}\}\) is relatively compact in \({\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\). By Lemma 6.3 and the uniqueness in Lemma 6.2, every subsequential limit has the same distribution as M, and hence the whole sequence converges to M. \(\square \)

To complete the proof, we examine the convergence of each term in (6.2).

Lemma 6.6

(i) For an \(\alpha \)-Hölder continuous function f with \({\alpha }\in {(0,1]}\),

$$\begin{aligned} {\sup _{{0}\le {t}\le {T}}\Vert (e^{A_{J}t}-e^{A_{J}^{N}t})f\Vert _{2}}\le {C(J,T,f)N^{-\alpha }}. \end{aligned}$$

(ii) Suppose that \(g^{N}\rightarrow g\) in distribution on \({\mathscr {H}}_{-J}\). Then,

$$\begin{aligned} \sup _{{0}\le {t}\le {T}}\Vert (e^{A_{J}t}-e^{A_{J}^{N}t})g^{N}\Vert _{{\mathscr {H}}_{-J}}\rightarrow 0\text{ in } \text{ probability } \text{ as } N\rightarrow \infty . \end{aligned}$$

Proof

For part (i), by the definitions of the operators \(A_{J}\) and \(A_{J}^{N}\) and a calculation similar to that in the proof of Lemma 5.2, we observe that

By the regularity of f and J, and Lemma 4.1 in [12], we have

Note that for any \(j>0\),

Then, the series expansion of the operator exponential gives

Next, we consider part (ii). The proof is based on Lemma 4.19 in [4]. By the tightness of \(\{g^{N}\}\), it suffices to show that, for some compact set \({K}\subset {{\mathscr {H}}_{-J}}\),

where \(P_{m}f={\sum _{{n}\le {m}}\langle f, e_{n} \rangle e_{n}}\) for fixed m. Recall that functions in the RKHS \({\mathscr {H}}_{J}\) are \(\frac{1}{2}\)-Hölder continuous when the kernel J is Lipschitz continuous. Indeed, for \({h}\in {{\mathscr {H}}_{J}}\), the reproducing property gives

where we have used the global Lipschitz continuity of J in the last inequality. Hence, we have

as \(N\rightarrow \infty \), where the last inequality follows from part (i). This completes the proof. \(\square \)

Remark 6.2

For the local diffusion models considered in [3, 4], the convergence in (i) cannot be obtained directly from the series definition of the operator exponential, because the \(L^{2}\) operator norm of the discrete Laplacian \(\Delta _{N}\) is not bounded uniformly in N. Therefore, the spectral decomposition and higher regularity were used there.

Lemma 6.7

in distribution on \({\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\).

Proof

Here, we have

First, we examine the second term. Integrating by parts and using the fact that \(M^{N}(0)=0=M(0)\), we have

Define the mapping \(h:{\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\rightarrow {\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\) by

Then, it is easy to see that h is continuous. Thus, by the continuous mapping theorem (cf. Theorem 5.1 in [2] and Proposition 2.5 in [20]), the weak convergence of \(M^{N}\) implies that the second term on the right-hand side of (6.4) converges to 0 in distribution on \({\mathbb {D}}_{{\mathscr {H}}_{-J}}[0,\infty )\). It remains to estimate the first term in (6.4); we show that

Indeed, integrating by parts, we have

Hence, (6.5) follows from Lemma 6.6 (ii) and Doob’s maximal inequality. This completes the proof. \(\square \)

Proof of Theorem 6.1

The theorem follows from Lemmas 6.6 (i) and 6.7. \(\square \)

For kernels J satisfying an additional normalization condition, we obtain the continuity of the sample paths of U.

Proposition 6.1

(i) \(U_{0}\) is independent of M.

(ii) Suppose that \({\int _{0}^{1}J(x,y)dy}=1\) for all \({x}\in {[0,1]}\). Then, U(t) has continuous sample paths.

Proof

The proof of (i) can be obtained using a parallel argument to that presented in Lemma 4.15 of [3]. Consider part (ii), and we will show that for any \({0}\le {s}\le {t}\le {T}\), there exists \(C>0\), \(p>0\), and \(\beta >1\) such that

where \(V(t)={\int _{0}^{t}e^{A_{J}(t-w)}dM(w)}\). We take \(p=4\). By definition of \(\Vert \cdot \Vert _{{\mathscr {H}}_{-J}}\),

where we have used the Cauchy–Schwarz inequality and the fact that \(\{a_{n}\}\) are absolutely summable. Because M(t) is a Gaussian process, we can obtain the expression

where \(g(e_{n},\sigma )=\langle |R|(u(\sigma )), e_{n}^{2} \rangle +\langle u(\sigma ), {\int _{0}^{1}J(\cdot ,y)(e_{n}(\cdot )-e_{n}(y))^{2}dy} \rangle \), and \(B(\sigma )\) is the standard Brownian motion. Therefore, using the Burkholder–Davis–Gundy inequality, we obtain:

where both constants, denoted \(C(\rho ,J,R)\), are independent of n. Using the absolute summability of \(\{a_{n}\}\) again, we conclude that there is a constant \(C_{2}=C_{2}(\rho ,J,R)\) such that

The proof is then completed by applying Kolmogorov’s continuity theorem (see Proposition 10.3 in [7]). \(\square \)
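The choice p = 4 can be illustrated by the following sketch of the moment computation. It rests on three assumptions:

- The norm has the form \(\Vert f\Vert _{{\mathscr {H}}_{-J}}^{2}=\sum _{n}a_{n}\langle f,e_{n}\rangle ^{2}\), as the appeals to the Cauchy–Schwarz inequality and to the absolute summability of \(\{a_{n}\}\) suggest.
- \(\xi _{n}:=\langle V(t)-V(s),e_{n}\rangle \) (our notation) is a centered Gaussian variable.
- \(C_{1}\) is a hypothetical constant, independent of n, with \({\mathbb {E}}\,\xi _{n}^{2}\le C_{1}(t-s)\). This is the kind of bound the Burkholder–Davis–Gundy step supplies.

Under these assumptions the fourth moment of a centered Gaussian is three times its squared variance, which yields the criterion with p = 4 and β = 2:

```latex
\begin{aligned}
\|V(t)-V(s)\|_{\mathscr{H}_{-J}}^{4}
  &= \Bigl(\sum_{n} a_{n}\,\xi_{n}^{2}\Bigr)^{2}
   \le \Bigl(\sum_{n} |a_{n}|\Bigr)\sum_{n} |a_{n}|\,\xi_{n}^{4}
   && \text{(Cauchy--Schwarz)}\\
\mathbb{E}\,\xi_{n}^{4}
  &= 3\bigl(\mathbb{E}\,\xi_{n}^{2}\bigr)^{2}
   \le 3C_{1}^{2}(t-s)^{2}
   && \text{(centered Gaussian)}\\
\mathbb{E}\,\|V(t)-V(s)\|_{\mathscr{H}_{-J}}^{4}
  &\le 3C_{1}^{2}\Bigl(\sum_{n} |a_{n}|\Bigr)^{2}(t-s)^{2}
   && (p=4,\ \beta=2>1).
\end{aligned}
```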

Data Availability

Data sharing is not applicable to this article, as no datasets were generated or analyzed during the study.

References

[1] Arnold, L., Theodosopulu, M.: Deterministic limit of the stochastic model of chemical reactions with diffusion. Adv. Appl. Probab. 12(2), 367–379 (1980)

[2] Billingsley, P.: Convergence of Probability Measures. Wiley, New York (1968)

[3] Blount, D.: Comparison of a Stochastic Model of a Chemical Reaction with Diffusion and the Deterministic Model. University of Wisconsin, Madison (1987)

[4] Blount, D.: Comparison of stochastic and deterministic models of a linear chemical reaction with diffusion. Ann. Probab. 19, 1440–1462 (1991)

[5] Blount, D.: Law of large numbers in the supremum norm for a chemical reaction with diffusion. Ann. Appl. Probab. 2, 131–141 (1992)

[6] Blount, D.: Limit theorems for a sequence of nonlinear reaction–diffusion systems. Stoch. Process. Appl. 45(2), 193–207 (1993)

[7] Ethier, S.N., Kurtz, T.G.: Markov Processes: Characterization and Convergence, vol. 282. Wiley (1986)

[8] Franco, T.: Interacting particle systems: hydrodynamic limit versus high density limit. In: From Particle Systems to Partial Differential Equations, pp. 179–189. Springer (2014)

[9] Hutson, V., Martinez, S., Mischaikow, K., Vickers, G.T.: The evolution of dispersal. J. Math. Biol. 47(6), 483–517 (2003)

[10] Itô, K.: Continuous additive S-processes. In: Grigelionis, B. (ed.) Stochastic Differential Systems. Springer, Berlin (1980)

[11] Kaliuzhnyi-Verbovetskyi, D., Medvedev, G.S.: The semilinear heat equation on sparse random graphs. SIAM J. Math. Anal. 49(2), 1333–1355 (2017)

[12] Kaliuzhnyi-Verbovetskyi, D., Medvedev, G.S.: Sparse Monte Carlo method for nonlocal diffusion problems. arXiv preprint arXiv:1905.10844 (2019)

[13] König, H.: Eigenvalue Distribution of Compact Operators, vol. 16. Birkhäuser (1986)

[14] Kotelenez, P.: A submartingale type inequality with applications to stochastic evolution equations. Stoch. Int. J. Probab. Stoch. Process. 8(2), 139–151 (1982)

[15] Kotelenez, P.: Law of large numbers and central limit theorem for linear chemical reactions with diffusion. Ann. Probab. 14(1), 173–193 (1986)

[16] Kotelenez, P.: Linear parabolic differential equations as limits of space–time jump Markov processes. J. Math. Anal. Appl. 116(1), 42–76 (1986)

[17] Kotelenez, P.: High density limit theorems for nonlinear chemical reactions with diffusion. Probab. Theory Relat. Fields 78(1), 11–37 (1988)

[18] Kurtz, T.G.: Solutions of ordinary differential equations as limits of pure jump Markov processes. J. Appl. Probab. 7(1), 49–58 (1970)

[19] Kurtz, T.G.: Limit theorems for sequences of jump Markov processes approximating ordinary differential processes. J. Appl. Probab. 8(2), 344–356 (1971)

[20] Kurtz, T.G.: Approximation of Population Processes. SIAM (1981)

[21] Lovász, L.: Large Networks and Graph Limits, vol. 60. American Mathematical Society (2012)

[22] Pazy, A.: Semigroups of Linear Operators and Applications to Partial Differential Equations. Springer, New York (1983)

[23] Protter, P.E.: Stochastic Integration and Differential Equations, 2nd edn. Stochastic Modelling and Applied Probability, vol. 21. Springer, Berlin (2005)

[24] Saitoh, S., Sawano, Y.: Theory of Reproducing Kernels and Applications. Springer (2016)

[25] Steinwart, I., Christmann, A.: Support Vector Machines. Springer (2008)

[26] Wang, X.Q., Bo, L.J., Wang, Y.J.: From Markov jump systems to two species competitive Lotka–Volterra equations with diffusion. Acta Math. Sin. Engl. Ser. 25(1), 157–170 (2009)

[27] Watanabe, I., Toyoizumi, H.: Comparison between the deterministic and stochastic models of nonlocal diffusion. J. Dyn. Differ. Equ. (2022). https://doi.org/10.1007/s10884-022-10135-4

Acknowledgements

The author gratefully acknowledges the beneficial discussion with Professor Hiroshi Toyoizumi.


Ethics declarations

Conflict of interest

The author declares no conflicts of interest.


About this article

Cite this article

Watanabe, I. Continuum Limit of Nonlocal Diffusion on Inhomogeneous Networks. J Dyn Diff Equat 36, 2321–2340 (2024). https://doi.org/10.1007/s10884-022-10209-3


DOI: https://doi.org/10.1007/s10884-022-10209-3

Keywords

- Stochastic model of nonlocal diffusion

- Reproducing kernel Hilbert space

- Law of large numbers

- Central limit theorem