Abstract

A wide field-of-view (FOV) imaging system is essential for a vehicle around-view monitoring system to ensure the safety of driving or parking. This study presents a hybrid hyperbolic reflector for catadioptric wide FOV imaging. With a single camera, the hyperbolic reflector imaging system can observe both the horizontal side scene and the vertical ground scene surrounding a vehicle. An image acquisition model for the system is derived using geometrical optics. This model is the basis of the image reconstruction algorithm, which converts the side scene into a panoramic image and the ground scene into a bird’s-eye image for presentation on the driver’s display. Both the horizontal panoramic image and the vertical bird’s-eye image surrounding a vehicle are helpful for driving and parking safety.

1 Introduction

A vehicle around-view monitoring (AVM) system enhances the safety of driving and parking by eliminating the blind spots around a vehicle. To eliminate the blind spots, four or six cameras are deployed on the four sides of a vehicle. Figure 1 shows an example AVM image as presented in [1]. In recent years, there has been increasing interest in AVM as an essential element of a smart vehicle.

In general, an AVM system consists of (1) acquisition of images surrounding a vehicle, (2) viewpoint transformation of the acquired images, and (3) stitching the images into a display image. The viewpoint transformation converts an original image from a camera into a bird’s-eye view. The image stitching process removes the overlapping area of each image and combines the images to be shown on the driver’s display. In order to secure a wide field-of-view (FOV), a fisheye lens is generally used for image acquisition.

AVM systems have been studied extensively. Yebes et al. developed image processing hardware for an AVM system with four cameras and applied it to a mobile robot [2]. The original image acquired through a fisheye lens requires rectification to compensate for the radial distortion. Lo et al. proposed a rectification method to convert the fisheye image into an ideal pinhole image for their AVM system [3]. The images from the cameras on the four sides of a vehicle overlap, and some obstacle information in the overlapping areas may be lost when the images are stitched. A dynamic switching method was proposed to select one image from the AVM cameras to reduce the chance of missing obstacles in the overlapping area [4]. Zhang et al. proposed a photometric alignment method to blend the overlapping areas of the AVM camera images [1]. Sato et al. used a spatio-temporal bird’s-eye view image to construct a robust surrounding view of a vehicle or a mobile robot by compensating for a broken camera or network disturbance [5]. They also showed that the surrounding bird’s-eye view is more useful than a conventional front view for achieving accurate remote control of a mobile robot. Similarly, Lin et al. proposed a top-view image transformation that converts a perspective image into its corresponding bird’s-eye view [6]. They applied the top-view transformation to a vehicle parking assistant system with a camera at the rear end of a vehicle.

Fig. 1 Vehicle AVM image sample [1]: (a) original images from four cameras; (b) surrounding bird’s-eye view

On the other hand, the catadioptric approach is an image acquisition method that uses a reflector with a conventional camera. The catadioptric imaging method has been used for single-camera stereo image acquisition by optically dividing the image sensor plane [7, 8], or for wide FOV omnidirectional image acquisition by using a bowl-shaped convex/concave reflector [9,10,11,12]. Several types of these reflectors have been developed for omnidirectional imaging, including hyperbolic, parabolic, elliptic, and conic reflectors [13]. Wide FOV image acquisition is the most important part of the AVM for reducing the number of cameras and eliminating blind spots.

Based on the omnidirectional image of vehicle surroundings obtained by the catadioptric approach, it is possible to generate a bird’s-eye view of the ground [14] or a virtual perspective along the driver’s viewing direction [15]. Cao et al. proposed a special rectifying catadioptric omnidirectional reflector that preserves the actual distance on the ground surface in the image surface obtained by the reflector [16]. The rectifying reflector has the advantage of wide perspective image acquisition along the diameter without distortion on any plane perpendicular to the optical axis; it makes the image unwarping easy with reduced computation. A similar omnidirectional reflector was proposed based on a rectilinear projection scheme by Kweon et al. [17, 18]. The rectifying reflector was used along with a conventional hyperbolic omnidirectional reflector to obtain the surrounding view of a vehicle for a driver assistance system and autonomous driving [19]. As the imaging system uses the rectifying reflector and the hyperbolic reflector to provide two views in the overlapping area, it can be utilized to create a sparse 3D reconstruction. In [20], Yi et al. proposed an omnidirectional stereo vision method using a single camera with an additional concave lens for the 3D reconstruction. As ultrawide omnidirectional imaging sensors, Cheng et al. [21] and Sturzl et al. [22] presented similar designs consisting of an annularly combined catadioptric mirror and lens assembly, respectively. Their approaches overcame the central blind area occluded by the camera in the conventional catadioptric imaging system.

The main aim of this study is to provide a hybrid hyperbolic reflector for a vehicle catadioptric AVM. The hybrid reflector consists of an upper cylindrical section and a lower half-omnidirectional section that share a common focal point and join with surface continuity. Compared with existing AVM systems that use a traditional fisheye lens or an omnidirectional reflector, the AVM with the hybrid reflector has the following potential advantages: (1) the horizontal side scene from the hybrid reflector has better image quality than that from the omnidirectional reflector or the fisheye lens, because the upper cylindrical section of the hybrid reflector has higher sensor utilization, and (2) it is possible to observe the horizontal side scene as well as the vertical ground scene without any seamline between them. In general, conventional AVM systems present only the vertical ground scene in the driver’s display, as shown in Fig. 1. Both the vertical bird’s-eye view of the ground scene and the horizontal panoramic view from the hybrid reflector help a driver stay aware of the surroundings while driving or parking. Using geometrical optics, this study presents an image acquisition model of the hyperbolic reflector imaging system and the image reconstruction algorithm for the horizontal panoramic view and vertical bird’s-eye view of the ground scene.

The organization of this paper is as follows: In Sect. 2, the imaging system with the proposed hyperbolic reflector is briefly explained. The image acquisition model of the imaging system and the image reconstruction algorithm are described in Sects. 3 and 4, respectively. Experiments to verify the performance of the hyperbolic reflector imaging system and concluding remarks are presented in Sects. 5 and 6.

2 Imaging System with the Hybrid Hyperbolic Reflector

The hybrid hyperbolic reflector presented in this study has an upper section of a cylindrical hyperbolic reflector, and a lower section of a half-omnidirectional hyperbolic reflector. The hyperbolas in the upper and the lower sections share a common focal point with surface continuity. According to the property of the hyperbolic curve, it is possible to acquire a wide FOV image by using a conventional camera at the symmetric focal point of the hyperbolic reflector, as shown in Fig. 2. The horizontal FOV of the image is over \(180{^{\circ }}\) because of the upper cylindrical hyperbolic reflector, and the vertical downward FOV is over \(90{^{\circ }}\) from the lower omnidirectional hyperbolic reflector. Figure 3 shows the design of the hybrid hyperbolic reflector.

The hyperbolic function in the \(x-y\) plane is described as

where a and b are the design parameters of the function. A pair of symmetric focal points of the hyperbolic function is represented herein as \(\left[ {0\;\;F} \right] ^{ t}=\left[ {0\;\;\sqrt{a^{2}+b^{2}}} \right] ^{ t}\) and \(\left[ {0\;\;{F}'} \right] ^{ t}=\left[ {0\;\;-\sqrt{a^{2}+b^{2}}} \right] ^{ t}\).
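For reference, a hyperbola with the focal points given above can be written as follows. This form assumes that the transverse axis lies along y with semi-axis a; exchanging the roles of a and b would give the same focal points, so the assignment is an assumption rather than something fixed by the text.

\[
\frac{y^{2}}{a^{2}}-\frac{x^{2}}{b^{2}}=1, \qquad F=\sqrt{a^{2}+b^{2}}
\]

Under this assumption, the branch nearer to \({F}'\) has its vertex at \(\left[ {0\;\;-a} \right] ^{ t}\), and every point on that branch is \(2a\) farther from F than from \({F}'\).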

3 Image Acquisition Model

The image acquisition model is a mathematical relationship between an object point, \(P_o =\left[ {x_o \;y_o \;z_o } \right] ^{ t}\), in three-dimensional space and a corresponding image point, \(P_i =\left[ {x_i \; z_i } \right] ^{ t}\), on the image plane. In the coordinate system shown in Fig. 2, it is assumed that the y coordinate value of the image plane is constant as \(y_i =F+\Lambda \), where \(\Lambda \) is the focal length of the camera. It is possible to obtain the image acquisition model of the catadioptric imaging system based on the geometrical optics. The object point in the celestial sphere is represented also by the longitude and the latitude angles as \(P_o =\left[ {\lambda \;\;{\varphi }} \right] ^{ t}\), where \(\lambda \) and \({\varphi }\) are given by

The longitude and the latitude angles of an object point are described with respect to \({F}'\) without loss of generality and the imaging camera is modeled as an ideal pinhole without lens distortion in this study.
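The angle description can be sketched in code. The following Python sketch assumes, purely for illustration, that the longitude is measured in the horizontal x-y plane from the +y axis about \({F}'\) and that the latitude is the elevation above that plane; the function names are hypothetical and the zero direction of the longitude is an assumption.

```python
import math

def focal_distance(a, b):
    """Distance F of each focal point from the origin: sqrt(a^2 + b^2)."""
    return math.hypot(a, b)

def object_to_angles(p_o, a, b):
    """Longitude and latitude of P_o = (x_o, y_o, z_o) seen from F' = (0, -F, 0).

    Assumed convention (not taken from the paper): longitude is measured from
    the +y axis in the horizontal x-y plane, latitude is the elevation above
    that plane.
    """
    x_o, y_o, z_o = p_o
    F = focal_distance(a, b)
    dx, dy = x_o, y_o + F                      # horizontal offset from F'
    lam = math.atan2(dx, dy)
    phi = math.atan2(z_o, math.hypot(dx, dy))
    return lam, phi

def angles_to_direction(lam, phi):
    """Unit vector from F' toward the object under the same convention."""
    return (math.cos(phi) * math.sin(lam),
            math.cos(phi) * math.cos(lam),
            math.sin(phi))
```

Because only the direction from \({F}'\) matters, two object points on the same ray through \({F}'\) map to the same pair of angles.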

3.1 Imaging Model for the Upper Cylindrical Hyperbolic Reflector

The upper cylindrical section of the reflector behaves as a hyperbolic reflector in the horizontal \(x-y\) plane and as a planar reflector in the vertical z direction. It is convenient to describe the imaging model of the upper section separately in the horizontal plane and in the vertical direction.

3.1.1 Horizontal Plane

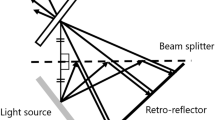

Figure 4 shows the ray tracing in the horizontal plane for the upper section of the reflector. The horizontal cross-section is the hyperbolic curve represented as (1) above. A light ray from an object point \(P_o \) directed toward \({F}'\) is redirected toward F after reflection on the hyperbolic reflector surface. It forms an image at \(P_i \) on the image plane through the pinhole of a camera at F. In Fig. 4, the light rays I and II are described as

Because the reflection point, \(P_c =\left[ {x_c y_c } \right] ^{ t}\) on the horizontal plane is an intersection of (1) and (2), it is obtained as follows:

where n is a solution of the following quadratic equation

By inserting (5) into (4) and evaluating x with \(y_i =F+\Lambda \) at the image plane, the relationship between an object point \(P_o \) and the corresponding image point \(x_i \) of \(P_i \) is obtained as follows:
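The horizontal ray tracing can be illustrated with a minimal Python sketch. It assumes the hyperbola \(y^{2}/a^{2}-x^{2}/b^{2}=1\) with the reflector on the branch nearer to \({F}'\), and a longitude measured from the +y axis; both are assumptions of the sketch, and the function names are hypothetical. Substituting the ray from \({F}'\) into this hyperbola gives a quadratic in the distance n, whose root on the reflector branch is \(n=b^{2}/(a+F\cos \lambda )\).

```python
import math

def reflection_point_2d(a, b, lam):
    """Point where the ray aimed at F' along longitude lam meets the reflector.

    Assumes the hyperbola y^2/a^2 - x^2/b^2 = 1 with the reflector on the
    branch nearer to F' = (0, -F), and lam measured from the +y axis.
    """
    F = math.hypot(a, b)
    denom = a + F * math.cos(lam)
    if denom <= 0.0:
        raise ValueError("ray does not meet the reflector branch")
    n = b * b / denom                  # distance from F' to the reflection point
    return n * math.sin(lam), -F + n * math.cos(lam)

def image_x(a, b, focal_length, lam):
    """x coordinate on the image plane y = F + focal_length (pinhole at F)."""
    F = math.hypot(a, b)
    x_c, y_c = reflection_point_2d(a, b, lam)
    # Extend the line from the reflection point through the pinhole at F
    # until it reaches the image plane behind the pinhole.
    return -focal_length * x_c / (F - y_c)
```

For example, with a = 3 and b = 4 (so F = 5) and a longitude of 90 degrees, the reflection point under these assumptions is (16/3, -5), and a unit focal length gives an image coordinate of -8/15.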

3.1.2 Vertical Direction

At the reflection point, the upper section of the reflector is the same as a planar reflector in the vertical z direction. The imaging model of the planar reflector is described easily by using the effective viewpoint as depicted in Fig. 5a. The effective viewpoint is the symmetric point of the camera pinhole at F with respect to the tangential plane at the reflection point, \(P_c \) [23]. As shown in Fig. 5a, a light ray from the object point passes through the reflection point and the effective viewpoint, and has an image on the effective image plane. Those points are placed on a common vertical plane shown in Fig. 5b, where the image point \(z_i \) is obtained by

where \(t_o \), \(t_p \), and \(t_i \) are given by

The value of \(x_c \), \(y_c \), and \(x_i \) for evaluating (9) is obtained in (5) and (6) as stated previously.

As a summary of (6) and (7), the imaging model between an object point \(P_o =\left[ {x_o \;y_o \;z_o } \right] ^{ t}\) and a corresponding image point \(P_i =\left[ {x_i z_i } \right] ^{ t}\) is written as

where \(x_c \) and \(y_c \) are given by (5). As the object point is described as \(P_o =\left[ {\lambda \;\;{\varphi }} \right] ^{ t}\), the image point in (9) is represented by

where a, b, and \(\Lambda \) are the design parameters of the imaging system.
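The upper-section model can be sketched end to end under stated assumptions. In the Python sketch below, \(t_o \), \(t_p \), and \(t_i \) are taken to be the horizontal path lengths from the object to the reflection point, from the reflection point to the pinhole, and from the pinhole to the image point; this reading, the pinhole height z = 0, and the hyperbola \(y^{2}/a^{2}-x^{2}/b^{2}=1\) are assumptions of the sketch, and the function name is hypothetical. Because the cylinder acts as a planar mirror in z, the vertical coordinate follows from similar triangles along the unfolded ray.

```python
import math

def upper_section_image_point(p_o, a, b, focal_length):
    """Image point (x_i, z_i) of P_o = (x_o, y_o, z_o) via the cylindrical section.

    Assumptions: cross-section y^2/a^2 - x^2/b^2 = 1 (branch nearer to F'),
    pinhole at F = (0, F) with height z = 0, image plane y = F + focal_length.
    """
    x_o, y_o, z_o = p_o
    F = math.hypot(a, b)
    lam = math.atan2(x_o, y_o + F)             # longitude about F'
    denom = a + F * math.cos(lam)
    if denom <= 0.0:
        raise ValueError("ray does not meet the reflector branch")
    n = b * b / denom
    x_c, y_c = n * math.sin(lam), -F + n * math.cos(lam)
    x_i = -focal_length * x_c / (F - y_c)
    # Horizontal path lengths of the unfolded ray (assumed meaning of t_o, t_p, t_i).
    t_o = math.hypot(x_o - x_c, y_o - y_c)     # object to reflection point
    t_p = math.hypot(x_c, F - y_c)             # reflection point to pinhole
    t_i = math.hypot(x_i, focal_length)        # pinhole to image point
    # Similar triangles through the pinhole; the image is inverted in z.
    z_i = -z_o * t_i / (t_o + t_p)
    return x_i, z_i
```

With a = 3, b = 4, a unit focal length, and an object at (20, -5, 3), the sketch gives \(x_i =-8/15\) and \(z_i =-17/130\).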

3.2 Imaging Model for the Lower Omnidirectional Hyperbolic Reflector

In the coordinate system shown in Fig. 2, the lower section of the hyperbolic reflector is described as follows:

Figure 6 shows the ray tracing in the vertical cross-sectional \(y-z\) plane of the lower section of the reflector.

The light rays I and II are represented in terms of \(\lambda \) and \({\varphi }\) in 3-dimensional space as (13) and (14), respectively:

It is possible to obtain the reflection point \(P_c =\left[ {x_c y_c z_c } \right] ^{ t}\) on the hyperbolic curve as the intersection of (12) and (13) as follows:

where k is the solution of the following quadratic equation

Inserting (15) into (14) and evaluating x and z at the image plane \(\left( {y_i =F+\Lambda } \right) \) gives the image points \(P_i =\left[ {x_i \; z_i } \right] ^{ t}\) as follows:

The image acquisition model is summarized in terms of \(\lambda \) and \({\varphi }\) of an object point as
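The lower-section model can be sketched in the same way. The Python sketch below assumes the surface of revolution \(y^{2}/a^{2}-(x^{2}+z^{2})/b^{2}=1\) on the sheet nearer to \({F}'\), a longitude measured from the +y axis, and a latitude measured as elevation above the x-y plane; these conventions and the function names are assumptions. Substituting the ray from \({F}'\) into this surface gives a quadratic in k whose root on the reflector sheet is \(k=b^{2}/(a+Fu_y )\), where \(u_y \) is the y component of the unit ray direction.

```python
import math

def reflection_point_3d(lam, phi, a, b):
    """Reflection point on the assumed surface y^2/a^2 - (x^2 + z^2)/b^2 = 1."""
    F = math.hypot(a, b)
    # Unit direction from F' toward the object (assumed angle convention).
    u = (math.cos(phi) * math.sin(lam),
         math.cos(phi) * math.cos(lam),
         math.sin(phi))
    denom = a + F * u[1]
    if denom <= 0.0:
        raise ValueError("ray does not meet the reflector sheet")
    k = b * b / denom                  # distance from F' to the reflection point
    return k * u[0], -F + k * u[1], k * u[2]

def lower_section_image_point(lam, phi, a, b, focal_length):
    """Image point (x_i, z_i) on the plane y = F + focal_length, pinhole at F."""
    F = math.hypot(a, b)
    x_c, y_c, z_c = reflection_point_3d(lam, phi, a, b)
    # Extend the line from the reflection point through the pinhole.
    scale = -focal_length / (F - y_c)
    return scale * x_c, scale * z_c
```

At zero latitude the sketch reduces to the horizontal-plane case, and a ray pointing straight down from \({F}'\) lands on the z axis of the image plane.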

4 Image Reconstruction

It is possible to observe the side scene as well as the ground scene by the imaging system with the hyperbolic reflector in this study. Image reconstruction converts the side view from the upper section of the reflector into a horizontal panoramic view and the ground view from the lower section into a vertical bird’s-eye view.

4.1 Horizontal Panoramic View from the Upper Section of the Reflector

For each image point \(P_i =\left[ {x_i \; z_i } \right] ^{ t}\), it is possible to obtain \(P_o =\left[ {\lambda \;\;{\varphi }} \right] ^{ t}\) for the corresponding object point on the basis of the image acquisition model in Sect. 3. The well-known Mercator projection is a conformal map that rearranges an object point on the surface of the Earth to a map point on a rectangular surface according to \(\lambda \) and \({\varphi }\) while preserving angles locally, so that the scale at any point is the same in all directions. Figure 7 shows image reconstruction using the Mercator projection for a part of the original image from the upper section of the reflector. In the figure, the coordinate frame is drawn at \({F}'\) to show that \(\lambda \) and \({\varphi }\) of an object point are described with respect to \({F}'\). According to the object point \(P_o =\left[ {\lambda \;\;{\varphi }} \right] ^{ t}\) from an original image point, the reconstructed image point, \(P_w =\left[ {x_w \; y_w } \right] ^{ t}\), is represented as follows [24]:

where l is a scale factor and \(\lambda _o \) denotes the center of the longitudes, that is, the center of the vertical lines of the original image acquired. In this image reconstruction algorithm, object points at the same longitude are placed on the same vertical line in the reconstructed image, and object points at the same latitude are placed on the same horizontal line in the reconstructed image, resulting in a horizontal panoramic image.
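As an illustration, the standard Mercator formulas can be written as a short Python sketch. Whether (19) takes exactly this form is an assumption; the function names and the `scale` and `lam_0` parameter names (standing for l and \(\lambda _o \)) are hypothetical.

```python
import math

def mercator_forward(lam, phi, scale, lam_0=0.0):
    """Map (longitude, latitude) in radians to panoramic coordinates (x_w, y_w)."""
    x_w = scale * (lam - lam_0)
    y_w = scale * math.log(math.tan(math.pi / 4 + phi / 2))
    return x_w, y_w

def mercator_inverse(x_w, y_w, scale, lam_0=0.0):
    """Recover (longitude, latitude) from panoramic coordinates."""
    lam = x_w / scale + lam_0
    phi = 2 * math.atan(math.exp(y_w / scale)) - math.pi / 2
    return lam, phi
```

The inverse form is the one an inverse-mapping reconstruction would use: each pixel of the panoramic image is converted back to a longitude and latitude, which are then traced to the original image through the acquisition model.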

4.2 Bird’s-Eye View from the Lower Section of the Reflector

Figure 8 illustrates the image reconstruction for the ground plane image from the lower section of the reflector, resulting in a bird’s-eye view image. In the coordinate system shown in Fig. 2, the ground plane equation is described as

As an intersection of the plane equation (20) and the line equation (13), it is possible to obtain the coordinates of the object points on the ground plane by

The bird’s-eye view of the ground plane is obtained as (22) by rearranging the coordinates of the object points (21) onto the reconstructed image plane:

where m is a scale factor.
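The ground-plane intersection can be sketched as follows. The Python sketch assumes that the ground plane is z = -h with \({F}'\) at height z = 0, that the longitude is measured from the +y axis, and that the latitude is negative below the horizon; these conventions and the function names are assumptions, and the returned coordinates are offsets from the ground point directly below \({F}'\).

```python
import math

def ground_point(lam, phi, h):
    """Ground-plane point hit by the ray leaving F' along (lam, phi).

    Assumptions: ground plane z = -h with F' at height z = 0. Returns the
    horizontal offset (dx, dy) from the point directly below F'.
    """
    if phi >= 0.0:
        raise ValueError("ray must point below the horizon to reach the ground")
    r = h / math.tan(-phi)             # horizontal range along the ray
    return r * math.sin(lam), r * math.cos(lam)

def birds_eye_pixel(lam, phi, h, m):
    """Scale the ground offsets by m to place them on the reconstructed image."""
    dx, dy = ground_point(lam, phi, h)
    return m * dx, m * dy
```

In this sketch the ground coordinates are proportional to h, so reconstructing with an incorrect height rescales the bird's-eye view by the ratio of the assumed height to the actual one without otherwise changing its shape.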

5 Experiments

The imaging system with the hyperbolic reflector developed in this study is shown in Fig. 9. The camera has a resolution of \(1280\times 960\). Table 1 summarizes the parameter values of the reflector in Fig. 3.

The placement of the camera relative to the reflector is fundamental for developing a central catadioptric imaging system with a single viewpoint. A central imaging system keeps the computational cost low when finding the reflection point on the reflector surface and the corresponding object point, and it avoids distortion that would introduce errors into the image. Considerable research has been conducted on the calibration and placement of the catadioptric imaging system [25, 26]. This study adopts the following steps for imaging system calibration and camera placement: (1) employing the publicly available MATLAB calibration toolbox [27], which allows the camera to be treated as an ideal pinhole, and (2) manually adjusting the camera placement with respect to the reflector to satisfy the single viewpoint constraint as described in [28]. In the central imaging system with a hyperbolic reflector, the line connecting the vanishing points of a set of circles fitted to the images of parallel three-dimensional lines passes through the optical center of the image [28]. Figure 10 shows the calibration result using parallel three-dimensional lines in a grid pattern. In Fig. 10a, a set of circles fitted to the three-dimensional lines of the non-central image does not have a common vanishing point. In contrast, the circles on the central image in Fig. 10b have common vanishing points, and the line connecting the vanishing points passes through the camera optical center. Figure 10c is the bird’s-eye view of the lower part of the central image.

For the image reconstruction, an inverse mapping method is adopted to reduce the overall computational burden. According to (11) for the lower side of the image or (18) for the upper side of the image, it is possible to find a pixel \(P_i =\left[ {x_i \; z_i } \right] ^{ t}\) from the original image corresponding to \(P_o =\left[ {\lambda \; {\varphi }} \right] ^{ t}\). Then, rearranging the pixel \(P_i =\left[ {x_i \; z_i } \right] ^{ t}\) onto \(P_w =\left[ {x_w \; y_w } \right] ^{ t}\) in accordance with (19) or (22) results in the image reconstructed from the original image. The well-known bilinear interpolation method is adopted to interpolate the non-integer \(P_i =\left[ {x_i \; z_i } \right] ^{ t}\) [29].
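The inverse mapping with bilinear interpolation can be sketched in plain Python. The image is represented here as a list of rows of grayscale values, and `inverse_map` is a hypothetical callable that returns the source position in pixel units for each output pixel; in practice it would combine the reconstruction formulas with the image acquisition model. Clamping at the image border is a choice made for this sketch.

```python
import math

def bilinear(img, x, y):
    """Sample img (list of rows) at non-integer column x and row y."""
    height, width = len(img), len(img[0])
    # Clamp to the valid range so border pixels are repeated.
    x = min(max(x, 0.0), width - 1.0)
    y = min(max(y, 0.0), height - 1.0)
    x0, y0 = int(math.floor(x)), int(math.floor(y))
    x1, y1 = min(x0 + 1, width - 1), min(y0 + 1, height - 1)
    fx, fy = x - x0, y - y0
    top = (1.0 - fx) * img[y0][x0] + fx * img[y0][x1]
    bottom = (1.0 - fx) * img[y1][x0] + fx * img[y1][x1]
    return (1.0 - fy) * top + fy * bottom

def remap(img, out_width, out_height, inverse_map):
    """Build the output image by looking up every output pixel in the source."""
    out = []
    for y_w in range(out_height):
        row = []
        for x_w in range(out_width):
            x_i, z_i = inverse_map(x_w, y_w)   # source column and row
            row.append(bilinear(img, x_i, z_i))
        out.append(row)
    return out
```

Iterating over output pixels guarantees that every pixel of the reconstructed image receives a value, which a forward mapping from source pixels would not.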

Fig. 12 Bird’s-eye view according to the height of the imaging system. The actual length of the grid pattern is 4846 mm. (a) Ground image in accordance with the height of the imaging system; (b) original images with different heights, left: \(h_1 =1395\ \text{mm}\), right: \(h_2 =1017\ \text{mm}\); (c) reconstruction of the bird’s-eye views using the same height, \(h=1395\ \text{mm}\)

For a vehicle experiment, the imaging system is attached at the center of the left side of a vehicle. Figure 11 shows the results of the experiment. The horizontal panoramic view in Fig. 11b and the vertical bird’s-eye view in Fig. 11c are reconstructed from the original image in Fig. 11a. Those views are wide and natural to a driver. The images in Fig. 11b, c have low resolution around the left and the right sides because of the non-uniform resolution of the catadioptric imaging system with the hyperbolic reflector.

In the vehicle application, the height of the imaging system can change depending on the specific wheels and tires used or the ground condition, which may cause distortion in the image. Figure 12 shows the influence of the imaging system height on the reconstruction of the bird’s-eye view for the ground plane. Figure 12a illustrates that, in the central imaging system, a change in height changes only the object size in the image and introduces no further distortion. The size change in the image in accordance with the height is shown in Fig. 12b. To achieve exact reconstruction, the bird’s-eye view algorithm in (21) requires the actual height h of the imaging system. The right side of Fig. 12c shows the size change of the same grid pattern when it is reconstructed using incorrect height information.

6 Concluding Remarks

The AVM system can improve the safety of a vehicle in driving or parking. To observe the surrounding area of a vehicle as widely as possible with fewer cameras, a wide FOV imaging method is essential for the AVM system. The catadioptric imaging system using the hybrid hyperbolic reflector proposed in this study can observe the horizontal side view as well as the ground view surrounding a vehicle with a wide FOV. The reflector consists of an upper section of a cylindrical hyperbolic reflector and a lower section of an omnidirectional hyperbolic reflector. The upper and lower sections of the reflector share a common focal point with surface continuity. The image acquisition model for the proposed imaging system is derived using geometrical optics. This model is used to reconstruct an image from the imaging system and to present the reconstructed natural image in the driver’s display. Experimental results showed a natural panoramic view from the horizontal side scene and a vertical bird’s-eye view from the ground scene surrounding a vehicle. Both reconstructed views are useful for the AVM to improve the safety of a vehicle.

References

Zhang, B., Appia, V., Pekkucuksen, I., Liu, Y.: A surround view camera solution for embedded systems. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition Workshops, Ohio, USA, pp. 676–681 (2014)

Yebes, J., Alcantarilla, P., Bergasa, L., Gonzalez, A., Almazan, J.: Surrounding view for enhancing safety on vehicles. In: Proceedings of IEEE Intelligent Vehicles Symposium Workshops (2012)

Lo, W., Lin, D.: Embedded system implementation for vehicle around view monitoring. In: Proceedings of International Conference on Advanced Concepts for Intelligent Vision Systems (ACIVS 2015), Museo Diocesano, Italy. LNCS 9386, pp. 181–192 (2015)

Chen, Y., Tu, Y., Chiu, C., Chen, Y.: Embedded system for vehicle surrounding monitoring. In: Proceedings of IEEE International Conference on Power Electronics and Intelligent Transportation System, pp. 92–95 (2009)

Sato, T., Moro, A., Sugahara, A., Tasaki, T., Yamashita, A., Asama, H.: Spatio-temporal bird’s-eye view images using multiple fish-eye cameras. In: Proceedings of IEEE/SICE International Symposium on System Integration, Kobe, Japan, pp. 753–758 (2013)

Lin, C., Wang, M.: A vision based top-view transformation model for a vehicle parking assistant. Sensors 12, 4431–4446 (2012)

Jang, G., Kim, S., Kweon, I.: Single camera catadioptric stereo system. In: The 6th Workshop on Omnidirectional Vision, Camera Networks and Non-Classical Cameras (OMNIVIS2005) (2005)

Gluckman, J., Nayar, S.: Catadioptric stereo using planar mirrors. Int. J. Comput. Vis. 44(1), 65–79 (2001)

Hicks, R., Bajcsy, R.: Catadioptric sensors that approximate wide-angle perspective projections. In: Proceedings of International Conference on Pattern Recognition’00, pp. 545–551 (2000)

Svoboda, T., Pajdla, T.: Epipolar geometry for central catadioptric cameras. Int. J. Comput. Vis. 49(1), 23–37 (2002)

Micusik, B., Pajdla, T.: Structure from motion with wide circular field of view cameras. IEEE Trans. Pattern Anal. Mach. Intell. 28(7), 1135–1149 (2006)

Nayar, S.: Catadioptric omnidirectional camera. In: Proceedings of IEEE Computer Society Conference on Computer Vision and Pattern Recognition, pp. 482–488 (1997)

Baker, S., Nayar, S.: A theory of catadioptric image formation. In: Proceedings of IEEE International Conference on Computer Vision, Bombay, pp. 35–42 (1998)

Trivedi, M., Gandhi, T., McCall, J.: Looking-in and looking-out of a vehicle: selected investigations in computer vision based enhanced vehicle safety. IEEE Trans. Intell. Transp. Syst. 8(1), 109–120 (2007)

Ehlgen, T., Pajdla, T., Ammon, D.: Eliminating blind spots for assisted driving. IEEE Trans. Intell. Transp. Syst. 9(4), 657–664 (2008)

Cao, M., Vu, A., Barth, M.: A novel omni-directional vision sensing technique for traffic surveillance. In: Proceedings of IEEE Intelligent Transportation Systems Conference, pp. 648–653 (2007)

Kweon, G., Hwang-bo, S., Kim, G., Yang, S., Lee, Y.: Wide-angle catadioptric lens with a rectilinear projection scheme. Appl. Opt. 45(34), 8659–73 (2006)

Kweon, G., Choi, Y., Kim, G., Yang, S.: Extraction of perspectively normal images from video sequences obtained using a catadioptric panoramic lens with the rectilinear projection scheme. In: Technical Proceedings of the 10th World Multi-Conference on Systems, Cybernetics, and Informatics, pp. 67–75 (2006)

Vu, A., Barth, M.: Catadioptric omnidirectional vision sensor integration for vehicle-based sensing. In: Proceedings of 12th IEEE Conference on Intelligent Transportation Systems, pp. 120–126 (2009)

Yi, S., Ahuja, N.: An omnidirectional stereo vision system using a single camera. In: Proceedings of the 18th International Conference on Pattern Recognition (ICPR’06), pp. 861–865 (2006)

Sturzl, W., Boeddeker, N., Dittmar, L., Egelhaaf, M.: Mimicking honeybee eyes with a \(280{^{\circ }}\) field of view catadioptric imaging system. Bioinspiration Biomim. 5, 1–13 (2010)

Cheng, D., Gong, C., Xu, C., Wang, Y.: Design of an ultrawide angle catadioptric lens with an annularly stitched aspherical surface. Opt. Express 24(3), 2664–2677 (2016)

Gluckman, J., Nayar, S.: Catadioptric stereo using planar mirrors. Int. J. Comput. Vis. 44(1), 65–79 (2001)

Sanchez, J., Canton, M.: Space Image Processing. CRC Press, Boca Raton (1998)

Schonbein, M., Straus, T., Geiger, A.: Calibrating and centering quasi-central catadioptric cameras. In: Proceedings of IEEE International Conference on Robotics and Automation (ICRA), pp. 4443–4450 (2014)

Perdigoto, L., Araujo, H.: Estimation of mirror shape and extrinsic parameters in axial catadioptric systems. Image Vis. Comput. 54, 45–59 (2016)

Geyer, C., Daniilidis, K.: A unifying theory for central panoramic systems and practical implications. In: Proceedings of European Conference on Computer Vision (ECCV2000), pp. 445–461 (2000)

Jain, R., Kasturi, R., Schunck, B.: Machine Vision. McGraw-Hill, New York (1995)

Additional information

This research was supported by the research program funded by National Research Foundation (Ministry of Education) of Korea (NRF-2015R1D1A1A01057227).

Cite this article

Ko, YJ., Yi, SY. Catadioptric Imaging System with a Hybrid Hyperbolic Reflector for Vehicle Around-View Monitoring. J Math Imaging Vis 60, 503–511 (2018). https://doi.org/10.1007/s10851-017-0770-0
