Abstract

In actual industrial applications, the defect detection performance of deep learning models mainly depends on the size and quality of training samples. However, defective samples are difficult to obtain, which greatly limits the application of deep learning-based surface defect detection methods to industrial manufacturing processes. Aiming at solving the problem of insufficient defective samples, a surface defect detection method based on Frequency shift-Convolutional Autoencoder (Fs-CAE) network and Statistical Process Control (SPC) thresholding was proposed. The Fs-CAE network was established by adding frequency shift operation on the basis of the CAE network. The loss of high-frequency information can be prevented through the Fs-CAE network, thereby lowering the interference to defect detection during image reconstruction. The incremental SPC thresholding was introduced to detect defects automatically. The proposed method only needs samples without defects for model training and does not require labels, thus reducing manual labeling time. The surface defect detection method was tested on the air rudder image sets from the image acquisition platform and data augmentation methods. The experimental results indicated that the detection performance of the method proposed in this paper was superior to other surface defect detection methods based on image reconstruction and object detection algorithms. The new method exhibits low false positive rate (FP rate, 0%), low false negative rate (FN rate, 10%), high accuracy (95.19%) and short detection time (0.35 s per image), which shows great potential in practical industrial applications.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

Introduction

The air rudder is an essential structural component that controls the flight attitude of aircraft (Liu et al., 2021a, 2021b, 2021c). The surface defects of the rudder directly affect the strength and service life of the air rudder, which cause great hidden dangers to the flight safety of the aircraft (Torabi et al., 2021). Therefore, nondestructive detection of the defects on air rudder surface is crucial. Traditional surface defect detection of air rudder mainly relies on manual judgment, which is easy to miss minor defects and inefficient. Combining the outstanding advantages of machine vision, such as non-contact, non-damage, high degree of automation, high safety and reliability (Hu 2015; Tsai & Luo 2010; Sanghadiya & Mistry 2015), and the excellent detection capacity of deep learning (Badmos et al., 2020; Singh & Desai, 2022; Soukup & Huber-Mork 2014; Wei et al., 2018; Yi et al., 2017), a variety of detection methods for the surface defects of the air rudder have been designed.

Surface defect detection using deep network models generally requires many samples for training. However, the number of samples in actual industrial manufacturing is often unbalanced. In general, too few defect samples can be provided to meet the requirements of most deep learning networks. Researchers have been gradually noticing and exploring solutions to this situation (Božič et al., 2021). Current solutions are based on Measure Learning, Transfer Learning, Data Augmentation, Multi-modal, and Unsupervised Learning.

Common deep learning methods such as Convolutional Neural Networks (CNN) belong to Representation Learning in Supervised Learning, which predicts test samples through feature extraction. Measure Learning is another type of Supervised Learning that compares the similarity between tested and training samples to make predictions for the tested objects. Siamese Network is the most commonly used model in Measure learning (Liu et al., 2021a, 2021b, 2021c), which inputs the image of the tested sample and the image of the standard sample into two networks with the same architecture and parameters simultaneously. It determines the presence of defects by comparing the eigenvalue outputs of the two networks (Norouzi et al., 2012). Wu et al. (2019) used the Siamese Network as a feature extractor to solve the problem of imbalanced samples in button surface defect detection. It clusters non-defective samples according to the eigenvalues. The defective samples become outliers in the clustering process to detect defects on the surface of the button. Tao et al. (2022) designed a Dual-Siamese Network, which can detect the defects of the tested samples while realizing defect localization. In this method, Dense Feature Fusion (DFF) module was introduced to extract features and improve the accuracy of defect location. In these studies, it can be found that the Siamese Network still needs a certain number of negative samples to measure the outliers of the tested samples, so as to distinguish the positive and negative categories of the tested samples. At the same time, the detection object should have a relatively uniform style, so as to better cluster the eigenvalues. Therefore, these characteristics make it inapplicable to complex and changeable actual industrial scenarios.

Transfer Learning is the transfer of data or knowledge structures in related fields to complete or improve the learning effect of the target field or task. Transfer Learning in surface defect detection is achieved by using pre-trained models with large datasets. It can be used to solve similar defect detection problems and generally has good generalization ability (Yosinski et al., 2014). This method can effectively handle the problem of sample unbalance (Ren et al., 2017). Abu et al. (2021) studied the Transfer Learning effect of four basic models, including ResNet, VGG, MobileNet, and DenseNet, for surface defect detection of steel strips. The SEVERSTAL dataset and NEU dataset have been tested respectively to demonstrate the effectiveness of Transfer Learning in solving the problem of insufficient negative samples. Transfer Learning is based on the network model parameters obtained by pre-training many datasets and re-training them for specific detection samples. This method can obtain a detection model with solid generalization ability. However, its effectiveness in detecting specific objects is still limited by the quantity and quality of samples of the object, so it sometimes cannot meet the requirements of detection accuracy.

The method based on Data Augmentation compensates for the deficiency of data through specific methods (Jain et al., 2020). The simplest image augmentation methods include translation, rotation, scaling, and cropping the image or transplanting the surface defects of other objects to the current detected object (Tabernik et al., 2020). In addition, Generative Adversarial Network (GAN) is a deep learning network (2014) proposed by Lan Goodfellow in 2014, which has been widely used in Data Augmentation due to its unique capabilities. The basic idea of GAN is to combine two deep neural networks, Generator and Discriminator, to obtain a converged Generator through adversarial training. The Generator network can use random input values and finally generate images conforming to the characteristics of sample space through the neural network (He et al., 2020). Qin et al. (2020) applied GAN-based data augmentation technology to classify skin lesions images due to the lack of labeled data and class imbalanced datasets for the classification of skin lesions. Many evaluation indicators, including Inception Score (IS), Fréchet Inception Distance (FID), Precision, and Recall, were used to demonstrate the effectiveness of the method. Liu et al. (2019) proposed a GAN-based general defect sample simulation method. Under the GAN framework, the simulation network of the Encoder-Decoder structure was proposed, and the method was used in deep learning to detect surface defects. Through testing the data sets of button defects, road cracks, weld defects and microsurface, it was demonstrated that the simulation samples generated by the simulation network can be directly used to train surface detection network models. The idea of Data Augmentation is to directly increase the number of samples, hoping to fundamentally solve the problem of insufficient number of trained network models. Existing data enhancement methods are challenging to supplement various forms of high-quality samples, resulting in networks of varying qualities.

Multi-modal methods are based on introducing other modal information in the case of a small number of samples and utilizing information fusion to enhance the differences between different types of samples (Sun et al., 2020). Gao et al. (2019) proposed a vision-based defect detection method based on multi-level information fusion, which achieved feature fusion through three VGG16 networks and showed superior performance on the NEU dataset. The premise of multi-modal detection is multi-source data. That is, multiple sensors are required for acquisition. The expansion of hardware scale in actual engineering will reduce its application scenarios and decrease the applicability of detection tools.

Compared with Supervised Learning, the most significant difference of Unsupervised Learning is that no training sample is required. There are two main classification methods in Unsupervised Learning. One is probabilistic Unsupervised Learning, Restricted Boltzmann Machine (RBM), and its derivative models. The other is the deterministic type, Autoencoder (AE), and its derivative models (Chase & Simon, 1973). Among them, AE was improved by Hinton et al. (1993), which consists of an Encoder and a Decoder. The Encoder encodes the input data through specific encoding rules and compresses the dimensions of the input data to obtain the feature expression. The Decoder performs data reconstruction on the compressed feature expression and restores the input data as much as possible (Tschannen et al., 2018). The data reconstruction function of the AE network is noticed and used for surface defect detection based on the image. The sample images with defects are input into the AE network, which is trained entirely by the positive samples (no-defect samples). The defect areas in the reconstruction result will be reconstructed into defect-free areas. According to this feature, AE is improved and used for surface defect detection in the case of unbalanced samples. Mujeeb et al. (2019) proposed a defect detection method using AE to extract features which can be detected without defect samples during the training process. For different defects, the study used welding defect images and surface scratch images from NEU to verify the effect of the method. Heger et al. (2020) compared the reconstruction error of Convolutional Autoencoder (CAE) with the set thresholding to realize defect detection and applied the method to detect cracks and wrinkles in the formed metal of the production line to verify its effectiveness. Tsai and Jen (2021) proposed a regularization loss function to constrain CAE training. The feature distribution of no-defect samples was optimized so that there was an obvious distance between the features of defect-free and defective samples. Compared with ordinary CAE, the superiority of this model was verified by the textured surface data set. Liu et al., (2021a, 2021b, 2021c) proposed a semi-supervised anomaly detection method named Dual Prototype Automatic Encoder (DPAE). The network was an Encoder-Decoder-Encoder structure. Defects were determined by the distance between the final output features of non-defective samples and defective samples after passing through the network. The detection effect of the network was verified by constructing an aluminum profile surface defect (APSD) dataset. AE, the defect detection principle of its improved network, was based on image reconstruction, which exhibited the characteristics of training only with positive samples. At the same time, this method offered a broader range of use and the ability to detect unknown types of defects. It is one of the detection ideas that are currently under investigation.

In summary, many types of studies have been carried out to address the problem that the defect samples are insufficient to meet the general requirements of deep network training. Among them, Unsupervised Learning can use samples without labels for training. This method only uses positive samples as the training set of the network, which can solve the problem of insufficient defect samples. Moreover, it does not need to manually set labels to distinguish sample classification, which reduces the workload in the early stage of the detection. Based on this idea, the CAE in Unsupervised Learning uses the images of positive samples (non-defective samples) to train the network so that sample surface features can be extracted to reconstruct sample images. When the tested samples are input into the network, the presence of defects can be judged by observing whether the reconstructed residuals are abnormal or not. The network realizes surface defect detection by detecting the differences between original and reconstructed images, which requires manual judgment to determine the presence of abnormalities (Chow et al., 2020; Szarski & Chauhan, 2022).

Two problems need to be solved in current surface defect detection methods based on the reconstructed image of the CAE. One is that this method cannot automatically judge the existence of surface defects, and the other is the high-frequency loss during image reconstruction which interferes with defect detection. For the first problem, Statistical Process Control (SPC) is introduced to determine the threshold for defect detection. The distance between the reconstruction residual of the tested image and the average reconstruction residual of the non-defective training image is quantitatively distinguished to achieve defect detection (Qiu, 2020). For the second problem, according to the frequency principle of the deep network (F-Principle) (Luo et al., 2021; Xu et al., 2019), the low-frequency components are captured first during image reconstruction and then the relatively slow high-frequency components are captured. This principle makes CAE suitable for the reconstruction of low-frequency information but not for high-frequency information, resulting in the loss of reconstructed high-frequency information. The high-frequency information generally occurs in the areas where adjacent pixel values change rapidly (such as object edges, textures, and other details). These missing details also appear as abnormalities in the reconstructed residuals, interfering with defect detection. In this paper, CAE is modified into a Frequency-shifted Convolutional Autoencoder (Fs-CAE) with the frequency-shift function, in which high-frequency components are utilized during image reconstruction, thus greatly improving the ability of surface defect detection.

In this paper, a surface defect detection method based on Fs-CAE and SPC thresholding is proposed. The method uses positive samples to train the network in an unsupervised mode and does not require prior knowledge of defects and manually extracted features, nor does it require domain experts to discover possible defects. The effectiveness of the method is verified on the self-established air rudder surface image dataset. In summary, the contributions of this paper are listed as follows:

-

Aiming at the problem of insufficient defective samples, an air rudder surface defect detection method based on unsupervised learning CAE is proposed, which exhibits a detection accuracy of 95.19%.

-

The SPC thresholding is introduced as the classifier of defect detection to realize automatic defect detection of CAE image reconstruction.

-

CAE is improved into the Fs-CAE with the frequency-shift function, which solves the problem that the loss of high-frequency information interferes with defect detection in the process of image reconstruction.

This paper is organized as follows: Sect. 2 shows the problem and the theory and implementation of various methods. Section 3 shows the experimental details. Section 4 shows the detection results. The conclusions are drawn in Sect. 5.

Problem and methodology description

In this section, the styles of air rudders and their surface imperfections are described. The surface defect detection method of air rudder based on Fs-CAE reconstructed images proposed in this paper is introduced, including the image reconstruction method based on CAE, the defect detection method based on SPC thresholding, and the Fs-CAE architecture.

Problem and methodology description

The air rudder is mainly composed of connecting metalcore, rocker arm, and rudder surface. The rudder surface is shown in Fig. 1. The manufacturing process of the air rudder surface includes molding, heating, curing, and cutting, which can be investigated using related knowledge and theories such as mechanics, thermals, chemistry, materials, vibration, and tribology (Tao et al., 2020). This multidisciplinary coupled machining process results in complex product changes, including dimensional changes, surface roughness changes, and residual stress changes. For example, cracks caused by internal stress changes due to excessive heating rate, pits caused by external physical impact, and stick molds caused by insufficient release agent or uneven application, as shown in Fig. 2. These defects are the detection targets of this study.

The principle of image reconstruction based on CAE

AE consists of an Encoder and a Decoder. The Encoder is a fully connected layer, encoding the input data to obtain the feature expression. The Decoder is also a fully connected layer, decoding feature expression to restore the input data as much as possible. CAE is the improved network based on AE, introducing convolutional layers into the Encoder and Decoder instead of just using fully connected layers. The image input to CAE is encoded by the Encoder to obtain the features and then decoded and restored to an image by the Decoder. The introduction of the convolutional layer can better preserve the spatial information of the 2-dimensional signal so that the CAE has a better image restoration ability.

CAE maintains the spatial locality of the original image by extracting features through convolution operations on each neuron. Therefore, for a given input image \({x}_{i}\), the Encoder performs computations using Eq. (1):

where \({e}_{i}\) is the encoding result of the image \({x}_{i}\), \(\sigma \) is the activation function, \(*\) is the 2D convolution, \({F}^{n}\) is the \(n\)th 2D convolution filter, and \(b\) is the bias brought by the neurons in the encoder.

Then the reconstructed image for \({e}_{i}\) is decoded by the Decoder using Eq. (2):

where \({z}_{i}\) is the decoding result of the encoding result \({e}_{i}\) by the Decoder, \(\widetilde{{F}^{n}}\) is the \(n\)th 2D deconvolution filter in the Decoder, and \(\widetilde{b}\) is the bias brought by the neurons in the Decoder.

During this unsupervised training process, the network model updates the parameters by minimizing the reconstruction loss of the network using Eq. (3):

where \(\theta =\left[W,b,\widetilde{W},\widetilde{b}\right]\), \(\left[W,b\right]\) are the weights and biases of the neurons in the Encoder, \(\left[\widetilde{W},\widetilde{b}\right]\) are the weights and biases of the neurons in the Decoder, and \(M\) is the number of images in the training set.

At the same time, in order to enable the network model to effectively extract the features of samples even when there are many neurons in the hidden layer, a sparsity constraint is introduced in the hidden layer. The loss with sparse parameters is calculated using Eq. (4):

where \(h\) represents the number of neurons in the hidden layer, and \(\beta \) represents the sparsity ratio. \(\sum_{j=1}^{h}KL\left(p||{p}_{j}\right)\) represents the Kullback–Leibler (KL) divergence between the variables whose mean values are \(p\) and \({p}_{j}\), respectively, where \(p\) represents the expected average activation value, and \({p}_{j}\) represents the average activation value of the \(j\)th neuron node. The KL divergence between \(p\) and \({p}_{j}\) is calculated using Eq. (5):

As the training progresses, each \({p}_{j}\) will approach \(p\), and the reconstruction loss will gradually decrease, making the reconstructed image as consistent as possible with the original image. Image reconstruction is achieved through this process.

For the Encoder in this paper, the input is set as a grayscale image of a single channel. The Encoder contains three consecutive down-sampling layers. Each down-sampling layer includes a convolutional layer with the kernel of 3 × 3 and the stride of 1, a convolutional layer with the kernel of 4 × 4 and the stride of 2, a 0–1 normalization function, and a Rectifier Linear Unit (ReLU) activation function. After each down-sampling layer, the sizes of rows and columns of the image will be double-diminished, and the number of channels will be doubled, thus achieving a more robust representation of coding features. The convolutional layer with a non-unit stride is used instead of pooling to realize down-sampling. The pooling operation will bring about the loss of image pixel position information, which significantly reduces the reconstruction effect of the network. The final features obtained by down-sampling are flattened and then input to the fully connected layer to map to a 30-dimensional hidden layer, which is the output (Code) of the Encoder. Then, the Decoder performs reverse mapping according to the encoding process to reconstruct the input image. The Decoder adopts the symmetrical design with the Encoder, and its input is Code. The input of 30 channels is mapped through the fully connected layer to the flattened feature of the same dimension as the input of the fully connected layer in the Encoder. Then it is shaped as the same size as the result of continuous down-sampling in the Encoder and input to the Decoder. The Decoder consists of three consecutive up-sampling layers. Each up-sampling layer contains a convolutional layer with the kernel of 3 × 3 and the stride of 1, a deconvolutional layer named ConvTranspose with the kernel of 4 × 4 and the stride of 2, a 0–1 normalization function, and a ReLU activation function. After each up-sampling layer, the pixel row and column size of the image will be doubled, while the number of channels will be double-diminished to restore the features to the image gradually. Table 1 shows the structural parameters of the network used in this work.

Defect detection method based on CAE and SPC thresholding

The image reconstruction capability of CAE can be used in defect detection. Through training, the images output by CAE can be reconstructed into the input images. If the CAE is trained using only positive samples without defects, the tested samples with defects will be reconstructed into no-defect samples. In this way, the surface defect areas of tested sample with defects are reconstructed into the normal surface. Figure 3 shows the framework of the CAE architecture and the image reconstruction process. When the tested image with defects is input into CAE, the defect area in the original image becomes a normal area in the reconstructed image.

The image in the computer is essentially the visualization result of a matrix whose elements are pixel values. After the CAE reconstructs the image, the difference between the input test sample image and the reconstructed image yields a reconstructed residual matrix with the same size as the input image. The elements in the matrix are the reconstructed residual values of each pixel of the image. The absolute value of the elements in the matrix can be used to obtain the visualized residual image, as shown in Fig. 4. The existence of defects on the tested sample can be observed through the image reconstruction residual (Heger et al., 2020). However, this is the result of manual observation, and a method for quantitative and automatic detection of defects is still required.

In this paper, SPC thresholding is employed as the classifier to identify the defects in the tested samples, which provides a quantitative basis for defect detection. The process is shown in Fig. 5. The training image set of the network is set as \(\mathrm{X}\), where the image is \({x}_{j}\), \(j\in \left(1,\mathrm{M}\right)\), and \(\mathrm{M}\) is the number of images in the training set. The reconstructed image set of the training image set is set as \(\mathrm{Y}\), where the image is \({y}_{j}\), \(j\in \left(1,\mathrm{M}\right)\), and \(\mathrm{M}\) is the number of images in the reconstructed image set, which is the same as the training set. The residuals of \({x}_{j}\) and \({y}_{j}\) are \({res}_{j}=\left|{x}_{j}-{y}_{j}\right|\). The SPC thresholding \({T}_{d}\) for \(\mathrm{X}\) is calculated using Eqs. (6) to (9):

where

and

where \({\overline{res} }_{x}\) is the average reconstruction residual of the training set \(\mathrm{X}\), and \(\mathrm{C}\) is a control limit constant.

The tested image is set as \(x\), the reconstructed image as \(y\), and the reconstruction residual as \(res=\left|x-y\right|\). For \(x\), SPC thresholding for distance \(d\) is calculated using Eq. (10):

If \(d>{T}_{d}\), the detected image is considered to contain defects. Otherwise, it is flawless.

The SPC thresholding is calculated based on the training set. The training set consists of collected positive sample images and is continuously updated with the production process. To meet changing samples, SPC thresholding is dynamically changed by deriving an incremental SPC thresholding. The training set of newly added sample images is set as \({\mathrm{X}}^{^{\prime}}\), where the image is \({x}_{j}\), \(j\in \left(1,\mathrm{M}+\mathrm{L}\right)\), and \(\mathrm{L}\) is the number of new images in the training set. The reconstructed image set of the new training set is set as \({\mathrm{Y}}^{^{\prime}}\), where the image is \({y}_{j}\), \(j\in \left(1,\mathrm{M}+\mathrm{L}\right)\). The residuals of \({x}_{j}\) and \({y}_{j}\) are \({res}_{j}=\left|{x}_{j}-{y}_{j}\right|\). The new \({\sigma }_{d}\) and \({\mu }_{d}\) are calculated using Eq. (11) and (12):

Improved Fs-CAE network model

The Encoder and Decoder structures of traditional CAE are similar to CNN, which are composed of convolutional layers and fully connected layers. Therefore, CAE also follows the F-Principle of the deep network. The network model will preferentially learn the low-frequency components of the training set images, while some high-frequency components will be lost. Figure 6 shows the frequency component changes in spectrum image of the reconstructed image with the training process. The spectrum of the air rudder image shows the distribution state from the center to the surrounding area from the low frequency to the high frequency on the spectrum image. It can be seen that in the process of CAE network training from 100th epoch to 400th epoch, the low-frequency components are preferentially learned and reconstructed. Finally, compared to the original spectrum image, some high-frequency components are also not effectively learned. The high-frequency components appear in the image as detailed information such as object edges and textures. The loss of high-frequency components prevents CAE from effectively reconstructing the details in the image. As a result, high-amplitude areas other than the defect area will appear in the reconstructed residual image, interfering with defect detection. In theory, by continuously increasing the training epochs, the network model can gradually learn the high-frequency components of the image, but this introduces more expensive time costs and increases the risk of overfitting the network.

The Frequency-shift Convolutional Autoencoder (Fs-CAE) proposed in this paper is shown in Fig. 7, from which it can be seen that deep networks first achieve convergence in the low-frequency range. Before the encoding stage, the original image is transformed into a spectral image by Fourier transform and decomposed into five frequency bands by Butterworth filters with different pass frequency ranges (< 100 Hz, 100 Hz-200 Hz, 200 Hz-300 Hz, 300 Hz-400 Hz and > 400 Hz). These frequency bands are then inverse-Fourier transformed back to the spatial domain, and allocated to different channels of convolutional layer. It enables the network to learn information from different frequency bands and realizes the frequency shift operation. The features of different frequency ranges can be extracted parallelly by convolutional layers in the Encoder, and finally a high-dimensional feature is output. In the decoding stage, the features output by the encoder are reconstructed into an air rudder image in the spatial domain through the restriction of the loss function. Fs-CAE, which extracts the features of different frequency bands of the image, can be used to analyze each frequency component of the image. Due to the frequency shift, the CAE starts learning at the lowest frequency of each frequency band. Therefore, the proposed Fs-CAE can convert wideband frequency learning to multiple low-frequency learning, so that high-frequency components can be effectively learned. Numerical results show that Fs-CAE can uniformly reconstruct input sample images from low frequency to high frequency.

Experimental details

In this section, the experiments related to this paper are introduced in detail, including the hardware equipment for collecting air rudder images, the data sets used for algorithm training and testing, the operating environment of the detection algorithm, and the specific experimental process.

Experimental device

In order to collect the surface images of the air rudder, the air rudder surface defect detection platform was built, as shown in Fig. 8. Table 2 shows the segmental components of the air rudder surface defect detection platform. The platform includes industrial cameras, light sources, fixtures, positioning guide rails, a microcomputer control system, and a computer operating system. The platform includes two industrial cameras and four light sources. The camera model is MV-HS2000GM, with a resolution of 5472*3648 pixels. The lens model is MicroVision BT-11C0814MP10, with a focal length of 8 mm and a working distance from 0.1 m to infinity. The light source model is MV-WL600X27W-V, with a 600*27 mm light-emitting area. This combination can provide an adequate view field of 700*400 mm, suitable for the rudder surface of air rudders. In addition, the platform can realize a series of operations such as fixing the air rudder, adjusting the position of the industrial camera, acquiring images, and transmitting images to the computer.

Dataset

The dataset used in this paper consists of the air rudder images collected by the acquisition platform described in Sect. 3.1. A total of 500 sample images without surface defects and 36 sample images with surface defects were collected. The dataset includes a training set and a test set, which are used to train the network model and test the detection effect, respectively. The training set consists of 328 sample images without surface defects. The test set consists of 100 sample images without surface defects, 36 sample images with actual surface defects, and 72 sample images with generated defects. The source of sample images with generated defects will be described in Sect. 3.3.2.

Experimental process

The detection process of the air rudder surface defect is expressed in Fig. 9. The preliminary work is mainly to establish the dataset, including a training set for establishing the detection network model and a test set for the verification of the detection method effect. Then, the training stage includes the parameter training of the Fs-CAE network model and the calculation of the SPC thresholding. Finally, the testing stage includes the calculation of the image reconstruction residuals of the tested samples and defect detection.

Image preprocessing

Images captured by industrial cameras are not suitable for directly training and testing network models. In this paper, the surface defect detection of the air rudder surface is studied, and the air rudder in the image contains other components such as metalcore and rocker arm. The presence of these components and image backgrounds will impact the performance and detection efficiency of the network model. It is necessary to preprocess the air rudder image to segment the air rudder surface. The preprocessing process is illustrated in Fig. 10, including grayscale, binarization, mask segmentation, and grayscale transformation. It can be seen from Fig. 10 that the metal core, rocker arm of the air rudder, and background in the preprocessed image are all removed, leaving only the air rudder surface. In addition, the contrast of the air rudder surface image is increased through grayscale transformation, which makes the surface details more obvious.

Data enhancement

The testing dataset consists of air rudder images without defects and with defects. The number of air rudders containing actual surface defects is small, so the verification of the detection method is not sufficient. Generative Adversarial Network (GAN) is a widely used data augmentation method. It consists of two networks, one of which is a Generator Network that inputs random noise to generate fake images. Another is the Discriminator network, which identifies the authenticity of an image. During the training process, the generator and discriminator reach topological equilibrium by playing games. The recognition accuracy of the Discriminator for both the real and the fake images generated by the Generator reaches about 50%. GAN is often used as a data enhancement technique because of its particular structure.

In this paper, Deep Convolutional Generative Adversarial Network (DCGAN) (Radford et al., 2015) is adopted as the data enhancement method. DCGAN uses convolutional layers instead of the fully connected layer in GAN, and sets a stride for the convolution to automatically complete the down-sampling. In addition, the network introduces a Batch Normalization layer (BN layer) for normalization, which helps to converge. Compared with GAN, DCGAN has a stronger generation ability.

The framework of DCGAN in this paper is shown in Fig. 11. The Generator consists of 6 deconvolution layers. When the last layer uses Tanh as the activation function, the activation functions of the first five layers use ReLU to ensure that the output value is in the range of 0–255 BGR. The Discriminator contains four convolutional layers. Leaky ReLU is used as the activation function, and the Logistic function is used to test the convolution results to give the authenticity probability of the input image. The random noise subject to normal distribution is input into the Generator, the fake image, and then into the Discriminator for identification. The loss function can be calculated by comparing the identification result with the original label of the image. The RMSProp optimizer in the PyTorch architecture is used as the optimizer to update the parameters of the Generator and Discriminator according to the loss function.

The defects generated by DCGAN are transplanted to the sample without defects, and the air rudder image with generated defects is obtained as shown in the Fig. 12. The Inception Score (IS) was introduced to demonstrate the quality of the generated defective images. IS is usually calculated with the help of Google's Inception Net-V3 classification model. After the image is input to Inception Net-V3, a 1000-dimensional label vector will be output, and each dimension of the vector represents the probability that the input image belongs to a certain category of samples. For a given input image \(x\) with the corresponding output vector \(v\), There is a distribution \(\mathrm{p}\left({v}_{k}|x\right)\). For \(\mathrm{N}\) images, the edge distribution \(\widehat{\mathrm{p}}\left(v\right)\) is as Eq. (13):

To sum up, the formula of IS is as Eq. (14):

where \(\sum_{k=1}^{\mathrm{N}}KL\left(\mathrm{p}\left(v|{x}_{k}\right)\Vert \widehat{\mathrm{p}}\left(v\right)\right)\) represents the KL divergence between \(\mathrm{p}\left(v|{x}_{k}\right)\) and \(\widehat{\mathrm{p}}\left(v\right)\). The KL divergence between \(\mathrm{p}\left(v|{x}_{k}\right)\) and \(\widehat{\mathrm{p}}\left(v\right)\) is calculated using Eq. (15):

IS can quantitatively reflect the probability distribution of the output. The smaller the value, the more likely the generated image belongs to a certain category, and the higher the quality of the generated image. Three IS values for the dataset were calculated, with only 36 real defects, only 36 generated defects, and both 18 real defects and 18 generated defects, which get the values 1.28, 1.58 and 1.33. The IS value does not increase significantly when there are both real defects and generated defects, indicating that the generated defects and real defects are identified as the same type by the classifier, which proves the quality and availability of the generated defects.

Training stage

The training set used in the network model consists entirely of air rudder images without defects. The proposed model and method are implemented with PyTorch. They are executed on a PC equipped with an NVIDIA GTX 1660-TI GPU. The mean square error (MSE) is used as the loss function to update the network model parameters. The optimizer chooses Adam to reduce the reconstruction loss. The epoch is 500, the batch size is 32, and the learning rate is 0.001. Under the mentioned computational settings, this training process took about 9 h. All the sample images in the training set are input into the trained network model to obtain the reconstructed image set. The reconstruction residuals of each training set image are calculated to obtain the mean and variance. Finally, the control limit constant is selected to calculate the SPC thresholding.

Testing stage

The test set consists of sample images without surface defects, sample images with actual surface defects, and sample images with generated defects. The sample image without surface defects has a label of 1, and the sample image with actual surface defects or generated defects has a label of 0. The test set images are traversed and input to the defect detection algorithm, and the reconstruction result of the reconstruction network is generated. The reconstruction residual and the distance between it and the average reconstruction residual of the training set are calculated. The distance with the SPC thresholding is calculated to determine the presence of defects and the results are output in the form of labels. The label rules are the same as the test set.

Experimental results and discussion

In this section, the experimental results are presented. The image reconstruction effect and surface defect detection results based on Fs-CAE are respectively shown. A comprehensive comparison with other image reconstruction networks, including traditional CAE, Variational Autoencoder (VAE) (Kingma & Welling, 2013), and Convolutional Autoencoder with Skip-layer connections (Skip-CAE) (Mao et al., 2016), is carried out to verify the performance and effectiveness of the proposed defect detection method.

Image reconstruction results and discussion

The loss function is an important indicator to measure the performance of a network. Unlike Supervised Learning, which computes a loss function by comparing predicted and accurate labels, the loss function of the reconstruction network computes the difference between the reconstructed image and the original image. In this paper, the MSE loss function is selected to quantitatively reflect the image reconstruction effect of the reconstruction network by using Eq. (16):

where \(\mathrm{N}\) is the number of images in the test set, and \(k\) is the index of the images in the test set, \(k\in \left(1,\mathrm{N}\right)\). \(\mathrm{h}\) and \(\mathrm{w}\) represent the height and width of the image in the test set, respectively. \(u\) is the column coordinate of the image pixel, \(v\) is the row coordinates of the image pixel, and \(e\left(u,v\right)\) is the reconstruction residual value of the pixel in column \(u\) and row \(v\).

In the process of image reconstruction, the loss function quantitatively reflects the difference between the output of the network model and the original image. Figure 13 shows the loss functions of Fs-CAE and the other three network models. It can be seen that the loss functions of these four models can be effectively reduced to a low level.

The loss function of CAE has continuous fluctuations, but it still tends to a low level in general. The loss function of VAE has several large fluctuations, and the final stable value is lower than that of CAE. Skip-CAE shows a fast convergence rate in the early stage of training, and its loss function reaches a low and stable value in small continuous fluctuations. The loss function of Fs-CAE also has a fast rate of decline, and the overall fluctuation is the smallest, and the stable value is the lowest.

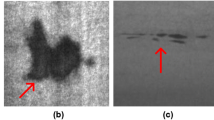

The loss function can only show the gradient convergence of the network model because of its specific calculation process. Although the effect of image reconstruction can be described quantitatively, the details of the effect cannot be visualized. In addition, the ability to distinguish the defect areas in the reconstruction residual is the focus of defect detection. Figure 14 shows the reconstructed residual images of Fs-CAE and the other three network models for sample images with crack defects. The existence of defects can be observed in the reconstructed residual images of CAE. However, the reconstruction residuals of surface area and air rudder edge severely interfere with the observation of defects. The reconstruction effect of the air rudder surface area in the reconstructed residual image of VAE is improved. However, the residual error of the edge is still significant, which greatly interferes with subsequent defect detection. Although the edge residuals in the reconstructed residual image of Skip-CAE are suppressed, the defect residuals are weakened to a certain extent. The defects in the reconstructed residual images of Fs-CAE are the most obvious, while the residuals in other areas only show sporadic noise.

According to the loss functions and reconstruction residuals of these network models, it can be seen that CAE cannot reduce the loss function to a low level because of its preferential capture of low-frequency components. The reconstructed details are poor, and the surface and edge details are largely absent, which interferes with defect detection. VAE improves the image reconstruction ability by introducing probability distribution but still cannot solve the convergence problem of high-frequency components. Its loss function is still relatively large, and the reconstruction residuals of the edge are still apparent. Skip-CAE adds skip-layer connections between the Encoder and the Decoder compared with the traditional CAE. Introducing this unique structure enhances the depth of feature transfer, which dramatically increases the network convergence speed and reduces the loss function. As a result, the detail reconstruction effect of the surface and edge is improved. However, the defect area is also partially reconstructed so that the reconstruction residuals of the defect become small. The idea of Fs-CAE is to improve image reconstruction by forcing the network model to learn the high-frequency components of the image through the decomposition and translation of the spectrum. From the results, the Fs-CAE network exhibits better convergence ability and can reconstruct the detailed information of the original image more completely than the others. The defect area is reconstructed as the normal surface area, and the reconstruction residuals of the defect have larger amplitudes and are more easily detected than the others.

Overall, Fs-CAE shows excellent performance in surface defect detection based on image reconstruction. Figure 15 shows the reconstruction residuals of Fs-CAE for different types of defects on the air rudder. It can be seen that defects such as Cracks, Pits, and Stick molds have apparent reconstruction residuals, which can be easily identified manually. It facilitates the implementation of subsequent automatic defect detection methods.

Defect detection results and discussion

This paper introduces the SPC thresholding method as a classifier to detect the existence of defects in the air rudder image. The detection result is output as a 0 or 1 label. 1 means no defect, and 0 means the defect exists. The rules are consistent with the test set labels. According to the calculation method of SPC thresholding, the mean and variance of the reconstruction residuals of each network model for the training set are calculated, that is, \({\mu }_{d}\) and \({\sigma }_{d}\) in \({T}_{d}={\mu }_{d}+\mathrm{C}\cdot {\sigma }_{d}\). The control limit constant \(\mathrm{C}\) is set manually, and \(\mathrm{C}\) usually takes a value from 0 to 1. In this paper, five values of 0.1, 0.3, 0.5, 0.7, and 0.9 are selected to verify the effect of defect detection. The defect detection performances of CAE, VAE, Skip-CAE, and Fs-CAE under different control limit constants \(\mathrm{C}\) are determined by the P–R curve (Buckland & Gey, 1994) and ROC curve (Hoo et al., 2017), and the optimal value of \(\mathrm{C}\) is determined.

The P-R curve takes Recall as the abscissa axis and Precision as the ordinate axis. A Recall and Precision point pair is obtained for a network model according to its detection result on the test set. Generally, the closer the overall P-R curve is to the (1,1) point, the better the performance of the network model. Figure 16 shows the P-R curves of CAE, VAE, Skip-CAE, and Fs-CAE at \(\mathrm{C}\) values of 0.1, 0.3, 0.5, 0.7, and 0.9, respectively.

The full name of the ROC curve is the Receiver Operating Characteristic curve. The abscissa of the curve is False Positive rate (FPR), and the ordinate is True Positive rate (TPR). A TPR and FPR point pair is obtained for a certain network model according to its detection result on the test set. Normally, the curve should be above the line between (0, 0) and (1, 1). The farther the distance from the line is, the better the performance of the network model. However, when many positive samples are detected as negative samples, there will be an abnormal situation where the ROC curve is below the line connecting (0, 0) and (1, 1). Although the shape of the curve can qualitatively determine the performance of the model, a numerical value, such as Area Under ROC Curve (AUC), is always desirable to assess the quality of a classifier (Lobo et al., 2008). The value of AUC is the area below the ROC curve, which is typically between 0.5 and 1.0, with a larger AUC representing better performance. Figure 17 shows the ROC curves of CAE, VAE, Skip-CAE, and Fs-CAE at \(\mathrm{C}\) values of 0.1, 0.3, 0.5, 0.7, and 0.9, respectively. Table 3 shows the corresponding AUC values of the ROC curves for CAE, VAE, Skip-CAE, and Fs-CAE when \(\mathrm{C}\) is 0.1, 0.3, 0.5, 0.7, or 0.9 respectively.

The optimal control limit constant \(\mathrm{C}\) is determined to be 0.7 for CAE, 0.9 for VAE, 0.1 for Skip-CAE, and 0.5 for Fs-CAE by P-R curve, ROC curve, and AUC value, respectively. Table 4(a) shows the False positive rate (FP rate), False negative rate (FN rate), and Accuracy of each network model under these \(\mathrm{C}\) values, providing a more obvious indication of the detection results. The results show that the traditional CAE has an FP rate of as high as 96.29%, indicating that the model cannot effectively detect defects in air rudder images. VAE has a 23.15% FP rate, 70% FN rate, and 54.33% Accuracy, and the detection performance is poor. Compared with VAE, the FP and FN rates of Skip-CAE are slightly lower, and the Accuracy is slightly improved, but the performance is still at a low level. The Fs-CAE model proposed in this paper can reduce the FP rate to 0% and the FN rate to 10%. The Accuracy is increased to 95.19%, which has a relatively good defect detection performance. The FP rate is the most critical indicator in actual industrial production, representing the proportion of undetected defects out of all samples with defects. The FP rate of Fs-CAE is 0%, indicating that all the samples with defects can be detected, which makes the model possible to be applied to actual industrial production.

The SPC thresholding is introduced to judge the outlier degree of the detected sample images according to statistical and scientific principles, providing an automatic classification process which manual regulation. By comparing the detection results of the reconstruction images of the Fs-CAE network under the manual threshold and the SPC thresholding method, the superiority of the automatic determination method based on statistics is demonstrated. The SPC threshold of the Fs-CAE model for the train set is 374.86 when the optimal control limit constant \(\mathrm{C}\) is 0.5. Manual thresholds are set to 250, 300, 350, 400, 450 and 500. Table 5 shows the detection results. The results show that the SPC thresholding method achieved the lowest FP rate and FN rate and the highest accuracy. Manual threshold is difficult to try to get good detection results. The optimal manual threshold requires continuous attempts to obtain, and it is difficult to apply to the constantly updated sample set in actual production.

Table 6 shows the detection time and average detection time of each network model under these C values for the rudder test set including 100 images. The results show that the traditional CAE has the least amount of average detection time of 0.29 s, and Skip-CAE has the most amount of average detection time of 0.41 s. Fs-CAE and Skip-CAE with more complex structures are more time-consuming than CAE and Skip-CAE, but the detection time of this type of detection method is short and the detection speed is fast, which can meet the requirements of online detection in actual production.

Comparison with the detection results of other algorithms

In the research of object detection algorithms requiring a large number of samples to train, the small sample problem has also been noticed, and this type of problem is called Few-shot object detection (FSOD). Several well-performed FSOD algorithms were used to compare with our method, including Dense Relation Distillation with Context-aware Aggregation (DCNet) (Hu et al., 2021), Few-Shot Object Detection via Contrastive Proposal Encoding (FSCE) (Sun et al., 2021), Class Margin Equilibrium for Few-shot Object Detection (CME) (), and Few-Shot Classification Network (FSCN) (Li et al., 2021a, 2021b). Table 7 shows the FP rate, FN rate, and Accuracy of these network model. Several algorithms have achieved low FP rate, FN rate and high accuracy. Among them, the CME have the lowest FP rate of 11.11% and FN rate of 16%, and the highest accuracy of 85.29%.

The effect of CME is close to the algorithm in this paper, but it doesn’t have an FP rate below 10%, with the risk of a defect going undetected. In comparison, the method in this paper is more suitable for application in industrial defect detection.

Conclusions

In this paper, we aim to solve the problems of large sample demand and long acquisition cycle of defect samples in practical applications of deep learning-based defect detection methods. A defect detection method combining Fs-CAE and SPC thresholding is proposed to detect surface defects of air rudder through image reconstruction. The method does not require defective samples and manual labels for training, which greatly saves manual labeling time. At the same time, a Fs-CAE network based on frequency shift is proposed to solve the loss of reconstruction details caused by the frequency principle (F-Principle) of the deep network. In addition, the incremental SPC thresholding is introduced to evaluate the reconstruction residual quantitatively, and realized the automatic detection of defects. The detection results show that the accuracy of the defect detection method combining Fs-CAE and SPC thresholds reaches 95.19%, and average detection time on test set of this method as short as 0.35 s. In particular, the method achieves an FP rate of 0%, indicating its potential for practical engineering applications. The proposed method realizes surface defect detection trained only with positive samples, realizing high detection accuracy and showing high robustness to different defects.

The dataset used in this paper is established based on images acquired by a specific detection platform with relatively fixed lighting conditions and acquisition angles. Before the method is to be applied to actual engineering detection, it still needs to be validated for its effectiveness and robustness by using more objects under different environments and working conditions. In addition, the method proposed in this paper is only used for the detection of air rudder surface defects. In the future, our method can be used to further investigate the possibility of defect type identification.

References

Abu, M., Amir, A., Lean, Y. H., Zahri, N. A. H., & Azemi, S. A. (2021). The performance analysis of transfer learning for steel defect detection by using deep learning. In Journal of Physics: Conference Series, 1755(1), 012041.

Badmos, O., Kopp, A., Bernthaler, T., & Schneider, G. (2020). Image-based defect detection in lithium-ion battery electrode using convolutional neural networks. Journal of Intelligent Manufacturing, 31(4), 885–897.

Božič, J., Tabernik, D., & Skočaj, D. (2021). Mixed supervision for surface-defect detection: From weakly to fully supervised learning. Computers in Industry, 129, 103459.

Buckland, M., & Gey, F. (1994). The relationship between recall and precision. Journal of the American Society for Information Science, 45(1), 12–19.

Chase, W. G., & Simon, H. A. (1973). Perception in chess. Cognitive Psychology, 4(1), 55–81.

Chow, J. K., Su, Z., Wu, J., Tan, P. S., Mao, X., & Wang, Y. H. (2020). Anomaly detection of defects on concrete structures with the convolutional autoencoder. Advanced Engineering Informatics, 45, 101105.

Gao, Y., Gao, L., Li, X., & Wang, X. V. (2019). A multilevel information fusion-based deep learning method for vision-based defect recognition. IEEE Transactions on Instrumentation and Measurement, 69(7), 3980–3991.

Goodfellow, I., Pouget-Abadie, J., Mirza, M., Xu, B., Warde-Farley, D., Ozair, S., & Bengio, Y. (2014). Generative adversarial nets. Advances in Neural Information Processing Systems, 27, 1–9.

He, J., Zheng, J., Shen, Y., Guo, Y., & Zhou, H. (2020). Facial image synthesis and super-resolution with stacked generative adversarial network. Neurocomputing, 402, 359–365.

Heger, J., Desai, G., & El Abdine, M. Z. (2020). Anomaly detection in formed sheet metals using convolutional autoencoders. Procedia CIRP, 93, 1281–1285.

Hoo, Z. H., Candlish, J., & Teare, D. (2017). What is an ROC curve? Emergency Medicine Journal, 34(6), 357–359.

Hu, G. H. (2015). Automated defect detection in textured surfaces using optimal elliptical Gabor filters. Optik, 126(14), 1331–1340.

Hu, H., Bai, S., Li, A., Cui, J., & Wang, L. (2021). Dense relation distillation with context-aware aggregation for few-shot object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 10185–10194).

Jain, S., Seth, G., Paruthi, A., Soni, U., & Kumar, G. (2020). Synthetic data augmentation for surface defect detection and classification using deep learning. Journal of Intelligent Manufacturing, 33, 1007–1020.

Kingma, D. P., & Welling, M. (2013). Auto-encoding variational bayes. arXiv preprint arXiv:1312.6114.

Li, B., Yang, B., Liu, C., Liu, F., Ji, R., & Ye, Q. (2021a). Beyond max-margin: Class margin equilibrium for few-shot object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7363–7372).

Li, Y., Zhu, H., Cheng, Y., Wang, W., Teo, C, S., Xiang, C., Vadakkepat, P., & Lee, T. H. (2021b). Few-shot object detection via classification refinement and distractor retreatment. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 15395–15403).

Liu, J., Song, K., Feng, M., Yan, Y., Tu, Z., & Zhu, L. (2021a). Semi-supervised anomaly detection with dual prototypes autoencoder for industrial surface inspection. Optics and Lasers in Engineering, 136, 106324.

Liu, L., Cao, D., Wu, Y., & Wei, T. (2019). Defective samples simulation through adversarial training for automatic surface inspection. Neurocomputing, 360, 230–245.

Liu, S., Bao, J., Lu, Y., Li, J., Lu, S., & Sun, X. (2021b). Digital twin modeling method based on biomimicry for machining aerospace components. Journal of Manufacturing Systems, 58, 180–195.

Liu, X., Tang, X., & Chen, S. (2021c). Learning a similarity metric discriminatively with application to ancient character recognition. In International Conference on Knowledge Science, Engineering and Management, 12815, 614–626.

Lobo, J. M., Jiménez-Valverde, A., & Real, R. (2008). AUC: A misleading measure of the performance of predictive distribution models. Global Ecology and Biogeography, 17(2), 145–151.

Luo, T., Ma, Z., Wang, Z., Xu, Z. Q. J., & Zhang, Y. (2021). An upper limit of decaying rate with respect to frequency in deep neural network. arXiv preprint arXiv:2105.11675.

Mao, X., Shen, C., & Yang, Y. B. (2016). Image restoration using very deep convolutional encoder-decoder networks with symmetric skip connections. Advances in Neural Information Processing Systems, 29, 1–9.

Mujeeb, A., Dai, W., Erdt, M., & Sourin, A. (2019). One class based feature learning approach for defect detection using deep autoencoders. Advanced Engineering Informatics, 42, 100933.

Norouzi, M., Fleet, D. J., & Salakhutdinov, R. R. (2012). Hamming distance metric learning. Advances in Neural Information Processing Systems, 25, 1061–1069.

Qin, Z., Liu, Z., Zhu, P., & Xue, Y. (2020). A GAN-based image synthesis method for skin lesion classification. Computer Methods and Programs in Biomedicine, 195, 105568.

Qiu, P. (2020). Big data? Statistical process control can help! The American Statistician, 74(4), 329–344.

Radford, A., Metz, L., & Chintala, S. (2015). Unsupervised representation learning with deep convolutional generative adversarial networks. arXiv preprint arXiv:1511.06434.

Ren, R., Hung, T., & Tan, K. C. (2017). A generic deep-learning-based approach for automated surface inspection. IEEE Transactions on Cybernetics, 48(3), 929–940.

Sanghadiya, F., & Mistry, D. (2015). Surface defect detection in a tile using digital image processing: Analysis and evaluation. International Journal of Computer Applications, 116(10), 33–35.

Singh, S. A., & Desai, K. A. (2022). Automated surface defect detection framework using machine vision and convolutional neural networks. Journal of Intelligent Manufacturing, 1–17.

Soukup, D., & Huber-Mörk, R. (2014). Convolutional neural networks for steel surface defect detection from photometric stereo images. In International Symposium on Visual Computing, 8887, 668–677.

Sun, B., Li, B., Cai, S., Yuan, Y., & Zhang, C. (2021). Fsce: Few-shot object detection via contrastive proposal encoding. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (pp. 7352–7362).

Sun, Y., Yin, S., & Teng, L. (2020). Research on multi-robot intelligent fusion technology based on multi-mode deep learning. International Journal of Electronics and Information Engineering, 12(3), 119–127.

Szarski, M., & Chauhan, S. (2022). An unsupervised defect detection model for a dry carbon fiber textile. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-022-01964-7

Tabernik, D., Šela, S., Skvarč, J., & Skočaj, D. (2020). Segmentation-based deep-learning approach for surface-defect detection. Journal of Intelligent Manufacturing, 31(3), 759–776.

Tao, J., Qin, C., Xiao, D., Shi, H., Ling, X., Li, B., & Liu, C. (2020). Timely chatter identification for robotic drilling using a local maximum synchrosqueezing-based method. Journal of Intelligent Manufacturing, 31(5), 1243–1255.

Tao, X., Zhang, D. P., Ma, W., Hou, Z., Lu, Z., & Adak, C. (2022). Unsupervised anomaly detection for surface defects with dual-siamese network. IEEE Transactions on Industrial Informatics. https://doi.org/10.1109/TII.2022.3142326

Torabi, A. R., Shams, S., Narab, M. F., & Atashgah, M. A. (2021). Unsteady aero-elastic analysis of a composite wing containing an edge crack. Aerospace Science and Technology, 115, 106769.

Tsai, D. M., & Jen, P. H. (2021). Autoencoder-based anomaly detection for surface defect inspection. Advanced Engineering Informatics, 48, 101272.

Tsai, D. M., & Luo, J. Y. (2010). Mean shift-based defect detection in multicrystalline solar wafer surfaces. IEEE Transactions on Industrial Informatics, 7(1), 125–135.

Tschannen, M., Bachem, O., & Lucic, M. (2018). Recent advances in autoencoder-based representation learning. arXiv preprint arXiv:1812.05069.

Wei, P., Liu, C., Liu, M., Gao, Y., & Liu, H. (2018). CNN-based reference comparison method for classifying bare PCB defects. The Journal of Engineering, 16, 1528–1533.

Wu, S., Wu, Y., Cao, D., & Zheng, C. (2019). A fast button surface defect detection method based on Siamese network with imbalanced samples. Multimedia Tools and Applications, 78(24), 34627–34648.

Xu, Z. Q. J., Zhang, Y., & Xiao, Y. (2019). Training behavior of deep neural network in frequency domain. In International Conference on Neural Information Processing, 11953, 264–274.

Yi, L., Li, G., & Jiang, M. (2017). An end-to-end steel strip surface defects recognition system based on convolutional neural networks. Steel Research International, 88(2), 1600068.

Yosinski, J., Clune, J., Bengio, Y., & Lipson, H. (2014). How transferable are features in deep neural networks? Advances in Neural Information Processing Systems, 27, 3320–3328.

Acknowledgements

This work is partially supported by National Natural Science Foundation of China (Grant number 52175461, 11632004 and U1864208); Intelligent Manufacturing Project of Tianjin (Grant number 20201199); Fund for the High-level Talents Funding Project of Hebei Province (Grant number B2021003027); Key Program of Research and Development of Hebei Province (Grant number 202030507040009); Innovative Research Groups of Natural Science Foundation of Hebei Province (Grant number A2020202002); Top Young Talents Project of Hebei Province, China (Grant number 210014).

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Competing interest

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work reported in this paper.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yang, Z., Zhang, M., Chen, Y. et al. Surface defect detection method for air rudder based on positive samples. J Intell Manuf 35, 95–113 (2024). https://doi.org/10.1007/s10845-022-02034-8

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10845-022-02034-8