Abstract

In this study, an innovative compact heat exchanger (CHE), newly designed and manufactured using metal additive manufacturing (MAM) technology, was investigated numerically and experimentally. Experiments were carried out to determine the hot water (\(hw\)) and cold water (\(cw\)) outlet temperatures of the CHE. The CFD analysis gave average outlet temperatures of 48.24 and 35.38 °C for the \(hw\) and \(cw\) flow loops of the CHE, respectively, while the measured outlet temperatures were 48.50 and 35.72 °C, respectively. The numerical and experimental results of the CHE are therefore in agreement at the given boundary conditions. Furthermore, the heat transfer rate of the CHE, which has a smaller volume, was found to be approximately 47.7% higher than that of standard brazed plate heat exchangers (BPHEs) produced by traditional methods. Because every additional experiment on the CHE increases development time and cost, models were developed to predict the results of unperformed experiments using three machine learning methods: artificial neural networks (ANN), multiple linear regression (MLR) and support vector machines (SVM). In the models developed for each experiment, the source and inlet temperatures of both \(hw\) and \(cw\) and the volumetric flow rate of \(cw\) were selected as input parameters, and the \(hw\) and \(cw\) outlet temperatures of the CHE were estimated from them. ANN achieved the best performance, and there was no significant performance difference between the other two methods.

Introduction

One of the most important pieces of equipment in engineering applications is the plate heat exchanger (HE), which is commonly used in brazed and gasketed forms in heating and cooling applications. However, compact HEs with complex three-dimensional (3D) structures cannot be manufactured by conventional methods. Metal additive manufacturing (MAM), an innovative production method, can now be used to manufacture HEs with smaller volumes and higher heat transfer rates. Both academic studies and industrial applications concerned with manufacturing qualified parts from different metal powders using MAM technology are increasing. Owing to these new production applications, MAM has become a very important method for manufacturing complex, technologically advanced 3D equipment that may be impossible to produce with traditional techniques (Bineli et al. 2011; Hudák et al. 2013; Roberts et al. 2009; Ventola et al. 2014). Tsopanos et al. (2005) and Manfredi et al. (2013) determined how the quality of parts manufactured with MAM technology depends on input process parameters and material properties. Manfredi et al. (2013), Roberts et al. (2009) and Kwon et al. (2020) also investigated the manufacture of complex parts using MAM technology. Dong et al. (2007), Foroozmehr et al. (2016) and Peyre et al. (2015) applied 3D finite element models (FEM) to the dimensional analysis of the melt pool during the MAM process; in these studies, the thermal cycles of the melt pool, its fusion depths and the relationship between them were determined experimentally and numerically. Sarafraz and Hormozi (2016) observed that increasing the fluid inlet temperature improves the heat transfer performance of a heat exchanger, while the pressure drop increases with increasing mass flow rate and concentration. İpek et al. (2017) experimentally investigated the exergy loss of a newly designed compact heat exchanger (CHE), reporting a maximum exergy loss of about 7.6 kW and a minimum of about 4.65 kW.

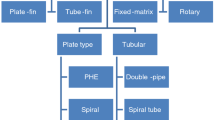

Such equipment can now be manufactured directly as a monoblock from the CAD design data (Cui and Cui 2015; Hussein et al. 2013; Kan et al. 2015; Roberts et al. 2009). MAM technology is used to produce high-value-added technical components of various sizes with complex structures, predominantly in healthcare and space exploration, the defense industry, electronics, avionics, metrology, robotics, industrial processes, telecommunications, the automotive industry, satellite systems and aircraft components, cryogenic applications, gas and steam turbines, mini jet turbines, and compressor and internal combustion engine parts (Chabot et al. 2019; Jiang et al. 2020; Penumuru et al. 2020; Zhao and Guo 2019). The HE is among the most important pieces of equipment in a range of engineering applications. HEs come in different types and geometries under various names, including evaporators, condensers, heaters and coolers. Plate HEs, which are commonly used in heating and cooling systems, require sealing and brazing during manufacture. However, HEs that heat and cool with a high coefficient of performance (COP), use innovative channel and wing structures, and combine a high heat transfer rate with a minimal size are increasingly developed using new, up-to-date technologies (Hajabdollahi and Seifoori 2016; Khudheyer and Mahmoud 2011; Ranganayakulu et al. 2017; Sheikholeslami et al. 2016).

MAM technology is an innovative method for manufacturing CHEs with complex structures (Bineli et al. 2011; Hussein et al. 2013; Kan et al. 2015; Manfredi et al. 2013; Roberts et al. 2009; Usta and Köylü 2012). For HEs manufactured with MAM technology, it has been observed that damage to the heat exchanger body can be repaired and that requirements such as brazing and sealing are eliminated. Many academic studies in the literature are based on computational fluid dynamics (CFD) analyses of plate HEs. These studies evaluated the numerical methods used in thermal and hydrodynamic analyses and investigated important parameters such as heat transfer performance, the heat transfer coefficient, and the Reynolds and Nusselt numbers (Dong et al. 2007; Ebrahimzadeh et al. 2016; Han et al. 2010; Khudheyer and Mahmoud 2011; Roberts et al. 2009). Researchers have also reported the effect of artificial roughness on heat transfer coefficient enhancement; according to these CFD analyses, rough surfaces affect heat transfer positively (Cui and Cui 2015; Ventola et al. 2014). Metallic powders such as titanium, nickel, cobalt, chromium, aluminum and stainless steel GP1 (AISI 316) have all been used to produce HEs with MAM technology (Fluent 2015). Because the average grain size of the metal powders used ranges from 20 to 200 μm, MAM technology can produce parts with different roughnesses and internal pore structures (Brooks and Molony 2016; Kan et al. 2015; Roberts et al. 2009; Usta and Köylü 2012). In addition, the production parameters selected during the process affect the build position of the HEs as well as their production quality and cost.
Investigators have determined that the quality of parts manufactured with the MAM method depends on input process parameters and material properties (Dong et al. 2007; Ebrahimzadeh et al. 2016; Roberts et al. 2009). One of the most important of these parameters is temperature, whose analysis provides the basis for feedback control of the other laser-processing parameters. According to studies of the process parameters used in MAM production, the layer thickness of the metallic powder, the laser source power, the spot diameter, the scanning speed, and the processing and scanning directions all affect the strength of the final product.

Among the state-of-the-art additive manufacturing technologies are powder bed fusion (PBF) processes, including selective laser sintering/selective laser melting (SLS/SLM) and direct metal laser sintering (DMLS), as well as direct metal deposition (DMD), laser cladding (LC), laser-engineered net shaping (LENS), shaped metal deposition (SMD), hybrid layered manufacturing (HLM), laser metal forming (LMF), directed energy deposition (DED), wire and arc additive manufacturing (WAAM) and laminated object manufacturing (LOM) (Bai et al. 2020; Bhatt et al. 2019; Dinovitzer et al. 2019; Niu et al. 2019).

Research gaps and motivation

As the literature survey shows, there have been many numerical and experimental studies of plate HEs, but very few have focused on the analysis and design of novel compact heat exchangers such as the one considered here. To help fill this gap, the aim of this study is to investigate numerically and experimentally the thermal and hydrodynamic behavior of a CHE recently designed by İpek et al. (2017) and manufactured with MAM methods, in terms of temperature distribution, pressure drop, heat exchanger effectiveness and heat transfer rate.

In the present study, models predicting the performance parameters of a CHE produced by the MAM method were created with three machine learning (ML) methods: artificial neural networks (ANN), multiple linear regression (MLR) and support vector machines (SVM). A review of the literature shows that studies combining MAM technology and ML algorithms generally aim to determine and predict physical process parameters and processing conditions (Kwon et al. 2020; Li et al. 2020; Yang et al. 2020).

Stathatos and Vosniakos (2019) used an ANN to predict, in real time, temperature and density changes during randomly selected manufacturing runs in laser-based MAM. In another study, Paul et al. (2019) used an ML approach to simulate process behavior and predict multiple physical parameters in MAM technology; the researchers designed and developed the basic components of a scientific framework for a model-based, real-time control system built on time-dependent heat transfer and temperature distribution data. Silbernagel et al. (2019) used an ML approach as an alternative way to determine and optimize process parameters for processing pure copper with MAM technology; their results matched those obtainable with traditional parameter optimization, showing that ML algorithms can be used effectively for MAM parameter optimization. Gobert et al. (2018) used ML to develop strategies for detecting defects in the laser powder bed during additive manufacturing (AM): multiple images of each build layer were collected with a high-resolution digital single-lens reflex (DSLR) camera and evaluated with an SVM approach. Wang et al. (2020) made a comprehensive review of ML applications in various AM fields. In design for additive manufacturing (DfAM), ML has been used to optimize new high-performance metamaterials and to generate topological designs, and in AM production, ML algorithms have yielded results that contribute to process parameter optimization, powder laying, quality evaluation and in-process defect monitoring.

Studies on HE design in the literature focus on details such as plates and wings; to our knowledge, no study has addressed such an HE obtained with MAM technology. Unlike those studies, the present work performed the 3D CAD design and CFD analysis of a CHE intended to have low volume and weight and high thermal efficiency and effectiveness, properties that cannot be obtained with a traditionally produced standard brazed plate heat exchanger (BPHE). To produce a CHE with small dimensions, complex surface geometry, reduced weight and a high overall heat transfer coefficient, a DMLS system, which is a MAM technology, was used. The performance of the CHE produced in this way was tested at different hot water flow rates \({\dot{m}}_{hw}\) in a purpose-built experimental setup. Owing to the purpose and function of the designed, analyzed and prototyped CHE, its operating range is \(6\le {\dot{m}}_{hw}\le 12\) L/min: outside this range the CHE does not provide the desired thermal efficiency, and the experimental setup and equipment do not support other flow rates. The tests were therefore carried out within this range. Determining parameters such as \({T}_{hwo}\) (outlet temperature of the \(hw\)) and \({T}_{cwo}\) (outlet temperature of the \(cw\)), which are used as indicators of HE performance, is very important, especially in the development of an innovative HE. However, each test takes time and significantly increases cost. It has therefore become important to develop models that can also predict the \({T}_{hwo}\) and \({T}_{cwo}\) values of experiments that could not be performed within the range \(6\le {\dot{m}}_{hw}\le 12\) L/min (e.g., at 6.1 or 6.2 L/min). In this study, the \({T}_{hwo}\) and \({T}_{cwo}\) parameters affecting the performance of the developed CHE were predicted with the ML techniques ANN, MLR and SVM using the available test data. The high-performance predictions achieved for \({T}_{cwo}\) and \({T}_{hwo}\) can be used to produce CHEs with low volume and weight and high thermal performance.

Material and methods

The work in this study consists of five basic stages, as shown in the diagram in Fig. 1. In the first two stages, the 3D CAD design and CFD analysis of the CHE were carried out, respectively. In the third stage, the CHE, whose dimensions and shape are shown in the diagram, was produced with MAM technology. In the fourth stage, the heat transfer performance of the CHE was measured in a special setup developed in-house. Because of the intended purpose and function of the CHE, the hot water flow rate \({\dot{m}}_{hw}\) should be in the range \(6\le {\dot{m}}_{hw}\le 12\) L/min. The tests carried out at different \({\dot{m}}_{hw}\) values yielded 11,325 data samples. As seen in Fig. 1, \({T}_{hwo}\) and \({T}_{cwo}\), the outputs of the dataset, are important indicators of the CHE's performance. In the fifth stage, ANN, MLR and SVM, which have achieved significant prediction success in the literature, were chosen as the machine learning models; hybrid approaches have also appeared recently in the literature (Garg et al. 2016a, b). The performance of the models was determined using regression performance evaluation criteria. The stages of this general scheme are examined in detail in the subsections below.

Design, analysis and manufacture of the CHE

Assuming steady-state conditions, no phase change and constant specific heats, the heat transfer rate (\(\dot{\mathrm{Q}}\)) between the \(hw\) and \(cw\) in the HE can be calculated from Eq. (1) (Erbay et al. 2013; Kays and London 1984).

where \({\text{T}}_{\mathrm{cwo}}\) and \({\text{T}}_{\mathrm{cwi}}\) are the outlet and inlet temperatures of the \(cw\) flow loop in the CHE, respectively. Similarly, \({\text{T}}_{\mathrm{hwo}}\) and \({\text{T}}_{\mathrm{hwi}}\) are the outlet and inlet temperatures of the \(hw\) flow loop in the CHE, respectively. \(U\) is the overall heat transfer coefficient [\(\mathrm{kJ/(m^{2}\,h\,^{\circ}C)}\)], \({A}_{s}\) is the effective heat transfer area of the CHE (\({m}^{2}\)), and \({\mathrm{C}}_{\mathrm{h}}\) and \({\mathrm{C}}_{\mathrm{c}}\) are the \(hw\) and \(cw\) heat capacity rates, respectively. \(F=F\left(P,R\right)\) is the correction factor, calculated as a function of \(P=\frac{{T}_{hwo}-{T}_{hwi}}{{T}_{cwi}-{T}_{hwi}}\) and \(R=\frac{{T}_{cwi}-{T}_{cwo}}{{T}_{hwo}-{T}_{hwi}}\).
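
For reference, a standard form of the balance expressed by Eq. (1), written with the symbols defined above, is sketched below. It assumes the usual energy balances on each stream and the rate equation with the correction factor \(F\); the log-mean temperature difference \(\Delta T_{lm}\), evaluated on a counterflow basis as is customary when \(F\) is used, is an added symbol that the text does not define.

```latex
\dot{Q} = C_h\left(T_{hwi}-T_{hwo}\right) = C_c\left(T_{cwo}-T_{cwi}\right) = U A_s F\,\Delta T_{lm},
\qquad
\Delta T_{lm} = \frac{\left(T_{hwi}-T_{cwo}\right)-\left(T_{hwo}-T_{cwi}\right)}
                     {\ln\left[\left(T_{hwi}-T_{cwo}\right)/\left(T_{hwo}-T_{cwi}\right)\right]}
```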

The maximum possible heat transfer rate \({\dot{\mathrm{Q}}}_{\mathrm{max}}\) can be calculated from Eq. (2) using the maximum temperature difference and \({C}_{min}\).

where \(\left({\text{T}}_{\mathrm{hwi}}-{\text{T}}_{\mathrm{cwi}}\right)\) is the maximum temperature difference and \({C}_{min}\) is the smaller of the heat capacity rates \({\mathrm{C}}_{\mathrm{h}}\) and \({\mathrm{C}}_{\mathrm{c}}\) of the \(hw\) and \(cw\), respectively. The heat transfer effectiveness \({\upvarepsilon }_{\mathrm{eff}}\) can then be calculated as given in Eq. (3).

where \(NTU=\frac{U{A}_{s}}{{C}_{min}}\) (number of transfer units) is a dimensionless measure of the heat transfer size of the exchanger, \({C}_{r}=\frac{{C}_{min}}{{C}_{max}}\) is the heat capacity ratio, and \({C}_{max}\) is the larger of \({\mathrm{C}}_{\mathrm{h}}\) and \({\mathrm{C}}_{\mathrm{c}}\). Once the heat transfer effectiveness \({\upvarepsilon }_{\mathrm{eff}}\) is known, the actual heat transfer rate \({\dot{\mathrm{Q}}}_{\mathrm{a}}\) is calculated as given in Eq. (4).
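
The chain from \({\dot{\mathrm{Q}}}_{\mathrm{max}}\) through \(NTU\), \({C}_{r}\) and \({\upvarepsilon }_{\mathrm{eff}}\) to \({\dot{\mathrm{Q}}}_{\mathrm{a}}\) can be sketched in Python as follows. The effectiveness relation used here is the textbook closed-form approximation for cross-flow with both fluids unmixed, an assumption chosen because the numerical analyses treat the CHE flows that way; the function names and the sample values of \(U\), \({C}_{h}\) and \({C}_{c}\) are hypothetical, and SI units (W, W/K) replace kJ/h.

```python
import math


def q_max(c_min: float, t_hwi: float, t_cwi: float) -> float:
    """Maximum possible heat transfer rate, C_min * (T_hwi - T_cwi), in W."""
    return c_min * (t_hwi - t_cwi)


def effectiveness_crossflow_unmixed(ntu: float, c_r: float) -> float:
    """Approximate epsilon-NTU relation for cross-flow, both fluids unmixed.

    Textbook approximation (an assumption, not taken from the paper):
    eps = 1 - exp[(NTU**0.22 / C_r) * (exp(-C_r * NTU**0.78) - 1)]
    """
    if c_r == 0.0:
        # Limit C_r -> 0: eps = 1 - exp(-NTU)
        return 1.0 - math.exp(-ntu)
    return 1.0 - math.exp((ntu ** 0.22 / c_r) * (math.exp(-c_r * ntu ** 0.78) - 1.0))


def actual_heat_rate(u: float, a_s: float, c_h: float, c_c: float,
                     t_hwi: float, t_cwi: float) -> float:
    """Actual heat transfer rate Q_a = eps * Q_max, in W."""
    c_min, c_max = min(c_h, c_c), max(c_h, c_c)
    ntu = u * a_s / c_min          # number of transfer units
    c_r = c_min / c_max            # heat capacity ratio
    eps = effectiveness_crossflow_unmixed(ntu, c_r)
    return eps * q_max(c_min, t_hwi, t_cwi)


# Hypothetical operating point: A_s and the inlet temperatures follow the text;
# U and the heat capacity rates (1 kg/L, c_p = 4.18 kJ/(kg K)) are placeholders.
U = 2000.0               # W/(m^2 K), hypothetical
A_S = 0.345              # m^2, CHE heat transfer surface
C_H = 8 / 60 * 4.18e3    # W/K, about 8 L/min of hot water, hypothetical
C_C = 6 / 60 * 4.18e3    # W/K, hypothetical cw flow rate
print(f"Q_a = {actual_heat_rate(U, A_S, C_H, C_C, 60.0, 15.0):.0f} W")
```

Swapping in another arrangement (e.g. counterflow) only requires replacing `effectiveness_crossflow_unmixed` with the corresponding relation.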

In the CFD analyses, dimensionless heat transfer and fluid flow parameters were used to investigate the fluid flow and heat transfer behavior of the CHE, because the relationship between the HE geometry and the operating parameters is non-linear. The dimensionless heat transfer and pressure drop characteristics are defined in terms of the Colburn j factor and the Stanton number \(\mathrm{St}\), as given in Eq. (5) (Erbay et al. 2013; Kays and London 1984; Ranganayakulu et al. 2017).

where \(Nu(Re,Pr,\mu )=0.2\,{Re}^{0.67}\,{\mathrm{Pr}\left(T,P\right)}^{0.4}{\left[\frac{\mu \left(T,P\right)}{{\mu }_{o}}\right]}^{0.1}\) is the Nusselt number and \(Re=\frac{\rho \left(T,P\right)\vartheta {d}_{h}}{\mu \left(T,P\right)}\) is the Reynolds number, with \(\vartheta\) the flow velocity and \({d}_{h}\) the hydraulic diameter.
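
The correlations above can be evaluated with a few lines of Python. This sketch assumes the standard definitions \(St = Nu/(Re\,Pr)\) and \(j = St\,Pr^{2/3}\) for Eq. (5), uses the \(cw\) property values listed in the following section (\(Pr = 5.515\), \(\mu = 8.12\times10^{-4}\) kg/(m·s), \({\mu }_{o} = 6.63\times10^{-4}\) kg/(m·s), \(\kappa = 0.6155\) W/(m·K), \({d}_{h} = 2.351\) mm), and takes a hypothetical density and velocity purely for illustration.

```python
def reynolds(rho: float, velocity: float, d_h: float, mu: float) -> float:
    """Re = rho * velocity * d_h / mu."""
    return rho * velocity * d_h / mu


def nusselt(re: float, pr: float, mu: float, mu_o: float) -> float:
    """Nu = 0.2 Re^0.67 Pr^0.4 (mu / mu_o)^0.1, the correlation quoted in the text."""
    return 0.2 * re ** 0.67 * pr ** 0.4 * (mu / mu_o) ** 0.1


def heat_transfer_coefficient(nu: float, k: float, d_h: float) -> float:
    """h = Nu * kappa / d_h, in W/(m^2 K)."""
    return nu * k / d_h


def stanton(nu: float, re: float, pr: float) -> float:
    """St = Nu / (Re Pr) (standard definition, assumed)."""
    return nu / (re * pr)


def colburn_j(st: float, pr: float) -> float:
    """j = St Pr^(2/3) (standard definition, assumed)."""
    return st * pr ** (2.0 / 3.0)


# cw property values from the text; RHO and VELOCITY are hypothetical.
PR, MU, MU_O, KAPPA, D_H = 5.515, 8.12e-4, 6.63e-4, 0.6155, 2.351e-3
RHO, VELOCITY = 999.0, 0.3   # kg/m^3 and m/s, illustrative only

re_cw = reynolds(RHO, VELOCITY, D_H, MU)
nu_cw = nusselt(re_cw, PR, MU, MU_O)
h_cw = heat_transfer_coefficient(nu_cw, KAPPA, D_H)
st_cw = stanton(nu_cw, re_cw, PR)
print(f"Re={re_cw:.0f}, Nu={nu_cw:.1f}, h={h_cw:.0f} W/(m2 K), "
      f"St={st_cw:.4f}, j={colburn_j(st_cw, PR):.4f}")
```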

The 3D CAD design of the CHE, its cross-section view, and the 3D temperature and pressure contours in the hot water layers (\(hwls\)) and cold water layers (\(cwls\)), plotted from the CFD analysis results, are shown in Fig. 2a, b, d and e, respectively; Fig. 2c shows the standard BPHE.

Detailed cross-section views of the newly designed CHE: a 3D CAD design of the CHE, b Cross-section view of the CHE geometry, c The standard brazed plate heat exchanger (BPHE), d Temperature distribution contour obtained from CFD analysis results of the CHE, e Pressure distribution contour obtained from CFD analysis results of the CHE

The CFD analyses of the CHE considered the discretized forms of the non-linear 3D governing equations: continuity, momentum, energy, turbulent kinetic energy, and the dissipation rate of turbulent kinetic energy. The analyses were performed in Ansys Fluent under steady-state conditions using a pressure-based solver and the SIMPLE algorithm (Fluent 2015). The walls of the interface plates between the \(hw\) and \(cw\) were modeled as coupled walls. The boundary conditions and process parameters used in the analyses are collected in Table 1. The numerical solutions were based on the standard \(\mathrm{k}\)–\(\upvarepsilon\) turbulence model, using the equations given in Eqs. (6) and (7) (Lam and Bremhorst 1981).

where \({\mathrm{G}}_{\mathrm{k}}\) is the generation of turbulent kinetic energy due to mean velocity gradients, \({\mathrm{G}}_{\mathrm{b}}\) is the generation of turbulent kinetic energy due to buoyancy, and \({\mathrm{Y}}_{\mathrm{M}}\) is the contribution of fluctuating dilatation in compressible turbulence to the overall dissipation rate. \({\mathrm{C}}_{1\upvarepsilon }\), \({\mathrm{C}}_{2\upvarepsilon }\) and \({\mathrm{C}}_{3\upvarepsilon }\) are the empirical constants of the \(\mathrm{k}\)–\(\upvarepsilon\) turbulence model used in the CFD analysis. \({\upsigma }_{\mathrm{k}}\) and \({\upsigma }_{\upvarepsilon }\) are the turbulent Prandtl numbers for \(\mathrm{k}\) and \(\upvarepsilon \), respectively, and \({\mathrm{S}}_{\mathrm{k}}\) and \({\mathrm{S}}_{\upvarepsilon }\) are user-defined source terms for \(\mathrm{k}\) and \(\upvarepsilon \).
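
For reference, the standard k–ε transport equations in the form given in the Ansys Fluent Theory Guide, which use the symbols defined above, are shown below with that guide's default model constants. In the steady-state analyses described here the time-derivative terms vanish; whether any constants were changed from their defaults is not stated in the text.

```latex
\frac{\partial(\rho k)}{\partial t}+\frac{\partial(\rho k u_i)}{\partial x_i}
 =\frac{\partial}{\partial x_j}\left[\left(\mu+\frac{\mu_t}{\sigma_k}\right)\frac{\partial k}{\partial x_j}\right]
 +G_k+G_b-\rho\varepsilon-Y_M+S_k

\frac{\partial(\rho\varepsilon)}{\partial t}+\frac{\partial(\rho\varepsilon u_i)}{\partial x_i}
 =\frac{\partial}{\partial x_j}\left[\left(\mu+\frac{\mu_t}{\sigma_\varepsilon}\right)\frac{\partial\varepsilon}{\partial x_j}\right]
 +C_{1\varepsilon}\frac{\varepsilon}{k}\left(G_k+C_{3\varepsilon}G_b\right)-C_{2\varepsilon}\rho\frac{\varepsilon^{2}}{k}+S_\varepsilon

\mu_t=\rho C_\mu\frac{k^{2}}{\varepsilon},\qquad
C_{1\varepsilon}=1.44,\; C_{2\varepsilon}=1.92,\; C_\mu=0.09,\; \sigma_k=1.0,\; \sigma_\varepsilon=1.3
```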

In the present study, the design of the CHE was based on a BPHE manufactured by conventional methods. As shown in Table 2, the best BPHE results in terms of pressure drop and outlet temperatures were obtained with a chevron angle θ of 75° and a plate thickness t of 1 mm, for a minimum flow gap \(\delta\) in the range of 1–1.5 mm.

The input and boundary conditions used for the numerical analysis of the CHE, together with its results, are given in Table 3. According to the analysis, the numerical pressure drops of the \(cw\) and \(hw\) flow loops of the CHE (\({P}_{cw}\) and \({P}_{hw}\), respectively) increased with increasing \(cw\) outlet temperature \({T}_{cwo}\). The channel and wing geometries of the CHE were therefore revised to reduce these pressure drops.

According to the analysis results, the outlet temperatures of the \(cw\) and \(hw\) circulating in the CHE were \({T}_{cwo}={35.38}^\circ \mathrm{C}\) and \({T}_{hwo}={48.57}^\circ \mathrm{C}\), respectively. A standard BPHE with a \(75^\circ \) chevron angle and high heat transfer performance was used as a reference for comparison with the newly designed CHE; its \({T}_{hwo}\) and \({T}_{cwo}\) were calculated as 52.07 and 28.80 °C, respectively. After the revision of the CHE's channel and wing geometries, the increase in \(cw\) temperature, \({\Delta T}_{cw} ={T}_{cwo}-{T}_{cwi}\), decreased by only 10.653% and the decrease in \(hw\) temperature, \({\Delta T}_{hw} ={T}_{hwi}-{T}_{hwo}\), decreased by only \(11.533\%\), whereas the numerical pressure drops in the \(cw\) and \(hw\) flow loops of the CHE were reduced by factors of 2.934 and 2.878, respectively.

A comparison of the CHE and BPHE results showed that the heat transfer rate \((\dot{\mathrm{Q}})\) of the newly designed CHE was \(47.7\%\) higher than that of the standard BPHE. The CHE was produced using STL (stereolithography) data derived from the final 3D CAD design, based on the design and analysis results given in Fig. 2; a photograph of the CHE produced with MAM technology is given in Fig. 3a. As illustrated schematically in Fig. 3b, the MAM process sinters metallic powders laid on the build platform of the system, layer by layer, using a fiber laser beam directed by galvanometer scanning optics.

a Photograph of the CHE produced with MAM technology, b Schematic view of the MAM technology. (1) 200 W fiber laser source, (2) Powder supply container, (3) Wiper, (4) Argon gas inlet ports (argon atmosphere), (5) Beam focusing optics, (6) Scanning mirrors, (7) Lens, (8) Laser beam spot, (9) Argon gas outlet port, (10) Manufacture position of the 3D CAD model (the solidified part), (11) Build platform, (12) Unsintered metallic powder bed

In this process, the fiber laser beam follows a path defined by the STL data. The production parameters must therefore be selected precisely, including the layer thickness of the metallic powder laid on the build platform, the laser scanning speed and direction, the source power, and the spot diameter. Based on the design and analysis results, the following manufacturing parameters were used: a fiber laser power of 200 W, a laser beam spot diameter of 100 μm, a laser scanning speed of 500 \(\mathrm{mm}/\mathrm{s}\), and a roughness of 40 μm. As illustrated in Fig. 3a, the height, width, length, weight, volume and heating surface of the manufactured CHE were 20 mm, 74 mm, 196 mm, 0.765 kg, 99.98 \({\mathrm{cm}}^{3}\) and 0.345 \({\mathrm{m}}^{2}\), respectively. The inlet hydraulic diameters for \(hw\) and \(cw\) (\({\mathrm{d}}_{\mathrm{hhwi}}\) and \({\mathrm{d}}_{\mathrm{hcwi}}\)), the layer thickness (\(t\)) and the minimum flow gap (\(\delta \)) were taken as 10 mm, 10 mm, 0.5 mm and 0.5 mm, respectively, as inputs to the CHE's design and analysis. The height, width, length, weight, volume and heating surface of the BPHE used for comparison were measured as 40 mm, 74 mm, 192 mm, 0.845 kg, 179.9 \({\mathrm{cm}}^{3}\) and 0.365 \({\mathrm{m}}^{2}\), respectively.
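
The 47.7% heat transfer advantage and the size comparison can be cross-checked from the values quoted in this section. The sketch below assumes that the CFD cases for the CHE and the BPHE used the same flow rates and specific heats and the inlet temperatures reported for the experiments (15 °C for \(cw\), 60 °C for \(hw\)); under that assumption the ratio of heat transfer rates reduces to the ratio of the temperature changes on either side.

```python
# Values quoted in the text (CFD outlet temperatures, measured masses and volumes).
T_CWI, T_HWI = 15.0, 60.0                 # °C, experimental inlet values, assumed for CFD too
T_CWO = {"CHE": 35.38, "BPHE": 28.80}     # cw outlet temperatures, °C
T_HWO = {"CHE": 48.57, "BPHE": 52.07}     # hw outlet temperatures, °C
VOLUME = {"CHE": 99.98, "BPHE": 179.9}    # volumes, same unit for both
MASS = {"CHE": 0.765, "BPHE": 0.845}      # kg


def percent_change(new: float, ref: float) -> float:
    """Relative change of `new` with respect to `ref`, in percent."""
    return (new / ref - 1.0) * 100.0


# With equal flow rates and c_p, Q = C * delta_T on each side, so the Q ratio
# equals the ratio of temperature changes (assumption stated in the lead-in).
dq_cw = percent_change(T_CWO["CHE"] - T_CWI, T_CWO["BPHE"] - T_CWI)
dq_hw = percent_change(T_HWI - T_HWO["CHE"], T_HWI - T_HWO["BPHE"])
dv = percent_change(VOLUME["CHE"], VOLUME["BPHE"])
dm = percent_change(MASS["CHE"], MASS["BPHE"])
print(f"Q (cw side): {dq_cw:+.1f}%  Q (hw side): {dq_hw:+.1f}%")
print(f"volume: {dv:+.1f}%  mass: {dm:+.1f}%")
```

Under these assumptions the \(cw\)-side ratio gives +47.7%, matching the quoted value, the \(hw\)-side ratio gives about +44.1%, and the CHE's volume and mass come out about 44.4% and 9.5% lower than those of the BPHE.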

Experimental setup and procedure

The heat transfer performance of the newly designed CHE was also investigated experimentally in the setup shown in Fig. 4a, b. The setup comprised two 0.25 kW centrifugal pumps, four PT-100 temperature sensors (± 0.1% accuracy), two 500 L reservoir tanks, an expansion tank, a 25 kW electric heater supplying thermal energy to the \(hw\) tank, two turbine-type mass flow meters (± 1% accuracy), eight ball valves, three proportional valves (± 1% accuracy), four analog differential manometers (± 1% accuracy) measuring the pressure drop between the inlet and outlet of the CHE, a PLC automation system and an 11 kW water-cooled chiller. As seen in Fig. 4b, the CHE was positioned vertically in the setup during the experiments. The setup consisted of two closed flow loops, one for \(hw\) and one for \(cw\); the \(cw\) flowed upwards through the CHE, while the \(hw\) flowed downwards. In the numerical analyses, the flows through the CHE were treated as cross-flow with both fluids unmixed. During the experiments, the inlet temperatures of the \(cw\) and \(hw\) were kept almost constant at 15 and 60 °C, respectively, by means of the temperature sensors, proportional valves and the chiller system. The \(cw\) and \(hw\) outlet temperatures of the CHE were then measured, with both fluids assumed unmixed, specific heats constant and no phase change.

In the present study, the following property values were used in the numerical calculations with the \(Nu(Re,Pr,\mu )\), \(h=\frac{Nu\,\kappa \left(T,P\right)}{{d}_{h}}\), \(Pr(T,P)\) and \(Re(\rho (T,P),\vartheta ,\mu (T,P))\) equations: \(Pr=5.515\) for \(cw\) and \(Pr=3.5955\) for \(hw\); \(\mu =8.12\times {10}^{-4}\) kg/(m·s) for \(cw\) and \(\mu =5.525\times {10}^{-4}\) kg/(m·s) for \(hw\); an average dynamic viscosity \({\mu }_{o}=6.63\times {10}^{-4}\) kg/(m·s) for both \(cw\) and \(hw\); \(\kappa =0.6155\) W/(m·K) at the average of \({T}_{cwi}\) and \({T}_{cwo}\); and \(\kappa =0.6425\) W/(m·K) at the average of \({T}_{hwi}\) and \({T}_{hwo}\). In addition, the 3D CAD model of the CHE obtained from design and analysis was used to calculate thermal and hydrodynamic parameters such as \(Re\), \(\vartheta\), \(Nu\), \(h\) and \(St\) along the \({l}_{c}\) and \({l}_{h}\) shown in Fig. 2a, c; the average results are given in Table 4. Temperature, velocity and pressure data were recorded at 1000 points at a depth of 0.5 mm in each \(cwl\) and \(hwl\) along \({l}_{c}\) and \({l}_{h}\). As can be seen in Fig. 2a, the total heat transfer surface of the CHE was calculated as 0.345 \({\mathrm{m}}^{2}\). According to calculations on the CHE's 3D CAD model, the wetted perimeter of the cross-section of the \(cwls\) and \(hwls\), through which the \(cw\) and \(hw\) flow, is 0.1674 m, and the hydraulic diameter of this cross-section is 2.351 mm. The surface area of a single plate face is 0.01725 \({\mathrm{m}}^{2}\), and the cross-sectional area \({A}_{l}\) of each \(cwl\) and \(hwl\) is 0.98402 \({\mathrm{cm}}^{2}\).
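
The reported hydraulic diameter agrees with the standard definition \({d}_{h} = 4{A}_{l}/{P}_{w}\) applied to the layer cross-section. A quick check, assuming that \({A}_{l}\) and the 0.1674 m wetted perimeter \({P}_{w}\) refer to the same cross-section:

```python
def hydraulic_diameter(area_m2: float, wetted_perimeter_m: float) -> float:
    """Standard hydraulic diameter d_h = 4 A / P_w."""
    return 4.0 * area_m2 / wetted_perimeter_m


A_L = 0.98402e-4   # m^2, cross-sectional area of one cwl/hwl (0.98402 cm^2)
P_W = 0.1674       # m, wetted perimeter of the layer cross-section
d_h = hydraulic_diameter(A_L, P_W)
print(f"d_h = {d_h * 1e3:.3f} mm")  # d_h = 2.351 mm
```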

As can be seen in Table 4, the flow on the \(hw\) side of the CHE is turbulent in all \(hwls\), while the flow on the \(cw\) side is laminar in all \(cwls\). The values of \(Re\), \(Nu\) and \(h\) are highest in the 1st \(hwl\) of the CHE and lowest in the 3rd \(cwl\).

Machine learning models

The descriptive statistics of the experimental data

In this study, \({T}_{hwo}\) and \({T}_{cwo}\), the parameters affecting the performance of the CHE, were predicted using five input variables: the \(hw\) source and inlet temperatures (\({T}_{hw}\), \({T}_{hwi}\)), the \(cw\) source and inlet temperatures (\({T}_{cw}\), \({T}_{cwi}\)) and the \(cw\) flow rate \({\dot{m}}_{cw}\). The data ranges and total number of samples for the seven experiments, with \({\dot{m}}_{hw}\) values from 6 to 12 L/min, are shown in Table 5.

After the dataset was divided into a training set and a test set, the descriptive statistics of the independent variables (minimum, maximum, mean, standard deviation, skewness and kurtosis) were computed; they are given in Table 6. The dependent variable of the dataset must be normally distributed for MLR to be applied (Özdamar 2004). The skewness and kurtosis coefficients can both be used to test whether a dataset is normally distributed: the skewness coefficient is expected to be less than 3 and the kurtosis coefficient to lie between − 2 and 2 (Chemingui 2013). The results show that these criteria were met.
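
The skewness and kurtosis rule above can be applied with SciPy as sketched below. Two points are assumptions rather than details from the text: `scipy.stats.kurtosis` returns excess (Fisher) kurtosis by default, which is taken to be the convention behind the −2 to 2 range, and the skewness limit is applied to its absolute value. The sample is synthetic placeholder data, not the experimental measurements.

```python
import numpy as np
from scipy.stats import kurtosis, skew


def normality_rule(values, skew_limit=3.0, kurt_low=-2.0, kurt_high=2.0):
    """Rule-of-thumb normality check from skewness and (excess) kurtosis.

    Returns (passes, skewness, kurtosis).
    """
    s = float(skew(values))
    k = float(kurtosis(values))   # Fisher definition: 0 for a normal distribution
    passes = abs(s) < skew_limit and kurt_low < k < kurt_high
    return passes, s, k


# Hypothetical placeholder data, not the experimental measurements.
rng = np.random.default_rng(0)
sample = rng.normal(loc=40.0, scale=5.0, size=1000)
ok, s, k = normality_rule(sample)
print(f"skewness={s:.3f}, kurtosis={k:.3f}, passes={ok}")
```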

Multiple linear regression

MLR, widely used in engineering and other sciences, is a useful statistical method for modeling the relationship between a dependent variable and several independent variables, and it is one of the regression models applied in machine learning. The MLR model is based on the least squares method, which minimizes the sum of the squared differences between observed and estimated values (Tiryaki and Aydin 2014).

The relationship between \(k\) independent variables and a dependent variable \(Y\) is given by Eq. (8), \(Y={\beta }_{0}+{\beta }_{1}{x}_{1}+\dots +{\beta }_{k}{x}_{k}+\varepsilon \), where \({x}_{j}\) is the level of the \(j\)th independent variable for \(j=1,\dots ,k\) and \(\varepsilon \) is an error term assumed to be normally distributed with zero mean and variance \({\sigma }^{2}\). The parameters \({\beta }_{0}, {\beta }_{1},\dots ,{\beta }_{k}\) and \({\sigma }^{2}\) are unknown and are estimated from the outputs \({Y}_{1},\dots ,{Y}_{n}\) observed at the input levels \({x}_{i1},{x}_{i2},\dots ,{x}_{ik}\), \(i=1,\dots ,n\) (Ross 2014).
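
The least-squares estimate of the coefficients in the MLR model \(Y={\beta }_{0}+\sum_{j}{\beta }_{j}{x}_{j}+\varepsilon \) can be sketched with NumPy. The data below are synthetic, generated from known coefficients so that the fit can be checked; the function name `fit_mlr` is illustrative.

```python
import numpy as np


def fit_mlr(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Least-squares estimates [b0, b1, ..., bk] for Y = b0 + sum_j b_j x_j + eps."""
    design = np.column_stack([np.ones(X.shape[0]), X])  # intercept column first
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)   # minimizes ||y - design @ beta||^2
    return beta


# Synthetic check with known coefficients (hypothetical data).
rng = np.random.default_rng(1)
X = rng.uniform(-1.0, 1.0, size=(200, 3))
true_beta = np.array([2.0, 0.5, -1.0, 3.0])
y = true_beta[0] + X @ true_beta[1:] + rng.normal(0.0, 0.01, size=200)
print(np.round(fit_mlr(X, y), 3))
```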

The MLR model in this study was implemented with the Scikit-learn machine learning library together with basic Python libraries such as NumPy, Pandas and SciPy. After the raw experimental data were collected, a data pre-processing step was performed: the variables to be used in the machine learning application were first selected through feature extraction, and features that were statistically insignificant for the model (e.g., the registration number) were removed from the dataset. Missing data can arise for many reasons, including incorrect measurements, limited data collection and human error while the dataset is being obtained, and as datasets grow, missing data can become a major problem affecting dataset quality (Qi et al. 2018). In this study, the entire dataset was analyzed and all missing data problems were resolved.
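
A minimal pandas sketch of this pre-processing step is given below. The column names (`record_no`, `T_hw`, `m_cw`, and so on) and the toy rows are hypothetical, and the text does not say how missing values were resolved, so dropping incomplete rows is only one possible strategy.

```python
import numpy as np
import pandas as pd

# Hypothetical column names; the raw column labels are not given in the text.
FEATURES = ["T_hw", "T_hwi", "T_cw", "T_cwi", "m_cw"]
TARGETS = ["T_hwo", "T_cwo"]


def preprocess(raw: pd.DataFrame, id_columns=("record_no",)) -> pd.DataFrame:
    """Keep model variables, drop identifier columns and rows with missing values."""
    df = raw.drop(columns=list(id_columns), errors="ignore")  # identifiers carry no physics
    df = df[FEATURES + TARGETS]                               # keep the selected variables
    # Listwise deletion is one simple strategy; imputation is an alternative.
    return df.dropna().reset_index(drop=True)


# Hypothetical toy rows, not measurements.
raw = pd.DataFrame({
    "record_no": [1, 2, 3],
    "T_hw": [61.0, 61.2, np.nan],
    "T_hwi": [60.0, 60.1, 59.9],
    "T_cw": [14.0, 14.1, 14.0],
    "T_cwi": [15.0, 15.0, 15.1],
    "m_cw": [6.0, 6.0, 6.0],
    "T_hwo": [48.5, 48.6, 48.4],
    "T_cwo": [35.7, 35.6, 35.8],
})
clean = preprocess(raw)
print(clean.shape)
```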

Certain statistical criteria can be used to select the appropriate model for machine learning applications. Besides explaining the data or assessing a model's predictions, such criteria should be able to compare and rank models. Statistical measures such as R-squared and adjusted R-squared values, likelihood ratio tests, Bayes factors, the Akaike information criterion (AIC) and the Bayesian information criterion (BIC) can compare models. However, they do not measure how well a given model predicts, so choosing a model on the basis of these criteria alone is not sufficient (Nicolet et al. 2017). When the dataset is divided into a training set and a test set, the choice of which samples go into each set is an important factor affecting model selection. Machine learning algorithms also have settings, called hyperparameters, that can increase or decrease their performance. Running an algorithm on a training dataset with a different hyperparameter value in fact builds a different model. Since the aim is to select the model that performs best on a dataset, cross-validation (Stone 1974) can be used to compare performance. In this study, using a cross-validation method, the dataset was divided into a 70% training set and a 30% test set. Some machine learning algorithms require all features of the dataset to lie in the same range (Bollegala 2017). Therefore, as part of data preprocessing, all data in the dataset were scaled to the same value range (−1 to 1) using feature scaling.
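The 70/30 split and the scaling to −1 to 1 can be sketched as follows. This sketch makes two assumptions not stated in the study: a seeded random shuffle, and a scaler whose minimum and maximum are learned on the training set only. The second choice prevents test-set information from leaking into training. As a consequence, scaled test values can fall slightly outside −1 to 1.

```python
import random


def train_test_split(rows, test_fraction=0.3, seed=42):
    """Shuffle once, then hold out test_fraction of the rows as the test set."""
    idx = list(range(len(rows)))
    random.Random(seed).shuffle(idx)
    n_test = round(len(rows) * test_fraction)
    return [rows[i] for i in idx[n_test:]], [rows[i] for i in idx[:n_test]]


def fit_scaler(train, lo=-1.0, hi=1.0):
    """Learn per-column min/max on the training set; return a transform function."""
    cols = list(zip(*train))
    mins = [min(c) for c in cols]
    maxs = [max(c) for c in cols]

    def transform(rows):
        # constant columns are mapped to the middle of the target range
        return [
            [lo + (v - mn) * (hi - lo) / (mx - mn) if mx > mn else (lo + hi) / 2
             for v, mn, mx in zip(row, mins, maxs)]
            for row in rows
        ]

    return transform
```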

In the next stage, the MLR model was trained using the Scikit-learn library. In real life, many factors affect a dependent variable, and including too many of them reduces the usefulness of the model and increases its complexity. The model should therefore retain only the attributes that have a greater effect on the dependent variable. In other words, stepwise regression can be carried out by eliminating less influential features from the model. Several stepwise regression methods exist for MLR (John et al. 1994). In this study, the backward elimination method was used to train the MLR model. In this method, all variables are included in the model at the start. The feature with the largest p-value is then removed if that p-value exceeds the specified significance level, and the model is refitted. This process continues until no remaining feature has a p-value above the significance level (Narin et al. 2014). Finally, the accuracy of the MLR model was assessed through a performance evaluation.
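The backward elimination loop above can be sketched in pure Python. This is an illustration, not the study's code. It fits ordinary least squares through the normal equations and, as a simplifying assumption, computes p-values from a normal approximation to the t distribution, which is reasonable for large samples. Libraries such as statsmodels report exact t-based p-values.

```python
from statistics import NormalDist


def invert(matrix):
    """Gauss-Jordan inverse (with partial pivoting) of a small square matrix."""
    n = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def ols(X, y):
    """Least-squares coefficients and approximate p-values (intercept first)."""
    A = [[1.0] + list(row) for row in X]  # prepend the intercept column
    k = len(A[0])
    XtX = [[sum(a[i] * a[j] for a in A) for j in range(k)] for i in range(k)]
    Xty = [sum(a[i] * yi for a, yi in zip(A, y)) for i in range(k)]
    inv = invert(XtX)
    beta = [sum(inv[i][j] * Xty[j] for j in range(k)) for i in range(k)]
    resid = [yi - sum(b * a for b, a in zip(beta, row)) for row, yi in zip(A, y)]
    sigma2 = sum(r * r for r in resid) / (len(y) - k)  # residual variance estimate
    se = [(sigma2 * inv[i][i]) ** 0.5 for i in range(k)]
    # normal approximation to the t distribution (assumption; fine for large n)
    pvals = [2 * (1 - NormalDist().cdf(abs(b / s))) for b, s in zip(beta, se)]
    return beta, pvals


def backward_elimination(X, y, names, alpha=0.05):
    """Repeatedly drop the feature with the largest p-value while it exceeds alpha."""
    cols = list(range(len(names)))
    while cols:
        _, pvals = ols([[row[c] for c in cols] for row in X], y)
        worst = max(range(len(cols)), key=lambda i: pvals[i + 1])  # skip intercept
        if pvals[worst + 1] <= alpha:
            break
        del cols[worst]
    return [names[c] for c in cols]
```

Each iteration refits the model after a removal, because every remaining p-value changes once a feature leaves the model.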

Artificial neural networks

Compared with conventional techniques, an ANN is a non-linear model that relies on fewer assumptions and constraints, tolerates noise and faults, and is able to generalize (Fragkaki et al. 2012). A typical ANN architecture has a three-tier structure consisting of an input layer, a hidden layer and an output layer. The weights connecting the neurons in the layers are the main means of long-term information storage in an ANN. As a result, the number of neurons, the number of layers and the type of connections between the layers all have an important impact on the behavior of the ANN (Patterson and Gibson 2017).

A simpler type of ANN architecture, the single-layer neural network, has only input and output layers. An ANN becomes a multi-layer neural network when hidden layers are added between the input and output layers. If there is only one hidden layer, the architecture is called a shallow neural network. If there are two or more hidden layers, it is called a deep neural network, a type that has recently become particularly prominent (Kim 2017).

In multi-layer neural networks, the network can be trained with various learning algorithms, such as the back-propagation algorithm. Large differences between the numerical ranges of the input values can degrade an ANN's performance during training. Therefore, before an ANN model is built, the data must be normalized to a common value range (Chollet 2017). One normalization technique in the literature is min–max normalization, given in Eq. (9) (Priddy and Keller 2005):

\[ {v}^{\prime}=\frac{v-{min}_{v}}{{max}_{v}-{min}_{v}}\left({max}_{t}-{min}_{t}\right)+{min}_{t} \quad (9) \]

Here \(v\) is the original value of a feature and \({v}^{\prime}\) its normalized value. \({min}_{v}\) and \({max}_{v}\) are the minimum and maximum values of that feature in the data, and \({min}_{t}\) and \({max}_{t}\) are the lower and upper bounds of the desired range into which the data are linearly transformed.
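Eq. (9) and its inverse fit in two short functions. The inverse is needed for the de-normalization step that converts network outputs back to °C. This is a minimal sketch. The default target range of 0 to 1 is an assumption; passing `min_t=-1, max_t=1` gives the −1 to 1 scaling used for the MLR data.

```python
def min_max(v, min_v, max_v, min_t=0.0, max_t=1.0):
    """Eq. (9): map v linearly from [min_v, max_v] onto [min_t, max_t]."""
    return (v - min_v) / (max_v - min_v) * (max_t - min_t) + min_t


def inverse_min_max(v_t, min_v, max_v, min_t=0.0, max_t=1.0):
    """De-normalization: map a scaled value back to the original feature range."""
    return (v_t - min_t) / (max_t - min_t) * (max_v - min_v) + min_v
```

For example, with a hypothetical temperature range of 20 to 60 °C, `min_max(40, 20, 60)` gives 0.5, and `inverse_min_max(0.5, 20, 60)` recovers 40.0.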

Many factors affect the performance of an ANN model, including the number of hidden layers, the number of neurons in each layer, the activation function, the normalization technique, the learning algorithm, the learning rate and the momentum factor. These factors should be adjusted experimentally to obtain the best ANN performance. In this study, seven ANN models were tested to predict \({T}_{hwo}\) and \({T}_{cwo}\), one for each \({\dot{m}}_{hw}\) value of 6, 7, 8, 9, 10, 11 and 12. Each model had two hidden layers. The ANN models were trained and tested with Keras, a deep learning library for the Python programming language.

Fig. 5 uses the (6–6) neuron configuration of the ANN-6 architecture as an example to show in more detail the structure of the ANN architectures used in this study. The networks were trained with the back-propagation algorithm, and Fig. 5 shows its forward-propagation step.

The ANN models in this study used one input layer (\(i\)), two hidden layers (\(j, k\)) and an output layer (\(m\)). The input layer contained five input variables. First, all input values were brought into the same numerical range using min–max normalization. Then, the outputs of the neurons in the first hidden layer were calculated: each neuron multiplies its input values by the corresponding weights, adds its bias and passes the result through a sigmoid activation function. These outputs form the inputs of the second hidden layer, whose outputs were calculated by repeating the same process. The outputs of the output layer were then calculated from the outputs of the second hidden layer and the weights of the output layer. After this stage, the error was calculated and back-propagated. Since the number of epochs was set to 1000, the back-propagation algorithm terminated after 1000 iterations, yielding the final weight and bias values for each layer. The weight values between the layers of the ANN-6 architecture are shown in Fig. 5. In the final stage, the outputs were converted back into the original value range by de-normalization.
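The forward and backward passes described above can be sketched as a minimal 5-6-6-1 network in pure Python. The study itself used Keras. This sketch makes several assumptions the study does not state: uniform initial weights in −0.5 to 0.5, zero initial biases, a linear output neuron, per-sample updates with learning rate 0.1 and no momentum.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


class TinyMLP:
    """Fully connected 5-6-6-1 network: two sigmoid hidden layers, linear output."""

    def __init__(self, sizes=(5, 6, 6, 1), seed=0):
        rng = random.Random(seed)
        # weights[l][j][i]: weight from neuron i of layer l to neuron j of layer l + 1
        self.weights = [
            [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]
        self.biases = [[0.0] * n_out for n_out in sizes[1:]]

    def forward(self, x):
        """Forward propagation; returns the activations of every layer, input first."""
        acts = [list(x)]
        last = len(self.weights) - 1
        for l, (W, b) in enumerate(zip(self.weights, self.biases)):
            # weighted sum of the previous layer's outputs plus bias, per neuron
            z = [sum(w * a for w, a in zip(row, acts[-1])) + bj for row, bj in zip(W, b)]
            acts.append(z if l == last else [sigmoid(v) for v in z])
        return acts

    def predict(self, x):
        return self.forward(x)[-1][0]

    def train_step(self, x, target, lr=0.1):
        """One back-propagation update for the squared error 0.5 * (output - target)^2."""
        acts = self.forward(x)
        delta = [acts[-1][0] - target]  # error signal at the linear output neuron
        for l in range(len(self.weights) - 1, -1, -1):
            W, a_in = self.weights[l], acts[l]
            if l > 0:
                # propagate the error to layer l (sigmoid derivative a * (1 - a)),
                # using the weights before they are updated
                prev = [
                    sum(W[j][i] * delta[j] for j in range(len(W))) * a_in[i] * (1 - a_in[i])
                    for i in range(len(a_in))
                ]
            for j, d in enumerate(delta):
                for i in range(len(a_in)):
                    W[j][i] -= lr * d * a_in[i]
                self.biases[l][j] -= lr * d
            if l > 0:
                delta = prev
```

The study's stopping rule is a fixed number of epochs. Calling `train_step` once per sample inside `for epoch in range(1000):` mirrors it.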

Support vector regression

Support vector machines, developed by Cortes and Vapnik (1995), are a machine learning technique widely used for linear and nonlinear classification problems. They are also used in regression applications in which the dependent variable consists of continuous data. A linearly separable dataset can be divided into classes by hyperplanes (lines in two-dimensional space, planes in three-dimensional space). For the two-dimensional vectors \({\varvec{x}}=({x}_{1},{x}_{2})\) and \({\varvec{w}}=({\theta }_{1},-1)\), the inner product is written \(\langle {\varvec{w}},{\varvec{x}}\rangle \), and \(\langle {\varvec{w}},{\varvec{x}}\rangle +{\theta }_{0}=0\) represents a hyperplane (here the line \({x}_{2}={\theta }_{1}{x}_{1}+{\theta }_{0}\)). In Fig. 6, the data of Class 1 and Class 2 are separated by the hyperplanes \({{\varvec{H}}}_{1}\) and \({{\varvec{H}}}_{2}\). The samples lying on \({{\varvec{H}}}_{1}\) and \({{\varvec{H}}}_{2}\) are called support vectors, and they act as the boundary. The support vectors should be chosen so that the distance between the hyperplanes \({{\varvec{H}}}_{1}\) and \({{\varvec{H}}}_{2}\) passing through them is as large as possible while the samples of Class 1 and Class 2 are still separated correctly. This is the main goal of support vector machines. In Fig. 6, the separating hyperplane \({\varvec{H}}\) is represented by \(\langle {\varvec{w}},{\varvec{x}}\rangle +{\theta }_{0}=0\). Accordingly, \(\langle {\varvec{w}},{\varvec{x}}\rangle +{\theta }_{0}-d=0\) can be used for the \({{\varvec{H}}}_{1}\) hyperplane and \(\langle {\varvec{w}},{\varvec{x}}\rangle +{\theta }_{0}+d=0\) for the \({{\varvec{H}}}_{2}\) hyperplane.
The offset d in these expressions can be set to 1 for ease of calculation. This gives \(\langle {\varvec{w}},{\varvec{x}}\rangle +{\theta }_{0}=1\) for \({{\varvec{H}}}_{1}\) and \(\langle {\varvec{w}},{\varvec{x}}\rangle +{\theta }_{0}=-1\) for \({{\varvec{H}}}_{2}\), and the distance between \({{\varvec{H}}}_{1}\) and \({{\varvec{H}}}_{2}\) is then \(2/\Vert {\varvec{w}}\Vert \). To maximize this distance while separating the Class 1 and Class 2 data correctly, the optimization problem in Eq. (10) must be solved, where \({y}_{i}\in \{+1,-1\}\) are the class labels:

\[ \min_{{\varvec{w}},{\theta }_{0}}\ \frac{1}{2}\langle {\varvec{w}},{\varvec{w}}\rangle \quad \text{subject to}\quad {y}_{i}\left(\langle {\varvec{w}},{{\varvec{x}}}_{i}\rangle +{\theta }_{0}\right)\ge 1,\quad i=1,\dots ,n \quad (10) \]

Since the minimization problem in Eq. (10) has a quadratic objective function (\(\frac{1}{2}\langle {\varvec{w}},{\varvec{w}}\rangle \)) and linear constraints, it can be solved with quadratic programming techniques (Lagrange multipliers).
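Solving Eq. (10) requires a quadratic programming solver. The sketch below does something simpler: it checks a candidate hyperplane \(({\varvec{w}},{\theta }_{0})\) against the constraints of Eq. (10). It reports whether the candidate is feasible, its margin \(2/\Vert {\varvec{w}}\Vert \), and which samples lie exactly on \({{\varvec{H}}}_{1}\) or \({{\varvec{H}}}_{2}\) (the support vectors). The four-point dataset in the usage note is hypothetical.

```python
import math


def decision(w, theta0, x):
    """<w, x> + theta0 for one sample."""
    return sum(wi * xi for wi, xi in zip(w, x)) + theta0


def check_hyperplane(w, theta0, X, y, tol=1e-9):
    """Return (feasible, margin, support_vector_indices) for a candidate (w, theta0).

    feasible: every sample satisfies y_i * (<w, x_i> + theta0) >= 1, the constraint of Eq. (10).
    margin:   distance 2 / ||w|| between the hyperplanes H1 and H2.
    support:  indices of samples lying exactly on H1 or H2.
    """
    scores = [yi * decision(w, theta0, xi) for xi, yi in zip(X, y)]
    feasible = all(s >= 1 - tol for s in scores)
    margin = 2 / math.sqrt(sum(wi * wi for wi in w))
    support = [i for i, s in enumerate(scores) if abs(s - 1) <= tol]
    return feasible, margin, support
```

Take the points (1, 1) and (2, 2) as Class 1 and (−1, −1) and (−2, −2) as Class 2. Both \({\varvec{w}}=(0.5, 0.5)\) and \({\varvec{w}}=(1, 1)\) with \({\theta }_{0}=0\) satisfy the constraints. The smaller \({\varvec{w}}\) gives the larger margin \(2\sqrt{2}\), with (1, 1) and (−1, −1) as support vectors. This is why Eq. (10) minimizes \(\frac{1}{2}\langle {\varvec{w}},{\varvec{w}}\rangle \).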

Linear separation of classes (Uğuz 2020)

For datasets that cannot be separated linearly, the samples can be mapped from a lower-dimensional space (the input space) to a higher-dimensional space (the feature space). Suppose that the vectors x and z in the input space are represented by the vectors a and b in the higher-dimensional space. If the inner product \(\langle {\varvec{a}},{\varvec{b}}\rangle \) in the high-dimensional space can be written in terms of the inner product of x and z in the input space, the function that expresses it is called a kernel.
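A standard textbook example makes the kernel idea concrete. It was chosen for illustration and is not taken from the study. For two-dimensional inputs, the map \(\phi (x)=({x}_{1}^{2},\sqrt{2}{x}_{1}{x}_{2},{x}_{2}^{2})\) sends x into a three-dimensional feature space. There, \(\langle \phi (x),\phi (z)\rangle ={\langle x,z\rangle }^{2}\), so the feature-space inner product can be computed without ever forming \(\phi \).

```python
import math


def phi(x):
    """Explicit map from the 2-D input space to a 3-D feature space (illustrative choice)."""
    x1, x2 = x
    return (x1 * x1, math.sqrt(2) * x1 * x2, x2 * x2)


def inner(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def poly_kernel(x, z):
    """K(x, z) = <x, z>^2, equal to <phi(x), phi(z)> but computed in the input space."""
    return inner(x, z) ** 2
```

For x = (1, 2) and z = (3, 4), both `poly_kernel(x, z)` and `inner(phi(x), phi(z))` give 121, up to floating-point rounding in the second case.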

Since some datasets cannot be separated linearly, kernel functions can be used to turn a linear model into a nonlinear one. If the objective function of a linear classification problem contains the inner product \(\langle {{\varvec{x}}}_{{\varvec{i}}},{{\varvec{x}}}_{{\varvec{j}}}\rangle \), an appropriate kernel function \(K\left({{\varvec{x}}}_{{\varvec{i}}},{{\varvec{x}}}_{{\varvec{j}}}\right)\) can be substituted for it. The variant of the technique used for regression, where the dependent variable consists of continuous data, is called support vector regression (SVR). Support vector regression was used in this study because the dependent variables were continuous.
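SVR is not detailed further in the study. For background, the standard formulation replaces the classification constraints with an ε-insensitive loss. Errors smaller than ε cost nothing, and larger errors grow linearly. These are traded off against the flatness term \(\frac{1}{2}\langle {\varvec{w}},{\varvec{w}}\rangle \) through the penalty C. The sketch below evaluates that loss and the linear-kernel primal objective. The value ε = 0.1 is an assumption; it happens to be Scikit-learn's default for `SVR`.

```python
def epsilon_insensitive(y_true, y_pred, epsilon=0.1):
    """Zero inside the epsilon-tube, linear in the excess error outside it."""
    return max(0.0, abs(y_true - y_pred) - epsilon)


def svr_primal_objective(w, residuals, C=1.0, epsilon=0.1):
    """0.5 * ||w||^2 + C * sum of epsilon-insensitive losses (linear-kernel primal form)."""
    flatness = 0.5 * sum(wi * wi for wi in w)
    return flatness + C * sum(max(0.0, abs(r) - epsilon) for r in residuals)
```

For example, a predicted outlet temperature of 35.05 °C against a measured 35.0 °C costs nothing, while a prediction of 35.5 °C costs 0.4.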

Performance evaluation criteria and model selection

Once a machine learning model has been built, its performance must be evaluated. The evaluation criteria depend on the type of application: those used for regression analysis differ from those used for classification or clustering. For regression, metrics such as \({R}^{2}\), \(MAE\), \(MSE\) and \(MedAE\) can be used (Zheng 2015; Al-Ghobari et al. 2018). They are defined as follows:

\[ {R}^{2}=1-\frac{\sum_{i=1}^{{n}_{samples}}{\left({y}_{i}-\widehat{{y}_{i}}\right)}^{2}}{\sum_{i=1}^{{n}_{samples}}{\left({y}_{i}-\bar{y}\right)}^{2}} \]

\[ MAE=\frac{1}{{n}_{samples}}\sum_{i=1}^{{n}_{samples}}\left|{y}_{i}-\widehat{{y}_{i}}\right| \]

\[ MSE=\frac{1}{{n}_{samples}}\sum_{i=1}^{{n}_{samples}}{\left({y}_{i}-\widehat{{y}_{i}}\right)}^{2} \]

\[ MedAE=\mathrm{median}\left(\left|{y}_{1}-\widehat{{y}_{1}}\right|,\dots ,\left|{y}_{{n}_{samples}}-\widehat{{y}_{{n}_{samples}}}\right|\right) \]

Here \(\widehat{{y}_{i}}\) is the predicted value of the \(i\)-th sample, \({y}_{i}\) is the corresponding true value, \(\bar{y}\) is the mean of the true values and \({n}_{samples}\) is the number of observations.
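The four metrics can be computed directly from their definitions. The study used Scikit-learn, which provides `r2_score`, `mean_absolute_error`, `mean_squared_error` and `median_absolute_error`. The dependency-free sketch below is meant only to make the formulas concrete.

```python
import statistics


def regression_metrics(y_true, y_pred):
    """R^2, MAE, MSE and MedAE as defined above."""
    n = len(y_true)
    errors = [t - p for t, p in zip(y_true, y_pred)]
    mae = sum(abs(e) for e in errors) / n
    mse = sum(e * e for e in errors) / n
    medae = statistics.median(abs(e) for e in errors)
    mean_t = sum(y_true) / n
    ss_tot = sum((t - mean_t) ** 2 for t in y_true)
    r2 = 1 - sum(e * e for e in errors) / ss_tot
    return {"R2": r2, "MAE": mae, "MSE": mse, "MedAE": medae}
```

For `y_true = [3, -0.5, 2, 7]` and `y_pred = [2.5, 0, 2, 8]`, this gives MAE = 0.5, MSE = 0.375, MedAE = 0.5 and R² ≈ 0.9486.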

The performance evaluation results for the ANN, MLR and SVM models used in this study are given in Tables 7, 12 and 13, respectively. For all three algorithms, performance values were first obtained on the training set. The results on the test set were better than those on the training set, which indicates that the models are not overfitting. Tables 7, 12 and 13 report the test-set results. In Table 7, the higher values are shown in bold.

Supervised prediction methods in machine learning can broadly be grouped into schools (Domingos 2015): neural-network-based techniques, support vector machines, statistics-based techniques (Bayesian approaches, multiple linear regression), similarity-based techniques (nearest-neighbor approaches) and inductive approaches (decision trees). In this study, experiments were carried out with algorithms from three of these major schools. The success of these algorithms depends on the dataset used in each study. The same algorithms may perform differently on different datasets, including datasets similar to the one in this study.

There are different approaches to model selection in machine learning applications. Resampling methods estimate the performance of a model (more precisely, of the model development process) on out-of-sample data. Bootstrapping, cross-validation and random train/test splits are common resampling methods for model selection. In this study, the k-fold cross-validation technique was used, which helps prevent distribution problems that may arise when the dataset is split into training, validation and test sets. In the literature, k is usually chosen between 5 and 10, and k = 10 was therefore used in this study. In k-fold cross-validation, the dataset is divided into k parts. In each of k rounds, one part is held out as the test set and the model is trained on the remaining k − 1 parts. The overall performance of the model was obtained by averaging the performance values of the k rounds. This model selection technique was applied before the algorithms used in this study were trained.
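The k-fold procedure above can be sketched as an index generator plus an averaging loop. The seeded shuffle and the placement of leftover samples in the first folds are assumptions of this sketch; they mirror common library behavior. `score_fn` is a hypothetical user-supplied function that trains on one index set and returns a score on the other.

```python
import random


def k_fold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, test_idx) pairs; every sample is tested exactly once."""
    idx = list(range(n_samples))
    random.Random(seed).shuffle(idx)
    # the first n_samples % k folds get one extra sample
    fold_sizes = [n_samples // k + (1 if f < n_samples % k else 0) for f in range(k)]
    start = 0
    for size in fold_sizes:
        test = idx[start:start + size]
        train = idx[:start] + idx[start + size:]
        yield train, test
        start += size


def cross_validate(score_fn, n_samples, k=10):
    """Average score_fn(train_idx, test_idx) over the k folds."""
    scores = [score_fn(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

With 23 samples and k = 10, three folds hold 3 samples and seven hold 2. The union of the test folds covers every sample exactly once.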

Results

Performances of the ANN models

Table 7 shows the performance evaluation results for the dependent variables (\({T}_{hwo}\) and \({T}_{cwo}\)) of the ANN architectures built for the seven experiments. For each architecture, combinations of 1 to 8 neurons in the first and second hidden layers were tested, and the highest performances obtained are highlighted in a dark color in Table 7. For \({T}_{hwo}\), the highest performance of all architectures was achieved by ANN-8, with six neurons in both hidden layers. The lowest was achieved by ANN-11, with seven and eight neurons in the first and second hidden layers, respectively. For \({T}_{cwo}\), the highest performance was achieved by ANN-8, with seven and eight neurons in the first and second hidden layers, respectively. The lowest was achieved by ANN-11, with seven neurons in both hidden layers.

The multi-layer perceptron was trained with two hidden layers. Training was repeated with different numbers of iterations, numbers of neurons in the hidden layers and activation functions until the best performance values were obtained. Twenty different iteration counts between 1000 and 50,000 were tried, and the number of neurons was varied between 5 and 10. The sigmoid function was used as the activation function. All alternative values were tried in training the network, and the best results obtained on the test sets are given in Table 7.
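The search described above can be organized as an exhaustive grid. With 20 iteration counts and 6 neuron counts (5 to 10) in each of the two hidden layers, it covers 20 × 6 × 6 = 720 configurations. Two assumptions apply here. The 20 iteration counts are taken as evenly spaced, since the study gives only the range. `evaluate` is a hypothetical function that would train one network and return its test-set score.

```python
import itertools


def iteration_grid(lo=1000, hi=50000, count=20):
    """Assumed evenly spaced iteration counts between lo and hi (inclusive)."""
    step = (hi - lo) / (count - 1)
    return [round(lo + i * step) for i in range(count)]


def grid_search(evaluate, iterations, neurons=range(5, 11)):
    """Try every (iterations, n1, n2) combination and keep the highest score.

    evaluate(iterations, n1, n2) is hypothetical: it should train a
    two-hidden-layer sigmoid MLP and return, e.g., its test-set R^2.
    """
    best = None
    for it, n1, n2 in itertools.product(iterations, neurons, neurons):
        score = evaluate(it, n1, n2)
        if best is None or score > best[0]:
            best = (score, it, n1, n2)
    return best
```

Ties keep the first configuration encountered. A toy scorer that peaks at (1000 iterations, 6, 6) is therefore selected exactly once.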

Because the experiments in this study already yielded high performance values with these parameters, the other factors that may affect ANN performance were not changed. These include the activation function, normalization technique, type of learning algorithm, learning rate and momentum factor.

Performances of the MLR models

Tables 8 and 9 show the p-values and standard errors of the independent variables affecting \({T}_{hwo}\) and \({T}_{cwo}\), respectively, after stepwise regression. The p-value indicates the statistical significance of a regression coefficient. Since a 95% confidence level (significance level 0.05) was used in this study, independent variables with a p-value less than or equal to 0.05 can be considered significant in the regression model. For example, in the model developed for the experiment with \({\dot{m}}_{hw}=6\), \({T}_{cw}\) yielded a p-value greater than 0.05 and was eliminated during stepwise regression. Its coefficient is therefore left blank in the tables.

The MLR analyses of the seven sets of experimental data yielded equations for the two dependent variables in terms of the five independent variables. These are shown in Tables 10 and 11, respectively.

Table 12 shows the statistical performance of the MLR model developed to predict the dependent variables \({T}_{hwo}\) and \({T}_{cwo}\), evaluated with four performance metrics. For both dependent variables, prediction performance was slightly lower in the experiment with \({\dot{m}}_{hw}=11\) than in the other experiments. The highest performance for \({T}_{cwo}\) was obtained for the experiment with \({\dot{m}}_{hw}=8\), and the highest for \({T}_{hwo}\) for the experiment with \({\dot{m}}_{hw}=12\).

The graphs in Fig. 7 compare the averages of the actual \({T}_{hwo}\) and \({T}_{cwo}\) values obtained in the experiments with the values predicted by the model equations in Tables 10 and 11. The graphs also show intermediate values of \({\dot{m}}_{hw}\). All values plotted for \({T}_{hwo}\) and \({T}_{cwo}\) were obtained by averaging the experimental samples in the dataset. For example, the dataset contains 10 different test results for \({\dot{m}}_{hw}=6.1\), and the \({T}_{hwo-Experiment}\) and \({T}_{cwo-Experiment}\) values in the graphs are the averages of these 10 results. The other two quantities in the graphs, \({T}_{hwo-Predict}\) and \({T}_{cwo-Predict}\), are the averages of the results produced by the model equations in Tables 10 and 11. The graphs thus show how the performance results in Table 12 translate to the actual data. For example, Fig. 7a, which includes the comparison for \({\dot{m}}_{hw}=6.8\), shows that the model predicts \({T}_{hwo}\) very accurately. The lowest prediction accuracy in this graph appears to be for \({T}_{cwo}\) at \({\dot{m}}_{hw}=6.3\) and \({\dot{m}}_{hw}=6.6\).
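The per-flow-rate averaging behind the plotted points can be sketched as a simple group-by. It applies equally to the measured values and to the model predictions. This sketch assumes \({\dot{m}}_{hw}\) is recorded at a fixed resolution (e.g. 0.1), so equal flow rates compare equal as keys. The sample values in the usage note are hypothetical.

```python
from collections import defaultdict


def average_by_flow(samples):
    """samples: (m_hw, T_hwo, T_cwo) tuples -> {m_hw: (mean T_hwo, mean T_cwo)}."""
    sums = defaultdict(lambda: [0.0, 0.0, 0])  # running [sum T_hwo, sum T_cwo, count]
    for m_hw, t_hwo, t_cwo in samples:
        s = sums[m_hw]
        s[0] += t_hwo
        s[1] += t_cwo
        s[2] += 1
    return {m: (s[0] / s[2], s[1] / s[2]) for m, s in sorted(sums.items())}
```

For example, two hypothetical runs at \({\dot{m}}_{hw}=6.1\) with outlet temperatures (48.0, 35.0) and (49.0, 36.0) collapse to the single plotted point (48.5, 35.5).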

Comparison of the results produced by the MLR model with the actual values a 6 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤6.9, b 7 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤7.9, c 8 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤8.9, d 9 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤9.9, e 10 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤10.9, f 11 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤11.9

Performances of the SVR models

The kernel is a key parameter of the support vector regression model. In the Scikit-learn library, the kernel parameter can take the values 'linear', 'poly', 'rbf', 'sigmoid' and 'precomputed', and the best results were obtained with the 'rbf' kernel. Another important parameter is C, a penalty parameter that determines the tolerance for training errors; its default value of 1.0 was used. The final SVR parameter considered is gamma (γ), the kernel coefficient, for which the value 'auto' was used. Table 13 shows the performance evaluation results for the dependent variables (\({T}_{hwo}\) and \({T}_{cwo}\)) of the SVR models built for the seven experiments. During testing, the best prediction scores for \({T}_{cwo}\) were obtained for \({\dot{m}}_{hw}\) in the ranges \(9.5\le {\dot{m}}_{hw}\le 10.4\) and \(11.5\le {\dot{m}}_{hw}\le 12\). The best prediction scores for \({T}_{hwo}\) were obtained for \({\dot{m}}_{hw}\) in the ranges \(7.5\le {\dot{m}}_{hw}\le 8.4\) and \(9.5\le {\dot{m}}_{hw}\le 10.4\).
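The rbf kernel and the 'auto' gamma setting can be written out explicitly. In Scikit-learn, gamma='auto' means \(\gamma =1/{n}_{features}\), which is 0.2 for the five inputs used here. A fitted `SVR` exposes `support_vectors_`, `dual_coef_` and `intercept_`, and its prediction is the kernel expansion sketched below. The coefficient values in the usage note are hypothetical, not fitted values from the study.

```python
import math


def rbf(x, z, gamma):
    """RBF kernel K(x, z) = exp(-gamma * ||x - z||^2)."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))


def gamma_auto(n_features):
    """Scikit-learn's gamma='auto' setting: 1 / n_features."""
    return 1.0 / n_features


def svr_predict(x, support_vectors, dual_coef, intercept, gamma):
    """Kernel SVR prediction: f(x) = sum_i dual_coef_i * K(sv_i, x) + intercept."""
    return sum(c * rbf(sv, x, gamma) for sv, c in zip(support_vectors, dual_coef)) + intercept
```

With one hypothetical support vector at the origin, a dual coefficient of 2.0 and an intercept of 1.0, the prediction at the origin is 3.0, since \(K(x,x)=1\).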

The graphs in Fig. 8 compare the averages of the actual \({T}_{hwo}\) and \({T}_{cwo}\) values obtained in the experiments with the values predicted by the SVR model.

Comparison of the results produced by the SVR model with the actual values a 6 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤6.9, b 7 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤7.9, c 8 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤8.9, d 9 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤9.9, e 10 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤10.9, f 11 ≤ \({\dot{\mathrm{m}}}_{\mathrm{hw}}\)≤11.9

Conclusion

This study concerned a compact heat exchanger (CHE) with a complex structure, manufactured using metal additive manufacturing (MAM) technology. During the testing phase of the CHE, experiments were carried out to determine the outlet temperatures of the hot water (\(hw\)) and cold water (\(cw\)). Increasing the number of experiments would increase the manufacture time and cost of the CHE. The results of unperformed experiments were therefore predicted from the data of the performed experiments, using artificial neural networks (ANN), multiple linear regression (MLR) and support vector regression (SVR). The experiments on the CHE were repeated for seven different \(hw\) volumetric flow rates (\({\dot{m}}_{hw}\)). In the models developed for each experiment, the following were selected as input parameters for the ANN, MLR and SVR methods: the source and inlet temperatures of \(hw\) and \(cw\) (\({T}_{hw}\) and \({T}_{hwi}\), and \({T}_{cw}\) and \({T}_{cwi}\), respectively) and the volumetric flow rate of \(cw\) (\({\dot{m}}_{cw}\)). The performance evaluation criteria \({R}^{2}\), \(MSE\), \(MAE\) and \(MedAE\) were used to investigate the performance of the models. Other researchers could use the models obtained in this study to test heat exchangers with a similar geometry. Because the CHE developed in this study has a special geometry and was manufactured by MAM technology, there is no equivalent in the literature with which it can be compared. Moreover, only a limited number of studies have applied machine learning techniques to CHEs. Tan et al. (2009) applied an ANN model to predict the overall heat transfer rate (\(Q\)) of a compact fin-tube heat exchanger. They reported MAE values of 0.6%, 0.9% and 0.9% for the training, test and validation datasets, respectively.
Ermis (2008) developed an ANN model for predicting the heat transfer coefficient, pressure drop and Nusselt number, reporting \({R}^{2}\) values of 0.9995, 0.9952 and 0.9993, respectively. Other studies have generally addressed conventional heat exchangers, estimating phase change characteristics or heat exchanger parameters. According to the findings of this study, no significant difference was observed between the performances of the machine learning techniques. The mean \({R}^{2}\) values over the seven experiments were 0.960, 0.961 and 0.942 for the ANN, MLR and SVR models, respectively.

References

Al-Ghobari, H. M., El-Marazky, M. S., Dewidar, A. Z., & Mattar, M. A. (2018). Prediction of wind drift and evaporation losses from sprinkler irrigation using neural network and multiple regression techniques. Agricultural Water Management, 195, 211–221. https://doi.org/10.1016/j.agwat.2017.10.005.

Bai, Y., Chaudhari, A., & Wang, H. (2020). Investigation on the microstructure and machinability of ASTM A131 steel manufactured by directed energy deposition. Journal of Materials Processing Technology, 276, 116410. https://doi.org/10.1016/j.jmatprotec.2019.116410.

Bhatt, P. M., Kabir, A. M., Peralta, M., Bruck, H. A., & Gupta, S. K. (2019). A robotic cell for performing sheet lamination-based additive manufacturing. Additive Manufacturing, 27, 278–289. https://doi.org/10.1016/j.addma.2019.02.002.

Bineli, A. R. R., Peres, A. P. G., Jardini, A. L., & Maciel Filho, R. (2011). Direct metal laser sintering (DMLS): Technology for design and construction of microreactors. In 6th Brazilian Conference of Manufacturing Engineering, Caxias do Sul, RS, Brazil, April 11–15, 2011.

Bollegala, D. (2017). Dynamic feature scaling for online learning of binary classifiers. Knowledge-Based Systems, 129, 97–105. https://doi.org/10.1016/j.knosys.2017.05.010.

Brooks, H., & Molony, S. (2016). Design and evaluation of additively manufactured parts with three dimensional continuous fibre reinforcement. Materials & Design, 90, 276–283. https://doi.org/10.1016/j.matdes.2015.10.123.

Chabot, A., Laroche, N., Carcreff, E., Rauch, M., & Hascoët, J.-Y. (2019). Towards defect monitoring for metallic additive manufacturing components using phased array ultrasonic testing. Journal of Intelligent Manufacturing, 31, 1191–1201. https://doi.org/10.1007/s10845-019-01505-9.

Chemingui, H. (2013). Resistance, motivations, trust and intention to use mobile financial services. International Journal of Bank Marketing, 31(7), 574–592. https://doi.org/10.1108/IJBM-12-2012-0124.

Chollet, F. (2017). Deep learning with Python. Shelter Island: Manning Publications.

Cortes, C., & Vapnik, V. (1995). Support-vector networks. Machine Learning, 20(3), 273–297.

Cui, J., & Cui, Y. (2015). Effects of surface wettability and roughness on the heat transfer performance of fluid flowing through microchannels. Energies, 8(6), 5704–5724. https://doi.org/10.3390/en8065704.

Dinovitzer, M., Chen, X., Laliberte, J., Huang, X., & Frei, H. (2019). Effect of wire and arc additive manufacturing (WAAM) process parameters on bead geometry and microstructure. Additive Manufacturing, 26, 138–146. https://doi.org/10.1016/j.addma.2018.12.013.

Domingos, P. (2015). The master algorithm: How the quest for the ultimate learning machine will remake our world. United States: Basic Books.

Dong, L., Makradi, A., Ahzi, S., & Remond, Y. (2007). Finite element analysis of temperature and density distributions in selective laser sintering process. In Materials Science Forum (Vol. 553, pp. 75–80). Trans Tech Publications.

Ebrahimzadeh, E., Wilding, P., Frankman, D., Fazlollahi, F., & Baxter, L. L. (2016). Theoretical and experimental analysis of dynamic plate heat exchanger: non-retrofit configuration. Applied Thermal Engineering, 93, 1006–1019. https://doi.org/10.1016/j.applthermaleng.2015.10.017.

Erbay, L. B., Uğurlubilek, N., Altun, Ö., & Doğan, B. (2013). Compact heat exchangers. Engineer & The Machinery Magazine, 54(646), 37–48.

Ermis, K. (2008). ANN modeling of compact heat exchangers. International Journal of Energy Research, 32(6), 581–594.

Fluent, A. (2015). Ansys Fluent R16.1 Theory guide. Canonsburg, PA: Ansys Inc.

Foroozmehr, A., Badrossamay, M., Foroozmehr, E., & Golabi, S. I. (2016). Finite element simulation of selective laser melting process considering optical penetration depth of laser in powder bed. Materials & Design, 89, 255–263. https://doi.org/10.1016/j.matdes.2015.10.002.

Fragkaki, A., Farmaki, E., Thomaidis, N., Tsantili-Kakoulidou, A., Angelis, Y., Koupparis, M., et al. (2012). Comparison of multiple linear regression, partial least squares and artificial neural networks for prediction of gas chromatographic relative retention times of trimethylsilylated anabolic androgenic steroids. Journal of Chromatography A, 1256, 232–239. https://doi.org/10.1016/j.chroma.2012.07.064.

Garg, A., Panda, B., Zhao, D., & Tai, K. (2016a). Framework based on number of basis functions complexity measure in investigation of the power characteristics of direct methanol fuel cell. Chemometrics and Intelligent Laboratory Systems, 155, 7–18. https://doi.org/10.1016/j.chemolab.2016.03.025.

Garg, A., Sarma, S., Panda, B., Zhang, J., & Gao, L. (2016b). Study of effect of nanofluid concentration on response characteristics of machining process for cleaner production. Journal of Cleaner Production, 135, 476–489. https://doi.org/10.1016/j.jclepro.2016.06.122.

Gobert, C., Reutzel, E. W., Petrich, J., Nassar, A. R., & Phoha, S. (2018). Application of supervised machine learning for defect detection during metallic powder bed fusion additive manufacturing using high resolution imaging. Additive Manufacturing, 21, 517–528. https://doi.org/10.1016/j.addma.2018.04.005.

Hajabdollahi, H., & Seifoori, S. (2016). Effect of flow maldistribution on the optimal design of a cross flow heat exchanger. International Journal of Thermal Sciences, 109, 242–252. https://doi.org/10.1016/j.ijthermalsci.2016.06.014.

Han, X.-H., Cui, L.-Q., Chen, S.-J., Chen, G.-M., & Wang, Q. (2010). A numerical and experimental study of chevron, corrugated-plate heat exchangers. International Communications in Heat and Mass Transfer, 37(8), 1008–1014. https://doi.org/10.1016/j.icheatmasstransfer.2010.06.026.

Hudák, R., Šarik, M., Dadej, R., Živčák, J., & Harachová, D. (2013a). Material and thermal analysis of laser sinterted products. Acta Mechanica et Automatica, 7(1), 15–19.

Hussein, A., Hao, L., Yan, C., Everson, R., & Young, P. (2013). Advanced lattice support structures for metal additive manufacturing. Journal of Materials Processing Technology, 213(7), 1019–1026. https://doi.org/10.1016/j.jmatprotec.2013.01.020.

İpek, O., Kılıç, B., & Gürel, B. (2017). Experimental investigation of exergy loss analysis in newly designed compact heat exchangers. Energy, 124, 330–335. https://doi.org/10.1016/j.energy.2017.02.061.

Jiang, J., Xiong, Y., Zhang, Z., & Rosen, D. W. (2020). Machine learning integrated design for additive manufacturing. Journal of Intelligent Manufacturing. https://doi.org/10.1007/s10845-020-01715-6.

John, G. H., Kohavi, R., & Pfleger, K. (1994). Irrelevant features and the subset selection problem. In Machine Learning Proceedings 1994 (pp. 121–129). Amsterdam: Elsevier.

Kan, M., Ipek, O., & Gurel, B. (2015). Plate heat exchangers as a compact design and optimization of different channel angles. Acta Physica Polonica A, 12, 49–52. https://doi.org/10.12693/APhysPolA.128.B-49.

Kays, W. M., & London, A. L. (1984). Compact heat exchangers. New York: McGraw-Hill.

Khudheyer, A. F., & Mahmoud, M. S. (2011). Numerical analysis of fin-tube plate heat exchanger by using CFD technique. Journal of Engineering and Applied Sciences, 6(7), 1–12.

Kim, P. (2017). Matlab deep learning: With machine learning, neural networks and artificial intelligence. Berkeley, CA: Apress.

Kwon, O., Kim, H. G., Ham, M. J., Kim, W., Kim, G.-H., Cho, J.-H., et al. (2020). A deep neural network for classification of melt-pool images in metal additive manufacturing. Journal of Intelligent Manufacturing, 31(2), 375–386. https://doi.org/10.1007/s10845-018-1451-6.

Lam, C., & Bremhorst, K. (1981). A modified form of the k–ε model for predicting wall turbulence. ASME Journal of Fluids Engineering, 103, 456–460.

Li, X., Jia, X., Yang, Q., & Lee, J. (2020). Quality analysis in metal additive manufacturing with deep learning. Journal of Intelligent Manufacturing, 31, 2003–2017. https://doi.org/10.1007/s10845-020-01549-2.

Manfredi, D., Calignano, F., Ambrosio, E. P., Krishnan, M., Canali, R., Biamino, S., et al. (2013b). Direct Metal Laser Sintering: an additive manufacturing technology ready to produce lightweight structural parts for robotic applications. Metallurgia Italiana, 105(10), 15–24.

Narin, A., Isler, Y., & Ozer, M. (2014). Investigating the performance improvement of HRV Indices in CHF using feature selection methods based on backward elimination and statistical significance. Computers in Biology and Medicine, 45, 72–79. https://doi.org/10.1016/j.compbiomed.2013.11.016.

Nicolet, G., Eckert, N., Morin, S., & Blanchet, J. (2017). A multi-criteria leave-two-out cross-validation procedure for max-stable process selection. Spatial Statistics, 22, 107–128. https://doi.org/10.1016/j.spasta.2017.09.004.

Niu, X. D., Singh, S., Garg, A., Singh, H., Panda, B., Peng, X. B., et al. (2019). Review of materials used in laser-aided additive manufacturing processes to produce metallic products. Frontiers of Mechanical Engineering, 14(3), 282–298. https://doi.org/10.1007/s11465-019-0526-1.

Özdamar, K. (2004). Paket programlar ile istatistiksel veri analizi (çok değişkenli analizler) [Statistical data analysis with package programs (multivariate analyses)]. Eskişehir: Kaan Kitabevi.

Patterson, J., & Gibson, A. (2017). Deep learning: A practitioner’s approach. Sebastopol, CA: O’Reilly Media Inc.

Paul, A., Mozaffar, M., Yang, Z. J., Liao, W. K., Choudhary, A., Cao, J., et al. (2019). A real-time iterative machine learning approach for temperature profile prediction in additive manufacturing processes. In L. Singh, R. DeVeaux, G. Karypis, F. Bonchi, & J. Hill (Eds.), 2019 IEEE International Conference on Data Science and Advanced Analytics, Proceedings of the International Conference on Data Science and Advanced Analytics (pp. 541–550).

Penumuru, D. P., Muthuswamy, S., & Karumbu, P. (2020). Identification and classification of materials using machine vision and machine learning in the context of industry 4.0. Journal of Intelligent Manufacturing, 31(5), 1229–1241. https://doi.org/10.1007/s10845-019-01508-6.

Peyre, P., Rouchausse, Y., Defauchy, D., & Regnier, G. (2015). Experimental and numerical analysis of the selective laser sintering (SLS) of PA12 and PEKK semi-crystalline polymers. Journal of Materials Processing Technology, 225, 326–336. https://doi.org/10.1016/j.jmatprotec.2015.04.030.

Priddy, K. L., & Keller, P. E. (2005). Artificial neural networks: An introduction (Vol. 68). Bellingham: SPIE Press.

Qi, Z. X., Wang, H. Z., Li, J. Z., & Gao, H. (2018). FROG: Inference from knowledge base for missing value imputation. Knowledge-Based Systems, 145, 77–90. https://doi.org/10.1016/j.knosys.2018.01.005.

Ranganayakulu, C., Luo, X., & Kabelac, S. (2017). The single-blow transient testing technique for offset and wavy fins of compact plate-fin heat exchangers. Applied Thermal Engineering, 111, 1588–1595. https://doi.org/10.1016/j.applthermaleng.2016.05.118.

Roberts, I. A., Wang, C. J., Esterlein, R., Stanford, M., & Mynors, D. J. (2009). A three-dimensional finite element analysis of the temperature field during laser melting of metal powders in additive layer manufacturing. International Journal of Machine Tools & Manufacture, 49(12–13), 916–923. https://doi.org/10.1016/j.ijmachtools.2009.07.004.

Ross, S. M. (2014). Introduction to probability and statistics for engineers and scientists. Canada: Academic Press.

Sarafraz, M. M., & Hormozi, F. (2016). Heat transfer, pressure drop and fouling studies of multi-walled carbon nanotube nano-fluids inside a plate heat exchanger. Experimental Thermal and Fluid Science, 72, 1–11. https://doi.org/10.1016/j.expthermflusci.2015.11.004.

Sheikholeslami, M., Gorji-Bandpy, M., & Ganji, D. D. (2016). Experimental study on turbulent flow and heat transfer in an air to water heat exchanger using perforated circular-ring. Experimental Thermal and Fluid Science, 70, 185–195. https://doi.org/10.1016/j.expthermflusci.2015.09.002.

Silbernagel, C., Aremu, A., & Ashcroft, I. (2019). Using machine learning to aid in the parameter optimisation process for metal-based additive manufacturing. Rapid Prototyping Journal, 26(4), 625–637. https://doi.org/10.1108/rpj-08-2019-0213.

Stathatos, E., & Vosniakos, G. C. (2019). Real-time simulation for long paths in laser-based additive manufacturing: A machine learning approach. International Journal of Advanced Manufacturing Technology, 104(5–8), 1967–1984. https://doi.org/10.1007/s00170-019-04004-6.

Stone, M. (1974). Cross-validatory choice and assessment of statistical predictions. Journal of the Royal Statistical Society: Series B (Methodological), 36(2), 111–133.

Tan, C. K., Ward, J., Wilcox, S. J., & Payne, R. (2009). Artificial neural network modelling of the thermal performance of a compact heat exchanger. Applied Thermal Engineering, 29(17–18), 3609–3617. https://doi.org/10.1016/j.applthermaleng.2009.06.017.

Tiryaki, S., & Aydin, A. (2014). An artificial neural network model for predicting compression strength of heat treated woods and comparison with a multiple linear regression model. Construction and Building Materials, 62, 102–108. https://doi.org/10.1016/j.conbuildmat.2014.03.041.

Tsopanos, S., Sutcliffe, C., & Owen, I. (2005). The manufacture of micro cross-flow heat exchangers by selective laser melting. In Fifth International Conference on Enhanced, Compact and Ultra-Compact Heat Exchangers: Science, Engineering and Technology, Hoboken, NJ, United States, September 11–16, 2005.

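Uğuz, S. (2020). Makine öğrenmesi teorik yönleri ve Python uygulamaları ile bir yapay zeka ekolü [Machine learning: A school of artificial intelligence, with its theoretical aspects and Python applications]. Isparta: Nobel Academic Publishing.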

Usta, Y., & Köylü, A. (2012). Yakıt hücrelerinde kullanılacak gözenekli paslanmaz çelik toz metal parçaların üretim parametrelerinin araştırılması [Investigation of the production parameters of porous stainless steel powder metal parts for use in fuel cells]. Journal of the Faculty of Engineering & Architecture of Gazi University, 27(2), 265–274.

Ventola, L., Robotti, F., Dialameh, M., Calignano, F., Manfredi, D., Chiavazzo, E., et al. (2014). Rough surfaces with enhanced heat transfer for electronics cooling by direct metal laser sintering. International Journal of Heat and Mass Transfer, 75, 58–74. https://doi.org/10.1016/j.ijheatmasstransfer.2014.03.037.

Wang, C., Tan, X., Tor, S., & Lim, C. (2020). Machine learning in additive manufacturing: State-of-the-art and perspectives. Additive Manufacturing, 36, 101538. https://doi.org/10.1016/j.addma.2020.101538.

Yang, Y., He, M., & Li, L. (2020). Power consumption estimation for mask image projection stereolithography additive manufacturing using machine learning based approach. Journal of Cleaner Production, 251, 119710. https://doi.org/10.1016/j.jclepro.2019.119710.

Zhao, D., & Guo, W. (2019). Mixed-layer adaptive slicing for robotic Additive Manufacturing (AM) based on decomposing and regrouping. Journal of Intelligent Manufacturing, 31, 985–1002. https://doi.org/10.1007/s10845-019-01490-z.

Zheng, A. (2015). Evaluating machine learning models: A beginner’s guide to key concepts and pitfalls. Sebastopol, CA: O’Reilly Media.

Acknowledgements

This research was funded by the Scientific and Technological Research Council of Turkey (TUBITAK) under the TUBITAK 1001 programme, project no. 214M070. The authors gratefully acknowledge the financial support provided by TUBITAK.

Cite this article

Uguz, S., Ipek, O. Prediction of the parameters affecting the performance of compact heat exchangers with an innovative design using machine learning techniques. J Intell Manuf 33, 1393–1417 (2022). https://doi.org/10.1007/s10845-020-01729-0
