Abstract

Augmentative and alternative communication (AAC) applications may differ in their use of display and design elements. Using a multielement design, this study compared mand acquisition in three preschool-aged males with autism spectrum disorder across three displays presented in two iPad® AAC applications. The displays were a Widgit symbol button (GoTalk), a photographical hotspot (Scene and Heard), and a Widgit symbol button combined with a photograph (Scene and Heard). The applications also differed in other design elements. Two participants showed more rapid and consistent acquisition with the photographical hotspot than with the symbol button format, but did not master the combined format. The third participant mastered all three conditions at comparable rates. These results suggest that AAC display and design elements may influence mand acquisition.


Introduction

New technology devices such as the Apple iPod Touch®, Apple iPad®, and Apple iPhone® are being incorporated into educational programs for people with developmental disabilities. Kagohara et al. (2013) described 15 studies that included the use of such devices in interventions for individuals with autism spectrum disorder (ASD) or intellectual disability. In eight of these studies, the devices were used as a form of alternative or augmentative communication (AAC). The use of such devices in AAC intervention is likely to grow because specialized communication-related applications for them are becoming more widely available (Farrall 2012; McNaughton and Light 2013). In the 2-year period from July 2010 to July 2012, the number of AAC applications available on the iTunes store increased from 21 to over 200 (Farrall 2012). While such applications have been used successfully in AAC interventions, they appear to be increasingly diverse in quality and design, making it necessary to assess the suitability of the various options for any given individual (Farrall 2012; Gosnell et al. 2011; McBride 2011; McNaughton and Light 2013).

One way in which these applications may differ is in the visual display system used to organize photographical or symbol-based vocabulary, and differences in display type may affect performance (McNaughton and Light 2013). Traditionally, most AAC systems that involve speech output (i.e., speech-generating devices or SGDs) have been designed using grid-based formats in which pictures or symbols representing concepts are displayed in rows, and touching or otherwise selecting a symbol activates a pre-stored message (Drager et al. 2003, 2004; Light et al. 2004). To access multiple concepts, pages of grids are typically linked so that pressing a symbol on one page opens a second grid display with additional vocabulary items (Drager et al. 2004). These page links may be organized alphabetically, schematically (items grouped by activity locations or events), taxonomically (by category), or semantic-syntactically (by part of speech; Drager et al. 2003).

In contrast to grid-based systems, scene-based displays make use of “hotspot” areas, which can represent language concepts by embedding them contextually in a visual scene (Drager et al. 2003, 2004; Light et al. 2004; Wood Jackson et al. 2011). In such a system, a display might show a picture of a child’s bedroom and be configured so that selecting the teddy bear within the scene produces the message “I want to play with the teddy”. Links between pages would typically be organized schematically, so that pressing the graphic representation of a scene leads to a number of contextually embedded language buttons (Drager et al. 2004).

A third type of display combines features from grid-based and scene-based displays. This type of display can be referred to as a hybrid. Hybrids often combine grid-based and scene-based displays by showing a small grid of symbols, which is overlaid onto a visual scene (Light and Drager 2007). These models can incorporate embedded hotspots as well as buttons.

Some researchers (Drager et al. 2003, 2004; Light et al. 2004) have hypothesized that schematically organized scene systems place lower cognitive demands on the user than grid-based systems organized either taxonomically (i.e., by category) or schematically. It has further been hypothesized that scene-based systems may be particularly helpful for individuals with ASD because of their communication and language deficits (Shane 2006; Shane et al. 2012). More specifically, it has been suggested that scene-based systems may enable individuals with ASD to better process more complex communicative interactions because these displays may provide more meaningful and interesting visual supports (Light and Drager 2007; Shane 2006; Shane et al. 2012).

While AAC applications with scene-based and hybrid systems (e.g., AutisMate by SpecialNeedsWare and Scene and Heard by TBox Apps) are currently available (and in some cases specifically marketed for individuals with ASD), all eight studies described by Kagohara et al. (2013) used grid-based applications. In fact, seven of the eight studies used the same application (Proloquo2Go®), and only one study (Achmadi et al. 2012) taught learners to navigate between linked pages. Thus, while the existing research provides some support for the use of iPod®, iPad®, and iPhone® hardware and compatible applications in educational programs for individuals with ASD, there could be value in investigating different applications and display configurations. Research of this type may contribute to a better understanding of how best to apply this technology to enhance the communication skills of individuals with ASD (Gevarter et al. 2013).

To date, there appear to be no studies that have compared different applications and display formats when teaching communication skills to children with ASD using iPods®, iPads®, and/or iPhones® and communication-related applications. There are, however, studies that have compared scene-based and grid-based formats among typically developing children (Drager et al. 2003, 2004; Light et al. 2004; Wood Jackson et al. 2011) and among children with communication disorders (Wood Jackson et al. 2011). In two studies conducted by Drager et al. (2003, 2004), typically developing 2- and 3-year-old children were more accurate in locating vocabulary with schematically organized scene systems than with either schematically or taxonomically organized grid displays. In a similar study with 4- and 5-year-old children, however, Light et al. (2004) found no differences in the children’s performance across the taxonomic grid, schematic grid, and schematic scene displays. A further study by Wood Jackson et al. (2011) examined how 39 children between the ages of 2 and 6 (including 13 children with communication disorders) used different AAC displays during a shared story reading activity. Overall device activations were low, but the children with communication disorders activated the device more frequently with a grid display than with a visual scene when responding to open-ended questions. There were, however, no statistically significant differences in answering “wh” questions.

It is unclear whether the results of these studies would hold for individuals with ASD. In two recent studies, individuals with ASD did not differ in their acquisition of mands when taught to use low-iconic versus high-iconic symbols or picture versus photographic symbols (Angermeier et al. 2008; Jonaitis 2011). Belfiore et al. (1993) found that an adult participant with an intellectual disability required less time to sequence symbols presented at a closer distance than those presented at a farther distance. In scene-based systems, the distance between hotspots depends on the location of the items in a scene, whereas the location and spacing of symbols in grid-based systems can vary with the number of items on a page. In hybrid models, hotspot distances may vary, while symbols may be placed along the same horizontal or vertical plane. In another study, Reichle et al. (2000) compared fixed displays (all symbols on one page), dynamic active displays (symbols on one page linked to a second page), and dynamic passive displays (two pages of symbols linked by a “go to” symbol) in terms of latency and accuracy for matching photographs to symbols on an AAC device. The single participant in this study (who had developmental delays) responded faster and more accurately with the fixed and dynamic active display types, particularly when discrimination between multiple symbols was required. Although not directly related to scene-based, grid-based, or hybrid displays, this research suggests that the manner in which multiple pages are linked (which is likely to differ by display type) might influence accuracy and latency of responding, consistent with the findings of Drager et al. (2003, 2004).

As AAC applications for tablets and other touch-screen devices become more entrenched in educational programs for learners with ASD, evaluations and comparisons of applications that support grid-based, scene-based, and hybrid models may help inform recommendations for selecting appropriate applications (Gevarter et al. 2013). While previous research comparing SGD display types among typically developing children has involved advanced operations (e.g., navigating between multiple pages and discriminating between multiple pictures; Drager et al. 2003, 2004; Light et al. 2004; Wood Jackson et al. 2011), researchers who have developed SGD interventions for individuals with ASD and developmental disabilities often incorporate instruction in component skills or precursor stages. For instance, to introduce SGD use systematically to young learners with ASD and developmental disabilities, researchers have adapted the instructional procedures of Frost and Bondy’s (2002) Picture Exchange Communication System (PECS; Beck et al. 2008; Bock et al. 2005; Boesch 2011).

Following the PECS protocol, the earliest stage of instruction for an aided communication system would involve teaching single-item un-discriminated mands (i.e., a symbol or photographical representation not presented in a field; Frost and Bondy 2002). Thus, in an adaptation of the PECS protocol, the first phase of mand acquisition training with a symbol grid-based AAC system may involve teaching a learner to activate a single symbol button. Similarly, the first phase with a scene-based system may focus on teaching a learner to recognize and activate a single embedded hotspot within a photographical image. Early training with hybrid models may include teaching an individual to activate either a hotspot in a photographical image or a symbol button in a combined display. While not all individuals with ASD may require training in these early phases (i.e., some may be able to begin instruction at more advanced phases), for others these conditions may serve as precursors to what would conventionally be considered true grid, scene, and hybrid models (e.g., those involving multiple symbols or images). Before examining the more complex differences between grid, scene, and hybrid models, it is therefore important to determine whether attributes of these precursor conditions (i.e., a single symbol button as a precursor to grid, a single photographical hotspot as a precursor to scene, and a combined symbol button and photograph as a precursor to hybrid) affect early mand acquisition.

It is important to note that while display differences may play a role in mand acquisition, differences in application design elements not specifically related to display type (e.g., the use of haptic feedback or the location of navigational buttons) could also affect early mand acquisition. Design differences within and across applications may particularly affect learners who are just learning to activate a device. Thus, the role of these elements in differential mand acquisition should be considered alongside display type.

To lay the groundwork for further examinations of differences between AAC applications with more complex grid, scene, and hybrid formats, the purpose of this study was to determine (a) whether young children with ASD can acquire un-discriminated (i.e., field of one) mands using two iPad® AAC applications with different display and design elements, and (b) whether acquisition, in terms of mastery or rate of mastery, differed by application design and display.

Methods

Participants

Three males (Addie, Derek, and Liam) with ASD participated in the study. The study was approved by an institutional review board and parental consent was obtained for the children to participate. All three participants had received a medical diagnosis of ASD and had qualified to receive services for individuals with ASD following an additional independent diagnostic evaluation from a community-based program serving individuals with intellectual and developmental disabilities. Evaluation records from the community agency indicated that a psychologist had determined that all three children met DSM-IV-TR (APA 2000) criteria for both ASD and intellectual disability. The researchers reviewed assessment results on the Vineland Adaptive Behavior Scales (Sparrow et al. 2005), which master’s level behavioral therapists had completed via observation and parent report about 4 months prior to the start of the study. Based on observations, as well as parent and therapist report, the communication domain items of the Vineland were updated and rescored by the researcher to reflect communication performance and chronological age at the start of the study.

Addie was a 3.1-year-old African-American male who did not imitate or initiate vocal words or sounds (based on parent and therapist report as well as observations by the researcher). His communication domain standard score on the Vineland Adaptive Behavior Scales (Sparrow et al. 2005) was 36, indicating a low adaptive level in the area of communication. His estimated age equivalents were 1.1 years for receptive skills and 9 months for expressive skills. Addie mainly pointed, reached, led, and cried to communicate. He had received intervention using the PECS protocol (Frost and Bondy 2002) through early childhood intervention (ECI) services and had just begun Phase III of PECS (requesting from a field of two to three) when his ECI services ended. His parents did not actively use PECS with Addie at home, and it had not been introduced at his school. Addie’s family had purchased an Amazon Kindle™ tablet, and Addie was reported to have learned how to play educational games on it (e.g., pressing flashcard pictures to hear the label of the item, swiping between pages). His mother and ECI therapist both noted that he was highly motivated to use the device, and his mother expressed particular interest in trying tablet-based AAC systems.

Derek was a 3.11-year-old white male who inconsistently imitated vocal words or approximations and very rarely initiated vocal speech spontaneously (based on parent and therapist report as well as informal observations by the researcher). He reportedly used a few words, including “ball” and “more,” to make requests, but his speech was said to be inconsistent and unpredictable. His communication domain standard score on the Vineland Adaptive Behavior Scales (Sparrow et al. 2005) was 34, indicating a low adaptive level in the area of communication. His estimated age equivalents were 1.1 years for receptive skills and 1.6 years for expressive skills. Derek was reported to be able to imitate a few signs, but regularly used only the sign for MORE to request. He mainly used leading, pointing, reaching, and crying to communicate, and occasionally hit others or banged his head on a surface when he was not given access to preferred items. Derek had not been introduced to a picture-based AAC system, but had shown interest and motivation when playing simple educational games on his mother’s iPhone®. He had learned to press symbols or pictures on the iPhone® and to swipe the screen to move between pages.

Liam was a 3.6-year-old white male who was reported to consistently imitate vocal words, but rarely initiated communication with speech. He reportedly had about eight words that were occasionally heard spontaneously. His communication domain standard score on the Vineland Adaptive Behavior Scales (Sparrow et al. 2005) was 36, indicating a low adaptive level in the area of communication. Liam’s estimated age equivalent was 1.3 years for both receptive and expressive skills. At home and at school, Liam primarily used a PECS book to communicate and was performing at a level that indicated mastery of Phase III of the PECS protocol (Frost and Bondy 2002). Liam also initiated a few signs (MORE, ALL DONE, WANT) and pointed to desired items. Despite having learned these communicative behaviors, Liam often reverted to self-injurious behavior (i.e., hitting his head with his hand or on a hard surface) when access to preferred items was removed. His mother had recently purchased an iPad® and had noted that Liam was very motivated when playing simple educational games on it. He had learned how to press symbols or pictures on the iPad® and to swipe between pages. Liam’s mother was interested in learning whether tablet-based AAC systems on the iPad® or similar devices might be appropriate for him.

Setting

The sessions took place in the participants’ homes, in rooms that the mothers considered appropriate for working on requesting skills. For two participants, this area was a table in their bedroom. The third participant sat on the living room floor directly in front of a floor-level shelf that often held preferred items. The intervention lasted approximately 5–8 weeks (2–3 sessions each week). The first author, a board certified behavior analyst (BCBA) and certified special education teacher, served as the interventionist for all three participants.

Materials

Materials included an Apple iPad® with two applications, GoTalk by Attainment Company (symbol button condition) and Scene and Heard by TBox Apps (photographical hotspot and combined symbol and photograph conditions), preprogrammed with page displays corresponding to the participants’ preferred items. In the GoTalk symbol button condition (Fig. 1), the application pages were set to display only one symbol button, using the standard size, shape, and color that the application created for single-item displays (i.e., a white rectangular button with a black border taking up most of the screen). A symbol for each item was selected from the Widgit symbol library available in the application. If more than one symbol was available for an item, the symbol most closely resembling the actual item (in color, size, and other physical characteristics) was used. Speech output (e.g., “raisin”) was activated by touching the area within the rectangular border of the symbol button. Although the GoTalk application allows buttons to be created from camera images or images from internet searches, Widgit symbols were used to create a precursor for grid-based systems that may not support image or camera searches. In addition, using a photograph to create a single button might make this condition more similar to the photograph-based hotspot condition (the precursor to scene displays) than would be expected when comparing later-stage grid and scene displays (e.g., multiple buttons, each with its own photograph, may differ more from multiple hotspots within a single photograph than a button with a single image differs from a photograph with a single hotspot).

Design elements specific to the GoTalk application (i.e., elements that may not be directly related to symbol button displays in general, but may be unique to the application) included the image enlarging and coming forward when the symbol button was pressed. In addition, navigational buttons were displayed at the bottom of the screen.

For the Scene and Heard photographical hotspot condition (Fig. 2), the precursor to scene displays, a digital photograph was taken of a preferred item placed on the table or shelf where the intervention was conducted. The photograph was framed so that the item’s representation corresponded to the size and distance of the actual item when placed in the child’s view. A blue rectangular border was placed around the item to create a hotspot activation area, and speech output corresponding to the item’s name was activated by touching the area within that border.

For the Scene and Heard combined photograph and symbol button condition (Fig. 3), which represented a precursor to a more advanced hybrid condition, the digital photograph from the photographical hotspot condition was set as the background, but no rectangular borders or hotspots were placed within it. Instead, the Widgit symbol used for the GoTalk symbol button was placed on the display at the application’s default size and location for symbols added to an image (i.e., a small symbol without clear borders displayed in the left-hand corner of a white strip at the bottom of the screen). Speech output corresponding to the item’s name was activated only by touching the small area that contained the symbol.

Design elements specific to the Scene and Heard application (i.e., elements that may not be directly related to display formats in general, but may be more unique to the application) included a brief “click” sound emitted before voice output for hotspot images only (i.e., in the photographical hotspot condition) but not for symbol buttons (i.e., in the combined condition), and the absence of a border around symbol buttons. In addition, a navigational button was placed in the top right-hand corner of the screen.

Experimental Design and Sessions

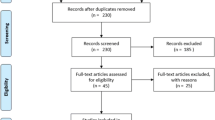

A multielement design (Kennedy 2005) was used in an attempt to demonstrate experimental control within each participant’s data set. A session in each of the three conditions (GoTalk symbol button, Scene and Heard photographical hotspot, and Scene and Heard combined photograph and symbol) consisted of 10 opportunities to request preferred items by selecting the corresponding symbol or hotspot on the screen. The order of conditions was counterbalanced across sessions and children to prevent sequencing effects (Kennedy 2005). Typically, three sessions, one per condition, were conducted each day. One of the six possible condition orders was randomly selected for each day, but the same order was never used on consecutive days. A 2–5 min break occurred between sessions. Intervention for each child continued until (a) the child reached the mastery criterion of 80 % correct responding for three consecutive days across two items in all three conditions, (b) the child did not reach mastery within 45 total sessions, or (c) the child had five sessions at or below 50 % correct responding in the remaining condition after reaching mastery in the other two conditions.
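
As an illustration only, the within-day ordering rule can be sketched in Python. The short condition labels, the function name `daily_orders`, the seeded generator, and the assumption that each of the five eligible orders is equally likely on a given day are hypothetical; the study reports the rule but not how the random draw was made.

```python
import itertools
import random
from typing import List, Optional, Tuple

# Hypothetical short labels for the three conditions.
CONDITIONS = ("GoTalk symbol button", "S&H photographical hotspot", "S&H combined")

# All 3! = 6 possible orders in which a day's three sessions could run.
ALL_ORDERS: List[Tuple[str, ...]] = list(itertools.permutations(CONDITIONS))


def daily_orders(n_days: int, seed: Optional[int] = None) -> List[Tuple[str, ...]]:
    """Pick one condition order per day at random, never repeating the
    order used on the previous day (a sketch of the rule described above)."""
    rng = random.Random(seed)
    schedule: List[Tuple[str, ...]] = []
    for _ in range(n_days):
        # Exclude yesterday's order, if there is one, then draw uniformly.
        eligible = [o for o in ALL_ORDERS if not schedule or o != schedule[-1]]
        schedule.append(rng.choice(eligible))
    return schedule


if __name__ == "__main__":
    for day, order in enumerate(daily_orders(5, seed=1), start=1):
        print(f"Day {day}: {' / '.join(order)}")
```

Drawing from the five orders that differ from the previous day's is one simple way to satisfy the no-consecutive-repeat constraint while keeping every order available.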

Preference Assessment

Snacks, drinks, and/or toys were selected using a two-stage preference assessment (Green et al. 2008). Parents were asked to list six preferred snack, drink, or toy items that would typically be available to the child. These six items were then directly assessed using a multiple-stimulus-without-replacement format (DeLeon and Iwata 1996). All six items were placed directly in front of the child, and the child was asked, “What do you want?” The item the child touched or pointed to, and then consumed or played with, was recorded. The child was allowed to consume the snack or drink, or play with the toy, for 10 s, after which the item was removed. The child was then asked, “What do you want?” again, but given a choice of only the remaining items. This process was repeated six times, and the order in which the items were selected was recorded. The assessment was repeated three times over three days, and an average rank was computed for each item. The three highest-ranked items were selected as preferred items for the study.
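
The rank-averaging step can be expressed as a short Python sketch. It assumes that each administration is recorded as the list of items in the order the child selected them (first pick = rank 1), that every item is eventually selected, and that ties in mean rank are broken alphabetically; the function name `mswo_top_items` and the item labels in the example are hypothetical.

```python
from statistics import mean
from typing import Dict, List


def mswo_top_items(administrations: List[List[str]], n_top: int = 3) -> List[str]:
    """Average each item's selection rank across MSWO administrations and
    return the n_top items with the best (lowest) mean rank."""
    ranks: Dict[str, List[int]] = {}
    for selection_order in administrations:
        # The first item chosen gets rank 1, the next rank 2, and so on.
        for rank, item in enumerate(selection_order, start=1):
            ranks.setdefault(item, []).append(rank)
    mean_rank = {item: mean(r) for item, r in ranks.items()}
    # Lower mean rank = more preferred; ties broken alphabetically (an assumption).
    return sorted(mean_rank, key=lambda item: (mean_rank[item], item))[:n_top]


# Three hypothetical administrations of six items (A-F).
example = [
    ["A", "B", "C", "D", "E", "F"],
    ["B", "A", "D", "C", "F", "E"],
    ["B", "C", "A", "E", "D", "F"],
]
print(mswo_top_items(example))  # ['B', 'A', 'C']
```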

Addie’s three items were his Kindle™ set to a web page displaying a preferred cartoon video, a cup of juice, and a set of Legos. Derek’s preferred items were raisins, a blue squishy ball, and a slinky. Liam’s preferred items changed during the course of the study. Initially, based on the multiple-stimulus assessment, pineapple, melon, and blueberries were selected. After Liam rejected blueberries on the first intervention day, due to a change in the availability of an alternative highly preferred item, blueberries were replaced by fruit snacks. By the fourth day, Liam was showing no interest in pineapple and was most often selecting fruit snacks, so a second assessment using fruit snacks, pineapple, and melon was conducted. Liam never selected pineapple and always selected fruit snacks over melon. At this point, the use of pineapple was discontinued and a modified procedure for selecting which item to use in each condition was implemented (see “Item Selection” under Procedures).

Data Collection and Response Definitions

The percentage of correct responses per session was recorded and used to determine mastery and rate of mastery. Mastery in each condition was defined as 80 % correct responding for three sessions across at least two items.
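
The mastery rule leaves some room for interpretation. The Python sketch below adopts one reading: a condition counts as mastered once any three consecutive sessions in that condition are each at or above 80 % correct and, together, involve at least two different items. The record format, the name `has_mastered`, and the example items are hypothetical.

```python
from typing import List, Tuple

# One record per session in a single condition: (item used, percent correct of 10 trials).
Session = Tuple[str, float]


def has_mastered(sessions: List[Session], criterion: float = 80.0,
                 run_length: int = 3, min_items: int = 2) -> bool:
    """True if some run of `run_length` consecutive sessions is entirely at or
    above `criterion` and spans at least `min_items` distinct items."""
    for start in range(len(sessions) - run_length + 1):
        window = sessions[start:start + run_length]
        all_at_criterion = all(pct >= criterion for _, pct in window)
        distinct_items = {item for item, _ in window}
        if all_at_criterion and len(distinct_items) >= min_items:
            return True
    return False


print(has_mastered([("item A", 90), ("item B", 80), ("item A", 100)]))  # True
print(has_mastered([("item A", 90), ("item A", 80), ("item A", 100)]))  # False: one item only
```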

Correct and Incorrect iPad® Responses

Correct responses in all conditions occurred when the child independently (i.e., without any physical, gestural, modeled, or vocal prompts) pressed the correct location on the screen to produce the speech output within 6 s of the iPad® being placed in front of him. The following criteria were also necessary for a correct response: (a) the screen location was pressed only once and with enough pressure to produce speech output, (b) the child used only one or two fingers, (c) the child did not touch another part of the iPad® before, or less than 3 s after, making the response, and (d) the child did not attempt to grab the item before making a correct response. Pressing only once was considered an important criterion because pressing more than once could lead to inappropriate repetitive vocal output or a delay in the speech output. The two-finger maximum was used to exclude accidental or non-discriminatory hits on the iPad® that might nonetheless produce speech output. Touching a different part of the iPad® was considered incorrect because it could lead to chains of incorrect touches and might cause problems such as changing the screen display or turning off the device. Responses in which the child’s fingers hovered over, but did not physically touch, an incorrect screen location before a correct response within the 6-s interval were, however, still considered correct. Additionally, if a second response (e.g., multiple taps or touching another part of the screen) occurred 3 s or more after a correct first response, it was considered researcher error (e.g., reinforcement not delivered quickly enough) and was not counted against the correct response. Grabbing the item was also considered an incorrect response, because reinforcing a grab plus an AAC response could inadvertently teach the participant to grab first as part of a behavior chain. Behavioral indication responses that involved neither touching the iPad® nor making physical contact with the reinforcing item (e.g., pointing to the item, indiscriminate vocalizations) were scored as neither correct nor incorrect and were ignored until the 6-s interval had elapsed. If the child self-corrected after an incorrect response but before a prompt was delivered, the response was still considered incorrect.
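
To make the combined scoring criteria concrete, the following Python sketch encodes them as a single check. The `Trial` record and its field names are hypothetical. The sketch assumes that a prompt, an incorrect touch, or a grab before the target press (including a later self-correction) makes the trial incorrect, and that a second response 3 s or more after a correct press is ignored as researcher error; responses scored as neither correct nor incorrect (e.g., pointing) are simply not represented.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Trial:
    # Hypothetical per-trial record; field names are illustrative only.
    latency_s: Optional[float]          # s from iPad placement to target press; None if no press
    prompted_first: bool                # any prompt delivered before the press
    speech_output: bool                 # the press produced the speech output
    fingers: int                        # fingers used for the press
    touched_elsewhere_first: bool       # touched another screen area first (hovering does not count)
    grabbed_item_first: bool            # tried to grab the item before the press
    second_response_s: Optional[float]  # s from the press to any extra tap/touch; None if none


def is_correct(t: Trial) -> bool:
    """Apply the correct-response criteria (a)-(d) within the 6-s window."""
    if t.latency_s is None or t.latency_s > 6.0:
        return False  # no target press within 6 s
    if t.prompted_first or not t.speech_output:
        return False  # not independent, or press did not trigger speech
    if t.fingers not in (1, 2):
        return False  # criterion (b): one or two fingers only
    if t.touched_elsewhere_first or t.grabbed_item_first:
        return False  # criteria (c) and (d); self-corrections stay incorrect
    if t.second_response_s is not None and t.second_response_s < 3.0:
        return False  # criteria (a)/(c): extra tap or touch within 3 s
    # An extra response 3 s or more later is treated as researcher error.
    return True
```

For example, `is_correct(Trial(2.0, False, True, 1, False, False, 4.0))` returns `True`, because the extra tap came more than 3 s after the press.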

Inter-observer Agreement (IOA)

During each session, the trainer recorded each response as correct or as incorrect/no response within 6 s. All sessions were videotaped, and for each child at least 33 % of sessions in each condition were randomly selected for IOA checks (i.e., four sessions per condition per child). Observers (two doctoral students) were trained by reviewing the operational definitions of correct and incorrect responses and viewing examples and non-examples of correct responses from videos not used for IOA. The observers then viewed the recordings selected for IOA and recorded responses. IOA was calculated as agreements / (agreements + disagreements) × 100 %. Mean IOA was 97.50 % (range 90–100 %) for Addie, 100 % for Derek, and 98.33 % (range 95–100 %) for Liam.
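
A minimal Python sketch of the IOA formula, assuming it is applied per session with each of the 10 trials counted as an agreement or a disagreement between the trainer's and the observer's records. The study states the formula but not whether agreement was computed trial by trial or on session totals; the function name and example data are hypothetical.

```python
from typing import Sequence


def ioa_percent(observer_1: Sequence[bool], observer_2: Sequence[bool]) -> float:
    """Trial-by-trial IOA: agreements / (agreements + disagreements) x 100."""
    if len(observer_1) != len(observer_2) or not observer_1:
        raise ValueError("both observers must score the same, non-empty set of trials")
    agreements = sum(a == b for a, b in zip(observer_1, observer_2))
    disagreements = len(observer_1) - agreements
    return 100.0 * agreements / (agreements + disagreements)


# Hypothetical 10-trial session scored True (correct) / False (incorrect or no response).
trainer = [True] * 8 + [False, True]
observer = [True] * 8 + [False, False]
print(ioa_percent(trainer, observer))  # 90.0
```

The same ratio, with steps correctly and incorrectly implemented in place of agreements and disagreements, gives the procedural integrity percentage.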

Procedures

Item Selection

At the start of each session, the child was presented with the three preferred items from the preference assessment. The first item the child reached for or pointed to was used for the subsequent 10 trials. If at any point during the 10 trials the child did not accept the item (i.e., did not consume a food or drink, or hold or engage with a toy, for at least 3 s), all three items were presented again, and whichever item the child reached for was used for the remaining trials of the session. Because Liam’s preferences appeared to change during the intervention, his procedure was modified. Specifically, starting with Session 13, Liam was not given a choice before sessions; instead, two preferred items were rotated across conditions. The item for each session was selected randomly, but if one item had been used in a given condition on two consecutive days, or had already been used in two of that day’s three sessions, the other item was selected.
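
The rotation rule used for Liam can be sketched in Python as follows. The function name `pick_item`, its arguments, and the item labels are hypothetical. The two constraints can conflict in the third session of a day; the sketch gives priority to the consecutive-day constraint, which is an assumption, since the study does not say how such conflicts were resolved.

```python
import random
from typing import List, Tuple


def pick_item(items: Tuple[str, str], condition_history: List[str],
              used_today: List[str], rng: random.Random) -> str:
    """Choose the item for the next session under the rotation rule.

    condition_history: item used in this condition on each previous day (latest last).
    used_today: items already used in earlier sessions today.
    """
    first, second = items

    def other(item: str) -> str:
        return second if item == first else first

    # Same item in this condition on the two most recent days -> switch.
    if len(condition_history) >= 2 and condition_history[-1] == condition_history[-2]:
        return other(condition_history[-1])
    # Item already used in two of today's sessions -> use the other one.
    for item in items:
        if used_today.count(item) >= 2:
            return other(item)
    # Otherwise choose at random between the two items.
    return rng.choice(items)
```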

Instruction

After an item was selected for the session, the researcher placed the iPad®, displaying the application page corresponding to the selected item, directly in front of the child. The trainer then waited 6 s for the child to independently press the Widgit symbol button (symbol button or combined formats) or the embedded hotspot (photographical hotspot format). If the child did not respond or made an incorrect response during the 6-s delay, the trainer implemented a least-to-most prompt hierarchy consisting of a gestural prompt (i.e., pointing to the area of the iPad® to touch), a partial physical prompt (guiding the child’s hand to a position just above the symbol), and a full physical prompt (physically assisting the child to make the correct response). Incorrect responses that involved grabbing the item, pressing the iPad® off button, or touching a part of the iPad® that changed the display were physically blocked, as necessary, before the prompt sequence was delivered. Correct and prompted responses were reinforced within 3 s by giving the child the requested item.

Procedural Integrity

Procedural integrity was assessed for four sessions per condition per child by independent observers using a checklist that outlined each step of the procedures (e.g., did the trainer place the iPad® in front of the child, was the correct application/condition presented, did the trainer follow the prompt hierarchy correctly, and did the trainer provide reinforcement within 3 s of correct responses). Procedural integrity was calculated as number of steps correctly implemented / (number of steps correctly implemented + number of steps incorrectly implemented) × 100 %. Mean integrity was 98 % for Addie, 99 % for Derek, and 98 % for Liam.

Results

Addie (Fig. 4) acquired mands with the Scene and Heard photographical hotspot format within four sessions as shown in Fig. 1. He took longer to reach criterion in the GoTalk Widgit symbol button condition, apparently because of frequent errors that involved touching the HOME or BACK arrow buttons in the bottom left-hand corner of the screen and tapping the symbol button multiple times. Addie did not reach the acquisition criterion in the Scene and Heard combined photograph and Widgit symbol button condition. Errors throughout the intervention in this condition included pressing the photographical representation of the item rather than the symbol, as well as multiple taps. This condition was discontinued after Session 32 (Day 11) in accordance with the decision rule, because he had 5 days at or below 50 % correct responding after the other two conditions had been mastered (see “Experimental Design and Sessions” section).

Derek (Fig. 5) reached mastery under the Scene and Heard photographical hotspot condition more rapidly than under the GoTalk symbol button condition. He did not achieve mastery under the Scene and Heard combined condition. Some of Derek’s error patterns were similar to Addie’s; for instance, he often pressed the photographical representation rather than the symbol in the combined condition. During the first few sessions, Derek activated the voice output in all conditions, but his responses were most often incorrect because of his tendency to tap the symbol multiple times. The multiple taps dissipated in the Scene and Heard photographical hotspot condition but persisted in both the GoTalk symbol button and Scene and Heard combined conditions. The variability in his performance in the GoTalk symbol button condition was most often due to multiple-tap errors, whereas errors in the Scene and Heard combined condition consisted of both multiple taps and pressing the wrong part of the screen. The Scene and Heard combined condition was discontinued at Session 39 because his performance was at 0 % (see the description of condition termination in the “Experimental Design and Sessions” section).
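The discontinuation rule applied to Addie’s combined condition (5 days at or below 50 % correct responding after the other two conditions had been mastered) can be sketched as a simple check. The full rule is defined in the “Experimental Design and Sessions” section and is not reproduced here, so this is only an assumed reading: it counts qualifying days without requiring them to be consecutive, it excludes the mastery day itself, and the function and parameter names are hypothetical.

```python
from typing import Sequence


def should_discontinue(
    daily_percent_correct: Sequence[float],
    mastery_day: int,
    threshold: float = 50.0,
    days_required: int = 5,
) -> bool:
    """Assumed reading of the termination rule for an unmastered condition.

    daily_percent_correct: percent correct for this condition, one value per day (Day 1 first)
    mastery_day: 1-based day on which the other two conditions had both been mastered
    """
    # Days strictly after the mastery day (index mastery_day is Day mastery_day + 1).
    days_after_mastery = daily_percent_correct[mastery_day:]
    low_days = sum(1 for pct in days_after_mastery if pct <= threshold)
    return low_days >= days_required
```

Under this reading, a condition stuck at 0 % correct (as Derek’s combined condition was) would be discontinued on the fifth day after the other two conditions were mastered.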

Liam reached criterion in all three conditions (Fig. 6). Following modification of the reinforcement procedures (i.e., randomly rotating two items), he reached criterion after three sessions in the GoTalk symbol button condition, after five sessions in the Scene and Heard photographical hotspot condition, and after seven sessions in the Scene and Heard combined condition. Initially, in both Scene and Heard conditions, when the less preferred item (melon) was used, Liam often made errors that involved touching a button in the upper right corner of the application that brought him back to a page with different scene options. Other errors included multiple taps (all conditions) and pressing the photographical representation rather than the symbol in the Scene and Heard combined condition.

Discussion

Addie and Derek showed more rapid acquisition with the Scene and Heard photographical hotspot configuration than with the GoTalk Widgit symbol button configuration, and neither child reached criterion under the Scene and Heard combined condition. Liam reached the mastery criterion in all three conditions in a similar number of sessions (i.e., three, five, and seven sessions for the symbol button, photographical hotspot, and combined conditions, respectively). These findings suggest that, for some children, the configuration of the display format as well as other application design elements might influence acquisition. However, this claim may be limited to the acquisition of single mands for preferred objects on an iPad®-based AAC system.

Our study is limited in that it remains unclear which specific components of the displays and design elements led to the differential performance observed for Addie and Derek. Unlike in previous studies comparing complex scene-based and grid-based displays (i.e., Drager et al. 2003, 2004), differences in the organization of symbols (e.g., schematic versus taxonomic) cannot account here for the differences in performance between the three conditions for Addie and Derek (i.e., GoTalk symbol button, Scene and Heard photographical hotspot, and Scene and Heard combined configurations). One might speculate that some of these differences arose because the combined configuration contains both a symbol and a photographical image, so the symbols neither take up most of the screen nor present clear discriminative stimuli. This might have slowed acquisition for Addie and Derek. Of course, it is possible that with continued intervention Addie and Derek would have reached criterion under the combined condition. Differential results for Addie and Derek might also stem from internal design elements of the specific applications chosen (e.g., the location of the HOME or BACK button, and auditory or visual feedback from pressing a button), or from an interaction of these variations with display differences. This possibility is consistent with the types of errors recorded. The differences in acquisition for Addie and Derek do not appear to be related to the type of symbol system used, because both the GoTalk symbol button and Scene and Heard combined configurations used Widgit symbols.

In relation to the observed error patterns, differences in the organizational structure of the combined display (i.e., having two images to select from) appeared to affect performance: all three children often pressed the photographical image of the desired item rather than the symbol, which was the correct response. This type of error could again be related to the smaller size of the symbols in the combined condition. Alternatively, it might relate to the location of the symbol, or to an inability to discriminate when to press the symbol and when to press the image, owing to a lack of clear stimulus highlighting (e.g., no border around the symbol button). Interestingly, the symbol’s location on the screen made it closer to the child than the embedded image, suggesting that, in contrast to prior research (Belfiore et al. 1993), placing symbols closer to the individual might not always be advantageous and that other factors (e.g., the choice of images or symbols, and their size) may play a role. It should also be noted that carryover related to experience with the photographical hotspot configuration might have influenced the results. However, if any such carryover was operating, it appeared to be only temporary and limited to the initial sessions. For example, in Addie’s first photographical hotspot session (immediately following the combined condition), most of his errors involved pressing the left-hand corner of the screen where the symbol had been located in the immediately prior combined condition. After this first photographical hotspot session, however, he no longer made this error. Furthermore, even if carryover does occur in multielement designs, there are times when it is clinically important to understand interaction effects between two conditions (Hains and Baer 1989). Ultimately, if the purpose of a more complex hybrid model (e.g., further development of the combined condition so that it includes both scene-based and grid-based voice output components) is to use both embedded hotspots and symbols, it would be important to teach individuals the conditional discrimination of when to select an embedded picture (e.g., when a border is placed around it) and when to select a symbol (e.g., when no border is placed around the item in the photographical image). The extent to which such a conditional discrimination can be taught during early acquisition may have implications for the use of hybrid configurations during initial AAC interventions with young children with ASD.
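Belfiore et al. (1993) examined Fitts’s law, which predicts movement time from both the distance to a target and the target’s size; this helps make concrete why proximity alone need not confer an advantage. The sketch below uses one common form (the Shannon formulation), MT = a + b·log2(D/W + 1), where a and b must be fitted empirically. It is illustrative only: the distances and widths are hypothetical and not measurements of the displays used here, and Fitts’s law concerns response time rather than selection errors, so it speaks only to the efficiency side of the argument.

```python
import math


def index_of_difficulty(distance: float, width: float) -> float:
    """Fitts's index of difficulty in bits (Shannon formulation): log2(D/W + 1)."""
    if distance < 0 or width <= 0:
        raise ValueError("distance must be >= 0 and width must be > 0")
    return math.log2(distance / width + 1)


def predicted_movement_time(distance: float, width: float, a: float, b: float) -> float:
    """MT = a + b * ID; a and b must be fitted empirically for a given person and task."""
    return a + b * index_of_difficulty(distance, width)


# Hypothetical targets in the same arbitrary units: a nearer but smaller symbol
# versus a farther but larger photograph.
near_small = index_of_difficulty(distance=7, width=1)   # log2(8) = 3 bits
far_large = index_of_difficulty(distance=12, width=4)   # log2(4) = 2 bits
```

With these made-up values, the nearer target is the harder one to reach, because its small size outweighs its shorter distance.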

With regard to the impact of the naturalistic representation provided by photographs taken in natural environments (i.e., precursors to more advanced scene-based displays), it is unclear whether this factor creates more motivating and interesting visual supports (as suggested by Shane 2006; Shane et al. 2012). It is also possible that clearer stimulus highlighting in the photographical condition (e.g., a blue border placed around the photographical hotspot versus a black border around the button), rather than the difference between symbols and images alone, affected acquisition. Furthermore, although two participants acquired mands under the photographical hotspot condition faster than under the symbol button condition, the difference in the number of sessions to reach criterion was not large. The fact that all children acquired mands under both the photographical hotspot and symbol button conditions supports the possibility that, during early acquisition, these configurations may be comparable in ease of learning.

There are also differences between the applications themselves (i.e., GoTalk versus Scene and Heard) that might influence ease of learning under these three configurations. The location and presence of navigational buttons, for example, appeared to influence the frequency of errors for both Addie and Liam. Addie’s initial errors with the GoTalk symbol button configuration often involved pressing the HOME or BACK arrow buttons in the bottom left-hand corner of the screen. It is possible that this was due to carryover from experience with the combined configuration, but Addie often did not press the left-sided symbol in that configuration. Conversely, Liam often made errors with the Scene and Heard photographical hotspot and combined configurations in which he pressed a SCENES button in the upper right-hand corner of the screen that navigated back to a screen with different photograph options. Interestingly, he showed this error only when requesting melon (a less preferred item) and not when requesting fruit snacks (a more preferred item). Given Liam’s prior experience playing iPad® games, he may have been attempting to navigate between screens to reach the symbol of the more preferred item. This analysis of errors suggests that the placement and design of navigational buttons might influence how children use these types of devices.

Another common error involved multiple taps. Both Addie and Derek showed this error fairly often in the Scene and Heard combined and GoTalk symbol button configurations, and Liam displayed it in all three conditions, but only during the initial sessions. These differences may stem from the fact that in the GoTalk application a pressed symbol darkens and enlarges, which might have evoked additional responses from the children. In the Scene and Heard application, touching and then releasing a hotspot (in the photographical condition) produces a “click” sound before the speech output, which may have served as a stimulus indicating that additional responses were unnecessary. This click did not occur when pressing symbol buttons in the Scene and Heard combined condition.

The results of this study suggest several directions for practice and future research. First, the data suggest that the least-to-most prompting procedure can be used to teach children with ASD to operate iPad®-based AAC, a conclusion that is consistent with previous research (van der Meer et al. 2012a, b). Our findings build on this conclusion by suggesting that it might also be important to assess an individual’s propensity to learn symbol button, photographical hotspot, and combined symbol and photographical image configurations when setting up communication interventions, so as to be more responsive to the individual’s unique learning characteristics (Gevarter et al. 2013; Schlosser and Sigafoos 2006; Lee et al. in press; McNaughton and Light 2013; Mirenda 2003). For instance, although we found the combined configuration the most problematic to teach, some individuals might find such displays very easy to use and more efficient than either the symbol button-based or photographical hotspot-based options.

Our study did not identify the factors responsible for the differences in acquisition evident for Addie and Derek. Studies aiming to do so would seem to require different applications, which are not yet available. Future applications capable of providing different types of feedback when symbols are selected and/or when multiple taps occur would enable researchers to explore the influence of such factors on errors. Such configurability is not yet common in applications relevant to AAC interventions. Another area of research may involve comparing displays with an embedded photographical image of an item (e.g., a picture of a cup on a table with the full background visible) and photographs of isolated items in a button (e.g., a photo of a cup on a white background). The impact of ASD severity, language development, and prior experience with AAC might also be studied to enable predictions as to which type of configuration is most likely to suit specific children.
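To make the proposed feedback manipulation concrete, the sketch below shows one way a hypothetical application could distinguish a single selection from multiple taps on the same target and choose feedback accordingly. It describes no existing AAC application; the 1.5-s window, the feedback labels, and the function names are all assumptions chosen for illustration.

```python
from typing import Sequence

# Hypothetical window (seconds) within which repeated taps count as one response.
TAP_WINDOW_S = 1.5


def count_taps_in_window(tap_times_s: Sequence[float], window_s: float = TAP_WINDOW_S) -> int:
    """Count taps on one target that fall within window_s of the first tap."""
    if not tap_times_s:
        return 0
    first = min(tap_times_s)
    return sum(1 for t in tap_times_s if t - first <= window_s)


def feedback_for_response(tap_times_s: Sequence[float], window_s: float = TAP_WINDOW_S) -> str:
    """Choose a (hypothetical) feedback type for one response."""
    n = count_taps_in_window(tap_times_s, window_s)
    if n == 0:
        return "none"
    if n == 1:
        return "speak"  # deliver speech output once
    # Still speak once, but flag the extra taps so a distinct cue could be given.
    return "speak_once_and_flag_multiple_taps"
```

A researcher could then vary what happens on the flagged branch (e.g., a click versus no cue) across conditions, which is the kind of manipulation the paragraph above calls for.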

In addition to attempting to account for differences in single-item mand acquisition across different display options within a single application, explorations of differences between more complex scene-based, grid-based, and hybrid models at more advanced phases of acquisition are warranted. For instance, studies should examine the acquisition of mands with different display formats for discriminated requests (e.g., a field of four items embedded as hotspots in a scene, as symbols in a grid, or as a combination of symbols in a grid and hotspots embedded in a scene) and for requests involving sentence construction with multiple symbols or pictures. Ultimately, differences between displays in multiple-page systems organized schematically and taxonomically could be compared in terms of accuracy and speed of requesting (e.g., by modifying procedures from previous studies such as Drager et al. 2003, 2004; Light et al. 2004).

References

Achmadi, D., Kagohara, D. M., van der Meer, L., O’Reilly, M. F., Lancioni, G. E., Sutherland, D., et al. (2012). Teaching advanced operation of an iPod-based speech-generating device to two students with autism spectrum disorders. Research in Autism Spectrum Disorders, 6, 1258–1264.

American Psychiatric Association (Ed.). (2000). Diagnostic and statistical manual of mental disorders: DSM-IV-TR®. American Psychiatric Pub.

Angermeier, K., Schlosser, R. W., Luiselli, J. K., Harrington, C., & Carter, B. (2008). Effects of iconicity on requesting with the Picture Exchange Communication System in children with autism spectrum disorder. Research in Autism Spectrum Disorders, 2, 430–446.

Beck, A. R., Stoner, J. B., Bock, S. J., & Parton, T. (2008). Comparison of PECS and the use of a VOCA: A replication. Education and Training in Developmental Disabilities, 43, 198–216.

Belfiore, P. J., Lim, L., & Browder, D. M. (1993). Increasing the efficiency of instruction for a person with severe disabilities: The applicability of Fitt’s law in predicting response time. Journal of Behavioral Education, 3, 247–258.

Bock, S. J., Stoner, J. B., Beck, A. R., Hanley, L., & Prochnow, J. (2005). Increasing functional communication in non-speaking preschool children: Comparison of PECS and VOCA. Education and Training in Developmental Disabilities, 40, 264–278.

Boesch, M. C. (2011). Augmentative and alternative communication in autism: A comparison of the Picture Exchange Communication System and speech output technology. Doctoral Dissertation. Retrieved from ProQuest Dissertations & Theses (PQDT) database (UMI No. 3479313).

DeLeon, I. G., & Iwata, B. A. (1996). Evaluation of a multiple-stimulus presentation format for assessing reinforcer preferences. Journal of Applied Behavior Analysis, 29, 519–533.

Drager, K. D. R., Light, J. C., Carlson, R., D’Silva, K., Larsson, B., Pitkin, L., et al. (2004). Learning of dynamic display AAC technologies by typically developing 3-year-olds: Effect of different layouts and menu approaches. Journal of Speech, Language and Hearing Research, 47, 1133–1148.

Drager, K. D. R., Light, J. C., Speltz, J. H. C., Fallon, K. A., & Jeffries, L. Z. (2003). The performance of typically developing 2 1/2-year-olds on dynamic display AAC technologies with different system layouts and language organizations. Journal of Speech, Language and Hearing Research, 46, 298–312.

Farrall, J. (2012). What’s APPropriate: AAC Apps for iPhones, iPads and other devices. Paper presented at the 15th biennial conference of the International Society for Augmentative and Alternative Communication (ISAAC), Pittsburgh, PA.

Frost, L., & Bondy, A. (2002). The picture exchange communication system training manual. Newark, DE: Pyramid Educational Products.

Gevarter, C., O’Reilly, M. F., Rojeski, L., Sammarco, N., Lang, R., Lancioni, G. E., et al. (2013). Comparisons of intervention components within augmentative and alternative communication systems for individuals with developmental disabilities: A review of the literature. Research in Developmental Disabilities, 34, 4415–4432.

Gosnell, J., Costello, J., & Shane, H. (2011). Using a clinical approach to answer “What communication apps should we use?”. Perspectives on Augmentative and Alternative Communication, 20, 87–96.

Green, V., Sigafoos, J., Didden, R., O’Reilly, M., Lancioni, G., Ollington, N., et al. (2008). Validity of a structured interview protocol for assessing children’s preferences. In P. Grotewell & Y. Burton (Eds.), Early childhood education: Issues and developments (pp. 87–103). New York: Nova Science Publishers.

Hains, A. H., & Baer, D. M. (1989). Interaction effects in multielement designs: Inevitable, desirable, and ignorable. Journal of Applied Behavior Analysis, 22, 57–69.

Jonaitis, C. (2011). The Picture Exchange Communication System: Digital photographs versus picture symbols. Doctoral Dissertation. Retrieved from ProQuest Dissertations & Theses (PQDT) database (UMI No. 3455172).

Kagohara, D. M., van der Meer, L., Ramdoss, S., O’Reilly, M. F., Lancioni, G. E., Davis, T. N., et al. (2013). Using iPods® and iPads® in teaching programs for individuals with developmental disabilities: A systematic review. Research in Developmental Disabilities, 34, 147–156.

Kennedy, C. H. (2005). Single-case designs for educational research. Boston: Allyn & Bacon.

Lee, A., Lang, R., Davenport, K., Moore, M., Rispoli, M., van der Meer, L., et al. (2013). Comparison of therapist implemented and iPad-assisted interventions for children with autism. Developmental Neurorehabilitation. doi:10.3109/17518423.2013.830231.

Light, J., & Drager, K. (2007). AAC technologies for young children with complex communication needs: State of the science and future research directions. Augmentative and Alternative Communication, 23, 204–216.

Light, J., Drager, K., McCarthy, J., Mellott, S., Millar, D., Parrish, C., et al. (2004). Performance of typically developing four- and five-year-old children with AAC systems using different language organization techniques. Augmentative and Alternative Communication, 20, 63–88.

McBride, D. (2011). AAC evaluations and new mobile technologies: Asking and answering the right questions. Perspectives on Augmentative and Alternative Communication, 20, 9–16.

McNaughton, D., & Light, J. (2013). The iPad and mobile technology revolution: Benefits and challenges for individuals who require augmentative and alternative communication. Augmentative and Alternative Communication, 29, 107–116.

Mirenda, P. (2003). Toward functional augmentative and alternative communication for students with autism: Manual signs, graphic symbols, and voice output communication aids. Language, Speech, and Hearing Services in Schools, 34, 203–216.

Reichle, J., Dettling, E. E., Drager, K. D. R., & Leiter, A. (2000). Comparison of correct responses and response latency for fixed and dynamic displays: Performance of a learner with severe developmental disabilities. Augmentative and Alternative Communication, 16, 154–163.

Schlosser, R. W., & Sigafoos, J. (2006). Augmentative and alternative communication interventions for persons with developmental disabilities: Narrative review of comparative single-subject experimental studies. Research in Developmental Disabilities, 27, 1–29.

Shane, H. C. (2006). Using visual scene displays to improve communication and communication instruction in persons with autism spectrum disorders. Perspectives on Augmentative and Alternative Communication, 15, 8–13.

Shane, H. C., Laubscher, E. H., Schlosser, R. W., Flynn, S., Sorce, J. F., & Abramson, J. (2012). Applying technology to visually support language and communication in individuals with autism spectrum disorders. Journal of Autism and Developmental Disorders, 42, 1228–1235.

Sparrow, S. S., Cicchetti, D. V., & Balla, D. A. (2005). Vineland adaptive behavior scales (2nd ed.). Circle Pines, MN: American Guidance Service.

van der Meer, L., Didden, R., Sutherland, D., O’Reilly, M. F., Lancioni, G. E., & Sigafoos, J. (2012a). Comparing three augmentative and alternative communication modes for children with developmental disabilities. Journal of Developmental and Physical Disabilities, 24, 1–18.

van der Meer, L., Kagohara, D., Achmadi, D., O’Reilly, M. F., Lancioni, G. E., Sutherland, D., et al. (2012b). Speech-generating devices versus manual signing for children with developmental disabilities. Research in Developmental Disabilities, 33, 1658–1669.

Wood Jackson, C., Wahlquist, J., & Marquis, C. (2011). Visual supports for shared reading with young children: The effect of static overlay design. Augmentative and Alternative Communication, 27, 91–102.

Acknowledgments

No financial funding was used to support this study. Data from this study has been submitted for a research symposium at the Applied Behavior Analysis International conference to be held in May of 2014.


Cite this article

Gevarter, C., O’Reilly, M.F., Rojeski, L. et al. Comparing Acquisition of AAC-Based Mands in Three Young Children with Autism Spectrum Disorder Using iPad® Applications with Different Display and Design Elements. J Autism Dev Disord 44, 2464–2474 (2014). https://doi.org/10.1007/s10803-014-2115-9


DOI: https://doi.org/10.1007/s10803-014-2115-9