Abstract

We examined whether discrete trial training (DTT) could be used to identify learning impairments in mathematical reasoning in boys with fragile X syndrome (FXS). Boys with FXS, aged 10–23 years, and age- and IQ-matched controls were trained to match fractions to pie-charts and pie-charts to decimals either on a computer or with a trained behavior analyst using DTT. Participants with FXS obtained significantly lower learning rates on the fractions to pie-charts task, and were more likely to perseverate on previously reinforced responses during learning compared to controls. These data suggest that DTT can be used to identify specific learning impairments in boys with FXS, as well as other low-functioning individuals with developmental disabilities.

Introduction

Studies conducted over the past 20 years have shown that individuals with fragile X syndrome (FXS), the most common known inherited form of intellectual disability, exhibit a characteristic cognitive profile of intellectual strengths and weaknesses including deficits in executive functioning, visual memory and perception, mental manipulation of visual-spatial relationships among objects, and aberrant processing of arithmetical stimuli (Bennetto et al. 2001; Cornish et al. 2004; Mazzocco 2001; Mazzocco et al. 2006; Murphy and Mazzocco 2008). In particular, mathematical reasoning skills appear to be significantly impaired in FXS, over and above general level of intellectual functioning, given that individuals with FXS have been shown to exhibit impairments in these skills in comparison to age- and IQ-matched controls (Hessl et al. 2009; Mazzocco 2001; Murphy 2009).

To date, research into the underlying learning problems associated with math impairments in FXS has focused largely on females with FXS, and in particular, those with IQs in the learning disability to typical development range (i.e., IQs > 80) (Murphy 2009; Murphy and Mazzocco 2008). This is perhaps understandable, given that females with FXS are less affected by FXS and are therefore often able to complete standardized assessments of mathematical reasoning skills. For example, Murphy and colleagues found that primary school-aged girls with FXS appeared to exhibit significant weaknesses on applied aspects of counting (such as the ability to use one-to-one correspondence when counting, or identifying the nth item in a set), yet exhibited an age-appropriate mastery of rote counting (i.e., counting in 1s) on standardized tests of mathematical reasoning (Murphy 2009; Murphy et al. 2006). To explore whether these deficits could be detected in elementary school-aged children, Murphy and Mazzocco (2008) administered the Ranking Proportions Test (Mazzocco and Devlin 2008) to nine high-functioning girls with FXS, aged 11–14 years. In this test, participants were required to rank-order sets of ten fractions, pie-charts and decimals. These authors found that while girls with FXS were able to rank-order the set of pie-charts at grade-level performance, they evidenced impaired performance when attempting to rank-order the fractions, suggesting that girls with FXS evidenced a specific learning impairment. Much less is known about the underlying learning problems associated with math impairments in boys with FXS, however, simply because boys with FXS commonly score on the floor of standardized tests of mathematical reasoning, given the presence of a moderate to severe intellectual disability (Hessl et al. 2009). Indeed, obtaining valid assessments of math performance in boys with FXS is particularly problematic since standardized assessments are rarely designed to accommodate the learning challenges associated with testing boys with FXS.

A promising new approach to assist in identifying learning impairments in boys with FXS may be to measure performance while participants are learning new material under optimal learning conditions, i.e., on a “learning platform” (Hall et al. 2012). In a previous study, we showed that an individual’s learning rate obtained during discrete trial training (DTT), a teaching procedure commonly employed in interventions for children with autism, could be used to provide a highly reliable, valid and sensitive measure of performance in participants with FXS (Hall et al. 2012). For example, if a participant is given two math tasks to learn, and learns one task at a significantly lower rate than the other task, this provides evidence that the individual has an impairment for learning that particular task. In DTT, a series of learning trials are presented to the participant in a highly structured and consistent manner combined with ample reinforcement and error correction. DTT thus provides a highly motivating, rewarding, and standardized learning environment, and consequently may provide a useful platform for studying the effects of learning in FXS, particularly for low-functioning individuals. Each DTT trial has five key components: (a) a discriminative stimulus (S^D), which specifies the behavior that the learner is to emit (e.g., “Touch red”, “Do this”, etc.); (b) a prompt, wherein the teacher assists the learner to provide a correct response (e.g., hand-over-hand assistance, modification of the position, size and/or intensity of the comparison stimulus to enhance the learner’s discrimination); (c) a response that the learner emits following the S^D, which is either correct or incorrect; (d) a consequence that is delivered contingent on the learner’s response, which typically includes the immediate delivery of reinforcement (e.g., edible items, a token, hugs, praise, etc.) for correct responding and error correction (e.g., the display of the correct response) for incorrect responding; and (e) an inter-trial interval (ITI), which is a brief period of time (~1–5 s) that elapses between the delivery of a consequence and the next S^D. Although a trial generally includes all of these components, prompts and programmed consequences typically are faded over time as the learner demonstrates improved and sustained independent performance (Smith 2001). Because DTT involves multiple learning opportunities (roughly 50–100 per hour; Howard et al. 2005), DTT maximizes the learner’s exposure to the relevant contingencies and therefore greatly facilitates acquisition of the targeted skill.

DTT has been employed successfully to teach children with developmental disabilities a variety of skills, including imitation (Lovaas et al. 1967), play (Coe et al. 1990), as well as more complex behaviors such as vocal (Krantz et al. 1981) and non-vocal (Carr et al. 1987; Carr and Dores 1981) communication, money skills (McDonagh et al. 1984; Stoddard and Sidman 1967), and simple mathematics (Maydak et al. 1995; Lynch and Cuvo 1995). To our knowledge, however, DTT has not been employed to identify the presence of learning impairments in individuals with developmental disabilities.

The purpose of the present study was therefore to provide preliminary data on the utility and feasibility of using DTT to identify impairments in math learning in boys with FXS. We employed mathematical stimuli similar to those employed in the Ranking Proportions Test (i.e., fractions, pie-charts and decimals) and incorporated them into two DTT learning tasks that could be presented either on a computer or by a teacher. In the fractions to pie-charts task, participants were required to learn equivalences between fractions and pie-charts. In the pie-charts to decimals task, participants were required to learn equivalences between pie-charts and decimals. To be successful, both tasks require that the participant count the number of filled and unfilled pieces of the pie-chart. However, in the fractions to pie-charts task, the participant is also required to relate those numbers to the numbers depicted on the fractions whereas in the pie-charts to decimals task, there is no such one-to-one correspondence.

Given that the ability to apply one-to-one correspondence when counting has been found to be impaired in girls with FXS, we hypothesized that boys with FXS would obtain lower learning rates on the fractions to pie-charts task compared to the pie-charts to decimals task. Furthermore, we hypothesized that age- and IQ-matched individuals, who presumably would not show this specific impairment, would learn the two tasks at the same rate.

Methods

Participants and Setting

Participants with FXS were recruited via advertisements sent to local chapters of the National Fragile X Foundation, and from an ongoing longitudinal study of children and adolescents with FXS; all had a confirmed genetic diagnosis of FXS (i.e., >200 CGG repeats on the FMR1 gene and evidence of aberrant methylation). Control participants were recruited via community media and state-run agencies for individuals with developmental disabilities in the local area (e.g., Regional Centers in California). Controls were individually matched to children with FXS in terms of age (±3 years), IQ (±10 points) and severity of autistic symptoms (±5 points on the Social Communication Questionnaire [SCQ]; Rutter et al. 2003). Individuals who were born preterm (<34 weeks), had low birth weight (<2,000 g), showed evidence of a genetic condition, exhibited sensory impairments, or had any serious medical or neurological condition that affected growth or development (e.g., seizure disorder, diabetes, congenital heart disease) were excluded. Control participants were subsequently screened for FXS to confirm that they did not have FXS.

Participants in both groups were included in the study if they: (a) were aged between 10 and 23 years, (b) had obtained scores on the floor of the Arithmetic subtest of the Wechsler Intelligence Scale for Children, Fourth Edition (WISC-IV) (Wechsler 2004), and (c) had obtained scores at chance levels (33.3 %) on tests involving fraction to pie-chart and pie-chart to decimal matching. To evaluate this last criterion, we administered two match-to-sample (MTS) pre-tests. In the first test, children were required to match fractions to pie-charts using the stimuli shown in the top two rows of Fig. 1. In the second test, children were required to match pie-charts to decimals using the stimuli shown in the bottom two rows of Fig. 1. Each test lasted 5 min and no error correction, prompting or reinforcement was given during each pre-test (see Hammond et al. 2012). All participants were required to obtain scores below 50 % on both tests to be eligible for inclusion in the present study. Ten boys with FXS, and 10 matched controls met the inclusion criteria. Table 1 shows the participant characteristics.

The mean age of the participants with FXS was 15.75 years (SD = 4.95) and the mean age of participants in the control group was 15.1 years (SD = 4.24). As can be seen from Table 1, both groups were also well matched in terms of IQ, severity of autistic symptoms, as well as baseline performance on each MTS pre-test. Three participants in the FXS group and four participants in the control group obtained scores on the SCQ that were considered to be in the ASD range (i.e., obtained scores of 15 or above).

Sessions were conducted in one of two rooms located within the Department of Psychiatry and Behavioral Sciences at Stanford University, each of which contained a table or desk, chairs, a laptop computer, and a computer mouse. All procedures were approved by the local Institutional Review Board, and parental consent and participant assent were obtained in all cases.

Procedures

In each group, five participants received DTT on the computer, and five participants received DTT by a trained behavior analyst. Participants who received DTT on the computer did not differ from those who received DTT by the teacher on background characteristics (i.e., age, IQ, severity of autistic symptoms, and scores obtained on the MTS pre-tests). The following instructions were delivered verbally and demonstrated to all participants before training began:

Today you are going to work on some math tasks. First, you will be shown a picture or a number. You will also be asked a question. Pictures or numbers then will be displayed below, one of which will be the correct answer to the question. Your job will be to select the correct answer. Another math problem then will appear, and so on. You will also see some green tokens displayed. If you get a question correct, you will receive another token. If you get a question wrong, you will lose a token. Once you have five tokens, you get to play a fun computer game. Please try to do the best you can. And if you’re not sure of an answer, it’s okay to guess.

On the first couple of trials, participants were prompted vocally and/or physically if they did not appear to understand the procedure.

Computer-Assisted DTT

Participants who received training on the computer were required to use a Dell laptop computer equipped with a mouse. Sessions were conducted using the Discrete Trial Trainer (DT Trainer) (Accelerations Educational Software 2003), a commercially available software program designed for children with autism and other developmental disabilities. The DT Trainer contains over 200 instructional programs that are incorporated into more than 10 key instructional domains, including classification, counting, identification, math, money, sequencing, spatial relations, time, word analysis, written words, and “wh-” questions (who, what, when, etc.). For the purpose of the present study, two programs were employed: “fractions to pie charts” and “pie charts to decimals”. The stimuli shown in the top two rows of Fig. 1 were used in the fractions to pie-charts program. The stimuli shown in the bottom two rows of Fig. 1 were used in the pie-charts to decimals program. These items were the only stimuli used in the present study.

To ensure that the training procedures were consistently applied across participants, we selected the default “Intermediate Learner 1” training profile for each participant and began training in the “Random” mode. At the start of training, therefore, the computer selected one of the stimulus relations at random and then a sample stimulus was randomly selected from the six available sample items in that particular relation. On each trial, the sample stimulus was presented centrally at the top of the screen, which was accompanied by a pre-recorded vocal prompt (e.g., “Show me the correct fraction” or “Find the correct decimal”, depending on which stimulus relation had been selected). Three comparison stimuli (one being the correct matching stimulus, the other two being incorrect or “distracter” stimuli selected from the remaining five comparison stimuli in the program) were then simultaneously displayed equidistant underneath the sample stimulus for the remainder of the trial (see Fig. 2, upper left panel). If the participant responded correctly before the trial timed out (i.e., within 10 s), all comparison stimuli were removed and the correct target stimulus was presented at the middle of the screen, accompanied by pre-recorded vocal reinforcement (e.g., “Good job, you showed me one third!”) for 3 s. Multiple variants of automated reinforcement were programmed (e.g., “Awesome!”; “Nice!”; “Fantastic!”) in an effort to prevent satiation to the specific form of praise; the participant’s name also was included in the statement on 10 % of the trials (e.g., “Kevin, good job, you showed me one third!”). Following a 2-s inter-trial interval (ITI), the next trial began. If the participant responded incorrectly on a trial, the two incorrect “distracter” stimuli were removed and the correct matching stimulus remained on the screen for 3 s, accompanied by a pre-recorded vocal “reminder” of the correct matching stimulus (e.g., “One third”; “Point 33”). 
Following a 2-s ITI, that same sample stimulus (to which the participant had just responded incorrectly) was presented again on the next trial.
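The composition of a single match-to-sample trial described above can be sketched as follows. This is an illustrative reconstruction under stated assumptions, not the DT Trainer's code: the function name `build_trial`, the integer item indices, and the uniform random shuffling of screen positions are all hypothetical.

```python
import random

def build_trial(sample_index, n_items=6, n_distracters=2, rng=random):
    """Return the comparison items for one trial, in display order.

    sample_index: index of the sample item; its matching comparison shares
    the same index (hypothetical encoding of the six items per relation).
    """
    # Distracters are drawn from the remaining five comparison stimuli.
    others = [i for i in range(n_items) if i != sample_index]
    comparisons = [sample_index] + rng.sample(others, n_distracters)
    # Assumption: left-to-right positions are randomized on every trial.
    rng.shuffle(comparisons)
    return comparisons

comparisons = build_trial(2, rng=random.Random(0))
```

With one correct comparison among three, a participant responding at random would be correct on one third of trials, which is the chance level used in the inclusion criteria.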

Fig. 2 Top panel: Screenshots of the trial presentation employed in the computer-assisted DTT. On an “unprompted” trial (left), the sample stimulus is displayed at the top of the screen, and the three comparison stimuli are displayed in a horizontal row at the bottom of the screen. On a “prompted” trial (right), the two incorrect distracters are moved away from the bottom of the screen to facilitate correct responding on the trial. Bottom panel: Photographs of the trial presentation employed in the teacher-assisted DTT. On an unprompted trial (left), the three comparison stimuli are displayed in a horizontal row closest to the student. On a prompted trial (right), the two incorrect distracters are moved away from the student to facilitate correct responding on the trial.

If the participant responded incorrectly on two successive trials to a particular item, position prompts were included on the next trial to facilitate correct responding (and hence, maintain high levels of reinforcement). These trials are called “prompted” trials. The prompting procedure involved the computer moving the distracter stimuli away from the correct matching stimulus, and closer to the sample stimulus, contingent on successive incorrect responses (see Fig. 2, upper right panel). If the participant selected a correct response on a prompted trial, the computer then faded the distracter stimuli back in by moving them closer to the correct stimulus on the bottom row of the screen (first with only one distracter stimulus present, and then with two distracter stimuli present) until the trial contained no prompts (i.e., all comparison stimuli were placed horizontally on the bottom row of the screen as before). In this way, the computer tracked the participant’s performance on each sample stimulus and adjusted the trial difficulty accordingly.

Following five consecutive correct responses to an item, that particular item was presented 50 % less often. If the participant subsequently responded correctly on a further five consecutive trials with that item, the item was marked “proficient” and was removed from the training array (i.e., no longer presented to the participant). However, if the participant responded incorrectly on two successive trials when that item was presented only intermittently, the item was moved back to more frequent presentation. The computer moved back and forth between training each relation, depending on performance of items within that relation. “Mixed” training was therefore employed; that is, blocks of training trials on one stimulus relation were followed by blocks of training trials on the other stimulus relation.
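The advancement and fallback rules for a single item can be sketched as a small state machine. This is a minimal illustration, not the DT Trainer's actual implementation: the class name `ItemTracker`, the state labels, and the assumption that the run counters restart whenever the item changes state are hypothetical.

```python
from dataclasses import dataclass

FREQUENT, INTERMITTENT, PROFICIENT = "frequent", "intermittent", "proficient"

@dataclass
class ItemTracker:
    """Tracks one sample item through the advancement/fallback rules."""
    state: str = FREQUENT
    correct_run: int = 0  # consecutive correct responses in the current state
    error_run: int = 0    # consecutive incorrect responses

    def record(self, correct: bool) -> str:
        if self.state == PROFICIENT:
            return self.state  # item is no longer in the training array
        if correct:
            self.correct_run += 1
            self.error_run = 0
            if self.correct_run == 5:
                # Five in a row: advance one level and restart the count (assumed).
                self.state = INTERMITTENT if self.state == FREQUENT else PROFICIENT
                self.correct_run = 0
        else:
            self.error_run += 1
            self.correct_run = 0
            if self.error_run == 2 and self.state == INTERMITTENT:
                # Two successive errors under intermittent presentation: fall back.
                self.state = FREQUENT
                self.error_run = 0
        return self.state
```

The sketch deliberately leaves out the position prompts that follow two successive errors; it covers only how often an item is presented and when it is retired.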

During training, five white circles (1 cm in diameter) were presented in a vertical column at the lower-right corner of the screen. Each circle represented a potential “token” that could be earned contingent on correct responding (see Fig. 2, upper panel). At the start of training, four of the circles were filled green. If the participant responded correctly on an unprompted trial (i.e., without prompts), a circle in the column immediately was filled green—indicating that a token had been earned for correct responding. If the participant responded incorrectly on an unprompted trial, one of the green-filled circles was changed to an open, unfilled circle (indicating that a token had been removed; response cost). If the participant did not have any tokens, all circles remained unfilled following an incorrect response, see Fig. 2, lower panel. Immediately following a correct trial in which a fifth token had been earned, one of four video games was presented for 15 s: “Whack-an-alien,” “Whack-a-spider,” “Pong,” and “Blinky.” (These games come pre-installed in the DT Trainer and require active involvement from the participant.) Following completion of the game, the next trial was initiated with all circles being unfilled. If the participant did not respond on a trial within 10 s, the word “Go” was displayed on the screen, which served as a prompt for the participant to click on the screen (using the mouse) to repeat the previously presented trial. The trial was then repeated with the same sample stimulus; however, the order of the comparison stimuli was randomly rotated.
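The token reinforcement and response cost contingencies can be summarized in a short sketch. The class name `TokenBoard` and its parameters are hypothetical, and the treatment of prompted trials (no token change) is an assumption, since the text specifies token changes only for unprompted trials.

```python
class TokenBoard:
    """Token reinforcement with response cost (illustrative names)."""

    def __init__(self, start_tokens: int = 4, capacity: int = 5):
        self.capacity = capacity
        self.tokens = start_tokens  # four circles are filled at the start of training

    def update(self, correct: bool, prompted: bool) -> bool:
        """Apply one trial outcome; return True if videogame access is earned."""
        if prompted:
            return False  # assumption: prompted trials neither add nor remove tokens
        if correct:
            self.tokens += 1
            if self.tokens == self.capacity:
                self.tokens = 0  # all circles are unfilled after the game
                return True
        elif self.tokens > 0:
            self.tokens -= 1  # response cost; the count cannot fall below zero
        return False
```

Starting with four tokens means the first unprompted correct response produces videogame access, so participants contact the reinforcer early in training.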

Each session was programmed to last 15 min. If the participant responded incorrectly on the last scheduled trial, however, sessions were not terminated until a correct response was emitted. At the end of each session, the phrase “All Done!” was displayed in the center of the screen, accompanied by a pre-recorded vocal statement, “All done.” Data on the participant’s progress through the programs were then automatically saved to the computer so that the next session began at the appropriate training point. Small breaks were allowed between sessions according to the individual needs of each participant. Training continued either until (a) the computer had marked all 12 items as proficient or (b) at least 1,000 trials had been completed on the first day of training, with an additional 500 trials completed on the second day of training.

Teacher-Assisted DTT

Sessions were conducted by one of two trained female teachers who were Board Certified in Behavior Analysis. The stimuli shown in Fig. 1 were laminated onto 18 “flash” cards, each measuring 4.5″ × 3″. Each teacher implemented DTT in a manner similar to that described in the computer-assisted DTT above. At the start of training therefore, the teacher selected a stimulus relation to train (i.e., fractions to pie-charts or pie-charts to decimals), and then randomly selected a sample stimulus from the six available cards for that particular relation. At the beginning of each trial, the teacher placed the sample stimulus directly onto a white poster board measuring 28″ × 22″ that was positioned horizontally on a table in front of the participant. The teacher then placed three comparison stimuli on the board equidistant beneath the sample card (one being the correct stimulus, the other two being incorrect or “distracter” stimuli that were selected from the remaining five comparison stimuli), simultaneously delivering a vocal prompt (e.g., “show me the correct fraction” or “find the correct decimal”) (see Fig. 2, lower left panel). If the participant selected the correct stimulus (by pointing to, touching or picking up the card), the teacher removed the distracter stimuli and placed that correct stimulus directly beneath the sample stimulus for approximately 3 s. The teacher also delivered positive reinforcement (e.g., “Good job, you showed me one third!”). The teacher then removed all stimuli from the board, and initiated the next trial.

If the participant responded incorrectly on a trial, the teacher removed the two incorrect distracter stimuli and moved the correct matching stimulus directly beneath the sample stimulus. During this correction procedure, the correct matching stimulus remained on the board for approximately 3 s while the teacher simultaneously delivered a vocal “reminder” of the correct matching stimulus (e.g., “One third”; “Point 33”). The teacher then removed the stimuli from the board and initiated the next trial using the same sample stimulus.

The same advancement/fallback procedures were followed as described above for the computer-assisted DTT. Thus, if the participant had responded incorrectly on an item across two successive trials, the prompting procedure was then initiated on the next trial for that item. The teacher employed the same prompting procedure as described above by positioning the incorrect distracter stimuli further away from the correct matching stimulus and closer to the sample card, contingent on incorrect responding, and subsequently fading the prompts contingent on correct responding (see Fig. 2, lower right panel). As in the computer-assisted DTT above, if the participant obtained five consecutive correct responses to an item, that item was presented less frequently. If the participant subsequently obtained five consecutive correct responses to that item, even when it was presented less frequently, that item was marked as proficient (and removed from the training array). However, if the participant obtained two consecutive incorrect responses to that item, that stimulus was presented more frequently again. If the participant did not respond on a trial within 10 s, the teacher removed the stimuli from the board and the trial was repeated with the same sample stimulus; however, the order of the comparison stimuli was randomly rotated.

To simulate the token reinforcement and response cost components used in the DT Trainer, five green plastic circular disks (each measuring 1″ in diameter) were used as tokens. At the start of training, the teacher arranged four of the tokens in a vertical column on the right-hand side of the board and either added a token to, or removed a token from, the column contingent on correct or incorrect responding. A laptop computer was positioned to the right of the board. Once the participant had acquired five tokens, the teacher indicated to the participant that they could play one of the four computer games by clicking the mouse. While the participant was playing the game, the teacher removed the tokens from the board. Once the game had finished (following approximately 15 s), the teacher initiated the next trial with no tokens being displayed.

Throughout training, a second teacher remained in the session room and collected data on the number of trials that each item had been presented; the number of correct, incorrect, and non-responses to that item; and whether the trial was prompted or not. Based on this information, the data collector notified the teacher (typically during videogame access or ITIs) which items were to be presented on the upcoming trials, and which items (if any) should be removed from the training array.

Given the additional time required for the teacher to manually present the stimuli, remove or add tokens, and schedule videogame reinforcement, ITIs typically were much longer than 2 s (i.e., the ITI for the computerized DTT). In fact, sessions with the teacher typically lasted twice as long (~30 min) for a comparable number of trials to be presented as the computer-assisted DTT, largely due to the longer ITIs. Similar to the computer-assisted DTT, however, small breaks were allowed between sessions according to the individual needs of each participant. Training continued either until (a) all 12 items were marked as proficient or (b) at least 600–800 trials had been completed.

Recording and Analysis

For participants who received computer-assisted DTT, data were automatically recorded on a trial-by-trial basis for each task concerning whether a trial was prompted or not, the participant’s response time (in seconds), and whether the participant had responded correctly on the trial. For participants who received teacher-assisted DTT, all sessions were recorded by a digital video camera, which was positioned so that stimulus presentation and participant responding were clearly visible on each trial. After training completion, the video file was uploaded to a laptop computer, and subsequently linked directly to ObsWin: a real-time observational data capture system (Martin et al. 1998). An observer subsequently scored each participant’s video so that the same dependent measures as those obtained in the computer-assisted DTT could be extracted (see above). For each trial, trial time was calculated by subtracting the time of trial onset (in seconds)—defined as when the teacher placed the stimuli on the poster board—from the time at which the participant responded (in seconds).

For each task, learning rates were computed by dividing the total number of correct responses that participants had obtained on unprompted trials by the total trial time on both prompted and unprompted trials (in minutes). Given that ITI times and videogame times are not included in the learning rate calculation, this analysis ensured that the data obtained from the two modes of DTT delivery would be comparable. For each dependent measure, we conducted a 2 (group) × 2 (task type) mixed-model ANOVA and examined the main effects of group, task type and the interaction between group and task type. The alpha level was set at .05 in each case.
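The learning rate defined above can be computed as in the following sketch. The `Trial` record and its field names are hypothetical; the calculation itself follows the definition in the text (correct unprompted responses divided by total trial time, in minutes, across prompted and unprompted trials).

```python
from typing import Iterable, NamedTuple

class Trial(NamedTuple):
    correct: bool
    prompted: bool
    trial_time_s: float  # trial onset to response, in seconds (ITIs excluded)

def learning_rate(trials: Iterable[Trial]) -> float:
    """Correct unprompted responses per minute of total trial time."""
    trials = list(trials)
    correct_unprompted = sum(1 for t in trials if t.correct and not t.prompted)
    total_minutes = sum(t.trial_time_s for t in trials) / 60.0
    if total_minutes == 0:
        raise ValueError("no trial time recorded")
    return correct_unprompted / total_minutes

example = [
    Trial(True, False, 6.0),
    Trial(False, False, 9.0),
    Trial(True, True, 5.0),   # correct but prompted: counts toward time only
    Trial(True, False, 10.0),
]
rate = learning_rate(example)  # 2 correct unprompted responses in 0.5 min
```

Because only time from trial onset to response enters the denominator, longer ITIs in teacher-assisted sessions do not lower the rate.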

Contingency Analysis

To examine whether participants’ responses on a trial were influenced by the contingency they had received on the previous trial, we conducted a sequential analysis of the data (see Bakeman and Quera 2011). For each task, the three possible outcomes for each learning trial during DTT, i.e., prompt (P), error correction (E), or reinforcement (R), were represented as a single event stream of mutually exclusive and exhaustive codes across time as shown in the example below.

| Trial, t | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | 19 | 20 | etc. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Outcome | R | E | R | P | E | E | P | R | E | P | R | R | E | P | E | E | P | E | R | E | … |

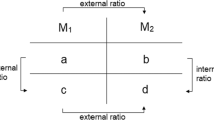

These event-sequential data are similar to those described in studies of child parallel play (Bakeman and Brownlee 1980) and marital interaction (Gottman 1979). Using the GSEQ software package (http://www2.gsu.edu/~psyrab/gseq/Download.html, version 5.1), we generated three 2 × 2 “lag 1” contingency tables for each contingency as follows:

| Contingency | Trial t − 1 | Trial t: R | Trial t: Not R |
|---|---|---|---|
| Prompt (P) | P | a | b |
| | Not P | c | d |
| Error correction (E) | E | a | b |
| | Not E | c | d |
| Reinforcement (R) | R | a | b |
| | Not R | c | d |
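The construction of a lag 1 table from an event stream can be sketched as follows, using the 20-trial example stream shown above. This is not the GSEQ implementation. The function names are hypothetical, and the odds ratio is computed with the standard cross-product formula (a × d)/(b × c), which is assumed here rather than taken from the text.

```python
from typing import Sequence, Tuple

def lag1_table(stream: Sequence[str], given: str, target: str = "R") -> Tuple[int, int, int, int]:
    """Return cell counts (a, b, c, d) for `given` at trial t-1 vs `target` at trial t."""
    a = b = c = d = 0
    for prev, curr in zip(stream, stream[1:]):  # successive (t-1, t) pairs
        if prev == given:
            if curr == target:
                a += 1
            else:
                b += 1
        else:
            if curr == target:
                c += 1
            else:
                d += 1
    return a, b, c, d

def odds_ratio(a: int, b: int, c: int, d: int) -> float:
    """Cross-product odds ratio; raises ZeroDivisionError if b or c is zero."""
    return (a * d) / (b * c)

stream = "R E R P E E P R E P R R E P E E P E R E".split()
table_r = lag1_table(stream, "R")  # (1, 5, 4, 9) for this stream
or_r = odds_ratio(*table_r)        # 0.45
```

An odds ratio below 1, as in this toy stream, means a correct response was less likely after a reinforced trial than after any other outcome; a value above 1 means it was more likely.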

Results

Figure 3 (upper panel) shows the proportion of correct responses that participants obtained on unprompted trials for each task. In boys with FXS, the mean proportion of correct responses obtained on unprompted trials was .53 (SD = .13) on the fractions to pie-charts task and .58 (SD = .10) on the pie-charts to decimals task. In matched controls, the mean proportion of correct responses obtained on unprompted trials was .70 (SD = .12) on the fractions to pie-charts task and .64 (SD = .15) on the pie-charts to decimals task.

A mixed-model ANOVA resulted in a significant interaction between group and task type (F(1,18) = 11.90, p = .003), indicating that boys with FXS obtained a significantly lower proportion of correct responses on unprompted trials on the fractions to pie-charts task.

Figure 3 (middle panel) shows the proportion of prompted trials received by participants on each task. In boys with FXS, the mean proportion of prompted trials received was .38 (SD = .20) on the fractions to pie-charts task and .28 (SD = .23) on the pie-charts to decimals task. In matched controls, the mean proportion of prompted trials received was .23 (SD = .19) on the fractions to pie-charts task and .25 (SD = .18) on the pie-charts to decimals task. A mixed-model ANOVA resulted in a significant interaction between group and task type (F(1,18) = 6.93, p = .017), indicating that boys with FXS received a significantly higher proportion of prompted trials on the fractions to pie-charts task.

Figure 3 (lower panel) shows the learning rates obtained by participants on each task. In boys with FXS, the mean learning rate was 2.43 (SD = 1.58) on the fractions to pie-charts task and 3.13 (SD = 1.51) on the pie-charts to decimals task. In matched controls, the mean learning rate was 3.88 (SD = 2.01) on the fractions to pie-charts task and 3.69 (SD = 1.98) on the pie-charts to decimals task. A mixed-model ANOVA resulted in a significant interaction between group and task type (F(1,18) = 6.78, p = .018), indicating that boys with FXS obtained significantly lower learning rates on the fractions to pie-charts task.

Contingency Analysis

As described above, we conducted an analysis to determine whether a participant’s response on a particular trial was influenced by the contingency that they had received on the previous trial (i.e., whether they had received a prompt, error correction or reinforcement). Figure 4 shows odds ratios obtained for each contingency for each task and group.

The figure shows that, for boys with FXS, the odds of selecting a correct response given that an error correction was received on the previous trial were significantly greater than chance on both tasks (odds ratios of 1.60 and 3.78, respectively). Conversely, the odds of selecting a correct response given that reinforcement was received on the previous trial were significantly lower than chance on both tasks (odds ratios of .63 and .27, respectively). These data indicate that boys with FXS were able to provide a correct response immediately following an error correction, but were less likely to do so following a previously reinforced response. For the controls, the odds of selecting a correct response given a previously reinforced response were significantly higher than chance on both tasks (odds ratios of 1.48 and 1.54, respectively). These data indicate that, in contrast to boys with FXS, controls continued to select the correct response following a previously reinforced response.
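To make the contingency measure concrete, the sketch below computes a lag-1 odds ratio from a sequence of trials. It assumes a 2 × 2 table that crosses "target contingency received on the previous trial" (yes/no) with "current response correct" (yes/no), which is one common formulation in sequential analysis and may differ in detail from the computation used in the study. The function name, the contingency labels, and the example sequence are hypothetical and are not data from the study.

```python
from typing import List, Tuple

Trial = Tuple[str, bool]  # (contingency received on the trial, response was correct)


def lag1_odds_ratio(trials: List[Trial], contingency: str) -> float:
    """Odds of a correct response after `contingency` relative to after any other contingency."""
    a = b = c = d = 0
    # Pair each trial with the one that follows it (lag 1).
    for (previous_contingency, _), (_, correct) in zip(trials, trials[1:]):
        if previous_contingency == contingency:
            if correct:
                a += 1  # correct after the target contingency
            else:
                b += 1  # incorrect after the target contingency
        else:
            if correct:
                c += 1  # correct after another contingency
            else:
                d += 1  # incorrect after another contingency
    if b == 0 or c == 0:
        raise ValueError("Odds ratio is undefined when a denominator cell is empty.")
    return (a * d) / (b * c)


# Hypothetical sequence, for illustration only.
example_trials: List[Trial] = [
    ("reinforcement", True),
    ("error_correction", False),
    ("reinforcement", True),
    ("error_correction", False),
    ("reinforcement", True),
    ("reinforcement", True),
    ("error_correction", False),
    ("error_correction", False),
    ("reinforcement", True),
]

odds_after_reinforcement = lag1_odds_ratio(example_trials, "reinforcement")
odds_after_error_correction = lag1_odds_ratio(example_trials, "error_correction")
print(round(odds_after_reinforcement, 3), round(odds_after_error_correction, 3))
```

An odds ratio below 1 means that a correct response was less likely after the target contingency than after other contingencies, and a value above 1 means it was more likely. In this hypothetical sequence the value for reinforcement falls below 1 and the value for error correction falls above 1, the same direction as the pattern reported for boys with FXS.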

Association with Age, IQ and Autistic Symptoms

Correlation analyses were conducted in each group to determine whether the learning measures obtained on each task were associated with chronological age, IQ and/or scores obtained on the SCQ. Results showed that, in the control group, there was a significant negative association between scores obtained on the SCQ and learning rates obtained on the fractions to pie-charts task (r(10) = −.735, p = .015). These data indicated that participants in this group who displayed more autistic features were slower to learn the fractions to pie-charts task. There were no other associations between participant characteristics and the learning measures.

Discussion

It is well established that individuals with FXS experience difficulties learning new skills, particularly those related to mathematical concepts and calculations. Given the significant issues involved in obtaining reliable and valid assessments of mathematical ability in boys with FXS, we examined whether a standardized teaching procedure, discrete trial training (DTT), which is a component of interventions commonly designed for individuals with autism, could be employed to identify learning impairments in boys with FXS. Because DTT affords a highly motivating and structured learning environment, it seems plausible that DTT could provide a useful platform for measuring learning effects, particularly for populations of individuals who usually score on the floor of standardized tests, such as boys with FXS. Specifically, we measured the performance of boys with FXS while they were learning new (or relatively unfamiliar) math material, i.e., fraction to pie-chart and pie-chart to decimal equivalences.

The results of the study showed that boys with FXS were significantly slower to learn the fraction to pie-chart equivalences, obtaining significantly fewer correct responses on unprompted trials, and requiring a significantly greater proportion of prompted trials to learn the task. Given that learning fraction to pie-chart equivalences can be considered to involve the ability to use one-to-one correspondence, these findings provide an empirical demonstration that specific learning impairments in mathematical reasoning, purportedly characteristic of the syndrome in girls with FXS, can also be detected in low-functioning boys with FXS using DTT. Furthermore, given that performance on the two tasks did not differ in matched controls diagnosed with a non-specific developmental disability, the impairment detected in boys with FXS appeared to be specific to FXS. Further studies should be conducted to determine whether individuals diagnosed with other genetic syndromes associated with intellectual disability (e.g., Down syndrome, Prader-Willi syndrome) exhibit similar or different learning profiles.

The conditional probability analysis of the trial-by-trial data was quite revealing. The odds ratios obtained for the contingencies on both tasks indicated that boys with FXS were less likely to choose the correct response on a trial if they had received reinforcement for selecting a correct response on the previous trial. It thus appears that boys with FXS were simply selecting the response that had been reinforced on the previous trial, even though the sample stimulus on the current trial had changed. The impairment was not necessarily related to an inability to remember what the correct response was from the previous trial because boys with FXS were highly proficient at selecting the correct response given that they had received an error correction on the previous trial. Thus, the strategy that boys with FXS appeared to be adopting was a sub-optimal “win-stay” strategy (Solomon et al. 2011).
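The consequence of a strict win-stay strategy can be illustrated with a toy simulation. The sketch below is an idealized model and not the study's procedure or data. It assumes that the correct comparison changes on every trial, that the responder repeats its previous selection whenever that selection was reinforced, and that it otherwise selects correctly. The function name and stimulus labels are hypothetical.

```python
from typing import List, Optional


def simulate_win_stay(correct_choices: List[str]) -> List[bool]:
    """Return per-trial correctness for an idealized win-stay responder."""
    outcomes: List[bool] = []
    previous_choice: Optional[str] = None
    previous_reinforced = False
    for correct_choice in correct_choices:
        if previous_reinforced and previous_choice is not None:
            # Win-stay: repeat the reinforced selection even though the sample has changed.
            choice = previous_choice
        else:
            # Assumption: after an error correction (or on the first trial)
            # the responder selects the correct comparison.
            choice = correct_choice
        is_correct = choice == correct_choice
        outcomes.append(is_correct)
        previous_choice, previous_reinforced = choice, is_correct
    return outcomes


# Hypothetical sequence in which the correct comparison differs from trial to trial.
correct_sequence = ["pie_1/4", "pie_1/2", "pie_3/4", "pie_1/2", "pie_1/4", "pie_3/4"]
outcomes = simulate_win_stay(correct_sequence)
print(outcomes)
```

Under these assumptions, every reinforced trial is followed by an error and every error correction is followed by a correct response. This is an extreme version of the pattern described above, in which accuracy was below chance after reinforcement and above chance after error correction.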

A fundamental issue that arises in testing individuals with developmental disabilities concerns the fact that prompting, error correction and reinforcement are usually not forthcoming during test trials on most standardized tests. That is, on each test trial, the individual is required to perform under “extinction” conditions, without contingent prompting, error correction or reinforcement. For example, on the WISC-IV (Wechsler 2004), during test administration, the instructions to the examiner state: “Do not reinforce the child’s performance by saying ‘good’ or ‘right’ after the child responds correctly… If the child asks for help… say ‘I want to see how well you can do it yourself’” (p. 37). This indicates that participants are placed on extinction during the assessment. We believe that this is a highly significant issue for individuals with developmental disabilities given that prompting, error correction, and reinforcement have been shown to be critical features for facilitating and maintaining the cognitive and behavioral repertoires of individuals with developmental disabilities. If prompting, error correction and reinforcement are excluded from test trials, individuals with developmental disabilities may no longer be motivated to continue responding, and thus may be more likely to obtain scores on the floor of the test. A solution to this problem, and the one that is proposed here, is to measure performance under optimal (and motivating) conditions, i.e., on a learning platform, rather than under suboptimal extinction conditions. We believe that this approach will provide a more valid measure of performance.

We included boys with FXS in the study if they had obtained scores on the floor of a standardized test of mathematical reasoning (the WISC Arithmetic subtest), and had scored below 50 % on pre-tests of fraction to pie-chart and pie-chart to decimal equivalences. This was done to ensure that participants were relatively unfamiliar with the stimuli and therefore could be evaluated while they were learning new material. However, in adopting these criteria, we excluded several boys with FXS who obtained scores higher than 50 % on the match-to-sample pre-tests. The study sample may therefore not be representative of all boys with FXS and may underestimate the performance levels of boys with FXS following DTT. The fact that we recruited a group of matched controls who also obtained scores on the floor of the WISC Arithmetic subtest does not, of course, imply that individuals with FXS do not exhibit impairments in math relative to IQ-matched controls. We wanted to determine whether math performance impairments in FXS could be detected relative to matched controls as the children were learning new material, not whether individuals with FXS were impaired at pre-test relative to matched controls. By selecting two groups that were comparable at baseline on the math tasks (and had obtained scores on the floor of a standardized test of mathematical ability), it was feasible to attribute differences to the training/independent variable and thus determine whether individuals with FXS evidenced weaknesses in learning new math skills relative to controls, the primary purpose of the investigation. We did manage to obtain valid scores on a brief standardized test of general intellectual functioning, the Wechsler Abbreviated Scale of Intelligence (WASI) (Wechsler 1999), with this sample. However, this test contains only 4 subtests and does not include an assessment of mathematical reasoning.

A significant benefit of using DTT to identify potential learning impairments in individuals with developmental disabilities is that prompts are programmed into the task to ensure that responding is maintained throughout a session. Although prompting strategies are commonly included during discrimination training to reduce the likelihood that responding will come under the control of incorrect stimuli or stimulus situations, it is possible that the least-to-most (position) prompting procedure utilized in this study may have impacted the development of proper stimulus control and therefore contributed to the less-than-perfect outcomes obtained for some participants (Butter and Mulick 2001). Following successive incorrect responses, for example, the DT Trainer positions the distracter stimuli further away from the correct stimulus—yet closer to the sample stimulus—contingent on successive incorrect trials. Anecdotally, several participants were observed to consistently select these distracter stimuli—which may have been due to the fact that they were placed in closer proximity to the sample stimulus (i.e., in line with their eye orientation), relative to the correct matching stimulus.

Related to the above, we did not require an observing response to be made prior to the participant making a selection, which may have weakened stimulus control and/or strengthened prompt dependency for a subset of participants. (An observing response, which involves the participant touching or somehow identifying the sample stimulus prior to the display of the comparison stimuli, is sometimes included in DTT procedures to ensure that participants are attending to the relevant sample stimulus before making a response.) Anecdotal observations suggest that an observing response may have been needed for at least one of the participants who received computer-assisted DTT because this participant simply selected the stimulus that was positioned closest to the sample stimulus with such a brief response time as to reasonably prohibit discrimination of all stimuli depicted on the screen. Of the participants who received teacher-assisted DTT, two matched control participants needed constant reminders to look at the sample stimulus before making a selection. Finally, one participant with FXS who received teacher-assisted DTT was noted to engage in escape behavior on two occasions by pretending to fall asleep or by pulling his shirt over his face.

Given that the purpose of the study was to provide preliminary data on the utility and feasibility of using DTT to identify impairments in math learning in boys with FXS, it should be pointed out that this is not necessarily how DTT would be implemented in practice. We also recognize that only a limited range of stimuli were presented to the participants across a brief period of time. However, given these initial results, it appears that learning impairments in mathematical reasoning associated with FXS can be detected using DTT and in particular, that perseveration on previously reinforced trials during learning may inhibit the ability of boys with FXS to form one-to-one correspondences between mathematical stimuli. Early interventions that employ computerized instructional formats to target this learning problem should be considered for implementation across the school and home settings for young children with FXS.

References

Accelerations Educational Software. (2003). The Discrete Trial Trainer: User’s guide. West Columbia, SC: Accelerations Educational Software.

Bakeman, R., & Brownlee, J. R. (1980). The strategic use of parallel play: A sequential analysis. Child Development, 51, 873–878.

Bakeman, R., & Quera, V. (2011). Sequential analysis and observational methods for the behavioral sciences. Cambridge: Cambridge University Press.

Bennetto, L., Taylor, A. K., Pennington, B. F., Porter, D., & Hagerman, R. J. (2001). Profile of cognitive functioning in women with the fragile X mutation. Neuropsychology, 15(2), 290–299.

Butter, E. M., & Mulick, J. A. (2001). ABA and the computer: A review of the Discrete Trial Trainer. Behavioral Interventions, 16, 287–291.

Carr, E. G., & Dores, P. A. (1981). Patterns of language acquisition following simultaneous communication with autistic children. Analysis and Intervention in Developmental Disabilities, 1, 347–361.

Carr, E. G., Kologinsky, E., & Leff-Simon, S. (1987). Acquisition of sign language by autistic children. III: Generalized descriptive phrases. Journal of Autism and Developmental Disorders, 17(2), 217–229.

Coe, D., Matson, J., Fee, V., Manikam, R., & Linarello, C. (1990). Training nonverbal and verbal play skills to mentally retarded and autistic children. Journal of Autism and Developmental Disorders, 20(2), 177–187.

Cornish, K. M., Turk, J., Wilding, J., Sudhalter, V., Munir, F., Kooy, F., et al. (2004). Annotation: Deconstructing the attention deficit in fragile X syndrome: A developmental neuropsychological approach. Journal of Child Psychology and Psychiatry, 45(6), 1042–1053.

Gottman, J. M. (1979). Marital interaction: Experimental investigations. New York: Academic Press.

Hall, S. S., Hammond, J. L., Hirt, M., & Reiss, A. L. (2012). A ‘learning platform’ approach to outcome measurement in fragile X syndrome: A preliminary psychometric study. Journal of Intellectual Disability Research, 56(10), 947–960.

Hammond, J. L., Hirt, M., & Hall, S. S. (2012). Effects of computerized match-to-sample training on emergent fraction-decimal relations in individuals with fragile X syndrome. Research in Developmental Disabilities, 33(1), 1–11.

Hessl, D., Nguyen, D. V., Green, C., Chavez, A., Tassone, F., Hagerman, R. J., et al. (2009). A solution to limitations of cognitive testing in children with intellectual disabilities: The case of fragile X syndrome. Journal of Neurodevelopmental Disorders, 1(1), 33–45.

Howard, J. S., Sparkman, C. R., Cohen, H. G., Green, G., & Stanislaw, H. (2005). A comparison of intensive behavior analytic and eclectic treatments for young children with autism. Research in Developmental Disabilities, 26(4), 359–383.

Krantz, P. J., Zalenski, S., Hall, L. J., Fenske, E. C., & McClannahan, L. (1981). Teaching complex language to autistic children. Analysis and Intervention in Developmental Disabilities, 1, 259–297.

Lovaas, O. I., Freitas, L., Nelson, K., & Whalen, C. (1967). The establishment of imitation and its use for the development of complex behavior in schizophrenic children. Behaviour Research and Therapy, 5(3), 171–181.

Lynch, D. C., & Cuvo, A. J. (1995). Stimulus equivalence instruction of fraction-decimal relations. Journal of Applied Behavior Analysis, 28(2), 115–126.

Martin, N., Oliver, C., & Hall, S. (1998). ObsWin: Software for the collection and analysis of observational data. Birmingham: University of Birmingham.

Maydak, M., Stromer, R., Mackay, H. A., & Stoddard, L. T. (1995). Stimulus classes in matching to sample and sequence production: The emergence of numeric relations. Research in Developmental Disabilities, 16(3), 179–204.

Mazzocco, M. M. (2001). Math learning disability and math LD subtypes: Evidence from studies of Turner syndrome, fragile X syndrome, and neurofibromatosis type 1. Journal of Learning Disabilities, 34(6), 520–533.

Mazzocco, M. M., & Devlin, K. T. (2008). Parts and ‘holes’: Gaps in rational number sense in children with vs. without mathematical learning disability. Developmental Science, 11, 681–691.

Mazzocco, M. M., Singh Bhatia, N., & Lesniak-Karpiak, K. (2006). Visuospatial skills and their association with math performance in girls with fragile X or Turner syndrome. Child Neuropsychology, 12(2), 87–110.

McDonagh, E. C., McIlvane, W. J., & Stoddard, L. T. (1984). Teaching coin equivalences via matching to sample. Applied Research in Mental Retardation, 5(2), 177–197.

Murphy, M. M. (2009). A review of mathematical learning disabilities in children with fragile X syndrome. Developmental Disabilities Research Reviews, 15(1), 21–27.

Murphy, M. M., & Mazzocco, M. M. (2008). Rote numeric skills may mask underlying mathematical disabilities in girls with fragile X syndrome. Developmental Neuropsychology, 33(3), 345–364.

Murphy, M. M., Mazzocco, M. M., Gerner, G., & Henry, A. E. (2006). Mathematics learning disability in girls with Turner syndrome or fragile X syndrome. Brain and Cognition, 61(2), 195–210.

Rutter, M., Bailey, A., & Lord, C. (2003). The Social Communication Questionnaire: Manual. Los Angeles: Western Psychological Services.

Smith, T. (2001). Discrete trial training in the treatment of autism. Focus on Autism and Other Developmental Disabilities, 16(2), 86–92.

Solomon, M., Smith, A. C., Frank, M. J., Ly, S., & Carter, C. S. (2011). Probabilistic reinforcement learning in adults with autism spectrum disorders. Autism Research, 4(2), 109–120.

Stoddard, L. T., & Sidman, M. (1967). The effects of errors on children’s performance on a circle-ellipse discrimination. Journal of the Experimental Analysis of Behavior, 10(3), 261–270.

Wechsler, D. (1999). Wechsler Abbreviated Scale of Intelligence. San Antonio: The Psychological Corporation.

Wechsler, D. (2004). Wechsler Intelligence Scale for Children (4th ed.). San Antonio: The Psychological Corporation.

Acknowledgments

We would like to thank the children and families for taking part in this study. This research was supported by Award Number K08MH081998 (PI: Scott Hall) from the National Institute of Mental Health and by a clinical grant from the National Fragile X Foundation (PI: Scott Hall).

Cite this article

Hall, S.S., Hustyi, K.M., Hammond, J.L. et al. Using Discrete Trial Training to Identify Specific Learning Impairments in Boys with Fragile X Syndrome. J Autism Dev Disord 44, 1659–1670 (2014). https://doi.org/10.1007/s10803-014-2037-6
