Abstract

This paper proposes a divide and conquer skeleton which aids parallel system programmers by (1) reducing programming complexity, (2) shortening programming time, and (3) enhancing code efficiency. To do this, the proposed skeleton exploits three mechanisms: (1) work-stealing, (2) communication/computation overlapping, and (3) architectural awareness. Using the work-stealing mechanism, when a processing core reaches a low-load condition, it fetches a new job from the waiting queue of another core. The second mechanism uses dedicated threads to enable the proposed skeleton to overlap computations with communications. The third mechanism considers the architectural parameters of the host system (e.g., size of L1 cache, network bandwidth, network latency) to best match a divide and conquer problem with the proposed skeleton. To evaluate the proposed skeleton, three benchmarks (merge sort, fast Fourier transform, and standard matrix multiplication) are developed using the proposed skeleton as well as custom programming. Experiments are done in both simulation and real implementation environments. The set of six codes is simulated using the COTSon simulator and also implemented on a real system of 28 dual-core nodes. Results obtained from simulations show an average speed-up of 12.6% for the proposed skeleton compared to custom programming; the speed-up obtained in the real environment is 9.6%. Furthermore, programming aided by the proposed skeleton is at least 70% faster than custom programming, and this difference increases as the program volume increases.


1 Introduction

Clusters of multi-core computers have recently become widespread due to their high processing power and high availability [1, 2]. Such systems provide the fast computation demanded in several fields, e.g., engineering [3], data mining [1], and data centers [1]. However, combining the features of multi-core and cluster-based architectures results in high programming complexity [4,5,6]. This complexity arises from two challenges. The first challenge is the difference in memory models: multi-core systems have a shared memory, whereas in cluster-based systems each processing element has its own local memory [1, 7]. Considering the features of both memory models at the same time dramatically increases the programming complexity. The second challenge is that a parallel programmer has to handle several control tasks, e.g., creation and control of threads, load balancing, control of shared variables, task scheduling, and data distribution over the network [8].

For these reasons, custom programming in multi-core cluster-based systems is time consuming [9], complicated, and prone to errors [10]. In addition, a custom program written for a specific architecture is not portable to machines with different architectures [11]. Finally, when a custom program is written for a multi-core cluster-based system, the overall performance of the system depends heavily on the experience and skill of the programmer [11].

To tackle these drawbacks, skeleton-based programming has been proposed [3, 11] to decrease the programming complexity in multi-core cluster-based systems. An algorithmic skeleton is a predefined programming structure created once by the skeleton designer; the skeleton can then be reused without limit by parallel programmers [12]. Because the skeleton has a fixed structure, the programmer must develop his/her code according to that structure. In this way, a programmer needs less effort to handle the relationships between program components, since the skeleton guarantees correct relations between program components in a parallel system. Every skeleton has several classes, called muscles, which handle the correct functionality of the skeleton [3].

Several skeletons such as Muesli [13], Muskel [4], Fork/Join, and Skandium [3, 14] have been proposed in the literature for different classes of algorithms. The Muesli skeleton, proposed in [13], offers muscles which send and receive data over the network, i.e., the skeleton lets the programmer accomplish data communication in the network using these muscles. In this way, the Muesli skeleton can be used for a wide range of network topologies. The Muskel skeleton [4] supports networks of processing elements in which each processing element has a single core, multiple cores, or many cores. This skeleton provides a specific muscle which gathers user preferences and runs the program based on these preferences. It does not support the class of divide and conquer algorithms [15]. The Fork/Join skeleton [16] has been proposed for the recursive programming model. Similar to a divide and conquer skeleton, the Fork/Join skeleton generates sub-problems and solves them; finally, by aggregating all results, the primary problem is solved [15]. The Fork/Join skeleton cannot simply support multi-core architectures [16]. The Skandium skeleton, which is proposed for shared-memory parallel systems, has four muscles: split, condition, merge, and execution. In Skandium, a global pool of tasks is used, and all threads take their tasks from this shared pool.

Other skeletons such as SkeTo [17] and FastFlow [18] have also been proposed, which are potentially efficient for specific classes of applications. FastFlow is a set of custom programming mechanisms that support data streams with low latency and high bandwidth in multi-core processors [18]. This skeleton offers programmers two mechanisms: communication channels and a memory allocator. The communication channels, which are half-duplex and asymmetric data streams, are implemented with no memory queues. This skeleton is therefore advantageous for particular workloads such as digital signal processing, which carries out a sequence of conversions on input streams.

SkeTo is a constructive parallel skeleton library intended for distributed environments such as PC clusters. SkeTo provides data parallel skeletons for processing list, matrix, and tree structures. SkeTo enables users to write parallel programs as if they were sequential, since the distribution, gathering, and parallel computation of data are concealed within constructors of data types or definitions of parallel skeletons.

Although several skeletons have been proposed in the literature, to the best of our knowledge, no skeleton exactly matches the features of multi-core cluster-based parallel systems. In this paper, we propose a divide and conquer skeleton for clusters of multi-core computers. The proposed skeleton simplifies programming and improves the overall performance of the parallel system in comparison to the custom programming method.

The rest of the paper is organized as follows. In Sect. 2, the proposed skeleton and its innovations are described. In Sect. 3, the simulation environment and the obtained results are presented. Sect. 4 evaluates the proposed skeleton in a real environment. Finally, Sect. 5 presents the conclusions.

2 Proposed Skeleton

In the divide-and-conquer-based approach [19], a problem is considered as a task with some input and output objects. To solve the problem and generate the output objects, the task may generate some child tasks based on a division factor. Each child task is fed a subset of the input objects and generates a subset of the output objects. Similarly, each child task may itself generate new children according to its input and the division factor. A task may also generate output objects by running a serial algorithm on its input objects. Every task waits for its own children to complete and then aggregates their results to produce its output.

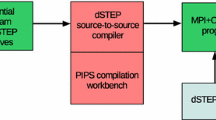

To maximize the task parallelism of the proposed skeleton, a task queue is assigned to each processing core. Each core selects tasks from its local queue in a FIFO manner and executes them. To compensate for uneven load among the queues of a cluster, the proposed skeleton uses work-stealing among the queues [20, 21]. Figure 1 presents the block diagram of the proposed skeleton. As shown, this skeleton contains four dual-core nodes, each having its own dedicated task queue. Tasks are executed from the top of the queue, and work-stealing is done from the bottom of the queue.
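The queue discipline described above can be sketched in a few lines of Python. This is a minimal single-threaded illustration, not the skeleton's implementation: the names `CoreQueue` and `next_task` are hypothetical, new tasks are assumed to enter at the bottom so that taking from the top gives FIFO order, and the victim core is assumed to be picked at random.

```python
import random
from collections import deque


class CoreQueue:
    """Task queue owned by one core (hypothetical helper for illustration)."""

    def __init__(self):
        self._tasks = deque()  # left end = top, right end = bottom

    def push(self, task):
        # Assumption: new tasks enter at the bottom, so the top holds the oldest task.
        self._tasks.append(task)

    def pop_top(self):
        # The owning core executes from the top (FIFO order).
        return self._tasks.popleft() if self._tasks else None

    def steal_bottom(self):
        # Other cores steal from the bottom.
        return self._tasks.pop() if self._tasks else None

    def __len__(self):
        return len(self._tasks)


def next_task(core_id, queues, rng):
    """Take a local task if possible, otherwise try to steal from other cores."""
    task = queues[core_id].pop_top()
    if task is not None:
        return task
    victims = [i for i in range(len(queues)) if i != core_id]
    rng.shuffle(victims)  # assumption: victims are tried in random order
    for victim in victims:
        task = queues[victim].steal_bottom()
        if task is not None:
            return task
    return None  # every queue is empty


queues = [CoreQueue() for _ in range(4)]
for name in ("t0", "t1", "t2"):
    queues[0].push(name)

rng = random.Random(0)
local = next_task(0, queues, rng)   # core 0 runs the task at the top of its own queue
stolen = next_task(3, queues, rng)  # core 3 is idle and steals from the bottom of core 0
```

Because the owner and the thief work on opposite ends of the queue, they rarely compete for the same task; a concurrent version would additionally need a lock or a lock-free deque.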

Using the proposed skeleton, the execution of a task begins at one node in the network and continues by generating tasks and expanding them to the other nodes. Finally, the execution ends with gathering the results at the start node. In the following text, the structural design of the proposed skeleton is discussed in detail.

2.1 Muscles of the Proposed Skeleton

As mentioned, a programming skeleton is a predefined structure which helps the programmer to easily develop his/her own code. In each programming skeleton, specific classes named muscles are responsible for task execution, task management, task generation, and so on. Although all muscles of the proposed skeleton must exist in any instance of the skeleton, some of them must be developed by the user. In the proposed divide-and-conquer skeleton, task generation and task execution are done by the muscles of the skeleton; the user is only required to implement the functions of the division, execution, and aggregation muscles. In the following text, the muscles of the proposed skeleton which must be developed by the user are described in detail. Code-level development of the muscles for a sample parallel code, i.e., the parallel merge sort algorithm, is introduced in Sect. 3.3.

1-Split Muscle: splits the input data set into K parts, which are then fed into newly generated child tasks.

2-Execute Muscle: when called, runs the serial algorithm on the input data set and returns the corresponding output data set.

3-Merge Muscle: aggregates K homogeneous outputs to produce one output object.

4-netCondition Muscle: checks whether the input data set should be divided among several processing nodes of the cluster. To do this, the muscle weighs the cost of the task/data split against the performance gain of the split. The muscle returns a Boolean value that allows or disallows the task/data split.

5-mcCondition Muscle: checks whether the input data set should be divided among several cores of the current processing node.

6-Input_Pack Muscle: compresses the input data set before it is sent over the network. This reduces network traffic as well as network latency.

7-Input_Unpack Muscle: rebuilds the required data structures from the received compressed input data.

8-Output_Pack Muscle: compresses the outputs of the children of a task, once all its children have finished and the task is ready for aggregation, before they are sent to another node.

9-Output_Unpack Muscle: rebuilds, in the destination node, the required data structures from the compressed results of a task's children.

10-Input_Weight Muscle: determines the volume of the input data set. Because the input data set can be very large, the skeleton cannot calculate the data volume by itself; this muscle provides that function to the skeleton.

11-Output_Weight Muscle: calculates the size of the output data set.
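To make the division of labour between user and skeleton concrete, the following sketch renders a subset of the muscles as a Python interface and fills it in for merge sort. It is only an illustration under stated assumptions: the class and method names are hypothetical, the packing and network-related muscles are omitted, the threshold in `mc_condition` is an arbitrary stand-in, and the small `run` driver replaces the real task scheduler with plain recursion.

```python
from abc import ABC, abstractmethod
from heapq import merge as merge_sorted


class DivideAndConquerMuscles(ABC):
    """User-supplied muscles (hypothetical Python rendering, subset only)."""

    @abstractmethod
    def split(self, data):
        """Split the input into K parts."""

    @abstractmethod
    def execute(self, data):
        """Run the serial algorithm on the input."""

    @abstractmethod
    def merge(self, parts):
        """Aggregate K child outputs into one output."""

    @abstractmethod
    def mc_condition(self, data):
        """Return True if the input should be divided further."""

    @abstractmethod
    def input_weight(self, data):
        """Return the volume of the input."""


class MergeSortMuscles(DivideAndConquerMuscles):
    def __init__(self, serial_threshold=4):
        # Assumption: below this many items the serial algorithm is used.
        self.serial_threshold = serial_threshold

    def split(self, data):
        mid = len(data) // 2          # division factor K = 2
        return [data[:mid], data[mid:]]

    def execute(self, data):
        return sorted(data)           # serial sort of a small part

    def merge(self, parts):
        return list(merge_sorted(*parts))  # merge already-sorted child outputs

    def mc_condition(self, data):
        return len(data) > self.serial_threshold

    def input_weight(self, data):
        return len(data)


def run(muscles, data):
    """Recursive stand-in for the skeleton's task generation and execution."""
    if muscles.mc_condition(data):
        return muscles.merge([run(muscles, part) for part in muscles.split(data)])
    return muscles.execute(data)


result = run(MergeSortMuscles(), [9, 3, 7, 1, 8, 2, 6, 4, 5, 0])
```

The user code contains no thread, queue, or communication logic; those concerns stay inside the driver, which is the point of the muscle interface.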

2.2 Skeleton Initialization

At the beginning of algorithm execution, the input data set, which is considered as an array \(A = \{A_1, \ldots, A_{N-1}, A_N\}\), is located in one of the network nodes. In each step of execution, according to the dividing factor, the input data are divided and distributed among some nodes of the network to accomplish parallel execution of the tasks at the cluster level. The dividing factor determines how the input data set can be divided, i.e., into how many subsets. This factor is algorithm dependent; for example, in the merge sort algorithm the dividing factor is 2, so in each step the input data is divided into two parts. This means that the input set A is divided into two sub-arrays \(\{A_1, A_2, \ldots, A_{\frac{N}{2}}\}\) and \(\{A_{\frac{N}{2}+1}, A_{\frac{N}{2}+2}, \ldots, A_N\}\).

As mentioned, the proposed skeleton is applicable for clusters of processing nodes where each node has some cores. In order to maximize the parallel computation of a task, both cluster and multi-core levels are taken into account. To this end, in the design of the proposed skeleton, two levels for load distribution are considered: the cluster level and the multi-core level.

The skeleton checks the following condition to continue dividing input data, before the execution of the algorithm.

where d is the number of times that the input data have already been divided so far, K is the division factor, and m represents the number of nodes in the cluster. At the beginning of the process, the node 0, from which the execution of the program starts, divides the input data by K if the net-condition is met and if Eq. (1) holds true. Then, it keeps the first part of the divided data for itself and assigns the remaining (\(K-1\)) parts to other nodes of the network. The destination for each part of the divided data is determined based on MPI rank using the following formula:

where r is the MPI rank of the current node, \(i \in \{0, 1, 2, \ldots, K-1\}\) is the index of the generated data part, K is the division factor in the user algorithm, m is the number of nodes in the cluster, and d is the data division depth.

After receiving the input data, each node divides the data and assigns a task to it if net-condition and Eq. (1) are met. The data divided along with its assigned task are sent to a destination determined by Eq. (2). However, if one or both of the conditions do not hold true, the corresponding task or tasks are inserted in the task queues of the processing cores.

2.3 Multi-Core Level Load Balancing

The proposed skeleton adds tasks to the task queue of every processing core so that the cores can run them. Allocating a separate queue to each processing core significantly reduces contention among the cores when accessing the queue [22]. Every core places the tasks it generates into its own dedicated queue. This improves locality, since the generated tasks most probably need their parents' data. However, if the task queue of a core is empty, the skeleton randomly selects a neighbor core and steals the top task of that core's queue. If the selected core also has an empty task queue, another random core is examined.

Despite having a similar structure, the tasks have different input data and state variables with different values. From the network point of view, some tasks cannot be transmitted because of the overhead they would impose. We therefore classify tasks in the skeleton into network tasks and multi-core tasks. Network and multi-core tasks are executed in the same way in terms of execution scheduling. However, only network tasks can be sent to other cluster nodes to provide load balancing over the network nodes. In every step of the task division process, the output of the net-condition muscle is used to determine whether the child task is of network type or of multi-core type. The user should design the net-condition muscle considering (1) a set of architectural parameters, including the average network delay, data communication bandwidth, size of the L1 cache, and number of cores and nodes in the system, and (2) a set of algorithmic parameters, including K, the division factor in the user algorithm, and the input data length. The architectural parameters are fed into the proposed skeleton via a configuration file which is read by the muscles of the proposed skeleton. The programmer needs to know these parameters to develop the netCondition and mcCondition muscles of the proposed skeleton.

The processing core checks the state of the task after selecting it from its queue. If the task is in the division state, the mc-condition is checked for it. If the mc-condition output is true, child tasks are generated by invoking the split muscle. If, on the other hand, the output of the mc-condition is false, the serial algorithm is performed on the input data by the Execute muscle.

According to [20], the best size of the data set on which to perform the serial algorithm is the size of the level-one cache of the cores. In the best case, the division should be continued until the data size becomes equal to the level-one cache size. In this situation, all data required by the serial algorithm is available in the local cache memory. So, in designing the mc-condition muscle, the size of the data and the size of the level-one cache of the processing cores should be considered. If the selected task is in the aggregation state, the Merge muscle is used to aggregate the children's results and create the output of the task. To make the lifecycle of the tasks simpler to control, once a task generates its child tasks by dividing the input data, it is moved out of the execution queue until the results of all its child tasks are obtained. When all child tasks have been executed, the last child puts its parent back into the queue in the aggregation state. To realize this, all the children of a task have a pointer to their parent, and the parent task holds a counter of the child tasks which have not been executed yet. In addition, if a child task is sent to another node of the cluster for execution, after completion its result must be sent back to the original node containing the parent.
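The task lifecycle described above (division state, aggregation state, parent pointer, and pending-children counter) can be sketched as follows. This is a simplified single-queue, single-threaded illustration; the `Task` class, the `run_tasks` function, and the way the muscles are passed in as plain functions are assumptions made for the example.

```python
from collections import deque
from heapq import merge as merge_sorted


class Task:
    """Hypothetical task record with a parent pointer and a pending-children counter."""

    def __init__(self, data, parent=None, index=0):
        self.data = data
        self.parent = parent     # pointer to the parent task
        self.index = index       # position of this child among its siblings
        self.state = "division"
        self.pending = 0         # number of children not yet finished
        self.results = []
        self.output = None


def run_tasks(root, mc_condition, split, execute, merge):
    queue = deque([root])
    while queue:
        task = queue.popleft()
        if task.state == "division" and mc_condition(task.data):
            # Generate children; the parent stays out of the queue until they finish.
            children = [Task(part, parent=task, index=i)
                        for i, part in enumerate(split(task.data))]
            task.pending = len(children)
            task.results = [None] * len(children)
            queue.extend(children)
            continue
        if task.state == "division":
            task.output = execute(task.data)   # small enough: run the serial algorithm
        else:
            task.output = merge(task.results)  # aggregation state: combine child results
        parent = task.parent
        if parent is not None:
            parent.results[task.index] = task.output
            parent.pending -= 1
            if parent.pending == 0:
                # The last finishing child re-enqueues its parent for aggregation.
                parent.state = "aggregation"
                queue.append(parent)
    return root.output


output = run_tasks(
    Task([5, 2, 9, 1, 7, 3]),
    mc_condition=lambda d: len(d) > 2,   # stand-in for "larger than the L1 cache"
    split=lambda d: [d[:len(d) // 2], d[len(d) // 2:]],
    execute=sorted,
    merge=lambda parts: list(merge_sorted(*parts)),
)
```

In a multi-threaded version the counter decrement and the re-enqueue step would have to be atomic, since several children may finish at the same time on different cores.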

2.4 Cluster Level Load Balancing

No matter how fair and symmetric the initial load distribution is, some imbalances are unavoidable at run time [7]. At the core level, work-stealing offers load balancing at a very low cost. However, reaching a balanced load at the cluster level requires a more complex solution in which, as with work-stealing at the core level, a job can be taken from another node. This cannot be done directly because the memories of the cluster nodes are independent. Any solution to this problem must therefore provide access to the tasks of other nodes. Based on this and on the network platform, a client-server model is proposed for load balancing at the cluster level. That is, a node that needs a job requests one from nodes with extra jobs and waits to receive it. In the proposed skeleton, when a node is idle, it sends a job request to a subset of its adjacent nodes. If no job is received from the adjacent nodes, the adjacency list is expanded to more nodes. Once a job is received, the process of sending job requests to other nodes is stopped. If the node fails to receive a job from others, it delays further job requests by a specific time, so that the network does not become saturated by repeated requests. Equation (3) defines the adjacency list for a node.

where m denotes the number of nodes and b is a random number in the interval \(\left[0, \frac{m}{4}\right]\). Initially \(a = 0\); it is incremented by one each time no job is sent back in response to a job request, up to at most \(\lceil \frac{m}{4} \rceil\), i.e., \(a = 1, 2, \ldots, \lceil \frac{m}{4} \rceil\).

When a node receives a job request from another node of the cluster, it calculates the job-sending probability using Eq. (4) and answers the request positively with that probability.

where \(w\) is the total weight of the tasks of the node, \(highThreshold\) is the efficiency threshold, and \(lowThreshold\) is the inefficiency threshold. If \(w\) exceeds the efficiency threshold, a job is sent to the requesting node, and if the total weight is less than the inefficiency threshold, a negative response is given to the job request. To implement the work-stealing operation at the node level, the queue of each core is defined as a priority stack. As soon as a new task arrives at the queue, its position in the queue is determined based on its weight, as specified by the Input-Weight and Output-Weight muscles. More specifically, the lighter tasks have priority for multi-core execution, while the heavier tasks are set aside for work-stealing and distribution in the cluster. The handling of job requests from other nodes and of the responses to them is defined separately from the algorithm execution; the only point where the two meet is the task queue.
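The two boundary cases stated above (always send above the efficiency threshold, always refuse below the inefficiency threshold) can be illustrated with a short sketch. The behaviour between the two thresholds is an assumption of this example: a linear ramp is used purely for illustration and should not be read as the form of Eq. (4). The function names are hypothetical.

```python
import random


def job_sending_probability(w, low_threshold, high_threshold):
    """Probability of answering a job request positively.

    Assumption: linear interpolation between the thresholds; only the two
    boundary behaviours are taken from the text.
    """
    if w >= high_threshold:
        return 1.0   # node is loaded enough: always send a job
    if w <= low_threshold:
        return 0.0   # node is nearly idle itself: always refuse
    return (w - low_threshold) / (high_threshold - low_threshold)


def respond_to_request(w, low_threshold, high_threshold, rng):
    """Return True if a job should be sent to the requesting node."""
    return rng.random() < job_sending_probability(w, low_threshold, high_threshold)


rng = random.Random(42)
busy_node_sends = respond_to_request(120, 20, 100, rng)   # total weight above the high threshold
idle_node_sends = respond_to_request(10, 20, 100, rng)    # total weight below the low threshold
```

A probabilistic answer in the middle range keeps moderately loaded nodes from being drained by many simultaneous requests while still letting some work flow to idle nodes.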

2.5 Communication/Execution Overlapping

In order to maximize the performance of the proposed skeleton, specific threads are embedded for asynchronous communications while other parts of the skeleton are dealing with task execution. Additional threads are considered for executing the algorithm, monitoring the queue, and responding to the messages from other nodes in the cluster. The threads used in the proposed skeleton are as follows.

- Starter thread: this thread is responsible for the execution of the algorithm and continues its job until the end of execution. One such thread is generated per core of the system when the skeleton is activated.

- Threads responsible for low-load monitoring: these threads monitor the total weight of the queues of a node after the initial load distribution in the cluster. If the load level of the node is found to be below the low-load threshold, as described in Sect. 2.4, the thread requests a job from other nodes of the cluster in accordance with Eq. (3).

- Threads dedicated to handling messages exchanged with other nodes, including sending job requests, sending negative responses to job requests, declaring the start and end of the execution, and sending the execution results of children.

- Temporary threads for sending results to the parent: when the execution of a task is finished, its results must be given back to its parent, even if the parent resides on another node in the cluster. Therefore, a temporary thread is created for sending the results. The execution of this thread ends as soon as the results are sent.

Since the execution of the threads is scheduled by the operating system as if they ran simultaneously, the time needed for communications overlaps with the time needed for computations. However, because of the time needed for data compression and reconstruction at the source and destination nodes, the amount of this overlap is limited [23].
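The role of the temporary sender thread can be sketched with Python's standard `threading` module. This is only a structural illustration: `send_result` and `finish_task` are hypothetical names, the network transfer is simulated with `time.sleep`, and a shared list stands in for the destination node.

```python
import threading
import time

delivered = []  # stand-in for the parent node's receive buffer


def send_result(result, destination, delay=0.05):
    """Simulated packing and network transfer of a child's result."""
    time.sleep(delay)                       # placeholder for the actual send
    delivered.append((destination, result))


def finish_task(result, parent_node):
    """Create a temporary thread that sends the result, then return immediately."""
    sender = threading.Thread(target=send_result, args=(result, parent_node))
    sender.start()
    return sender


sender = finish_task([1, 2, 3], parent_node=0)

# The computing thread continues with the next task while the send is in flight.
local_sum = sum(i * i for i in range(10000))

sender.join()  # the temporary thread ends as soon as the result has been sent
```

The overlap comes from the sender spending most of its time waiting on the transfer; any packing work done before the send still competes with computation for the core, which is consistent with the limit on overlap noted above.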

3 Simulation of the Proposed Skeleton

Since the proposed divide and conquer skeleton best matches problems of a divide and conquer nature, its performance is evaluated using standard divide and conquer benchmarks. Evaluations are done for the matrix multiplication [24], Fast Fourier Transform (FFT), and merge sort [4, 25] algorithms. Tests are repeated for the three benchmarks developed (1) using the proposed skeleton and (2) by custom programming. In the custom implementation, the programmer uses Pthreads to create multi-threaded processing along with MPI to establish communication among the computers in a network. In order to show details of the proposed skeleton, the codes of the muscles of the merge sort benchmark are described in Sect. 3.3.

3.1 Standard Matrix Multiplication Benchmark

In this benchmark, the primary node divides the input matrix into eight parts, keeping the first part and sending the remaining seven to seven adjacent nodes. After performing the relevant calculations on its respective matrix, each of the seven nodes returns its output data to the node from which the input was sent, so that all data is collected.
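One common way to obtain eight independent parts from a matrix product is the 2 × 2 block decomposition, in which the result is built from eight sub-products. The sketch below assumes that this is the kind of split meant here; the function names are hypothetical, and square matrices with an even dimension are assumed.

```python
def matmul(a, b):
    """Serial (execute) step: plain triple-loop product of two matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def quadrants(mat):
    """Return the four blocks (11, 12, 21, 22) of an even-sized square matrix."""
    h = len(mat) // 2
    return ([row[:h] for row in mat[:h]], [row[h:] for row in mat[:h]],
            [row[:h] for row in mat[h:]], [row[h:] for row in mat[h:]])


def split(a, b):
    """Split step: eight independent sub-products of half-size blocks."""
    a11, a12, a21, a22 = quadrants(a)
    b11, b12, b21, b22 = quadrants(b)
    return [(a11, b11), (a12, b21), (a11, b12), (a12, b22),
            (a21, b11), (a22, b21), (a21, b12), (a22, b22)]


def add(x, y):
    return [[p + q for p, q in zip(r, s)] for r, s in zip(x, y)]


def merge(products):
    """Merge step: add the sub-products pairwise and reassemble the result."""
    c11 = add(products[0], products[1])
    c12 = add(products[2], products[3])
    c21 = add(products[4], products[5])
    c22 = add(products[6], products[7])
    top = [left + right for left, right in zip(c11, c12)]
    bottom = [left + right for left, right in zip(c21, c22)]
    return top + bottom


a = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
b = [[1, 0, 2, 0], [0, 1, 0, 2], [3, 0, 1, 0], [0, 3, 0, 1]]
blockwise = merge([matmul(x, y) for x, y in split(a, b)])
```

Each of the eight pairs produced by `split` could be handed to a different node, with `merge` run at the node that holds the parent task.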

3.2 Fast Fourier Transform Benchmark

In the Fast Fourier Transform benchmark, two arrays are obtained from the initial array. Each of these arrays can be processed in parallel as a separate task. This split is continued until arrays with a single element are obtained. In collecting the data, the two lists of the children are merged in the parent task by performing one subtraction operation and two adding operations.
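The split and merge steps of such an FFT can be sketched with the standard radix-2 decimation-in-time scheme. The text does not name the variant used in the benchmark, so this choice is an assumption; the function names are hypothetical, and the input length is assumed to be a power of two.

```python
import cmath


def fft_split(x):
    """Split step: even-indexed and odd-indexed samples form the two child arrays."""
    return [x[0::2], x[1::2]]


def fft_merge(even, odd):
    """Merge step: combine the two child spectra with one butterfly per output pair."""
    n = 2 * len(even)
    out = [0j] * n
    for k in range(len(even)):
        twiddled = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + twiddled
        out[k + n // 2] = even[k] - twiddled
    return out


def fft(x):
    """Recursive driver: split until one element remains, then merge upwards."""
    if len(x) == 1:
        return [complex(x[0])]
    even, odd = fft_split(x)
    return fft_merge(fft(even), fft(odd))


spectrum = fft([1, 2, 3, 4])
```

The two recursive calls are independent of each other, which is what allows each child array to be processed as a separate task.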

3.3 Merge Sort Benchmark

In the merge sort benchmark, the input data of each stage is divided into two parts. Following the nature of the merge sort algorithm, each part is in turn divided into two parts again. This process continues until only one element remains in every sub-array. Then, the sorted array is returned to the parent node for collection. The parent node merges the two sorted arrays into one array. The codes of the merge, execute, netcondition, and mccondition muscles are shown in Fig. 2.

3.4 Simulation Environment

A commercial simulator named COTSon [22] is used in our evaluations. The COTSon simulator, developed by HP and AMD, is designed to develop codes for multi-core processors. COTSon contains a virtual network which can simulate the processing, sending, and receiving of data in a given network.

In the performed simulation, each cluster includes six nodes each having a four-core processor. Each node in the network utilizes a 256-MB main memory with a CPU frequency of 800 MHz. Since all the available memory in the host system is 4 GB, memory allocated to each core is 256 MB which is the same as other work [22, 26].

3.5 Simulation Results

The effects of the custom programming and the proposed skeleton implementations on the system speed-up are shown in Figs. 3, 4 and 5. The vertical axis represents the algorithm speed-up and the horizontal axis displays the number of nodes. As can be seen, in all simulated benchmarks the proposed skeleton has a greater effect than custom programming when implemented with 4 cores. When the costs are balanced against the increase in processing speed, the use of 4 nodes seems to be the most efficient choice.

In the simulation related to merge sort, it can be observed in the figure that, for four nodes, the time of custom programming is very close to that of the skeleton. This is due to the high split factor in the matrix multiplication algorithm, so the synchronization cost rises drastically as the number of nodes increases.

The efficiency of the benchmarks in both the custom programming and the skeleton-based implementations is shown in Figs. 6, 7 and 8. In these figures, the vertical axis represents the efficiency, which is defined as \(\frac{\text{speed-up}}{\text{number of processors}}\) for the studied algorithms. In the FFT benchmark, an array of two million imaginary numbers is used as the input. In the merge sort benchmark, an input of 64 million one-byte numbers is used, and in standard matrix multiplication, arrays of 2048 × 2048 one-byte elements are used as input.
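The efficiency metric defined above is simple to compute once the serial and parallel run times are known. The sketch below uses the usual definition of speed-up as serial time divided by parallel time; the function names are hypothetical and the timings are made-up numbers for illustration only, not measurements from the experiments.

```python
def speed_up(serial_time, parallel_time):
    """Speed-up: how many times faster the parallel run is than the serial run."""
    return serial_time / parallel_time


def efficiency(serial_time, parallel_time, processors):
    """Efficiency: speed-up divided by the number of processors used."""
    return speed_up(serial_time, parallel_time) / processors


# Illustrative numbers only: a 40 s serial run that takes 12.5 s on 4 processors.
example_speed_up = speed_up(40.0, 12.5)
example_efficiency = efficiency(40.0, 12.5, 4)
```

An efficiency of 1 would mean perfectly linear scaling; values below 1 reflect communication, synchronization, and load-imbalance overheads.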

As can be seen in Figs. 6, 7 and 8, the implementation of the algorithms with the proposed skeleton shows better performance than custom programming. This is because the work-stealing technique cannot be used in the custom programming implementation.

4 Evaluation of the Proposed Skeleton on a Real Environment

In this section, the benchmarks used in the simulation experiments (see Sects. 3.1, 3.2, 3.3) are evaluated on a real clustered parallel system with 28 nodes of dual-core processors. Each node has a 2.8 GHz AMD dual-core processor (Athlon X2 model) with 64 KB of L1 cache. Using VMWare, a 64-bit Ubuntu 12.04 operating system is placed on each of the nodes. The network has a star topology with 100 Mbps bandwidth for the network channels. To increase the accuracy of the results, each run has been repeated 5 times and the reported results are the average of these 5 repetitions.

Figure 9 shows the speed-up of the algorithms in custom programming and in the skeleton. The horizontal axis represents the number of nodes and the vertical axis shows the gained speed-up. The FFT, merge sort, and standard matrix multiplication benchmarks use an input array of 16 million imaginary numbers, an input array of 512 million one-byte numbers, and two input arrays of 8192 × 8192 one-byte elements, respectively.

As can be seen in Fig. 10, the efficiency of the skeleton is better than that of custom programming in almost all conditions. Presumably, the size of the efficiency improvement depends on the number of nodes, since for a well-chosen number of nodes the initial distribution is already done appropriately in custom programming. It is nevertheless expected that, as the number of nodes increases, load balancing with the work-stealing mechanism plays a larger role in improving efficiency. In the FFT benchmark, the speed-up graph shows that at 28 nodes the speed-up of the skeleton is about one unit higher than that of custom programming. This is because the number of system nodes, 28, does not relate well to the dividing factor of the FFT algorithm. In addition, the difference in speed-up may be related to scalability being lower than that of custom programming.

Figure 10 shows the efficiencies of the custom programming implementation and of the skeleton for the studied algorithms. In this figure, the horizontal axis represents the number of nodes and the vertical axis shows the achieved efficiency, which is defined in Sect. 3.5. As shown in Fig. 10, in most conditions both methods have the same efficiency. However, the proposed skeleton offers better efficiency when four nodes are used in the parallel system. It can also be seen that, in the balance between hardware and speed-up, using four nodes is more cost-effective, because as the number of nodes used by the skeleton increases, the speed-up at 28 nodes is only close to five. In the merge sort benchmark, the proposed skeleton is always better than custom programming in terms of efficiency. Although the efficiency of the skeleton would be expected to be higher than that of custom programming at 28 nodes, the load balancing methods may have a negative impact on the efficiency of the skeleton because of the large increase in communication overhead. In the standard matrix multiplication benchmark, two input arrays of 8192 × 8192 one-byte numbers are used, and the skeleton shows a large speed-up. The real clustered system also shows a large speed-up, which is better than that of the simulation. This is likely because simultaneous requests to hardware devices such as the network adaptor and memory are served simultaneously in the real clustered system, whereas in the simulation environment such requests are answered one by one. In addition, in the real clustered system, accesses to memory and to the network adaptors are closer to random access, i.e., the probability that requests from two nodes coincide and occupy the network bandwidth at the same time is lower in the real cluster system.

5 Conclusions

In this paper, a divide and conquer skeleton was proposed which makes parallel programming easier and faster by hiding from the programmer the complexities of controlling and synchronizing parallel values. The proposed skeleton is designed for the implementation of divide and conquer algorithms on clusters of multi-core processors. The results of the simulations and of the implementation in a real environment showed better performance for the proposed skeleton in comparison to custom programming. The work-stealing technique is hidden in the body of the skeleton and therefore does not increase the complexity of the programmer's algorithm. In addition, compliance with the architecture is a major advantage of the skeleton over other programming methods.

References

Rauber, T., Rünger, G.: Parallel Programming: For Multicore and Cluster Systems. Springer, Berlin (2010)

Bader, D.A., Pennington, R.: Cluster computing: applications. Int. J. High Perform. Comput. 15(2), 181–185 (2001)

Leyton, M., Piquer, J. M.: Skandium: multi-core programming with algorithmic skeletons. In: Proceedings of Euromicro Conference Distributed and Network-Based Processing, pp. 289–296 (2010)

Aldinucci, M., Danelutto, M., Kilpatrick, P.: Skeletons for multi/many-core systems. In: Proceedings of International Conference on Parallel Computing (PARCO), pp. 265–272 (2009)

Karasawa, Y., Iwasaki, H.: A parallel skeleton library for multi-core clusters. In: Proceedings of International Conference on Parallel Processing (ICPP’09), pp. 84–91 (2009)

Nitzberg, B., Lo, V.: Distributed shared memory: a survey of issues and algorithms. Computer 24(8), 52–60 (1991)

Linderman, M.D., Collins, J.D., Wang, H., Meng, T.H.: Merge: a programming model for heterogeneous multi-core systems. ACM SIGOPS Oper. Syst. Rev. 42(2), 287–296 (2008)

Kwiatkowski, J., Pawlik, M., Konieczny, D.: Parallel program execution anomalies. In: Proceedings of 2nd Workshop on Large Scale Computations on Grids (LaSCoG) (2006)

Danelutto, M.: Efficient support for skeletons on workstation clusters. Parallel Process. Lett. 11(1), 41–56 (2001)

Falcou, J., Sérot, J., Chateau, T., Lapresté, J.T.: Quaff: efficient C++ design for parallel skeletons. Parallel Comput. 32(7), 604–615 (2006)

Cole, M.I.: Algorithmic Skeletons: Structured Management of Parallel Computation. Pitman, London (1989)

Enmyren, J., Kessler, C. W.: SkePU: a multi-backend skeleton programming library for multi-GPU systems. In: Proceedings of the Fourth International Workshop on High-Level Parallel Programming and Applications, pp. 5–14 (2010)

Müller-Funk, U., Thonemann, U.W., Vossen, G.: The Münster skeleton library muesli—a comprehensive overview. In: European Research Center for Information Systems, Münster, Report 7 (2009)

Leyton, M.: Skandium parallel patterns library. http://skandium.niclabs.cl/. Accessed June 2013

González-Vélez, H., Leyton, M.: A survey of algorithmic skeleton frameworks: high-level structured parallel programming enablers. Softw. Pract. Exp. 40(12), 1135–1160 (2010)

Lea, D.: A Java fork/join framework. In: Proceedings of the ACM 2000 Conference on Java Grande, pp. 36–43 (2000)

Tanno, H., Iwasaki, H.: Parallel skeletons for variable-length lists in SkeTo skeleton library. In: 15th International Euro-Par Conference on Parallel Processing, pp. 666–677 (2009)

Aldinucci, M., Danelutto, M., Kilpatrick, P., Meneghin, M., Torquati, M.: Accelerating code on multi-cores with FastFlow. In: 17th International Conference on Parallel Processing, pp. 170–181. Springer-Verlag, Berlin, Heidelberg (2011)

Blelloch, G.E., Chowdhury, R.A., Gibbons, P.B., Ramachandran, V., Chen, S., Kozuch, M.: Provably good multicore cache performance for divide-and-conquer algorithms. In: Proceedings of the Nineteenth ACM-SIAM Symposium on Discrete Algorithms, pp. 501–510 (2008)

Leiserson, C.E.: The Cilk++ concurrency platform. J. Supercomput. 51(3), 244–257 (2010)

Ravichandran, K., Lee, S., Pande, S.: Work stealing for multi-core HPC clusters. In: Proceedings of Euro-Par 2011 Parallel Processing, pp. 205–217. Springer Berlin Heidelberg (2011)

Argollo, E., Falcón, A., Faraboschi, P., Monchiero, M., Ortega, D.: COTSon: infrastructure for full system simulation. ACM SIGOPS Oper. Syst. Rev. 43(1), 52–61 (2009)

Quinn, M.J., Hatcher, P.J.: On the utility of communication computation overlap in data-parallel programs. J. Parallel Distrib. Comput. 33(2), 197–204 (1996)

Cooley, J.W., Tukey, J.W.: An algorithm for the machine calculation of complex Fourier series. Math. Comput. 19(90), 297–301 (1965)

Manwade, K.B.: Analysis of parallel merge sort algorithm. Analysis 1(19), 66–69 (2010)

Ryckbosch, F., Polfliet, S., Eeckhout, L.: Fast, accurate, and validated full-system software simulation of x86 hardware. Micro IEEE 30(6), 46–56 (2010)

Additional information

This paper was in part supported by a Grant from IPM, (No. CS1395-4-06).

Cite this article

Hosseini Rad, M., Patooghy, A. & Fazeli, M. An Efficient Programming Skeleton for Clusters of Multi-Core Processors. Int J Parallel Prog 46, 1094–1109 (2018). https://doi.org/10.1007/s10766-017-0517-y
