Abstract

This study analyzes longitudinal data from 17 four-year institutions in the United States to determine how the distinctive instructional and learning environment of American liberal arts colleges accounts for the positive impact of liberal arts college attendance on four-year growth in critical thinking skills and need for cognition. We find that, net of important confounding influences, attending an American liberal arts college (vs. a research university or a regional institution in the United States) increases one’s overall exposure to clear and organized classroom instruction and enhances one’s use of deep approaches to learning. In turn, clear and organized classroom instruction and deep approaches to learning tend to facilitate growth in both critical thinking and need for cognition—thus indirectly transmitting the impact of attending a liberal arts college.

Introduction

Small, private liberal arts colleges in the United States have often been considered an ideal educational environment for enhancing students’ cognitive development (e.g., Astin 1999; Chickering and Reisser 1993; Clark 1970; Koblik and Graubard 2000; Pascarella and Terenzini 1991, 2005). Indeed, replicated evidence suggests that such institutions maximize effective practices in undergraduate education, particularly in students’ academic programs and classroom experiences (Pascarella et al. 2004, 2005; Seifert et al. 2010). This is consistent with Leslie’s (2002) finding, based on a national postsecondary faculty database, that liberal arts college faculty in the US tend to place a higher priority on teaching than faculty at other types of American four-year institutions. In this paper, we explore the extent to which the teaching emphasis of liberal arts colleges translates into increased student cognitive growth.

Relevant literature and theoretical framework

Somewhat surprisingly, evidence for the unique impact of attendance at a liberal arts college on standardized measures of general cognitive development applicable across different institutions is inconsistent. For example, analyzing longitudinal data from the National Study of Student Learning (NSSL) collected between 1992 and 1995, Pascarella et al. (2005) were able to introduce statistical controls for an extensive battery of precollege characteristics and experiences, including a precollege measure of critical thinking. With these controls in place, attendance at an American liberal arts college had no significant impact on a standardized measure of critical thinking (Collegiate Assessment of Academic Proficiency) over the first year, or over the first three years, of postsecondary education. The comparison groups were students attending research universities and regional institutions in the U.S. There was some evidence that the three most selective of the five liberal arts colleges in the NSSL sample did provide a small, significant advantage in critical thinking growth, though not beyond the first year of postsecondary education.

The small sample of American liberal arts colleges in the NSSL data (n = 5) and the datedness of the information collected (1992–1995) substantially limit the generalizability of the Pascarella et al. (2005) findings. In more recent longitudinal work, Arum and Roksa (2011) found that an institution’s selectivity (as measured by average student standardized test scores) was positively linked to two-year growth on the Collegiate Learning Assessment (CLA), a standardized written measure of cognitive reasoning, even with precollege CLA scores held constant. However, Arum and Roksa did not specifically consider the unique effects of liberal arts colleges in their sample. Most recently, Loes et al. (2012) analyzed the first year (2006–2007) of the longitudinal Wabash National Study of Liberal Arts Education (WNS) to estimate the impact of diversity experiences on growth in critical thinking skills, using the critical thinking test from the Collegiate Assessment of Academic Proficiency. With controls in place for student precollege critical thinking, tested precollege academic preparation, and other potentially important confounding influences, there was no evidence that liberal arts college students made greater first-year critical thinking gains than their counterparts at either research universities or regional institutions. On the other hand, analyzing essentially the same WNS first-year database as Loes et al. (2012), Nelson Laird et al. (2011) reported that students attending liberal arts colleges made greater first-year gains in need for cognition than students at research universities. In the Nelson Laird et al. (2011) study, need for cognition (Cacioppo et al. 1996) was treated as a measure of the inclination to inquire and continuing motivation for learning.

Inconsistent evidence on the cognitive benefits of attending an American liberal arts college, at least as measured by standardized instruments, may reflect idiosyncratic samples or the fact that most of the research focuses on the first year of college. For example, the National Study of Student Learning data were collected nearly 20 years ago and include only a very small sample of liberal arts colleges. Similarly, the first year or two of exposure to postsecondary education may not be long enough for the impacts of liberal arts colleges to fully develop. Another possible reason, however, is that the positive influence of liberal arts college attendance on general cognitive growth is more subtle and indirect than direct. That is, analyses that simply consider global cognitive differences linked to attendance at liberal arts colleges, versus other types of four-year institutions, may overlook how the effects of liberal arts colleges are transmitted through the distinctive learning environments that such institutions may foster. Based on the replicated evidence provided by Pascarella et al. (2004) and Seifert et al. (2010), much of this distinctive environment appears to be centered in the culture of teaching and learning typical of liberal arts colleges.

In this study, we investigated how American liberal arts colleges influence measures of general cognitive growth by means of distinctive classroom instruction and student learning experiences. We analyzed data from the fourth-year follow-up of the Wabash National Study of Liberal Arts Education (WNS) and considered the effects of attendance at liberal arts colleges (versus attendance at either research universities or regional institutions) on growth in two general measures of cognitive development: critical thinking skills and need for cognition. Specifically, we hypothesized that liberal arts colleges foster greater growth on these cognitive outcomes largely by increasing the likelihood of students’ exposure to clear and organized classroom instruction and by enhancing students’ use of deep approaches to learning.

We focused on the mediating effects of clear and organized instruction and deep approaches to learning specifically because of their demonstrated empirical links with both critical thinking skills and need for cognition in the existing body of research. Students’ perceptions of clear and organized instruction have been vetted experimentally against course achievement (Hines et al. 1985; Schonwetter et al. 1995) and, in various scale forms, have been linked to growth on a standardized measure of critical thinking skills (Pascarella et al. 1996; Edison et al. 1998). More recently, receipt of clear and organized classroom instruction has also been positively associated with need for cognition, though without a control for a precollege measure of need for cognition (Jessup-Anger 2012). According to Nelson Laird et al. (2006), deep approaches to learning focus not on the mere acquisition of facts, but rather on the substance of learning and its underlying meanings. Deep approaches to learning include such things as understanding key concepts, understanding relationships, and integrating and transferring ideas from one setting to another. Deep approaches to learning have also been associated with need for cognition (Evans et al. 2003) and a disposition to think critically (Nelson Laird et al. 2008a).

We anticipated that attending a liberal arts college would increase students’ overall exposure to clear and organized instruction and increase their use of deep approaches to learning, even in the presence of controls for extensive student precollege characteristics and such college experiences as work responsibilities and major field of study. In turn, with similar controls in place, we hypothesized that receipt of clear and organized classroom instruction and increased use of deep approaches to learning would facilitate four-year growth in both critical thinking skills and need for cognition.

Methods

Samples

Institutional sample

The overall sample in the study consisted of incoming first-year students at 17 four-year colleges and universities located in 11 different states from four general regions of the United States: Northeast/Middle-Atlantic, Southeast, Midwest, and Pacific Coast. Institutions were selected from more than 60 colleges and universities responding to a national invitation to participate in the Wabash National Study of Liberal Arts Education (WNS). Funded by the Center of Inquiry in the Liberal Arts at Wabash College, the WNS is a large, longitudinal investigation of the effects of liberal arts colleges and liberal arts experiences on the cognitive and personal outcomes theoretically associated with a liberal arts education. The institutions were selected to represent differences among colleges and universities nationwide on a variety of characteristics, including institutional type and control, size, selectivity, location, and patterns of student residence. However, because the study was primarily concerned with the impacts of liberal arts colleges and liberal arts experiences, liberal arts colleges were purposefully over-represented.

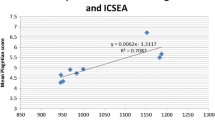

Our selection technique produced a sample with a wide range of academic selectivity, from some of the most selective institutions in the country to some that essentially used open admission practices. There was also substantial variability in undergraduate enrollment, from institutions with entering classes averaging 2,975 students (all research universities or regional institutions) to institutions with entering classes averaging 439 students (all liberal arts colleges). According to the 2007 Carnegie Classification of Institutions, three of the participating institutions were research extensive universities, three were comprehensive regional universities that did not grant the doctorate, and 11 were baccalaureate liberal arts colleges. All of the liberal arts colleges were private; of the six research universities and regional institutions, five were public and one (a research university) was private. As a group, the 11 liberal arts colleges were slightly less selective than the six research/regional institutions: the average ACT (or SAT equivalent) score was about 25.7 (range = 21.4–29.5) for the liberal arts colleges and 26.6 (range = 21.5–31.7) for the combined research/regional institutions.

Limitations of the institutional sample

Clearly, the group of institutions on which this study is based cannot be considered a representative national sample of American four-year institutions. While there were 11 liberal arts colleges in the sample, the comparison groups of research universities and regional institutions each consisted of only three institutions. This is an important limitation of the study. Consequently, the effects we uncovered must be viewed with substantial caution when generalizing to all four-year institutions, and particularly to research universities and regional institutions. Similarly, the context of this study is limited to the United States. Weighed against this, however, is the longitudinal (2006–2010) nature of our data, and the fact that we were able to take into account a wide range of potential confounding influences (including precollege scores on each four-year outcome variable).

Student sample

The individuals in the sample were first-year, full-time undergraduate students participating in the WNS at each of the 17 institutions in the study. The initial sample was selected in one of two ways. At larger institutions, it was selected randomly from the incoming first-year class; the one exception was the largest participating institution, where the sample was selected randomly from the incoming class in the College of Arts and Sciences. At a number of the smallest institutions in the study (all liberal arts colleges), the sample was the entire incoming first-year class. The students in the sample were invited to participate in a national longitudinal study examining how a college education affects students, with the goal of improving the undergraduate experience. They were informed that they would receive a monetary stipend for their participation in each data collection, and were assured in writing that any information they provided would be kept in the strictest confidence and would never become part of their institutional records.

Data collection

Initial data collection

The initial data collection was conducted in the late summer/early fall of 2006, with 4,193 students from the 17 institutions. This first data collection lasted between 90 and 100 minutes, and students were each paid a stipend of $50 for their participation. The data collected included a WNS precollege survey that sought information on student demographic characteristics, family background, high school experiences, political orientation, life/career plans, and the like. Students also completed a series of instruments that measured dimensions of cognitive and personal development theoretically associated with a liberal arts education. One of these was the 40-minute critical thinking test of the Collegiate Assessment of Academic Proficiency (CAAP). To minimize the time required of each student, and because another outcome measure required approximately the same administration time, the CAAP critical thinking test was randomly assigned to half the sample at each of the 17 participating institutions. The other dependent measure in the study, the Need for Cognition Scale, was completed by all participating students. These two measures are described in greater detail below.

Follow-up data collection

The follow-up data collection on which this study is based was conducted in spring 2010 (approximately four academic years later). This data collection took about two hours, and participating students were paid an additional stipend of $50 each. Two types of data were collected. The first type was based on questionnaire instruments that collected extensive information on students’ college experiences. This included information on students’ classroom activities, study habits, perceptions of teaching received, co-curricular involvement, interactions with faculty and student affairs staff, interactions with peers, involvement in diversity experiences, and the like. Two instruments were used to collect these data: the National Survey of Student Engagement (NSSE) and the Student Experiences Survey developed specifically for the WNS. The second type consisted of follow-up (posttest) administrations of the instruments measuring the dimensions of cognitive and personal development that students had first completed in the initial data collection. Information on students’ college experiences, including their perceptions of overall classroom instruction, was collected before the posttest measures were administered. The entire data collection was administered and conducted by ACT, Inc. (formerly the American College Testing Program). [A preliminary follow-up data collection was also conducted after the first year of college (spring 2007). A small number of participants in the 2010 data collection did not participate in the 2007 follow-up. To control for this, a dummy variable indicating participation/non-participation in the 2007 data collection was included in all analyses. This variable had only a trivial net influence on each outcome.]

Of the original sample of 4,193 students who participated in the late summer/early fall 2006 testing, 2,212 participated in the spring 2010 follow-up data collection, for a response rate of 52.8%. These students represented approximately 10% of the total population of incoming first-year students at the 17 participating institutions. Of these 2,212 students, usable 2010 data for our analyses were available for 920 students on critical thinking (recall that the critical thinking test was randomly assigned to half the sample at each institution) and for 1,914 students on need for cognition. To provide at least some adjustment for potential response bias by sex, race, academic ability, and institution in the samples analyzed, we developed a weighting algorithm. Using information provided by each participating institution on sex, race, and ACT (or SAT equivalent) score, 2010 follow-up participants were weighted up to each institution’s fourth-year undergraduate population by sex, race (person of color/white), and ACT (or equivalent) quartile. While weighting in this manner makes the analyzed samples more representative of the institutional populations from which they were drawn with respect to sex, race, and ACT score, it cannot fully adjust for non-response bias.
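The weighting algorithm is described only by the cells it used. A minimal sketch, assuming simple cell (post-stratification) weighting, gives each respondent the ratio of its cell's population count to the number of respondents in that cell, so that weighted cell totals reproduce the institutional population by sex, race, and ACT quartile. The function name, the cell labels, and the optional rescaling of weights to sum to the number of respondents are illustrative assumptions, not the WNS procedure itself.

```python
from collections import Counter


def poststratification_weights(sample_cells, population_counts, rescale_to_n=True):
    """Cell weights: population count / respondent count within each cell.

    sample_cells: one hashable cell label per respondent, e.g. a
        hypothetical (sex, race, act_quartile) tuple.
    population_counts: dict mapping each cell label to its population size.
    rescale_to_n: if True, multiply all weights by one constant so they sum
        to the number of respondents (keeps the nominal sample size).
    """
    respondents_per_cell = Counter(sample_cells)
    # Each respondent stands in for pop/resp members of its own cell.
    # Population cells with no respondents cannot be represented at all,
    # one reason weighting cannot fully remove non-response bias.
    raw = [population_counts[cell] / respondents_per_cell[cell] for cell in sample_cells]
    if not rescale_to_n:
        return raw
    total = sum(raw)
    return [w * len(raw) / total for w in raw]


# Hypothetical example: three respondents falling into two cells.
sample = [("female", "poc", 1), ("female", "poc", 1), ("male", "white", 4)]
population = {("female", "poc", 1): 100, ("male", "white", 4): 300}
print(poststratification_weights(sample, population, rescale_to_n=False))  # [50.0, 50.0, 300.0]
print(poststratification_weights(sample, population))  # [0.375, 0.375, 2.25]
```

The unscaled weights sum to the population size (400 here); the rescaled weights preserve the same relative weighting while summing to the three respondents actually observed.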

Dependent variables

Synthesizing much of the literature on liberal arts education, King et al. (2007) developed a comprehensive model of liberal arts outcomes that embraced seven general dimensions: effective reasoning and problem solving, inclination to inquire and lifelong learning, well-being, intercultural effectiveness, leadership, moral character, and integration of learning. This study focuses on estimating the impacts of effective teaching and deep approaches to learning on the first two dimensions of the King et al. (2007) model: effective reasoning and problem solving, and inclination to inquire and lifelong learning.

To tap the dimension of effective reasoning and problem solving, we used the Critical Thinking Test (CTT) from the Collegiate Assessment of Academic Proficiency (CAAP), which was developed by the American College Testing Program (ACT). The CTT is a 40-minute, 32-item instrument designed to measure a student’s ability to clarify, analyze, evaluate, and extend arguments. The test consists of four passages in a variety of formats (e.g., case studies, debates, dialogues, experimental results, statistical arguments, editorials). Each passage contains a series of arguments that support a general conclusion and a set of multiple-choice test items. The internal consistency reliabilities for the CTT range between .81 and .82 (American College Testing Program 1991). It correlates .75 with the multiple-choice Watson–Glaser critical thinking appraisal (Pascarella et al. 1995).

Inclination to inquire and lifelong learning was represented by the 18-item Need for Cognition Scale (NFC). Need for cognition refers to an individual’s “tendency to engage in and enjoy effortful cognitive activity” (Cacioppo et al. 1996, p. 197). Individuals with a high need for cognition “tend to seek, acquire, think about, and reflect back on information to make sense of stimuli, relationships, and events in their world” (p. 198). In contrast, those with low NFC are more likely to rely on others (such as celebrities and experts), on cognitive heuristics, or on social comparisons to make sense of their world. The reliability of the NFC ranges from .83 to .91 in samples of college students (Cacioppo et al. 1996). With samples of undergraduates, the NFC has been positively associated with the tendency to generate complex attributions for human behavior, high levels of verbal ability, engagement in evaluative responding, one’s desire to maximize information gained rather than maintain one’s perceived reality (Cacioppo et al. 1996), and college grades (Elias and Loomis 2002). The NFC is negatively linked with authoritarianism, need for closure, personal need for structure, and the tendency to respond to information reception tasks with anxiety (Cacioppo et al. 1996). Examples of constituent items on the NFC scale include: “I would prefer complex to simple problems,” “Thinking is not my idea of fun” (coded in reverse), “I like to have the responsibility of handling a situation that requires a lot of thinking,” “I really enjoy a task that involves coming up with new solutions to problems,” and “The notion of thinking abstractly is appealing to me.” The complete scale is available from the first author on request.
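Because some NFC items are coded in reverse, those responses have to be flipped before item scores are combined. A minimal sketch, assuming a hypothetical 1-to-5 response scale and scoring by the mean of item responses (the WNS response format and scoring rule are not stated here), is:

```python
def reverse_code(response, low=1, high=5):
    """Flip a Likert response so that low <-> high (on 1-5: 1->5, 2->4, 3->3)."""
    return low + high - response


def scale_score(responses, reversed_items, low=1, high=5):
    """Mean item score after reverse-coding the item positions in reversed_items."""
    adjusted = [
        reverse_code(r, low, high) if i in reversed_items else r
        for i, r in enumerate(responses)
    ]
    return sum(adjusted) / len(adjusted)


# Hypothetical answers to the five example items quoted above, in order;
# position 1 (zero-based), "Thinking is not my idea of fun", is reverse-coded.
answers = [5, 2, 4, 4, 5]
print(scale_score(answers, reversed_items={1}))  # 4.4
```

After reverse-coding, a higher score means a higher need for cognition on every item, so the items can be averaged (or summed) into one scale score.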

Independent variables

The primary independent variable was whether or not one attended a liberal arts college. This was operationalized by two dummy variables, each coding liberal arts college attendance as 1 and attendance at one of the two comparison institution types (a regional institution or a research university) as 0. The mediating independent variables in the study were overall exposure to effective classroom instruction and deep learning experiences. Overall exposure to effective classroom instruction was defined operationally as students’ perceptions of the extent to which they were exposed to clear and organized instruction in their classes. Information on students’ perceptions of overall exposure to clear and organized instruction was gathered on the WNS Student Experiences Survey by means of a 10-item scale administered in the 2010 follow-up data collection. The scale presented students with the following stem: “Below are statements about teacher skill/clarity as well as preparation and organization in teaching. For the most part, taking into consideration all of the teachers with whom you’ve interacted with at [institution name], how often have you experienced each?” We used the same 10-item scale, of established reliability and validity, used in previous research (Pascarella et al. 1996, 2011). The 10-item instructional clarity and organization scale has an alpha reliability of .89. Constituent items and response options are shown in Table 1.

Deep approaches to learning were operationally assessed with three scales developed by Nelson Laird et al. (2006, 2008b). The scales are based on NSSE items completed by the student sample in the spring of 2010. The three scales are termed Higher-Order Learning, Integrative Learning, and Reflective Learning. According to Nelson Laird et al. (2008a, b), the four-item Higher-Order Learning Scale “focuses on the amount students believe that their courses emphasize advanced thinking skills such as analyzing the basic elements of an idea, experience, or theory and synthesizing ideas, information, or experiences into new, more complex interpretations” (p. 477). The Integrative Learning Scale consists of five items and measures “the amount students participate in activities that require integrating ideas with others outside of class” (p. 477). Reflective Learning is a three-item scale that asks “how often students examined the strengths and weaknesses of their own views and learned something that changed their understanding” (p. 477). The internal consistency reliabilities of the three scales range from .72 to .82. We present the specific items constituting each of the three deep approaches to learning scales in Table 2.
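The alpha reliability of .89 for the clarity and organization scale, and internal consistency coefficients like those reported for the deep learning scales, are commonly computed as Cronbach's alpha: α = k/(k − 1) · (1 − Σ item variances / variance of the total score), for k items. A minimal sketch of that formula follows; it assumes complete responses, it assumes the deep learning reliabilities are also alpha coefficients (the text says only "internal consistency"), and its toy data are hypothetical.

```python
from statistics import pvariance


def cronbach_alpha(item_scores):
    """Cronbach's alpha for a respondents x items table of scores.

    item_scores: list of rows, one per respondent, each holding k item scores.
    Population variances are used throughout; the n vs. n - 1 choice cancels
    in the ratio as long as it is applied consistently.
    """
    k = len(item_scores[0])
    if k < 2:
        raise ValueError("alpha needs at least two items")
    columns = list(zip(*item_scores))            # one tuple of scores per item
    totals = [sum(row) for row in item_scores]   # each respondent's scale total
    sum_item_var = sum(pvariance(col) for col in columns)
    return k / (k - 1) * (1 - sum_item_var / pvariance(totals))


# Hypothetical data: 3 respondents answering 2 items.
print(round(cronbach_alpha([[1, 1], [2, 3], [3, 2]]), 4))  # 0.6667
```

Alpha rises toward 1 as items covary more strongly relative to their individual variances; perfectly parallel items give exactly 1.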

Guiding conceptual model and control variables

A number of conceptual models have been offered to guide scholars in understanding the impact of college on students (e.g., Astin 1993; Pascarella 1985; Pascarella and Terenzini 1991, 2005). These models suggest that, to accurately estimate the net or unique influence of any single college experience or set of college experiences, one also needs to take into account three other sets of influences: the individual capabilities, characteristics, and experiences students bring to postsecondary education; the characteristics of the institution attended; and other college experiences that may influence or co-vary with the influence in question. This general framework guided our selection of control variables.

Student pre-college characteristics and experiences included: a pre-college (fall 2006) measure of each of the two dependent variables (i.e., critical thinking and need for cognition); ACT (or SAT equivalent) score as provided for each student in the sample by each participating institution; sex (coded as 1 = male, 0 = female); and race (coded as 1 = white, 0 = person of color). Also included in this block of variables were: parental education; a pre-college measure of academic motivation; and a pre-college measure of secondary school involvement or engagement. Detailed operational definitions of these variables, including psychometric properties, are available from the first author.

Finally, we also took into account four other potentially important student experiences during college: place of residence during college, work responsibility, academic major field of study, and co-curricular involvement. Place of residence was coded 1 = lived in campus housing versus 0 = lived elsewhere during the previous year. Work responsibility was defined as hours per week during the fourth year of college worked both on and off campus, with eight response options from “0 hours” to “more than 30 hours.” Academic major field of study was operationally defined by two dummy variables: humanities, fine arts, or social science major (coded 1) versus other (coded 0); and science, technology, engineering, or mathematics (STEM) major (coded 1) versus other (coded 0). Lastly, co-curricular involvement was operationally defined as a student’s reported number of hours in a typical week during the previous year spent in co-curricular activities (campus organizations, campus publications, student government, fraternity or sorority, intercollegiate or intramural sports, etc.), with eight response options ranging from “0 hours” to “more than 30 hours.” Information on all of the other college experiences was obtained during the spring 2010 data collection.

Analyses

The analyses were carried out in three stages. In the first (preliminary) stage, we estimated the net effects of attendance at an American liberal arts college on clear and organized classroom instruction and on the three measures of deep learning experiences. To do this, we regressed the clear and organized instruction scale and each of the three deep approaches to learning scales on the two dummy variables representing liberal arts college attendance (vs. research university or regional institution attendance), plus all the precollege variables and other college experience variables specified above.

In the second stage of the analyses, we estimated the unique effects of liberal arts college attendance on the primary dependent measures—end-of-fourth-year critical thinking skills and end-of-fourth-year need for cognition. Two models were estimated. In the first model, we regressed fourth-year critical thinking and need for cognition on the dummy variables representing liberal arts college attendance and all the pre-college variables and other college experiences specified above. In the second model, we simply added the clear and organized classroom instruction scale and the three deep approaches to learning scales to the first model.

In the third stage of the analyses, we estimated the indirect effects of liberal arts college attendance on fourth-year critical thinking and need for cognition as mediated through exposure to clear and organized classroom instruction and deep learning experiences. In this analysis, we estimated both the overall indirect effect through all four anticipated mediators and the individual indirect effects through each specific mediating variable.
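The specific procedure used for the indirect effects is not named. One common approach for linear models, sketched here under that assumption, takes the indirect effect through mediator j as the product a_j·b_j, where a_j is the coefficient of the attendance dummy when mediator j is regressed on the dummy and the controls (as in the first stage), and b_j is that mediator's coefficient in the outcome model that includes the mediators (the second model of the second stage). With ordinary least squares on a common sample, the overall indirect effect Σ a_j·b_j equals exactly the drop in the dummy's coefficient between the two second-stage models. The helper names and simulated data are hypothetical, and the sketch omits the sample weights and significance tests used in the study.

```python
import random


def ols(y, columns):
    """OLS coefficients [intercept, b1, b2, ...] for y on the given predictor columns.

    Solves the normal equations (X'X) b = X'y by Gauss-Jordan elimination with
    partial pivoting; fine for a small illustration, not for production use.
    """
    n = len(y)
    X = [[1.0] + [col[i] for col in columns] for i in range(n)]
    p = len(X[0])
    # Augmented matrix [X'X | X'y].
    A = [[sum(X[r][i] * X[r][j] for r in range(n)) for j in range(p)]
         + [sum(X[r][i] * y[r] for r in range(n))] for i in range(p)]
    for c in range(p):
        pivot = max(range(c, p), key=lambda r: abs(A[r][c]))
        A[c], A[pivot] = A[pivot], A[c]
        for r in range(p):
            if r != c:
                f = A[r][c] / A[c][c]
                A[r] = [a - f * b for a, b in zip(A[r], A[c])]
    return [A[i][p] / A[i][i] for i in range(p)]


def mediation(y, x, mediators, controls):
    """Total, direct, and per-mediator indirect effects of x on y (linear models)."""
    total = ols(y, [x] + controls)[1]                   # outcome model without mediators
    full = ols(y, [x] + mediators + controls)           # mediators added
    direct = full[1]
    b = full[2:2 + len(mediators)]                      # mediator -> outcome paths
    a = [ols(m, [x] + controls)[1] for m in mediators]  # x -> mediator paths
    return total, direct, [aj * bj for aj, bj in zip(a, b)]


# Simulated (hypothetical) data: a 0/1 attendance dummy, one precollege control,
# two mediators, and an outcome.
random.seed(0)
n = 300
x = [float(random.random() < 0.5) for _ in range(n)]
pre = [random.gauss(0, 1) for _ in range(n)]
m1 = [0.5 * xi + 0.3 * ci + random.gauss(0, 1) for xi, ci in zip(x, pre)]
m2 = [0.2 * xi + 0.1 * ci + random.gauss(0, 1) for xi, ci in zip(x, pre)]
y = [0.4 * u + 0.3 * v + 0.05 * xi + 0.6 * ci + random.gauss(0, 1)
     for u, v, xi, ci in zip(m1, m2, x, pre)]

total, direct, indirect = mediation(y, x, [m1, m2], [pre])
print(f"total={total:.3f} direct={direct:.3f} indirect={sum(indirect):.3f}")
# total - direct equals sum(indirect) up to floating-point rounding.
```

The decomposition also shows why adding the mediators can shrink a significant total effect to a non-significant direct effect: the part of the total effect carried by the mediators moves into the a_j·b_j terms.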

All analyses were based on weighted sample estimates, with the weights rescaled to the actual sample size so that standard errors were not artificially deflated. Because our regression models included more variables than there were institutions (the 17 primary sampling units), we could not use procedures that adjust for the artificially small standard errors produced by the nesting or clustering of students within institutions. Consequently, we used a more stringent alpha level (p < .01 rather than p < .05) for statistical significance to reduce the probability of making a Type I error, that is, rejecting a true null hypothesis (Raudenbush and Bryk 2001).

With one exception, we present our results with the two dependent variables (i.e., fourth-year critical thinking skills and fourth-year need for cognition) and all continuous independent variables in standardized form. This permits the results to be interpreted as effect sizes. For continuous independent variables, the coefficients represent the fraction of a standard deviation change in the dependent measure associated with each one standard deviation increase in the independent variable, all other influences held constant. For categorical independent variables (specifically, attendance at a liberal arts college), the coefficients represent the fraction of a standard deviation change in the dependent measure associated with a one-unit increase in the independent variable (i.e., attending a liberal arts college rather than the comparison institution type), all other influences held constant. (The only exception was the prediction of clear and organized instruction and the deep learning scales, for which we present metric, or unstandardized, coefficients.)
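In the simplest case, with no controls, both readings of the coefficients can be checked directly. With the outcome and a continuous predictor both standardized, the least-squares slope equals their correlation; with the outcome standardized but a 0/1 dummy left as is, the slope equals the difference in group means expressed in outcome standard deviations. The sketch below uses hypothetical values and the population standard deviation (an assumption; the choice is not stated) and covers the bivariate case only, whereas the study's coefficients are additionally net of all controls.

```python
from statistics import mean, pstdev


def standardize(values):
    """Convert values to z-scores (mean 0, SD 1) using the population SD."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def slope(y, x):
    """Least-squares slope of y on a single predictor x (intercept included)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


# Hypothetical outcome scores for four students; the dummy marks group membership.
y = [10.0, 12.0, 14.0, 16.0]
dummy = [0, 0, 1, 1]           # left unstandardized, like the attendance dummy
z_y = standardize(y)

effect_size = slope(z_y, dummy)
group_gap_in_sd = (mean(y[2:]) - mean(y[:2])) / pstdev(y)
print(round(effect_size, 3), round(group_gap_in_sd, 3))  # 1.789 1.789
```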

Results

Table 3 summarizes the estimated metric effects of attending an American liberal arts college (vs. a research university or regional institution) on students’ perceptions of overall exposure to clear and organized classroom instruction and deep learning experiences. With controls in place for an extensive battery of precollege characteristics and experiences, as well as for other college experiences, attending a liberal arts college was associated with a statistically significant advantage in exposure to clear and organized classroom instruction in all four analyses conducted. The corresponding unique, positive effects of liberal arts college attendance on deep learning experiences (shown in columns 2, 3, and 4 of Table 3) were statistically significant in 11 of 12 analyses. Thus, net of student precollege characteristics and other college experiences such as academic major, attendance at a liberal arts college significantly enhanced exposure to clear and organized instruction and the use of deep approaches to learning.

Table 4 summarizes the effects of liberal arts college attendance on fourth-year critical thinking skills and need for cognition. As the table indicates, with controls in place for student precollege characteristics and experiences plus other college experiences (columns 2 and 5), attending a liberal arts college was associated with a significant advantage of about .160 of a standard deviation in critical thinking skills relative to attending a regional institution, and of about .127 of a standard deviation in need for cognition relative to attending a research university. However, when exposure to clear and organized classroom instruction and deep learning experiences were added to the equations (columns 3 and 6), the estimated effects of attending a liberal arts college were reduced to non-significance. This suggests that a substantial portion of the positive effect of liberal arts college attendance on our measures of cognitive growth is mediated through classroom instruction and student use of deep approaches to learning.

The estimated standardized indirect effects of liberal arts college attendance on critical thinking and need for cognition, mediated through classroom instruction and deep learning experiences, are summarized in Table 5. As the table indicates, the overall positive indirect effect of liberal arts college attendance was significant in all cases, that is, for both outcomes and in comparison with both research universities and regional institutions. For fourth-year critical thinking, this indirect influence was transmitted primarily through exposure to clear and organized instruction and reflective learning. For fourth-year need for cognition, the positive indirect effect of liberal arts college attendance was mediated primarily through clear and organized instruction, reflective learning, and integrative learning.
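In the usual linear mediation framework, each indirect effect of the kind reported in Table 5 is the product of two paths: the effect of liberal arts college attendance on a mediator (a) and the effect of that mediator on the outcome (b). One common large-sample significance test for a single such product is the Sobel (first-order delta-method) test, sketched below in Python. The coefficients and standard errors in the usage line are hypothetical and are not taken from Table 5, and nothing here implies that the authors used this particular test; bootstrap confidence intervals are a frequently preferred alternative.

```python
import math


def sobel_test(a, se_a, b, se_b):
    """Sobel (first-order delta-method) test for an indirect effect a*b.

    a, se_a: effect of the treatment (e.g., an institution-type indicator)
             on the mediator, and its standard error.
    b, se_b: effect of the mediator on the outcome, net of the treatment,
             and its standard error.
    Returns (indirect effect, standard error, z statistic, two-sided p-value).
    """
    indirect = a * b
    se = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = indirect / se
    # Two-sided normal p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p = math.erfc(abs(z) / math.sqrt(2))
    return indirect, se, z, p


# Hypothetical path estimates for one mediator (not values from this study).
effect, se, z, p = sobel_test(a=0.20, se_a=0.05, b=0.15, se_b=0.03)
print(f"indirect = {effect:.3f}, SE = {se:.4f}, z = {z:.2f}, p = {p:.4f}")
```

With several mediators, as in Table 5, the total indirect effect is the sum of the mediator-specific products; testing that sum also requires the covariances among the path estimates, which is one reason resampling methods are often used instead.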

Summary and discussion

In this study, we sought a better understanding of how attendance at an American liberal arts college might facilitate general dimensions of cognitive growth over four years of postsecondary education. Our findings suggest that a substantial part of the cognitive influence of liberal arts colleges is subtle and indirect. It is transmitted through distinctive features of their overall instructional environment and through the somewhat different learning experiences their students have compared with students at other types of four-year institutions. Compared to students attending research universities or regional institutions in the United States, American liberal arts college students in our sample reported significantly greater overall exposure to clear and organized classroom instruction and significantly more higher-order, reflective, and integrative learning experiences. These significant advantages persisted even with controls for a wide array of potential confounding influences, such as precollege critical thinking skills or need for cognition, tested precollege academic preparation and academic motivation, parental education, academic major during college, and work responsibilities and co-curricular involvement during college. This finding extends previous evidence suggesting not only that liberal arts college faculty place a higher priority on teaching than do faculty at other types of four-year institutions (Leslie 2002), but also that this pedagogical focus is reflected in significantly different instructional and learning experiences among liberal arts college students (Pascarella et al. 2004, 2005; Seifert et al. 2010).

When differences in instructional and learning experiences were taken into account, liberal arts college attendance had only non-significant direct (unmediated) effects on four-year growth in critical thinking skills and need for cognition. However, attendance at a liberal arts college did have significant positive indirect effects on fourth-year critical thinking and need for cognition, mediated by liberal arts college students’ greater exposure to clear and organized classroom instruction and their greater use of deep approaches to learning. Specifically, the indirect effect of liberal arts college attendance on critical thinking skills was mediated largely through clear and organized classroom instruction and reflective learning experiences, whereas the corresponding indirect effect on need for cognition was mediated through clear and organized instruction, reflective learning, and integrative learning. These significant positive indirect effects held in comparison to both research universities and regional institutions. Our findings suggest that considering only the direct (unmediated) net effects of liberal arts college attendance on cognitive growth may be misleading, because doing so ignores potentially important indirect influences, particularly those centered in an institution’s instructional and learning environment.
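The distinction between direct and indirect effects drawn in this paragraph can be written compactly for a linear model with $K$ parallel mediators. The notation is introduced here for illustration only and is not taken from the original analysis: $c$ is the total effect of liberal arts college attendance on an outcome, $c'$ its direct (unmediated) effect, $a_k$ the effect of attendance on mediator $k$ (e.g., clear and organized instruction, reflective learning, or integrative learning), and $b_k$ the effect of mediator $k$ on the outcome, net of attendance and the other mediators. When every equation is a single-level OLS regression with the same set of controls, the decomposition holds exactly in the sample; under other estimators, such as multilevel models, it need not hold exactly.

$$
c \;=\; c' \;+\; \sum_{k=1}^{K} a_k\, b_k
$$

A near-zero, non-significant $c'$ is therefore compatible with a sizeable total effect $c$ whenever the products $a_k b_k$ are positive, which is the pattern described above.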

At least one of the major findings of our investigation may have significant policy implications. The positive indirect effect of American liberal arts colleges on the two dimensions of cognitive development we considered was transmitted through overall exposure to clear and organized classroom instruction and through students’ deep learning experiences. Given Leslie’s (2002) finding that liberal arts college faculty give higher priority to teaching, the greater exposure to clear and organized instruction and the more frequent deep learning experiences reported by liberal arts college students may reflect a selection effect: careers at liberal arts colleges may attract the faculty who are already most interested in teaching. However, this does not mean that the quality of teaching at an institution is determined entirely by the faculty it recruits. The fact that exposure to clear and organized instruction and deep learning experiences significantly fostered four-year cognitive growth irrespective of institutional type (see Table 4) suggests that these instructional approaches may have policy implications for institutions other than American liberal arts colleges.

As pointed out by Weimer and Lenze (1997) and Pascarella et al. (2011), faculty members at any type of institution can learn many of the constituent skills and behaviors required to implement clear and organized instruction in their courses. Similarly, there is no reason why faculty members at research universities and regional institutions could not build more assignments and projects requiring deep approaches to learning into their courses. Thus, although this study focused on the effects of liberal arts colleges in an American context, our findings support the potential cognitive benefits of investing resources in programs designed to enhance teaching effectiveness at any institution, perhaps even at institutions outside the United States. This may be particularly true if such programs help faculty hone pedagogical skills centered on clarity and organization and develop classroom experiences that require students to take reflective and integrative approaches to understanding course content.

References

American College Testing Program. (1991). CAAP technical handbook. Iowa City, IA: Author.

Arum, R., & Roksa, J. (2011). Academically adrift: Limited learning on college campuses. Chicago: University of Chicago Press.

Astin, A. (1993). What matters in college? Four critical years revisited. San Francisco: Jossey-Bass.

Astin, A. (1999). How the liberal arts college affects students. Daedalus, 128(1), 77–100.

Cacioppo, J., Petty, R., Feinstein, J., & Jarvis, W. (1996). Dispositional differences in cognitive motivation: The life and times of individuals varying in need for cognition. Psychological Bulletin, 119, 197–253.

Chickering, A., & Reisser, L. (1993). Education and identity (2nd ed.). San Francisco: Jossey-Bass.

Clark, B. (1970). The distinctive college. Chicago: Aldine Publishing Company.

Edison, M., Doyle, S., & Pascarella, E. (1998, November). Dimensions of teaching effectiveness and their impact on student cognitive development. Paper presented at the annual meeting of the Association for the Study of Higher Education, Miami, FL.

Elias, S., & Loomis, R. (2002). Utilizing need for cognition and perceived self-efficacy to predict academic performance. Journal of Applied Social Psychology, 32, 217–225.

Evans, C., Kirby, J., & Fabrigar, L. (2003). Approaches to learning, need for cognition, and strategic flexibility among university students. British Journal of Educational Psychology, 73, 507–528.

Hines, C., Cruickshank, D., & Kennedy, J. (1985). Teacher clarity and its relationship to student achievement and satisfaction. American Educational Research Journal, 22, 87–99.

Jessup-Anger, J. (2012). Examining how residential environments inspire the life of the mind. Review of Higher Education, 35, 431–462.

King, P., Kendall Brown, M., Lindsay, N., & VanHecke, J. (2007). Liberal arts student learning outcomes: An integrated approach. About Campus, 12(4), 2–9.

Koblik, S., & Graubard, S. (Eds.). (2000). Distinctly American: The residential liberal arts college. New Brunswick, NJ: Transaction Publishers.

Leslie, D. (2002). Resolving the dispute: Teaching is academe’s core value. Journal of Higher Education, 73, 49–73.

Loes, C., Pascarella, E., & Umbach, P. (2012). Effects of diversity experiences on critical thinking skills: Who benefits? Journal of Higher Education, 83, 1–25.

Nelson Laird, T., Shoup, R., & Kuh, G. (2006, May). Measuring deep approaches to learning using the National Survey of Student Engagement. Paper presented at the annual forum of the Association for Institutional Research, Chicago, IL.

Nelson Laird, T., Garver, A., Niskode-Dossett, A., & Banks, J. (2008a, November). The predictive validity of a measure of deep approaches to learning. Paper presented at the annual meeting of the Association for the Study of Higher Education, Jacksonville, FL.

Nelson Laird, T., Shoup, R., Kuh, G., & Schwartz, M. (2008b). The effects of discipline on deep approaches to student learning and college outcomes. Research in Higher Education, 49, 469–494.

Nelson Laird, T., Seifert, T., Pascarella, E., Mayhew, M., & Blaich, C. (2011, November). Deeply affecting first-year students’ thinking: The effects of deep approaches to learning on three outcomes. Paper presented at the annual meeting of the Association for the Study of Higher Education, Charlotte, NC.

Pascarella, E. (1985). College environmental influences on learning and cognitive development: A critical review and synthesis. In J. Smart (Ed.), Higher education: Handbook of theory and research (Vol. 1, pp. 1–61). New York: Agathon.

Pascarella, E., Bohr, L., Nora, A., & Terenzini, P. (1995). Cognitive effects of 2-year and 4-year colleges: New evidence. Educational Evaluation and Policy Analysis, 17, 83–96.

Pascarella, E., Cruce, T., Wolniak, G., & Blaich, C. (2004). Do liberal arts colleges really foster good practices in undergraduate education? Journal of College Student Development, 45, 57–74.

Pascarella, E., Edison, M., Nora, A., Hagedorn, L., & Braxton, J. (1996). Effects of teacher organization/preparation and teacher skill/clarity on general cognitive skills in college. Journal of College Student Development, 37, 7–19.

Pascarella, E., Salisbury, M., & Blaich, C. (2011). Exposure to effective instruction and college student persistence: A multi-institutional replication and extension. Journal of College Student Development, 52, 4–19.

Pascarella, E., & Terenzini, P. (1991). How college affects students: Findings and insights from twenty years of research. San Francisco: Jossey-Bass.

Pascarella, E., & Terenzini, P. (2005). How college affects students: A third decade of research (Vol. 2). San Francisco: Jossey-Bass.

Pascarella, E., Wolniak, G., Seifert, T., Cruce, T., & Blaich, C. (2005). Liberal arts colleges and liberal arts education: New evidence on impacts. San Francisco: Jossey-Bass.

Raudenbush, S. W., & Bryk, A. S. (2001). Hierarchical linear models: Applications and data analysis methods. Thousand Oaks, CA: Sage Publications.

Schonwetter, D., Menec, V., & Perry, R. (1995, April). An empirical comparison of two effective college teaching behaviors: Expressiveness and organization. Paper presented at the annual meeting of the American Educational Research Association, San Francisco, CA.

Seifert, T., Pascarella, E., Goodman, K., Salisbury, M., & Blaich, C. (2010). Liberal arts colleges and good practices in undergraduate education: Additional evidence. Journal of College Student Development, 51, 1–22.

Weimer, M., & Lenze, L. (1997). Instructional interventions: Review of the literature on efforts to improve instruction. In R. Perry & J. Smart (Eds.), Effective teaching in higher education: Research and practice. New York: Agathon Press.

Acknowledgments

This research was supported by a generous grant from the Center of Inquiry in the Liberal Arts at Wabash College to the Center for Research on Undergraduate Education at the University of Iowa.

Cite this article

Pascarella, E.T., Wang, JS., Trolian, T.L. et al. How the instructional and learning environments of liberal arts colleges enhance cognitive development. High Educ 66, 569–583 (2013). https://doi.org/10.1007/s10734-013-9622-z
