Abstract

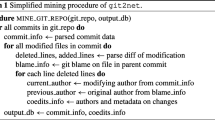

Technical collaboration between multiple contributors is a natural phenomenon in distributed open source software development projects. Macro-collaboration, where each code commit is attributed to a single collaborator, has been extensively studied in the research literature. This is much less the case for so-called micro-collaboration practices, in which multiple authors contribute to the same commit. To support such practices, GitLab and GitHub started supporting social coding mechanisms such as the “Co-Authored-By:” trailers in commit messages, which, in turn, enable to empirically study such micro-collaboration. In order to understand the mechanisms, benefits and limitations of micro-collaboration, this article provides an exemplar case study of collaboration practices in the OpenStack ecosystem. Following a mixed-method research approach we provide qualitative evidence through a thematic and content analysis of semi-structured interviews with 16 OpenStack contributors. We contrast their perception with quantitative evidence gained by statistical analysis of the git commit histories (\(\sim \)1M commits) and Gerrit code review histories (\(\sim \)631K change sets and \(\sim \)2M patch sets) of 1,804 OpenStack project repositories over a 9-year period. Our findings provide novel empirical insights to practitioners to promote micro-collaborative coding practices, and to academics to conduct further research towards understanding and automating the micro-collaboration process.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In open-source software development projects, it is common for multiple contributors to collaborate on a wide range of activities, from social activities such as submitting a forum question or bug report comment, to technical activities such as developing and reviewing code changes. This paper focuses on one of these activities, i.e., code contributions, challenging the assumptions that people have made thus far about the way in which collaboration processes exploit distributed version control systems.

Traditionally, collaborations between open-source developers take the form of so-called macro-collaborations, which focus on large-scale collaborations by entire teams on complex features for extended amounts of time, typically on dedicated feature branches (de Souza Costa et al. 2019; Costa et al. 2016; Krusche et al. 2016; Rahman 2015; Bird and Zimmermann 2012). While developers do interact during such collaborations, their contributions tend to be nicely modularized in their own commits for which they are recorded as sole author by version control systems like git.

While macro-collaboration involves multiple developers contributing one or more commits, in contrast micro-collaborative coding is a more fine-grained type of collaboration that involves multiple developers contributing to the same commit. Hence, instead of working asynchronously, micro-collaboration involves synchronous technical activities on a shared code base. This practice is claimed to have various benefits within teams, such as solving complex problems (Datta 2018; Dingsøyr et al. 2018; Kalliamvakou et al. 2015; Bick et al. 2018; Neumayr et al. 2018; Mardi et al. 2021; Sharp and Robinson 2008) and improving onboarding (Foundjem et al. 2021b). At the same time, micro-collaborations are essential both in in-person settings (cf. traditional pair programming) and when working in an online, global software development environment (as has become the norm since Covid-19), when the required contributions by the different developers are impossible to decompose cleanly into separate commits.

Despite the many advantages of micro-collaborations, and their similarity to agile practices such as pair programming, modern version control systems for online collaboration like git unfortunately do not provide built-in support to track such collaborations, simply because, by design, a git commit can only have one author and one (possibly different) committer. The author is the one who created the content, and the committer is the one who committed it to the repository. Similarly, code review systems such as GerritFootnote 1, or reviews integrated in GitHub’s pull request mechanism, only allow one individual to be the author of a code change.

As such, for a long time developers have had to come up with workarounds to still attribute micro-collaboration changes to all responsible collaborators, which is essential for accountability, copyright, etc. purposes. For example, in November 2007, the Debian community raised awarenessFootnote 2 for git to support multiple authors for a commit, and in March 2012, the Eclipse developer community expressed the need to attribute multiple authors for a commit: “In the case, for example, of pair programming, we may have a situation where multiple developers should be credited with a commit … there is no current mechanism to do this. It might be cool to be able to specify multiple values in the ‘author’ field or multiple occurrences of the author field” (Wayne Beaton).Footnote 3

Only recently, a de facto approach to acknowledge all co-authors of a patch has been integrated in GitHub,Footnote 4 GitLab,Footnote 5 etc., basically requiring developers to add specific Co-Authored-By: trailers at the end of their commit message, each one listing the name of one of the main author’s collaborators. According to the OpenStack wiki,Footnote 6 the use of such trailers is encouraged. It is even the only mechanism for micro-collaborative coding that is advertized publicly as “a convention for recognizing multiple authors, and our projects would encourage the stats tools to observe it when collecting statistics.” Moreover, according to GitHub, “the new feature responds to a growing need in organizations where collaborative coding is the norm to speed up onboarding of junior developers, to improve code quality through peer programming or code reviews, etc.”. Footnote 7

Thus far, there is little empirical evidence about the prevalence of micro-collaboration practices in large open-source development communities, and the expected and actual benefits of such practices. Worse, there is no data about the extent of micro-collaboration before commit trailers were established, nor to what extent those older practices still prevail. To address this lack of knowledge, this paper carries out an exemplar case study of micro-collaboration practices. Such exemplar case studies are a well-accepted, yet underexploited, method in empirical software engineering to gain fine-grained insights and understanding of specific phenomena (Runeson et al. 2012).

This paper studies as an exemplar case the OpenStack ecosystem,Footnote 8 a popular open-source platform for cloud computing governed by the Open Infrastructure Foundation (OIF). To do so, we follow a mixed-methods research methodology (Al-Subaihin et al. 2021) combining qualitative semi-structured interviews of OpenStack contributors with quantitative statistical analysis of historical data extracted from version control and code review environments.

First, we perform an interview study with 16 participants to answer the following research questions:

- R Q 1 :

-

How do OpenStack contributors engage in collaborative coding practices?

- R Q 2 :

-

What are the benefits of micro-collaboration?

- R Q 3 :

-

How can micro-collaboration be improved further?

Second, we perform a quantitative study on more than 900k commits and 600k Gerrit change sets of more than 1.8k OpenStack projects to validate the key findings of the qualitative study. Furthermore, we validate our qualitative and quantitative results with nine experts, all members of the Technical Committee at OpenStack. As a result, we provide the following main contributions:

-

1.

Ten qualitative findings describing the perceived practices, advantages and shortcomings of micro-collaboration practices at OpenStack;

-

2.

Empirical evidence validating five of these qualitative findings, notably we confirm that micro-collaboration correlates positively with:

-

(a)

onboarding and retention;

-

(b)

a lower likelihood of introducing bugs;

-

(c)

more and larger code reviews, patch sets and commits;

-

(a)

-

3.

We observe that the large majority of micro-collaborative changes are not explicitly marked as such using commit trailers.

The paper’s main message is that micro-collaboration matters in distributed development, hence it makes sense for open-source communities to promote and support this practice. Yet, one cannot only rely on the presence of co-author trailers in commit messages. Open-source communities could adopt the various heuristics used in our study to identify micro-collaborations hidden in Gerrit code reviews. A replication package containing all necessary details of the qualitative analysis of the interviews, as well as the data and scripts of our analyses is available (Foundjem et al. 2021a).Footnote 9

2 Background

The goal of this paper is to understand the phenomenon of micro-collaborative coding practices in open source software projects that are part of a larger software ecosystem. This requires a case study containing multiple interdependent project teams with many distinct contributors of diverse nature. Moreover, the community should be open to micro-collaborations, and have established mechanisms and tools to support it. In addition, the ecosystem should be sufficiently long-lived, and contain traceable and accurate data logs of its software development history. OpenStack satisfies all of these criteria, which is why it was selected for our case study.

2.1 About OpenStack

OpenStack is an open-source ecosystem for cloud computing that was jointly developed by NASA and Rackspace in 2010. It is available under the Apache 2.0 license, and follows a 6-month official release schedule, with releases ordered alphabetically starting with “Austin” in October 2010.Footnote 10 While this first release included only two initial projects (Nova and Cinder), OpenStack has been growing steadily over time, and currently comprises over 60 core projects.Footnote 11

As of March 12th, 2021, OpenStack’s code base of 20M lines of code involved code contributions by over 100k community members including volunteers and developers employed by over 710 companiesFootnote 12 (including large multinationals like Microsoft, Facebook, Huawei and Red Hat), spread across 187 countries. There is also a large base of companies and software ecosystems (SECOs) relying on OpenStack services, such as Apache Software Foundation, etc. OpenStack development involves over 2k projects/sub projects (Foundjem et al. 2021b), 63 of which are so-called core projects (Foundjem and Adams 2021). Zhang et al. (2021) and Zhang et al. (2020) have studied how a large-scale complex system, such as OpenStack, is developed by various companies collaborating with different OpenStack projects. They found eight models of collaboration among these companies and OpenStack that differs in their objectives and contribution performance. Despite their differences, these companies work together as an ecosystem with a common goal: to release OpenStack. The Open Infrastructure Foundation (OIF), formerly known as OpenStack Foundation, was founded in 2012 with the objectives to promote, empower and protect the OpenStack software and its community. The Foundation staff Members (FM) are the custodians of the OpenStack trademark, controlling the flow of budget and defining the goal of the Open Infrastructure Foundation. The Technical Committee (TC) members are responsible for all technical matters concerning the foundation, and they control all the upstream OpenStack projects (Zhou et al. 2020), such as the official core projects.

2.2 The OpenStack Development Workflow

The overall OpenStack collaborative development process is comparable to what one expects to find in large collaborative open source communities. The specific implementation of this workflow at OpenStack relies on a variety of tools, including: (i) a distributed version control system (git) that hosts the code base of all projects in its public repositories; (ii) a code review system (Gerrit) for patch sets and new features across all projects; (iii) a task and issue tracker (that has migrated from LaunchpadFootnote 13 to StoryboardFootnote 14); (iv) a continuous integration (CI) system (ZuulFootnote 15).

Figure 1 shows the common development workflow that OpenStack project contributors use. Each stage is numbered chronologically. In step  , as soon as a git account is set up, the contributor can select a desired upstream project and create a branch on his local environment to clone the project into. In step

, as soon as a git account is set up, the contributor can select a desired upstream project and create a branch on his local environment to clone the project into. In step  , the contributor should switch from the master branch to their local branch to make all necessary changes to the source code. Next

, the contributor should switch from the master branch to their local branch to make all necessary changes to the source code. Next  , the contributor needs to run unit tests against the changed code and commit it to a staging area. Then

, the contributor needs to run unit tests against the changed code and commit it to a staging area. Then  , the change set can be submitted to the Gerrit code review system (Mukadam et al. 2013; McIntosh et al. 2014a) and can be iteratively amended

, the change set can be submitted to the Gerrit code review system (Mukadam et al. 2013; McIntosh et al. 2014a) and can be iteratively amended  by code reviewers and the original contributor(s) through a collaborative process. When the code reviewers eventually approve the change set, the latest patch set is sent to the CI tool (Zuul) for automated testing and compilation

by code reviewers and the original contributor(s) through a collaborative process. When the code reviewers eventually approve the change set, the latest patch set is sent to the CI tool (Zuul) for automated testing and compilation  . If the CI tests are successful, the changes are merged into the upstream project

. If the CI tests are successful, the changes are merged into the upstream project  .

.

Gerrit code contribution process in OpenStack (adapted from ⋆). ⋆https://docs.opendev.org/opendev/infra-manual/latest/gettingstarted.html

Terminology-wise, a change set in Gerrit corresponds to a given feature or bug fix for which a contributor submits an initial patch set (Xia et al. 2015) for code review. The iterative code reviewing process leads to a series of subsequent patch sets, corresponding to updates or fixes to the initial patch set. This series of patch sets belongs to the same change set with a unique Change-Id identifier. These identifiers will be referred to as change identifiers henceforth. If the code changes within a patch set are co-authored, OpenStack recommends that the commit message can be used to indicate that multiple people have been working on a particular patch, using the git commit message trailer Co-Authored-By: < name > < email >.Footnote 16

2.3 OpenStack Contribution and Attribution Policy

People can contribute to the OpenStack communityFootnote 17 in many different ways and there are also different types of rewards.Footnote 18 Contributions can be technical,Footnote 19 social,Footnote 20 or administrative.Footnote 21 Technical contribution happens through an upstream project, for example by contributing to its documentation or code base, or by participating as a chair for any of the project’s technical conferences and summits. Social contribution can occur by engaging in the social life of the community, for example, by planning community events such as summits, project team gatherings, forums, etc. Contributions can also be of administrative nature, for example through participating in the OpenStack Ambassadors’ program or serving the foundation through the Technical Council (TC) or Project Team Leads (PTL). Besides, since OpenStack does not discriminateFootnote 22 among these types of contributions, but instead gives equal importance to contributors, every single contribution should count towards reward and recognition. This paper will only focus on micro-collaboration to the code base, thus, we will only investigate attribution and co-authorship in the code base.

3 Setup of Interviews with OpenStack Contributors

The research questions raised in the introduction aim to understand and document OpenStack’s practices for micro-collaboration. Are git commit message trailers used consistently? What other collaborative coding mechanisms are used frequently? What are the benefits of micro-collaboration? To address these and other related questions, we have conducted semi-structured interviews (Bird 2016) with OpenStack practitioners during the Open Infrastructure Summit (OIS) in Berlin, Germany from 13 to 15 November 2018.

3.1 Selection and Demographics of Participants

For the interviews, we required OpenStack practitioners to share their view on the potential added value that collaborative coding brings to the ecosystem. To gain different perspectives on the problem, we sought to obtain the opinion of Foundation staff Members (FM), as well as Active Technical Contributors (ATC) having contributed to at least one OpenStack project and having at least three years of experience within OpenStack.

In November 2018, we carried out an initial quantitative analysis on OpenStack’s git repositories to identify code collaborators in terms of git co-authored trailers. Based on this, we extracted a list of contributors having co-authored at least one patch set, and we sent customized emails to three senior contributors that the first author knew personally. For broader coverage and response we drafted a recruitment screener (Egelman et al. 2020) and sent it to the OpenStack developers’ mailing list as well through personal emails, inviting interested OpenStack practitioners to participate in the interview.

In total, we received 32 prospective participants for the interviews, of which 24 ATC and 8 FM (6 confirmed upfront through email confirmation, and 2 more were recruited on-site through snowball sampling (Salleh et al. 2018) upon suggestion by a senior FM). During the summit, as the interviews progressed, we noticed after the 16th participant that no additional new information was provided on top of what previous participants had said; we thus reached a point of saturation (Guest et al. 2006; Fusch and Ness 2015b), similar to how previous studies (Kim et al. 2016; Meyer et al. 2019) reached saturation at 16 and 10 interviews, respectively. In qualitative studies, saturation usually happens during data collection and analysis when no new theme emerges from any additional data collected, i.e., in our case from the interviewed participants. Therefore, we stopped the interview session and discarded the redundant interviews.

The demographics of the 16 retained respondents is shown in Table 1, with seven interviewees self-identifying as female and nine as male. The respondents were spread across different geographical regions. Only three of the respondents were contributing to OpenStack on a volunteer basis, while the other 13 were hired (9 were hired directly by companies participating in the OpenStack consortium, whereas 4 got hired through OpenStack events or summits). Respondents T1 to T11 were all active technical contributors (ATC) to project teams, while respondents B1 to B5 were additionally involved in a more organizational role at OpenStack, such as being an FM or TC member, project team leader (PTL), or member of the infrastructure (Infra) team. Their experience in OpenStack ranged from three to nine years, and they were involved in between 1 and 11 different projects.

3.2 Interview Recording and Transcription

All interviews were semi-structured and performed by the first author of this article during the 2018 OpenStack Summit in Berlin. The 16 recorded interviews lasted a median of 17 minutes. Before the interview, each respondent was asked to fill a consent form. Most of the questions were open-ended. The questionnaire is shown in Appendix ??. The interview guide was designed to include three main series of sections:

-

1.

general questions aimed to understand the background and demographics of each participant;

-

2.

questions targeted to respondents of type Bk (having an organizational role in OpenStack) to gain more insight in the mechanisms and modalities OpenStack governance has put in place to encourage the practice of collaborative coding; and

-

3.

questions targeted to respondents of either type Ti or Bk to capture the technical perspective of collaborative coding.

After the summit, the recorded interviews were distributed equally over the last three authors of this paper for transcription. The interviewer double-blinded the respondent names before providing the recordings to the transcribers. Each interview transcript was stored in a file labelled by a unique identifier associated to the respondent. Each transcriber received instructions to further anonymize the transcripts by hiding any personal data, in order to anonymize any privacy-sensitive information.

3.3 Interview Coding and Reviewing

Our qualitative methods consisted of: (1) a thematic analysis (Terzimehić et al. 2019; Lenberg et al. 2017; Cruzes and Dyba 2011) from the transcribed data to uncover themes such as the expected benefits, challenges and communication mechanisms at OpenStack; and (2) a content analysis (Arya et al. 2019; Himmelsbach et al. 2019) to understand the prevalence of emerged themes that practitioners discussed about during the interview. To uncover themes from the transcribed corpus, we started with a qualitative coding process. We identified how identical codes form categories of high-level themes. We kept track of the prevalence (i.e., popularity) of the emerged themes as they appear in each code category. Below, we provide more details.

After transcription of the 16 interviews, which form our data points at document-level, we started a two-phase process consisting of (1) coding the transcripts; and (2) reviewing the coding to reach mutual agreement. The coding phase started with an initial inductive coding (Bernard et al. 2016) conducted by one author who used two transcripts (corresponding to 10 − 15% of the total set of transcripts (DiStaso and Bortree 2012)). He assigned labels to the transcribed text, without any predetermined theory, structure or hypothesis. As the coding progressed, common themes started emerging, that were classified into code categories. The coding process continued until all transcribed text was coded. This process resulted in an initial codebook (Saldaña 2015).

Next, all authors were involved in a deductive coding process (Bernard et al. 2016) using this initial codebook as a guide. Each author independently coded the interview transcripts that were initially assigned to them. Whenever a new theme emerged during this coding, the authors would discuss, resolve and manually harmonize the resulting codebook as described below.

In the reviewing phase, each author was assigned for coding a different set of four transcripts that had already been coded in the first phase by another author. The purpose was to assess whether both coders reached a sufficient rate of agreement on the performed coding. For each code found in each transcript, value 1 was assigned if both coders agreed on the code category, and value 0 otherwise. Based on the categorical structure of the data, and the use of two raters, we used Cohen’s κ (Islam et al. 2018) to calculate inter-rater reliability (IRR) (Campbell et al. 2013). We used Landis’ agreement levels (Landis and Koch 1977) to interpret the IRR as poor, slight, fair, moderate, substantial or excellent.

To iteratively improve the IRR, we performed three rounds of negotiated agreement (Campbell et al. 2013). The first round of coding already yielded a substantial IRR of κ = 0.69. During the second round the raters negotiated the disagreements that were encountered during the first round using a combination of online and in-person discussion. After sorting out these differences the improved IRR became excellent, with κ = 0.81. In the third round, we rearranged and merged certain code categories that were considered contextually similar. This lead to a final coding structure that satisfied all raters, with a perfect coding agreement of κ = 1.

The resulting codebook, provided in our online replication package (Foundjem et al. 2021a), enables us to understand practitioners’ perspectives on collaborative coding in a complex ecosystem. Ten themes emerged from the transcribed text that depict the advantages and benefits of collaborative coding in OpenStack. Section 4 reports on these themes derived from the qualitative results together with their frequencies.

4 Qualitative Results of Interviews

T his section reports on the findings that we derived from analysing the interview transcripts. They provide qualitative evidence to answer the research questions outlined in Section 1. Numbered from F1 to F10, the findings are summarised in Table 2 and discussed in the following subsections.

4.1 R Q 1 How do OpenStack Contributors Engage in Collaborative Coding Practices?

We asked the interviewees about the collaborative coding mechanisms they were aware of, or had personal experience with, in OpenStack projects. All different mechanisms they mentioned are discussed below. Some of them correspond to macro-level collaboration, while others correspond to micro-level collaboration. In the remainder of this paper, we will narrow down our focus on micro-level collaboration between individuals.

(a) Co-Authored-By: trailers in git commits

The micro-collaborative coding mechanism that was mentioned by all 16 respondents consists of appending trailers of the form Co-Authored-By: < name > < email > to git commit messages to indicate the contributors that collaborated on a particular patch (see Section 2.2). While all respondents were aware of such commit message trailers, two of them (B1 and T5) did not participate in co-authoring activities within OpenStack, while three respondents (B1, B5, and T11) only experienced this technique outside of OpenStack.

Three respondents stated that they used such trailers when it was important to list all co-authors in a code commit that is a product of collaboration, and six participants said to systematically use the trailers during collaborative coding. Three other respondents said that they sometimes forget to add the trailers because they are not interested in the perks coming with the collaboration (e.g., credits to attend OpenStack events). Two respondents said they only use the trailers for non-trivial changes. When asked how frequently trailers were used across the OpenStack projects, only three respondents claimed that it was extremely common. The other 13 reported that the mechanism was used even though it was not a frequent practice.

(b) Gerrit code review collaboration

The second most mentioned technique for collaborative coding is through Gerrit code reviews, and could support either micro- or macro-collaboration.

Respondents mentioned the following two code review mechanisms that can be considered as micro-collaboration:

-

1.

Five respondents mentioned the practice of co-authoring by uploading a new patch set version of someone else’s patch set, under the same Gerrit change id. As such, one can download a patch under review, modify it, then re-upload a modified version that supersedes the previous patch version. This approach is easy to use and preserves the original co-authoring information. It also registers the information of the new author(s) in the final commit. Three of these respondents mentioned the use of Gerrit’s web interface for inline patch editingFootnote 23, allowing to quickly generate a new patch set for minor edits such as fixing typos.

-

2.

Four respondents considered the act of providing code review comments as a form of code collaboration, typically when they expect no credit for their contributions in the form of co-authorship. This is the most loose interpretation of micro-collaboration that we encountered, and we did not study this further.

In terms of macro-collaboration, four respondents discussed the practice of writing a separate change set dependent on someone else’s change set, such that the combination of both change sets achieves the intended functionality. The advantage of this approach is that both authors get full credits, since they each submit their own change set (and hence commits). The disadvantage is that it requires the code contributions to be cleanly decomposable, and that it introduces strong dependencies between patches. This approach is often used when the original contributor is no longer available or interested to work on the patch.

(c) git feature branches

Four respondents mentioned the macro-collaborative coding mechanism of feature branches in git. Development of certain complex features at OpenStack requires a large-scale, macro-level collaboration among cross-project teams. Such features usually take longer than expected to develop (otherwise the patch set approach mentioned previously could be used). As such, development takes place in a feature branchFootnote 24 with several iterations of commits. When development is completed, these features are later merged into the master branch. B3 explains: “You just set an official feature branch where you land those various patches and then once the branch is ready you can squash it as a single commit and merge it back to the main, with all the co-authors. So that would be: the lightweight … spontaneous … and the industrial way of doing it.”

While joint contributions could potentially involve many different communication channels, the responses highlighted that some of them were more common. Table 3 reports six different communication channels that are used for collaborating within OpenStack. IRC is the most common, followed by code review comments, communication through mailing lists and git commits. More traditional forms of private communication, such as personal emails and phone calls, appear to be less common or were simply not mentioned.

4.2 R Q 2 What are the Benefits of Micro-Collaboration?

OpenStack contributors perceive benefiting from micro-collaborative coding for a diverse set of reasons. Based on an analysis of the contents of the interview transcripts, Table 4 summarises in decreasing order of frequency the benefits that respondents perceive from micro-collaborative coding. The frequency of each benefit indicates how prevalent contributors perceive its value in collaborative coding. F3, F4, F5 and F6 are considered the most frequent benefits, totaling 75.4% of all responses. Each perceived benefit is discussed in detail below.

14 respondents consider co-authoring as a mechanism that both enables and exploits the benefits of collaboration and teamwork. For example, co-authoring was said to facilitate the planning and actual development of code contributions, to provide more confidence about the quality of a contribution, and to speed up development in areas with high complexity, even of larger contributions. T4 affirms that it is “nice to know that it wasn’t just one person thinking through the design of it and developing it but that it was multiple people collaboratively doing it”.

Co-authoring encourages diversity amongst contributing developers (in terms of thinking, skills, expertise, seniority, etc.) and provides a common platform for developers to share expertise; divide and conquer. By awarding credits for OpenStack events to all co-authors, co-authoring provides an additional incentive to make developers collaborate. Finally, T5 signaled more implicit cases of co-authoring, where a contributor is “… picking up things that have just been abandoned or left or people don’t have time for …”

All respondents emphasized the importance and benefits of collaboration and mentoring for onboarding newcomers. For example, B3 reported that collaboration makes it easier for new contributors to submit their first patch: “You notice that they might be too shy to author completely a patch, but if they can be counted as a co-author, or if they wrote the design of it or the documentation of it that does not really show in the code, then you can credit them for their work. So, I feel like it is a positive system.”

Eight respondents reported that they started co-authoring as junior developers, whereas five claimed they were already experienced. Only four respondents mostly did co-authoring with less experienced people (typically as mentor), while the other twelve respondents typically performed co-authoring while being mentored by more experienced contributors. Hence, new contributors as well as more experienced ones seem to benefit from learning from more senior contributors, allowing a better understanding of the design and gaining deeper technical details of projects.

While one respondent claimed that the person who is doing most of the work is recorded as lead author, fourteen other respondents instead claimed that the lead author would just be the person doing the final submission of the patch.

Eight respondents mentioned both the social and technical aspects that can influence contributor’s retention/abandoning/turnover. Four respondents stated that the TC is doing its utmost to improve OpenStack’s work culture, besides just being aware of unpleasant experiences that contributors have had in the past. For example, B3 said “we try to keep it fun and engaging, … make sure that people are happy contributing to OpenStack and that they want to contribute to OpenStack.” OpenStack has also put in place an onboarding program to attract new contributors and keep them engaged (Foundjem et al. 2021b). Moreover, T9 mentioned the benefits on feedback during collaboration: “… like the onboarding sessions that we do here attempt to get you familiar with the specifics of a project but the toolset in general. But I think that’s also a good way for feedback in regard to the retention; for feedback where things might not be working well. That’s how we find out.” Collaboration creates an atmosphere for contributors to share immediate feedback on their work progress, since collaborators are accountable to one another. This allows to address obstacles timely before they may lead to abandonment.

Eight respondents indicated that collaboration enhances learning and understanding of the code base, since it (i) encourages junior developers to learn the development process faster; (ii) helps to better understand other people’s context, component, expertise and problems; and (iii) facilitates better comprehension of the scope and complexity of the overall project.

Seven respondents stated that collaborative coding fosters higher-quality contributions, for example in terms of (i) coding style and clarity and (ii) code correctness. Since every contributor brings in her own specific expertise, the resulting contribution becomes more than the sum of its parts. To sustain this claim on collaborative coding, B1 asserts that “… to ensure that the code has the style that matches the rest of the library code for that particular project and that it is readable and simple and accurate, correct. So, all the usual reasons that you do code review.” In addition, B2 advocates “… When more than one person contributes, it helps the quality of the code. ”

Four respondents highlighted that collaboration increases productivity, since there is no more need to wait for other people to fix something. Instead, one can just collaborate to make things move quicker. At the same time, the fact that a group of people is working together on a contribution allows to bring larger changes faster, while everyone only needs to perform a part of the effort (less work for everyone involved). This was highlighted by B6: “… most projects do encourage that, it’s a good way that people can work together and come up with changes … than one person or they are able to get more work done than one person can do by themselves.”

Two respondents provided another perspective on productivity improvement, namely that co-authoring stimulates developers to not just reject other people’s contributions (with a high chance the rejected contributions would never make it), but instead encourages them to collaborate themselves on the broken patches. As such, a small fix could still allow a broken patch to go in, rather than the project losing out on it or stalling while waiting for improvements by the patch author. As such, this mechanism leads to an improvement of the code review culture. Related to this are the aforementioned implicit collaborations, where contributors can effectively pick up an abandoned patch or a patch people do not have time for anymore (with their permission, if needed).

Five respondents declared that co-authoring brings about individual recognition. It allows acknowledging everyone involved in a joint contribution, so that even the smallest contribution gives visibility to its contributors. Six respondents uphold that one major way of thanking joint contributors is by providing credits to them for each contribution. Such credits enable contributors to obtain a rebate on the registration cost for OpenStack events, such as a project team gathering or summit. In addition, credits allow contributors to vote or stand in elections.

Visibility and recognition also implies accountability, since in the case of a bug or other issues the responsible can be tracked easily. This is an important aspect of joint contribution, yet not everyone follows these guidelines consistently. Especially the more experienced developers would forego being recorded as a collaborator, since they already have enough visibility in the community or do not need the extra credits (they have direct access to all OpenStack events). By doing so, the accountability aspect is lost.

Furthermore, two respondents mention that the recognition provided by joint contributions positively reflects on the public image of the contributor’s company. This is why many companies actively track their employees in order to encourage such contributions. T1 highlights that it makes “… certain sense for some companies that employ contributors that having a name attached to a patch is important because it gives that company recognition in addition to the individual contributor.”

Related to F6, the provisions for accountability also provide more confidence in the quality of joint collaborations, since, in the event that one of the developers of a project leaves, the other collaborators still share a sizeable amount of knowledge and expertise about the contributed code. Hence, the organization can continue to work without being impacted.

T5 emphasises accountability during collaborative coding: “Usually most people try adding co-authored-by … so at least they have tracked accountability. And very rarely do we run in cases where people actually completely pull a patch down, recommit it without.” T3 claims collaborative coding is important to credit or encourage collaboration: “It gives credit or credit is due if many people are involved in an idea then they should all get credit for the idea.”

Accountability of co-authorship can also be important because of legal obligations. This was highlighted by respondent T3: “… giving credit is important for legal reasons … someone created some code but if there is no attribution then that causes legal problems.”

Five respondents stressed that joint contributions enable a project to deal with complex and inter-dependent multi-person patches. These are patches that cannot be decomposed into separate parts, but need to be submitted as one. Similarly, co-authoring can help to break down complex tasks for contributors with different expertise. For example, B5 reported that “The reasons in which I contributed was mostly on complex task as we applied the divide and conquer technique.”

4.3 R Q 3 How can Micro-Collaboration be Improved Further?

When respondents were asked about whether they were satisfied with how OpenStack supports collaboration and co-authoring, 15 respondents said they were generally happy, and 11 could not think of any specific drawback. When asked more specifically about things that could be improved in the processes or tools for co-authoring, responses were more diverse, as shown in Table 5. Automation, tracking and promotion account for 71% of the total demands.

The respondents raised various concerns regarding the process and tools used for co-authoring commits, and suggested mechanisms that could improve joint collaboration. For example, as Table 5 shows, ten respondents mentioned the importance of more automated tools involved in co-authored commits, for example to automatically insert trailers. Seven respondents asked for better ways for tracking (co-)authorship, since for example the Gerrit-based workflows require manual effort to look up all versions (sets) of a patch to identify all co-authors, and contributions other than source code are not tracked that well. Moreover, automating the tools should also facilitate the process of adding co-authors, standardize the information provided in git commit messages, and speed up collaborations.

Five respondents stated that the practice of co-authoring commits should be promoted more actively, to create more awareness and hence achieve more collaboration. In addition, three respondents said that the usage of a Co-Authored-By: commit trailer should be enforced when co-authoring commits. This might partly be due to lack of clear documentation of the joint collaboration process (two respondents).

Two respondents emphasized the need for better communication means during collaborative coding activities. Finally, respondent T5 advocated that collaborative coding could result in licensing violations (Mlouki et al. 2016), since more people are involved and might (accidentally) bring in source code protected by different licenses: “… There is a lot of licensing problems that might occur … You have to be generally careful. If it is already in the repo than naturally it is licensed, and they should be honoring our licenses.”

5 Setup of Quantitative Study

This section explains the setup of our quantitative study on OpenStack aiming to find statistical support for the key qualitative findings of RQ1 and RQ2 that were highlighted in gray in Table 2. We will empirically evaluate these qualitative findings on the basis of the quantitative study outlined in this section.

5.1 Extracting Micro-Collaborations

The qualitative analysis of Section 4 revealed two important sources of micro-collaboration, namely, co-authorship trailers in git commit messages, and patch sets for Gerrit change sets to which multiple individuals have contributed. This subsection presents the datasets used in our quantitative analysis.

\(\mathcal {C}_{init}\) = initial dataset of git commits

OpenDev is the integrated collaborative open source platform hosting the OpenStack code base. On the 22nd of July 2020 we cloned all 2,219 OpenDev git repositories with a total of 1,870,705 commits, in order to identify those contributors that are most involved in co-authorship activities. To gather a history of exactly nine years of OpenStack activity, we removed any activity before the 1st of July 2011 (66,015 commits) and after the 30th of June 2020 (5,503 commits). We selected July 2011 as the start for our commit data set to better align with the Gerrit dataset (described below), since Gerrit was introduced in the OpenStack workflow in July 2011Footnote 25.

We ignored 343 repositories and their 97,630 commits that corresponded to retired projectsFootnote 26, as indicated by their latest commit’s message. This left us with 1,701,557 git commits for 1,869 OpenStack projects, covering all branches including feature branches. From these, we removed another 210,462 commits corresponding to the activity of the openstack/openstack repository as it does not contain actual content, rather pointers to other repositories. As such, the initial commit dataset \(\mathcal {C}_{init}\) consists of 1,491,095 git commits in 1,868 repositories.

To determine co-authorship in this commit dataset, we proceeded as follows. For each git commit we extracted its author and committer, as well as any contribution that could be retrieved through commit message trailers signaling co-authorship. Officially, co-authored commits should use the Co-Authored-By: commit message trailer (see Section 2.2). However, we manually observed and confirmed the presence of many variations of this trailer in the OpenStack git repositories, which we also included as valid signals of co-authorship. More specifically, after conversion to lowercase we found and considered at least one instance of each of the following alternative trailers: author:, co-author:, authored-by:, co-authored:, co-author-by:, co-authored-with:, author attribution:, also-authored-by: and co-authored-by:.

\(\mathcal {P}_{init}\) = initial dataset of Gerrit change sets

The second data source of micro-collaborations in OpenStack is based on the Gerrit code review system. Micro-collaboration in Gerrit is more subtle than in git since, to signal co-authorship, we had to consider all change set instances where at least one individual other than the initial patch set submitter, modified and uploaded a patch set.

We gathered all change sets through OpenDev’s Gerrit REST APIFootnote 27. For each change set we extracted all comments, all patch sets and their comments and files touched. The extracted data contains relevant information about which contributor performed which action, such as who commented on a change set or patch set, and who authored and submitted the patch set. On the 22nd of July 2020, we fetched the change sets of all 2,219 git repositories of OpenDev with a total of 733,465 change sets. Similar to the git commit dataset \(\mathcal {C}\) we considered a 9-year observation period from the start of July 2011 (the introduction of Gerrit in OpenStack) to the end of June 2020 and removed change sets of retired projects and the openstack/openstack repository.

The initial review dataset \(\mathcal {P}_{init}\) consists of 675,159 Gerrit change sets and 2,098,269 patch sets in 1,818 projects. The new, merged and abandoned change sets correspond to 13,890, 538,381 and 122,888 change sets, respectively.

5.2 Data Cleaning

The initial datasets \(\mathcal {C}_{init}\) and \(\mathcal {P}_{init}\) presented in Section 5.1 need to be cleaned in order to merge developer identities and remove bots.

Merging identities

To avoid false positives of collaborative coding and report only true collaborative coding instances, we applied developer identity merging (Goeminne and Mens 2013) on the datasets \(\mathcal {C}_{init}\) and \(\mathcal {P}_{init}\). \(\mathcal {C}_{init}\) contains 20,312 distinct identities, composed of a name and email address, corresponding to authors, committers and persons mentioned in the commit message trailers. \(\mathcal {P}\) contains 18,392 distinct identities that typically contain a name, email and username informationFootnote 28; 5,089 of the extracted identities were missing the username.

Prior to merging identities, we manually analysed the combined dataset \(\mathcal {C}_{init}\) ∪\(\mathcal {P}_{init}\) to recover generic names and emails that are not associated with identities (e.g., root, etc, Your Name, Author Name, root@localhost.localdomain, none@none); we recovered 20 such labels in total. The list of terms was enhanced with an initial invalid name list based on the suggestions of Goeminne and Mens (2013). These names and emails where excluded when comparing terms during identity merging.

Then, the first author acquired a partial ground-truth dataset containing the list of active contributor accounts, with names and username(s) of each contributor mapped to all their email addresses, as recorded in OpenStack’s internal database. This partial dataset did not contain inactive or deleted accounts and was used as the first step to merge identities.

After this merging step, identity names, emails and usernames in the \(\mathcal {P}_{init}\) were normalized following the approach of Bird et al. (2006) and identities with at least two common tokens were manually inspected to decide whether they needed to be merged. The manual inspection was based on three criteria: (1) name/email/username labels, (2) activity statistics of each identity; and (3) recovery of OpenStack webpages for each identity to verify if the person corresponds to the identities. In case one criterion was not sufficient, then the following one was used to make a decision. For example, the identities Tom Mens <tom.mens@domain.com> and Mens Tom <tom@mens.com> do not share the same email address, but the normalized terms (Tom, Mens) in both identities indicate that they should be merged based on the first criterion. This identity merging process resulted in a final set of 17,195 merged identities in \(\mathcal {C}_{init}\) ∪\(\mathcal {P}_{init}\).

Removing bots

The second cleaning step consisted of excluding bot activity. Bots are tools performing automated processes and their accounts are disguised as real identities. In the context of identifying collaborative coding of OpenStack contributors, such automated bots should not be considered as collaborators and thus be excluded from our analysis.

To identify bots, we relied on an official OpenStack list of Gerrit accounts corresponding to botsFootnote 29. We enhanced this list in three ways. Firstly, we identified OpenStack system namesFootnote 30 and manually verified identities that contain terms stemming from the system names. Secondly, we manually identified Gerrit identities having the same name as the official bots used by continuous integration tools; this was achieved using variations of the search term “CI” in their name or username. In addition, the most active Gerrit accounts for different activity types (change set ownership, change set or patch set comments, authored or uploaded patch sets, patch set approvals, reviewing) were manually inspected to recover very active bots that might have been missed by the first two bot identification steps. Using the above process, 322 distinct bot accounts were detected in \(\mathcal {C}_{init}\) ∪\(\mathcal {P}_{init}\).

Throughout the process, we discovered 14 mixed profiles of combined human and bot activity (Golzadeh et al. 2021; Cassee et al. 2021). For example, the fictitious merged identity of John Doe <john.doe@domain.com> and John Doe CI <john.doe@domain.com> would be marked as a mixed profile, as the first identity is marked as human while the second is marked as bot.

All activity corresponding to bots and all bot-specific activity of mixed profiles were excluded, thereby effectively removing 572,544 commits from \(\mathcal {C}_{init}\), and 43,713 change sets from \(\mathcal {P}_{init}\). Moreover, 218 change sets are removed as all their patch sets were authored or uploaded by bots. This resulted in cleaned datasets \(\mathcal {C}_{clean}\) of 918,551 commits in 1,832 projects, and \(\mathcal {P}_{clean}\) containing 631,223 change sets in 1,817 projects.

5.3 Dataset Alignment

The cleaned datasets \(\mathcal {C}_{clean}\) and \(\mathcal {P}_{clean}\) still need to be aligned. We need to carry out project alignment to ensure that we only consider projects that exist in both datasets. Otherwise, we might overestimate the collaboration in one of the datasets if there are highly collaborative projects that are only present in that dataset. Second, in order to compare both datasets we need to carry out commit alignment by mapping git commits to the corresponding Gerrit change sets.

Project alignment

There are 1,804 projects in common between the commit dataset \(\mathcal {C}_{clean}\) (corresponding to 1,832 projects) and the review dataset \(\mathcal {P}_{clean}\) (corresponding to 1,817 projects). Restricting ourselves to these 1,804 projects, we obtain a final commit dataset \(\mathcal {C}\) with 917,970 git commits and a final review dataset \(\mathcal {P}\) with 631,201 Gerrit change sets. These final datasets will be used for the quantitative analyses of Section 6.

Commit alignment

To align the individual commits in \(\mathcal {C}\) with the merged code reviews in \(\mathcal {P}\), we cannot simply rely on commit hash values, since commits can be rebased or squashed (Kalliamvakou et al. 2015). As a first heuristic, we use the change identifiers (change-id trailer in the commit message) that persist when squashing, according to the OpenStack documentationFootnote 31. This heuristic allowed us to align 474,156 git commits and 490,860 Gerrit change sets corresponding to 442,509 change identifiers.

As a second heuristic, we use commit hash values to match commits in the case they have not been squashed. The heuristic aligned 102 commits and change sets that had identical commit hash values, corresponding to 108 change identifiers. This low number is due to the large number of alignments already achieved by the first heuristic. Unfortunately, the second heuristic is not robust due to rebasing and cherry-picking, as in these cases the hash value changes and there is no traceability.

Thus, a third heuristic matches the author and commit messages to align commits as these fields proved to be the most reliable to align commits in the presence of rebasing, according to German et al. (2016). This heuristic allowed us to align 22 commits and 19 change sets, corresponding to 24 change identifiers. In total, 442,637 change identifiers are aligned, corresponding to 474,280 git commits and 490,981 Gerrit change sets. These aligned commits and change sets are only used in our analyses when directly comparing the collaborative or individual activity between the two platforms and are annotated as \(\mathcal {C}\) ∩ \(\mathcal {P}\) in the remainder of the paper.

5.4 Analysing Bug-Introducing Commits

One aspect of code quality related to (F6) is the probability of introducing bug-inducing changes (BIC) when doing micro-collaboration vs. individual coding activities. Bug-inducing commits contain changes to files that introduced new bugs but unfortunately slipped through code review and were only noticed and reported later on (Fan et al. 2019; Neto et al. 2019; Borg et al. 2019). Existing work on bug-inducing changes has focused especially on predicting whether a given change introduces a bug (Śliwerski et al. 2005; An et al. 2018), as well as on heuristics to trace back from a bug-fixing change to the change that introduced the original bug (in particular the SZZ family of algorithms (Borg et al. 2019; Rodriguez et al. 2018)). We follow a 3-step methodology to mine BIC data:

5.4.1 Step 1 — Linking Bug ID to Bug Fixing Commit ID

Over the studied period, OpenStack has used both the Launchpad and Storyboard issue trackers. We mined the bug metadata (e.g., ID, date, time, and time zone of bug submission) from both tracking systems, focusing only on “confirmed” issues since those are the categories of issues for which bug fixes are being proposed. This resulted in a list of 1,194,026 issues.

We extracted and parsed all the commit messages from the commit dataset \(\mathcal {C}\) to identify bug-fix related commits. We used regular expressions to scan the commit messages for the typical trailers used by developers to communicate the bug ID being fixed (Śliwerski et al. 2005; Borg et al. 2019; Wen et al. 2019):

-

1.

[A − za − z] ∗ [∖−][Bb][Uu][Gg][:][∖s]?[#]?[∖d]{6,7}

-

2.

[Ff][Ii][Xx]?[A − Za − z]∗? :?[∖s]?[#]?[0 − 9]{6,7}

-

3.

[A − za − z] ∗ [Ll][Pp]?[:]?[∖s]?[#][0 − 9]{6,7}

The regular expressions enabled us to extract bug IDs from the commit messages. We compared the bug IDs from their corresponding issue report on LaunchPad or Storyboard to the extracted bug IDs in the commit messages. If a match was found, we linked the bug ID to the commit ID (SHA-1) of the bug fixing commits (about 1.2M in total). In doing so, we found and removed 231,726 duplicate bug IDs, leaving us with a total of 917,970 unique bug IDs linked to their corresponding bug fixing commits IDs.

5.4.2 Step 2 — Identifying BICs

To identify the BICs for the obtained bug fixing commits, we used the SZZ implementation provided by PyDriller (Spadini et al. 2018). On each bug fix commit, this SZZ variant performs git blame to identify the last commits that touched the lines fixed by the bug fix. Those commits form the initial set of candidate BICs, since they were the last ones to touch the fixed lines. However, since the actual BICs have already been made before the bugs were reported (otherwise, the bug would not exist yet), PyDriller uses the bug report date to remove the commits made after the fixed bugs were reported. If, after filtering, more than one commit remains, the algorithm considers all of them as BICs. We ran the algorithm on 917,970 commits, yielding 315,690 BICs.

5.4.3 Step 3 — Analyzing BICs

Based on the identified BICs, we aim to find out if micro-collaborative coding correlates to software quality. To do so, we use a χ2-test of independence (McHugh 2013; Satorra and Bentler 2001) with confidence level α = 0.001. This test measures how far the observed counts of a variable are from the expected counts if the null hypothesis is true. The further, the more evidence the data presents against the null hypothesis. In our case, the null hypothesis states that there is no difference in the proportion of BICs between individual and micro-collaborative coding. To report the observed and expected counts, we first count the number of observations (i.e., observed counts) for two events of interest: event A for the response variable (BIC or non-BIC), and event B for the explanatory variable (collaborative or individual coding). Next, for each of the four cases, we compute \(\text {Expected Count} = \frac {\text {Total for A} \times \text {Total for B}}{\text {Table Total}}\). This allows to calculate the χ2 statistic.

5.5 Validation of Qualitative Findings Through Multivariate Analysis

In RQ2, improvement of software quality (F6) and productivity (F7) were reported as two of the benefits of micro-collaboration. To quantitatively validate these benefits, we use both statistical tests (for F6 involving BIC) and multivariate statistical analysis on a large number of observed variables that are potentially related to quality and productivity improvement. Inspired by Siegmund et al. (2014) we carry out exploratory factor analysis (EFA) and confirmatory factor analysis (CFA) (Treiblmaier and Filzmoser 2010) with structural path analysis to analyze multivariate structures in quality and productivity improvement.

These analyses were implemented in Python using Factor AnalyzerFootnote 32 and semopy (Meshcheryakov et al. 2021). Full details are available in our replication package (Foundjem et al. 2021a).

Structural Equation Model (SEM) is a set of statistical models rooted on the assumptions of two requirements (1) the concept under consideration must be a complex multivariate relationship, and (2) the data to understand these relationships should be interval-scaled or continuous data (Gopal et al. 2005), even though Johnson and Creech (1983) argue that categorical data with more than five categories can still be considered for SEM models (Johnson and Creech 1983). Our observed variables contain a mixture of categorical and continuous data.

In order to study the relation of micro-collaboration with the quality and productivity of code contributions, we started off with a catalogue of metrics known from related work to be related to quality and/or productivity (Forsgren et al. 2021; Foundjem et al. 2021b; Izquierdo-Cortazar et al. 2017; Kononenko et al. 2016; Kovalenko and Bacchelli 2018; McIntosh et al. 2014b; 2016; Oliveira et al. 2020; Rigby et al. 2012). We then filtered out all metrics not fit for SEM models, either (1) because they were not categorical with more than five categories (Johnson and Creech 1983), not interval-scaled or not continuous (Gopal et al. 2005), (2) because more than 90% of their values were either zero or absent, or (3) because the metrics were correlated. This led us to the 17 observed variables (metrics) that are listed in Table 6. The quality metrics are measured per change set, similar to all productivity metrics, except for those marked as “po”. The latter metrics measure the productivity of the contributors involved in the change sets. Furthermore, the variables marked as “b” are used both for the quality and change-set level productivity analysis. Note that collabso is a contributor-level version of collabs, hence has a different definition. A separate model will be built for change set- and contributor-level productivity.

5.5.1 Exploratory Factor Analysis (EFA)

CFA (and SEM) assume the presence of factors, i.e., latent variables that aggregate semantically related observed variables. One can either hypothesize such factors a priori, or use Exploratory Factor Analysis (EFA) to identify factors semi-automatically. EFA basically does this by reducing the dimensionality of the observed variables such that the identified factors are explained by the principal components of the observations. Similar to Siegmund et al. (2014), we use EFA to semi-automatically extract the factors, together with the factor loadings, i.e., the degree to which each observed variable is associated with each latent variable. We do a separate EFA on the observed variables related to software quality, those related to change set productivity, and those related to contributor productivity.

Before proceeding with EFA, one needs to ascertain that the basic assumptions for factor analysis are met. To do so, we used Bartlett’s test of sphericity and the Kaiser-Meyer-Olkin (KMO) tests (Tong et al. 2012) to examine the strength between the measured variables, and how factors explain each otherFootnote 34. Bartlett’s test validates the null hypothesis that the correlation matrix of the data is identical to its identity matrix I. If this is the case, then the observed variables are unrelated and the data is therefore unsuitable for EFA (in other words, one should reject this test to continue EFA). KMO tests to what extent each observed variable could be perfectly predicted by other observed variables in the dataset.

The KMO tests revealed an average fit of the EFA model for quality (KMO = 0.75) as well as for productivity measured at change-set level (KMO = 0.73). Bartlett’s tests were significant (p < 0.0001) with χ2 = 4.2 × 10− 6 for quality and χ2 = 1.6 × 10− 6 for productivity at change-set level. However, none of the EFA tests were successful for productivity measured at contributor level (i.e., for the po variables in Table 6). Therefore, we could not carry out a multivariate analysis at that level, and instead we only conducted a univariate statistical analysis for them.

We then proceeded with EFA on the quality metrics and on the change-set level variables to determine the latent variables and their loadings, resulting in the loadings reported in Tables 7 and 8. To determine the number of factors, we used the eigenvalue rule (Treiblmaier and Filzmoser 2010). We computed the eigenvalues of the correlation matrix for both quality and (change-set level) productivity, and chose only the factors with eigenvalue ≥ 1. We obtained three latent variables for quality and two for productivity. To determine which observed variables contributed to these latent variables, we considered the absolute value of the loadings to be relevant when ≥ 0.32 (Treiblmaier and Filzmoser 2010).

The observed variables highlighted in bold in Tables 7 and 8 contribute to the corresponding latent variable. The higher the loading, the more the observed value contributes to the latent variable. We then manually assigned a label to each latent variable on the basis of the observed variables it is composed of. For example, factor automationEffort in Table 7 captures information from variables gating, status, workFlow and coreReviewers, most of which relate to the automation effort in the CI process. Note that some of the observed variables contribute to multiple latent variables.

5.5.2 Confirmatory Factor Analysis (CFA) and Structural Equation Modeling (SEM)

Based on our EFA measurement model, we want to confirm and analyze the relationships among the latent variables. This requires defining a CFA model and structural regression model (SEM) (Klem 2000; Bagozzi and Yi 2012; Igolkina and Meshcheryakov 2020) in terms of the latent variables, then performing a SEM analysis on these models to determine the relationship(s) between latent variables. A typical SEM model can enable researchers to uncover multiple regression causal relationships in a single analysis among latent variables. The left-hand side of both equations are the endogenous (i.e., dependent) variables and the right-hand sides are the exogenous (i.e., independent) variables. Therefore, endogenous variables are explained by exogenous variables.

In particular, we define multivariate regressions for quality (F6) and productivity (F7) in Eqs. 1 and 2 below:

This allows us to see how reviewQuality is explained by automationEffort and socialInteraction (1), and how reviewProductivity is explained by socialInteraction (2). These relations will enable us to validate to what extent the act of micro-collaboration impacts both reviewQuality and reviewProductivity while controlling for the other factors.

We first have to evaluate our models to either accept or reject them based on their fit. We considered the indicators proposed in the literature (Kang and Ahn 2021; Ghaiumy Anaraky et al. 2021; Bagozzi and Yi 2012; Kang and Ahn 2021; Gopal et al. 2005; Siegmund et al. 2014; Beran and Violato 2010). Cronbach’s α (Vallat 2018) explains how closely related tested items are in a group (Fan et al. 2019), which enables us to establish reliability and validity of the three factors for software quality (socialInteraction, automationEffort and reviewQuality), and the two factors for productivity at change-set level (reviewProductivity and socialInteraction). The reliability of our measurement ensures its consistency over repeated trials, and the validity indicates to what extent the individual observations rightly measure what they are supposed to measure. To accept the reliability of a model a value of α ≥ 0.7 is expected. We obtained α = 0.77 for the quality model and α = 0.74 for the productivity model, implying that both models passed this test. The SEM model fit index (Siegmund et al. 2014) tells us how well the model fits the data. For both measurements of quality and productivity our models shows good and acceptable fits (N = 608,261).

While our models did not pass the SEM χ2 index criterion (ρ < threshold of 0.05), this criterion is known to be sensitive and to easily reject models with large sample sizes and minor deviations, and is failed by most of the prior work. Instead, our models did pass more robust criteria such as Root Mean Square Error (Bagozzi and Yi 2012; Ghaiumy Anaraky et al. 2021) (0.07 ≤ 0.08), Normed-Fit Index (0.98 > 0.95), Tucker-Lewis Index (0.97 > 0.95), Comparative-Fit Index (0.98 > 0.90), and Adjusted Goodness-of-Fit Index (0.97 > 0.90). Our models were accepted based on these goodness of fit indexes and show strong support for both quality and change set-level productivity.

6 Quantitative Results

This section presents the quantitative empirical analyses that we conducted, using various kinds of statistical testing, to confirm or refute the main findings of the qualitative analysis of the interviews in Section 4. We would like to stress that, apart from the two SEM analyses (Beran and Violato 2010; Bagozzi and Yi 2012), our quantitative analysis methods can only infer correlation, and therefore do not claim any causal relationship between any of the micro-collaboration related factors studied in this section.

6.1 How do OpenStack Contributors Engage in Micro-Collaborative Coding Practices? (R Q 1)

F1 reported qualitative evidence that OpenStack contributors use two main micro-collaboration mechanisms: git commit message trailers and Gerrit change sets. Since the majority of respondents indicated that the practice of git commit trailers was infrequent, this subsection studies the prevalence of these two micro-collaboration mechanisms.

Out of all 917,970 commits in the commit dataset \(\mathcal {C}\) we only found 15,801 commits (i.e., 1.72%) with git commit trailers. On the other hand, in the 497,442 merged change sets belonging to the \(\mathcal {P}\) dataset of 631,201 change set, we found a much higher proportion of 55,794 change sets (11.2%) that can be regarded as review-based collaboration. In terms of individual patch sets (i.e., patch versions), we found that 425,736 out of the studied 1,600,019 patch sets of the considered change sets appear in collaborative change sets, indicating a 26.61% collaboration in terms of contributions to patch sets. In contrast to the trailer-based mechanism, the Gerrit-based micro-collaboration is not explicitly tagged, hence is hard to identify manually or retrace.

Figure 2 presents a violin plot of the distribution of the number of collaborators in each individual git commit and change set. Most collaborations involve two distinct contributors, accounting for over 75% of all collaboration instances in either platform. We found an outlier with as much as 49 co-authors in git commits; they were all contributors to the neutron project, performing a divide-and-conquer technique to jointly implement seven sub-featuresFootnote 35, to support some third-party APIs. The outlier with 13 collaborators in Gerrit change sets happened in the DevStack project while working on major changesFootnote 36 that aimed to make DevStack more user-friendly to install, test and use.

Table 9 presents the top ten of most collaborative projects at OpenStack. They correspond to cross-project teamsFootnote 37 that, combined together, account for 35% of the total collaborative activity. Cross-project teams such as Nova, Neutron, Cinder, Keystone and Horizon develop common features that other OpenStack projects consume. They typically manage OpenStack resources such as configuration and deployment services (Openstack-Ansible and Kolla). Despite the 35% magnitude of collaborative coding within these ten cross-project teams, five of them represent projects with the most commits (Zhang et al. 2020). In general, as observed by (Zhang et al. 2020), collaborative coding doesn’t happen frequently during OpenStack development cycles, but is used sparingly during complex features that involve multiple teams and contributors.

As micro-collaboration in OpenStack is observed in both git and Gerrit, we also investigate the overlap in micro-collaboration between both platforms. In other words, how many instances of collaboration are indicated both by git commit trailers and multiple Gerrit patch sets, and how many collaboration instances are unique to either method?

For this analysis, the dataset C ∩ P of Section 5.3 is used to compare the two platforms in an unbiased way. Out of the 442,637 aligned change identifiers, 56,202 are collaborative either on git or Gerrit and will be used for the subsequent analysis.

Table 10 presents the number of collaborative change identifiers on git or Gerrit. Our results indicate that 5,980 of these collaborative change identifiers exist in both \(\mathcal {C}\) and \(\mathcal {P}\), representing respectively 64.76% and 11.29% of retrieved micro-collaborations in each platform. This overlap indicates cases where the implicit micro-collaboration observed in Gerrit was made explicit through a commit message trailer. The remaining collaborative change identifiers, respectively 3,254 in \(\mathcal {C}\) and 46,986 in \(\mathcal {P}\), could only be found in one of both datasets. Especially the latter cases represent a risk in terms of micro-collaboration visibility. Overall, within \(\mathcal {C}\) ∪ \(\mathcal {P}\), commits alone capture 16.43% of all collaboration activity, while change sets capture as much as 94.21%.

Figure 3 shows the evolution during 07/2011– 06/2020 of the frequency of micro-collaborative change identifiers in \(\mathcal {C}\), \(\mathcal {P}\), \(\mathcal {C}\) ∪ \(\mathcal {P}\) and \(\mathcal {C}\) ∩ \(\mathcal {P}\). Figure 3(a) reveals that the amount of micro-collaboration increased significantly for \(\mathcal {P}\) and \(\mathcal {C}\) ∪ \(\mathcal {P}\), after which it decreases again. Meanwhile, \(\mathcal {C}\) and \(\mathcal {C}\) ∩ \(\mathcal {P}\) increased slowly until 06/2015, followed by a gradual decrease onwards. The drop of micro-collaboration in \(\mathcal {C}\) and \(\mathcal {C}\) ∩ \(\mathcal {P}\) seems to coincide with an overall decrease in the number of OpenStack change identifiers, since Fig. 3(b) reveals that, proportionally speaking, from 07/2015 onward \(\mathcal {P}\) continuously increases but \(\mathcal {C}\) and \(\mathcal {C}\) ∩ \(\mathcal {P}\) continuously decrease albeit at a much lower rate. Interestingly, the proportion of micro-collaboration change identifiers dropped sharply during 07/2011–06/2012 (negative slope of \(\mathcal {P}\) and \(\mathcal {C}\) ∪ \(\mathcal {P}\) ), which is likely due to the explosive growth of development activity in OpenStack in that period (not shown in Fig. 3, given the exponential growth in OpenStack development, with a yearly increase in the number of change identifiers ranging from 37% to 135% until 2019, the stable micro-collaboration ratio indicates that collaboration actually happens frequently): 6,622 change identifiers were made during 07/2011–06/2012 compared to the 17,720 change identifiers during 07/2012–06/2013.

6.2 R Q 2 What are the Benefits of Micro-Collaboration?

This section quantitatively validates the qualitative findings of perceived benefits highlighted in gray in Table 2: F4 Collaboration improves onboarding; F6Collaboration improves software quality; F7 Collaboration improves productivity; and F8 Collaboration enables recognition and accountability.

6.2.1 F4 Collaboration Improves Onboarding

A recent study by Foundjem et al. (2021b) reveals that ecosystem-level onboarding at OpenStack correlates with higher retention rate, productivity and quality. Since that study indicated that micro-collaboration enables mentor-mentee interaction, here we focus on studying to what extent micro-collaboration correlates with the retention rate of newcomers.

For each studied year of development (ranging from July to June), we use survival analysis (Rich et al. 2010; Tufano et al. 2017) to analyse the probability that contributors remain active (“survive”) for a given period of time. To avoid bias, we split our dataset using a sliding window of four years that we move forward one year at a time. This results in five consecutive periods of four years, i.e., 2011–2015, 2012–2016, … until 2015–2019. In each window, we perform a separate survival analysis considering only the contributors who had their first contributions in that window. The duration of each contributor’s activity in a given window is calculated as the time elapsed from the first contributed patch set recorded in \(\mathcal {P}\) until the last contributed patch set in the full observation period (June 2020). We compare the survival curves (Tufano et al. 2017) for contributor activity in each four-year window along two different dimensions:

-

junior versus senior: junior contributors have provided their first contribution within the last year of the considered 4-year time window; while seniors have made contributions in earlier years of that time window.

-

individual versus collaborative: individual contributors were never involved in micro-collaboration in OpenStack, while collaborative ones have been active in micro-collaboration.

To clarify this, we consider a concrete example for the time window 07/2013 to 06/2017. The contributors who contributed from July 2016 until June 2017 are considered as juniors, while the ones who contributed from July 2013 until June 2016 are seniors. The sliding window technique allows to compare the longevity of junior contributors in each time window as there is no overlap between the juniors in different time windows (juniors in one time window become seniors in the next time window). The two dimensions of contributor classification yield four different survival curves per time window for all possible pairwise combinations, shown in Fig. 4 with 99% confidence intervals.

We observe that collaborative contributors (blue/green) are active significantly longer in OpenStack than individual contributors (red/orange). This is visually confirmed by the non-overlapping confidence intervals, and statistically confirmed through log-rank tests with p < .001 (after Bonferroni correction to adjust for family-wise error rate) for all periods.

Note that the maximum longevity of junior contributors is decreasing in more recent time windows as the end of the full observation period is June 2020, thus limiting the time they could have been active. To account for multiple comparisons of juniors in one time window participating as seniors in follow-up time windows, we tested all statistical hypotheses after adjusting p-values with Bonferroni correction; the statistical differences were confirmed with adjusted p < 2.8e − 5.

We also observe that collaborative stakeholders have at least a 15% probability of surviving during the entire studied period, with collaborative seniors usually having a higher survival probability than juniors until June 2017. The survival probability of individual contributors drops below 20% after approximately 12 months, decreasing until close to 0% towards the end of the studied period. Overall, our results show that micro-collaboration correlates with developer engagement in OpenStack.

6.2.2 F6 Collaboration Improves Software Quality