Abstract

The ability to meaningfully and critically integrate multiple texts is vital for twenty-first-century literacy. The aim of this systematic literature review is to synthesize empirical studies in order to examine the current state of knowledge on how intertextual integration can be promoted in educational settings. We examined the disciplines in which integration instruction has been studied, the types of texts and tasks employed, the foci of integration instruction, the instructional practices used, integration measures, and instructional outcomes. The studies we found involved students from 5th grade to university, encompassed varied disciplines, and employed a wide range of task and text types. We identified a variety of instructional practices, such as collaborative discussions with multiple texts, explicit instruction of integration, modeling of integration, uses of graphic organizers, and summarization and annotation of single texts. Our review indicates that integration can be successfully taught, with medium to large effect sizes. Some research gaps include insufficient research with young students; inadequate consideration of new text types; limited attention to students’ understandings of the value of integration, integration criteria, and text structures; and lack of research regarding how to promote students’ motivation to engage in intertextual integration.

Introduction

Twenty-first-century knowledge societies are characterized by a dramatic increase in the quantity and diversity of information sources available to the public. No longer limited to traditional information outlets, such as newspapers and publishing presses, people now acquire information from a large variety of websites and social networks, run by various groups and individuals (Pew Research Center 2016). Thus, participation in twenty-first-century knowledge societies requires, more than ever, capacities to gain informed, critical understanding of current issues and problems based on multiple information sources that reflect diverse purposes and viewpoints (Alexander and DRLRL 2012; Brand-Gruwel et al. 2009; Britt et al. 2014; Goldman et al. 2016; Leu et al. 2013). Accordingly, current curricula and assessment frameworks call for advancing learners’ capacities to meaningfully comprehend and use multiple texts (e.g., National Governors Association Center for Best Practices & Council of Chief State School Officers 2010; OECD 2016).

Comprehending multiple texts involves constructing coherent meaning from texts that present consistent, complementary, or conflicting information on the same situation, issue, or phenomenon (Bråten et al. 2018). Multiple-text comprehension involves several component processes or strategies—chiefly, task interpretation; searching, evaluating, and selecting information sources; analysis; integration; and communication or presentation (Goldman et al. 2013; Rouet and Britt 2011).

Integration is at the core of multiple-text comprehension because it involves drawing on information from diverse sources in order to achieve aims such as understanding the issue or drawing reasonable conclusions. Thus, intertextual integration is a complex epistemic process that serves the achievement of important epistemic aims (Chinn et al. 2014) that are essential for informed participation in current knowledge societies. Failure to integrate multiple information sources may result in understandings or conclusions that are strongly influenced by biased or unreliable accounts, or that do not adequately consider and weigh alternative viewpoints (Bråten et al. 2011). This can have negative consequences for personal decision-making as well as for democratic deliberation on complex and important problems, such as how to address the challenges of global warming. However, intertextual integration is also a very challenging process that places high demands on individuals (Rouet 2006). Hence, researchers have devoted efforts to examining ways of promoting students’ capabilities to integrate multiple texts.

The aim of this systematic review is to document and synthesize empirical research on instructional approaches and practices for improving intertextual integration. Our focus in this review is on studies that address integration of verbal information across texts, rather than integration of information within texts or integration of visual information. Research on instruction of intertextual integration has been conducted in diverse fields such as discourse studies, disciplinary literacy, and digital literacy, often with few interconnections between these fields. Thus, taking stock of the progress made in these varied areas is important for identifying promising instructional practices and remaining research gaps. To the best of our knowledge, this is the first systematic review of how intertextual integration is promoted in educational research.

In what follows, we first describe the role of intertextual integration in several prominent theoretical frameworks. We then take a closer look at how people integrate multiple texts. Finally, we briefly discuss some key factors that affect intertextual integration. In our review, we use the terms text, document, and information source interchangeably to refer to artifacts that convey information to readers (Britt and Rouet 2012). We reserve the term source for the origin of the text and the circumstances surrounding its production, such as its authorship, publication venue, date of publication, etc. (Bromme et al. 2018). We assume that texts can convey both source information and content information and that their representation can include both a source component and a content component (Rouet 2006). However, it should be noted that these terms are not consistently used in this manner in the literature we survey (see Goldman and Scardamalia 2013).

The Role of Integration in Meaning-Making from Multiple Texts

Integration plays a role in several theoretical frameworks that describe how people make meaning from multiple texts. In this section, we briefly review the role of intertextual integration in four prominent theoretical frameworks that were repeatedly cited in the papers we found (Brand-Gruwel et al. 2009; Leu et al. 2013; Rouet and Britt 2011; Wineburg 1991). Table 1 summarizes the role of integration in each of these frameworks. As can be seen in Table 1, integration is addressed using diverse terms such as corroboration, synthesis, or organization. We refer to such processes as integration when they involve connecting or combining information from different texts to achieve diverse aims.

Wineburg (1991) identified three heuristics used by historians as they read multiple documents in order to inquire into historical events: corroboration, sourcing, and contextualization. Corroboration involved comparing documents to identify agreements and discrepancies. In historical inquiry, corroboration helps establish whether information is plausible or likely. Wineburg’s analysis of expert heuristics greatly influenced subsequent research on multiple-text comprehension in history and other disciplines.

The Multiple-Document Task-based Relevance Assessment and Content Extraction (MD-TRACE) model by Rouet and Britt (2011) is a later model that explains how readers purposefully process and use multiple texts. The model describes five processes that unfold in a nonlinear, iterative manner: (1) constructing and updating a model of the task and its goals; (2) assessing information needs; (3) accessing, assessing, processing, and integrating information within and across documents; (4) creating and updating a task product; and (5) assessing whether the product meets the goals of the task. Recently, Britt et al. (2018) proposed an additional preliminary phase in which readers construct a representation of the reading context. According to MD-TRACE, integration occurs in step 3: As readers acquire information from a text, they combine it with information from other texts while tracking and comparing who said what, that is, while forming connections between sources and contents and among sources. Integration continues in step 4 as readers transform the contents of the texts to create a coherent task product (Rouet and Britt 2011).

Integration also plays an important role in models of digital literacy. One such prominent framework is the New Literacies of Online Research and Comprehension framework by Leu and his colleagues (Leu et al. 2013, 2007). This framework addresses online reading comprehension as a process of problem-based inquiry that involves several practices: (1) identifying important problems, (2) locating information, (3) critically evaluating information, (4) synthesizing information, and (5) communicating information. Integration occurs in the synthesis phase when learners put together an understanding of the meaning of the texts they have read and when they actively construct new texts through their reading and navigation choices (Leu et al. 2007). Integration can presumably continue in the communication phase as learners engage in writing in order to share what they have learned.

Finally, the Internet Information Problem Solving (IPS-I) model by Brand-Gruwel et al. (2009) is an information processing model that defines the skills needed to solve information problems while using the Internet. The model describes five constituent skills: (1) define information problem, (2) search information, (3) scan information, (4) process information, and (5) organize and present information. The performance of these skills also requires regulation, reading, evaluation, and computer skills. Integration is central to Brand-Gruwel et al.’s “organize and present information” phase, in which information from various information sources is combined to solve the information problem. The desired product (e.g., a poster or an essay) is used to structure the information gathered in earlier phases.

Looking across these four frameworks, several themes can be identified. First, all frameworks describe processes of connecting, combining, or organizing information from different texts as important for meaning-making. Second, integration typically follows in the footsteps of task interpretation, text selection and evaluation, and processing of the individual texts. However, these processes do not necessarily unfold in a fixed order and may be iterative. Third, integration serves the creation of a task product, such as a solution to a problem or an answer to an inquiry question, and is informed by the objectives and requirements of this product. Accordingly, we defined integration of multiple texts in this review as connecting, combining, or organizing information from different texts to achieve diverse aims such as meaning-making, problem solving, or creating new texts. As mentioned, texts can convey both content and source information. In line with current work on multiple-text comprehension, we assume that successful integration of multiple texts involves connecting, combining, or organizing the contents of the texts, while also forming connections between their sources (Bråten et al. 2011; Britt et al. 2013).

How Do People Integrate Multiple Texts?

Integration processes, such as forming intertextual connections, can occur both while reading and while writing (Segev-Miller 2007). Researchers have referred to integration as a “hybrid” task because it typically requires learners to alternate between the roles of readers and writers as they shift back and forth between reading and comprehending texts, writing notes, and creating a written product (Solé et al. 2013; Spivey and King 1989). The intermingling of reading and writing is presumed to benefit learners, because writing demands can, for example, drive learners to reread the texts, elaborate them, and transform their contents (Solé et al. 2013; Wiley and Voss 1999). However, the extent of writing involved in integration tasks may vary greatly, from producing an original essay, to answering a short question, to simply reading for understanding. Thus, writing appears to be beneficial for integration but is not necessary for integration processes to occur.

According to Spivey and King (1989), integration involves selection, organization, and connection processes: First, because learners cannot retain all of the information from a text, they select information to attend to. These selections are made based on criteria of relevance or importance. Second, learners organize the content they selected within some structure in order to construct a mental representation of the texts. When learners read single texts, they can use the text’s structure to guide their understanding. However, when reading multiple texts, learners usually have to supply a new structure to meaningfully organize the information they selected. Third, in addition to the global organization of the information, learners also connect information from the texts at a local level to form a coherent and cohesive new discourse. For example, they form conceptual and linguistic connections among propositions, clauses, and sentences (Spivey and King 1989).

As the foregoing description suggests, integration requires active and constructive transformation of content from different texts into an inclusive product (Boscolo et al. 2007; Segev-Miller 2004). Segev-Miller (2007) proposed that connecting and organizing processes can be better described as conceptual and rhetorical transformations, respectively: Conceptual transformations involve comparing the texts, formulating macropropositions that reflect the connections that were identified among the texts (e.g., “They emphasize different aspects of the issue…”), and creating new categories that did not previously exist in the texts. Rhetorical transformations are used to produce new text structures that connect ideas from multiple texts. These can range from simply listing ideas to tightly weaving ideas in a new synthesis. Additionally, linguistic transformations can be used to express the relations between the texts. These can include introducing linguistic connectors (e.g., “adds,” “disagrees”) and employing lexical repetition (e.g., “According to Spivey and King (1989), integration involves selection, organization, and connection processes. … Segev-Miller (2007) proposed that connecting and organizing processes can be...”).

These descriptions of integration processes have so far addressed how learners process the contents of multiple texts. An important contribution of the Documents Model Framework (DMF) is its proposal that good readers of multiple texts also attend to the sources of these texts as they integrate them (Britt and Rouet 2012; Britt et al. 2013; Rouet 2006). According to the DMF, when readers read multiple texts, they construct an integrated mental model, which consists of a representation of the situations or phenomena described in the texts, including overlaps and discrepancies. Additionally, good readers also construct an intertext model, which consists of a representation of the sources and their interrelations. Each document is represented in the intertext model by a source node that includes source information, such as author identity, genre, venue of publication, place and time, and so forth. Forming the intertext model involves establishing connections between sources (source-to-source links) such as relations of agreement or opposition (Rouet 2006). Source-to-source links can help interpret content overlaps and discrepancies. For example, knowing that a website was published by an anti-vaccination organization may help interpret contradictions between the claims made in that website and the claims published in a government health website. When readers connect the intertext model to the integrated mental model by constructing and tracking source-to-content links, they develop a full documents model (Rouet 2006); this helps them interpret and evaluate the contents of the texts in terms of their respective sources (Bråten et al. 2011; Britt et al. 2013).

Thus, intertextual integration involves multiple processes. These can include bottom-up automatic inferencing processes as well as top-down strategic processes, such as actively comparing texts and identifying discrepancies (Kurby et al. 2005; Stadtler et al. 2018). Furthermore, intertextual integration requires coordinating and managing multiple processes (Martínez et al. 2015; Rouet and Britt 2011). Hence, successful intertextual integration involves metacognitive planning, monitoring, and evaluation for managing reading, intertextual linking, and product construction (Afflerbach and Cho 2009; Rouet and Britt 2011; Salmerón et al. 2018; Segev-Miller 2007).

Factors Affecting Intertextual Integration

Text features can impact integration. Because information is spread over several distinct texts that are often viewed on separate pages, this information is less accessible than if it were presented in a single document (Britt et al. 2013). Hence, integration may increase in difficulty when it involves a greater number of texts. The degree of overlap between texts can also impact integration: Lower linguistic and content overlap between texts can result in reduced integration (Kim and Millis 2006; Kurby et al. 2005). Relationships between texts, such as contradiction or agreement, have also been found to impact their comprehension: Specifically, conflicts between texts can encourage readers to attend to their sources and thereby develop a more elaborate understanding of how the texts are related to each other (Braasch and Bråten 2017). The quality of integration might also be influenced by text type. For example, Rouet et al. (1996) found that providing primary documents resulted in more extensive grounding of students’ written arguments in the documents, in comparison to providing secondary documents.

Intertextual integration has also been found to be influenced by task instructions (Britt and Rouet 2012). For example, in one influential study, Wiley and Voss (1999) found that argument tasks resulted in more well-integrated essays compared to narrative tasks. The ways in which learners interpret task requirements can shape their subsequent reading decisions and actions (Rouet et al. 2017).

Learner characteristics have been found to influence their capacities to successfully comprehend multiple documents (for reviews, see Barzilai and Strømsø 2018; Bråten et al. 2018; Salmerón et al. 2018). Students’ integration skills develop substantially over primary, secondary, and tertiary education (Dutt-Doner et al. 2007; Mateos and Solé 2009; Spivey and King 1989). These developmental differences may be due to an increase in linguistic and rhetorical knowledge and a greater capability to apply this knowledge (Spivey and King 1989). Researchers have also found that greater prior knowledge about the topics discussed in the texts can make it easier for learners to integrate them (Bråten et al. 2014). Disciplinary knowledge about text genres and about writing and publication practices is important for intertextual integration as well (Rouet 2006; Wineburg 1991). Integration performance has also been found to be positively associated with learners’ metastrategic knowledge about integration (Barzilai and Ka’adan 2017; Barzilai and Zohar 2012). Finally, the ways in which learners interpret multiple-text tasks may be informed by their epistemic views regarding knowledge and knowing (Bråten et al. 2011). For example, awareness that knowledge is complex, uncertain, and evolving may lead learners to pay greater attention to multiple sources and viewpoints (Barzilai and Eshet-Alkalai 2015; Bråten et al. 2011).

Goals of the Present Review

In light of the importance of intertextual integration for learning in the twenty-first century, the overarching purpose of this review was to examine what can be learned from existing empirical research about how integration of multiple texts can be promoted in educational contexts. More specifically, we were interested in three main research questions.

Our first question was, In which disciplinary contexts and using which types of texts and tasks is intertextual integration promoted? Because the study of intertextual integration has its roots in history and literacy education (e.g., Spivey and King 1989; Wineburg 1991), and because disciplinary knowledge can affect reading processes (Rouet 2006; Wineburg 1991), we examined in which academic disciplines intertextual integration is studied. Additionally, because text characteristics can influence integration (e.g., Britt and Rouet 2012; Rouet et al. 1996), we also examined the number and type of texts that students are asked to integrate and how these texts are interrelated. Finally, in light of the impact of task instructions on integration (Rouet et al. 2017), we also examined the types of integration tasks employed. Taking into account contexts, texts, and tasks is important for understanding instructional practices and identifying research gaps.

The second question was, How is intertextual integration taught? In light of the complexity of intertextual integration (Rouet 2006; Segev-Miller 2007), we were interested in documenting how it is instructed. To do so, we analyzed the foci of integration instruction, the instructional practices employed, and if and how digital technologies are utilized.

Third, we asked, What are the outcomes of intertextual integration interventions? To address this question, we examined the measures used to assess intertextual integration, the effectiveness of instructional interventions, and the characteristics of effective interventions.

Method

Search Procedures

To identify relevant studies, we searched three databases: ERIC, PsycINFO, and Google Scholar. The first two databases were selected for their systematic coverage of educational and psychological research. Google Scholar was used to supplement these databases. The search was finalized in June 2017.

We started by searching the ERIC and PsycINFO databases. After some experimentation, we developed a search string that included three groups of keywords that reflected the foci of our review: First, we included keywords related to multiple texts, e.g., multiple source*, multiple text*, and primary document*. We supplemented these keywords with terms related to information literacy, such as information skill*. We did not search for the words source or text because they resulted in a vast number of irrelevant results. Second, we included four words that are used to refer to integration in multiple-text studies, namely, integrat*, synthes*, corroborat*, and comprehen*. Third, because we sought studies that described instructional practices or interventions, we also included the keywords instruct*, teach*, learn*, and interven*. Our final search query was:

(“multiple source*” or “multiple text*” or “multiple document*” or “primary document*” or “primary source*” or “information source*” or “information problem solving” or “information skill*”) AND (corroborat* or integrat* or synthes* or comprehen*) AND (instruct* or teach* or learn* or interven*).
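The query has a simple structure: three keyword groups joined by AND, with the terms inside each group joined by OR, and a trailing asterisk marking truncation. The following is a minimal sketch of that logic, assuming plain case-insensitive matching against a title or abstract string. The function names are hypothetical, and real database engines apply their own parsing, stemming, and field rules, which this sketch does not reproduce.

```python
import re

# Keyword groups copied from the query above; a trailing * is a truncation wildcard.
MULTIPLE_TEXTS = ["multiple source*", "multiple text*", "multiple document*",
                  "primary document*", "primary source*", "information source*",
                  "information problem solving", "information skill*"]
INTEGRATION = ["corroborat*", "integrat*", "synthes*", "comprehen*"]
INSTRUCTION = ["instruct*", "teach*", "learn*", "interven*"]


def term_to_regex(term: str) -> re.Pattern:
    """Turn a (possibly multi-word) search term into a case-insensitive regex.

    A trailing * matches any run of word characters; a phrase matches
    as consecutive words separated by whitespace.
    """
    parts = []
    for word in term.split():
        if word.endswith("*"):
            parts.append(re.escape(word[:-1]) + r"\w*")
        else:
            parts.append(re.escape(word))
    return re.compile(r"\b" + r"\s+".join(parts) + r"\b", re.IGNORECASE)


def matches_group(text: str, terms: list[str]) -> bool:
    """OR within a group: at least one term must occur in the text."""
    return any(term_to_regex(t).search(text) for t in terms)


def matches_query(text: str) -> bool:
    """AND across groups: every keyword group must be matched."""
    return all(matches_group(text, g) for g in (MULTIPLE_TEXTS, INTEGRATION, INSTRUCTION))
```

For instance, a hypothetical title such as "Teaching students to integrate multiple documents in history" satisfies all three groups, whereas "Multiple texts and comprehension" fails because it contains no instruction-related term.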

We searched for peer-reviewed journal articles only, because such articles are of higher quality and usually provide sufficient detail for analysis purposes. We did not limit our search by year or population. To extend the search to journals that might not be covered by ERIC and PsycINFO, we also searched Google Scholar. Because Google Scholar does not support overly long search strings, we used a shorter query in which we included the most common keywords that recurred in relevant articles:

(“multiple source*” or “multiple text*” or “multiple document*”) AND (instruct* or learn* or teach* or interven*).

Finally, we used backward snowballing to expand the search. That is, we scanned the references of the articles we found and of several reviews related to multiple text comprehension (e.g., Brante and Strømsø 2017; Stadtler et al. 2018) to identify additional articles. These searches yielded a total of 1292 results (586 in ERIC, 411 in PsycINFO, 236 in Google Scholar, and 59 through backward snowballing).
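As a quick check, the per-source counts sum to the reported total. These are presumably raw retrieval counts, since duplicates are mentioned only at the full-text screening stage:

```latex
586_{\text{ERIC}} + 411_{\text{PsycINFO}} + 236_{\text{Google Scholar}} + 59_{\text{snowballing}} = 1292
```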

Inclusion Criteria

Articles were selected for inclusion if they met the following cumulative criteria:

1. The study examined the contribution of tasks and/or instruction to integration of multiple texts. We included studies that compared the relative benefits of various tasks as well as studies that examined instructional activities that aimed to promote integration.

2. The study included at least two verbal texts that students integrated. We were not interested in examining integration of visual representations per se. However, if a study included visual representations, along with multiple texts, it was retained.

3. The study was empirical and provided data on learning processes or outcomes. We included qualitative, quantitative, and mixed-method studies.

4. The study was conducted in students’ L1. We did not include studies in second or foreign languages because of the unique language learning demands involved.

5. The article was published in a peer-reviewed journal.

6. The report was in English. However, the study could be conducted in any language.

The titles and abstracts of all of the articles identified in our searches were scanned to see if they met these inclusion criteria. If information in the abstract was insufficient to determine whether the article met the criteria, we erred on the side of caution and selected the article for further examination. Altogether, we identified 193 candidate articles based on their titles and abstracts. These articles were then opened and their full texts were examined to determine if they met the inclusion criteria. If we found two reports of the same study, we included only the most recent report. In this process, and excluding duplicates, we identified 61 articles for inclusion in the review.
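Because the six criteria are cumulative, screening amounts to a conjunction: an article is retained only if every criterion holds. The sketch below illustrates this rule together with the cautious handling of unclear abstracts described above. The record type and its field names are hypothetical. Treating an unknown flag as a failure at the full-text stage is an assumption of the sketch, not a procedure stated by the review.

```python
from dataclasses import dataclass, fields
from typing import Optional


@dataclass
class Candidate:
    """Hypothetical screening record: one flag per inclusion criterion.

    True = criterion met, False = not met, None = cannot tell from the
    information available at this stage.
    """
    studies_tasks_or_instruction: Optional[bool]  # criterion 1
    two_or_more_verbal_texts: Optional[bool]      # criterion 2
    empirical_data: Optional[bool]                # criterion 3
    conducted_in_l1: Optional[bool]               # criterion 4
    peer_reviewed_journal: Optional[bool]         # criterion 5
    report_in_english: Optional[bool]             # criterion 6


def include(c: Candidate, stage: str) -> bool:
    """Apply the criteria cumulatively: every one must be met.

    At the "title_abstract" stage an unknown (None) flag does not exclude
    the article, so it is kept for full-text examination. At any other
    stage an unknown flag counts as not met (an assumption of this sketch).
    """
    lenient = stage == "title_abstract"
    for f in fields(c):
        value = getattr(c, f.name)
        if value is None:
            if not lenient:
                return False
        elif not value:
            return False
    return True
```

For example, an abstract that leaves the number of texts unclear passes the title/abstract stage but would be dropped at full text if the question remained unresolved.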

To examine the effectiveness of integration interventions, we conducted a more in-depth analysis of a subset of (quasi-)experimental studies that included instructional activities that aimed to foster integration capabilities. These studies were selected according to the following criteria: (a) They included instructional activities that aimed to foster integration. We did not include studies that only manipulated task instructions, because we were primarily interested in identifying productive teaching practices. (b) They included a comparison group that did not receive instruction or received a different type of instruction. (c) They provided adequate statistical information on the dependent variables associated with integration. Applying these additional criteria, we identified 21 studies in which we examined the effects of integration instruction.

Coding Scheme

We developed an initial coding scheme based on the review goals and the data that could be consistently gathered from the studies. The scheme included several categories, with codes within categories. The unit of analysis was the entire paper. Categories and codes were not exclusive, that is, multiple categories and codes could apply to a single paper. The coding scheme was initially applied to a set of five studies by the second and third authors. This led to a series of refinements that were discussed by all authors. Some codes were merged and code descriptions were elaborated and clarified. We next describe the final categories and codes.
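Because codes were not exclusive, each paper's coding is naturally represented as a mapping from category to a set of codes rather than to a single value. The sketch below shows this data structure with a partial, hypothetical codebook. Its labels paraphrase some of the categories described in this section, and `validate` is an illustrative helper, not part of the authors' procedure.

```python
# Hypothetical, partial codebook; labels paraphrase categories described in this section.
CODEBOOK: dict[str, set[str]] = {
    "study_design": {"descriptive", "experimental_or_quasi", "design_based"},
    "education_level": {"primary", "lower_secondary", "upper_secondary",
                        "higher_education"},
    "instructional_practices": {"explicit_instruction", "modeling",
                                "process_prompts", "annotation_or_summarization",
                                "graphic_organizers", "collaborative_discussion",
                                "individual_practice", "feedback", "other"},
}


def validate(coding: dict[str, set[str]]) -> None:
    """Check that every assigned code belongs to its category.

    Codes are not exclusive, so each category maps to a *set* of codes
    and a single paper may receive several codes within one category.
    """
    for category, codes in coding.items():
        if category not in CODEBOOK:
            raise ValueError(f"unknown category: {category}")
        unknown = codes - CODEBOOK[category]
        if unknown:
            raise ValueError(f"unknown codes for {category}: {sorted(unknown)}")


# A hypothetical paper spanning two education levels and three practices.
paper = {
    "education_level": {"lower_secondary", "upper_secondary"},
    "instructional_practices": {"modeling", "graphic_organizers",
                                "collaborative_discussion"},
}
validate(paper)  # passes: every code belongs to its category
```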

Study Design

Studies were coded descriptive if they documented teaching or learning without group or pretest-posttest comparisons, experimental or quasi-experimental if they tested the effects of instruction by comparing groups and/or pretests and posttests, and design-based research if they evaluated the design of tools supporting integration.

Instruction Designers and Providers

We examined whether the instruction was designed and delivered by researchers only, by teachers only, or by both researchers and teachers. When the identity of the experimenters or instructors was not mentioned, we assumed that the instruction was delivered by the researchers.

Education Levels

We coded four education levels: primary school (grades 1–6), lower secondary school (grades 7–9), upper secondary school (grades 10–12), and higher education.

Academic Disciplines

The disciplines that were identified in the studies were: history, science (including physics, biology, chemistry, health, and socio-scientific issues), language arts (including language and literature), social sciences (including psychology, geography, communication, education, etc.), and other (e.g., math).

Text Types

We coded three main text genre categories: primary, secondary, and literary. Primary texts were explicitly described by the authors as such or were first-hand accounts (e.g., speeches or letters). Secondary texts were explicitly described by the authors as such or were nonfiction texts that were composed after the events they described or without first-hand involvement. Because of the large number of secondary texts, we further coded these using five subcodes: textbook excerpts, websites, news, expert essays, and other. These codes were applied based on the authors’ explicit descriptions. Literary texts included works of fiction (e.g., stories or novels). Additionally, texts were coded as static visual representations if they included stationary images (e.g., photographs or charts) and as dynamic visual representations if they included moving images (e.g., videos).

Intertextual Relations

The identification of intertextual relations was based on the authors’ explicit statements, because the positions of the texts could not be consistently inferred. The relations were coded as complementary if the authors described the texts as such. Relations were coded as contrasting if the authors noted contrasts, conflicts, controversies, or disagreements between texts or mentioned that the texts presented contrasting perspectives. Otherwise, relations were coded as not mentioned.

Writing Tasks

Writing tasks were coded based on the terms used in task instructions and the authors’ explicit descriptions: Summary tasks included requests to summarize the topic or key issues. Argument tasks included requests to write arguments, present and justify positions, or identify arguments. Synthesis tasks included explicit instructions to synthesize or integrate texts. Inquiry tasks posed open-ended inquiry questions or problems that students were asked to explore using the texts. Narrative tasks included instructions to narrate or describe events or phenomena. Compare and contrast tasks included instructions to describe or present similarities and differences between texts. All other tasks were coded as other.

Foci of Instruction

This category captured the issues addressed by the instruction or the purposes of the instruction. The integration processes code was used to code studies in which students were taught how to integrate. The integration criteria code was applied when students were taught about the qualities of good integrative writing or criteria for evaluating integration. The value of integration code was used if the instruction included explicit discussion of the value, importance, or reasons for integrating multiple texts. The text structure code was applied when students were taught about text structure or organization.

Instructional Practices

We identified a wide range of instructional practices that were coded as follows: Explicit instruction of integration included providing visible and clearly expressed instruction about integration. Modeling of integration referred to demonstrations of integration while thinking aloud or describing actions. Integration process prompts were questions, cues, or reminders that guided and encouraged students to integrate multiple texts. Annotation or summarization of single texts were practices that required students to actively engage with the individual texts by taking notes, highlighting, underlining, annotating, or summarizing them. Graphic organizers were visual representations, such as tables, maps, or flow charts, which aid integration. Collaborative discussions and practice included students working in dyads or small groups with multiple texts while engaging in discussions or performing various tasks. Individual practice referred to students working alone with texts to apply what they learned. Feedback referred to teacher or automated feedback on integration processes or outcomes. All other practices were coded as other.

Digital Technology

We noted two main uses of digital technology: uses of technology to access and read texts, by browsing the Web or through dedicated software, and uses of technology to support integration, by providing online tools, prompts, instruction, or feedback. When studies did not use technologies, we coded them as technology not used.

Measurement of Integration

In line with our definition of intertextual integration, we defined measures of integration as measures used to examine whether and how students connect, combine, or organize information from more than one text. These measures were coded based on the authors’ explicit descriptions. The recurring measures were written essays based on multiple texts; responses to open-ended or multiple-choice questions that required integrating information from more than one text; intertextual verification of statements based on more than one text; think-aloud protocols while working with multiple texts; observations of how students work with multiple texts; and analyses of students’ oral discourse while working with multiple texts. All other measures were coded as other.

Interrater Reliability

To test interrater reliability, the second and third authors independently coded 30 articles. All codes achieved satisfactory interrater reliability (Cohen’s kappa > 0.80; M = 0.90, SD = 0.10). The remaining papers were divided among the authors, and the first author reviewed the coding by all authors to monitor consistency.
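Cohen’s kappa corrects raw percentage agreement for the agreement expected by chance, κ = (p_o - p_e) / (1 - p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected from each coder’s marginal code frequencies. The minimal Python sketch below computes it for one code and two coders; the coding data are hypothetical and are not drawn from the reviewed corpus. The example illustrates why kappa is a stricter criterion than raw agreement: the two coders agree on 9 of 10 articles, yet κ falls below 0.80 because one code value dominates.

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two coders assigning nominal codes to the same items."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("both coders must code the same, non-empty set of items")
    n = len(coder_a)
    # Observed agreement: share of items that received identical codes.
    p_o = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Chance agreement, from each coder's marginal code frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    p_e = sum(freq_a[code] * freq_b[code] for code in freq_a) / (n * n)
    if p_e == 1:
        # Both coders used one identical code throughout; kappa is formally
        # undefined here, and this sketch treats it as perfect agreement.
        return 1.0
    return (p_o - p_e) / (1 - p_e)

# Hypothetical codes for 10 articles (1 = practice present, 0 = absent).
coder_a = [1, 1, 1, 0, 0, 0, 0, 0, 0, 0]
coder_b = [1, 1, 0, 0, 0, 0, 0, 0, 0, 0]
print(round(cohens_kappa(coder_a, coder_b), 3))  # 0.737
```

Here p_o = 0.90 but p_e = 0.62, so κ = 0.28 / 0.38, roughly 0.74.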

Number of Texts

We recorded the number of texts as stated by the authors. If a study included several sets of texts, we counted the largest number of texts in a single set.

Statistical Analyses

Our effect size analysis procedures were similar to those used by Brante and Strømsø (2017) in their review of sourcing interventions. Effect sizes for the subset of 21 studies were calculated using the standardized mean difference statistic Hedges’ g. Positive g values indicate that the intervention group has a higher outcome score than the control group. When studies included a pretest, an adjusted effect size was calculated by subtracting the pretest effect size from the posttest effect size (Durlak 2009). In addition to effect sizes, we also documented the statistical significance of the differences, as reported by the authors. Because the dependent measures used in the studies were highly diverse, we did not consider it meaningful to calculate an overall mean effect size (Brante and Strømsø 2017).
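The review does not spell out the exact formulas, so the sketch below assumes the common pooled-standard-deviation form of Hedges’ g with the approximate small-sample correction J = 1 - 3 / (4(n_t + n_c) - 9), combined with the pretest adjustment described above (posttest g minus pretest g). The function names and group statistics are hypothetical illustrations, not the authors’ analysis code.

```python
import math

def hedges_g(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Hedges' g for intervention (t) minus control (c); positive favors the intervention."""
    df = n_t + n_c - 2
    # Pooled within-group standard deviation.
    pooled_sd = math.sqrt(((n_t - 1) * sd_t ** 2 + (n_c - 1) * sd_c ** 2) / df)
    d = (mean_t - mean_c) / pooled_sd
    # Approximate small-sample correction; 4 * df - 1 equals 4 * (n_t + n_c) - 9.
    j = 1 - 3 / (4 * df - 1)
    return j * d

def pretest_adjusted_g(pre, post):
    """Posttest g minus pretest g; `pre` and `post` are argument tuples for hedges_g."""
    return hedges_g(*post) - hedges_g(*pre)

# Hypothetical group statistics: (mean_t, sd_t, n_t, mean_c, sd_c, n_c).
pre = (10.0, 2.0, 20, 10.0, 2.0, 20)
post = (14.0, 4.0, 20, 12.0, 4.0, 20)
print(round(pretest_adjusted_g(pre, post), 3))  # 0.49
```

In this example the groups start level and the intervention group gains a half-standard-deviation advantage at posttest, so the adjusted g is just under 0.5 after the small-sample correction. A pretest advantage of the same size would reduce the adjusted effect to zero.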

Results

Overview of the Studies

The studies we found were published over two decades, from 1996 to 2017, and originated from the USA, Europe, and Asia. Most studies (73.8%) were experimental or quasi-experimental, 24.6% were descriptive, and one study reported design-based research (Saye and Brush 2002). Over half of the studies (57.4%) were conducted by researchers alone (e.g., Britt and Sommer 2004). In 34.4% of the studies, researchers collaborated with teachers in designing and/or delivering instruction (e.g., Argelagós and Pifarré 2012); in these studies, instruction was usually embedded in the school curriculum. An additional 8.2% of the studies were descriptive reports of in-service teachers’ multiple-text instructional practices (e.g., Monte-Sano 2011). Participants ranged from 5th grade to higher education. However, only six studies (9.8%) included primary school participants. Integration was studied more often in lower secondary school (31.1%), upper secondary school (27.9%), and higher education (47.5%); because some studies included participants from more than one education level, these percentages sum to more than 100%.

Intertextual Integration Across Disciplines, Texts, and Tasks

Academic Disciplines

Integration was mainly studied in the disciplines of history, sciences, social sciences, and language arts. Table 2 details the distribution of academic disciplines overall and by education level. Integration was studied more frequently in language arts in primary and lower secondary school than at other education levels, χ² (1, N = 61) = 7.82, p = 0.005, V = 0.36. History was more common in studies conducted in upper secondary school, χ² (1, N = 61) = 9.64, p = 0.002, V = 0.40, and less common in higher education, χ² (1, N = 61) = 8.06, p = 0.005, V = 0.36. Compared to other education levels, studies in higher education involved more social science topics, χ² (1, N = 61) = 10.62, p = 0.001, V = 0.42, and fewer language arts topics, χ² (1, N = 61) = 4.64, p = 0.031, V = 0.28.
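Because each test above has one degree of freedom, each presumably compares two binary variables (a given education level versus all others, by whether a discipline was addressed), which makes the reported statistics easy to cross-check. For a 2 × 2 table, Cramér’s V reduces to sqrt(χ²/N), and a χ² statistic with 1 degree of freedom is the square of a standard normal variable, so p = erfc(sqrt(χ²/2)). The Python sketch below, which assumes this 2 × 2 structure, recomputes p and V from the reported χ² values and N = 61; all five comparisons reproduce the reported values after rounding.

```python
import math

def cramers_v_2x2(chi2, n):
    """Cramér's V for a 2x2 table: sqrt(chi2 / (n * (min(rows, cols) - 1))), where min - 1 = 1."""
    return math.sqrt(chi2 / n)

def chi2_pvalue_df1(chi2):
    """Upper-tail p-value for a chi-square statistic with 1 degree of freedom."""
    # chi2 = z**2 for standard normal z, so P(chi2 > x) = P(|z| > sqrt(x)) = erfc(sqrt(x / 2)).
    return math.erfc(math.sqrt(chi2 / 2))

N = 61
# (comparison, chi-square, reported p, reported V), as given in the text.
reported = [
    ("language arts, primary/lower secondary", 7.82, 0.005, 0.36),
    ("history, upper secondary", 9.64, 0.002, 0.40),
    ("history, higher education", 8.06, 0.005, 0.36),
    ("social sciences, higher education", 10.62, 0.001, 0.42),
    ("language arts, higher education", 4.64, 0.031, 0.28),
]
for label, chi2, p_rep, v_rep in reported:
    p, v = chi2_pvalue_df1(chi2), cramers_v_2x2(chi2, N)
    print(f"{label}: p = {p:.3f} (reported {p_rep}), V = {v:.2f} (reported {v_rep:.2f})")
```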

Text Characteristics

The number of texts was reported in 85.2% of the studies and ranged from 2 to 12, M = 4.85, SD = 2.96. We did not identify statistically significant differences in the number of texts by education level. The studies included secondary texts (83.6% of the studies), primary texts (37.7%), and literary texts (8.2%). Frequent types of secondary texts were textbook excerpts (27.9%), expert essays or book excerpts (27.9%), news articles (24.6%), and websites (24.6%). Other types of secondary texts, such as overviews or summaries, encyclopedia entries, timelines, and documentary films, were employed in 41.0% of the studies. Primary texts included official documents, letters, memos, diaries, speeches, historical cartoons, maps, and more. Literary texts included stories, poems, and novels. We found that 24.6% of the studies involved static visual representations, such as photographs, maps, charts, or cartoons, and 13.1% involved dynamic visual representations, such as documentary and fiction films.

Intertextual Relations

In 44.3% of the studies, the authors reported using texts that presented contrasting or conflicting viewpoints, accounts, or claims. Use of complementary texts was explicitly noted in only 4.9% of the studies. Stahl et al. (1996) explained the rationale for engaging students with conflicting historical documents:

We chose the topics because they have been hotly debated by historians and politicians. Different interpretations of the event and resolution exist, allowing us to choose texts that represented several perspectives. It was the integration of various perspectives that the researchers wished to study. (p. 437)

Integration Tasks

Multiple-text writing tasks were employed in 96.7% of the studies. Frequently recurring task types were argument tasks (50.8%), synthesis tasks (19.7%), inquiry tasks (19.7%), summary tasks (16.4%), compare and contrast tasks (11.5%), and narrative tasks (9.8%). In addition, 39.3% of the studies used other types of tasks, each of which usually recurred only once or twice, such as asking students to analyze or explain phenomena, write a critique, prepare a timeline, create an outline for a magazine article, write a literature review, generate questions, or create a multimedia presentation.

Some studies compared how different types of tasks might impact students’ integration processes and outcomes. These studies generally suggest that tasks that require students to actively relate or integrate ideas or claims across texts have a positive effect on integration (Cerdán and Vidal-Abarca 2008; Stadtler et al. 2014). However, studies of specific writing tasks have yielded mixed results. In particular, asking students to write arguments (develop and justify a position based on multiple texts) has been found in some studies to yield better integration outcomes than asking them to write summaries or narratives (Le Bigot and Rouet 2007; Naumann et al. 2009; Stadtler et al. 2014; Wiley and Voss 1999). Other studies, in contrast, have found that summary tasks can yield similar (Bråten and Strømsø 2010; De La Paz and Wissinger 2015) or even better outcomes than argument tasks (Gil et al. 2010a, b). These mixed results appear to have multiple causes: (a) The specific wording of the instructions to summarize or develop an argument can differ across studies and affect outcomes (Bråten and Strømsø 2010); (b) the effects of tasks are contingent on the specific dependent measures employed by researchers (Naumann et al. 2009); (c) differences between learners, particularly differences in prior content knowledge (De La Paz and Wissinger 2015; Gil et al. 2010a) and epistemic beliefs (Bråten and Strømsø 2010; Gil et al. 2010b), have been found to moderate task effects; and (d) the effect of task types might also be sensitive to the particular affordances provided for interacting with the texts (Kobayashi 2009).

Other studies took a less agnostic approach to writing tasks and instead focused on specific writing genres. For example, studies focused on argumentative writing provided scaffolds for developing arguments or writing argument essays (e.g., De La Paz et al. 2017), and studies focused on synthesis writing provided synthesis writing guidelines and examples of synthesis texts (e.g., Boscolo et al. 2007; Martínez et al. 2015). Such studies suggest that integration instruction can benefit from familiarizing students with the structures, conventions, and practices associated with specific genres.

How Is Integration Taught?

The Foci of Integration Interventions

Close to one quarter (23.0%) of the studies included task comparisons only, without engaging students in instructional activities. However, most studies (77.0%) included some form of instruction that aimed to foster integration. Of these instructional studies, 80.9% focused on teaching students how to integrate by providing them with various forms of strategy instruction (e.g., Boscolo et al. 2007; Maier and Richter 2014). In 21.3% of the instructional studies, researchers aimed to teach students about text structures (e.g., De La Paz 2005; Kirkpatrick and Klein 2009). For example, De La Paz et al. (2017) provided 8th grade students with instruction about the structure and components of an argumentative historical essay.

Fostering appreciation of the value and importance of integration was explicitly addressed in only 17.0% of the instructional studies (e.g., Cameron et al. 2017; De La Paz et al. 2017). For example, Barzilai and Ka’adan (2017) asked 9th grade students to discuss why one should integrate multiple information sources. Students provided various reasons, such as “to find the most accurate and correct opinion” or “to create an idea or an opinion about this information” (p. 222). Finally, in only 6.4% of the instructional studies were students taught what counts as a good integrative product or criteria for evaluating integration (e.g., Boscolo et al. 2007; Segev-Miller 2004). For example, Boscolo et al. (2007) discussed the characteristics of good syntheses with their undergraduate participants and provided examples of good and poor synthesis writing. The researchers described good syntheses as including “integrated information from different sources in a holistic expository giving a logical and coherent organization of contents” (pp. 424–425).

Instructional Practices for Teaching Integration

Of the 77.0% of the studies that included instructional activities, the most frequent instructional practices were engaging students in collaborative discussions and practice (57.4%), explicit instruction of integration (55.3%), providing integration process prompts (53.2%), annotation or summarization of single texts (44.7%), employing graphic organizers or representations (42.6%), modeling integration (38.3%), individual practice (34.0%), and providing feedback (21.3%). These practices are briefly described below, roughly in order of their frequency. Typically, multiple practices were used in combination, which limits the ability to draw conclusions about the effectiveness of any single practice. Hence, in this section, we refer to the outcomes of practices only when studies provided data about their specific effects.
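The percentages in this subsection, like those for instructional foci above, use the instructional studies rather than the full corpus as their denominator. Assuming a corpus of N = 61 studies (the N reported with the χ² tests), 77.0% corresponds to 47 instructional studies. The Python sketch below back-calculates the whole-number counts implied by the reported percentages; these counts are inferred, not reported by the authors.

```python
N_TOTAL = 61  # corpus size, taken from the N = 61 reported with the chi-square tests
n_instructional = round(0.770 * N_TOTAL)  # 77.0% of 61 -> 47 studies

# Reported percentages of instructional studies using each practice.
practices = {
    "collaborative discussions and practice": 57.4,
    "explicit instruction of integration": 55.3,
    "integration process prompts": 53.2,
    "annotation or summarization of single texts": 44.7,
    "graphic organizers or representations": 42.6,
    "modeling integration": 38.3,
    "individual practice": 34.0,
    "feedback": 21.3,
}

def implied_count(percent, denominator):
    """Nearest whole-number count implied by a percentage of a known denominator."""
    return round(percent / 100 * denominator)

for name, pct in practices.items():
    k = implied_count(pct, n_instructional)
    # Re-deriving the percentage from the count should reproduce the reported value.
    print(f"{name}: {k}/{n_instructional} = {100 * k / n_instructional:.1f}% (reported {pct:.1f}%)")
```

The same denominator reproduces the instructional-foci percentages reported earlier: 80.9%, 21.3%, 17.0%, and 6.4% correspond to 38, 10, 8, and 3 of 47 studies.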

Collaborative Discussions and Practice

In many studies, students worked with multiple texts in dyads or small groups (e.g., Argelagós and Pifarré 2012; Reisman 2012). Students discussed the texts and engaged in various writing activities, often guided by prompts or graphic organizers (see below). For example, De La Paz (2005) provided 8th grade students with a visual representation of a historical reasoning strategy that included sourcing and comparison prompts. Students used the historical reasoning strategy in small groups to make sense of conflicting accounts of a historical event, collaboratively reading and taking notes. Lundstrom et al. (2015) had college students read some texts individually, share what they learned with the group, organize information from the texts as a group, and create a collaborative outline for their synthesis paragraph.

Explicit Instruction of Integration

Explicit instruction took on diverse forms, including explanations by teachers (Boscolo et al. 2007; Segev-Miller 2004), providing written information (Maier and Richter 2014), video tutorials (Cameron et al. 2017; Darowski et al. 2016), and guided student discussions about the strategy (Barzilai and Ka’adan 2017). For example, Darowski et al. (2016) provided undergraduates with a video tutorial explaining how to prepare a research synthesis. Maier and Richter (2014) provided university students with information about integration strategies, such as “Figure out which parts of the texts are in conflict with each other and which parts are consistent with each other” (p. 62).

Integration Process Prompts

These prompts were questions, cues, or reminders that guided and encouraged students to integrate multiple texts as they engaged in reading, discussing, or writing. Use of such prompts was common in the studies we reviewed (e.g., Gagnière et al. 2012; Wopereis et al. 2008). Often prompts were introduced by teachers during whole-class discussions or group work. For example, VanSledright (2002) prompted the 5th graders in his class to juxtapose historical documents and weigh them. Prompts were also provided in offline and online written guides or worksheets that structured integration processes. For example, González-Lamas et al. (2016) provided secondary school students with a reading and writing guide that included cognitive and metacognitive prompts, such as “Have I thought about how I am going to organize the text?”

Summarizing and Annotating Single Texts

Students were also frequently asked to take notes, highlight, underline, or summarize individual texts as aids for comprehending the texts as a whole (e.g., Hagerman 2017; Lundstrom et al. 2015). For example, Monte-Sano (2011) described how an 11th grade history teacher taught his students to annotate documents by providing them with annotation instructions and detailed feedback on their annotations. Britt and Sommer (2004) found that asking undergraduates to summarize a text before reading a subsequent text improved integration. Kobayashi (2009) found that giving undergraduates opportunities to annotate texts, in tandem with instructions to find relations between texts, contributed to the quality of their integrative writing.

Graphic Organizers and Representations

Use of graphic organizers and representations, such as tables or maps, was a frequent instructional practice. Some graphic organizers supported organizing content from multiple texts without representing the sources of this content. For example, concept maps were used to map ideas from multiple texts (Hilbert and Renkl 2008; Kingsley et al. 2015), post-it notes were used to note and cluster important ideas (Darowski et al. 2016; Lundstrom et al. 2015), and tables were used to compare information according to various aspects (Daher and Kiewra 2016; Kirkpatrick and Klein 2009). Other graphic organizers supported organizing content from multiple texts as well as representing its sources. For example, Cameron et al. (2017) provided undergraduates with a document matrix that included columns for noting the title of the document, type of document, author, point of view, purpose of the document, main idea, and importance. Barzilai and Ka’adan (2017) asked 9th graders to create integration maps that included claims, explanations, and evidence from multiple texts as well as the reliability of each source. Finally, some researchers used visual representations of multiple-text reading or writing processes. For example, De La Paz et al. provided representations of processes of reasoning about historical documents and writing argumentative essays (De La Paz 2005; De La Paz and Felton 2010; De La Paz et al. 2017). Graphic organizers were usually employed in conjunction with other practices, limiting the ability to gauge their effectiveness. However, Hilbert and Renkl (2008) found a positive correlation between the number of correctly labeled links in concept maps that learners created based on a set of texts and the extent to which they integrated information from these texts. Barzilai and Ka’adan (2017) found that creating integration maps had a positive effect on integration quality in a delayed transfer test.

Modeling Integration

Modeling integration processes, that is, demonstrating them while describing actions or thinking aloud, was a practice that teachers and researchers often used for teaching how to integrate multiple texts (e.g., Linderholm et al. 2014; Shanahan 2016). For example, González-Lamas et al. (2016) described how modeling complemented explicit instruction: “Whereas explicit teaching was focused on what to do, modeling complemented the concept by showing how to do it. To do this, the researcher served as a model for the completion of a synthesis, verbalizing aloud what she was thinking while, at the same time, explaining the processes she was implementing” (p. 56).

Providing Feedback

Another common practice for promoting integration was providing feedback on the quality of students’ integrative products (e.g., Brand-Gruwel and Wopereis 2006; Hammann and Stevens 2003). Feedback was typically provided by researchers or teachers (e.g., Boscolo et al. 2007). Britt et al. (2004) developed a tool for providing university students with immediate automatic feedback on writing from multiple documents. For example, if students were found to rely heavily on one or a few documents, feedback prompted them to consider additional documents: “It looks like this essay has information from a limited number of specific sources… This may give your reader a limited view of the story.” (p. 368). Maier and Richter (2014) found that positive performance feedback motivated students to productively employ metacognitive strategies for integrating multiple texts. The authors suggested that students’ expectations that they are likely to succeed in this challenging task may make them more willing to employ resource-demanding strategies.

Individual Practice

Finally, students were also found to engage in guided and independent individual activities in which they applied what they learned to new documents or new tasks (e.g., Boscolo et al. 2007; Martínez et al. 2015).

Uses of Digital Technologies

In 34.4% of all studies, digital technologies were used to access and view texts. Students searched for texts online or read texts using dedicated software (e.g., Cerdán and Vidal-Abarca 2008; Raes et al. 2012). Saye and Brush (2002) used the structure of a database of historical documents as a scaffold that supported forming connections among texts by organizing them in categories and hyperlinking them. In 23.0% of all studies, digital technologies more directly supported integration processes by providing instruction (e.g., Cameron et al. 2017; Weston-Sementelli et al. 2016), providing integration process prompts (e.g., Argelagós and Pifarré 2012), or providing tools for concept mapping (e.g., Hilbert and Renkl 2008).

The Outcomes of Integration Interventions

Measurement of Intertextual Integration

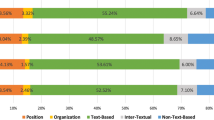

The measures we found addressed both integration processes and products. The frequencies of the measures that appeared in three or more studies, out of all the studies in the corpus, are detailed in Table 3, along with reliability coefficients, when these were reported. By far, the most frequent measure of integration products was analysis of students’ written essays. These essays were usually scored by hand (e.g., Martínez et al. 2015). In two cases, researchers used automated scoring of essays (Britt et al. 2004; Weston-Sementelli et al. 2016). Researchers also employed intertextual verification tasks that required students to correctly identify statements that combined information from more than one text or to correctly identify the existence of conflicting information (e.g., Kobayashi 2015). Some researchers used open-ended questions that directly or indirectly required integration of information (e.g., Barzilai and Ka’adan 2017) or multiple-choice questions which required combining or corroborating information from multiple texts (e.g., Hilbert and Renkl 2008). We also identified three types of measures of integration processes: think aloud protocols of students working with multiple texts (e.g., Brand-Gruwel and Wopereis 2006), video-recorded observations of student work (e.g., Mateos and Solé 2009), and analyses of students’ oral discourse while learning with multiple texts (e.g., Gagnière et al. 2012). Other measures that recurred in only one or two studies included analyses of study materials, interviews, metastrategic probes, and more. With the exception of the intertextual verification tasks and multiple-choice tests, which were employed in a minority of the studies, the measures were scored using highly diverse researcher-created coding schemes and rubrics.

The Effectiveness of Integration Interventions

A summary of the 21 intervention studies in which we examined effect sizes, including study population, intervention length, intervention description, measures, and effect sizes, is provided in Appendix A (Online Supplement). Seven of these studies included posttests only, 12 studies included pretests and posttests, and only two studies included pre-, post-, and delayed tests.

Among these studies, 15 (71.4%) reported at least one statistically significant medium to large effect (g > 0.4, Hattie 2009) on a measure associated with integration. The education level of the participants in these studies ranged from 6th grade to higher education, and the intervention length ranged from a single session to 80 hours. Most of the positive effects were on students’ written essays, including dimensions such as structure, relations between texts, and argumentation (e.g., De La Paz and Felton 2010; González-Lamas et al. 2016). Positive effects were also found on responses to integrative open-ended or multiple-choice questions (e.g., Reisman 2012), on planning processes (e.g., use of organizers, Daher and Kiewra 2016), and on metastrategic knowledge of integration (Barzilai and Ka’adan 2017).

Characteristics of Effective Interventions

Table 4 details the educational levels, disciplines, text types, intertextual relations, number of texts, task types, instructional foci, instructional practices, and measures of the 15 effective intervention studies in comparison to all 21 intervention studies. Close to one half of the effective interventions were among upper primary and lower secondary school students (6th to 9th grades). One third took place in higher education and one fifth in upper secondary school. About one half of the effective interventions were in the discipline of history. Fewer interventions took place in language arts, social sciences, and sciences contexts. Secondary texts and contrasting intertextual relations were prevalent in these studies, similar to the entire corpus. However, the number of texts was lower than in the entire corpus: about three and a half texts on average.

Effective interventions employed a variety of writing tasks including argument, inquiry, synthesis, and compare and contrast tasks. In all of these interventions, students were taught integration processes, or how to integrate; in about one third of the interventions, students also learned about text structures; and about one fifth of the interventions addressed the value of integration. None of these interventions included instruction of integration criteria. The most frequent instructional practices, employed in two thirds or more of the effective interventions, were explicit instruction of integration, collaborative discussions and practice, graphic organizers or representations, modeling integration processes, and individual practice.

Of the six interventions that did not result in statistically significant medium to large effects, two examined time spent organizing and presenting as their dependent variable. This indicator might not be sensitive enough to adequately capture instructional effects. Two other interventions that did not produce significant outcomes were only one session long.

Discussion

Learning to Integrate Across Education Levels and Disciplines

Research on promoting intertextual integration has so far addressed mostly secondary school and higher education populations. Students’ integration skills develop significantly over upper primary, lower secondary, and upper secondary school levels (Dutt-Doner et al. 2007; Spivey and King 1989). Hence, researchers may prefer to address populations that exhibit greater readiness for engaging with integration. Nonetheless, students use multiple texts for various tasks already in primary school. Additionally, because of the complexity of intertextual integration processes, it may make sense to support their acquisition in a gradual and spiral manner starting from younger ages. Two studies demonstrate that 6th graders can be successfully taught to integrate multiple texts (Martínez et al. 2015; Wissinger and De La Paz 2016). More research is needed to extend this small pool of studies, to examine if integration can be taught successfully to younger students, and to examine additional methods for advancing young students’ integration competence.

The studies we found addressed integration in diverse academic disciplines. However, different disciplines were emphasized in different education levels. Specifically, whereas history was the dominant discipline in upper secondary school, science and social science topics were more common in higher education. In upper secondary school, students may be focused on advanced disciplinary studies, and multiple texts may be perceived to be especially essential to advanced history studies. Additionally, the high proportion of history studies among the effective interventions (see Table 4) suggests that integration instruction may be more mature and developed in this discipline. In higher education, in contrast, the research populations were often social science (e.g., psychology and education) students. Hence, the topics in higher education interventions tended to address either students’ study areas or scientific and socio-scientific topics that have everyday relevance. Another noticeable trend was that integration was taught in language arts contexts mostly in primary and lower secondary school. This may reflect the greater attention devoted to language arts in lower grades. Nonetheless, because disciplinary genres and practices can substantially impact integration, a more systematic study of integration instruction across different disciplines is needed and could contribute to our understanding of the conditions under which integration skills can transfer across disciplines.

Learning to Integrate with Different Types of Texts and Tasks

In the entire corpus, students integrated about five texts on average. However, the number of texts in the (quasi-)experimental interventions we examined was lower: about three and a half texts on average (see Table 4). This suggests that to successfully teach integration, researchers might decrease difficulty by providing a smaller number of texts. One of the issues we still know little about is how the number of texts can impact integration processes and their acquisition.

The texts employed in the studies were highly diverse and included a wide range of secondary, primary, and literary genres. Although the focus of our investigation was not on visual information sources, we also identified a substantial number of studies that used such information sources, e.g., charts or photographs, along with verbal information sources (e.g., Cameron et al. 2017). In one quarter of the studies, students integrated information from websites, selected by themselves or by the researchers (e.g., Raes et al. 2012). However, little information was provided about the nature of these websites or how the unique features of online texts might impact integration. Also, we found no explicit mention of social media texts (e.g., blogs, posts, or user comments) in the studies. In light of the changing nature of texts in networked societies, it may be important to begin addressing students’ capacities to integrate these nontraditional texts as well (Bråten et al. 2018).

An interesting feature of the texts that were employed in the studies was that they frequently presented conflicting viewpoints or arguments (e.g., Kobayashi 2015). This suggests that the challenge of integrating multiple texts often overlaps with the challenge of helping students understand and reconcile contrasting viewpoints. An explicit focus of many interventions was to help students meaningfully deal with such conflicts. This focus was also reflected in the high proportion of argument tasks in the body of studies. These tasks typically required students to form and justify a position based on the texts they read. To do so, students were instructed to engage in processes such as weighing source reliability and quality of arguments and evidence in order to reconcile contrasting viewpoints (e.g., Wissinger and De La Paz 2016). Nonetheless, because relations among texts are diverse, it is important that students also learn to integrate texts that present complementary or overlapping information. Students may have difficulties identifying commonalities among texts or inferring cross-textual categories. An additional future challenge may be helping students to recognize a variety of intertextual relations among texts and to adapt their integration processes accordingly.

The range and diversity of the integration tasks that students were asked to perform were striking. In addition to several frequently recurring task types (argument, synthesis, inquiry, summary, compare and contrast, and narrative tasks), we also identified numerous task types that were used more rarely. This diversity indicates that integration serves many purposes and suggests that there may be value in familiarizing students with multiple types of tasks that build on multiple texts.

Some studies compared the effects of different task types on integration processes and outcomes (e.g., Gil et al. 2010a). Although much can be learned about integration from comparing different task types, the mixed results of these studies suggest that caution is needed in drawing conclusions regarding the impact of tasks. This impact appears to depend on the knowledge, beliefs, and skills that learners bring to the task, as well as the learning context. Additionally, the ways in which readers construe their reading context may affect their task interpretations (Britt et al. 2018). Thus, tasks cannot be interpreted in isolation from learners and contexts.

Another approach taken to writing tasks in the studies was to focus on a single type of writing task or genre (e.g., argument, synthesis, or compare and contrast). Such studies usually involved explanations and supports that specifically addressed the conventions and requirements of these genres (e.g., Boscolo et al. 2007). These studies suggest that students’ familiarity with text structures and styles cannot be taken for granted and that successful integration instruction might need to address these.

If, however, integrative writing genres are highly diverse, and if successfully teaching integration requires adequate familiarity with specific writing genres and with the ways in which multiple texts can be connected within these genres, then promoting intertextual integration may be a formidable challenge in K-12 education. Addressing this challenge might require rethinking the place of integration in the curriculum. Rather than being taught only in the context of specific writing genres, integration should be systematically addressed across a range of genres, tasks, and disciplines. As students gradually learn to write in different genres in various disciplinary contexts, they can also learn to flexibly integrate multiple texts in their writing, in an appropriate style. More research is needed to examine how students might best be introduced to multiple integrative writing genres and how this might contribute to enhancing their integration competence.

Integration Instruction: What and How Do Students Learn?

A natural focus of studies that aim to promote integration is on teaching and scaffolding integration strategies. However, some of the studies we reviewed suggested several additional aspects of integration that may warrant attention. First, because integration is an effortful and complex activity, students may benefit from understanding the epistemic purposes and value of integration. For example, students can learn to appreciate why forming an opinion based on multiple information sources may be better than forming an opinion based on a single information source (Barzilai and Ka’adan 2017). It is possible that understanding of the importance of integration could also arise from repeated engagement in this practice. However, addressing the value of integration, through discussions and modeling, for example, might help make the complex and effortful processes involved in integration more meaningful to students. Second, several studies pointed to the importance of addressing students’ understandings of text structures (e.g., Kirkpatrick and Klein 2009). Understanding how texts are organized can serve both the comprehension of individual texts and the production of new integrative texts. Third, students should also be able to evaluate and revise their integrative representations and products (Rouet and Britt 2011). Hence, they may benefit from learning epistemic and communicative criteria and standards for evaluating integration outcomes. For example, students may be taught assessment criteria, such as use of intertextual macropropositions and linguistic cohesion, which they can use to self-assess their writing (Segev-Miller 2004).

In terms of the MD-TRACE model (Rouet and Britt 2011), as well as the more general model of reading as problem solving (RESOLV) by Britt et al. (2018), knowledge of integration strategies, of the value and purposes of integration, of text structures, and of integration criteria can all be considered as part of the internal resources that readers can draw on to interpret contexts and tasks and to make processing decisions. However, in contrast to knowledge of integration strategies, little attention has been paid to students’ knowledge of the value and purposes of integration, of text structures, and of integration standards. Hence, an important goal for future research may be to devote more attention to these aspects and to examine whether addressing them might lead to better learning outcomes and better transfer. Additional issues that may deserve more empirical attention are how to help students better understand relations among sources as they integrate and how to promote students’ motivation to engage in intertextual integration.

Unsurprisingly, many of the instructional practices we identified in the studies (e.g., explicit instruction, modeling, collaborative practice, individual practice, and feedback) are generally recognized as good practices of strategy instruction (e.g., Graham et al. 2005). Hence, in this section, we elaborate on instructional practices that may apply more specifically to integration. One of these practices is the development of integration process prompts. In many studies, researchers and teachers designed and employed specific cognitive or metacognitive prompts for guiding integration processes (e.g., De La Paz 2005). These prompts, for example, directed students to attend to similarities and differences in the texts they were reading and to organize information from the texts (e.g., González-Lamas et al. 2016). Providing such prompts, in oral or written form, may help students to start forming connections between texts while reading and to plan selection and organization processes.

Another interesting recurring practice was engaging students in summarizing and annotating individual texts, in preparation for integration. Some initial evidence suggests that such practices can indeed benefit integration (Britt and Sommer 2004; Hagen et al. 2014; Kobayashi 2009). Summaries and annotations may help students identify and select important and relevant ideas from the texts. In addition, they may help readers develop better organized, more durable representations that can be drawn on more readily when reading subsequent texts (Britt and Sommer 2004).

A third practice that featured prominently in the studies was the use of graphic organizers. We found diverse uses of graphic organizers, including tables, maps, and Post-it notes, to classify and connect information (e.g., Darowski et al. 2016). One of the challenges of dealing with multiple texts is the fact that information is spread over separate pages. Assembling information from multiple texts in a single display may help readers judiciously select information and create intertextual connections. Additionally, the structure of the organizer can scaffold categorization and linking processes by pointing to relevant elements and categories in and about the texts. Initial evidence suggests that graphic organizers can benefit integration (Barzilai and Ka’adan 2017; Hilbert and Renkl 2008). More research is needed to examine if and how graphic organizers can contribute to intertextual integration.

We found multiple examples of uses of digital technologies to promote integration, such as providing instruction or online scaffolds (e.g., Raes et al. 2012). However, the contribution of digital technologies to improving integration is still an under-researched area.

Integration Instruction: What Works and How Do We Know That?

Our review indicates that integration can be successfully taught, with medium to large effect sizes, from 6th grade to university. Five instructional practices recurred in two thirds or more of the effective intervention studies: explicit instruction of integration, collaborative discussions and practice, using graphic organizers or representations, modeling integration processes, and individual practice (see Table 4). These practices, and their combinations, may be promising directions that deserve more attention.

The predominant measure used to assess students’ integration skills was analysis of their written essays. Researchers also used, albeit less frequently, a range of additional product and process measures including intertextual verification tasks, open-ended questions, multiple-choice questions, think aloud protocols, video-recorded observations, and analyses of students’ oral discourse. Most of these measures were scored by hand using highly diverse coding schemes. The lack of agreement on dimensions and standards for assessing integration quality limits the possibilities of comparing the effects of instructional approaches and practices. More work is needed in order to develop shared definitions of integration quality.

Several additional methodological limitations constrain conclusions about the effectiveness of integration instruction. There are relatively few studies that have examined integration instruction in pre-post control group designs. Such designs are important for detecting whether change has occurred and for identifying causes of change. There is a particular lack of studies employing delayed assessments that can be used to examine the effects of integration instruction over time. Additionally, because most studies combine multiple instructional practices, it is difficult to draw conclusions regarding the efficacy of specific practices.

Limitations of This Review

One limitation of our review is the diversity of terms under which integration of multiple texts has been studied in various domains. Although we did our best to identify and comprehensively address relevant terms, our searches may have failed to identify studies that do not include these terms. Another limitation is the high diversity of the studies in terms of age groups, disciplines, durations, and more. Nonetheless, this diversity also affords a broad view of how integration is addressed in various contexts by researchers and teachers. Of course, the methodological limitations mentioned in the previous section should be taken into account when considering the findings of this review. We hope that future research will address these methodological limitations and will continue broadening the understanding of how integration of multiple texts can be successfully taught. Improving students’ inclinations and capabilities to meaningfully and critically integrate information from multiple texts is vital for enabling informed participation in twenty-first-century knowledge societies.

References

References marked with an asterisk indicate studies included in the review.

Afflerbach, P., & Cho, B.-Y. (2009). Identifying and describing constructively responsive comprehension strategies in new and traditional forms of reading. In S. E. Israel & G. G. Duffy (Eds.), Handbook of research on reading comprehension (pp. 69–90). New York: Routledge.

Alexander, P. A., & DRLRL. (2012). Reading into the future: competence for the 21st century. Educational Psychologist, 47(4), 259–280. https://doi.org/10.1080/00461520.2012.722511.

*Argelagós, E., & Pifarré, M. (2012). Improving information problem solving skills in secondary education through embedded instruction. Computers in Human Behavior, 28(2), 515–526. https://doi.org/10.1016/j.chb.2011.10.024.

Barzilai, S., & Eshet-Alkalai, Y. (2015). The role of epistemic perspectives in comprehension of multiple author viewpoints. Learning and Instruction, 36, 86–103. https://doi.org/10.1016/j.learninstruc.2014.12.003.

*Barzilai, S., & Ka’adan, I. (2017). Learning to integrate divergent information sources: the interplay of epistemic cognition and epistemic metacognition. Metacognition and Learning, 12(2), 193–232. https://doi.org/10.1007/s11409-016-9165-7.

Barzilai, S., & Strømsø, H. I. (2018). Individual differences in multiple document comprehension. In J. L. G. Braasch, I. Bråten, & M. T. McCrudden (Eds.), Handbook of multiple source use (pp. 99–116). New York: Routledge.

Barzilai, S., & Zohar, A. (2012). Epistemic thinking in action: evaluating and integrating online sources. Cognition and Instruction, 30(1), 39–85. https://doi.org/10.1080/07370008.2011.636495.

*Boscolo, P., Arfé, B., & Quarisa, M. (2007). Improving the quality of students’ academic writing: an intervention study. Studies in Higher Education, 32(4), 419–438. https://doi.org/10.1080/03075070701476092.

Braasch, J. L. G., & Bråten, I. (2017). The discrepancy-induced source comprehension (D-ISC) model: basic assumptions and preliminary evidence. Educational Psychologist, 52(3), 167–181. https://doi.org/10.1080/00461520.2017.1323219.

*Brand-Gruwel, S., & Wopereis, I. (2006). Integration of the information problem-solving skill in an educational programme: the effects of learning with authentic tasks. Technology, Instruction, Cognition & Learning, 4, 243–263.

Brand-Gruwel, S., Wopereis, I., & Walraven, A. (2009). A descriptive model of information problem solving while using internet. Computers & Education, 53(4), 1207–1217. https://doi.org/10.1016/j.compedu.2009.06.004.

Brante, E. W., & Strømsø, H. I. (2017). Sourcing in text comprehension: a review of interventions targeting sourcing skills. Educational Psychology Review. https://doi.org/10.1007/s10648-017-9421-7.

*Bråten, I., & Strømsø, H. I. (2010). Effects of task instruction and personal epistemology on the understanding of multiple texts about climate change. Discourse Processes, 47(1), 1–31. https://doi.org/10.1080/01638530902959646.

Bråten, I., Britt, M. A., Strømsø, H. I., & Rouet, J.-F. (2011). The role of epistemic beliefs in the comprehension of multiple expository texts: toward an integrated model. Educational Psychologist, 46(1), 48–70. https://doi.org/10.1080/00461520.2011.538647.

Bråten, I., Anmarkrud, Ø., Brandmo, C., & Strømsø, H. I. (2014). Developing and testing a model of direct and indirect relationships between individual differences, processing, and multiple-text comprehension. Learning and Instruction, 30, 9–24. https://doi.org/10.1016/j.learninstruc.2013.11.002.

Bråten, I., Braasch, J., & Salmerón, L. (2018). Reading multiple and non-traditional texts: new opportunities and new challenges. In E. B. Moje, P. Afflerbach, P. Enciso, & N. K. Lesaux (Eds.), Handbook of reading research (Vol. V). New York: Routledge.

Britt, M. A., & Rouet, J.-F. (2012). Learning with multiple documents: component skills and their acquisition. In J. R. Kirby & M. J. Lawson (Eds.), Enhancing the quality of learning: dispositions, instruction, and learning processes (pp. 276–314). New York: Cambridge University Press.

*Britt, M. A., & Sommer, J. (2004). Facilitating textual integration with macro-structure focusing tasks. Reading Psychology, 25(4), 313–339. https://doi.org/10.1080/02702710490522658.

*Britt, M. A., Wiemer-Hastings, P., Larson, A. A., & Perfetti, C. A. (2004). Using intelligent feedback to improve sourcing and integration in students’ essays. International Journal of Artificial Intelligence in Education, 14(3, 4), 359–374.

Britt, M. A., Rouet, J.-F., & Braasch, J. L. G. (2013). Documents as entities: extending the situation model theory of comprehension. In M. A. Britt, S. R. Goldman, & J.-F. Rouet (Eds.), Reading—from words to multiple texts (pp. 160–179). New York: Routledge.