Abstract

Digital competence is crucial for technology integration in education, with teacher educators playing a vital role in preparing student teachers for digitalized environments. In our conceptualization of teachers’ digital competence (TDC), we emphasize its embeddedness in a professional context. The Digital Competence for Educators (DigCompEdu) framework aligns with this understanding, yet research focusing on teacher educators is limited. To address this gap, we followed a quantitative research strategy to explore different sources of validity evidence for the DigCompEdu in a small, non-representative Hungarian teacher-educator sample (N = 183) via an online questionnaire. Our study, regarding the DigCompEdu as a measure of TDC, aims to (1) establish validity evidence based on internal structure concerns via Partial Least Squares structural equation modelling to evaluate the validity and reliability of the tool, (2) compare TDC self-categorization with test results to provide validity evidence based on the consequences of testing, and (3) explore validity evidence based on relationships of TDC with other variables such as age, technological, and pedagogical competence. Our findings reveal a significant mediating effect of professional engagement on teacher educators’ ability to support student teachers’ digital competence development. Despite the sample’s limitation, this study contributes to refining the DigCompEdu framework and highlights the importance of professional engagement in fostering digital competence among teacher educators.


1 Introduction

The integration of technology into education is essential for equipping students with the necessary skills for a rapidly digitizing world (Llorente-Cejudo et al., 2023; Starkey, 2020). Preparing student teachers to use digital technologies has been an enduring focus in teacher education (Falloon, 2020). This issue has gained even greater importance due to school closures during the COVID-19 pandemic (König et al., 2020).

Teacher educators have an important role in this issue: they act as digital role models for student teachers, which can be a useful way to provide ideas for integrating information and communication technologies (ICT) in teaching (Røkenes & Krumsvik, 2016; Saltos-Rivas et al., 2023). However, teacher educators often possess limited digital competence, which hinders their ability to guide pre-service teachers in technology integration (Cabero-Almenara et al., 2021). A recent study exploring factors related to digital competence found a low level of digital technology use (due to a lack of pedagogical and technological knowledge) among Hungarian teacher educators (Dringó-Horváth, 2018). In our study, we aim to explore this issue further by focusing on teacher digital competence (TDC).

Insufficient exposure to digital technologies in teacher training programmes is linked to a lack of self-efficacy and use of technology among teachers (Amhag et al., 2019). Others pointed out a mismatch between the demands early-career teachers face and the preparation they receive regarding the use of digital technologies (Instefjord & Munthe, 2017). Furthermore, recent research confirms that lecturers lack the skills to use ICT for didactical purposes in university teaching (Liesa-Orus et al., 2023). In our study, we aim to explore this issue regarding the Hungarian teacher education context.

Since teacher preparation plays a pivotal role in building digitally competent teachers, there is a need to address potential gaps in teacher educators’ digital competencies (Krumsvik, 2014; Tømte et al., 2015). However, the concept of TDC lacks consensus in operationalization (Claro et al., 2024).

To address these research gaps, our study adds to the theoretical and practical understanding of TDC focusing on teacher educators by establishing comprehensive validity evidence for measuring TDC.

The remainder of this article is organized as follows. In Section 2 we provide an overview of the concept of TDC, especially focusing on a higher education setting. We introduce frameworks describing TDC and explore previous research results. Based on the critical review of previous studies, we state the aims of our empirical research. In Section 3, we provide a detailed description of the methodology of our study. In Section 4, we describe the results of the study organized by our research questions. Next, in Section 5 we discuss the major findings, implications and limitations of our study and finally, in Section 6 we close the article by describing our conclusions.

2 Literature review

2.1 Frameworks for teacher digital competence

The literature clearly distinguishes between digital literacy, as the ability to understand and use information presented via computers, and digital competence, as proficiency in using ICT in a professional context (Spante et al., 2018). This latter notion of digital competence corresponds to the integration of ICT in a daily work setting, which follows the concept of professional digital competence: the ability of teachers to work in a digitalized environment (e.g. teaching with digital tools, managing digital learning environments etc.) as defined by Starkey (2020). The concept of professional digital competence signals a shift towards a more complex, context-sensitive understanding of TDC (McDonagh et al., 2021; Siddiq et al., 2024).

According to Falloon (2020), several frameworks and models have been built to assess and develop the digital capability of teachers, such as:

- SAMR (Substitution Augmentation Modification Redefinition) model (Puentedura, 2003).
- TPACK (Technological, Pedagogical and Content Knowledge) model (Mishra & Koehler, 2006).
- DECK (distributed thinking and knowing, engagement, communication and community, knowledge building) framework (Fisher et al., 2012).
- CDL (Critical Digital Literacy) framework (Hinrichsen & Coombs, 2013).
- TEIL (Teacher Education Information Literacy) framework (Klebansky & Fraser, 2013).
- ISTE (International Society for Technology in Education) standards for educators (https://www.iste.org/standards/for-educators).
- Digital Competence of Educators (DigCompEdu) framework (Redecker, 2017).
- PIC (passive, interactive, creative) – RAT (replace, amplify, transform) framework (Kimmons et al., 2020).
- TDC (Teacher Digital Competence) framework (Falloon, 2020).

The presented models and their combinations frequently form the basis of research on digital competencies among teachers (Agyei & Voogt, 2011; Cerratto Pargman et al., 2018; Chou et al., 2020; Phillips, 2017), teachers and students (Akturk & Ozturk, 2019; Hunter, 2017; Reichert & Mouza, 2018) and teacher educators (Nelson et al., 2019). It is out of the scope of this paper to compare all the different frameworks. For a detailed analysis from a pedagogical perspective see for example the article of Kimmons et al. (2020).

Previous research using such frameworks rarely focused on the pedagogy related to digital competence (i.e. it lacked a context-sensitive understanding of TDC) and mainly focused on students (Zhao et al., 2021) rather than teacher educators (Krumsvik, 2014). Our study intends to fill this gap by using TPACK as a theoretical framework, since it is one of the most widespread frameworks that encompasses pedagogical aspects in conceptualizing TDC, and by testing the validity and reliability of the DigCompEdu, a relatively new instrument that has not been applied in a Hungarian context before. Since DigCompEdu is a comprehensive framework, able to grasp contextual and pedagogical factors in explaining TDC (Muammar et al., 2023), it is well-suited to the aims of this research. Next, we present the relevant aspects of the TPACK model and the DigCompEdu framework.

2.1.1 The TPACK model

The most widespread framework is the TPACK (Technological, Pedagogical and Content Knowledge) model (Mishra & Koehler, 2006). TPACK can be used as a theoretical or analytical framework to explain or interpret digital technology use and technology integration (Akturk & Ozturk, 2019; Atun & Usta, 2019; Y.-H. Chen & Jang, 2019; De Freitas & Spangenberg, 2019; Dorfman et al., 2019; Ocak & Baran, 2019). The model describes the intersections of content knowledge (CK), pedagogical knowledge (PK), technology knowledge (TK), pedagogical content knowledge (PCK), technological content knowledge (TCK), technological pedagogical knowledge (TPK) and technological pedagogical content knowledge (TPACK) depicted in Fig. 1. CK is a teacher’s understanding of the specific subject matter they teach. PK is the teachers’ knowledge of teaching methods, classroom management, student learning processes etc. TK is the teachers’ understanding of technologies, their functions and applications. PCK is the teachers’ ability to transform a given subject matter into an engaging lesson. TCK is a teacher’s understanding of how they can use technology to present the given subject matter. TPK is teachers’ knowledge of how technology can be used to transform the teaching and learning process. Finally, TPACK is a teacher’s ability to use technology for the teaching of a given subject (Mishra & Koehler, 2006). In addition, the model also emphasizes that TPACK is situated and understood in a given context, addressing Contextual Knowledge (XK) which is a teacher’s understanding of the organizational context (Mishra, 2019).

The TPACK model developed by Mishra and Koehler (Source: https://tpack.org/)

In the comprehensive, survey-based research of Nelson et al. (2019), which took place among 842 US teacher educators across 50 US states, the authors examined the state and direction of technology integration preparation in teacher education programs. Their study showed that adoption of TPACK is generally low among teacher educators, and it is affected by complex institutional and personal factors. Development in the areas of TPACK is a contextualized process (Phillips, 2016); its enactment is socially mediated in the workplace and can be considered professional identity development (Phillips, 2017). Clarke (2017) emphasizes the importance of self-reflection and the role of communities of practice in developing teachers’ TPACK. Development in TPACK is linked to better learning outcomes, and increased motivation and attention of students (Atun & Usta, 2019; Chandra & Mills, 2015; Chang et al., 2013; C.-C. Chen & Lin, 2016; Clarke, 2017). Therefore, it is important for student teachers to continuously reflect on their pedagogical beliefs regarding the use of digital technologies for pedagogical purposes (Otrel-Cass et al., 2012).

Besides TPACK elements and self-reflection, age is often discussed in connection with digital competencies (Starkey, 2020). For example, Agyei and Voogt (2011) found that younger teachers reported a higher level of anxiety, while experienced teachers reported a higher level of digital competence. Guo et al. (2008) found no differences in ICT scores between digital natives and digital immigrants. Another cross-national study, including 6 European and Asian countries and 574 teacher educators, found a correlation between age and TPACK factors, but no correlation between gender, academic level and TPACK (Castéra et al., 2020). The ambiguity of results on the connection between age and TPACK is also supported by Jiménez-Hernández et al. (2020). Therefore, in our study, we will also focus on age, and technological and pedagogical competencies as determinants of teacher digital competence.

Although TPACK is a widely used framework, it is often criticized for its inaccurate and insufficient definitions, its lack of practicality (Willermark, 2018) and its lack of focus on associated competencies that would aid implementation (Falloon, 2020). The previously highlighted DigCompEdu framework offers a better-operationalised conceptualization of TDC which encompasses the professional expertise of teachers and also their professional development (Spante et al., 2018). Considering that DigCompEdu is based on extensive expert and stakeholder consultations and aims to structure existing insights and evidence into one comprehensive model that applies to all educational contexts (Caena & Redecker, 2019) it can be considered as a useful self-reflection tool to assess teacher educators’ digital competencies. The next section will describe the DigCompEdu in detail.

2.1.2 Digital competence of educators (DigCompEdu)

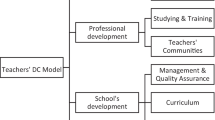

The European Commission Joint Research Centre has developed a digital competence framework, especially for education: the European Framework for the Digital Competence of Educators. The DigCompEdu helps to better understand the digital competencies teachers need to develop to meaningfully integrate digital technologies in education and support students in acquiring digital competencies (Redecker, 2017). The framework captures educators’ digital competencies in six areas (described by 22 statements altogether). The six areas (Fig. 2) cover educators’ professional and pedagogical competencies and include learners’ competencies as well:

- “Area 1: Professional Engagement: Using digital technologies for communication, collaboration and professional development.
- Area 2: Digital Resources: Sourcing, creating and sharing digital resources.
- Area 3: Teaching and Learning: Managing and orchestrating the use of digital technologies in teaching and learning.
- Area 4: Assessment: Using digital technologies and strategies to enhance assessment.
- Area 5: Empowering Learners: Using digital technologies to enhance inclusion, personalization and learners’ active engagement.
- Area 6: Facilitating Learners’ Digital Competence: Enabling learners to creatively and responsibly use digital technologies for information, communication, content creation, wellbeing and problem-solving.” (Redecker, 2017, p. 16).

The model (according to the respondents’ strengths and weaknesses in each area) also sorts respondents into different proficiency levels of digital competence – similar to the Common European Framework of Reference for Languages which also differentiates 6 levels, from A1 to C2.

The DigCompEdu framework (Redecker, 2017, p. 16)

2.1.3 Previous studies regarding the validity of the DigCompEdu

Previous empirical studies confirmed the validity of the instrument in a Moroccan (Benali et al., 2018), German (Ghomi & Redecker, 2019) and Spanish (higher education) sample (Cabero-Almenara et al., 2020). The validity of the tool was further confirmed by Párraga et al. (2022), Llorente-Cejudo et al. (2023) and Inamorato dos Santos et al. (2023). Researchers point out potential reliability issues (e.g. regarding digital resources; Ghomi & Redecker, 2019) or suggest removing items (e.g. Párraga et al., 2022); therefore, we follow the suggestions of Llorente-Cejudo et al. (2023) and revisit the validity and reliability of the instrument. Our paper adds a Hungarian (teacher educator) sample to the list of DigCompEdu validation studies. Validity is considered a unitary concept examining different sources of validity evidence (Reeves & Marbach-Ad, 2016). In our study we explore validity evidence based on internal structure concerns (“the degree to which the relationships among test items and test components conform to the construct on which the proposed test score interpretations are based”; AERA, 2014), consequences of testing (“soundness of proposed interpretations for their intended uses”; AERA, 2014, p. 19) and relations with other variables (“the relationship of test scores to variables external to the test”; AERA, 2014, p. 16).

2.2 Present study

Although the DigCompEdu was validated in several cases, some studies pointed out potential reliability and validity issues. In addition, teacher educators’ digital competence has rarely been addressed in previous studies. It is important to reevaluate these measurement tools in different contexts to provide further validity evidence; therefore, in our study, we explore validity evidence for the DigCompEdu based on internal structure concerns (“the degree to which the relationships among test items and test components conform to the construct on which the proposed test score interpretations are based”; AERA, 2014) regarding a Hungarian teacher educator sample.

We hypothesise that (H1) the DigCompEdu will demonstrate evidence of validity based on internal structure, as indicated by strong internal consistency and reliability measures.

Previous studies often showed that respondents overestimate their digital skills in self-reported measures (Tomczyk, 2021). The DigCompEdu instrument allows for self-categorization of the level of digital competence before completing the DigCompEdu questionnaire and directly after that. Inamorato dos Santos et al. (2023) found that respondents tended to change their perceptions to higher proficiency levels after completing the questionnaire. As Wang and Chu (2023) also highlight, self-efficacy (believing in one’s capability to plan and execute an action to reach an outcome; Bandura et al., 1999) can have a direct effect on behaviour, predicting digital competence. However, self-reflection, as required for establishing the level of self-efficacy in TDC, is rarely addressed in studies (Bilbao Aiastui et al., 2021). Therefore, in our study, we explore validity evidence based on the consequences of testing (“soundness of proposed interpretations for their intended uses”; AERA, 2014, p. 19).

We hypothesise that (H2) the DigCompEdu will demonstrate evidence of validity based on consequences of testing, as indicated by a significant relationship between teachers’ self-categorization of their digital competency level and categorisation based on the results of the DigCompEdu.

Further studies explored TDC concerning background variables. Most notably, the systematic mapping study of Saltos-Rivas et al. (2023) explored the ambiguous results of different variables on university teachers’ digital competence. While focusing on digital competence in general, the study found a positive effect of age and teaching experience, while considering digital teaching competence, the results indicated a negative effect for both variables. This draws our attention to the established logic of the TPACK model, allowing for a complex understanding of the technological and pedagogical aspects of TDC. Therefore, in our study, we explore validity evidence based on relations with other variables (“the relationship of test scores to variables external to the test”; AERA, 2014, p. 16).

We hypothesise that (H3) the DigCompEdu will demonstrate evidence of validity based on relations to other variables, as indicated by significant connections between test scores and respondents’ age, technological competence (as measured by participation in ICT training), and pedagogical competence (as measured by having teaching qualifications).

By providing further validity evidence for the DigCompEdu tool, our study could contribute to replicating the results of previous validation studies and further our understanding of TDC regarding a teacher-educator sample.

3 Methodology

3.1 Research design

The study followed a quantitative, non-experimental, correlational research design (Johnson, 2001) to establish comprehensive validity evidence for the DigCompEdu framework, focusing on teacher educators’ digital competencies. The design allows the examination of the relationships between variables as they naturally occur, without manipulation. Furthermore, in alignment with our research aim, we wanted to adapt a previously validated tool to measure TDC in a less explored target group (teacher educators).

We implemented an online questionnaire (using Qualtrics) for Hungarian teacher educators as a data collection method due to its efficiency in reaching a large number of respondents. First, we developed the research instrument, which involved a carefully planned translation process and pilot testing (described in Section 3.2). After that, the data gathering took place, for which we needed to map potential participants and send them the call for participation and the informed consent form (described in Section 3.3). The whole research process is illustrated in Fig. 3 and further detailed in the upcoming sections.

We conducted the research following general research ethics regulations, approved by the Research Ethics Committee of the corresponding author’s university. Participation was voluntary, respondents received adequate information regarding the research project and gave their consent before participation.

The following table summarizes our research aims, hypotheses and criteria for testing them (Table 1).

In the following sections, we are going to describe the research instrument, the participants of the study, the data-gathering process and the characteristics of the sample.

3.2 Research instrument

We used the DigCompEdu questionnaire developed by the Joint Research Centre of the European Commission and, following the aims of our study, we relied on the higher education-specific version (CheckIn for Higher Education; the tool was discontinued as of 31 January 2022 and an updated version is available at the JRC website). As there was no Hungarian version available, we followed the guidelines for cross-cultural adaptation of self-report measures (Beaton et al., 2000). First, two independent experts (native Hungarian speakers with a high level of English expertise) translated the English questionnaire into Hungarian (forward translation). One of the translators was an expert in digital education, the other was not. The authors, acting as an expert group, consolidated the two translations, resolving any discrepancies between the two versions. In the next stage, two other experts (who were naïve to the outcome measured) translated the Hungarian version back to English (back translation). The original and the new English versions were checked again for semantic, idiomatic, experiential and conceptual equivalence by the authors, acting as an expert group refining the instrument. During the process, the authors carefully discussed each of the 22 items to reflect the original claims in the most accurate way and at the same time ensure that they are understandable in Hungarian higher education contexts as well. The final version of the translated questionnaire was piloted via structured interviews in a cognitive testing process with 10 higher education teachers. Based on the feedback, the instrument was fine-tuned by the expert group to better reflect the peculiarities of the Hungarian language and Hungarian higher education.

The final instrument consisted of 5 blocks:

- Block 1: demographic questions (gender, age, work tenure, teaching qualification, disciplinary area, participation in ICT-trainings),
- Block 2: self-rate question regarding the participants’ perception of their level of digital competence,
- Block 3: the DigCompEdu instrument (22 items in 6 areas) introduced in detail in Section 2.1.2. (The DigCompEdu instrument is in Appendix Table 10).
- Block 4: another self-rate question, presented after completing the DigCompEdu instrument so participants could rate their level of digital competence again.

Respondents could rate each statement of the DigCompEdu on a 5-point scale where each scale point was uniquely described along with the various levels of competence. For example, for the first item of Professional Engagement, respondents could have rated their level of competence (from A1 to C2) along the following scale:

- I rarely use digital communication channels.
- I use basic digital communication channels, e.g. e-mail.
- I combine different communication channels, e.g. e-mail and class blog or the department’s website.
- I systematically select, adjust and combine different digital solutions to communicate effectively.
- I reflect on, discuss and proactively develop my communication strategies.

The response options are described more precisely, therefore providing the opportunity for higher validity, but this aspect also makes the questionnaire more complex and would require more time to complete.

3.3 Participants of the study and data gathering

Our research focused on Hungarian teacher educators. Unfortunately, the Hungarian education system does not provide statistics regarding the number of academics teaching in different educational programmes since this is an internal human resource management decision of higher education institutions. As a method for gathering respondents for our questionnaire, we mapped the webpages of all universities which provide general teacher education in Hungary (13 HEIs out of the 63 HEIs in Hungary) and selected the e-mail addresses of those academics who teach in departments related to teacher education (Faculties of Humanities, Informatics, Natural Sciences, Institutes of Education and Psychology, departments of different subject didactics). Altogether we gathered 1675 e-mail addresses which can be considered a well-established professional guess regarding the size of the population. In the summer of 2019, the questionnaire was sent to the selected 1675 academics teaching in fields related to teacher education in universities where they provide teacher education programmes. Although we have identified and reached out to the whole population of teacher educators, our sampling is considered a non-probability sampling with voluntary responses. Data gathering lasted for 3 weeks with an additional two reminders after the first two weeks. After data cleaning (filtering out incomplete questionnaires, questionnaires that were completed in less than 5 min) we retained 183 questionnaires.
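The cleaning rule and the resulting return rate described above can be sketched in a few lines of Python. This is only an illustration: the record layout and the toy records are hypothetical, and only the three constants (the 5-minute cut-off, the 1675 invitations and the 183 retained questionnaires) come from the text.

```python
# Hypothetical record layout: (answered_all_items, completion_minutes).
# The toy records below are illustrative and are not the study's data.

MIN_MINUTES = 5    # completion-time cut-off stated in the text
INVITED = 1675     # e-mail addresses contacted
RETAINED = 183     # questionnaires kept after cleaning


def keep_response(answered_all_items: bool, completion_minutes: float) -> bool:
    """Keep a questionnaire only if it is complete and not rushed."""
    return answered_all_items and completion_minutes >= MIN_MINUTES


def return_rate(retained: int, invited: int) -> float:
    """Share of invited academics whose questionnaire was retained."""
    return retained / invited


toy_records = [(True, 12.0), (False, 9.5), (True, 3.2), (True, 5.0)]
cleaned = [record for record in toy_records if keep_response(*record)]

print(len(cleaned))                              # 2
print(f"{return_rate(RETAINED, INVITED):.1%}")   # 10.9%
```

The computed share of 10.9% corresponds to the roughly 11% return rate reported for the study.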

The percentage of returned questionnaires (~ 11%) raises several questions regarding possible biases, in light of which our methodology should be fine-tuned (e.g. the questionnaire may have been too long, so that not all respondents finished it; the questionnaire was sent to all employees, but it may have been completed mainly by those who have higher competencies in ICT or check official emails more regularly). A solution would be to decrease the complexity of the instrument by employing a simple Likert-scale version instead of the detailed descriptors. This approach would reduce both the complexity of the instrument and its usability as a self-reflection tool, but it would provide more comprehensive data in terms of respondents and unlock other analysis methods that require continuous variables.

3.4 Sample

As we cannot describe the population of teacher educators (as we mentioned in the previous section) we cannot make claims regarding the representativeness of our sample. However, according to the professional estimate of the authors, the sample can be treated as balanced regarding gender, age, work tenure and teaching qualifications of respondents as the population of Hungarian teacher educators is also skewed towards females. Table 2 describes the characteristics of our sample.

We cannot generalize our results to the Hungarian teacher-educator population; however, since our aims are exploratory regarding the validity and reliability of the instrument, the sample is sufficient for answering the questions raised in this study.

4 Results

This study aimed to assess the reliability and validity of the DigCompEdu questionnaire and its application in the Hungarian teacher education context. We examined validity based on contemporary concepts, considering test interpretation and use. First, we explored validity evidence based on internal structure concerns (H1) and based on the consequences of testing (H2), then we examined validity evidence based on relations with other variables (age, technological and pedagogical competence as measured by ICT-training participation and teaching qualification) (H3).

4.1 Validity evidence based on internal structure concerns

Validity evidence based on internal structure concerns means “the degree to which the relationships among test items and test components conform to the construct on which the proposed test score interpretations are based” (AERA, 2014). As the DigCompEdu is a well-established tool from a theoretical perspective, we set out to evaluate this theoretical model with factor analysis using the Partial Least Squares (PLS) approach as implemented in SmartPLS 3. PLS is recommended for exploratory research and works well with small sample sizes (Hair et al., 2019; Lowry & Gaskin, 2014). Furthermore, we decided to use a PLS approach instead of a covariance-based (CB) approach because our data is non-metric (ordinal) and non-normally distributed, and our sample size is small. According to the suggestions of Hair et al. (2017), a PLS approach is appropriate in this case.

Based on the theoretical model of the DigCompEdu, we drew the following factor structure for the initial analysis, using partial least squares structural equation modelling (PLS-SEM) in SmartPLS 3. (For those who are not familiar with the technical details of the PLS-SEM approach, the software review of Sarstedt and Cheah (2019) could offer some pointers.) We employed a factor weighting scheme with a maximum of 1000 iterations and bootstrapping (1000 subsamples) with a two-tailed significance level of 0.05 and the bias-corrected and accelerated confidence interval method. We used the standardised values (z-scores) of our variables. The results of the PLS algorithm show the outer loadings of reflective factors, the path coefficients between factors and the R² measures of the explained variance of the factors by their indicators (see Fig. 4).
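To give a feel for what case-resampling bootstrapping with 1000 subsamples and a two-tailed 0.05 level involves, the following Python sketch may help. It is a deliberately simplified stand-in and not a reproduction of the SmartPLS 3 procedure: it bootstraps a single standardised regression slope between two simulated score vectors rather than a PLS path model, and it uses a plain percentile interval instead of the bias-corrected and accelerated method. All data and function names are hypothetical.

```python
import random
from statistics import mean, pstdev


def zscores(values):
    """Standardise a variable to mean 0 and standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def slope(x, y):
    """Standardised simple-regression slope (equals the correlation of x and y)."""
    zx, zy = zscores(x), zscores(y)
    return sum(a * b for a, b in zip(zx, zy)) / len(zx)


def percentile_bootstrap(x, y, n_boot=1000, alpha=0.05, seed=1):
    """Two-tailed percentile interval for the slope, resampling cases with replacement."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]
        xs, ys = [x[i] for i in idx], [y[i] for i in idx]
        if len(set(xs)) > 1 and len(set(ys)) > 1:  # skip degenerate resamples
            estimates.append(slope(xs, ys))
    estimates.sort()
    lo = estimates[int(alpha / 2 * len(estimates))]
    hi = estimates[int((1 - alpha / 2) * len(estimates)) - 1]
    return lo, hi


# Simulated scores for 183 hypothetical respondents (not the study's data).
rng = random.Random(42)
x = [rng.gauss(0, 1) for _ in range(183)]
y = [0.6 * xi + 0.8 * rng.gauss(0, 1) for xi in x]

lo, hi = percentile_bootstrap(x, y)
print(f"slope = {slope(x, y):.3f}, 95% interval = [{lo:.3f}, {hi:.3f}]")
```

A coefficient is treated as significant at the chosen level when its interval excludes zero, which is the logic behind the bootstrapped p values reported for the path coefficients.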

The SmartPLS 3 software provides path coefficients (with t statistics and p values after bootstrapping), outer loadings, R², reliability measures (Average Variance Extracted, AVE; Composite Reliability, CR; Cronbach’s alpha) and model fit measures (Standardized Root Mean Square Residual, SRMR). With these indicators, we can assess the theoretical factor structure.
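For readers who want to see how the reliability measures relate to the outer loadings, the sketch below computes AVE and CR from standardised loadings and Cronbach's alpha from raw item scores, using the standard textbook formulas. The loadings and item scores are invented for illustration and are not values from our tables; the function names are our own.

```python
from statistics import variance


def ave(loadings):
    """Average variance extracted: mean of the squared standardised loadings."""
    return sum(l * l for l in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability from standardised outer loadings."""
    total = sum(loadings)
    error = sum(1 - l * l for l in loadings)  # indicator error variances
    return total ** 2 / (total ** 2 + error)


def cronbach_alpha(items):
    """Cronbach's alpha; `items` holds one list of respondent scores per item."""
    k = len(items)
    totals = [sum(scores) for scores in zip(*items)]
    item_variance = sum(variance(scores) for scores in items)
    return k / (k - 1) * (1 - item_variance / variance(totals))


toy_loadings = [0.8, 0.7, 0.6]  # hypothetical three-indicator factor
print(round(ave(toy_loadings), 3))                    # 0.497
print(round(composite_reliability(toy_loadings), 3))  # 0.745
```

The toy factor illustrates why the measures are read together: its CR exceeds 0.7, yet its AVE falls just short of 0.50, so convergent validity would still be questioned.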

First, we consider the outer loadings of indicators on the different factors. According to Hair et al. (2019), an outer loading that is higher than 0.705 can be considered acceptable. Table 3 summarizes the outer loadings for the theoretical model.

Several items fail to reach the threshold of 0.7; the items for Area 2 (Digital Resources) are especially problematic. Analysing the model further, we can examine the path coefficients between our factors (Table 4).

The analysis shows that the path coefficients between Professional Engagement and other areas (except for Facilitating Learners’ Digital Competencies) are significant, while other areas show no significant connection to the outcome variable. Finally, we will look at the reliability measures (Table 5).

According to the rule of thumb provided by Hair et al. (2019), the internal consistency reliability of Area 2 (Digital Resources) of our model seems to be rather problematic. Problems with the factor of Digital Resources are also reported by Ghomi and Redecker (2019) in a German sample. We also identified issues concerning other factors (DR, EL, FLDC, TL) regarding their convergent validity (AVE < 0.50) as well, which prompted us to reconsider the factor structure of the instrument.

According to the results of the analysis, we considered removing items with low outer loadings (PE1, DR1, DR2, DR3, AS2, EL1, TL2, TL3, FLDC1, FLDC3). The analysis highlighted all three elements of Digital Resources (in addition to the previously highlighted issues); therefore, we opted to delete this dimension, as it was reported as a problematic dimension by other researchers as well (e.g. Ghomi & Redecker, 2019). Considering the joint teaching and research focus of Hungarian academics, we were confident that the dimension of Digital Resources was more connected to respondents’ research duties (e.g. use of different internet sites and search strategies to find resources). Considering elements for Teaching and Learning, Assessment and Empowering Learners, the removal of one element reduced these dimensions to two indicators, which is not ideal; therefore, we decided to combine them, as they belong to the broader category of educators’ pedagogical competence. These elements are often grouped, as is evident, for example, in the article of Demeshkant, Potyrała and Tomczyk (2020). Interestingly, the removed items describe pedagogical practices that relate to collaborative learning (e.g. TL2 - I monitor my students’ activities and interactions in the collaborative online environments we use) and to inclusion and equity perspectives (e.g. AS2 - I analyse all data available to me to timely identify students who need additional support or EL1 - When I create digital assignments for students I consider and address potential digital problems.). These pedagogical elements are not strong in Hungarian higher education (OECD, 2017); therefore, we can expect that the lack of pedagogical consideration could affect the digital dimension as well. To better reflect academics’ digital competence, we decided to drop the elements highlighted by low outer loadings.

Regarding the path coefficients, we hypothesized that the factors describing educators’ pedagogical competencies (assessment, teaching and learning, empowering learners) should be treated as one factor (digital competencies), while we retained professional engagement (as educators’ professional competencies) and facilitating learners’ digital competencies as an outcome variable. This restructuring did not compromise the original dimensionality of the DigCompEdu instrument, since the framework itself also uses such a categorisation.

The new, empirical model was also evaluated using the algorithms of SmartPLS 3 with the same settings as before. Figure 5 describes the results of our analysis.

The new model has better outer loadings than the theoretical model; they are presented in Table 6.

Although the outer loadings for AS3 and FLDC4 are slightly below the threshold of 0.7, these can be accepted in this new model considering the high outer loadings of the other items. Also, according to Hair et al. (2021), items with low outer loadings should only be excluded if their removal raises the composite reliability above 0.7, which in our case had already been reached. Table 7 shows the path coefficients.

Regarding the results of bootstrapping, the path coefficient between Professional Engagement and Facilitating Learners’ Digital Competencies is not significant (p = .517), while other connections are significant (p < .001).

The model also assumes a mediation effect regarding Professional Engagement through Digital Competence in Facilitating Learners’ Digital Competencies. Although the direct effect of Professional Engagement on Facilitating Learners’ Digital Competencies turned out to be not significant, the indirect effect of Professional Engagement through Digital Competence on Facilitating Learners’ Digital Competencies is significant (O = 0.678; SD = 0.180; t = 3.764; p < .001). Therefore, we have a full mediation model because the direct effect from the independent variable to the dependent variable is not significant, while the indirect effect is. Table 8 details the total effects of the model.
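The reported test statistic and the mediation decision can be retraced with a short sketch. It assumes that t is the bootstrapped estimate divided by its standard deviation and that a standard normal approximation is acceptable for the p-value (the software may use a t distribution instead); the function names and the 0.05 significance level are illustrative assumptions. The small gap between the recomputed value and the reported t = 3.764 presumably stems from rounding of O and SD.

```python
import math


def bootstrap_t(estimate, bootstrap_sd):
    """t statistic as the ratio of the estimate to its bootstrap SD."""
    return estimate / bootstrap_sd


def two_sided_p_normal(t_value):
    """Two-sided p-value under a standard normal approximation (assumption)."""
    return math.erfc(abs(t_value) / math.sqrt(2))


def mediation_type(direct_p, indirect_p, alpha=0.05):
    """Classify mediation from the significance of direct and indirect effects."""
    if indirect_p >= alpha:
        return "no mediation"
    return "partial mediation" if direct_p < alpha else "full mediation"


# Values reported in the text: O = 0.678, SD = 0.180, direct effect p = .517.
t_indirect = bootstrap_t(0.678, 0.180)
p_indirect = two_sided_p_normal(t_indirect)
label = mediation_type(direct_p=0.517, indirect_p=p_indirect)
```

Under these assumptions the sketch reproduces the reported pattern: a significant indirect effect combined with a non-significant direct effect.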

In the total effects model, all components are significant, therefore we can conclude that our new model adequately reflects the relationship between our factors. Table 9 details the reliability statistics of the scales.

According to the analysis, all reliability statistics are acceptable; therefore, the indicators in our new model reflect the factors adequately. The model fit statistics for our new model also seem to be satisfactory (SRMR = 0.040, 95% CI [0.042; 0.047]). To conclude the results of our PLS factor analysis, we found evidence based on internal structure concerns (H1) that supports our new model, although the small sample size needs to be considered regarding the generalizability of the findings. The proposed model for the DigCompEdu takes into consideration the mediating effect of professional engagement and contains fewer items, thereby allowing for easier data gathering. Next, we examine whether the DigCompEdu framework can be used as a self-reflection tool.

4.2 Validity evidence based on the consequences of testing

Validity evidence based on the consequences of testing means the “soundness of proposed interpretations for their intended uses” (AERA, 2014, p. 19). The DigCompEdu is intended as a self-reflection tool for educators to assess their digital competencies.

In the questionnaire, respondents had to evaluate their level of digital competence (based on the system provided by the framework, which resembles the Common European Framework of Reference for Languages: from A1 to C2) twice within the same instrument: before completing the DigCompEdu part and again after answering all the DigCompEdu items. We computed their level of digital competence based on the original model (0–20 points: A1; 20–33 points: A2; 34–49 points: B1; 50–65 points: B2; 66–80 points: C1; 80–88 points: C2) and on our new model as well. For the latter, we summed the scores of professional engagement, digital competence and facilitating learners’ digital competencies to get an overall score for DigCompEdu. To achieve a minimum score of 0 for easier interpretation and visualization, we transformed the variable by adding 4.11 to every respondent’s result. Therefore, the new DigCompEdu variable ranges from 0 to 9.95, with a mean of 4.11 and a standard deviation of 2.2. The distribution of DigCompEdu differs from the normal distribution (based on the Kolmogorov-Smirnov test: p < .001). For the categorization, we used the same percentage distribution of scores as in the original model (0–2.3 points: A1; 2.3–3.8 points: A2; 3.9–5.6 points: B1; 5.7–7.4 points: B2; 7.5–9.1 points: C1; 9.1–9.95 points: C2).
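The categorization step for the new model can be sketched as follows. The cut points are those listed above; how scores falling exactly on a shared boundary (2.3 or 9.1) or in the small gaps between ranges were handled is not stated, so this sketch assumes that each listed upper limit is inclusive and that anything above it belongs to the next level. The names `LEVELS`, `UPPER_BOUNDS`, `shift_score` and `to_level` are illustrative.

```python
from bisect import bisect_left

LEVELS = ["A1", "A2", "B1", "B2", "C1", "C2"]
# Upper limits of A1..C1 on the shifted 0-9.95 scale, as listed in the text.
UPPER_BOUNDS = [2.3, 3.8, 5.6, 7.4, 9.1]
SHIFT = 4.11  # constant added so that the minimum score becomes 0


def shift_score(raw_score):
    """Move a raw composite score onto the 0-9.95 scale."""
    return raw_score + SHIFT


def to_level(shifted_score):
    """Map a shifted score to a level; upper limits are inclusive (assumption)."""
    return LEVELS[bisect_left(UPPER_BOUNDS, shifted_score)]


# The sample mean on the shifted scale (4.11) falls into the B1 band.
mean_level = to_level(4.11)
```

Treating the upper limits as inclusive is only one possible reading; a different tie rule would move respondents who sit exactly on a boundary into the neighbouring level.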

We compared the four categorical variables that describe respondents’ level of digital competence (self-rating before completing the questionnaire, self-rating after completing the questionnaire, levels based on the original model, and levels based on the new model) in the following diagram (Fig. 6), which shows the percentage of respondents in each category.

Although we can observe small fluctuations between the pre- and post-self-ratings, we see a completely different picture if we consider the categorization based on our new model. Respondents rarely categorised themselves (N = 1) in the lowest tier (A1), while the computed scores in our new model suggest that a substantial portion of the respondents (N = 35) belong to that category. While most of the respondents rated their level of digital competence as B1 (N = 68 for self-categorization; N = 45 for categorization based on scores from the new model), according to our new model, the most populated category is A2 (N = 54 for self-categorization; N = 60 for categorization based on scores from the new model). Other studies also reported an intermediate level (B1) of digital competence for higher education teachers (Cabero-Almenara et al., 2021; Inamorato dos Santos et al., 2023). To address our hypothesis (H2), we explored the contingency table of respondents’ self-categorization (after completing the DigCompEdu) and the categorization based on the scores from the new model. The results indicate a statistically significant, moderate association between the two categorical variables (χ²(25) = 202, p < .001; Cramér’s V = 0.470). Based on the results, self-categorization is fairly, but not perfectly, predictive of categorization based on scores from the new model.
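The reported effect size can be retraced from the chi-square statistic. The sketch below assumes a 6 × 6 contingency table (six levels from A1 to C2 in both variables, which is consistent with 25 degrees of freedom) and assumes that all 183 respondents of the sample entered the table; the function name is illustrative.

```python
import math


def cramers_v(chi_square, n, n_rows, n_cols):
    """Cramér's V from a chi-square statistic and the table dimensions."""
    return math.sqrt(chi_square / (n * (min(n_rows, n_cols) - 1)))


# Degrees of freedom of a 6 x 6 table: (6 - 1) * (6 - 1) = 25.
degrees_of_freedom = (6 - 1) * (6 - 1)

# Reported chi-square of 202; N = 183 is an assumption about the valid cases.
v = cramers_v(chi_square=202, n=183, n_rows=6, n_cols=6)
```

Under these assumptions the value rounds to the reported 0.470.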

Looking at descriptive differences, we cannot claim validity based on the consequences of testing. While the self-ratings of participants tend to be higher, the computed scores based on the proposed model indicate a lower level of digital competence. As respondents’ self-ratings and their categorization based on test scores are not congruent (e.g. several respondents identified themselves as having a B2 level of digital competence, while according to our model, they are on the A1 level), we cannot confirm the usability of DigCompEdu as a self-reflection tool (H2). Further research is necessary to gain evidence regarding validity based on the consequences of testing.

Finally, we examine our model concerning background variables to provide further validity evidence for the model.

4.3 Validity evidence based on relations with other variables

Validity evidence based on relations with other variables means “the relationship of test scores to variables external to the test” (AERA, 2014, p. 16). Using the DigCompEdu variable, which was computed based on our proposed model, we examined its relation to our background variables (age, teaching qualification, ICT training) in a sample of Hungarian teacher educators to provide further validity evidence.

Besides age, the differences in the level of digital competence can be understood using the TPACK model. The DigCompEdu framework contains complex items that are a mixture of technological, pedagogical and content knowledge areas. In our questionnaire, we asked whether participants had any teaching qualifications (no, yes) or if they had participated in any ICT-related training (no, yes, as a trainer). We can use these variables as proxies for pedagogical and technological knowledge, respectively, as the lack of these aspects was highlighted by Dringó-Horváth (2018) as the main impediment for Hungarian teacher educators regarding the use of digital technology. We examined the scores on DigCompEdu (according to our proposed model) concerning pedagogical (teaching qualification) and technological (participation in ICT-related training) knowledge and age. Entering all three variables together in a general linear model, we tested between-subjects effects. Based on the results of Levene’s Test of Equality of Error Variances, the error variance can be treated as equal across groups (based on the median, Levene Statistic (19, 115) = 1.451, p = .117). Regarding heteroskedasticity, the variance of the errors does not depend on the values of the independent variables according to the modified Breusch-Pagan Test (χ²(1) = 3.24, p = .072). Therefore, we can proceed with the analysis and interpret the results.

The model itself proved to be significant (F(23) = 4.33, p < .001) and the included variables explained 35.7% (adjusted R²) of the variance of the DigCompEdu score. We found significant main effects for ICT Training (F(2) = 14.69, p < .001, \({\eta}_{p}^{2}\) = 0.203) and Age (F(4) = 3.35, p = .012, \({\eta}_{p}^{2}\) = 0.104), but no significant main effect for Teaching Qualification (F(1) = 1.96, p = .164, \({\eta}_{p}^{2}\) = 0.017). The interaction effects were not significant either (ICT Training * Age: F(8) = 1.38, p = .214, \({\eta}_{p}^{2}\) = 0.087; ICT Training * Teaching Qualification: F(2) = 0.18, p = .890, \({\eta}_{p}^{2}\) = 0.002; Age * Teaching Qualification: F(4) = 0.54, p = .706, \({\eta}_{p}^{2}\) = 0.018; ICT Training * Age * Teaching Qualification: F(2) = 1.14, p = .324, \({\eta}_{p}^{2}\) = 0.019). Figure 7 describes the means of DigCompEdu along the different groups examined.
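The link between the F statistics and the partial eta squared values can be illustrated with the standard conversion formula. The error degrees of freedom are not reported for the F tests; the sketch below assumes 115, a value borrowed from the second degrees of freedom of the Levene statistic, so it should be read as an illustration rather than as a restatement of the model output. The function name is illustrative.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its dfs."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


DF_ERROR = 115  # ASSUMPTION: taken from the Levene statistic, not from the F tests

# Main effects as reported in the text: (F, df_effect).
main_effects = {
    "ICT Training": (14.69, 2),
    "Age": (3.35, 4),
    "Teaching Qualification": (1.96, 1),
}

effect_sizes = {
    name: partial_eta_squared(f_value, df_effect, DF_ERROR)
    for name, (f_value, df_effect) in main_effects.items()
}
```

Under this assumption the recomputed main-effect sizes agree with the reported values to within rounding.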

Hypothesis 3 is only partially supported as teaching qualification (pedagogical competence) was not significant in our model, while age and ICT training (technological competence) showed significant main effects and explained a high portion of the variance of the DigCompEdu score.

5 Discussion

The study aimed to assess the reliability and validity of the DigCompEdu questionnaire and its application in the Hungarian teacher education context as a self-reflection tool. The analysis did not support the theoretical internal structure of the framework; therefore, a new, empirical model was proposed which takes into consideration the mediating effect of professional engagement on teacher educators’ ability to support student teachers’ digital competence development. It is not without precedent that researchers modify the general structure of the tool. Quast et al. (2023) reinterpreted the tool by operationalizing the 22 competencies and providing additional items that created dimensions like administration and professional development, lesson planning, and privacy and copyright. Considering our study as well, there is room for interpretation regarding teachers’ digital competence using the DigCompEdu framework.

Although validity evidence was found regarding the internal structure of the proposed model (H1), we failed to show validity evidence based on the consequences of testing, as respondents’ self-ratings and their categorization based on test scores are not congruent (H2). Comparing the scores based on the modified model, we found partial validity evidence based on relations with other variables (H3). Technological competence (as measured by participation in ICT training) and age were found to be significant variables explaining the variance in the DigCompEdu score, while pedagogical competence (measured as existing teaching qualification) was not.

Regarding our first hypothesis, the results further diversify the existing validity evidence regarding the intended use of the DigCompEdu instrument. Although Ghomi and Redecker (2019) reported lower reliability measures for certain areas of the DigCompEdu (Digital Resources, Assessment), overall, they confirmed the validity and reliability of the tool. This was the case in the research of Benali et al. (2018) as well: they found a lower corrected item-total correlation for an element from Area 2 (Digital Resources) but concluded that the DigCompEdu is a valid and reliable tool. Cabero-Almenara et al. (2020) reached the same conclusion. Another study by Cabero-Almenara et al. (2021) validated a 19-item version. Given the different number of items and methods (the cited studies employ a CB-SEM approach), we cannot compare the exact goodness of fit indices, but the ambiguous results are cause for concern.

Regarding our second hypothesis, the model fails to provide validity evidence based on the consequences of testing, as we found a mismatch between participants’ self-categorization and categorization based on the actual scoring of the instrument. Our results suggest that respondents tend to overestimate their level of digital competence compared to what the actual scoring proposes. Ghomi and Redecker (2019) found that their participants usually underestimated their level of digital competence; in our study, the opposite was the case.

Regarding our third hypothesis, we proposed that DigCompEdu scores are influenced by technological and pedagogical competencies and by respondents’ age as well. Analysing these connections, we can state that validity evidence based on relations with other variables can only be partially supported. We found significant differences regarding age and technological competencies but no differences regarding pedagogical competencies. Regarding pedagogical competence, Benali et al. (2018) reported (on a descriptive level) a higher digital competence for experienced teachers (which corresponds to age as well). A large-scale study conducted by the JRC found no significant differences between younger and more experienced academics, nor between female and male participants (Inamorato dos Santos et al., 2023). In our study, age was found to be an important variable, but teaching competence was not. Contrary to the previous finding, in our sample younger participants showed higher scores on the DigCompEdu. Cabero-Almenara et al. (2020) report a significant connection between participants’ DigCompEdu score and their participation in ICT programmes, which is in line with our findings as well.

6 Conclusions

6.1 Theoretical contributions

We found ambiguous results regarding the validity of DigCompEdu as a self-reflection tool. The theoretical added value of our study is the proposed new model, as it provides sufficient validity evidence based on internal structure concerns and, in part, based on relations with other variables, but fails to provide validity evidence based on the consequences of testing. This latter source of validity is important if we want to establish DigCompEdu as a valid self-reflection tool (intended use).

Our findings regarding the lack of impact of teaching qualifications on DigCompEdu scores could indicate (besides the possibility that it is not the qualification but actual pedagogical competencies that matter) a lack of synchronization between the technological, pedagogical and content knowledge (TPACK) of teacher educators. Another theoretical contribution of our study is the interpretation of the DigCompEdu from the perspective of the TPACK. Previous findings also confirm that teachers tend to adapt their teacher- and teaching-centred methods when using digital technologies, instead of rethinking learning outcomes and teaching activities by taking advantage of the true functionalities of digital solutions and transforming the teaching and learning process (Ocak & Baran, 2019; Paneru, 2018). Technological knowledge seems to have the greatest influence on teachers’ attitudes (Baturay et al., 2017), but knowing a technology does not automatically translate to the ability to integrate it into their teaching practice (TPACK) (Dorfman et al., 2019).

6.2 Practical implications

The study aimed to assess the reliability and validity of the DigCompEdu questionnaire and its application in the Hungarian teacher education context as a self-reflection tool from a practical perspective. In our hypotheses, we suggested that DigCompEdu is a valid self-reflection tool to measure the digital competencies of teacher educators.

Although our analysis did not support the original theoretical internal structure of the framework, we proposed a new, empirical model which takes into consideration the mediating effect of professional engagement on teacher educators’ ability to support student teachers’ digital competence development. The new model itself provides enough validity evidence based on internal structure concerns as we presented adequate reliability measures and model fit indices. Therefore, we emphasize professional development based on the nexus of TPACK and taking advantage of faculty learning communities to foster teacher educators’ professional identity development (Phillips, 2017) and the community aspect of knowledge sharing that could support self-efficacy beliefs (Blonder & Rap, 2017) e.g. through mentoring (Cerratto Pargman et al., 2018). The role of professional engagement is also strengthened by the study of Reisoğlu and Çebi (2020) in addition to the educational context, highlighted by García-Vandewalle et al. (2023).

6.3 Limitations and future research

Limitations stemming from the chosen research design are the lack of causal inference, potential confounding variables, limited generalizability and self-report bias.

Since our design adopted a correlational approach, it was not our aim to establish causal inference between our variables at this stage. In further research, it is worth addressing this issue, along with considering other variables as well (e.g. disciplinary background, attitudes toward ICT etc.).

The small sample size used in this research is also a limitation regarding the interpretation and generalisability of the results. Since our sample consists of teacher educators, further studies are needed to evaluate the instrument in other areas of higher education to gather validity evidence from a diverse population. Regarding the data analysis method, we chose Partial Least Squares factor analysis (SmartPLS 3) which is better suited to exploratory research and small sample sizes (Lowry & Gaskin, 2014).

A further limitation of this study stems from the nature of self-report questionnaires and the fact that we have used only one data source, which can inflate results (Common Method Bias) (George & Pandey, 2017). Currently, there is no other data source that can be used to answer our research questions and cross-check our results. Besides, the research instrument contains unique and precise scale points that refer to behavioural evidence instead of general perceptions. The possible biases regarding inflated results are further minimised by the anonymous nature of the questionnaire.

Despite these limitations, our chosen research design is still justified, but further studies are recommended with careful triangulation of research methods and data sources (e.g. linking student and teacher data) to overcome them.

Our investigations can partly support our hypotheses, as we found that pedagogical competencies did not play an important role in explaining the variance of the DigCompEdu score. Further studies would be needed to gather more validity evidence and to assess the tool in other disciplinary settings of higher education.

This study raised concerns regarding the use of DigCompEdu as a self-reflection tool highlighting the lack of convergent and discriminant validity evidence among the variables of the original model. Weaker psychometric results can stem from the pragmatic nature of the tool (Mattar et al., 2022). The contradictory findings emphasize the need for a deeper analysis of validity evidence regarding the DigCompEdu tool. Following our results, further research would be necessary to explore the role of DigCompEdu in teacher educators’ self-reflection and professional development practice and to examine in detail the connections between pedagogical and digital competencies.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

AERA. (2014). Standards for Educational and Psychological Testing. In American Educational Research Association (AERA) (2014 Edition). American Educational Research Association.

Agyei, D. D., & Voogt, J. M. (2011). Exploring the potential of the will, skill, tool model in Ghana: Predicting prospective and practicing teachers’ use of technology. Computers & Education, 56(1), 91–100.

Akturk, A. O., & Ozturk, H. S. (2019). Teachers’ TPACK levels and students’ self-efficacy as predictors of students’ academic achievement. International Journal of Research in Education and Science, 5(1), 283–294.

Amhag, L., Hellström, L., & Stigmar, M. (2019). Teacher educators’ use of digital tools and needs for digital competence in higher education. Journal of Digital Learning in Teacher Education, 35(4), 203–220. https://doi.org/10.1080/21532974.2019.1646169

Atun, H., & Usta, E. (2019). The effects of programming education planned with TPACK Framework on Learning outcomes. Participatory Educational Research, 6(2), 26–36.

Bandura, A., Freeman, W. H., & Lightsey, R. (1999). Self-efficacy: The exercise of control. Journal of Cognitive Psychotherapy, 13(2), 158–166. https://doi.org/10.1891/0889-8391.13.2.158

Baturay, M. H., Gokcearslan, S., & Sahin, S. (2017). Associations among teachers’ attitudes towards computer-assisted education and TPACK competencies. Informatics in Education, 16(1), 1–23. https://doi.org/10.15388/infedu.2017.01

Beaton, D. E., Bombardier, C., Guillemin, F., & Ferraz, M. B. (2000). Guidelines for the process of cross-cultural adaptation of self-report measures. Spine, 25(24), 3186–3191. https://doi.org/10.1097/00007632-200012150-00014

Benali, M., Kaddouri, M., & Azzimani, T. (2018). Digital competence of Moroccan teachers of English. International Journal of Education and Development Using Information and Communication Technology, 14(2), 99–120.

Bilbao Aiastui, E., Arruti Gómez, A., & Carballedo Morillo, R. (2021). A systematic literature review about the level of digital competences defined by DigCompEdu in higher education. Aula Abierta, 50(4), 841–850. https://doi.org/10.17811/rifie.50.4.2021.841-850

Blonder, R., & Rap, S. (2017). I like Facebook: Exploring Israeli high school chemistry teachers’ TPACK and self-efficacy beliefs. Education & Information Technologies, 22(2), 697–724.

Cabero-Almenara, J., Gutiérrez-Castillo, J. J., Palacios-Rodríguez, A., & Barroso-Osuna, J. (2020). Development of the teacher Digital competence validation of DigCompEdu Check-In questionnaire in the University Context of Andalusia (Spain). Sustainability, 12(5), 6094.

Cabero-Almenara, J., Guillén-Gámez, F. D., Ruiz-Palmero, J., & Palacios-Rodríguez, A. (2021). Digital competence of higher education professor according to DigCompEdu. Statistical research methods with ANOVA between fields of knowledge in different age ranges. Education and Information Technologies, 26(4), 4691–4708. https://doi.org/10.1007/s10639-021-10476-5

Caena, F., & Redecker, C. (2019). Aligning teacher competence frameworks to 21st century challenges: The case for the European Digital Competence Framework for Educators (DigCompEdu). European Journal of Education, 54(3), 356–369. https://doi.org/10.1111/ejed.12345

Castéra, J., Marre, C. C., Yok, M. C. K., Sherab, K., Impedovo, M. A., Sarapuu, T., Pedregosa, A. D., Malik, S. K., & Armand, H. (2020). Self-reported TPACK of teacher educators across six countries in Asia and Europe. Education and Information Technologies, 25(4), 1–17. https://doi.org/10.1007/s10639-020-10106-6

Cerratto Pargman, T., Nouri, J., & Milrad, M. (2018). Taking an instrumental genesis lens: New insights into collaborative mobile learning. British Journal of Educational Technology, 49(2), 219–234.

Chandra, V., & Mills, K. A. (2015). Transforming the core business of teaching and learning in classrooms through ICT. Technology Pedagogy & Education, 24(3), 285–301.

Chang, H. Y., Wu, H. K., & Hsu, Y. S. (2013). Integrating a mobile augmented reality activity to contextualize student learning of a socioscientific issue. British Journal of Educational Technology, 44(3), E95–E99.

Chen, C. C., & Lin, P. H. (2016). Development and evaluation of a context-aware ubiquitous learning environment for astronomy education. Interactive Learning Environments, 24(3), 644–661.

Chen, Y. H., & Jang, S. J. (2019). Exploring the relationship between self-regulation and TPACK of Taiwanese secondary In-Service teachers. Journal of Educational Computing Research, 57(4), 978–1002.

Chou, C., Hung, M., Tsai, C., & Chang, Y. (2020). Developing and validating a scale for measuring teachers’ readiness for flipped classrooms in junior high schools. British Journal of Educational Technology, 51(4), 1420–1435.

Clarke, A. M. (2017). A place for digital storytelling in Teacher Pedagogy. Universal Journal of Educational Research, 5(11), 2045–2055.

Claro, M., Castro-Grau, C., Ochoa, J. M., Hinostroza, J. E., & Cabello, P. (2024). Systematic review of quantitative research on digital competences of in-service school teachers. Computers & Education, 215, 105030. https://doi.org/10.1016/j.compedu.2024.105030

De Freitas, G., & Spangenberg, E. D. (2019). Mathematics teachers’ levels of technological pedagogical content knowledge and information and communication technology integration barriers. Pythagoras, 40(1), a431. https://doi.org/10.4102/pythagoras.v40i1.431

Demeshkant, N., Potyrała, K., & Tomczyk, L. (2020). Levels of academic teachers digital competence: Polish case-study. In H. J. So et al. (Eds.), Proceedings of the 28th International Conference on Computers in Education (pp. 591–601). Asia-Pacific Society for Computers in Education.

Dorfman, B. S., Terrill, B., Patterson, K., & Yarden, A. (2019). Teachers personalize videos and animations of biochemical processes: Results from a Professional Development Workshop. Chemistry Education Research and Practice, 20(4), 772–786.

Dringó-Horváth, I. (2018). IKT a tanárképzésben: A magyarországi képzőhelyek tanárképzési moduljában oktatók IKT-mutatóinak mérése. Új Pedagógiai Szemle, 9–10, 13–41.

Falloon, G. (2020). From digital literacy to digital competence: The teacher digital competency (TDC) framework. Educational Technology Research and Development, 68(5), 2449–2472. https://doi.org/10.1007/s11423-020-09767-4

Fisher, T., Denning, T., Higgins, C., & Loveless, A. (2012). Teachers’ knowing how to use technology: Exploring a conceptual Framework for Purposeful Learning Activity. Curriculum Journal, 23(3), 307–325. https://doi.org/10.1080/09585176.2012.703492

García-Vandewalle García, J. M., García-Carmona, M., Trujillo Torres, J. M., & Moya Fernández, P. (2023). Analysis of digital competence of educators (DigCompEdu) in teacher trainees: The context of Melilla, Spain. Technology, Knowledge and Learning, 28(2), 585–612. https://doi.org/10.1007/s10758-021-09546-x

George, B., & Pandey, S. K. (2017). We know the Yin—but where is the Yang? Toward a balanced approach on common source bias in public administration scholarship. Review of Public Personnel Administration. https://doi.org/10.1177/0734371X17698189

Ghomi, M., & Redecker, C. (2019). Digital Competence of Educators (DigCompEdu): Development and Evaluation of a Self-assessment Instrument for Teachers’ Digital Competence: Proceedings of the 11th International Conference on Computer Supported Education, pp. 541–548. https://doi.org/10.5220/0007679005410548

Guo, R. X., Dobson, T., & Petrina, S. (2008). Digital natives, digital immigrants: An analysis of age and ICT competency in teacher education. Journal of Educational Computing Research, 38(3), 235–254. https://doi.org/10.2190/EC.38.3.a

Hair, J. F., Matthews, L. M., Matthews, R. L., & Sarstedt, M. (2017). PLS-SEM or CB-SEM: Updated guidelines on which method to use. International Journal of Multivariate Data Analysis, 1(2), 107–123. https://doi.org/10.1504/ijmda.2017.087624

Hair, J. F., Risher, J. J., Sarstedt, M., & Ringle, C. M. (2019). When to use and how to report the results of PLS-SEM. European Business Review, 31(1), 2–24. https://doi.org/10.1108/EBR-11-2018-0203

Hair, J. F., Hult, G. T. M., Ringle, C. M., Sarstedt, M., Danks, N. P., & Ray, S. (2021). Partial least squares structural equation modeling (PLS-SEM) using R: A workbook. Springer Nature. https://doi.org/10.1007/978-3-030-80519-7

Hinrichsen, J., & Coombs, A. (2013). The five resources of critical digital literacy: A framework for curriculum integration. Research in Learning Technology, 21(1), 1–16. https://doi.org/10.3402/rlt.v21.21334

Hunter, J. (2017). High possibility classrooms as a pedagogical framework for technology integration in classrooms: An inquiry in two Australian secondary schools. Technology Pedagogy & Education, 26(5), 559–571.

Inamorato dos Santos, A., Chinkes, E., Carvalho, M. A. G., Solórzano, C. M. V., & Marroni, L. S. (2023). The digital competence of academics in higher education: Is the glass half empty or half full? International Journal of Educational Technology in Higher Education, 20(1), 9. https://doi.org/10.1186/s41239-022-00376-0

Instefjord, E. J., & Munthe, E. (2017). Educating digitally competent teachers: A study of integration of professional digital competence in teacher education. Teaching and Teacher Education, 67, 37–45. https://doi.org/10.1016/j.tate.2017.05.016

Jiménez-Hernández, D., González-Calatayud, V., Torres-Soto, A., Martínez Mayoral, A., & Morales, J. (2020). Digital competence of future secondary school teachers: Differences according to gender, age and branch of knowledge. Sustainability, 12(22), Article 9473. https://doi.org/10.3390/su12229473

Johnson, B. (2001). Toward a new classification of nonexperimental quantitative research. Educational Researcher, 30(2), 3–13. https://doi.org/10.3102/0013189X030002003

Kimmons, R., Graham, C. R., & West, R. E. (2020). The PICRAT model for technology integration in teacher preparation. Contemporary Issues in Technology and Teacher Education, 20(1). https://citejournal.org/volume-20/issue-1-20/general/the-picrat-model-for-technology-integration-in-teacher-preparation. Accessed 20 Sept 2023.

Klebansky, A., & Fraser, S. P. (2013). A strategic approach to curriculum design for information literacy in teacher education – implementing an information literacy conceptual Framework. Australian Journal of Teacher Education, 38(11), 103–125. https://doi.org/10.14221/ajte.2013v38n11.5

König, J., Jäger-Biela, D. J., & Glutsch, N. (2020). Adapting to online teaching during COVID-19 school closure: Teacher education and teacher competence effects among early career teachers in Germany. European Journal of Teacher Education, 43(4), 608–622. https://doi.org/10.1080/02619768.2020.1809650

Krumsvik, R. J. (2014). Teacher educators’ digital competence. Scandinavian Journal of Educational Research, 58(3), 269–280. https://doi.org/10.1080/00313831.2012.726273

Liesa-Orús, M., Lozano Blasco, R., & Arce-Romeral, L. (2023). Digital competence in University lecturers: A meta-analysis of teaching challenges. Education Sciences, 13(5), 508. https://doi.org/10.3390/educsci13050508

Llorente-Cejudo, C., Barragán-Sánchez, R., Puig-Gutiérrez, M., & Romero-Tena, R. (2023). Social inclusion as a perspective for the validation of the DigCompEdu Check-In questionnaire for teaching digital competence. Education and Information Technologies, 28(8), 9437–9458. https://doi.org/10.1007/s10639-022-11273-4

Lowry, P. B., & Gaskin, J. (2014). Partial least squares (PLS) structural equation modeling (SEM) for building and testing behavioral causal theory: When to choose it and how to use it. IEEE Transactions on Professional Communication, 57(2), 123–146. https://doi.org/10.1109/TPC.2014.2312452

Mattar, J., Ramos, D. K., & Lucas, M. R. (2022). DigComp-based digital competence assessment tools: Literature review and instrument analysis. Education and Information Technologies, 27(8), 10843–10867. https://doi.org/10.1007/s10639-022-11034-3

McDonagh, A., Camilleri, P., Engen, B. K., & McGarr, O. (2021). Introducing the PEAT model to frame professional digital competence in teacher education. Nordic Journal of Comparative and International Education (NJCIE), 5(4). https://doi.org/10.7577/njcie.4226

Mishra, P. (2019). Considering contextual knowledge: The TPACK diagram gets an upgrade. Journal of Digital Learning in Teacher Education, 35(2), 76–78. https://doi.org/10.1080/21532974.2019.1588611

Mishra, P., & Koehler, M. J. (2006). Technological pedagogical content knowledge: A framework for teacher knowledge. Teachers College Record, 108(6), 1017–1054.

Muammar, S., Hashim, K. F. B., & Panthakkan, A. (2023). Evaluation of digital competence level among educators in UAE Higher Education Institutions using Digital competence of educators (DigComEdu) framework. Education and Information Technologies, 28(3), 2485–2508. https://doi.org/10.1007/s10639-022-11296-x

Nelson, M. J., Voithofer, R., & Cheng, S. L. (2019). Mediating factors that influence the technology integration practices of teacher educators. Computers & Education, 128, 330–344. https://doi.org/10.1016/j.compedu.2018.09.023

Ocak, C., & Baran, E. (2019). Observing the indicators of technological pedagogical content knowledge in science classrooms: Video-based research. Journal of Research on Technology in Education, 51(1), 43–62.

OECD. (2017). Supporting entrepreneurship and innovation in higher education in Hungary. OECD. https://doi.org/10.1787/9789264273344-en

Otrel-Cass, K., Khoo, E., & Cowie, B. (2012). Scaffolding with and through videos: An example of ICT-TPACK. Contemporary Issues in Technology and Teacher Education (CITE Journal), 12(4), 369–390.

Paneru, D. R. (2018). Information communication technologies in teaching English as a foreign language: Analysing EFL teachers’ TPACK in Czech elementary schools. Center for Educational Policy Studies Journal, 8(3), 141–163.

Párraga, L. M., Cejudo, C. L., & Osuna, J. B. (2022). Validation of the DigCompEdu check-in questionnaire through structural equations: A study at a university in Peru. Education Sciences, 12(8), 574. https://doi.org/10.3390/educsci12080574

Phillips, M. (2016). Re-contextualising TPACK: Exploring teachers’ (non-)use of digital technologies. Technology, Pedagogy and Education, 25(5), 555–571.

Phillips, M. (2017). Processes of practice and identity shaping teachers’ TPACK enactment in a community of practice. Education and Information Technologies, 22(4), 1771–1796.

Puentedura, R. R. (2003). A matrix model for designing and assessing network-enhanced courses. Hippasus. http://www.hippasus.com/resources/matrixmodel/. Accessed 20 Sept 2023.

Quast, J., Rubach, C., & Porsch, R. (2023). Professional digital competence beliefs of student teachers, pre-service teachers and teachers: Validating an instrument based on the DigCompEdu framework. European Journal of Teacher Education, 0(0), 1–24. https://doi.org/10.1080/02619768.2023.2251663

Redecker, C. (2017). Digital competence of educators (Y. Punie, Ed.). Publications Office of the European Union.

Reeves, T. D., & Marbach-Ad, G. (2016). Contemporary test validity in theory and practice: A primer for discipline-based education researchers. CBE Life Sciences Education, 15(1). https://doi.org/10.1187/cbe.15-08-0183

Reichert, M., & Mouza, C. (2018). Teacher practices during Year 4 of a one-to-one mobile learning initiative. Journal of Computer Assisted Learning, 34(6), 762–774.

Reisoğlu, İ., & Çebi, A. (2020). How can the digital competences of pre-service teachers be developed? Examining a case study through the lens of DigComp and DigCompEdu. Computers & Education, 156, 103940. https://doi.org/10.1016/j.compedu.2020.103940

Røkenes, F. M., & Krumsvik, R. J. (2016). Prepared to teach ESL with ICT? A study of digital competence in Norwegian teacher education. Computers & Education, 97, 1–20. https://doi.org/10.1016/j.compedu.2016.02.014

Saltos-Rivas, R., Novoa-Hernández, P., & Rodríguez, R. S. (2023). Understanding university teachers’ digital competencies: A systematic mapping study. Education and Information Technologies, 28(12), 16771–16822. https://doi.org/10.1007/s10639-023-11669-w

Sarstedt, M., & Cheah, J. H. (2019). Partial least squares structural equation modeling using SmartPLS: A software review. Journal of Marketing Analytics, 7(3), 196–202. https://doi.org/10.1057/s41270-019-00058-3

Siddiq, F., Røkenes, F. M., Lund, A., & Scherer, R. (2024). New kid on the block? A conceptual systematic review of digital agency. Education and Information Technologies, 29(5), 5721–5752. https://doi.org/10.1007/s10639-023-12038-3

Spante, M., Hashemi, S. S., Lundin, M., & Algers, A. (2018). Digital competence and digital literacy in higher education research: Systematic review of concept use. Cogent Education, 5(1), 1519143. https://doi.org/10.1080/2331186X.2018.1519143

Starkey, L. (2020). A review of research exploring teacher preparation for the digital age. Cambridge Journal of Education, 50(1), 37–56. https://doi.org/10.1080/0305764X.2019.1625867

Tomczyk, Ł. (2021). Declared and real level of digital skills of future teaching staff. Education Sciences, 11(10), 619. https://doi.org/10.3390/educsci11100619

Tømte, C., Enochsson, A. B., Buskqvist, U., & Kårstein, A. (2015). Educating online student teachers to master professional digital competence: The TPACK-framework goes online. Computers & Education, 84, 26–35. https://doi.org/10.1016/j.compedu.2015.01.005

Wang, Z., & Chu, Z. (2023). Examination of higher education teachers’ self-perception of digital competence, self-efficacy, and facilitating conditions: An empirical study in the context of China. Sustainability, 15(14), 10945. https://doi.org/10.3390/su151410945

Willermark, S. (2018). Technological pedagogical and content knowledge: A review of empirical studies published from 2011 to 2016. Journal of Educational Computing Research, 56(3), 315–343. https://doi.org/10.1177/0735633117713114

Zhao, Y., Llorente, P., & Sánchez Gómez, M. C. (2021). Digital competence in higher education research: A systematic literature review. Computers & Education, 168, 104212. https://doi.org/10.1016/j.compedu.2021.104212

Funding

Open access funding provided by Eötvös Loránd University. The research presented in the study was financed by the project Education informatics in higher education (no. 20629B800) supported by Károli Gáspár University of the Reformed Church in Hungary.

The corresponding author was working under project no. PD134206, which was implemented with support from the National Research, Development and Innovation Fund of Hungary, financed under the OTKA Post-doctoral Excellence Programme funding scheme.

Ethics declarations

Conflict of interest

None.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Horváth, L., M. Pintér, T., Misley, H. et al. Validity evidence regarding the use of DigCompEdu as a self-reflection tool: The case of Hungarian teacher educators. Educ Inf Technol (2024). https://doi.org/10.1007/s10639-024-12914-6

DOI: https://doi.org/10.1007/s10639-024-12914-6