Abstract

Technologies for collaboration within the oil and gas industry, which are referred to as Integrated Operations, challenge traditional geographical, disciplinary, and organisational boundaries. Fuelled by the availability of sensor networks, faster data transfer technologies, shared data exchange formats, and collaborative work flows, Integrated Operations entail difficult transformations at the technological, social, and political levels. We describe and discuss the efforts of a Scandinavian oil and gas company to develop an information infrastructure for real-time subsea environmental monitoring. This accentuates the ongoing controversy among environmental concerns, fisheries, and the oil and gas industry. Theoretically leaning on infrastructuring and, methodologically, on the concept of infrastructural inversion, our analysis specifically targets the evolution of emergent infrastructures. We identify and discuss the increasing degree of entanglement of the infrastructuring process over time by empirically characterizing two concepts: (1) bootstrapping, which is particularly pronounced in the early stages of infrastructure evolution and involves exploring the local feasibility of subsea environmental monitoring methods and devices, and (2) enactment, which is increasingly present in the later stages of infrastructure evolution to weave environmental information into the agenda of heterogeneous oil and gas professionals.


1 Introduction

Lophelia pertusa (Lophelia for short) is a species of cold-water coral that has built aggregations and reefs throughout the world’s oceans over the past 9,000 years. The seabed along Norway is home to the world’s largest population of Lophelia. Lophelia attracts significant attention and is vital for preserving marine ecosystems, including ecosystems in Arctic areas (Fosså et al. 2002). Meanwhile, the offshore oil and gas operations along the Norwegian coast have gradually expanded into Lophelia habitats. Environmental and fishing concerns are in conflict with the interests of the oil and gas industry (Blanchard et al. 2014; Fosså et al. 2002). Lophelia represents an interesting and vivid lens into the broader and politicised situation. We present an ethnographic study of the ongoing efforts of an international oil and gas company (NorthOil, a pseudonym) to design and develop an infrastructure for real-time subsea environmental monitoring.

Real-time environmental monitoring involves developing a comprehensive network of measuring devices, sensors, communication lines, databases and tools for analysing and presenting data. We draw on the expanding stream of infrastructure-influenced studies in CSCW (Jirotka et al. 2013; Pollock and Williams 2010; Star and Ruhleder 1994). These studies mirror the growing focus in CSCW on the large-scale and long-term support for collaboration provided by infrastructures (Ribes and Lee 2010). An infrastructure for real-time environmental monitoring facilitates increased information sharing and collaboration, but also generates conflict among the stakeholders in NorthOil (environmental advisors, drilling engineers, and production engineers) and external institutions (Norwegian Environmental Agency, marine research institutions, fishery organisations and green activists).

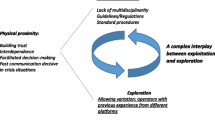

We ask the following: What is the work that makes a real-time environmental monitoring infrastructure work? We investigate the process of aligning the new capacities with the existing tools, work practices, and professions in NorthOil’s infrastructure in-the-making. We therefore draw on a notion of ‘work’ intended as the construction of order in cooperative settings (Schmidt 2011). An element of novelty of our case is that we provide a longitudinal account of the phases of development and early adoption of cooperative technologies, whereas most accounts within CSCW have traditionally analysed short-term instances of early adoption (Pollock and Williams 2010). We speak of infrastructuring as a verb rather than infrastructure as a noun to underline the blurred boundaries between phases of design, implementation, use, and maintenance in infrastructure evolution (Karasti et al. 2010). Infrastructuring highlights the ongoing, provisional and contingent work that goes into working infrastructures. Infrastructural inversion is similarly intended to unpack the “invisible” work of infrastructures (Bowker and Star 1999; Star 1999). Our analysis specifically targets the evolution of emergent infrastructures over time. We particularly focus on the increasing degree of entanglement of the infrastructure with internal and external stakeholders and agendas (cf. Bossen and Markussen 2010). We contribute by fleshing out empirical features of two concepts that highlight the time-dependent dynamics of infrastructuring: (1) bootstrapping, which is particularly pronounced in the early stages of infrastructure evolution and involves exploring the feasibility of subsea environmental monitoring methods and devices in situated settings, and (2) enactment, which is gradually present in the later stages of infrastructure evolution to weave environmental information into the agenda of heterogeneous oil and gas professionals and external actors.

Our characterization of infrastructuring invites CSCW to address the establishment of collaborative technologies as a blend of collaborative aspects and epistemological questions about the relation between instrumentation and the way we know phenomena. Accordingly, we position our theoretical foundation at the intersection between CSCW and Science and Technology Studies. Section 2 outlines our perspective on infrastructuring, specifically focusing on the time-dependent evolution of infrastructures in which bootstrapping and enactment play a role. Section 3 provides context to our case. Section 4 presents the research method and explains how our constructs (bootstrapping and enactment) are developed in a dialogue between inductive processes and our theoretical foundations. This section also provides some reflections on how we tailored our research strategy to investigate infrastructure. Section 5 presents our findings through an empirical instantiation of the constructs of bootstrapping and enactment. We discuss and draw implications for our understanding of the increasing level of entanglement of emerging infrastructures in Section 6, and Section 7 presents our concluding remarks and a reflection on the political significance of our case study.

2 An infrastructure perspective in CSCW

We adopt an information infrastructure perspective (Karasti et al. 2010; Monteiro et al. 2013; Pollock and Williams 2010). Monteiro et al. (2013, p. 576 emphasis in original) define infrastructure as a complex sociotechnical system:

“As a working definition, [information infrastructures] are characterised by openness to number and types of users (no fixed notion of ‘user’), interconnections of numerous modules/systems (i.e. multiplicity of purposes, agendas, strategies), dynamically evolving portfolios of (an ecosystem of) systems and shaped by an installed base of existing systems and practices (thus restricting the scope of design, as traditionally conceived). [Information infrastructures] are also typically stretched across space and time: they are shaped and used across many different locales and endure over long periods (decades rather than years).”

This emphasises how collaborative practices are achieved through collections of – rather than singular – artefacts (ibid). Infrastructure-influenced studies are increasingly visible in CSCW and have been employed in diverse empirical settings, such as healthcare (Bossen and Markussen 2010), cyberinfrastructures (Ribes and Lee 2010), and distributed collective practices (Turner et al. 2006). For the purposes of this paper, there are three particularly relevant aspects of the body of literature with an infrastructure perspective in CSCW: (i) studies of eScience on the collection, curation, sharing, and collaboration around scientific data (tied to our environmental data); (ii) conceptual strategies for unpacking the ‘hidden’ work of working infrastructures (tied to our aim of analysing ‘hidden’ work); and (iii) concepts that highlight the time-dependent, dynamic character of evolving infrastructures (tied to our specific focus on ‘young’ infrastructures in-the-making).

2.1 Infrastructure and eScience

eScience studies in CSCW look specifically at collaboration between different types and/or groups of scientists. This collaboration relies on creating shared, interconnected and interoperable procedures, tools and vocabularies for collectively working with scientific data (Borgman et al. 2012; Edwards et al. 2011; Karasti et al. 2006). A central theme is collaboration within a heterogeneous community of scientists. Scientific disciplines employ distinct vocabularies, methodologies, and practices that make frictionless “collaboration” anything but obvious. Borgman et al. (2012) present the complex interdependencies of environmental data sharing between scientific and technical communities. These interdependencies can be buried under tiny elements of complex systems; the definition of ‘data’ varies significantly across communities. Disciplinary boundaries may be overcome with metadata (data about data). Edwards et al. (2011) address the key role of metadata for scientific interoperability and demonstrate the prerequisite need for supplementary arenas in which scientists can meet, share, and discuss.

In the UK, eScience has, for example, focused on interdisciplinary collaboration between researchers in the natural sciences (de la Flor, Ojaghi, et al. 2010), clinicians (Jirotka et al. 2005), and scholars in the humanities (de la Flor, Jirotka, et al. 2010). De la Flor, Jirotka et al. (2010) report on how infrastructure supports the collaborative practices of classicists to (re)interpret a Roman tablet from the first century AD. The authors show the integral role of the infrastructure to the classicists’ work in the process of retracing the context in which the text was produced. De la Flor, Ojaghi et al. (2010) unpack the strict interdependence between collaborative procedures and technologies when setting up distributed laboratory practices.

In sum, eScience studies of environmental data are rare despite the increasing number of portals used by scientists to share environmental and oceanographic data, e.g., the Alaska Ocean Observing System1, the Marine Explore portal for global ocean data2, the SAM-X portal to integrate marine data with the fishing industry and the oil and gas industry3, and the Barents Watch portal to the coastal and sea areas of the European High North4. An exception is Edwards (2010), who discusses the ‘machinery’ (infrastructure) that has made climate change monitoring possible. He reports on the work of climate scientists to re-examine historical records. Infrastructure embodies the difficulty of recovering the contextual information of old datasets and maintains not only interdependences and relationships but also conflicts on several levels.

2.2 Infrastructural inversion and articulation

An important theme in infrastructure-influenced studies of eScience is infrastructural inversion (Bowker 1994; Bowker and Star 1999). Bowker and Star (1999, p. 34) describe infrastructural inversion as a

“gestalt switch. (…) This inversion is a struggle against the tendency of infrastructure to disappear (except when breaking down). It means learning to look closely at technologies and arrangements that, by design and by habit, tend to fade into the woodwork (sometimes literally!).”

Infrastructural inversion should be recognised as a generalisation of the long-standing concept of ‘articulation work’ in CSCW (Schmidt and Bannon 1992), but is a basic principle rather than a defined notion like articulation. Despite the different levels of granularity, both concepts highlight the constitutive role of invisible work and the necessary and non-heroic efforts of working-order technologies (Bowers 1994). According to Bowker et al. (2010, p. 99), these concepts consist of “going backstage” (Goffman 1959). One opportunity to invert an infrastructure arises when it becomes visible upon breakdown (Bowker and Star 1999). Jackson (2014) proposes to look at such moments of breakdown as generative acts that transform material and human order and meaning in infrastructures. An example of an application of infrastructural inversion in CSCW is the study of a distributed network of sensing devices by Mayernik et al. (2013). The initial incompatibilities between sensors and networking equipment were subsequently “unearthed” to enable an alternative configuration with a re-focus on manual data collection and sampling practices.

eScience scholars have determined (either as ‘inversion’ or ‘articulation’) how efforts distributed across time and space establish collective routines for assessing data quality. In addition to the monitoring and maintenance of technical equipment, these studies underscore the social practices involved in ensuring data quality. Ribes and Jackson (2013) show how the practice of collecting water samples from a stream required modification to ensure that the water was sampled upstream rather than downstream to prevent contamination of the data by the person who collects the sample (e.g., dirty boots when wading into the river). Similarly, Vertesi and Dourish (2011) suggest the need to focus on the strong relationship between the context in which data are produced and acquired and the manner in which the data are shared during scientific collaboration.

2.3 Towards grasping the time-dependent evolution of infrastructures: bootstrapping and enactment

Infrastructural inversion forefronts the “invisible” work of infrastructures, which is vital for any critical study of infrastructures. The primary purpose of this paper is thus to characterise how, where, and when infrastructural inversion plays out in emergent infrastructures. A related conceptual strategy is that framed by infrastructuring. As argued by Bossen and Markussen (2010), “Discussing ‘infrastructure’ as a noun is not helpful for analytical purposes, as this suppresses the variety of material and non-material components of which it consists, the efforts required for their integration, and the ongoing work required to maintain it.” (p. 618) Furthermore, Karasti et al. (2006) use “information infrastructuring” to emphasise the crucial role of long-term evolution and continuity in complex systems (see also Pipek and Wulf 2009).

Infrastructuring and infrastructural inversion are per definition generic rather than specific in terms of the time-dependent dynamics of emergent infrastructures. There is work in CSCW on the long-term evolution of collaborative infrastructures, focusing on the temporal aspects. For example, Karasti et al. (2010) elaborate two dimensions of infrastructural inversion: space (local vs. global) and time (short vs. long term). They discuss how tensions are resolved if the global and the long term are addressed in local and short-term everyday practices. Thus, infrastructure becomes transparent when the local and the short term are simultaneously incorporated into future organisational change. In this account, infrastructuring blurs the distinction between design, implementation, and the use of infrastructure. This point is important to understand the dynamics of NorthOil’s infrastructuring work. The new monitoring infrastructure is in-the-making, encompassing moments of inception, design, prototyping, and early use. We leverage two general concepts from the literature – bootstrapping and enactment – to further detail the time dimension of infrastructuring, corresponding, as we shall see, to an increasing degree of entanglement with external stakeholders and other infrastructures. Through our analysis, we will instantiate and empirically characterise a few practical concerns associated with these concepts.

The first concept we adopt is bootstrapping. It has been proposed in the literature with slightly different meanings to address early-stage infrastructure evolution. In Information Systems, Hanseth and Lyytinen (2010; see also Skorve and Aanestad 2010) frame it with reference to a dilemma for infrastructure designers who must persuade early users to adopt the infrastructure and, simultaneously, anticipate the completeness of their solution. Bootstrapping therefore consists of an algorithm to address the gradually increasing degree of entanglement of an infrastructure-in-the-making by starting with the simplest solution possible and enrolling a critical mass of users when its use is not formally mandated or economically subsidised. Bootstrapping was also used by Bowker (1994) in Science and Technology Studies, grounded on an empirical description of the early years of an oil and gas service company that resonates with ours. Bootstrapping is a response to the necessarily imperfect fit between the map and the territory; it is “a process of getting enough measurements to do good science and enough work on the oil fields to be able to take local measurements.” (p. 33) In our interpretation, also in this sense, bootstrapping is a process to address the temporally increasing level of entanglement of an infrastructure in its earliest stages by pragmatically resolving local/global tensions. In Bowker’s account, this is performed by conjuring a set of meaningful indicators from highly situated realities while simultaneously ensuring their inclusion in a global or standardised picture. Importantly, this process applies to both measuring the natural world and enrolling social actors. Our use of bootstrapping leans on Bowker’s version – a conceptualisation that remains open-ended but clearly underlines the convergence between issues of articulating organizational work and the co-evolution of instrumentation and the phenomena under study. 
In our subsequent analysis, we build on but extend Bowker’s concept of bootstrapping to portray the facets of what goes into the making of an infrastructure.

A second concept that we adopt to colour the process of infrastructuring is enactment. As an infrastructure grows, aspects related to bootstrapping tend to leave increasing space to different concerns. The locally produced data have to travel across domains, sites, and work processes, where they are made ‘real’ in the sense that they are given meaning and roles. Edwards and colleagues (2011) remark that the travel of data across interfaces (between disciplines or between machines) is one that generates friction and thus consumes energy when information must be turned into a meaningful and relevant format for a heterogeneous audience. This process of enactment is fundamental for infrastructure evolution because infrastructure becomes such only in relation to organisational practice (Star and Ruhleder 1994). Enactment has been used extensively in practice-based theories, in particular, Orlikowski (2000, 2002). Orlikowski (2000) describes it as the process of putting technology into practice. Similarly, Orlikowski (2002) analyses the process of organisational knowing as one in which knowledge is enacted daily in people’s practices. In sum, her approach to enactment recognises the reciprocally constitutive relationship between knowledge and practices. While acknowledging commonalities with Orlikowski’s definitions, we lean towards the notion provided by Mol (2002) in her study of medical practice: “[L]ike (human) subjects, (natural) objects are framed as part of events that occur and plays that are staged. If an object is real this is because it is part of a practice. It is a reality enacted.” (p. 44) She investigates diseases as never isolated from the practices that stage them; their enactment is not only a matter of representational activities but also of several levels of materiality (from the microscopes to the notepads). 
The actors are intentionally left vague to leave space for the many subjects and objects that get their shape and actuality on the scene during the activities of enactment of a disease (ibid).

3 Case background

3.1 NorthOil and collaborative work

The Norwegian continental shelf (NCS) consists of the section of the European continental shelf that includes the Norwegian territory and encompasses portions of the North Sea, the Norwegian Sea, the Barents Sea, and the Arctic Ocean. Since its inception in 1969, Norway’s oil and gas industry has developed into a robust sector with operators, vendors, oil service providers and consultants alongside increasingly strong governmental bodies. More than 5,000 wells have been drilled. The industry represents almost 50 % of Norway’s exports, approximately 25 % of the GNP and approximately 15 % of private sector employment. Our case company, NorthOil (a pseudonym), is one of the major operators on the NCS, with more than 20,000 employees in 36 countries.

An estimated 20–25 % of the world’s unexplored oil and gas resources are located in the Arctic region, which renders them commercially interesting for the oil and gas industry5. However, the same areas are particularly vulnerable from an environmental perspective. Decisions on where to allow and where to ban oil activities for environmental reasons are highly and continuously contested. The major part of the Arctic region and parts of the NCS offshore North Norway are currently banned. Environmental activists argue that oil and gas operators are (presently) not able to guarantee the preservation of these sensitive environments (Knol 2011). Our case of NorthOil’s efforts to establish an environmental monitoring infrastructure is part of NorthOil’s manoeuvring to open areas of the NCS that are currently banned and, at the same time, gather more knowledge about the ecosystem in general and natural variation in particular.

NorthOil has been involved in efforts to improve cross-discipline and cross-distance collaboration for several decades. Historically, NorthOil was organised around the geographical site of the field. This organisation ensured an extensive and practice-based knowledge of the local field. Responding to a more dynamic reality with smaller and short-lived oil fields, NorthOil has invested heavily in communication facilities, such as increasing data transfer bandwidth, standardising data exchange formats, real-time processing and analysis, and integrating desktop video conferencing tools and shared repositories. Over the past two decades, NorthOil has run several high-profile projects to promote collaboration as part of introducing SAP, Lotus Notes, and Microsoft SharePoint (references suppressed for anonymity). Computer-supported cooperative technologies within oil and gas operations have been referred to as Integrated Operations6. Integrated Operations have significantly challenged previous geographic (e.g., on- vs. offshore), disciplinary (e.g., production vs. reservoir engineers), and organisational (e.g., drilling vs. production) boundaries (Norsk olje og gass 2005; Rosendahl and Hepsø 2013). The transformations in daily operations implied by Integrated Operations are conflictual and difficult (Hepsø 2009). However, fuelled by the significant trend towards unmanned, sensor-based, and remotely operated subsea facilities, offshore oil and gas operations are gradually displacing the roughneck handcraft tradition with an increasingly information-intensive and collaborative mode of working, which warrants stronger CSCW attention to Integrated Operations.

3.2 Environmental monitoring on the NCS

Oil and gas activities are potentially polluting, e.g., through the spreading of drill cuttings (rock material removed from a borehole while drilling), drilling mud (chemicals used during drilling to control the pressure in the well) and oil spills/leakages. To receive formal permission to drill a new well, oil operators are required to establish environmental monitoring programs to assess the impact of the planned drilling activity. All installations on the NCS are regularly monitored every third year following drilling. Until now, environmental monitoring has been time-consuming and resource-demanding, with the results (i.e., the data) cumbersome to access due to fragmented and poorly integrated repositories. An environmental survey typically requires 9–12 months, from collecting samples of the seafloor, through onshore laboratory analysis, to producing a report. Surveys are conducted by consultants or third-party organisations to ensure independence from the oil companies. Distributed responsibility for data collection and long-term surveying results in fragmented information across multiple data sources and data formats, significantly hampering the access, sharing, and interpretation of data.

Government regulations leave the details of environmental monitoring under-specified, including what and how to sample. However, government regulations have recently been tightened and now explicitly refer to the water column, the sedimentation, and the seafloor fauna (Miljødirektoratet 2011). In addition to the identification of the environmentally sensitive flora and fauna in an area, the most common parameters in the water column that surveyors consider are oceanographic data (pressure, temperature, and salinity), the direction and speed of the water currents (to predict the dispersion of biomass or drilling discharges), turbidity (the instantaneous concentration of particles in the water column), sedimentation (the long-term accumulation of particles on the sea bed), and visual inspection of given points through pictures and videos.

3.3 Towards real-time environmental monitoring in NorthOil

There are several compounding reasons for NorthOil’s interest in establishing the infrastructure for real-time environmental monitoring that we study. First, and as outlined above, the present methods and procedures are inefficient. Second, real-time environmental monitoring is increasingly recognised to have operational (hence commercial) value. The situation with the cold-water coral Lophelia illustrates this. Despite the fishery industry accounting for 30-50 % of damage to Lophelia on the NCS (Fosså et al. 2002), there is growing public concern regarding the impact of oil and gas operations (Blanchard et al. 2014). In 2003, the North-Atlantic OSPAR Commission7 included Lophelia in its list of threatened species (OSPAR 2008). When NorthOil was recently requested to relocate its planned drilling site, a costly operation, to avoid harming a colony of Lophelia, one environmental advisor recalls, “[S]o then we needed to do something (…) to find out whether these guys [Lophelia] are sensitive or not for the [drilling] discharges.” (Environmental advisor 1) Third, very little is known about the impact of oil activities on the subsea environment in general and on Lophelia in particular. This fundamental lack of knowledge is a principal reason for banning oil activities in parts of the NCS and the Arctic. Establishing a new infrastructure for environmental monitoring is thus part of a broad endeavour to supplement the existing lack of knowledge “in a systematic, explicit and transparent manner.” (Blanchard et al. 2014, p. 319) With this open-ended agenda, NorthOil faced immediate decisions about what aspects of the environment to capture, how to perform measurements, and where to conduct measurements. Our case follows two streams of activities conducted by NorthOil.

The first stream commenced in the mid-2000s to obtain real-time environmental datasets from a small sensor network deployed on the seafloor off the shore of North Norway in an Arctic area where no oil and gas operations are currently allowed. This project is the first example in which NorthOil is proactively positioning itself within the controversies surrounding oil and gas operations. Initially started as a low-profile initiative, the project has gained significant momentum and is used to promote a knowledge-gathering process. This effort involved exploring uncharted terrain to gain experience and to configure sensors and devices with which oil and gas professionals are unfamiliar.

The second stream is a high-profile initiative in collaboration with technology vendors and external environmental experts and advisors to establish an infrastructure for real-time environmental monitoring combined with daily oil and gas activities, as envisioned by Integrated Operations. One of the aims was to provide NorthOil users with a geographical information system (GIS)-based web portal with updated risk predictions for the coral reefs and the surrounding marine environment. This GIS portal is primarily intended to provide drilling engineers with warnings of potential damage to the coral reefs and to aid the environmental coordinator, a role now filled with new responsibilities for monitoring the impact of oil and gas activities on natural resources based on real-time information.

4 Method

We use case studies as the background for this study. Consistent with the principles for interpretive methods (Klein and Myers 1999), our aim is to understand the motivations, perceptions, and actions of involved groups during everyday activities and routines. Negotiating access to a case is not automatic in the oil and gas industry, which is traditionally a fairly secretive business sector.

We present a case study (see timeline in Figure 1) that is based on two initiatives involving NorthOil. Access to our case was dependent on a number of conditions. The first author is principally responsible for the data collection. She was granted an office space and was able to gradually recruit other relevant informants. Being a non-native Norwegian speaker, the first author had to learn Norwegian prior to and during the first months of our study, because that is the main language used at NorthOil’s research department. As her ability to understand and speak the language improved, access to additional information also improved. The second author has an extended history of research collaboration with NorthOil, including involvement with the ongoing Integrated Operations activities of NorthOil. The authors, particularly the second and the third authors, have a long history of research collaboration.

4.1 Data collection

We rely on three modes of data collection: participant observations, interviews, and document study (cf. Table 1).

Participant observations are based on 2 years of ethnographic observations by the first author. Since April 2012, the first author has been granted access to NorthOil’s main research department, where most of the project participants were located. After an initial period in the entrance of the department, this author currently maintains an office space with four project participants. During the 2 years since April 2012, the first author has spent an average of 2–3 days per week at NorthOil. Data collection from the participant observations was conducted in Norwegian.

The participant observations enabled by co-location provided access to formal project meetings and workshops, as well as informal discussions. They also proved crucial for identifying additional informants to approach. The participant observations provided context for the interviews and document study. In the case of informal conversations over lunch or coffee, subsequent memory-aided transcriptions were conducted as soon as practically possible. Informal conversations, which extended beyond our data collection, were the key to being accepted as a NorthOil ‘insider’. For instance, in some meetings, the project members would not always remember that the first author had an academic affiliation rather than a NorthOil affiliation. The third, and occasionally the second, author participated in the meetings and discussions about the projects.

Semi-structured interviews were conducted by the first author, sometimes aided by the second author, at NorthOil’s research centre, with occasional meetings at the headquarters of the project partners in other Scandinavian cities, to obtain a broader perspective. Informants were selected from NorthOil and gradually from among the partner companies; they were identified during the participant observations or referred by NorthOil employees. For example, nine interviews were conducted with participants in NorthOil’s initiatives from a partner company, Quality Certification Body (QCB, a pseudonym). The informants were professionals with backgrounds in marine biology, environmental chemistry, and corporate IT with different roles. The roles included environmental advisors, project managers, senior researchers, IT advisors, and data-modelling experts. In addition, we interviewed drilling engineers. Informants were assigned codes by role (e.g., ‘Environmental advisor 3’). We conducted 30 audio-recorded and transcribed interviews (see Table 1). The interviews lasted 1 hour on average and were primarily conducted in English.

Document analysis: We had access to Norwegian and English papers and electronic documents, including email, memos, slide presentations, internal reports, and minutes from meetings. Internal or publicly accessible documentation from NorthOil or competent authorities describing the strategies, plans, and norms were a resource for contextualising the economic and political context in which NorthOil operated during our study. Internal project documentation was a resource for analysing the technical description of the infrastructural components to understand the requirement specifications and the deliveries of the two projects.

Throughout this paper, we will use the term ‘project participants’ to refer to employees of NorthOil and its partner companies who were directly involved in the two infrastructure initiatives of NorthOil during the phases of infrastructure planning, implementation, and maintenance.

4.2 Reflections on our research method and its scaling

Infrastructure requires suitable scaling methods that are able to account for the balance of action, tools, and the built environment from which it is inseparable (Star 1999). A peculiarity of our case study is that the participants think in terms of a long-term and distributed infrastructure while simultaneously handling daily practical concerns. We considered this point of departure to obtain further access to data and to address the undefined spatial and temporal nature of NorthOil’s projects. According to Ribes (2014), to solve this problem, ethnographers, rather than only looking at the large-scale infrastructure, should also ask themselves how the actors in the field look at it: “The key insight in this method is the recognition that anytime there is a ‘large’ endeavour you will find actors tasked with managing the problems associated with its scale.” (p. 158) Thus, the actors can be employed to mediate our access and solve problems of scaling – i.e., for “going backstage.” (Goffman 1959) This argument is relevant for us with respect to improving data access. Because the first author was granted a pass to access and freely move in NorthOil’s offices in the research centre, she was accepted in the work place and began to identify and shadow key participants involved in different environmental monitoring-related activities. Consequently, we gained access to the Arctic observatory project, which was not initially part of our scope. This approach was also fundamental for addressing distribution. For example, the project partners are located in other Norwegian cities, and conversations have to be established with potential stakeholders in other NorthOil departments (e.g., the well drilling division), which are located throughout the country. For reasons related to cost and time constraints, one or a few ethnographers could not constantly travel to each of these locations on a weekly basis.
We identified a subset of participants in charge of answering the same questions (namely, to find the work to make the infrastructure work) and who needed to cope with large-scale and long-term issues. One example was the way we addressed the concern of merging new routines for environmental monitoring with formal work processes. To mine all work processes used in NorthOil, which exceed 30,000, and to identify the spokespersons of every department in the company to discuss the possible integration of the new routines would have been unfeasible. Due to the good relationship established with several NorthOil employees during the participant observations, the first author was able to ‘piggyback’ on two of the participants as they performed the work package to enrol NorthOil departments to adapt existing routines. Consequently, we were able to participate in meetings held with department representatives and review and comment on the documentation from these discussions.

4.3 Data analysis

Data analysis was iterative and overlapped with data collection, thus enjoying the added flexibility identified by Eisenhardt (1989). In particular, data analysis was performed in iterations of inductive and deductive steps. Klein and Myers’ (1999) principle of dialogical reasoning indeed recognises that the researchers’ theoretical commitments necessarily affect the data collection. We interleaved theoretically driven influences by inductively responding to emerging themes from coding of the empirical data (through annotated transcripts, colour schemes, and Post-it notes). This process resulted in the interpretative template shown in Table 2 .

Our data describe the longitudinal phases of development and early use of an infrastructure that was still in-the-making. We thus followed the infrastructural inversion and traced the infrastructuring process, namely articulation efforts over time to create a working infrastructure that has not stabilised (Bowker and Star 1999). We began by identifying empirically grounded features of infrastructuring, often formulated in terms of practical concerns by our informants. In clustering these concerns, we realised that they resonated with the existing concepts of bootstrapping (generally associated with early stages of infrastructure development) and enactment (early use), of which our contribution provides a rich empirical instantiation. Our data analysis thus involved detailing the deductively given notions of bootstrapping and enactments with inductively generated clusters of concerns. We now examine the data analysis process in more detail.

In general, the issues raised by the participants were not explicitly presented as concerns, but as the need to find a balance between contrasting requirements (cf. Ribes and Finholt 2009). In the initial months of NorthOil’s initiative, for example, there was a pressing need to monitor a “nice” coral reef while finding a suitable “place for the camera.” The participants’ statements emphasised how the configuration of the equipment played a role in the initiation of the subsea monitoring infrastructure (‘Sensor configuration’, Table 2 ). Overall, these concerns were situated (e.g., relative to a small portion of the Arctic region) and pragmatic (e.g., by choosing a coral reef that “was relatively good” according to the opinion of marine biology experts).

In addition, the participants’ concerns front-staged epistemological questions related to operating the equipment from the seafloor to measure heterogeneous resources of which they did not always have profound knowledge. We identified concerns such as “fish experts… lack experience with reading the [acoustic] sensors from [the sea] floor” resulting from the current configuration of the sensors in the Arctic observatory (‘Granularity vs. scope’). The fish indeed produce different echo patterns when acoustic signals are shot from below rather than, as is usual, from above (i.e., from floating fishing vessels). These concerns surfaced visibly at later stages of the development process, for instance when the Arctic observatory was merged with the institutional environmental monitoring initiative.

A connection was thus emerging between the making of an infrastructure with global aims and the need to answer situated epistemic questions pragmatically. The identified concerns were thus a vivid characterization of early-stage infrastructure development, an empirical instantiation of Bowker’s (1994) concept of bootstrapping. A reasonable reading of Bowker’s (1994) work is to interpret bootstrapping as related to the initial, exploratory, grounded, and occasionally very serendipitous (e.g., the decision to use the Arctic observatory datasets in the corporate initiative) stages of infrastructuring.

As NorthOil’s infrastructure was blurring into moments of early use, concerns of a different nature were also voiced. An explicit aim to sustain the environmental datasets and make them meaningful to oil and gas professionals clearly emerged. We inductively isolated statements that expressed concerns related to integrating environmental data management practices with the established oil and gas routines (‘Meshing of new and old’) and to data interpretation (‘Perspective taking’).

We realised that these empirical tensions constituted instances of enactment work. Paraphrasing Mol (2002, p. 44), enactment points to framing the environment on a “stage” that was acceptable for the highly formalised oil and gas domain while simultaneously leaving space for including new actors during the infrastructuring work. Our study of an infrastructure-in-the-making, therefore, is better grasped by Mol’s use of the concept of enactment than by Orlikowski’s (2002).

The processes of bootstrapping and enacting are not sharply confined moments of infrastructuring. As we noticed above, concerns related to bootstrapping also surface in later stages, when the enacting work makes new questions visible to different disciplines (e.g., merging NorthOil’s two initiatives), therefore triggering new inquiries into the relationship between the sensing devices and the nature they must monitor. The concept of infrastructuring is indeed meant to blur the divisions between the traditional steps of system innovation. In general, however, we see that the features of bootstrapping are more evident in the moments of developing an infrastructure, whereas enactment is more prominent when the infrastructure moves into early adoption. We stress two aspects with this analysis. First, infrastructural inversion has a temporal nature. Our two constructs are thus an attempt to highlight that the time dimension is fundamental to understanding how infrastructures develop and spread. Second, we underline the importance of thinking of infrastructuring as constituted by the preparation (bootstrap) and presentation (enact) work to sustain the infrastructure.

5 Findings

The investigation of the work that makes an infrastructure work demands inspecting the longitudinal efforts to create some degree of order in NorthOil’s new infrastructure. Following our interpretive template (see Table 2), in this section we present the results of our data analysis. First, we describe how the bootstrapping process was instantiated in practice through concerns related initially to positioning the sensing devices (‘Sensor configuration’) and later to tuning the sensing capabilities to the marine environment (‘Granularity vs. scope’). Second, we present how the enactment process gradually emerged in the shape of concerns about sewing environmental data into daily oil and gas operations (‘Meshing of old and new’) and adapting existing methodologies to interpret them (‘Perspective taking’).

5.1 Bootstrapping

In the summer of 2013, a fibre-optic cable was installed to connect a lander (i.e., an ocean observatory composed of a few networked sensors; cf. Figure 1 ) on the seafloor of North Norway to a small onshore data centre in a village along the coast of North Norway, in the Arctic region. This Arctic observatory is located at a depth of approximately 250 m and positioned 15–20 km off the coast. The first test results enabled NorthOil to analyse Lophelia coral structures in real-time (Figure 2). This prompted discussions about which parameters should be tracked and how.

5.2 Sensor configuration

The first tension emerged during the positioning of the Arctic lander on the seafloor. The lander was equipped with a camera; sensors to track pressure, temperature, salinity, and turbidity; and an echo sounder (an acoustic device to monitor moving resources). According to one project participant, the lander should be placed in the vicinity of a coral reef that is deemed to be “as interesting as possible to be put in one photographic frame.” (Environmental advisor 3) However, problems of obtaining a suitable trade-off between an “interesting” coral structure to monitor and a “safe” position for the lander soon emerged. Because only one lander was available, only one coral structure could be monitored. The participants in the Arctic observatory received a map that located all “nice coral reefs” from a research institution that collaborated with the project. Unfortunately, the map did not report the steepness of the area, where strong currents form many sand hills. The lander needed to be placed on a relatively even surface. In addition, the camera had to be positioned to capture a healthy portion of the coral structure, which influenced the selection of the spot:

“We had to find something with some sort of living coral reef that was flat enough, and we went through a lot of nicer reefs that … would [have] serve[d] as … much better objects, probably also from a scientific point of view it would be much nicer… But we had to move away from them because we couldn’t find any place for the camera.” (ibid.)

Consequently, every location on the map had to be tested until the lander could be installed to monitor and photograph a coral structure that “was relatively good.” (ibid.) According to our interviewees, the “goodness” of the data constituted an empirical balance among the number of species that inhabited the coral structure, the condition of the coral, the flat position of the terrain, and the size in reference to the camera frame. The quest for this balance took the shape of an effort to establish a laboratory for collecting real-time data considered acceptable by environmental experts.

One of the deliverables of the larger real-time environmental monitoring initiative was a GIS-based web portal to provide both environmental experts and drilling engineers with real-time environmental data feeds presented in different formats. The implementation was the responsibility of a number of partner vendors, but it experienced delays and lacked real (not merely test) data. Because the Arctic lander had been connected to the shore a few weeks earlier, real-time data were becoming available. The project managers of the large-scale initiative decided some months later to start using these datasets as they were sent to the onshore data centre. The data served to create map layers inside the web portal not only for visualisation purposes but also to develop the analytical tools for modelling and analysing real-time information. What used to be a small laboratory for the hardware technology became the “Arctic laboratory” in NorthOil official documentation. The new scenario caused a bootstrapping tension that was similar to the problem encountered in the early phase of establishing the Arctic observatory. The measurements were never ‘neutral’. The materiality of the sensors and the objects (the fish) coloured the measurements. One tension, for example, emerged in relation to the physical position of the Arctic lander. Corals are static structures on the seafloor; thus, sensors that used to be employed from the sea surface had to be repurposed to be capable of operating from the seafloor. Echo sounders are routinely used in fishing vessels to detect fish. In its simplest configuration, an echo sounder measures the echo produced by an obstacle that its beam encounters, e.g., a fish. NorthOil’s project thus decided to adopt the state-of-the-art exemplar in the Arctic lander to scan a given section of water from the seafloor and track the moving resources in a 3D volume of the water column, the size of which is dependent on the configuration of the echo sounder.
The assumption was that it could be useful for monitoring the fish and the biomass floating around a coral reef. The measurements from the Arctic lander were collected using the new method: they tracked the echo of the beam that hit the fish’s lower part. However, two problems remained. First, the new bottom-up readings were a new data type for marine biologists involved in the latter project. They had previously experienced echo readings of fish from above rather than from the seabed (i.e., from below). The relationship between the size of a fish and the strength of its echo is dependent on the features of the fish as observed by the echo sounder. For instance, for cod, the strength of the echo measured from above is obtained through an empirically based mathematical formula to convert the echo (measured in decibels) into the size (e.g., centimetres). The corresponding formula for the measurements from below is not available to the participants in NorthOil’s project. As explicitly noted during one workshop, new expertise was required and many experiments needed to be performed to interpret the new data type. Second, this change created incompatibility with the historical data and map layers that were collected by research institutions over the years and that were based on the traditional top-down measurements from boats. To create robust knowledge about baseline environmental conditions, insight into normal variation presupposed a longitudinal perspective because temporal, seasonal, and regional variations were significant.
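To make the conversion between echo strength and fish size more concrete, the following is a minimal sketch, not the formula used in NorthOil’s project (which the participants did not share with us in detail). It assumes the logarithmic form commonly used in fisheries acoustics, TS = 20 log10(L) + b20, where TS is the echo (target) strength in decibels and L the fish length in centimetres. The intercept value and all function names are illustrative assumptions; the point is that an intercept fitted for readings from above cannot simply be reused for readings from below.

```python
import math

# Assumed, illustrative intercept (dB) for a 20*log10(L) model fitted to
# readings taken from ABOVE the fish. It is a placeholder, not project data.
B20_FROM_ABOVE_DB = -67.4


def target_strength_db(length_cm: float, b20_db: float = B20_FROM_ABOVE_DB) -> float:
    """Predicted echo strength (dB) for a fish of the given length (cm)."""
    if length_cm <= 0:
        raise ValueError("length_cm must be positive")
    return 20.0 * math.log10(length_cm) + b20_db


def length_from_target_strength(ts_db: float, b20_db: float = B20_FROM_ABOVE_DB) -> float:
    """Invert the model: estimate fish length (cm) from a measured echo (dB)."""
    return 10.0 ** ((ts_db - b20_db) / 20.0)


if __name__ == "__main__":
    echo_db = target_strength_db(50.0)
    print(f"50 cm fish -> {echo_db:.2f} dB")
    print(f"{echo_db:.2f} dB -> {length_from_target_strength(echo_db):.1f} cm")
```

For seafloor-mounted sensors, a different, empirically fitted intercept (passed as `b20_db`) would be needed, which is precisely the knowledge the participants reported as missing.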

5.3 Granularity vs. scope

An additional concern emerged regarding the limitations of the Arctic sensors contra the ambitions of NorthOil’s larger real-time subsea monitoring initiative. The sensors on the lander were indeed limited in scope and type because they were designed for limited use compared to the larger reach required by NorthOil’s initiative. Moreover, one of the goals of NorthOil was to obtain measurements that were sufficiently granular to track the drifting of small eggs and larvae of the cod and herrings that spawn in the area following the water current. The assumption was that these organisms are more sensitive to pollution because they cannot react and swim away like fish can. Monitoring fish eggs and larvae was particularly important to the long-term goal of positioning NorthOil vis-à-vis areas presently banned from oil and gas operations. However, due to sensor limitations, the wavelength of the Arctic echo sounders was not small enough to sense the smaller biological resources, particularly in the upper part of the water column. Figure 3 illustrates this finding.
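The wavelength limitation can be illustrated with simple arithmetic: the acoustic wavelength equals the speed of sound divided by the operating frequency. The sketch below is only an illustration under stated assumptions. The sound speed of 1,500 m/s is a round figure for seawater, and the 38 kHz frequency is a hypothetical example, because the operating frequency of the Arctic echo sounder is not reported in our material.

```python
# Typical round figure for sound speed in seawater; the real value varies
# with temperature, salinity, and depth.
SOUND_SPEED_SEAWATER_M_S = 1500.0


def wavelength_m(frequency_hz: float,
                 sound_speed_m_s: float = SOUND_SPEED_SEAWATER_M_S) -> float:
    """Acoustic wavelength (m) = sound speed / frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency_hz must be positive")
    return sound_speed_m_s / frequency_hz


def is_smaller_than_wavelength(target_size_m: float, frequency_hz: float) -> bool:
    """True if the target is smaller than one wavelength (weak, hard-to-detect echo)."""
    return target_size_m < wavelength_m(frequency_hz)


if __name__ == "__main__":
    freq_hz = 38_000.0  # hypothetical operating frequency
    print(f"wavelength: {wavelength_m(freq_hz) * 100:.1f} cm")
    print("1 mm egg below wavelength:", is_smaller_than_wavelength(0.001, freq_hz))
    print("50 cm fish below wavelength:", is_smaller_than_wavelength(0.5, freq_hz))
```

Under these assumptions, the wavelength is a few centimetres, far larger than a millimetre-scale egg or larva, which is consistent with the participants’ observation that the smallest resources could not be sensed.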

Consequently, these types of data were obtained from simulation models that had previously been developed by participating research institutions. These models were based on generic algorithms that describe the drifting of particles that follow the water currents and have been used to simulate the movement of both oil or gas droplets and biomass. To start the model, initial empirical information about the actual presence of biomass was needed, “You can come up with experience data.” (Environmental advisor 4) The bootstrapping of the modelling practice is dependent on historically layered direct observations of eggs and larvae during a specific period of the year.
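The logic of such drift models can be sketched as follows. This is a deliberately simplified illustration, not the research institutions’ models: it assumes a uniform, constant current and an optional random-walk term, and all names and parameters are hypothetical. It shows why the model cannot start without observed initial positions of the biomass.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    """A drifting particle (e.g., an egg, a larva, or a droplet); position in metres."""
    x_m: float
    y_m: float


def drift(particles, current_u_m_s, current_v_m_s, dt_s, steps,
          diffusion_m=0.0, seed=0):
    """Advect copies of the particles with a uniform current.

    diffusion_m is the standard deviation (m) of an optional random step
    added per time step; 0.0 gives purely deterministic advection.
    """
    rng = random.Random(seed)
    moved = [Particle(p.x_m, p.y_m) for p in particles]  # leave the input untouched
    for _ in range(steps):
        for p in moved:
            p.x_m += current_u_m_s * dt_s
            p.y_m += current_v_m_s * dt_s
            if diffusion_m > 0.0:
                p.x_m += rng.gauss(0.0, diffusion_m)
                p.y_m += rng.gauss(0.0, diffusion_m)
    return moved


if __name__ == "__main__":
    # Initial positions stand in for the "experience data" on observed biomass.
    observed = [Particle(0.0, 0.0), Particle(100.0, 50.0)]
    after_one_day = drift(observed, 0.1, -0.05, dt_s=3600.0, steps=24)
    for p in after_one_day:
        print(f"x = {p.x_m:.0f} m, y = {p.y_m:.0f} m")
```

The `observed` list is the part that must come from historically layered direct observations; the rest is generic and applies equally to droplets and biomass.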

To further illustrate this point, consider once again the echo sounder. Fish may respond more clearly to the signal if they have a swim bladder, which is a gas-filled internal organ that contributes to their ability to swim and that also functions as a resonating chamber to receive or emit sounds. As one environmental advisor from QCB explained,

“A big fish or a big swim bladder will return a bigger signal than a smaller one (…) Species like the mackerel, which don’t have a swim bladder, will return a very small signal. Perhaps that’s why we have come up with species with a swim bladder in this project.” (Environmental advisor 4)

The pragmatic strategy of adopting the Arctic data generated unanticipated consequences for the capabilities of NorthOil’s large-scale project, in which participants had to re-configure their expectations vis-à-vis the parameters they planned to monitor. Ideally, the relevant environmental parameters needed for monitoring would be carefully identified prior to devising methods/technologies. Instead, a strategy of improvisation had to be adopted to maximise their use of the resources and opportunities offered by the Arctic observatory. For instance, only fish with swim bladders could be monitored. The forging of the two initially independent projects (the large-scale initiative and the Arctic observatory) demonstrates how bootstrapping tensions re-surfaced over time, albeit in different forms. The need to lower NorthOil’s expectations due to the material limitations of the Arctic lander revealed a more basic need to understand the implications of each single sensor for the entire infrastructure. Where technology was insufficient, missing information had to be inferred from a combination of theoretical models and human observations.

5.4 Enactment

The real-time monitoring of the environment in the Arctic observatory was compatible with NorthOil’s strong commitment to Integrated Operations and real-time operations. As one industry leader stated, “[shifting to] real-time operations is the next revolution [in oil and gas].” (see note 11) Still, the new capabilities of environmental monitoring had to mesh with existing routines. Because new methods for enacting the subsea environment were needed, these methods had to match NorthOil’s existing set of technologies and practices.

5.5 Meshing of old and new

The introduction of new data and practices related to environmental monitoring in the installed base of NorthOil took the shape of two concerns on the organisational and technological levels: the adaptation of formal work processes to embrace environmental monitoring practices and the integration of new environmental map layers in the corporate GIS.

Daily operations in an oil and gas company are regulated by an extensive set of corporate-approved and formal work processes. For instance, NorthOil has more than 30,000 formally defined work processes. For real-time environmental monitoring to become part of daily oil and gas operations, it needs to be captured by formal procedures. Thus, new work processes had to be developed and approved, or existing work processes had to be adapted. This requirement affects, among others, the interdisciplinary teams (drillers, drilling engineers, geologists, and geophysicists) involved in planning and drilling wells, which are frequently located in the vicinity of vulnerable marine resources; the data engineers who assess the quality of the incoming data; and the environmental coordinators who monitor the impact of operations on subsea biological resources. NorthOil’s initiative blurred the distinction between the ‘technical’ and the ‘environmental’ tasks. As with Integrated Operations in general, closer collaboration is necessary between people with environmental expertise (e.g., the environmental coordinator) and people with technical expertise (e.g., the drilling engineers), as the latter may handle environmental information on the same infrastructure: “[I]n the long run, the technical guys will accept that some of the data [they are fed] is environmentally related. Not necessarily on the same channel but maybe on the same infrastructure.” (Senior researcher 1) Recognising this need for closer interdisciplinary collaboration, the project embarked on a formal process that was aimed at enrolling and engaging departments that are potentially affected by the availability of new and timely environmental data. This task proved challenging. Some department representatives showed a strong interest in supporting it, whereas other department representatives were more reluctant.
For example, NorthOil’s well drilling division stated that their work processes were defined and rigid due to strict safety requirements that govern the construction of wells. They maintained that it was not desirable for them to significantly alter their routines. In addition, they noted that coral reefs existed in the vicinity of a minority of wells that they drilled throughout the world; thus, the modifications to work routines could not focus solely on the risk of damaging the corals. The views of the drilling engineers were strongly motivated by their traditional preoccupation with safety in conjunction with the prevention of incidents in technical equipment/systems, as one project member bluntly stated:

“[T]here will also be some issues on how you allow [environmental and technical] data to coexist because the technical information has to have priority (…) A coral might wait, a machine won’t, so to speak (…). When you are drilling the first top section [of a well], you may have shallow gas, so it’s a very [safety-]critical operation, which will take priority.” (Senior researcher 1)

Consequently, NorthOil’s project managers decided to enforce the new routines for environmental risk prevention in corporate work processes related to well planning and drilling only upon the detection of vulnerable resources.

As illustrated for drilling, grafting new, environmentally oriented work tasks onto existing, entrenched tasks was challenging. Maps of the seabed environment have been critical to the planning and execution of offshore work. Knowledge of the seabed terrain is critical to properly install moorings and to establish the exact location of the pipelines and subsea equipment to prevent the destruction of infrastructure that has already been installed and is operational. NorthOil already had a sophisticated GIS-based infrastructure in which most of the company’s seabed infrastructure was digitally mapped. Critical maps, which existed as map layers, ranged from bathymetry (seabed topography) to subsea infrastructure with pipelines, moorings, and subsea production systems (refer to Figure 4 for an example). The new environmental maps needed to be incorporated as new map layers on the existing bathymetry and physical infrastructure with the correct coordinates. Although it had been possible to view corporate published maps through portal-like interfaces, a corporate intranet that aimed to integrate these maps into one portal was not available. In mid-2013, due to the pressure of NorthOil’s real-time environmental monitoring agenda, a corporate intranet initiative gained momentum such that relevant environmental map layers could finally be published on the existing corporate GIS-based infrastructure. According to one project member, “Maps are the main carrier of information in this project” and “It’s the maps that connect it all!” (Senior researcher 1) These statements addressed the significant variety of intended users. Map layers had to be formatted to ensure compatibility with the NorthOil corporate GIS, which describes the operational fields with the technical infrastructure, the rigs, and the pipelines. After several discussions with the technology vendors, the new environmental map layers were developed using the same GIS software engine as NorthOil’s native maps.

To summarise, the general concern of integrating new solutions with the existing norms and regulations of NorthOil involved work to balance two different trajectories: a top-down trajectory, based on a more administrative perspective (adapting the work processes), and a bottom-up trajectory, based on a technical starting point (adapting the sensor data to the maps).

5.6 Perspective taking

The establishment of environmental data management routines in NorthOil work processes had to be interleaved with engaging the users to help them understand environmental information. Specifying that the biomass concentration at a depth of 250 m in a GPS location in the North Sea at a given time is “-73.31 dB” (see note 12) is not very informative for a driller. ‘Perspective taking’ refers to the cross-disciplinary process of articulating Lophelia in a risk language that is interpretable and relevant to the oil and gas users. This issue was addressed by recruiting external experts to interpret environmental information in the context of Integrated Operations. As acknowledged by one NorthOil environmental advisor during an internal meeting, “[NorthOil] does not have the internal competence to perform the [environmental] data collection and the data analysis.” (Environmental advisor 1) Environmental data had to be analysed and appropriately presented as meaningful for its diverse users. QCB, which is a reputable, third-party international organisation, enforced the connection between the technically oriented expertise of oil and gas and the expertise of other disciplines.

The process of integrating the map layers described above had to correspond with the process of integrating the adopted language. To facilitate the translation of environmental concerns to (existing) operational work routines, NorthOil’s project framed its output in the vocabulary of risk, the dominant vocabulary in safety-critical industries, such as oil and gas. This approach was based on an existing risk framework from the guidelines for coral risk assessment developed by QCB (with significant experience in environmental monitoring and a history of quality certification and assessment) and issued in 2012 upon the request of the Norwegian Oil and Gas Association. The guidelines describe a number of existing and new routines for gathering data about the location and condition of the corals in an area. Existing data (such as bathymetry) are used and combined with data collected about the corals during subsea remotely operated vehicle (ROV) surveys. During these seabed surveys, QCB’s environmental experts would actually name and categorise the corals according to their health condition. QCB’s coral-risk assessment methodology, similar to environmental monitoring in general, occurred offline rather than in real-time: “we give the different habitats a value.” (Environmental advisor 5) This “value” is the result of a combination of technical and environmental information and expertise. It provides an assessment of the condition of coral structures based on the predicted spreading of cuttings during the drilling activity to provide oil and gas companies with recommendations about whether to drill and where to discharge the particles. Only the living portions of Lophelia structures are considered and provided with unique identifiers. Dead coral structures are discarded because they cannot be damaged. 
An evaluation of the condition of the corals is performed using a colour palette (green, yellow, or red, based on the percentage of living corals per total area of the coral structure; refer to Figure 5, top, for an example). Environmental data are manually combined with operational information (e.g., the drilling plan), and weather and current forecasts are fed into predictive modelling systems to simulate and map how the drilling discharges will disperse in the water column and settle on the seafloor over time. As part of NorthOil’s initiative, QCB’s traditional methodology for coral risk assessment was designated as the link between the real-time sensors in NorthOil’s (future) subsea observatories, the discharges, and the maps that portray the present and future risk for the coral structures based on tailored integration scripts. As the drilling activity begins, this integration produces an updated picture of potential changes in the impact of the drilling discharges on the coral structures, caused, for example, by a sudden change of the water current.

Figure 5. Top: a reproduction of QCB’s process for mapping the condition of some coral structures based on the predicted spreading of drill cuttings. A good condition represents a high risk of pollution, which is denoted by a red triangle. Photos: MAREANO/Institute of Marine Research, Norway. Bottom: a reproduction of the “risk matrix” incorporated into the GIS web portal by QCB, in which a severe consequence is predicted for the coral structure labelled “CR-01” against a small probability of being reached by the plume of drill cuttings. Source: authors’ drawing.

Crucially, the language of risk used to present the environmental information should be compatible with that in use by the professionals of an oil and gas company; at the same time, the risk for the subsea environment that is associated with oil and gas activities should be granted the same consideration as that due to technical problems. This concern was addressed by looking at existing technical solutions and methodologies from a different perspective. First, new combinations of sensors had to provide real-time relevance to parameters that are traditionally monitored offline. Second, methodologies such as the coral risk assessment procedure should be turned into an online machinery to present the risk for the environmental resources using the well-known language of risk matrices.

The first approach consisted of sensor adaptation. The rate at which sediment particles are produced during drilling is not obvious. NorthOil and QCB researchers quickly realised that the existing sensors were not capable of directly measuring the sedimentation and transmitting the results to shore in real time. Although cameras are frequently installed on the landers deployed as part of their “standardised package,” they were under-utilised. QCB proposed the idea of installing a sediment trap on one of the test landers to enable pictures to be taken every half hour:

“We have sediment traps but… that’s just data—you, you don’t have any electronic transfer; you just gather sediment in a tube and take it off. (…) But if you connect a camera to it, (…) [t]hat’s new; it’s something nobody has used.” (Environmental advisor 6)

To simplify the work of the image analysis software in detecting the actual level of sediment, a contrasting black-and-white background was added behind the trap.

A second solution targeted the way environmental risk was directly displayed to heterogeneous users. It was decided to adopt QCB’s methodology of mapping the risk for a given coral reef using a risk matrix (Figure 5 bottom). This mapping was included in the metadata structure that was associated with each coral reef: corals were assigned an identity, a time, a space, a responsible person, and a condition. The metadata structure with the risk matrix included popup windows that appeared when a user clicked the structure on the GIS web portal. Generally, matrices that describe risk are a well-established tool in risk-assessment methodologies. The matrix was put to use twice: to portray the current conditions of corals prior to any drilling activities and to predict the impact during and after drilling. The matrix generated by QCB consisted of a simple 4 × 4 table, in which the expected probability of pollution was indicated on the y-axis and its consequence was indicated on the x-axis. Each cell was filled with intuitive colours (green, yellow, orange, and red) to signal the level of danger associated with each situation (e.g., low or considerable). The state of risk for a given coral structure was pinpointed in one of the cells for the calculated current pollution and the estimated future pollution.
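To make the structure of such a record concrete, the following is a minimal illustrative sketch in Python, not a reproduction of QCB’s or NorthOil’s implementation. The field names, the level names, the placeholder values, and in particular the assignment of colours to the cells of the 4 × 4 matrix are our own assumptions; the text above specifies only the two axes, the four colours, and the five metadata attributes.

```python
from dataclasses import dataclass
from datetime import datetime
from enum import IntEnum


class Level(IntEnum):
    """Four ordinal steps used on both axes (the level names are hypothetical)."""
    LOW = 0
    MODERATE = 1
    CONSIDERABLE = 2
    SEVERE = 3


# Hypothetical colour layout of the 4 x 4 matrix.
# Rows: probability of pollution (y-axis); columns: consequence (x-axis).
COLOURS = (
    ("green",  "green",  "yellow", "orange"),
    ("green",  "yellow", "orange", "orange"),
    ("yellow", "orange", "orange", "red"),
    ("orange", "orange", "red",    "red"),
)


@dataclass(frozen=True)
class RiskCell:
    """One pinpointed cell of the risk matrix."""
    probability: Level
    consequence: Level

    @property
    def colour(self) -> str:
        return COLOURS[self.probability][self.consequence]


@dataclass
class CoralRecord:
    """Metadata attached to one coral structure on the map."""
    identity: str            # unique identifier, e.g. "CR-01"
    observed_at: datetime    # time
    latitude: float          # space
    longitude: float
    responsible: str         # responsible person
    condition: str           # condition colour from the survey
    current_risk: RiskCell   # matrix use 1: before drilling
    predicted_risk: RiskCell # matrix use 2: during and after drilling


# Placeholder values only; none of them are taken from the case.
cr01 = CoralRecord(
    identity="CR-01",
    observed_at=datetime(2000, 1, 1),
    latitude=0.0,
    longitude=0.0,
    responsible="environmental advisor",
    condition="green",
    current_risk=RiskCell(Level.LOW, Level.LOW),
    predicted_risk=RiskCell(probability=Level.LOW, consequence=Level.SEVERE),
)

print(cr01.identity, cr01.current_risk.colour, cr01.predicted_risk.colour)
```

The record carries two cells because the matrix was used twice, once for the situation before drilling and once for the predicted impact. The example mirrors the “CR-01” case in Figure 5, in which a severe consequence is set against a small probability.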

6 Discussion

Information infrastructure studies in CSCW have reiterated how changes occur in punctuated and constrained ways (Hanseth and Lundberg 2001). Driven by our question, “What is the work that makes a real-time environmental monitoring infrastructure work?”, we reveal the work of infrastructuring, which involves the slow co-evolution of work practices and infrastructures. To highlight the (articulation) work involved, we focus on the process of infrastructuring (transitive verb) rather than the infrastructure (noun) (Bossen and Markussen 2010). The analytic lens of infrastructural inversion is helpful for detailing the process of aligning the evolving environmental monitoring infrastructure with the significant installed base of existing tools, work practices, and professional roles and responsibilities. It is thus relevant for understanding the focus of CSCW, namely the investigation of how order is constructed in cooperative settings rather than of cooperation per se (Schmidt 2011). The “gestalt switch” involved in infrastructural inversion implies “shifting the emphasis from changes in infrastructural components to changes in infrastructural relations” (Bowker and Star 1999, p. 99). It remains, however, by definition a generic notion. In this paper, we adopt it to conceptualise the articulation efforts to establish a work infrastructure in-the-making. Inversion therefore allows us to target the evolution of ‘young’ infrastructures over time.

We operationalise inversion by characterizing infrastructuring through bootstrapping and enactment, two processes that are implicated in the establishment of an environmental monitoring infrastructure. Whereas bootstrapping is highly present in the early stages of infrastructure evolution, it gradually gives way to enactment work as the infrastructure grows. However, these constructs do not represent clear-cut moments, but are heuristically helpful for understanding the unfolding of the infrastructure. They encompass aspects of cross-contextual cooperation, ‘mundane’ issues of technology design, development, and use, together with epistemological issues about remote sensing and knowing the submarine environment only through data. A strong connection is drawn between organisational and scientific work. Infrastructural inversion indeed underlines the strict interdependence between these types of work (Bowker 1994; Edwards 2010). For instance, Edwards (2010) performs an inversion to describe how climate science evolved into what it is today. He shows how climate scientists collaborate to make sense on a global scale of poorly standardised datasets through down-to-earth activities of digitising and interpolating the datasets.

While Table 2 reports our interpretive template, Table 3 provides a bird’s-eye view of the temporal unfolding of our case in terms of the increasing degree of entanglement of NorthOil’s monitoring infrastructure with the company’s installed base and other heterogeneous and originally unrelated communities of stakeholders. We emphasise how the initial subset of players (whom we generally refer to as stakeholders, i.e., social groups having direct purposes in NorthOil’s initiative) increases as the infrastructure grows deeper and broader. Speaking of entanglement front-stages, on the one hand, the increasing depth and breadth of the monitoring infrastructure and, on the other hand, the interactional work processes (organisational work) that are required when new data, new phenomena, and thus new problems are introduced (scientific work) in a collaborative work setting. When viewed in this way, infrastructural inversion thus helps to analyse the temporal dimension of infrastructure. However, it is in the long term that infrastructures often fail to emerge. As Ribes and Finholt (2009) demonstrate, infrastructure development interweaves heterogeneous elements that correspond to different temporal scales of infrastructure evolution. Designers of infrastructure-in-the-making may be hindered by short-term design issues that are related to the immediate corporate goals. We previously discussed how the initial choice of an echo sounder of ordinary quality on the Arctic lander subsequently hampered the ability to track small eggs and larvae, as envisioned by NorthOil’s large-scale initiative. On the other hand, the choice of an area such as North Norway without oil-related operations, together with the type of open planning adopted by NorthOil (e.g., begin with a small solution, let it grow, share the results externally, and see what needs emerge), may be interpreted as a strategy to design for future growth by postponing final decisions.
This design strategy is summarised by an environmental advisor from QCB:

“So we will do the best out of what’s out there and make… how to put it?… the technology ready for having more, more landers, more information, and to create more detailed map layers, and detailed information. But if you have the infrastructure ready, it’s ready for doing that.” (Environmental advisor 4)

To “have the infrastructure ready” resonates with the findings by Karasti et al. (2010), who refer to “infrastructure time”: “[A]n infrastructure occurs when here-and-now practices are afforded by temporally extended technology that can be used in an everyday, reliable fashion. Infrastructure becomes transparent when it exists as an accessible, ready-to-hand installed base that enables envisioning future usages.” (p. 400) NorthOil’s case shows that infrastructure can be bootstrapped in a corporate setting by initially relying on a limited and small-scale set of commoditised lightweight devices that, despite some adaptations, constitute a “ready-to-hand” and “accessible” installed base involving a limited set of stakeholders with well-defined purposes and a low degree of entanglement with other infrastructures. The infrastructure is subsequently and pragmatically interwoven with the purposes of new stakeholders (e.g., QCB) or new purposes of the initial stakeholders (the drilling personnel), and with other corporate or external installed bases (e.g., the corporate work processes, QCB’s methodology).

6.1 Bootstrapping

When presenting the case of the oil and gas service company Schlumberger, Bowker (1994, p. 41) makes a point that is cardinal to our conception of bootstrapping: “in order to produce general science, Schlumberger needed to be local and particular.” Through bootstrapping, developers take heuristic advantage of the local/global tensions (e.g., dirty measurements at a site vs. clear-cut global parameters) by creating a ‘laboratory’ through small-scale operations. Being confined, the laboratory leaves little room for error propagation until it is black-boxed into general scientific results. Bootstrapping can be read as a process to explore the practical feasibility of environmental monitoring in areas inaccessible to humans (cf. Helmreich 2009).

NorthOil’s efforts can accordingly be defined as an attempt to create laboratories insofar as they scientifically re-create subsea nature for a collaborative infrastructure in an oil and gas company. As stated by Latour and Woolgar (1986), “Scientific activity is not ‘about nature,’ it is a fierce fight to construct reality. The laboratory is the workplace and the set of productive forces, which makes construction possible.” (p. 243, emphasis in original)

NorthOil created a laboratory on a very limited portion of the seafloor offshore North Norway. As is clear from Table 3, the number of stakeholders was initially also restricted to a few key actors with straightforward goals. This limitation of the ‘laboratory’ was the key that made it more manageable. Little room for error and low complexity were granted by a relatively small dependence on the other infrastructures, which belonged, however, to the uniform scientific domain of environmental science.

Performing (just) “enough work” in North Norway “to be able to take the local measurements” (Bowker 1994, p. 33) involved the installation of a minimal solution: no operational information, merely the coral and the tracking of the surrounding resources using a lander equipped with a few off-the-shelf sensors. The mundane work conducted to “construct reality” was, however, not minimal. Many combinations of terrain-coral-camera were tested before finding a natural spot that “was relatively good.” In other words, after many trial-and-error steps, the results of the Arctic laboratory could be packed into presentable scientific parameters (see concern ‘Sensor configuration’ in Table 2).

The relative simplicity of the Arctic observatory has political and economic importance. NorthOil can gain a competitive advantage by establishing an ecological baseline of the area if authorities permit oil and gas operations. This first seed was reinforced by connecting it to a fibre-optic cable to enable the availability of data in real-time and by gathering sufficient data for later analysis. The later corporate initiative, which was more ambitious, also employed a bootstrapping strategy with available data from the Arctic observatory. The materiality of the echo sounders on the Arctic lander was questioned to test their (in)ability to “take local measurements,” for example, of eggs and larvae (‘Granularity vs. scope’). A temporary solution had to be reached by injecting the results of mathematical models and experience data. The case of fish detection is paradigmatic of how bootstrapping work shaped the real-time environmental monitoring infrastructure. Given the features of the Arctic sensors, NorthOil’s project could only rely on the detection of fish with a swim bladder. Other commercially relevant species, such as mackerel, were difficult to spot using the given equipment and were therefore ignored. However, for the moment, it was “enough work.”

Given the complex political situation around NorthOil’s activities offshore North Norway, our case emphasises one aspect of bootstrapping: the need to ensure that the measurements are considered trustworthy. Jirotka et al. (2005) demonstrate that the trustworthiness of a system is not only dependent on an awareness of others’ performance but also “forms part of the work practices through which artefacts are produced and decisions are made.” (p. 376) Therefore, it is important “to attend to the work of making systems ‘trustable’.” (p. 375) This type of work unfolded on at least three levels.

First, the involvement of independent research institutions and third-party organisations (QCB) must be understood as a way to reinforce the perception of the neutrality (hence credibility) of NorthOil’s results in the eyes of external observers. The Arctic laboratory was created to bridge the conflicting interests of the different communities, hence the choice to deploy the lander in a geographical position that, on the one hand, was located as far as possible from oil-related activities and, on the other hand, was a strategic point to find “interesting” coral structures and abundant environmental resources in the vicinity.