Abstract

Survey researchers often treat self-assessed understanding of climate change as a rough proxy for knowledge, which might affect what people believe about this topic. Self-assessments can be unrealistically high, however, and correlated with politics, so they deserve study in their own right. Turning the usual perspective around to view self-assessed understanding as a dependent variable, problematically related to actual knowledge, casts self-assessments in a new light. Analysis of a 2016 US survey that carried a five-item test of very basic, belief-neutral but climate-relevant knowledge (such as knowing about the location of the North and South Poles) finds that, at any given level of knowledge, people saying they “understand a great deal” about climate change are more likely to be older, college-educated, and male. Self-assessed understanding exhibits a U-shaped political pattern: highest among liberals and the most conservative, but lowest among moderate conservatives. Among liberal and middle-of-the-road respondents, self-assessed understanding of climate change is positively related to knowledge. Among the most conservative, however, self-assessed understanding is unrelated or even negatively related to knowledge. For that group in particular, high self-assessed understanding reflects confidence in political views, rather than knowledge about the physical world.

1 Introduction

A rising fraction of the US public recognizes that climate change is happening now, caused mainly by human activities (Hamilton 2017; Saad 2017). The rise coincides with growing public awareness that most scientists agree on this point (Cook et al. 2016; Hamilton 2016a), which research identifies as a “gateway cognition” for acceptance of anthropogenic climate change (Ding et al. 2011; Lewandowsky et al. 2013; van der Linden et al. 2015, 2017a). Ideally, recognition of anthropogenic climate change (ACC) should also reflect improved public knowledge of the science itself, but such knowledge appears thin (Leiserowitz et al. 2010). Moreover, how people answer factual questions on climate is often shaped by their underlying beliefs, rather than vice versa, undermining causal interpretation (Hamilton 2012; Kahan 2015). Without definitive measurements, climate knowledge has not been well tracked over time. Cross-sectional research provides the main avenue for its study.

The simplest hypothesis about knowledge and opinions, termed the information deficit model, posits that people fail to accept scientific conclusions about ACC because they lack good information (Suldovsky 2017). This hypothesis finds support in some experimental studies, including recent work on “inoculating” people against misinformation (Cook et al. 2017; van der Linden et al. 2017b). Non-experimental support includes survey research where education or general knowledge exhibit overall positive effects on ACC acceptance (Ehret et al. 2017; Hamilton et al. 2015a). Empirically, however, the information deficit model proves incomplete. It cannot explain the politically motivated rejection that is often directed against ACC and climate science (Dunlap et al. 2016; Dunlap and McCright 2015). Well-educated conservatives are among the most vehement opponents (Hamilton 2008, 2011, 2012; McCright and Dunlap 2011). Rejection of ACC by conservative information elites requires different hypotheses positing politically selective acquisition of information, as in the overlapping concepts of biased assimilation (Corner et al. 2012; Ehret et al. 2017; McCright and Dunlap 2011), elite cues (Brulle et al. 2012; Carmichael and Brulle 2017; Darmofal 2005), motivated reasoning (Kraft et al. 2015; Kunda 1990; Taber and Lodge 2006), compensatory control (Kay et al. 2009), or cultural cognition (Kahan et al. 2011).

Research on public opinion about climate change often finds support for both information deficit and biased-assimilation type processes, indicating that both are important, but to different degrees and among different people (Ehret et al. 2017). Evidence for information deficit processes includes the positive main effects on ACC acceptance from respondent education, and from objective knowledge measures including general science literacy as well as more focused climate knowledge (Hamilton et al. 2012). Evidence for biased-assimilation type processes includes the main (additive) effects from indicators of politics, ideology, or worldview seen in almost every study. Evidence that these processes vary across individuals includes widely replicated interaction effects with the general form information × politics, wherein the influence of information indicators, such as education, science literacy, or numeracy, on ACC acceptance proves to be positive among liberals and moderates but near zero or negative among conservatives (Bolin and Hamilton 2018; Drummond and Fischhoff 2017; Hamilton 2008, 2011; Hamilton et al. 2012, 2015a; Kahan et al. 2012; McCright and Dunlap 2011). If statistical models do not allow for such interactions, their estimates for the main effects of education or knowledge measures may essentially be averaging divergent trends. Where interactions are included, main effects describe influence of education or knowledge given zero values or base categories of the politics indicators, so interpretation depends on details of variable coding.

Definitive evidence requires objective tests of knowledge—but these raise issues of content. Less definitively, researchers might just ask people how well they understand climate change, or how well informed they feel. Self-assessed understanding correlates with specific opinions in some studies, but like education, it can exhibit divergent effects depending on political identity. To the extent that self-assessment reflects physical-world or scientific knowledge, it should legitimately inform climate beliefs; but self-assessments could also reflect confidence in politically based views. Some studies nevertheless analyze self-assessed understanding as an independent variable, possibly predictive of climate change opinions (Malka et al. 2009; Hamilton 2011; McCright and Dunlap 2011).

This paper turns that perspective around to examine “understanding” as a dependent variable. Survey research has established how respondent characteristics such as age, sex, education, and political identity predict beliefs about climate change. Do these background characteristics also predict self-assessed understanding? Controlling for background factors, how well does tested knowledge predict understanding? Do the effects of knowledge on understanding change with political identity, similar to the information × politics-type interaction effects widely reported for climate beliefs themselves? A nationwide US survey conducted in 2016 provides data to explore these questions.

2 Climate change views and self-assessed understanding

Two standard questions about climate change, exhibiting high reliability and criterion validity, have been asked in more than 40,000 interviews across many surveys since 2010 (e.g., Hamilton et al. 2015a). Understanding inquires how well respondents feel they understand the issue of climate change or global warming; most people say they understand “a moderate amount” or “a great deal.” Climate asks which of three statements they think is most accurate; one choice corresponds to the scientific consensus that climate change is happening now, caused mainly by human activities. Table 1 gives the wording of both questions, along with response percentages from the Polar, Environment, and Science (POLES) survey, a nationwide US 2016 survey comprising the principal data for this paper.

Researchers at the University of New Hampshire and Columbia University designed the POLES survey to assess general public knowledge and perceptions of science, with a particular interest in the Earth’s polar regions (Hamilton 2016b). Random sample cell and landline telephone interviewing for POLES took place in two stages, August and November/December 2016 (combined n = 1411). Sampling weights used for all analyses in this paper make adjustments to better represent the US population (Note 1).

Sixty-four percent of POLES respondents agree with scientists that climate change is happening now, caused mainly by human activities (Fig. 1a). Only 29% think climate change is happening but mainly for natural reasons; few think it is not changing, or admit they do not know. These results resemble those from many other surveys, and fit with an upward trend observed from 2010 to 2017 (Hamilton 2017).

Party line or ideological divisions dominate survey responses to climate change questions. Figure 1b illustrates this using the four-party scheme of Hamilton and Saito (2015), which distinguishes Tea Party supporters from other groups. Although the U.S. Tea Party is an informal, decentralized organization, survey respondents who self-identify as Tea Party supporters often express significantly more rejectionist views regarding climate change and other science or environmental topics, compared with non-Tea Party Republicans (Hamilton and Saito 2015; Leiserowitz et al. 2011; Shao 2016). In the POLES data, for example, most Democrats and Independents agree that climate change is happening now, caused mainly by human activities. A minority of Republicans and even fewer Tea Party supporters accept this consensus. A 59-point gap separates Democrats from Tea Party supporters.

Partisan gradients like that in Fig. 1b occur on many science or environment-related issues, although they tend to be steepest on climate change (Hamilton and Saito 2015). Self-assessed understanding follows a different pattern, however. Figure 1c shows that a majority of POLES respondents feel they understand a moderate amount about climate change, and almost one-fourth respond “a great deal.” The partisan pattern (Fig. 1d) is not monotonic but U-shaped: Democrats and Tea Party supporters most often say they understand a great deal, although substantively their opinions are opposite. Similar U shapes (not shown) occur when understanding is graphed against respondent ideology from extreme liberal to extreme conservative, or against frequency of religious service attendance from never to at least once per week (Note 2). “Understanding a great deal” is also most common among the small fraction who believe that climate change is not happening now. Clearly, asserting that one understands a great deal about climate change relates to political outlook; but how does it relate to knowledge?

3 Testing climate-relevant knowledge

Survey researchers operationalize science literacy using sets of basic questions with clear answers, such as whether the Earth orbits the Sun or vice versa (National Science Board 2010; Hamilton et al. 2012). The questions focus on facts with universal acceptance among scientists, but some of these—such as whether the Earth is billions of years old, or whether humans evolved from earlier forms of life—can evoke religion-based responses among non-scientists, leading to recommendations that they not be included in measures of science literacy (Roos 2014). Climate change knowledge questions face a similar problem. Basic facts about climate change, such as the human-caused buildup of greenhouse gases, the rise of global temperatures, or the melting of Arctic ice are rejected as a matter of belief by many people. In their place, alternative (false) facts have been promoted by partisan sources: for example, that recent volcanoes emit more CO2 than humans, global temperatures are not really rising, or Arctic sea ice declined but then recovered (Hamilton 2012). A climate-literacy score constructed from questions on the physical reality of climate trends makes sense from a scientific standpoint, and would certainly correlate with acceptance of ACC. Interpreting this correlation in causal terms risks circularity, however. Politically linked beliefs and sources inform response to factual questions, so that factual responses already are confounded with social identity (Kahan et al. 2012).

These identity-linked questions have answers that could be guessed (rightly or wrongly) from ideology or general opinions about ACC, but some other questions do not have this property. For example, the area of late-summer ice on the Arctic Ocean objectively has decreased over the past 30 years, but survey responses on this point behave partly as if one had asked people for their personal opinion about ACC. On the other hand, when asked whether the North Pole is located on land ice or sea ice, opinions about climate change whisper no clues; responses behave more like neutral knowledge (Hamilton 2015a). Similarly, asking whether sea level is rising will bring answers that reflect ACC beliefs; but those beliefs do not suggest whether sea ice or land ice could raise sea level more.

The five knowledge questions listed in Table 1 represent this second, belief-neutral type. Figure 2a–e chart the POLES survey responses. The questions range from easy to difficult: the meaning of the greenhouse effect is answered correctly by 64% of the respondents, but only 18% are aware the USA has territory and people in the Arctic. Together, these five items provide a rough index of knowledge (Fig. 2f; Note 3).
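As a rough illustration of how an additive index of this kind could be scored, the sketch below counts correct answers across five items. The item names npole, spole, and sealevel follow the labels used later in the text; greenhouse and arctic_us are hypothetical labels. The response codes in the answer key and the treatment of missing or "do not know" answers as incorrect are assumptions made for illustration, not the survey's documented coding.

```python
# Hypothetical answer key: the response codes are illustrative placeholders,
# not the actual wording or coding used in the POLES survey.
ANSWER_KEY = {
    "greenhouse": "traps heat",  # hypothetical item name and code
    "npole": "sea ice",          # North Pole lies on Arctic Ocean sea ice
    "spole": "land ice",         # South Pole lies on a continent
    "sealevel": "land ice",      # melting land ice could raise sea level more
    "arctic_us": "yes",          # hypothetical item name; USA has Arctic territory
}


def knowledge_score(responses):
    """Return the number of correct answers (0 to 5).

    Any answer that does not match the key, including a missing answer
    or "do not know", is counted as incorrect (an assumption).
    """
    return sum(responses.get(item) == key for item, key in ANSWER_KEY.items())


# One made-up respondent, for demonstration only.
example = {
    "greenhouse": "traps heat",
    "npole": "land ice",
    "spole": "land ice",
    "sealevel": "do not know",
    "arctic_us": "yes",
}
print(knowledge_score(example))
```

A simple sum weights every item equally, which is the simplest choice when items are meant to contribute alike to a rough index.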

By design, these questions do not address the reality of climate change itself, but all have substantial relevance to that topic. Knowing what scientists mean by greenhouse effect is fundamental to the whole discourse, even for those who dispute its reality. Although some people give answers about whether Arctic sea ice has declined based on their political beliefs, such beliefs offer no guidance in recalling that the North Pole is located in the Arctic Ocean or the South Pole on a continent. Nor do political beliefs help to understand the different impacts on sea level from melting of Greenland and Antarctic land ice (potentially more than 60 m) compared with Arctic sea ice (a few centimeters, caused by freshening the ocean although sea ice is already floating). Changing conditions in these icy realms have been major themes in scientific and popular discussions about climate change, however, including contrarian arguments about why change is not happening. The most difficult question in this set asks whether the USA has territory and people in the Arctic. Almost 8 million km² of Alaska lie north of the Arctic Circle, including Prudhoe Bay oilfields and the Arctic National Wildlife Refuge, along with the predominantly Iñupiat towns of Barrow (pop 4500), Kotzebue (3200), and many smaller communities. More than a dozen Arctic or subarctic Alaska communities face threats from climate-linked erosion that in some cases may force their abandonment (Bronen 2009; Hamilton et al. 2016b; Marino 2015; USACE 2009).

These five questions do not directly test knowledge of climate change, but that becomes a virtue in terms of separating them from climate change beliefs. The basic facts nevertheless are central to understanding this issue—why sea level often gets mentioned in connection with Antarctica, for example, or how the North and South Poles are different. Put another way, someone who scores poorly on this five-item quiz, unaware of polar locations or what “greenhouse effect” means, does not understand a great deal about climate change.

4 Predictors of self-assessed understanding

The knowledge index exhibits a mild though statistically significant correlation with ACC acceptance. Relationships between knowledge and self-assessed understanding, the focus of this paper, are more interesting and complex. Table 2 explores these relationships through logit regression models that test knowledge alongside other characteristics as predictors of “understanding a great deal” (Note 4). Models 1 and 2 employ the 2016 US POLES survey data described earlier, with variable definitions and coding as given in Table 1. Respondent political identity is represented either by four discrete parties (model 1) or alternatively by an ordinal 5-point scale from liberal to conservative (model 2). An indicator for the pre- and post-election POLES survey stages (August and November/December 2016) is included as a control, but demonstrably has no effect (Note 5).

For an independent replication, model 3 employs 2014–2015 survey data from New Hampshire, where the climate and understanding questions had been asked alongside a subset of knowledge items (npole, spole, and sealevel). The knowledge scores for model 3 thus range from 0 to 3, unlike the 0 to 5 scores used in models 1 and 2; otherwise, variable definitions and codes are the same. Three New Hampshire surveys are pooled to provide an adequate sample for this analysis; the individual-survey indicators detect no significant differences. See Hamilton (2016a, 2016b) for more about the New Hampshire series, which closely tracks national data.

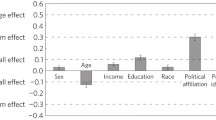

Other things being equal, men are more likely than women to say that they understand a great deal about climate change (models 1–3). Respondents who are better educated (models 1 and 3) or older (models 1 and 2) tend to express greater confidence too. Among those who scored zero on the knowledge quiz (answered no questions correctly), Tea Party supporters (model 1) and conservatives (model 3) are more inclined than members of other political groups to say they understand a great deal (Note 6).

Tested knowledge exhibits a positive main effect on self-assessed understanding in all three models. Because knowledge also appears in the knowledge × party or knowledge × ideology interaction terms, we can interpret these main effects as the effect of knowledge among Democrats (base category of party) in model 1, or among liberals (zero value of ideology) in models 2 and 3.
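One way to write out the structure being described, in notation that is assumed here rather than taken from Table 2: let y_i = 1 when respondent i reports understanding “a great deal,” let k_i be the knowledge score, let d_ij indicate membership in party j (with Democrats as the omitted base category), and let x_i collect the remaining predictors.

```latex
\begin{align}
\operatorname{logit} \Pr(y_i = 1)
  &= \beta_0 + \beta_1 k_i
   + \sum_{j} \gamma_j d_{ij}
   + \sum_{j} \delta_j \, (k_i \times d_{ij})
   + \mathbf{x}_i^{\top} \boldsymbol{\theta} \\
\frac{\partial \operatorname{logit} \Pr(y_i = 1)}{\partial k_i}
  &= \beta_1 + \sum_{j} \delta_j d_{ij}
\end{align}
```

For Democrats every d_ij equals zero, so the knowledge slope reduces to β_1; for party j it is β_1 + δ_j. By the same logic, γ_j describes the party contrast only at k_i = 0. In models 2 and 3, an ideology score would take the place of the party indicators, so β_1 would describe the slope where that score equals zero.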

All three models in Table 2 find significant interactions between knowledge and political identity, each with the same general meaning: objective knowledge is positively related to self-assessed understanding among Democrats and Independents, or among liberals and moderates—but not so among conservatives. Among the most conservative respondents, knowledge appears unrelated or even negatively related to self-assessed understanding. In models 1 and 3, conservatives with low knowledge scores appear more likely than other conservatives to say they understand a great deal about climate change.

Figure 3 visualizes this interaction as an adjusted margins plot based on model 1. The logit regression coefficients relating knowledge to understanding, adjusted for other variables in this model, are positive among Democrats (b = 0.402; odds ratio e^b = 1.495), Independents (b = 0.402 − 0.052 = 0.350; odds ratio e^b = 1.419) and, to a weaker degree, Republicans (b = 0.402 − 0.226 = 0.176; odds ratio e^b = 1.192). The coefficient turns negative (b = 0.402 − 0.474 = −0.072; odds ratio e^b = 0.931) among Tea Party supporters, indicating that higher confidence in that subgroup coincides with less knowledge.
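The arithmetic behind these group-specific slopes can be reproduced in a few lines. The sketch below uses only the model 1 coefficients quoted in the paragraph above; the function and variable names are illustrative and do not come from the original analysis.

```python
import math

# Coefficients quoted in the text for model 1 (logit scale).
B_KNOWLEDGE = 0.402  # main effect: knowledge slope among Democrats (base category)
INTERACTIONS = {
    "Democrat": 0.0,        # base category, no adjustment
    "Independent": -0.052,  # knowledge x Independent
    "Republican": -0.226,   # knowledge x Republican
    "Tea Party": -0.474,    # knowledge x Tea Party
}


def knowledge_slopes(main=B_KNOWLEDGE, interactions=INTERACTIONS):
    """Return {party: (slope, odds_ratio)}, each rounded to 3 decimals.

    The slope for a party is the main effect plus that party's interaction
    coefficient; the odds ratio is exp(slope).
    """
    return {
        party: (round(main + delta, 3), round(math.exp(main + delta), 3))
        for party, delta in interactions.items()
    }


for party, (b, odds_ratio) in knowledge_slopes().items():
    print(f"{party:12s} b = {b:+.3f}  odds ratio = {odds_ratio:.3f}")
```

An odds ratio above 1 means each additional correct answer multiplies the odds of saying “a great deal” by that factor; a value below 1, as for Tea Party supporters, means the odds fall slightly with each correct answer.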

Fig. 3. Probability of “understand a great deal,” by knowledge score and political party identification (adjusted margins plot based on model 1 of Table 2)

Such interactions could partly reflect divergent interpretations of “understanding” climate change, if for conservatives that question disproportionately evokes confidence in their political beliefs rather than physical-world knowledge. Evidence consistent with this explanation appears in the pattern of “do not know” responses. Among respondents professing a great deal of understanding about climate change, the percentage of “do not know” responses to our knowledge questions is significantly related to party and ideology, across both US and New Hampshire survey datasets. For example, 49% of Tea Party supporters claiming a great deal of understanding said they did not know the answer to one or more knowledge questions on the POLES surveys, 22% admitted not knowing two or more, and 11% did not know three or more. The comparable figures for Democrats with a great deal of understanding are 22% (one or more), 4% (two or more), and 1% (three or more). Other parties fall in between, also giving fewer “do not know” responses than Tea Partiers.

Interaction effects can be notoriously sample-specific, due to statistical problems with multicollinearity, influential observations, and multiple comparisons. Three replications in Table 2, however, provide some evidence for stability.

5 Discussion

Public acceptance that ACC is real exhibits a monotonic partisan gradient, whereas self-assessed understanding follows a U-shaped pattern (Fig. 1d). Working in opposition, these political patterns render pointless a simple correlation between “understanding” and ACC acceptance. Among the most conservative, self-assessed understanding is unrelated or even negatively related to physical-world knowledge. Analysis of “do not know” responses suggests that conservatives disproportionately interpret the understanding question as referring to their political confidence rather than science knowledge. Self-assessments thus plausibly reflect both divergent interpretations of “understanding” (political vs. scientific) and politically guided filtering of nominally scientific information.

Politically guided information filtering can be described in symmetrical terms as something “both sides do.” Some experiments find support for symmetry (Frimer et al. 2017; Washburn and Skitka 2017), whereas others report that “asymmetries abound” (Jost 2017). The education × politics interactions frequently observed with climate change opinions on surveys might be read symmetrically: more educated (or scientifically literate) partisans are more efficacious at filtering, so they acquire information that intensifies identity-appropriate views in either direction. This overlooks a basic difference in content, however. More educated Democrats and Independents, or liberals and moderates, incline toward the climate change views expressed by most scientists, so they are filtering for scientifically informed sources (Carmichael et al. 2017). Conversely, conservatives who reject this scientific consensus rely more on political sources to filter science information, or for their sense of understanding.

Other studies have noted lower conservative trust of scientists in general (Gauchat 2012; Nadelson et al. 2014) and on specific topics from climate change and evolution to vaccines and nuclear power (Hamilton 2015b; Hamilton et al. 2015b). Regarding non-science topics as well, conservatives appear to have more politically homogeneous online networks (Boutyline and Willer 2017). They tend to be more attuned to partisan cues (Bullock 2011; Carmichael et al. 2017), more politically selective in their exposure to information (Rodriguez et al. 2017), and more responsive to ideologically compatible fake news (Guess et al. 2018).

The asymmetrical interaction in Fig. 3 adds a new piece to this puzzle. Liberals and conservatives both overstate their understanding of climate change. Science-aware individuals might accept the scientific consensus, however, without necessarily understanding the evidence behind it. This point has been emphasized in research on awareness of the scientific consensus as a “gateway cognition” for recognizing the reality of anthropogenic climate change (Lewandowsky et al. 2013; Maibach et al. 2014; van der Linden et al. 2015, 2017a). Truly understanding even a moderate amount about this topic, however, does require knowledge of science. In contrast, the minimal quiz employed here covers elementary facts that, if not already known, could be picked up through casual attention. Although self-assessments are biased high and our knowledge quiz sets the bar low, among liberals and moderates these two measures at least correlate, such that higher self-assessments tend to occur with more knowledge. Among the most conservative respondents that does not hold; higher self-assessments appear unrelated or even negatively related to physical-world knowledge.

These results have implications for science communication. Efforts at conveying scientific information may not reach people who confidently reject that science without knowing basic facts. We see many others, however, who should be more open to outreach and science communication. These include political moderates holding less committed views on climate, and expressing lower confidence in their own understanding. Self-assessed understanding reaches its lowest levels among non-Tea Party Republicans or moderate conservatives, in both surveys analyzed here. Moderates and moderate conservatives could be a focus of future research evaluating differential effects of outreach or communication strategies.

Notes

1. Weights take into account the regional population; number of adults and telephone numbers within households; and respondent age, sex, and race. Response rates in the August and November/December stages of the POLES survey were 27–30% for Alaska, and 15–24% for the other 49 states, as calculated by AAPOR 2016 definition 4.

2. Similar U-shaped relationships between political identity and high self-assessed understanding of climate change can also be found in many other national or regional survey datasets including those described by Hamilton (2012), Hamilton and Saito (2015), or Hamilton et al. (2016a, 2018a, 2018b). In each of these datasets as in Figure 1d, non-Tea Party Republicans or moderate conservatives express the least confidence in their understanding of climate change.

3. Principal components analysis (not shown) confirms that these five items load on the first component with roughly similar weights, so a coefficient-weighted knowledge index behaves no differently (r = 0.996) from the simple additive index.

4. For straightforward interpretation in terms of overconfidence, understanding is here coded as 1 for “a great deal” and 0 otherwise. Alternative specifications using ordinal codes (understanding coded from 1 “nothing” to 4 “a great deal”), estimated by probability-weighted ordered logit regression, yield generally similar conclusions. In the ordinal versions of models 1 and 3, the same predictors have significant effects, with the same signs, as their counterparts in Table 2. The knowledge × party or knowledge × ideology interaction terms are, if anything, slightly stronger in these two ordinal versions. In the ordinal version of model 2, the education effect is stronger but the knowledge × ideology interaction slightly weaker, falling short of statistical significance (p = 0.09), although with the same sign. Otherwise, the significance as well as signs of coefficients are the same for ordinal and dichotomous versions of model 2.

5. Nor are there significant election × party or election × ideology interactions, which were tested in alternative versions of models 1 and 2 (not shown). Previous analyses looking at different variables on the POLES surveys likewise found little difference between pre- and post-election responses (Hamilton 2017, 2018b).

6. Because party and ideology interact with knowledge, their main effects in Table 2 describe the effect of party or ideology when knowledge = 0, that is, when no questions are answered correctly.

References

AAPOR (2016). Standard definitions: Final disposition of case codes and outcome rates for surveys (2016 revision). T. W. Smith, Ed., American Association for Public Opinion Research

Bolin JL, Hamilton LC (2018) The news you choose: news media preferences amplify views on climate change. Environ Politics. https://doi.org/10.1080/09644016.2018.1423909

Boutyline A, Willer R (2017) The social structure of political echo chambers: variation in ideological homophily in online networks. Polit Psychol 38:551–569

Bronen R (2009) Forced migration of Alaskan indigenous communities due to climate change: creating a human rights response, pp. 68–73 in Oliver-Smith A, Shen X (eds.) Linking Environmental Change, Migration and Social Vulnerability. Bonn: UNU Institute for Environment and Human Security

Brulle RJ, Carmichael J, Jenkins JC (2012) Shifting public opinion on climate change: an empirical assessment of factors influencing concern over climate change in the U.S., 2002–2010. Clim Chang 114:169–188

Bullock JG (2011) Elite influence on public opinion in an informed electorate. Am Political Sci Review 105:496–515

Carmichael JT, Brulle RJ (2017) Elite cues, media coverage, and public concern: an integrated path analysis of public opinion on climate change, 2001–2013. Environ Politics 26(2):232–252. https://doi.org/10.1080/09644016.2016.1263433

Carmichael JT, Brulle RJ, Huxster JK (2017) The great divide: understanding the role of media and other drivers of the partisan divide in public concern over climate change in the USA, 2001–2014. Clim Chang 141:599–612. https://doi.org/10.1007/s10584-017-1908-1

Cook J, Oreskes N, Doran PT, Anderegg WRL, Verheggen B, Maibach EW, Carlton JS, Lewandowsky S, Skuce AG, Green SA, Nuccitelli D, Jacobs P, Richardson M, Winkler B, Painting R, Rice K (2016) Consensus on consensus: a synthesis of consensus estimates on human-caused global warming. Environ Res Lett 11(4). https://doi.org/10.1088/1748-9326/11/4/048002

Cook J, Lewandowsky S, Ecker UKH (2017) Neutralizing misinformation through inoculation: exposing misleading argumentation techniques reduces their influence. PLoS One. https://doi.org/10.1371/journal.pone.0175799

Corner A, Whitmarsh L, Xenias D (2012) Uncertainty, scepticism and attitudes towards climate change: biased assimilation and attitude polarisation. Clim Chang 114(3–4):463–478

Darmofal D (2005) Elite cues and citizen disagreement with expert opinion. Political Res Quarterly 58(3):381–395

Ding D, Maibach EW, Zhao X, Roser-Renouf C, Leiserowitz A (2011) Support for climate policy and societal action are linked to perceptions about scientific agreement. Nat Clim Chang 1:462–466. https://doi.org/10.1038/NCLIMATE1295

Drummond C, Fischhoff B (2017) Individuals with greater science literacy and education have more polarized beliefs on controversial science topics. Proc Natl Acad Sci. https://doi.org/10.1073/pnas.1704882114

Dunlap RE, McCright AM (2015) Challenging climate change: the denial countermovement. Pp. 300–332 in Dunlap RE, Brulle RJ (eds), Climate Change and Society: Sociological Perspectives. New York: Oxford University Press

Dunlap RE, McCright AM, Yarosh JH (2016) The political divide on climate change: partisan polarization widens in the U.S. Environment 58(5):4–23. https://doi.org/10.1080/00139157.2016.1208995

Ehret PJ, Sparks A, Sherman D (2017) Support for environmental protection: an integration of ideological-consistency and information-deficit models. Environ Politics 26(2):253–277. https://doi.org/10.1080/09644016.2016.1256960

Frimer JA, Skitka LJ, Motyl M (2017) Liberals and conservatives are similarly motivated to avoid exposure to one another’s opinions. J Exp Soc Psychol 72:1–12. https://doi.org/10.1016/j.jesp.2017.04.003

Gauchat G (2012) Politicization of science in the public sphere: a study of public trust in the United States, 1974 to 2010. Am Sociol Rev 77:167–187. https://doi.org/10.1177/0003122412438225

Guess A, Nyhan B, Reifler J (2018) Selective exposure to misinformation: evidence from the consumption of fake news during the 2016 U.S. presidential campaign. Working paper available at: https://t.co/lUN71y3DhT

Hamilton LC (2008) Who cares about polar regions? Results from a survey of U.S. public opinion. Arct Antarct Alp Res 40(4):671–678

Hamilton LC (2011) Education, politics and opinions about climate change: evidence for interaction effects. Clim Chang 104:231–242. https://doi.org/10.1007/s10584-010-9957-8

Hamilton LC (2012) Did the Arctic ice recover? Demographics of true and false climate facts. Weather, Climate, Soc 4(4):236–249. https://doi.org/10.1175/WCAS-D-12-00008.1

Hamilton LC (2015a) Polar facts in the age of polarization. Polar Geogr 38(2):89–106. https://doi.org/10.1080/1088937X.2015.1051158

Hamilton LC (2015b) Conservative and liberal views of science: does trust depend on topic? Durham, NH: Carsey School of Public Policy. http://scholars.unh.edu/carsey/252/

Hamilton LC (2016a) Public awareness of the scientific consensus on climate. SAGE Open. https://doi.org/10.1177/2158244016676296

Hamilton LC (2016b) Where is the North Pole? An election-year survey on global change. Durham, NH: Carsey School of Public Policy. http://scholars.unh.edu/carsey/285/

Hamilton LC (2017) Public acceptance of human-caused climate change is gradually rising. Durham, NH: Carsey School of Public Policy. http://scholars.unh.edu/carsey/322/

Hamilton LC, Saito K (2015) A four-party view of U.S. environmental concern. Environ Politics 24(2):212–227. https://doi.org/10.1080/09644016.2014.976485

Hamilton LC, Cutler MJ, Schaefer A (2012) Public knowledge and concern about polar-region warming. Polar Geogr 35(2):155–168. https://doi.org/10.1080/1088937X.2012.684155

Hamilton LC, Hartter J, Lemcke-Stampone M, Moore DW, Safford TG (2015a) Tracking public beliefs about anthropogenic climate change. PLoS One 10(9):e0138208. https://doi.org/10.1371/journal.pone.0138208

Hamilton LC, Hartter J, Saito K (2015b) Trust in scientists on climate change and vaccines. SAGE Open. https://doi.org/10.1177/2158244015602752

Hamilton LC, Saito K, Loring PA, Lammers RB, Huntington HP (2016a) Climigration? Population and climate change in Arctic Alaska. Popul Environ 38(2):115–133. https://doi.org/10.1007/s11111-016-0259-6

Hamilton LC, Hartter J, Keim BD, Boag AE, Palace MW, Stevens FR, Ducey MJ (2016b) Wildfire, climate, and perceptions in Northeast Oregon. Reg Environ Chang 16:1819–1832. https://doi.org/10.1007/s10113-015-0914-y

Hamilton LC, Lemcke-Stampone M, Grimm C (2018a) Cold winters warming? Perceptions of climate change in the North Country. Weather, Climate, Soc. https://doi.org/10.1175/WCAS-D-18-0020.1

Hamilton LC, Bell E, Hartter J, Salerno JD (2018b) A change in the wind? U.S. public views on renewable energy and climate compared. Energy, Sustainability Soc 8(11). https://doi.org/10.1080/09644016.2018.1423909

Jost JT (2017) Asymmetries abound: ideological differences in emotion, partisanship, motivated reasoning, social network structure, and political trust. J Consum Psychol 27(4):546–553. https://doi.org/10.1016/j.jcps.2017.08.004

Kahan DM (2015) Climate science communication and the measurement problem. Advances Political Psychology 36(1):1–43. https://doi.org/10.1111/pops.12244

Kahan DM, Jenkins-Smith H, Braman D (2011) Cultural cognition of scientific consensus. J Risk Res 14(2):147–174. https://doi.org/10.1080/13669877.2010.511246

Kahan DM, Peters E, Wittlin M, Slovic P, Ouellette LL, Braman D, Mandel G (2012) The polarizing impact of science literacy and numeracy on perceived climate change risks. Nat Clim Chang 2(10):732–735. https://doi.org/10.1038/NCLIMATE1547

Kay AC, Whitson JA, Gaucher D, Galinsky AD (2009) Compensatory control: achieving order through the mind, our institutions, and the heavens. Curr Dir Psychol Sci 18(5):264–268

Kraft PW, Lodge M, Taber CS (2015) Why people ‘Don’t trust the evidence’: motivated reasoning and scientific beliefs. Annals, American Academy Political Social Sci 658:121–133. https://doi.org/10.1177/0002716214554758

Kunda Z (1990) The case for motivated reasoning. Psychol Bull 108(3):480–498

Leiserowitz A, Smith N, Marlon JR (2010) Americans’ Knowledge of Climate Change. (New Haven, CT: Yale Project on Climate Change Communication). Available online at http://environment.yale.edu/climate/files/ClimateChangeKnowledge2010.pdf

Leiserowitz A, Maibach E, Roser-Renouf C, Hmielowski JD (2011) Politics & global warming: Democrats, Republicans, Independents, and the Tea Party. New Haven, CT: Yale Project on Climate Change Communication. Available online at http://environment.yale.edu/climate/files/PoliticsGlobalWarming2011.pdf (accessed 12/5/2014)

Lewandowsky S, Gignac GE, Vaughan S (2013) The pivotal role of perceived scientific consensus in acceptance of science. Nat Clim Chang 3:399–404. https://doi.org/10.1038/nclimate1720

McCright AM, Dunlap RE (2011) The politicization of climate change and polarization in the American public’s views of global warming, 2001–2010. Sociol Q 52:155–194

Maibach E, Myers T, Leiserowitz A (2014) Climate scientists need to set the record straight: there is a scientific consensus that human-caused climate change is happening. Earth’s Future 2(5):295–298. https://doi.org/10.1002/2013EF000226

Malka A, Krosnick JA, Langer G (2009) The association of knowledge with concern about global warming: trusted information sources shape public thinking. Risk Anal 29(5):633–647

Marino E (2015) Fierce climate, sacred ground. University of Alaska Press, Fairbanks

Nadelson L, Jorcyk C, Yang D, Smith MJ, Matson S, Cornell K, Husting V (2014) I just don’t trust them: the development and validation of an assessment instrument to measure trust in science and scientists. Sch Sci Math 114:76–86. https://doi.org/10.1111/ssm.12051

National Science Board (2010) Science and engineering indicators 2010 (Arlington, VA: National Science Foundation). Available online at http://www.nsf.gov/statistics/seind10/pdf/seind10.pdf

Rodriguez CG, Moskowitz JP, Salem RM, Ditto PH (2017) Partisan selective exposure: the role of party, ideology and ideological extremity over time. Translational Issues Psychological Sci 3(3):254–271. https://doi.org/10.1037/tps0000121

Roos JM (2014) Measuring science or religion? A measurement analysis of the National Science Foundation sponsored science literacy scale 2006–2010. Public Underst Sci 23(7):797–813. https://doi.org/10.1177/0963662512464318

Saad L (2017) Global warming concern at three-decade high in U.S. Gallup News. http://news.gallup.com/poll/206030/global-warming-concern-three-decade-high.aspx

Shao W (2016) Weather, climate, politics, or God? Determinants of American public opinions toward global warming. Environ Politics 26(1):71–96. https://doi.org/10.1080/09644016.2016.1223190

Suldovsky B (2017) The information deficit model and climate change communication. Climate Change Communication. https://doi.org/10.1093/acrefore/9780190228620.013.301

Taber CS, Lodge M (2006) Motivated skepticism in the evaluation of political beliefs. Am J Polit Sci 50(3):755–769

USACE (2009) Alaska Baseline Erosion Assessment: Study Findings and Technical Report. U.S. Army Corps of Engineers. http://www.poa.usace.army.mil/Portals/34/docs/civilworks/BEA/AlaskaBaselineErosionAssessmentBEAMainReport.pdf

van der Linden SL, Leiserowitz AA, Feinberg GD, Maibach EW (2015) The scientific consensus on climate change as a gateway belief: experimental evidence. PLoS One 10(2):e0118489. https://doi.org/10.1371/journal.pone.0118489

van der Linden SL, Leiserowitz A, Maibach E (2017a) Scientific agreement can neutralize politicization of facts. Nature Human Behaviour. https://doi.org/10.1038/s41562-017-0259-2

van der Linden S, Leiserowitz A, Rosenthal S, Maibach E (2017b) Inoculating the public against misinformation about climate change. Global Challenges. https://doi.org/10.1002/gch2.201600008

Washburn AN, Skitka LJ (2017) Science denial across the political divide: liberals and conservatives are similarly motivated to deny attitude-inconsistent science. Soc Psychol Personal Sci. https://doi.org/10.1177/1948550617731500

Acknowledgments

Additional climate questions on the Granite State Poll have been supported by the Carsey School of Public Policy and the Sustainability Institute at the University of New Hampshire. Any opinions, findings, conclusions, or recommendations in this paper are those of the author, and do not necessarily represent the views of the National Science Foundation or other supporting organizations.

Funding

The POLES survey and polar questions on the Granite State Poll were supported through the PoLAR Partnership grant from the National Science Foundation (DUE-1239783).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Hamilton, L.C. Self-assessed understanding of climate change. Climatic Change 151, 349–362 (2018). https://doi.org/10.1007/s10584-018-2305-0
